#Location from IP api


What factors should you consider when selecting the most suitable IP Geo API for your requirements?

Integrating Location Services API With Your Mobile App: A Step-by-Step Guide

Most mobile applications today require accurate location data, but some developers struggle to obtain it efficiently. Integrating a location API can be the perfect solution. This step-by-step guide explores integrating an IP Geo API into your mobile app.

Location APIs provide developers access to and control over location-related capabilities in their apps. GPS-based APIs use a device's GPS or Wi-Fi access points to deliver real-time location data, while geocoding APIs translate addresses into geographic coordinates and vice versa, enabling functions like map integration and location-based search.

When choosing a location services API, consider factors like reliability, accuracy, documentation, support, pricing, and usage restrictions.

Here's a step-by-step guide using the ipstack API:

1. Obtain an API key from ipstack after signing up for a free account.

2. Set up your development environment, preferably using VS Code.

3. Install the necessary HTTP client library (e.g., Axios in JavaScript, Requests in Python).

4. Initialize the API and make calls using the provided base URL and your API key.

5. Implement user interface components to display the data.
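The steps above can be sketched in Python as follows. This is a minimal illustration, not an official client: it uses the standard library instead of Requests so that it has no dependencies, the helper names are made up for this example, and the plain-HTTP base URL is an assumption (check your ipstack plan for HTTPS support). The response field names (`city`, `region_name`, `country_name`, and the `success`/`error` pair on failures) follow ipstack's documented JSON format.

```python
import ipaddress
import json
import urllib.parse
import urllib.request

# Assumption: plain HTTP base URL; paid plans may allow HTTPS.
IPSTACK_BASE_URL = "http://api.ipstack.com"


def build_ipstack_url(ip: str, access_key: str) -> str:
    """Build the lookup URL for one IP address (hypothetical helper)."""
    checked = ipaddress.ip_address(ip)  # raises ValueError on malformed input
    query = urllib.parse.urlencode({"access_key": access_key})
    return f"{IPSTACK_BASE_URL}/{checked}?{query}"


def summarize_location(payload: dict) -> str:
    """Turn a decoded JSON response into a short string for the UI."""
    if payload.get("success") is False:
        # Error responses carry an "error" object instead of location fields.
        info = payload.get("error", {}).get("info", "unknown error")
        raise ValueError(f"lookup failed: {info}")
    parts = [payload.get("city"), payload.get("region_name"), payload.get("country_name")]
    return ", ".join(p for p in parts if p)


def lookup(ip: str, access_key: str, timeout: float = 5.0) -> dict:
    """Perform the request; needs network access and a valid API key."""
    with urllib.request.urlopen(build_ipstack_url(ip, access_key), timeout=timeout) as resp:
        return json.load(resp)
```

A call such as `summarize_location(lookup("134.201.250.155", "YOUR_KEY"))` would then produce the text to show in the app. Keeping URL building and response parsing separate from the network call makes both easy to test without spending API quota.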

To maximize efficiency, follow best practices, like using caching systems, adhering to usage constraints, and thorough testing.

ipstack proves to be a valuable choice for geolocation functionality with its precise data and user-friendly API. Sign up and unlock the power of reliable geolocation data for your application.


Polludrone

Polludrone is a Continuous Ambient Air Quality Monitoring System (CAAQMS). It is capable of monitoring various environmental parameters related to Air Quality, Noise, Odour, Meteorology, and Radiation. Polludrone measures the particulate matter and gaseous concentrations in the ambient air in real-time. Using external probes, it can also monitor other auxiliary parameters like traffic, disaster, and weather. Polludrone is an ideal choice for real-time monitoring applications such as Industries, Smart Cities, Airports, Construction, Seaports, Campuses, Schools, Highways, Tunnels, and Roadside monitoring. It is the perfect ambient air quality monitoring system to understand a premise's environmental health.

Product Features:

Patented Technology: Utilizes innovative e-breathing technology for higher data accuracy.

Retrofit Design: Plug-and-play design for ease of implementation.

Compact: Lightweight and compact system that can be easily installed on poles or walls.

Internal Storage: Internal data storage capacity of up to 8 GB or 90 days of data.

On-device Calibration: On-site device calibration capability using built-in calibration software.

Identity and Configuration: Geo-tagging for accurate location (latitude and longitude) of the device.

Tamper-Proof: IP 66 grade certified secure system to avoid tampering, malfunction, or sabotage.

Over-the-Air Update: Automatically upgradeable from a central server without the need for an onsite visit.

Network Agnostic: Supports a wide range of connectivity options, including GSM, GPRS, Wi-Fi, LoRa, NBIoT, Ethernet, Modbus, Relay, and Satellite.

Real-Time Data: Continuous monitoring with real-time data transfer at configurable intervals.

Weather Resistant: Durable IP 66 enclosure designed to withstand extreme weather conditions.

Fully Solar Powered: 100% solar-powered system, ideal for off-grid locations.

Key Benefits:

Robust and Rugged: Designed with a durable enclosure to withstand extreme climatic conditions.

Secure Cloud Platform: A secure platform for visualizing and analyzing data, with easy API integration for immediate action.

Accurate Data: Provides real-time, accurate readings to detect concentrations in ambient air.

Easy to Install: Effortless installation with versatile mounting options.

Polludrone Use Cases:

Industrial Fenceline: Monitoring pollution at the industry fenceline ensures compliance with policies and safety regulations, and helps monitor air quality levels.

Smart City and Campuses: Pollution monitoring in smart cities and campuses provides authorities with actionable insights for pollution control and enhances citizen welfare.

Roads, Highways, and Tunnels: Pollution monitoring in roads and tunnels supports the creation of mitigation action plans to control vehicular emissions.

Airports: Pollution and noise monitoring at taxiways and hangars helps analyze the impact on travelers and surrounding neighborhoods.

Visit www.technovalue.in for more info.

#AirQualityMonitoring#CAAQMS#EnvironmentalMonitoring#SmartCitySolutions#RealTimeData#PollutionControl#IoTDevice


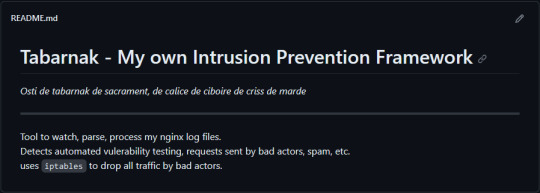

(this is a small story of how I came to write my own intrusion detection/prevention framework and why I'm really happy with that decision, don't mind me rambling)

Preface

About two weeks ago I was faced with a pretty annoying problem. While I was going home by train, I noticed that my server at home had been running hot and had slowed down a lot. This prompted me to check the logs of nginx, the only service that is indirectly available to the public (more on that later), which made me realize that - due to poor access control - someone had been sending hundreds of thousands of huge DNS requests to my server, most likely testing for vulnerabilities. I added an iptables rule to drop all traffic from that source and redirected the remaining traffic to a backup NextDNS instance that I had set up previously with the same overrides and custom records as my own DNS, so that the service would have no downtime while my server cooled down. I stopped the DNS service on my server at home and then used the rest of the train ride to think. How would I stop this from happening in the future? I pondered multiple possible solutions: whether to use fail2ban, whether to just add better access control, or whether to just stick with the NextDNS instance.

I ended up going with a completely different option: building a solution myself, one that's a perfect fit for my server.

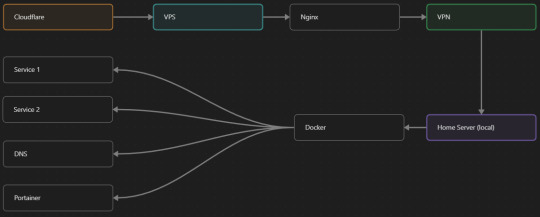

My Server Structure

So, I should probably explain how I host and why only nginx is public despite me hosting a bunch of services under the hood.

I have a public-facing VPS that only allows traffic to nginx. That traffic then gets forwarded through a VPN connection to my home server so that I don't have to have any public-facing ports on said home server. The VPS only really acts as the public interface for the home server, with access control and logging sprinkled in throughout my configs to get more layers of security. Some services can only be interacted with through the VPN or a local connection, so not everything is actually forwarded - only what I need/want to be.

I actually do have fail2ban installed on both my VPS and home server, so why make another piece of software?

Tabarnak - Succeeding at Banning

I had a few requirements for what I wanted to do:

Only allow HTTP(S) traffic through Cloudflare

Only allow DNS traffic from given sources (location filtering, explicit white-/blacklisting)

Webhook support for logging

Should be interactive (e.g. POST /api/ban/{IP})

Detect automated vulnerability scanning

Integration with the AbuseIPDB (for checking and reporting)

As I started working on this, I realized that this would soon become more complex than I had thought at first.

Webhooks for logging

This was probably the easiest requirement to check off my list. I just wrote my own log() function that would call a webhook. Sadly, the rest wouldn't be as easy.
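As a rough illustration (not the actual Tabarnak code), a log() function of this kind could look like the sketch below. The webhook URL, the JSON payload shape and the helper name `build_webhook_request` are all assumptions made for the example; a real webhook receiver will dictate its own format.

```python
import json
import urllib.request

WEBHOOK_URL = "https://example.invalid/hook"  # hypothetical endpoint


def build_webhook_request(url: str, message: str, level: str = "info") -> urllib.request.Request:
    """Prepare a JSON POST request carrying one log message."""
    body = json.dumps({"level": level, "message": message}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def log(message: str, level: str = "info", url: str = WEBHOOK_URL) -> None:
    """Print locally and forward the message to the webhook."""
    print(f"[{level}] {message}")
    try:
        urllib.request.urlopen(build_webhook_request(url, message, level), timeout=5).close()
    except OSError as exc:
        # URLError is a subclass of OSError; a failed delivery must not crash the parser.
        print(f"[warn] webhook delivery failed: {exc}")
```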

Allowing only Cloudflare traffic

This was still doable. I only needed to add a filter in my nginx config for my domain that allows Cloudflare IP ranges and disallows the rest. I ended up doing something slightly different: I added a new default nginx config that just returns a 404 on every route and logs access to a different file, so that I could detect connection attempts made without going through Cloudflare and handle them in Tabarnak myself.

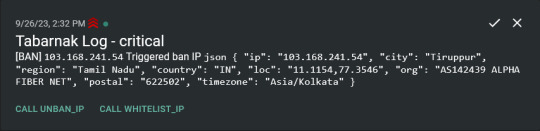

Integration with AbuseIPDB

Also not yet the hard part: just call AbuseIPDB with the parsed IP and, if the abuse confidence score is within a configured threshold, flag the IP. When that happens, I receive a notification that asks me whether to whitelist or ban the IP - I can also do nothing and let everything proceed as it normally would. If the IP gets flagged a configured number of times, it is banned unless it has been whitelisted by then.
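The flag-then-ban logic described here can be sketched as a small state machine. This is an illustration under assumptions, not the original implementation: the class name, the default thresholds and the return strings are invented, and the score is assumed to be the `abuseConfidenceScore` value (0 to 100) that AbuseIPDB's check endpoint returns.

```python
from dataclasses import dataclass, field


@dataclass
class FlagTracker:
    score_threshold: int = 50  # hypothetical: minimum score that counts as a flag
    max_flags: int = 3         # hypothetical: flags allowed before an automatic ban
    flags: dict = field(default_factory=dict)
    whitelist: set = field(default_factory=set)
    banned: set = field(default_factory=set)

    def observe(self, ip: str, abuse_score: int) -> str:
        """Record one sighting of an IP and return the resulting state."""
        if ip in self.whitelist:
            return "allowed"  # a manual whitelist entry always wins
        if ip in self.banned:
            return "banned"
        if abuse_score < self.score_threshold:
            return "ok"
        self.flags[ip] = self.flags.get(ip, 0) + 1
        if self.flags[ip] >= self.max_flags:
            self.banned.add(ip)
            return "banned"
        return "flagged"  # this is where a notification would be sent
```

Checking the whitelist first means a manual decision made after a notification takes effect even if the IP keeps collecting flags.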

Location filtering + Whitelist + Blacklist

This is where it starts to get interesting. I had to know where each request comes from, because all the real people who would actually connect to the DNS are in similar locations. I didn't want to outright ban everyone else, as there could be valid requests from other sources. So for every new IP that triggers a callback (which only happens after a certain number of either flags or requests), I now need to get the location. I do this by just calling the ipinfo API and checking the supplied location. To avoid sending too many requests, I cache results (even though ipinfo should never be called twice for the same IP anyway) and save them to a database. I made my own class that inherits from collections.UserDict which, when accessed, tries to find the entry in memory and, if it can't, searches the DB and returns the result. This works for setting, deleting, adding and checking for records. Flags, AbuseIPDB results, whitelist entries and blacklist entries also get stored in the DB to keep state persistent across restarts.
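A dictionary with a database fallback like the one described could look roughly like this. It is a sketch under assumptions: the post does not say which database is used, so SQLite and JSON-encoded values are stand-ins, and the class and table names are made up.

```python
import json
import sqlite3
from collections import UserDict


class PersistentDict(UserDict):
    """In-memory dict that falls back to, and writes through to, SQLite."""

    def __init__(self, path: str = ":memory:", table: str = "records"):
        self._db = sqlite3.connect(path)
        self._table = table  # hypothetical name; must be trusted, never user input
        self._db.execute(
            f"CREATE TABLE IF NOT EXISTS {table} (key TEXT PRIMARY KEY, value TEXT)"
        )
        super().__init__()

    def __missing__(self, key):
        # Called by UserDict.__getitem__ when the key is not in memory.
        row = self._db.execute(
            f"SELECT value FROM {self._table} WHERE key = ?", (key,)
        ).fetchone()
        if row is None:
            raise KeyError(key)
        value = json.loads(row[0])
        self.data[key] = value  # cache for the next lookup
        return value

    def __setitem__(self, key, value):
        self.data[key] = value
        self._db.execute(
            f"INSERT OR REPLACE INTO {self._table} (key, value) VALUES (?, ?)",
            (key, json.dumps(value)),
        )
        self._db.commit()

    def __delitem__(self, key):
        if key not in self:
            raise KeyError(key)
        self.data.pop(key, None)
        self._db.execute(f"DELETE FROM {self._table} WHERE key = ?", (key,))
        self._db.commit()

    def __contains__(self, key):
        try:
            self[key]
        except KeyError:
            return False
        return True
```

With a file path instead of `:memory:`, entries written in one run are found again after a restart, because a lookup that misses the in-memory cache goes to the table.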

Detection of automated vulnerability scanning

For this, I went through my old nginx logs, looking for the smallest number of paths I need to block to catch the largest number of automated vulnerability scan requests. So I did some data science magic and wrote a route blacklist. It doesn't just end there. Since I know the routes of valid requests that I would be receiving (which are all mentioned in my nginx configs), I could just parse the configs and match the requested route against them. To achieve this I wrote some really simple regular expressions to extract all location blocks from an nginx config, alongside whether each location is absolute (preceded by an =) or relative. Once I have the locations, I can test the requested route against the valid routes and find out whether the request was made to a valid URL (I can't just look for 404 return codes here, because some pages actually do return a 404 on purpose). I also parse the request method from the logs and match it against the standard HTTP request methods (which cover all methods that services on my server use). That way I can easily catch requests like:

XX.YYY.ZZZ.AA - - [25/Sep/2023:14:52:43 +0200] "145.ll|'|'|SGFjS2VkX0Q0OTkwNjI3|'|'|WIN-JNAPIER0859|'|'|JNapier|'|'|19-02-01|'|'||'|'|Win 7 Professional SP1 x64|'|'|No|'|'|0.7d|'|'|..|'|'|AA==|'|'|112.inf|'|'|SGFjS2VkDQoxOTIuMTY4LjkyLjIyMjo1NTUyDQpEZXNrdG9wDQpjbGllbnRhLmV4ZQ0KRmFsc2UNCkZhbHNlDQpUcnVlDQpGYWxzZQ==12.act|'|'|AA==" 400 150 "-" "-"
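To make the route and method checks concrete, here is a minimal sketch, not the original code. The regular expressions and function names are assumptions; the location pattern only handles plain prefix locations and `=` exact matches and deliberately skips regex locations (`~`, `~*`, `^~`). The log pattern assumes nginx's default combined log format, as in the line above.

```python
import re

# Matches "location /path {" and "location = /path {" at the start of a line.
LOCATION_RE = re.compile(r"^\s*location\s+(=\s*)?(/\S*)\s*\{", re.MULTILINE)
# Pulls the quoted request field and the status code out of an access log line.
REQUEST_RE = re.compile(r'"(?P<request>[^"]*)" (?P<status>\d{3}) ')
HTTP_METHODS = {"GET", "HEAD", "POST", "PUT", "DELETE", "CONNECT", "OPTIONS", "TRACE", "PATCH"}


def extract_locations(config_text):
    """Return (path, is_exact) pairs for every supported location block."""
    return [(m.group(2), m.group(1) is not None) for m in LOCATION_RE.finditer(config_text)]


def is_valid_route(path, locations):
    """Exact locations must match fully; the others match as prefixes."""
    for loc, exact in locations:
        if exact and path == loc:
            return True
        if not exact and path.startswith(loc):
            return True
    return False


def classify(log_line, locations):
    """Label one access log line as ok, bad-method, unknown-route or unparseable."""
    match = REQUEST_RE.search(log_line)
    if not match:
        return "unparseable"
    parts = match.group("request").split()
    # A well-formed request line is "METHOD /path HTTP/x.y".
    if len(parts) != 3 or parts[0] not in HTTP_METHODS:
        return "bad-method"
    path = parts[1].split("?", 1)[0]  # ignore the query string
    return "ok" if is_valid_route(path, locations) else "unknown-route"
```

A line like the one above has no recognizable method in its request field, so it is labelled `bad-method` before any route matching happens. Note that a config containing `location / {` makes every path a valid prefix match, so this check is only useful when the catch-all location is treated separately.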

I probably overcomplicated this - by a lot - but I can't go back in time to change what I did.

Interactivity

As I showed and mentioned earlier, I can manually white-/blacklist an IP. This forced me to add threads to my previously single-threaded program. Since I was too stubborn to use websockets (I have a distaste for websockets), I opted for probably the worst option I could've taken. It works like this: I have a main thread, which does all the log parsing, processing and handling, and a side thread, which watches a FIFO file that is created on startup. I can append commands to the FIFO file, and each command is mapped to the function it is supposed to call. When the FIFO reader detects a new line, it looks through the map, gets the function and executes it on the supplied IP. Doing all of this manually would be way too tedious, so I made an API endpoint on my home server that appends the commands to the file on the VPS. That also means that I had to secure that API endpoint so that I couldn't just be spammed with random requests. Now that I could interact with Tabarnak through an API, I needed to make this user friendly - even I don't like to curl and sign my requests manually. So I integrated logging with my self-hosted instance of https://ntfy.sh and added action buttons that send the request for me. All of this just because I refused to use sockets.
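The FIFO approach can be sketched as follows. This is an illustration only: the command names, the `command ip` line format and the in-memory sets are assumptions, and `os.mkfifo` is available on Unix systems only.

```python
import os
import threading

banned, whitelisted = set(), set()

# Hypothetical command map: each command name points at the function to run.
COMMANDS = {
    "ban": banned.add,
    "unban": banned.discard,
    "whitelist": whitelisted.add,
}


def dispatch(line, commands=COMMANDS):
    """Run one 'command ip' line; return True if it was handled."""
    parts = line.split()
    if len(parts) != 2 or parts[0] not in commands:
        return False
    commands[parts[0]](parts[1])
    return True


def watch_fifo(path, stop):
    """Side thread: block on the FIFO and dispatch every line written to it."""
    if not os.path.exists(path):
        os.mkfifo(path)  # Unix only
    while not stop.is_set():
        # open() blocks until a writer connects; reopen after each writer closes.
        with open(path) as fifo:
            for line in fifo:
                dispatch(line)


def start_watcher(path):
    """Start the reader as a daemon thread next to the main log parser."""
    stop = threading.Event()
    thread = threading.Thread(target=watch_fifo, args=(path, stop), daemon=True)
    thread.start()
    return thread, stop
```

With the watcher running, `echo "ban 203.0.113.7" > /path/to/fifo` from a shell, or from the API endpoint, triggers the mapped function. The stop event is only checked between writers, and state shared with the main thread would need a lock once operations become more than single set updates.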

First successes and why I'm happy about this

After not too long, the bans started to happen. The traffic to my server decreased and I could finally breathe again. I may have overcomplicated this, but I don't mind. It was a really fun experience to write something new and learn more about log parsing and processing. Tabarnak probably won't last forever and I could replace it with solutions that are way easier to deploy and way more general. But what matters is that I liked doing it. It was a really fun project - which is why I'm writing this - and I'm glad that I ended up doing it. Of course I could have just used fail2ban, but then I never would've been able to write all of the extras that I ended up making (I don't want to take the explanation ad absurdum, so just imagine that I added cool stuff) and I never would've learned what I actually did.

So whenever you are faced with a dumb problem and could write something yourself, I think you should at least try. This was a really fun experience and it might be for you as well.

Post Scriptum

First of all, apologies for the English - I'm not a native speaker so I'm sorry if some parts were incorrect or anything like that. Secondly, I'm sure that there are simpler ways to accomplish what I did here, however this was more about the experience of creating something myself rather than using some pre-made tool that does everything I want to (maybe even better?). Third, if you actually read until here, thanks for reading - hope it wasn't too boring - have a nice day :)


How To Check Your Public IP Address Location

Determining your public IP address location is a straightforward process that allows you to gain insight into the approximate geographical region from which your device is connecting to the internet.

This information can be useful for various reasons, including troubleshooting network issues, understanding your online privacy, and accessing region-specific content. This introduction will guide you through the steps to check your public IP address location, providing you with a simple method to retrieve this valuable information.

How To Find The Location Of Your Public IP Address?

To find the location of your public IP address, you can use online tools called IP geolocation services. Simply visit a reliable IP geolocation website or search "What is my IP location" in your preferred search engine.

These services will display your approximate city, region, country, and sometimes even your Internet Service Provider (ISP) details based on your IP address. While this method provides a general idea of your IP's location, keep in mind that it might not always be completely accurate due to factors like VPN usage or ISP routing.

What Tools Can I Use To Identify My Public IP Address Location?

You can use various online tools and websites to identify the location of your public IP address. Some commonly used tools include:

IP Geolocation Websites: Websites like "WhatIsMyIP.com" and "IPinfo.io" provide instant IP geolocation information, displaying details about your IP's approximate location.

IP Lookup Tools: Services like "IP Location" or "IP Tracker" allow you to enter your IP address to retrieve location-related data.

Search Engines: Simply typing "What is my IP location" in search engines like Google or Bing will display your IP's geographical information.

IP Geolocation APIs: Developers can use APIs like the IPinfo API to programmatically retrieve location data for their public IP addresses.

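Network Diagnostic Tools: Built-in network diagnostic tools on some operating systems, such as the "ipconfig" command on Windows or the "ifconfig" command on Linux, provide basic information about your network interfaces. Note that behind a home router these usually show your private (local) address rather than your public IP.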

Some browser extensions, like IP Address and Domain Information, can display your IP's location directly in your browser. Remember that while these tools provide a general idea of your IP address location, factors like VPN usage or ISP routing can impact the accuracy of the information displayed.
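For the API route mentioned in the list above, a lookup against IPinfo could be sketched like this. The helper names are made up for the example, and it relies on IPinfo's JSON endpoint returning `city`, `region`, `country` and a `loc` field formatted as "latitude,longitude"; unauthenticated requests are rate limited, so treat this as a quick check rather than production code.

```python
import json
import urllib.request


def ipinfo_url(ip: str = "") -> str:
    """URL for a given IP, or for the caller's own public IP when ip is empty."""
    return f"https://ipinfo.io/{ip}/json" if ip else "https://ipinfo.io/json"


def parse_location(payload: dict) -> dict:
    """Extract the approximate location from a decoded IPinfo response."""
    loc = payload.get("loc")  # missing for private or reserved addresses
    lat, lon = (float(x) for x in loc.split(",")) if loc else (None, None)
    return {
        "city": payload.get("city"),
        "region": payload.get("region"),
        "country": payload.get("country"),
        "latitude": lat,
        "longitude": lon,
    }


def fetch_location(ip: str = "", timeout: float = 5.0) -> dict:
    """Perform the request; needs network access."""
    with urllib.request.urlopen(ipinfo_url(ip), timeout=timeout) as resp:
        return parse_location(json.load(resp))
```

Calling `fetch_location()` with no argument reports the location associated with the address your traffic appears to come from, which is the VPN server's location if a VPN is active.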

Can I Find My IP Address Location Using Online Services?

Yes, you can determine your IP address location using online services. By visiting websites like "WhatIsMyIP.com" or "IPinfo.io", or by searching "What is my IP location", you'll receive information about your IP's approximate geographical region.

However, it's important to note that if you're using a VPN, the displayed location might reflect the VPN server's location rather than your actual physical location. Always consider the possibility of VPN influence when using online services to check your IP address location.

What Should Players Consider Before Using A VPN To Alter Their PUBG Experience?

Before players decide to use a VPN to alter their PUBG experience, there are several important factors to consider:

Ping and Latency: Understand that while a VPN might provide access to different servers, it can also introduce additional ping and latency, potentially affecting gameplay.

Server Locations: Research and select a VPN server strategically to balance potential advantages with increased distance and latency.

VPN Quality: Choose a reputable VPN service that offers stable connections and minimal impact on speed.

Game Stability: Be aware that VPN usage could lead to instability, causing disconnections or disruptions during gameplay.

Fair Play: Consider the ethical aspect of using a VPN to manipulate gameplay, as it might affect the fairness and balance of matches.

VPN Compatibility: Ensure the VPN is compatible with your gaming platform and PUBG.

Trial Period: Utilise any trial periods or money-back guarantees to test the VPN's impact on your PUBG experience.

Security and Privacy: Prioritise a VPN that ensures data security and doesn't compromise personal information.

Local Regulations: Be aware of any legal restrictions on VPN usage in your region.

Feedback and Reviews: Read user experiences and reviews to gauge the effectiveness of the VPN for PUBG.

By carefully considering these factors, players can make informed decisions about using a VPN to alter their PUBG experience while minimising potential drawbacks and ensuring an enjoyable and fair gaming environment.

What Apps Can Help You Discover Your Public IP Address Location And How Do They Work?

There are apps available that can help you discover your public IP address location. Many IP geolocation apps, such as IP Location or IP Tracker, are designed to provide this information quickly and conveniently.

These apps can be found on various platforms, including smartphones and computers, allowing you to easily check your IP's approximate geographical region. However, please note that if you're using a VPN, the location displayed might reflect the VPN server's location.

How Can I Check My Public IP Address Location?

You can easily check your public IP address location by visiting an IP geolocation website or using an IP lookup tool. These online services provide details about your IP's approximate geographic region.

Are There Mobile Apps To Help Me Determine My Public IP Address Location?

Yes, there are mobile apps available on various platforms that allow you to quickly find your public IP address location. These apps provide a user-friendly way to access this information while on the go.

CONCLUSION

Checking your public IP address location is a straightforward process facilitated by numerous online tools and websites. These resources offer quick access to valuable information about your IP's approximate geographic region.

Whether through IP geolocation websites, search engines, or dedicated mobile apps, determining your public IP address location can assist in troubleshooting network issues, enhancing online privacy awareness, and accessing region-specific content. By utilizing these tools, users can easily gain insights into their digital presence and make informed decisions regarding their online activities.


One of the reasons that I believe google docs is such a bastard to use with a screen reader is that it's not a web page, it's an application shoved into a browser. Its competition is native apps that run from compiled binaries for your operating system, so it needs to be as responsive, adaptable, and have a similar interface to those. Notice how you're allowed to right click in google docs without calling up your browser's context menu. The best way to conceptualize it is that google docs is a flash game... a game that just so happens to be about writing text. So yes, it makes sense that methods meant to read text formatted to be a web page wouldn't work out of the box.

But it's still google's responsibility to figure that out and document their god damn api, something that they won't do because of the fucking rot. Google has lost the mandate of heaven and it doesn't matter how much their shit sucks because they have enough of a vice grip on the market that their adoption isn't really dependent on whether or not their shit sucks.

Google docs is a slightly above average word processor with a full feature set. It's missing important features like local custom font support or having a spell check that actually works so I don't have to attempt to spell the word in the google search bar to get google to guess it right (you're both google why the fuck do I need to do this what the fuck is happening at that hellhole?). The point I'm getting at is MS word 2004 is probably on par if not better than google docs purely in the business of processing words, however, merely processing words isn't where the value of google docs comes in.

Your documents are stored in the cloud, meaning that it's impossible to lose your work to a failure to save or a system crash. It means that you can pick up writing on any computer in any location that has internet access. More importantly, you can share your documents effortlessly. You need only send someone one link and then they're instantly reading (and maybe commenting) on your document. It doesn't ask for an account, and even if it does basically everyone got a Gmail for youtube or because your school assigned you one. It's very rare to find someone who doesn't have a google account, so you can very reasonably assume that if you send someone a link they'll be able to open it. It doesn't matter how shit the editor is, how many people it excludes, how poorly documented the api is, how often it shits the bed. If I switch to LibreOffice then I have to store my files locally and email them to people to get them to read it, then I'd have to make sure they're all versioned correctly and keep track of feedback in multiple places. If you're just using google docs to write words and nothing else then yeah, LibreOffice will probably be fine, but I'd assume that a lot of people who write on this website do so socially and rely on the sharing aspect to make it worth it.

I've seen some interesting alternatives to sharing documents online, but most of them require you to pay a fee, learn to host your own server, or get everyone who wants to read your stuff to sign up for an obscure account. Eventually the ubiquity of your system becomes a selling point in and of itself. This is why competition can't meaningfully serve as a force for better products. Once you achieve a selling point that cannot be contested, whether that's through market share like Google or IP law like Adobe, the rot sets in.

We either need to get everyone a lot more computer literate (which I don't think is going to happen) or we need a strong regulatory effort to force these giants to turn away from the rot (also not going to happen). I don't think this is going to get better.

writer survey question time:

inspired by seeing screencaps where the software is offering (terrible) style advice because I haven't used a software that has a grammar checker for my stories in like a decade

if you use multiple applications, pick the one you use most often.


Flame Proof Motors in Oil & Gas: Why They’re Critical for Offshore and Onshore Safety

The oil and gas industry operates in some of the most hazardous environments on the planet. Whether it’s offshore drilling rigs, onshore refineries, or gas processing facilities, the risk of flammable gases, vapors, and combustible dusts is ever-present. In such high-risk zones, even a minor spark from an electrical component can lead to catastrophic consequences.

This is where flame proof motors play a vital role. Specially designed to contain and prevent internal explosions from igniting the surrounding atmosphere, these motors are an indispensable part of safety systems across oil and gas installations.

What Are Flame Proof Motors?

Flame proof motors, also referred to as explosion-proof motors, are a specific type of electric motor designed to operate safely in environments with explosive gas or dust. Unlike standard motors, these are built to contain any explosion that may occur within the motor housing, preventing the flame or hot gases from escaping and igniting the surrounding atmosphere.

They are commonly certified under standards such as:

ATEX (Europe)

IECEx (International)

UL/CSA (North America)

These certifications ensure the motor complies with strict design and testing criteria for hazardous area use.

Why Oil & Gas Environments Are High-Risk

In oil and gas facilities, flammable substances are constantly present—either in process systems, storage tanks, or pipelines. Common hazards include:

Methane, propane, and hydrogen sulfide gases

Volatile vapors from petroleum products

Dust clouds in gas treatment plants or drilling operations

High-pressure and high-temperature operating conditions

In such environments, equipment must not only perform reliably but also eliminate the risk of ignition, making flame proof motors a necessity rather than a luxury.

Key Applications in the Oil & Gas Sector

Flame proof motors are used extensively across both onshore and offshore oil and gas operations. Common applications include:

Pumps (for crude oil, water injection, chemical dosing)

Compressors (gas compression and vapor recovery systems)

Fans and blowers (ventilation and gas handling)

Drilling and rig motors

Mixers and agitators (in refineries and chemical blending units)

These motors are installed in explosion-prone zones, typically classified as Zone 1 or Zone 2 depending on how often and for how long a flammable atmosphere is present.

How Flame Proof Motors Enhance Safety

1. Explosion Containment

The motor's enclosure is built to withstand any internal explosion, preventing hot gases from escaping. This containment design ensures that the explosion does not spread to the surrounding volatile atmosphere.

2. Temperature Control

Flame proof motors are engineered to operate within strict surface temperature limits, ensuring the motor’s external parts don’t become an ignition source—even under full load or fault conditions.

3. Ingress Protection

High IP ratings (e.g., IP55, IP65) keep dust and water out of the motor housing, where they could otherwise cause sparks, overheating, or short circuits.

4. Long-Term Durability

These motors are ruggedly constructed to resist corrosion, vibration, and environmental wear, especially in offshore rigs where salt, humidity, and extreme weather are common.

Compliance and Regulatory Importance

Using flame proof motors isn’t just a safety best practice; it’s often a legal requirement. Regulations and standards from bodies such as OSHA, API, and IEC, along with the NEC, require explosion-protected equipment in classified hazardous locations. Non-compliance can lead to hefty fines, shutdowns, or, worse, loss of life.

Proper certification, labeling (e.g., Ex d, Ex e), and maintenance protocols are critical to ensure continuous compliance and risk mitigation.

Conclusion

In the oil and gas sector, safety is non-negotiable. Flame proof motors are a frontline defense against explosion hazards, offering a proven solution for reliable performance in high-risk environments. Their ability to contain internal faults, resist harsh conditions, and comply with stringent global standards makes them a cornerstone of safe electrical system design in both offshore and onshore applications.

For any operation where flammable gases or vapors are present, investing in certified flame proof motors is not just about compliance—it’s about protecting assets, people, and the environment.


The Role of Residential Proxies in Cybersecurity Testing

Cybersecurity testing demands authentic simulation of real-world attack scenarios. Residential proxies have become indispensable tools for security professionals conducting penetration testing, vulnerability assessments, and security audits. By providing genuine residential IP addresses, these proxies enable realistic testing that reveals vulnerabilities invisible to traditional datacenter-based approaches.

Authentic Attack Simulation

Modern security systems easily identify and block datacenter IP ranges, creating dangerous blind spots in security assessments. Attackers don’t limit themselves to datacenter IPs—they leverage compromised residential devices, VPNs, and distributed networks. Testing exclusively from datacenter IPs provides false confidence while leaving residential-origin vulnerabilities unexposed.

Residential proxies enable security teams to simulate attacks from diverse geographic locations and ISP networks. This authenticity reveals location-based security gaps, geo-blocking effectiveness, and behavioral analysis accuracy. Testing from residential IPs mirrors actual threat actor techniques, providing realistic vulnerability assessments.

Penetration Testing Applications

Web application penetration testing benefits significantly from residential proxy deployment. Many applications implement different security measures for datacenter versus residential traffic. Rate limiting, CAPTCHA challenges, and behavioral analysis often relax for residential IPs, assuming legitimate user activity.

Security teams leverage residential proxies to test authentication systems, session management, and access controls from realistic sources. Distributed testing across multiple residential IPs reveals race conditions, improper state management, and authentication bypass vulnerabilities that single-source testing misses.

API security testing requires residential proxies to accurately assess rate limiting implementations and geographic restrictions. Many APIs trust residential sources more than datacenter IPs, potentially exposing security vulnerabilities to attackers using similar infrastructure.

Compliance and Reconnaissance

Security assessments must verify compliance with geographic restrictions and data residency requirements. Residential proxies from specific countries enable thorough testing of geo-blocking implementations, ensuring sensitive data remains inaccessible from restricted regions.

Reconnaissance phases benefit from residential proxy anonymity. Security teams gather public information about targets without revealing corporate IP addresses that might trigger defensive measures or alert targets to upcoming assessments. This stealth enables more accurate vulnerability discovery.

Ethical Considerations and Best Practices

Responsible security testing requires explicit authorization and defined scope. Document residential proxy usage in testing methodologies, ensuring clients understand the comprehensive nature of assessments. Maintain detailed logs of testing activities, IP addresses used, and actions performed.
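The logging practice described above could be captured with a small structured record. This is a minimal sketch, not a prescribed format: the field names, the `ENG-001` engagement identifier, and the example addresses are all illustrative assumptions.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass
class ActivityRecord:
    """One logged action in an authorized assessment (field names are illustrative)."""
    engagement_id: str  # hypothetical identifier tying the action to a signed scope
    source_ip: str      # proxy exit IP used for the request
    target: str         # in-scope host or URL
    action: str         # short description of what was done
    timestamp: str      # UTC, ISO 8601


def log_activity(engagement_id, source_ip, target, action, now=None):
    """Return one JSON line describing the action; the caller appends it to a log file."""
    when = (now or datetime.now(timezone.utc)).isoformat()
    record = ActivityRecord(engagement_id, source_ip, target, action, when)
    return json.dumps(asdict(record), sort_keys=True)


line = log_activity(
    "ENG-001", "203.0.113.7", "https://staging.example.com/login",
    "rate-limit probe, 20 requests",
    now=datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc),
)
print(line)
```

Writing one JSON object per line keeps the log easy to append to during testing and easy to filter afterwards when compiling the client report.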

Choose proxy providers committed to ethical sourcing and user consent. Legitimate security testing demands legitimate infrastructure. Verify providers obtain proper consent from residential IP owners, maintaining ethical standards throughout testing engagements.

Enhanced Security Posture

Organizations embracing residential proxy-based security testing discover vulnerabilities traditional methods miss. This comprehensive approach strengthens defenses against sophisticated attackers who routinely leverage residential infrastructure. Investment in proper testing tools, including residential proxies, proves minimal compared to potential breach costs.

#penetration testing#cyber security#residential proxies#security testing#proxy lust inc.#proxy lust proxies

Reliable Residential & Mobile Proxies with Global Coverage | SimplyNode

In today's digital world, data accessibility and online anonymity are vital for businesses and individuals alike. Whether you're managing SEO campaigns, conducting market research, or accessing geo-restricted content, the quality and reliability of your proxy provider can make a significant difference. SimplyNode is a trusted provider of residential and mobile proxies designed to deliver seamless performance and global coverage for a wide range of online tasks.

Why Choose SimplyNode?

1. Real IPs for Real Results

SimplyNode offers high-quality residential and mobile IP addresses, meaning all connections originate from actual devices in real locations. This makes it much harder for websites to detect or block the traffic. Whether you're scraping data, monitoring ads, or managing multiple accounts, you can rely on proxies that look and act like real users.

2. Global Proxy Network

With coverage in countries across all continents, SimplyNode ensures that users can easily access localized content, test geo-targeted campaigns, and perform tasks that require specific regional IPs. From the US to Europe, Asia to South America, their proxy network is built for truly global operations.

3. Exceptional Reliability and Speed

Performance is at the heart of SimplyNode’s service. Their proxies are optimized for low latency and high uptime, ensuring smooth operation even under demanding workloads. Whether you're running automated scripts or browsing manually, the network is stable and fast.

4. Versatile Use Cases

SimplyNode proxies are suitable for a wide variety of applications, including:

Web scraping and data collection

Ad verification

Social media management

Sneaker and ticket bots

Market research and competitor analysis

5. Privacy and Security

In a world where digital footprints are constantly monitored, SimplyNode prioritizes anonymity and security. Their infrastructure is designed to protect your identity and data at every step.

Designed for Developers and Businesses

SimplyNode’s API and dashboard are built with usability in mind. Developers can easily integrate proxies into their workflows, while businesses can manage IPs and track usage effortlessly. With scalable plans and transparent pricing, the service caters to both individuals and enterprise-level clients.

Conclusion

If you’re looking for a powerful proxy solution with real IP addresses, excellent performance, and worldwide reach, SimplyNode is the partner you can trust. Their combination of residential and mobile proxies, global network, and developer-friendly tools makes them a top choice in today’s competitive digital environment. Experience the difference with SimplyNode, where speed, stability, and security come standard.

IP Address to Country API | Location Lookup API | DB-IP

DB-IP offers reliable IP address to country API solutions that help businesses identify user locations accurately in real time. This powerful tool enhances geo-targeting, fraud prevention, and analytics. With fast and scalable integration, DB-IP provides data you can trust. The location lookup API goes beyond basic IP data by offering detailed insights like city, region, and timezone. Whether you're optimizing content delivery or improving security, DB-IP’s APIs deliver precise location intelligence for smarter online operations.
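As a rough illustration of an IP-to-country lookup, the sketch below separates the network call from the parsing step. The endpoint URL and the response key names (`countryCode`, `stateProv`, `city`) are assumptions about DB-IP's keyless free tier and should be checked against the provider's documentation before use; the sample payload is hand-written.

```python
import json
from urllib.request import urlopen

# Assumed endpoint of DB-IP's keyless free tier; confirm against the official docs.
FREE_ENDPOINT = "https://api.db-ip.com/v2/free/{ip}"


def parse_location(payload):
    """Pick country, region, and city out of a decoded JSON response.

    The key names are assumptions about the response shape.
    """
    return {
        "country": payload.get("countryCode"),
        "region": payload.get("stateProv"),
        "city": payload.get("city"),
    }


def lookup(ip, timeout=5.0):
    """Fetch and parse the location for one IP address (performs a network call)."""
    with urlopen(FREE_ENDPOINT.format(ip=ip), timeout=timeout) as response:
        return parse_location(json.load(response))


# Offline demonstration with a hand-written payload in the assumed shape.
sample = {"ipAddress": "8.8.8.8", "countryCode": "US",
          "stateProv": "California", "city": "Mountain View"}
print(parse_location(sample))
```

Using `.get()` rather than indexing means a missing field yields `None` instead of an exception, which suits geolocation data where city or region is often unavailable.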

Identify Suspicious Logins with Location and Device Risk Signals

When a user logs in from an unexpected location or on a new device, it could signal an account compromise. The Fraud Detection API helps detect login anomalies by comparing user behavior, device history, and IP geolocation with past activity. If a login happens from a TOR network, emulator, or unrecognized fingerprint, the API flags it instantly. Businesses can then choose to block access, trigger 2FA, or require re-authentication. This targeted risk response keeps real users safe while minimizing friction. It’s an ideal solution for fintech, social platforms, or apps where account integrity is crucial.
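The block / 2FA / allow decision described above can be sketched as a simple additive score. This is an illustration only: the signal names, weights, and thresholds are invented for the example and are not taken from any vendor's Fraud Detection API.

```python
from dataclasses import dataclass


@dataclass
class LoginSignals:
    new_device: bool       # fingerprint not seen before for this account
    country_changed: bool  # IP geolocation differs from the usual country
    tor_exit: bool         # IP belongs to a known TOR exit node
    emulator: bool         # client looks like an emulator


def decide(signals):
    """Map signals to 'allow', 'challenge' (2FA or re-authentication), or 'block'.

    Weights and thresholds are illustrative assumptions.
    """
    score = 0
    score += 50 if signals.tor_exit else 0
    score += 40 if signals.emulator else 0
    score += 30 if signals.country_changed else 0
    score += 20 if signals.new_device else 0
    if score >= 70:
        return "block"
    if score >= 20:
        return "challenge"
    return "allow"


print(decide(LoginSignals(new_device=True, country_changed=False,
                          tor_exit=False, emulator=False)))
```

A single weak signal such as a new device only triggers a challenge, which keeps friction low for real users, while combinations of strong signals lead to a block.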

AI Maps Talabat Delivery Surge Trends with Heat Maps – UAE

Introduction

The UAE’s leading food delivery app, Talabat, uses dynamic surge pricing—charging higher delivery fees during peak hours or in high-demand zones. These price changes aren’t random; they follow patterns based on weather, locality, daypart, and traffic conditions.

Actowiz Solutions offers AI-based web scraping systems to track Talabat’s surge fee trends in real time and represent them visually using delivery heat maps—empowering restaurants, delivery partners, and Q-commerce players with predictive insights.

What Are Delivery Surge Trends?

Increased delivery fees during peak hours (e.g., 1–3 PM, 7–10 PM)

Location-based surges in Dubai Marina, Downtown, Abu Dhabi Corniche, etc.

Surge intensity may vary based on order backlog, rider shortage, or special occasions

Some restaurants absorb the surge fee, others pass it to customers

These surges can increase delivery cost by 30–50% in affected zones.

Actowiz’s AI Scraping Methodology

1. Geo-Coverage Mapping

Scrapers simulate user behavior from 100+ UAE postal codes, including Dubai, Abu Dhabi, Sharjah, and Ajman.

2. Time-Series Surge Fee Tracking

Hourly scraping logs base delivery fee, surge markups, and estimated delivery time.

3. Heat Map Generation Engine

Using AI, we convert city-wise delivery surges into heat maps that visualize surge intensity over time and geography.

4. Pattern Detection with ML

Machine learning models detect recurring surge hotspots and alert on unexpected spikes.
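The "alert on unexpected spikes" step can be approximated without a trained model. The sketch below swaps in a plain statistical threshold (mean plus k standard deviations) as a stand-in for the ML models the post mentions; the fee history is hypothetical data.

```python
from statistics import mean, stdev


def is_unexpected_spike(history, latest, k=2.0):
    """Flag `latest` if it exceeds the historical mean by more than k standard deviations."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest > mu
    return latest > mu + k * sigma


# Hypothetical surge fees (AED) seen in one zone at 7 PM on previous days.
past_fees = [4.0, 5.0, 4.5, 5.5, 5.0]
print(is_unexpected_spike(past_fees, 5.5))
print(is_unexpected_spike(past_fees, 9.0))
```

Keeping a separate history per zone and hour means a fee that is normal for a busy evening slot is not flagged just because it is high compared with mornings.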

Sample Data Extracted

1:00 PM – Dubai (Business Bay):

Base Fee: AED 5.00

Surge Fee: AED 4.00

Total Fee: AED 9.00

Surge Level: Medium

ETA: 32 mins

7:30 PM – Dubai (JLT):

Base Fee: AED 6.00

Surge Fee: AED 6.00

Total Fee: AED 12.00

Surge Level: High

ETA: 40 mins

8:00 PM – Abu Dhabi (Reem Island):

Base Fee: AED 4.50

Surge Fee: AED 5.50

Total Fee: AED 10.00

Surge Level: High

ETA: 38 mins

10:00 AM – Sharjah (Al Nahda):

Base Fee: AED 3.00

Surge Fee: AED 0.00

Total Fee: AED 3.00

Surge Level: Low

ETA: 25 mins
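The sample records above can be checked and summarized in a few lines. The sketch below re-enters the four observations by hand and computes each total plus the share of the total fee that comes from the surge markup; the `surge_share` helper is a name introduced here for illustration.

```python
# The four sample observations from the post: (time, zone, base fee, surge fee) in AED.
samples = [
    ("1:00 PM", "Dubai (Business Bay)", 5.00, 4.00),
    ("7:30 PM", "Dubai (JLT)", 6.00, 6.00),
    ("8:00 PM", "Abu Dhabi (Reem Island)", 4.50, 5.50),
    ("10:00 AM", "Sharjah (Al Nahda)", 3.00, 0.00),
]


def surge_share(base, surge):
    """Fraction of the total delivery fee that comes from the surge markup."""
    total = base + surge
    return surge / total if total else 0.0


for slot, zone, base, surge in samples:
    total = base + surge
    print(f"{slot:>8}  {zone:<24} total AED {total:5.2f}  "
          f"surge share {surge_share(base, surge):.0%}")
```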

AI-Powered Use Cases

✅ Restaurant Chains

Identify where high delivery charges are hurting conversion—adjust menu pricing or promo coverage accordingly.

✅ Logistics Partners

Optimize delivery fleet placement in areas with predicted surge patterns.

✅ Quick Commerce Players

Time your ad campaigns or free delivery offers to undercut peak surge periods and win more orders.

✅ Talabat Sellers

Adjust availability or delivery radius based on predictive surge timelines.

Visualization Example

🗺️ AI Heat Map: Real-time surge zones in Dubai, updated hourly

📈 Line Chart: Delivery surge levels by hour/day of week

📊 Histogram: Surge fee distribution across top 10 UAE localities

Business Impact

💡 A Dubai-based cloud kitchen reduced order abandonments by 17% by offering in-app cashback when Talabat surge crossed AED 6.

💡 A last-mile logistics firm saved 14% on operational cost by optimizing rider deployments based on Actowiz surge prediction models.

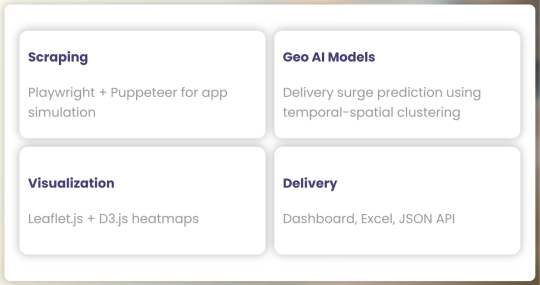

AI & Tech Stack

Scraping: Playwright + Puppeteer for app simulation

Geo AI Models: Delivery surge prediction using temporal-spatial clustering

Visualization: Leaflet.js + D3.js heatmaps

Delivery: Dashboard, Excel, JSON API

Compliance & Ethics

Delivery surge info is publicly visible on Talabat’s customer interface

No login credentials or personal data scraped

Geo-simulation abides by UAE IP governance best practices

📍 Want to see live Talabat surge zones on an interactive map?

Start your free demo with Actowiz Solutions today and harness delivery intelligence like never before.

Final Thoughts

In the competitive UAE delivery space, timing and geography equal revenue. Surge pricing isn’t a threat—it’s a signal. With Actowiz Solutions’ AI-driven scraping and heat map intelligence, businesses can get ahead of surge trends, optimize logistics, and win in real time.

#ScrapingDeliverySurgeTrendsInTalabat#SurgePredictionModels#RealTimeSurgeZonesInDubai#AIBasedWebScraping#HeatMapGenerationEngine#QCommercePlayersWithPredictiveInsights#PatternDetectionWithML

Cloud VPS Server Hosting in 2025 The Ultimate Guide by SEOHostKing

What Is Cloud VPS Server Hosting in 2025?

Cloud VPS server hosting in 2025 represents the perfect fusion between the flexibility of cloud computing and the dedicated power of a Virtual Private Server. It delivers enterprise-level infrastructure at affordable pricing, backed by scalable resources, high availability, and powerful isolation, making it ideal for startups, developers, agencies, eCommerce, and high-traffic websites.

Why Cloud VPS Hosting Is Dominating in 2025

Unmatched Performance and Flexibility

Traditional hosting is rapidly being replaced by cloud-powered VPS because it offers dynamic resource allocation, 99.99% uptime, lightning-fast SSD storage, and dedicated compute environments, all without the high costs of physical servers.

Fully Scalable Infrastructure

Cloud VPS adapts to your growth. Whether you're hosting one blog or managing a SaaS platform, you can scale CPU, RAM, bandwidth, and storage with zero downtime.

Global Data Center Deployment

In 2025, global presence is non-negotiable. Cloud VPS servers now operate in multiple zones, allowing users to deploy applications closest to their target audience for ultra-low latency.

Built for High-Security Demands

Modern Cloud VPS hosting comes with AI-based DDoS protection, automatic patching, firewalls, and end-to-end encryption to meet the increasing cyber threats of 2025.

Benefits of Cloud VPS Hosting with SEOHostKing

Ultra-Fast SSD NVMe Storage

Enjoy 10x faster data access, low read/write latency, and superior performance for databases and dynamic websites.

Dedicated IPv4/IPv6 Addresses

Each VPS instance receives its own IPs for full control, SEO flexibility, and better email deliverability.

Root Access and Full Control

SEOHostKing offers root-level SSH access, so you can install any software, configure firewalls, or optimize the server as you see fit.

Automated Daily Backups

Your data is your business. Enjoy daily backups with one-click restoration to eliminate risks.

24/7 Expert Support

Get support from VPS professionals with instant response, ticket escalation, and system monitoring, available around the clock.

Use Cases for Cloud VPS Hosting in 2025

eCommerce Websites

Run WooCommerce, Magento, or Shopify-like custom stores on isolated environments with strong uptime guarantees and transaction-speed optimization.

SaaS Platforms

Deploy microservices, API endpoints, or full-scale SaaS applications using scalable VPS nodes with Docker and Kubernetes-ready support.

WordPress Hosting at Scale

Run multiple WordPress sites, high-traffic blogs, and landing pages with isolated resources and one-click staging environments.

Proxy Servers and VPNs

Use VPS instances for private proxies, rotating proxy servers, or encrypted VPNs for privacy-conscious users.

Game Server Hosting

Host Minecraft, Rust, or custom gaming servers on high-CPU VPS plans with dedicated bandwidth and GPU-optimized add-ons.

Forex Trading and Bots

Deploy MT5, expert advisors, and trading bots on low-latency VPS nodes connected to Tier 1 financial hubs for instant execution.

AI & Machine Learning Applications

Run ML models, data training processes, and deep learning algorithms with GPU-ready VPS nodes and Python-friendly environments.

How to Get Started with Cloud VPS Hosting on SEOHostKing

Step 1: Choose Your Ideal VPS Plan

Select from optimized VPS plans based on CPU cores, memory, bandwidth, and disk space. For developers, choose a minimal OS template. For businesses, go for cPanel or Plesk-based configurations.

Step 2: Select Your Server Location

Pick from global data centers such as the US, UK, Germany, Singapore, or the UAE for latency-focused deployment.

Step 3: Configure Your OS and Add-ons

Choose between Linux (Ubuntu, CentOS, AlmaLinux, Debian) or Windows Server (2019/2022) along with optional backups, cPanel, LiteSpeed, or GPU add-ons.

Step 4: Launch Your VPS in Seconds

Your VPS is auto-deployed in under 60 seconds with full root access and login credentials sent directly to your dashboard.

Step 5: Optimize Your Cloud VPS

Install web servers like Apache or Nginx, set up firewalls, enable fail2ban, configure caching, and use CDN integration for top-tier speed and security.

Features That Make SEOHostKing Cloud VPS #1 in 2025

Self-Healing Hardware

Intelligent hardware failure detection with real-time automatic migration of your VPS to healthy nodes with zero downtime.

AI Resource Optimization

Machine learning adjusts memory and CPU allocation based on predictive workload behavior, minimizing resource waste.

Custom ISO Support

Install your own operating systems, recovery environments, or penetration testing tools from uploaded ISO files.

Integrated Firewall and Anti-Bot Protection

Protect websites from automated bots, brute force attacks, and injections with built-in AI firewall logic.

One-Click OS Reinstallation

Reinstall your OS or template with a single click when you need a clean slate or configuration reset.

Managed vs Unmanaged Cloud VPS Hosting

Unmanaged VPS Hosting

Ideal for developers, sysadmins, or users who need total control. You handle OS, updates, security patches, and application configuration.

Managed VPS Hosting

Perfect for businesses or beginners. SEOHostKing handles software installation, server updates, security hardening, and 24/7 monitoring.

How to Secure Your Cloud VPS in 2025

Enable SSH Key Authentication

Use SSH key pairs instead of passwords for better login security and brute-force protection.

Keep Your Software Updated

Apply security patches and system updates regularly to close vulnerabilities exploited by hackers.

Use UFW or CSF Firewall Rules

Limit open ports and restrict traffic to only necessary services, reducing attack surfaces.

Monitor Logs and Alerts

Use logwatch or fail2ban to track suspicious login attempts, port scanning, or abnormal resource usage.

Use Backups and Snapshots

Schedule automatic backups and use point-in-time snapshots before major upgrades or changes.

Best Operating Systems for Cloud VPS in 2025

Ubuntu 24.04 LTS

Perfect for developers and modern web applications with access to the latest packages.

AlmaLinux 9

Stable, enterprise-grade CentOS alternative compatible with cPanel and other control panels.

Debian 12

Rock-solid performance with minimal resource usage for minimalistic deployments.

Windows Server 2022

Supports ASP.NET, MSSQL, and remote desktop applications for Windows-specific environments.

Performance Benchmarks for Cloud VPS Hosting

Website Load Time

Under 1.2 seconds for optimized WordPress and Laravel websites with CDN and cache.

Database Speed

MySQL transactions complete 45% faster with SSD-NVMe storage on SEOHostKing Cloud VPS.

Uptime and Availability

99.99% SLA-backed uptime with proactive failure detection and automatic failover systems.

Latency & Response Time

Average response times below 50ms when hosted in geo-targeted locations near the end user.

How Cloud VPS Differs from Other Hosting Types

Cloud VPS vs Shared Hosting

VPS has dedicated resources and isolation while shared hosting shares CPU/memory with hundreds of users.

Cloud VPS vs Dedicated Server

VPS provides better flexibility, scalability, and cost-efficiency than traditional physical servers.

Cloud VPS vs Cloud Hosting

Cloud VPS offers more control and root access, while generic cloud hosting is often limited in configurability.

Why SEOHostKing Cloud VPS Hosting Leads in 2025

Transparent Pricing

No hidden costs or upsells: simple billing based on resources and usage.

Developer-First Infrastructure

With APIs, Git integration, staging environments, and CLI tools, it's built for real developers.

Enterprise-Grade Network

10 Gbps connectivity, Tier 1 providers, and anti-DDoS systems built directly into the backbone.

Green Energy Hosting

All data centers are carbon-neutral, with renewable power and efficient cooling systems.

Use Cloud VPS to Host Anything in 2025

Web Apps and Portfolios

Host your resume, portfolios, client work, or personal websites with blazing-fast speeds.

Corporate Intranet and File Servers

Create secure internal company environments with Nextcloud, OnlyOffice, or SFTP setups.

Dev/Test Environments

Spin up a test environment instantly to stage deployments, run QA processes, or experiment with new stacks.

Media Streaming Platforms

Host video or audio streaming servers using Wowza, Icecast, or RTMP-ready software.

Best Practices for Optimizing Cloud VPS

Use a Content Delivery Network (CDN)

Serve static content from edge locations worldwide to reduce bandwidth and load times.

Install LiteSpeed or OpenLiteSpeed

Boost performance for WordPress and PHP apps with HTTP/3 support and advanced caching.

Use Object Caching (Redis/Memcached)

Offload database queries for faster application processing and better scalability.

Compress Images and Enable GZIP

Save bandwidth and improve load times with server-side compression and caching headers.
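To give a feel for what the GZIP recommendation above buys, the sketch below compresses a block of repetitive markup with Python's standard `gzip` module. The HTML snippet is made up for the demonstration, and real savings depend on the content; in production the web server applies the compression itself once it is enabled.

```python
import gzip

# Repetitive markup, typical of HTML, compresses well.
html = ("<li class='item'><span class='name'>Example</span></li>\n" * 200).encode("utf-8")
compressed = gzip.compress(html)

print(f"original:   {len(html)} bytes")
print(f"compressed: {len(compressed)} bytes")

# Compression is lossless: decompressing returns the exact original bytes.
restored = gzip.decompress(compressed)
print("round trip ok:", restored == html)
```

Text formats such as HTML, CSS, and JSON benefit most; images in formats like JPEG or PNG are already compressed, which is why the advice treats image compression as a separate step.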

Cloud VPS server hosting in 2025 is no longer a premium option; it is the new standard for performance, scalability, and control. With SEOHostKing leading the way, businesses and developers can deploy fast, secure, and reliable virtual servers with confidence. Whether you're launching a project, scaling an enterprise, or securing your digital presence, Cloud VPS with SEOHostKing is the ultimate hosting solution in 2025.

Why Custom Software Is the Future of Competitive Advantage

1. Introduction

In today’s hyper-accelerated digital economy, standard tools no longer define success. Businesses that once competed on price and product now battle through technology. The era of customization has taken center stage, and custom software is not just a luxury; it’s a weapon for differentiation.

Off-the-shelf software forces organizations into rigid molds. Custom-built platforms, however, align precisely with the company’s DNA, its workflows, users, and long-term goals. This shift is not about technology alone. It is a strategic response to a world demanding digital precision.

2. Tailored Efficiency vs. Generic Solutions

Generic solutions often bring built-in limitations:

Features you don’t need

Processes you can’t adjust

Updates you can’t control

These one-size-fits-all tools require businesses to conform to them. Conversely, custom web application development flips this narrative. It builds the software around the user, not the other way around.

This tailoring empowers teams to work faster, make fewer errors, and operate with laser precision. Every screen, every function, every API exists for a reason. That kind of efficiency can’t be bought off a shelf; it must be engineered.

3. Scalability and Integration with Evolving Systems

Growth is unpredictable. Markets shift. Teams expand. Products pivot. Custom software is inherently elastic.

With modular design, businesses can scale vertically (adding new functionalities) or horizontally (serving more users, locations, or devices). Furthermore, it integrates fluidly with CRMs, ERPs, legacy systems, and modern third-party tools.

Bulletproof custom solutions offer:

Long-term adaptability

Seamless cross-platform communication

Freedom from vendor lock-in

This adaptability ensures software evolves with the business, not against it.

4. Data Ownership, Security, and Custom Control

Data is the new oil, but only if fully controlled and responsibly protected. Off-the-shelf platforms often store data on shared infrastructure, leaving companies vulnerable to breaches and compliance issues.

Custom systems offer:

Full ownership of source code and data

Built-in encryption and access controls

GDPR, HIPAA, or industry-specific compliance baked in

This proprietary architecture enables businesses to guard their IP, enforce governance policies, and monitor usage at a granular level. A trusted custom software development company will architect every line of code with security in mind.

5. Empowering Innovation with Custom Platforms

Standard tools rarely enable bold experimentation. Innovation demands freedom to create, test, and pivot.

Custom platforms offer fertile ground for:

Embedding AI, automation, and analytics

Rapid prototyping of new digital services

Developing user-centric interfaces tailored to niche needs

When a business is no longer confined to third-party software roadmaps, it starts to shape its technological destiny. The result is not just operational improvement but a culture of innovation embedded in digital infrastructure.

6. Conclusion

Competitive advantage is no longer just about being faster or cheaper. It’s about being smarter, more adaptive, and unmistakably unique. The future belongs to businesses that harness software not as a tool, but as a tailored growth engine.

With custom web application development, organizations position themselves ahead of the curve, automating the mundane, innovating the core, and owning every byte of their digital environment.

Is your business ready to lead with technology that’s built just for you?

Visit: https://justtrytech.com/custom-web-development-company/

Contact us: +91 9500139200

Mail address: [email protected]

#custom app development#custom web development#custom software development company#software development company

Automating Restaurant Menu Data Extraction Using Web Scraping APIs

Introduction

The food and restaurant sector is rapidly going digital, with millions of restaurant menus now available through online platforms. Companies in food delivery, restaurant aggregation, and market research need real-time menu data for competitive analysis, pricing strategy, and a better customer experience. Collecting and updating this information manually is slow and laborious. Web scraping APIs automate the collection, making it possible to gather restaurant menu data efficiently and accurately.

This guide covers why extracting restaurant menu data matters, how web scraping works for this use case, the challenges to expect, best practices for handling them, and the future direction of menu data automation.

Why Extract Restaurant Menu Data?

1. Food Delivery Service

Most online food delivery services, such as Uber Eats, DoorDash, and Grubhub, need real-time menu updates to show accurate pricing and availability. Extracting restaurant menu data keeps these platforms current and helps avoid discrepancies.

2. Competitive Pricing Strategy

Restaurants and food chains scrape restaurant menu data to see how competitors price their dishes. Tracking rival menus shows them how to price their own products to stay competitive.

3. Nutritional and Dietary Insights

Health and wellness platforms utilize menu data for dietary recommendations to customers. By scraping restaurant menu data, these platforms can classify foods according to calorie levels, ingredients, and allergens.

4. Market Research and Trend Analysis

Data analysts and research firms collect restaurant menu data to analyze consumer preferences across cuisines and to track price changes over time.

5. Personalized Food Recommendations

Machine learning and artificial intelligence let food apps recommend meals according to user preferences. By scraping restaurant menu data, these apps can work from up-to-date menus and offer personalized food suggestions.

How Web Scraping APIs Automate Restaurant Menu Data Extraction

1. Identifying Target Websites

The first step is selecting restaurant platforms such as:

Food delivery aggregators (Uber Eats, DoorDash, Grubhub)

Restaurant chains' official websites (McDonald's, Subway, Starbucks)

Review sites (Yelp, TripAdvisor)

Local restaurant directories

2. Sending HTTP Requests

Scraping APIs send HTTP requests to restaurant websites to retrieve HTML content containing menu information.

3. Parsing HTML Data

The extracted HTML is parsed using tools like BeautifulSoup, Scrapy, or Selenium to locate menu items, prices, descriptions, and images.

4. Structuring and Storing Data

Once extracted, the data is formatted into JSON, CSV, or databases for easy integration with applications.

5. Automating Data Updates

APIs can be scheduled to run periodically, ensuring restaurant menus are always up to date.
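The parse-and-structure steps above can be sketched end to end on a small HTML fragment. The post names BeautifulSoup, Scrapy, and Selenium; to stay dependency-free this sketch uses Python's standard `html.parser` instead. The markup and the `menu-item`, `name`, and `price` class names are hypothetical, since every site structures its menu differently.

```python
import json
from html.parser import HTMLParser

# Hypothetical markup standing in for a fetched menu page.
SAMPLE_HTML = """
<ul>
  <li class="menu-item"><span class="name">Margherita Pizza</span><span class="price">$12.50</span></li>
  <li class="menu-item"><span class="name">Caesar Salad</span><span class="price">$8.00</span></li>
</ul>
"""


class MenuParser(HTMLParser):
    """Collect name/price pairs from <span class="name"> and <span class="price">."""

    def __init__(self):
        super().__init__()
        self.items = []
        self._field = None  # which field the current text belongs to, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "menu-item":
            self.items.append({})  # start a new menu item
        elif tag == "span" and css_class in ("name", "price") and self.items:
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self.items[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


parser = MenuParser()
parser.feed(SAMPLE_HTML)
print(json.dumps(parser.items, indent=2))
```

The resulting list of dictionaries can be written out as JSON or CSV, or inserted into a database, which corresponds to the structuring and storing step.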

Data Fields Extracted from Restaurant Menus

1. Restaurant Information

Restaurant Name

Address & Location

Contact Details

Cuisine Type

Ratings & Reviews

2. Menu Items

Dish Name

Description

Category (e.g., Appetizers, Main Course, Desserts)

Ingredients

Nutritional Information

3. Pricing and Discounts

Item Price

Combo Offers

Special Discounts

Delivery Fees

4. Availability & Ordering Information

Available Timings

In-Stock/Out-of-Stock Status

Delivery & Pickup Options
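One way to hold the fields listed above is a pair of small record types. This is a sketch of a possible schema rather than a standard one: the class and attribute names are assumptions chosen to mirror the list, and only a subset of the fields is shown.

```python
from dataclasses import dataclass, field, asdict
from typing import List, Optional


@dataclass
class MenuItem:
    dish_name: str
    price: float
    category: str = ""          # e.g. Appetizers, Main Course, Desserts
    description: str = ""
    ingredients: List[str] = field(default_factory=list)
    in_stock: bool = True


@dataclass
class Restaurant:
    name: str
    address: str
    cuisine_type: str = ""
    rating: Optional[float] = None  # None when no rating was scraped
    menu: List[MenuItem] = field(default_factory=list)


cafe = Restaurant(name="Example Cafe", address="1 Sample Street", cuisine_type="Italian")
cafe.menu.append(MenuItem(dish_name="Tiramisu", price=6.5, category="Desserts"))
print(asdict(cafe))
```

`asdict` converts the nested records into plain dictionaries, so the same structure can be serialized to JSON without extra code.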

Challenges in Restaurant Menu Data Extraction

1. Frequent Menu Updates

Restaurants frequently update their menus, making it challenging to maintain up-to-date data.

2. Anti-Scraping Mechanisms

Many restaurant websites implement CAPTCHAs, bot detection, and IP blocking to prevent automated data extraction.

3. Dynamic Content Loading

Most restaurant platforms use JavaScript to load menu data dynamically, so scraping them requires a headless browser driven by a tool such as Selenium or Puppeteer.

4. Data Standardization Issues

Different restaurants structure their menu data in various formats, making it difficult to standardize extracted information.

5. Legal and Ethical Considerations

Extracting restaurant menu data must comply with legal guidelines, including robots.txt policies and data privacy laws.
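Checking robots.txt before fetching a page can be done with Python's standard `urllib.robotparser`. In the sketch below the rules are written inline for illustration, and the `menu-bot` user agent and example.com URLs are placeholders; a real crawler would load the target site's own robots.txt file.

```python
from urllib.robotparser import RobotFileParser

# Example rules, written here for illustration; a real run would load the site's own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Allow: /menu/
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())

for url in ("https://example.com/menu/lunch", "https://example.com/checkout/cart"):
    allowed = rules.can_fetch("menu-bot", url)
    print(url, "->", "allowed" if allowed else "disallowed")
```

Passing robots.txt is only one part of compliance; a site's terms of service and applicable data privacy laws still need to be reviewed separately.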

Best Practices for Scraping Restaurant Menu Data

1. Use API-Based Scraping

Leveraging dedicated web scraping APIs ensures more efficient and reliable data extraction without worrying about website restrictions.

2. Rotate IP Addresses & Use Proxies

Avoid IP bans by using rotating proxies or VPNs to simulate different users accessing the website.

3. Implement Headless Browsers

For JavaScript-heavy pages, a headless browser driven by Puppeteer or Selenium can load and extract dynamic content.

4. Use AI for Data Cleaning

Machine learning algorithms help clean and normalize menu data, making it structured and consistent across different sources.

5. Schedule Automated Scraping Jobs

To maintain up-to-date menu data, set up scheduled scraping jobs that run daily or weekly.
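Much of the cleaning and normalization mentioned in the practices above can start with simple rules before any machine learning is involved. The sketch below is a rule-based stand-in, not an AI approach: it turns price strings into numbers and tidies category labels. It assumes `.` is the decimal separator and `,` a thousands separator, which does not hold for every locale.

```python
import re
from typing import Optional


def normalize_price(raw: str) -> Optional[float]:
    """Convert strings such as '$12.50', 'AED 9', or '1,250.00' to a float.

    Assumes '.' is the decimal separator and ',' a thousands separator.
    Returns None when the string contains no number.
    """
    match = re.search(r"\d[\d,]*(?:\.\d+)?", raw)
    if match is None:
        return None
    return float(match.group().replace(",", ""))


def normalize_category(raw: str) -> str:
    """Collapse extra whitespace and unify capitalization of a category label."""
    return " ".join(raw.split()).title()


for text in ("$12.50", "AED 9", "1,250.00", "Market price"):
    print(repr(text), "->", normalize_price(text))
print(normalize_category("  main   COURSE "))
```

Returning `None` for entries like "Market price" keeps unparseable values visible for review instead of silently storing a zero.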

Popular Web Scraping APIs for Restaurant Menu Data Extraction

1. Scrapy Cloud API

A powerful cloud-based API that allows automated menu data scraping at scale.

2. Apify Restaurant Scraper

Apify provides pre-built restaurant scrapers that can extract menu details from multiple platforms.

3. Octoparse

A no-code scraping tool with API integration, ideal for businesses that require frequent menu updates.

4. ParseHub

A flexible API that extracts structured restaurant menu data with minimal coding requirements.

5. CrawlXpert API

A robust and scalable solution tailored for web scraping restaurant menu data, offering real-time data extraction with advanced anti-blocking mechanisms.

Future of Restaurant Menu Data Extraction

1. AI-Powered Menu Scraping

Artificial intelligence will improve data extraction accuracy, enabling automatic menu updates without manual intervention.

2. Real-Time Menu Synchronization

Restaurants will integrate web scraping APIs to sync menu data instantly across platforms.

3. Predictive Pricing Analysis

Machine learning models will analyze scraped menu data to predict price fluctuations and customer demand trends.

4. Enhanced Personalization in Food Apps

By leveraging scraped menu data, food delivery apps will provide more personalized recommendations based on user preferences.

5. Blockchain for Menu Authentication

Blockchain technology may be used to verify menu authenticity, preventing fraudulent modifications in restaurant listings.

Conclusion

Automating restaurant menu extraction with web scraping APIs has changed the food industry by enabling real-time pricing, preference-based food recommendations, and competitor analysis. As the technology advances, AI-driven scraping solutions will further improve the accuracy and speed of data collection.

Learn more: https://www.crawlxpert.com/blog/restaurant-menu-data-extraction-using-web-scraping-apis

#RestaurantMenuDataExtraction#ScrapingRestaurantMenuData#ExtractRestaurantMenus#ScrapeRestaurantMenuData

Custom vs Template Websites: Which One Wins for Your Dubai Business?

In bustling Dubai, where businesses bloom at lightning pace, your website is often the first impression you make. But when deciding how to build that site, you’re faced with a critical crossroads: custom development or template-based design? Each has its strengths and its trade-offs. Let’s walk through this decision together, with empathy, insight, and a flair for the cultural nuances of the region.

1. What's Driving Your Decision? Understanding Your Needs

Every business has unique demands. To make an informed choice, begin by asking:

Budget reality: How much can you invest now—and in the long run?

Feature wishlist: Do you need unusual tools like multi-vendor ecommerce, AI integrations, or personalized user journeys?

Growth trajectory: Are you launching a brand-new concept, or scaling an established one?

The answers frame whether a template-based site gets you closer faster, or a custom site sets a stronger foundation for future success. And if you’re pairing the journey with a digital marketing company in Dubai, your investment becomes part of a bigger, more strategic plan.

2. Custom Websites: The Tailored Path

Pros Detailed:

Design That Speaks Your Brand: Imagine a site crafted from day one to echo your visual identity, with fonts, visuals, and layout all tailored to your brand.

Built to Scale: As features expand (think API integrations, custom dashboards, user portals), a custom site flexes with those needs.

Advanced Functionality: From membership logins to real-time data dashboards, a custom build can accommodate any unique requirement.

Ownable IP & Unique Experience: Your site feels yours, not like a crowded shopping mall.

Cons to Acknowledge:

Higher Investment: Quality, reliability, and uniqueness come with a higher cost. A mid-tier custom site in Dubai might range from AED 50K–150K+.

Longer Timeline: Expect 3–6 months or more between initial wireframes and final launch.

Skilled Development Team Required: You'll need experienced developers, UX designers, and QA testers to ensure smooth delivery and future upkeep.

3. Template-Based Websites: Quick, Affordable, Ready for Action

Pros in Focus:

Lower Cost, Higher Speed: Templates can launch a website in days, sometimes hours, with minimal initial investment, which is ideal for quick market entry.

Simple UX Tools: Drag-and-drop editors streamline content updates, which is especially helpful if you’re working with limited technical support.

Cons to Consider:

Cookie-Cutter Design: Millions of others might use the same layout, so it is hard to stand out unless you customize heavily.

Functionality Limits: Want a custom booking feature or advanced filters? You might hit a wall.

Potential Speed Issues: Some templates include unused plugins or bloated code, which can slow down mobile performance.

Scalability Challenges: As your business and website mature, templates may struggle to keep pace.

4. When to Choose Custom: Real-Life UAE Examples

Example 1: Luxury Real Estate Firm in DIFC

Clients expected a sophisticated, image-rich site with search that filtered villas by size, amenities, and location. Custom development delivered the elegant, interactive experience the brand deserved—impossible with any template.

Example 2: Education Startup with Custom Lesson Portal

A Dubai EdTech service needed student dashboards, video libraries, and quiz integrations. Custom architecture allowed seamless scalability while maintaining a clean UX designed for both students and parents.

5. When a Template Is Just Right: UAE Case Studies

Example 1: Boutique Café Launching in JBR

With a tight budget and a desire to start fast, the café used a WordPress theme with restaurant-specific features. Within a week, they had menus, an events page, reservation tools, and Instagram integration—enough to support initial growth.

Example 2: Local Fitness Trainer Building Personal Brand

Using a Squarespace template, the instructor showcased classes, bios, schedules, and testimonials quickly. It matched need, budget, and audience—proving templates can shine when used strategically.

6. The Long View: Investment vs Returns

Custom Sites:

Higher initial cost, but potential longevity and brand differentiation.

Lower friction when adding features or integrations.

J-shaped ROI curve: slower start, but accelerating returns with scale.

Template Sites:

Low initial cost, immediate launch, but can cap growth.

Great for MVPs (minimum viable products) or seasonal initiatives.

Score quick wins—but switching later can require rebuilds.

7. How to Choose (Without Pressure)

Define business goals: Is uniqueness or speed your priority?

Map critical features: Do you need custom forms, unique workflows, or scaling capabilities?

Estimate budget: Including dev, hosting, content, and future updates.

Plan for growth: Will your site need to adapt in 1, 3, or 5 years?

Ask professionals: Consult a digital marketing company in Dubai or digital marketing services in UAE. They can walk through the roadmap with you.

8. Synergy With Marketing: More Than Just a Build

Whether you choose custom or template, your website should be strategically integrated into broader growth efforts.

SEO and Performance: Speed, mobile friendliness, schema markup, keyword optimization—all essential for visibility.

Content Strategy: Platforms support blogs, guides, video, podcasts, and lead magnets—breathe life into your domain.

Analytics: Conversion funnels, heatmapping, user journey tracking—knowledge fuels better evolution.

Custom sites are flexible powerhouses, while template sites fast-track initial visibility—if they’re optimized and aligned with marketing goals.

9. Real-Life Pricing Ranges in Dubai

Type | Budget Range (AED) | Timeline | Best For
Template Website | 5k–15k | 2–6 weeks | Quick launch, limited-budget startups
Mid-Tier Custom | 30k–70k | 2–4 months | SMEs needing branding & moderate complexity
High-End Custom | 100k+ | 4–9 months | Enterprises, multi-language, high scale

10. Beyond the Basics: When a Custom Website Truly Shines

While template sites do their job, custom websites bring a level of strategic value that often goes unnoticed at first glance. Here’s how:

🔹 Smart Personalization

Modern websites built from scratch can integrate AI-driven personalization. For example, an e-commerce site can greet returning users by name, show them recently viewed items, and recommend similar products based on browsing behavior. These subtle touches drastically improve engagement and conversion rates.

🔹 Seamless Third-Party Integrations

If your operations involve tools like HubSpot, Salesforce, or a custom ERP system, a custom site can integrate directly—without hacking plugins or making do with limited API connections.

🔹 Dynamic Content Capabilities

Want to show region-specific content, change layouts based on the device, or A/B test every part of your homepage? These features are native to custom builds and critical in competitive markets like Dubai.

11. Risks of Relying Solely on Templates

Template-based sites are appealing for cost and convenience—but they’re not without real risks:

Security Vulnerabilities: Widely used templates often become targets for bots or malware.

Poor Code Quality: Some templates are cluttered with legacy or unnecessary code, leading to poor performance and ranking.

Dependency on Theme Providers: If your theme developer stops support or updates, you might face functionality issues down the road.

SEO Limitations: Clean, semantic markup is essential for ranking. Many templates sacrifice this for visual style, which can hurt visibility in competitive spaces like digital marketing in the UAE.

12. The Dubai Digital Landscape: Local Relevance Matters 🇦🇪

Dubai isn’t just any market. It's a fast-moving, highly visual, and digitally mature environment. Consumers expect excellence, and they judge fast. A poorly designed website can break trust within seconds.

If your audience includes tourists, expats, or B2B clients across the Middle East and North Africa, your web presence must support multilingual content, mobile responsiveness, and cultural sensitivity. For instance:

Right-to-left (RTL) design for Arabic-speaking users.

GDPR & UAE data privacy compliance.

Support for local payment gateways and currency.

This is where partnering with the best digital marketing company in Dubai makes the difference—they bring nuanced understanding of regional expectations into every digital touchpoint.

13. Where Digital Marketing and Web Development Intersect

A brilliant site isn’t just about how it looks—it's about what it achieves. Once your site is live, the real work begins:

On-page SEO: Custom metadata, keyword-rich URLs, schema markup.

Speed optimization: Lazy loading, CDN integration, image compression.

Conversion Rate Optimization (CRO): Strategically placed CTAs, trust elements (like testimonials and certifications), and clean navigation.

Retargeting scripts & Pixel setup: For Facebook Ads, Google Ads, and LinkedIn campaigns.

Templates may not offer the flexibility or backend support for these advanced tactics, while custom sites can be tailored from day one.

If you’re running Google Ads or social campaigns through digital marketing services in UAE, ensure your website converts, not just exists.

14. Making the Final Call: Template or Custom?

So, let’s bring this home.

Choose a Template-Based Website if:

You’re just launching and need to test your idea.

Budget and time are your top constraints.

Your business doesn’t require much beyond a basic digital footprint.

Choose a Custom Website if:

You’re focused on growth, scale, or brand uniqueness.

Your audience demands a standout, seamless experience.

You want full control over performance, security, and integrations.

You can also start with a template and gradually transition to custom—though keep in mind that migration might require a full rebuild down the line.

15. A Hybrid Approach? Yes, That’s a Thing

Some companies in Dubai are opting for semi-custom websites—where a base template is used but deeply modified by developers to create something unique. This middle path can balance cost, speed, and personalization, especially when working with affordable digital marketing services in Dubai.

For instance, businesses can use a CMS like WordPress or Webflow, but still employ expert developers to build custom widgets, animations, or back-end workflows. It's a smarter way to maximize early budget while laying the groundwork for future flexibility.

16. Future-Proofing Your Web Investment

Whichever route you choose, make sure your website is ready for what’s next:

Voice Search Optimization: With more users speaking queries, your site should be structured for conversational SEO.

Core Web Vitals: Google’s ranking algorithm now rewards better page experience—speed, interactivity, and visual stability.

Sustainability: Optimize code and hosting to reduce carbon footprint. Digital sustainability is increasingly considered in CSR reporting.

You don't just need a website—you need a digital asset that evolves with your brand, audience, and market dynamics.

Final Thoughts: Custom vs Template Isn’t a War—It’s a Strategy

There’s no one-size-fits-all winner here. What matters is alignment—between your digital goals, your audience’s expectations, and your growth roadmap. Whether you choose a polished template or commission a hand-crafted masterpiece, your website is a living, breathing brand ambassador.

If you’re ready to make the move—or refine your current setup—collaborating with a results-focused digital marketing company in Dubai ensures you aren’t walking the road alone.

#Web Development Dubai#Custom Website vs Template#Affordable Digital Marketing Dubai#Website Design Trends UAE#Digital Growth Strategy#Online Presence Dubai#E-commerce Web Development#SEO Web Design UAE#Mobile-Friendly Websites


Enhancing ARMxy Performance with Nginx as a Reverse Proxy

Introduction

Nginx is a high-performance web server that also functions as a reverse proxy, load balancer, and caching server. It is widely used in cloud and edge computing environments due to its lightweight architecture and efficient handling of concurrent connections. By deploying Nginx on ARMxy Edge IoT Gateway, users can optimize data flow, enhance security, and efficiently manage industrial network traffic.

Why Use Nginx on ARMxy?

1. Reverse Proxying – Nginx acts as an intermediary, forwarding client requests to backend services running on ARMxy.

2. Load Balancing – Distributes traffic across multiple devices to prevent overload.

3. Security Hardening – Hides backend services and implements SSL encryption for secure communication.

4. Performance Optimization – Caching frequently accessed data reduces latency.

Setting Up Nginx as a Reverse Proxy on ARMxy

1. Install Nginx

On ARMxy’s Linux-based OS, update the package list and install Nginx:

sudo apt update
sudo apt install nginx -y

Start and enable Nginx on boot:

sudo systemctl start nginx
sudo systemctl enable nginx

2. Configure Nginx as a Reverse Proxy

Modify the default Nginx configuration to route incoming traffic to an internal service, such as a Node-RED dashboard running on port 1880:

sudo nano /etc/nginx/sites-available/default

Replace the default configuration with the following:

server {
    listen 80;
    server_name your_armxy_ip;

    location / {
        proxy_pass http://localhost:1880/;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}

Save the file, check the configuration for syntax errors, and restart Nginx:

sudo nginx -t
sudo systemctl restart nginx
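
Node-RED's editor and dashboard keep a WebSocket connection open to the browser, so the proxy usually needs to pass the HTTP Upgrade handshake through as well. The fragment below is a minimal sketch of the same location block with that added, assuming the backend is still Node-RED on localhost:1880. The directives are standard Nginx ones, but whether your particular flows need them is an assumption to verify on your own gateway.

```nginx
location / {
    proxy_pass http://localhost:1880/;

    # WebSocket support: forward the Upgrade handshake to the backend
    proxy_http_version 1.1;
    proxy_set_header Upgrade $http_upgrade;
    proxy_set_header Connection "upgrade";

    # Same forwarding headers as the basic reverse proxy configuration
    proxy_set_header Host $host;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
}
```

Restart Nginx again after changing the location block so the new headers take effect.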

3. Enable SSL for Secure Communication

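To secure the reverse proxy with HTTPS, first set server_name in the configuration above to a domain name that resolves to your gateway, because Certbot's Nginx installer looks for a server block matching that domain. Then install Certbot and configure SSL:

sudo apt install certbot python3-certbot-nginx -y
sudo certbot --nginx -d your_domain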

Follow the prompts to automatically configure SSL for your ARMxy gateway.

Use Case: Secure Edge Data Flow

In an industrial IoT setup, ARMxy collects data from field devices via Modbus, MQTT, or OPC UA, processes it locally using Node-RED or Dockerized applications, and sends it to cloud platforms. With Nginx, you can:

· Secure data transmission with HTTPS encryption.

· Optimize API requests by caching responses.

· Balance traffic when multiple ARMxy devices are used in parallel.
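
The caching and load-balancing points above can be sketched in a single configuration. This is an illustrative fragment, not a tested deployment. The upstream name armxy_nodes, the two gateway addresses, the cache zone name armxy_cache, the cache path, the /api/ prefix, and the one-minute lifetime are all placeholders assumed for the example.

```nginx
# Cache storage: 10 MB shared-memory zone for keys, up to 100 MB on disk
# (path and zone name are assumptions for this sketch)
proxy_cache_path /var/cache/nginx/armxy keys_zone=armxy_cache:10m max_size=100m inactive=10m;

# Pool of ARMxy gateways (hypothetical addresses);
# Nginx distributes requests round-robin by default
upstream armxy_nodes {
    server 192.168.1.101:1880;
    server 192.168.1.102:1880;
}

server {
    listen 80;
    server_name your_armxy_ip;

    location /api/ {
        # Send the request to one of the gateways in the pool
        proxy_pass http://armxy_nodes;

        # Reuse successful responses for one minute instead of hitting the backend
        proxy_cache armxy_cache;
        proxy_cache_valid 200 1m;

        # Report HIT or MISS in a response header so caching can be verified
        add_header X-Cache-Status $upstream_cache_status;
    }
}
```

By default Nginx only caches responses to GET and HEAD requests, so write-style API calls still reach a backend every time. A short cache lifetime is used here on the assumption that edge data changes frequently; tune it to how stale a reading your application can tolerate.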

Conclusion

Deploying Nginx as a reverse proxy on ARMxy enhances security, optimizes data handling, and ensures efficient communication between edge devices and cloud platforms. This setup is ideal for industrial automation, smart city applications, and IIoT networks requiring low latency, high availability, and secure remote access.
