#ML and AI

Explore tagged Tumblr posts

Text

youtube

Welcome to Imarticus Learning! In this third part of our Machine Learning Modelling Flow, we cover the final, critical steps of the ML pipeline: deployment, monitoring, and maintenance of your models. In this video, you'll learn how to seamlessly transition your ML models from development to production, ensure their performance with continuous monitoring, and implement strategies to scale and update them over time. Whether you're a budding data scientist or an experienced ML engineer, mastering these techniques is key to delivering robust, real-world solutions.

0 notes

Text

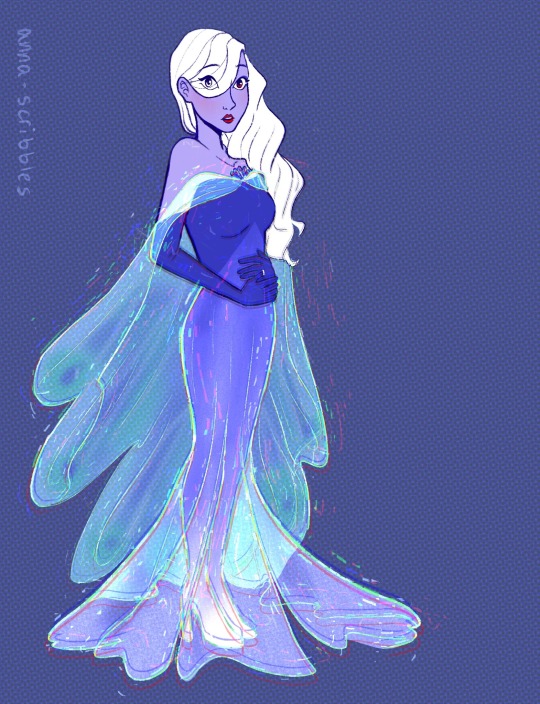

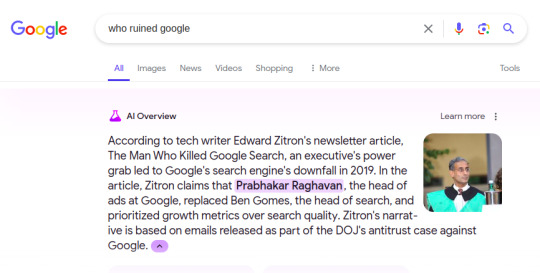

adrien tell ur mom to leave me alone !

#rip emilie agreste u would’ve loved breaking dawn pt. 1#and family vlogger youtube channels#and AI#my art#emilie agreste#ml#she’s plaguing me. can you tell#miraculous#miraculous ladybug#gabriel agreste#nathalie sancoeur#i think it’s so swag that peacock holders don’t get masks#they just turn purple#the peacock miraculous made everyone slay#except for gabe. that man couldn’t slay if his life depended on it. he only knows how do get uglier.#anyway shout out to the emilie agreste who lives in my brain. that girl is crazy#thirteen#eye strain#tw eyestrain#eyestrain

5K notes

·

View notes

Text

“things were so hard with dad in recent years...how did he go from paparapluie to père? i wish i could face him and understand, but while he was still here i didn't dare try to tell him [any of my feelings] and now...it's too late.” * paparapluie is a pun on the words papa and parapluie (umbrella) since the plush is a frog. père is the french word for 'father.'

#ml spoilers#ml s6 spoilers#miraculous spoilers#ml el toro de piedra#mledit#miraculous#miraculous ladybug#miraculous lb#miraculousedit#adrien agreste#adrienette#adrinette#my edits#fascinated at umbrellas constantly being a motif for protection in this show. the theme is “in the rain” because marinette fell for adrien#in the rain but he offered her an umbrella (an act of kindness and protection from the weather). next to how#adrien's father used a pun about umbrellas as his own nickname when adrien was younger and he was still caring for him as a dad should#but as he got older his father stopped protecting him so the nickname (and also any form of 'papa') fell through in favor of the#cold + formal + distant 'père.' this specific pun between parapluie and papa might also come from the french poem un papa by pierre ruaud#which is a poem about papas serving as protection and a sort of shelter for their children. so ig ml is saying gabriel started this way too#i think the fandom glosses over the complexity of adrien's feelings for his father bc in earlier seasons he defended + made excuses for him#part of this is because he was sheltered + didn't know better but it's also bc he DOES recall a time before his mother's illness grew worse#(some time between age 6 and the werepapas flashback) when he didn't have an absentee father. the show writes gabriel agreste#inconsistently: in earlier seasons he had moments of concern for his son before he became awful all the time. and these on/off moments give#adrien whiplash because he's left doing things like becoming a model for his father (i'm choosing to believe gabriel didn't use the rings#until later bc much of the earlier seasons make no sense if he was controlling adrien) in the hopes that they'll bond only to realize#his father still won't spend time with him even for a meal. s5 has gabriel making him pancakes (the wrong way) and asking about his day#and his friends and interests only for him to become even more controlling and mean. how he let him quit modeling only to create an#AI version of him without his consent and when he said that made him feel uncomfortable gabriel convinced him it was fine bc now he had#more free time! only to still control how he spent that free time. adrien didn't start grappling with these things until s5#and now he laments the things he never actually got to say about the papa he misses and the father he wished had unconditionally loved him

1K notes

·

View notes

Text

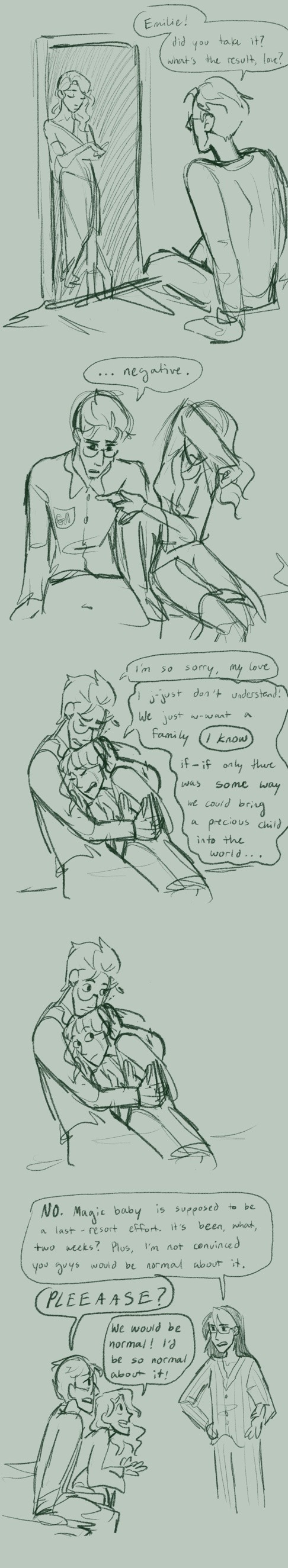

I asked Google "who ruined Google" and they replied honestly using their AI, which is now forced on all of us. It's too funny not to share!

1K notes

·

View notes

Text

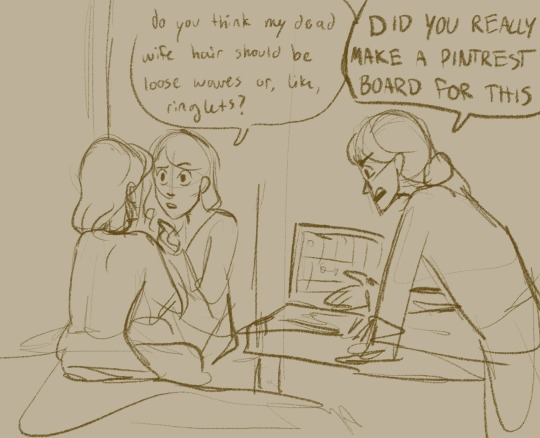

Doodles :)

I think the Graham de Vanilys should have some cats and ferrets, Félix seems like a ferret liker <3 The doodles on the last image are old ones i never got aroun to posting, mostly consisting of the aged up designs i have 4 feligami

#Miraculous is eating at my brain again who wouldve guessed (me)#its always eating at my brain tbh tho#Adrien Agreste#Felix Graham de Vanily#Nathalie Sancoeur#Amelie Graham de Vanily#amenath#Kagami Tsurugi#Feligami#the names for the cats r based off a Felix ai I had a couple chats with#Do i just like giving characters im attached to cats because I have cats? yeah. probably. it brings me joy <3#my art#miraculous tales of ladybug and chat noir#miraculous adventures of ladybug and chat noir#miraculous ladybug#ml representation spoilers#ml representation#I am an its Amelie not Emilie believer btw#ALSO Felix would love Shad ow the Hedg ehog

4K notes

·

View notes

Text

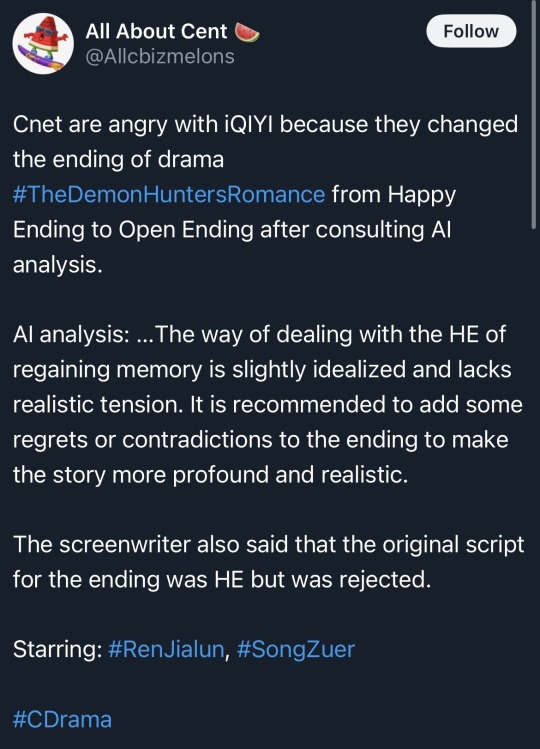

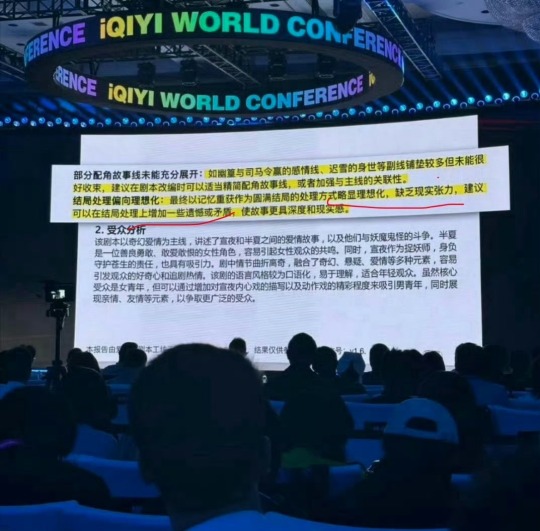

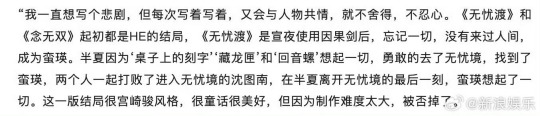

Excuse me but what the fuck? I’m still several episodes from the end, but I knew about the OE and wasn’t that annoyed by it (ie I still plan on finishing), but this is infuriating. Asking AI? I’m so angry on behalf of the screenwriter.

#the demon hunter's romance#the demon hunter’s romance spoilers#I do have some frustration with the ending but it’s not really in ‘oh they’re separated’#I wasn’t that invested in an HE for the romance#it’s in how it concludes ML’s story specifically#but in all my mediocre cdrama ending considerations I never considered#’oh well they asked AI to pick it’

161 notes

·

View notes

Text

90% of documentation sucks. 99% of LLM documentation sucks. Why? Possibilities:

LLM devs lack the necessary skills to write docs because they're under the age of 25 and have been working on the same couple projects that whole time.

LLM devs don't understand why docs are important because they're under the age of 25 and have been working on the same couple projects that whole time.

LLM devs view their work in the way that mystery cults view their worship, and enter an ecstatic state not conducive to communication upon opening Jupyter Notebook/Google Colab/etc. (It's like when a snake-handling churchgoer picks up the snake.)

LLM devs choose not to write docs because they think that providing publicly-accessible information on how their code can be used makes their expertise less-valuable in the job market:

"If I'm the only one who understands the tools I made, people who want to use my work will have to pay me."

-- Final words of 10,000 naive LLM devs who have spent the last 2 years duplicating each other's work without realizing it, because they have never provided a coherent public explanation of what their work is intended to do, making it impossible either for them to find each other or for prospective users to find them. They died of dysentery.

If they had lived only a few months longer, they'd have gotten back in the computer chair and discovered, with mounting horror, that they no longer know how to use their own work, because they spent a few months doing something else (hospital, PT, etc) and the unwritten knowledge that they thought they had hoarded - in fact recalled only due to near-daily repetition - fell out of their heads, teaching them an important lesson about their own fallibility.

They didn't, though. They died of dysentery. Very sad.

177 notes

·

View notes

Text

Museru Kurai no Ai o Ageru

#Museru Kurai no Ai o Ageru#Museru Kurai no Ai wo Ageru#Choking on Love#Iwashita Keiko#shoujo#manga#shoujo manga#shoujo romance#romance manga#manga cap#shoujo cap#romance cap#university manga#shoujo caps#manga ml#shoujo ml

84 notes

·

View notes

Text

Felix: I don’t know what you all are talkin about, but it’s probably absolutely ridiculous.

Adrien and Nino:

Nino: AbsoLOOTeLAY

#cockney Felix would be SO CUTEEE#AMELIE ALSO HAVING A COCKNEY ACCENT TOO-#she would annoy Gabriel 10x more#mlb#mlb ai#chocoau#miraculous ladybug#ml#delete later

177 notes

·

View notes

Text

i think it would be funny if one of the ways that adrien responded to Everything was by becoming the most offline unreachable hermit of all time. no phone no computer nothing. don’t talk to him about ai or instagram brand deals or twitter drama he can’t even receive a text message. if you want to contact him he checks his email once a week at the local library. he also has a flip phone with customized ringtones and no internet connection, and a number he gives out to an extremely select list of people. don’t try to reference a pop culture phenomenon to him he won’t understand it. his flip phone can’t open tiktoks. he’s protecting his peace.

#something about being turned into an ai siri for millions of people at age 14 turned him off from technology#he does the wordle. maybe.#more likely he does the crossword#ml#anna rambles

687 notes

·

View notes

Text

"the reason adrien is just instantly good at everything he tries is because he is programmed to be that way as a senti" aside from the fact that i don't think that's how it works (and also while he was decent at everything he tried with marinette he wasn't instantly good at all of them, and what marinette actually said to him was that he could improve in anything with practice but it was a great first attempt) did we all collectively forget about how adrien actually canonically isn't the best singer?

#adrien agreste#miraculous#miraculous ladybug#ml s6 spoilers#ml season 6#ml climatiqueen#miraculous spoilers#ml spoilers#actually never saw that episode in french so maybe the french voice actor did a better job idk but given that adrien doesn't#usually sing for kitty section or ever the way i saw it was he used his poetry writing skills to write a song#and as a songwriter he was probably great but being a good lyricist doesn't make you a great singer obviously#so to me that's what his deal is#i actually like that throughout this show adrien has some things he picks up easily and some things he has to work on and might never do as#well as people with more experience#i also think as a kids show the lesson they want to put out is anyone can improve with effort and attempt#like he fumbled that science lab experiment but enjoys particle physics#languages tend to come easily to him precisely because it's been something he was forced to do since he was young#a lot of polygots especially if they start young develop skills and see linguistic patterns and iirc he already knew some#japanese from anime and his familiarity with mandarin should help#but i love that he took it further and took on morse code like the cute nerd he is#and now he's studying ancient greek for fun??? what a cute#marinette says his macarons tasted fine but we saw him struggle with the creme#what i mean to say is#he has discipline (basically second nature now) and dedication so he can do well but it DOES require effort#and i think it dismisses how much adrien TRIES or the fact that a lot of skills he was taught to have since a young age aid him#and i just don't think all sentis are “perfect” in an AI robotic way (even if that's how their parents wished they were)#it also just lessens his humanity and iirc the writers have stated multiple times that they are still human#(we can discuss how inconsistent ml is about sentis in general but eh idc for that conversation tbh agdhsjsjks)#anyway adrien will forever be#my nerdy son i love him so much

46 notes

·

View notes

Text

422 notes

·

View notes

Text

Seemingly-counterintuitive thing about "AI" output: these things are much better at identifying and recreating emotion/tone than they are at facts/reasoning. The LLMs typically know whether a story is "funny" or "depressing," whether a picture of a dog is "cute" or "scary," when it's appropriate to use a puke emoji, and so on. They do not know that the Russian sleep experiment and the Dashcon baby are fake.

Makes sense! People are more likely to lie or be wrong about reality than about their own feelings about it. And the datasets are, ultimately, just stuff people say.

73 notes

·

View notes

Text

The surprising truth about data-driven dictatorships

Here’s the “dictator’s dilemma”: they want to block their country’s frustrated elites from mobilizing against them, so they censor public communications; but they also want to know what their people truly believe, so they can head off simmering resentments before they boil over into regime-toppling revolutions.

These two strategies are in tension: the more you censor, the less you know about the true feelings of your citizens and the easier it will be to miss serious problems until they spill over into the streets (think: the fall of the Berlin Wall or Tunisia before the Arab Spring). Dictators try to square this circle with things like private opinion polling or petition systems, but these capture a small slice of the potentially destabiziling moods circulating in the body politic.

Enter AI: back in 2018, Yuval Harari proposed that AI would supercharge dictatorships by mining and summarizing the public mood — as captured on social media — allowing dictators to tack into serious discontent and diffuse it before it erupted into unequenchable wildfire:

https://www.theatlantic.com/magazine/archive/2018/10/yuval-noah-harari-technology-tyranny/568330/

Harari wrote that “the desire to concentrate all information and power in one place may become [dictators] decisive advantage in the 21st century.” But other political scientists sharply disagreed. Last year, Henry Farrell, Jeremy Wallace and Abraham Newman published a thoroughgoing rebuttal to Harari in Foreign Affairs:

https://www.foreignaffairs.com/world/spirals-delusion-artificial-intelligence-decision-making

They argued that — like everyone who gets excited about AI, only to have their hopes dashed — dictators seeking to use AI to understand the public mood would run into serious training data bias problems. After all, people living under dictatorships know that spouting off about their discontent and desire for change is a risky business, so they will self-censor on social media. That’s true even if a person isn’t afraid of retaliation: if you know that using certain words or phrases in a post will get it autoblocked by a censorbot, what’s the point of trying to use those words?

The phrase “Garbage In, Garbage Out” dates back to 1957. That’s how long we’ve known that a computer that operates on bad data will barf up bad conclusions. But this is a very inconvenient truth for AI weirdos: having given up on manually assembling training data based on careful human judgment with multiple review steps, the AI industry “pivoted” to mass ingestion of scraped data from the whole internet.

But adding more unreliable data to an unreliable dataset doesn’t improve its reliability. GIGO is the iron law of computing, and you can’t repeal it by shoveling more garbage into the top of the training funnel:

https://memex.craphound.com/2018/05/29/garbage-in-garbage-out-machine-learning-has-not-repealed-the-iron-law-of-computer-science/

When it comes to “AI” that’s used for decision support — that is, when an algorithm tells humans what to do and they do it — then you get something worse than Garbage In, Garbage Out — you get Garbage In, Garbage Out, Garbage Back In Again. That’s when the AI spits out something wrong, and then another AI sucks up that wrong conclusion and uses it to generate more conclusions.

To see this in action, consider the deeply flawed predictive policing systems that cities around the world rely on. These systems suck up crime data from the cops, then predict where crime is going to be, and send cops to those “hotspots” to do things like throw Black kids up against a wall and make them turn out their pockets, or pull over drivers and search their cars after pretending to have smelled cannabis.

The problem here is that “crime the police detected” isn’t the same as “crime.” You only find crime where you look for it. For example, there are far more incidents of domestic abuse reported in apartment buildings than in fully detached homes. That’s not because apartment dwellers are more likely to be wife-beaters: it’s because domestic abuse is most often reported by a neighbor who hears it through the walls.

So if your cops practice racially biased policing (I know, this is hard to imagine, but stay with me /s), then the crime they detect will already be a function of bias. If you only ever throw Black kids up against a wall and turn out their pockets, then every knife and dime-bag you find in someone’s pockets will come from some Black kid the cops decided to harass.

That’s life without AI. But now let’s throw in predictive policing: feed your “knives found in pockets” data to an algorithm and ask it to predict where there are more knives in pockets, and it will send you back to that Black neighborhood and tell you do throw even more Black kids up against a wall and search their pockets. The more you do this, the more knives you’ll find, and the more you’ll go back and do it again.

This is what Patrick Ball from the Human Rights Data Analysis Group calls “empiricism washing”: take a biased procedure and feed it to an algorithm, and then you get to go and do more biased procedures, and whenever anyone accuses you of bias, you can insist that you’re just following an empirical conclusion of a neutral algorithm, because “math can’t be racist.”

HRDAG has done excellent work on this, finding a natural experiment that makes the problem of GIGOGBI crystal clear. The National Survey On Drug Use and Health produces the gold standard snapshot of drug use in America. Kristian Lum and William Isaac took Oakland’s drug arrest data from 2010 and asked Predpol, a leading predictive policing product, to predict where Oakland’s 2011 drug use would take place.

[Image ID: (a) Number of drug arrests made by Oakland police department, 2010. (1) West Oakland, (2) International Boulevard. (b) Estimated number of drug users, based on 2011 National Survey on Drug Use and Health]

Then, they compared those predictions to the outcomes of the 2011 survey, which shows where actual drug use took place. The two maps couldn’t be more different:

https://rss.onlinelibrary.wiley.com/doi/full/10.1111/j.1740-9713.2016.00960.x

Predpol told cops to go and look for drug use in a predominantly Black, working class neighborhood. Meanwhile the NSDUH survey showed the actual drug use took place all over Oakland, with a higher concentration in the Berkeley-neighboring student neighborhood.

What’s even more vivid is what happens when you simulate running Predpol on the new arrest data that would be generated by cops following its recommendations. If the cops went to that Black neighborhood and found more drugs there and told Predpol about it, the recommendation gets stronger and more confident.

In other words, GIGOGBI is a system for concentrating bias. Even trace amounts of bias in the original training data get refined and magnified when they are output though a decision support system that directs humans to go an act on that output. Algorithms are to bias what centrifuges are to radioactive ore: a way to turn minute amounts of bias into pluripotent, indestructible toxic waste.

There’s a great name for an AI that’s trained on an AI’s output, courtesy of Jathan Sadowski: “Habsburg AI.”

And that brings me back to the Dictator’s Dilemma. If your citizens are self-censoring in order to avoid retaliation or algorithmic shadowbanning, then the AI you train on their posts in order to find out what they’re really thinking will steer you in the opposite direction, so you make bad policies that make people angrier and destabilize things more.

Or at least, that was Farrell(et al)’s theory. And for many years, that’s where the debate over AI and dictatorship has stalled: theory vs theory. But now, there’s some empirical data on this, thanks to the “The Digital Dictator’s Dilemma,” a new paper from UCSD PhD candidate Eddie Yang:

https://www.eddieyang.net/research/DDD.pdf

Yang figured out a way to test these dueling hypotheses. He got 10 million Chinese social media posts from the start of the pandemic, before companies like Weibo were required to censor certain pandemic-related posts as politically sensitive. Yang treats these posts as a robust snapshot of public opinion: because there was no censorship of pandemic-related chatter, Chinese users were free to post anything they wanted without having to self-censor for fear of retaliation or deletion.

Next, Yang acquired the censorship model used by a real Chinese social media company to decide which posts should be blocked. Using this, he was able to determine which of the posts in the original set would be censored today in China.

That means that Yang knows that the “real” sentiment in the Chinese social media snapshot is, and what Chinese authorities would believe it to be if Chinese users were self-censoring all the posts that would be flagged by censorware today.

From here, Yang was able to play with the knobs, and determine how “preference-falsification” (when users lie about their feelings) and self-censorship would give a dictatorship a misleading view of public sentiment. What he finds is that the more repressive a regime is — the more people are incentivized to falsify or censor their views — the worse the system gets at uncovering the true public mood.

What’s more, adding additional (bad) data to the system doesn’t fix this “missing data” problem. GIGO remains an iron law of computing in this context, too.

But it gets better (or worse, I guess): Yang models a “crisis” scenario in which users stop self-censoring and start articulating their true views (because they’ve run out of fucks to give). This is the most dangerous moment for a dictator, and depending on the dictatorship handles it, they either get another decade or rule, or they wake up with guillotines on their lawns.

But “crisis” is where AI performs the worst. Trained on the “status quo” data where users are continuously self-censoring and preference-falsifying, AI has no clue how to handle the unvarnished truth. Both its recommendations about what to censor and its summaries of public sentiment are the least accurate when crisis erupts.

But here’s an interesting wrinkle: Yang scraped a bunch of Chinese users’ posts from Twitter — which the Chinese government doesn’t get to censor (yet) or spy on (yet) — and fed them to the model. He hypothesized that when Chinese users post to American social media, they don’t self-censor or preference-falsify, so this data should help the model improve its accuracy.

He was right — the model got significantly better once it ingested data from Twitter than when it was working solely from Weibo posts. And Yang notes that dictatorships all over the world are widely understood to be scraping western/northern social media.

But even though Twitter data improved the model’s accuracy, it was still wildly inaccurate, compared to the same model trained on a full set of un-self-censored, un-falsified data. GIGO is not an option, it’s the law (of computing).

Writing about the study on Crooked Timber, Farrell notes that as the world fills up with “garbage and noise” (he invokes Philip K Dick’s delighted coinage “gubbish”), “approximately correct knowledge becomes the scarce and valuable resource.”

https://crookedtimber.org/2023/07/25/51610/

This “probably approximately correct knowledge” comes from humans, not LLMs or AI, and so “the social applications of machine learning in non-authoritarian societies are just as parasitic on these forms of human knowledge production as authoritarian governments.”

The Clarion Science Fiction and Fantasy Writers’ Workshop summer fundraiser is almost over! I am an alum, instructor and volunteer board member for this nonprofit workshop whose alums include Octavia Butler, Kim Stanley Robinson, Bruce Sterling, Nalo Hopkinson, Kameron Hurley, Nnedi Okorafor, Lucius Shepard, and Ted Chiang! Your donations will help us subsidize tuition for students, making Clarion — and sf/f — more accessible for all kinds of writers.

Libro.fm is the indie-bookstore-friendly, DRM-free audiobook alternative to Audible, the Amazon-owned monopolist that locks every book you buy to Amazon forever. When you buy a book on Libro, they share some of the purchase price with a local indie bookstore of your choosing (Libro is the best partner I have in selling my own DRM-free audiobooks!). As of today, Libro is even better, because it’s available in five new territories and currencies: Canada, the UK, the EU, Australia and New Zealand!

[Image ID: An altered image of the Nuremberg rally, with ranked lines of soldiers facing a towering figure in a many-ribboned soldier's coat. He wears a high-peaked cap with a microchip in place of insignia. His head has been replaced with the menacing red eye of HAL9000 from Stanley Kubrick's '2001: A Space Odyssey.' The sky behind him is filled with a 'code waterfall' from 'The Matrix.']

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

—

Raimond Spekking (modified) https://commons.wikimedia.org/wiki/File:Acer_Extensa_5220_-_Columbia_MB_06236-1N_-_Intel_Celeron_M_530_-_SLA2G_-_in_Socket_479-5029.jpg

CC BY-SA 4.0 https://creativecommons.org/licenses/by-sa/4.0/deed.en

—

Russian Airborne Troops (modified) https://commons.wikimedia.org/wiki/File:Vladislav_Achalov_at_the_Airborne_Troops_Day_in_Moscow_%E2%80%93_August_2,_2008.jpg

“Soldiers of Russia” Cultural Center (modified) https://commons.wikimedia.org/wiki/File:Col._Leonid_Khabarov_in_an_everyday_service_uniform.JPG

CC BY-SA 3.0 https://creativecommons.org/licenses/by-sa/3.0/deed.en

#pluralistic#habsburg ai#self censorship#henry farrell#digital dictatorships#machine learning#dictator's dilemma#eddie yang#preference falsification#political science#training bias#scholarship#spirals of delusion#algorithmic bias#ml#Fully automated data driven authoritarianism#authoritarianism#gigo#garbage in garbage out garbage back in#gigogbi#yuval noah harari#gubbish#pkd#philip k dick#phildickian

833 notes

·

View notes

Text

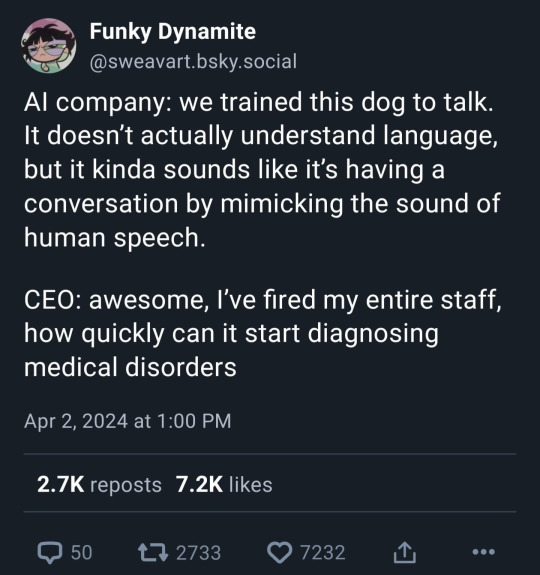

Vibe coding in a nutshell

115 notes

·

View notes