#Marr's computational model


Text

computational models are boring to learn

clean

sterile

or maybe this lecturer needs to stop using blank white slides

#please explore powerpoint default themes or design ideas at least #Marr's computational model #complaining about boring lectures #cognitive science

0 notes

Text

Armadillo (EMR 1020) by Feedback Inc. (1985). This "computer-controlled, educational robot is ideally suited to teach robotics principles. Under computer control, the ARMADILLO will run around forward, backward, and to the left or right at a speed of 15 feet per minute. Each wheel is independently controlled. Whenever the robot encounters an unmovable object, touch sensors send data back to the remote computer, which then directs either evasive or exploratory action. ARMADILLO has blinking 'eyes,' beeps in either of two tones, and when directed by the computer, will press down a pen and chart its programmed progress on paper. According to the company, the ARMADILLO connects to the input/output ports of the ZX81 (Timex/Sinclair 1000), the AIM65, or other microcomputers. The interface circuit enables the robot to be treated as a memory-mapped I/O device so data can be sent to and received from the robot as if it were another memory location in RAM or ROM. The robot comes fully assembled and tested or, for increasing learning, is available in kit form for self-assembly. It runs on a dc supply of 9 to 15 volts drawn from the host computer. Price of the fully assembled model is $525." – The Personal Robot Book, by Texe Marrs.
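"Memory-mapped I/O" means the host talks to the robot with ordinary memory reads and writes (on a ZX81 that would be BASIC's PEEK and POKE). The sketch below simulates that idea in Python. The register addresses and bit assignments are hypothetical, invented for illustration, and are not the Armadillo's documented interface.

```python
# Hypothetical register map: NOT the Armadillo's documented interface.
CONTROL_ADDR = 0x40  # hypothetical output register (motors, pen)
SENSOR_ADDR = 0x41   # hypothetical input register (touch sensors)

# Hypothetical bit assignments in the control register.
LEFT_FWD = 1 << 0
LEFT_REV = 1 << 1
RIGHT_FWD = 1 << 2
RIGHT_REV = 1 << 3
PEN_DOWN = 1 << 4

# Hypothetical bit assignment in the sensor register.
TOUCH_HIT = 1 << 0


class MemoryBus:
    """Toy address space: writes to CONTROL_ADDR and reads from SENSOR_ADDR
    go to the robot; every other address behaves like plain RAM."""

    def __init__(self, size=256):
        self.ram = bytearray(size)
        self.robot_control = 0  # value latched by the robot interface
        self.robot_sensors = 0  # value the robot's sensors present

    def poke(self, addr, value):
        if addr == CONTROL_ADDR:
            self.robot_control = value & 0xFF
        else:
            self.ram[addr] = value & 0xFF

    def peek(self, addr):
        if addr == SENSOR_ADDR:
            return self.robot_sensors
        return self.ram[addr]


def turn_left(bus):
    # The wheels are independent: reverse the left one, drive the right one.
    bus.poke(CONTROL_ADDR, LEFT_REV | RIGHT_FWD)


def step(bus):
    """One control-loop tick: turn away on a touch hit, else drive and draw."""
    if bus.peek(SENSOR_ADDR) & TOUCH_HIT:
        turn_left(bus)
    else:
        bus.poke(CONTROL_ADDR, LEFT_FWD | RIGHT_FWD | PEN_DOWN)
```

The point of the design is that `step` never calls a device driver; it only reads and writes addresses, exactly as it would for RAM.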

32 notes


Text

Interesting Papers for Week 15, 2025

Surprise!—Clarifying the link between insight and prediction error. Becker, M., Wang, X., & Cabeza, R. (2024). Psychonomic Bulletin & Review, 31(6), 2714–2723.

Learning enhances behaviorally relevant representations in apical dendrites. Benezra, S. E., Patel, K. B., Perez Campos, C., Hillman, E. M., & Bruno, R. M. (2024). eLife, 13, e98349.3.

Symmetry breaking organizes the brain’s resting state manifold. Fousek, J., Rabuffo, G., Gudibanda, K., Sheheitli, H., Petkoski, S., & Jirsa, V. (2024). Scientific Reports, 14, 31970.

Stimulus-invariant aspects of the retinal code drive discriminability of natural scenes. Hoshal, B. D., Holmes, C. M., Bojanek, K., Salisbury, J. M., Berry, M. J., Marre, O., & Palmer, S. E. (2024). Proceedings of the National Academy of Sciences, 121(52), e2313676121.

Dynamic responses of striatal cholinergic interneurons control behavioral flexibility. Huang, Z., Chen, R., Ho, M., Xie, X., Gangal, H., Wang, X., & Wang, J. (2024). Science Advances, 10(51).

Bridging the gap between presynaptic hair cell function and neural sound encoding. Jaime Tobón, L. M., & Moser, T. (2024). eLife, 12, e93749.4.

Reducing the Influence of Time Pressure on Risky Choice. Jiang, Y., Huang, P., & Qian, X. (2024). Experimental Psychology, 71(4), 238–246.

Broadscale dampening of uncertainty adjustment in the aging brain. Kosciessa, J. Q., Mayr, U., Lindenberger, U., & Garrett, D. D. (2024). Nature Communications, 15, 10717.

Temporal context effects on suboptimal choice. McDevitt, M. A., Pisklak, J. M., Dunn, R. M., & Spetch, M. L. (2024). Psychonomic Bulletin & Review, 31(6), 2737–2745.

A computational model for angular velocity integration in a locust heading circuit. Pabst, K., Gkanias, E., Webb, B., Homberg, U., & Endres, D. (2024). PLOS Computational Biology, 20(12), e1012155.

A neuronal least-action principle for real-time learning in cortical circuits. Senn, W., Dold, D., Kungl, A. F., Ellenberger, B., Jordan, J., Bengio, Y., Sacramento, J., & Petrovici, M. A. (2024). eLife, 12, e89674.3.

Eye pupils mirror information divergence in approximate inference. Shirama, A., Nobukawa, S., & Sumiyoshi, T. (2024). Scientific Reports, 14, 30808.

Inferring context-dependent computations through linear approximations of prefrontal cortex dynamics. Soldado-Magraner, J., Mante, V., & Sahani, M. (2024). Science Advances, 10(51).

Noisy Retrieval of Experienced Probabilities Underlies Rational Judgment of Uncertain Multiple Events. Spiliopoulos, L., & Hertwig, R. (2024). Journal of Behavioral Decision Making, 37(5).

Evaluating hippocampal replay without a ground truth. Takigawa, M., Huelin Gorriz, M., Tirole, M., & Bendor, D. (2024). eLife, 13, e85635.

Future spinal reflex is embedded in primary motor cortex output. Umeda, T., Yokoyama, O., Suzuki, M., Kaneshige, M., Isa, T., & Nishimura, Y. (2024). Science Advances, 10(51).

The emergence of visual category representations in infants’ brains. Yan, X., Tung, S. S., Fascendini, B., Chen, Y. D., Norcia, A. M., & Grill-Spector, K. (2024). eLife, 13, e100260.3.

Cortisol awakening response prompts dynamic reconfiguration of brain networks in emotional and executive functioning. Zeng, Y., Xiong, B., Gao, H., Liu, C., Chen, C., Wu, J., & Qin, S. (2024). Proceedings of the National Academy of Sciences, 121(52), e2405850121.

The representation of abstract goals in working memory is supported by task-congruent neural geometry. Zhang, M., & Yu, Q. (2024). PLOS Biology, 22(12), e3002461.

Theta phase precession supports memory formation and retrieval of naturalistic experience in humans. Zheng, J., Yebra, M., Schjetnan, A. G. P., Patel, K., Katz, C. N., Kyzar, M., Mosher, C. P., Kalia, S. K., Chung, J. M., Reed, C. M., Valiante, T. A., Mamelak, A. N., Kreiman, G., & Rutishauser, U. (2024). Nature Human Behaviour, 8(12), 2423–2436.

#neuroscience #science #research #brain science #scientific publications #cognitive science #neurobiology #cognition #psychophysics #neurons #neural computation #neural networks #computational neuroscience

11 notes


Text

Using Language to Give Robots a Better Grasp of an Open-Ended World - Technology Org

New Post has been published on https://thedigitalinsider.com/using-language-to-give-robots-a-better-grasp-of-an-open-ended-world-technology-org/


Imagine you’re visiting a friend abroad, and you look inside their fridge to see what would make for a great breakfast. Many of the items initially appear foreign to you, each encased in unfamiliar packaging and containers. Despite these visual distinctions, you begin to understand what each one is used for and pick them up as needed.

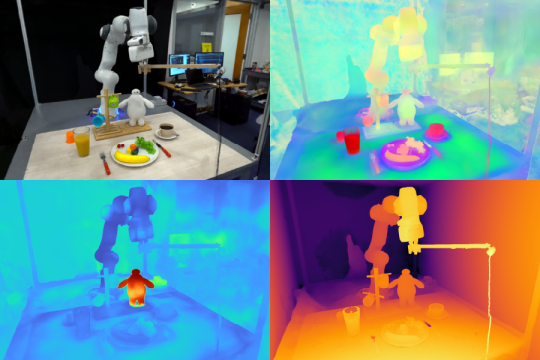

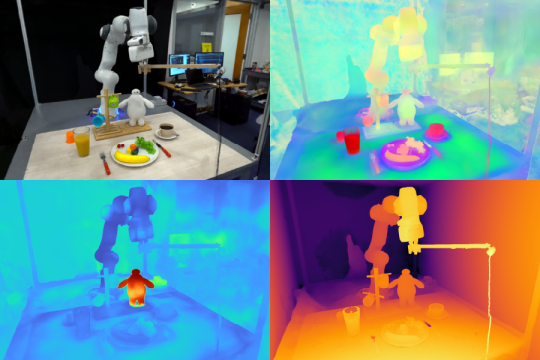

Robotic image recognition – illustrative photo. Image credit: MIT CSAIL

Inspired by humans’ ability to handle unfamiliar objects, a group from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) designed Feature Fields for Robotic Manipulation (F3RM), a system that blends 2D images with foundation model features into 3D scenes to help robots identify and grasp nearby items.

F3RM can interpret open-ended language prompts from humans, making the method helpful in real-world environments that contain thousands of objects, like warehouses and households.

F3RM allows robots to interpret open-ended text prompts using natural language, helping the machines manipulate objects. As a result, the machines can understand less specific human requests and still complete the desired task.

For example, if a user asks the robot to “pick up a tall mug,” the robot can locate and grab the item that best fits that description.

“Making robots that can actually generalize in the real world is incredibly hard,” says Ge Yang, postdoc at the National Science Foundation AI Institute for Artificial Intelligence and Fundamental Interactions and MIT CSAIL.

“We really want to figure out how to do that, so with this project, we try to push for an aggressive level of generalization, from just three or four objects to anything we find in MIT’s Stata Center. We wanted to learn how to make robots as flexible as ourselves, since we can grasp and place objects even though we’ve never seen them before.”

Robots learning “what’s where by looking”

The method could assist robots with picking items in large fulfillment centers with inevitable clutter and unpredictability. In these warehouses, robots are often given a description of the inventory that they’re required to identify. The robots must match the text provided to an object, regardless of variations in packaging, so that customers’ orders are shipped correctly.

For example, the fulfillment centers of major online retailers can contain millions of items, many of which a robot will never have encountered before. To operate at such a scale, robots need to understand the geometry and semantics of different items, some of which sit in tight spaces.

With F3RM’s advanced spatial and semantic perception abilities, a robot could become more effective at locating an object, placing it in a bin, and then sending it along for packaging. Ultimately, this would help factory workers ship customers’ orders more efficiently.

“One thing that often surprises people with F3RM is that the same system also works on a room and building scale, and can be used to build simulation environments for robot learning and large maps,” says Yang.

“But before we scale up this work further, we want to first make this system work really fast. This way, we can use this type of representation for more dynamic robotic control tasks, hopefully in real-time, so that robots that handle more dynamic tasks can use it for perception.”

The MIT team notes that F3RM’s ability to understand different scenes could make it useful in urban and household environments. For example, the approach could help personalized robots identify and pick up specific items. The system aids robots in grasping their surroundings — both physically and perceptively.

“David Marr defined visual perception as the problem of knowing ‘what is where by looking,’” says senior author Phillip Isola, MIT associate professor of electrical engineering and computer science and CSAIL principal investigator.

“Recent foundation models have gotten really good at knowing what they are looking at; they can recognize thousands of object categories and provide detailed text descriptions of images. At the same time, radiance fields have gotten really good at representing where stuff is in a scene. The combination of these two approaches can create a representation of what is where in 3D, and what our work shows is that this combination is especially useful for robotic tasks, which require manipulating objects in 3D.”

Creating a “digital twin”

F3RM begins to understand its surroundings by taking pictures on a selfie stick. The mounted camera snaps 50 images at different poses, enabling it to build a neural radiance field (NeRF), a deep learning method that takes 2D images to construct a 3D scene. This collage of RGB photos creates a “digital twin” of its surroundings in the form of a 360-degree representation of what’s nearby.
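A NeRF is rendered by sampling points along each camera ray and alpha-compositing their densities and colors. The sketch below shows only that standard NeRF rendering quadrature for a single ray, assuming the densities and colors are already given as arrays (in a real NeRF a trained network predicts them). It is a generic illustration, not F3RM's code.

```python
import numpy as np


def render_ray(sigmas, colors, deltas):
    """Composite per-sample densities and colors along one camera ray.

    sigmas: (N,) non-negative volume densities at the N samples
    colors: (N, 3) RGB values at the samples
    deltas: (N,) distances between consecutive samples
    Returns the rendered RGB value and the per-sample weights.
    """
    alphas = 1.0 - np.exp(-sigmas * deltas)  # opacity of each segment
    # Transmittance: probability that the ray reaches sample i unoccluded.
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alphas[:-1]]))
    weights = trans * alphas
    rgb = (weights[:, None] * colors).sum(axis=0)
    return rgb, weights
```

A ray whose first sample is nearly opaque and red renders as almost pure red, because the transmittance behind that sample drops to about zero; a ray through empty space (all densities zero) renders as black.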

In addition to a highly detailed neural radiance field, F3RM also builds a feature field to augment geometry with semantic information. The system uses CLIP, a vision foundation model trained on hundreds of millions of images to efficiently learn visual concepts. By reconstructing the 2D CLIP features for the images taken by the selfie stick, F3RM effectively lifts the 2D features into a 3D representation.
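One common way to "lift" 2D features into 3D, and roughly how distilled feature fields like F3RM's are described, is to render a feature vector along each ray with the same compositing weights used for color and train it to match the 2D CLIP feature at that pixel. The sketch assumes precomputed arrays and a plain mean-squared-error objective; the function names are illustrative, and the exact F3RM training setup may differ.

```python
import numpy as np


def composite_weights(sigmas, deltas):
    """Standard NeRF compositing weights for one ray."""
    alphas = 1.0 - np.exp(-sigmas * deltas)
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alphas[:-1]]))
    return trans * alphas


def render_feature(sigmas, features, deltas):
    """Render a D-dimensional feature for one ray, reusing the color weights.

    features: (N, D) feature vectors predicted at the N samples.
    """
    w = composite_weights(sigmas, deltas)
    return (w[:, None] * features).sum(axis=0)


def distillation_loss(rendered, target):
    """Mean squared error between rendered features (R, D) and the 2D
    feature-extractor outputs (R, D) at the corresponding R pixels."""
    return float(np.mean((rendered - target) ** 2))
```

Because density is shared, the feature field inherits the NeRF's geometry: a feature "lands" wherever the scene is opaque.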

Keeping things open-ended

After receiving a few demonstrations, the robot applies what it knows about geometry and semantics to grasp objects it has never encountered before. Once a user submits a text query, the robot searches through the space of possible grasps to identify those most likely to succeed in picking up the object requested by the user.

Each potential option is scored based on its relevance to the prompt, its similarity to the demonstrations the robot has been trained on, and whether it causes any collisions. The highest-scoring grasp is then chosen and executed.
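The selection step described above can be sketched as "score every candidate, discard collisions, take the best". This assumes a hypothetical weighted sum of the two similarity terms with a hard veto on collisions; the field names, weights, and score ranges are illustrative and not F3RM's actual formula.

```python
from dataclasses import dataclass


@dataclass
class GraspCandidate:
    name: str
    language_score: float   # hypothetical relevance to the text prompt, in [0, 1]
    demo_similarity: float  # hypothetical similarity to demonstrated grasps, in [0, 1]
    collides: bool          # True if the gripper pose would hit the scene


def score(c, w_lang=1.0, w_demo=1.0):
    """Hypothetical scoring rule: weighted sum, colliding grasps ruled out."""
    if c.collides:
        return float("-inf")
    return w_lang * c.language_score + w_demo * c.demo_similarity


def choose_grasp(candidates):
    """Return the highest-scoring candidate, or None if all of them collide."""
    best = max(candidates, key=score)
    if score(best) == float("-inf"):
        return None
    return best
```

A veto rather than a penalty keeps a grasp that matches the prompt perfectly from being chosen if it would knock something over.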

To demonstrate the system’s ability to interpret open-ended requests from humans, the researchers prompted the robot to pick up Baymax, a character from Disney’s “Big Hero 6.” While F3RM had never been directly trained to pick up a toy of the cartoon superhero, the robot used its spatial awareness and vision-language features from the foundation models to decide which object to grasp and how to pick it up.

F3RM also enables users to specify which object they want the robot to handle at different levels of linguistic detail. For example, if there is a metal mug and a glass mug, the user can ask the robot for the “glass mug.”

If the bot sees two glass mugs and one of them is filled with coffee and the other with juice, the user can ask for the “glass mug with coffee.” The foundation model features embedded within the feature field enable this level of open-ended understanding.
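A simplified way to picture how a text query picks out "the glass mug with coffee" is to rank candidate objects by cosine similarity between a query embedding and each object's feature vector. The 3-D vectors below are hand-made stand-ins (axes loosely meaning "glass", "metal", "coffee") for the high-dimensional embeddings a vision-language model such as CLIP would produce; this ranking scheme is an illustration, not necessarily F3RM's exact query procedure.

```python
import numpy as np


def cosine_similarity(query, feats):
    """Cosine similarity between one query vector (D,) and each row of feats (N, D)."""
    q = query / np.linalg.norm(query)
    f = feats / np.linalg.norm(feats, axis=1, keepdims=True)
    return f @ q


def pick_object(query_embedding, object_features, names):
    """Return the name of the best-matching object and all similarity scores."""
    sims = cosine_similarity(query_embedding, object_features)
    return names[int(np.argmax(sims))], sims


# Hypothetical toy embeddings: [glass, metal, coffee].
names = ["metal mug", "glass mug with juice", "glass mug with coffee"]
feats = np.array([[0.0, 1.0, 0.1], [1.0, 0.0, 0.0], [1.0, 0.0, 1.0]])
best, sims = pick_object(np.array([1.0, 0.0, 1.0]), feats, names)
print(best, np.round(sims, 3))
```

Adding detail to the query ("with coffee") moves the query vector toward the one object that has that property, which is the intuition behind specifying objects at different levels of linguistic detail.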

“If I showed a person how to pick up a mug by the lip, they could easily transfer that knowledge to pick up objects with similar geometries such as bowls, measuring beakers, or even rolls of tape. For robots, achieving this level of adaptability has been quite challenging,” says MIT PhD student, CSAIL affiliate, and co-lead author William Shen.

“F3RM combines geometric understanding with semantics from foundation models trained on internet-scale data to enable this level of aggressive generalization from just a small number of demonstrations.”

Written by Alex Shipps

Source: Massachusetts Institute of Technology


#3d #A.I. & Neural Networks news #ai #approach #artificial #Artificial Intelligence #awareness #bot #Building #coffee #collage #collisions #computer #Computer Science #Containers #data #Deep Learning #engineering #Features #form #Foundation #Fundamental #Geometric #geometry #Hardware & gadgets #how #how to #human #humans #Ideas

0 notes

Text

Mitchell: The World as Exhibition

"The four Egyptians spent several days in the French capital, climbing twice the height (they were told) of the Great Pyramid in Alexandre Eiffel's new tower..."

"The Egyptian exhibit had been built by the French to represent a street of medieval Cairo...'It was intended,' one of the Egyptians wrote, 'to resemble the old aspect of Cairo.' So carefully was this done, he noted, that 'even the paint on the buildings was made dirty.'"

To the West, the rest of the world is archaic, antiquated spectacle – a curious view of the past, an antithesis to Western progress "in the right direction"

"The Egyptian visitors were disgusted by all this...Their final embarrassment had been to enter the door of the mosque and discover that...it had been erected as what the Europeans called a façade."

"Together with other non-European delegates, the Egyptians were received with hospitality–and a great curiosity...they found themselves something of an exhibit."

"What [can] this process of exhibiting tell us about the modern West[?]"

modernity as defined by the West positions its own culture as the norm and the mundane, from which "the ordering up of the world itself as an endless exhibition" can be procured as entertainment, curiosity, and an object of "interested study, intellectual analysis"

how can Islam's relationship with modernity be a positive one, defined as it is by this sort of cheapened commodification of its premises as entertainment for the "civilized West"?

Yuval Noah Harari in Sapiens posits that one of the key reasons for Western/European dominance is 'curiosity' and 'the cultural backing to question tradition/history.' Does the "curiosity of the European [encountered] in almost every subsequent Middle Eastern account" following the first Arabic description of 1800s Europe then become a defining factor/formidable strong suit of Western modernity?

Does the "[demonstration of] the history of human labor by means of 'objects and things themselves'" espoused by the European exhibitions of the latter 1800s speak to the beginnings of an increased focus on the "hardware" and "observable, empirical data" of any one subject of study?

Marr's 1982 argument from Vision, as discussed in computational social science: any information-processing system can be analyzed at three levels, 1) the problem being solved (computation), 2) the algorithm, and 3) the physical hardware that implements it; science, and especially neuroscience, today emphasizes (3) and so, some argue, comes away with an incomplete understanding of how the brain works

e.g., a bird's mechanisms and purposes for flight cannot be deduced from studying its physical hardware (its feathers) alone

in the same way, an "object lesson" is not the definitive experience of another culture/lifestyle as imagined by the "Histoire du Travail" display of the 1889 Exhibition but rather an overemphasis on the "physical hardware" and material of that culture and European imaginations and (mis)interpretations of its computational/algorithmic functions and purposes

One major Arabic response to Western creations of spectacle is heavy documentation, "[devoting] hundreds of pages to describing the peculiar order and technique of these events–the curious crowds of spectators, the scholarly exhibit and the model...the systems of classifications...the lectures, the plans and the guide books–in short the entire machinery of what we think of as representation."

"They were taken to the theater, a place where Europeans represented their history to themselves..." – non-Western cultures are represented in the same fashion, as spectacle, as traditions and culture from the West's own history. In unifying their systems of representation the West has relegated the rest of the world, including the Arab world and Islam, to the same status as its own history

Is a society's/culture's own history also a form of subjugated knowledge? i.e., when history is remembered as tragic and painted as a negative, primitive state rather than a series of traditions and stories to be revered, does that indicate the modern knowledge vanquishing/subjugating the past knowledge?

"The Europe in Arabic accounts was a place of spectacle and visual arrangement, of the organization of everything, and everything organized to represent...some larger meaning."

"intizam al-manzar, the organization of the view" – is this...the panopticon? Western visual organization of spaces, spectacles, and symbols to convey their interpretations of non-Western cultures feels very much akin to a disciplinary technique to exercise power subtly over the bodies and 'souls' of non-Western individuals/populations.

the spectator role holds no power – the spectator can only witness, and be complicit in, his own objectification

'objectification' in the sense that the individual becomes a material representation of 'the Orient' or 'the East' and all of his actions, thoughts, speech, mannerisms are subsequently first filtered through the lens of this representation to fit to the Western idea of 'his culture and people' before they are attributed to him, and the resulting communication of his identity and actions is so garbled and perverted that it really only serves to reinforce the West's perception of them.

"First, there was the apparent realism of the representation. The model or display always seemed to stand in perfect correspondence to the external world...Second, the model, however realistic, always remained distinguishable from the reality it claimed to represent...the medieval Egyptian street at the Paris Exhibition remained only a Parisian copy of the Oriental original."

Is this an example of Baudrillard's hyperreality? And if it is, does that mean that, as he states, 'neither the representation nor the real remains, just the hyperreal'?

furthermore, if only the hyperreal remains, what is the hyperreal? we know that the representation is the Parisian perception of the non-Western world, and that the real is the non-Western world itself (but is that world in the past or the present?)

so in this instance I suppose neither of them remains, and the strangely perfect-but-not "Parisian copy of the Oriental original" is the only thing present – the "effect called the real world"

"...the world of representation is being admired for its dazzling order, yet the suspicion remains that all this reality is only an effect."

Is the "search for a pictorial certainty of representation" unique to the West?

was the creation of hyperreality uniquely born of Western society? The argument is that the East is "...a world where, unlike the West, such 'objectivity' was not yet built in"

does Western hyperreality alone stand between Islam and an understanding with Western Judeo-Christian societies?

can the obstacle of exhibition/spectacle be overcome? Who needs to take the first step to overcome it, the West or the East? What does this first step look like, and is it actually possible to achieve, given that media coverage and social media in the modern era create a new hyperreality with regard to our understanding of the outside world?

The only objects and locations of value to Western modernity are those whose "pictorial certainties of representation" can inspire awe, wonder, marvel – or fit in with the overall Western representation of non-Western cultures as an exhibition of interest.

"The ability to see without being seen confirmed one's separation from the world, and constituted, at the same time, a position of power." – the panopticon guard!!

"To establish the objectness of the Orient, as a picture-reality containing no sign of the increasingly pervasive European presence required that the presence itself, ideally, become invisible." – this harks back to covert US support of Middle Eastern regimes that benefited its own oil interests in the area while it publicly espoused the area's "democratic rule," "self-governance," and "autonomy"

in pursuing an "authentic experience" as an outsider, the European spectator necessarily creates his flawed, hyperrealistic representation of the non-Western individual by appropriating "the dress and [feigning] the religious belief of the local Muslim inhabitants" as his disguise of invisibility and non-perception, despite "...being a person who had no right to intrude among them."

"Unaware that the Orient has not been arranged as an exhibition, the visitor nevertheless attempts to carry out the characteristic cognitive maneuver of the modern subject, separating himself from an object-world and observing it from a position that is invisible and set apart."

Western modernity is the European pursuit and implementation of a hyperreal representation of Islam with the underlying desire to both observe the true nature of the spectacle within and remain unobserved (thus holding onto a position of power)

"This, then, was the contradiction of Orientalism. Europeans brought to the Middle East the cognitive habits of the world-as-exhibition, and tried to grasp the Orient as a picture."

I'm not sure if this is still the prevalent world-view, or if it is one example of the Western tendency to impose its own perspective of the Middle East onto the reality of issues in Arab and non-Western countries

i.e., the viewpoint that democratization is the best and inevitable system of governance and steps away from it are 'regression' towards 'the archaic past'

when the Orient that is not created as an exhibition fails to meet the European spectator's expectations, that reality is 1) dismissed as inferior or 2) painted as corrupted by non-Western modernity, which is always 'straying away from the true form of the Orient,' which can now only be found in European definitions of the non-Western world in the eyes of the European tourist/scholar

very similar to Christian scholars stating that they 'saved Buddhism' by 'rediscovering the pure, original form of the religion' beneath 'idolatry and other corruptions of the core Buddhism'

14 notes


Quote

Perhaps the recognition of his mortality gave [David] Marr's prose a grander perspective than the details of a model of vision might otherwise have warranted. He put his view of how the brain worked into a far broader, ethical context, telling us something about how we have evolved and the origin of our most deeply-held views in the effects of natural selection: 'To say the brain is a computer is correct but misleading. It's really a highly specialised information-processing device - or rather, a whole lot of them. Viewing our brains as information-processing devices is not demeaning and does not negate human values. If anything, it tends to support them and may in the end help us to understand what from an information-processing view human values actually are, why they have selective value and how they are knitted into the capacity for social mores and organisation with which our genes have endowed us.' It has been said that the density of Marr's mathematics means that his work has more often been cited than understood. This quip reveals that Marr's significance lies not in the precise detail of his computational models of vision - even his most ardent supporters accept that much of his book is now largely of historical interest - but rather in his overall approach. Unlike [Horace] Barlow, Marr did not think that the activity of single neurons could explain how circuits function and how perception works. As Marr put it in a somewhat barbed justification of his new method: 'Trying to understand perception by studying only neurons is like trying to understand bird flight by studying only feathers: it just cannot be done. In order to study bird flight, we have to understand aerodynamics: only then do the structure of the feathers and the different shapes of bird wings make sense.'

Matthew Cobb, The Idea of the Brain

1 note


Text

[mod list] Star Wars: Knights of the Old Republic - The Sith Lords

I assembled this list for myself and friends/family, but I’m also sharing it with the world in case anyone mods like me and finds it useful. Here’s an excerpt from the convo that shaped this list into its current form...

i have a pretty basic approach to mods for kotor 1&2...

1.) no big cheats (petty stuff -- like slightly beefier stats on armor or something idc, nbd, but i don't install "hack pads," or "skip peragus," things like that)

2.) no weird sex shit. in fact, sometimes i use modesty-mods to combat cringe exploitation.

3.) no heavy-handed mods that disqualify you from using a lot of other mods (so, the massive TSL Restored Content mod is basically off the table, sad to say, sorry... maybe one day i can figure out how to make them all work together) [edit: i have made an exception for TSLRCM, because it’s pretty widely accepted by the community at this point, however this adds SIGNIFICANTLY to the whole install order issue.]

4.) i improve textures on characters, items, effects, skyboxes... but i usually don't mess with super high-res texture replacements of the world/surfaces/deco because 4k textures aren't gonna make KOTOR look THAT much better... however it DOES make your system run like garbage and present more render errors.

5.) gotta be as canon (for this game/world) as possible.
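Rule 4, and the "resized to 25%" notes further down the list, come down to texture memory. A rough back-of-envelope sketch, assuming square, uncompressed 32-bit RGBA textures with no mipmaps and reading "resized to 25%" as 25% per side. The game's own texture files are often compressed, which shrinks every number by roughly the same factor, so the ratios still hold.

```python
MIB = 1024 * 1024


def texture_mib(side_px, bytes_per_pixel=4):
    """Memory for one square, uncompressed RGBA8 texture, in MiB (no mipmaps)."""
    return side_px * side_px * bytes_per_pixel / MIB


for side in (4096, 2048, 1024, 512):
    print(f"{side}x{side}: {texture_mib(side):g} MiB")
```

Under these assumptions a 4096x4096 texture takes 64 MiB while the same texture at 25% per side (1024x1024) takes 4 MiB: a quarter of the width and height is one sixteenth of the memory, for a visual difference that is hard to see on KOTOR-era models.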

- UNOFFICIAL PATCH -

KOTOR 2 Community Patch 1.5.1 https://deadlystream.com/files/file/1280-kotor-2-community-patch/

- BUG FIXES -

Hide Weapons in Animations 🔥 https://www.nexusmods.com/kotor2/mods/53

Darth Malaks Armour https://www.nexusmods.com/kotor2/mods/9

TSL Jedi Malak Mouth Fix 1.1.0 https://deadlystream.com/files/file/1444-tsl-jedi-malak-mouth-fix/

Kotor 1 Texture to Kotor 2 Game Bridge 1.0.0 (note: these textures are the barest necessity, highly suggested even for those that don’t replace textures for quality... but will be overwritten if you load up on HD textures in the latter category) ⚠️ https://deadlystream.com/files/file/1330-kotor-1-texture-to-kotor-2-game-bridge/

JC's Supermodel Fix for K2 1.6 https://deadlystream.com/files/file/1141-jcs-supermodel-fix-for-k2/

Maintenance Officer Realistic Reskin 1.1 https://deadlystream.com/files/file/165-maintenance-officer-realistic-reskin/

Darth Nihilus Animation Fix Update https://www.nexusmods.com/kotor2/mods/1076

Revan Cutscene Forcepower Fix K2 https://www.nexusmods.com/kotor2/mods/71

Kreia's Fall cutscene (in-game) 1.1 https://deadlystream.com/files/file/1228-kreias-fall-cutscene-in-game/

- DIALOGUE CHANGES -

Dahnis Flirt Option for Female PC 1.0 https://deadlystream.com/files/file/1400-dahnis-flirt-option-for-female-pc/

Bao Dur Shield Dialogue Restoration 1.1 https://deadlystream.com/files/file/1206-bao-dur-shield-dialogue-restoration/

Choose Mira or Hanharr 🔥 https://www.moddb.com/mods/the-sith-lords-restored-content-mod-tslrcm/addons/choose-mira-or-hanharr

- PLAYER HEADMORPHS -

Canonical Jedi Exile 1.2 👩 🔥 (note: this is, personally, my fave headmorph.) https://deadlystream.com/files/file/170-canonical-jedi-exile/

PFHA04 Reskin 👩 (dark brown, contour, lips) https://www.nexusmods.com/kotor2/mods/74

TOR Ports: Meetra Surik AKA Jedi Exile Female Player Head for TSL 1.0.1 👩 https://deadlystream.com/files/file/1646-darth333s-ez-swoop-tsl/

Black Haired PMHC04 👨 https://www.nexusmods.com/kotor2/mods/1025

- COMPANION MORPHS & TEXTURES -

Darth Sapien's Presents T3M4 HD 2k 1.00 https://deadlystream.com/files/file/514-darth-sapiens-presents-t3m4-hd-2k/

Darth Sapiens presents: HD 2K Visas Marr 1.0 https://deadlystream.com/files/file/519-darth-sapiens-presents-hd-2k-visas-marr/

4k Atton 1.0 (note: only used clothes, resized to 25%) 🗜️ https://deadlystream.com/files/file/441-4k-atton/

Atton Rand with scruff https://www.nexusmods.com/kotor2/mods/38

Sparkling Mira https://deadlystream.com/files/file/527-sparkling-mira/

TSL Mira Unpoofed 1.0.1 https://deadlystream.com/files/file/1733-tsl-mira-unpoofed/

Mira Shirt Edit https://www.nexusmods.com/kotor2/mods/1075

Player & Party Underwear 2.0 🔥 https://deadlystream.com/files/file/344-player-party-underwear/

- NPC MORPHS & TEXTURES -

Luxa Hair/Body Fix 🔥 (note: INCLUDE outfit change) https://deadlystream.com/files/file/452-luxa-hair-fix/

Darth Sion remake https://www.nexusmods.com/kotor2/mods/984

Darth Sapiens Presents: HD Darth Nihilus 1.00 https://deadlystream.com/files/file/367-darth-sapiens-presents-hd-darth-nihilus/

TSL Better Male Twi'lek Heads 1.3.1 (note: thin-neck version) https://deadlystream.com/files/file/1432-tsl-better-male-twilek-heads/

Malak with Hair (note: this shit’s canon) https://deadlystream.com/files/file/919-malak-with-hair/

- ADDITIONAL ARMORS -

Jedi Journeyman Robes (Luke ROTJ Outfit) 1.0.2 🔥 https://deadlystream.com/files/file/1511-jedi-journeyman-robes-luke-rotj-outfit

TSL Bao-Dur's Charged Armor https://www.nexusmods.com/kotor2/mods/20

TSL Improved Party Outfits 🔥 https://www.nexusmods.com/kotor2/mods/934

Exile Armor (note: greyscaled in photoshop) 🛃 https://www.nexusmods.com/kotor2/mods/4

- MISC MODS -

Party Leveler TSL https://www.nexusmods.com/kotor2/mods/966

Green Level-Up Icon https://www.nexusmods.com/kotor2/mods/26

Remote Tells Influence (note: appears to not currently work with TSLRCM) ⚠️ https://www.gamefront.com/games/knights-of-the-old-republic-ii/file/remote-tells-influence

Invisible Headgear 🔥 https://deadlystream.com/files/file/736-invisible-headgear/

Darth333's EZ Swoop [TSL] 1.0.0 (note: players say it does currently work with TSLRCM) https://deadlystream.com/files/file/1646-darth333s-ez-swoop-tsl/

- HD TEXTURE UPGRADES -

note: many of these textures overlap and will overwrite one another. i haven’t listed them in any particular order... except the first two. i suggest, if you really want almost every texture in the game to be upgraded, to install them first and let everything else overwrite according to your prefs. if you wanna keep it simple, i suggest just skipping this and the next category (loading screens)
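Why install order matters: loose-file texture mods usually end up in the game's Override folder, and a file with the same name as one already there replaces it, so the last mod installed wins. The sketch below simulates that, assuming every mod is a plain file copy (patcher-based installers, such as the one TSLRCM ships with, can behave differently). Mod and file names are hypothetical.

```python
def install(mods):
    """Simulate copying mods, in order, into a single Override folder.

    mods: list of (mod_name, [file_names]) pairs in install order.
    Returns {file_name: mod_name} for whichever mod wrote each file last.
    """
    override = {}
    for mod_name, files in mods:
        for f in files:
            override[f] = mod_name  # a later copy replaces an earlier one
    return override


# Hypothetical example: big packs first, targeted mods afterwards.
result = install([
    ("world_pack", ["floor01.tga", "wall02.tga", "atton.tga"]),
    ("character_pack", ["atton.tga", "mira.tga"]),
    ("4k_atton", ["atton.tga"]),
])
print(result)
```

Installing the two big packs first means the targeted mods after them overwrite only the files they actually care about, which is the order suggested above.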

...

*KOTOR 2 UNLIMITED WORLD TEXTURE MOD (note: resized to 25%) 🗜️ https://www.nexusmods.com/kotor2/mods/1062

*Complete Character Overhaul - Ultimate HD Pack (note: resized to 25%) 🗜️ https://www.nexusmods.com/kotor2/mods/1060

High Quality Blasters https://www.nexusmods.com/kotor2/mods/919

HD Lightsabers https://www.nexusmods.com/kotor2/mods/1013

The IceEclipse Power Textures https://www.nexusmods.com/kotor2/mods/960

Fire and Ice HD https://www.nexusmods.com/kotor2/mods/25

HD Foot Locker https://www.nexusmods.com/kotor2/mods/990

TSL Backdrop Improvements https://www.nexusmods.com/kotor2/mods/922

Improved Peragus Asteroid Fields 1.2 https://deadlystream.com/files/file/321-improved-peragus-asteroid-fields/

Telos Polar Academy Hangar Skybox 1.0.0 https://deadlystream.com/files/file/1389-telos-polar-academy-hangar-skybox/

High Quality Ravager Backdrop https://www.nexusmods.com/kotor2/mods/41

High Quality Stars and Nebulas https://www.nexusmods.com/kotor2/mods/31

Sith Soldier armor retexture https://www.nexusmods.com/kotor2/mods/1037

High Poly Grenades K2 https://www.nexusmods.com/kotor2/mods/1001

HD Muzzle Flash https://www.nexusmods.com/kotor2/mods/991

KotOR 2 Remastered (AI Upscaled) Cutscenes (note: 720p) https://www.nexusmods.com/kotor2/mods/1066

K2 - Upscaled maps 🔥 https://www.nexusmods.com/kotor2/mods/1086

Atris Holocrons https://www.nexusmods.com/kotor2/mods/949

TSL HD Cockpit Skyboxes 3.1 (High Res TPC) https://deadlystream.com/files/file/931-tsl-hd-cockpit-skyboxes/

More Vibrant Skies 1.0 https://deadlystream.com/files/file/156-more-vibrant-skies/

Vurt's Exterior Graphics Overhaul https://www.nexusmods.com/kotor2/mods/1044

Ebon Hawk HD - 4X Upscaled Texture (note: resized to 25%) 🗜️ https://www.nexusmods.com/kotor2/mods/1059

Ebon Hawk Model Fixes 2.0 https://deadlystream.com/files/file/1033-ebon-hawk-model-fixes/

Peragus Administration Level Room Model Fixes 1.0.0 https://deadlystream.com/files/file/1275-peragus-administration-level-room-model-fixes/

Peragus Large Monitor Adjustment 1.0 https://deadlystream.com/files/file/317-peragus-large-monitor-adjustment/

[TSL] Animated Computer Panel 1.1.0 https://deadlystream.com/files/file/1385-tsl-animated-computer-panel/

Citadel Station Signage 1.1 https://deadlystream.com/files/file/308-citadel-station-signage/

Replacement Peragus II Artwork by Trench 1.0 https://deadlystream.com/files/file/361-replacement-peragus-ii-artwork-by-trench/

Replacement Texture for Lightning on Malachor V 1.2 https://deadlystream.com/files/file/298-replacement-texture-for-lightning-on-malachor-v/

Peragus Medical Monitors and Computer Panel 1.0.0 https://deadlystream.com/files/file/1375-peragus-medical-monitors-and-computer-panel/

TSL Animated Galaxy Map 4.1 https://deadlystream.com/files/file/219-tsl-animated-galaxy-map/

- HD LOADING SCREENS -

Replacement Loading Screens for KotOR2: Original Pack (with or without TSLRCM) - Part 1 1.1 https://deadlystream.com/files/file/1255-replacement-loading-screens-for-kotor2-original-pack-with-or-without-tslrcm-part-1/

Replacement Loading Screens for KotOR2: Original Pack (with or without TSLRCM) - Part 2 1.1 https://deadlystream.com/files/file/1256-replacement-loading-screens-for-kotor2-original-pack-with-or-without-tslrcm-part-2/

Replacement Loading Screens for KotOR2: Original Pack (with or without TSLRCM) - Part 3 1.0 https://deadlystream.com/files/file/1257-replacement-loading-screens-for-kotor2-original-pack-with-or-without-tslrcm-part-3/

Replacement Loading Screens for KotOR2: Add-On Pack (with or without TSLRCM) 1.3 https://deadlystream.com/files/file/1253-replacement-loading-screens-for-kotor2-add-on-pack-with-or-without-tslrcm/

Nar Shaddaa Loadscreens (using Sharen Thrawn skybox and with horizontal overlay) 1.0 https://deadlystream.com/files/file/1238-nar-shaddaa-loadscreens-using-sharen-thrawn-skybox-and-with-horizontal-overlay/

- UNOFFICIAL RESTORATIONS -

The Sith Lords Restored Content Mod (TSLRCM) https://www.moddb.com/mods/the-sith-lords-restored-content-mod-tslrcm

Extended Enclave (TSLRCM add-on) https://www.moddb.com/mods/the-sith-lords-restored-content-mod-tslrcm/addons/extended-enclave-tslrcm-add-on

NPC Overhaul Mod (TSLRCM add-on) (note: this is an inane mess to install, tedious AF, and idk if i can say it’s worth it... but i did it and it didn’t break anything) https://www.moddb.com/mods/the-sith-lords-restored-content-mod-tslrcm/addons/npc-overhaul-mod

Party Swap (TSLRCM add-on) 🔥 https://www.moddb.com/mods/partyswap/news/partyswap-133

Coruscant Scene No Overlay (TSLRCM add-on) https://www.moddb.com/mods/the-sith-lords-restored-content-mod-tslrcm/addons/coruscant-scene-no-overlay


Text

#Blog Post 9. ARTIFICIAL INTELLIGENCE AND MUSIC

Music is undoubtedly one of the most intriguing activities of human intelligence. For composers, artists, authors, engineers and scientists alike, computers are a fantastic tool. Each of these groups approaches music from a different perspective, but all of them can benefit from the precision and automation that computers provide. Computers have more to offer than a decent calculator, printer, audio recorder, digital effects box, or whatever their immediate practical purpose may be. Their real significance lies in the new paradigms of artistic and scientific thinking that computation makes possible. Using artificial intelligence to create music is one of the latest innovations in technology. The days of debating whether artificial intelligence (AI) will affect the music industry are over: in many ways, AI is already being used. Today you can find AI applications in music composition, production, theory and digital sound processing. AI also helps musicians test new ideas, find the right emotional context, integrate music into modern media, and simply have fun. Across huge data sets, AI automates processes, uncovers trends and patterns, and helps create efficiencies.

Into the history

First phase

Work in this period focused primarily on algorithmic composition, which aimed to produce new compositions that are aesthetically satisfying:

In 1957, Lejaren Hiller and Leonard Isaacson of the University of Illinois at Urbana programmed the ILLIAC computer to produce the “Illiac Suite for String Quartet”, often cited as the first score composed by a computer.

In 1960, Russian researcher R. Kh. Zaripov published the first paper on algorithmic music composition, using the "Ural-1" computer.

Breakthroughs

A more significant level of musical intelligence emerged with the generative modelling of music, as research began to focus on understanding music.

In the 1970s, interest in algorithmic music also reached well-known artists on the pop scene. David Bowie, an unquestionably iconic figure in the music industry, was one of the first to think in this direction: he used cut-up techniques to write lyrics, and later, in the 1990s, created the Verbasizer, a lyric-writing Mac app, with Ty Roberts.

In 1975, N. Rowe of the MIT Experimental Music Studio created a smart music perception system that lets a musician play freely on an acoustic keyboard while the computer infers the metre, tempo and note durations.

In 1980, David Cope of the University of California, Santa Cruz, founded EMI (Experiments in Musical Intelligence). The system was based on generative models that analyse existing music and create new pieces from it.

Current research

Research on AI and music continues in areas such as music composition, intelligent sound analysis, and music cognition.

Initiatives such as Google Magenta, Sony Flow Machines and IBM Watson Beat aim to find out whether AI can compose convincing music.

In 2016, the Sony CSL Research Laboratory published the first pop music composed by AI, and the results were impressive. "Daddy's Car" is a cheerful, bright tune in the style of The Beatles. The AI system composed the melody based on original Beatles material, while the lyrics and the final arrangement were done by human musicians.

Taryn Southern became known after taking part in the TV show American Idol. The next logical step was to release a new album, and Southern decided to take a non-standard approach: using AI to write it. She chose a startup called Amper as her tool. This program can generate sets of melodies for a given genre and mood using its internal algorithms. The result was the song “Break Free”.

CJ Carr and Zack Zukowski, the Boston programmers behind Dadabots, are doing something very unusual: they teach artificial intelligence to write "heavy" music. In 2017 they presented the black metal album "Coditany of Timeness", and they have also shown the public an algorithm that composes music in the death metal style. According to them, the algorithm produces pretty decent music for the genre that needs no corrections, so they decided to let it stream its tracks live on YouTube.

Interesting developments in the Musical Intelligence field

Research now focuses on using artificial intelligence in musical composition, performance and digital sound processing, as well as in the sale and consumption of music. Most AI-based systems and applications are used for teaching and creating music. Here are some of them:

AlgoTunes is a software company that develops music-generating applications. On its site, anyone can create a random piece of music in a given style and mood with a single keystroke, although the choice of settings is very limited. The web application creates the music in a few seconds, and it can be downloaded as a WAV or MIDI file.

Founded in 2015, MXX (Mashtraxx Ltd) presents itself as the world's first artificial intelligence engine for instantly adapting music to video from a stereo file. MXX lets you tailor music to specific user content, such as sports and gaming footage, computer game plots, and so on. Audition Pro, the first MXX app, allows anyone to edit music for video: load an existing song, and the app automatically adjusts its rises, amplification and pauses to match the video's dynamics. MXX also provides services to leading commercial libraries, music services, game developers and production studios that need music tailored to modern media.

Orb Composer, a program designed by Hexachords, helps users compose orchestral pieces through the stages of genre selection, instrument selection and track composition.

OrchExtra can help a small high school or community theatre ensemble perform a complete Broadway score. It plays the parts of missing instruments while following variations in tempo and musical expression.

Is AI a threat to musicians?

AI's capabilities create tension among musicians and producers, many of whom see it first and foremost as a threat to their jobs. AI-music start-ups argue that AI is a creative tool that frees musicians to create more and better art. While AI can outperform humans in areas such as background music for videos, soundtracks, or music to help you sleep, it cannot create great original music without human intervention.

Conclusion

AI will eventually change the music industry as computers and AI become more powerful and accessible. The real choice, though, is how we work with AI. Robots can take over our jobs only to the extent that we do those jobs robotically, and the creation and production of music is a creative process. Legendary songs typically emerge on their own, from deep within the heart of the poet, from passionate feelings and a unique experience of life. It is important to give musicians more control over AI applications rather than let AI take over. After all, we love musicians for their humanity and personality, not just for their music.

References

1. Deahl, D. (2018). How AI-generated music is changing the way hits are made. [Online] The Verge. Available at: https://www.theverge.com/2018/8/31/17777008/artificial-intelligence-taryn-southern-amper-music [Accessed 9 Nov. 2019].

2. Drake, J. (2018). AI & Music. [Online] Soundonsound.com. Available at: https://www.soundonsound.com/music-business/ai-music [Accessed 21 Nov. 2019].

3. En.wikipedia.org. (2019). Music and artificial intelligence. [Online] Available at: https://en.wikipedia.org/wiki/Music_and_artificial_intelligence [Accessed 21 Nov. 2019].

4. Kharkovyna, O. (n.d.). Artificial Intelligence and Music: What to Expect? [Online] Medium. Available at: https://towardsdatascience.com/artificial-intelligence-and-music-what-to-expect-f5125cfc934f [Accessed 21 Nov. 2019].

5. Li, C. (2019). A Retrospective of AI + Music. [Online] Medium. Available at: https://blog.prototypr.io/a-retrospective-of-ai-music-95bfa9b38531 [Accessed 21 Nov. 2019].

6. Love, T. (2019). Do androids dream of electric beats? How AI is changing music for good. [Online] The Guardian. Available at: https://www.theguardian.com/music/2018/oct/22/ai-artificial-intelligence-composing [Accessed 21 Nov. 2019].

7. Marr, B. (2019). The Amazing Ways Artificial Intelligence Is Transforming The Music Industry. [Online] Forbes.com. Available at: https://www.forbes.com/sites/bernardmarr/2019/07/05/the-amazing-ways-artificial-intelligence-is-transforming-the-music-industry/#23c4c1a65072 [Accessed 21 Nov. 2019].


Text

KOTOR 2 Mod list

For personal reference. Please feel free to add to this if you have further recommendations!! :) I love this game so much istg

Widescreen Fix

Reshade

Gameplay

TSLRCM M4-78EP Extended Enclave NPC Overhaul Mod Sion's arrival at Peragus - High Resolution Movies Nihilus Zombies Death CORUSCANT - JEDI TEMPLE Coruscant - Jedi Temple Compatibility For M4-78EP Peragus Sith Troops To Sith Assassins My Dantooine Statue Power Cost Correction Create all Mira's Rockets Full Jedi Council TSL Loot & Immersion Upgrade v.3b (caused crashing) TSL Improved Party Outfits The Exile's Robe Holowan Duplisaber Beta .215b Casino PAZAAK animated 1.2 SWKOTOR2 Secret Tomb Bugfix

Textures&models

KOTOR 2 Starter Pack Kotor 1 Texture to Kotor 2 Game Bridge 1.0.0 Textures Improvement Project 0.4.1 Replacement Loading Screens for KotOR2: Original Pack (Part 1) Replacement Loading Screens for KotOR2: Original Pack (Part 2) Classic Jedi Project KOTOR II Original Trilogy Lightsaber Blades (Animated) Classic Jedi Project KOTOR II Original Trilogy Lightsaber Sounds High Quality Blasters The IceEclipse Power Textures My Realistic Improved Effect Retexture Mod 1.0 Animated Galaxy Map High Quality Stars and Nebulas Movie-style Jedi Master Robes Sith Soldier Armor Retexture Combat Suit Revisited 4.2 Fixed Hologram Models and Admiralty Redux for TSLRCM 1.61 [TSL] Animated Computer Panel 1.1.0 TSL Backdrop Improvements HD Foot Locker TSL Twi'lek Male 3D Ears 1.2.1 Improved Jedi Sacks TSL Harbinger and Hammerhead Visual Appearance Mod 1.0

Places

Peragus OTE & A Darker Peragus + A Darker Peragus REDUX 1.0 Improved Peragus Asteroid Fields Peragus Large Monitor Adjustment 1.0 Peragus Medical Monitors and Computer Panel 1.0.0 TSL ORIGINS - Telos Overhaul 1.1.1 & Telos OTE / K2TSLR - The Sith Lords Remastered WIP Alpha 1-1 TSL ORIGINS: Harbinger Overhaul 1.0 New Texture of Holocrons in the Telos Secret Academy 1.2 Quansword's HD Ebon Hawk Retexture Ebon Hawk Past KOTOR2 More Subtle Animated Ebon Hawk Monitors (still not including Galaxy Map) 1.2 Dantooine and Korriban High Resolution Improved Dantooine Skybox 1.0.1 DXUN 2013 K2 Exterior Textures, Part 1 Version 0.1 Vurt’s Exterior Graphics Overhaul Narshadaa O.T.E. Realistic Nar Shaddaa Skybox HD Skybox for M4-78 Malachor Exterior Reskin By Quanon V1.3 Replacement Texture for Lightning on Malachor V 1.2 Side Opening Doors on Malachor Realistic Skybox for deathdisco's Coruscant 1.0.0 More Vibrant Skies 1.0

Characters

Party Member Reskin Mod (Kreia, Bao-Dur, Desciple, Hanharr) Atton Reskin 4K Atton (outfit) Sparkling Mira Darth Sapiens presents: HD 2K Visas Marr 1.0 Darth Sapien's Presents T3M4 HD 2k 1.00 HK-50 & HK-51 Reskin Movie Mandalorians TFU-style Darth Sion model Darth Sapiens Presents: HD Darth Nihilus 1.00 Visually Repair HK-47 1.0.0 Taibhrigh's Female Player Head Replacement Skin (PFHC07) PFHA04 Reskin Luxa Hair Fix Maintenance Officer Realistic Reskin 1.1


Text

The way direct realism was described to me made it seem very hippy

JJ Gibson's ideas being compared and contrasted with the computational model guys (Marr and Biederman) really did it for me.

Like, computational models give me the vibe of the ultimate pursuit of pure science. Break everything down into this clean, sterile categorisation of things.

Where direct realism is like, nature is chaotic. Our psychology is chaotic. You wanna study leaf litter ecology? You can't study each animal in isolation and know what would happen when all the biotic and abiotic elements are placed together! This can't be expressed with nice mathematical models

(Nature can often be studied using mathematical models, yes. But they get so big and complicated just trying to account for all the different factors lol)

#direct idealism#J J Gibson#Marr's computational model#cogntive psychology#poorly thought out psychology rant


Text

promissory notes

Complete satisfaction OR your money back!!! - is something I don't think anyone in videogames has ever willingly said, so maybe it doesn't make sense to talk of anything as stable as a "guarantee". Maybe it's more like a system of overlapping promises, designed to contain the idea that a videogame exists in at least some kind of provisional relationship to human happiness, even if the rate at which the two could be converted is never quite nailed down. We have the promise the game will work on a given system or set of minimum requirements, the hazier assurance it might at least resemble the screenshots on the box, the genre assurance that it formally and hence experientially resembles some other game you like. The assurance, in press leading up to release, of passion and artistic intent rattling around in there somewhere as well, the assurance that the game will have x y and z new features and scope. Press and external reviewers can so to speak cosign a guarantee or write their own more ambivalent one on the basis of their reputation. Storefronts as well can tacitly endorse some promise - that this thing exists, functions, falls into the category of "entertainment" - when they put it on their shelves, virtual or otherwise. There's the promise of personal reputation, that the people involved wouldn't want to associate themselves with a bogus product, and the promise of monetary interest - this game obviously had a fair bit of money put into it, they're expecting to make that back, therefore we can expect some moderate fidelity to customer expectation and the sort of general polished feel that comes with being able to hire lots of people to create bark textures.

Most of these institutions aren't specific to videogames, but I do think they have a greater prominence there, owing both to the higher amount of fussy technical variation in the format (it's hard to imagine a book, say, refusing to boot or secretly installing a bitcoin miner in your head) and also to its historical novelty. The idea that something called a "videogame" exists, is an entertainment format, is linked to some kind of prospective emotional value - all these have to be rhetorically insisted upon, particularly as the format moved from spaces with immediately visible analogues (pinball tables, mechanical amusements) to a more diffuse place alongside the family television or home computer. They had to insinuate, and to an extent still have to insinuate, the exact role they played in everyday life. And the shift from being a sort of weird, garish, once-off toy into an ongoing home-improvement project, with new games and consoles to choose between and new add-ins to improve your machine, had to be accompanied by the emergence of institutions that could offer some reassurance this ongoing investment wouldn't be a waste.

So you can maybe glibly think of videogames as a form of currency, built on the premise that they can be "exchanged" at any time for some measure of enjoyment, where this exchange rate is underwritten and co-signed by various institutions. And as having something of the abstraction of currency, as well. If one videogame is a moment of enjoyment then 6000 videogames are in principle 6000 moments of enjoyment - never mind that you may never have a chance to play all those discounted games in your Steam library within one lifetime. Think of it as saving them up for a rainy day. And I suspect that as this relationship between possession and affect grows more abstracted and tenuous, institutions take on a correspondingly more important role in confirming that the central exchange relationship still holds true. A bit like debt rating agencies - it's not so much about actually untangling the complicated sale of good, bad and nonexistent debt packages from one financial institution to another, it's more the promise that at some point this untangling COULD occur, that all this imaginary money still bears some kind of distant relationship to actual human needs.

I wonder if the paranoid style in videogames culture stems partly from this sense of underlying contingency. It's not that games are just experiences, which can't be taken away - they're more like deposits on hypothetical experience, and those deposits can indeed depreciate in value, if they don't turn out to be worthless from the start. Bad reviews, spoilers, the general reputation of a game can all cause it to drop in expected value. The fuss that happens every time a new GTA game gets below 9.5 on IGN or wherever is not so much about the game really having problems as about how having those problems flagged from the start can mar the sense of occasion, the I-was-there-ness and anticipated retrospective value that's part of the package being sold. And of course the consistent anxiety around corrupt reviewers, incorrect press releases, "fake games", all those other things that could adulterate the currency...

And maybe we could consider the current anxiety around "asset flips" on Steam in the same light. After all, who's really playing these things - besides Youtubers doing so ironically? They're easy to spot and easily refunded and even if some kid really does buy "Cuphat" or "Battleglounds" by accident, well, the worst that could happen is that they develop the same misplaced affection for exploitative consumer garbage as everybody else who grew up playing videogames. And indeed the fact that nobody really buys them is part of the critique - what's unsettling is the fact that they seem more connected to the shadow economies of cheap bundles and trading-card-store manipulation (which is so easy and widespread that PC Gamer could publish a how-to guide with no apparent pushback from anyone). You can easily unpick the specific arguments about what constitutes an asset flip versus a game that just uses premade assets, or how to tell a "scam" from just a regular bad videogame - demonstrative sincerity?? Producing cynical knockoffs with premade asset packs is not necessarily the act of poorly-funded fly-by-nights, as witness the recent news about Voodoo receiving $200 million from Goldman Sachs. But of course they're the chief source of anxiety around the issue, and the ways in which that anxiety manifests are often weirdly racialised - the automatic bad faith extended to the Global South, the fear of nameless hordes overrunning our valuable, exclusive institutions, even a sort of weird variant on the “welfare mother” imagery - the asset flipper with 100 interchangeable games, driving a Cadillac... Leeching off the accumulated value stockpiled by the Steam brand, devaluing our libraries and the institutions that have been telling us they're worth something...

I don't really have a lot of sympathy with the asset-flip discourse, both because exactly the same anxiety has been rolled out in the past to Unity, walking simulators, visual novels, Game Maker, Twine, and basically anything else that lowers the barriers to entry around making videogames; and also because I love many games I think those anxieties would try to exclude ("The Zoo Race", GoreBagg games, the Johnny series, even Limbo Of The Lost is as close as Oblivion ever came to being creatively exciting), and I think the calls for hard work and sincerity and so forth function as just so much evasive kitsch. We already HAVE a ton of games like that; and that's maybe the real problem. Why is there so much anxiety about discovering good games when, say, people are complaining about having to choose between the two different, polished, labour-of-love, years-in-the-making narrative platform games being released the same week? Doesn't this just mean the "enjoyment standard" of the videogame promissory note is by now so abstract and intangible that it's basically just an empty convention, useful for nothing but perpetuating itself - perpetuating the idea of an unadulterated good-game-ness, stretching aimlessly into space like a 1950s radio broadcast? It's a convention which is basically exclusive, which works by trying to put a cordon around the vast swathes of human culture it thinks it's safe to ignore.

Which is maybe fine - nobody can pay attention to everything, and some "rating institutions" are presumably less pernicious than others (the advice of a friend? a critic you enjoy? your own intuition?). There are obviously a lot of critiques that can be levelled at the existing one for videogames, including in particular the assumption that anything that cost a lot of money is worth at least checking out. But there's also something more generally sad about this kind of enforced, perpetual scarcity in a time of abundance, about a model that just pines for less shit so that it can start to feel relevant again, about one that can think of nothing to do with the sheer volume of things being made and rabbit holes being burrowed other than wish they didn't exist and try to shut them out entirely.

More people being able to make things is good, and hard to get to; it can also be unnerving and disorienting and also push against some of the happier ideas we might have had about the democratization of art-production (for example, that this wouldn't co-exist with monopolies of arbitrary unaccountable control of the kind exercised by Youtube, Steam, the App store, Google, etc...), it can be a space to view some of the weirder machinations of capital as they leave traces through the culture (money-laundering $9000 books on Amazon and viral Pregnant Spiderman youtube vids). I don't think continuing to defend the value of the medium will help think about these, or become anything but more and more paranoid and quixotic over time.


Text

Interesting Papers for Week 10, 2023

A computational model to explore how temporal stimulation patterns affect synapse plasticity. Amano, R., Nakao, M., Matsumiya, K., & Miwakeichi, F. (2022). PLOS ONE, 17(9), e0275059.

Distinct population and single-neuron selectivity for executive and episodic processing in human dorsal posterior cingulate. Aponik-Gremillion, L., Chen, Y. Y., Bartoli, E., Koslov, S. R., Rey, H. G., Weiner, K. S., … Foster, B. L. (2022). eLife, 11, e80722.

Hippocampal astrocytes encode reward location. Doron, A., Rubin, A., Benmelech-Chovav, A., Benaim, N., Carmi, T., Refaeli, R., … Goshen, I. (2022). Nature, 609(7928), 772–778.

Classical center-surround receptive fields facilitate novel object detection in retinal bipolar cells. Gaynes, J. A., Budoff, S. A., Grybko, M. J., Hunt, J. B., & Poleg-Polsky, A. (2022). Nature Communications, 13, 5575.

Inferring stimulation induced short-term synaptic plasticity dynamics using novel dual optimization algorithm. Ghadimi, A., Steiner, L. A., Popovic, M. R., Milosevic, L., & Lankarany, M. (2022). PLOS ONE, 17(9), e0273699.

Context-dependent selectivity to natural images in the retina. Goldin, M. A., Lefebvre, B., Virgili, S., Pham Van Cang, M. K., Ecker, A., Mora, T., … Marre, O. (2022). Nature Communications, 13, 5556.

Dendritic axon origin enables information gating by perisomatic inhibition in pyramidal neurons. Hodapp, A., Kaiser, M. E., Thome, C., Ding, L., Rozov, A., Klumpp, M., … Both, M. (2022). Science, 377(6613), 1448–1452.

Long‐term modulation of the axonal refractory period. Jankowska, E., Kaczmarek, D., & Hammar, I. (2022). European Journal of Neuroscience, 56(7), 4983–4999.

Flexible control as surrogate reward or dynamic reward maximization. Liljeholm, M. (2022). Cognition, 229, 105262.

Mixed synapses reconcile violations of the size principle in zebrafish spinal cord. Menelaou, E., Kishore, S., & McLean, D. L. (2022). eLife, 11, e64063.

Adolescents sample more information prior to decisions than adults when effort costs increase. Niebaum, J. C., Kramer, A.-W., Huizenga, H. M., & van den Bos, W. (2022). Developmental Psychology, 58(10), 1974–1985.

Neural signatures of evidence accumulation in temporal decisions. Ofir, N., & Landau, A. N. (2022). Current Biology, 32(18), 4093-4100.e6.

Offset responses in the auditory cortex show unique history dependence. Olsen, T., & Hasenstaub, A. (2022). Journal of Neuroscience, 42(39), 7370–7385.

Look-up and look-down neurons in the mouse visual thalamus during freely moving exploration. Orlowska-Feuer, P., Ebrahimi, A. S., Zippo, A. G., Petersen, R. S., Lucas, R. J., & Storchi, R. (2022). Current Biology, 32(18), 3987-3999.e4.

Interaction of bottom-up and top-down neural mechanisms in spatial multi-talker speech perception. Patel, P., van der Heijden, K., Bickel, S., Herrero, J. L., Mehta, A. D., & Mesgarani, N. (2022). Current Biology, 32(18), 3971-3986.e4.

Spatial distances affect temporal prediction and interception. Schroeger, A., Grießbach, E., Raab, M., & Cañal-Bruland, R. (2022). Scientific Reports, 12, 15786.

Never run a changing system: Action-effect contingency shapes prospective agency. Schwarz, K. A., Klaffehn, A. L., Hauke-Forman, N., Muth, F. V., & Pfister, R. (2022). Cognition, 229, 105250.

Contraction bias in temporal estimation. Tal-Perry, N., & Yuval-Greenberg, S. (2022). Cognition, 229, 105234.

Response Time Distributions and the Accumulation of Visual Evidence in Freely Moving Mice. Treviño, M., Medina-Coss y León, R., & Lezama, E. (2022). Neuroscience, 501, 25–41.

Active neural coordination of motor behaviors with internal states. Zhang, Y. S., Takahashi, D. Y., El Hady, A., Liao, D. A., & Ghazanfar, A. A. (2022). Proceedings of the National Academy of Sciences, 119(39), e2201194119.

#science#Neuroscience#computational neuroscience#Brain science#research#cognition#cognitive science#psychophysics#neurons#neural networks#neurobiology#neural computation#scientific publications


Text

Using language to give robots a better grasp of an open-ended world

New Post has been published on https://thedigitalinsider.com/using-language-to-give-robots-a-better-grasp-of-an-open-ended-world/


Imagine you’re visiting a friend abroad, and you look inside their fridge to see what would make for a great breakfast. Many of the items initially appear foreign to you, with each one encased in unfamiliar packaging and containers. Despite these visual distinctions, you begin to understand what each one is used for and pick them up as needed.

Inspired by humans’ ability to handle unfamiliar objects, a group from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) designed Feature Fields for Robotic Manipulation (F3RM), a system that blends 2D images with foundation model features into 3D scenes to help robots identify and grasp nearby items. F3RM can interpret open-ended language prompts from humans, making the method helpful in real-world environments that contain thousands of objects, like warehouses and households.

F3RM offers robots the ability to interpret open-ended text prompts using natural language, helping the machines manipulate objects. As a result, the machines can understand less-specific requests from humans and still complete the desired task. For example, if a user asks the robot to “pick up a tall mug,” the robot can locate and grab the item that best fits that description.
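To make the "best fits that description" step concrete, here is a toy Python sketch of ranking candidate objects against a text prompt by cosine similarity. It is not F3RM's actual pipeline: it assumes each object has already been reduced to a single feature vector and that the prompt has been embedded into the same space by some vision-language model. The names `cosine`, `best_match`, `objects` and `query`, and all the vectors, are hypothetical stand-ins chosen for illustration.

```python
import math

# Toy sketch (hypothetical, not F3RM's code): choose the object whose
# made-up feature vector is closest to a made-up text-prompt embedding.


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def best_match(query_embedding, object_features):
    """Return the name of the object most similar to the query embedding."""
    return max(
        object_features,
        key=lambda name: cosine(query_embedding, object_features[name]),
    )


# Made-up 3-D vectors standing in for real high-dimensional embeddings.
objects = {
    "tall mug": [0.9, 0.1, 0.3],
    "short cup": [0.6, 0.7, 0.1],
    "screwdriver": [0.0, 0.2, 0.95],
}
query = [0.85, 0.2, 0.25]  # pretend embedding of "pick up a tall mug"

print(best_match(query, objects))  # prints: tall mug
```

Taking the highest similarity instead of requiring an exact label match is what lets a loosely worded request still land on the closest item. Per the description above, F3RM's features are spread across a 3D scene rather than stored as one vector per object, so this sketch only illustrates the matching idea.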

“Making robots that can actually generalize in the real world is incredibly hard,” says Ge Yang, postdoc at the National Science Foundation AI Institute for Artificial Intelligence and Fundamental Interactions and MIT CSAIL. “We really want to figure out how to do that, so with this project, we try to push for an aggressive level of generalization, from just three or four objects to anything we find in MIT’s Stata Center. We wanted to learn how to make robots as flexible as ourselves, since we can grasp and place objects even though we’ve never seen them before.”

Learning “what’s where by looking”

The method could assist robots with picking items in large fulfillment centers with inevitable clutter and unpredictability. In these warehouses, robots are often given a description of the inventory that they’re required to identify. The robots must match the text provided to an object, regardless of variations in packaging, so that customers’ orders are shipped correctly.

For example, the fulfillment centers of major online retailers can contain millions of items, many of which a robot will never have encountered before. To operate at such a scale, robots need to understand the geometry and semantics of different items, some of which sit in tight spaces. With F3RM’s advanced spatial and semantic perception abilities, a robot could become more effective at locating an object, placing it in a bin, and then sending it along for packaging. Ultimately, this would help factory workers ship customers’ orders more efficiently.

“One thing that often surprises people with F3RM is that the same system also works on a room and building scale, and can be used to build simulation environments for robot learning and large maps,” says Yang. “But before we scale up this work further, we want to first make this system work really fast. This way, we can use this type of representation for more dynamic robotic control tasks, hopefully in real-time, so that robots that handle more dynamic tasks can use it for perception.”

The MIT team notes that F3RM’s ability to understand different scenes could make it useful in urban and household environments. For example, the approach could help personalized robots identify and pick up specific items. The system aids robots in grasping their surroundings — both physically and perceptively.

“Visual perception was defined by David Marr as the problem of knowing ‘what is where by looking,’” says senior author Phillip Isola, MIT associate professor of electrical engineering and computer science and CSAIL principal investigator. “Recent foundation models have gotten really good at knowing what they are looking at; they can recognize thousands of object categories and provide detailed text descriptions of images. At the same time, radiance fields have gotten really good at representing where stuff is in a scene. The combination of these two approaches can create a representation of what is where in 3D, and what our work shows is that this combination is especially useful for robotic tasks, which require manipulating objects in 3D.”
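To make Marr’s “what is where” framing concrete, here is a minimal sketch of a query over a toy 3D feature field, assuming each 3D point carries a position (the “where”) and a semantic vector (the “what”). The function names `cosine` and `locate`, the 2-D vectors, and the 0.8 threshold are all hypothetical illustrations, not F3RM’s code; in the real system the semantic vectors would come from a foundation model rather than being typed in by hand.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return dot / (na * nb)

def locate(points, features, query, threshold=0.8):
    """Answer 'where is <query>?' over a toy 3D feature field (hypothetical helper).

    points:   list of (x, y, z) positions (the "where")
    features: list of semantic vectors, one per point (the "what")
    query:    semantic vector for the thing being looked for
    Returns the centroid of matching points, or None if nothing matches.
    """
    hits = [p for p, f in zip(points, features) if cosine(f, query) >= threshold]
    if not hits:
        return None
    n = len(hits)
    return tuple(sum(p[i] for p in hits) / n for i in range(3))

# Toy scene: two points on a "mug", one on a "plate" (made-up 2-D semantic vectors).
points = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (1.0, 1.0, 1.0)]
features = [(1.0, 0.0), (0.9, 0.1), (0.0, 1.0)]
mug_query = (1.0, 0.0)
print(locate(points, features, mug_query))  # approximately (0.05, 0.0, 0.0)
```

The semantic test picks out the matching points, and their geometry gives the answer’s location, which is the division of labor Isola describes between foundation models and radiance fields.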

Creating a “digital twin”

F3RM begins to understand its surroundings by taking pictures with a camera mounted on a selfie stick. The camera snaps 50 images at different poses, which F3RM uses to build a neural radiance field (NeRF), a deep learning method that constructs a 3D scene from 2D images. This collection of RGB photos yields a “digital twin” of the surroundings: a 360-degree representation of what’s nearby.
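A NeRF renders each pixel by marching a ray through the scene and blending the color predicted at each sample point. The sketch below shows only that standard volume-rendering quadrature from the original NeRF formulation, under the assumption that densities (`sigmas`), colors, and sample spacings (`deltas`) have already been produced by a trained network; here they are hardcoded toy values, and `render_ray` is a hypothetical name, not part of F3RM.

```python
import math

def render_ray(sigmas, colors, deltas):
    """Standard NeRF volume rendering along one ray (toy, pure Python).

    sigmas: volume density at each sample along the ray
    colors: (r, g, b) predicted at each sample
    deltas: distance between consecutive samples
    Returns the blended pixel color and the per-sample weights.
    """
    transmittance = 1.0          # fraction of light that survives to this sample
    rgb = [0.0, 0.0, 0.0]
    weights = []
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)   # opacity of this segment
        w = transmittance * alpha                # contribution of this sample
        weights.append(w)
        for k in range(3):
            rgb[k] += w * color[k]
        transmittance *= 1.0 - alpha             # light remaining after this segment
    return rgb, weights

# Empty space, then a dense green surface that hides the blue sample behind it.
rgb, weights = render_ray(
    sigmas=[0.0, 100.0, 100.0],
    colors=[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)],
    deltas=[0.1, 0.1, 0.1],
)
print(rgb)  # close to pure green
```

Training a NeRF amounts to adjusting the network so that rays rendered this way reproduce the pixels of the captured photos.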

In addition to a highly detailed neural radiance field, F3RM also builds a feature field to augment geometry with semantic information. The system uses CLIP, a vision-language foundation model trained on hundreds of millions of image-caption pairs to efficiently learn visual concepts. By reconstructing the 2D CLIP features for the images taken by the selfie-stick camera, F3RM effectively lifts the 2D features into a 3D representation.
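One common way to “lift” 2D features into 3D, assumed here for illustration, is feature distillation: render a feature vector for each pixel with the same volume-rendering weights used for color, then penalize its squared distance from the 2D feature extracted from that photo. The article does not spell out F3RM’s loss or network, so treat `render_feature`, `distillation_loss`, and the 4-D toy vectors (standing in for much larger CLIP features) as hypothetical.

```python
import math

def render_feature(sigmas, feats, deltas):
    """Blend per-sample feature vectors along a ray with NeRF-style weights."""
    transmittance = 1.0
    out = [0.0] * len(feats[0])
    for sigma, f, delta in zip(sigmas, feats, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)
        w = transmittance * alpha
        for k in range(len(out)):
            out[k] += w * f[k]
        transmittance *= 1.0 - alpha
    return out

def distillation_loss(rendered, target):
    """Mean squared error between a rendered feature and the 2D target feature."""
    return sum((r - t) ** 2 for r, t in zip(rendered, target)) / len(target)

# Toy ray: a dense first sample dominates, so the rendered feature matches it.
rendered = render_feature(
    sigmas=[50.0, 50.0],
    feats=[(1.0, 0.0, 0.0, 0.0), (0.0, 1.0, 0.0, 0.0)],
    deltas=[1.0, 1.0],
)
target_2d = (1.0, 0.0, 0.0, 0.0)   # hypothetical 2D feature for this pixel
print(distillation_loss(rendered, target_2d))  # essentially zero
```

Minimizing this loss over many rays and photos is what makes the 3D field agree with the 2D features from every viewpoint.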

Keeping things open-ended

After receiving a few demonstrations, the robot applies what it knows about geometry and semantics to grasp objects it has never encountered before. Once a user submits a text query, the robot searches through the space of possible grasps to identify those most likely to succeed in picking up the requested object. Each candidate is scored on its relevance to the prompt, its similarity to the demonstrations the robot has been trained on, and whether it causes any collisions. The highest-scoring grasp is then chosen and executed.
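The scoring step can be sketched as follows, assuming each candidate grasp already has a language-relevance score and a demonstration-similarity score. The `Grasp` fields, the equal weights, and the choice to treat a collision as a hard veto (rather than a soft penalty) are all our assumptions; the article says only that collisions factor into the score.

```python
from dataclasses import dataclass

@dataclass
class Grasp:
    name: str
    language_relevance: float  # how well the grasped region matches the text query
    demo_similarity: float     # how closely it resembles the demonstrated grasps
    collides: bool             # whether the gripper pose would hit the scene

def score(g: Grasp, w_lang: float = 1.0, w_demo: float = 1.0) -> float:
    """Weighted sum of the two similarity terms; colliding grasps are vetoed."""
    if g.collides:
        return float("-inf")
    return w_lang * g.language_relevance + w_demo * g.demo_similarity

def choose_grasp(candidates):
    """Return the highest-scoring collision-free grasp, or None if there is none."""
    if not candidates:
        return None
    best = max(candidates, key=score)
    return None if score(best) == float("-inf") else best

candidates = [
    Grasp("mug handle", 0.9, 0.8, collides=False),
    Grasp("mug rim", 0.95, 0.9, collides=True),   # best match, but blocked
    Grasp("bowl edge", 0.2, 0.7, collides=False),
]
print(choose_grasp(candidates).name)  # mug handle
```

A hard veto keeps an unsafe grasp from winning on semantics alone; a soft penalty would instead let a strong match tolerate minor contact.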

To demonstrate the system’s ability to interpret open-ended requests from humans, the researchers prompted the robot to pick up Baymax, a character from Disney’s “Big Hero 6.” While F3RM had never been directly trained to pick up a toy of the cartoon superhero, the robot used its spatial awareness and vision-language features from the foundation models to decide which object to grasp and how to pick it up.

F3RM also enables users to specify which object they want the robot to handle at different levels of linguistic detail. For example, if there is a metal mug and a glass mug, the user can ask the robot for the “glass mug.” If the robot sees two glass mugs, one filled with coffee and the other with juice, the user can ask for the “glass mug with coffee.” The foundation model features embedded within the feature field enable this level of open-ended understanding.
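A minimal sketch of how more detailed phrasing narrows the match, assuming objects and queries are compared by cosine similarity in a shared embedding space. The 4-D vectors below (axes loosely meaning glass, metal, coffee, juice) are invented for illustration; in practice the query would come from CLIP’s text encoder and the object features from the feature field.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 0.0 if na == 0.0 or nb == 0.0 else dot / (na * nb)

def rank_objects(query, object_features):
    """Object names sorted from best to worst match (ties keep insertion order)."""
    return sorted(object_features,
                  key=lambda name: cosine(query, object_features[name]),
                  reverse=True)

# Hypothetical embeddings: axes = (glass, metal, coffee, juice).
objects = {
    "metal mug": (0.0, 1.0, 0.0, 0.0),
    "glass mug with juice": (1.0, 0.0, 0.0, 1.0),
    "glass mug with coffee": (1.0, 0.0, 1.0, 0.0),
}
glass_mug = (1.0, 0.0, 0.0, 0.0)
glass_mug_with_coffee = (1.0, 0.0, 1.0, 0.0)

print(rank_objects(glass_mug, objects))              # both glass mugs tie; metal last
print(rank_objects(glass_mug_with_coffee, objects))  # coffee mug first
```

“Glass mug” separates glass from metal but cannot break the tie between the two glass mugs; adding “with coffee” supplies the extra signal that does.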

“If I showed a person how to pick up a mug by the lip, they could easily transfer that knowledge to pick up objects with similar geometries such as bowls, measuring beakers, or even rolls of tape. For robots, achieving this level of adaptability has been quite challenging,” says MIT PhD student, CSAIL affiliate, and co-lead author William Shen. “F3RM combines geometric understanding with semantics from foundation models trained on internet-scale data to enable this level of aggressive generalization from just a small number of demonstrations.”

Shen and Yang wrote the paper under the supervision of Isola, with MIT professor and CSAIL principal investigator Leslie Pack Kaelbling and undergraduate students Alan Yu and Jansen Wong as co-authors. The team was supported, in part, by Amazon.com Services, the National Science Foundation, the Air Force Office of Scientific Research, the Office of Naval Research’s Multidisciplinary University Research Initiative, the Army Research Office, the MIT-IBM Watson AI Lab, and the MIT Quest for Intelligence. Their work will be presented at the 2023 Conference on Robot Learning.

#2023#3d#ai#air#air force#Amazon#approach#artificial#Artificial Intelligence#awareness#bot#Building#coffee#collage#collisions#computer#Computer Science#Computer Science and Artificial Intelligence Laboratory (CSAIL)#Computer science and technology#Computer vision#conference#Containers#data#Deep Learning#Electrical Engineering&Computer Science (eecs)#engineering#Features#form#Foundation#Fundamental
