#neural computation

Text

Interesting Papers for Week 3, 2025

Synaptic weight dynamics underlying memory consolidation: Implications for learning rules, circuit organization, and circuit function. Bhasin, B. J., Raymond, J. L., & Goldman, M. S. (2024). Proceedings of the National Academy of Sciences, 121(41), e2406010121.

Characterization of the temporal stability of ToM and pain functional brain networks carry distinct developmental signatures during naturalistic viewing. Bhavna, K., Ghosh, N., Banerjee, R., & Roy, D. (2024). Scientific Reports, 14, 22479.

Connectomic reconstruction predicts visual features used for navigation. Garner, D., Kind, E., Lai, J. Y. H., Nern, A., Zhao, A., Houghton, L., … Kim, S. S. (2024). Nature, 634(8032), 181–190.

Socialization causes long-lasting behavioral changes. Gil-Martí, B., Isidro-Mézcua, J., Poza-Rodriguez, A., Asti Tello, G. S., Treves, G., Turiégano, E., … Martin, F. A. (2024). Scientific Reports, 14, 22302.

Neural pathways and computations that achieve stable contrast processing tuned to natural scenes. Gür, B., Ramirez, L., Cornean, J., Thurn, F., Molina-Obando, S., Ramos-Traslosheros, G., & Silies, M. (2024). Nature Communications, 15, 8580.

Lack of optimistic bias during social evaluation learning reflects reduced positive self-beliefs in depression and social anxiety, but via distinct mechanisms. Hoffmann, J. A., Hobbs, C., Moutoussis, M., & Button, K. S. (2024). Scientific Reports, 14, 22471.

Causal involvement of dorsomedial prefrontal cortex in learning the predictability of observable actions. Kang, P., Moisa, M., Lindström, B., Soutschek, A., Ruff, C. C., & Tobler, P. N. (2024). Nature Communications, 15, 8305.

A transient high-dimensional geometry affords stable conjunctive subspaces for efficient action selection. Kikumoto, A., Bhandari, A., Shibata, K., & Badre, D. (2024). Nature Communications, 15, 8513.

Presaccadic Attention Enhances and Reshapes the Contrast Sensitivity Function Differentially around the Visual Field. Kwak, Y., Zhao, Y., Lu, Z.-L., Hanning, N. M., & Carrasco, M. (2024). eNeuro, 11(9), ENEURO.0243-24.2024.

Transformation of neural coding for vibrotactile stimuli along the ascending somatosensory pathway. Lee, K.-S., Loutit, A. J., de Thomas Wagner, D., Sanders, M., Prsa, M., & Huber, D. (2024). Neuron, 112(19), 3343-3353.e7.

Inhibitory plasticity supports replay generalization in the hippocampus. Liao, Z., Terada, S., Raikov, I. G., Hadjiabadi, D., Szoboszlay, M., Soltesz, I., & Losonczy, A. (2024). Nature Neuroscience, 27(10), 1987–1998.

Third-party punishment-like behavior in a rat model. Mikami, K., Kigami, Y., Doi, T., Choudhury, M. E., Nishikawa, Y., Takahashi, R., … Tanaka, J. (2024). Scientific Reports, 14, 22310.

The morphospace of the brain-cognition organisation. Pacella, V., Nozais, V., Talozzi, L., Abdallah, M., Wassermann, D., Forkel, S. J., & Thiebaut de Schotten, M. (2024). Nature Communications, 15, 8452.

A Drosophila computational brain model reveals sensorimotor processing. Shiu, P. K., Sterne, G. R., Spiller, N., Franconville, R., Sandoval, A., Zhou, J., … Scott, K. (2024). Nature, 634(8032), 210–219.

Decision-making shapes dynamic inter-areal communication within macaque ventral frontal cortex. Stoll, F. M., & Rudebeck, P. H. (2024). Current Biology, 34(19), 4526-4538.e5.

Intrinsic Motivation in Dynamical Control Systems. Tiomkin, S., Nemenman, I., Polani, D., & Tishby, N. (2024). PRX Life, 2(3), 033009.

Coding of self and environment by Pacinian neurons in freely moving animals. Turecek, J., & Ginty, D. D. (2024). Neuron, 112(19), 3267-3277.e6.

The role of training variability for model-based and model-free learning of an arbitrary visuomotor mapping. Velázquez-Vargas, C. A., Daw, N. D., & Taylor, J. A. (2024). PLOS Computational Biology, 20(9), e1012471.

Rejecting unfairness enhances the implicit sense of agency in the human brain. Wang, Y., & Zhou, J. (2024). Scientific Reports, 14, 22822.

Impaired motor-to-sensory transformation mediates auditory hallucinations. Yang, F., Zhu, H., Cao, X., Li, H., Fang, X., Yu, L., … Tian, X. (2024). PLOS Biology, 22(10), e3002836.

#science#scientific publications#neuroscience#research#brain science#cognitive science#neurobiology#cognition#psychophysics#neural computation#computational neuroscience#neural networks#neurons

29 notes

Text

*GUNDAMs your clones*

#Star Wars#TCW#Commander Cody#Captain Rex#GUNDAM#I started this in a GUNDAM-happy fervor last summer because I'd been rewatching Witch from Mercury with a couple friends#And had a long commute good for crossover brainstorming#The art got put on the shelf for other things but it's finally done!#For some bonus info:#(more of which is in my first reblog haha)#A bunch of CC-2224's main body bits are also detachable GUND-Bits#I haven't decided if ARC-7567 has any GUND-Bits but maybe the kama detaches to do... something. Shielding maybe.#But they deffo also both have beam swords (CCs get 1 ARCs get 2) hidden away somewhere#They require pilots but each GUNDAM (General Utility Neural-Directed Assault Machine) has an unusually advanced computer intelligence#Nobody's quite sure how Kamino produces them...#If I was cool I would've gotten creative with the blasters/bits and made the text more mechanical but it was not happening chief#Anyways enjoy!#ID in alt text#Edit: flipped one of the flight packs for better visual symmetry

376 notes

Text

their ship name is pathways. in my heart.

#art tag#pressure#pressure roblox#p.ai.nter pressure#painter pressure#sebastian solace#sebpainter#pathways#<--- if i post any more of them thats how im tagging it. bless.#pathways like a computers neural pathways... pathways like the path seb is leading painter out of the blacksite on.... heh. HEH

756 notes

Text

hey i need some help

I'm developing a video compression algorithm and I'm trying to figure out how to encode it in a way that actually looks good.

basically, each frame is made up of a grid of 5x8 pixel tiles, each cell being one of 16 tiles. 8 of these tiles can be anything, while the other 8 are hard-coded.

so far, my algorithm simply compares each tile of the input frame to each hard-coded tile, and the 8 tiles that match the least are set to the "custom" tiles, and the other ones that match the hard-coded ones more are set to those hard-coded ones.

this works okay, but it doesn't account for two input frame tiles being identical or similar, in which case it would be better to re-use one custom tile for both (eg, if the whole screen is black: due to the limitations of the screen I'm using, a solid black tile must be a custom tile, but a solid white one can be hard-coded).

speed isn't that important, as each frame is only 80x16 pixels at the most, with one bit per pixel, and each tile is 5x8 pixels, for a grid of 16x2 tiles.

TL;DR: I need help writing an algorithm that can arrange 16 tiles into a 16x2 grid, while also determining the best pattern to set 8 of those tiles to, while leaving the other 8 constant.
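One possible way to handle the re-use problem described above, sketched in Python under some assumptions: each 5x8 tile is packed into a 40-bit integer, similarity is measured by Hamming distance (the number of differing pixels), and custom tiles are picked greedily, each time taking the input tile that removes the most total pixel error across the whole frame. The names `hamming` and `choose_tiles` and the palette index layout (hard-coded tiles first, custom tiles after) are made up for illustration, and the greedy choice is a heuristic rather than a guaranteed optimum.

```python
from typing import List, Sequence, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing pixels between two tiles packed as integers."""
    return bin(a ^ b).count("1")


def choose_tiles(
    frame: Sequence[int], fixed: Sequence[int], n_custom: int = 8
) -> Tuple[List[int], List[int]]:
    """Pick up to n_custom custom tiles and assign every cell a palette index.

    frame: one packed tile per grid cell (32 cells for a 16x2 grid).
    fixed: the hard-coded tiles (palette indices 0..len(fixed)-1).
    Returns (custom_tiles, assignment); custom tile k gets index len(fixed)+k.
    """
    # Best error per cell when only the hard-coded tiles are available.
    best = [min(hamming(t, f) for f in fixed) for t in frame]
    custom: List[int] = []
    candidates = set(frame)  # identical input tiles collapse to one candidate

    for _ in range(n_custom):
        best_gain, best_cand = 0, None
        for cand in sorted(candidates):
            # Total error this candidate would remove across the whole frame.
            gain = sum(max(0, b - hamming(t, cand)) for t, b in zip(frame, best))
            if gain > best_gain:
                best_gain, best_cand = gain, cand
        if best_cand is None:
            break  # nothing left that improves the frame
        custom.append(best_cand)
        candidates.discard(best_cand)
        best = [min(b, hamming(t, best_cand)) for t, b in zip(frame, best)]

    palette = list(fixed) + custom
    assignment = [
        min(range(len(palette)), key=lambda i: hamming(t, palette[i]))
        for t in frame
    ]
    return custom, assignment
```

With an all-black frame this spends a single custom slot on the black tile and points every cell at it, leaving the other seven slots free. Since speed isn't a concern at this frame size, the nested loops should be fine as they are.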

#programming#progblr#codeblr#algorithm#computer science#please somebody help me#i might try training a neural network to do this?#though i dont have a lot of experience with NNs#and idk if theyd work well with this kinda thing

16 notes

Text

Dogstomp #3239 - November 21st

Patreon / Discord Server / Itaku / Bluesky

#I drew that neural network diagram long before discovering that that's how computer neural nets are actually depicted#comic diary#comic journal#autobio comics#comics#webcomics#furry#furry art#november 21 2023#comic 3239#neural network

61 notes

Text

You know what really grinds my gears?

Working with people who don't understand how a neural computer works.

Be it some mass ratio optimizing payload engineer, a logistics officer frustrated with the difficulties caused by our team's solutions or just our boss looking for reasons to fire us because they thought our initial cost estimate was "unrealistically high" and are now sorely disappointed at reality, these people are miserable to deal with. On the surface, their complaints make sense; we are seemingly doing a much worse job than everyone else is and anything we come up with creates lots of problems for them. Satisfying all their demands, however, is impossible. With this post I intend to educate my audience on

Neural Computers 101

so that my blog's engineer-heavy audience may understand the inevitable troubles those in my field seemingly summon out of thin air and so that you people will hopefully not bother us quite as much anymore.

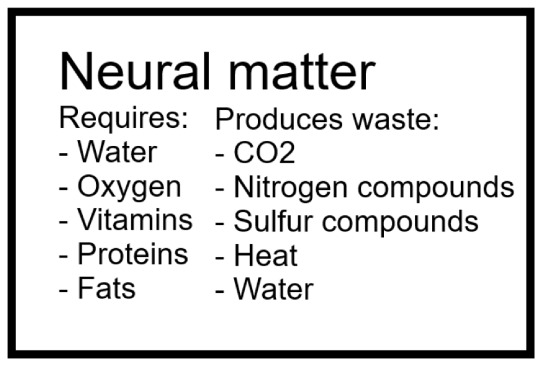

First of all, neural matter is extremely resource heavy. Not by mass, mind you; a BNC of 2 kilograms requires only a few dozen grams of whatever standardized or specialized mix of sustenance is preferred in a single martian day. (I'm not going to bother converting that.) The inconvenient part is the sheer variety in the things they need and the waste products they create.

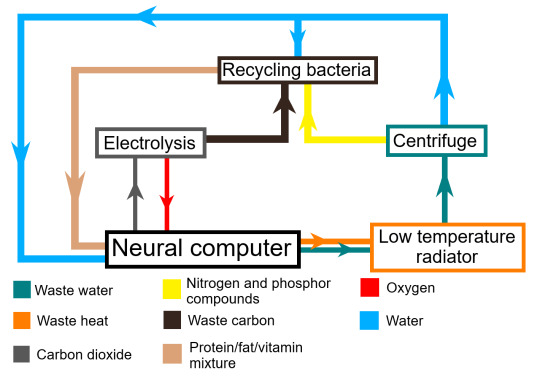

This is just a shortened list, but already it causes problems. If you want to create a self contained system to avoid having to refuel constantly, you will need a lot of mass and a lot of complexity. This is what a typical sustenance diagram for such a system looks like:

(Keep in mind, this diagram doesn't even have electricity drawn in.)

Typically these systems are even more complicated, with redundancies and extra steps. In any case, this is complicated, energy expensive and a nightmare for maintenance crews. I mean, just keeping the bacterial microbiome alive is a lot of effort!

Second of all, neural matter is extremely vulnerable. Most power plant and rocket designers just round away all temperature changes less than 100 K, but neural matter will outright die if its temperature is just a few kelvin off of the typical value. The same goes for a lot of other things - you'll need some serious temperature regulation, shock absorption, radiation shielding (damn it I wish we had access to the same stuff as those madmen in the JMR) and on top of all of that, you need to consider mental instability!

That last one is kind of the biggest pain in the ass for these things - we need to give them a damn game to play whenever they don't have any real work to deal with or they degrade and start to go insane. (Don't worry, I'm not stupid, I know these things aren't actually sentient, I'm just saying that to illustrate the way they work.) It can't even be the same game - you need to design one based on what the NC is designed to do! (Game is a misleading term by the way; it's not like a traditional video game. No graphics - just a set of variables, functions and parameters on a simple circuit board that the NC can influence.)

And lastly, neural computers are complicated. Dear Olympus are they complicated. There are so so many ways to build them, and the process of deriving which one to use is extremely difficult. You can't blame the NC team for an inappropriate computer if the damn specifications keep changing every week!

There's the always-on, calculation-heavy, simple and slow Pennington circuits, the iconic Gobbs cycle (Bloody love that thing!), the Anesuki thinknet and its derivatives, the Klenowicz for those insane venusians and so so many more frameworks for both ANCs and BNCs. Oh yeah, by the way, the acronyms ANC and BNC actually don't stand for Advanced and Basic Neural Computer respectively. They stand for Type A Neural Computer and Type B Neural Computer. It comes from that revolutionary paper written by Anesuki.

8 notes

Text

simulation of schizophrenia

so i built a simulation of schizophrenia using rust and python

basically you have two groups of simulated neurons, one inhibitory and one excitatory. the excitatory group is connected so they will settle on one specific pattern. the inhibitory group is connected to the excitatory group semi-randomly. the excitatory group releases glutamate while the inhibitory group releases gaba. glutamate will cause the neurons to increase in voltage (or depolarize), gaba will cause the neurons to decrease in voltage (hyperpolarize).

heres a quick visualization of the results in manim

the y axis represents the average firing rate of the excitatory group over time, and decay refers to how quickly glutamate is cleared from the synapse. there are two versions of the simulation: one where the excitatory group is presented with a cue, and one where it is not. when the cue is present, the excitatory group remembers the pattern and settles on it, represented by an increased firing rate. however, not every trial leads to a memory recall: if glutamate is cleared too quickly, the memory is not maintained. on the other hand, when no cue is presented and glutamate clearance is too slow, spontaneous activity overcomes inhibition and activity persists despite there being no input, ie a hallucination.

the simulation demonstrates the failure to maintain the state of the network, either failing to maintain the presence of a cue or failing to maintain the absence of a cue. this is thought to be one possible explanation of certain schizophrenic symptoms from a computational neuroscience perspective
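The two failure modes can be illustrated with a much smaller toy model. The sketch below is not the rust/python simulation described in this post: it is a one-variable mean-field caricature in which `s` stands for the glutamate level, recurrent excitation feeds back through a sigmoid, and inhibition is reduced to a fixed threshold `theta`. All parameter values (`w`, `theta`, `cue_strength`, the cue window) are assumptions chosen only so that the three regimes show up.

```python
import math


def simulate(
    decay: float,
    cue: bool,
    w: float = 10.0,           # recurrent excitatory gain (assumed)
    theta: float = 5.0,        # fixed inhibitory threshold (assumed)
    cue_strength: float = 6.0,
    dt: float = 0.1,
    t_end: float = 200.0,
    cue_window: tuple = (10.0, 30.0),
) -> float:
    """Return the final firing rate (0..1) of the excitatory population."""
    s = 0.0     # glutamate level, cleared at rate `decay`
    rate = 0.0
    for step in range(round(t_end / dt)):
        t = step * dt
        cue_on = cue and cue_window[0] <= t < cue_window[1]
        drive = cue_strength if cue_on else 0.0
        # Firing rate: sigmoid of recurrent excitation minus inhibition plus cue.
        rate = 1.0 / (1.0 + math.exp(-(w * s - theta + drive)))
        # Euler step: glutamate is released by firing and cleared by decay.
        s += dt * (rate - decay * s)
    return rate


if __name__ == "__main__":
    print("cue, moderate clearance:", simulate(decay=1.0, cue=True))
    print("cue, fast clearance:    ", simulate(decay=3.0, cue=True))
    print("no cue, moderate:       ", simulate(decay=1.0, cue=False))
    print("no cue, slow clearance: ", simulate(decay=0.01, cue=False))
```

In this toy version, moderate clearance keeps the rate high after the cue ends (the memory is held), fast clearance lets it fall back to baseline (the memory is lost), and very slow clearance lets baseline activity build up with no cue at all (the hallucination-like case).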

14 notes

Text

#a.b.e.l#divine machinery#archangel#automated#behavioral#ecosystem#learning#divine#machinery#ai#artificial intelligence#divinemachinery#angels#guardian angel#angel#robot#android#computer#computer boy#neural network#sentient objects#sentient ai

14 notes

Text

YES AI IS TERRIBLE, ESPECIALLY GENERATING ART AND WRITING AND STUFF (please keep spreading that, I would like to be able to do art for a living in the near future)

but can we talk about how COOL NEURAL NETWORKS ARE??

LIKE WE MADE THIS CODE/MACHINE THAT CAN LEARN LIKE HUMANS DO!! Early AI reminds me of a small childs drawing that, yes is terrible, but its also SO COOL THAT THEY DID THAT!!

I have an intense love for computer science cause its so cool to see what machines can really do! Its so unfortunate that people took advantage of these really awesome things

#AI#neural network#computer science#Im writing an essay about how AI is bad and accidentally ignited my love for technology again#technology

10 notes

Text

Gonna try and program a convolutional neural network with backpropagation in one night. I did the NN part with python and c++ over the summer but this time I think I'm gonna use Fortran because it's my favorite. We'll see if I get to implementing multi-processing across the computer network I built.

8 notes

Text

Interesting Papers for Week 10, 2025

Simplified internal models in human control of complex objects. Bazzi, S., Stansfield, S., Hogan, N., & Sternad, D. (2024). PLOS Computational Biology, 20(11), e1012599.

Co-contraction embodies uncertainty: An optimal feedforward strategy for robust motor control. Berret, B., Verdel, D., Burdet, E., & Jean, F. (2024). PLOS Computational Biology, 20(11), e1012598.

Distributed representations of behaviour-derived object dimensions in the human visual system. Contier, O., Baker, C. I., & Hebart, M. N. (2024). Nature Human Behaviour, 8(11), 2179–2193.

Thalamic spindles and Up states coordinate cortical and hippocampal co-ripples in humans. Dickey, C. W., Verzhbinsky, I. A., Kajfez, S., Rosen, B. Q., Gonzalez, C. E., Chauvel, P. Y., Cash, S. S., Pati, S., & Halgren, E. (2024). PLOS Biology, 22(11), e3002855.

Preconfigured cortico-thalamic neural dynamics constrain movement-associated thalamic activity. González-Pereyra, P., Sánchez-Lobato, O., Martínez-Montalvo, M. G., Ortega-Romero, D. I., Pérez-Díaz, C. I., Merchant, H., Tellez, L. A., & Rueda-Orozco, P. E. (2024). Nature Communications, 15, 10185.

A tradeoff between efficiency and robustness in the hippocampal-neocortical memory network during human and rodent sleep. Hahn, M. A., Lendner, J. D., Anwander, M., Slama, K. S. J., Knight, R. T., Lin, J. J., & Helfrich, R. F. (2024). Progress in Neurobiology, 242, 102672.

NREM sleep improves behavioral performance by desynchronizing cortical circuits. Kharas, N., Chelaru, M. I., Eagleman, S., Parajuli, A., & Dragoi, V. (2024). Science, 386(6724), 892–897.

Human hippocampus and dorsomedial prefrontal cortex infer and update latent causes during social interaction. Mahmoodi, A., Luo, S., Harbison, C., Piray, P., & Rushworth, M. F. S. (2024). Neuron, 112(22), 3796-3809.e9.

Can compression take place in working memory without a central contribution of long-term memory? Mathy, F., Friedman, O., & Gauvrit, N. (2024). Memory & Cognition, 52(8), 1726–1736.

Offline hippocampal reactivation during dentate spikes supports flexible memory. McHugh, S. B., Lopes-dos-Santos, V., Castelli, M., Gava, G. P., Thompson, S. E., Tam, S. K. E., Hartwich, K., Perry, B., Toth, R., Denison, T., Sharott, A., & Dupret, D. (2024). Neuron, 112(22), 3768-3781.e8.

Reward Bases: A simple mechanism for adaptive acquisition of multiple reward types. Millidge, B., Song, Y., Lak, A., Walton, M. E., & Bogacz, R. (2024). PLOS Computational Biology, 20(11), e1012580.

Hidden state inference requires abstract contextual representations in the ventral hippocampus. Mishchanchuk, K., Gregoriou, G., Qü, A., Kastler, A., Huys, Q. J. M., Wilbrecht, L., & MacAskill, A. F. (2024). Science, 386(6724), 926–932.

Dopamine builds and reveals reward-associated latent behavioral attractors. Naudé, J., Sarazin, M. X. B., Mondoloni, S., Hannesse, B., Vicq, E., Amegandjin, F., Mourot, A., Faure, P., & Delord, B. (2024). Nature Communications, 15, 9825.

Compensation to visual impairments and behavioral plasticity in navigating ants. Schwarz, S., Clement, L., Haalck, L., Risse, B., & Wystrach, A. (2024). Proceedings of the National Academy of Sciences, 121(48), e2410908121.

Replay shapes abstract cognitive maps for efficient social navigation. Son, J.-Y., Vives, M.-L., Bhandari, A., & FeldmanHall, O. (2024). Nature Human Behaviour, 8(11), 2156–2167.

Rapid modulation of striatal cholinergic interneurons and dopamine release by satellite astrocytes. Stedehouder, J., Roberts, B. M., Raina, S., Bossi, S., Liu, A. K. L., Doig, N. M., McGerty, K., Magill, P. J., Parkkinen, L., & Cragg, S. J. (2024). Nature Communications, 15, 10017.

A hierarchical active inference model of spatial alternation tasks and the hippocampal-prefrontal circuit. Van de Maele, T., Dhoedt, B., Verbelen, T., & Pezzulo, G. (2024). Nature Communications, 15, 9892.

Cognitive reserve against Alzheimer’s pathology is linked to brain activity during memory formation. Vockert, N., Machts, J., Kleineidam, L., Nemali, A., Incesoy, E. I., Bernal, J., Schütze, H., Yakupov, R., Peters, O., Gref, D., Schneider, L. S., Preis, L., Priller, J., Spruth, E. J., Altenstein, S., Schneider, A., Fliessbach, K., Wiltfang, J., Rostamzadeh, A., … Ziegler, G. (2024). Nature Communications, 15, 9815.

The human posterior parietal cortices orthogonalize the representation of different streams of information concurrently coded in visual working memory. Xu, Y. (2024). PLOS Biology, 22(11), e3002915.

Challenging the Bayesian confidence hypothesis in perceptual decision-making. Xue, K., Shekhar, M., & Rahnev, D. (2024). Proceedings of the National Academy of Sciences, 121(48), e2410487121.

#neuroscience#science#research#brain science#scientific publications#cognitive science#neurobiology#cognition#psychophysics#neurons#neural computation#neural networks#computational neuroscience

15 notes

Note

Beta intelligence military esque

Alice in wonderland

Alters, files, jpegs, bugs, closed systems, open networks

brain chip with memory / data

Information processing updates and reboots

'Uploading' / installing / creating a system of information that can behave as a central information processing unit accessible to large portions of the consciousness. Necessarily in order to function as so with sufficient data. The unit is bugged with instructions, "error correction", regarding information processing.

It can also behave like a guardian between sensory and extra physical experience.

"Was very buggy at first". Has potential to cause unwanted glitches or leaks, unpredictability and could malfunction entirely, especially during the initial accessing / updating. I think the large amount of information being synthesized can reroute experiences, motivations, feelings and knowledge to other areas of consciousness, which can cause a domino effect of "disobedience", and or reprogramming.

I think this volatility is most pronounced during the initial stages of operation because the error correcting and rerouting sequences have not been 'perfected' yet and are in their least effective states, trial and error learning as it operates, graded by whatever instructions or result seeking input that called for the "error correction".

I read the ask about programming people like a computer. Whoever wrote that is not alone. Walter Pitts and Warren McCulloch, do you have any more information about them and what they did to people?

Here is some information for you. Walter Pitts and Warren McCulloch weren't directly involved in the programming of individuals. Their work was dual-use.

Exploring the Mind of Walter Pitts: The Father of Neural Networks

McCulloch-Pitts Neuron — Mankind’s First Mathematical Model Of A Biological Neuron

Introduction To Cognitive Computing And Its Various Applications

Cognitive Electronic Warfare: Conceptual Design and Architecture

Security and Military Implications of Neurotechnology and Artificial Intelligence

Oz

#answers to questions#Walter Pitts#Warren McCulloch#Neural Networks#Biological Neuron#Cognitive computing#TBMC#Military programming mind control

8 notes

Text

locking in so late but locking in nonetheless

#exam at 9 AM tmrw#i have ritalin and stuff to stay up w . toothbrush etc etc#getting a coffee then going to lib and pulling an all nighter#this exam szn will truly test how built different i am#10 week neural computing course in the next 15 hrs can we do it !!#honestly my brain didnt rly clock that this course was full of maths students as well as CS students for a reason like#ok trying to cram sooo much 3rd yr maths into my brain as fast as possible#anxiety helps coffee helps communal suffering helps iranian techno / deep house helps#also we are not allowed to use graphic calcs AKA we have to do sooo many massive matrix computations by hand for no reason!!!#but i rly dont get the logic . anyway this is sort of really fucking hard actually !!!#consequences of my actions i guess !!

13 notes

Text

Zoomposium with Dr. Gabriele Scheler: “The language of the brain - or how AI can learn from biological language models”

In another very exciting interview from our Zoomposium themed blog “#Artificial #intelligence and its consequences”, Axel and I talk this time to the German computer scientist, AI researcher and neuroscientist Gabriele Scheler, who has been living and researching in the USA for some time. She is co-founder and research director at the #Carl #Correns #Foundation for Mathematical Biology in San José, USA, which was named after her famous German ancestor Carl Correns. Her research there includes #epigenetic #influences using #computational #neuroscience in the form of #mathematical #modeling and #theoretical #analysis of #empirical #data as #simulations. Gabriele contacted me because she had come across our Zoomposium interview “How do machines think?” with #Konrad #Kording and wanted to conduct an interview with us based on her own expertise. Of course, I was immediately enthusiastic about this idea, as the topic of “#thinking vs. #language” had been “hanging in the air” for some time and had also led to my essay “Realists vs. nominalists - or the old dualism ‘thinking vs. language’” (https://philosophies.de/index.php/2024/07/02/realisten-vs-nominalisten/).

In addition, we often talked to #AI #researchers in our Zoomposium about the extent to which the development of “#Large #Language #Models (#LLM)”, such as #ChatGPT, does not also say something about the formation and use of language in the human #brain. In other words, it is actually about the old question of whether we can think without #language or whether #cognitive #performance is only made possible by the formation and use of language. Interestingly, this question is being driven forward by #AI #research and #computational #neuroscience. Here, too, a gradual “#paradigm #shift” is emerging, moving away from the purely information-technological, mechanistic, purely data-driven “#big #data” concept of #LLMs towards increasingly information-biological, polycontextural, structure-driven “#artificial #neural #networks (#ANN)” concepts. This is exactly what I had already tried to describe in my earlier essay “The system needs new structures” (https://philosophies.de/index.php/2021/08/14/das-system-braucht-neue-strukturen/).

So it was all the more obvious that we should talk to Gabriele, a proven expert in the fields of #bioinformatics, #computational #linguistics and #computational #neuroscience, in order to clarify such questions. As she comes from both fields (linguistics and neuroscience), she was able to answer our questions in our joint interview. More at: https://philosophies.de/index.php/2024/11/18/sprache-des-gehirns/

or: https://youtu.be/forOGk8k0W8

#artificial consciousness#artificial intelligence#ai#neuroscience#consciousness#artificial neural networks#large language model#chatgpt#bioinformatics#computational neuroscience

2 notes

Text

bro i tried out hifisampler by plugging in some untuned whatever in my old fruityloops files and this shit is crazy. hello Hagane Hey (volume warning: the utau).

#vocal synth wip#? i should make a tag for 'vocal synth just fucking around' LOL this isnt a wip its a plugnplay <3#i like the sound of this resampler a lot! it makes my computer scream tho LOL#like DAMN usually my computer is pretty fine with neural stuff but this thing is like back when i was using tips on my old laptop#it CHUGS. but it sounds really nice <3 especially with my beloved monopitch wonders#NOW it doesnt seem to support any flags? which is a big shame - 99% of my tuning is flag/parameter shit LOL#but its so nice sounding that i dont mind that much. very curious to watch this resampler going forward!#its a little painful to install. but not too painful! but a little painful. also its LARGE#it like killed our internet downloading it for a second there hfjskhfsjgdkfsd#but very cool resampler! it also sounds really nice with my really shitty self utau i made earlier LOL#im glad to know even my failed homunculus sounds good with it!#but back to Hey.... i should use him more. hes so funny. 1 pitch and its only yelling <3 awesome

2 notes
