#Martin Splitt rendering


Server-Side vs. Client-Side Rendering: Google Recommendation

Discover what Google’s Martin Splitt says about server-side vs. client-side rendering, structured data, and how AI crawlers handle JavaScript, and learn SEO best practices for 2025. Understanding how Google processes JavaScript content is essential for modern SEO. In a recent interview with Kenichi…
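The rendering question comes down to what a crawler sees before any JavaScript runs. A minimal sketch, assuming you already have the raw server response for a URL as a string (fetched without executing JavaScript): it checks whether a key phrase from the main content and any JSON-LD structured data are present in that initial HTML. The `check_initial_html` helper and the phrase-matching approach are illustrative assumptions, not a Google tool, and passing the check says nothing about how any particular crawler actually renders the page.

```python
import json
from html.parser import HTMLParser


class _InitialHtmlScanner(HTMLParser):
    """Collects visible text and JSON-LD blocks from raw, unrendered HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.text_parts: list[str] = []
        self.json_ld: list[object] = []
        self._in_json_ld = False
        self._skip = False  # inside a non-JSON-LD <script> or a <style>
        self._buffer = ""

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_json_ld = True
            self._buffer = ""
        elif tag in ("script", "style"):
            self._skip = True

    def handle_endtag(self, tag):
        if tag in ("script", "style"):
            if self._in_json_ld:
                try:
                    self.json_ld.append(json.loads(self._buffer))
                except json.JSONDecodeError:
                    pass  # malformed JSON-LD is ignored in this sketch
            self._in_json_ld = False
            self._skip = False

    def handle_data(self, data):
        if self._in_json_ld:
            self._buffer += data
        elif not self._skip:
            self.text_parts.append(data)


def check_initial_html(raw_html: str, key_phrase: str) -> dict:
    """Report what a crawler that does not run JavaScript could find."""
    scanner = _InitialHtmlScanner()
    scanner.feed(raw_html)
    scanner.close()
    text = " ".join(" ".join(scanner.text_parts).split())
    return {
        "key_phrase_in_html": key_phrase.lower() in text.lower(),
        "json_ld_blocks": len(scanner.json_ld),
    }
```

Run against a server-rendered page, this should report the phrase and the JSON-LD block; run against a client-side shell such as `<div id="root"></div><script src="/app.js"></script>`, it reports neither, because that content only exists after JavaScript executes.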



Google Says If A Page Is Indexed Then It Was Rendered By Google

Martin Splitt from the Google Search Relations team said on Twitter that if a page is indexed, it was rendered. He added that page refreshes are also fully rendered by Google when indexed. He wrote on Twitter, “If it (a page) gets indexed, it was rendered. All pages (minus the obviously problematic ones, like…


Curious about how to improve your website’s SEO with Semantic HTML and Google Search Console? Google’s very own Martin Splitt joined me on this very special episode of the SEJ Show to share his thoughts and opinions on various technical SEO topics, such as Semantic HTML, Google Search Console, indexing, and client-side rendering. Learn how to better leverage these powerful tools to improve your website’s SEO.

“I would say make sure that you are focusing on the content quality and that you are focusing on delivering value to your users. Those have been, will always be, and are the most important things. Everything else should follow from that.” –Martin Splitt

“Suppose you are fine-tuning technical details or your website’s structure or markup. In that case, you are likely missing out on the more significant opportunities of asking yourself what people need from your website.” –Martin Splitt

“This question keeps coming up. This is not the first time and will not be the last time this question will come up and continue to be asked. I don’t know why everyone thinks about who, what value, or who. It’s about structure. I can’t emphasize this enough: If you choose to have H1s as your top-level structure of the content, that’s fine. It just means that the top level of the content is structured along the H1s.” –Martin Splitt

[00:00] – About Martin
[02:47] – Why Semantic SEO is important.
[04:22] – Is there anything that can be done within Semantic HTML to better communicate with Google?
[06:02] – Should schema markup information match what’s in the document?
[08:24] – What parts of Semantic search does Google need the most help with?
[09:19] – What is Martin’s opinion on header tags?
[14:22] – Is the responsibility of implementing Semantic HTML on the SEO or the developer?
[16:19] – How accessible is Semantic HTML within a WordPress or Gutenberg-style environment?
[19:58] – How compatible is Semantic HTML with WCAG?
[21:08] – What is the relationship of Semantic HTML to the overall concept of the Semantic Web (RDF, etc.)?
[25:04] – Can the wrong thumbnails be rectified utilizing Semantic HTML?
[28:42] – Is there another type of schema markup that can still refer to the organization and use IDs on article pages?
[32:10] – Can adding schema markup to show the product category hierarchy and modifying HTML help Google understand the relationship between the product and its category?
[33:49] – Is preserving header hierarchy more critical than which header you use?
[36:36] – Is it bad practice to display different content on pages to returning users versus new users?
[40:08] – What are the best practices for error handling with SPAs?
[45:31] – What is the best way to deal with search query parameters being indexed in Google?
[48:02] – Should you be worried about product pages not being included within the XML sitemap?
[50:26] – How does Google prioritize headers?
[56:00] – How important is it for developers and SEOs to start implementing Semantic HTML now?
[57:31] – What should SEO & developers be focusing on?

“If you understand that it’s a 404, you have two options because two things can happen that you don’t want to happen. One is an error page that gets indexed and appears in search results where it shouldn’t. The other thing is that you are creating 404s in the search console and probably muddling with your data.” –Martin Splitt

“If you have one H1 and nothing else under it except for H2s and then content H2 and then content H2, that doesn’t change anything. That means you structured your content differently. You didn’t structure it better. You didn’t structure it worse. You just structured it differently.” –Martin Splitt

Connect With Martin Splitt:

Martin Splitt – the friendly internet fairy and code magician! He’s a tech wizard from Zurich who has magic fingers when it comes to writing web-friendly code. With over ten years of experience as a software engineer, he now works as a developer advocate for Google. A master of all things open source, his mission is to make your content visible in any corner of cyberspace – abracadabra!

Connect with Martin on LinkedIn: https://www.linkedin.com/in/martinsplitt/
Follow him on Twitter: https://twitter.com/g33konaut

Connect with Loren Baker, Founder of Search Engine Journal:

Follow him on Twitter: https://www.twitter.com/lorenbaker
Connect with him on LinkedIn: https://www.linkedin.com/in/lorenbaker
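Splitt’s point is that putting H1s or H2s at the top level is a structural choice rather than a better or worse one, while the episode outline also asks whether preserving heading hierarchy matters more. A minimal sketch using Python’s standard `html.parser` to list a page’s heading outline so you can review the structure you actually chose. The `heading_outline` and `skipped_levels` helpers are hypothetical, and treating a jump such as h2 to h4 as worth a second look is an assumption of this sketch, not a rule stated in the episode.

```python
from html.parser import HTMLParser
from typing import Optional


class _HeadingCollector(HTMLParser):
    """Records (level, text) for every h1-h6 element in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.headings: list[tuple[int, str]] = []
        self._level: Optional[int] = None
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._level = int(tag[1])
            self._text = []

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            text = " ".join("".join(self._text).split())
            self.headings.append((self._level, text))
            self._level = None

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)


def heading_outline(html: str) -> list[tuple[int, str]]:
    collector = _HeadingCollector()
    collector.feed(html)
    collector.close()
    return collector.headings


def skipped_levels(outline: list[tuple[int, str]]) -> list[str]:
    """Headings that jump more than one level deeper than the previous one."""
    issues = []
    previous = 0  # 0 means "no heading seen yet", so the first heading is never flagged
    for level, text in outline:
        if previous != 0 and level > previous + 1:
            issues.append(text)
        previous = level
    return issues
```

For `<h1>Guide</h1><h2>Setup</h2><h4>Details</h4><h2>FAQ</h2>`, the outline is four entries and only "Details" is flagged; a page that starts at H2 is not flagged, in line with Splitt’s view that this is simply a different structure.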


An open standard for password-free logins on the web

#381 — March 6, 2019


Frontend Focus

WebAuthn Approved As Web Standard for Password-Free Logins — The W3C announced earlier this week that the Web Authentication API (WebAuthn) is now an official standard for password-free logins on the web.

Emil Protalinski

Cache-Control for Civilians — An accessible look at what the HTTP Cache-Control header can do when it comes to controlling how your assets are cached. Covering strategies, asset management and more.

Harry Roberts

New. Cloudflare Registrar - Domain Registration at Wholesale Pricing — Cloudflare Registrar securely registers and manages your domain names with transparent, no-markup pricing.

Cloudflare Registrar sponsor

Microsoft’s New Edge Browser Looks A Lot Like Chrome — Leaked screenshots show the upcoming Chromium-powered browser (which is still being tested internally). Neowin has more screenshots here.

Tom Warren

Web Page Footers 101: Design Patterns and When to Use Each — “A footer is the place users go when they’re lost.” — Some good tips and advice here on what to consider when building out your site footer.

Therese Fessenden

Building Robust Layouts With Container Units — When inspecting most other grids in DevTools, you’ll notice that column widths are dependent on their parent element. This article will help you understand how to overcome these limitations using CSS variables & how you can start building with container units.

Russell Bishop

The Firefox Experiments I Would Have Liked To Try — The author has been a member of the Firefox Test Pilot team for a number of years. Here he takes a comprehensive look back on several ideas he would have liked to have explored/implemented within Firefox.

Ian Bicking

💻 Jobs

Full-Stack Engineer - React/Rails (NYC) — Rapidly growing healthcare startup in the addiction and sobriety space seeking talented developers to help build the future of online addiction and sobriety support.

Tempest, Inc.

Frontend Developer at X-Team (Remote) — Join the most energizing community for developers. Work from anywhere with the world's leading brands.

X-Team

Find A Job Through Vettery — Vettery specializes in dev roles and is completely free for job seekers.

Vettery

📘 Articles, Tutorials & Opinion

Content-Based Grid Tracks and Embracing Flexibility — A look at how to size CSS Grid tracks based on their content with min-content, max-content and auto.

Hidde de Vries

Lesser-Known CSS Properties Demonstrated with GIFs — A good collection, visually represented, of several lesser-known CSS techniques.

Pavel Laptev

How to Filter Elements from MongoDB Arrays - With Studio 3T — Learn how to resolve one of the most common problems that plagues those new to MongoDB.

Studio 3T sponsor

▶ Advanced CSS Margins — A roughly ten-minute video exploring the advanced aspects of CSS margins.

!important

▶ How Google Search Indexes JavaScript Sites — Martin Splitt explains how JavaScript influences SEO and how to optimize your JavaScript-powered website to be search-friendly.

Google Webmasters

How to Render 3D in 2D Canvas — Or, more accurately, projecting 3D coordinates into a regular 2D canvas. The effect is striking.

Louis Hoebregts

A/B Testing For Mobile-First Experiences — Tips and advice for implementing A/B testing on mobile for the web.

Suzanne Scacca

Reducing First Input Delay for a Better User Experience

James Milner

Learn How to Manage Domains with DNSimple's API Node.js Mini-Course

DNSimple sponsor

Should You Use Source Maps in Production? — A source map is a file that maps a minified version of an asset (e.g. CSS or JavaScript) back to the original source code.

Chris Coyier

Why I Write CSS in JavaScript

Max Stoiber

🔧 Code and Tools

CSS Lego Minifigure Maker — A fun little demo: Swap heads, change colors, take figures apart.

Josh Bader codepen

react-svg-pan-zoom: A React Component That Adds Pan and Zoom Features to SVG

Christian Vadalà

RFS: Responsive Font Size Engine — Automatically calculates the appropriate font size based on the browser viewport dimensions.

Bootstrap

Take Our ~7min Survey for a Chance to Win a Lego Star Wars TIE Fighter

ActiveState sponsor

utterances: Commenting Widget Built on GitHub Issues

Jeremy Danyow

🗓 Upcoming Events

UpFront Conference, March 22 — Manchester, UK — A frontend conference 'open to everyone who makes for the web'.

FrontCon, April 3–5 — Riga, Latvia — A two-day conference focused on frontend concepts and technologies.

SmashingConf, April 16–17 — San Francisco, California — A friendly, inclusive event which is focused on real-world problems and solutions.

CityJS Conference, May 3 — London, UK — Meet local and international speakers and share your experiences with modern JavaScript development.

CSSCamp 2019, July 17 — Barcelona, Catalunya — A one-day, one-track conference for web designers and developers.

via Frontend Focus https://ift.tt/2VFgazZ


Why Getting Indexed by Google is so Difficult

Websites rely on Google to some extent. It’s simple: your pages get indexed by the search engine, which makes it possible for people to find you. But things don’t always go as planned: many websites have pages left out of the index, sometimes waiting weeks before they are picked up.

Indexing is also tied to quality. Google tends to show higher-quality sites at the top of its results, partly because it weighs complex factors such as content and links, not just keywords, to give better results.

Many SEOs still believe that it’s mostly technical things, like sending inconsistent signals or having insufficient crawl budget, that prevent Google from indexing content. That belief is largely a myth. These factors are tempting explanations for why your pages are left out in the cold, but you also need high-quality content for Google to index.

Reasons why Google isn’t indexing your pages

The custom indexing checker tool I use revealed that, on average, 15% of e-commerce stores’ product pages are not indexed in Google. The reasons why are worth understanding.
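A figure like that 15% is a simple ratio once you have two lists: the URLs you want indexed (for example, from your XML sitemap) and the URLs you know are indexed. A minimal sketch, assuming the indexed set comes from some external source such as a Search Console export; the author’s custom checker tool is not described, so this does not reproduce it, and `sitemap_urls` and `not_indexed_share` are hypothetical helper names.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol for <urlset> sitemaps.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> list[str]:
    """Extract <loc> values from a standard urlset sitemap."""
    root = ET.fromstring(sitemap_xml)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def not_indexed_share(wanted: list[str], indexed: set[str]) -> tuple[list[str], float]:
    """Return the URLs missing from the index and their share of all wanted URLs."""
    missing = [url for url in wanted if url not in indexed]
    share = len(missing) / len(wanted) if wanted else 0.0
    return missing, share
```

With four product URLs in the sitemap and three of them known to be indexed, this returns the fourth URL and a share of 0.25.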

Google Search Console is a great tool for diagnosing your website’s performance and indexing status. It reports several statuses, such as “Crawled - currently not indexed” and “Discovered - currently not indexed.” This information helps you determine what needs fixing on your site, so you don’t waste time redoing work that isn’t the problem.

Top indexing issues

Crawled - currently not indexed

“Google visits my page, but doesn’t index it?” That’s frustrating to see when search traffic is your business. This status means Google crawled the page but decided not to add it to the index. Fortunately, it can be fixed: the way forward is to make the content uniquely valuable, so that it is worth indexing when Google crawls it again.

Use unique titles, meta descriptions, and copy on all indexable pages. Avoid copying product descriptions from external sources or relying on stock language that can be found anywhere online; a more original approach helps set your pages apart.
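Checking that titles and meta descriptions are unique across indexable pages is easy to automate once you have a crawl export. A minimal sketch, assuming a list of (url, title, description) tuples from any crawler; the `duplicate_groups` helper is hypothetical, and its normalization only lowercases and collapses whitespace, so near-duplicates with small wording changes will not be caught.

```python
from collections import defaultdict


def _normalize(value: str) -> str:
    """Lowercase and collapse whitespace so trivial differences don't hide duplicates."""
    return " ".join(value.lower().split())


def duplicate_groups(pages: list[tuple[str, str, str]], field: str) -> dict[str, list[str]]:
    """Group URLs that share the same normalized title or description.

    pages: (url, title, description); field: "title" or "description".
    """
    index = {"title": 1, "description": 2}[field]
    groups: dict[str, list[str]] = defaultdict(list)
    for page in pages:
        groups[_normalize(page[index])].append(page[0])
    # Keep only values used by more than one URL.
    return {value: urls for value, urls in groups.items() if len(urls) > 1}
```

For example, a product page and its tracking-parameter variant that share the title "Red Running Shoe | Shop" end up in one group, which points to either rewriting one title or consolidating the URLs.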

Discovered - currently not indexed

This status means Google knows the URL exists (for example, from a link or your sitemap) but hasn’t crawled it yet.

Dealing with this problem takes some expertise. If you find out that your pages are “Discovered - currently not indexed”, do the following:

- Identify whether there is a pattern, such as these pages clustering in certain categories or product types, that might point to one specific cause affecting all of them.

- Optimize your crawl budget. Focus on spotting low-quality pages that Google spends a lot of time crawling, such as filtered category pages and internal search pages; on an e-commerce site these can run into tens or even hundreds of millions of URLs. If Googlebot spends its resources there, it may not get around to crawling your valuable pages, leaving them “Discovered - currently not indexed.” Other crawlers, such as Bingbot, can be affected in a similar way.
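Both steps above start from the list of affected URLs, for example exported from the Search Console page indexing report. A minimal sketch that groups those URLs by top-level directory and labels the URL types called out above, filtered category pages and internal search pages. The parameter names (`sort`, `color`, `q`, and so on) and the `/search` path prefix are hypothetical markers, as are the helper names; adjust them to how your site actually builds these URLs.

```python
from collections import Counter
from urllib.parse import parse_qs, urlsplit

# Hypothetical markers; adjust to how your site builds filter and search URLs.
FILTER_PARAMS = {"sort", "color", "size", "price"}
SEARCH_PARAMS = {"q", "query", "s"}
SEARCH_PATH_PREFIXES = ("/search",)


def section_of(url: str) -> str:
    """Top-level directory, e.g. '/shoes' for https://example.com/shoes/red."""
    path = urlsplit(url).path.strip("/")
    return "/" + path.split("/")[0] if path else "/"


def url_type(url: str) -> str:
    """Label a URL as internal search, filtered category, or other."""
    parts = urlsplit(url)
    params = set(parse_qs(parts.query, keep_blank_values=True))
    if parts.path.startswith(SEARCH_PATH_PREFIXES) or params & SEARCH_PARAMS:
        return "internal search"
    if params & FILTER_PARAMS:
        return "filtered category"
    return "other"


def summarize(urls: list[str]) -> tuple[Counter, Counter]:
    """Count affected URLs per section and per URL type to reveal patterns."""
    return Counter(section_of(u) for u in urls), Counter(url_type(u) for u in urls)
```

If most affected URLs land in one section, or most are filter and search URLs, that is the kind of pattern worth investigating before anything else.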

Martin Splitt shared some tips on fixing the “Discovered - currently not indexed” issue during the “Rendering SEO” webinar, where he discussed ways sites can improve both user experience and rankings.

Duplicate content

This issue is extensively covered by the Moz SEO Learning Center. I just want to point out here that duplicate content can have various causes, such as:

1) language variations (e.g., English versions of a page for the UK, the US, and Canada)

2) several versions of a page targeted at different countries, some of which will end up unindexed

3) manufacturer-supplied product descriptions that your competitors also use, which can lead to pages being indexed incorrectly

4) overwriting old information on your site without keeping track of the changes

How to increase the probability Google will index your pages

Avoid the “Soft 404” signals

It is important to make sure that your pages don’t falsely signal a soft 404 status. Such signals include wording like “Not found” or “Not available” in the copy, as well as the number “404” in the URL.
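A soft 404 is a page that returns HTTP 200 but looks like an error page. A minimal sketch of an audit check built on the signals named above (error wording in the copy, "404" in the URL), applied only to pages that answer 200. The phrase list and the `soft_404_signals` helper are hypothetical starting points; this only approximates what Google might treat as a soft 404.

```python
import re

# Hypothetical phrase list; extend it with the wording your templates use.
ERROR_PHRASES = ("not found", "not available", "no longer available")

# "404" as a standalone number, so IDs such as 4041 are not flagged.
URL_404 = re.compile(r"(^|[^0-9])404([^0-9]|$)")


def soft_404_signals(url: str, status: int, body_text: str) -> list[str]:
    """List reasons a 200 OK page might look like an error page."""
    if status != 200:
        return []  # a real error status is not a soft 404
    signals = []
    if URL_404.search(url):
        signals.append("'404' in URL")
    text = body_text.lower()
    found = [phrase for phrase in ERROR_PHRASES if phrase in text]
    if found:
        signals.append("error wording: " + ", ".join(found))
    return signals
```

A product page at `/product/404-page` that returns 200 with the copy "Sorry, this product is not available." would be flagged twice; the same page returning a real 404 status is not flagged.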

Use internal linking

Internal linking is one of the key signals for Google that a given page on your website should be indexed. Leave no orphan pages in your site’s structure, and remember to include all indexable content when submitting sitemaps.
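Orphan pages are URLs you want indexed that no internal link points to. A minimal sketch, assuming you already have the internal link graph from a crawl (a dict mapping each page to the pages it links to) and the list of sitemap URLs; `orphan_pages` is a hypothetical helper that walks the graph breadth-first from the homepage and reports sitemap URLs it never reaches.

```python
from collections import deque


def orphan_pages(links: dict[str, list[str]], start: str, sitemap: list[str]) -> list[str]:
    """Sitemap URLs not reachable from `start` by following internal links."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return [url for url in sitemap if url not in seen]
```

Any URL this returns is listed in the sitemap but unreachable through your internal links, so it is a candidate for linking from a relevant category or hub page.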

Implement a sound crawling strategy

Don’t let Google crawl cruft on your website. If too many resources are spent crawling the less valuable parts of your domain, it might take too long for Google to reach your valuable or newly published content. Server log analysis gives you insight into what bots actually request when they visit your site: which URLs get crawled, how often, and which sections receive the most attention.
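A minimal sketch of the server log analysis described above, assuming access logs in the common Apache/Nginx "combined" format; `googlebot_hits_by_section` is a hypothetical helper that counts requests whose user agent mentions Googlebot per top-level section, so you can see where crawl activity goes. User-agent strings can be spoofed, and Google documents verifying real Googlebot traffic (for example with a reverse DNS lookup); that step is deliberately left out of this sketch.

```python
import re
from collections import Counter

# Combined log format: IP - - [time] "METHOD PATH PROTO" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]*\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits_by_section(lines: list[str]) -> Counter:
    """Count Googlebot requests per top-level path section (user agent not verified)."""
    hits: Counter = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue
        path = match.group("path").split("?")[0].strip("/")
        section = "/" + path.split("/")[0] if path else "/"
        hits[section] += 1
    return hits
```

If a section such as `/search` dominates the counts while your product pages barely appear, that is a sign crawl resources are going to low-value URLs.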

Eliminate low-quality and duplicate content

Your site is like a house with many rooms, and every room has its purpose, from the kitchen to the living room. A well-kept house doesn’t need extra rooms that nobody uses; its essential spaces are what make it livable. Your site works the same way: keep the pages that serve a purpose, and clean up or remove low-quality and duplicate content that adds nothing.


How content consolidation can help boost your rankings

If your brand has been creating content for some time, you’ll likely reach a point where some of your newer content overlaps with existing content. Or you may have several related, but relatively thin pieces you’ve published. Since many people have been sold on the idea that more content is better, the potential problem here may not be obvious.

If left unchecked, this may create a situation in which you have numerous pieces of content competing for the same search intent. Most of the time, Google’s algorithms aim to show no more than two results from the same domain for a given query. This means that, while you’re probably already competing against other businesses in the search results, you might also be competing against yourself and not showcasing your best work.
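One way to spot pages competing against each other is to look for queries where several of your URLs earn impressions. A minimal sketch, assuming rows exported from the Search Console performance report as (query, page, clicks, impressions); `competing_pages` is a hypothetical helper, and the 50-impression threshold is an arbitrary cut-off to filter out noise.

```python
from collections import defaultdict


def competing_pages(rows: list[tuple[str, str, int, int]],
                    min_impressions: int = 50) -> dict[str, list[str]]:
    """Queries for which two or more of your pages each earn meaningful impressions.

    rows: (query, page, clicks, impressions). Pages are listed by impressions, highest first.
    """
    pages_by_query: dict[str, list[tuple[int, str]]] = defaultdict(list)
    for query, page, _clicks, impressions in rows:
        if impressions >= min_impressions:
            pages_by_query[query].append((impressions, page))
    return {
        query: [page for _, page in sorted(entries, reverse=True)]
        for query, entries in pages_by_query.items()
        if len(entries) > 1
    }
```

Queries that come back with two or more pages are candidates for the consolidation described in the rest of this post; the top page in each list is often, but not always, the natural one to keep.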

Why content consolidation should be part of your SEO strategy

Consolidating content can help you get out of your own way and increase the chances of getting the desired page to rank well in search results. It can also improve your link building efforts since other sites will only have one version of your content to point back to.

And, it can also make it easier for users to locate the information they’re looking for and help you get rid of underperforming content that may be doing more harm than good.

Nervous about content consolidation? Think quality over quantity. When asked by Lily Ray of Path Interactive during a recent episode of Google’s SEO Mythbusting series if it makes sense to consolidate two similar pieces into one article and “doing a lot of merging and redirecting” for SEO, Martin Splitt, developer advocate at Google, replied, “Definitely.”

In fact, Google itself adopted this tactic when it consolidated six individual websites into Google Retail, enabling it to double the site’s CTA click-through rate and increase organic traffic by 64%.

Opportunities to consolidate content

Thin content. Google may perceive pages that are light on content, such as help center pages that address only a single question, as not necessarily providing value to users, said Splitt.

“I would try to group these things and structure them in a meaningful way,” he said, noting that if a user has a question, they are likely to have follow-up questions and consolidating this information may make your pages more helpful.
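Splitt’s suggestion is to group thin, single-question pages into a more complete resource. A minimal sketch, assuming you have each page’s main-content text and a topic label (for example, its help center category); `consolidation_candidates` is a hypothetical helper that flags pages under a word-count threshold and groups them by topic. The 150-word threshold is an arbitrary assumption; Google has not published a word count that defines thin content.

```python
from collections import defaultdict

THIN_WORD_COUNT = 150  # arbitrary assumption, not a Google threshold


def consolidation_candidates(pages: list[tuple[str, str, str]]) -> dict[str, list[str]]:
    """Group thin pages by topic; pages are (url, topic, main_text)."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, topic, text in pages:
        if len(text.split()) < THIN_WORD_COUNT:
            groups[topic].append(url)
    # A topic with several thin pages is a natural candidate for one combined page.
    return {topic: urls for topic, urls in groups.items() if len(urls) > 1}
```

Two short account-related help pages, for example, come back as one group that could become a single, more useful "Managing your account" page.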

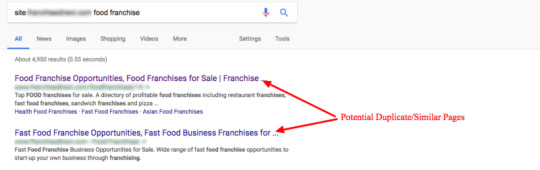

Duplicate content. In the majority of cases, Google doesn’t penalize sites over duplicate content. However, for larger sites, having multiple URLs that host the same content may consume crawl budget and dilute signals, inhibiting a search engine’s ability to index and evaluate your pages. Even if your site is relatively small, identifying and addressing duplicate content can improve your user experience.

While there are many tools that can help you identify duplicate content, “I highly recommend researching duplicate content issues manually to completely understand the nature of the problem and the best way to address it,” said Chris Long, director of e-commerce at Go Fish Digital, suggesting that SEOs perform a Google “site:” search of their domain followed by their core keywords. “If I see pages with similar meta data in Google’s index, this is a red flag that they may be duplicates,” he said.
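Long’s red flag, pages with similar meta data, can be approximated in code to decide which pages deserve the manual review he recommends. A minimal sketch using `difflib.SequenceMatcher` from Python’s standard library to compare title-plus-description strings pairwise; `similar_metadata` is a hypothetical helper, the 0.9 similarity cut-off is an assumed value, and the pairwise comparison is only practical for a few thousand pages.

```python
from difflib import SequenceMatcher
from itertools import combinations


def similar_metadata(pages: dict[str, str], threshold: float = 0.9) -> list[tuple[str, str, float]]:
    """Pairs of URLs whose title + description text is nearly identical.

    pages maps url -> "title | meta description".
    """
    pairs = []
    for (url_a, meta_a), (url_b, meta_b) in combinations(pages.items(), 2):
        ratio = SequenceMatcher(None, meta_a.lower(), meta_b.lower()).ratio()
        if ratio >= threshold:
            pairs.append((url_a, url_b, round(ratio, 2)))
    return pairs
```

Two product pages whose meta data differs only in final punctuation are paired, while an unrelated page is not; the pairs it returns are a shortlist for manual checking, not a verdict.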

Outdated or obsolete content. Every year, brands and publishers create content predicting trends for the following year. However, 2020 has turned out to be a year unlike any other, rendering most predictions irrelevant.

A user stumbling onto your outdated predictions post is more likely to bounce from your site, taking their business along with them. Removing the outdated content from your site may be a better option than leaving it up, or you can update that article with new predictions and change the publication date if you’ve made substantial changes to the copy. This is just one example of how an old or obsolete piece of content may be repurposed to keep your content flywheel going.

Content that doesn’t get any traffic. You can use Google Search Console and Google Analytics to identify which pieces of content are failing to help you reach your business goals.

“If you are seeing that you get a lot of impressions, but not that many clicks, you might want to change something about the content,” Splitt said, “If you are getting a lot of clicks through it but then you see in your analytics that actually not much action happens, then you can ask yourself, ‘Is the traffic worth it or do I need to change my content there?’”

Using these tools to keep tabs on your page views, bounce rates and other engagement metrics can shine a spotlight on the pieces of content that may be right for consolidation or outright removal.
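Splitt’s two cases can be turned into a simple triage rule over joined Search Console and analytics data. A minimal sketch; the `PageStats` fields and `triage` helper are hypothetical, "action" is assumed to mean a goal completion in your analytics tool, and all thresholds (CTR below 1% with at least 1,000 impressions, conversion rate below 0.5% with at least 200 clicks) are assumptions to tune for your site.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    impressions: int  # from Search Console
    clicks: int       # from Search Console
    conversions: int  # goal completions from your analytics tool


def triage(page: PageStats) -> str:
    """Map Splitt's two questions onto a coarse label (thresholds are assumptions)."""
    ctr = page.clicks / page.impressions if page.impressions else 0.0
    if page.impressions >= 1000 and ctr < 0.01:
        return "many impressions, few clicks: revisit the content"
    conversion_rate = page.conversions / page.clicks if page.clicks else 0.0
    if page.clicks >= 200 and conversion_rate < 0.005:
        return "clicks but little action: is the traffic worth it?"
    return "no action flagged"
```

A predictions post with 5,000 impressions and 20 clicks lands in the first bucket; a page with 600 clicks and a single conversion lands in the second.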

How to consolidate your content

Remove content that doesn’t provide value. “If it’s very thin content, then … we might just spend crawl budget on pages that are, in the end, not performing or not even being indexed anymore,” Splitt said during SEO Mythbusting, adding, “It is usually a good idea to see [whether a] piece of content really does not perform well; let’s take it down or at least change it.”

As mentioned above, Google Search Console and Analytics can be used to identify which pieces of content may just be taking up crawl budget or cannibalizing your keywords without providing any value to your audience.

Combine content that serves a similar purpose. Users typically have more than one question, and those questions are usually related to whatever stage they’re currently at in their buyer’s journey. For example, if someone has just started to think about buying a new car, they’ll probably want to learn about its fuel economy, safety ratings, special features, and other similar car models.

Instead of having numerous articles addressing each of these particular questions, brands and publishers could consolidate this information as it is all pertinent to the same stage of the journey that the user is in. This can reduce the amount of content you have competing over the same (or similar) keyword sets, and it will also improve your user experience by centralizing all the information that a potential customer may need onto one page.

You can then implement 301 redirects, canonical or noindex tags on the pages that have become redundant to consolidate ranking signals.
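When several pages are merged into one, each retired URL should ideally redirect straight to the final page rather than through a chain of hops. A minimal sketch, assuming a hypothetical mapping of old URL to new URL collected during consolidation; `flatten_redirects` resolves chains to their final target and raises on loops. How the resulting map is deployed (server config, CMS plugin, and so on) is out of scope.

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Point every old URL directly at its final destination; reject loops."""
    flat = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Follow the chain until we reach a URL that is not itself redirected.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {source}")
            seen.add(target)
            target = redirects[target]
        flat[source] = target
    return flat
```

If an older guide was already redirected to a page that is now being merged too, flattening sends both old URLs straight to the new consolidated page in a single hop.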

RELATED: 3 case studies of duplicate content consolidation

Refresh existing pages (instead of creating new ones). In some industries, the landscape changes every year (for example, in the automotive or smartphone industries where new models are released annually) meaning that new content is required even if you’re publishing about the same topic.

In other industries, however, there may be an opportunity to update existing content instead of creating something from scratch that heavily overlaps with what you’ve already published. For example, an instructional article on how to winterize a sprinkler system is less likely to contain different information year-to-year.

“I wouldn’t create a new page that basically says the same thing because, especially when they’re really similar, [Google] might just think one is a duplication of the other and then canonicalize them together, no matter what you do in canonical tags,” Splitt said, suggesting instead that marketers update the existing content and reposition it more prominently on your site for visitors to see.

RELATED: Essential Guide to SEO: Master the science of SEO

About The Author

George Nguyen is an editor for Search Engine Land, covering organic search, podcasting and e-commerce. His background is in journalism and content marketing agency work. Prior to entering the industry, he worked as a radio personality, writer, podcast host and public school teacher.


source http://www.scpie.org/how-content-consolidation-can-help-boost-your-rankings/ source https://scpie.tumblr.com/post/628960044558532608

0 notes

Text

How content consolidation can help boost your rankings

If your brand has been creating content for some time, you’ll likely reach a point where some of your newer content overlaps with existing content. Or you may have several related, but relatively thin pieces you’ve published. Since many people have been sold on the idea that more content is better, the potential problem here may not be obvious.

If left unchecked, this may create a situation in which you have numerous pieces of content competing for the same search intent. Most of the time, Google’s algorithms aim to show no more than two results from the same domain for a given query. This means that, while you’re probably already competing against other businesses in the search results, you might also be competing against yourself and not showcasing your best work.

Why content consolidation should be part of your SEO strategy

Consolidating content can help you get out of your own way and increase the chances of getting the desired page to rank well in search results. It can also improve your link building efforts since other sites will only have one version of your content to point back to.

And, it can also make it easier for users to locate the information they’re looking for and help you get rid of underperforming content that may be doing more harm than good.

Nervous about content consolidation? Think quality over quantity. When asked by Lily Ray of Path Interactive during a recent episode of Google’s SEO Company Mythbusting series if it makes sense to consolidate two similar pieces into one article and “doing a lot of merging and redirecting” for SEO Company, Martin Splitt, developer advocate at Google, replied, “Definitely.”

In fact, Google itself adopted this tactic when it consolidated six individual websites into Google Retail, enabling it to double the site’s CTA click-through rate and increase organic traffic by 64%.

Opportunities to consolidate content

Thin content. Google may perceive pages that are light on content, such as help center pages that address only a single question, as not necessarily providing value to users, said Splitt.

“I would try to group these things and structure them in a meaningful way,” he said, noting that if a user has a question, they are likely to have follow-up questions and consolidating this information may make your pages more helpful.

Duplicate content. In the majority of cases, Google doesn’t penalize sites over duplicate content. However, for larger sites, having multiple URLs that host the same content may consume crawl budget and dilute signals, inhibiting a search engine’s ability to index and evaluate your pages. Even if your site is relatively small, identifying and addressing duplicate content can improve your user experience.

While there are many tools that can help you identify duplicate content, “I highly recommend researching duplicate content issues manually to completely understand the nature of the problem and the best way to address it,” said Chris Long, director of e-commerce at Go Fish Digital, suggesting that SEO Companys perform a Google “site:” search of their domain followed by their core keywords. “If I see pages with similar meta data in Google’s index, this is a red flag that they may be duplicates,” he said.

Outdated or obsolete content. Every year, brands and publishers create content predicting trends for the following year. However, 2020 has turned out to be a year unlike any other, rendering most predictions irrelevant.

A user stumbling onto your outdated predictions post is more likely to bounce from your site, taking their business along with them. Removing the outdated content from your site may be a better option than leaving it up, or you can update that article with new predictions and change the publication date if you’ve made substantial changes to the copy. This is just one example of how an old or obsolete piece of content may be repurposed to keep your content flywheel going.

Content that doesn’t get any traffic. You can use Google Search Console and Google Analytics to identify which pieces of content are failing to help you reach your business goals.

“If you are seeing that you get a lot of impressions, but not that many clicks, you might want to change something about the content,” Splitt said, “If you are getting a lot of clicks through it but then you see in your analytics that actually not much action happens, then you can ask yourself, ‘Is the traffic worth it or do I need to change my content there?’”

Using these tools to keep tabs on your page views, bounce rates and other engagement metrics can shine a spotlight on the pieces of content that may be right for consolidation or outright removal.

How to consolidate your content

Remove content that doesn’t provide value. “If it’s very thin content, then . . . we might just spend crawl budget on pages that are, in the end, not performing or not even being indexed anymore,” Splitt said during SEO Company Mythbusting, adding, “It is usually a good idea to see [whether a] piece of content really does not perform well; let’s take it down or at least change it.”

As mentioned above, Google Search Console and Analytics can be used to identify which pieces of content may just be taking up crawl budget or cannibalizing your keywords without providing any value to your audience.

Combine content that serves a similar purpose. Users typically have more than one question, and those questions are usually related to whatever stage they’re currently at in their buyer’s journey. For example, if someone has just started to think about buying a new car, they’ll probably want to learn about its fuel economy, safety ratings, special features, and other similar car models.

Instead of having numerous articles addressing each of these particular questions, brands and publishers could consolidate this information as it is all pertinent to the same stage of the journey that the user is in. This can reduce the amount of content you have competing over the same (or similar) keyword sets, and it will also improve your user experience by centralizing all the information that a potential customer may need onto one page.

You can then apply 301 redirects, canonical tags or noindex tags to the pages that have become redundant to consolidate ranking signals.
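As a minimal sketch of the redirect and canonical step, assume a hand-maintained mapping from redundant URLs to the page that now holds the consolidated content. The `REDIRECTS` dict and all example paths are hypothetical; in practice the 301s would live in your server or CDN configuration. The helper collapses redirect chains so each old URL can point straight at its final target, and the second function emits a `<link rel="canonical">` tag for a redundant page you decide to keep live.

```python
import html
from typing import Dict, Optional, Tuple

# Hypothetical map: redundant URL -> URL that now holds the consolidated content.
REDIRECTS: Dict[str, str] = {
    "/blog/car-fuel-economy": "/guides/buying-a-new-car",
    "/blog/car-safety-ratings": "/guides/buying-a-new-car",
    "/guides/new-car-guide-2019": "/blog/car-fuel-economy",  # an older two-hop chain
}

def resolve(path: str, redirects: Dict[str, str] = REDIRECTS,
            max_hops: int = 10) -> Optional[Tuple[int, str]]:
    """Return (301, final_target) for a redirected path, or None if it is not redirected.

    Following the chain here lets an old URL 301 directly to its final destination
    instead of hopping through intermediate pages.
    """
    if path not in redirects:
        return None
    target = redirects[path]
    seen = {path}
    hops = 1
    while target in redirects:
        if target in seen or hops >= max_hops:
            raise ValueError(f"redirect loop or excessive chain starting at {path}")
        seen.add(target)
        target = redirects[target]
        hops += 1
    return 301, target

def canonical_tag(url: str) -> str:
    """Tag for a redundant page that stays live, pointing at the preferred URL."""
    return f'<link rel="canonical" href="{html.escape(url, quote=True)}">'
```

For example, `resolve("/guides/new-car-guide-2019")` skips the intermediate page and returns the consolidated guide as the 301 target.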

RELATED: 3 case studies of duplicate content consolidation

Refresh existing pages (instead of creating new ones). In some industries, the landscape changes every year (for example, in the automotive or smartphone industries, where new models are released annually), meaning that new content is required even if you’re publishing about the same topic.

In other industries, however, there may be an opportunity to update existing content instead of creating something from scratch that heavily overlaps with what you’ve already published. For example, an instructional article on how to winterize a sprinkler system is less likely to change from year to year.

“I wouldn’t create a new page that basically says the same thing because, especially when they’re really similar, [Google] might just think one is a duplication of the other and then canonicalize them together, no matter what you do in canonical tags,” Splitt said, suggesting instead that marketers update the existing content and reposition it more prominently on their sites for visitors to see.

RELATED: Essential Guide to SEO: Master the science of SEO

About The Author

George Nguyen is an editor for Search Engine Land, covering organic search, podcasting and e-commerce. His background is in journalism and content marketing. Prior to entering the industry, he worked as a radio personality, writer, podcast host and public school teacher.

Website Design & SEO Delray Beach by DBL07.co

Delray Beach SEO

source http://www.scpie.org/how-content-consolidation-can-help-boost-your-rankings/


How content consolidation can help boost your rankings

If your brand has been creating content for some time, you’ll likely reach a point where some of your newer content overlaps with existing content. Or you may have several related, but relatively thin pieces you’ve published. Since many people have been sold on the idea that more content is better, the potential problem here may not be obvious.

If left unchecked, this may create a situation in which you have numerous pieces of content competing for the same search intent. Most of the time, Google’s algorithms aim to show no more than two results from the same domain for a given query. This means that, while you’re probably already competing against other businesses in the search results, you might also be competing against yourself and not showcasing your best work.

Why content consolidation should be part of your SEO strategy

Consolidating content can help you get out of your own way and increase the chances of getting the desired page to rank well in search results. It can also improve your link building efforts since other sites will only have one version of your content to point back to.

It can also make it easier for users to find the information they’re looking for, and help you get rid of underperforming content that may be doing more harm than good.

Nervous about content consolidation? Think quality over quantity. When Lily Ray of Path Interactive asked, during a recent episode of Google’s SEO Mythbusting series, whether it makes sense for SEO to consolidate two similar pieces into one article, “doing a lot of merging and redirecting,” Martin Splitt, developer advocate at Google, replied, “Definitely.”

In fact, Google itself adopted this tactic when it consolidated six individual websites into Google Retail, enabling it to double the site’s CTA click-through rate and increase organic traffic by 64%.

Opportunities to consolidate content

Thin content. Google may perceive pages that are light on content, such as help center pages that address only a single question, as not necessarily providing value to users, said Splitt.

“I would try to group these things and structure them in a meaningful way,” he said, noting that if a user has a question, they are likely to have follow-up questions and consolidating this information may make your pages more helpful.

Duplicate content. In the majority of cases, Google doesn’t penalize sites over duplicate content. However, for larger sites, having multiple URLs that host the same content may consume crawl budget and dilute signals, inhibiting a search engine’s ability to index and evaluate your pages. Even if your site is relatively small, identifying and addressing duplicate content can improve your user experience.

While there are many tools that can help you identify duplicate content, “I highly recommend researching duplicate content issues manually to completely understand the nature of the problem and the best way to address it,” said Chris Long, director of e-commerce at Go Fish Digital, suggesting that SEOs perform a Google “site:” search of their domain followed by their core keywords. “If I see pages with similar meta data in Google’s index, this is a red flag that they may be duplicates,” he said.
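Long’s red flag (pages with similar metadata) can be approximated once you have a crawl export of titles and meta descriptions. Below is a minimal sketch using Python’s standard `difflib.SequenceMatcher`. The 0.9 similarity threshold and the sample pages are arbitrary assumptions, and a flagged pair is only a candidate for the manual review Long recommends, not proof of duplication.

```python
from difflib import SequenceMatcher
from itertools import combinations
from typing import Dict, List, Tuple

def normalise(text: str) -> str:
    # Lower-case and collapse whitespace so trivial differences don't hide a match.
    return " ".join(text.lower().split())

def similar_metadata(pages: Dict[str, Tuple[str, str]],
                     threshold: float = 0.9) -> List[Tuple[str, str, float]]:
    """pages maps URL -> (title, meta description); returns pairs with similar metadata."""
    flagged = []
    for (url_a, meta_a), (url_b, meta_b) in combinations(pages.items(), 2):
        a = normalise(" ".join(meta_a))
        b = normalise(" ".join(meta_b))
        ratio = SequenceMatcher(None, a, b).ratio()
        if ratio >= threshold:
            flagged.append((url_a, url_b, round(ratio, 3)))
    return flagged

# Hypothetical crawl export.
pages = {
    "/winterize-sprinkler": ("How to Winterize a Sprinkler System",
                             "Step-by-step guide to winterizing your sprinkler system."),
    "/winterize-sprinklers-2020": ("How to Winterize a Sprinkler System (2020)",
                                   "Step-by-step guide to winterizing your sprinkler system."),
    "/car-safety": ("Car Safety Ratings Explained",
                    "What crash-test scores mean for your next car."),
}
```

Comparing every pair is quadratic, which is fine for a few thousand URLs; much larger sites would need a smarter grouping step first.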

Outdated or obsolete content. Every year, brands and publishers create content predicting trends for the following year. However, 2020 has turned out to be a year unlike any other, rendering most predictions irrelevant.



Google confirms fixing issue with indexing Disqus comments

Google’s Martin Splitt confirmed earlier this week that Google has fixed an issue with indexing and ranking some content found within the Disqus commenting platform. It is not clear how many sites were impacted by this issue, but Google said it is now resolved.

What is Disqus? Disqus is a commenting platform that allows blogs and publishers to add comments to their websites. While a lot of sites no longer allow user-generated comments, Disqus is still a fairly popular platform used to manage, maintain and moderate comments.

The issue. It is not exactly clear how long Google had issues indexing Disqus comments. When Disqus first launched, I know many blog platforms that wanted their comments visible to Google had to use workarounds to get them indexed. But as Google became better at rendering JavaScript, it began to index most Disqus comments by default. But not all.

Glenn Gabe wrote a detailed blog post last December documenting the issues Google had with indexing some comments powered by Disqus.

Google fixed the issue. Last week, Gary Illyes from Google took notice of the issue via a tweet and passed it along to his colleague Martin Splitt. Splitt spotted the issue and escalated it internally within Google. Splitt said on Twitter on June 18th, “This looks like a glitch on our end. Keep an eye on this the next couple of days, it should eventually, possibly, work.”

A couple of days later, on June 20th, Splitt said it is now working and, “It’s been resolved for everyone.”

Hi Martin, Update: We are able to see the comments from that page in Google search now. Thanks. But, we have this same issue on complete domain & sub-domain. And comments from these other pages r not visible in search. Smthing needs to b fixed for these as well at Google’s end?

— Praveen Sharma (@i_praveensharma) June 20, 2020

Big news on the Disqus commenting glitch that @rustybrick covered yesterday. As of this afternoon, I'm seeing Disqus comments being indexed that weren't previously indexed. Looks like the glitch has been fixed based on my testing. More info here: https://t.co/fdke7JPFxo pic.twitter.com/w9p2rnOajQ

— Glenn Gabe (@glenngabe) June 23, 2020

Why we care. If you or your clients use Disqus, you should keep an eye on your rankings and indexing patterns. If the content in those Disqus comments was previously not indexed, you may notice ranking changes, positive or negative, after Google begins indexing it. You may also want to adjust how those comments are displayed to Google, depending on what you see.

The post Google confirms fixing issue with indexing Disqus comments appeared first on Search Engine Land.

Google confirms fixing issue with indexing Disqus comments published first on https://likesfollowersclub.tumblr.com/


Google Chrome Dev Summit 2019 Recap: What SEOs Need to Know

How to Make Your Content Shine on Google Search – Martin Splitt

Talk Summary

In this session, Google developer advocate Martin Splitt explored the latest updates to Google Search that are being used to provide better user experiences and increase website discoverability. He also shared the exciting news that the median time between crawling a page and rendering its JavaScript content is only 5 seconds.

Key Takeaways

The Google user agent is going to be updated soon to reflect the new evergreen Googlebot.
Rendering delays are no longer a big issue that we have to deal with.
We shouldn’t worry about SEO implications when using JavaScript on our websites.
Native lazy loading and web components are now supported by the new rendering engine, and Google’s testing tools now use it too.

What Google has done to make search better

The new evergreen Googlebot

One of the biggest developments to Google Search this year was the announcement of the new evergreen Googlebot at Google I/O earlier this year. This was a huge leap for Google Search: it moved from an old rendering engine based on Chrome 41 to one that can keep up with modern, JavaScript-heavy websites. The update lets Google render pages that use the new features being added to the web platform, and so index content that relies on those technologies. After every new stable release of Google Chrome, Googlebot will also be updated, so that what is seen in Chrome is reflected accurately in Google Search.

The testing tools, such as the Rich Results Test, the Mobile-Friendly Test and GSC’s URL Inspection tool, are also now up to date with the new rendering engine. All of this means that Google Search is now a lot more powerful, because it renders pages with an up-to-date browser, so what Google sees and indexes is the same as what was built. However, one area Google is still working on is the user agent string that appears in server logs when Googlebot accesses content.

Martin explained that as Google works towards maximising compatibility between Google Search and websites, it has to test changes carefully rather than make quick ones, which is why it has moved cautiously on the user agent. The good news is that the update is now expected in December. If you have workarounds for Googlebot, it is important not to match the exact user agent string; instead, match loosely on “Googlebot” so that nothing breaks as updates occur.
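To illustrate the difference, here is a minimal Python sketch of a brittle exact match next to the looser check Splitt recommends. The evergreen user agent below follows the general shape of a mobile Googlebot string, but its Chrome version number is only an example, and that version is exactly the part that changes with each update. Keep in mind that any client can send a Googlebot user agent; if you need to be sure a request really comes from Google, verify it (Google documents a reverse DNS check) rather than trusting the string alone.

```python
import re

# Example strings. The Chrome version inside the evergreen UA is illustrative; it changes over time.
LEGACY_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
EVERGREEN_UA = (
    "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/79.0.3945.120 Mobile Safari/537.36 "
    "(compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)

def is_googlebot_exact(user_agent: str) -> bool:
    """Brittle: recognises one hard-coded string and breaks when Google updates it."""
    return user_agent == LEGACY_UA

# Match the "Googlebot" token anywhere in the string, ignoring case and version numbers.
_GOOGLEBOT = re.compile(r"\bgooglebot\b", re.IGNORECASE)

def is_googlebot_loose(user_agent: str) -> bool:
    """Rough match on the Googlebot token, so a new Chrome version in the UA doesn't break it."""
    return bool(_GOOGLEBOT.search(user_agent or ""))
```

The exact check fails on the evergreen string, while the loose check accepts both.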

JavaScript Rendering

Last year, Martin spoke at the Chrome Dev Summit about how JavaScript can be slower for Google to render and index, sometimes taking up to a week to complete. This year, Martin announced a major update on this: after going over the numbers, Google found that the median time between crawling a page and having the rendered result is only 5 seconds.

This means we no longer have to worry about week-long delays for rendering JavaScript content. However, Martin explained that we should still be prepared to hear that it can take up to a week, because of the way the entire pipeline works. The pipeline that Googlebot runs through is a lot more complicated than we may think: several things happen at once, and the same step may be run multiple times. This isn’t something we should worry about. Delays can occur on Google’s side while it crawls, renders and indexes content, but that doesn’t mean JavaScript was what held things back.
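Why can a 5-second median coexist with reports of week-long waits? A median describes the typical case, not the tail. The delays below are invented purely for illustration and are not Google’s measurements: most pages render within seconds, while a couple of outliers stretch the maximum out to a week.

```python
from statistics import median

# Invented crawl-to-render delays in seconds, for illustration only (not Google data).
delays = [2, 3, 4, 5, 5, 6, 8, 3_600, 604_800]  # 604,800 s = one week

print("median:", median(delays))  # median: 5
print("max:", max(delays))        # max: 604800
```

The two outliers barely move the median, which is why a small typical delay and occasional very long ones can both be true at once.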

There is a lot happening between the two points that we can actually see and measure, and Google handles the processes in the middle so that we don’t have to worry about them.

What can we do to ensure content is rendered faster?

Google is continually working on improving rendering by testing and monitoring what happens in search, to make sure that websites are rendered properly. Steps we can take, together with our developers, include making sure our websites are fast and reliable and that they don’t use too many CPU resources.

Helpful patterns for rendering and indexing

Lazy-loading

Native lazy loading for images and iframes (the loading attribute) is now supported by the evergreen Googlebot, which means these attributes do not hurt a website from an SEO perspective.
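Native lazy loading is opt-in through the `loading="lazy"` attribute on `<img>` and `<iframe>` elements. As a minimal sketch, the audit below uses Python’s standard `html.parser` to list which images and iframes in a page’s HTML carry that attribute. The sample markup is hypothetical, and the audit only inspects the HTML you pass in, not what a browser or Googlebot ends up rendering.

```python
from html.parser import HTMLParser
from typing import List, Tuple

class LazyLoadAudit(HTMLParser):
    """Collects (tag, src, loading) for every <img> and <iframe> in the parsed HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.elements: List[Tuple[str, str, str]] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("img", "iframe"):
            attr_map = dict(attrs)
            self.elements.append(
                (tag, attr_map.get("src") or "", attr_map.get("loading") or "not set")
            )

def audit(html_text: str) -> List[Tuple[str, str, str]]:
    parser = LazyLoadAudit()
    parser.feed(html_text)
    parser.close()
    return parser.elements

# Hypothetical page markup.
page = (
    '<img src="hero.jpg" alt="Hero">'
    '<img src="gallery-1.jpg" loading="lazy" alt="Gallery">'
    '<iframe src="https://example.com/embed" loading="lazy"></iframe>'
)
```

Running `audit(page)` reports the hero image without the attribute and the gallery image and iframe as lazy.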

Web Components

Web components, including Shadow DOM, are also now supported by Googlebot and can be indexed in Google Search.

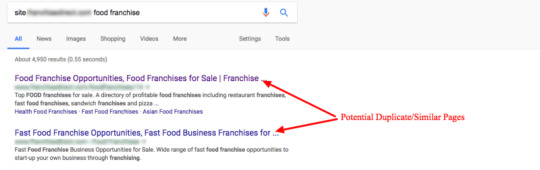

Images in Google Search

Image search is a great place for users to discover content, and images can be easily indexed in Google as long as the HTML image element is used.

Updates to image search

Google is also working towards making image search more useful for users, through better query understanding and by figuring out the context of images. Martin announced an update coming soon to GSC that will let webmasters tell Google, using structured data, that a higher-resolution version of an image is available for use in image search. This will ensure there is no impact on the performance of your website.

Structured data

The new structured data types introduced this year include FAQ and How-to markup, which make it easier for Google to highlight your website within search results and on voice assistants.

Updates to testing tools

All of Google’s testing tools are now up to date with the Googlebot rendering engine, so there are no discrepancies between the results from the testing tools and what Google sees. This means tools such as the Mobile-Friendly Test, the Rich Results Test and the URL Inspection Tool use the actual rendering process Googlebot uses, and they will continue to update as Googlebot updates.

Developers and SEOs

Martin concluded his session by talking about something we at DeepCrawl are also very passionate about: developers and SEOs working together to achieve common goals. By working together, SEOs and developers can become very powerful allies, helping each other with different objectives and prioritisation. This leads to better content discoverability and more traffic to our websites.
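The FAQ markup mentioned in the structured data updates is commonly added as JSON-LD using the schema.org `FAQPage`, `Question` and `Answer` types. Here is a minimal sketch that builds the script tag from question/answer pairs with Python’s `json` module. The sample question is a placeholder, and the output should still be checked against Google’s structured data documentation and the Rich Results Test before it ships.

```python
import json
from typing import List, Tuple

def faq_jsonld(pairs: List[Tuple[str, str]]) -> str:
    """Build a schema.org FAQPage JSON-LD <script> block from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    # Escape "</" so answer text can never close the <script> element early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'

print(faq_jsonld([("Does Googlebot render JavaScript?",
                   "Yes, using an up-to-date Chromium rendering engine.")]))
```

Generating the markup from the same data that renders the visible FAQ keeps the two in sync, which matters because the marked-up questions should match what users see on the page.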

Speed Tooling Evolutions: 2019 and Beyond – Paul Irish and Elizabeth Sweeny

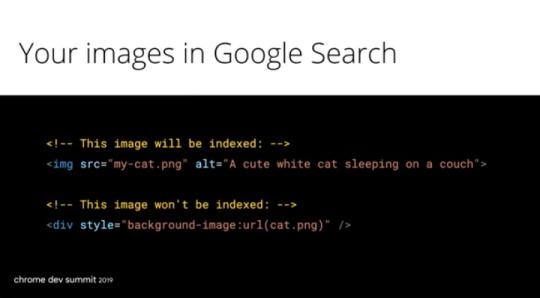

Talk Summary

At Google I/O earlier this year, Paul Irish and Elizabeth Sweeny from the Google Chrome Web Platform team showcased a 15-part blueprint they created to effectively assess and optimise site performance. This talk followed on from that, diving deeper into optimising for the user’s experience and using Lighthouse to monitor, diagnose and fix problems. They also explained how the performance score is calculated and shared the latest metrics and features available within Lighthouse.

Key Takeaways

Make sure you are benchmarking with the new metrics that are now available, as these will form the future of Lighthouse scoring.
Take advantage of the extensibility of Lighthouse with the Stack Packs and Plugin options.
Be mindful of the changes being introduced to Lighthouse and the performance score, which are due to be released early next year.

Measuring the quality of a user’s experience

There are two different elements to measuring the performance of a webpage. On one hand there is lab data, which is collected synthetically in a reproducible testing environment and is crucial for tracking down bugs and diagnosing issues before a user sees them. On the other hand, field data allows you to understand what real-world users are actually experiencing on your site. These are typically conditions that are impossible to simulate in the lab, as there are so many permutations of devices, networks and cache conditions.

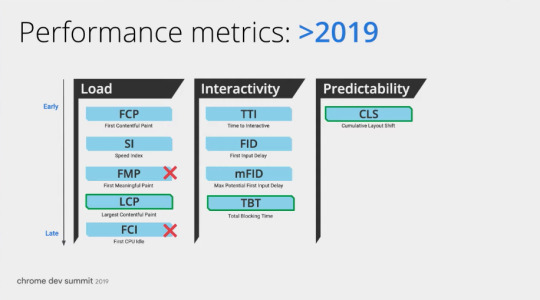

Lab and field data are most powerful when they are used together. Tools including Lighthouse, PageSpeed Insights and Search Console allow for monitoring both lab and field data. Without a clear way to accurately measure the nuances of user experience, it is difficult to quantify its impact on your bottom line or to track improvements and regressions. This is why we need metrics that evolve as our understanding of user experience, and our ability to measure it, develops.

Current performance metrics

The metrics currently used across lab and field data capture the load and interactivity of a page, but Google has identified gaps in them, as well as opportunities to represent a user’s experience more accurately and comprehensively. For example, metrics such as DOMContentLoaded or Onload measure very technical aspects of a page and don’t necessarily correlate with what a user actually experiences.

Performance metrics going forwards

Moving forwards, Google is looking to fill in as many gaps in the measurement of user experience as possible. This includes some significant shifts from current metrics, including a reduced emphasis on First Meaningful Paint and First CPU Idle. Instead, new metrics are being introduced that capture when a page feels usable: Largest Contentful Paint, Total Blocking Time and Cumulative Layout Shift. They are being used to fill in some of the measurement gaps, while complementing existing metrics and providing balance. For example, while Time to Interactive does a good job of identifying when the main thread calms down later in the load, Total Blocking Time aims to quantify how strained the main thread is throughout the load.

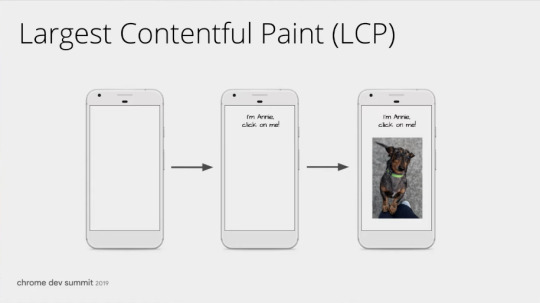

Largest Contentful Paint (LCP) aims to measure when the main, largest piece of content is visible to the user. It catches the beginning of a loading experience by measuring how quickly a user is able to see the main element they expect and want on a page.
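A simplified model of how the LCP value is picked: as the page loads, the browser reports a new candidate each time an element larger than any seen so far is rendered, and it stops reporting candidates once the user interacts. The LCP value is the render time of the last candidate. The sketch below assumes candidates arrive as hypothetical `(render_time_ms, area_px)` pairs; real browsers expose them as `largest-contentful-paint` performance entries and apply more rules than shown here.

```python
from typing import Iterable, Optional, Tuple

def largest_contentful_paint(
    paints: Iterable[Tuple[float, int]],
    first_input_ms: Optional[float] = None,
) -> Optional[float]:
    """Simplified LCP: render time of the largest element painted before the first user input.

    paints: (render_time_ms, area_px) for each element as it is painted.
    """
    best_area = 0
    lcp = None
    for time_ms, area in sorted(paints):
        if first_input_ms is not None and time_ms >= first_input_ms:
            break  # candidates stop being reported once the user interacts
        if area > best_area:
            best_area, lcp = area, time_ms
    return lcp
```

With paints at 100 ms (5,000 px), 400 ms (20,000 px) and 900 ms (15,000 px), the result is 400 ms, because the later, smaller paint does not replace the largest one.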

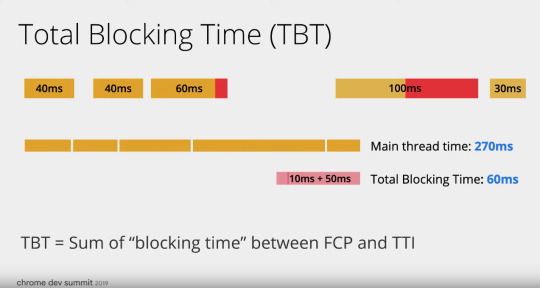

Cumulative Layout Shift (CLS) measures the predictability of a user experience, complementing the load and interactivity metrics. Essentially, CLS measures how much elements move around during load. Just a few pixels of movement can be the difference between a happy user and complete mayhem, with accidental clicks or images shifting on a page while it loads.

Total Blocking Time (TBT) is used to quantify the risk posed by long tasks. A long task, such as a JavaScript task that is loading an element on a page, can delay the page’s response to a user’s interaction, and TBT measures that potential input delay. Essentially, it is the sum of “blocking time” between First Contentful Paint (FCP) and Time to Interactive (TTI).
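Both definitions reduce to simple arithmetic. In the sketch below, main-thread tasks are assumed to be `(start_ms, duration_ms)` pairs, a task counts as long when it exceeds 50 ms, and only the portion beyond 50 ms counts as blocking. Tasks are clipped to the FCP-to-TTI window; Lighthouse’s own implementation may handle edge cases differently. The CLS part scores each shift as impact fraction multiplied by distance fraction and sums the unexpected shifts, which matches the metric as introduced here (Google later changed how shifts are grouped).

```python
from typing import Iterable, Tuple

LONG_TASK_MS = 50  # main-thread tasks longer than this block input handling

def total_blocking_time(tasks: Iterable[Tuple[float, float]],
                        fcp_ms: float, tti_ms: float) -> float:
    """Sum of the time beyond 50 ms of each long task, counted between FCP and TTI."""
    tbt = 0.0
    for start, duration in tasks:
        # Clip the task to the [FCP, TTI] window; tasks outside it come out negative.
        clipped = min(start + duration, tti_ms) - max(start, fcp_ms)
        if clipped > LONG_TASK_MS:
            tbt += clipped - LONG_TASK_MS
    return tbt

def layout_shift_score(impact_fraction: float, distance_fraction: float) -> float:
    """One shift: share of the viewport affected x distance moved (as a share of the viewport)."""
    return impact_fraction * distance_fraction

def cumulative_layout_shift(shifts: Iterable[Tuple[float, float]]) -> float:
    """Early CLS definition: the sum of all unexpected layout shift scores."""
    return sum(layout_shift_score(i, d) for i, d in shifts)
```

For tasks lasting 250, 90, 35, 30 and 155 ms inside the window, the blocking portions are 200, 40, 0, 0 and 105 ms, so TBT is 345 ms.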

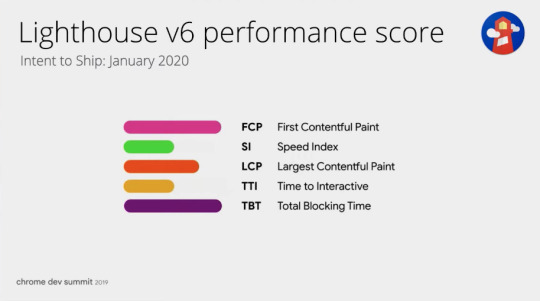

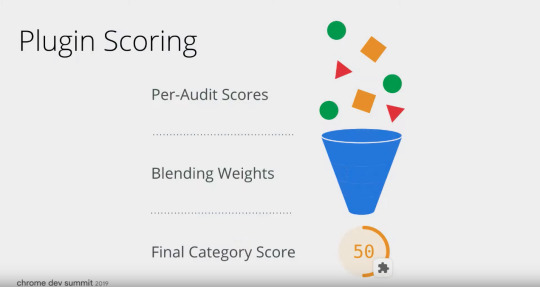

You can find out more about all the metrics used in Lighthouse, and how to measure them, on their dedicated landing page.

How the Lighthouse score is calculated

The current Lighthouse Performance Score is made up of 5 key metrics that are weighted and blended together to form the 0 to 100 performance score shown at the top of the report.

However, this score is going to be updated soon, as Google works to ensure the scoring curves and metrics reflect future advancements. The update will replace First CPU Idle and First Meaningful Paint with Largest Contentful Paint and Total Blocking Time.
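The blending step is a weighted average: each metric’s raw value is first mapped to a score between 0 and 1, those scores are combined using per-metric weights, and the result is scaled to 0 to 100. Below is a minimal sketch of that final step only. The metric names come from the text, but the weights and per-metric scores are placeholders, not Lighthouse’s actual values, and the curves that turn raw timings into 0 to 1 scores are omitted.

```python
from typing import Dict

def performance_score(metric_scores: Dict[str, float], weights: Dict[str, float]) -> int:
    """Weighted average of per-metric scores (each 0-1), scaled to a 0-100 score."""
    missing = set(weights) - set(metric_scores)
    if missing:
        raise ValueError(f"missing metric scores: {sorted(missing)}")
    total_weight = sum(weights.values())
    blended = sum(metric_scores[m] * w for m, w in weights.items()) / total_weight
    return round(blended * 100)

# Placeholder weights for illustration only (not Lighthouse's real weighting).
WEIGHTS = {
    "first-contentful-paint": 1,
    "speed-index": 1,
    "largest-contentful-paint": 2,
    "time-to-interactive": 1,
    "total-blocking-time": 2,
}
```

Because it is a weighted average, a poor score on a heavily weighted metric drags the total down more than the same score on a lightly weighted one, which is why reweighting alone can move a page’s score.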

The update isn’t just about reflecting the new LCP and TBT metrics: Google is also adjusting the weights to be more balanced between the phases of load and interactivity measurement.

Updates to PageSpeed Insights

To measure a user’s experience as accurately as possible, Google has also added a re-analyze prompt to PageSpeed Insights for sites that have been redirected to a new URL. The prompt invites webmasters to re-run the report for the new URL, to get the full story of the page from the redirect through to the new page, and to gain trend insights.

Extending Lighthouse

There are two ways you can build on top of Lighthouse to leverage it for your own use cases, depending on your goals.

Stack Packs

These allow stack-specific advice to be served within the Lighthouse report itself, by automatically detecting whether you are using a CMS or a framework and providing guidance specific to your stack. They take the core audits of Lighthouse and layer customised recommendations, curated by a community of experts, on top of them. Google announced it is launching further support for stacks including Angular, WordPress, Magento, React and AMP.

Plugins

Plugins allow the community to build their own extensions, beyond the core audits offered in Lighthouse, specific to what they want to achieve. These could assess things such as security, UX and even custom metrics. Once implemented, a plugin adds a new set of checks that are run through Lighthouse and added to the report as a whole new category. Plugins also allow for customised scoring, so you can decide how things are weighted.

Lighthouse CI

We all know how important it is to make sure that fixes to websites are viable and sustainable, and to be able to show this to the teams we work with. This is why Google introduced Lighthouse CI, which lets developers run Lighthouse on every commit before it is pushed to production. This gives all teams transparency and confidence about the potential impact of changes.
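Lighthouse CI has its own configuration and assertion format, which isn’t reproduced here. To show the underlying idea, here is a minimal sketch of a commit gate in Python that reads a Lighthouse JSON report and fails when the performance category score or a metric’s `numericValue` misses a budget. The report fields used (`categories.performance.score`, `audits[<id>].numericValue`) follow Lighthouse’s JSON output; the budget numbers are assumptions to adjust for your own site.

```python
import json
from typing import Dict, List

# Hypothetical budgets: minimum category score (0-1) and maximum metric values in ms.
MIN_PERFORMANCE_SCORE = 0.9
MAX_METRIC_MS: Dict[str, float] = {
    "largest-contentful-paint": 2500,
    "total-blocking-time": 300,
}

def budget_failures(report: dict) -> List[str]:
    """Return a human-readable list of budget violations for one Lighthouse report."""
    failures = []
    score = report["categories"]["performance"]["score"]
    if score is None or score < MIN_PERFORMANCE_SCORE:
        failures.append(f"performance score {score} < {MIN_PERFORMANCE_SCORE}")
    for audit_id, limit in MAX_METRIC_MS.items():
        value = report["audits"].get(audit_id, {}).get("numericValue")
        if value is None:
            failures.append(f"{audit_id}: no value in report")
        elif value > limit:
            failures.append(f"{audit_id}: {value:.0f} ms > {limit:.0f} ms")
    return failures

def main(report_path: str) -> int:
    """Load a saved JSON report; return 1 (fail the CI step) on any violation, else 0."""
    with open(report_path, encoding="utf-8") as fh:
        problems = budget_failures(json.load(fh))
    for problem in problems:
        print("FAIL:", problem)
    return 1 if problems else 0
```

In a CI job, you might save a report with the Lighthouse CLI’s `--output=json` option and then call `sys.exit(main(path))`, so a non-zero exit code blocks the commit.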

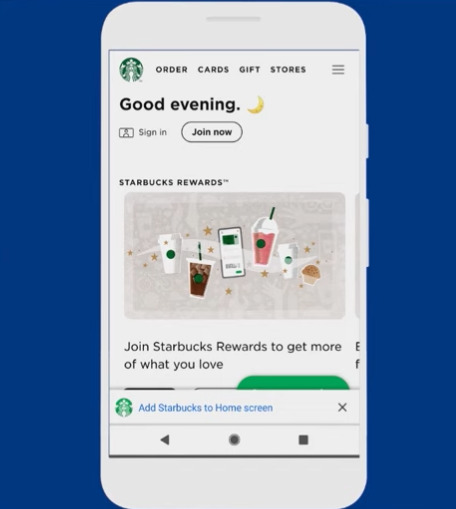

PWA and the Installable Web – PJ Mclachlan & Sam Thorogood

Talk Summary

In this session, Google’s PJ Mclachlan and Sam Thorogood explored how to ensure PWAs are created in a way that lives up to users’ expectations, and shared some best-practice recommendations for promoting web apps.

Key Takeaways

When creating web apps, it is important to live up to users’ expectations and provide a seamless experience.
Not every user is going to benefit from using a PWA, so make sure you define the best audience to promote it to.
When promoting a web app on a site, focus on the benefits of installing it and make sure you are not interrupting a user’s flow.

What is web install?

Web install, also known as “Add to Homescreen”, is a way to tell users that the website experience is made for their device. However, this could mean one of two things: either it’s just a bookmark being added to the home screen, or it’s an actual integrated experience on the device. PWAs allow your website to launch from the home screen in a way that is indistinguishable from a native experience.

An installed web app is designed to look and feel exactly the same as every other app on a device. It shouldn’t matter to users whether they installed it from an app store or from the browser itself.

Living up to users’ expectations

When asking users to install a web app, you are telling them that it is an experience meant for the device they are using, which means it needs to live up to their expectations. PWAs are a pattern for great apps built with web technology, as they have all the features and functionality users expect from a modern app on a mobile or desktop device.

The goal of a PWA is to build directly on the web, so that only one code base needs to be maintained, and it works for users both in browsers and through the installed app.

Designing for specific users

Not every user is going to benefit from the extra power of web apps, however, so it is important to define the audience you expect to benefit the most from your PWA. This is done with a funnel approach that defines actions signalling strong user intent. For example, you might only want to promote the install option to users who frequently visit your site. Most importantly, focus on the user and on why a web app is going to be beneficial for them. You will also want to track the right events in Google Analytics, so you know what you can optimise, and promote web install where it makes sense for a user’s device.

Acquiring more installed users

There are a few things you can do to help acquire more installed users, including avoiding channel conflicts and understanding the best time to present users with the option to install.

Avoid channel conflicts

When promoting your PWA, you want to make sure you’re not creating channel conflicts by promoting it to people who are currently native app users. There are a number of reasons a user might not be using your native app but might find benefits in using your web app. The main example is storage, as native apps can take up a lot of space on a user’s device. Web apps, on the other hand, only require a small amount of storage, and most of the storage they use can be purged dynamically by the browser. Google provides the getInstalledRelatedApps() API, which lets you check in JavaScript whether a user already has your native app installed.

Hide the mini infobar

Most PWA installs happen from the mini infobar; however, this was only intended to be temporary, as it doesn’t provide the best user experience. Because it is generated by the browser, it has no way of knowing the best time to present users with the option to install. To make sure the mini infobar doesn’t appear, you can add a listener for the beforeinstallprompt event and call its preventDefault() method.
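The audience rules described above (promote install only to engaged users, and not to people who already use your native app) can be expressed as a small decision function. Here is a minimal sketch. The `Visitor` fields and the visit threshold are hypothetical; in a real page, `has_native_app` would come from a browser-side check such as `getInstalledRelatedApps()`, and `can_prompt` stands in for whether the browser has fired `beforeinstallprompt`.

```python
from dataclasses import dataclass

@dataclass
class Visitor:
    visits_last_30_days: int   # hypothetical engagement signal
    has_native_app: bool       # e.g. result of a getInstalledRelatedApps() check in the browser
    can_prompt: bool           # whether the browser has fired beforeinstallprompt
    already_installed: bool    # e.g. the session was launched from the home screen

MIN_VISITS = 3  # arbitrary threshold for a "frequent visitor"

def should_promote_install(v: Visitor) -> bool:
    """Offer the install UI only when it is likely to help this user."""
    if v.already_installed or not v.can_prompt:
        return False
    if v.has_native_app:
        return False  # avoid a channel conflict with the native app
    return v.visits_last_30_days >= MIN_VISITS
```

Keeping the rules in one function makes it easy to A/B test a different threshold or signal later without touching the install UI itself.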

It's also important to implement analytics when doing the above, in order to understand your users' behaviour with respect to install prompts. The three important events to track are:

When the site qualifies for install, via the beforeinstallprompt event.
When the prompt has been triggered, for example by a 'click' event.
When the app install has completed successfully, via the appinstalled event.

UX Considerations

There are several UX elements you need to consider when creating a web app, including:

Disallowing navigation to other domains outside of your site, as this can provide a bad experience for users. Instead, make sure you always open external content in a new window.
Providing better behaviour for actions such as copy and paste, select all, and undo or redo.
Disabling the right-click menu in order to improve the user experience across devices.

Where to promote web apps

Within the header

When using the header to promote web app installs, use information architecture and A/B testing to determine whether it makes sense to place the install option there. If you do display it in the header, ensure it doesn't take precedence over other critical functionality.

Hamburger menu

Another useful place for the install prompt is the hamburger menu, because a user opening it is a strong signal that they are engaged with the website. However, ensure that it doesn't add clutter to the menu or take precedence over other actions a user might want to take.

Landing Page

A landing page is also a great place to promote the install option, as it is a place where you are explicitly marketing your content and can focus on informing users of the benefits of installing your web app.

Key engagement moments

Promoting the install option at key moments of engagement in a user's journey helps encourage users to install, as they are showing genuine interest in what you are offering.
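The three analytics events listed above, combined with showing the deferred prompt from your own button at a key engagement moment, could be wired up roughly like this. It is a hedged sketch: `setupInstallTracking` and the event names passed to `track` are hypothetical, `track` stands in for whatever analytics call you already use (for example a Google Analytics wrapper), and `BeforeInstallPromptEvent` is declared minimally because it is a non-standard Chromium event.

```typescript
// Minimal shape of Chromium's non-standard BeforeInstallPromptEvent (assumed; not in TypeScript's DOM lib).
interface BeforeInstallPromptEvent extends Event {
  prompt(): Promise<void>;
  readonly userChoice: Promise<{ outcome: "accepted" | "dismissed"; platform: string }>;
}

// Stand-in for your analytics call; the event names used below are illustrative.
type Track = (eventName: string, params?: Record<string, string>) => void;

function setupInstallTracking(win: EventTarget, installButton: EventTarget, track: Track): void {
  let deferred: BeforeInstallPromptEvent | null = null;

  // 1. The site qualified for install.
  win.addEventListener("beforeinstallprompt", (event) => {
    event.preventDefault(); // keep the mini infobar hidden
    deferred = event as BeforeInstallPromptEvent;
    track("install_qualified");
  });

  // 2. The user triggered the prompt from our own button (e.g. shown at a key engagement moment).
  installButton.addEventListener("click", async () => {
    if (deferred === null) return; // not eligible, or already prompted
    const pending = deferred;
    deferred = null; // the same event cannot be prompted twice
    track("install_prompted");
    await pending.prompt();
    const choice = await pending.userChoice;
    track("install_choice", { outcome: choice.outcome });
  });

  // 3. The install completed, whether it came from our prompt or the browser's own UI.
  win.addEventListener("appinstalled", () => {
    track("install_completed");
  });
}
```

Recording the user's choice separately from the completed install lets you see how many prompts were dismissed, not just how many succeeded.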
The main principles for promoting PWA install

Don't be annoying: don't interrupt a user's flow or make it difficult for them to complete the task they came to your website for.
If the web app is not going to benefit the user, don't promote the install option to them.
Use context to help the user understand the value of installing your app.


Official: Google, SEO, and JavaScript

In a video for beginners, Google's Martin Splitt talked about JavaScript and SEO. Here are the main facts from that video.

Google wants to show unique URLs

If a dynamically created web page does not have a unique URL, Google has difficulty showing it in the search results. For that reason, each of your web pages should have its own URL. Do not create a JavaScript website that uses the same URL for all of its pages: "We only index pages that can be jumped right into. I click on the main navigation for this particular page and then I click on this product and then I see and everything works. But that might not do the trick because you need that unique URL. It has to be something we can get right to. Not using a hashed URL and also the server needs to be able to serve that right away. If I do this journey and then basically take this URL and copy and paste it into an incognito browser... I want people to see the content. Not the home page and not a 404 page."
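One way to check the "no hashed URL" point is to test whether a route lives in the URL fragment. The sketch below is a hypothetical helper, not a Google tool. It relies on the fact that browsers never send the fragment (`#...`) to the server, so the server cannot return the right content for `/#/products/42`, whereas `/products/42` can be served directly.

```typescript
// Returns true when the URL's route is carried in the fragment, e.g. /#/products/42 or /#!/products/42.
// Plain in-page anchors such as /guide#installation are not treated as routes.
function usesHashRouting(rawUrl: string): boolean {
  const url = new URL(rawUrl); // WHATWG URL, global in browsers and Node
  return /^#!?\//.test(url.hash);
}

// Collect the URLs in a crawl or sitemap export that rely on hash routing.
function findHashRoutedUrls(urls: string[]): string[] {
  return urls.filter(usesHashRouting);
}
```

A single-page app can keep client-side navigation with `history.pushState()` while using path-based URLs, provided the server also answers those paths directly.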

You should pre-render your JavaScript pages for Googlebot

Pre-rendering your web pages means that your web server processes much of the JavaScript before the page is sent to the user and/or the search engine crawler. Search engine crawlers get much more parseable content if you pre-render your JavaScript web pages. Pre-rendering is also good for website visitors: "I think most of the time it is, because it has benefits for the user on top of just the crawlers. Giving more content over is always a great thing. That doesn't mean that you should always give us a page with a bazillion images right away, because that's just not going to be good for the users. It should always be a mix between getting as much crucial content in as possible, but then figuring out which content you can load lazily in the end of it. So for SEOs that would be, you know, we know that different queries are different intents. Informational, transactional... so elements critical to that intent should really be in that initial rush."
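To illustrate "crucial content in the initial HTML, the rest loaded lazily", here is a minimal server-side rendering sketch. The `Product` shape, `renderProductPage`, and `/app.js` are hypothetical. The point is only that the intent-critical text (name, price, description) is already in the HTML the server sends, while gallery images use the browser's native `loading="lazy"` attribute.

```typescript
// Hypothetical product data for a transactional page.
interface Product {
  name: string;
  price: string;
  description: string;
  galleryImages: string[];
}

// Escape text before placing it into HTML.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

function renderProductPage(p: Product): string {
  const name = escapeHtml(p.name);
  // Non-critical images: the browser defers them until they approach the viewport.
  const gallery = p.galleryImages
    .map((src) => `<img src="${escapeHtml(src)}" alt="${name}" loading="lazy">`)
    .join("\n");
  // Crucial content sits in the HTML itself, so it is visible without running JavaScript.
  return `<!doctype html>
<html>
<head><title>${name}</title></head>
<body>
<h1>${name}</h1>
<p class="price">${escapeHtml(p.price)}</p>
<p>${escapeHtml(p.description)}</p>
${gallery}
<script src="/app.js" defer></script>
</body>
</html>`;
}
```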

JavaScript should not make a page slower

If fancy JavaScript effects make your website slower, it's better to avoid them. Website speed is important, and Google doesn't like slow-loading pages. "As a user, I don't care about if the first byte has arrived quicker, if I'm still looking at a blank page because JavaScript is executing or something is blocking a resource."

More detailed information about JavaScript and SEO 🏆

The video was aimed at beginners. During the past few months, Google has published a lot of information about JavaScript and SEO. You can find it on our blog. For example, you should avoid client-side rendering, and you shouldn't trigger lazy-loading elements with scroll events (search engines do not scroll). If possible, serve content without JavaScript. Google will not follow links that exist only in JavaScript, such as a click handler with no real URL in an <a href> attribute. If you want to get high rankings, avoid content that is served by JavaScript only. Google says that it's difficult to process JavaScript.
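Google's documentation says it follows links that are `<a>` elements with an `href` attribute. A hypothetical audit helper in that spirit might flag link candidates like this. The `LinkCandidate` shape is an assumption, and the rules are a simplification for illustration, not Google's actual logic.

```typescript
// Hypothetical description of an element that acts as a link.
interface LinkCandidate {
  tagName: string; // e.g. "A", "SPAN", "BUTTON"
  href?: string; // value of the href attribute, if any
}

function isCrawlableLink(link: LinkCandidate): boolean {
  if (link.tagName.toLowerCase() !== "a") return false; // e.g. <span onclick>: no URL to follow
  const href = (link.href ?? "").trim();
  if (href === "" || href === "#") return false; // nothing to follow
  if (href.toLowerCase().startsWith("javascript:")) return false; // URL only runs script
  return true;
}

function findUncrawlableLinks(links: LinkCandidate[]): LinkCandidate[] {
  return links.filter((link) => !isCrawlableLink(link));
}
```

In a browser console, candidates could be gathered from the rendered page with something like `Array.from(document.querySelectorAll("a, [onclick]"), (el) => ({ tagName: el.tagName, href: el.getAttribute("href") ?? undefined }))`.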

Check how search engine crawlers see your web pages

Use the website audit tool in SEOprofiler to check how search engines see the content of your web pages. If the website audit tool cannot find the content of your web pages, there are issues on your website that have to be fixed.
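Alongside an audit tool, a rough do-it-yourself check is to look for key content in the HTML the server returns before any JavaScript runs. This sketch is hypothetical and deliberately crude: the regex-based tag stripping does not decode HTML entities and is not a real HTML parser, so treat it as a quick smoke test. You would pass it the response body obtained with, for example, `fetch(url)` or `curl`.

```typescript
// Approximate the text a reader sees in raw HTML, ignoring script and style bodies.
function visibleTextFromHtml(html: string): string {
  return html
    .replace(/<script[\s\S]*?<\/script>/gi, " ") // script bodies are not page content
    .replace(/<style[\s\S]*?<\/style>/gi, " ")
    .replace(/<[^>]+>/g, " ") // drop remaining tags
    .replace(/\s+/g, " ")
    .trim();
}

// True if the phrase is present before any client-side rendering happens.
function phraseInInitialHtml(html: string, phrase: string): boolean {
  return visibleTextFromHtml(html).toLowerCase().includes(phrase.toLowerCase());
}
```

If a phrase you can see in the browser is missing here, that content is probably being added by JavaScript.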


Google Explains Rendering and Impact on SEO via @sejournal, @martinibuster

Google's Martin Splitt explains rendering and how it impacts SEO

The post Google Explains Rendering and Impact on SEO appeared first on Search Engine Journal.

from The SEO Advantages https://www.searchenginejournal.com/google-rendering-seo/422749/


How content consolidation can help boost your rankings

If your brand has been creating content for some time, you’ll likely reach a point where some of your newer content overlaps with existing content. Or you may have several related, but relatively thin pieces you’ve published. Since many people have been sold on the idea that more content is better, the potential problem here may not be obvious.

If left unchecked, this may create a situation in which you have numerous pieces of content competing for the same search intent. Most of the time, Google’s algorithms aim to show no more than two results from the same domain for a given query. This means that, while you’re probably already competing against other businesses in the search results, you might also be competing against yourself and not showcasing your best work.

Why content consolidation should be part of your SEO strategy

Consolidating content can help you get out of your own way and increase the chances of getting the desired page to rank well in search results. It can also improve your link building efforts since other sites will only have one version of your content to point back to.

And, it can also make it easier for users to locate the information they’re looking for and help you get rid of underperforming content that may be doing more harm than good.

Nervous about content consolidation? Think quality over quantity. When asked by Lily Ray of Path Interactive during a recent episode of Google’s SEO Mythbusting series if it makes sense to consolidate two similar pieces into one article and “doing a lot of merging and redirecting” for SEO, Martin Splitt, developer advocate at Google, replied, “Definitely.”

Source: Google.

In fact, Google itself adopted this tactic when it consolidated six individual websites into Google Retail, enabling it to double the site’s CTA click-through rate and increase organic traffic by 64%.

Opportunities to consolidate content

Thin content. Google may perceive pages that are light on content, such as help center pages that address only a single question, as not necessarily providing value to users, said Splitt.

“I would try to group these things and structure them in a meaningful way,” he said, noting that if a user has a question, they are likely to have follow-up questions and consolidating this information may make your pages more helpful.

Duplicate content. In the majority of cases, Google doesn’t penalize sites over duplicate content. However, for larger sites, having multiple URLs that host the same content may consume crawl budget and dilute signals, inhibiting a search engine’s ability to index and evaluate your pages. Even if your site is relatively small, identifying and addressing duplicate content can improve your user experience.

While there are many tools that can help you identify duplicate content, “I highly recommend researching duplicate content issues manually to completely understand the nature of the problem and the best way to address it,” said Chris Long, director of e-commerce at Go Fish Digital, suggesting that SEOs perform a Google “site:” search of their domain followed by their core keywords. “If I see pages with similar meta data in Google’s index, this is a red flag that they may be duplicates,” he said.
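Long's red flag (similar metadata across indexed pages) can be approximated over a crawl export. This is a hedged sketch, not his method: the `PageMeta` shape is assumed, the normalization (lowercase, ASCII letters and digits only) is an arbitrary illustrative choice, and it only catches titles and descriptions that match after normalization. It is a starting point for the manual review he recommends, not a replacement for it.

```typescript
// Hypothetical row from a site crawl export.
interface PageMeta {
  url: string;
  title: string;
  description: string;
}

// Lowercase and collapse punctuation/whitespace so trivial differences don't hide duplicates.
function normalizeMeta(s: string): string {
  return s.toLowerCase().replace(/[^a-z0-9]+/g, " ").trim();
}

// Group URLs whose normalized title and description are identical.
function findDuplicateMeta(pages: PageMeta[]): string[][] {
  const groups = new Map<string, string[]>();
  for (const page of pages) {
    const key = `${normalizeMeta(page.title)}|${normalizeMeta(page.description)}`;
    const urls = groups.get(key) ?? [];
    urls.push(page.url);
    groups.set(key, urls);
  }
  return Array.from(groups.values()).filter((urls) => urls.length > 1);
}
```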

Outdated or obsolete content. Every year, brands and publishers create content predicting trends for the following year. However, 2020 has turned out to be a year unlike any other, rendering most predictions irrelevant.

A user stumbling onto your outdated predictions post is more likely to bounce from your site, taking their business along with them. Removing the outdated content from your site may be a better option than leaving it up, or you can update that article with new predictions and change the publication date if you’ve made substantial changes to the copy. This is just one example of how an old or obsolete piece of content may be repurposed to keep your content flywheel going.

Content that doesn’t get any traffic. You can use Google Search Console and Google Analytics to identify which pieces of content are failing to help you reach your business goals.

“If you are seeing that you get a lot of impressions, but not that many clicks, you might want to change something about the content,” Splitt said, “If you are getting a lot of clicks through it but then you see in your analytics that actually not much action happens, then you can ask yourself, ‘Is the traffic worth it or do I need to change my content there?’”

Using these tools to keep tabs on your page views, bounce rates and other engagement metrics can shine a spotlight on the pieces of content that may be right for consolidation or outright removal.
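Splitt's first heuristic (many impressions, few clicks) amounts to filtering a Search Console performance export by click-through rate. A hedged sketch, assuming rows already exported with page, impressions and clicks; `MIN_IMPRESSIONS` and `MAX_CTR` are arbitrary illustrative thresholds, not Google guidance.

```typescript
// Hypothetical row from a Search Console performance export.
interface PageStats {
  page: string;
  impressions: number;
  clicks: number;
}

// Illustrative thresholds; tune them to your own traffic.
const MIN_IMPRESSIONS = 1000;
const MAX_CTR = 0.01;

// Pages that are seen often in search but rarely clicked: candidates for rework or consolidation.
function lowCtrPages(rows: PageStats[]): string[] {
  return rows
    .filter((r) => r.impressions >= MIN_IMPRESSIONS && r.clicks / r.impressions < MAX_CTR)
    .map((r) => r.page);
}
```

Requiring a minimum number of impressions avoids dividing by zero and keeps pages with too little data from being flagged.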

How to consolidate your content

Remove content that doesn’t provide value. “If it’s very thin content, then . . . we might just spend crawl budget on pages that are, in the end, not performing or not even being indexed anymore,” Splitt said during SEO Mythbusting, adding, “It is usually a good idea to see [whether a] piece of content really does not perform well; let’s take it down or at least change it.”

As mentioned above, Google Search Console and Analytics can be used to identify which pieces of content may just be taking up crawl budget or cannibalizing your keywords without providing any value to your audience.

Combine content that serves a similar purpose. Users typically have more than one question, and those questions are usually related to whatever stage they’re currently at in their buyer’s journey. For example, if someone has just started to think about buying a new car, they’ll probably want to learn about its fuel economy, safety ratings, special features, and other similar car models.

Instead of having numerous articles addressing each of these particular questions, brands and publishers could consolidate this information as it is all pertinent to the same stage of the journey that the user is in. This can reduce the amount of content you have competing over the same (or similar) keyword sets, and it will also improve your user experience by centralizing all the information that a potential customer may need onto one page.

You can then implement 301 redirects, canonical or noindex tags on the pages that have become redundant to consolidate ranking signals.
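For the car-buying example above, the redirect and canonical options might look like this. It is a minimal sketch: the URLs and function names are hypothetical, and in practice redirects are usually configured in your web server or CDN rather than in application code. The third option, a noindex tag, is `<meta name="robots" content="noindex">` in the redundant page's head.

```typescript
// Hypothetical mapping from retired URLs to the page they were merged into.
const consolidatedInto = new Map<string, string>([
  ["/car-fuel-economy", "/new-car-buyers-guide"],
  ["/car-safety-ratings", "/new-car-buyers-guide"],
]);

interface RedirectResponse {
  status: 301;
  location: string;
}

// Return a permanent redirect for a retired URL, or null to serve the path normally.
function resolveConsolidated(path: string): RedirectResponse | null {
  const target = consolidatedInto.get(path);
  return target ? { status: 301, location: target } : null;
}

// For a page that stays live but duplicates the main one, point its canonical at the main page.
function canonicalLinkTag(origin: string, canonicalPath: string): string {
  return `<link rel="canonical" href="${new URL(canonicalPath, origin).href}">`;
}
```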


Refresh existing pages (instead of creating new ones). In some industries, the landscape changes every year (for example, in the automotive or smartphone industries, where new models are released annually), meaning that new content is required even if you're publishing about the same topic.

In other industries, however, there may be an opportunity to update existing content instead of creating something from scratch that heavily overlaps with what you’ve already published. For example, an instructional article on how to winterize a sprinkler system is less likely to contain different information year-to-year.

“I wouldn’t create a new page that basically says the same thing because, especially when they’re really similar, [Google] might just think one is a duplication of the other and then canonicalize them together, no matter what you do in canonical tags,” Splitt said, suggesting instead that marketers update the existing content and reposition it more prominently on your site for visitors to see.


The post How content consolidation can help boost your rankings appeared first on Search Engine Land.

How content consolidation can help boost your rankings published first on https://likesandfollowersclub.weebly.com/


Lazy loading to improve crawlability and indexing

What is lazy loading?

Lazy loading (or "deferred loading") is a technique that speeds up a page by deferring the loading of large elements, such as images, that are not needed immediately. Instead, these elements are loaded later, if and when they become visible.
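Lazy loading doesn't have to depend on scroll events, which crawlers don't trigger. A minimal sketch using the browser's native `loading="lazy"` attribute: the helper below rewrites an HTML string so that every image after the first `eagerCount` is marked lazy, leaving images that already set `loading` untouched. `addNativeLazyLoading` and the default of one eager image are assumptions for illustration. A real template would usually set the attribute directly, and this regex approach is not a full HTML parser (an attribute value containing `>` would confuse it).

```typescript
// Mark all images after the first `eagerCount` as natively lazy-loaded.
function addNativeLazyLoading(html: string, eagerCount = 1): string {
  let seen = 0;
  return html.replace(/<img\b([^>]*)>/gi, (tag: string, attrs: string) => {
    seen += 1;
    // Keep likely above-the-fold images eager, and respect an explicit loading attribute.
    if (seen <= eagerCount || /\bloading\s*=/i.test(attrs)) return tag;
    return `<img loading="lazy"${attrs}>`;
  });
}
```

Keeping the first image eager avoids delaying what is probably the most visible image on the page.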