#Microservice Monitoring and Logging


What are the challenges faced when monitoring and logging in microservice architecture?

Inadequate monitoring and logging make it challenging to identify and troubleshoot issues within microservices. Solution: Implement centralized logging and monitoring solutions for comprehensive visibility. Utilize distributed tracing to track requests across multiple microservices. Terminology: Centralized Logging And Monitoring: such as the ELK Stack (Elasticsearch, Logstash, Kibana),…
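As a rough illustration of the idea, the sketch below assumes every service writes structured JSON records to one shared store and passes a trace ID along with each request. The service names, `handle_order`, `charge_payment`, and the in-memory `CENTRAL_LOG` list are hypothetical stand-ins; a real setup would ship the records to a centralized stack instead.

```python
import json
import uuid

# Hypothetical stand-in for a centralized log store (e.g. a search index).
CENTRAL_LOG = []


def log_event(service, trace_id, message):
    """Append one structured log record to the central store."""
    record = {"service": service, "trace_id": trace_id, "message": message}
    CENTRAL_LOG.append(json.dumps(record))


def charge_payment(trace_id, amount):
    """Downstream service: reuses the caller's trace ID instead of creating a new one."""
    log_event("payment-service", trace_id, f"charging {amount}")
    return True


def handle_order(amount):
    """Entry-point service: creates the trace ID and passes it downstream."""
    trace_id = uuid.uuid4().hex
    log_event("order-service", trace_id, "order received")
    charge_payment(trace_id, amount)
    log_event("order-service", trace_id, "order completed")
    return trace_id


def logs_for_trace(trace_id):
    """Reassemble one request's path across services from the central store."""
    records = [json.loads(line) for line in CENTRAL_LOG]
    return [r for r in records if r["trace_id"] == trace_id]
```

Filtering the shared store by trace ID is what lets you follow a single request through several services, which is the core of distributed tracing.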


Integrating Third-Party Tools into Your CRM System: Best Practices

A modern CRM is rarely a standalone tool — it works best when integrated with your business's key platforms like email services, accounting software, marketing tools, and more. But improper integration can lead to data errors, system lags, and security risks.

Here are the best practices developers should follow when integrating third-party tools into CRM systems:

1. Define Clear Integration Objectives

Identify business goals for each integration (e.g., marketing automation, lead capture, billing sync)

Choose tools that align with your CRM’s data model and workflows

Avoid unnecessary integrations that create maintenance overhead

2. Use APIs Wherever Possible

Rely on RESTful or GraphQL APIs for secure, scalable communication

Avoid direct database-level integrations that break during updates

Choose platforms with well-documented and stable APIs

Custom CRM solutions can be built with flexible API gateways

3. Data Mapping and Standardization

Map data fields between systems to prevent mismatches

Use a unified format for customer records, tags, timestamps, and IDs

Normalize values like currencies, time zones, and languages

Maintain a consistent data schema across all tools
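A minimal sketch of field mapping and normalization, assuming the third-party tool sends ISO 8601 timestamps with a UTC offset. The field names in `FIELD_MAP` and the function `to_crm_record` are hypothetical and only illustrate the pattern.

```python
from datetime import datetime, timezone

# Hypothetical mapping from a third-party tool's field names to CRM field names.
FIELD_MAP = {"EmailAddress": "email", "FullName": "name", "SignupTime": "created_at"}


def to_crm_record(external):
    """Rename mapped fields and normalize values into one CRM-side format."""
    record = {}
    for source_field, crm_field in FIELD_MAP.items():
        if source_field in external:
            record[crm_field] = external[source_field]

    if "email" in record:
        # Trim whitespace and lowercase so the same address always compares equal.
        record["email"] = record["email"].strip().lower()

    if "created_at" in record:
        # Assumes the source sends ISO 8601 timestamps that include a UTC offset.
        parsed = datetime.fromisoformat(record["created_at"])
        record["created_at"] = parsed.astimezone(timezone.utc).isoformat()

    return record
```

Unmapped fields are dropped on purpose here, so nothing reaches the CRM unless someone has decided where it belongs.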

4. Authentication and Security

Use OAuth 2.0 or token-based authentication for third-party access

Set role-based permissions for which apps access which CRM modules

Monitor access logs for unauthorized activity

Encrypt data during transfer and storage

5. Error Handling and Logging

Create retry logic for API failures and rate limits

Set up alert systems for integration breakdowns

Maintain detailed logs for debugging sync issues

Keep version control of integration scripts and middleware
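Retry logic for API failures and rate limits is often written as exponential backoff. The sketch below is one possible shape, not a definitive implementation: `RateLimitError` and `call_with_retry` are hypothetical names, and the `sleep` parameter exists only so the delay can be swapped out in tests.

```python
import time


class RateLimitError(Exception):
    """Hypothetical error raised when the remote API reports a rate limit."""


def call_with_retry(func, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call func(), retrying on RateLimitError or ConnectionError with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except (RateLimitError, ConnectionError):
            if attempt == max_attempts:
                raise  # Out of attempts: let the caller log and alert on it.
            # The delay doubles each time: base, 2 * base, 4 * base, ...
            sleep(base_delay * 2 ** (attempt - 1))
```

Re-raising after the last attempt keeps the failure visible, so alerting and logging can pick it up instead of the sync failing silently.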

6. Real-Time vs Batch Syncing

Use real-time sync for critical customer events (e.g., purchases, support tickets)

Use batch syncing for bulk data like marketing lists or invoices

Balance sync frequency to optimize server load

Choose integration frequency based on business impact
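One way to picture the split between real-time and batch syncing is a small router that sends critical events immediately and buffers everything else. The event types in `REALTIME_EVENTS`, the `SyncRouter` class, and the `send` callable are all hypothetical.

```python
# Hypothetical event types that the business treats as time-critical.
REALTIME_EVENTS = {"purchase", "support_ticket"}


class SyncRouter:
    """Send critical events immediately; buffer the rest for batch sync."""

    def __init__(self, send, batch_size=3):
        self.send = send            # callable that pushes a list of events to the CRM
        self.batch_size = batch_size
        self.buffer = []

    def handle(self, event):
        if event["type"] in REALTIME_EVENTS:
            self.send([event])      # real-time path: one event, right away
        else:
            self.buffer.append(event)
            if len(self.buffer) >= self.batch_size:
                self.flush()

    def flush(self):
        """Push everything buffered so far as a single batch."""
        if self.buffer:
            self.send(self.buffer)
            self.buffer = []
```

Tuning `batch_size` (or calling `flush()` on a timer) is where sync frequency gets balanced against server load.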

7. Scalability and Maintenance

Build integrations as microservices or middleware, not monolithic code

Use message queues (like Kafka or RabbitMQ) for heavy data flow

Design integrations that can evolve with CRM upgrades

Partner with CRM developers for long-term integration strategy

CRM integration experts can future-proof your ecosystem


You can learn Node.js easily. Here's all you need:

1. Introduction to Node.js

• JavaScript Runtime for Server-Side Development

• Non-Blocking I/O

2. Setting Up Node.js

• Installing Node.js and NPM

• Package.json Configuration

• Node Version Manager (NVM)

3. Node.js Modules

• CommonJS Modules (require, module.exports)

• ES6 Modules (import, export)

• Built-in Modules (e.g., fs, http, events)

4. Core Concepts

• Event Loop

• Callbacks and Asynchronous Programming

• Streams and Buffers

5. Core Modules

• fs (File System)

• http and https (HTTP Modules)

• events (Event Emitter)

• util (Utilities)

• os (Operating System)

• path (Path Module)

6. NPM (Node Package Manager)

• Installing Packages

• Creating and Managing package.json

• Semantic Versioning

• NPM Scripts

7. Asynchronous Programming in Node.js

• Callbacks

• Promises

• Async/Await

• Error-First Callbacks

8. Express.js Framework

• Routing

• Middleware

• Templating Engines (Pug, EJS)

• RESTful APIs

• Error Handling Middleware

9. Working with Databases

• Connecting to Databases (MongoDB, MySQL)

• Mongoose (for MongoDB)

• Sequelize (for MySQL)

• Database Migrations and Seeders

10. Authentication and Authorization

• JSON Web Tokens (JWT)

• Passport.js Middleware

• OAuth and OAuth2

11. Security

• Helmet.js (Security Middleware)

• Input Validation and Sanitization

• Secure Headers

• Cross-Origin Resource Sharing (CORS)

12. Testing and Debugging

• Unit Testing (Mocha, Chai)

• Debugging Tools (Node Inspector)

• Load Testing (Artillery, Apache Bench)

13. API Documentation

• Swagger

• API Blueprint

• Postman Documentation

14. Real-Time Applications

• WebSockets (Socket.io)

• Server-Sent Events (SSE)

• WebRTC for Video Calls

15. Performance Optimization

• Caching Strategies (in-memory, Redis)

• Load Balancing (Nginx, HAProxy)

• Profiling and Optimization Tools (Node Clinic, New Relic)

16. Deployment and Hosting

• Deploying Node.js Apps (PM2, Forever)

• Hosting Platforms (AWS, Heroku, DigitalOcean)

• Continuous Integration and Deployment (Jenkins, Travis CI)

17. RESTful API Design

• Best Practices

• API Versioning

• HATEOAS (Hypermedia as the Engine of Application State)

18. Middleware and Custom Modules

• Creating Custom Middleware

• Organizing Code into Modules

• Publish and Use Private NPM Packages

19. Logging

• Winston Logger

• Morgan Middleware

• Log Rotation Strategies

20. Streaming and Buffers

• Readable and Writable Streams

• Buffers

• Transform Streams

21. Error Handling and Monitoring

• Sentry and Error Tracking

• Health Checks and Monitoring Endpoints

22. Microservices Architecture

• Principles of Microservices

• Communication Patterns (REST, gRPC)

• Service Discovery and Load Balancing in Microservices


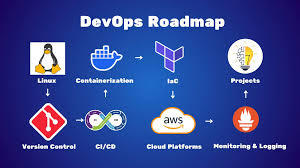

Why DevOps and Microservices Are a Perfect Match for Modern Software Delivery

Businesses today rely on scalable, agile software development methods. Two of the most transformative approaches, DevOps and microservices, have gained substantial momentum. Each has advantages on its own, but their full potential shows when they are used together. DevOps provides automation and collaboration, while microservices divide complex monolithic applications into manageable services. Together they allow faster releases, higher quality, and more scalable systems.

Here's why DevOps and microservices are ideal for modern software delivery:

1. Independent Deployments Align Perfectly with Continuous Delivery

One of the best features of microservices is that each service can be built, tested, and deployed separately. This decoupling allows businesses to release features or changes without building or testing the complete program. DevOps, which focuses on continuous integration and delivery (CI/CD), thrives in this environment. Individual microservices can be fitted into CI/CD pipelines to enable more frequent and dependable deployments. The result is faster innovation cycles and reduced risk, as smaller changes are easier to manage and roll back if needed.

2. Team Autonomy Enhances Ownership and Accountability

Microservices encourage small, cross-functional teams to take ownership of specialized services from start to finish. This is consistent with the DevOps principle of breaking down the division between development and operations. Teams that receive experienced DevOps consulting services are better equipped to handle the full lifecycle, from development and testing to deployment and monitoring, by implementing best practices and automation tools.

3. Scalability Is Easier to Manage with Automation

Scaling a monolithic application often entails scaling the entire thing, even if only a portion is under demand. Microservices address this by enabling each service to scale independently based on demand. DevOps approaches like infrastructure-as-code (IaC), containerization, and orchestration technologies like Kubernetes make scaling strategies easier to automate. Whether scaling up a payment module during the holiday season or shutting down less-used services overnight, DevOps automation complements microservices by ensuring systems scale efficiently and cost-effectively.
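To make independent scaling concrete, here is a small sketch based on the proportional rule that the Kubernetes Horizontal Pod Autoscaler documents: desired replicas = ceil(current replicas × current metric / target metric). The service names, CPU figures, and replica limits are made up for illustration.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale one service in proportion to how far its metric is from the target."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp the result so a metric spike cannot scale without bound.
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical per-service CPU utilization (percent); each service scales on its own.
services = {
    "payments": {"replicas": 4, "cpu": 90},
    "reports": {"replicas": 4, "cpu": 10},
}
plan = {name: desired_replicas(s["replicas"], s["cpu"], target_metric=60)
        for name, s in services.items()}
```

With these numbers the busy payments service grows to 6 replicas while the idle reports service shrinks to 1, which a monolith could not do because it scales as one unit.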

4. Fault Isolation and Faster Recovery with Monitoring

DevOps encourages proactive monitoring, alerting, and issue response, which are critical to the success of distributed microservices systems. Because microservices isolate failures inside specific components, they limit the potential impact of a crash or performance issue. DevOps tools monitor service health, collect logs, and evaluate performance data. This visibility allows for faster detection and resolution of issues, resulting in less downtime and a better user experience.
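A simplified sketch of per-service health monitoring follows. It assumes each service exposes some callable health check; `check_services` and `unhealthy` are hypothetical helper names, and real tooling would call HTTP endpoints rather than local functions.

```python
def check_services(checks):
    """Run each service's health check on its own; one failure never stops the others."""
    report = {}
    for name, check in checks.items():
        try:
            report[name] = "up" if check() else "degraded"
        except Exception as exc:  # a crashing check marks only that service as down
            report[name] = f"down: {exc}"
    return report


def unhealthy(report):
    """Names of services that should trigger an alert, in alphabetical order."""
    return sorted(name for name, status in report.items() if status != "up")
```

Because each check is isolated, a failing payment service shows up as one entry in the report instead of hiding the state of everything else.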

5. Shorter Development Cycles with Parallel Workflows

Microservices allow teams to work on multiple components in parallel without waiting for each other. Microservices development services help enterprises in structuring their applications to support loosely connected services. When combined with DevOps, which promotes CI/CD automation and streamlined approvals, teams can implement code changes more quickly and frequently. Parallelism greatly reduces development cycles and enhances response to market demands.

6. Better Fit for Cloud-Native and Containerized Environments

Modern software delivery is becoming more cloud-native, and both microservices and DevOps support this trend. Microservices are commonly deployed in containers, which are lightweight, portable, and isolated. DevOps tools automate deployment, scaling, and upgrades. This compatibility supports smooth delivery pipelines, consistent environments from development to production, and straightforward rollbacks when required.

7. Streamlined Testing and Quality Assurance

Microservices allow for more modular testing. Each service may be unit-tested, integration-tested, and load-tested separately, increasing test accuracy and speed. DevOps incorporates test automation into the CI/CD pipeline, guaranteeing that every code push is validated without manual intervention. This collaboration results in greater software quality, faster problem identification, and reduced stress during deployments, especially in large, dynamic systems.
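The sketch below shows what testing one service in isolation can look like, using a stub in place of a dependency. `PriceService`, `StubTaxClient`, and the 19% rate are hypothetical and exist only to illustrate the pattern.

```python
import unittest


class PriceService:
    """Hypothetical service that depends on a separate tax service."""

    def __init__(self, tax_client):
        self.tax_client = tax_client

    def total(self, net_amount, country):
        rate = self.tax_client.rate_for(country)
        return round(net_amount * (1 + rate), 2)


class StubTaxClient:
    """Stands in for the real tax service so the test needs no network."""

    def rate_for(self, country):
        return {"DE": 0.19}.get(country, 0.0)


class PriceServiceTest(unittest.TestCase):
    def test_total_includes_tax(self):
        service = PriceService(StubTaxClient())
        self.assertEqual(service.total(100.0, "DE"), 119.0)

    def test_unknown_country_has_no_tax(self):
        service = PriceService(StubTaxClient())
        self.assertEqual(service.total(50.0, "XX"), 50.0)
```

A test suite like this can run on every push in a CI/CD pipeline because it never waits on another team's service.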

8. Security and Compliance Become More Manageable

Security can be implemented more accurately in a microservices architecture since services are isolated and can be managed by service-level access controls. DevOps incorporates DevSecOps, which involves integrating security checks into the CI/CD pipeline. This means security scans, compliance checks, and vulnerability assessments are performed early and frequently. Microservices and DevOps work together to help enterprises adopt a shift-left security approach. They make securing systems easier while not slowing development.

9. Continuous Improvement with Feedback Loops

DevOps and microservices work best with feedback. DevOps stresses real-time monitoring and feedback loops to continuously improve systems. Microservices make it easy to assess the performance of individual services, find inefficiencies, and improve them. When these feedback loops are integrated into the CI/CD process, teams can act quickly on insights, improving performance, reliability, and user satisfaction.

Conclusion

DevOps and microservices are not only compatible but also complementary forces that drive the next generation of software delivery. While microservices break complexity into small units, DevOps ensures that those units are efficiently built, tested, deployed, and monitored. The combination enables teams to develop high-quality software at scale, quickly and confidently. Adopting DevOps and microservices is not just helpful but necessary for enterprises seeking to remain competitive and agile in a rapidly changing market.


Containerization and Test Automation Strategies

Containerization is revolutionizing how software is developed, tested, and deployed. It allows QA teams to build consistent, scalable, and isolated environments for testing across platforms. When paired with test automation, containerization becomes a powerful tool for enhancing speed, accuracy, and reliability. Genqe plays a vital role in this transformation.

What is Containerization?

Containerization is a lightweight virtualization method that packages software code and its dependencies into containers. These containers run consistently across different computing environments. This consistency makes it easier to manage environments during testing. Tools like Genqe automate testing inside containers to maximize efficiency and repeatability in QA pipelines.

Benefits of Containerization

Containerization provides numerous benefits like rapid test setup, consistent environments, and better resource utilization. Containers reduce conflicts between environments, speeding up the QA cycle. Genqe supports container-based automation, enabling testers to deploy faster, scale better, and identify issues in isolated, reproducible testing conditions.

Containerization and Test Automation

Containerization complements test automation by offering isolated, predictable environments. It allows tests to be executed consistently across various platforms and stages. With Genqe, automated test scripts can be executed inside containers, enhancing test coverage, minimizing flakiness, and improving confidence in the release process.

Effective Testing Strategies in Containerized Environments

To test effectively in containers, focus on statelessness, fast test execution, and infrastructure-as-code. Adopt microservice testing patterns and parallel execution. Genqe enables test suites to be orchestrated and monitored across containers, ensuring optimized resource usage and continuous feedback throughout the development cycle.
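Parallel execution of stateless tests can be sketched with a thread pool. This is only an illustration: threads stand in for separate containers, and `run_in_parallel` is a hypothetical helper, not part of any testing tool mentioned here.

```python
from concurrent.futures import ThreadPoolExecutor


def run_in_parallel(tests, max_workers=4):
    """Run independent, stateless test callables concurrently and collect results."""

    def run_one(item):
        name, test = item
        try:
            test()
            return name, "passed"
        except AssertionError as exc:
            return name, f"failed: {exc}"

    # Each test shares no state with the others, so ordering does not matter.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(run_one, tests.items()))
```

This only works because the tests are stateless; tests that share a database or files would need isolated environments before they can safely run side by side.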

Implementing a Containerized Test Automation Strategy

Start with containerizing your application and test tools. Integrate your CI/CD pipelines to trigger tests inside containers. Use orchestration tools like Docker Compose or Kubernetes. Genqe simplifies this with container-native automation support, ensuring smooth setup, execution, and scaling of test cases in real-time.

Best Approaches for Testing Software in Containers

Use service virtualization, parallel testing, and network simulation to reflect production-like environments. Ensure containers are short-lived and stateless. With Genqe, testers can pre-configure environments, manage dependencies, and run comprehensive test suites that validate both functionality and performance under containerized conditions.

Common Challenges and Solutions

Testing in containers presents challenges like data persistence, debugging, and inter-container communication. Solutions include using volume mounts, logging tools, and health checks. Genqe addresses these by offering detailed reporting, real-time monitoring, and support for mocking and service stubs inside containers, easing test maintenance.

Advantages of Genqe in a Containerized World

Genqe enhances containerized testing by providing scalable test execution, seamless integration with Docker/Kubernetes, and cloud-native automation capabilities. It ensures faster feedback, better test reliability, and simplified environment management. Genqe’s platform enables efficient orchestration of parallel and distributed test cases inside containerized infrastructures.

Conclusion

Containerization, when combined with automated testing, empowers modern QA teams to test faster and more reliably. With tools like Genqe, teams can embrace DevOps practices and deliver high-quality software consistently. The future of testing is containerized, scalable, and automated, and Genqe is leading the way.


Integrating DevOps into Full Stack Development: Best Practices

In today’s fast-paced software landscape, seamless collaboration between development and operations teams has become more crucial than ever. This is where DevOps—a combination of development and operations—plays a pivotal role. And when combined with Full Stack Development, the outcome is robust, scalable, and high-performing applications delivered faster and more efficiently. This article delves into the best practices of integrating DevOps into full stack development, with insights beneficial to aspiring developers, especially those pursuing a Java certification course in Pune or exploring the top institute for full stack training Pune has to offer.

Why DevOps + Full Stack Development?

Full stack developers are already versatile professionals who handle both frontend and backend technologies. When DevOps principles are introduced into their workflow, developers can not only build applications but also automate, deploy, test, and monitor them in real-time environments.

The integration leads to:

Accelerated development cycles

Better collaboration between teams

Improved code quality through continuous testing

Faster deployment and quicker feedback loops

Enhanced ability to detect and fix issues early

Whether you’re currently enrolled in a Java full stack course in Pune or seeking advanced training, learning how to blend DevOps into your stack can drastically improve your market readiness.

Best Practices for Integrating DevOps into Full Stack Development

1. Adopt a Collaborative Culture

At the heart of DevOps lies a culture of collaboration. Encourage transparent communication between developers, testers, and operations teams.

Use shared tools like Slack, JIRA, or Microsoft Teams

Promote regular standups and cross-functional meetings

Adopt a “you build it, you run it” mindset

This is one of the key principles taught in many practical courses like the Java certification course in Pune, which includes team-based projects and CI/CD tools.

2. Automate Everything Possible

Automation is the backbone of DevOps. Full stack developers should focus on automating:

Code integration (CI)

Testing pipelines

Infrastructure provisioning

Deployment (CD)

Popular tools like Jenkins, GitHub Actions, Ansible, and Docker are essential for building automation workflows. Students at the top institute for full stack training Pune benefit from hands-on experience with these tools, often as part of real-world simulations.

3. Implement CI/CD Pipelines

Continuous Integration and Continuous Deployment (CI/CD) are vital to delivering features quickly and efficiently.

CI ensures that every code commit is tested and integrated automatically.

CD allows that tested code to be pushed to staging or production without manual intervention.
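The flow from commit to deployment can be sketched as a list of stages that stops at the first failure. The stage names and the `run_pipeline` helper are hypothetical; real pipelines are defined in a CI/CD tool's own configuration rather than in application code.

```python
def run_pipeline(stages):
    """Run stages in order; stop at the first failure so broken code never deploys."""
    completed = []
    for name, step in stages:
        if not step():
            return {"status": "failed", "failed_stage": name, "completed": completed}
        completed.append(name)
    return {"status": "succeeded", "failed_stage": None, "completed": completed}
```

Putting the deploy stage last means it only runs once build and test have both passed, which is what makes deployment without manual intervention safe.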

To master this, it’s important to understand containerization and orchestration using tools like Docker and Kubernetes, which are increasingly incorporated into advanced full stack and Java certification programs in Pune.

4. Monitor and Log Everything

Post-deployment monitoring helps track application health and usage, essential for issue resolution and optimization.

Use tools like Prometheus, Grafana, or New Relic

Set up automated alerts for anomalies

Track user behavior and system performance

Developers who understand how to integrate logging and monitoring into the application lifecycle are always a step ahead.
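An automated anomaly alert can start out very simple. The sketch below flags a sample that sits far from the average of the samples just before it; the window size and threshold are arbitrary assumptions, and tools such as Prometheus use their own rule languages for this.

```python
from statistics import mean, stdev


def find_anomalies(samples, threshold=3.0, window=5):
    """Flag samples more than `threshold` standard deviations away
    from the mean of the preceding `window` samples."""
    alerts = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        spread = stdev(history)
        if spread == 0:
            continue  # flat history: no baseline spread to compare against
        if abs(samples[i] - mean(history)) > threshold * spread:
            alerts.append((i, samples[i]))
    return alerts
```

For a series of response times such as `[100, 102, 98, 101, 99, 100, 400, 101]`, only the 400 ms spike at index 6 is flagged.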

5. Security from Day One (DevSecOps)

With rising security threats, integrating security into every step of development is non-negotiable.

Use static code analysis tools like SonarQube

Implement vulnerability scanners for dependencies

Ensure role-based access controls and audit trails

In reputed institutions like the top institute for full stack training Pune, security best practices are introduced early on, emphasizing secure coding habits.

6. Containerization & Microservices

Containers allow applications to be deployed consistently across environments, making DevOps easier and more effective.

Docker is essential for building lightweight, portable application environments

Kubernetes can help scale and manage containerized applications

Learning microservices architecture also enables developers to build flexible, decoupled systems. These concepts are now a key part of modern Java certification courses in Pune due to their growing demand in enterprise environments.

Key Benefits for Full Stack Developers

Integrating DevOps into your full stack development practice offers several professional advantages:

Faster project turnaround times

Higher confidence in deployment cycles

Improved teamwork and communication skills

Broader technical capabilities

Better career prospects and higher salaries

Whether you’re a beginner or transitioning from a single-stack background, understanding how DevOps and full stack development intersect can be a game-changer. Pune, as a growing IT hub, is home to numerous institutes offering specialized programs that include both full stack development and DevOps skills, with many students opting for comprehensive options like a Java certification course in Pune.

Conclusion

The fusion of DevOps and full stack development is no longer just a trend—it’s a necessity. As businesses aim for agility and innovation, professionals equipped with this combined skillset will continue to be in high demand.

If you are considering upskilling, look for the top institute for full stack training Pune offers—especially ones that integrate DevOps concepts into their curriculum. Courses that cover core programming, real-time project deployment, CI/CD, and cloud technologies—like a well-structured Java certification course in Pune—can prepare you to become a complete developer who is future-ready.

Ready to take your skills to the next level?

Explore a training institute that not only teaches you to build applications but also deploys them the DevOps way.


Mastering AWS DevOps in 2025: Best Practices, Tools, and Real-World Use Cases

In 2025, the cloud ecosystem continues to grow very rapidly. Organizations of every size are embracing AWS DevOps to automate software delivery, improve security, and scale business efficiently. Mastering AWS DevOps means knowing the optimal combination of tools, best practices, and real-world use cases that deliver success in production.

This guide will assist you in discovering the most important elements of AWS DevOps, the best practices of 2025, and real-world examples of how top companies are leveraging AWS DevOps to compete.

What is AWS DevOps?

AWS DevOps is the union of cultural principles, practices, and tools on Amazon Web Services that enhances an organization's capacity to deliver applications and services at a higher speed. It facilitates continuous integration, continuous delivery, infrastructure as code, monitoring, and cooperation among development and operations teams.

Why AWS DevOps is Important in 2025

As organizations require quicker innovation and zero downtime, DevOps on AWS offers the flexibility and reliability to compete. Trends such as AI integration, serverless architecture, and automated compliance are changing how teams adopt DevOps in 2025.

Advantages of adopting AWS DevOps:

1. Faster deployment cycles

2. Enhanced system reliability

3. Flexible and scalable cloud infrastructure

4. Automation from code to production

5. Integrated security and compliance

Best AWS DevOps Tools to Learn in 2025

These are the most critical tools fueling current AWS DevOps pipelines:

AWS CodePipeline

A fully managed CI/CD service that automates your release process.

AWS CodeBuild

A scalable build service that compiles source code, runs tests, and produces ready-to-deploy packages.

AWS CodeDeploy

Automates code deployments to EC2, Lambda, ECS, or on-prem servers with zero-downtime approaches.

AWS CloudFormation and CDK

For infrastructure as code (IaC) management, allowing repeatable and versioned cloud environments.

Amazon CloudWatch

Facilitates logging, metrics, and alerting to track application and infrastructure performance.

AWS Lambda

Serverless compute that runs code in response to triggers, well-suited for event-driven DevOps automation.
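For a sense of what event-driven automation looks like in code, here is a minimal Lambda-style function in Python. The `(event, context)` signature is the one Lambda uses for Python handlers; the `detail`, `pipeline`, and `state` keys are assumptions about the incoming event, and real payloads depend on the trigger.

```python
import json


def handler(event, context):
    """Entry point in the shape AWS Lambda expects for Python: (event, context)."""
    # The "detail" key and its fields are assumed; real events depend on the trigger.
    detail = event.get("detail", {})
    pipeline = detail.get("pipeline", "unknown")
    state = detail.get("state", "UNKNOWN")

    message = f"Pipeline {pipeline} finished with state {state}"
    return {"statusCode": 200, "body": json.dumps({"message": message})}
```

Because the handler is a plain function, it can be exercised locally by passing a dictionary as `event` and `None` as `context`.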

AWS DevOps Best Practices in 2025

1. Adopt Infrastructure as Code (IaC)

Utilize AWS CloudFormation or Terraform to declare infrastructure. This makes it repeatable, easier to collaborate on, and version-able.
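As a small illustration of infrastructure as code, the sketch below builds a CloudFormation template as data and serializes it to JSON. The helper `bucket_template` and the logical name `ArtifactBucket` are hypothetical; the template keys follow the CloudFormation format for an S3 bucket with versioning enabled.

```python
import json


def bucket_template(logical_name):
    """Build a minimal CloudFormation template that declares one versioned S3 bucket."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Example stack kept in version control",
        "Resources": {
            logical_name: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "VersioningConfiguration": {"Status": "Enabled"}
                },
            }
        },
    }


# Sorting keys keeps the output stable, so diffs in version control stay readable.
template_json = json.dumps(bucket_template("ArtifactBucket"), indent=2, sort_keys=True)
```

The same input always produces the same template text, which is what makes the infrastructure repeatable and reviewable like any other code change.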

2. Use Full CI/CD Pipelines

Implement tools such as CodePipeline, GitHub Actions, or Jenkins on AWS to automate building, testing, and deployment.

3. Shift Left on Security

Bake security in early with Amazon Inspector, CodeGuru, and Secrets Manager. As part of CI/CD, automate vulnerability scans.

4. Monitor Everything

Utilize CloudWatch, X-Ray, and CloudTrail to achieve complete observability into your system. Implement alerts to detect and respond to problems promptly.

5. Use Containers and Serverless for Scalability

Utilize Amazon ECS, EKS, or Lambda for autoscaling. These services lower infrastructure management overhead and enhance efficiency.

Real-World AWS DevOps Use Cases

Use Case 1: Scalable CI/CD for a Fintech Startup

AWS CodePipeline and CodeDeploy were used by a financial firm to automate deployments in both production and staging environments. By containerizing using ECS and taking advantage of CloudWatch monitoring, they lowered deployment mistakes by 80 percent and attained near-zero downtime.

Use Case 2: Legacy Modernization for an Enterprise

A legacy enterprise moved its on-premise applications to AWS with CloudFormation and EC2 Auto Scaling. Through the adoption of full-stack DevOps pipelines and the transformation to microservices with EKS, they enhanced time-to-market by 60 percent.

Use Case 3: Serverless DevOps for a SaaS Product

A SaaS organization used AWS Lambda and API Gateway for its backend functions. With CodeBuild and CloudWatch, the team shipped feature releases quickly and scaled automatically during high usage without having to provision infrastructure.

Top Trends in AWS DevOps in 2025

AI-driven DevOps: Integration with CodeWhisperer, CodeGuru, and machine learning algorithms for intelligence-driven automation

Compliance-as-Code: Governance policies automated using services such as AWS Config and Service Control Policies

Multi-account strategies: Employing AWS Organizations for scalable, secure account management

Zero Trust Architecture: Implementing strict identity-based access with IAM, SSO, and MFA

Hybrid Cloud DevOps: Connecting on-premises systems to AWS for effortless deployments

Conclusion

In 2025, becoming a master of AWS DevOps means syncing your development workflows with cloud-native architecture, innovative tools, and current best practices. With AWS, teams are able to create secure, scalable, and automated systems that release value at an unprecedented rate.

Begin with automating your pipelines, securing your deployments, and scaling with confidence. DevOps is the way of the future, and AWS is leading the way.

Frequently Asked Questions

What distinguishes AWS DevOps from DevOps?

DevOps itself is a practice, while AWS DevOps uses AWS services and tools to carry out that practice.

Can small teams benefit from AWS DevOps?

Yes. AWS provides fully managed services that enable small teams to scale and automate without having to handle complicated infrastructure.

Which programming languages does AWS DevOps support?

AWS supports the major ones: Python, Node.js, Java, Go, .NET, Ruby, and many more.

Is AWS DevOps suitable for enterprise-scale applications?

Yes. Large enterprises run large-scale, multi-region applications with millions of users using AWS DevOps.


52013l4 in Modern Tech: Use Cases and Applications

In a technology-driven world, identifiers and codes are more than just strings—they define systems, guide processes, and structure workflows. One such code gaining prominence across various IT sectors is 52013l4. Whether it’s in cloud services, networking configurations, firmware updates, or application builds, 52013l4 has found its way into many modern technological environments. This article will explore the diverse use cases and applications of 52013l4, explaining where it fits in today’s digital ecosystem and why developers, engineers, and system administrators should be aware of its implications.

Why 52013l4 Matters in Modern Tech

In the past, loosely defined build codes or undocumented system identifiers led to chaos in large-scale environments. Modern software engineering emphasizes observability, reproducibility, and modularization. Codes like 52013l4:

Help standardize complex infrastructure.

Enable cross-team communication in enterprises.

Create a transparent map of configuration-to-performance relationships.

Thus, 52013l4 isn’t just a technical detail—it’s a tool for governance in scalable, distributed systems.

Use Case 1: Cloud Infrastructure and Virtualization

In cloud environments, maintaining structured builds and ensuring compatibility between microservices is crucial. 52013l4 may be used to:

Tag versions of container images (like Docker or Kubernetes builds).

Mark configurations for network load balancers operating at Layer 4.

Denote system updates in CI/CD pipelines.

Cloud providers like AWS, Azure, or GCP often reference such codes internally. When managing firewall rules, security groups, or deployment scripts, engineers might encounter a 52013l4 identifier.

Use Case 2: Networking and Transport Layer Monitoring

Given its likely relation to Layer 4, 52013l4 becomes relevant in scenarios involving:

Firewall configuration: Specifying allowed or blocked TCP/UDP ports.

Intrusion detection systems (IDS): Tracking abnormal packet flows using rules tied to 52013l4 versions.

Network troubleshooting: Tagging specific error conditions or performance data by Layer 4 function.

For example, a DevOps team might use 52013l4 as a keyword to trace problems in TCP connections that align with a specific build or configuration version.

Use Case 3: Firmware and IoT Devices

In embedded systems or Internet of Things (IoT) environments, firmware must be tightly versioned and managed. 52013l4 could:

Act as a firmware version ID deployed across a fleet of devices.

Trigger a specific set of configurations related to security or communication.

Identify rollback points during over-the-air (OTA) updates.

A smart home system, for instance, might roll out firmware_52013l4.bin to thermostats or sensors, ensuring compatibility and stable transport-layer communication.

Use Case 4: Software Development and Release Management

Developers often rely on versioning codes to track software releases, particularly when integrating network communication features. In this domain, 52013l4 might be used to:

Tag milestones in feature development (especially for APIs or sockets).

Mark integration tests that focus on Layer 4 data flow.

Coordinate with other teams (QA, security) based on shared identifiers like 52013l4.

Use Case 5: Cybersecurity and Threat Management

Security engineers use identifiers like 52013l4 to define threat profiles or update logs. For instance:

A SIEM tool might generate an alert tagged as 52013l4 to highlight repeated TCP SYN floods.

Security patches may address vulnerabilities discovered in the 52013l4 release version.

An organization’s SOC (Security Operations Center) could use 52013l4 in internal documentation when referencing a Layer 4 anomaly.

By organizing security incidents by version or layer, organizations improve incident response times and root cause analysis.

Use Case 6: Testing and Quality Assurance

QA engineers frequently simulate different network scenarios and need clear identifiers to catalog results. Here’s how 52013l4 can be applied:

In test automation tools, it helps define a specific test scenario.

Load-testing tools like Apache JMeter might reference 52013l4 configurations for transport-level stress testing.

Bug-tracking software may log issues under the 52013l4 build to isolate issues during regression testing.

What is 52013l4?

At its core, 52013l4 is an identifier, potentially used in system architecture, internal documentation, or as a versioning label in layered networking systems. Its format suggests a structured sequence: “52013” might represent a version code, build date, or feature reference, while “l4” is widely interpreted as Layer 4 of the OSI Model, the Transport Layer. Because of this association, 52013l4 is often seen in contexts that involve network communication, protocol configuration (e.g., TCP/UDP), or system behavior tracking in distributed computing.

FAQs About 52013l4 Applications

Q1: What kind of systems use 52013l4? Ans. 52013l4 is commonly used in cloud computing, networking hardware, application development environments, and firmware systems. It's particularly relevant in Layer 4 monitoring and version tracking.

Q2: Is 52013l4 an open standard? Ans. No, 52013l4 is not a formal standard like HTTP or ISO. It’s more likely an internal or semi-standardized identifier used in technical implementations.

Q3: Can I change or remove 52013l4 from my system? Ans. Only if you fully understand its purpose. Arbitrarily removing references to 52013l4 without context can break dependencies or configurations.

Conclusion

As modern technology systems grow in complexity, having clear identifiers like 52013l4 ensures smooth operation, reliable communication, and maintainable infrastructures. From cloud orchestration to embedded firmware, 52013l4 plays a quiet but critical role in linking performance, security, and development efforts. Understanding its uses and applying it strategically can streamline operations, improve response times, and enhance collaboration across your technical teams.

0 notes

Text

Exploring the Power of Artificial Intelligence in API Testing Services

In the ever-evolving world of software development, APIs (Application Programming Interfaces) have become the backbone of modern applications. Whether it's a mobile app, web platform, or enterprise solution, APIs drive the data exchange and functionality that make these systems work seamlessly. With the rise of microservices and the constant need for faster releases, API Testing Services are more essential than ever.

However, traditional testing approaches are reaching their limits. As APIs become more complex and dynamic, manual or even scripted testing methods struggle to keep up. This is where Artificial Intelligence (AI) enters the picture, transforming how testing is performed and enabling smarter, more scalable solutions.

At Robotico Digital, we’ve embraced this revolution. Our AI-powered API Testing Services are designed to deliver precision, speed, and deep security insights—including advanced Security testing API capabilities that protect your digital assets from modern cyber threats.

What Makes API Testing So Crucial?

APIs enable communication between software systems, and any failure in that communication could lead to data loss, functionality errors, or worse—security breaches. That’s why API Testing Services are vital for:

Verifying data integrity

Ensuring business logic works as expected

Validating performance under load

Testing integration points

Enforcing robust security protocols via Security testing API

Without thorough testing, even a minor change in an API could break core functionalities across connected applications.

How AI Is Changing the Game in API Testing Services

Traditional test automation requires human testers to write and maintain scripts. These scripts often break when APIs change or evolve, leading to frequent rework. AI solves this by introducing:

1. Autonomous Test Creation

AI learns from API documentation, usage logs, and past bugs to auto-generate test cases that cover both common and edge-case scenarios. This dramatically reduces setup time and human effort.

2. Intelligent Test Execution

AI can prioritize tests that are most likely to uncover bugs based on historical defect patterns. This ensures faster feedback and optimized test cycles, which is especially crucial in CI/CD environments.
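
As a rough illustration of prioritizing by historical defect patterns, the sketch below simply ranks tests by past failure rate. It is a plain heuristic, not a machine learning model, and the `history` structure and test names are hypothetical.

```python
def prioritize(history):
    """Order test names by historical failure rate, highest first."""
    def failure_rate(name):
        runs = history[name]
        if not runs:
            return 0.0
        return sum(1 for passed in runs if not passed) / len(runs)

    # Ties are broken alphabetically so the ordering is deterministic.
    return sorted(history, key=lambda name: (-failure_rate(name), name))


# Hypothetical pass/fail history per test (True means the run passed).
history = {
    "test_login": [True, True, True, True],
    "test_checkout": [True, False, False, True],
    "test_search": [True, True, False, True],
}
print(prioritize(history))
```

Running the most failure-prone tests first shortens the time to the first useful signal in a CI/CD pipeline; a learned model could replace `failure_rate` without changing the surrounding logic.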

3. Adaptive Test Maintenance

API structures change frequently. AI automatically updates impacted test cases, eliminating the need for manual intervention and reducing test flakiness.

4. Continuous Learning & Improvement

AI algorithms improve over time by analyzing test outcomes and incorporating real-world performance insights.

5. AI-Driven Security Insights

With integrated Security testing API modules, AI can detect potential vulnerabilities such as:

Broken authentication

Sensitive data exposure

Injection attacks

Misconfigured headers or CORS policies

At Robotico Digital, our AI modules continuously monitor and adapt to new security threats, offering proactive protection for your API ecosystem.

Real-World Applications of AI in API Testing

Let’s break down how AI adds tangible value to API testing across different scenarios:

Regression Testing

When an API is updated, regression testing ensures that existing features still work as expected. AI identifies the most impacted areas, drastically reducing redundant test executions.

Load and Performance Testing

AI models simulate user traffic patterns more realistically, helping uncover performance issues under various load conditions.

Contract Testing

AI validates whether the API’s contract (expected input/output) is consistent across environments, even as the codebase evolves.
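
The core of a contract check can be sketched without any AI component: compare a decoded response against the expected field names and types. The contract, field names, and sample response below are assumptions made for illustration.

```python
# Hypothetical contract: field name -> expected Python type of the JSON value.
USER_CONTRACT = {"id": int, "email": str, "active": bool}


def contract_violations(response, contract):
    """List the ways a decoded JSON response deviates from the contract."""
    violations = []
    for field, expected in contract.items():
        if field not in response:
            violations.append(f"missing field: {field}")
        elif type(response[field]) is not expected:
            actual = type(response[field]).__name__
            violations.append(f"{field}: expected {expected.__name__}, got {actual}")
    # Fields the contract does not mention are reported as well.
    for field in response:
        if field not in contract:
            violations.append(f"unexpected field: {field}")
    return violations


staging_response = {"id": "42", "email": "a@example.com", "debug": True}
print(contract_violations(staging_response, USER_CONTRACT))
```

Running the same check against each environment's response makes drift between environments visible as a difference in the reported violations.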

Security testing API

Instead of relying on static rules, AI-powered security tools detect dynamic threats using behavior analytics and anomaly detection—offering more robust Security testing API solutions.

Robotico Digital’s Approach to AI-Driven API Testing Services

Our commitment to innovation drives our unique approach to API Testing Services:

1. End-to-End AI Integration

We incorporate AI across the entire testing lifecycle—from test planning and generation to execution, maintenance, and reporting.

2. Custom AI Engines

Our proprietary testing suite, Robotico AI TestLab, is built to handle high-volume API transactions, real-time threat modeling, and continuous test adaptation.

3. Modular Architecture

We provide both on-premise and cloud-based solutions, ensuring seamless integration into your DevOps pipelines, Jira systems, and CI/CD tools like Jenkins, GitLab, and Azure DevOps.

4. Advanced Security Layer

Incorporating Security testing API at every phase, we conduct:

Token validation checks

Encryption standard verifications

Endpoint exposure audits

Dynamic vulnerability scanning using AI heuristics

This ensures that your APIs aren’t just functional—they’re secure, scalable, and resilient.

Elevating Security Testing API with AI

Traditional API security testing is often reactive. AI flips that model by being proactive and predictive. Here's how Robotico Digital’s Security testing API services powered by AI make a difference:

AI-Driven Vulnerability Scanning

We identify security gaps not just based on OWASP Top 10 but using real-time threat intelligence and behavioral analysis.

Threat Simulation and Penetration

Our systems use generative AI to simulate hacker strategies, testing your APIs against real-world scenarios.

Token & OAuth Testing

AI algorithms verify token expiration, scopes, misuse, and replay attack vectors—making authentication rock-solid.
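
Two of the checks named above, expiration and scopes, can be sketched with the standard library alone. This example decodes the payload without verifying the signature, so it is only suitable for inspecting tokens in a test harness; the space-delimited `scope` claim follows common OAuth practice, and the helper names and scope values are hypothetical.

```python
import base64
import json
import time


def decode_jwt_payload(token):
    """Decode the payload segment of a JWT WITHOUT verifying its signature."""
    payload_b64 = token.split(".")[1]
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def check_token(token, required_scopes, now=None):
    """Return findings for an expired token or missing scopes."""
    now = time.time() if now is None else now
    claims = decode_jwt_payload(token)
    findings = []
    if claims.get("exp", 0) <= now:
        findings.append("token expired")
    granted = set(claims.get("scope", "").split())
    missing = set(required_scopes) - granted
    if missing:
        findings.append("missing scopes: " + ", ".join(sorted(missing)))
    return findings


def make_unsigned_token(claims):
    """Build a test-only token with a placeholder signature."""
    def b64(obj):
        raw = json.dumps(obj).encode()
        return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()
    return ".".join([b64({"alg": "none"}), b64(claims), "sig"])


token = make_unsigned_token({"exp": 1_000, "scope": "orders:read"})
print(check_token(token, ["orders:read", "orders:write"], now=2_000))
```

Replay and misuse detection need server-side state (for example, tracking token identifiers already seen) and are outside the scope of this sketch.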

Real-Time Threat Alerts

Our clients receive real-time alerts through Slack, Teams, or email when abnormal API behavior is detected.

Tools and Technologies Used

At Robotico Digital, we utilize a blend of open-source and proprietary AI tools in our API Testing Services, including:

Postman AI Assist – for intelligent test recommendations

RestAssured + AI Models – for code-based test generation

TensorFlow + NLP APIs – for log analysis and test logic generation

OWASP ZAP + AI Extensions – for automated Security testing API

Robotico AI TestLab – our in-house platform with self-healing tests and predictive analytics

The Future of AI in API Testing Services

The integration of AI into testing is just beginning. In the near future, we can expect:

Self-healing test environments that fix their own broken scripts

Voice-enabled test management using AI assistants

Blockchain-verified testing records for audit trails

AI-powered documentation readers that instantly convert API specs into test scripts

Robotico Digital is actively investing in R&D to bring these innovations to life.

Why Robotico Digital?

With a sharp focus on AI and automation, Robotico Digital is your ideal partner for cutting-edge API Testing Services. Here’s what sets us apart:

10+ years in QA and API lifecycle management

Industry leaders in Security testing API

Custom AI-based frameworks tailored to your needs

Full integration with Agile and DevOps ecosystems

Exceptional support and transparent reporting

Conclusion

As software ecosystems become more interconnected, the complexity of APIs will only increase. Relying on traditional testing strategies is no longer sufficient. By combining the precision of automation with the intelligence of AI, API Testing Services become faster, smarter, and more secure.

At Robotico Digital, we empower businesses with future-proof API testing that not only ensures functionality and performance but also embeds intelligent Security testing API protocols to guard against ever-evolving threats.

Let us help you build trust into your technology—one API at a time.

0 notes

Text

How AIOps Platform Development Is Revolutionizing IT Incident Management

In today’s fast-paced digital landscape, businesses are under constant pressure to deliver seamless IT services with minimal downtime. Traditional IT incident management strategies, often reactive and manual, are no longer sufficient to meet the demands of modern enterprises. Enter AIOps (Artificial Intelligence for IT Operations)—a game-changing approach that leverages artificial intelligence, machine learning, and big data analytics to transform the way organizations manage and resolve IT incidents.

In this blog, we delve into how AIOps platform development is revolutionizing IT incident management, improving operational efficiency, and enabling proactive issue resolution.

What Is AIOps?

AIOps is a term coined by Gartner, referring to platforms that combine big data and machine learning to automate and enhance IT operations. By aggregating data from various IT tools and systems, AIOps platforms can:

Detect patterns and anomalies

Predict and prevent incidents

Automate root cause analysis

Recommend or trigger automated responses

How AIOps Is Revolutionizing Incident Management

1. Proactive Issue Detection

AIOps platforms continuously analyze massive streams of log data, metrics, and events to identify anomalies in real time. Using machine learning, they recognize deviations from normal behavior—often before the end-user is affected.

🔍 Example: A retail platform detects abnormal latency in the checkout API and flags it as a potential service degradation—before users start abandoning their carts.
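
A minimal sketch of this kind of baseline-deviation check, assuming a simple rolling z-score over recent latency samples. The function name, window size, and threshold are illustrative, and production AIOps platforms typically rely on more sophisticated models.

```python
from collections import deque
from statistics import mean, stdev


def detect_latency_anomalies(samples, window=20, threshold=3.0):
    """Return indices of samples far outside the preceding window's baseline.

    A sample is flagged when it lies more than `threshold` standard
    deviations from the mean of the previous `window` samples.
    """
    recent = deque(maxlen=window)
    anomalies = []
    for i, value in enumerate(samples):
        if len(recent) == window:
            mu = mean(recent)
            sigma = stdev(recent)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        recent.append(value)
    return anomalies


# Hypothetical checkout-API latencies in milliseconds: steady, then a spike.
latencies = [100 + (i % 5) for i in range(30)] + [400]
print(detect_latency_anomalies(latencies))
```

The baseline here is purely statistical; the point is only that "deviation from normal behavior" needs a definition of normal that is computed from recent data rather than a fixed limit.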

2. Noise Reduction Through Intelligent Correlation

Instead of flooding teams with redundant alerts, AIOps platforms correlate related events across systems. This reduces alert fatigue and surfaces high-priority incidents that need attention.

🧠 Example: Multiple alerts from a database, server, and application layer are grouped into a single, actionable incident, pointing to a failing database node as the root cause.
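
The grouping described above can be sketched as follows, assuming a known dependency map and a fixed time window. Both are simplifications: the component names are hypothetical, and real platforms usually infer topology and correlation rules from telemetry.

```python
# Hypothetical dependency map: each component points to what it depends on.
DEPENDS_ON = {"checkout-app": "app-server", "app-server": "db-node-1"}


def root_of(component):
    """Follow dependencies down to the deepest component in the chain."""
    seen = set()
    while component in DEPENDS_ON and component not in seen:
        seen.add(component)
        component = DEPENDS_ON[component]
    return component


def correlate(alerts, window_seconds=300):
    """Group (timestamp, component) alerts by shared root and time proximity."""
    incidents = []
    open_incident = {}  # root -> incident currently accepting alerts
    for ts, component in sorted(alerts):
        root = root_of(component)
        incident = open_incident.get(root)
        if incident is None or ts - incident["last_seen"] > window_seconds:
            incident = {"root": root, "alerts": [], "last_seen": ts}
            incidents.append(incident)
            open_incident[root] = incident
        incident["alerts"].append((ts, component))
        incident["last_seen"] = ts
    return incidents


alerts = [
    (10, "checkout-app"),
    (5, "db-node-1"),
    (12, "app-server"),
    (900, "checkout-app"),
]
incidents = correlate(alerts)
for incident in incidents:
    print(incident["root"], len(incident["alerts"]))
```

The first three alerts collapse into one incident rooted at the database node, while the much later alert opens a new incident because it falls outside the window.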

3. Accelerated Root Cause Analysis (RCA)

AI algorithms perform contextual analysis to identify the root cause of an issue. By correlating telemetry data with historical patterns, AIOps significantly reduces the Mean Time to Resolution (MTTR).

⏱️ Impact: What used to take hours or days now takes minutes, enabling faster service restoration.

4. Automated Remediation

Advanced AIOps platforms can go beyond detection and diagnosis to automatically resolve common issues using preconfigured workflows or scripts.

⚙️ Example: Upon detecting memory leaks in a microservice, the platform automatically scales up pods or restarts affected services—without human intervention.

5. Continuous Learning and Improvement

AIOps systems improve over time. With every incident, the platform learns new patterns, becoming better at prediction, classification, and remediation—forming a virtuous cycle of operational improvement.

Benefits of Implementing an AIOps Platform

Improved Uptime: Proactive incident detection prevents major outages.

Reduced Operational Costs: Fewer incidents and faster resolution reduce the need for large Ops teams.

Enhanced Productivity: IT staff can focus on innovation instead of firefighting.

Better User Experience: Faster resolution leads to fewer service disruptions and happier customers.

Real-World Use Cases

🎯 Financial Services

Banks use AIOps to monitor real-time transaction flows, ensuring uptime and compliance.

📦 E-Commerce

Retailers leverage AIOps to manage peak traffic during sales events, ensuring site reliability.

🏥 Healthcare

Hospitals use AIOps to monitor critical IT infrastructure that supports patient care systems.

Building an AIOps Platform: Key Components

To develop a robust AIOps platform, consider the following foundational elements:

Data Ingestion Layer – Collects logs, events, and metrics from diverse sources.

Analytics Engine – Applies machine learning models to detect anomalies and patterns.

Correlation Engine – Groups related events into meaningful insights.

Automation Framework – Executes predefined responses to known issues.

Visualization & Reporting – Offers dashboards for monitoring, alerting, and tracking KPIs.

The Future of IT Incident Management

As businesses continue to embrace digital transformation, AIOps is becoming indispensable. It represents a shift from reactive to proactive operations, and from manual processes to intelligent automation. In the future, we can expect even deeper integration with DevOps, better NLP capabilities for ticket automation, and more advanced self-healing systems.

Conclusion

AIOps platform development is not just an upgrade—it's a revolution in IT incident management. By leveraging artificial intelligence, organizations can significantly reduce downtime, improve service quality, and empower their IT teams to focus on strategic initiatives.

If your organization hasn’t begun the AIOps journey yet, now is the time to explore how these platforms can transform your IT operations—and keep you ahead of the curve.

0 notes

Text

Integrating ROSA Applications with AWS Services (CS221)

In today's rapidly evolving cloud-native landscape, enterprises are looking for scalable, secure, and fully managed Kubernetes solutions that work seamlessly with existing cloud infrastructure. Red Hat OpenShift Service on AWS (ROSA) meets that demand by combining the power of Red Hat OpenShift with the scalability and flexibility of Amazon Web Services (AWS).

In this blog post, we’ll explore how you can integrate ROSA-based applications with key AWS services, unlocking a powerful hybrid architecture that enhances your applications' capabilities.

📌 What is ROSA?

ROSA (Red Hat OpenShift Service on AWS) is a managed OpenShift offering jointly developed and supported by Red Hat and AWS. It allows you to run containerized applications using OpenShift while taking full advantage of AWS services such as storage, databases, analytics, and identity management.

🔗 Why Integrate ROSA with AWS Services?

Integrating ROSA with native AWS services enables:

Seamless access to AWS resources (like RDS, S3, DynamoDB)

Improved scalability and availability

Cost-effective hybrid application architecture

Enhanced observability and monitoring

Secure IAM-based access control using AWS IAM Roles for Service Accounts (IRSA)

🛠️ Key Integration Scenarios

1. Storage Integration with Amazon S3 and EFS

Applications deployed on ROSA can use AWS storage services for persistent and object storage needs.

Use Case: A web app storing images to S3.

How: Use OpenShift’s CSI drivers to mount EFS or access S3 through SDKs or CLI.

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: efs-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: efs-sc
  resources:
    requests:
      storage: 5Gi

2. Database Integration with Amazon RDS

You can offload your relational database requirements to managed RDS instances.

Use Case: Deploying a Spring Boot app with PostgreSQL on RDS.

How: Store DB credentials in Kubernetes secrets and use RDS endpoint in your app’s config.

SPRING_DATASOURCE_URL=jdbc:postgresql://<rds-endpoint>:5432/mydb

3. Authentication with AWS IAM + OIDC

ROSA supports IAM Roles for Service Accounts (IRSA), enabling fine-grained permissions for workloads.

Use Case: Granting a pod access to a specific S3 bucket.

How:

Create an IAM role with S3 access

Associate it with a Kubernetes service account

Use OIDC to federate access
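
The first and third steps meet in the IAM role's trust policy, which names the cluster's OIDC provider and restricts the role to one service account. The sketch below only builds that policy document; the account ID, provider host, namespace, and service account name are placeholders, and creating the role and linking it to the service account are left out.

```python
import json


def build_trust_policy(account_id, oidc_provider, namespace, service_account):
    """Build an IAM trust policy allowing one service account to assume a role."""
    provider_arn = f"arn:aws:iam::{account_id}:oidc-provider/{oidc_provider}"
    subject = f"system:serviceaccount:{namespace}:{service_account}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Federated": provider_arn},
                "Action": "sts:AssumeRoleWithWebIdentity",
                # Only tokens issued for this exact service account match.
                "Condition": {"StringEquals": {f"{oidc_provider}:sub": subject}},
            }
        ],
    }


# Placeholder values; substitute those of your own cluster and account.
policy = build_trust_policy(
    "123456789012", "oidc.example.com/abc123", "my-app", "s3-reader"
)
print(json.dumps(policy, indent=2))
```

The S3 permissions themselves belong in a separate permissions policy attached to the same role, scoped to the specific bucket.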

4. Observability with Amazon CloudWatch and Prometheus

Monitor your workloads using Amazon CloudWatch Container Insights or integrate Prometheus and Grafana on ROSA for deeper insights.

Use Case: Track application metrics and logs in a single AWS dashboard.

How: Forward logs from OpenShift to CloudWatch using Fluent Bit.

5. Serverless Integration with AWS Lambda

Bridge your ROSA applications with AWS Lambda for event-driven workloads.

Use Case: Triggering a Lambda function on file upload to S3.

How: Use EventBridge or S3 event notifications with your ROSA app triggering the workflow.

🔒 Security Best Practices

Use IAM Roles for Service Accounts (IRSA) to avoid hardcoding credentials.

Use AWS Secrets Manager or OpenShift Vault integration for managing secrets securely.

Enable AWS PrivateLink to keep traffic within AWS private network boundaries.

🚀 Getting Started

To start integrating your ROSA applications with AWS:

Deploy your ROSA cluster using the AWS Management Console or CLI

Set up AWS CLI & IAM permissions

Enable the AWS services needed (e.g., RDS, S3, Lambda)

Create Kubernetes Secrets and ConfigMaps for service integration

Use ServiceAccounts, RBAC, and IRSA for secure access

🎯 Final Thoughts

ROSA is not just about running Kubernetes on AWS—it's about unlocking the true hybrid cloud potential by integrating with a rich ecosystem of AWS services. Whether you're building microservices, data pipelines, or enterprise-grade applications, ROSA + AWS gives you the tools to scale confidently, operate securely, and innovate rapidly.

If you're interested in hands-on workshops, consulting, or ROSA enablement for your team, feel free to reach out to HawkStack Technologies – your trusted Red Hat and AWS integration partner.

💬 Let's Talk!

Have you tried ROSA yet? What AWS services are you integrating with your OpenShift workloads? Share your experience or questions in the comments!

For more details www.hawkstack.com

0 notes

Text

Observability Tool Market is Anticipated to Witness Growth Owing to Cloud Adoption

The Global Observability Tool Market is estimated to be valued at USD 3.07 Bn in 2025 and is expected to exhibit a CAGR of 10.90% over the forecast period 2025 to 2032. Observability tools provide comprehensive visibility into complex IT environments by collecting, analyzing, and correlating metrics, logs and traces. These platforms enable organizations to monitor application performance, swiftly detect anomalies and root causes, and optimize resource utilization. By integrating machine learning and AI-driven analytics, observability solutions offer predictive insights to prevent downtime and enhance user experience. Observability Tool Market Insights include real-time monitoring, automated alerting and unified dashboards that consolidate data from on-premises, multi-cloud and edge infrastructures. With digital transformation initiatives accelerating and microservices architectures growing in complexity, the need for end-to-end observability has become critical. Enterprises across finance, healthcare, retail and manufacturing are deploying observability tools to improve service reliability, reduce mean time to resolution and support DevOps and SRE teams. According to market research, improved visibility leads to cost savings through optimized cloud spend and fewer service disruptions. As demand rises for scalable, AI-powered observability solutions that deliver actionable market insights and support continuous delivery pipelines, vendors are rapidly enhancing their platforms with customizable analytics, anomaly detection and automated remediation.

Get more insights on Observability Tool Market

#Coherent Market Insights#Observability Tool#Observability Tool Market#Observability Tool Market Insights#Logs#Metrics

0 notes

Text

Observability In Modern Microservices Architecture

Introduction

Observability in modern microservice architecture refers to the ability to gain insights into the system’s internal workings by collecting and analyzing data from various components. It has become paramount in today’s dynamic software landscape, extending beyond traditional monitoring to encompass logging, tracing, and more. As microservices, containers, and distributed systems gain popularity, so does the need for strong observability practices. However, these advancements bring challenges such as increased complexity, the distributed nature of microservices, and dynamic scalability. Gaining a comprehensive view of an entire application is difficult when it is deployed across 400+ pods spanning 100 nodes distributed globally. In this blog, we offer some insights on these issues and some thoughts on the tools and best practices that can help make observability more manageable.

Observability Components

Monitoring

Monitoring is the continuous process of tracking and measuring various metrics and parameters within a system. This real-time observation helps detect anomalies, performance bottlenecks, and potential issues. Key metrics monitored include resource utilization, response times, error rates, and system health. Monitoring tools collect data from various sources such as infrastructure, application logs, and network traffic. By analyzing this data, teams can gain insights into the overall health and performance of the system.

Logging

Logging involves the systematic recording of events, errors, and activities within an application or system. Each log entry provides context and information about the state of the system at a specific point in time. Logging is essential for troubleshooting, debugging, and auditing system activities. Logs capture critical information such as user actions, system events, and errors, which are invaluable for diagnosing issues and understanding system behavior. Modern logging frameworks offer capabilities for log aggregation, filtering, and real-time monitoring, making it easier to manage and analyze log data at scale.
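
To make log entries easy to aggregate and filter, they are often emitted as structured records. The sketch below uses Python's standard `logging` module with a small JSON formatter; the field names (`service`, `request_id`) are assumptions, and the in-memory stream stands in for standard output so the result can be inspected.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record):
        entry = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "service": getattr(record, "service", "unknown"),
            "request_id": getattr(record, "request_id", None),
            "message": record.getMessage(),
        }
        return json.dumps(entry)


stream = io.StringIO()  # stand-in for stdout or a log shipper
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("orders")
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.propagate = False  # avoid duplicate output through the root logger

# Context is attached through `extra` so it becomes queryable fields.
logger.info("payment declined", extra={"service": "orders", "request_id": "req-123"})
print(stream.getvalue())
```

Because every entry carries the same keys, an aggregation backend can filter by `service` or follow a single `request_id` across services without parsing free-form text.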

Tracing

Tracing involves tracking the flow of requests or transactions as they traverse through different components and services within a distributed system. It provides a detailed view of the journey of a request, helping identify latency, bottlenecks, and dependencies between microservices. Tracing tools capture timing information for each step of a request, allowing teams to visualize and analyze the performance of individual components and the overall system. Distributed tracing enables teams to correlate requests across multiple services and identify performance hotspots, enabling them to optimize system performance and enhance user experience.
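
The idea of capturing timing for each step under a shared trace can be sketched with a small context manager. This is a toy recorder rather than a real tracing library: the span fields are modeled loosely on common tracing conventions, and the service names are hypothetical.

```python
import time
import uuid
from contextlib import contextmanager

SPANS = []  # collected spans; a real tracer would export these to a backend


@contextmanager
def span(name, trace_id=None, parent_id=None):
    """Record the duration of one step of a request under a shared trace ID."""
    record = {
        "trace_id": trace_id or uuid.uuid4().hex,
        "span_id": uuid.uuid4().hex[:16],
        "parent_id": parent_id,
        "name": name,
    }
    start = time.perf_counter()
    try:
        yield record
    finally:
        record["duration_ms"] = (time.perf_counter() - start) * 1000
        SPANS.append(record)


# The child span reuses the parent's trace ID and points back to its span ID.
with span("GET /checkout") as root:
    with span("inventory-service", root["trace_id"], root["span_id"]):
        time.sleep(0.01)  # simulated downstream work

for s in SPANS:
    print(s["name"], s["parent_id"], round(s["duration_ms"], 1))
```

In a distributed system the trace and parent identifiers travel between services in request headers, which is what lets a backend reassemble the full request path and attribute latency to each hop.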

APM

APM focuses on monitoring the performance and availability of applications. APM tools provide insights into various aspects of application performance, including response times, error rates, transaction traces, and dependencies. These tools help organizations identify performance bottlenecks, troubleshoot issues, and optimize application performance to ensure a seamless user experience.

Synthetic

Synthetic monitoring involves simulating user interactions with the application to monitor its performance and functionality. Synthetic tests replicate predefined user journeys or transactions, interacting with the application as a real user would. These tests run at regular intervals from different locations and environments, providing insights into application health and user experience. Synthetic monitoring helps in identifying issues before they affect real users, such as downtime, slow response times, or broken functionality. By proactively monitoring application performance from the user’s perspective, teams can ensure high availability and reliability.

Metrics Collection and Analysis

Metrics collection involves gathering data about various aspects of the system, such as CPU usage, memory consumption, network traffic, and application performance. This data is then analyzed to identify trends, anomalies, and performance patterns. Metrics play a crucial role in understanding system behavior, identifying performance bottlenecks, and optimizing resource utilization. Modern observability platforms offer capabilities for collecting, storing, and analyzing metrics in real time, providing actionable insights into system performance.

Alerting and Notification

Alerting and notification mechanisms notify teams about critical issues and events in the system. Alerts are triggered based on predefined thresholds or conditions, such as high error rates, low disk space, or system downtime. Notifications are sent via various channels, including email, SMS, and chat platforms, ensuring timely awareness of incidents. Alerting helps teams proactively address issues and minimize downtime, ensuring the reliability and availability of the system.
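
Threshold-based alerting reduces to comparing current metric values against a set of rules. The sketch below shows that evaluation step only; the rule names, metric names, and thresholds are illustrative, and delivery over email, SMS, or chat is left out.

```python
import operator

# Hypothetical rules: fire when `op(value, threshold)` is true.
RULES = [
    {"name": "high error rate", "metric": "error_rate", "op": operator.gt, "threshold": 0.05},
    {"name": "low disk space", "metric": "disk_free_pct", "op": operator.lt, "threshold": 10},
]


def evaluate(metrics, rules=RULES):
    """Return the names of all rules whose condition holds for `metrics`."""
    fired = []
    for rule in rules:
        value = metrics.get(rule["metric"])
        # Metrics that were not reported are skipped rather than treated as zero.
        if value is not None and rule["op"](value, rule["threshold"]):
            fired.append(rule["name"])
    return fired


print(evaluate({"error_rate": 0.12, "disk_free_pct": 42}))
```

Keeping rules as data rather than code makes thresholds easy to review and tune, which matters because poorly chosen thresholds are a common source of alert fatigue.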

Benefits of Observability

Faster Issue Detection and Resolution

One of the key benefits of observability is its ability to identify bottlenecks early on. By offering a detailed view of individual services and the overall system dynamics, developers can quickly detect and diagnose issues like unexpected behaviors and performance bottlenecks, enabling prompt resolution.

Infrastructure Visibility

Infrastructure visibility involves actively monitoring the foundational components of a system, including the network, storage, and compute resources. This practice yields valuable insights into system performance and behavior, facilitating quicker diagnosis and resolution of issues.

Compliance And Auditing

Observability is essential for ensuring that businesses running Kubernetes meet compliance requirements and pass audits. It involves keeping careful records of what happens in the system in the form of logs, traces, and metrics. These records help demonstrate that the company adheres to government regulations and industry standards, and they make changes over time visible. During audits, they let inspectors check that everything is running in line with the company’s own policies and legal requirements. This record-keeping not only shows that operations are in order but also helps identify improvements that keep the organization compliant.

Capacity Planning and Scaling

Observability helps businesses balance having enough resources to handle their workload against overspending on unused capacity. By adjusting resource usage to real-time needs, they can save money while still delivering reliable service. Historical data shows how many resources were needed in the past, which helps teams plan for the future and avoid surprises. Observability also shows which parts of a system are busiest and which are less active, so resources can be managed more effectively and costs kept down.

Improved System Performance

Additionally, observability contributes to performance optimization. It provides valuable insights into system-level and service-level performance, allowing developers to fine-tune the architecture and optimize resource allocation. This optimization incrementally enhances system efficiency.

Enhanced User Experience

Observability in a system, particularly within a microservices architecture, significantly contributes to an enhanced user experience. The ability to monitor, trace, and analyze the system’s behavior in real time provides several benefits that directly impact the overall user experience. In particular, identifying problems proactively enables teams to address issues before users are affected, minimizing disruptions and ensuring a smoother user experience.

Best Observability Tool Features to Consider

Selecting the right observability tool is critical, as these tools play a crucial role in ensuring the stability and reliability of modern software systems. The following are key features to evaluate.

Alerting Mechanisms

Look for tools equipped with notification capabilities that promptly inform you when issues arise, enabling proactive management of potential problems. The tool should provide a search query feature that continuously monitors telemetry data and raises alerts when certain conditions are met. While some tools offer simple search queries or filters, others offer more complex setups with multiple conditions and varying thresholds.

Visualization

Observability requires quickly interpreting signals. Look for a tool featuring intuitive and adaptable dashboards, charts, and visualizations. These functionalities empower teams to efficiently analyze data, detect trends, and address issues promptly. Prioritize tools with strong querying capabilities and compatibility with popular visualization frameworks.

Data Correlation

When troubleshooting, engineers often face the need to switch between different interfaces and contexts to manually retrieve data, which can lengthen incident investigations. This complexity intensifies when dealing with microservices, as engineers must correlate data from various components to pinpoint issues within intricate application requests. To overcome these challenges, data correlation is vital. A unified interface automatically correlating all pertinent telemetry data can greatly streamline troubleshooting, enabling engineers to identify and resolve issues more effectively.

Distributed Tracing

Distributed tracing is a method utilized to analyze and monitor applications, especially those constructed with a microservices framework. It aids in precisely locating failures and uncovering the underlying reasons for subpar performance. Choosing an Observability tool that accommodates distributed tracing is essential, as it provides a comprehensive view of request execution and reveals latency sources.

Data-Driven Cost Control

Efficient data optimization is essential for building a successful observability practice. Organizations need observability tools with built-in automated features like storage and data optimization to consistently manage data volumes and associated costs. This ensures that organizations only pay for the data they need to meet their specific observability requirements.

Key Observability Tools

Observability tools are essential components for gaining insights into the health, performance, and behavior of complex systems. Here’s an overview of three popular observability tools: Elastic Stack, Prometheus & Grafana, and New Relic.

[Comparison table: Observability Tool, Category, Deployment Models, Pricing]

The choice of an observability tool depends on specific use cases, system architecture, and organizational preferences. Each of these tools offers unique features and strengths, allowing organizations to customize their observability strategy to meet their specific needs.

Conclusion

Observability in modern microservice architecture is indispensable for managing the complexities of distributed systems. By utilizing key components such as monitoring, logging, and tracing, organizations can gain valuable insights into system behavior. These insights not only facilitate faster issue detection and resolution but also contribute to improved system performance and enhanced user experience. With a wide range of observability tools available, organizations can customize their approach to meet specific needs, ensuring the smooth operation of their microservices architecture. Source Url: https://squareops.com/blog/observability-in-modern-microservices-architecture/


The Future of Enterprise Security: Why Zero Trust Architecture Is No Longer Optional

Introduction: The Myth of the Perimeter Is Dead

For decades, enterprise security was built on a simple, but now outdated, idea: trust but verify. IT teams set up strong perimeters—firewalls, VPNs, gateways—believing that once you’re inside, you’re safe. But today, in a world where remote work, cloud services, and mobile devices dominate, that perimeter has all but disappeared.

The modern digital enterprise isn’t confined to a single network. Employees log in from coffee shops, homes, airports. Devices get shared, stolen, or lost. APIs and third-party tools connect deeply with core systems. This creates a massive, fragmented attack surface—and trusting anything by default is a huge risk.

Enter Zero Trust Architecture (ZTA)—a new security mindset based on one core rule: never trust, always verify. Nothing inside or outside the network is trusted without thorough, ongoing verification.

Zero Trust isn’t just a buzzword or a compliance box to tick anymore. It’s a critical business requirement.

The Problem: Trust Has Become a Vulnerability

Why the Old Model Is Breaking

The old security approach assumes that once a user or device is authenticated, they’re safe. But today’s breaches often start from inside the network—a hacked employee account, an unpatched laptop, a misconfigured cloud bucket.

Attacks like SolarWinds (2020) and Colonial Pipeline (2021) showed that attackers don’t just break through the perimeter: they exploit trust once they’re inside, moving laterally and, in some cases, stealing data silently for months.

Data Lives Everywhere — But the Perimeter Doesn’t

Today’s businesses rely on a mix of:

SaaS platforms

Multiple clouds (public and private)

Edge and mobile devices

Third-party services

Sensitive data isn’t locked away in one data center anymore; it’s scattered across tools, apps, and endpoints. Defending just the perimeter is like locking your front door but leaving all the windows open.

Why Zero Trust Is Now a Business Imperative

Zero Trust flips the old model on its head: every access request is scrutinized every time, with no exceptions.

Here’s why Zero Trust can’t be ignored:

1. Adaptive Security, Not Static

Zero Trust is proactive. Instead of fixed rules, it uses continuous analysis of:

Who the user is and their role

Device health and security posture

Location and network context

Past and current behavior

Access decisions change in real time based on risk—helping you stop threats before damage occurs.
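A risk-based access decision of this kind might be sketched as follows. The signal names, weights, and thresholds are all illustrative assumptions; a production policy engine would derive them from real telemetry and tune them over time.

```python
from dataclasses import dataclass


@dataclass
class AccessRequest:
    role: str
    device_compliant: bool
    known_location: bool
    unusual_behavior: bool


def risk_score(req: AccessRequest) -> int:
    """Add up illustrative risk weights for each signal on the request."""
    score = 0
    if not req.device_compliant:
        score += 40
    if not req.known_location:
        score += 30
    if req.unusual_behavior:
        score += 30
    if req.role == "admin":
        score += 10  # privileged roles are held to a stricter bar
    return score


def decide(req: AccessRequest) -> str:
    """Map the score to a decision; thresholds are assumptions for the sketch."""
    score = risk_score(req)
    if score >= 60:
        return "deny"
    if score >= 30:
        return "step_up_mfa"
    return "allow"


print(decide(AccessRequest("engineer", True, True, False)))
print(decide(AccessRequest("engineer", True, False, False)))
print(decide(AccessRequest("admin", False, False, False)))
```

The same user can get a different answer on each request, because the decision depends on the current context rather than on a one-time login.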

2. Shrinks the Attack Surface

By applying least privilege access, users, apps, and devices only get what they absolutely need. If one account is compromised, attackers can’t roam freely inside your network.

Zero Trust creates isolated zones—no soft spots for attackers.

3. Designed for the Cloud Era

It works naturally with:

Cloud platforms (AWS, Azure, GCP)

Microservices and containers

It treats every component as potentially hostile, perfect for hybrid and multi-cloud setups where old boundaries don’t exist.

4. Built for Compliance

Data privacy laws like GDPR, HIPAA, and India’s DPDP require detailed access controls and audits. Zero Trust provides:

Fine-grained logs of users and devices

Role-based controls

Automated compliance reporting

It’s not just security—it’s responsible governance.

The Three Core Pillars of Zero Trust

Zero Trust rests on three key principles:

1. Verify Explicitly

Authenticate and authorize every request using multiple signals—user identity, device status, location, behavior patterns, and risk scores. No shortcuts.

2. Assume Breach

Design as if attackers are already inside. Segment workloads, monitor constantly, and be ready to contain damage fast.

3. Enforce Least Privilege

Grant minimal, temporary access based on roles. Regularly review and revoke unused permissions.
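The third principle, minimal and temporary access, can be illustrated with time-boxed grants that stop working once they expire. The `Grant` structure, the permission strings, and the numeric timestamps below are hypothetical and chosen only to keep the example deterministic.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Grant:
    user: str
    permission: str
    expires_at: float  # seconds on an illustrative clock


def is_allowed(grants: List[Grant], user: str, permission: str, now: float) -> bool:
    """Allow only if an unexpired grant for exactly this permission exists."""
    return any(
        g.user == user and g.permission == permission and now < g.expires_at
        for g in grants
    )


def revoke_expired(grants: List[Grant], now: float) -> List[Grant]:
    """Periodic review step: keep only grants that are still valid."""
    return [g for g in grants if now < g.expires_at]


grants = [
    Grant("alice", "db:read", expires_at=100.0),
    Grant("alice", "db:write", expires_at=50.0),
]

print(is_allowed(grants, "alice", "db:write", now=10.0))
print(is_allowed(grants, "alice", "db:write", now=60.0))
print(len(revoke_expired(grants, now=60.0)))
```

Access is denied by default: a user with no matching grant, or only an expired one, gets nothing.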

Bringing Zero Trust to Life: A Practical Roadmap

Zero Trust isn’t just a theory—it requires concrete tools and strategies:

1. Identity-Centric Security

Identity is the new perimeter. Invest in:

Multi-Factor Authentication (MFA)

Single Sign-On (SSO)

Role-Based Access Controls (RBAC)

Federated Identity Providers

This ensures users are checked at every access point.

2. Micro-Segmentation

Divide your network into secure zones. If one part is breached, others stay protected. Think of it as internal blast walls.
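The "blast wall" idea can be sketched as an explicit allowlist of zone-to-zone flows, where anything not listed is denied. The zone names and flows here are assumptions for the example; in practice they would be enforced by network policies or firewalls rather than application code.

```python
from typing import Set, Tuple

# Hypothetical allowlist: (source zone, destination zone). Everything else is denied.
ALLOWED_FLOWS: Set[Tuple[str, str]] = {
    ("web", "api"),
    ("api", "db"),
    ("hr_app", "hr_db"),
}


def can_connect(src_zone: str, dst_zone: str) -> bool:
    """Default-deny check for a single connection attempt."""
    return (src_zone, dst_zone) in ALLOWED_FLOWS


def reachable(start: str) -> Set[str]:
    """Zones an attacker could reach from a compromised zone via allowed flows."""
    seen: Set[str] = set()
    stack = [start]
    while stack:
        zone = stack.pop()
        for src, dst in ALLOWED_FLOWS:
            if src == zone and dst not in seen:
                seen.add(dst)
                stack.append(dst)
    return seen


print(can_connect("web", "db"))
print(sorted(reachable("web")))
```

A compromise of the `web` zone is contained to the zones it legitimately talks to; the HR systems stay out of reach because no allowed flow leads there.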

3. Endpoint Validation

Only allow compliant devices—corporate or BYOD—using tools like:

Endpoint Detection & Response (EDR)

Mobile Device Management (MDM)

Posture checks (OS updates, antivirus)
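A posture check along these lines could look like the sketch below. The `Device` fields and the 30-day patch window are illustrative assumptions; real EDR and MDM products expose their own data models.

```python
from dataclasses import dataclass
from typing import List

MAX_PATCH_AGE_DAYS = 30  # illustrative policy value


@dataclass
class Device:
    device_id: str
    os_patch_age_days: int
    antivirus_running: bool
    managed: bool  # enrolled in device management


def posture_failures(device: Device) -> List[str]:
    """Return the reasons a device fails the posture check (empty if compliant)."""
    failures = []
    if not device.managed:
        failures.append("not enrolled in device management")
    if device.os_patch_age_days > MAX_PATCH_AGE_DAYS:
        failures.append("OS updates overdue")
    if not device.antivirus_running:
        failures.append("antivirus not running")
    return failures


def is_compliant(device: Device) -> bool:
    return not posture_failures(device)


laptop = Device("laptop-1", os_patch_age_days=5, antivirus_running=True, managed=True)
phone = Device("byod-7", os_patch_age_days=45, antivirus_running=True, managed=False)

print(is_compliant(laptop))
print(posture_failures(phone))
```

Returning the list of failures, rather than a bare yes or no, lets the access layer tell users what to fix before they retry.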

4. Behavioral Analytics

Legitimate credentials can be misused. Use User and Entity Behavior Analytics (UEBA) to catch unusual activities like:

Odd login times

Rapid file downloads

Access from unexpected locations

This helps stop insider threats before damage happens.
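The three signals listed above can be checked against a per-user baseline, as in this simplified sketch. Real UEBA systems learn baselines statistically from historical activity; the fixed `Profile` values here are assumptions for illustration only.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Profile:
    usual_hours: range            # hours of day the user normally logs in
    usual_countries: Set[str]
    max_downloads_per_hour: int


@dataclass
class Event:
    hour: int
    country: str
    downloads_last_hour: int


def anomalies(profile: Profile, event: Event) -> List[str]:
    """Compare one event against the user's baseline and list what looks unusual."""
    found = []
    if event.hour not in profile.usual_hours:
        found.append("odd_login_time")
    if event.downloads_last_hour > profile.max_downloads_per_hour:
        found.append("rapid_downloads")
    if event.country not in profile.usual_countries:
        found.append("unexpected_location")
    return found


baseline = Profile(usual_hours=range(8, 19), usual_countries={"IN"}, max_downloads_per_hour=20)

print(anomalies(baseline, Event(hour=10, country="IN", downloads_last_hour=3)))
print(anomalies(baseline, Event(hour=3, country="RU", downloads_last_hour=400)))
```

Valid credentials pass authentication in both cases; only the second event deviates from the baseline enough to warrant investigation.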

How EDSPL Is Driving Zero Trust Transformation

At EDSPL, we know Zero Trust isn’t a product—it’s a continuous journey touching every part of your digital ecosystem.

Here’s how we make Zero Trust work for you:

Tailored Zero Trust Blueprints

We start by understanding your current setup, business goals, and compliance needs to craft a personalized roadmap.

Secure Software Development

Our apps are built with security baked in from day one, including encrypted APIs and strict access controls (application security).

Continuous Testing

Using Vulnerability Assessments, Penetration Testing, and Breach & Attack Simulations, we keep your defenses sharp and resilient.

24x7 SOC Monitoring

Our Security Operations Center watches your environment around the clock, detecting and responding to threats instantly.

Zero Trust Is a Journey — Don’t Wait Until It’s Too Late

Implementing Zero Trust takes effort—rethinking identities, policies, networks, and culture. But the cost of delay is huge:

One stolen credential can lead to ransomware lockdown.

One exposed API can leak thousands of records.

One unverified device can infect your entire network.

The best time to start was yesterday. The second-best time is now.

Conclusion: Trust Nothing, Protect Everything

Cybersecurity must keep pace with business change. Static walls and blind trust don’t work anymore. The future is decentralized, intelligent, and adaptive.

Zero Trust is not a question of if — it’s when. And with EDSPL by your side, your journey will be smart, scalable, and secure.

Ready to Transform Your Security Posture?

EDSPL is here to help you take confident steps towards a safer digital future. Let’s build a world where trust is earned, never assumed.

Visit Reach Us

Book a Zero Trust Assessment

Talk to Our Cybersecurity Architects

Zero Trust starts now—because tomorrow might be too late.

Please visit our website to read the full blog post: https://edspl.net/blog/the-future-of-enterprise-security-why-zero-trust-architecture-is-no-longer-optional/


Rolling Deployment in Kubernetes for Microservices

In the world of microservices, ensuring zero downtime during updates is crucial for maintaining user experience and operational continuity. Rolling deployment in Kubernetes for microservices provides a powerful strategy to deploy updates incrementally, minimizing disruptions while scaling efficiently. At Global Techno Solutions, we’ve implemented rolling deployments to streamline microservices updates, as showcased in our case study on Rolling Deployment in Kubernetes for Microservices.

The Challenge: Updating Microservices Without Downtime

A fintech startup approached us with a challenge: their microservices-based payment platform, hosted on Kubernetes, required frequent updates to roll out new features and security patches. However, their existing deployment strategy caused downtime, disrupting transactions and frustrating users. Their goal was to implement a rolling deployment strategy in Kubernetes to ensure seamless updates, maintain service availability, and support their growing user base of 500,000 monthly active users.

The Solution: Rolling Deployment in Kubernetes

At Global Techno Solutions, we leveraged Kubernetes’ rolling deployment capabilities to address their needs. Here’s how we ensured smooth updates:

Rolling Deployment Configuration: We configured Kubernetes to use rolling updates, ensuring new pods with updated microservices were gradually rolled out while old pods were phased out. This maintained availability throughout the deployment process.

Health Checks and Readiness Probes: We implemented readiness probes so that only pods ready to serve received traffic, and liveness probes so that Kubernetes restarted unresponsive containers. Together these prevented users from being routed to faulty instances during the update.

Traffic Management: Using Kubernetes’ service and ingress resources, we managed traffic to ensure a seamless transition between old and new pods, avoiding any disruptions in user transactions.

Rollback Mechanism: We set up automatic rollback capabilities in case of deployment failures, ensuring the system could revert to the previous stable version without manual intervention.

Monitoring and Logging: We integrated tools like Prometheus and Grafana to monitor deployment progress and track key metrics, such as error rates and latency, in real time.
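The mechanics of the steps above can be modeled with a small simulation. This is a conceptual sketch, not Kubernetes API code: it assumes a surge of one extra pod and no unavailable pods, uses a hypothetical `is_ready` callback in place of a readiness probe, and treats "rolled_back" as simply abandoning the rollout while the remaining pods keep serving.

```python
from typing import Callable, List, Tuple


def rolling_update(replicas: int, is_ready: Callable[[int], bool]) -> Tuple[str, List[int]]:
    """Simulate a rolling update and record how many pods serve traffic after each phase.

    is_ready(i) stands in for the readiness probe on the i-th new pod.
    """
    old, new = replicas, 0
    history = [old + new]
    for i in range(replicas):
        # A new pod is started alongside the existing ones (the surge).
        if not is_ready(i):
            # The failed pod never received traffic; stop the rollout here.
            return "rolled_back", history
        new += 1  # pod passed readiness and starts serving
        history.append(old + new)
        old -= 1  # only now is one old pod retired
        history.append(old + new)
    return "complete", history


status, serving = rolling_update(3, lambda i: True)
print(status, serving)

failed_status, failed_serving = rolling_update(3, lambda i: i != 1)
print(failed_status, failed_serving)
```

In both runs the number of serving pods never drops below the desired replica count, which is the property that makes the update invisible to users. In the failing run, the second new pod never passes readiness, so it is never added to the serving set.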

For a detailed look at our approach, explore our case study on Rolling Deployment in Kubernetes for Microservices.

The Results: Zero Downtime and Enhanced Scalability

The rolling deployment strategy delivered impressive results for the fintech startup:

Zero Downtime Deployments: Updates were rolled out seamlessly, with no disruptions to user transactions.

50% Faster Deployment Cycles: Incremental updates reduced deployment time, enabling faster feature releases.

20% Reduction in Error Rates: Health checks ensured only stable pods served traffic, improving reliability.

Improved User Trust: Consistent availability during updates enhanced the platform’s reputation among users.

These outcomes highlight the effectiveness of rolling deployments in Kubernetes for microservices. Learn more in our case study on Rolling Deployment in Kubernetes for Microservices.

Why Rolling Deployment Matters for Microservices

Rolling deployments in Kubernetes are a game-changer for microservices in 2025, offering benefits like:

Zero Downtime: Gradual updates ensure continuous service availability.

Scalability: Kubernetes dynamically scales pods to handle traffic during deployments.

Reliability: Health checks and rollbacks minimize the risk of failed updates.

Efficiency: Faster deployment cycles enable rapid iteration and innovation.

At Global Techno Solutions, we specialize in optimizing microservices deployments, ensuring businesses can scale and innovate without compromising on reliability.

Looking Ahead: The Future of Microservices Deployments