#DataSync

Explore tagged Tumblr posts

Text

Integrating Third-Party Tools into Your CRM System: Best Practices

A modern CRM is rarely a standalone tool — it works best when integrated with your business's key platforms like email services, accounting software, marketing tools, and more. But improper integration can lead to data errors, system lags, and security risks.

Here are the best practices developers should follow when integrating third-party tools into CRM systems:

1. Define Clear Integration Objectives

Identify business goals for each integration (e.g., marketing automation, lead capture, billing sync)

Choose tools that align with your CRM’s data model and workflows

Avoid unnecessary integrations that create maintenance overhead

2. Use APIs Wherever Possible

Rely on RESTful or GraphQL APIs for secure, scalable communication

Avoid direct database-level integrations that break during updates

Choose platforms with well-documented and stable APIs

Custom CRM solutions can be built with flexible API gateways

3. Data Mapping and Standardization

Map data fields between systems to prevent mismatches

Use a unified format for customer records, tags, timestamps, and IDs

Normalize values like currencies, time zones, and languages

Maintain a consistent data schema across all tools
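The mapping and normalization steps above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the field names in `FIELD_MAP` and the `normalize_record` helper are hypothetical, and the sketch assumes timestamps arrive as ISO 8601 strings and that timestamps without an offset are already in UTC.

```python
from datetime import datetime, timezone
from decimal import Decimal

# Hypothetical mapping from a third-party tool's field names to CRM field names.
FIELD_MAP = {
    "email_address": "email",
    "full_name": "name",
    "created": "created_at",
    "amount": "amount",
    "currency": "currency",
}


def normalize_record(source: dict) -> dict:
    """Rename fields via FIELD_MAP, then normalize email, timestamp, and money values."""
    record = {crm: source[src] for src, crm in FIELD_MAP.items() if src in source}

    if "email" in record:
        record["email"] = record["email"].strip().lower()

    if "created_at" in record:
        parsed = datetime.fromisoformat(record["created_at"])
        if parsed.tzinfo is None:
            # Assumption: timestamps without an offset are already UTC.
            parsed = parsed.replace(tzinfo=timezone.utc)
        record["created_at"] = parsed.astimezone(timezone.utc).isoformat()

    if "currency" in record:
        record["currency"] = record["currency"].strip().upper()

    if "amount" in record:
        # Store money as a fixed two-decimal string to avoid float drift between systems.
        record["amount"] = str(Decimal(str(record["amount"])).quantize(Decimal("0.01")))

    return record
```

Keeping the mapping in one table like this makes mismatches easier to spot when either system changes its schema.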

4. Authentication and Security

Use OAuth 2.0 or token-based authentication for third-party access

Set role-based permissions for which apps access which CRM modules

Monitor access logs for unauthorized activity

Encrypt data during transfer and storage

5. Error Handling and Logging

Create retry logic for API failures and rate limits

Set up alert systems for integration breakdowns

Maintain detailed logs for debugging sync issues

Keep version control of integration scripts and middleware
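Retry logic for API failures and rate limits is often built as exponential backoff with jitter. The sketch below is one possible shape, under stated assumptions: `TransientError` and `call_with_retry` are hypothetical names, and the caller is assumed to translate timeouts or HTTP 429/5xx responses into `TransientError`.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, HTTP 429/5xx)."""


def call_with_retry(func, max_attempts=5, base_delay=0.5, max_delay=30.0, sleep=time.sleep):
    """Call func(); on TransientError wait with exponential backoff plus jitter, then retry."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error so alerting can pick it up
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Jitter spreads retries out so many clients do not retry in lockstep.
            sleep(delay + random.uniform(0, delay / 2))
```

The `sleep` parameter is injectable so the retry schedule can be tested without actually waiting.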

6. Real-Time vs Batch Syncing

Use real-time sync for critical customer events (e.g., purchases, support tickets)

Use batch syncing for bulk data like marketing lists or invoices

Balance sync frequency to optimize server load

Choose integration frequency based on business impact
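One way to combine the two sync modes is a small router that pushes critical events immediately and buffers everything else into batches. This is a sketch only: the event type names, the `SyncRouter` class, and the `send` callback are hypothetical, and a production version would also flush on a timer.

```python
# Hypothetical event types that should be synced immediately.
REALTIME_EVENTS = {"purchase", "support_ticket"}


class SyncRouter:
    """Send critical events right away; buffer the rest and send them in batches."""

    def __init__(self, send, batch_size=100):
        self.send = send            # callable that receives a list of events
        self.batch_size = batch_size
        self.pending = []

    def handle(self, event):
        if event["type"] in REALTIME_EVENTS:
            self.send([event])
        else:
            self.pending.append(event)
            if len(self.pending) >= self.batch_size:
                self.flush()

    def flush(self):
        """Send whatever is buffered; a no-op when the buffer is empty."""
        if self.pending:
            batch, self.pending = self.pending, []
            self.send(batch)
```

Raising `batch_size` trades freshness for fewer API calls, which is the balance the points above describe.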

7. Scalability and Maintenance

Build integrations as microservices or middleware, not monolithic code

Use message queues (like Kafka or RabbitMQ) for heavy data flow

Design integrations that can evolve with CRM upgrades

Partner with CRM developers for long-term integration strategy

CRM integration experts can future-proof your ecosystem
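The queueing idea can be illustrated without a broker. The sketch below uses Python's standard-library `queue.Queue` and threads as an in-process stand-in; it does not use the Kafka or RabbitMQ client APIs, and `process_through_queue` is a hypothetical helper. The point it shows is the decoupling: producers enqueue work, and a bounded queue makes them wait when consumers fall behind.

```python
import queue
import threading


def process_through_queue(items, handler, worker_count=2):
    """Run handler over items via a bounded queue consumed by worker threads."""
    jobs = queue.Queue(maxsize=100)  # bounded: put() blocks when workers fall behind
    results = []
    lock = threading.Lock()

    def worker():
        while True:
            job = jobs.get()
            try:
                if job is None:  # sentinel: no more work for this worker
                    return
                outcome = handler(job)
                with lock:
                    results.append(outcome)
            finally:
                jobs.task_done()

    threads = [threading.Thread(target=worker) for _ in range(worker_count)]
    for thread in threads:
        thread.start()
    for item in items:
        jobs.put(item)
    for _ in threads:
        jobs.put(None)  # one sentinel per worker
    jobs.join()
    for thread in threads:
        thread.join()
    return results  # completion order, not input order
```

A real broker adds durability and delivery guarantees that this in-memory version does not have.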

#CRMIntegration#CRMBestPractices#APIIntegration#CustomCRM#TechStack#ThirdPartyTools#CRMDevelopment#DataSync#SecureIntegration#WorkflowAutomation

2 notes


Text

#Screenshot#MobilePhotography#TechTips#PhoneToPC#FileTransfer#AndroidTips#iOSGuide#MobileStorage#TechTutorial#ScreenshotTransfer#MobileTech#DigitalWorkflow#TechSavvy#MobileManagement#PCTransfer#DataSync#CloudStorage#TechCommunity#MobileHacks#TechSupport#DeviceManagement

0 notes

Video

tumblr

Home Page of Fred J. Nusbaum Jr. Dec 1997 Archived Web Page 🧩 🔊

0 notes

Text

🔗 Seamlessly Connect Your Systems with Salesforce Integration Services! 🌐

Struggling to sync your Salesforce CRM with other tools and platforms? Salesforce Integration Services ensure that all your business systems—from ERP to marketing automation—work together seamlessly! Streamline your operations, boost data accuracy, and unlock new efficiencies. Dextara Datamatics

✅ Effortless Data Sync ✅ Enhanced Workflow Automation ✅ Custom API Integrations ✅ Real-Time Insights Across Platforms

Integrate smarter and accelerate your business success! 🚀💼

#salesforce consultant#salesforce consulting services#boost data accuracy#and unlock new efficiencies.#✅ Effortless Data Sync#✅ Enhanced Workflow Automation#✅ Custom API Integrations#✅ Real-Time Insights Across Platforms#Integrate smarter and accelerate your business success! 🚀💼#Salesforce#DigitalTransformation#BusinessEfficiency#CRM#DataSync

0 notes

Text

🚗 Stay Ahead with Seamless Fitment Data Synchronization!

Managing fitment data across multiple platforms doesn't have to be a challenge. Sync all your data with ease and focus on what truly matters—growing your business! Keep things streamlined, accurate, and hassle-free.

1 note


Text

Celigo Pricing Simplified: Optimize Your Integration Strategy with the Right Plan

Choosing the right integration platform is a critical decision for any business, and understanding the associated costs is equally important. Celigo, a leading integration Platform as a Service (iPaaS), offers a variety of pricing plans tailored to different business needs. However, determining the best plan for your organization requires more than just a glance at the price list; it involves a deep understanding of what each plan offers and how these offerings align with your business goals.

Understanding Celigo’s Platform and Capabilities

At its core, Celigo’s platform, integrator.io, is designed to streamline the integration of various applications and data sources within an organization. It’s a cloud-based solution that allows businesses to automate processes, synchronize data, and build custom integrations using a powerful suite of tools. Whether you’re looking to integrate popular applications like Salesforce, Oracle NetSuite, and Shopify, or need to connect more niche systems, Celigo provides the flexibility to meet those needs.

Some of the standout features of integrator.io include pre-built integrations, a drag-and-drop interface for custom integrations, real-time data synchronization, robust error handling, and comprehensive API management. These features ensure that businesses can create seamless connections between their systems, improving efficiency and reducing manual workload.

Licensing Editions: Tailoring the Platform to Your Needs

Celigo offers four main licensing editions: Standard, Professional, Premium, and Enterprise. Each edition is designed to cater to different levels of integration needs, from small businesses just starting their digital transformation journey to large enterprises requiring extensive integration capabilities.

Standard Edition: This entry-level plan is ideal for businesses that are new to Celigo. It includes three endpoints and 16 integration flows, along with essential support and API management capabilities. It’s a cost-effective way to start automating basic processes without overwhelming complexity.

Professional Edition: Targeted at businesses that are more advanced in their digital transformation, this plan includes five endpoints and 100 flows. It also offers a preferred support plan and includes a sandbox environment for testing. The Professional Edition is a good fit for companies looking to scale their integrations as they grow.

Premium Edition: Designed for businesses that have fully embraced digital transformation, the Premium Edition provides 10 endpoints and unlimited flows. In addition to premier support, it offers advanced features like Single Sign-On (SSO) support, external FTP site integration, and API management. This edition is suited for organizations with complex integration needs that require robust support and advanced capabilities.

Enterprise Edition: The most comprehensive plan, the Enterprise Edition is built for large businesses with extensive integration requirements. It includes 20 endpoints, unlimited flows, premier support, API management, and multiple external FTP sites. This edition is ideal for enterprises looking to integrate multiple departments and processes across the organization.
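The endpoint and flow limits listed above lend themselves to a quick sizing check. The sketch below uses only the figures quoted in this post, which may not match Celigo's current terms, and it ignores other differences between editions such as support tier, sandbox, or SSO. `EDITIONS` and `smallest_edition` are hypothetical names.

```python
import math

# (edition, endpoints, flows) as quoted in this post; math.inf stands for "unlimited flows".
EDITIONS = [
    ("Standard", 3, 16),
    ("Professional", 5, 100),
    ("Premium", 10, math.inf),
    ("Enterprise", 20, math.inf),
]


def smallest_edition(endpoints_needed, flows_needed):
    """Return the first edition whose limits cover the requirement, or None if none does."""
    for name, endpoints, flows in EDITIONS:
        if endpoints_needed <= endpoints and flows_needed <= flows:
            return name
    return None
```

For example, three endpoints with 20 flows exceeds the Standard flow limit, so `smallest_edition(3, 20)` returns `"Professional"`.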

Cost Factors: What Influences Celigo Pricing?

While the base price of each edition gives an initial indication of cost, several factors can influence the total investment required for a Celigo solution. Understanding these factors can help businesses make an informed decision.

Number of Endpoints: An endpoint in Celigo refers to a specific application or system that you want to connect. The more endpoints you need, the higher the cost. For instance, integrating a CRM system with an e-commerce platform would count as two endpoints.

Number of Flows: Flows represent the specific integrations set up between endpoints. More complex workflows or a higher volume of integrations will require more flows, impacting the overall pricing.

Complexity of Integration: The complexity of your integration needs—such as customizations, data mapping, and the number of systems involved—can significantly affect the cost. Custom integrations often require additional resources for setup and maintenance, driving up the price.

Advanced Features: Certain advanced features, like API management, custom connectors, and advanced error handling, are available at higher price points. These features provide greater control and flexibility but come at an additional cost.

Support Levels: Celigo offers three levels of support—Essential, Preferred, and Premier. Each tier provides different levels of access to support resources and response times. Higher support levels are associated with higher costs but provide quicker resolution and more comprehensive support options.

The Importance of Planning for Implementation

Implementing a Celigo solution is not just about the initial purchase; it also involves a significant investment in time and resources. The implementation process includes identifying integration needs, planning the project, purchasing the necessary licenses, configuring the integrations, and testing the solution. Proper planning and budgeting for these steps are crucial to ensure a successful deployment.

Working with a trusted partner can help streamline the implementation process and maximize the return on investment. A partner can provide expertise, guidance, and support throughout the project, ensuring that the Celigo solution meets your specific business requirements.

Integs Cloud: Your Trusted Celigo Integration Partner

Integs Cloud is a certified Celigo partner specializing in iPaaS implementation and support. We leverage the integrator.io platform’s robust functionalities to deliver pre-built and custom Celigo connectors, helping you seamlessly connect Oracle NetSuite with your essential business applications and automate workflows for streamlined operations.

By partnering with Integs Cloud, you can unlock the full potential of Celigo iPaaS and achieve significant improvements in efficiency, scalability, and data integration across your enterprise systems.

Conclusion

Celigo’s pricing is more than just a list of numbers; it reflects the depth and breadth of its integration capabilities. By understanding the different licensing editions, cost factors, and the importance of a well-planned implementation, businesses can make a more informed decision about which Celigo plan is right for them. Whether you’re a small business starting with basic integrations or a large enterprise with complex needs, Celigo offers a scalable, flexible solution to help you achieve your integration goals.

Get Started Today!

Elevate your business operations with Integs Cloud’s expertise in Celigo integration software. Contact us for a no-obligation consultation and discover how our certified Celigo professionals can transform your enterprise systems with Celigo integrator.io.

#Celigo#IntegsCloud#IntegrationSolutions#BusinessGrowth#DigitalTransformation#IntegrationPlatform#CloudIntegration#iPaaS#TechSolutions#BusinessEfficiency#Automation#EnterpriseTech#SoftwareIntegration#TechInnovation#DataSync#BusinessAutomation#TechStrategy#IntegrationExperts

0 notes

Text

RESOLVING THE PROBLEM OF DATA SYNCHRONIZATION WITH SALESFORCE

0 notes

Text

Boost ROI with Pendo Data Sync: BigQuery Magic Unleashed

Data ROI with Pendo Data Sync

Data is crucial when making important business decisions. Whether the goal is finding new opportunities, optimizing processes, or providing greater value to customers, leadership wants to know that the facts are on its side when deciding about the future. That means obtaining and assessing accurate data, particularly data derived from its products.

Pendo offers businesses the best means of incorporating product data into their overall business plan. Pendo is a company that aims to improve software experience worldwide. They have developed a product called Pendo Data Sync that facilitates the connection of behavioral insights from applications to the larger business. Regardless of where the data is located within an organization, teams can view customer engagement and health data with Pendo Data Sync.

Product data that is siloed is wasted product data

Businesses cannot understand the complete user journey if they cannot integrate product-level behavioral insights into their business reports. Current macroeconomic conditions put additional pressure on businesses to retain and expand their customer base, so failing to fully understand user behavior is clearly detrimental.

Too often, piecemeal data compilation or API pulls yield an incomplete picture of product usage and fail to deliver the value that companies need. This approach can divert attention and resources away from developing a supported, scalable data strategy. Without a single source of truth for product data, each team is left with incomplete information when making critical business decisions. As a result, data becomes siloed, and insights that could answer important questions like these are lost:

Do users find value in the core product areas that fit the business model?

As their renewal date draws near, are customer cohorts still active and healthy?

Which behavioral patterns can guide sales strategy to optimize the lifetime value of customer accounts?

Do customer teams have the knowledge they need to deal with risk and churn proactively?

Deep understanding to address important queries

There is an alternative to this disjointed method. With Pendo Data Sync for Google Cloud, teams can easily make Pendo data accessible in Cloud Storage and BigQuery, as well as any other data lake or warehouse. Pendo helps businesses make critical decisions with confidence by combining behavioral data from product applications (that is, user interactions with them) with other essential business data sources.

Pendo makes it simple to:

Access all historical behavioral and product usage data

Organize the important data from across your data ecosystem and act on it

By combining these extra datasets with BigQuery and Looker in Google Cloud, data teams can make the most of these tools and produce business insights that were previously out of reach.

Democratize data to promote well-informed choices: Instantly combine product data with all of your important business data at the depth needed to support a modern, data-driven enterprise.

Encourage more efficient use of resources: Your data and engineering teams will have more time for more valuable work if they no longer need to pull separate data sets manually.

Get the most out of your product insights: Give every department, all the way up to the C-suite, the ability to speak the same business language.

Make decisions with confidence and speed: Product data should be the primary consideration in all of your decision-making.

Democratizing information to support industry

Customers rely on Pendo as their main source of truth for the apps that they develop, market, and use. Thanks to Pendo Data Sync, these insights are now accessible to teams across the business, rather than just those who use Pendo directly. Any department or cross-functional initiative can access Pendo data centrally through an easy-to-follow extract, transform, and load (ETL) process.

Pendo data has been utilized by Pendo Data Sync clients for many different use cases up to this point. These include figuring out where friction exists in your end-to-end user journey, evaluating the effect of product enhancements on sales and renewals, and creating a thorough customer health score that is derived from all available data sources to inform renewal strategy. Other teams have also used Pendo data to find up-sell and cross-sell opportunities to drive data-driven account growth, or to build a churn-risk model based on sentiment signals and product usage.

Pendo Data Sync: Built with BigQuery

Because Pendo Data Sync was built on top of Google Cloud, the initial development and launch were straightforward. The engineering team at Pendo developed the Pendo Data Sync beta by drawing on their prior experience with Google Cloud services and products. This version was then made available to Google Cloud design partners, which helped standardize procedures and best practices for importing large amounts of Pendo data into BigQuery from Cloud Storage.

The data science team at Pendo also uses Looker as its main business intelligence tool and BigQuery as its data warehouse solution. The data team collaborated with engineering to design the product’s data structure, ETL best practices, and assistance documentation, making them Data Sync’s first “alpha” customer.

Pendo is currently investigating further integration into Google Cloud in the future, including direct data sharing within Analytics Hub.

Take advantage of product data

You can make the most of your product usage data by collaborating with Pendo. Your teams will be able to make data-driven strategic decisions regarding customer growth and retention when you combine quantitative and qualitative feedback with other relevant data sets. This will provide you with a comprehensive understanding of user engagement and health.

Visit Pendo in the Google Cloud Marketplace or get in touch with a Pendo or Google sales representative to find out more about Pendo Data Sync. To find out more about how your company can use Pendo to improve customer engagement and health, you can also ask for a personalized demo with a member of the Pendo team.

The benefit of “Built with BigQuery” for data providers and ISVs

Built on Google Data Cloud, Built with BigQuery enables businesses such as Pendo to build innovative applications. Companies that take part can:

Gain access to dedicated specialists who can speed up product design and architecture by sharing their knowledge of important use cases, best practices, and architectural patterns.

Increase adoption, create demand, and raise awareness with collaborative marketing campaigns.

ISVs benefit from BigQuery’s strong, highly scalable unified AI lakehouse, which is integrated with Google Cloud’s sustainable, open, and secure platform.

Read more on Govindhtech.com

#PendoDataSync#DataSync#Pendo#bigquery#ai#googlecloud#cloudstorage#api#technology#technews#govindhtech

0 notes

Text

OMG it worked.

How th does it say NEW and has 6 year old reviews?

It was already in the system what kind of computer I was getting when I talked to Riley about it. Go, me.

#mediasync#media#sync#sync media#channel wanted#channel#wanted#new#platform#new platform#data sync#datasync#media sync#syncmedia

0 notes

Text

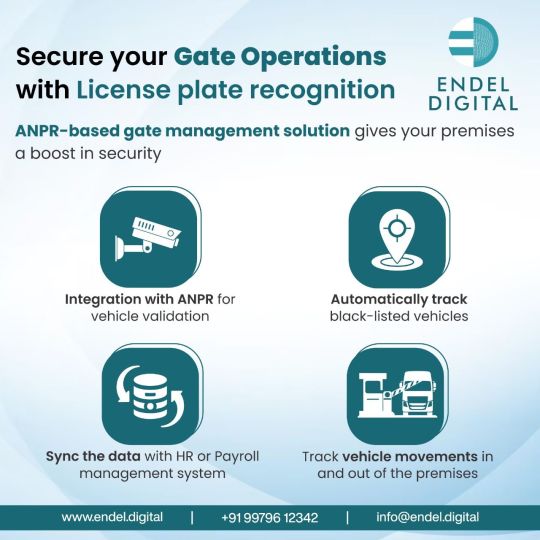

Manage your gate operations with Number Plate Recognition Camera technology that gives you ease of operation and makes gate entries error-free and tamper-free.

For More info visit us on:- https://endel.digital/gate-management-systems/

0 notes

Link

#AdvancedFeatures#BusinessSolution#CloudStorage#ContinuousImprovement#DataBackup#DataLoss#DataSync#DSM#ExploreSynologyDrive#FileManagement#FutureFeatures#HardwareRequirements#HighPerformance#InstallationGuide#MediaStorage#MediaStreaming#NAS#NASSeit#PowerfulSolution#StorageSolution#SynologyDrive#Troubleshooting#UserFriendly#UserInterface#Versatile

0 notes

Text

The Future of Cloud Computing: Trends and AWS's Role

Cloud computing continues to evolve, shaping the future of digital transformation across industries. As businesses increasingly migrate to the cloud, emerging trends like AI-driven cloud solutions, edge computing, and serverless architectures are redefining how applications are built and deployed. In this blog, we will explore the future of cloud computing and how AWS remains a key player in driving innovation.

Key Trends in Cloud Computing

1. AI and Machine Learning in the Cloud

Cloud providers are integrating AI and ML services into their platforms to automate tasks, optimize workflows, and enhance decision-making. AWS offers services like:

Amazon SageMaker — A fully managed service to build, train, and deploy ML models.

Amazon Bedrock — A generative AI service enabling businesses to leverage foundation models.

2. Edge Computing and Hybrid Cloud

With the rise of IoT and real-time processing needs, edge computing is gaining traction. AWS contributes with:

AWS Outposts — Extends AWS infrastructure to on-premises environments.

AWS Wavelength — Brings cloud services closer to 5G networks for ultra-low latency applications.

3. Serverless and Event-Driven Architectures

Serverless computing is making application development more efficient by removing the need to manage infrastructure. AWS leads with:

AWS Lambda — Executes code in response to events without provisioning servers.

Amazon EventBridge — Connects applications using event-driven workflows.
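As an illustration of the event-driven model described above, here is a minimal sketch of a Python Lambda handler receiving an EventBridge-style event. The `(event, context)` signature is the standard Python handler convention, and `source`, `detail-type`, and `detail` are standard EventBridge envelope fields. The `order_id` field and the shape of the return value are assumptions made for this example.

```python
import json


def lambda_handler(event, context):
    """Handle one EventBridge-style event; 'order_id' is a hypothetical detail field."""
    detail = event.get("detail", {})
    order_id = detail.get("order_id")
    if order_id is None:
        return {"statusCode": 400, "body": json.dumps({"error": "missing order_id"})}

    # Real business logic (for example, updating a record) would go here.
    return {
        "statusCode": 200,
        "body": json.dumps({"processed": order_id, "source": event.get("source")}),
    }
```

Because the handler is a plain function, it can be exercised locally with a sample event dictionary before being deployed.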

4. Multi-Cloud and Interoperability

Businesses are adopting multi-cloud strategies to avoid vendor lock-in and enhance redundancy. AWS facilitates interoperability through:

AWS CloudFormation — Infrastructure as Code (IaC) for provisioning AWS resources, with third-party resource types available through the CloudFormation Registry.

AWS DataSync — Seamless data transfer between AWS and other cloud providers.

5. Sustainability and Green Cloud Computing

Cloud providers are focusing on reducing carbon footprints. AWS initiatives include:

AWS Sustainability Pillar — Helps businesses design sustainable workloads.

Amazon’s Renewable Energy Commitment — Aims for 100% renewable energy usage by 2025.

AWS’s Role in the Future of Cloud Computing

1. Innovation in AI and ML

AWS continues to lead AI adoption with services like Amazon Titan, which provides foundational AI models, and AWS Trainium, a specialized chip for AI workloads.

2. Security and Compliance

With increasing cyber threats, AWS offers:

AWS Security Hub — Centralized security management.

AWS Nitro System — Enhances virtualization security.

3. Industry-Specific Cloud Solutions

AWS provides tailored solutions for healthcare, finance, and gaming with:

AWS HealthLake — Manages healthcare data securely.

Amazon FinSpace — Analyzes financial data efficiently.

Conclusion

The future of cloud computing is dynamic, with trends like AI, edge computing, and sustainability shaping its evolution. AWS remains at the forefront, providing innovative solutions that empower businesses to scale and optimize their operations.

WEBSITE: https://www.ficusoft.in/aws-training-in-chennai/

0 notes

Text

E-Commerce by Integrating Home Depot API for Ultimate Customization

Boost your e-commerce business with Home Depot API Integration! 🚀 Sync inventory, automate management, and enhance customer experience with real-time data. Perfect for omnichannel retail. #Ecommerce #APIIntegration #HomeDepot #RetailTech #Automation #DigitalTransformation #OnlineShopping #InventoryManagement #Omnichannel #BusinessGrowth #SoftwareDevelopment #SpringBoot #Java #VUEjs #CloudComputing #SmartRetail #B2B #TechSolutions #Dropshipping #DataSync

E-Commerce

Introduction to Home Depot API

Benefits of API Integration for E-commerce

Understanding Omnichannel Management Suites

Customization Options for Home Depot API Integration

Step-by-Step Guide to Home Depot API Integration

Troubleshooting Common Issues in API Integration

Measuring the Success of Your API Integration

Future Trends in E-commerce API Integration

Integrating Home depot API Suite for…

0 notes

Video

tumblr

Datasync Organization, Church & School Pages Dec 1996 Archived Web Page

0 notes

Text

Data Pipeline Architecture for Amazon Redshift: An In-Depth Guide

In the era of big data and analytics, Amazon Redshift stands out as a popular choice for managing and analyzing vast amounts of structured and semi-structured data. To leverage its full potential, a well-designed data pipeline for Amazon Redshift is crucial. This article explores the architecture of a robust data pipeline tailored for Amazon Redshift, detailing its components, workflows, and best practices.

Understanding a Data Pipeline for Amazon Redshift

A data pipeline is a series of processes that extract, transform, and load (ETL) data from various sources into a destination system for analysis. In the case of Amazon Redshift, the pipeline ensures data flows seamlessly from source systems into this cloud-based data warehouse, where it can be queried and analyzed.

Key Components of a Data Pipeline Architecture

A comprehensive data pipeline for Amazon Redshift comprises several components, each playing a pivotal role:

Data Sources: These include databases, APIs, file systems, IoT devices, and third-party services. The data sources generate raw data that must be ingested into the pipeline.

Ingestion Layer: The ingestion layer captures data from multiple sources and transports it into a staging area. Tools like AWS DataSync, Amazon Kinesis, and Apache Kafka are commonly used for this purpose.

Staging Area: Before data is loaded into Amazon Redshift, it is often stored in a temporary staging area, such as Amazon S3. This step allows preprocessing and ensures scalability when handling large data volumes.

ETL/ELT Processes: The ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform) layer prepares the data for Redshift. Tools like AWS Glue, Apache Airflow, and Matillion help clean, transform, and structure data efficiently.

Amazon Redshift: The central data warehouse where transformed data is stored and optimized for querying. Amazon Redshift's columnar storage and MPP (Massively Parallel Processing) architecture make it ideal for analytics.

Data Visualization and Analytics: Tools like Amazon QuickSight, Tableau, or Power BI connect to Redshift to visualize and analyze the data, providing actionable insights.

Data Pipeline Workflow

A well-designed pipeline operates in sequential or parallel workflows, depending on the complexity of the data and the business requirements:

Data Extraction: Data is extracted from source systems and moved into a staging area, often with minimal transformations.

Data Transformation: Raw data is cleaned, enriched, and structured to meet the schema and business logic requirements of Redshift.

Data Loading: Transformed data is loaded into Amazon Redshift tables using COPY commands or third-party tools.

Data Validation: Post-load checks ensure data accuracy, consistency, and completeness.

Automation and Monitoring: Scheduled jobs and monitoring systems ensure the pipeline runs smoothly and flags any issues.
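The loading step above mentions COPY commands. The sketch below assembles a Redshift `COPY` statement as a string, under stated assumptions: `build_copy_statement` is a hypothetical helper, the table name, bucket, and role ARN in the usage note are placeholders, and only the `PARQUET` and `CSV` formats are handled. Executing the statement against a cluster is left out.

```python
import re


def build_copy_statement(table, s3_uri, iam_role_arn, file_format="PARQUET", gzip=False):
    """Build a Redshift COPY statement that loads staged S3 files into a table."""
    if not re.fullmatch(r"[A-Za-z_][A-Za-z0-9_.]*", table):
        raise ValueError("unexpected characters in table name")
    if not s3_uri.startswith("s3://"):
        raise ValueError("s3_uri must start with s3://")
    if file_format not in ("PARQUET", "CSV"):
        raise ValueError("this sketch only handles PARQUET and CSV")
    if gzip and file_format != "CSV":
        raise ValueError("GZIP is only applied to CSV files in this sketch")

    parts = [
        f"COPY {table}",
        f"FROM '{s3_uri}'",
        f"IAM_ROLE '{iam_role_arn}'",
        f"FORMAT AS {file_format}",
    ]
    if file_format == "CSV":
        parts.append("IGNOREHEADER 1")  # assumption: each file starts with a header row
        if gzip:
            parts.append("GZIP")
    return "\n".join(parts) + ";"
```

For example, `build_copy_statement("analytics.orders", "s3://example-bucket/staging/orders/", "arn:aws:iam::123456789012:role/ExampleRedshiftLoad")` yields a four-line statement ending in `FORMAT AS PARQUET;`. The values are interpolated directly into SQL, so they should come from trusted configuration rather than user input.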

Best Practices for Data Pipeline Architecture

To maximize the efficiency and reliability of a data pipeline for Amazon Redshift, follow these best practices:

Optimize Data Ingestion

Use Amazon S3 as an intermediary for large data transfers.

Compress and partition data to minimize storage and improve query performance.

Design for Scalability

Choose tools and services that can scale as data volume grows.

Leverage Redshift Spectrum to query data directly from S3.

Prioritize Data Quality

Implement rigorous data validation and cleansing routines.

Use AWS Glue DataBrew for visual data preparation.

Secure Your Pipeline

Use encryption for data at rest and in transit.

Configure IAM roles and permissions to restrict access to sensitive data.

Automate and Monitor

Schedule ETL jobs with AWS Step Functions or Apache Airflow.

Set up alerts and dashboards using Amazon CloudWatch to monitor pipeline health.
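The advice to compress and partition data before staging it can be sketched as follows. This is an illustration only: `partition_and_compress`, the `event_date` field, and the `staging/...` key layout are hypothetical, the output is gzip-compressed JSON Lines, and the actual upload to Amazon S3 is left out.

```python
import gzip
import json
from collections import defaultdict


def partition_and_compress(records, date_field="event_date"):
    """Group records by date and return {object_key: gzip-compressed JSON Lines bytes}."""
    groups = defaultdict(list)
    for record in records:
        groups[record[date_field]].append(record)

    staged = {}
    for day, rows in groups.items():
        payload = "\n".join(json.dumps(row, sort_keys=True) for row in rows)
        # Hypothetical key layout: one prefix per date so loads can target a single day.
        key = f"staging/{date_field}={day}/part-0000.json.gz"
        staged[key] = gzip.compress(payload.encode("utf-8"))
    return staged
```

Partitioning by date keeps each load small and lets a failed day be reloaded without touching the others.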

Tools for Building a Data Pipeline for Amazon Redshift

Several tools and services streamline the process of building and managing a data pipeline for Redshift:

AWS Glue: A serverless data integration service for ETL processes.

Apache Airflow: An open-source tool for workflow automation and orchestration.

Fivetran: A SaaS solution for automated data integration.

Matillion: A cloud-native ETL tool optimized for Redshift.

Amazon Kinesis: A service for real-time data streaming into Redshift.

Benefits of a Well-Architected Data Pipeline

A robust data pipeline for Amazon Redshift provides several advantages:

Efficiency: Automates complex workflows, saving time and resources.

Scalability: Handles growing data volumes seamlessly.

Reliability: Ensures consistent data quality and availability.

Actionable Insights: Prepares data for advanced analytics and visualization.

Conclusion

Designing an efficient data pipeline for Amazon Redshift is vital for unlocking the full potential of your data. By leveraging modern tools, adhering to best practices, and focusing on scalability and security, businesses can streamline their data workflows and gain valuable insights. Whether you’re a startup or an enterprise, a well-architected data pipeline ensures that your analytics capabilities remain robust and future-ready. With the right pipeline in place, Amazon Redshift can become the backbone of your data analytics strategy, helping you make data-driven decisions with confidence.

0 notes

Photo

🚀 Exciting News for #CustomerService and #DataManagement Enthusiasts! 🎉

As businesses strive to enhance customer experiences, the integration of helpdesk software with robust database management systems is no longer just an option—it's a necessity. That's why the MySQL AppiWorks extension for Zoho Desk is a game-changer! 🌟

### Here's Why I'm Impressed:

Working in the SaaS industry, I've seen many struggle with the manual integration of software systems—often leading to data desynchronization and errors. But with MySQL AppiWorks for Zoho Desk, it's a whole different story. This no-code integration and automation solution simplifies the process, ensuring that data flows seamlessly between Zoho Desk and MySQL.

### Key Features That Stand Out:

- **Automated and on-demand data export:** Whether you're creating a new record or updating an existing one, data gets exported automatically to MySQL.
- **Data import capabilities:** Bring MySQL data back into Zoho Desk as you need.
- **Bulk operations and visual tracking:** Manage large volumes of data effortlessly and visualize the progress in real-time.

### Personal Reflection:

Remember the days we used to switch endlessly between applications to manage data? With solutions like MySQL AppiWorks, those days are long gone. Now, we can focus more on delivering personalized, timely, and highly responsive service to customers—boosting satisfaction and loyalty.

💡 What has been your experience integrating database management with your customer service platforms? Have tools like these changed the game for your business? 👇 Drop your thoughts and experiences below!

---

🔗 For those looking to enhance their Zoho Desk capabilities, check out the MySQL AppiWorks extension in the Zoho Marketplace. Maximize your customer service potential today!

#ZohoDesk #MySQL #Automation #NoCode #TechSolution #SaaS #BusinessIntelligence #DataSync

Explore expert advice and resources on our blog https://zurl.co/P4EZ.

Don’t miss out – create your free account today! https://zurl.co/qCHA

0 notes