#NLU models

Text

Learn how to create your own intelligent conversational agent from scratch! This comprehensive guide walks through the key steps of building a powerful AI chatbot using the latest natural language processing techniques. It covers designing conversation flows, training NLU models, integrating a dialogue manager, connecting to data sources, and deploying your bot. Whether you're a developer new to conversational AI or looking to level up your skills, this article has actionable tips, code snippets, and best practices for developing robust and useful chatbots. Check it out now at https://henceforthsolutions.com/ai-chatbot-development-step-by-step-guide/

#chatbot development#ai chatbot#ai chatbot development#NLU models#web application development#step to step guide#chatgpt like development

0 notes

Photo

Foundation Large Language Model Stack

0 notes

Text

The Stuff I Read in June/July 2023

Stuff I Extra Liked is Bold

I forgot to do it last month so you get a double feature

Books

Ninefox Gambit, Yoon Ha Lee

Heteropessimism (Essay Cluster)

The Biological Mind, Justin Garson (2015) Ch. 5-7

Sacred and Terrible Air, Robert Kurvitz

Wage Labour and Capital, Karl Marx

Short Fiction

Beware the Bite of the Were-Lesbian (zine), H. C. Guinevere

Childhood Homes (and why we hate them) by qrowscant (itch.io)

piele by slugzuki (itch.io)

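The Boy Who Drew Cats, Lafcadio Hearn (Persian translation)

The Children Who Did Not Freeze, Maxim Gorky (Persian translation)

A Boy in Pursuit of a Criminal, William Irish (Persian translation)

The Little Black Fish, Samed Behrengi (Turkish translation)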

Phil Mind

The Hornswoggle Problem, Patricia Churchland, Journal of Consciousness Studies 3.5-6 (1996): 402-408

What is it Like to be a Bat? Thomas Nagel, (https://doi.org/10.4159/harvard.9780674594623.c15)

Epiphenomenal Qualia, Frank Jackson, Consciousness and emotion in cognitive science. Routledge, 1998. 197-206

Why You Can’t Make a Computer that Feels Pain, Daniel Dennett, Synthese, vol. 38, no. 3, 1978, pp. 415–56

Where Am I? Daniel Dennett

Can Machines Think? Daniel Dennett

Divided Minds and the Nature of Persons, Derek Parfit (https://doi.org/10.1002/9781118922590.ch8)

The Extended Mind, Andy Clark & David Chalmers, Analysis 58, no. 1 (1998): 7–19

Uploading: A Philosophical Analysis, David Chalmers (https://doi.org/10.1002/9781118736302.ch6)

If You Upload, Will You Survive? Joseph Corabi & Susan Schneider (https://doi.org/10.1002/9781118736302.ch8)

If You Can’t Make One, You Don’t Know How It Works, Fred Dretske (https://doi.org/10.1111/j.1475-4975.1994.tb00299.x)

Computing Machinery and Intelligence, Alan Turing

Minds, Brains, and Programs, John Searle (https://doi.org/10.1017/S0140525X00005756)

What is it Like to Have a Gender Identity? Florence Ashley (https://doi.org/10.1093/mind/fzac071)

Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data, Emily M. Bender & Alexander Koller (https://doi.org/10.18653/v1/2020.acl-main.463)

On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? 🦜 Emily M. Bender et al. (https://doi.org/10.1145/3442188.3445922)

The Great White Robot God, David Golumbia

Superintelligence: The Idea that Eats Smart People, Maciej Ceglowski

Misc. Articles

Ebb and Flow of Azeri and Persian in Iran: A Longitudinal Study in the City of Zanjan, Hamed Zandi (https://doi.org/10.1515/9783110694277-007)

WTF is Happening? An Overview – Watching the World Go Bye, Eliot Jacobson

Using loophole, Seward County seizes millions from motorists without convicting them of crimes, Natalia Alamdari

Punks, Bulldaggers, and Welfare Queens, Cathy J. Cohen, Feminist Theory Reader. Routledge, 2020. 311-323

Is the Rectum a Grave? Leo Bersani (https://doi.org/10.2307/3397574)

Why Petroleum Did Not Save the Whales, Richard York (https://doi.org/10.1177/2378023117739217)

‘Spider-Verse’ Animation: Four Artists on Making the Sequel, Chris Lee

Carbon dioxide removal is not a current climate solution, David T. Ho (https://doi.org/10.1038/d41586-023-00953-x)

Fights, beatings and a birth: Videos smuggled out of L.A. jails reveal violence, neglect, Keri Blakinger

Capitalism’s Court Jester: Slavoj Žižek, Gabriel Rockhill

The Tyranny of Structurelessness, Jo Freeman

Domenico Losurdo interviewed about Friedrich Nietzsche

Keeping Some of the Lights On: Redefining Energy Security, Kris De Decker

Gays, Crossdressers, and Emos: Nonnormative Masculinities in Militarized Iraq, Achim Rohde

On the Concept of History, Walter Benjamin

Our Technology, Zeyad el Nabolsy

Towards a Historiography of Gundam’s One Year War, Ian Gregory

Imperialism and the Transformation of Values into Prices, Torkil Lauesen & Zak Cope

#reading prog#one day i will be able to read books well again#most of the things that aren't linked i can provide directly upon request#those dennett citations are hard to track down

19 notes


Text

SEMANTIC TREE AND AI TECHNOLOGIES

Semantic Tree learning and AI technologies can be combined to solve problems by leveraging the power of natural language processing and machine learning.

Semantic trees are a knowledge representation technique that organizes information in a hierarchical, tree-like structure.

Each node in the tree represents a concept or entity, and the connections between nodes represent the relationships between those concepts.

This structure allows for the representation of complex, interconnected knowledge in a way that can be easily navigated and reasoned about.
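As a rough illustration of the structure described above, here is a minimal sketch of a semantic tree in plain Python. The class names (`Node`, `SemanticTree`), the `is_a` relation label, and the animal taxonomy are all hypothetical choices made for this example, not part of any particular library.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(eq=False)
class Node:
    name: str
    relation: Optional[str] = None  # relation to the parent, e.g. "is_a"
    parent: Optional["Node"] = field(default=None, repr=False)
    children: List["Node"] = field(default_factory=list)


class SemanticTree:
    def __init__(self, root_name: str) -> None:
        self.root = Node(root_name)
        # Index nodes by name so lookups do not require a traversal
        self.index: Dict[str, Node] = {root_name: self.root}

    def add(self, parent: str, child: str, relation: str = "is_a") -> None:
        """Attach a new concept under an existing parent concept."""
        parent_node = self.index[parent]
        node = Node(child, relation, parent_node)
        parent_node.children.append(node)
        self.index[child] = node

    def path_to_root(self, name: str) -> List[str]:
        """Walk parent links upward, from the concept to the root."""
        node: Optional[Node] = self.index[name]
        path = []
        while node is not None:
            path.append(node.name)
            node = node.parent
        return path


tree = SemanticTree("animal")
tree.add("animal", "mammal")
tree.add("animal", "bird")
tree.add("mammal", "dog")
tree.add("mammal", "cat")

print(tree.path_to_root("dog"))  # ['dog', 'mammal', 'animal']
```

Walking the parent links is the simplest form of "navigating" the tree: it recovers the chain of broader concepts that a given concept belongs to.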

CONCEPTS

Semantic Tree: A structured representation where nodes correspond to concepts and edges denote relationships (e.g., hypernyms, hyponyms, synonyms).

Meaning: Understanding the context, nuances, and associations related to words or concepts.

Natural Language Understanding (NLU): AI techniques for comprehending and interpreting human language.

First Principles: Fundamental building blocks or core concepts in a domain.

AI (Artificial Intelligence): AI refers to the development of computer systems that can perform tasks that typically require human intelligence. AI technologies include machine learning, natural language processing, computer vision, and more. These technologies enable computers to understand, reason, learn, and make decisions.

Natural Language Processing (NLP): NLP is a branch of AI that focuses on the interaction between computers and human language. It involves the analysis and understanding of natural language text or speech by computers. NLP techniques are used to process, interpret, and generate human languages.

Machine Learning (ML): Machine Learning is a subset of AI that enables computers to learn and improve from experience without being explicitly programmed. ML algorithms can analyze data, identify patterns, and make predictions or decisions based on the learned patterns.

Deep Learning: A subset of machine learning that uses neural networks with multiple layers to learn complex patterns.

EXAMPLES OF APPLYING SEMANTIC TREE LEARNING WITH AI

1. Text Classification: Semantic Tree learning can be combined with AI to solve text classification problems. By training a machine learning model on labeled data, the model can learn to classify text into different categories or labels. For example, a customer support system can use semantic tree learning to automatically categorize customer queries into different topics, such as billing, technical issues, or product inquiries.

2. Sentiment Analysis: Semantic Tree learning can be used with AI to perform sentiment analysis on text data. Sentiment analysis aims to determine the sentiment or emotion expressed in a piece of text, such as positive, negative, or neutral. By analyzing the semantic structure of the text using Semantic Tree learning techniques, machine learning models can classify the sentiment of customer reviews, social media posts, or feedback.

3. Question Answering: Semantic Tree learning combined with AI can be used for question answering systems. By understanding the semantic structure of questions and the context of the information being asked, machine learning models can provide accurate and relevant answers. For example, a chatbot can use Semantic Tree learning to understand user queries and provide appropriate responses based on the analyzed semantic structure.

4. Information Extraction: Semantic Tree learning can be applied with AI to extract structured information from unstructured text data. By analyzing the semantic relationships between entities and concepts in the text, machine learning models can identify and extract specific information. For example, an AI system can extract key information like names, dates, locations, or events from news articles or research papers.

Python Code Snippets for Semantic Tree Learning with AI

Here are four small Python code snippets that demonstrate how to apply Semantic Tree learning with AI using popular libraries:

1. Text Classification with scikit-learn:

```python

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.linear_model import LogisticRegression

# Training data

texts = ['This is a positive review', 'This is a negative review', 'This is a neutral review']

labels = ['positive', 'negative', 'neutral']

# Vectorize the text data

vectorizer = TfidfVectorizer()

X = vectorizer.fit_transform(texts)

# Train a logistic regression classifier

classifier = LogisticRegression()

classifier.fit(X, labels)

# Predict the label for a new text

new_text = 'This is a positive sentiment'

new_text_vectorized = vectorizer.transform([new_text])

predicted_label = classifier.predict(new_text_vectorized)

print(predicted_label)

```

2. Sentiment Analysis with TextBlob:

```python

from textblob import TextBlob

# Analyze sentiment of a text

text = 'This is a positive sentence'

blob = TextBlob(text)

sentiment = blob.sentiment.polarity

# Classify sentiment based on polarity

if sentiment > 0:
    sentiment_label = 'positive'
elif sentiment < 0:
    sentiment_label = 'negative'
else:
    sentiment_label = 'neutral'

print(sentiment_label)

```

3. Question Answering with Transformers:

```python

from transformers import pipeline

# Load the question answering model

qa_model = pipeline('question-answering')

# Provide context and ask a question

context = 'The Semantic Web is an extension of the World Wide Web.'

question = 'What is the Semantic Web?'

# Get the answer

answer = qa_model(question=question, context=context)

print(answer['answer'])

```

4. Information Extraction with spaCy:

```python

import spacy

# Load the English language model

nlp = spacy.load('en_core_web_sm')

# Process text and extract named entities

text = 'Apple Inc. is planning to open a new store in New York City.'

doc = nlp(text)

# Extract named entities

entities = [(ent.text, ent.label_) for ent in doc.ents]

print(entities)

```

APPLICATIONS OF SEMANTIC TREE LEARNING WITH AI

Semantic Tree learning combined with AI can be used in various domains and industries to solve problems. Here are some examples of where it can be applied:

1. Customer Support: Semantic Tree learning can be used to automatically categorize and route customer queries to the appropriate support teams, improving response times and customer satisfaction.

2. Social Media Analysis: Semantic Tree learning with AI can be applied to analyze social media posts, comments, and reviews to understand public sentiment, identify trends, and monitor brand reputation.

3. Information Retrieval: Semantic Tree learning can enhance search engines by understanding the meaning and context of user queries, providing more accurate and relevant search results.

4. Content Recommendation: By analyzing the semantic structure of user preferences and content metadata, Semantic Tree learning with AI can be used to personalize content recommendations in platforms like streaming services, news aggregators, or e-commerce websites.

Semantic Tree learning combined with AI technologies enables the understanding and analysis of text data, leading to improved problem-solving capabilities in various domains.

COMBINING SEMANTIC TREE AND AI FOR PROBLEM SOLVING

1. Semantic Reasoning: By integrating semantic trees with AI, systems can engage in more sophisticated reasoning and decision-making. The semantic tree provides a structured representation of knowledge, while AI techniques like natural language processing and knowledge representation can be used to navigate and reason about the information in the tree.

2. Explainable AI: Semantic trees can make AI systems more interpretable and explainable. The hierarchical structure of the tree can be used to trace the reasoning process and understand how the system arrived at a particular conclusion, which is important for building trust in AI-powered applications.

3. Knowledge Extraction and Representation: AI techniques like machine learning can be used to automatically construct semantic trees from unstructured data, such as text or images. This allows for the efficient extraction and representation of knowledge, which can then be used to power various problem-solving applications.

4. Hybrid Approaches: Combining semantic trees and AI can lead to hybrid approaches that leverage the strengths of both. For example, a system could use a semantic tree to represent domain knowledge and then apply AI techniques like reinforcement learning to optimize decision-making within that knowledge structure.
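To make the explainability point above more concrete, here is a minimal sketch of a classifier that descends a topic tree and records the path it took. The topic tree, its keywords, and the function name `classify_with_trace` are hypothetical, and keyword matching is only a stand-in for a trained model.

```python
from typing import Dict, List, Tuple

# Hypothetical topic tree: node -> (keywords that select it, child nodes)
TOPIC_TREE: Dict[str, Tuple[List[str], List[str]]] = {
    "support": ([], ["billing", "technical"]),
    "billing": (["invoice", "charge", "refund"], ["refunds"]),
    "refunds": (["refund"], []),
    "technical": (["error", "crash", "login"], []),
}


def classify_with_trace(text: str, root: str = "support") -> List[str]:
    """Descend the tree, following the first child whose keywords match.

    The returned list is the path taken, which doubles as an explanation.
    """
    words = set(text.lower().split())
    trace = [root]
    current = root
    while True:
        _, children = TOPIC_TREE[current]
        match = next(
            (c for c in children if words & set(TOPIC_TREE[c][0])), None
        )
        if match is None:
            return trace
        trace.append(match)
        current = match


trace = classify_with_trace("I want a refund for this charge")
print(" -> ".join(trace))  # support -> billing -> refunds
```

Because every decision corresponds to one step down the tree, the trace can be shown to a user or logged for auditing.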

EXAMPLES OF APPLYING SEMANTIC TREE AND AI FOR PROBLEM SOLVING

1. Medical Diagnosis: A semantic tree could represent the relationships between symptoms, diseases, and treatments. AI techniques like natural language processing and machine learning could be used to analyze patient data, navigate the semantic tree, and provide personalized diagnosis and treatment recommendations.

2. Robotics and Autonomous Systems: Semantic trees could be used to represent the knowledge and decision-making processes of autonomous systems, such as self-driving cars or drones. AI techniques like computer vision and reinforcement learning could be used to navigate the semantic tree and make real-time decisions in dynamic environments.

3. Financial Analysis: Semantic trees could be used to model complex financial relationships and market dynamics. AI techniques like predictive analytics and natural language processing could be applied to the semantic tree to identify patterns, make forecasts, and support investment decisions.

4. Personalized Recommendation Systems: Semantic trees could be used to represent user preferences, interests, and behaviors. AI techniques like collaborative filtering and content-based recommendation could be used to navigate the semantic tree and provide personalized recommendations for products, content, or services.
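As a sketch of the medical diagnosis example above, the snippet below ranks candidate conditions by how much their known symptoms overlap with a patient's reported symptoms. The symptom lists are made up for illustration and are not medical guidance; Jaccard similarity is an assumed scoring choice, and a real system would rely on a curated knowledge base and a validated model.

```python
from typing import Dict, List, Set, Tuple

# Toy, made-up knowledge base (disease -> known symptoms)
DISEASE_SYMPTOMS: Dict[str, Set[str]] = {
    "common cold": {"cough", "sneezing", "sore throat"},
    "influenza": {"fever", "cough", "fatigue", "body aches"},
    "allergy": {"sneezing", "itchy eyes"},
}


def rank_diagnoses(symptoms: Set[str]) -> List[Tuple[str, float]]:
    """Rank diseases by Jaccard similarity with the reported symptoms."""
    scores = []
    for disease, known in DISEASE_SYMPTOMS.items():
        union = symptoms | known
        jaccard = len(symptoms & known) / len(union) if union else 0.0
        scores.append((disease, jaccard))
    return sorted(scores, key=lambda item: item[1], reverse=True)


ranking = rank_diagnoses({"fever", "cough", "fatigue"})
for disease, score in ranking:
    print(f"{disease}: {score:.2f}")
```

With the toy data above, influenza ranks first because three of its four listed symptoms are reported.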

PYTHON CODE SNIPPETS

1. Semantic Tree Construction using NetworkX:

```python

import networkx as nx

import matplotlib.pyplot as plt

# Create a semantic tree

G = nx.DiGraph()

G.add_node("root", label="Root")

G.add_node("concept1", label="Concept 1")

G.add_node("concept2", label="Concept 2")

G.add_node("concept3", label="Concept 3")

G.add_edge("root", "concept1")

G.add_edge("root", "concept2")

G.add_edge("concept2", "concept3")

# Visualize the semantic tree

pos = nx.spring_layout(G)

nx.draw(G, pos, with_labels=True)

plt.show()

```

2. Semantic Reasoning using PyKEEN:

```python

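import torch
from pykeen.pipeline import pipeline
from pykeen.triples import TriplesFactory

# Load a knowledge graph; from_path expects a tab-separated file of
# (head, relation, tail) triples, so point this at your own triples file
tf = TriplesFactory.from_path("./dataset/")
training, testing = tf.split([0.8, 0.2])

# Train a TransE model on the knowledge graph
result = pipeline(
    training=training,
    testing=testing,
    model="TransE",
    training_kwargs=dict(num_epochs=100),
)
model = result.model

# Score a candidate triple; labels must first be mapped to integer IDs
head, relation, tail = "concept1", "isRelatedTo", "concept3"
hrt = torch.as_tensor([[
    tf.entity_to_id[head],
    tf.relation_to_id[relation],
    tf.entity_to_id[tail],
]], device=model.device)
score = model.score_hrt(hrt).item()

print(f"The score for the triple ({head}, {relation}, {tail}) is: {score}")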

```

3. Knowledge Extraction using spaCy:

```python

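import spacy
from spacy import displacy

# Load the spaCy model
nlp = spacy.load("en_core_web_sm")

# Parse the text
text = "The quick brown fox jumps over the lazy dog."
doc = nlp(text)

# Extract (head, relation, dependent) triples from the dependency parse
relations = [(token.head.text, token.dep_, token.text) for token in doc]
print(relations)

# Visualize the extracted relations. This sentence contains no named
# entities, so the dependency view ("dep") is used instead of "ent".
# In a script, render() returns markup; in Jupyter it displays inline.
html = displacy.render(doc, style="dep")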

```

4. Hybrid Approach using Ray:

```python

# NOTE: skeleton only. These imports follow the legacy RLlib API (Ray 1.x);
# newer Ray releases moved PPO to ray.rllib.algorithms.ppo.
import ray
from ray.rllib.agents.ppo import PPOTrainer
from ray.rllib.env.multi_agent_env import MultiAgentEnv
from ray.rllib.models.tf.tf_modelv2 import TFModelV2


# Define a custom model that integrates a semantic tree
class SemanticTreeModel(TFModelV2):
    def __init__(self, obs_space, action_space, num_outputs, model_config, name):
        super().__init__(obs_space, action_space, num_outputs, model_config, name)
        # TODO: integrate the semantic tree with the neural network
        # (a working model must also implement forward() and value_function())


# Define a multi-agent environment that uses the semantic tree
class SemanticTreeEnv(MultiAgentEnv):
    def __init__(self, env_config=None):
        super().__init__()
        self.semantic_tree = None  # TODO: initialize the semantic tree
        self.agents = []  # TODO: define the agents

    def reset(self):
        raise NotImplementedError("Return the initial observation per agent")

    def step(self, actions):
        # TODO: implement the environment dynamics using the semantic tree
        raise NotImplementedError


# Train the hybrid model using Ray (runs only once the TODOs are filled in)
ray.init()
config = {
    "env": SemanticTreeEnv,
    "model": {
        "custom_model": SemanticTreeModel,
    },
}
trainer = PPOTrainer(config=config)
trainer.train()

```

APPLICATIONS

The combination of semantic trees and AI can be applied to a wide range of problem domains, including:

- Healthcare: Improving medical diagnosis, treatment planning, and drug discovery.

- Finance: Enhancing investment strategies, risk management, and fraud detection.

- Robotics and Autonomous Systems: Enabling more intelligent and adaptable decision-making in complex environments.

- Education: Personalizing learning experiences and providing intelligent tutoring systems.

- Smart Cities: Optimizing urban planning, transportation, and resource management.

- Environmental Conservation: Modeling and predicting environmental changes, and supporting sustainable decision-making.

- Chatbots and Virtual Assistants:

  - Use semantic trees to understand user queries and provide context-aware responses.

  - Apply NLU models to extract meaning from user input.

- Information Retrieval:

  - Build semantic search engines that understand user intent beyond keyword matching.

  - Combine semantic trees with vector embeddings (e.g., BERT) for better search results.

- Medical Diagnosis:

  - Create semantic trees for medical conditions, symptoms, and treatments.

  - Use AI to match patient symptoms to relevant diagnoses.

- Automated Content Generation:

  - Construct semantic trees for topics (e.g., climate change, finance).

  - Generate articles, summaries, or reports based on semantic understanding.
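The information retrieval idea above (combining a semantic tree with vector similarity) can be sketched as follows. This is a toy illustration under stated assumptions: the hyponym tree and documents are made up, and simple bag-of-words count vectors stand in for learned embeddings such as BERT, which would require an external model.

```python
import math
from collections import Counter
from typing import Dict, List

# Hypothetical hyponym tree: concept -> narrower concepts
HYPONYMS: Dict[str, List[str]] = {
    "vehicle": ["car", "bicycle"],
    "car": ["sedan"],
}


def expand(term: str) -> List[str]:
    """Return the term plus all of its descendants in the tree."""
    terms = [term]
    for child in HYPONYMS.get(term, []):
        terms.extend(expand(child))
    return terms


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def search(query: str, docs: List[str]) -> List[str]:
    """Expand the query through the tree, then rank documents by cosine."""
    query_terms: List[str] = []
    for token in query.lower().split():
        query_terms.extend(expand(token))
    q = Counter(query_terms)
    scored = [(cosine(q, Counter(d.lower().split())), d) for d in docs]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [d for score, d in ranked if score > 0]


docs = ["a red sedan for sale", "fresh bread recipe", "bicycle repair guide"]
results = search("vehicle", docs)
print(results)  # ['bicycle repair guide', 'a red sedan for sale']
```

None of the documents contains the word "vehicle", so plain keyword matching would return nothing; expanding the query through the tree is what surfaces the two relevant documents.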

RDIDINI PROMPT ENGINEER

#semantic tree#ai solutions#ai-driven#ai trends#ai system#ai model#ai prompt#ml#ai predictions#llm#dl#nlp

3 notes


Text

For you, I offer, a summary of a paper and some thoughts

Alright everyone. Here I am again, two years later, writing another linguistics post. Except, this one isn’t about a Garfield meme. It’s actually about a paper on language models that are used for AI chatbots, which I think is pretty interesting especially after doing some research and finding that ChatGPT just flat out makes things up sometimes. (That’s not today’s topic, maybe another day). So, quiet up and listen down (outdated, sorry) as I walk through the basic ideas in a paper by Emily Bender and Alexander Koller, called “Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data.”

Let me start out with a little bit of vocabulary:

Form, according to this paper, is any language that is observable. It might be marks that someone writes on a page, it might be words spoken or signed, it might be pixels. Form is the language that we can perceive.

Meaning is, according to this paper, the relationship between the form and something outside of language. So, if I say “door,” I’m referring to that thing, as in, the idea of a door (as opposed to just saying the word “door” with no representation for what it is).

Okay, vocabulary over. For now.

So, this paper starts out by explaining that language models, specifically large ones that are used in programs such as BERT or GPT, are making a lot of progress, and some people claim that they are doing things like “understanding” and “comprehending.” Basically, there are claims floating around that say AI language systems are learning language meaning, not just forms. Bender and Koller argue that this is not possible; that a system cannot learn meaning from form alone. That essentially means that language produced by something like ChatGPT is not, and cannot be, entirely like human language.

Bender and Koller explain that humans have what is called communicative intent (sorry more vocab), which is basically something outside of the actual language. So, for example, if I say, “I want to eat some ice cream,” I am referring to actual actions, and actual things, and a want that I have. Bender and Koller also talk about conventional or standing meanings, which is basically, the meaning of some language piece that is consistent. As humans, we choose an expression with a standing meaning, which we feel like fits our intent. Then, a human listener will hear (or see, in the case of sign language or a text) our expression, and use their own knowledge to put together our intent.

So, what does this have to do with AI? Well, AI doesn’t “learn” language like humans do. Humans acquire language by interacting with the physical world, and with speakers of language. Humans have to be aware of what another person is doing, and figure out what that person is trying to communicate in their language, in order to fully acquire and use the language themselves. Language models, on the other hand, are fed a very large amount of language, which allows them to learn how to say words and sentences in ways that we like, but they lack the representations that we have, and the interactions that we require in order to make meaning with form.

Bender and Koller argue that, no matter how much language (form) you feed a language model, if it lacks the representations that we have as humans, it won’t be able to create actual meaning, though we might attribute meaning to its utterances because they sound like us. They argue that we can still, of course, be excited about the development and progress of language models, but that we must come at it with a sense of humility and skepticism as to whether the progress is actually going in the direction that we expect or want it to go (in this case, being “intelligence” or “understanding” and creating meaning in a way that humans do with language).

Essentially, Bender and Koller say within this paper that language models don’t have real-world representations of the things they say, and that they do not acquire language in the way that humans do, and so therefore, can’t do all the things that we do with language, particularly making meaning.

So, this is something to keep in mind when we use AI language models such as ChatGPT. It can do a great job at making conversation and creating passages that sound fairly human, but that does not mean that it is making meaning with what it says. If I were to touch on my mention of ChatGPT “making things up,” I might say that it isn’t really making anything up, it’s just putting words together that sound nice and make sense, and therefore are convincing and make me want to trust it. This isn’t to say it does not get anything right, or that it is not useful. It means more that, as Bender and Koller say in their paper, we should use some precision when we talk about the abilities of language models (so, avoid saying it “understands” without at least defining what we mean by that), and we should form our points of view on it with a dose of skepticism along with the optimism we might already have.

2 notes


Text

Importance of Natural Language Processing in AI and Robotics

The world of technology is rapidly advancing, and at the heart of this evolution is the remarkable field of Artificial Intelligence (AI). Among the various branches of AI, Natural Language Processing (NLP) is emerging as one of the most significant. NLP is the technology that enables machines to understand, interpret, and respond to human language in a way that is both meaningful and useful. As we continue to push the boundaries of what AI and robotics can do, the role of NLP is becoming increasingly crucial.

What is Natural Language Processing?

Natural Language Processing, or NLP, is a subfield of AI that focuses on the interaction between computers and humans through natural language. In simpler terms, it is the technology that allows machines to understand and process human language, whether spoken or written.

NLP involves several key tasks, including:

Speech Recognition: Converting spoken language into text.

Natural Language Understanding (NLU): Comprehending the meaning of text or speech.

Natural Language Generation (NLG): Creating human-like text from data or concepts.

Machine Translation: Translating text from one language to another.

Sentiment Analysis: Determining the emotional tone of a piece of text.

These tasks enable AI systems to perform a wide range of functions, from voice-activated assistants like Siri and Alexa to sophisticated chatbots that provide customer support.

The Importance of NLP in AI

The ability to understand and communicate in human language is one of the most challenging and valuable aspects of AI. Language is inherently complex, filled with nuances, context, and subtleties that are difficult for machines to grasp. However, by mastering NLP, AI systems can bridge the gap between human communication and machine processing, leading to more intuitive and effective interactions.

NLP allows machines to interact with humans in a more natural and intuitive way. Instead of relying on rigid commands, users can communicate with machines using everyday language. This makes technology more accessible to a broader audience, including those who may not be tech-savvy.

NLP is crucial for enhancing various AI applications. For example, in customer service, NLP-powered chatbots can handle inquiries, resolve issues, and even engage in meaningful conversations with customers. In healthcare, NLP can assist in analyzing medical records, helping doctors make more informed decisions.

In the globalized world we live in, real-time communication across languages is essential. NLP-powered translation tools are breaking down language barriers, enabling people from different cultures and backgrounds to communicate effectively.

NLP is also playing a vital role in research and development across multiple fields. From analyzing vast amounts of data to automating literature reviews, NLP is accelerating the pace of discovery and innovation.

Challenges and Future Directions

While NLP has made significant strides, there are still challenges to overcome. Language is deeply tied to culture, context, and emotion, making it difficult for machines to fully grasp. Moreover, ethical considerations, such as ensuring privacy and avoiding bias in language models, are critical issues that need to be addressed.

The future of NLP in AI and robotics is promising, with advancements in deep learning and neural networks driving innovation. We can expect to see more sophisticated NLP systems that are capable of understanding and generating language with greater accuracy and nuance.

Conclusion

Natural Language Processing is not just a technological advancement; it's a revolution in how we interact with machines and how machines interact with us. As AI and robotics continue to evolve, the importance of NLP will only grow. At St. Mary's Group of Institutions, best engineering college in Hyderabad, we are committed to preparing our students to lead in this transformative field, equipping them with the skills and knowledge they need to shape the future of technology.

0 notes

Text

Charting the Future of Artificial Intelligence: Promising Developments and New Horizons

Artificial Intelligence (AI) is on the brink of extraordinary advancements, and understanding these promising developments can provide valuable insights into what lies ahead. As AI technology continues to evolve, several key areas are poised to drive innovation and redefine various aspects of our lives and industries. Here’s a detailed look at the future of AI, focusing on the most promising developments and emerging horizons.

Promising Developments in AI

Next-Generation Generative AI: Generative AI technologies, such as OpenAI’s GPT-4, are advancing rapidly, creating increasingly sophisticated text, images, and even video content. Future developments will likely enhance these models, making them more contextually aware and capable of producing highly accurate and creative outputs. This will have far-reaching implications for content creation, marketing, and interactive entertainment.

AI-Powered Drug Discovery: AI is revolutionizing the pharmaceutical industry by accelerating drug discovery and development. Advanced AI algorithms can analyze vast amounts of biological data to identify potential drug candidates and predict their efficacy. As these technologies mature, they promise to significantly reduce the time and cost involved in bringing new treatments to market.

Improved Natural Language Understanding (NLU): The future of AI will see significant strides in Natural Language Understanding, making interactions with AI systems more intuitive and human-like. Enhanced NLU will enable more sophisticated virtual assistants, customer service bots, and language translation tools, improving communication and accessibility across languages and contexts.

AI-Driven Cybersecurity: With the rise in cyber threats, AI is becoming an essential tool for enhancing cybersecurity. Future AI developments will focus on creating more advanced threat detection systems, capable of identifying and responding to malicious activities in real-time. This will help protect sensitive data and secure digital infrastructures more effectively.

Ethical and Explainable AI: As AI systems become more integrated into critical decision-making processes, the demand for ethical and explainable AI will grow. Future innovations will emphasize creating transparent AI models that can provide understandable explanations for their decisions, ensuring fairness and accountability in automated systems.

New Horizons in AI

AI in Autonomous Systems: The field of autonomous systems, including self-driving cars and drones, is poised for significant advancements. Innovations will focus on improving the safety, reliability, and versatility of these systems, leading to broader adoption in transportation, logistics, and everyday life.

AI for Climate Change Mitigation: AI has the potential to play a pivotal role in addressing climate change. Future applications will include AI-driven tools for monitoring environmental changes, optimizing energy usage, and developing sustainable solutions. These advancements will support global efforts to combat climate change and promote environmental sustainability.

Human-AI Collaboration: The future will see a greater emphasis on human-AI collaboration rather than replacement. AI will augment human abilities, enhancing productivity and creativity across various fields. This collaborative approach will enable more effective problem-solving and innovation, leveraging the strengths of both humans and machines.

AI in Personalized Education: AI is set to transform education through personalized learning experiences. Future developments will include adaptive learning platforms that tailor educational content to individual student needs, providing targeted support and enhancing learning outcomes. This will make education more accessible and effective for diverse learning styles.

AI for Smart Cities: The concept of smart cities, where AI technologies optimize urban living, is gaining traction. Innovations will include AI-driven traffic management systems, energy-efficient buildings, and intelligent public services. These advancements will improve the quality of urban life and make cities more sustainable and efficient.

Conclusion

Charting the future of Artificial Intelligence reveals a landscape rich with promising developments and new horizons. From the next generation of generative AI and advancements in drug discovery to AI-driven cybersecurity and climate change mitigation, the potential applications of AI are vast and transformative. As we explore these exciting possibilities, it’s crucial to address the associated ethical and governance challenges to ensure responsible and equitable use of AI technologies.

By staying informed about these developments and preparing for their impacts, we can better navigate the evolving AI landscape and harness its potential to drive innovation and improve our world. The future of AI holds immense promise, and understanding these trends will help us shape a more advanced and connected society.

0 notes

Text

ChatGPT-4 vs. Llama 3: A Head-to-Head Comparison

New Post has been published on https://thedigitalinsider.com/chatgpt-4-vs-llama-3-a-head-to-head-comparison/

As the adoption of artificial intelligence (AI) accelerates, large language models (LLMs) serve a significant need across different domains. LLMs excel in advanced natural language processing (NLP) tasks, automated content generation, intelligent search, information retrieval, language translation, and personalized customer interactions.

Two recent examples are OpenAI’s ChatGPT-4 and Meta’s Llama 3. Both models perform well on a range of NLP benchmarks.

A comparison between ChatGPT-4 and Meta Llama 3 reveals their unique strengths and weaknesses, leading to informed decision-making about their applications.

Understanding ChatGPT-4 and Llama 3

LLMs have advanced the field of AI by enabling machines to understand and generate human-like text. These AI models learn from huge datasets using deep learning techniques. For example, ChatGPT-4 can produce clear and contextual text, making it suitable for diverse applications.

Its capabilities extend beyond text generation as it can analyze complex data, answer questions, and even assist with coding tasks. This broad skill set makes it a valuable tool in fields like education, research, and customer support.

Meta AI’s Llama 3 is another leading LLM built to generate human-like text and understand complex linguistic patterns. It excels in handling multilingual tasks with impressive accuracy. Moreover, it’s efficient as it requires less computational power than some competitors.

Companies seeking cost-effective solutions can consider Llama 3 for diverse applications involving limited resources or multiple languages.

Overview of ChatGPT-4

ChatGPT-4 leverages a transformer-based architecture that can handle large-scale language tasks. The architecture allows it to process and understand complex relationships within the data.

As a result of being trained on massive text and code data, GPT-4 reportedly performs well on various AI benchmarks, including text evaluation, audio speech recognition (ASR), audio translation, and vision understanding tasks.

[Figures: GPT-4 benchmark results for text evaluation and vision understanding]

Overview of Meta AI Llama 3

Meta AI’s Llama 3 is a powerful LLM built on an optimized transformer architecture designed for efficiency and scalability. It is pretrained on a massive dataset of over 15 trillion tokens, which is seven times larger than its predecessor, Llama 2, and includes a significant amount of code.

Furthermore, Llama 3 demonstrates exceptional capabilities in contextual understanding, information summarization, and idea generation. Meta claims that its advanced architecture efficiently manages extensive computations and large volumes of data.

[Figures: Llama 3 instruct model performance, instruct human evaluation, and pre-trained model performance]

ChatGPT-4 vs. Llama 3

Let’s compare ChatGPT-4 and Llama 3 to better understand their advantages and limitations. The following table summarizes the performance and applications of these two models:

| Aspect | ChatGPT-4 | Llama 3 |
| --- | --- | --- |
| Cost | Free and paid options available | Free (open-source) |
| Features & Updates | Advanced NLU/NLG. Vision input. Persistent threads. Function calling. Tool integration. Regular OpenAI updates. | Excels in nuanced language tasks. Open updates. |
| Integration & Customization | API integration. Limited customization. Suits standard solutions. | Open-source. Highly customizable. Ideal for specialized uses. |
| Support & Maintenance | Provided by OpenAI through formal channels, including documentation, FAQs, and direct support for paid plans. | Community-driven support through GitHub and other open forums; less formal support structure. |
| Technical Complexity | Low to moderate, depending on whether it is used via the ChatGPT interface or via the Microsoft Azure Cloud. | Moderate to high, depending on whether a cloud platform is used or you self-host the model. |
| Transparency & Ethics | Model card and ethical guidelines provided. Black box model, subject to unannounced changes. | Open-source. Transparent training. Community license. Self-hosting allows version control. |
| Security | OpenAI/Microsoft managed security. Limited privacy via OpenAI. More control via Azure. Regional availability varies. | Cloud-managed if on Azure/AWS. Self-hosting requires its own security. |
| Application | Used for customized AI tasks | Ideal for complex tasks and high-quality content creation |

Ethical Considerations

Transparency in AI development is important for building trust and accountability. Both ChatGPT-4 and Llama 3 must address potential biases in their training data to ensure fair outcomes across diverse user groups.

Additionally, data privacy is a key concern that calls for stringent privacy regulations. To address these ethical concerns, developers and organizations should prioritize AI explainability techniques. These techniques include clearly documenting model training processes and implementing interpretability tools.

Furthermore, establishing robust ethical guidelines and conducting regular audits can help mitigate biases and ensure responsible AI development and deployment.

Future Developments

Undoubtedly, LLMs will advance in their architectural design and training methodologies. They will also expand dramatically across different industries, such as health, finance, and education. As a result, these models will evolve to offer increasingly accurate and personalized solutions.

Furthermore, the trend towards open-source models is expected to accelerate, leading to democratized AI access and innovation. As LLMs evolve, they will likely become more context-aware, multimodal, and energy-efficient.

To keep up with the latest insights and updates on LLM developments, visit unite.ai.

#ai#AI development#AI explainability#AI models#amp#applications#architecture#artificial#Artificial Intelligence#ASR#audio#AWS#azure#azure cloud#benchmarks#black box#box#Building#chatGPT#ChatGPT-4#chatgpt4#Cloud#cloud platform#code#coding#Community#Companies#comparison#complexity#content

1 note


Text

Chat GPT for Developers: Mastering Advanced Techniques to Enhance User Engagement and Interaction

https://chatgpt-developers.com/wp-content/uploads/2024/07/chat-gpt-developer-1.jpg

Understanding Chat GPT

Chat GPT (GPT is short for Generative Pre-trained Transformer) is an AI model developed by OpenAI that leverages deep learning techniques to generate text-based responses. Trained on vast amounts of text data, Chat GPT has learned to mimic human language patterns and respond contextually to user inputs. This makes it particularly effective in chatbot development, customer service automation, content generation, and more.

Mastering Advanced Techniques with Chat GPT for Developers

As developers start building with Chat GPT, mastering advanced techniques becomes crucial to getting the most out of it. Here are key strategies and practices that developers can employ:

1. Fine-tuning Models: While pre-trained models like Chat GPT come with a solid foundation, fine-tuning allows developers to tailor responses to specific contexts or domains. By training on domain-specific datasets, developers can improve accuracy and relevance, ensuring more meaningful interactions with users.

2. Handling Context: One of Chat GPT’s strengths lies in its ability to maintain context across conversations. Developers can implement techniques such as context windowing and history handling to enhance continuity and coherence in dialogues. This ensures smoother interactions and a more human-like experience for users.

3. Multi-turn Conversations: Enabling Chat GPT to handle multi-turn conversations involves managing dialogue state and tracking user intents over successive interactions. Techniques like state management systems and dialogue managers let developers create chatbots capable of sustaining meaningful exchanges over time.

4. Natural Language Understanding (NLU): Integrating robust NLU capabilities with Chat GPT enhances its comprehension of user inputs. Techniques such as entity recognition, sentiment analysis, and intent classification enable more accurate and context-aware responses, improving overall user satisfaction.

5. Scaling and Optimization: As applications grow in complexity and user base, scaling Chat GPT models becomes essential. Developers can leverage cloud computing platforms and distributed training techniques to handle larger workloads and ensure optimal performance across different devices and platforms.
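The context windowing mentioned under "Handling Context" can be sketched roughly as follows. This is a minimal illustration, not how any particular API does it: the message format, the `window_history` helper, and the word-count stand-in for a real tokenizer are all assumptions made for the example.

```python
from typing import Dict, List

# Assumed message shape: {"role": "system" | "user" | "assistant", "content": "..."}
Message = Dict[str, str]


def estimate_tokens(text: str) -> int:
    """Rough stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def window_history(history: List[Message], max_tokens: int) -> List[Message]:
    """Keep system messages plus the most recent turns that fit the budget."""
    system = [m for m in history if m["role"] == "system"]
    turns = [m for m in history if m["role"] != "system"]

    budget = max_tokens - sum(estimate_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    # Walk backwards so the newest turns are considered first.
    for message in reversed(turns):
        cost = estimate_tokens(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    # Restore chronological order before returning.
    return system + list(reversed(kept))


history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "My order number is 1234."},
    {"role": "assistant", "content": "Thanks, I found your order."},
    {"role": "user", "content": "When will it arrive?"},
]
trimmed = window_history(history, max_tokens=14)
```

With this small budget the oldest user turn is dropped while the system message and the two newest turns survive. A production system would more likely summarize dropped turns than discard them outright.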

Enhancing User Engagement and Interaction

The primary goal of using Chat GPT in application development is to enhance user engagement and interaction. By implementing advanced techniques effectively, developers can achieve the following benefits:

Improved Responsiveness: Chat GPT enables real-time responses to user queries, reducing wait times and enhancing user satisfaction.

Personalized Experiences: Through fine-tuning and context management, developers can tailor interactions based on user preferences and historical data, delivering personalized experiences.

Scalability and Efficiency: Optimized Chat GPT models ensure that applications remain responsive and efficient, even as user traffic increases.

Enhanced User Retention: By creating engaging and seamless interactions, applications powered by Chat GPT can increase user retention and loyalty over time.

Conclusion

In conclusion, Chat GPT stands as a powerful enabler for crafting intelligent and engaging applications. By mastering advanced techniques like model fine-tuning, context management, and seamless NLU integration, developers can make fuller use of Chat GPT’s capabilities to deliver better user experiences. Looking forward, Chat GPT is likely to play a significant role in shaping digital communication. Some programming work may be automated or augmented by advanced AI systems such as those from OpenAI. This evolution promises to change software development by enhancing efficiency, scalability, and the potential for creative exploration in the field. By embracing its potential and pushing the boundaries of what’s achievable, developers can pave the way for tomorrow’s applications with more intuitive, responsive, and human-like interactions.

0 notes

Text

Challenges in Implementing Semantic Search

Semantic search can outperform typical keyword-based search algorithms by recognizing the intent and context of user searches. However, its implementation presents various hurdles that developers and organizations must overcome to fully realize its potential. In this post, we look at these difficulties in depth.
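To make the contrast with keyword search concrete, here is a small sketch that ranks documents by cosine similarity between vectors. The three-dimensional "embeddings" and the query vector are hand-written, hypothetical values chosen for illustration; a real system would obtain them from a trained embedding model.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical, hand-written 3-d vectors standing in for real embeddings.
DOC_VECTORS: Dict[str, List[float]] = {
    "How to reset your password": [0.9, 0.1, 0.0],
    "Store opening hours": [0.0, 0.2, 0.9],
    "Recovering a locked account": [0.8, 0.3, 0.1],
}


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def semantic_search(query_vector: List[float], k: int = 2) -> List[Tuple[float, str]]:
    """Return the k documents whose vectors are closest to the query vector."""
    scored = [(cosine(query_vector, vec), title) for title, vec in DOC_VECTORS.items()]
    scored.sort(reverse=True)
    return scored[:k]


def keyword_search(query: str) -> List[str]:
    """Return documents sharing at least one exact word with the query."""
    terms = set(query.lower().split())
    return [title for title in DOC_VECTORS if terms & set(title.lower().split())]


query = "forgot login credentials"
query_vector = [0.85, 0.2, 0.05]  # assumed embedding of the query above

keyword_hits = keyword_search(query)
semantic_hits = [title for _, title in semantic_search(query_vector)]
```

Here the keyword search finds nothing because no word overlaps, while the vector ranking surfaces the two account-related documents. The quality of that ranking depends entirely on the embedding model, which is where the challenges below come in.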

1. Natural Language Understanding (NLU)

Semantic search engines rely on strong NLU capabilities to understand the intricacies of human language. Creating robust NLU models that can interpret ambiguous queries and pick out contextually relevant information remains an open problem. It requires combining approaches from natural language processing (NLP), machine learning, and deep learning.

2. Semantic Ambiguity

Dealing with semantic ambiguity is one of semantic search's most fundamental issues. Words and phrases may have many interpretations based on context, cultural background, or domain-specific expertise. Resolving these ambiguities correctly is critical for providing appropriate search results.

3. Data Quality and Integration

Semantic search engines require massive amounts of high-quality data to train their models properly. Integrating multiple datasets from various sources while maintaining data consistency, quality, and relevance is not an easy undertaking. Furthermore, ensuring data integrity over time presents continual issues.

4. Scalability

Scaling semantic search systems to accommodate high numbers of concurrent inquiries efficiently is another key difficulty. As the user base increases or more data is added, keeping constant performance while preserving speed and accuracy necessitates careful system design and optimization.

5. Computational Resources

The computational resources required to support semantic search engines can be significant, particularly when processing sophisticated queries or conducting large-scale data analysis. Balancing the requirement for processing capacity with cost-effectiveness is an ongoing challenge for enterprises.

6. User Expectations and Experience

Users want semantic search engines to give results that are intuitive, accurate, and rapid, similar to human understanding. Meeting these expectations necessitates ongoing refining of algorithms, user interfaces, and feedback mechanisms to improve overall user experience.

7. Evaluation and Benchmarking

Measuring the usefulness and efficiency of semantic search engines is difficult due to the subjective nature of relevance evaluations and the lack of standardized evaluation metrics. Creating strong assessment procedures and benchmarks is critical for comparing various approaches and evaluating progress over time.
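As one possible starting point for such benchmarks, the sketch below computes two widely used ranking metrics, precision at k and reciprocal rank, for a single query. The document IDs and relevance judgments are invented for the example, and these metrics still depend on the subjective relevance labels discussed above.

```python
from typing import List, Set


def precision_at_k(ranked: List[str], relevant: Set[str], k: int) -> float:
    """Fraction of the top-k results that are judged relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    top_k = ranked[:k]
    return sum(1 for doc_id in top_k if doc_id in relevant) / k


def reciprocal_rank(ranked: List[str], relevant: Set[str]) -> float:
    """1 / rank of the first relevant result, or 0.0 if none is returned."""
    for position, doc_id in enumerate(ranked, start=1):
        if doc_id in relevant:
            return 1.0 / position
    return 0.0


# Hypothetical system output and human relevance judgments for one query.
ranked_results = ["d3", "d1", "d7", "d2"]
relevant_docs = {"d1", "d2"}

p_at_2 = precision_at_k(ranked_results, relevant_docs, k=2)
rr = reciprocal_rank(ranked_results, relevant_docs)
```

Averaging the reciprocal rank over many queries gives the mean reciprocal rank (MRR), which makes it easier to compare two ranking approaches on the same query set.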

8. Privacy and Ethical Considerations

With access to massive amounts of user data, semantic search raises questions about privacy, data security, and ethical use. It is critical to ensure regulatory compliance and to implement transparent data collection, storage, and usage procedures.

Conclusion

Implementing semantic search involves navigating through various technical, operational, and ethical challenges. Addressing these challenges requires interdisciplinary collaboration, innovative solutions, and a commitment to improving the accuracy, efficiency, and fairness of semantic search systems. By overcoming these hurdles, organizations can unlock the full potential of semantic search to deliver more personalized and contextually relevant search experiences for users.

1 note


Text

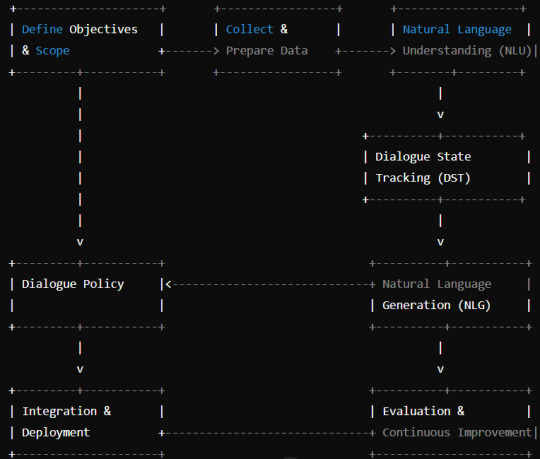

A Dialogue Management System (DMS) for Detecting Diseases Using Symptoms

1. Define Objectives and Scope

Identify Use Cases: Focus on disease detection based on user-reported symptoms.

Medical Domain Requirements: Ensure compliance with medical standards and regulations (e.g., HIPAA).

Target Diseases: Specify the range of diseases the system will handle.

2. Data Collection and Preparation

Medical Data Sources: Collect data from medical records, symptom checkers, and health-related datasets.

Data Annotation: Annotate data with medical entities such as symptoms, diseases, and relevant medical history.

3. Natural Language Understanding (NLU)

Intent Recognition: Train models to recognize intents related to symptom reporting, seeking diagnosis, and requesting advice.

Entity Extraction: Extract medical entities like symptoms, durations, severities, and medical history.

Preprocessing: Implement preprocessing steps tailored to medical terminology.
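The NLU step could be prototyped with simple rules before any model is trained. The sketch below is an assumption-laden illustration: the symptom lexicon, the intent cue phrases, and the `parse_utterance` helper are all hypothetical, and a real system would use trained models (for example the libraries listed under "Example Tools and Frameworks").

```python
import re
from typing import Dict, Optional, Tuple

# Hypothetical lexicon and cue phrases, for illustration only.
SYMPTOM_LEXICON = {"fever", "cough", "headache", "nausea", "fatigue"}
INTENT_CUES = {
    "request_advice": ("what should i do", "should i see", "advice"),
    "seek_diagnosis": ("what do i have", "diagnos", "what is wrong"),
}
DURATION_PATTERN = re.compile(r"\bfor (\d+) (day|week|month)s?\b")


def parse_utterance(text: str) -> Dict[str, object]:
    """Return the intent, symptom entities, and duration found in one utterance."""
    lowered = text.lower()

    # Intent recognition: first matching cue wins; default is symptom reporting.
    intent = "report_symptom"
    for name, cues in INTENT_CUES.items():
        if any(cue in lowered for cue in cues):
            intent = name
            break

    # Entity extraction: match words against the symptom lexicon.
    words = re.findall(r"[a-z]+", lowered)
    symptoms = [word for word in words if word in SYMPTOM_LEXICON]

    # Duration extraction: e.g. "for 3 days" becomes (3, "day").
    match = DURATION_PATTERN.search(lowered)
    duration: Optional[Tuple[int, str]] = None
    if match:
        duration = (int(match.group(1)), match.group(2))

    return {"intent": intent, "symptoms": symptoms, "duration": duration}


report = parse_utterance("I have had a fever and cough for 3 days")
question = parse_utterance("I have a headache, what should I do?")
```

A rule-based baseline like this is mainly useful for bootstrapping annotated data and for sanity-checking a trained model; it will miss paraphrases and misspellings.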

4. Dialogue State Tracking (DST)

State Representation: Define a state representation that includes reported symptoms, patient history, and current context.

State Update Mechanism: Update the state based on new inputs, maintaining a coherent history of the dialogue.
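One way to picture the state representation and update mechanism is a small data class that accumulates information turn by turn. The field names and the `update_state` function below are assumptions chosen for the sketch, not a prescribed schema.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DialogueState:
    """Hypothetical state: what has been reported so far plus dialogue context."""
    symptoms: List[str] = field(default_factory=list)
    patient_history: List[str] = field(default_factory=list)
    utterances: List[str] = field(default_factory=list)
    last_intent: Optional[str] = None
    turn: int = 0


def update_state(
    state: DialogueState, utterance: str, intent: str, symptoms: List[str]
) -> DialogueState:
    """Merge one turn's NLU output into the running state."""
    for symptom in symptoms:
        # Avoid duplicates when the user repeats a symptom.
        if symptom not in state.symptoms:
            state.symptoms.append(symptom)
    state.utterances.append(utterance)
    state.last_intent = intent
    state.turn += 1
    return state


state = DialogueState()
update_state(state, "I have a fever", "report_symptom", ["fever"])
update_state(state, "Also a cough and still the fever", "report_symptom", ["cough", "fever"])
```

Keeping the raw utterances alongside the extracted entities preserves a coherent history, which helps when the policy or a human reviewer needs to see how the state was reached.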

5. Dialogue Policy

Policy Learning: Develop a policy to guide the conversation towards gathering relevant medical information and providing potential diagnoses.

Medical Knowledge Integration: Incorporate medical guidelines and knowledge bases to inform the policy.

Action Selection: Implement actions to ask follow-up questions, provide preliminary diagnoses, and suggest next steps.
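A hand-written policy is a common first step before any policy learning. The following sketch picks between asking a follow-up question, offering a preliminary suggestion, and referring the user onward. The knowledge base uses placeholder condition names and made-up symptom sets, and the threshold `MIN_SYMPTOMS` is an arbitrary assumption.

```python
from typing import Dict, Set, Tuple

# Toy, made-up knowledge base with placeholder condition names.
# A real system would draw on vetted medical guidelines instead.
KNOWLEDGE_BASE: Dict[str, Set[str]] = {
    "condition_a": {"fever", "cough", "fatigue"},
    "condition_b": {"headache", "nausea"},
}
MIN_SYMPTOMS = 2  # assumed minimum evidence before suggesting anything


def select_action(reported: Set[str], already_asked: Set[str]) -> Tuple[str, str]:
    """Return (action, argument) for the next system turn."""
    if len(reported) < MIN_SYMPTOMS:
        # Gather more information: ask about a known symptom not yet covered.
        candidates = sorted(
            {
                symptom
                for symptoms in KNOWLEDGE_BASE.values()
                for symptom in symptoms
                if symptom not in reported and symptom not in already_asked
            }
        )
        if candidates:
            return ("ask_followup", candidates[0])

    # Enough evidence (or nothing left to ask): pick the best-overlapping condition.
    best = max(KNOWLEDGE_BASE, key=lambda name: len(KNOWLEDGE_BASE[name] & reported))
    if KNOWLEDGE_BASE[best] & reported:
        return ("suggest_preliminary", best)
    return ("refer_to_clinician", "")


first = select_action({"fever"}, set())
second = select_action({"fever", "cough"}, set())
third = select_action({"rash", "itching"}, set())
```

The explicit referral branch matters in this domain: when nothing in the knowledge base matches, the policy hands off instead of guessing.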

6. Natural Language Generation (NLG)

Template-Based NLG: Create templates for generating medically appropriate and empathetic responses.

Dynamic NLG: Use advanced models to generate responses tailored to the specific medical context.
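Template-based NLG can be as simple as filling slots in pre-written sentences. This sketch uses Python's standard `string.Template`; the action names and the wording of each template are hypothetical examples, and real templates would be reviewed by medical professionals.

```python
from string import Template
from typing import Dict

# Hypothetical templates keyed by dialogue action.
TEMPLATES: Dict[str, Template] = {
    "ask_followup": Template(
        "Thanks for sharing that. Have you also noticed any $symptom?"
    ),
    "suggest_preliminary": Template(
        "Based on what you have described, $condition is one possibility. "
        "A clinician can confirm this."
    ),
    "refer_to_clinician": Template(
        "I am not able to narrow this down. Please consider speaking with a clinician."
    ),
}


def render(action: str, **slots: str) -> str:
    """Fill the template for an action; raises KeyError on unknown actions or missing slots."""
    return TEMPLATES[action].substitute(**slots)


followup = render("ask_followup", symptom="cough")
referral = render("refer_to_clinician")
```

Templates trade variety for control, which is often a reasonable trade in a medical setting; a generative model could later rephrase within the same constraints.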

7. Integration and Deployment

Backend Integration: Integrate with medical databases, electronic health records (EHR), and external APIs for additional information.

User Interface: Develop interfaces like web-based chatbots, mobile apps, or voice assistants for user interaction.

Scalability and Performance: Ensure the system can handle high volumes of queries and provide quick responses.

8. Evaluation and Testing

User Testing: Conduct thorough testing with healthcare professionals and patients to gather feedback and validate accuracy.

Automated Testing: Implement tests to ensure the system’s reliability and safety.

Evaluation Metrics: Track metrics such as diagnostic accuracy, user satisfaction, and system response time.

9. Continuous Improvement

Monitoring and Logging: Continuously monitor the system’s performance and log interactions for analysis.

Iterative Refinement: Regularly update the system based on feedback and new medical knowledge.

A/B Testing: Conduct A/B testing to evaluate the impact of changes and improve the system iteratively.

Example Tools and Frameworks

NLU: spaCy, NLTK, Rasa NLU, BioBERT

DST: Rasa, Tracker (from Microsoft Bot Framework)

Dialogue Policy: Rasa, Reinforcement Learning libraries, medical guidelines integration

NLG: Templating libraries, GPT-3, Transformer-based models

Integration: Flask, Django, Node.js, FHIR (Fast Healthcare Interoperability Resources)

User Interface: Botpress, Microsoft Bot Framework, Google Dialogflow

Pipeline Diagram

This specialized pipeline for a DMS in disease detection ensures a robust and medically sound approach to developing a tool that can assist in preliminary diagnosis based on symptoms.

0 notes

Text

Eliminating AI Hallucination with Grounding AI and Contextual Awareness - Bionic

Artificial intelligence (AI) has become almost ubiquitous in society and the economy. It is used in everything from chatbots and virtual assistants to self-driving cars. On the upside, generative AI models have achieved remarkable performance; on the downside, they are still prone to AI hallucinations.

AI hallucination occurs when AI gives outputs that are meaningless, non-relevant, or factually inaccurate. AI hallucination leads to erosion of people’s confidence in using AI solutions. This may limit the technologies’ adoption across industries.

A two-pronged approach of grounding AI and contextual awareness has come up as the solution to this problem. Grounding AI is the process of rooting real-world data and knowledge into AI models. It helps minimize instances of AI models coming up with weird suggestions or fabricated facts.

Contextual awareness allows an AI model to interpret situations appropriately and adapt to the nuances of those situations.

When integrated, these strategies can set the foundation for more dependable, precise, and situational AI applications. In this blog, we will understand more about Grounding AI and Contextual awareness.

We will also understand how you can minimize AI hallucination with the integration of both strategies, helping your business make better decisions.

What is AI Hallucination?

AI hallucination is where an AI model creates outputs that are erroneous, absurd, or unrelated to the user input. This occurs because AI models are trained on large sets of data, and there are instances when the models will make certain correlations or assumptions that are untrue in real-world scenarios.

Understanding Grounding in AI and Contextual Awareness

Grounding and contextual awareness are two of the main approaches to reducing hallucinations in AI. Let’s look at what makes them well suited to building AI that hallucinates less.

AI Grounding

We can define Grounding AI as a process of connecting an AI model to actual, real-world data of a particular context. This is achieved through techniques like:

Knowledge Base Integration: Improving the AI models by syncing with external knowledge databases like encyclopedias, databases, or resources related to the specific domain.

Data Augmentation: Adding real-world, scenario-based information to the existing training data to enrich the model’s grasp of facts and relationships.

Fact Verification: Incorporating feedback methods to assess the suitability and credibility of the response provided by the AI interface.

Reinforcement Learning with Human Feedback: Training the AI model through reinforcement learning on human feedback. This improves the AI’s capacity to distinguish between correct and incorrect responses.
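Knowledge base integration and fact verification can be illustrated with a very small sketch in which the system answers only from stored facts and otherwise declines. The knowledge base contents, the matching rule, and the function names are hypothetical; a real deployment would typically use a search index or retrieval model.

```python
from typing import Dict, Optional

# Hypothetical in-memory knowledge base of verified facts.
KNOWLEDGE_BASE: Dict[str, str] = {
    "return window": "Items can be returned within 30 days of delivery.",
    "shipping time": "Standard shipping takes 3 to 5 business days.",
}

FALLBACK = "I don't have verified information on that."


def retrieve(question: str) -> Optional[str]:
    """Return a stored fact whose topic words all appear in the question."""
    lowered = question.lower()
    for topic, fact in KNOWLEDGE_BASE.items():
        if all(word in lowered for word in topic.split()):
            return fact
    return None


def grounded_answer(question: str) -> str:
    """Answer only from the knowledge base; decline instead of guessing."""
    fact = retrieve(question)
    return fact if fact is not None else FALLBACK


supported = grounded_answer("What is the return window?")
unsupported = grounded_answer("Do you offer price matching?")
```

The key design choice is the fallback: when no supporting fact is found, the system says so, which is the behavior grounding is meant to encourage.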

Grounding AI is an essential component of Bionic AI to minimize hallucination. Request a Demo Now!

Contextual Awareness in AI

The idea of contextual awareness means equipping the AI model with the ability to recognize the context of a given environment. This includes:

Natural Language Understanding (NLU): To allow AI to understand the broader semantic context in which the natural language is used. This can include ambiguity, sarcasm, and cultural references.

User Modeling: Developing a user profile based on their activities and interests to ensure tailored answers and interactions.

Environmental Awareness: Knowing the physical or virtual conditions within which the AI exists or will be functioning (for instance, location, time, and other agents).

Multimodal Input: Analyzing text, images, and other data to obtain a better understanding of the context in which the information was produced.

How Do Contextual Awareness and Grounding AI Complement Each Other?

Contextual awareness and grounding in large language models are complementary strategies that work together to improve the credibility and precision of AI output. Grounding keeps the model’s outputs factual and accurate.

Contextual awareness ensures that the knowledge the model applies fits the situation at hand.

This combination ensures that when the AI comes up with a response, it is not only providing accurate information but also information relevant to the context. This decreases the possibility of developing AI hallucinations.

Why Are Contextual Awareness and AI Grounding Important for Your Business?

The integration of contextual awareness and grounding AI is not only a technical process; it is a strategic one. It can make a significant difference in the competitive strategy of your business as well as in customer communication. Here’s how:

Elevated Customer Experiences:

Hyper-Personalized Interactions: Based on customer behavior, context-aware AI can work with terabytes of data such as past purchase histories, previous clicks, age, gender, and even mood. Grounded AI gives reliable output providing specifics in terms of recommendations, products that can be sold to consumers, and marketing strategies to be adopted.

Intelligent Customer Support: Human-like chatbots and assistants based on grounded and contextually aware AI can interpret customers’ questions in a more nuanced manner. Grounding in AI can offer precise answers, filter out unnecessary questions, and hand off difficult tasks to live agents without interruption. This leads to shorter times to resolve issues and thus higher satisfaction among the customers.

Enhanced Decision-Making and Business Insights:

Data-Driven Decisions: Grounded AI models can process large volumes of data from different sources. With reliable, real-world outputs, businesses can uncover previously unknown relationships, trends, or patterns that inform strategic management. This includes resource planning, stock control, and market predictions.

Risk Mitigation: In industries like finance and healthcare, relying on an ungrounded AI model can have damaging consequences. When built from factual data, AI models can serve as a basis for predicting certain risks and deviations. This can help you avoid costly mistakes and non-compliance with the legal framework.

Operational Efficiency and Cost Savings:

Automation of Repetitive Tasks: Contextually aware AI can automate administrative and time-consuming tasks across different departments. As a result, employees’ time is freed for more complex responsibilities. This not only increases efficiency but also decreases the cost of operation.

Streamlined Processes: By incorporating grounded AI into the work process, AI can map the workflow, analyze the processes, and suggest changes or adjustments to minimize delay. This results in improved efficiency and minimized wastage.

Building Trust and Brand Reputation:

Reliable and Accurate Information: Customers and stakeholders of your business interact with your AI systems, and they expect truth, facts, and data from them. Grounding in AI reduces the likelihood of giving out wrong information and hallucinations which will help establish trust in your brand.

Transparent Communication: Contextually aware AI is capable of explaining its thought process, as well as the sources used for information, which can enhance accountability. This also sets a strong signal to the users to trust you and your brand and ensures that ethical AI practices are being followed.

Competitive Advantage: Companies that adopt grounded and contextually aware AI stand out as industry pioneers. This creates a competitive advantage by being able to attract talented employees and customers and increases long-term cooperation.

Techniques for Grounding AI and Implementing Contextual Awareness

Building grounded and contextually aware AI systems requires a multi-faceted approach that encompasses data, technology, and human expertise.

Invest in High-Quality Training Data: The greatest asset or strength of any AI model is the data set it is trained on. Be sure that your datasets have high volume, but also massive variability, including different scenarios, languages, and cultural settings. It is better to use both structured data like databases, and unstructured data like text on the web for a deeper understanding of the world.

Leverage External Knowledge Bases: By linking it to reliable knowledge sources such as Wikipedia, specialized databases, or resources for a particular industry, you provide your AI system with access to many facts. This makes it easier to give responses that are grounded in real facts and can also prevent AI hallucinations.

Incorporate Fact-Checking Mechanisms: Appropriate fact-checking mechanisms should also be integrated to help the AI system work in real-time to minimize AI hallucinations. These mechanisms can compare the data with some external sources or internal databases to identify possible errors or undetected contradictions.

Employ a Human-in-the-Loop Approach: User modeling is useful, but incorporating humans into a loop feedback mechanism is much more effective. This can be achieved using approaches like Active Learning where AI outputs are checked and corrected by humans. This also includes involving human oversight that helps minimize AI hallucination. This enhances the capabilities of the AI in reasoning through contexts. It also makes it possible to have some form of check and balance in the system to prevent AI hallucinations.

Utilize Multimodal Input: The inclusion of context in the form of images and audio together with the textual data would greatly enhance the performance of an AI model. For example, a virtual assistant that can process images can better understand a user’s request for information about a specific object or scene.

(Image Courtesy: https://cloud.google.com/vertex-ai/generative-ai/docs/grounding/overview)
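The human-in-the-loop approach described above can be sketched as a routing step that sends low-confidence outputs to a reviewer. The `ModelOutput` type, the confidence score, and the 0.8 threshold are assumptions for illustration; how confidence is obtained (from the model, a verifier, or a fact-checking step) is left open.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ModelOutput:
    text: str
    confidence: float  # assumed score in [0, 1] from the model or a verifier


REVIEW_THRESHOLD = 0.8  # hypothetical cut-off; tune against reviewed samples


def route(
    outputs: List[ModelOutput], threshold: float = REVIEW_THRESHOLD
) -> Tuple[List[ModelOutput], List[ModelOutput]]:
    """Split outputs into those sent automatically and those queued for human review."""
    auto_send: List[ModelOutput] = []
    needs_review: List[ModelOutput] = []
    for output in outputs:
        if output.confidence >= threshold:
            auto_send.append(output)
        else:
            needs_review.append(output)
    return auto_send, needs_review


batch = [
    ModelOutput("Your order ships in 3 to 5 business days.", 0.93),
    ModelOutput("The warranty covers accidental damage.", 0.41),
]
auto_send, needs_review = route(batch)
```

Corrections made by reviewers on the queued items can then be fed back as training or evaluation data, which is the active-learning loop the text refers to.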

Addressing Challenges of Grounding AI and Contextual Awareness through Bionic AI

The inability of traditional AI models to account for real-world subtlety and context has been an issue for quite some time. Bionic AI, combining AI capability with human oversight, revolutionizes the use of AI.

Bionic AI combines AI reinforcement learning with bias reduction to support strong performance even in multi-tier cognitive tasks. It is updated with real-world data, and incorporating human intervention helps avoid overfitting, a common problem in many AI models.

Bionic AI solves the problem of contextual misinterpretation by incorporating essential human control and using sound methods of grounding. By involving the human in the loop, the AI system gets the right and well-adapted information which can change the business process outsourcing industry by aligning AI with real world applications.

Bionic AI is also robust because it can learn from changing human feedback, which is intended to keep AI hallucinations to a minimum. This combination of AI with human supervision reduces the negative effects inherent in traditional AI solutions. Bionic AI helps improve customer satisfaction.

The solutions that Bionic AI offers are accurate, relevant, as well as aware of the contextual environment in which it is being utilized. Bionic AI has the potential to revamp task outsourcing in different industries.

Conclusion

AI hallucinations remain a major problem that hinders the integration of AI solutions in various industries. Making AI more realistic and incorporating context into its operation can effectively address this problem.

Such methods can be useful to improve the reliability and context relevance of AI systems. It includes optimized knowledge base integration, fact-checking mechanisms, or incorporation of human input. This not only benefits the customers but also benefits organizations through making better decisions, reducing operational costs, and increasing confidence in AI technology.

Bionic AI integrates the capability of AI and human supervision. With consolidated integration of AI grounding and contextual awareness, Bionic AI provides accurate AI outputs, thus enhancing your customer’s experience.

Ready to revolutionize your business with AI that’s both intelligent and reliable? Explore how Bionic can transform your operations by combining AI with human expertise. Take the first step towards a more efficient, trustworthy, and ‘humanly’ AI. Request a demo now!

1 note


Photo

Foundation Conversational AI Technologies Landscape

0 notes

Text

There are two different perspectives from which one can look at the progress of a field. Under a bottom-up perspective, the efforts of a scientific community are driven by identifying specific research challenges. A scientific result counts as a success if it solves such a specific challenge, at least partially. As long as such successes are frequent and satisfying, there is a general atmosphere of sustained progress. By contrast, under a top-down perspective, the focus is on the remote end goal of offering a complete, unified theory for the entire field. This view invites anxiety about the fact that we have not yet fully explained all phenomena and raises the question of whether all of our bottom-up progress leads us in the right direction.

There is no doubt that NLP [natural language processing] is currently in the process of rapid hill-climbing. Every year, states of the art across many NLP tasks are being improved significantly—often through the use of better pretrained LMs [language models]—and tasks that seemed impossible not long ago are already old news. Thus, everything is going great when we take the bottom-up view. But from a top-down perspective, the question is whether the hill we are climbing so rapidly is the right hill. How do we know that incremental progress on today’s tasks will take us to our end goal, whether that is “General Linguistic Intelligence” (Yogatama et al., 2019) or a system that passes the Turing test or a system that captures the meaning of English, Arapaho, Thai, or Hausa to a linguist’s satisfaction?

It is instructive to look at the past to appreciate this question. Computational linguistics has gone through many fashion cycles over the course of its history. Grammar- and knowledge-based methods gave way to statistical methods, and today most research incorporates neural methods. Researchers of each generation felt like they were solving relevant problems and making constant progress, from a bottom-up perspective. However, eventually serious shortcomings of each paradigm emerged, which could not be tackled satisfactorily with the methods of the day, and these methods were seen as obsolete. This negative judgment— we were climbing a hill, but not the right hill—can only be made from a top-down perspective. We have discussed the question of what is required to learn meaning in an attempt to bring the top-down perspective into clearer focus.

Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data, Emily M. Bender & Alexander Koller. DOI: 10.18653/v1/2020.acl-main.463 [italics in the original]

50 notes

Text

The Impact of Conversational AI Solutions across Industries.

As artificial intelligence (AI) advances, it increasingly simulates processes traditionally handled by humans. Among these innovations, conversational AI stands out, driving efficiency across diverse applications from customer service to industrial automation.

What is Conversational AI?

Conversational AI simulates human conversation using advanced natural language processing (NLP), extensive data, and machine learning. By analyzing and understanding human language, it powers applications like chatbots, virtual assistants, and more, transforming how businesses interact with customers and manage tasks.

Key Technologies Behind Conversational AI.

Machine Learning: This core AI component uses algorithms and data to continuously improve its ability to recognize patterns, enabling more accurate and effective interactions.

Natural Language Processing (NLP): NLP involves several stages, including:

Input Generation: Users provide information via text or speech.

Analysis: NLP systems use natural language understanding (NLU) and automatic speech recognition (ASR) to interpret this data.

Dialogue Management: Natural language generation (NLG) formulates appropriate responses.

Reinforcement Learning: Ongoing machine learning refines responses for better performance over time.
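The four stages above can be sketched as a toy pipeline. This is only an illustration under loud assumptions: the keyword table stands in for a trained NLU model, the canned replies stand in for NLG, and the feedback log is a crude placeholder for the ongoing refinement step (it is not actual reinforcement learning). Every name in it (INTENT_KEYWORDS, RESPONSES, handle_turn, and so on) is hypothetical and not taken from any library.

```python
# Toy sketch of the four stages; all names and data here are hypothetical.

# Stand-in for a trained NLU model: intent -> trigger keywords.
INTENT_KEYWORDS = {
    "order_status": {"order", "package", "delivery"},
    "opening_hours": {"open", "hours", "close"},
}

# Stand-in for an NLG component: intent -> canned reply.
RESPONSES = {
    "order_status": "Your order is on its way.",
    "opening_hours": "We are open from 9 am to 5 pm.",
    "unknown": "Sorry, I did not understand that.",
}


def tokenize(text):
    """Input stage: lowercase the text and split it into alphanumeric words."""
    cleaned = "".join(ch if ch.isalnum() else " " for ch in text.lower())
    return cleaned.split()


def understand(text):
    """Analysis stage: pick the first intent whose keywords appear in the text."""
    tokens = set(tokenize(text))
    for intent, keywords in INTENT_KEYWORDS.items():
        if tokens & keywords:
            return intent
    return "unknown"


def respond(intent):
    """Dialogue management stage: choose the reply for the detected intent."""
    return RESPONSES[intent]


def record_feedback(log, intent, helpful):
    """Refinement stage (placeholder): store whether a reply was helpful."""
    log.setdefault(intent, []).append(helpful)


def helpful_rate(log, intent):
    """Share of helpful votes for an intent, or None if there are no votes."""
    votes = log.get(intent, [])
    return sum(votes) / len(votes) if votes else None


def handle_turn(text):
    """Run one user message through analysis and response selection."""
    return respond(understand(text))
```

Calling handle_turn("Where is my order?") walks the message through tokenizing, intent detection, and reply selection. In a real system, the feedback collected by record_feedback would feed back into model training rather than sit in a dictionary.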

Examples and Applications:

Conversational AI appears in various forms:

Generative AI: Enhances oral and written communication.

Chatbots: Answer frequently asked questions and offer assistance.

Virtual Assistants: Voice-activated aids for devices and smart speakers.

Text-to-Speech Software: Creates audiobooks and provides spoken instructions.

Speech Recognition Programs: Transcribe conversations and generate real-time subtitles.

These technologies enhance accessibility, customer service, industrial IoT (Internet of Things) operations, and business intelligence.

Benefits for Businesses.

24/7 Customer Service: Provides round-the-clock assistance without human agents.

Cost Efficiency: Reduces the resources needed for customer support.

Task Automation: Speeds up processes and reduces errors in tasks like text transcription.

Personalized Experiences: Remembers customer preferences, enhancing user engagement and satisfaction.

Scalability: Easily scales to handle demand peaks, such as during Black Friday.

Design and Implementation.

Building effective conversational AI requires human intervention. Models are trained with machine learning on real-life conversations and enriched with data on user behavior, order status, and external factors like weather. This combination enables smarter predictions and faster issue resolution.

Chatbots vs. Conversational AI.

While chatbots simulate conversations, conversational AI enables more complex interactions, providing context, anticipating needs, and integrating with advanced analytics for a seamless user experience.
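One way to picture the difference is a bot that answers each message in isolation next to an assistant that remembers what the conversation is about. The sketch below is a minimal illustration, not how any particular product works: the facility data, the cue words, and the names stateless_bot and ContextualAssistant are all hypothetical.

```python
# Hypothetical knowledge base: facility -> attribute -> answer.
FACILITIES = {
    "pool": {
        "hours": "The pool is open from 8 am to 8 pm.",
        "location": "The pool is on the roof.",
    },
    "gym": {
        "hours": "The gym is open from 6 am to 10 pm.",
        "location": "The gym is on the first floor.",
    },
}

# Hypothetical cue words that signal which attribute the user wants.
ATTRIBUTE_CUES = {"open": "hours", "hours": "hours", "where": "location"}

FALLBACK = "Sorry, could you rephrase that?"


def parse(message):
    """Return the (facility, attribute) found in a message; either may be None."""
    cleaned = "".join(ch if ch.isalnum() else " " for ch in message.lower())
    words = cleaned.split()
    facility = next((w for w in words if w in FACILITIES), None)
    attribute = next(
        (ATTRIBUTE_CUES[w] for w in words if w in ATTRIBUTE_CUES), None
    )
    return facility, attribute


def stateless_bot(message):
    """Answer only if this single message names both facility and attribute."""
    facility, attribute = parse(message)
    if facility and attribute:
        return FACILITIES[facility][attribute]
    return FALLBACK


class ContextualAssistant:
    """Remembers the last facility mentioned so follow-ups can omit it."""

    def __init__(self):
        self.facility = None

    def reply(self, message):
        facility, attribute = parse(message)
        if facility:
            self.facility = facility  # update the conversation context
        if self.facility and attribute:
            return FACILITIES[self.facility][attribute]
        return FALLBACK
```

Asked "When is the pool open?" and then "Where is it?", the stateless bot falls back on the second message because nothing in it names a facility, while the contextual assistant reuses the remembered "pool" and answers with its location.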

AI in Logistics.

At Pcongrp, we lead in leveraging AI for advanced logistics solutions. Our AI-driven systems enhance picking operations and stacker crane positioning, significantly boosting warehouse efficiency. Contact us for expert advice on cutting-edge warehousing solutions.

#Conversational AI#Conversational AI Chatbot#Conversational AI VS Generative AI#pcg conversational ai#Conversational AI Services

0 notes

Text

Don't Mistake NLU for NLP. Here's Why.

What are the Differences Between NLP, NLU, and NLG?

Before booking a hotel, customers want to learn more about the potential accommodations, so they ask about the pool, dinner service, towels, and other things. An NLP-driven hospitality chatbot can automate such tasks (see Figure 7). Let’s illustrate this example by using a famous NLP model called Google…

0 notes