#OpenNLP

Explore tagged Tumblr posts

Text

Automatic discovery of German grammar

Let's consider using Apache OpenNLP to analyse a German crime story entitled "Sein letzter Fall." First, Apache OpenNLP has to be downloaded and unzipped to a separate folder. The German tokens model also has to be downloaded separately. In the Apache OpenNLP directory, one can create a folder 'Grammatik' with a subfolder 'models'. It's a destination directory to copy the opennlp-de-ud-gsd-tokens-1.2-2.5.0.bin file. This file should be renamed to modelsde-token.bin. Next, the sein-letzter-fall-1.txt has to be obtained, best by using an OCR software on the first page. That's it, the software is ready to partially discover the German grammar. To obtain tokens, the following command can be used.

..\bin\opennlp TokenizerME models/de-token.bin < sein-letzter-fall-1.txt > output-tokens.txt.

Here's the output, although most of the text has been removed.

Loading Tokenizer model … done (0,338s)

Der Wasserkocher piept laut . Samantha ist noch sehr müde . Sie geht in die Küche , nimmt einen Teebeutel und macht sich eine große Tasse Darjeeling . Average: 14,9 sent/s

Total: 1 sent Runtime: 0.067s Execution time: 0,446 seconds

To get the tags, opennlp-de-ud-gsd-pos-1.2-2.5.0.bin has to be obtained and copied to the 'grammatik/models' directory. This file should be renamed to de-pos-maxent.bin. The valid command to discover tags is:

..\bin\opennlp POSTagger models/de-pos-maxent.bin < output-tokens.txt > output_pos_tags.txt

Here's the output file with most of the content removed.

Loading POS Tagger model … done (0,295s)

Loading_PROPN Tokenizer_PROPN model_VERB …_PUNCT done_ADJ (0,338s)_NOUN

Der_DET Wasserkocher_NOUN piept_VERB laut_ADP ._PUNCT Samantha_PROPN ist_AUX noch_ADV sehr_ADV müde_NOUN ._PUNCT Sie_PRON geht_VERB in_ADP die_DET Küche_NOUN ,_PUNCT nimmt_VERB einen_DET Teebeutel_NOUN und_CCONJ macht_VERB sich_PRON eine_DET große_PUNCT Tasse_NOUN Darjeeling_PROPN .

Total:_PROPN 1_NUM sent_VERB Runtime:_PROPN 0.067s_ADP Execution_NOUN time:_PUNCT 0,446_NUM seconds_DET

Average: 52,6 sent/s

Total: 10 sent Runtime: 0.19s Execution time: 0,532 seconds

The next step would be to obtain a model of the German language and then parse the input text.

0 notes

Text

Text Processing Software Development

Text processing is one of the oldest and most essential domains in software development. From simple word counting to complex natural language processing (NLP), developers can build powerful tools that manipulate, analyze, and transform text data in countless ways.

What is Text Processing?

Text processing refers to the manipulation or analysis of text using software. It includes operations such as searching, editing, formatting, summarizing, converting, or interpreting text.

Common Use Cases

Spell checking and grammar correction

Search engines and keyword extraction

Text-to-speech and speech-to-text conversion

Chatbots and virtual assistants

Document formatting or generation

Sentiment analysis and opinion mining

Popular Programming Languages for Text Processing

Python: With libraries like NLTK, spaCy, and TextBlob

Java: Common in enterprise-level NLP solutions (Apache OpenNLP)

JavaScript: Useful for browser-based or real-time text manipulation

C++: High-performance processing for large datasets

Basic Python Example: Word Count

def word_count(text): words = text.split() return len(words) sample_text = "Text processing is powerful!" print("Word count:", word_count(sample_text))

Essential Libraries and Tools

NLTK: Natural Language Toolkit for tokenizing, parsing, and tagging text.

spaCy: Industrial-strength NLP for fast processing.

Regex (Regular Expressions): For pattern matching and text cleaning.

BeautifulSoup: For parsing HTML and extracting text.

Pandas: Great for handling structured text like CSV or tabular data.

Best Practices

Always clean and normalize text data before processing.

Use tokenization to split text into manageable units (words, sentences).

Handle encoding carefully, especially when dealing with multilingual data.

Structure your code modularly to support text pipelines.

Profile your code if working with large-scale datasets.

Advanced Topics

Named Entity Recognition (NER)

Topic Modeling (e.g., using LDA)

Machine Learning for Text Classification

Text Summarization and Translation

Optical Character Recognition (OCR)

Conclusion

Text processing is at the core of many modern software solutions. From basic parsing to complex machine learning, mastering this domain opens doors to a wide range of applications. Start simple, explore available tools, and take your first step toward developing intelligent text-driven software.

0 notes

Text

Scope Beyond Future Ready Java Development

In the ever-evolving landscape of software development, Java has remained a stalwart, adapting and thriving through decades. As we stand on the cusp of the future, the scope of Java development extends beyond its traditional boundaries, shaping a dynamic and resilient ecosystem. This article explores the expanding horizon of Java, delving into the key aspects that define its scope in the realm of future-ready software development.

Web Development Training In Jodhpur, Full Stack Web Development Training In Jodhpur, Python Training In Jodhpur, Flutter Training In Jodhpur, Android App Development Training In Jodhpur, Java Training In Jodhpur, Google Ads Training In Jodhpur, Coding Class In Jodhpur, oilab, Digital marketing Training In Jodhpur , Seo Training In Jodhpur, Digital Marketing Course In Jodhpur, SEO Training In Udaipur, Digital Marketing Course In Udaipur, Digital Marketing Training In Udaipur, Full stack web Development Training In Udaipur, Web Development Course In Udaipur

1. Cross-Platform Dominance

Java's "write once, run anywhere" mantra has been a cornerstone of its success. In the future, this concept becomes even more pivotal as the demand for cross-platform compatibility continues to rise. With the advent of diverse devices and operating systems, Java's ability to seamlessly execute across platforms positions it as a frontrunner in developing applications for an interconnected world.

2. Cloud-Native Java

The future of software development is undeniably intertwined with cloud computing. Java's adaptability to cloud-native architectures makes it a preferred language for developing scalable and resilient applications. As the industry transitions towards microservices and containerization, Java's mature ecosystem and frameworks provide a robust foundation for building cloud-native solutions.

3. Embracing Modern Development Practices

Java is not immune to the winds of change, and it has actively embraced modern development practices. With the introduction of modularization in Java 9, developers can now create more maintainable and scalable codebases. The language continues to evolve, incorporating features like records and pattern matching, further enhancing productivity and code readability.

4. Machine Learning and AI Integration

Java is making strides in the realm of artificial intelligence and machine learning. With libraries like Deeplearning4j and frameworks such as Apache OpenNLP, developers can leverage Java's reliability to build intelligent applications. As the demand for AI-driven solutions increases, Java's presence in this domain adds another dimension to its future scope.

5. Security at the Core

In an era where cyber threats are constantly evolving, security is paramount. Java's commitment to security remains unwavering, and its future-ready development encompasses enhanced security measures. From improvements in the platform's security APIs to regular updates addressing vulnerabilities, Java's emphasis on security positions it as a trusted choice for mission-critical applications.

Conclusion

The scope of Java development is not confined to its past achievements but extends into the future with a renewed sense of purpose. As technology landscapes shift and new challenges emerge, Java continues to adapt, innovate, and solidify its standing as a versatile and future-ready programming language. Developers navigating the ever-expanding horizons of software development will find Java to be not just a tool but a strategic asset in crafting solutions for the challenges of tomorrow. Embracing the scope beyond future-ready Java development is an investment in a technology that has proven its resilience and adaptability, making it a beacon in the ever-changing seas of the software development world.

#full stack web development training in jodhpur#digital marketing training in jodhpur#flutter training in jodhpur#python training in jodhpur

0 notes

Text

Exciting NLP Projects with Open Source Code for Hands-on Learning

Natural language processing (NLP) is an area of artificial intelligence that is rapidly growing in popularity thanks to its wide range of applications. NLP is used in everything from language translation to sentiment analysis to text summarization. With NLP, machines can understand and process human language and produce meaningful results.

For those who are new to the field of NLP, there are a number of open-source projects available to get hands-on experience. Open source projects are great for learning, as they provide access to the source code and allow users to experiment with the code and modify it to their needs. Here are some of the most exciting NLP projects with open-source code available for hands-on learning.

1. TensorFlow: TensorFlow is an open-source library for machine learning developed by Google. It provides a comprehensive set of tools for building deep learning models and implementing NLP tasks. It is used for a range of tasks, including text classification, text generation, and natural language understanding.

2. SpaCy: SpaCy is an open-source library for NLP in Python. It is built on the latest research and is designed to be both fast and accurate. It is used for a range of tasks, including text classification, named entity recognition, and part-of-speech tagging.

3. NLTK: NLTK is a set of open-source libraries for NLP in Python. It provides a wide range of tools for processing and analyzing text, such as tokenization, stemming, and sentiment analysis. It is used for a range of tasks, including text classification, language identification, and sentiment analysis.

4. Gensim: Gensim is an open-source library for NLP in Python. It is used for a range of tasks, including document clustering, topic modelling, and text summarization. It is designed to be easy to use and efficient.

5. OpenNLP: OpenNLP is an open-source library for NLP in Java. It is used for a range of tasks, including tokenization, part-of-speech tagging, and text classification. It is designed to be easy to use and efficient.

These are just a few of the exciting NLP projects with open-source code available for hands-on learning. By using open-source projects, you can get hands-on experience with NLP and build your own NLP models. Whether you're a beginner or an experienced programmer, open-source projects are a great way to get started with NLP.

0 notes

Text

The Intersection of Java and Artificial Intelligence for Students

Introduction

Artificial Intelligence (AI) is one of the most transformative technologies of our time. As students delve into the exciting world of AI, they may not initially associate it with Java development. However, the intersection of Java and AI is becoming increasingly prominent, offering students a unique and powerful pathway to explore this dynamic field. In this article, we'll explore the relationship between Java and AI, emphasizing why students should consider a Java training course for a comprehensive understanding of this convergence.

AI: A Transformative Field

Artificial Intelligence is the branch of computer science that aims to create machines and systems capable of performing tasks that typically require human intelligence. It encompasses machine learning, deep learning, natural language processing, computer vision, and more. AI applications are diverse, ranging from recommendation systems in e-commerce to autonomous vehicles, healthcare, and finance.

Java's Role in AI

Java, known for its robustness and cross-platform compatibility, has found a place in the AI landscape. While Python is often associated with AI, Java's strengths come to the fore in specific AI applications. For instance, Java's strong typing and static checking can contribute to the reliability and security of AI systems, making it an attractive choice for organizations concerned with data integrity and system stability.

Scalability and Performance

AI often involves handling large datasets and complex algorithms. Java's scalability and performance optimization make it a valuable asset in AI development. Students who master Java can efficiently implement AI models that are not only reliable but also capable of handling substantial data, critical for real-world AI applications.

Machine Learning with Java

Machine learning, a subset of AI, involves the use of algorithms that enable computers to learn from and make predictions or decisions based on data. Java provides a strong foundation for students to understand the principles and mathematics behind machine learning. Its well-structured syntax and object-oriented nature simplify the implementation of machine learning models.

AI in Java Libraries

Java boasts a growing number of AI libraries and frameworks. Apache OpenNLP, Deeplearning4j, Weka, and JavaML are just a few examples. These libraries offer students a wide array of tools and resources to explore various aspects of AI, from natural language processing to deep learning.

Integration with Enterprise Solutions

Java has long been the preferred language for building enterprise solutions. AI, too, is finding its way into the corporate world, and Java's role is becoming increasingly prominent. Students who understand the synergy between AI and Java can excel in roles that involve integrating AI models into enterprise applications, improving decision-making processes, and optimizing business operations.

Data Science and Big Data

Data science, a vital component of AI, involves extracting insights and knowledge from large datasets. Java's capabilities in handling big data provide students with the tools to manage and process data efficiently. This is crucial for data scientists and AI engineers who need to preprocess, clean, and transform data before building machine learning models.

Security and Reliability

In AI applications like healthcare, finance, and cybersecurity, where data security and system reliability are paramount, Java's built-in security features and strong type checking make it a trusted choice. Students who focus on AI in Java development can contribute to the development of secure and reliable AI solutions in these critical areas.

Emerging AI Technologies

Java is not limited to traditional AI applications; it's also finding a role in emerging technologies. For example, Java can be used in AI applications for the Internet of Things (IoT), where devices generate and process data autonomously. Students who explore AI in the context of IoT can work on projects that enable smarter and more efficient automation in various domains.

Java Training Courses

To maximize their potential at the intersection of Java and AI, students should consider enrolling in a Java training course. These courses provide structured learning experiences, expert guidance, and hands-on projects specifically focused on AI applications in Java. They enable students to gain practical experience and acquire industry-recognized certifications, which can significantly enhance their employability and expertise in AI.

Conclusion

The convergence of Java development and Artificial Intelligence offers students a unique and powerful pathway to explore the exciting field of AI. Java's scalability, performance, and reliability make it a valuable asset in the development of AI systems and applications.

By considering a Java training course with a focus on AI, students can enhance their understanding of this intersection. Such courses provide students with the tools, knowledge, and practical experience to excel in AI development, from machine learning to big data and IoT applications. The world of AI is vast and ever-evolving, and students who master AI with Java are well-positioned to become the next generation of AI experts, addressing the world's most complex challenges with data-driven solutions.

0 notes

Text

Java Libraries Empowering AI and Chatbot Development

Introduction

Java, renowned for its versatility and robustness, is increasingly becoming a cornerstone of Artificial Intelligence (AI) and chatbot development. With its vast ecosystem of libraries and a thriving developer community, Java offers a powerful foundation for building intelligent applications and chatbots. In this article, we'll delve into the role of Java libraries in AI and chatbot development, highlighting the significance of Java development and the benefits of Java training courses.

Java and AI: A Perfect Pairing

Java's Versatility: Java's platform independence and versatility make it well-suited for AI and chatbot development. Its "Write Once, Run Anywhere" (WORA) capability ensures that Java applications can run seamlessly on various platforms, making it a top choice for cross-platform AI development.

AI Advancements: AI encompasses a broad spectrum of technologies, including natural language processing, machine learning, and deep learning. Java's adaptability allows it to accommodate the diverse requirements of AI development.

Chatbots in Modern Applications: Chatbots have become integral to modern applications, from customer support to virtual assistants. Java's ability to handle complex logic and data processing makes it a strong candidate for developing chatbot solutions.

Java Libraries for AI Development

Deeplearning4j: Deeplearning4j is an open-source, distributed deep learning framework for Java. It allows developers to build and train deep neural networks, making it a valuable asset for AI applications.

Weka: Weka is a popular library for machine learning and data mining. It offers a wide range of algorithms for classification, regression, clustering, and more. Weka simplifies the development of machine learning models in Java.

Stanford NLP: The Stanford NLP library is a toolkit for natural language processing. It provides tools for tasks such as part-of-speech tagging, named entity recognition, and sentiment analysis, essential for chatbot development.

Apache OpenNLP: Apache OpenNLP is an open-source natural language processing library for Java. It aids in tokenization, sentence splitting, and entity recognition, crucial for chatbots that understand and generate human-like text.

Java Libraries for Chatbot Development

Rasa NLU and Rasa Core: Rasa is an open-source framework for building conversational AI. Rasa NLU handles natural language understanding, while Rasa Core manages dialogue flow. Both are written in Python but can be seamlessly integrated with Java applications.

Dialogflow: Dialogflow is a cloud-based, natural language understanding platform that offers extensive support for chatbot development. It allows developers to create chatbots that can understand and respond to user inputs in multiple languages.

IBM Watson Assistant: IBM Watson Assistant is another cloud-based chatbot development platform that enables developers to create AI-powered chatbots. Java applications can connect to IBM Watson Assistant using REST APIs.

Java's Importance in AI and Chatbot Development

Cross-Platform Compatibility: Java's WORA capability is vital in AI and chatbot development. It ensures that AI models and chatbots built with Java can seamlessly run on different platforms, reaching a wider audience.

Vast Developer Community: Java boasts a large and active developer community. This is invaluable for AI and chatbot developers as they can seek help, share knowledge, and collaborate on projects, accelerating development.

Machine Learning Integration: Java libraries for AI development offer integration with machine learning algorithms, making it easier to implement predictive and data-driven AI solutions.

Robust Exception Handling: Java's robust exception handling and memory management are essential for AI and chatbot development. These features enhance reliability and minimize unexpected failures.

Java Development and Training Courses

Java development in the context of AI and chatbots is a dynamic and constantly evolving field. Java training courses are instrumental in helping developers stay up-to-date with the latest developments and gain expertise in this area. Java training courses cover various essential topics, including:

Java Fundamentals: These courses ensure that participants have a strong foundation in Java, including object-oriented programming, data structures, and algorithms.

AI and Chatbot Development: Training courses introduce developers to AI concepts, machine learning, and natural language processing. They also cover the development of chatbots, focusing on conversational design and user experience.

Practical Application: Participants often engage in practical exercises and projects, applying their knowledge to real-world AI and chatbot scenarios. This hands-on experience is crucial for skill development.

Collaboration and Integration: Training courses emphasize how Java can integrate with other technologies and platforms, enabling developers to create holistic AI and chatbot solutions.

Case Study: E-commerce Chatbot

Imagine an e-commerce platform that wants to enhance the shopping experience for its customers. By integrating a chatbot powered by Java, customers can receive instant assistance with product recommendations, order tracking, and problem resolution. Java's adaptability and AI capabilities make it possible to create a chatbot that understands user queries and provides personalized responses, ultimately increasing customer satisfaction.

Conclusion

Java libraries play a pivotal role in AI and chatbot development, enabling developers to create intelligent applications and conversational agents. Java's adaptability, platform independence, and vast ecosystem of libraries make it an excellent choice for tackling the challenges of AI and chatbot development. For those looking to excel in this exciting field, Java training courses offer a structured and comprehensive path to becoming proficient in Java development and its applications in AI and chatbots. As the demand for intelligent applications and chatbots continues to grow, Java remains at the forefront of innovation and transformation.

0 notes

Text

New IKVM 8.2 & MavenReference for .NET projects

New IKVM 8.2 & MavenReference for .NET projects

Roughly 2 months ago, a new long-awaited version of IKVM was announced. The killer feature of the 8.2 release is the support of .NET Core. So, now, IKVM can translate JAR files to .NET Core compatible DLLs. Woohoo! Last week I migrated to the new IKVM two projects and can share my first…

View On WordPress

0 notes

Photo

Cohesive Software Design

by Janani Tharmaseelan "Cohesive Software Design"

Published in International Journal of Trend in Scientific Research and Development (ijtsrd), ISSN: 2456-6470, Volume-3 | Issue-3, April 2019,

URL: https://www.ijtsrd.com/papers/ijtsrd22900.pdf

Paper URL: https://www.ijtsrd.com/computer-science/other/22900/cohesive-software-design/janani-tharmaseelan

best international journal, call for paper papers conference, submit paper online

This paper presents a natural language processing based automated system called DrawPlus for generating UML diagrams, user scenarios and test cases after analyzing the given business requirement specification which is written in natural language. The DrawPlus is presented for analyzing the natural languages and extracting the relative and required information from the given business requirement Specification by the user. Basically user writes the requirements specifications in simple English and the designed system has conspicuous ability to analyze the given requirement specification by using some of the core natural language processing techniques with our own well defined algorithms. After compound analysis and extraction of associated information, the DrawPlus system draws use case diagram, User scenarios and system level high level test case description. The DrawPlus provides the more convenient and reliable way of generating use case, user scenarios and test cases in a way reducing the time and cost of software development process while accelerating the 70 of works in Software design and Testing phase

0 notes

Text

E-commerce search and recommendation with Vespa.ai

Introduction

Holiday shopping season is upon us and it’s time for a blog post on E-commerce search and recommendation using Vespa.ai. Vespa.ai is used as the search and recommendation backend at multiple Yahoo e-commerce sites in Asia, like tw.buy.yahoo.com.

This blog post discusses some of the challenges in e-commerce search and recommendation, and shows how they can be solved using the features of Vespa.ai.

Photo by Jonas Leupe on Unsplash

Text matching and ranking in e-commerce search

E-commerce search have text ranking requirements where traditional text ranking features like BM25 or TF-IDF might produce poor results. For an introduction to some of the issues with TF-IDF/BM25 see the influence of TF-IDF algorithms in e-commerce search. One example from the blog post is a search for ipad 2 which with traditional TF-IDF ranking will rank ‘black mini ipad cover, compatible with ipad 2’ higher than ‘Ipad 2’ as the former product description has several occurrences of the query terms Ipad and 2.

Vespa allows developers and relevancy engineers to fine tune the text ranking features to meet the domain specific ranking challenges. For example developers can control if multiple occurrences of a query term in the matched text should impact the relevance score. See text ranking occurrence tables and Vespa text ranking types for in-depth details. Also the Vespa text ranking features takes text proximity into account in the relevancy calculation, i.e how close the query terms appear in the matched text. BM25/TF-IDF on the other hand does not take query term proximity into account at all. Vespa also implements BM25 but it’s up to the relevancy engineer to chose which of the rich set of built-in text ranking features in Vespa that is used.

Vespa uses OpenNLP for linguistic processing like tokenization and stemming with support for multiple languages (as supported by OpenNLP).

Custom ranking business logic in e-commerce search

Your manager might tell you that these items of the product catalog should be prominent in the search results. How to tackle this with your existing search solution? Maybe by adding some synthetic query terms to the original user query, maybe by using separate indexes with federated search or even with a key value store which rarely is in synch with the product catalog search index?

With Vespa it’s easy to promote content as Vespa’s ranking framework is just math and allows the developer to formulate the relevancy scoring function explicitly without having to rewrite the query formulation. Vespa controls ranking through ranking expressions configured in rank profiles which enables full control through the expressive Vespa ranking expression language. The rank profile to use is chosen at query time so developers can design multiple ranking profiles to rank documents differently based on query intent classification. See later section on query classification for more details how query classification can be done with Vespa.

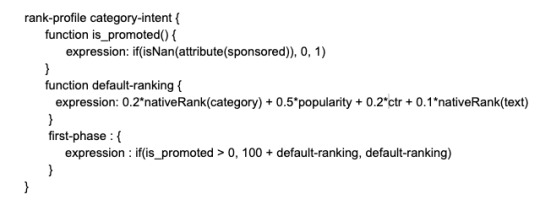

A sample ranking profile which implements a tiered relevance scoring function where sponsored or promoted items are always ranked above non-sponsored documents is shown below. The ranking profile is applied to all documents which matches the query formulation and the relevance score of the hit is the assigned the value of the first-phase expression. Vespa also supports multi-phase ranking.

Sample hand crafted ranking profile defined in the Vespa application package.

The above example is hand crafted but for optimal relevance we do recommend looking at learning to rank (LTR) methods. See learning to Rank using TensorFlow Ranking and learning to Rank using XGBoost. The trained MLR models can be used in combination with the specific business ranking logic. In the example above we could replace the default-ranking function with the trained MLR model, hence combining business logic with MLR models.

Facets and grouping in e-commerce search

Guiding the user through the product catalog by guided navigation or faceted search is a feature which users expects from an e-commerce search solution today and with Vespa, facets and guided navigation is easily implemented by the powerful Vespa Grouping Language.

Sample screenshot from Vespa e-commerce sample application UI demonstrating search facets using Vespa Grouping Language.

The Vespa grouping language supports deep nested grouping and aggregation operations over the matched content. The language also allows pagination within the group(s). For example if grouping hits by category and displaying top 3 ranking hits per category the language allows paginating to render more hits from a specified category group.

The vocabulary mismatch problem in e-commerce search

Studies (e.g. this study from FlipKart) finds that there is a significant fraction of queries in e-commerce search which suffer from vocabulary mismatch between the user query formulation and the relevant product descriptions in the product catalog. For example, the query “ladies pregnancy dress” would not match a product with description “women maternity gown” due to vocabulary mismatch between the query and the product description. Traditional Information Retrieval (IR) methods like TF-IDF/BM25 would fail retrieving the relevant product right off the bat.

Most techniques currently used to try to tackle the vocabulary mismatch problem are built around query expansion. With the recent advances in NLP using transfer learning with large pre-trained language models, we believe that future solutions will be built around multilingual semantic retrieval using text embeddings from pre-trained deep neural network language models. Vespa has recently announced a sample application on semantic retrieval which addresses the vocabulary mismatch problem as the retrieval is not based on query terms alone, but instead based on the dense text tensor embedding representation of the query and the document. The mentioned sample app reproduces the accuracy of the retrieval model described in the Google blog post about Semantic Retrieval.

Using our query and product title example from the section above, which suffers from the vocabulary mismatch, and instead move away from the textual representation to using the respective dense tensor embedding representation, we find that the semantic similarity between them is high (0.93). The high semantic similarity means that the relevant product would be retrieved when using semantic retrieval. The semantic similarity is in this case defined as the cosine similarity between the dense tensor embedding representations of the query and the product description. Vespa has strong support for expressing and storing tensor fields which one can perform tensor operations (e.g cosine similarity) over for ranking, this functionality is demonstrated in the mentioned sample application.

Below is a simple matrix comparing the semantic similarity of three pairs of (query, product description). The tensor embeddings of the textual representation is obtained with the Universal Sentence Encoder from Google.

Semantic similarity matrix of different queries and product descriptions.

The Universal Sentence Encoder Model from Google is multilingual as it was trained on text from multiple languages. Using these text embeddings enables multilingual retrieval so searches written in Chinese can retrieve relevant products by descriptions written in multiple languages. This is another nice property of semantic retrieval models which is particularly useful in e-commerce search applications with global reach.

Query classification and query rewriting in e-commerce search

Vespa supports deploying stateless machine learned (ML) models which comes handy when doing query classification. Machine learned models which classify the query is commonly used in e-commerce search solutions and the recent advances in natural language processing (NLP) using pre-trained deep neural language models have improved the accuracy of text classification models significantly. See e.g text classification using BERT for an illustrated guide to text classification using BERT. Vespa supports deploying ML models built with TensorFlow, XGBoost and PyTorch through the Open Neural Network Exchange (ONNX) format. ML models trained with mentioned tools can successfully be used for various query classification tasks with high accuracy.

In e-commerce search, classifying the intent of the query or query session can help ranking the results by using an intent specific ranking profile which is tailored to the specific query intent. The intent classification can also determine how the result page is displayed and organised.

Consider a category browse intent query like ‘shoes for men’. A query intent which might benefit from a query rewrite which limits the result set to contain only items which matched the unambiguous category id instead of just searching the product description or category fields for ‘shoes for men’ . Also ranking could change based on the query classification by using a ranking profile which gives higher weight to signals like popularity or price than text ranking features.

Vespa also features a powerful query rewriting language which supports rule based query rewrites, synonym expansion and query phrasing.

Product recommendation in e-commerce search

Vespa is commonly used for recommendation use cases and e-commerce is no exception.

Vespa is able to evaluate complex Machine Learned (ML) models over many data points (documents, products) in user time which allows the ML model to use real time signals derived from the current user’s online shopping session (e.g products browsed, queries performed, time of day) as model features. An offline batch oriented inference architecture would not be able to use these important real time signals. By batch oriented inference architecture we mean pre-computing the inference offline for a set of users or products and where the model inference results is stored in a key-value store for online retrieval.

In our blog recommendation tutorial we demonstrate how to apply a collaborative filtering model for content recommendation and in part 2 of the blog recommendation tutorial we show to use a neural network trained with TensorFlow to serve recommendations in user time. Similar recommendation approaches are used with success in e-commerce.

Keeping your e-commerce index up to date with real time updates

Vespa is designed for horizontal scaling with high sustainable write and read throughput with low predictable latency. Updating the product catalog in real time is of critical importance for e-commerce applications as the real time information is used in retrieval filters and also as ranking signals. The product description or product title rarely changes but meta information like inventory status, price and popularity are real time signals which will improve relevance when used in ranking. Also having the inventory status reflected in the search index also avoids retrieving content which is out of stock.

Vespa has true native support for partial updates where there is no need to re-index the entire document but only a subset of the document (i.e fields in the document). Real time partial updates can be done at scale against attribute fields which are stored and updated in memory. Attribute fields in Vespa can be updated at rates up to about 40-50K updates/s per content node.

Campaigns in e-commerce search

Using Vespa’s support for predicate fields it’s easy to control when content is surfaced in search results and not. The predicate field type allows the content (e.g a document) to express if it should match the query instead of the other way around. For e-commerce search and recommendation we can use predicate expressions to control how product campaigns are surfaced in search results. Some examples of what predicate fields can be used for:

Only match and retrieve the document if time of day is in the range 8–16 or range 19–20 and the user is a member. This could be used for promoting content for certain users, controlled by the predicate expression stored in the document. The time of day and member status is passed with the query.

Represent recurring campaigns with multiple time ranges.

Above examples are by no means exhaustive as predicates can be used for multiple campaign related use cases where the filtering logic is expressed in the content.

Scaling & performance for high availability in e-commerce search

Are you worried that your current search installation will break by the traffic surge associated with the holiday shopping season? Are your cloud VMs running high on disk busy metrics already? What about those long GC pauses in the JVM old generation causing your 95percentile latency go through the roof? Needless to say but any downtime due to a slow search backend causing a denial of service situation in the middle of the holiday shopping season will have catastrophic impact on revenue and customer experience.

Photo by Jon Tyson on Unsplash

The heart of the Vespa serving stack is written in C++ and don’t suffer from issues related to long JVM GC pauses. The indexing and search component in Vespa is significantly different from the Lucene based engines like SOLR/Elasticsearch which are IO intensive due to the many Lucene segments within an index shard. A query in a Lucene based engine will need to perform lookups in dictionaries and posting lists across all segments across all shards. Optimising the search access pattern by merging the Lucene segments will further increase the IO load during the merge operations.

With Vespa you don’t need to define the number of shards for your index prior to indexing a single document as Vespa allows adaptive scaling of the content cluster(s) and there is no shard concept in Vespa. Content nodes can be added and removed as you wish and Vespa will re-balance the data in the background without having to re-feed the content from the source of truth.

In ElasticSearch, changing the number of shards to scale with changes in data volume requires an operator to perform a multi-step procedure that sets the index into read-only mode and splits it into an entirely new index. Vespa is designed to allow cluster resizing while being fully available for reads and writes. Vespa splits, joins and moves parts of the data space to ensure an even distribution with no intervention needed

At the scale we operate Vespa at Verizon Media, requiring more than 2X footprint during content volume expansion or reduction would be prohibitively expensive. Vespa was designed to allow content cluster resizing while serving traffic without noticeable serving impact. Adding content nodes or removing content nodes is handled by adjusting the node count in the application package and re-deploying the application package.

Also the shard concept in ElasticSearch and SOLR impacts search latency incurred by cpu processing in the matching and ranking loops as the concurrency model in ElasticSearch/SOLR is one thread per search per shard. Vespa on the other hand allows a single search to use multiple threads per node and the number of threads can be controlled at query time by a rank-profile setting: num-threads-per-search. Partitioning the matching and ranking by dividing the document volume between searcher threads reduces the overall latency at the cost of more cpu threads, but makes better use of multi-core cpu architectures. If your search servers cpu usage is low and search latency is still high you now know the reason.

In a recent published benchmark which compared the performance of Vespa versus ElasticSearch for dense vector ranking Vespa was 5x faster than ElasticSearch. The benchmark used 2 shards for ElasticSearch and 2 threads per search in Vespa.

The holiday season online query traffic can be very spiky, a query traffic pattern which can be difficult to predict and plan for. For instance price comparison sites might direct more user traffic to your site unexpectedly at times you did not plan for. Vespa supports graceful quality of search degradation which comes handy for those cases where traffic spikes reaches levels not anticipated in the capacity planning. These soft degradation features allow the search service to operate within acceptable latency levels but with less accuracy and coverage. These soft degradation mechanisms helps avoiding a denial of service situation where all searches are becoming slow due to overload caused by unexpected traffic spikes. See details in the Vespa graceful degradation documentation.

Summary

In this post we have explored some of the challenges in e-commerce Search and Recommendation and highlighted some of the features of Vespa which can be used to tackle e-commerce search and recommendation use cases. If you want to try Vespa for your e-commerce application you can go check out our e-commerce sample application found here . The sample application can be scaled to full production size using our hosted Vespa Cloud Service at https://cloud.vespa.ai/. Happy Holiday Shopping Season!

4 notes

·

View notes

Text

Simple Sentence Detector and Tokenizer Using OpenNLP

Simple Sentence Detector and Tokenizer Using OpenNLP

Machine learning is a branch of artificial intelligence. In this we create and study about systems that can learn from data. We all learn from our experience or others experience. In machine learning, the system is also getting learned from some experience, which we feed as data.

So for getting an inference about something, first we train the system with some set of data. With that data, the…

View On WordPress

#apache#artificial intelligence#BigData#content#java#language processing#machinelearning#natural language#nlp#opennlp#program#simple#text processing#textanalytics

0 notes

Text

Natural language processing with Apache OpenNLP | InfoWorld

0 notes

Text

Natural Language Processing applications enable an accurate, large-scale analysis of an unstructured and untapped pool of text in an objective and cognitive way.

Powerful NLP capabilities are being explored and applied in different business functions and areas.

Our NLP capabilities include content categorization, stemming, topic discovery and modeling, contextual extraction, sentiment analysis, part-of-speech tagging, intent recognition, speech-to-text and text-to-speech conversion, Bayesian spam filtering, document summarization, machine translation, and more.

Our NLP services can identify and highlight the important parts of texts that are beneficial for you. This can help understand the subject as well as the context of a text, no matter how complex and messy. We are also skilled in developing optical character recognition models that improve human intelligence to a great extent.

Jellyfish Technologies offer self-learning, next-gen AI-driven NLP solutions, built on enhanced algorithms like Python NLTK, OpenNLP, Nlp.js, TensorFlow, and DialogFlow, that read and decipher multiple human languages, contextual. nuances, industry-specific terminologies and more for better responsiveness in customer interactions and multiple other business functions.

Visit Us:-https://www.jellyfishtechnologies.com/natural-language-processing.html

#naturallanguageprocessing#NLP#USA#Canada#jellyfishtechnologies#bestsoftwarecompany#softwareservicesinUSA

1 note

·

View note

Photo

Open source softwares available for NLP . . . . . . . #Ai #artificialintelligence #artificial_intelliagence #aiforgood #aiforeveryone #aiforever #innomatics #hyderabad #india #aijobs #jobsinAI #datascience #datascientist #python #datavisualization #dataanalytics #datasciencetraining #nltk #structuredata #data #datascientistsinthemaking #datascienceeducation #dataengineer #datamining #nlpcoaching #nlp #gensim #opennlp #opensource #stanford

0 notes

Text

Integrating Apache OpenNLP Into Apache NiFi For Real-Time Natural Language Processing of Live Data Streams

This is an update to the existing processor. This one seems to work better and faster. Versions Apache OpenNLP 1.8.4 with Name, Location, and Date Processing. https://goo.gl/cEmgdN #DataIntegration #ML

0 notes

Link

I guess the advantage of this is its integration with existing Apache Big Data services. But Google, Facebook and Microsoft have all open sourced some pretty good ML packages, and there’s only so much attention to go around.

Apache PredictionIO (incubating) is an open source Machine Learning Server built on top of state-of-the-art open source stack for developers and data scientists create predictive engines for any machine learning task. It lets you:

quickly build and deploy an engine as a web service on production with customizable templates;

respond to dynamic queries in real-time once deployed as a web service;

evaluate and tune multiple engine variants systematically;

unify data from multiple platforms in batch or in real-time for comprehensive predictive analytics;

speed up machine learning modeling with systematic processes and pre-built evaluation measures;

support machine learning and data processing libraries such as Spark MLLib and OpenNLP;

implement your own machine learning models and seamlessly incorporate them into your engine;

simplify data infrastructure management.

Apache PredictionIO (incubating) can be installed as a full machine learning stack, bundled with Apache Spark, MLlib, HBase, Spray and Elasticsearch, which simplifies and accelerates scalable machine learning infrastructure management.

2 notes

·

View notes

Text

Distributional Semantics in R: Part 2 Entity Recognition w. {openNLP}

The R code for this tutorial on Methods of Distributional Semantics in R is found in the respective GitHub repository. You will find .R, .Rmd, and .html files corresponding to each part of this tutorial (e.g. DistSemanticsBelgradeR-Part2.R, DistSemanticsBelgradeR-Part2.Rmd, and DistSemanticsBelgradeR-Part2.html, for Part 2) there. All auxiliary files are also uploaded to the repository.

Following my Methods of Distributional Semantics in R BelgradeR Meetup with Data Science Serbia, organized in Startit Center, Belgrade, 11/30/2016, several people asked me for the R code used for the analysis of William Shakespeare’s plays that was presented. I have decided to continue the development of the code that I’ve used during the Meetup in order to advance the examples that I have shown then into a more or less complete and comprehensible text-mining tutorial with {tm}, {openNLP}, and {topicmodels} in R. All files in this GitHub repository are a product of that work.

Part 2 will introduce named entity recognition with {openNLP}, and Apache project in Java interfaced by this nice R package that, in turn, relies on {NLP} classes. We will try to make machine learning (MaxEnt models offered in {openNLP} figure out the characters from Shakespeare’s plays, a quite difficult task given that the learning algorithms at our disposal were trained on contemporary English corpora.

Figure 1.The accuracy of character recognition from Shakespeare’s comedies, tragedies, and histories; the black dashed line is the overall density. The results is not realistic (explanation given in the respective .Rmd and .hmtl files).

What I really want to show you here is how tricky and difficult it can be to do serious text-mining, and help you by exemplifying some steps that are necessary to ensure the consistency of results that you are expecting. The text-mining pipelines being developed here are in no sense perfect or complete; they are meant to demonstrate important problems and propose solutions rather than to provide a copy and paste ready chunks for future re-use. In essence, except in those cases where a standardized information extraction + text-mining pipeline is being developed (a situation where, by assumption, one periodically processes large text corpora, e.g. web-scraped news and other media reports, from various sources, in various formats, and where one simply needs to learn to live with approximations) every text-mining study will need a specific pipeline on its own. Chaining those tm_map() calls to various content_transformers from {tm} restlessly, while being ignorant of the necessary changes in parameters and different content-specific transformations - of which {tm} supports only a few - will simply not do.

Figure 2.Don’t get hooked on the results presented in the {ggplot2} figure above; {openNLP} is not that successful in recognizing personal names from Shakespeare’s plays (in spite of the fact that it works great for contemporary English documents). I have helped it a bit, by doing something that is not applicable to real-world situations; go take a look at the code from this GitHub repository.

See you soon.

2 notes

·

View notes