
Python built-in function round() not working in Databricks notebook

This is a common issue that developers face while working with PySpark. It happens when you import every function from pyspark.sql.functions with a wildcard import, and it affects several other Python built-ins as well, because a number of PySpark functions share their names with Python built-in functions. Always be careful while doing the following import from pyspark.sql.functions…
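The name clash can be reproduced without Spark. The following is a minimal sketch, not the post's own code: `spark_like_round` is a hypothetical stand-in for `pyspark.sql.functions.round` that only imitates its rejection of plain numbers, and rebinding `round` simulates what a wildcard import does to the notebook namespace. It shows that the built-in stays reachable through the `builtins` module.

```python
import builtins


def spark_like_round(col, scale=0):
    """Hypothetical stand-in for pyspark.sql.functions.round (assumption).

    It only mimics the behaviour relevant here: rejecting plain numbers.
    """
    raise TypeError(f"Invalid argument, not a string or column: {col!r}")


# Simulate the effect of `from pyspark.sql.functions import *`:
# the name `round` now points at the Spark-style function.
round = spark_like_round

try:
    round(3.14159, 2)
    shadowed_call_failed = False
except TypeError:
    shadowed_call_failed = True

# The original built-in is still available through the builtins module.
rounded = builtins.round(3.14159, 2)

# Dropping the shadowing name restores normal lookup of the built-in.
del round
restored = round(2.71828, 3)
```

In a real notebook, importing the module under an alias (`import pyspark.sql.functions as F`) and calling `F.round(...)` keeps both functions available without a clash.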

#databricks functions conflict with python built-in functions#not a string or column:#python builtin function not working in databricks notebook#python function not working with pyspark#round() function not working for databricks Python#TypeError: Invalid argument


Python program to download files recursively from AWS S3 bucket to Databricks DBFS

S3 is a popular object storage service from Amazon Web Services. Downloading files from an S3 bucket to Azure Databricks is a common requirement. You can mount object storage to the Databricks workspace, but in this example I show how to recursively download and sync the files from a folder within an AWS S3 bucket to DBFS. This program overwrites files that already exist. The…
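The excerpt is cut off before the program itself, so the following is only a sketch of how such a recursive download could look, not the author's code. It assumes `s3_client` is a boto3 S3 client (for example `boto3.client("s3")`), that `prefix` names a folder and ends with `/`, and that `dest_root` is a local path such as one under `/dbfs/`. The function names and the example bucket are hypothetical.

```python
import os


def local_path_for_key(key, prefix, dest_root):
    """Map an S3 object key to a path under dest_root, keeping sub-folders."""
    relative = key[len(prefix):].lstrip("/")
    return os.path.join(dest_root, *relative.split("/"))


def sync_prefix(s3_client, bucket, prefix, dest_root):
    """Download every object under prefix, overwriting existing local files."""
    downloaded = []
    # list_objects_v2 returns at most 1000 keys per call, so paginate.
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            key = obj["Key"]
            if key.endswith("/"):
                continue  # skip zero-byte "folder" placeholder objects
            target = local_path_for_key(key, prefix, dest_root)
            os.makedirs(os.path.dirname(target), exist_ok=True)
            s3_client.download_file(bucket, key, target)
            downloaded.append(target)
    return downloaded
```

A call might look like `sync_prefix(boto3.client("s3"), "my-bucket", "raw/2024/", "/dbfs/tmp/raw")`, where the bucket, prefix, and destination are placeholders.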

#boto3#python program to download files from Aws s3 bucket to databricks dbfs#python program to recursively download files and folders from an AWS S3 bucket in Azure Databricks


How to enable a single public IP for outbound traffic from Databricks Cluster ?

This is one of the common requirements that every data engineer faces while working on Databricks. When we connect to external systems, the traffic can originate from any of the cluster nodes. If the cluster is provisioned in the Databricks-managed network with public IPs on all the nodes, the request can go out from any node, and the IP address will vary every time you provision the…

#enable single public ip for databricks#how to enable static ip for outbound traffic from databricks#how to route traffic from a databricks cluster through a single IP


Key points to be considered before choosing a modern Industrial IoT Platform

Digitalization is the key upgrade that every industry needs in this era. The industrial revolution has reached its current state through several phases. These are the key phases, and each is referred to by a version number in the order listed below (Industry 4.0, Industry 5.0, etc.): Mechanization, Electrification, Automation, Digitalization, Personalization. Most of the…


#Data Ops#Data Platform#IIoT Platform#Industrial IoT#Industry 4.0#Industry 5.0#points to be considered before choosing a modern Industrial IoT Platform


How to handle URL encoded characters in a dataframe ?

Recently I came across a problem that involved a CSV file containing several encoded characters. For example, several words appeared in a garbled form, like the ones below. We're --> We're There's --> There's To solve this, I followed the steps below. Let us assume the column that holds these encoded characters is named description. Then using pandas, the below…
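The excerpt ends before the pandas code and the encoded forms in the examples did not survive, so the following is a hedged sketch rather than the author's solution. It assumes the text contains either percent-encoded characters (such as `%27`) or HTML entities (such as `&#39;`) and decodes both with the standard library. The helper name `decode_text` and the sample strings are hypothetical.

```python
import html
from urllib.parse import unquote


def decode_text(value):
    """Decode percent-encoding first, then HTML entities.

    Non-string values (for example missing cells) are returned unchanged.
    """
    if not isinstance(value, str):
        return value
    return html.unescape(unquote(value))


# Sample values standing in for the `description` column (assumed data).
descriptions = ["We&#39;re open", "There%27s more", None]
cleaned = [decode_text(d) for d in descriptions]
```

With a pandas DataFrame, the same helper could be applied to the column with `df["description"] = df["description"].apply(decode_text)`.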


How to Enable or Disable public access of an Azure Blob Storage (Storage Account) using Python Program ?

An Azure storage account has a property in the networking section to enable or disable public access. This option is available directly in the web portal. There are options to whitelist a specific VNet or specific IP addresses. In some scenarios, we may need to grant access to sources that do not have a static public IP address. In this scenario, the easiest option we have…


#Automate the settings update in Azure storage account using python program#azure#How to update the settings or properties of an Azure storage account using python program#python#Python program to enable or disable public access to a Azure Blob storage#Python program to enable or disable public access to a Azure Storage account#Python program to enable or disable public access to a storage account#SDK#storage account


How to enable and disable SFTP on an Azure blob storage using a python program ?

SFTP is a feature available on Azure Blob Storage. It can be enabled or disabled at any time after the storage account is created. Enabling SFTP gives the storage account a public endpoint for SFTP connectivity. This comes with an additional cost beyond the usual cost for data storage (read, write and storage). The cost for SFTP is charged on an hourly basis. So if you…


#How to automatically enable or disable SFTP on Azure blob storage programatically#How to enable or disable SFTP in Azure storage account#how to enable SFTP in azure blob storage using a python program#how to manage SFTP in blob storage#how to save azure cost


How to print Azure Keyvault secret value in Databricks notebook ? Print shows REDACTED.

To ensure security, sensitive information is not printed directly in Databricks notebooks. Sometimes this useful feature gets in the way of developers. For example, if you want to verify a value using a code snippet because you lack direct access to the vault, the direct output will show REDACTED. To overcome this problem, we can use a simple code snippet which just…
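The excerpt stops before the snippet, so what follows is an assumption about the kind of workaround meant, not the author's code. A commonly described approach is to print a transformed copy of the value, for example with a space between every character, on the assumption that the redaction only matches the literal secret string. The helper name `spaced_out` and the sample value are hypothetical.

```python
def spaced_out(secret):
    """Return the secret with a space inserted between every character."""
    return " ".join(secret)


# Stand-in value; in a notebook this would come from the secret scope.
example = spaced_out("s3cr3t")
```

In a Databricks notebook the call might look like `print(spaced_out(dbutils.secrets.get(scope="my-scope", key="my-key")))`, where the scope and key names are placeholders.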


#Azure Databricks#how to print keyvault secret value in databricks#print redacted content in plain text#show the actual value of a hidden string in databricks#show value of redacted


How to migrate the secrets from one Azure Keyvault to another Keyvault quickly ?

If you need to migrate secrets from one keyvault to another, this article has a solution. Migrating all the secrets manually is a time-consuming process, and the manual process is also error-prone. I am sharing an automated approach using a Python program to copy all the secrets from the source keyvault and write them to…
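The program itself is not part of the excerpt, so the following is a minimal sketch of the copy loop under stated assumptions, not the author's implementation. It assumes both arguments are `SecretClient` objects from the `azure-keyvault-secrets` package and uses their `list_properties_of_secrets`, `get_secret`, and `set_secret` methods. The function name `copy_secrets` is hypothetical, and only the latest version of each enabled secret is copied.

```python
def copy_secrets(source_client, target_client):
    """Copy the current value of every enabled secret to the target vault.

    Returns the names of the secrets that were copied.
    """
    copied = []
    for props in source_client.list_properties_of_secrets():
        if not props.enabled:
            continue  # the value of a disabled secret cannot be read
        secret = source_client.get_secret(props.name)
        target_client.set_secret(props.name, secret.value)
        copied.append(props.name)
    return copied
```

Each client could be created with `SecretClient(vault_url="https://<vault-name>.vault.azure.net", credential=DefaultAzureCredential())`, using a separate credential per tenant when the vaults live in different tenants.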


#automated tool for migrating azure keyvault secrets#how to copy and paste secrets from one keyvault to another#how to export keyvault secrets#how to migrate secrets from one keyvault to another azure keyvault#how to replicate keyvault secrets#Python program to migrate keyvault secrets from one keyvault to another keyvault present in a different azure tenant


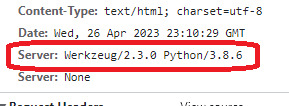

How to remove the Verbose Server Banner from Python Flask Application ?

The verbose server banner gives an external person a clue about the details of the server used by the APIs, so it is essential to hide this information. Typically, the APIs run behind a proxy or a firewall, so this header can be modified at that layer. In this article, I will explain the mechanism to update this header in cases where the APIs are not deployed behind a…
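The mechanism described in the full article is not visible in the excerpt. One generic way to control the header at the application layer is a small WSGI middleware, sketched below as an assumption rather than the author's method. The name `hide_server_banner` and the replacement banner value are hypothetical, and the HTTP server hosting the app may still add or override its own `Server` header, so the actual response should be checked.

```python
def hide_server_banner(app, banner="webserver"):
    """Wrap a WSGI app so its responses carry a neutral Server header."""

    def middleware(environ, start_response):
        def patched_start_response(status, headers, exc_info=None):
            # Drop any Server header set by the app, then add a neutral one.
            headers = [(k, v) for k, v in headers if k.lower() != "server"]
            headers.append(("Server", banner))
            return start_response(status, headers, exc_info)

        return app(environ, patched_start_response)

    return middleware
```

With Flask, the wrapper could be installed with `app.wsgi_app = hide_server_banner(app.wsgi_app)`.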


#How to remove Server banner from Flask application#how to remove server banner from Gunicorn#How to remove the Verbose Server Banner from Python Flask Application#How to update headers in python flask#remove Werkzeug Python header from Flask application


Where is the location of the bootstrap user-data script within an AWS EC2 instance (Linux) ?

A bootstrap script, or user-data, is a custom script that performs custom installation or modifications on an EC2 instance at the time of provisioning. This script is executed on top of the base AMI. The user-data or bootstrap script is copied to the instance immediately after provisioning and then executed. The script is located in the following location in case of Linux operating…


#how to check the progress of the execution of bootstrap scripts in an ec2 instance#location of aws ec2 bootstrap scripts in an ec2 instance#location of user data in aws ec2 instance#where is the logs of aws ec2 bootstrap script execution


How to convert or encode a file into a single line base64 string using Linux command line ?

Recently I came across a use case to store the license associated with a piece of software securely in a vault. The license was a binary file. The only way to store it in the secure vault was to convert it to a string. I used the following command to convert the file into a single-line base64 string. cat <file-name> | base64 -w 0 The -w 0 option disables line wrapping, so the encoded output is a single-line string. If you…
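For cases where the encoding has to happen inside a program rather than in the shell, the same result can be sketched in Python. This is an illustrative equivalent, not part of the original post: `base64.b64encode` does not insert line breaks, so its output is already a single line. The function name `file_to_base64_line` is hypothetical.

```python
import base64


def file_to_base64_line(path):
    """Read a file as bytes and return its base64 form as one line of text."""
    with open(path, "rb") as fh:
        return base64.b64encode(fh.read()).decode("ascii")
```

The original bytes can be recovered later with `base64.b64decode(encoded_string)`.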


#base64 encode a file#CentOS#convert a file into a single line base64 string#encode a file into a single line base64 string#linux command line#RHEL#shell script#ubuntu


How to delete an AWS secret immediately without recovery window ?

AWS Secrets Manager is a service to store sensitive information in a secure way. We interact with this service using the web console, the AWS CLI, or the AWS SDK. There is no direct option to delete an existing secret immediately from the web console. The web console asks for a recovery window, and the secret remains undeleted until the recovery window is over. This will be a problem for…
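The excerpt ends before the solution, so the following is a sketch of one way to do this through the SDK, not necessarily the command the article uses. It assumes `secrets_client` is a boto3 Secrets Manager client (for example `boto3.client("secretsmanager")`) and relies on the `ForceDeleteWithoutRecovery` parameter of `delete_secret`. The function name `force_delete_secret` is hypothetical.

```python
def force_delete_secret(secrets_client, secret_id):
    """Delete a secret immediately, skipping the recovery window.

    The deletion cannot be undone once the call succeeds.
    """
    return secrets_client.delete_secret(
        SecretId=secret_id,
        ForceDeleteWithoutRecovery=True,
    )
```

The AWS CLI exposes the same behaviour through the `--force-delete-without-recovery` flag of `aws secretsmanager delete-secret`.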


#delete an aws secret using aws cli#delete an aws secret using command line#delete an AWS secret without recovery window#force delete an aws secret#How to delete an AWS secret immediately without recovery window#set recovery window as zero for aws secret


How to list the AWS EC2 instances in an account using AWS CLI ?

The AWS CLI is a very powerful command-line utility provided by AWS. Here I give a set of AWS CLI commands to list the EC2 instances in an AWS account. List all EC2 instances in an AWS account (including stopped). aws ec2 describe-instance-status --include-all-instances The above command will list all the EC2 instances in an account irrespective of their status. The output will be in JSON…
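The same listing can be sketched with the SDK for cases where the result is needed inside a program. This is an illustrative counterpart to the CLI command above, not code from the post. It assumes `ec2_client` is a boto3 EC2 client (for example `boto3.client("ec2")`) and uses the `describe_instance_status` paginator with `IncludeAllInstances=True`. The function name `list_instances` and its `state` argument are hypothetical.

```python
def list_instances(ec2_client, state=None):
    """Return (instance_id, state_name) pairs for all instances.

    If state is given (for example "running" or "stopped"), only
    instances in that state are returned.
    """
    rows = []
    paginator = ec2_client.get_paginator("describe_instance_status")
    # IncludeAllInstances=True also reports instances that are not running.
    for page in paginator.paginate(IncludeAllInstances=True):
        for status in page["InstanceStatuses"]:
            name = status["InstanceState"]["Name"]
            if state is None or name == state:
                rows.append((status["InstanceId"], name))
    return rows
```

Calling `list_instances(client, state="stopped")` filters on the client side, which keeps the sketch close to the single CLI command shown in the text.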


#aws cli commands to list ec2 instance#filter query in aws cli#List all EC2 instances in an AWS account#List all EC2 instances in an AWS account of a specific state (running or stopped) in a tabular format#list all running aws ec2 instances using aws cli#list all stopped aws ec2 instances using aws cli


How to change the Hive Warehouse Directory ?

By default, the Hive warehouse directory is located at the HDFS location /user/hive/warehouse. If you want to change this location, you can add the following property to hive-site.xml. Everyone using Hive should have appropriate read/write permissions on this warehouse directory. <property> <name>hive.metastore.warehouse.dir</name> <value>/user/hivestore/warehouse</value> <description>location…
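After editing hive-site.xml, it can be useful to confirm which value the file actually carries. The following is a small sketch for that check, not part of the original post. It assumes the file follows the usual Hadoop layout of `<property>` elements inside a `<configuration>` root. The function name `read_hive_property` and the inline sample document are hypothetical.

```python
import xml.etree.ElementTree as ET


def read_hive_property(xml_text, name):
    """Return the value of the named property, or None if it is absent."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if prop.findtext("name", default="").strip() == name:
            return prop.findtext("value", default="").strip()
    return None


# Sample document using the property name and path from the text.
sample = """<configuration>
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/user/hivestore/warehouse</value>
  </property>
</configuration>"""

warehouse_dir = read_hive_property(sample, "hive.metastore.warehouse.dir")
```

For a real installation, the text of the file can be read with `open(path).read()` and passed in place of `sample`.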


#BigData#default warehouse#hadoop#hive#hive metastore#hive warehouse directory#hive-site.xml#linux#mapred#mapreduce#metastore#warehouse


How to execute Hadoop commands in hive shell or command line interface ?

We can execute Hadoop commands in the Hive CLI. It is very simple: put an exclamation mark (!) before your Hadoop command and a semicolon (;) after it. Example:

hive> !hadoop fs -ls / ;
drwxr-xr-x - hdfs supergroup 0 2013-03-20 12:44 /app
drwxrwxrwx - hdfs supergroup 0 2013-05-23 11:54 /tmp
drwxr-xr-x - hdfs supergroup 0 2013-05-08…


#Apache Hadoop#BigData#exclamation mark#hadoop#hadoop command#hadoop command in hive#hdfs#hive#hive cli#hive permissions#mapreduce#semicolon


How to create a local yum repository in CentOS or RHEL ?

Introduction

People working on Linux may be familiar with the yum command. yum install <package name> is a command that is used frequently for installing packages from a remote repository. YUM stands for Yellowdog Updater, Modified. YUM is a program that manages updates, installation and removal for Red Hat Package Manager (RPM) systems. yum install picks the repository URL from /etc/yum.repos.d/…
