#Optimize for Ad Hoc Workloads


Optimizing SQL Server Performance with "Optimize for Ad Hoc Workloads" Setting

Welcome to our deep dive into SQL Server’s “Optimize for Ad Hoc Workloads” option, a feature that might seem a bit technical at first but is incredibly handy for improving your database’s performance, especially if your environment is rife with one-time queries. Let’s break it down into more digestible pieces and explore how you can use it effectively with practical T-SQL examples. What is…




Understanding Snowflake’s Multi-Cluster Architecture

Introduction

Snowflake is a fully cloud-based data warehousing platform that has changed how organizations handle large volumes of data, from storage through business analytics. One of its most notable features is the multi-cluster architecture, which lets organizations scale compute power efficiently while keeping performance high and costs under control. Understanding this architecture helps in optimizing workloads and improving query performance when many users work concurrently.

What is the Multi-Cluster Architecture of Snowflake?

The separation of storage and compute is central to Snowflake: customers can scale each independently, so query processing is not throttled when one resource is fully used, unlike many traditional data warehouses, which tend to develop bottlenecks. With the multi-cluster architecture, a single virtual warehouse can process queries simultaneously across multiple compute clusters in response to variable workloads.

Key Components of Multi-Cluster Architecture

Virtual Warehouses: Snowflake processes queries in virtual warehouses, which operate in isolation from one another so that different workloads do not affect each other.

Auto-Scaling: Snowflake adds compute clusters automatically when the workload increases and removes them when they are no longer required.

Concurrency Management: Beyond scalability, multiple clusters let Snowflake absorb spikes in user activity, so queries that would otherwise queue and slow down are processed efficiently.

Storage and Compute Separation: Because storage is separated from compute resources, data access does not degrade when compute power is scaled up or down.

Benefits of the Multi-Cluster Architecture

Improved Query Performance

When many users or processes run queries simultaneously, the system automatically distributes the workload across the available compute clusters, preventing bottlenecks and keeping execution fast even at peak usage.

Effective Concurrency Handling

In traditional data warehouses, increased user activity usually leads to contention for shared resources and, as a result, slower queries. Snowflake addresses this by dynamically allocating additional compute clusters so that many queries can be processed concurrently without delay.

Cost-Efficient Auto-Scaling

Snowflake brings additional clusters online only when they are needed. As demand decreases, the system automatically scales back and avoids paying for idle resources, which makes it cost-efficient for businesses dealing with variable workloads.
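The scale-out and scale-back behaviour described above can be illustrated with a toy model. This is a minimal sketch, not Snowflake's actual scaling algorithm: the class name, the per-cluster capacity of 8 queries, and the load figures are all assumptions made up for illustration. The bounds loosely mirror the minimum and maximum cluster counts that a multi-cluster warehouse is configured with.

```python
from dataclasses import dataclass


@dataclass
class MultiClusterWarehouse:
    """Toy model of a warehouse that scales between min and max clusters."""

    min_clusters: int = 1
    max_clusters: int = 3
    queries_per_cluster: int = 8  # hypothetical capacity, not a Snowflake figure
    running: int = 1

    def rebalance(self, concurrent_queries: int) -> int:
        # Clusters needed to serve the load without queuing (ceiling division).
        needed = -(-concurrent_queries // self.queries_per_cluster)
        # Never go below the minimum or above the maximum cluster count.
        self.running = max(self.min_clusters, min(self.max_clusters, needed))
        return self.running


warehouse = MultiClusterWarehouse()
# Simulated concurrent query counts over time: quiet, busy, peak, cooling, idle.
history = [warehouse.rebalance(load) for load in (4, 20, 40, 10, 0)]
print(history)
```

In this model the peak load of 40 queries would need five clusters, but the warehouse stops at its configured maximum of three, so the remaining queries would queue. Once the load drops, the cluster count falls back toward the minimum, which is where the cost saving comes from.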

Frictionless Workload Distribution

Companies with mixed workloads, such as extraction processes, ad hoc queries, and reporting, can assign separate compute clusters to each workload so that one load does not interfere with another.

Best Practices for Multi-cluster Warehouses

Configure scaling policies: Define policies that scale compute resources automatically in line with demand.

Monitor usage and cost: Review performance metrics at regular intervals to tune cluster settings and avoid unnecessary expense.

Optimize query execution: Speed up queries by defining clustering keys and taking advantage of caching.

Segment workloads: Give departments or workloads dedicated virtual warehouses to avoid resource contention.

Why Organizations Must Opt for Snowflake’s Multi-Cluster Architecture

The multi-cluster architecture of Snowflake is well suited to enterprises that handle large volumes of data, particularly for real-time analytics, machine learning, and data integration. It gives organizations consistent performance and elastic resources at lower operational cost.

For those who want to learn what Snowflake has to offer, Snowflake training in Bangalore provides courses that cover most topics. From core concepts to optimization and hands-on exercises, professionals can learn the platform and put their Snowflake investment into practice.

Conclusion

Snowflake's multi-cluster architecture is a boon for enterprises looking for scalable, high-performance data warehousing. It enables dynamic compute resource management and efficient concurrency handling, which in turn allows organizations to fine-tune workloads without sacrificing speed or incurring unnecessary costs. To understand Snowflake in depth and learn how to put these best practices into place, Snowflake training in Bangalore can be a valuable investment for data professionals and businesses alike.


Key Components of Oracle Analytics Cloud Architecture and Their Functions

Oracle Analytics Cloud Architecture is a robust framework that facilitates data-driven decision-making across enterprises. It integrates advanced analytics, artificial intelligence, and machine learning capabilities to provide organizations with deep insights from their data. By leveraging Oracle Analytics Cloud Architecture, businesses can optimize their data management strategies, enhance reporting, and improve overall operational efficiency.

To fully understand its capabilities, it is essential to explore the key components that form the foundation of Oracle Analytics Cloud Architecture and how they contribute to seamless analytics and data integration.

Understanding Oracle Analytics Cloud Architecture

The Oracle Analytics Cloud Architecture is structured to provide a scalable, high-performance environment for business intelligence and data analytics. It incorporates various components that work together to streamline data processing, visualization, and predictive analysis. The architecture is built on Oracle Cloud infrastructure for analytics, ensuring high availability, security, and flexibility for businesses managing large-scale data operations.

Key Components of Oracle Analytics Cloud Architecture

1. Data Integration Layer

At the core of Oracle Analytics Cloud Architecture is the Oracle Cloud data integration layer, which allows businesses to connect and consolidate data from multiple sources seamlessly. This component ensures that structured and unstructured data from on-premises and cloud-based systems can be integrated efficiently for analysis. The data integration layer supports automated data ingestion, transformation, and cleansing, ensuring high-quality data for analytics.

2. Data Storage and Management

A critical aspect of Oracle Analytics Cloud Architecture is its ability to handle vast amounts of data while ensuring optimal performance. The platform provides robust storage options, including Oracle BI architecture, which supports relational databases, data lakes, and NoSQL repositories. These storage solutions enable businesses to store historical and real-time data while maintaining high processing speeds for complex analytical queries.

3. Analytical Processing Engine

The analytical processing engine executes complex calculations, predictive modeling, and AI-driven insights within Oracle Analytics Cloud Architecture. This component integrates machine learning algorithms to enhance data processing capabilities. It also enables businesses to automate decision-making processes, ensuring that analytics remain proactive and forward-looking.

4. Visualization and Reporting Tools

A significant advantage of Oracle Analytics Cloud Architecture is its comprehensive suite of visualization tools. The platform includes Oracle Analytics Cloud components that provide dynamic dashboards, interactive reports, and ad hoc query capabilities. These tools empower business users to explore data intuitively, create compelling reports, and gain actionable insights through real-time visual analytics.

5. Security and Compliance Framework

Data security is a top priority in Oracle Analytics Cloud Architecture, with built-in security measures that protect sensitive business information. The platform incorporates Oracle Analytics Cloud security features such as data encryption, access control, and multi-factor authentication. Compliance with global regulations ensures that enterprises can trust the security of their data within the Oracle Cloud environment.

6. Scalability and Performance Optimization

As businesses grow, their data needs evolve, requiring a scalable analytics infrastructure. Oracle Analytics Cloud scalability ensures enterprises can expand their analytics capabilities without compromising performance. The architecture supports automatic resource allocation, high-speed data processing, and workload balancing to meet the demands of large-scale data operations.

7. AI and Machine Learning Integration

The integration of AI and ML technologies within Oracle Analytics Cloud Architecture enhances predictive analytics and automated insights. By leveraging these advanced capabilities, businesses can identify trends, forecast outcomes, and improve strategic planning. The inclusion of AI-driven analytics within the architecture ensures that organizations stay ahead in a data-driven competitive landscape.

Challenges and Optimization Strategies

Despite the powerful capabilities of Oracle Analytics Cloud Architecture, organizations may face challenges such as data silos, integration complexities, and performance tuning issues. To address these concerns, businesses must implement best practices such as optimizing query performance, streamlining data pipelines, and ensuring proper governance. Additionally, leveraging the Oracle Cloud analytics framework can enhance workflow automation and improve overall efficiency in data management.

Maximizing Business Value with Oracle Analytics Cloud Architecture

Oracle Analytics Cloud Architecture provides enterprises with an advanced ecosystem for data-driven decision-making. By utilizing its core components effectively, businesses can enhance their analytics capabilities, improve data integration, and achieve greater operational agility. With support for Oracle Cloud-based analytics solutions, organizations can seamlessly transition to a modern analytics infrastructure that meets evolving business demands.

Accelerate Your Analytics Strategy with Dataterrain

For businesses seeking expert guidance in implementing Oracle Analytics Cloud Architecture, Dataterrain offers specialized solutions tailored to optimize data integration, reporting, and analytics performance. With extensive experience in Oracle data analytics architecture, Dataterrain helps organizations maximize the value of their data investments while ensuring seamless transitions to cloud-based analytics environments. Contact Dataterrain today to unlock the full potential of your analytics infrastructure and drive business innovation.


Designed specifically for enterprise applications and workloads, the Seagate® Exos® 7E10 Enterprise Hard Drive offers up to 10TB of storage without sacrificing performance. These secure hard drives with high storage capacity and performance are optimized for demanding storage applications. The Exos 7E10 is driven by generations of industry-leading innovation. It delivers proven performance, absolute reliability, highest security and low total cost of ownership for demanding continuous operation.

Cost-effective reliability: With up to 10 TB per hard drive, the Exos 7E10 is perfect for use as storage for data center infrastructures requiring extremely reliable enterprise-class hard drives.

High performance: Exos 7E10 hard drives offer an MTBF value of 2 million hours and support workloads of up to 550 TB per year. A state-of-the-art cache, ad-hoc error correction algorithms and the torsional vibration design ensure consistent performance in replicated systems and multi-drive RAID systems.

Easy integration, optimal total cost of operation: Meet the storage needs of your workloads with the most efficient and cost-effective data center space currently available on the market. With SAS (12Gb/s) and SATA (6Gb/s) interface options, the Exos 7E10 is easy to integrate into storage systems.

Advanced security features: The Exos 7E10 prevents unauthorized drive access and protects stored data. Seagate Secure makes it easier to use and safely dispose of hard drives, helps protect data at rest and comply with data protection requirements at corporate and federal level.


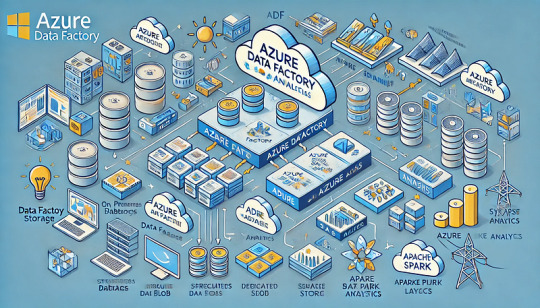

Explore how ADF integrates with Azure Synapse for big data processing.

How Azure Data Factory (ADF) Integrates with Azure Synapse for Big Data Processing

Azure Data Factory (ADF) and Azure Synapse Analytics form a powerful combination for handling big data workloads in the cloud.

ADF enables data ingestion, transformation, and orchestration, while Azure Synapse provides high-performance analytics and data warehousing. Their integration supports massive-scale data processing, making them ideal for big data applications like ETL pipelines, machine learning, and real-time analytics.

Key Aspects of ADF and Azure Synapse Integration for Big Data Processing

1. Data Ingestion at Scale

ADF acts as the ingestion layer, allowing seamless data movement into Azure Synapse from multiple structured and unstructured sources, including:

Cloud Storage: Azure Blob Storage, Amazon S3, Google Cloud Storage

On-Premises Databases: SQL Server, Oracle, MySQL, PostgreSQL

Streaming Data Sources: Azure Event Hubs, IoT Hub, Kafka

SaaS Applications: Salesforce, SAP, Google Analytics

🚀 ADF’s parallel processing capabilities and built-in connectors make ingestion highly scalable and efficient.

2. Transforming Big Data with ETL/ELT

ADF enables large-scale transformations using two primary approaches:

ETL (Extract, Transform, Load): Data is transformed in ADF’s Mapping Data Flows before loading into Synapse.

ELT (Extract, Load, Transform): Raw data is loaded into Synapse, where transformation occurs using SQL scripts or Apache Spark pools within Synapse.

🔹 Use Case: Cleaning and aggregating billions of rows from multiple sources before running machine learning models.

3. Scalable Data Processing with Azure Synapse

Azure Synapse provides powerful data processing features:

Dedicated SQL Pools: Optimized for high-performance queries on structured big data.

Serverless SQL Pools: Enables ad-hoc queries without provisioning resources.

Apache Spark Pools: Runs distributed big data workloads using Spark.

💡 ADF pipelines can orchestrate Spark-based processing in Synapse for large-scale transformations.

4. Automating and Orchestrating Data Pipelines

ADF provides pipeline orchestration for complex workflows by:

Automating data movement between storage and Synapse.

Scheduling incremental or full data loads for efficiency.

Integrating with Azure Functions, Databricks, and Logic Apps for extended capabilities.

⚙️ Example: ADF can trigger data processing in Synapse when new files arrive in Azure Data Lake.

5. Real-Time Big Data Processing

ADF enables near real-time processing by:

Capturing streaming data from sources like IoT devices and event hubs.

Running incremental loads to process only new data.

Using Change Data Capture (CDC) to track updates in large datasets.

📊 Use Case: Ingesting IoT sensor data into Synapse for real-time analytics dashboards.
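The incremental-load idea above (process only rows that changed since the last run) is commonly implemented with a high-watermark value. The following is a minimal in-memory sketch of that pattern, not ADF's own API: the function name, the `modified` field, and the sample rows are hypothetical, and a real pipeline would read the watermark from a control table and query the source system instead of filtering a list.

```python
from datetime import datetime


def incremental_load(source_rows, watermark):
    """Return rows modified after `watermark`, plus the advanced watermark."""
    new_rows = [row for row in source_rows if row["modified"] > watermark]
    # If nothing changed, keep the old watermark so the next run starts there.
    new_watermark = max((row["modified"] for row in new_rows), default=watermark)
    return new_rows, new_watermark


# Hypothetical source table with a last-modified timestamp per row.
source = [
    {"id": 1, "modified": datetime(2024, 1, 1)},
    {"id": 2, "modified": datetime(2024, 1, 2)},
    {"id": 3, "modified": datetime(2024, 1, 3)},
]

# First run: everything after 1 January is picked up.
batch, watermark = incremental_load(source, datetime(2024, 1, 1))
print([row["id"] for row in batch])

# Second run with the advanced watermark: nothing new to process.
rerun, watermark = incremental_load(source, watermark)
print(len(rerun))
```

Storing the watermark only after a batch has loaded successfully keeps the process restartable: a failed run simply picks up the same rows again on the next attempt.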

6. Security & Compliance in Big Data Pipelines

Data Encryption: Protects data at rest and in transit.

Private Link & VNet Integration: Restricts data movement to private networks.

Role-Based Access Control (RBAC): Manages permissions for users and applications.

🔐 Example: ADF can use managed identity to securely connect to Synapse without storing credentials.

Conclusion

The integration of Azure Data Factory with Azure Synapse Analytics provides a scalable, secure, and automated approach to big data processing.

By leveraging ADF for data ingestion and orchestration and Synapse for high-performance analytics, businesses can unlock real-time insights, streamline ETL workflows, and handle massive data volumes with ease.

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/


How Do ChatGPT Development Services Enhance Workflow Automation?

In the digital era, businesses are constantly seeking innovative ways to optimize processes, reduce manual workload, and improve efficiency. Workflow automation has become a cornerstone of modern operations, and the rise of AI-driven technologies like ChatGPT has transformed how businesses approach automation.

ChatGPT, a powerful AI language model developed by OpenAI, can streamline workflows, enhance productivity, and reduce operational costs when integrated into business systems. This blog explores how ChatGPT development services enhance workflow automation and the transformative impact they have on various industries.

Understanding Workflow Automation

Workflow automation refers to the use of technology to perform routine tasks or processes with minimal human intervention. From handling customer inquiries to managing internal operations, automation simplifies complex workflows, enabling teams to focus on strategic goals.

Traditional automation tools rely on predefined rules and logic. However, ChatGPT introduces a new dimension to automation by leveraging natural language understanding and machine learning. It can interpret, respond to, and adapt to dynamic inputs, making it a versatile tool for automating workflows.

Key Benefits of ChatGPT Development Services in Workflow Automation

1. Intelligent Task Management

ChatGPT excels at managing repetitive and time-consuming tasks. For instance, it can:

Schedule appointments.

Send reminders to teams.

Generate reports from raw data.

By integrating ChatGPT into workflow management tools, businesses can create a centralized system for task delegation and tracking.

2. Enhanced Communication and Collaboration

Effective communication is crucial for seamless workflow execution. ChatGPT acts as a virtual assistant, bridging communication gaps by:

Responding to queries in real-time.

Summarizing lengthy documents or meeting notes.

Translating messages for global teams.

Its ability to process and generate human-like text fosters collaboration among team members and stakeholders.

3. Personalized Customer Interaction

Customer support is a vital area where ChatGPT shines. By integrating ChatGPT into CRM systems, businesses can:

Provide instant, accurate responses to customer inquiries.

Resolve issues 24/7 without human intervention.

Offer personalized recommendations based on customer preferences.

This not only improves customer satisfaction but also reduces the workload on support teams.

4. Automating Content Creation

ChatGPT can generate content at scale, making it a valuable asset for marketing and documentation workflows. Use cases include:

Writing blog posts, social media captions, and product descriptions.

Drafting professional emails and proposals.

Creating training materials for employees.

Automating content creation accelerates time-to-market and ensures consistency in messaging.

5. Data Analysis and Reporting

ChatGPT development services can be tailored to integrate with data analytics tools. It can:

Interpret large datasets and extract actionable insights.

Generate detailed reports with easy-to-understand summaries.

Answer ad-hoc queries about data trends and performance metrics.

This makes data-driven decision-making more accessible across organizations.

6. Seamless Integration with Existing Tools

Modern businesses rely on a variety of tools for operations, such as Slack, Trello, and Salesforce. ChatGPT APIs can be seamlessly integrated into these platforms, enhancing their functionality. For example:

Automating ticket resolution in support systems.

Creating interactive dashboards.

Enabling natural language queries in analytics tools.

This flexibility ensures that ChatGPT fits into your existing tech ecosystem.

How ChatGPT Development Services Are Customized for Your Business

The versatility of ChatGPT allows for tailored development services to meet specific business needs. Developers work closely with organizations to design custom workflows that align with their objectives. Customization includes:

Training the model on proprietary data: Ensuring that ChatGPT understands your business terminology and processes.

Creating conversational flows: Designing dialogues that match your brand’s tone and style.

Integrating APIs: Connecting ChatGPT to your existing systems for seamless automation.

Real-World Applications of ChatGPT in Workflow Automation

1. Healthcare

Automating patient appointment scheduling and reminders.

Providing instant answers to common health queries.

Summarizing patient records for quick review by healthcare providers.

2. E-Commerce

Managing customer queries about products and orders.

Personalizing product recommendations.

Generating SEO-optimized product descriptions.

3. Human Resources

Streamlining recruitment processes by screening resumes and scheduling interviews.

Answering employee questions about company policies.

Automating onboarding processes with personalized training guides.

4. Finance

Assisting customers with FAQs on loans, investments, and payments.

Generating monthly financial reports.

Analyzing transactions to detect fraud patterns.

Best Practices for Implementing ChatGPT in Workflow Automation

Identify Repetitive Tasks: Analyze workflows to pinpoint processes that can benefit most from automation.

Train the Model: Use domain-specific data to train ChatGPT for higher accuracy and relevance.

Start Small: Begin with a pilot project to test effectiveness and gather feedback.

Ensure Security: Protect sensitive data with robust encryption and compliance measures.

Monitor and Improve: Continuously track performance and refine ChatGPT’s responses for better outcomes.

The Future of Workflow Automation with ChatGPT

As ChatGPT technology evolves, its capabilities in workflow automation will expand further. With advancements in natural language processing and machine learning, ChatGPT will become an indispensable tool for businesses aiming to stay competitive in the digital age.

The integration of ChatGPT into workflows not only enhances productivity but also empowers teams to focus on innovation and growth. By adopting ChatGPT development services, businesses can unlock new levels of efficiency and deliver exceptional value to their customers.

Conclusion

ChatGPT development services are redefining workflow automation across industries. Its ability to understand, respond, and adapt to human inputs makes it an ideal solution for automating complex workflows and driving operational efficiency. Whether it’s streamlining communication, managing tasks, or generating insights, ChatGPT has the potential to transform how businesses operate.

Embrace ChatGPT-powered automation today to stay ahead in a competitive marketplace.


Utilize Dell Data Lakehouse To Revolutionize Data Management

Introducing the most recent upgrades to the Dell Data Lakehouse. With the help of automatic schema discovery, Apache Spark, and other tools, your team can move from routine data administration to innovation.

Dell Data Lakehouse

Businesses’ data management plans are becoming more and more important as they investigate the possibilities of generative artificial intelligence (GenAI). Data quality, timeliness, governance, and security were found to be the main obstacles to successfully implementing and expanding AI in a recent MIT Technology Review Insights survey. It’s evident that having the appropriate platform to arrange and use data is just as important as having data itself.

As part of the AI-ready data platform and infrastructure capabilities of the Dell AI Factory, Dell is presenting the most recent improvements to the Dell Data Lakehouse, developed in collaboration with Starburst. These improvements are intended to empower IT administrators and data engineers alike.

Dell Data Lakehouse Sparks Big Data with Apache Spark

Dell Data Lakehouse combined with Apache Spark offers a single-platform approach that can streamline big data processing and speed up insights.

Earlier this year, Dell unveiled the Dell Data Lakehouse to help address these issues. You can now get rid of data silos, unleash performance at scale, and democratize insights with a turnkey data platform that combines Dell’s AI-optimized hardware with a full-stack software suite and is driven by Starburst and its improved Trino-based query engine.

Through the Dell AI Factory strategy, Dell is working with Starburst to continue pushing the boundaries with cutting-edge solutions to help you succeed with AI. In addition to those advancements, Dell is expanding the Dell Data Lakehouse by introducing a fully managed, deeply integrated Apache Spark engine that reimagines data preparation and analytics.

Spark’s industry-leading data processing capabilities are now fully integrated into the platform, marking a significant improvement. Thanks to the combination of Spark and Trino, the Dell Data Lakehouse supports a wide variety of analytics and AI-driven workloads. It brings speed, scale, and innovation together under one roof, allowing you to deploy the right engine for the right workload and manage everything from the same management console.

Best-in-Class Connectivity to Data Sources

In addition to supporting custom Trino connectors for special and proprietary data sources, the platform now works with more than 50 connectors. The Dell Data Lakehouse reduces data movement by enabling ad-hoc and interactive analysis across dispersed data silos with a single point of entry to the various sources. Users can now extend their reach into distributed data silos, from databases like Cassandra, MariaDB, and Redis to additional sources like Google Sheets, local files, or even a custom application within their environment.

External Engine Access to Metadata

Dell has always supported Iceberg as part of its commitment to an open ecosystem. By allowing other engines like Spark and Flink to securely access metadata in the Dell Data Lakehouse, Dell is extending that commitment. With optional security features like Transport Layer Security (TLS) and Kerberos, this functionality enables better data discovery, processing, and governance.

Improved Support Experience

Thanks to improved support capabilities, administrators can now easily generate and download a pre-compiled bundle of full-stack system logs. By offering a thorough view of system health, this improves the support experience and enables Dell support personnel to identify and address problems promptly.

Automated Schema Discovery

The most recent upgrade simplifies schema discovery, enabling you to find and add data schemas automatically with minimal manual intervention. This automation lowers the risk of human error in data integration while increasing efficiency. For instance, when a logging process generates a new log file every hour, rolling over from the previous hour's file, schema discovery finds the newly added files so that users of the Dell Data Lakehouse can query them.
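The hourly log-file example can be pictured as a periodic scan that registers any file not seen before. The following is a minimal sketch of that idea only; it is not how the Dell Data Lakehouse implements schema discovery, and the function name and file names are hypothetical.

```python
def discover_new_files(listed_files, registered):
    """Register files that are not yet known and return them in sorted order."""
    new_files = sorted(set(listed_files) - registered)
    registered.update(new_files)  # remember them so later scans skip them
    return new_files


registered = set()

# First scan: two hourly log files exist, and both are new.
first = discover_new_files(["app-00.log", "app-01.log"], registered)
print(first)

# An hour later the logger has rolled over to a third file.
second = discover_new_files(["app-00.log", "app-01.log", "app-02.log"], registered)
print(second)
```

Only the file added since the previous scan shows up in the second result, which is what lets newly written data become queryable without a manual registration step.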

Consulting Services

Use Dell Professional Services to optimize your Dell Data Lakehouse for better AI results and strategic insights. The consultants will assist with catalog metadata, onboarding data sources, implementing your Data Lakehouse, and streamlining operations by optimizing data pipelines.

Start Exploring

Visit the Dell Demo Center to explore the Dell Data Lakehouse through curated labs in a virtual environment. Get in touch with your Dell account executive to schedule a visit to the Customer Solution Centers in Round Rock, Texas, and Cork, Ireland, for a hands-on experience. There you can work with experts in a technical deep dive and design session.

Looking Forward

The Dell Data Lakehouse will integrate Apache Spark in early 2025. With this integration, large volumes of structured, semi-structured, and unstructured data can be processed for AI use cases in a single environment. Dell encourages you to keep exploring how the Dell Data Lakehouse can meet your specific requirements and help you get the most out of your investment.

Read more on govindhtech.com



Load Testing vs Stress Testing | Key Differences

In this article, we will delve into the intricacies of load testing and stress testing, uncovering their fundamental differences and shedding light on how they contribute uniquely to refining software performance.

What is Load Testing?

Load testing is a type of performance testing that focuses on the behavior and responsiveness of an application under normal load conditions. It simulates the expected workload on the application, such as the number of concurrent users or transactions, to evaluate its performance metrics.

Load testing helps you gauge the level of load up to which performance remains optimal and user experience doesn’t take a hit. It helps identify performance bottlenecks, uncover any inefficiencies, and ensure that the application can handle the projected load without compromising its speed, stability, or user experience.

What is Stress Testing?

Stress testing is another fundamental performance testing technique aimed at assessing an application’s robustness and reliability under extreme and challenging conditions.

Unlike load testing, stress testing pushes an application beyond its normal load conditions.

The primary objective of stress testing is to identify the breaking point of the system, understand how it behaves under extreme stress, and evaluate its ability to recover gracefully from any failures or crashes.

This type of testing helps uncover vulnerabilities, bottlenecks, and weaknesses in the application’s architecture, enabling developers to fortify the system and enhance its overall performance and stability.

Load Testing Vs Stress Testing

Load testing is primarily focused on assessing the performance of a system under anticipated or typical user loads. The primary objective is to ensure that the system functions smoothly and efficiently under regular, expected conditions. Think of it as evaluating how well your car runs during everyday city driving or highway cruising. Load testing helps answer questions like:

Can the application handle the expected number of users without slowing down?

Is the system responsive under typical user interactions?

Are the response times within acceptable limits under normal usage?

In essence, load testing assures that your application will perform adequately when it encounters the expected traffic load, ensuring a good user experience under usual circumstances.

On the flip side, stress testing is all about pushing the system to its limits and beyond. The main aim here is to evaluate how well the system copes with extreme and adverse conditions that exceed its standard operational boundaries. Imagine testing how your car handles the harshest off-road terrain or extreme weather conditions. Stress testing seeks answers to critical questions such as:

What happens when the system faces an unexpected surge in user traffic?

How does the application behave when hardware components fail unexpectedly?

Can the system recover gracefully from sudden and intense stress?

Stress testing goes beyond everyday scenarios to identify vulnerabilities and weaknesses that could lead to system failures under exceptional circumstances. It’s like stress-testing a bridge to ensure it can withstand the most severe earthquakes or storms.
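The contrast between the two approaches can be sketched in a few lines of Python. This is a minimal, simulated illustration rather than a real test harness: the service model, `CAPACITY_USERS`, `BASE_LATENCY_MS`, and `SLA_MS` are all hypothetical values chosen for the example, and a real test would drive an actual system with one of the tools listed later in this article.

```python
# Hypothetical service model: latency stays flat until the number of
# concurrent users exceeds capacity, then grows with the overload.
CAPACITY_USERS = 200      # assumed capacity, not taken from the article
BASE_LATENCY_MS = 120.0   # assumed latency at or below capacity
SLA_MS = 500.0            # assumed acceptable response time


def simulated_latency_ms(users: int) -> float:
    """Return the modeled response time for a number of concurrent users."""
    if users <= CAPACITY_USERS:
        return BASE_LATENCY_MS
    overload = (users - CAPACITY_USERS) / CAPACITY_USERS
    return BASE_LATENCY_MS * (1 + 10 * overload)


def load_test(expected_users: int) -> bool:
    """Load test: does the system meet the SLA at the expected load?"""
    return simulated_latency_ms(expected_users) <= SLA_MS


def stress_test(start_users: int, step: int, max_users: int):
    """Stress test: ramp the load past normal levels and return the first
    user count at which the SLA is violated, or None if it never is."""
    for users in range(start_users, max_users + 1, step):
        if simulated_latency_ms(users) > SLA_MS:
            return users
    return None


if __name__ == "__main__":
    print("Meets SLA at expected load:", load_test(150))
    print("Breaking point (users):", stress_test(150, 25, 1000))
```

The load test asks a yes/no question about one expected load level, while the stress test keeps increasing the load until something gives, which is the distinction the two sets of questions above draw.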

What are the Best Practices for Load Testing and Stress Testing?

Some of the best practices for load and stress testing, or any type of performance testing, are as follows:

Always begin with a performance baseline. A solid baseline is crucial for identifying bottlenecks and for measuring improvements or declines over time.

Prioritize your performance tests, since covering every user workflow is impractical. Focus on the most essential workflows initially.

Integrate performance testing into your CI/CD pipeline. Run performance tests as part of continuous integration and deployment to ensure continuous testing. Ad hoc approaches are rarely effective in practice.

Allocate time for addressing any performance issues. Running performance tests in the early stages of development leaves more time for fixing issues before the product goes to production. Implementing a shift-left approach has a remarkable impact on testing.
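The baseline and CI/CD practices above can be combined into a simple regression gate. The following is a minimal sketch, assuming response times are collected as lists of milliseconds; the function names and the 10% tolerance are illustrative choices, not a standard.

```python
from statistics import quantiles


def p95(samples):
    """95th percentile of response-time samples (milliseconds)."""
    # quantiles(..., n=20) returns 19 cut points; the last is the 95th percentile.
    return quantiles(samples, n=20)[-1]


def check_against_baseline(baseline_ms, current_ms, tolerance=0.10):
    """Compare the current run with the baseline.

    Returns (passed, change), where change is the relative change in p95
    latency and passed is False when it exceeds the tolerance.
    """
    base = p95(baseline_ms)
    cur = p95(current_ms)
    change = (cur - base) / base
    return change <= tolerance, change


if __name__ == "__main__":
    baseline = [100.0] * 20   # hypothetical baseline samples
    current = [150.0] * 20    # hypothetical samples from the latest build
    passed, change = check_against_baseline(baseline, current)
    print(f"p95 changed by {change:.0%}; gate passed: {passed}")
```

In a pipeline, a failed gate would typically end the job with a non-zero exit code so that the regression is caught before release.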

Tools for Load Testing and Stress Testing

Load testing and stress testing are critical activities in software testing that help ensure the performance and stability of applications under different conditions. To conduct these tests effectively, you’ll need the right tools. Here are some popular tools for load testing and stress testing:

1. Apache JMeter: Apache JMeter is an open-source tool that can perform load testing on a variety of applications, servers, and protocols. It allows software testers to simulate a heavy load on a server, network, or object to analyze overall performance under different conditions. Apache JMeter supports both load testing and stress testing.

2. LoadRunner: LoadRunner, now part of OpenText (which acquired Micro Focus), is a powerful tool for performance testing. It supports a wide range of protocols and can simulate thousands of virtual users to test your application’s scalability and performance. LoadRunner supports both load testing and stress testing.

3. Gatling: Gatling is an open-source, highly customizable load testing tool. It is particularly well-suited for testing asynchronous scenarios and can generate detailed reports to help you analyze performance bottlenecks. Gatling supports both load testing and stress testing.

4. BlazeMeter: BlazeMeter is a cloud-based load-testing platform that allows users to create and execute tests easily. It integrates with various CI/CD tools and provides real-time reporting and analytics. BlazeMeter supports both load testing and stress testing.

5. Neoload: Neoload is a user-friendly tool for performance testing. It supports a wide range of technologies, including mobile and IoT, and offers features like test design, test maintenance, and performance analysis. Neoload supports both load testing and stress testing.

6. Locust: Locust is an open-source load testing tool that allows you to write test scenarios in Python. It’s easy to set up and can simulate thousands of users. It focuses on simplicity and scalability. Locust supports both load testing and stress testing.

7. WebLOAD: WebLOAD is a load testing tool designed for web and mobile applications. It offers a wide range of testing capabilities, including peak load testing and continuous testing. WebLOAD supports both load testing and stress testing.

8. LoadUI: LoadUI is a load testing tool by SmartBear that focuses on ease of use. It allows you to create complex load tests through a graphical interface. LoadUI supports both load testing and stress testing.

Read more about the Top 6 Types of Performance Testing.

Conclusion:

Load and stress testing are essential components of performance testing, but they serve different purposes and involve distinct testing approaches. Performance testing services encompass these methodologies to ensure that a system can handle its expected user load efficiently while pushing the system beyond its limits to uncover vulnerabilities. Choosing the right testing strategy, tools, and techniques from performance testing services depends on the specific goals of your testing process and the characteristics of your application.

0 notes

Text

Each organization has its reasons to move to the cloud. Some do it for cost optimization, while others do it to scale operations.

The cloud journey means different things to different organizations. There are some whose foundation is on the cloud; these organizations are also known as cloud natives or born in cloud companies. Since they are built ground up on the cloud, they don’t have to deal with legacy systems or infrastructures.

But the rest of the organizations, those that have been in business for a certain period, are bound to have some, if not the majority, of their workloads on-premises. This is especially true of large organizations that have been in business for a long time.

Not only do they have essential workloads in their data center, in many cases, the applications are monolithic. So what one has is a system built on legacy infrastructure and monolithic workloads. This is not necessarily bad because these IT infrastructures were custom-built and have stood the test of time.

When a company adopts cloud, it does so purely for tangible business benefits. And many organizations (including large ones) have come to realize there are instances when you are left with no choice but to move to the cloud, purely because of what the cloud offers in the form of scale, reduced lead time etc.

Once an enterprise decides to embark on the cloud journey, a critical element of that journey follows: migrating the chosen workloads to the assigned cloud platform. One thing is sure: the migration process is no walk in the park. More often than not, a cloud project fails primarily because of issues in migrating the workloads, so much so that it defeats the entire purpose of going to the cloud.

It is vital to have a clearly defined cloud strategy and not to treat the move as an ad-hoc project. A good business strategy has various elements that are defined first, after which a team is entrusted with implementing them. A cloud rollout should follow a similar structure.

One of the fundamental tenets of the cloud is to simplify managing IT infrastructure. But as more workloads go to the cloud, issues around vendor lock-in and lack of visibility across multiple cloud environments are surfacing. There are no easy answers to this, but based on our understanding, it is best to spread your cloud portfolio across multiple cloud service providers while maintaining a hybrid approach. It is also imperative to give due importance to regular training on how to use and manage cloud environments. Offloading workloads to the cloud helps free up bandwidth for internal IT teams, but it is essential to acknowledge that a successful deployment is not the sole responsibility of the cloud service provider; it is an equal partnership.

0 notes

Text

What Is a Business Intelligence Platform and Why Do You Need One?

In today's fast-paced business world, data is king. But having access to data is not enough; it's about turning that data into actionable insights. This is where a Business Intelligence Platform comes into play. It's not just another tech buzzword; it's a vital tool that can transform the way you operate and make decisions in your organization. In this blog, we'll demystify what a Business Intelligence Platform is and, more importantly, why your business needs one.

Unpacking Business Intelligence Platform

A Business Intelligence Platform (BI Platform) is a comprehensive software solution designed to collect, process, analyze, and visualize data, empowering businesses to make informed decisions. At its core, it's about turning raw data into valuable insights.

Key Components of a BI Platform

Data Integration: BI Platforms collect data from various sources, such as databases, spreadsheets, and cloud applications, and consolidate it into a unified view.

Data Analysis: Once data is integrated, the platform performs various analytical operations, including data mining, statistical analysis, and predictive modeling.

Data Visualization: The insights gained from data analysis are presented through interactive dashboards, reports, and visualizations, making it easier for users to understand and act upon.

Business Reporting: BI Platforms offer the capability to generate and share detailed reports, often scheduled and automated.

Data Querying: Users can explore data through ad-hoc queries and create custom reports as needed.
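To make the components above more concrete, here is a toy sketch of how integration, analysis, ad-hoc querying, and reporting fit together. It uses plain Python data structures; the two data sources, the field names, and all function names are hypothetical and do not correspond to any particular BI product.

```python
from collections import defaultdict

# Hypothetical records from two sources (e.g. a CRM export and a web shop).
crm_sales = [
    {"region": "North", "product": "A", "revenue": 1200.0},
    {"region": "South", "product": "B", "revenue": 800.0},
]
webshop_sales = [
    {"region": "North", "product": "B", "revenue": 300.0},
    {"region": "South", "product": "A", "revenue": 500.0},
]


def integrate(*sources):
    """Data integration: consolidate several sources into one unified list."""
    return [row for source in sources for row in source]


def revenue_by(rows, key):
    """Data analysis: total revenue grouped by the given field."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[key]] += row["revenue"]
    return dict(totals)


def ad_hoc_query(rows, **filters):
    """Data querying: return the rows matching every field=value filter."""
    return [r for r in rows if all(r[k] == v for k, v in filters.items())]


def report(totals):
    """Business reporting: render totals as text lines, largest first."""
    ordered = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return [f"{name}: {value:.2f}" for name, value in ordered]


if __name__ == "__main__":
    unified = integrate(crm_sales, webshop_sales)
    for line in report(revenue_by(unified, "region")):
        print(line)
    print(ad_hoc_query(unified, product="A", region="South"))
```

A real platform adds visualization, scheduling, and access control on top, but the flow from raw sources to a shareable summary is the same.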

Why Your Business Needs a BI Platform

Now that we've defined what a BI Platform is, let's explore why it's not just a "nice-to-have" but a crucial tool for businesses of all sizes.

1. Informed Decision-Making

In the business world, decisions can't be left to chance. A BI Platform empowers decision-makers with accurate, real-time insights. Whether it's understanding customer behavior, monitoring sales trends, or optimizing supply chain operations, having data-driven insights at your fingertips ensures that every decision is well-informed.

2. Enhanced Operational Efficiency

BI Platforms streamline data processes. They automate data collection, cleansing, and transformation, reducing manual workloads and minimizing errors. This efficiency boost allows your team to focus on higher-value tasks.

3. Competitive Advantage

In today's competitive landscape, staying ahead requires more than just intuition. BI Platforms help you spot market trends, customer preferences, and emerging opportunities before your competitors do. This proactive approach can be a game-changer.

4. Improved Customer Experience

Understanding your customers is paramount. BI Platforms provide in-depth customer analytics, enabling you to tailor your products, services, and marketing strategies to meet their specific needs. This personalization can lead to increased customer loyalty.

5. Cost Reduction

By optimizing operations, identifying cost-saving opportunities, and minimizing inefficiencies, a BI Platform can lead to significant cost reductions in the long run. It helps allocate resources more effectively, ensuring that you get the most out of your investments.

6. Scalability

A good BI Platform grows with your business. It can handle increasing volumes of data and users as your organization expands. This scalability ensures that your BI solution remains effective in both small startups and large enterprises.

7. Data Security

BI Platforms prioritize data security. They provide access controls, encryption, and audit trails to protect sensitive information. This is crucial in today's data privacy-conscious world.

8. Measurable Results

One of the most significant advantages of a BI Platform is its ability to measure performance. You can track key performance indicators (KPIs), assess the impact of strategic decisions, and adjust your approach based on real data.

Who Needs a BI Platform?

The short answer is: almost every business. From startups looking to gain a competitive edge to established enterprises aiming to stay ahead, the benefits of a BI Platform are far-reaching. Here are a few scenarios where a BI Platform is particularly valuable:

Sales and Marketing: Analyzing customer behavior, sales trends, and marketing campaign performance.

Finance: Managing budgets, forecasting, and monitoring financial performance.

Supply Chain and Inventory: Optimizing inventory levels, tracking shipments, and identifying supply chain bottlenecks.

Human Resources: Managing workforce data, tracking employee performance, and optimizing HR processes.

Customer Support: Monitoring customer satisfaction, response times, and support ticket trends.

E-commerce: Analyzing website traffic, cart abandonment rates, and product performance.

Choosing the Right BI Platform

Selecting the right BI Platform for your business is critical. Here are some factors to consider:

Scalability: Ensure that the platform can grow with your business.

Ease of Use: A user-friendly interface is crucial, especially if users across different departments will be interacting with it.

Integration: Check if the platform integrates smoothly with your existing systems and data sources.

Data Security: Verify that the platform meets your security and compliance requirements.

Customization: Assess whether you can tailor the platform to your specific business needs.

Conclusion

In an era where data is abundant but insights are precious, a Business Intelligence Platform is not just a tool; it's a strategic asset. It empowers businesses to make data-driven decisions, enhance efficiency, gain a competitive edge, and ultimately thrive in a data-centric world.

Investing in a BI Platform is an investment in the future of your business. It's a step towards a more informed, efficient, and successful organization. So, whether you're a startup aiming for rapid growth or an established enterprise seeking to maintain your market position, a BI Platform is a path to success you can't afford to overlook. Let’s connect for additional details.

0 notes

Text

Automated or Manual Testing: Keeping the balance Right!

If you are a tester, you have probably had a discussion about automated versus manual testing. This is nothing new, and techies hold many different views on it. Whether you are a big team with an established automation framework or a small team new to automation, it is always necessary to get this balance right in order to achieve maximum efficiency.

Automation testing certainly has benefits: it increases efficiency and speeds up regression runs, thus contributing to timely project deliveries. It also spares testers from executing repetitive test cases or regression cases manually.

But before considering automation, there are certain points which you should evaluate. You must have heard the statement “You can not automate everything”, which is very true.

Manual testing is required in many cases. In fact, the biggest drawback of manual testing is also its biggest advantage: it requires human intervention! Certain cases require human instinct and intuition to test a system. To name a few, manual testing plays a vital role in the following cases-

Usability Testing- This tests how easy or difficult an application is to understand, and how interactive it is for the users who are going to use it. These kinds of tests can not be automated and must be performed manually.

UI and UX Testing- UI and UX testing can be automated only to some extent. Automation scripts can be used to test the layout, CSS errors and HTML structure, but the whole user experience can not be automated as it is very subjective.

Exploratory Testing- Cem Kaner, who coined the term in 1984, defines exploratory testing as “A style of software testing that emphasizes the personal freedom and responsibility of the individual tester to continually optimize the quality of his/her work by treating test-related learning, test design, test execution, and test result interpretation as mutually supportive activities that run in parallel throughout the project.”

Ad-hoc testing- This is completely unplanned testing that relies on the tester’s insight and approach. There is no script for this testing, so it has to be performed manually.

Pros of Automation Testing-

There are certain cases where automation testing is beneficial and can actually reduce the efforts and increase productivity. Let’s have a look-

Regression Testing– Regression cases are mostly repetitive, so we can automate them once and execute them in a timely manner.

Load Testing– Automation is very useful for load testing. Load testing identifies the bottlenecks in the system under various workloads and checks how the system reacts when the load is gradually increased, which can be achieved by automation.

Performance Testing- Performance testing is a type of software testing used to check whether software applications perform well under their expected workload. Automation is very useful in this type of testing.

Apart from that, repetitive test cases can be automated. Keeping the above points in mind, you can decide on the what, how and why of automation.

What, Why and How of Automation- Maintaining a balance between manual and automated testing can be very tricky at times. I have seen many aggressive managers pushing to automate everything. But is this the best approach?

Before starting to automate, you need to answer these three questions-

1) What needs to be automated?

Let’s first think about what exactly needs to be automated. By ‘exactly’, I mean which part of the ‘requirement’/‘feature’/‘application’ is a candidate for automation. The application that is going to be automated is often termed the AUT (Application Under Test). It is quite possible that part of a feature can be automated and the rest tested manually.

This requires a deep dive into the feature, its test cases, and the effort that will be required. Sometimes knowing how the developer is going to implement the feature plays a vital role in deciding whether it can be automated and to what extent.

2) Why automation?

This is very important. Why do you need to automate? Is it because it reduces effort and increases efficiency? Is it because it would pay off in the long run? Or is it just convention? During my tenure in QA, I met some managers who aggressively wanted everything to be automated, without analysing whether it might increase effort and bear no fruit. You might end up asking yourself a few questions-

Is it a one-time requirement that will never come up again? Then we probably don’t need to automate.

Is the automation solution complex? You also need to understand the complexity of the application under test. If automating it amounts to building a parallel application, there is no point in automating. But if the solution is complex while the feature or AUT keeps changing and development is planned for the long term, you may find automation beneficial in the long run.

Time constraint- There might be a time constraint on delivery. In that case, the manager plays a crucial role in deciding whether to invest in automation or go manual.

Resources and skills of the testing team- This is also an important factor. How many automation engineers in the testing team have bandwidth for the AUT? For small teams, this is mostly the deciding factor in whether to go for automation.

3) How to automate?

This refers to finding the solution. The Why and the How are correlated, so answering the How may answer the Why, and vice versa.

The How is about deciding how we are going to automate the AUT. Here, AUT can mean either the entire application or a small feature, whichever you are going to automate. Sometimes, for a small application, you do not need a full-fledged automation framework.

There are various tools available which don’t require coding and can meet the requirement. For example, TruBot from CloudQA is one such tool; it has many handy features for different types of testing and is very user friendly for small applications.

Apart from that, various requirements can be met by simply writing a shell script.

A full-fledged automation framework is required when the AUT is big and enhancements are continuous. In that case, a regression suite can be executed before each feature release, and automation can significantly reduce the effort.

Automation framework development requires both coding skills and time, so before jumping into it, a tester should always analyse the ROI (Return On Investment) and plan accordingly.
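One simple way to reason about that ROI is a break-even calculation. The sketch below is only an illustration under simplifying assumptions: effort is measured in hours, every run costs the same, and maintenance of the automated suite is folded into the per-run cost. The function names and the sample figures are hypothetical.

```python
import math


def break_even_runs(build_hours, manual_hours_per_run, automated_hours_per_run):
    """Number of regression runs after which automation has paid for itself.

    Returns None when an automated run costs at least as much as a manual
    one, because the investment is then never recovered.
    """
    saving_per_run = manual_hours_per_run - automated_hours_per_run
    if saving_per_run <= 0:
        return None
    return math.ceil(build_hours / saving_per_run)


def roi(build_hours, manual_hours_per_run, automated_hours_per_run, runs):
    """Return on investment after a number of runs: (gain - cost) / cost."""
    gain = (manual_hours_per_run - automated_hours_per_run) * runs
    return (gain - build_hours) / build_hours


if __name__ == "__main__":
    # Hypothetical figures: 120 hours to build the framework, 8 hours per
    # manual regression cycle, 0.5 hours to run and review the automated one.
    print("Break-even after runs:", break_even_runs(120, 8, 0.5))
    print("ROI after 32 runs:", roi(120, 8, 0.5, 32))
```

If a feature is released only a handful of times, the break-even point may never be reached, which is the case against automating one-time requirements made earlier.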

Also automation gives a sense of confidence that there is maximum coverage of regression tests and existing features are not broken because of new feature addition.

Conclusion

Let’s conclude that either only manual or only automation is not the right approach. There should be a balance between both, and I hope that the above points will be helpful in finding the right balance.

Kick start automation of your appl

1 note

Text

Optimizing Databricks Workloads: The newest book published to help you master Databricks and its optimization technique

Accelerate computations and make the most of your data effectively on Databricks, says the author of Optimizing Databricks Workloads: Harness the power of Apache Spark in Azure and maximize the performance of modern big data workloads.

About Databricks as a company

Databricks is a Data + AI company, founded in 2013 by the creators of Apache Spark™, Delta Lake, and MLflow. Databricks describes itself as the world’s first Lakehouse platform in the cloud, combining the best of data warehouses and data lakes to offer an open and unified platform for data and AI. The company’s Delta Lake is an open-source project that brings reliability to data lakes for machine learning and other data science uses. In 2017, Databricks was announced as a first-party service on Microsoft Azure through the Azure Databricks integration.

Databricks as a platform

Databricks provides a unified platform for data scientists, data engineers, and data analysts. It offers a collaborative environment in which users can run interactive and scheduled data analysis workloads.

In this article, you’ll get a brief introduction to Databricks and the associated optimization techniques. We’ll be cove

Azure Databricks: An Intro

Azure Databricks is a data analytics platform optimized for the Azure cloud services platform. It provides the latest versions of Apache Spark and allows users to seamlessly integrate with open-source libraries. Azure users get access to three environments for developing data-intensive apps: Databricks SQL, Databricks Data Science & Engineering, and Databricks Machine Learning.

Databricks SQL lets analysts run SQL queries on an easy-to-use platform. Databricks Data Science & Engineering provides an interactive workspace that enables collaboration between data engineers, data scientists, and machine learning engineers. Databricks Machine Learning provides an integrated end-to-end machine learning environment that incorporates managed services for experiment tracking.

*Additional Tip: To select an environment, launch an Azure Databricks workspace and make efficient use of the persona switcher in the sidebar.

Discover Databricks and the related technical requirements

Databricks was established by the creators of Apache Spark to solve the toughest data problems in the world. It was launched as a Spark-based unified data analytics platform. When getting to know Databricks, the following points are worth keeping in mind:

Spark fundamentals: Spark is a distributed data processing framework that can analyze huge datasets. It comprises DataFrames, Machine Learning, Graph processing, Streaming, and Spark SQL.

Databricks: Provides a collaborative platform for data scientists and data engineers. It has something for everyone: data engineers, data scientists, data analysts, and business intelligence analysts.

Delta Lake: It was launched by Databricks as an open-source project that converts a traditional data lake into a Lakehouse.

Azure Databricks Workspace

Databricks Workspace is an analytics platform based on Apache Spark that is further integrated with Azure to provide a one-click setup, streamlined workflows, and an interactive workspace. The workspace enables collaboration between data engineers, data scientists, and machine learning engineers.

Databricks Machine Learning

It is an integrated end-to-end machine learning platform that incorporates managed services including experiment tracking, model training, feature development and management, and feature and model serving. Besides this, Databricks Machine Learning allows you to do the following:

Train models either manually or with AutoML.

Use MLflow tracking efficiently to track training parameters.

Create and access feature tables.

Use Model Registry to share, manage, and serve models.

Databricks SQL

With Databricks SQL, you can run quick ad-hoc SQL queries on fully managed SQL endpoints, which are sized according to the query latency and the number of concurrent users. All workspaces are pre-configured for users’ ease. Databricks SQL also offers enterprise-grade security and integration with Azure services, Power BI, and more.

Want to know more about Databricks and its optimization? Worry not: we are introducing a book that covers detailed knowledge for Databricks career aspirants.

About the book:

Optimizing Databricks Workloads is designed for data engineers, data scientists, and cloud architects who have working knowledge of Spark/Databricks and some basic understanding of data engineering principles. Readers will need a working knowledge of Python, and some experience of SQL in PySpark and Spark SQL is beneficial.

This book consists of the following chapters:

Discovering Databricks

Batch and Real-Time Processing in Databricks

Learning about Machine Learning and Graph Processing in Databricks

Managing Spark Clusters

Big Data Analytics

Databricks Delta Lake

Spark Core

Case Studies

Book Highlights:

Get to grips with Spark fundamentals and the Databricks platform.

Process big data using the Spark DataFrame API with Delta Lake.

Analyze data using graph processing in Databricks.

Use MLflow to manage machine learning life cycles in Databricks.

Find out how to choose the right cluster configuration for your workloads.

Explore file compaction and clustering methods to tune Delta tables.

Discover advanced optimization techniques to speed up Spark jobs.

The benefit you’ll get from the book

In the end, you will be prepared with the necessary toolkit to speed up your Spark jobs and process your data more efficiently.

Want to know more? Pre-order your book on Amazon today.

0 notes

Photo

Semana Responsive Framework

2017

What we did

Atomic design

Experience design

Interface design

Design System development

Industry

Publishing

Media

Context

Publicaciones Semana is the leading magazine publishing company in Colombia. In 2012 they had over 30 different websites for their 10 publications, using device-specific versions and ad hoc design and code for each site, and taking over 5 months to deliver a new project to market. It was clear we needed a responsive, structured redesign of all sites, understanding the underlying patterns and general requirements before jumping into design to make sure we could reuse as much code as possible across the different sites.

My responsibilities as Lead UX / UI were creating a common responsive UI framework of easily reusable components, designing responsive websites for all publications according to each one’s current brand and visual language, getting stakeholders’ buy-in for each publication, creating HTML / CSS / JS prototypes and styleguides for easier development of new sites, and helping to optimize sites and develop new features across all sites.

Objectives

Reducing the number of sites supported using responsive design techniques for all ten publications’ sites

Improving code quality and reducing support workload for the development team

Creating a common responsive UI framework that was flexible enough to adapt to the different visual languages and brands, to make it easier to reuse code and develop new sites and features faster

Improving key user metrics for all sites, especially for the most visited ones, as they generated more revenue through ads.

Reducing the number of sites supported

Semana, Dinero, Soho, JetSet, Fucsia, FinanzasPersonales, Arcadia, 4Patas, SemanaSostenible. Each one of these 9 publications had three different versions of its website, one for mobile, one for tablet and the default, non-responsive, desktop version, for a grand total of 27 different websites with very little to no reused code. Such a fragmented codebase was very painful for the development team to support, and made it very difficult to design, let alone implement, new features across all sites. By using responsive design techniques and a common UI framework, we were able to reduce the number of sites supported to a third, with one responsive site per publication.

A flexible UI framework

For a big company like Publicaciones Semana, adopting or creating a UI framework flexible enough to adapt to the different brands and visual languages of their magazines is key to having a more unified codebase, speeding up development of new sites and features, and reducing support workload. We decided to use Zurb’s Foundation as our base UI framework, creating custom components based on our needs that could be reused easily from project to project. This allowed us to reduce the time needed to develop and launch a new site from over 5 months to under 2 months, and made it easier to support and debug sites as well as to implement new features across all of them.

Improving user metrics and ad revenue

Publicaciones Semana’s digital business model was based on ad revenue, allowing users free access to all content. Improving key user metrics like daily / monthly users was very important for negotiating better terms with advertisers and sustaining this business model. Consolidating the user base in fewer sites and implementing SEO improvements resulted in gains for all sites since the launch of the new websites (04/15 vs 04/14): 45% for semana.com (8.2M vs 5.6M), 87% for soho.com.co (5M vs 2.7M), 76% for fucsia.co (1M vs 0.6M) and 82% for dinero.com (1.7M vs 0.9M).

1 note

Text

Isima’s Z3 VM Success: Get 10X Throughput, Half the Cost

Isima’s experiment with Z3 virtual machines for e-commerce yielded twice the price-performance and ten times the throughput.

Workloads requiring a lot of storage, such as log analytics and horizontal, scale-out databases, require a high SSD density and reliable performance. They also require regular maintenance so that data is safeguarded in the case of an interruption. Google Cloud Next ’24 marked the official launch of Google Cloud’s first storage-optimized virtual machine family, the Z3 virtual machine series. Z3 delivers extraordinarily dense storage configurations of up to 409 GiB of SSD per vCPU on next-generation Local SSD hardware, with an industry-leading 6M 100% random-read IOPS and 6M write IOPS.

One of the first companies to test it was Isima, a Silicon Valley company that runs an e-commerce analytics cloud. Its bi(OS) platform offers serverless infrastructure for AI applications and real-time retail and e-commerce data. It combines a scale-out, SQL-friendly database with zero-code capabilities to onboard, process, and operate data for real-time data integration, feature stores, data science, cataloguing, observability, DataOps, and business intelligence.

In this blog post, Google Cloud summarises Isima’s experiments and findings and compares Z3 with general-purpose N2 VMs. Spoiler alert: Google Cloud promises 2X better price-performance, 10X higher throughput, and much more.

The examination

Isima tested Z3 on a range of demanding, real-world e-commerce workloads, including microservice calls, ad hoc analytics, visualisation queries, and more, all firing simultaneously. As a Google Cloud partner, Isima was granted early access to Z3.

To make the most of Z3 and emulate real-world high-availability deployments, Isima split each of the three z3-highmem-88 instances, deployed across several zones, into five Docker containers running bi(OS). Each Docker container was allotted 16 vCPUs, 128 GB of RAM, and two 3 TB SSDs. With this configuration, Isima could compare Z3 more directly with earlier tests it had run on n2-highmem-16 instances.
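The container layout described above can be checked with a little arithmetic. The following is only a sketch built from the figures quoted in the text (three instances, five containers each, 16 vCPUs, 128 GB of RAM, and two 3 TB SSDs per container); the function and variable names are illustrative and do not come from Isima or Google Cloud.

```python
# Figures quoted in the text; names are illustrative only.
INSTANCES = 3
CONTAINERS_PER_INSTANCE = 5
PER_CONTAINER = {"vcpus": 16, "ram_gb": 128, "ssd_count": 2, "ssd_tb": 2 * 3}


def scale(resources, factor):
    """Multiply every resource figure by the same factor."""
    return {name: amount * factor for name, amount in resources.items()}


# Resources consumed by the five containers on one z3-highmem-88 instance.
instance_totals = scale(PER_CONTAINER, CONTAINERS_PER_INSTANCE)

# Resources consumed by all fifteen containers across the three instances.
cluster_totals = scale(instance_totals, INSTANCES)

print(instance_totals)
print(cluster_totals)
```

Each container gets 16 vCPUs, which matches the vCPU count implied by the n2-highmem-16 name, so a single container is roughly comparable to one of the earlier test machines. Five containers use 80 vCPUs per instance, which fits within the 88 vCPUs implied by the z3-highmem-88 name.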

Isima evaluated the following to simulate extreme stress and several worst-case scenarios:

Demand spikes

They demanded (and attained) 99.999% reliability despite hitting the system with a brief peak load that saturated system resources to 70%. They then relentlessly sustained 75% of that peak for the whole 72-hour period.
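To get a feel for how strict a 99.999% target is over a 72-hour run, the allowance can be worked out directly. This sketch assumes the figure is read as an availability ratio over wall-clock time, which the text does not state explicitly; if it is a request success ratio instead, the same formula applies to request counts. The function name is hypothetical.

```python
def error_budget_seconds(duration_hours: float, target: float) -> float:
    """Seconds of failure allowed over the run if `target` is an availability ratio."""
    if not 0.0 <= target <= 1.0:
        raise ValueError("target must be a ratio between 0 and 1")
    return duration_hours * 3600 * (1.0 - target)


# 72-hour test at "five nines".
budget = error_budget_seconds(72, 0.99999)
print(f"Allowed failure time: {budget:.3f} s")
```

Under that reading, the whole three-day test leaves only about 2.6 seconds of tolerated failure.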

Select queries

To rule out inadvertent caching effects from the operating system or bi(OS), Isima made sure its select queries read data from the Local SSD and not from RAM, something vital for a fair test of Z3. It did this by deliberately going back in time when reading data, for example by querying data that had been inserted 30 minutes earlier. This gave Isima confidence that the results of the performance tests would hold up under the demands of everyday work.
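The "going back in time" idea can be sketched as a query window that ends 30 minutes before the current time, so recently written rows that may still sit in memory are never read. This is a generic illustration, not bi(OS) syntax: the table name `orders`, the column `ingested_at`, and the five-minute window width are all assumptions.

```python
from datetime import datetime, timedelta, timezone


def lagged_window(now, lag=timedelta(minutes=30), width=timedelta(minutes=5)):
    """Return (start, end) of a time window that ends `lag` before `now`."""
    end = now - lag
    start = end - width
    return start, end


def build_select(table, start, end):
    # Hypothetical generic SQL. String formatting is used only for display;
    # real code should pass the timestamps as bound parameters.
    return (
        f"SELECT * FROM {table} "
        f"WHERE ingested_at >= '{start.isoformat()}' "
        f"AND ingested_at < '{end.isoformat()}'"
    )


now = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
start, end = lagged_window(now)
print(build_select("orders", start, end))
```

Widening the lag makes it less likely that the requested rows are still cached, at the cost of testing older data.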

Various deployment scenarios

Testing both single-tenant and multi-tenant setups validated Z3’s capacity to handle a range of real-world deployments.

Simulated maintenance events

Isima even factored in scheduled maintenance, simulated with Docker restarts, which demonstrated Z3’s resilience to disruptions.

The decision

Throughput: bi(OS) on Z3 handled ~2X+ more throughput than the tests conducted last year on n2-highmem-16, with 2X better price-performance.

NVMe disk latencies: Read latencies remained constant, but write latencies improved almost sixfold.

Drive variation: Over the 72 hours, every drive on every z3-highmem-88 virtual machine kept its read and write latencies within a variance of +/- 0.02 milliseconds.

Google Cloud is excited by these findings for the new Z3 instances, which it expects to unlock the potential of many more workloads.

Improved maintenance experience

Z3 virtual machines come with several new infrastructure lifecycle technologies that offer more precise and stringent control over maintenance. Z3 VMs are notified several days ahead of a scheduled maintenance event. You can then trigger the maintenance at a time of your choosing or let it occur automatically at the scheduled time. This lets Google Cloud provide more secure and performant infrastructure while letting you plan more accurately for a disruptive event. Additionally, you get in-place upgrades that preserve your data during scheduled maintenance events.

Driven by Titanium

Z3 virtual machines are built on Titanium, Google’s system of custom silicon, security microcontrollers, and tiered scale-out offloads. The result is better performance, lifecycle management, reliability, and security for your workloads. With Titanium, Z3 can offer up to 200 Gbps of fully encrypted networking, three times faster packet processing than previous-generation virtual machines (VMs), near-bare-metal performance, integrated maintenance updates for most workloads, and advanced controls for more sensitive applications.

“Building on our successful collaboration with Google Cloud since 2016, we are pleased to work together on Google Cloud’s first storage-optimized virtual machine family. Through this partnership, Intel’s 4th generation Intel Xeon CPU and Google’s custom Intel IPU are made available, opening up new performance and efficiency possibilities.” – Suzi Jewett, General Manager of Intel Xeon Products, Intel Corporation

Hyperdisk capacity

Hyperdisk is Google Cloud’s next-generation block storage. Because it is built on Titanium, Hyperdisk offloads storage processing from the virtual machine host, which gives it much better performance, flexibility, and efficiency. With Hyperdisk, you can scale storage performance and capacity independently and dynamically to meet the storage I/O requirements of data-intensive workloads such as databases and data analytics. You no longer need to choose large, expensive compute instances just to obtain better storage performance.

Read more on Govindhtech.com


What is Resource Management and its Importance?

Please Note: This article appeared in Saviom and has been published here with permission.

Using every resource intelligently is imperative for every organization, as resources are the most expensive investment of any business. Moreover, organizations spend a lot of time and money building the right talent pool. When the skills and competencies of that talent pool are tapped to their full potential, overall efficiency and profitability improve.

Resource management has become an integral part of any business today. It emerged as an independent discipline once organizations became more complex, adopted matrix structures, and expanded across multiple geographies.

Efficient project resource management centralizes data in a single repository and provides a single version of the truth.

This blog explains all the necessary concepts and aims to steer you through a successful journey as a resource manager.

The components of resource management include resource scheduling, resource forecasting and planning, capacity planning, business intelligence and reporting, integration with other related applications, and more.

Let’s begin with what resource management is.

#1. Definition of Resource Management

Resource Management is the process of utilizing various types of business resources efficiently and effectively. These resources can be human resources, assets, facilities, equipment, and more.

It refers to the planning, scheduling, and future allocation of resources to the right project at the right time and cost. A resource management plan enables businesses to make optimal use of their skilled workforce and improve profitability.

#2. Importance of Resource Management

Resource management plays a significant role in improving a business’s profitability and sustainability. Let’s highlight how enterprise resource management can help in contributing to both the top line and bottom line of any business.

1. Minimize project resource costs significantly

With enterprise-wide visibility, resource managers can utilize cost-effective global resources from low-cost locations. Having the right mix of local and global resources helps reduce project costs. Allocating the right resource to the right project enables teams to complete the delivery within time and budget. Resource managers can control costs by distributing key resources uniformly across all projects instead of concentrating them on a single high-priority project.

2. Improve effective/billable resource utilization

Resource management software helps managers forecast the workforce’s utilization in advance. Accordingly, resources can be mobilized from non-billable to billable and strategic work. Sometimes, when resources are rolled off from projects, there isn’t any suitable work to engage them, so they eventually end up on the bench. Resource managers can initially engage these resources in non-billable work before quickly assigning them to suitable billable or strategic projects.

3. Bridge the capacity vs. demand gap proactively

Demand forecasting, a function of project resource management, allows managers to foresee the resource demand ahead of time. It enables them to assess and analyze the skills gap within the existing capacity. After identifying the shortages and excesses, resource managers can formulate an action plan to proactively bridge the capacity vs demand gap.
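The shortages and excesses mentioned above come down to a per-skill subtraction of forecast demand from available capacity. The snippet below is a minimal sketch of that comparison; the skill names and hour figures are made-up sample data, and `capacity_gap` is a hypothetical helper rather than part of any resource management product.

```python
def capacity_gap(capacity, demand):
    """Return capacity minus demand for every skill seen in either input."""
    skills = sorted(set(capacity) | set(demand))
    return {skill: capacity.get(skill, 0) - demand.get(skill, 0) for skill in skills}


# Hypothetical monthly hours per skill.
capacity = {"java": 400, "qa": 160, "ux": 80}
demand = {"java": 520, "qa": 120, "data": 60}

gap = capacity_gap(capacity, demand)

# Negative gaps are shortages to hire or train for; positive gaps are excess capacity.
shortages = {skill: -hours for skill, hours in gap.items() if hours < 0}
excesses = {skill: hours for skill, hours in gap.items() if hours > 0}

print("Shortages:", shortages)
print("Excesses:", excesses)
```

Skills that appear only in the demand forecast, such as `data` here, show up as pure shortages, which is typically the signal to start hiring or training ahead of time.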

4. Use scarce resources effectively in a matrix organization

The resource management process brings transparency to communication and so makes it easier to share highly skilled resources effectively in a matrix organization. Scarce resources can be used across different projects rather than on one high-priority project. A shared-services model also makes it possible to form teams that cut across multiple geographies for 24/7 support operations.

5. Monitor and improve organization health index

Employees look to their leaders for their professional development. Failing to motivate them and provide career development opportunities often leads to reduced engagement, lower productivity, and unplanned attrition. Regular monitoring of their skills and performance helps them improve and add more value to the organization.

Let’s dive deep into the major components and concepts of resource management.

#3. Resource Management Concepts & its Components

1. Resource scheduling

Resource scheduling involves identifying and allocating resources for a specific period to different project tasks. These tasks can be billable, non-billable, or business-as-usual (BAU) work.

With a centralized Gantt chart view of the enterprise, resource scheduling eliminates silos of spreadsheets. It also helps you deploy competent resources to the right job so that the project finishes within budget and on time.

2. Resource utilization

Resource utilization measures the amount of time spent by the employees on different project tasks against their availability. It is a key performance indicator in the modern business landscape as it directly influences the firm’s bottom line.

Utilization can be measured in terms of:

Overall resource utilization

Billable utilization

Strategic utilization

Non-billable utilization

Resource managers can proactively mobilize resources from non-billable to billable or strategic tasks and maximize their billable utilization.
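The four utilization measures listed above share one formula: hours booked in a category divided by the hours the person is available. Here is a minimal sketch with made-up numbers; the category names mirror the list above, and the 160-hour month is an assumption.

```python
def utilization(booked_hours, available_hours):
    """Return per-category and overall utilization as fractions of availability."""
    if available_hours <= 0:
        raise ValueError("available_hours must be positive")
    rates = {category: hours / available_hours for category, hours in booked_hours.items()}
    rates["overall"] = sum(booked_hours.values()) / available_hours
    return rates


# Hypothetical month for one employee with 160 available hours.
booked = {"billable": 96, "strategic": 16, "non_billable": 24}
rates = utilization(booked, available_hours=160)

for category, rate in rates.items():
    print(f"{category}: {rate:.0%}")
```

In this sample, moving some of the 24 non-billable hours to billable work would raise billable utilization while leaving overall utilization unchanged.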

3. Resource forecasting

Resource forecasting helps managers predict resource utilization levels in advance and foresee which resources are likely to end up on the bench. It also gives them early warning of the capacity required for pipeline projects.

Leveraging this information, one can:

Mobilize the resources to billable tasks from non-billable or BAU activities.

Ensure effective bench management

Bridge the capacity and demand gap
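One simple way to foresee who will land on the bench is to look at the end date of each person's last booking and flag anyone who runs out of work within the forecast horizon. The sketch below assumes that simplified model: it ignores gaps between bookings, and the names, dates, and 30-day horizon are sample values.

```python
from datetime import date, timedelta


def bench_forecast(booking_end_dates, today, horizon_days=30):
    """Map each person who runs out of bookings within the horizon to their last booked day."""
    cutoff = today + timedelta(days=horizon_days)
    forecast = {}
    for name, end_dates in booking_end_dates.items():
        # Someone with no bookings at all is treated as already on the bench today.
        last_end = max(end_dates, default=today)
        if last_end < cutoff:
            forecast[name] = max(last_end, today)
    return forecast


today = date(2024, 6, 1)
bookings = {
    "ana": [date(2024, 6, 14)],
    "ben": [date(2024, 6, 10), date(2024, 9, 30)],
    "chi": [],
}

print(bench_forecast(bookings, today))
```

Here `ana` and `chi` are flagged, while `ben` is covered until the end of September, so a manager can start lining up billable work for the first two before they become idle.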

4. Resource and capacity planning

Resource planning is the comprehensive process of planning, forecasting, allocating, and utilizing the workforce most efficiently and intelligently. Resource capacity planning is an undertaking to analyze and bridge the capacity and demand gap well ahead of the curve.

These two components are vital to ensure that no project vacancy goes unfilled and no excess capacity is wasted. Simply put, resource and capacity planning maximize utilization and support timely project deliveries.

5. Business intelligence and reports

Business intelligence provides actionable insights by performing extensive data analysis. Using real-time data, it generates customized reports and dashboards on important resource management metrics. These reports strengthen managers’ decision-making abilities and allow them to monitor the overall resource health index.

Now that we are aware of the significant components and concepts, let’s understand which businesses need resource management.

#4. Types of Businesses that Require Enterprise Resource Management

Organizations with a matrix-based set-up, cross-functional teams, and a shared-services model require enterprise resource management. How complex their resource management processes need to be depends on their size and functions. Today resource management is part of every organization’s DNA.

Industries such as IT services, construction and engineering, audit and accounting, and professional services have implemented robust resource management processes.

Another crucial factor that creates the need for resource management is working on multiple projects in ad hoc environments. Firms in this position are exposed to several changing variables, such as project priorities, resource availability, and budget constraints.