#DataSources

Explore tagged Tumblr posts

Text

Utilize Dell Data Lakehouse To Revolutionize Data Management

Introducing the most recent upgrades to the Dell Data Lakehouse. With automatic schema discovery, Apache Spark, and other tools, your team can move from routine data management to innovation.

Dell Data Lakehouse

Businesses’ data management plans are becoming more and more important as they investigate the possibilities of generative artificial intelligence (GenAI). Data quality, timeliness, governance, and security were found to be the main obstacles to successfully implementing and expanding AI in a recent MIT Technology Review Insights survey. It’s evident that having the appropriate platform to arrange and use data is just as important as having data itself.

As part of the AI-ready data platform and infrastructure capabilities of the Dell AI Factory, Dell is presenting the most recent improvements to the Dell Data Lakehouse, developed in collaboration with Starburst. These improvements are intended to empower IT administrators and data engineers alike.

Dell Data Lakehouse Sparks Big Data with Apache Spark

Dell Data Lakehouse plus Apache Spark is a single-platform approach that can streamline big data processing and speed up insights.

Earlier this year, Dell unveiled the Dell Data Lakehouse to help address these issues. With a turnkey data platform that combines Dell’s AI-optimized hardware with a full-stack software suite, powered by Starburst and its enhanced Trino-based query engine, you can now eliminate data silos, unleash performance at scale, and democratize insights.

Through the Dell AI Factory strategy, Dell is working with Starburst to keep pushing the boundaries with cutting-edge solutions that help you succeed with AI. In addition to those advancements, Dell is expanding the Dell Data Lakehouse by introducing a fully managed, deeply integrated Apache Spark engine that reimagines data preparation and analytics.

Spark’s industry-leading data processing capabilities are now fully integrated into the platform, marking a significant improvement. With Spark and Trino working together, the Dell Data Lakehouse supports a wide variety of analytics and AI-driven workloads. It brings speed, scale, and innovation together under one roof, letting you deploy the right engine for the right workload and manage everything from the same management console.

Best-in-Class Connectivity to Data Sources

In addition to supporting custom Trino connectors for special and proprietary data sources, the platform now works with more than 50 connectors. With a single point of entry to many sources, the Dell Data Lakehouse reduces data movement by enabling ad hoc and interactive analysis across dispersed data silos. Users can now extend their reach into distributed data silos, from databases like Cassandra, MariaDB, and Redis to additional sources like Google Sheets, local files, or even a custom application within their environment.
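As a rough illustration of what a single point of entry can look like, the sketch below builds one SQL statement that joins tables from two different sources. Trino addresses tables as `catalog.schema.table`; the catalog, schema, table, and column names here are hypothetical and depend entirely on how an administrator has configured the connectors.

```python
def qualified(catalog, schema, table):
    """Build a fully qualified Trino table name: catalog.schema.table."""
    return f"{catalog}.{schema}.{table}"


def build_federated_query():
    """Join a Cassandra table with a MariaDB table in a single statement."""
    # Hypothetical catalog, schema, and table names, for illustration only.
    orders = qualified("cassandra", "shop", "orders")
    customers = qualified("mariadb", "crm", "customers")
    return (
        "SELECT c.name, SUM(o.total) AS lifetime_value\n"
        f"FROM {orders} AS o\n"
        f"JOIN {customers} AS c ON o.customer_id = c.id\n"
        "GROUP BY c.name"
    )


QUERY = build_federated_query()
print(QUERY)
```

The query text would be submitted through whatever Trino client is in use; the data stays in the source systems and is not copied first.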

External Engine Access to Metadata

Dell has always supported Iceberg as part of its commitment to an open ecosystem. It is furthering that commitment by allowing other engines, such as Spark and Flink, to securely access metadata in the Dell Data Lakehouse. With optional security features like Transport Layer Security (TLS) and Kerberos, this functionality enables better data discovery, processing, and governance.

Improved Support Experience

Thanks to improved support capabilities, administrators can now easily generate and download a pre-compiled bundle of full-stack system logs. By giving a thorough view of system health, this helps Dell support personnel identify and resolve problems promptly.

Automated Schema Discovery

The most recent upgrade simplifies schema discovery, enabling you to find and add data schemas automatically with minimal manual intervention. This automation lowers the risk of human error in data integration while increasing efficiency. For instance, when a logging process generates a new log file every hour, rolling over from the previous hour’s file, schema discovery finds the newly added files so that users in the Dell Data Lakehouse can query them.
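The hourly log example can be sketched in a few lines of Python. This is only an illustration of the general idea, not how the Dell Data Lakehouse implements it: it assumes JSON-lines log files in a directory, and the file names, field names, and helper functions are all hypothetical.

```python
import json
import tempfile
from pathlib import Path


def infer_schema(path):
    """Infer {column: type name} from a file of JSON lines."""
    schema = {}
    with open(path, encoding="utf-8") as handle:
        for line in handle:
            if not line.strip():
                continue
            for key, value in json.loads(line).items():
                # Keep the first type seen for each column.
                schema.setdefault(key, type(value).__name__)
    return schema


def discover_new_files(directory, known):
    """Return schemas for files not seen before and remember them in `known`."""
    discovered = {}
    for path in sorted(Path(directory).glob("*.jsonl")):
        if path.name not in known:
            discovered[path.name] = infer_schema(path)
            known.add(path.name)
    return discovered


def demo():
    """Simulate two hourly rollovers and return what each scan discovers."""
    with tempfile.TemporaryDirectory() as tmp:
        known = set()
        Path(tmp, "app-2024-01-01T00.jsonl").write_text(
            '{"ts": "2024-01-01T00:05:00", "status": 200}\n', encoding="utf-8"
        )
        first = discover_new_files(tmp, known)
        Path(tmp, "app-2024-01-01T01.jsonl").write_text(
            '{"ts": "2024-01-01T01:02:00", "status": 500, "latency_ms": 12.5}\n',
            encoding="utf-8",
        )
        second = discover_new_files(tmp, known)
    return first, second
```

Each scan reports only the files that appeared since the previous one, so the second hourly file is picked up, along with its extra `latency_ms` column, without anyone registering it by hand.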

Consulting Services

Use Dell Professional Services to optimize your Dell Data Lakehouse for better AI results and strategic insights. Dell’s experts will assist with catalog metadata, onboarding data sources, implementing your Data Lakehouse, and streamlining operations by optimizing data pipelines.

Start Exploring

Visit the Dell Demo Center to explore the Dell Data Lakehouse through curated labs in a virtual environment. For a hands-on experience, get in touch with your Dell account executive to schedule a visit to the Customer Solution Centers in Round Rock, Texas, and Cork, Ireland, where you can work with experts in a technical deep dive and design session.

Looking Forward

The Dell Data Lakehouse will integrate with Apache Spark in early 2025. With this integration, large volumes of structured, semi-structured, and unstructured data can be processed for AI use cases in a single environment. Dell encourages you to keep exploring how the Dell Data Lakehouse can meet your unique requirements and help you get the most out of your investment.

Read more on govindhtech.com

#UtilizeDell#DataLakehouse#apacheSpark#Flink#RevolutionizeDataManagement#DellAIFactory#generativeartificialintelligence#GenAI#Cassandra#SchemaDiscovery#Metadata#DataSources#dell#technology#technews#news#govindhtech

0 notes

Text

Types of Sources

Normally, a data source is a point of origin from which we can expect data. It might come from anywhere, inside or outside the system. Entity-backed: the data for this record comes directly from a database table or view, i.e. a database entity. Appian sees a view as just another database table, so tables and views are both classed as entities. You can create an Appian record (or…

View On WordPress

0 notes

Text

Data preparation tools enable organizations to identify, clean, and convert raw datasets from various data sources to assist data professionals in performing data analysis and gaining valuable insights using machine learning (ML) algorithms and analytics tools.

#DataPreparation#RawData#DataSources#DataCleaning#DataConversion#DataAnalysis#MachineLearning#AnalyticsTools#BusinessAnalysis#DataCleansing#DataValidation#DataTransformation#Automation#DataInsights

0 notes

Photo

Are you struggling to manage the data sources you have added to your Data Studio account? Don't worry, we've got you covered! This step-by-step guide shows you exactly how to manage them without the hassle:

Step 1: Log in to your Data Studio account and click on the "Data Sources" tab on the left-hand side of the screen.

Step 2: On the "Data Sources" page, you'll see all the data sources that you've added to your account. Select the data source that you want to manage.

Step 3: You'll be taken to the data source details page, where you can see all the fields available for this data source. From here, you can make any necessary edits to the data source.

Step 4: If you want to remove a data source from your account, click on the "Remove" button at the bottom of the page. You'll be prompted to confirm your decision before the data source is permanently deleted from your account.

Step 5: Congratulations, you've successfully managed your added data sources in your Data Studio account! Don't forget to check back periodically to keep your data up-to-date and accurate.

If you're looking for a tool to make managing your data sources even easier, check out https://bitly.is/46zIp8t https://bit.ly/3JGvKXH to streamline your data management process and make informed decisions based on real-time data insights. So, what are you waiting for? Start managing your added data sources effectively today and see the impact it can have on your business!

#DataStudio#DataSources#DataManagement#RealTimeDataInsights#StreamlineYourProcess#MakeInformedDecisions#DataAnalytics#Marketing#Education

0 notes

Text

How to Configure ColdFusion Datasource for MySQL, SQL Server, and PostgreSQL?

0 notes

Text

Integrate Google Sheets as a Data Source in AIV for Real-Time Data Analytics

In today’s data-driven world, seamless integration between tools can make or break your analytics workflow. For those using AIV or One AIV for data analytics, integrating Google Sheets as a data source allows real-time access to spreadsheet data, bringing powerful insights into your analysis. This guide will walk you through how to connect Google Sheets to AIV, giving you a direct pipeline for real-time analytics with AIV or One AIV. Follow this step-by-step Google Sheets data analysis guide to get started with AIV.

0 notes

Text

Data Sourcing: The Key to Informed Decision-Making

Introduction

In the contemporary business environment, data sourcing has been known to give companies a competitive advantage. Data sourcing is the process of gathering, processing, and managing data from different sources so that business people can make the right decisions. A sound data sourcing strategy yields many benefits, including growth, increased efficiency, and customer engagement.

Data sourcing means finding and assembling data from a variety of sources, including surveys, publicly available records, or third-party data sources. It is important for obtaining the right data to guide strategic business decisions. Proper data sourcing allows companies to assemble quality datasets that can provide strategic insights into market trends and consumer behavior patterns.

Types of Data Sourcing

Understanding the various forms of data sourcing allows firms to identify the type that suits their needs:

Primary data sourcing: In this method, data is obtained from the original source. Techniques include surveys, interviews, and focus groups. The benefit of primary data is that it is unique, is tailored to the requirements of the business, and may provide one-of-a-kind insights.

Secondary data sourcing: Here, data that already exists and has been collected, published, or distributed is used; such sources may include academic journals, industry reports, and public records. Though secondary data is often less precise, it is usually easier to access and cheaper.

Automated data sourcing: Technology and tools are used to source data, so sourcing can be completed faster and with fewer human input errors. Businesses can use APIs, feeds, and web scraping to source real-time data.
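To make the automated option concrete, here is a minimal sketch of turning a feed into structured records with Python's standard library. The feed content is an inline sample so that the example runs without network access; the `<price>` element and the item names are hypothetical and not part of any real feed.

```python
import xml.etree.ElementTree as ET

# Inline sample standing in for a feed fetched from a provider.
SAMPLE_FEED = """<rss version="2.0">
  <channel>
    <title>Example price feed</title>
    <item><title>Widget A</title><price>19.99</price></item>
    <item><title>Widget B</title><price>5.00</price></item>
  </channel>
</rss>"""


def parse_feed(xml_text):
    """Convert each <item> in the feed into a dictionary."""
    root = ET.fromstring(xml_text)
    records = []
    for item in root.iter("item"):
        records.append(
            {
                "title": item.findtext("title"),
                "price": float(item.findtext("price")),
            }
        )
    return records


RECORDS = parse_feed(SAMPLE_FEED)
```

In practice the feed text would come from an HTTP request on a schedule, and the resulting records would be validated before they are stored.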

Importance of Data Sourcing

Informed decision-making: Data sourcing supports informed decisions with quality data. Rather than relying on assumptions about the future, organizations can make evidence-based decisions. This also minimizes risk exposure and helps them exploit opportunities.

Cost Efficiencies: Effective data sourcing saves money by identifying which data is actually needed and used in analysis. This helps optimize resource allocation.

Market Insights: With a variety of data sourcing services, a business can better understand its audience and adapt its marketing campaigns to match, which increases customer engagement and, in turn, drives sales.

Competitive Advantage: In a data-dominated world, the ability to access and analyze data faster than the competition can set a business apart. Companies that invest in robust data sourcing capabilities will be better able to spot trends sooner and adjust accordingly.

Get more info about our data sourcing services & data building services and begin transforming your data into actionable insights. Contact us now.

Data Sourcing Services

Data sourcing services can smooth out your data collection process. Data sourcing providers can offer fresh, accurate, and relevant data that puts you a cut above the rest of the market. Benefits of outsourcing data sourcing include:

Professional competencies: Data sourcing providers possess all the skills and tools necessary for gathering data efficiently and in good quality.

Time Saving: Outsourced management allows the organizations to focus on their core business by leaving the data collection to the experts.

Scalability: As the size of the business grows, so do its data needs. Outsourced data sourcing services can change with the evolved needs of the business.

Data Building Services

In addition to these services, data building services help develop a specialized database for companies. Because quality data from different sources is combined, companies can be assured that their analytics and reporting will be of high caliber. The benefits associated with data building services include:

Customization: They are tailored to the needs of your company to ensure the data collected is relevant and useful.

Quality Assurance: Some data building services include quality checks so that any information gathered is the latest and accurate.

Integration: Most data building services are integrated into existing systems, thereby giving a seamless flow of data as well as its availability.

Data Sourcing Challenges

Even though data sourcing is vital, the process has the following challenges:

Data Privacy: Firms should comply with regulations on the protection of individuals' data, for example by informing consumers how they collect and use their data.

Data Quality: Not all collected data is of good quality. Proper quality control measures should be put in place so that decisions are not based on wrong information.

Cost: While outsourcing data sourcing has benefits, it also comes at a financial cost. Businesses have to weigh the likely advantage against the investment.
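The quality control measures mentioned under Data Quality can start very simply. The sketch below checks sourced records for missing fields, malformed emails, and duplicates; the field names and rules are hypothetical examples and would need to be adapted to the data actually being collected.

```python
def validate_record(record, required=("name", "email")):
    """Return a list of problems found in one record (empty list means clean)."""
    problems = []
    for field in required:
        if not str(record.get(field) or "").strip():
            problems.append(f"missing {field}")
    email = str(record.get("email") or "")
    if email and "@" not in email:
        problems.append("malformed email")
    return problems


def split_by_quality(records):
    """Separate clean records from rejected ones, flagging duplicate emails."""
    clean, rejected = [], []
    seen = set()
    for record in records:
        problems = validate_record(record)
        key = str(record.get("email") or "").lower()
        if not problems and key in seen:
            problems.append("duplicate email")
        if problems:
            rejected.append((record, problems))
        else:
            seen.add(key)
            clean.append(record)
    return clean, rejected


SAMPLE = [
    {"name": "Ada", "email": "ada@example.com"},
    {"name": "", "email": "bob@example.com"},
    {"name": "Ada 2", "email": "ADA@example.com"},
    {"name": "Cy", "email": "cy.example.com"},
]
CLEAN, REJECTED = split_by_quality(SAMPLE)
```

Keeping the rejected records together with the reason for rejection makes it possible to go back to the source and fix the problem rather than silently dropping data.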

Conclusion

No business can stay competitive without proper data sourcing. Strategic growth calls for companies to commit to data sourcing across the board, which creates operational value and sets an organization up for long-term success. In today's data-centric world, investing in quality data strategies is unavoidable if you want your business to stay ahead of the curve.

Get started with our data sourcing services today to lighten your data building process and make your decision-making more effective. Contact us now.

Also Read:

What is Database Building?

Database Refresh: A Must for Data-Driven Success

Integration & Compatibility: Fundamentals in Database Building

Data analysis and insights: Explained

0 notes

Text

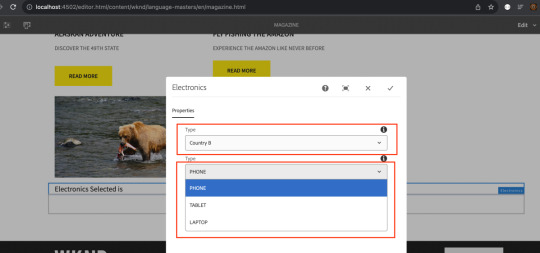

Accessing Component Policies in AEM via ResourceType-Based Servlet

Problem Statement: How can I leverage component policies chosen at the template level to manage the dropdown-based selection? Introduction: AEM has integrated component policies as a pivotal element of the editable template feature. This functionality empowers both authors and developers to provide options for configuring the comprehensive behavior of fully-featured components, including…

View On WordPress

#AEM#component behavior#component policies#datasource#datasource servlet#dialog-level listener#dropdown selection#dynamic adjustment#electronic devices#frontend developers#ResourceType-Based Servlet#Servlet#template level#user experience

0 notes

Text

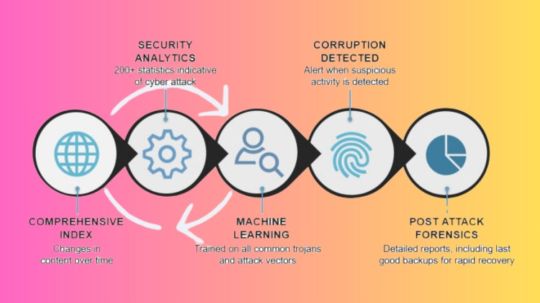

Dell CyberSense Integrated with PowerProtect Cyber Recovery

Cybersense compatibility

Dell CyberSense, which is integrated with the Dell PowerProtect Cyber Recovery platform, represents a smart approach to cyber resilience. To continuously verify data integrity and offer thorough insights across the threat lifecycle, it uses cutting-edge machine learning and AI-powered analysis, drawing on decades of software development experience. This significantly lessens the impact of an attack, minimizing data loss, expensive downtime, and lost productivity, and enables organisations to recover quickly from serious cyberthreats such as ransomware.

Over 7,000 complex ransomware variations have been used to thoroughly train CyberSense’s AI engine, guaranteeing accuracy over time. Up to 99.99% accuracy in corruption detection is achieved by combining more than 200 full-content-based analytics and machine learning algorithms. A sophisticated and reliable solution for contemporary cyber resilience requirements, Dell CyberSense has more than 1,400 commercial deployments and gives its customers the benefit of the combined knowledge and experience gained from real-world encounters with malware.

By keeping its defence mechanisms current and efficient, this continual learning process improves its capacity to identify and address new threats. In order for you to recover from a cyberattack as soon as possible, Dell CyberSense also uses data forensics to assist you in finding a clean backup copy to restore from.

Dell PowerProtect Cyber Recovery

The financial effects of Dell PowerProtect Cyber Recovery and Dell CyberSense for enterprises were investigated in a Forrester TEI study that Dell commissioned. According to research by Forrester, companies using Dell CyberSense and PowerProtect Cyber Recovery can restore and bring back data into production 75% faster and with 80% less time spent searching for the data.

When it comes to cybersecurity, Dell CyberSense stands out for its extensive experience and track record, unlike the overhyped claims made by storage vendors and backup firms that have hurriedly rebranded themselves as all-in-one solutions with AI-powered cyber detection and response capabilities. The capabilities of more recent market entrants, which are frequently speculative and shallow, stand in sharp contrast to CyberSense’s maturity and expertise.

Businesses may be sure they are selecting a solution based on decades of rigorous development and practical implementation when they invest in PowerProtect Cyber Recovery with Dell CyberSense, as opposed to marketing gimmicks.

Before Selecting AI Cyber Protection, Consider These Three Questions

Similar to the spike in vendors promoting themselves as Zero Trust firms that Dell saw a year ago, the IT industry has seen a surge in vendors positioning themselves as AI-driven powerhouses in the last twelve months. It is not that these vendors lack AI or Zero Trust capabilities, but they appear to market beyond them. Their marketing implies that these solutions come with sophisticated AI-based threat detection and response capabilities that greatly reduce the likelihood of cyberattacks.

But these marketing claims are frequently not supported by the facts. As it stands, the efficacy of artificial intelligence (AI) and generative artificial intelligence (GenAI) malware solutions depends on the quality of the data used for training, the precision with which threats are identified, and how quickly you can recover from a cyberattack.

IT decision-makers have to assess closely how providers of storage and data protection have built the intelligence underlying their GenAI inference models and AI analytics solutions. It is imperative to understand how these tools were trained and which data sources have shaped their algorithms. If the wrong training data is used, you might be purchasing a solution that falls short of a complete defence against every kind of cyberthreat that could be present in your environment.

In a recent Power Protect podcast episode, Dell covered the three most important questions to ask your providers about their AI and GenAI tools:

Which methods were used to train your AI tools?

Developing an AI engine that can detect cyber risks with high accuracy takes extensive effort, experience, and fieldwork. Building reliable models that can recognise all kinds of threats takes years of gathering, processing, and analysing enormous volumes of data. Some cybercriminals employ encryption algorithms that do not modify compression rates, such as the ransomware variant known as “XORIST.” Sophisticated threats like these have behavioural patterns that are difficult for traditional cyber threat detection systems to spot, because those systems rely on signs such as changes in metadata and compression rates. Machine learning systems must therefore be trained to identify complex threats.

Which data sources are your algorithms based on?
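A small experiment shows why a detector that only watches compression rates can miss this kind of change. The sketch below uses a single-byte XOR as a stand-in for encryption; this is an assumption made purely for illustration and is not the actual XORIST algorithm, nor a description of how CyberSense works.

```python
import math
import zlib
from collections import Counter


def shannon_entropy(data):
    """Bits of entropy per byte, from 0.0 (constant) to 8.0 (uniform)."""
    if not data:
        return 0.0
    total = len(data)
    return -sum((n / total) * math.log2(n / total) for n in Counter(data).values())


def compression_ratio(data):
    """Compressed size divided by original size; lower means more compressible."""
    return len(zlib.compress(data)) / len(data)


def xor_bytes(data, key):
    """Stand-in 'encryption': XOR every byte with a single-byte key."""
    return bytes(b ^ key for b in data)


# Hypothetical file content: a small, repetitive CSV export.
plain = b"customer,order,total\n" + b"alice,1001,25.00\nbob,1002,13.50\n" * 200
scrambled = xor_bytes(plain, 0x5A)

print(f"plain:     entropy={shannon_entropy(plain):.3f} ratio={compression_ratio(plain):.3f}")
print(f"scrambled: entropy={shannon_entropy(scrambled):.3f} ratio={compression_ratio(scrambled):.3f}")
```

Every byte of the scrambled content differs from the original, yet its byte-level entropy is identical and it compresses about as well, so a check based only on those two signals would have nothing to flag. Catching it requires looking at the content itself.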

Knowing the training process of these tools and the data sources that have influenced their algorithms is essential. AI-powered systems cannot generate the intelligence required for efficient threat identification in the absence of a broad and varied dataset. To stay up with the ever-changing strategies used by highly skilled adversaries, these solutions also need to be updated and modified on a regular basis.

How can a threat be accurately identified and a quick recovery be guaranteed?

Accurate and secure recovery depends on having forensic-level knowledge about the impacted systems. Without that level of information, companies run the risk of reinstalling malware during the recovery process. For instance, CDK Global received media attention two weeks after a devastating ransomware attack left its customers unable to access their auto dealership systems. While trying to recover, the company suffered another ransomware attack. One unconfirmed but plausible theory is that the ransomware was reintroduced from backup copies because the backup data lacked forensic inspection tools.

Read more on govindhtech.com

#DellCyberSense#Integrated#CyberRecovery#DellPowerProtect#AIengine#AIpowered#AI#ZeroTrust#artificialintelligence#cyberattacks#Machinelearning#ransomware#datasources#aitools#aicyber#technology#technews#news#govindhtech

0 notes

Text

🏆🌟 Unlock your dream team with our cost-effective RPO services! 🚀 Optimize hiring and secure top talent for business success. Join us today! #rpo #TalentAcquisition #BusinessSuccess 💼✨ https://rposervices.com/ #rposervices #recruitment #job #service #process #hr #companies #employee #it #hiring #recruiting #management #USA #india

#career#employment#job#bestrposervicesinindia#resumes#cvsourcing#datasourcing#offshorerecruitingservices#recruitingprocessoutsourcing

0 notes

Text

youtube

Why Gold is Costlier in India? Gold Price Breakdown #goldprice #goldpriceinindia

Ever wondered why gold is priced so much higher at your local jeweller compared to international rates?

In this video, we decode the real journey of gold pricing - from the global rate in USD to what you actually pay in India.

Learn how and why the gold price jumps in India.

Note: Gold prices shown are as of May 2025.

Read the full blog here: https://navia.co.in/blog/gold-price-decoded-from-global-rates-to-your-local-jeweller/

Download app - https://open.navia.co.in/index-navia.php?datasource=DMO-YT

Subscribe to @navia_markets for more smart money insights.

#goldprice#goldpriceupdate#goldprice2025#goldinvesting#goldrate#globalgoldrate#localgoldprice#goldnews#goldratetoday#goldjewellery#goldjewelry#goldinvestment#buygold#sellgold#buyinggold#sellinggold#globalmarkets#globalmarketupdates#localmarkets#commoditymarket#commodity#commoditiesanalysis#commoditiesmarket#commoditieslive#commoditiestrading#commoditymarketupdates#commoditymarketindia#naviamarkets#navia#naviaapp

0 notes

Text

What is meant by Tableau Agent?

By lowering the barrier to entry and assisting the analyst from data preparation to visualisation, Tableau Agent (previously Einstein Copilot for Tableau) enhances data analytics with the power of AI. You can get more out of the Tableau environment by learning how agents operate in an advanced AI Tableau Course in Bangalore. Whether you are an experienced data analyst or new to data exploration, Tableau Agent becomes your reliable partner, enabling you to gain knowledge and make decisions with confidence.

Tableau Agent Integration:

By integrating into the Tableau environment, Tableau Agent improves your workflow for data analysis without causing any unplanned modifications. It serves as your intelligent assistant, helping you with the Tableau creation process while guaranteeing accuracy, offering best practices, and fostering trust based on the Einstein Trust Layer. Together with Tableau Agent, you can confidently examine your data, detect trends and patterns, and effectively and impactfully explain your results.

Many capabilities provided by Tableau Agent improve the data analytics experience and enable anyone to fully utilise their data.

Quicker answers with suggested queries

Starting from scratch can be daunting when you are learning analytics for work, education, or just for fun. Where do you even start? To lighten the load and help you move quickly from connecting to data to discovering insights, Tableau Agent can suggest questions you might ask of a particular datasource.

Tableau Agent creates a summary context of your connected datasource by quickly indexing it, then uses this summary to produce a few questions about the dataset. With a dataset similar to Tableau's Superstore practice dataset, for example, one question Tableau Agent suggests is "Are there any patterns over time for sales across product categories?" You can build a line chart with a single click. Because all of this takes place within the authoring process, users familiar with Tableau's drag-and-drop interface can change anything shown before saving and moving on to the next question, combining data analysis with hands-on learning.

In a reputable software training institution, anyone can rapidly improve their analytics skills by using the suggested questions.

Data exploration in conversation

Frequently, the response to your initial query prompts more enquiries that enhance your comprehension of the information. With Tableau Agent, you can enhance and iterate your data exploration. You don't have to lose the context of your earlier query to look for more information. Learning how Tableau arranges measurements and dimensions will help you become accustomed to where to drag and drop to obtain the precise visualisation you desire. You can save your work and start a new sheet to address a new question at any time.

Tableau Agent is capable of handling misspellings, filtering, and even changing the viz type. Tableau Agent does indeed employ semantic search for synonyms and fuzzy logic to identify misspelt words. Thus, if you begin with product category, "filter on technology and show sales by product" might be your next query. After setting the filter, Tableau Agent changes the dimension. Seeing this in practice can help you train more employees in your company to use data exploration for their own benefit.
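The idea of tolerating misspelt field names can be illustrated with Python's standard `difflib` module. This is a generic sketch of fuzzy string matching, not Tableau Agent's implementation, and it does not cover the semantic search for synonyms; the field list is a hypothetical example.

```python
from difflib import get_close_matches

# Hypothetical field names from a sales datasource.
FIELDS = ["Product Category", "Sales", "Region", "Order Date"]


def resolve_field(user_term, fields=FIELDS, cutoff=0.6):
    """Map a possibly misspelt term to the closest known field name, or None."""
    lowered = {name.lower(): name for name in fields}
    matches = get_close_matches(user_term.lower(), list(lowered), n=1, cutoff=cutoff)
    return lowered[matches[0]] if matches else None


print(resolve_field("prodct categry"))
print(resolve_field("xyz"))
```

The `cutoff` value controls how forgiving the match is: lowering it accepts sloppier input at the cost of more false matches.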

Tableau Agent may enhance your analytics experience, regardless of whether you are working with an existing dashboard or beginning from scratch.

Creation of guided calculations:

Writing calculations in an unfamiliar language can be challenging. Tableau Agent helps you create calculations from natural language prompts. Whether you are developing a calculation for a new business KPI or want to keep track of your favourite sports team, Tableau Agent is there to help with calculations and explanations, both in Tableau Prep and while you are building visualisations.

Tableau Agent searches the Track Name column without specifically requesting it when I ask it to "create an indicator for songs that are a remix" based on my playlist data. Tableau Agent is aware that the term "remix" is frequently used in the Track Name field due to the initial indexing. Before accepting the computation, you can learn how it will behave by reading the informative description that is included with it. Tableau Agent is capable of understanding LODs, text and table computations, aggregations, and even regex writing!
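For readers who want to see the logic behind such an indicator outside Tableau, the following Python sketch flags track names containing the word "remix". It is an equivalent written for illustration only, not the calculation Tableau Agent generates, and the track names are made up.

```python
import re

# Match "remix" as a whole word, ignoring case.
REMIX_PATTERN = re.compile(r"\bremix\b", re.IGNORECASE)


def is_remix(track_name):
    """Return True when the track name contains the standalone word 'remix'."""
    return bool(REMIX_PATTERN.search(track_name))


# Hypothetical playlist entries.
PLAYLIST = ["Night Drive (Club Remix)", "Night Drive", "Sunrise - REMIX", "Premixed Tape"]
FLAGS = {name: is_remix(name) for name in PLAYLIST}
```

The word boundaries keep a title like "Premixed Tape" from being counted, which is the kind of behaviour worth checking in the generated description before accepting a calculation.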

Conclusion

Observe how Tableau Agent leverages reliable AI to help you realise the full potential of both your data and yourself. Explore Tableau Agent, which is only available in Tableau+, by watching the demo.

0 notes

Text

State Parks make Huge Economic Impact in Tennessee

Tennessee State Parks have an economic impact of $1.9 billion in the state and support employment of 13,587 people, according to an analysis by a leading economic consulting firm. Based on figures from fiscal year 2024, the report by Impact DataSource says the parks created $550 million in annual household income for Tennessee families. Because of the strong performance, state parks generated…

#Bledsoe County News#Chattanooga News#Dunlap News#Grundy County News#Haletown#Jasper News#Kimball News#Marion County News#Monteagle#New Hope#Pikeville#Sequatchie County News#Sequatchie Valley News#South Pittsburg News#Tennessee#Tennessee State Parks#Whitwell News

0 notes

Text

IBM Mainframe Modernization Strategy With Cloud Native Apps

IBM Mainframe modernization Strategy

The process of updating legacy mainframe programs, systems, and infrastructure to conform to contemporary business practices and technological advancements is known as “mainframe modernization.” By unlocking the potential of mainframe systems, this approach helps businesses leverage their current mainframe technology investments and reap the rewards of modernization. Mainframe modernization can improve agility, efficiency, cost, and customer experience.

What is Mainframe modernization

Mainframe modernization lets firms use cloud computing, machine learning, AI, and DevOps to innovate and grow. Seamless interfaces between modernized mainframe systems and other systems, applications, and data sources can establish new business models and revenue streams.

Mainframe modernization also helps businesses handle the problems associated with an ageing workforce, reduce operating and maintenance costs, and enhance system scalability and performance. By using the potential of mainframe modernization, organizations can preserve their market position, boost competitiveness, and realize major economic benefits.

Migrating a mainframe system to a different platform, such as a distributed or cloud-based system, can be difficult and risky. Modernizing, by contrast, enables businesses to take advantage of modernization while preserving the value of their current mainframe technology investments.

Make using digital channels easier

Organizations can now use (new) APIs to make Mainframe business operations and data available to applications and services operating in Azure. Additionally, Azure Logic Apps allow integration with popular enterprise software and more. These capabilities are made possible by the relationship between IBM and Microsoft.

Legacy Modernization Mainframe

Mainframe Application Modernization

An organisation can gain a great deal from modernizing its mainframe environment with APIs. First, APIs make it possible to integrate current tools and technologies with legacy mainframe systems. As a result, the company can leverage the most recent technological developments and improve the effectiveness and efficiency of its operations. With APIs, mobile apps, cloud computing, and artificial intelligence can be integrated with the mainframe to provide a more complete and contemporary solution.

Additionally, by making it possible to create intuitive dashboards and user interfaces that offer real-time access to mainframe data and services, APIs can improve decision-making and efficiency while also contributing to an improved user experience.

These APIs can be developed with IBM z/OS Connect and then connected to Azure API Management. Azure API Management is a fully managed service that helps businesses publish, secure, and manage APIs for their apps. It offers an extensive set of features and tools for creating, managing, and tracking APIs across their lifecycle.
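As a rough illustration of how a client application might call a mainframe-backed API published through Azure API Management, the Python sketch below builds an HTTPS request. The gateway URL, the /accounts/{id}/balance path, and the helper name build_balance_request are hypothetical placeholders, not part of any IBM or Microsoft product. Ocp-Apim-Subscription-Key is the default header that Azure API Management uses for subscription keys. The request is only constructed here, not sent.

```python
import urllib.request

# Hypothetical gateway URL; a real one comes from your API Management instance.
GATEWAY_URL = "https://example-apim.azure-api.net/mainframe"


def build_balance_request(account_id: str, subscription_key: str) -> urllib.request.Request:
    """Build (but do not send) a GET request for an account balance."""
    # Hypothetical path; the real path depends on how the API is defined.
    url = f"{GATEWAY_URL}/accounts/{account_id}/balance"
    request = urllib.request.Request(url, method="GET")
    # Default header name for API Management subscription keys.
    request.add_header("Ocp-Apim-Subscription-Key", subscription_key)
    request.add_header("Accept", "application/json")
    return request


if __name__ == "__main__":
    req = build_balance_request("12345", "<your-subscription-key>")
    print(req.get_method(), req.full_url)
```

Sending the request would require a real gateway and key, so the sketch stops at construction. The point is that the caller sees an ordinary HTTPS API and needs no knowledge of the mainframe behind it.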

These APIs can be used with Microsoft Power Platform to create web or mobile applications. Power Apps, part of Microsoft Power Platform, is a low-code or no-code option for building a web-based user interface that integrates with the services described above.

Expanding the Azure Logic Apps experience

Azure Logic Apps offers developers an additional means of modernizing mainframe systems, with features such as cost-effectiveness, real-time processing, machine learning capabilities, and integration with well-known enterprise software. By automating repetitive processes and workflows, developers can improve overall efficiency and free up mainframe resources for more important tasks.

Create real-time data sharing

For most firms, attracting clients and standing out from the competition through personalized offers is a primary goal. This calls for more real-time data exchange between digital front-end apps hosted on Microsoft Azure and core business programs running on the mainframe. All of this is possible without compromising essential business applications or their service-level agreements (SLAs).

The IBM Z Digital Integration Hub allows developers to curate and combine data that is kept on the mainframe. Rather than shifting all the raw data from the numerous core applications and related data sources to the cloud, they can use mainframe-optimized technologies.

These technologies can interact, communicate, store the curated and aggregated data in memory, and present it through a variety of standards-based interfaces, including open standards-based APIs and event-based systems such as Kafka.
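To make the idea of curating data before sharing it more concrete, here is a minimal Python sketch that aggregates raw transactions into one in-memory summary per account. It does not use any IBM Z Digital Integration Hub API. The Transaction type, its fields, and the curate function are assumptions made purely for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Transaction:
    """Hypothetical raw record produced by a core application."""
    account_id: str
    amount: float


def curate(transactions):
    """Aggregate raw transactions into one in-memory summary per account."""
    summary = defaultdict(lambda: {"count": 0, "total": 0.0})
    for tx in transactions:
        entry = summary[tx.account_id]
        entry["count"] += 1
        entry["total"] += tx.amount
    return dict(summary)


if __name__ == "__main__":
    raw = [
        Transaction("A1", 120.0),
        Transaction("A1", -20.0),
        Transaction("B7", 55.5),
    ]
    # Only the compact summary, not every raw record, would be shared downstream.
    print(curate(raw))
```

The design choice this illustrates is that downstream consumers receive a small curated view instead of the full raw data set.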

The IBM Z Digital Integration Hub can be set up to proactively share curated data with Microsoft Fabric event streams via Kafka in an event-based manner. These event streams can be used to process account notifications when new transactions of interest are processed on the system of record.

Subsequently, Data Activator flows can be triggered, sending the notification to consumers and downstream applications. By combining the capabilities of IBM Z Digital Integration Hub (zDIH) with Microsoft Fabric, clients can use near-real-time information from IBM Z to design innovative solutions quickly.
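The event-based pattern described above can be sketched in a few lines of Python. This is a simulation only: an in-process queue stands in for the Kafka event stream, and a simple function stands in for the rule that triggers a notification. The threshold, the event fields, and the function names are hypothetical, and no Kafka, Microsoft Fabric, or Data Activator API is used.

```python
import json
import queue

# Hypothetical rule: a transaction is "interesting" at or above this amount.
THRESHOLD = 10_000.0


def is_interesting(event: dict) -> bool:
    """Return True when the event should trigger a notification."""
    return event["amount"] >= THRESHOLD


def process_stream(stream: queue.Queue) -> list:
    """Drain the stream and build one notification per interesting event."""
    notifications = []
    while not stream.empty():
        event = json.loads(stream.get())
        if is_interesting(event):
            notifications.append(
                f"Account {event['account_id']}: "
                f"transaction of {event['amount']:.2f} processed"
            )
    return notifications


if __name__ == "__main__":
    stream = queue.Queue()
    stream.put(json.dumps({"account_id": "A1", "amount": 250.0}))
    stream.put(json.dumps({"account_id": "B7", "amount": 15000.0}))
    for message in process_stream(stream):
        print(message)
```

In a real deployment the queue would be replaced by a consumer on the event stream, and the notification step by whatever action the downstream flow is configured to take.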

Mainframe Modernization Strategy

It is the responsibility of IT leaders to extend and integrate mainframe capabilities in order to promote business innovation and agility. In fact, four out of five organizations feel that they must update mainframe-based programs quickly in order to remain competitive. The difficult part, though, is finding the application modernization strategy that provides the best return on investment.

IBM Mainframe Modernization

IBM Consulting can assist you in creating a hybrid cloud strategy that brings together common agile practices and application interoperability across IBM Z and Microsoft Azure under a single integrated operating architecture. IBM helps keep applications and data secure, encrypted, and resilient by placing them on the appropriate platform. With IBM Z at its heart, this hybrid cloud platform is agile and seamlessly integrated.

IBM Consulting uses IBM Z and Azure to promote IT automation, create Azure applications, and expedite the transformation of mainframe systems. By working together, firms may eliminate the need for specialized skills, maximize investment value, and innovate more quickly.

Discover how to leverage your mainframe’s potential and boost company success. Click the link below to read more about mainframe modernization and to schedule a meeting with one of our specialists to discuss how IBM can help you achieve your goals.

Read more on Govindhtech.com

#CloudNativeApps #cloudcomputing #machinelearning #DevOps #datasources #systemscalability #Azure #Microsoft #AzureLogicApps #artificialintelligence #apimanagement #azureapi #mobileapplication #MicrosoftAzure #news #technews #technology #technologynews #technologytrends #govindhtech
