#Remove Duplicate Characters From a String In C

Explore tagged Tumblr posts

Video

youtube

LEETCODE PROBLEMS 1-100 . C++ SOLUTIONS

Arrays and Two Pointers 1. Two Sum – Use hashmap to find complement in one pass. 26. Remove Duplicates from Sorted Array – Use two pointers to overwrite duplicates. 27. Remove Element – Shift non-target values to front with a write pointer. 80. Remove Duplicates II – Like #26 but allow at most two duplicates. 88. Merge Sorted Array – Merge in-place from the end using two pointers. 283. Move Zeroes – Shift non-zero values forward; fill the rest with zeros.

Sliding Window 3. Longest Substring Without Repeating Characters – Use hashmap and sliding window. 76. Minimum Window Substring – Track char frequency with two maps and a moving window.

Binary Search and Sorted Arrays 33. Search in Rotated Sorted Array – Modified binary search with pivot logic. 34. Find First and Last Position of Element – Binary search for left and right bounds. 35. Search Insert Position – Standard binary search for target or insertion point. 74. Search a 2D Matrix – Binary search treating matrix as a flat array. 81. Search in Rotated Sorted Array II – Extend #33 to handle duplicates.

Subarray Sums and Prefix Logic 53. Maximum Subarray – Kadane’s algorithm to track max current sum. 121. Best Time to Buy and Sell Stock – Track min price and update max profit.

Linked Lists 2. Add Two Numbers – Traverse two lists and simulate digit-by-digit addition. 19. Remove N-th Node From End – Use two pointers with a gap of n. 21. Merge Two Sorted Lists – Recursively or iteratively merge nodes. 23. Merge k Sorted Lists – Use min heap or divide-and-conquer merges. 24. Swap Nodes in Pairs – Recursively swap adjacent nodes. 25. Reverse Nodes in k-Group – Reverse sublists of size k using recursion. 61. Rotate List – Use length and modulo to rotate and relink. 82. Remove Duplicates II – Use dummy head and skip duplicates. 83. Remove Duplicates I – Traverse and skip repeated values. 86. Partition List – Create two lists based on x and connect them.

Stack 20. Valid Parentheses – Use stack to match open and close brackets. 84. Largest Rectangle in Histogram – Use monotonic stack to calculate max area.

Binary Trees 94. Binary Tree Inorder Traversal – DFS or use stack for in-order traversal. 98. Validate Binary Search Tree – Check value ranges recursively. 100. Same Tree – Compare values and structure recursively. 101. Symmetric Tree – Recursively compare mirrored subtrees. 102. Binary Tree Level Order Traversal – Use queue for BFS. 103. Binary Tree Zigzag Level Order – Modify BFS to alternate direction. 104. Maximum Depth of Binary Tree – DFS recursion to track max depth. 105. Build Tree from Preorder and Inorder – Recursively divide arrays. 106. Build Tree from Inorder and Postorder – Reverse of #105. 110. Balanced Binary Tree – DFS checking subtree heights, return early if unbalanced.

Backtracking 17. Letter Combinations of Phone Number – Map digits to letters and recurse. 22. Generate Parentheses – Use counts of open and close to generate valid strings. 39. Combination Sum – Use DFS to explore sum paths. 40. Combination Sum II – Sort and skip duplicates during recursion. 46. Permutations – Swap elements and recurse. 47. Permutations II – Like #46 but sort and skip duplicate values. 77. Combinations – DFS to select combinations of size k. 78. Subsets – Backtrack by including or excluding elements. 90. Subsets II – Sort and skip duplicates during subset generation.

Dynamic Programming 70. Climbing Stairs – DP similar to Fibonacci sequence. 198. House Robber – Track max value including or excluding current house.

Math and Bit Manipulation 136. Single Number – XOR all values to isolate the single one. 169. Majority Element – Use Boyer-Moore voting algorithm.

Hashing and Frequency Maps 49. Group Anagrams – Sort characters and group in hashmap. 128. Longest Consecutive Sequence – Use set to expand sequences. 242. Valid Anagram – Count characters using map or array.

Matrix and Miscellaneous 11. Container With Most Water – Two pointers moving inward. 42. Trapping Rain Water – Track left and right max heights with two pointers. 54. Spiral Matrix – Traverse matrix layer by layer. 73. Set Matrix Zeroes – Use first row and column as markers.

This version is 4446 characters long. Let me know if you want any part turned into code templates, tables, or formatted for PDF or Markdown.

0 notes

Text

Remove Duplicate Characters From a String In C#

Remove Duplicate Characters From a String In C#

Remove Duplicate Characters From a String In C# Console.Write(“Enter a String : “);string inputString = Console.ReadLine();string resultString = string.Empty;for (int i = 0; i < inputString.Length; i++){if (!resultString.Contains(inputString[i])){resultString += inputString[i];}}Console.WriteLine(resultString);Console.ReadKey(); Happy Programming!!! -Ravi Kumar Gupta.

View On WordPress

#.NET#c#Coding#java#Program#program in c#Programming#Remove Duplicate Characters From a String#Remove Duplicate Characters From a String In C#Technology

0 notes

Text

GSoC logs (June 5 –July 11)

July 5

Reclone and commit all changes to new branch.

Get preview component done

Send the demo to mentors and then touch proto file.

Recloned. In the new clone, I made new changes. Everything works.

Turns out any write operation in the proto file is wrecking stuff. I navigated to the proto file in vscode and simply saved. This causes all the errors again.

Some import errors even though proto syntax are perfectly fine. Hmmm. protoc-gen-go: program not found or is not executable

I tried making the proto file executable with chmod +x. Still same issue.

Proto files aren’t supposed to be executable

The error is actually referring to protoc-gen-go. Saying that protoc-gen-go is not found or not executable. Not the proto file.

Moving on to completing the preview component.

Preview component

Ipynb html. Axios get and render the htmlstring from response data in the iframe. Nop, v-html.

Serve html file from the http server for now. Wait, that's not possible.

Okay, maybe I should just use hello api for now. Since both request and response objects have string type properties.

July 6

Leads & Tries.

Try Samuel’s suggestion -

“Do you have protoc on your path?

sometimes VScode installs its own version of some tools on a custom $PATH - it could as well be that some extension is not properly initialized “

Interesting - I tried git diff on the proto file and this happens just by vscode saving it.

old mode 100644 new mode 100755

https://unix.stackexchange.com/a/450488

Changed back to 0644 and still the same issue in vscode.

I just forgot I made the protofile executable yesterday and forgot to change it back. Wasted like an hour on it.

Read about makefile. Do as said. Annotations.proto forward slash path. ??

Set gopath Permanently.

Edit proto file in atom. (Prepare to reclone ;_;)

Trying the same in vim ()

Text editor.

Okay, so the issue isn't editor or ide specific.

Trying to change paths

Gopath was /$HOME/go .. changed it to /usr/local/go/bin

New error.

GO111MODULE=off go get -v github.com/golang/protobuf/protoc-gen-go github.com/golang/protobuf (download) package github.com/golang/protobuf/protoc-gen-go: mkdir /usr/local/go/bin/src: permission denied make: *** [Makefile:164: /usr/local/go/bin/bin/protoc-gen-go] Error 1

https://github.com/golang/go/issues/27187

sudo chown -R $USER: $HOME

Doesn’t feel right.

The problem could be that I have multiple go distributions. After this is done, I need to clean this shit this weekend.

Still permission denied

$GOPATH was supposed to be /usr/local/go not /usr/local/go/bin

Okay, changed it, but go env GOPATH is giving warning that GOROOT and GOPATH are same.

So from what I’ve read, gopath is the workspace (where the libraries we need for the project are installed), and goroot is the place where go is installed(Home to the go itself). Our makefile is trying to install and use the modules in GOPATH. Okay, so my GOPATH was right before.

Final tries

Follow the error trails.

Find out what exactly is happening when I save this file.

Okay, incase they don’t work out. Try to send the html string instead of “Hello ” string without changing proto file.

Since all ipynb files can have same css, try and send only the html elements. And do the css separately in frontend.

Okay, I was able to send a sample html string with the response with the same api. Since response message type was string itself..

Rendered the HTMLString in the preview component.

I’m gonna embed the nbconvert python script by tonight. Update: that was overoptimistic.

So I have the nbconvert python script to generate basic html strings.

I should also remove those comments in the html.

So we’re using the less performance option of keeping duplicate copies of the data in both go and python since we don’t need much memory in the first place.

Read about python c api.

July 7

Learned one or 2 things about embedding python. https://github.com/ardanlabs/python-go/tree/master/py-in-mem

Following this https://poweruser.blog/embedding-python-in-go-338c0399f3d5

So I need python3.7. Because go-python3 only supports python3.7. But there’s python 3.8 workaround in the same blog. go get github.com/christian-korneck/go-python3 Failed with a bunch of errors.

https://blog.filippo.io/building-python-modules-with-go-1-5/ goodread. But that’s not what I need.

https://www.ardanlabs.com/blog/2020/09/using-python-memory.html

This is a bit more challenging but more understandable.

He's trying to use in-memory methods, so both go and python can access the data. No duplication. Since the guide is more understandable and uses python3.8 itself, I should give it a try.

I should start with this right after fixing the make issue.

Back to build fixing

Do as samuel suggested. - no luck.

What I know so far. The issue is rooted with golang or protobuf or sth related. Since the issue is there for all the text editors including vim.

I found sth weird -Which go => /usr/local/go/bin/go. When it gives /usr/local/bin/go to this dude https://stackoverflow.com/a/67419012/13580063

Okay, not so weird.

July 8

Reinstall golang#2. https://stackoverflow.com/a/67419012/13580063 ? Nop.

See protoc installation details.

Read more about protoc, protobugs https://developers.google.com/protocol-buffers/docs/reference/go-generated#4. Read more about golang ecosystem - 30m and see if **which go **path is weird.

Also found this https://github.com/owncloud/ocis-hello/issues/62 Nop.

Make generate is giving this.

GO111MODULE=off go get -v github.com/golang/protobuf/protoc-gen-go GO111MODULE=on go get -v github.com/micro/protoc-gen-micro/v2 GO111MODULE=off go get -v github.com/webhippie/protoc-gen-microweb GO111MODULE=off go get -v github.com/grpc-ecosystem/grpc-gateway/protoc-gen-openapiv2 protoc \ -I=third_party/ \ -I=pkg/proto/v0/ \ --go_out=pkg/proto/v0 hello.proto protoc-gen-go: program not found or is not executable Please specify a program using absolute path or make sure the program is available in your PATH system variable --go_out: protoc-gen-go: Plugin failed with status code 1. make: *** [Makefile:176: pkg/proto/v0/hello.pb.go] Error 1

echo $GOPATH is empty. go env GOPATH is giving the right path.

export GOROOT=/usr/local/go export GOPATH=$HOME/go export GOBIN=$GOPATH/bin export PATH=$PATH:$GOROOT:$GOPATH:$GOBIN

Now, I get this

GO111MODULE=off go get -v github.com/grpc-ecosystem/grpc-gateway/protoc-gen-openapiv2 protoc \ -I=third_party/ \ -I=pkg/proto/v0/ \ --go_out=pkg/proto/v0 hello.proto protoc-gen-go: invalid Go import path "proto" for "hello.proto" The import path must contain at least one forward slash ('/') character. See https://developers.google.com/protocol-buffers/docs/reference/go-generated#package for more information. --go_out: protoc-gen-go: Plugin failed with status code 1. make: *** [Makefile:176: pkg/proto/v0/hello.pb.go] Error 1

Did this https://github.com/techschool/pcbook-go/issues/3#issuecomment-821860413 and the one below and started getting this

GO111MODULE=off go get -v github.com/grpc-ecosystem/grpc-gateway/protoc-gen-openapiv2 protoc \ -I=third_party/ \ -I=pkg/proto/v0/ \ --go_out=pkg/proto/v0 hello.proto protoc \ -I=third_party/ \ -I=pkg/proto/v0/ \ --micro_out=pkg/proto/v0 hello.proto protoc \ -I=third_party/ \ -I=pkg/proto/v0/ \ --microweb_out=pkg/proto/v0 hello.proto protoc \ -I=third_party/ \ -I=pkg/proto/v0/ \ --swagger_out=logtostderr=true:pkg/proto/v0 hello.proto protoc-gen-swagger: program not found or is not executable Please specify a program using absolute path or make sure the program is available in your PATH system variable --swagger_out: protoc-gen-swagger: Plugin failed with status code 1. make: *** [Makefile:194: pkg/proto/v0/hello.swagger.json] Error 1

July 9

Now trying to read this from the error https://developers.google.com/protocol-buffers/docs/reference/go-generated#package

option go_package = "github.com/anaswaratrajan/ocis-jupyter/pkg/proto/v0;proto";

Tried this and

GO111MODULE=off go get -v github.com/grpc-ecosystem/grpc-gateway/protoc-gen-openapiv2 protoc \ -I=third_party/ \ -I=pkg/proto/v0/ \ --go_out=pkg/proto/v0 hello.proto protoc \ -I=third_party/ \ -I=pkg/proto/v0/ \ --micro_out=pkg/proto/v0 hello.proto protoc \ -I=third_party/ \ -I=pkg/proto/v0/ \ --microweb_out=pkg/proto/v0 hello.proto protoc \ -I=third_party/ \ -I=pkg/proto/v0/ \ --swagger_out=logtostderr=true:pkg/proto/v0 hello.proto protoc-gen-swagger: program not found or is not executable Please specify a program using absolute path or make sure the program is available in your PATH system variable --swagger_out: protoc-gen-swagger: Plugin failed with status code 1. make: *** [Makefile:194: pkg/proto/v0/hello.swagger.json] Error 1

Okay, so proto-gen-swagger is not in gopath as expected. So this isn’t working.

$(GOPATH)/bin/protoc-gen-swagger: GO111MODULE=off go get -v github.com/grpc-ecosystem/grpc-gateway/protoc-gen-openapiv2

Instead of protoc-gen-swagger, there’s protoc-gen-openapiv2 executable. So I replaced protoc-gen-swagger from last line in the makefile to the executable in the path.

https://grpc-ecosystem.github.io/grpc-gateway/docs/development/grpc-gateway_v2_migration_guide/

Turns out they renamed protoc-gen-swagger to protoc-gen-openapiv2

option go_package = "github.com/anaswaratrajan/ocis-jupyter/pkg/proto/v0;proto"; wasn't the right path.

There's a new directory github.com/anaswa... inside proto/v0/

So go_package path is messed up.

Just replacing swagger binary names in makefile lets you generate the proto files at github.com/anas… dir

Let’s try fixing the go_package path.

Wait, you don't generate them.

option go_package = "./;proto";

This is the right way.

So yea, I’m able to generate the go-code now. But make generate is still failing. The swagger file is still not generated. July 11

Ownclouders already tried to work on it.

https://github.com/owncloud/ocis-hello/issues/91

So what exactly is micro-web service? This protoc-gen-microweb is a protoc generator for micro web services. And it's generating this hello.pb.web.go

New error/ Make generate gives

`GO111MODULE=off go get -v github.com/grpc-ecosystem/grpc-gateway/protoc-gen-openapiv2 go generate github.com/anaswaratrajan/ocis-jupyter/pkg/assets panic: No files found goroutine 1 [running]: main.main() /home/anaswaratrajan/go/pkg/mod/github.com/!unno!ted/fileb0x[@v1](https://tmblr.co/mkYynE1Axr-EFCSIQIAtheA).1.4/main.go:101 +0x2765 exit status 2 pkg/assets/assets.go:12: running "go": exit status 1 make: *** [Makefile:83: generate] Error 1

Read more about the protoc generators used here.

0 notes

Text

Vim - Menus

Our beloved Vim offers some possibilities to create and use menus to execute various kinds of commands. I know the following solutions ...

the menu command family

the confirm function

the insert user completion (completefunc)

I will not bother you with a detailed tutorial. For more information I always recommend the best place to learn about Vim - the integrated help.

:menu

Let's start with the most obvious one, the menu commands. The menu commands can be used to create menus that can be called via GUI (mouse and keyboard) and via ex-mode. A good use-case is to create menus entries for commands that are not needed often, for which a mapping would be a waste of valueable key combinations and which can probably not be remembered anyway as they are used less frequently.

I'll keep it short here and as there are already tutorials out there. And of course the Vim help is the best place to read about it. :h creating-menus

Vim offers several menu commands. Depending on the current Vim mode your menu changes accordingly. Means if you are in normal mode you will see your normal mode menus, in visual mode you can see only the menus that make sense in visual mode, and so on.

Let's say we want to have a command in the menu that removes duplicate lines and keeps only one. We want that in normal mode the command runs for the whole file and that in visual mode, by selecting a range of lines, the command shall run only for the selected lines. We could put the following lines in our vimrc.

nmenu Utils.DelMultDuplLines :%s/^\(.*\)\(\n\1\)\+$/\1/<cr> vmenu Utils.DelMultDuplLines :s/^\(.*\)\(\n\1\)\+$/\1/<cr>

The here used commands are quite simple. After the menu command you see only 2 parameters. First one is the menu path. I say path because by using the period you can nest your menus. Here it is only the command directly under the Utils menu entry. The second parameter is the command to be executed just like you would do from normal mode.

There are 2 special characters that can be used in the first parameter. & and <tab>. & can be used to add a shortcut key to the menu and <tab> to add a right aligned text in the menu. Try it out!

Remember that you can access the menu also via ex-mode.

:menu Utils.DelMultDuplLines

But please don't type all the text and use the tabulator key to do the autocompletion for you. ;-)

confirm()

The confirm function. Luckily I already wrote about this possibility. So instead of copy-pasting the text I just link to it.

vim-confirm-function

set completefunc

Let's get dirty now. I guess the insert completion pop-up menu is well known by everyone. But did you know that you can misuse it for more than just auto-completion? I did not. I will show you what I mean.

Usually the auto-completion is triggered by pressing Ctrl-n, Ctrl-p or Ctrl-x Ctrl-something in insert mode. One of these key mappings is Ctrl-x Ctrl-u which triggers an user specific function. Means we can write a function that does what we want and assign it to the completefunc option. Let's check what we need to consider when writing such a function.

The completefunc you have to write has 2 parameters. And the function gets called twice by Vim. When calling the function the first time, Vim passes the parameters 1 and empty. In this case it is your task to usually find the beginning of the word you want to complete and return the start column. One the second call Vim passes 0 and the word the shall be completed.

function! MyCompleteFunc(findstart, base) if a:findstart " write code to find beginning of the word else " write code to create a list of possible completions end endfunction set completefunc=MyCompleteFunc

For more information and an example check :h complete-functions.

Now let's re-create the LaTeX example using the completefunc. Here is a possible solution that supports nested menus, so for 1 level menus the code can be reduced a lot.

https://gist.github.com/marcotrosi/e2918579bce82613c504e7d1cae2e3c0

Okay let's go through it step by step.

In the beginning you see 2 variables. InitMenu and NextMenu. InitMenu defines the menu entry point and NextMenu remembers the name of the next menu. I just wanted to have nested menus and that's what is this for.

Next is the completefunc we have to write. I called mine LatexFont. As shown before it consists of an if-else-block, where the if-true-block gets the word before the cursor and the else-block will return a list that contains some information. In the simplest form this would only be a list of strings that would be the popup-menu-entries. See the CompleteMonths example from the Vim help. But I added some more information. Let's see what it is.

The basic idea is to have one initial menu and many sub-menus, they are all stored in a single dictionary name menus and are somehow connected and I want to decide which one to return. As I initialized my InitMenu variable with "Font" this must be my entry point. After the declaration of the local menus dictionary you can see an if clause that checks if s:NextMenu is empty. So if s:NextMenu is empty it will be initialized with my init value I defined in the very beginning. And at the end I return one of the menus so that Vim can display it as a popup menu.

Now let's have a closer look at the big dictionary. You can see 4 lists named Font, FontFamily, FontStyle and FontSize. Each list contains the popup-menu entries. I use the key user_data to decide whether I want to attach a sub-menu or if the given menu is the last in the chain. To attach a sub-menu I just provide the name of the menu and when the menu ends there I use the string "_END_". So the restriction is that you can't name a menu "_END_" as it is reserved now. By the way, I didn't try it, but I guess that user_data could be of any datatype and is probably not limited to strings.

Let's see what the other keys contain. There are the keys word, abbr and dup. word contains the string that replaces the word before the cursor, which is also stored in a:base. abbr contains the string that is used for display in the popup-menu. From the insert auto-completion we are used to the word displayed is also the word to be inserted. Luckily Vim can distinguish that. This gives me the possibility to display a menu (like FontFamily, FontStyle, FontSize) but at the same time keeping the original unchanged word before the cursor. This is basically the whole trick. Plus the additional user_data key that allows me to store any kind of information for re-use and decisions. With the dup key I tell Vim to display also duplicate entries. For more information on supported keys check :h complete-items.

Now let's get to the rest of the nested menu implementation. Imagine you have selected an entry of the initial menu. You confirm by pressing SPACE and now I want to open the sub-menu automatically. To achieve that I use a Vim event named CompleteDone which triggers my LatexFontContinue function, and the CompleteDone event is triggered when closing the popup menu either by selecting a menu entry or by aborting. Within that function I decide whether to trigger the user completion again or to quit. Beside the CompleteDone event Vim also has a variable named v:completed_item that contains a dictionary with the information about the selected menu item. The first thing I do is saving the user_data value in a function local variable.

let l:NextMenu = get(v:completed_item, 'user_data', '')

The last parameter is the default value for the get function and is an empty string just in case the user aborted the popup-menu in which case no user_data would be available.

One more info - this line ...

inoremap <expr><esc> pumvisible() ? "\<c-e>" : "\<esc>"

... allows the user to abort the menu by pressing the ESC key intead of Ctrl-e.

And last but not least the if clause that either sets the script variable s:NextMenu to empty string when the local l:NextMenu is empty or "_END_" and quits the function without doing anything else, or the else branch that stores the next menu string in s:NextMenu and re-triggers the user completion.

The rest of the file is self-explanatory.

I'm sure we can change the code a bit to execute also other command types, e.g. by storing a command as a string and executing it.

Let me know what you did with it.

2 notes

·

View notes

Text

The Recruit (Chapter 17) - Mitch Rapp

Author: @were-cheetah-stiles

Title: “Day 78, Part I”

Characters: Mitch Rapp & Reader/OFC

Warnings: SMUT. IT’S A LOT OF FUCKING SMUT. like, blowjobs, light choking, vaginal sex, orgasms.. so much smut and cursing. IT’S SMUTAPALOOZA!

Author’s Note: yo... morning head is fun tho. im posting this for @mf-despair-queen who literally JUST begged me for Mitch smut. bless that fucking shirtless picture for making all of us collectively lose our shit. stay thirsty, my friends.

Summary: Mitch gets a smut filled morning with Y/n.

Chapter Sixteen - Chapter Seventeen - Chapter Eighteen

You woke wrapped in nothing but his limbs and the bedsheets. You closed your eyes, tilted your head up towards the sun coming through the curtains, softly bit your bottom lip and sighed. You were so happy. You turned slowly and quietly, and looked at Mitch sleeping, gently pushing some of the hair from his eyes. He was, without a doubt, the most beautiful man you had ever actually seen in person, and his peaceful resting face made your heart skip beats.

Mitch's legs moved under the sheets and he rolled onto his back, turning his head away from you. You smirked. He wasn't hard, but there was a small bulge that perked up under the thin white sheets on the bed. You rubbed your tongue along the bottom of your left canine and cracked a simple plot: you knew how you wanted to wake him up that morning.

You carefully climbed under the thin white sheet covering Mitch. He almost never slept with more than just the sheet when he slept with you because your body ran so hot at night, that if he slept with blankets, the two of you would wake up in a pool of sweat. You positioned yourself on your stomach, your feet hanging out from under the sheet on the side of the bed. You leaned against your left forearm as you licked your lips and picked up his penis with your right hand. It was big even when it was flaccid.

You popped the head into your mouth and lightly sucked. Mitch stirred gently and you grinned. You lifted his member and dragged your tongue on the underside, from the very base back up to the head, popping the tip back into your mouth for a quick suck. Mitch stirred more definitively, and he began to slowly grow in your hand.

You licked back up the side closest to you, base to tip, pushing your tongue a little bit firmer against him this time, and you heard him moan; a sound that he made when he woke up but a bit more breathy than usual. You wrapped your lips around his head and managed to get all of his semi-erect cock in your mouth. You moaned and the vibrations in your throat and mouth caused Mitch to rip the sheet off of him, revealing a sight that he wasn't sure until then was a dream or real.

"Holy shit." He mumbled as you bobbed up and down on him, your lips suctioned tight around his shaft. You let go with a popping noise. You maintained an intense and arrogant eye contact with him, as you moved in between his legs and rested on your knees in front of him. You looked like you were worshipping his cock, and you basically were.

He watched as you licked your lips and leaned your head down, still staring teasingly up at him. He bit his lip as you placed your delicate fingers under his balls, holding them up to your mouth like a snack that you had to have. You wet your lips and sucked his balls into your mouth, gently massaging them as they rotated over your tongue.

"Oh fuck." Mitch broke your eye contact and threw his head back, his fingers both digging into the sheets around him and pushing the hair away from his face. You were pleased with all the fuss he was making. You let his balls drop, lightly sucking on one side, then moving over to the other. You caught his attention again when you dragged the tip of your tongue from above his balls, across the underside of his base, up the shaft and then took him into your mouth again. He had grown substantially since you last had his long and thick length against your lips. You worked the shaft in tandem with your mouth, picking up your pace and barely blinking. Mitch's breathing became heavy as you went and he tucked hairs that were falling in your face, behind your ear.

"Please keep doing that." He begged, but you had other thoughts. You removed your hand from his shaft, pulling all of your hair to one side to drape over your shoulder, and began playing with his balls in your hand, as you then took as much of him as you could down your throat. Mitch jolted forward over the duel sensations. "Fuck... That works too." He mumbled in between pants.

He gathered your long y/h/c locks in his fist, close to your head and began creating the rhythm and timing that he wanted as he forced your head up and down on him. You gagged as Mitch pushed you farther than you had been going and his tip hit the back of your throat. You didn't stop him though. The sound of him moaning as you choked turned you on. You moaned and the vibrations in your throat set him loose.

"Oh fuck, Y/n. God damnit. Do you want me to cum in your mouth?" It was a cross between a threat and a question, and you just kept sucking and gagging, your hand no longer playing with his balls, but instead jerking off the little bit of shaft that he couldn't fit in your mouth. You smiled with your eyes as he looked down at you. It made him crazy with lust whenever you did that... It made him aggressive. He pushed your head down and you choked harder than you had before. Mitch backed off a little, but you pushed yourself back down as hard as he had pushed you before, choking again on his big, thick cock.

Mitch pulled your head up slightly and began thrusting himself up into your mouth with some speed. He was close and he just wanted to see your tongue painted white. You took every forceful thrust in stride and enjoyed yourself, bracing yourself against the bed, both arms on either side of his hips. Mitch pulled your head up, and pushed you back onto your knees in an upright position. He got on his knees and began stroking himself in front of your face. You opened your mouth, your cheekbones turned up in a grin. You playfully left your tongue flat in front of him. You glanced between his dark, lustful eyes and his red and wet tip. He gripped the back of your neck and pressed his cock against your tongue. You felt his load shoot into your mouth. He kept stroking and it shot out in strings against your tongue, cheeks, lips. You let him fill you up and you waited, mouth open until he was done.

He kept his left hand on the back of your neck and watched as you swallowed and then ran your tongue against your lips. He reached his thumb on his right hand against the corner of your mouth and wiped cum off of your face. You reached up for his hand and popped his thumb into your mouth, sucking against it until you had every last drop of him. The breath hitched in the back of his throat as he watched you swallow the last bits of his seed. He dropped to the mattress and watched as you climbed off of the bed.

He held his tender cock in his hand, and closed his eyes, a happy smirk resting on his lips. You grabbed your underwear from the night before off the floor and his black, long-sleeved crew neck shirt and got dressed. You tip-toed over to him and smiled. He looked peaceful and pleased. You leaned over and kissed him lightly on the lips. "I'm going to make breakfast. Sleep a little longer." He nodded slightly and sighed, drifting back to sleep.

Mitch woke up, swung his muscular legs over the side of the bed and stretched. He felt great. He grabbed his blue plaid pajama pants off the floor and secured them around his hips. He didn't want to bother with the rest of his clothes if he was just going to shower after breakfast. He heard you moving around in the kitchen downstairs, and he inhaled the aroma of Belgian waffles being baked. He walked down the spiral staircase and saw your back turned, paying attention to whatever it was that you were making on the stove. The two of you had stopped by the grocery store on the way back from Steven's the night before to pick up some essentials to stock the kitchen with for the rest of your time there.

Mitch hadn't really looked around the house the day before. He slipped into the room that he had found you in the day before, on the first floor, and quickly realized that it was probably your father's old office and library. It was the only room in the house that still had pictures of the Hurley's. You had purged every other room of those personal mementos. Mitch wasn't sure if that was because the memories were too painful or if it was the spy in you that wanted to be able to make a quick get away if you ever needed to. He saw a duplicate of the picture that you had in your bedroom back at The Barn, and he traced his fingers along the top of the frame. Not a speck of dust came off and Mitch realized that you definitely had your cleaning crew take extra special care of this room.

He walked over to the bookshelf that took up an entire wall and saw a row of hardcover books with the same author's name. He pulled one out that made him smile, and carefully flipped through the pages, before putting it back on the shelf. A picture of you on a swing set with another little girl that looked a lot like Beth sat on the shelf above the old books and Mitch found himself thinking how he hoped his children had your eyes. He caught himself in his daydream, contorted his face with shock, and then felt the corner of his mouth turn up. It was not the most absurd thing he had ever thought about. He grinned, looking down at the floor and shook his head.

"What are you grinning about?" Mitch heard you whisper, as you leaned half your body against the other side of the door frame, holding onto the wood around your face.

Mitch walked up to you and kissed you lightly on the lips. "Just looking at how cute you were as a kid... breakfast ready?" You nodded, and turned. You laughed out loud when Mitch came up behind you and gripped his hands against your hips, walking in step with your stride towards the kitchen.

You sat down at the counter together and began eating your waffles and bacon and sausage and hash browns. Mitch swallowed some orange juice and watched as you poured more maple syrup on your plate. He laughed to himself and leaned back in the stool. "Do you want some waffles with your maple syrup, Y/n/n?"

You took the strawberry off of your plate and placed it in between your lips, then looked up at Mitch with the strangest look on your face. "........I hate waffles." You tried to stifle a grin.

Mitch burst out laughing. "So why the hell did you make them?"

"YOU LOVE WAFFLES!" You yelled back, laughing into your arms on the counter.

Mitch settled down, his cheeks hurt from smiling. "Oh god, I love..... that you are willing to eat something you hate just because you know I love it." He recovered quickly, but he realized that he almost said what had been on the tip of his tongue for days. You heard it too but you didn't react and you didn't want to presume. Mitch changed the subject. "So, was that a first edition copy of The Great Gatsby?" He asked, referencing the books that put a smile on his face in your Dad's library earlier.

You nodded as you ate everything on the plate that wasn't a waffle. "Those are all of F. Scott Fitzgerald's novels in their first edition. My dad was definitely a collector."

"Is that why that book is your favorite?" Mitch asked you, a smile not having left his face since he got out of bed that morning.

"Yes, he used to read it to me when I was growing up, like twice a year at least. He loved that book because he grew up in a town called Sands Point, which is at the very tip of East Egg in the book... that collection of books are probably my most prized possessions."

"So there is a fire and you save me or the books?" Mitch proposed the absurd hypothetical.

"Oh, you're toast." You said with a grin, getting up from the stool to clear your plates.

He got up to clear his own and help you with the dishes when he saw you reach up on your tip-toes to put the waffle mix back in the cabinet above the fridge. Your taut but plump ass peeking out from under the hem of his favorite black shirt, and he stepped up behind you, pressing his body against yours as he easily placed the box away. He snaked his hands around your front, pulling the shirt up from the bottom until his hands rested on your hips. You exhaled heavily and leaned against him. Mitch slid one of his big, veiny hands down the front of your white cotton underwear and felt how wet you were. He began gently rubbing your clit, pulling you against him with his other hand, as you reached up behind you and grabbed a fistful of his hair. He breathed in your sweet scent and closed his eyes as you moved your arm around his, and began rubbing your palm against his cock, growing quickly inside of his pants.

You had cupped your hand around his shaft and were rubbing it through his soft cotton pants, leaving him so turned on that he literally stopped rubbing your clit and leaned into your touch. Mitch took a deep breath and came to. You had taken care of him, it was time for him to take care of you.

He grabbed your hand from his cock and used his body to push you up against the marble countertop, pushing the dishes and bowls to the side, and bending you over against the cool surface. Mitch pulled his shirt off of you, pulling you up against him, and dragging his hand down your chest. You moaned at his roaming touch.

Mitch bent you back against the countertop again, your cheek pressed against the cool surface, as he gently pulled all of your hair to one side. He pressed his bare chest against your back, running his hands from your shoulders down your arms to intertwine his fingers with yours, spreading your arms out next to you. He had you completely pinned down as he nibbled on your earlobe and heard you moan softly.

He released your hands and moved his lips towards the back of your neck. He swept the hair out of the way again and left long, wet and warm kisses on the back of your neck. You moaned a little louder. He pulled back slightly and softly blew cool air against the wet kisses he had left on you and you shivered. All of your nerves were standing on edge waiting to see what he would do next.

Mitch leaned back over, his hot skin pressed against your hot skin, and he began to leave long, wet, warm kisses on your shoulder blades, leaving equal amounts on both sides and then meeting back at your spine. You were breathing heavily underneath him. Mitch took the tip of his tongue and dragged it down the length of your spine, his hands running down your sides as he went. You let out your loudest moan yet and arched your back away from him, pressing your body against the counter harder. It was a part of your body that was woefully neglected by Mitch's mouth and you went wild over the rare sensations. Mitch stayed focused on your reaction and blew cool air back over the wet trail on your back. He then left long, sucking kisses back down your spine, taking care to go slowly.

"Oh god, Mitch. That feels so good." You whined, not wanting him to stop.

Mitch dropped to his knees behind you, slowly pulling your white cotton panties, with a growing wet spot by your pussy, down your legs. He grabbed fistfuls of your ass, pushing you up on the counter further. He kissed the backs of your thighs, leaving long, warm kisses down to the backs of your knees. You squirmed with each new touch as he kissed all the way down to your ankles. He worshipped every inch of your body and he wanted to make sure you knew that.

Mitch glanced up at your swollen pink lips, barely sticking out between your thighs, and he recalled the sweet taste in his mouth. He got on his knees and spread your ass cheeks apart; you wiggled your body slightly as he ran his thumbs just barely over your inner lips; just grazing the surface. He felt the warmth radiating off of you. He leaned up and dragged his mouth over your opening, down to your clit; a messy and somewhat toothy interaction that left you screaming.

"Aghhh.. FUCK, MITCH. oh my god." You had been aching for him to touch you there.

He nibbled more softly against your clit and sucked at it, pulling it away from you with the very tips of his teeth. You writhed on the counter with each new thing he did. Mitch sucked and sucked for a few more moments, flicking your nub with the tip of his tongue, driving you wild.

Finally, he rose from his knees, pulling his pants down to his ankles, and he pulled you back towards the edge of the counter. You were panting against the white marble as you felt him press his hard cock up against you. He had grown to prefer not just shoving it in, but inching his length in slowly; getting to feel every bump and curve of your walls, but that was not going to work for him today. He was entirely too riled up.

"Please." You whispered right before he pushed himself inside of you in one swift motion. He moaned over how tight you were in that position and how deep he immediately went inside of you. You yelped out the moment he entered you.

He began thrusting hard against you, pulling your hair back so he could see your face. He picked you up by the throat and then let go, slowing down and remembering how he found you and Dan the night you were assaulted. You looked behind you, reached for his hand, and wrapped it back around your throat. Mitch was not Dan.

Mitch inhaled deeply through his nose as he felt your heart beat against the veins in your soft and slender neck. He pulled you close to him, picking up his pace, his hand still around your neck, his other hand fastened around your hip, and he messily pressed his lips against yours. You dug your nails into his forearm as you hungrily bit at his lower lip. He continued his deep thrusts into your pussy and pushed you back against the cold countertop. He reached his arm around your front, pulling you slightly away from the marble, and began rubbing your clit vigorously. The muscles in his forearm strained as he picked up speed and pressure on your swollen nub, as you told him you were close and begged him for release.

"Oh... ohh...." You moaned loudly as you came undone, and Mitch felt your walls collapse around him. This was the tightest your sweet little cunt had ever been and he came undone as well. One more rough thrust deep into you was all he needed. You screamed at the intense pressure that his depth caused you to feel, and then you cooed as you felt his chest press up against your back, his fingers intertwine with yours, and warm cum begin to fill your insides. You would kill for the feeling, both emotional and physical, that you got when he came inside of you. You knew that there was nothing better.

Your breathing synced up as you both came down from your climaxes. Mitch rubbed his cheek against the back of your neck and closed his eyes. "That was the best sex I've ever had." He whispered into your skin.

"I'm going to have to agree with you." You echoed his sentiments, enjoying the feeling of his fat, softening cock still lightly throbbing inside of you. You sighed. "What time is it?"

Mitch glanced behind him and saw that the clock on the stove said 10:45AM. "It's almost 11." He said as he reluctantly pulled out of you, watching his seed drip down your thigh.

You turned around to face Mitch, standing for the first time in at least forty minutes. You held yourself up with the edge of the counter. "We need to go, my love. You have lunch with Steven and I have lunch with Katie and Jeannette."

"I know." He leaned down and kissed you. A smile coming across his face at your new pet name for him. 'My love'. It made his heart skip a beat because of how organically it came off your lips. He watched as you began walking for the staircase, and he smirked. "You know, if we ever lived here, the first thing I would do is install a shower down here, because I cannot have you constantly dripping my cum all over these hardwood floors." Mitch teased you, who had grabbed a paper towel before you left the kitchen and was reaching down every few steps to wipe up your thighs.

"Shut the fuck up. How about that?" You said with a grin that made your cheeks hurt, as you made your way up the stairs.

bless.

@chivesoup @confidentrose @alexhmak @dontstopxx @iloveteenwolf24 @surpeme-bean @snek-shit @kalista-rankins @parislight @cleverassbutt @damndaphneoh @mgpizza2001 @chionophilic-nefelibata @ninja-stiles @sarcasticallystilinski @teenage-dirtbagbaby @mrs-mitch-rapp93 @alizaobrien @twsmuts @rrrennerrr @sorrynotsorrylovesome @lovelydob @iknowisoundcrazy @5secsxofamnesia @vogue-sweetie @dylrider @ivette29 @therealmrshale @twentyone-souls @sunshineystilinski @snicketyssnake @xsnak-3x @eccentricxem @inkedaztec @awkwarddly @lightbreaksthrough @maddie110201 @hattyohatt @rhyxn @amethystmerm4id @completebandgeek @red-wine-mendes @katieevans371 @girlwiththerubyslippers @theneverendingracetrack @snipsnsnailsnwerewolftales

#mitch rapp#mitch rapp x reader#the recruit aa#american assassin#dylan o'brien#mitch rapp fan fic#mitch rapp smut#SMUT#all the smut#mitch rapp fluff#mitch rapp x ofc#american assassin au#american assassin fan fic#dylan o'brien imagine#dylan o'brien one shot#dylan obrien#were-cheetah-stiles#fan fiction#fan fic writing#fan fic#teen wolf#the maze runner#the internship#the first time#caleb holloway#stiles stilinski#dave hodgman#stuart twombly

505 notes

·

View notes

Text

Mongoose 101: An Introduction to the Basics, Subdocuments, and Population

Mongoose is a library that makes MongoDB easier to use. It does two things:

It gives structure to MongoDB Collections

It gives you helpful methods to use

In this article, we'll go through:

The basics of using Mongoose

Mongoose subdocuments

Mongoose population

By the end of the article, you should be able to use Mongoose without problems.

Prerequisites

I assume you have done the following:

You have installed MongoDB on your computer

You know how to set up a local MongoDB connection

You know how to see the data you have in your database

You know what are "collections" in MongoDB

If you don't know any of these, please read "How to set up a local MongoDB connection" before you continue.

I also assume you know how to use MongoDB to create a simple CRUD app. If you don't know how to do this, please read "How to build a CRUD app with Node, Express, and MongoDB" before you continue.

Mongoose Basics

Here, you'll learn to:

Connect to the database

Create a Model

Create a Document

Find a Document

Update a Document

Delete a Document

Connecting to a database

First, you need to download Mongoose.

npm install mongoose --save

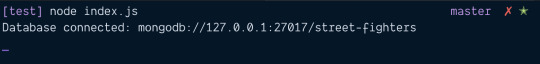

You can connect to a database with the connect method. Let's say we want to connect to a database called street-fighters. Here's the code you need:

const mongoose = require('mongoose') const url = 'mongodb://127.0.0.1:27017/street-fighters' mongoose.connect(url, { useNewUrlParser: true })

We want to know whether our connection has succeeded or failed. This helps us with debugging.

To check whether the connection has succeeded, we can use the open event. To check whether the connection failed, we use the error event.

const db = mongoose.connection db.once('open', _ => { console.log('Database connected:', url) }) db.on('error', err => { console.error('connection error:', err) })

Try connecting to the database. You should see a log like this:

Creating a Model

In Mongoose, you need to use models to create, read, update, or delete items from a MongoDB collection.

To create a Model, you need to create a Schema. A Schema lets you define the structure of an entry in the collection. This entry is also called a document.

Here's how you create a schema:

const mongoose = require('mongoose') const Schema = mongoose.Schema const schema = new Schema({ // ... })

You can use 10 different kinds of values in a Schema. Most of the time, you'll use these six:

String

Number

Boolean

Array

Date

ObjectId

Let's put this into practice.

Say we want to create characters for our Street Fighter database.

In Mongoose, it's a normal practice to put each model in its own file. So we will create a Character.js file first. This Character.js file will be placed in the models folder.

project/ |- models/ |- Character.js

In Character.js, we create a characterSchema.

const mongoose = require('mongoose') const Schema = mongoose.Schema const characterSchema = new Schema({ // ... })

Let's say we want to save two things into the database:

Name of the character

Name of their ultimate move

Both can be represented with Strings.

const mongoose = require('mongoose') const Schema = mongoose.Schema const characterSchema = new Schema({ name: String, ultimate: String })

Once we've created characterSchema, we can use mongoose's model method to create the model.

module.exports = mongoose.model('Character', characterSchema)

Creating a document

Let's say you have a file called index.js. This is where we'll perform Mongoose operations for this tutorial.

project/ |- index.js |- models/ |- Character.js

First, you need to load the Character model. You can do this with require.

const Character = require('./models/Character')

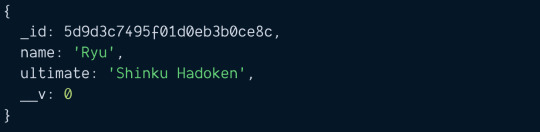

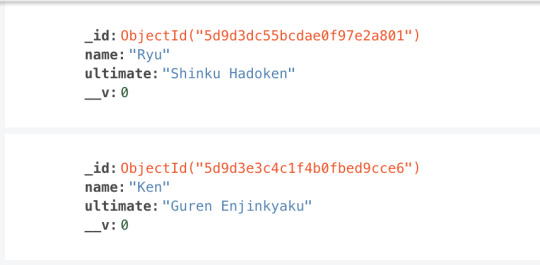

Let's say you want to create a character called Ryu. Ryu has an ultimate move called "Shinku Hadoken".

To create Ryu, you use the new, followed by your model. In this case, it's new Character.

const ryu = new Character ({ name: 'Ryu', ultimate: 'Shinku Hadoken' })

new Character creates the character in memory. It has not been saved to the database yet. To save to the database, you can run the save method.

ryu.save(function (error, document) { if (error) console.error(error) console.log(document) })

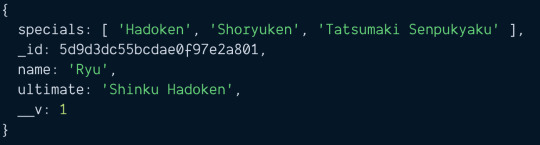

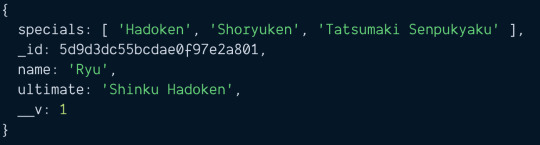

If you run the code above, you should see this in the console.

Promises and Async/await

Mongoose supports promises. It lets you write nicer code like this:

// This does the same thing as above function saveCharacter (character) { const c = new Character(character) return c.save() } saveCharacter({ name: 'Ryu', ultimate: 'Shinku Hadoken' }) .then(doc => { console.log(doc) }) .catch(error => { console.error(error) })

You can also use the await keyword if you have an asynchronous function.

If the Promise or Async/Await code looks foreign to you, I recommend reading "JavaScript async and await" before continuing with this tutorial.

async function runCode() { const ryu = new Character({ name: 'Ryu', ultimate: 'Shinku Hadoken' }) const doc = await ryu.save() console.log(doc) } runCode() .catch(error => { console.error(error) })

Note: I'll use the async/await format for the rest of the tutorial.

Uniqueness

Mongoose adds a new character to the database each you use new Character and save. If you run the code(s) above three times, you'd expect to see three Ryus in the database.

We don't want to have three Ryus in the database. We want to have ONE Ryu only. To do this, we can use the unique option.

const characterSchema = new Schema({ name: { type: String, unique: true }, ultimate: String })

The unique option creates a unique index. It ensures we cannot have two documents with the same value (for name in this case).

For unique to work properly, you need to clear the Characters collection. To clear the Characters collection, you can use this:

await Character.deleteMany({})

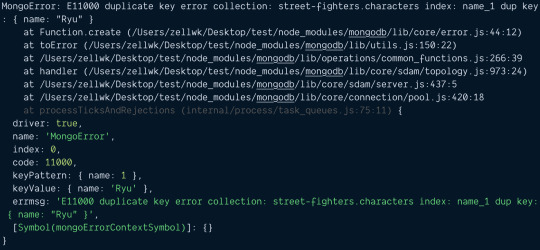

Try to add two Ryus into the database now. You'll get an E11000 duplicate key error. You won't be able to save the second Ryu.

Let's add another character into the database before we continue the rest of the tutorial.

const ken = new Character({ name: 'Ken', ultimate: 'Guren Enjinkyaku' }) await ken.save()

Finding a document

Mongoose gives you two methods to find stuff from MongoDB.

findOne: Gets one document.

find: Gets an array of documents

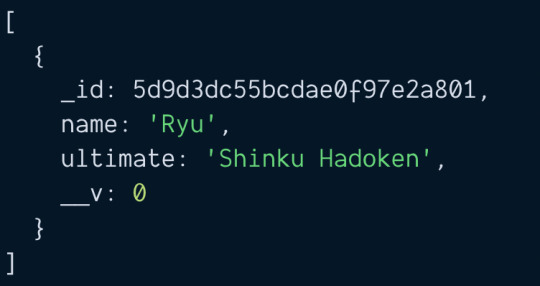

findOne

findOne returns the first document it finds. You can specify any property to search for. Let's search for Ryu:

const ryu = await Character.findOne({ name: 'Ryu' }) console.log(ryu)

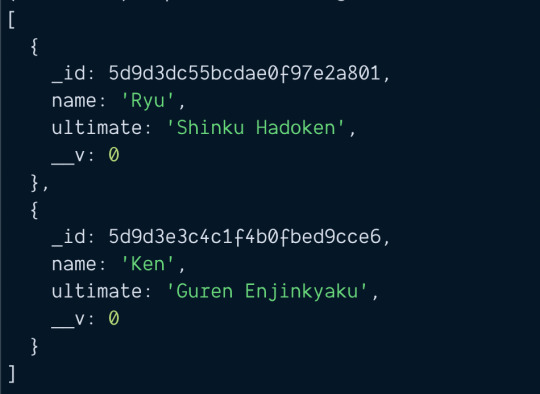

find

find returns an array of documents. If you specify a property to search for, it'll return documents that match your query.

const chars = await Character.find({ name: 'Ryu' }) console.log(chars)

If you did not specify any properties to search for, it'll return an array that contains all documents in the collection.

const chars = await Character.find() console.log(chars)

Updating a document

Let's say Ryu has three special moves:

Hadoken

Shoryuken

Tatsumaki Senpukyaku

We want to add these special moves into the database. First, we need to update our CharacterSchema.

const characterSchema = new Schema({ name: { type: String, unique: true }, specials: Array, ultimate: String })

Then, we use one of these two ways to update a character:

Use findOne, then use save

Use findOneAndUpdate

findOne and save

First, we use findOne to get Ryu.

const ryu = await Character.findOne({ name: 'Ryu' }) console.log(ryu)

Then, we update Ryu to include his special moves.

const ryu = await Character.findOne({ name: 'Ryu' }) ryu.specials = [ 'Hadoken', 'Shoryuken', 'Tatsumaki Senpukyaku' ]

After we modified ryu, we run save.

const ryu = await Character.findOne({ name: 'Ryu' }) ryu.specials = [ 'Hadoken', 'Shoryuken', 'Tatsumaki Senpukyaku' ] const doc = await ryu.save() console.log(doc)

findOneAndUpdate

findOneAndUpdate is the same as MongoDB's findOneAndModify method.

Here, you search for Ryu and pass the fields you want to update at the same time.

// Syntax await findOneAndUpdate(filter, update)

// Usage const doc = await Character.findOneAndUpdate( { name: 'Ryu' }, { specials: [ 'Hadoken', 'Shoryuken', 'Tatsumaki Senpukyaku' ] }) console.log(doc)

Difference between findOne + save vs findOneAndUpdate

Two major differences.

First, the syntax for findOne` + `save is easier to read than findOneAndUpdate.

Second, findOneAndUpdate does not trigger the save middleware.

I'll choose findOne + save over findOneAndUpdate anytime because of these two differences.

Deleting a document

There are two ways to delete a character:

findOne + remove

findOneAndDelete

Using findOne + remove

const ryu = await Character.findOne({ name: 'Ryu' }) const deleted = await ryu.remove()

Using findOneAndDelete

const deleted = await Character.findOneAndDelete({ name: 'Ken' })

Subdocuments

In Mongoose, subdocuments are documents that are nested in other documents. You can spot a subdocument when a schema is nested in another schema.

Note: MongoDB calls subdocuments embedded documents.

const childSchema = new Schema({ name: String }); const parentSchema = new Schema({ // Single subdocument child: childSchema, // Array of subdocuments children: [ childSchema ] });

In practice, you don't have to create a separate childSchema like the example above. Mongoose helps you create nested schemas when you nest an object in another object.

// This code is the same as above const parentSchema = new Schema({ // Single subdocument child: { name: String }, // Array of subdocuments children: [{name: String }] });

In this section, you will learn to:

Create a schema that includes a subdocument

Create a documents that contain subdocuments

Update subdocuments that are arrays

Update a single subdocument

Updating characterSchema

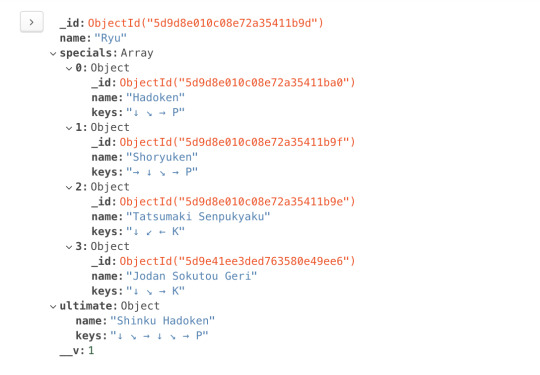

Let's say we want to create a character called Ryu. Ryu has three special moves.

Hadoken

Shinryuken

Tatsumaki Senpukyaku

Ryu also has one ultimate move called:

Shinku Hadoken

We want to save the names of each move. We also want to save the keys required to execute that move.

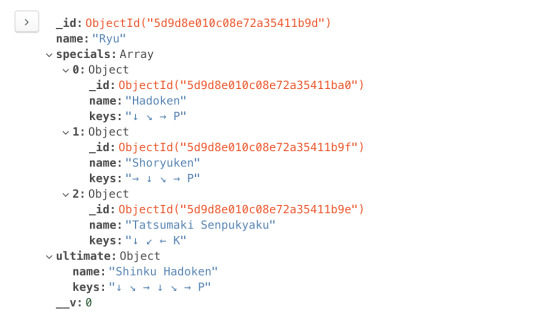

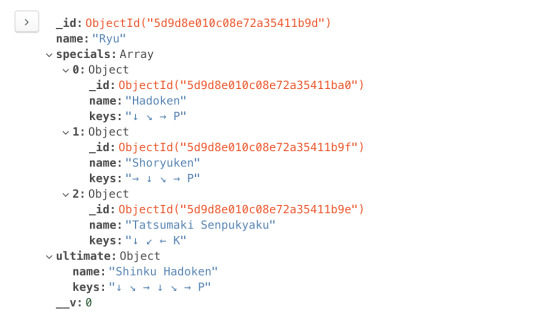

Here, each move is a subdocument.

const characterSchema = new Schema({ name: { type: String, unique: true }, // Array of subdocuments specials: [{ name: String, keys: String }] // Single subdocument ultimate: { name: String, keys: String } })

You can also use the childSchema syntax if you wish to. It makes the Character schema easier to understand.

const moveSchema = new Schema({ name: String, keys: String }) const characterSchema = new Schema({ name: { type: String, unique: true }, // Array of subdocuments specials: [moveSchema], // Single subdocument ultimate: moveSchema })

Creating documents that contain subdocuments

There are two ways to create documents that contain subdocuments:

Pass a nested object into new Model

Add properties into the created document.

Method 1: Passing the entire object

For this method, we construct a nested object that contains both Ryu's name and his moves.

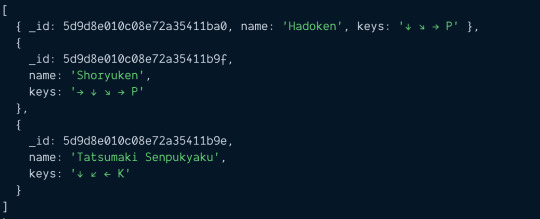

const ryu = { name: 'Ryu', specials: [{ name: 'Hadoken', keys: '↓ ↘ → P' }, { name: 'Shoryuken', keys: '→ ↓ ↘ → P' }, { name: 'Tatsumaki Senpukyaku', keys: '↓ ↙ ↠K' }], ultimate: { name: 'Shinku Hadoken', keys: '↓ ↘ → ↓ â

Then, we pass this object into new Character.

const char = new Character(ryu) const doc = await char.save() console.log(doc)

Method 2: Adding subdocuments later

For this method, we create a character with new Character first.

const ryu = new Character({ name: 'Ryu' })

Then, we edit the character to add special moves:

const ryu = new Character({ name: 'Ryu' }) const ryu.specials = [{ name: 'Hadoken', keys: '↓ ↘ → P' }, { name: 'Shoryuken', keys: '→ ↓ ↘ → P' }, { name: 'Tatsumaki Senpukyaku', keys: '↓ ↙ ↠K' }]

Then, we edit the character to add the ultimate move:

const ryu = new Character({ name: 'Ryu' }) // Adds specials const ryu.specials = [{ name: 'Hadoken', keys: '↓ ↘ → P' }, { name: 'Shoryuken', keys: '→ ↓ ↘ → P' }, { name: 'Tatsumaki Senpukyaku', keys: '↓ ↙ ↠K' }] // Adds ultimate ryu.ultimate = { name: 'Shinku Hadoken', keys: '↓ ↘ → ↓ ↘ → P' }

Once we're satisfied with ryu, we run save.

const ryu = new Character({ name: 'Ryu' }) // Adds specials const ryu.specials = [{ name: 'Hadoken', keys: '↓ ↘ → P' }, { name: 'Shoryuken', keys: '→ ↓ ↘ → P' }, { name: 'Tatsumaki Senpukyaku', keys: '↓ ↙ ↠K' }] // Adds ultimate ryu.ultimate = { name: 'Shinku Hadoken', keys: '↓ ↘ → ↓ ↘ → P' } const doc = await ryu.save() console.log(doc)

Updating array subdocuments

The easiest way to update subdocuments is:

Use findOne to find the document

Get the array

Change the array

Run save

For example, let's say we want to add Jodan Sokutou Geri to Ryu's special moves. The keys for Jodan Sokutou Geri are ↓ ↘ → K.

First, we find Ryu with findOne.

const ryu = await Characters.findOne({ name: 'Ryu' })

Mongoose documents behave like regular JavaScript objects. We can get the specials array by writing ryu.specials.

const ryu = await Characters.findOne({ name: 'Ryu' }) const specials = ryu.specials console.log(specials)

This specials array is a normal JavaScript array.

const ryu = await Characters.findOne({ name: 'Ryu' }) const specials = ryu.specials console.log(Array.isArray(specials)) // true

We can use the push method to add a new item into specials,

const ryu = await Characters.findOne({ name: 'Ryu' }) ryu.specials.push({ name: 'Jodan Sokutou Geri', keys: '↓ ↘ → K' })

After updating specials, we run save to save Ryu to the database.

const ryu = await Characters.findOne({ name: 'Ryu' }) ryu.specials.push({ name: 'Jodan Sokutou Geri', keys: '↓ ↘ → K' }) const updated = await ryu.save() console.log(updated)

Updating a single subdocument

It's even easier to update single subdocuments. You can edit the document directly like a normal object.

Let's say we want to change Ryu's ultimate name from Shinku Hadoken to Dejin Hadoken. What we do is:

Use findOne to get Ryu.

Change the name in ultimate

Run save

const ryu = await Characters.findOne({ name: 'Ryu' }) ryu.ultimate.name = 'Dejin Hadoken' const updated = await ryu.save() console.log(updated)

Population

MongoDB documents have a size limit of 16MB. This means you can use subdocuments (or embedded documents) if they are small in number.

For example, Street Fighter characters have a limited number of moves. Ryu only has 4 special moves. In this case, it's okay to use embed moves directly into Ryu's character document.

But if you have data that can contain an unlimited number of subdocuments, you need to design your database differently.

One way is to create two separate models and combine them with populate.

Creating the models

Let's say you want to create a blog. And you want to store the blog content with MongoDB. Each blog has a title, content, and comments.

Your first schema might look like this:

const blogPostSchema = new Schema({ title: String, content: String, comments: [{ comment: String }] }) module.exports = mongoose.model('BlogPost', blogPostSchema)

There's a problem with this schema.

A blog post can have an unlimited number of comments. If a blog post explodes in popularity and comments swell up, the document might exceed the 16MB limit imposed by MongoDB.

This means we should not embed comments in blog posts. We should create a separate collection for comments.

const comments = new Schema({ comment: String }) module.exports = mongoose.model('Comment', commentSchema)

In Mongoose, we can link up the two models with Population.

To use Population, we need to:

Set type of a property to Schema.Types.ObjectId

Set ref to the model we want to link too.

Here, we want comments in blogPostSchema to link to the Comment collection. This is the schema we'll use:

const blogPostSchema = new Schema({ title: String, content: String, comments: [{ type: Schema.Types.ObjectId, ref: 'Comment' }] }) module.exports = mongoose.model('BlogPost', blogPostSchema)

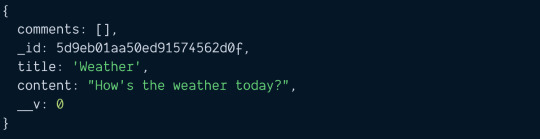

Creating a blog post

Let's say you want to create a blog post. To create the blog post, you use new BlogPost.

const blogPost = new BlogPost({ title: 'Weather', content: `How's the weather today?` })

A blog post can have zero comments. We can save this blog post with save.

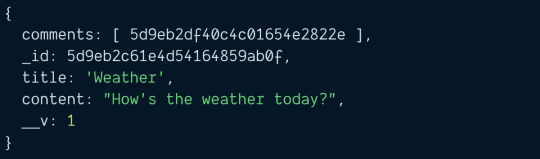

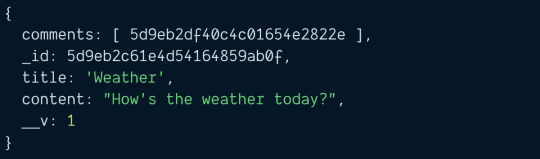

const doc = await blogPost.save() console.log(doc)

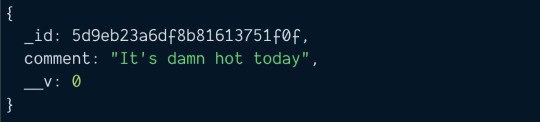

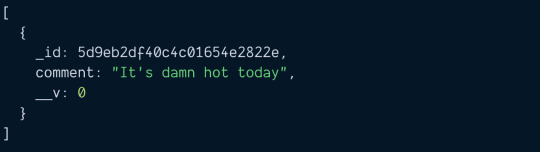

Now let's say we want to create a comment for the blog post. To do this, we create and save the comment.

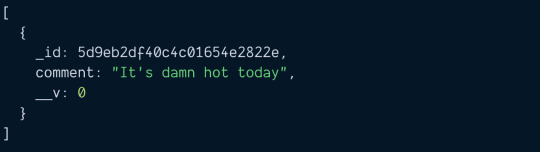

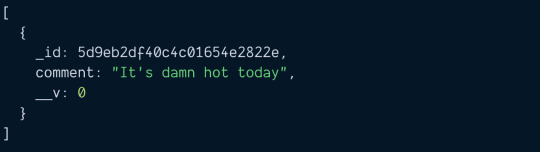

const comment = new Comment({ comment: `It's damn hot today` }) const savedComment = await comment.save() console.log(savedComment)

Notice the saved comment has an _id attribute. We need to add this _id attribute into the blog post's comments array. This creates the link.

// Saves comment to Database const savedComment = await comment.save() // Adds comment to blog post // Then saves blog post to database const blogPost = await BlogPost.findOne({ title: 'Weather' }) blogPost.comments.push(savedComment._id) const savedPost = await blogPost.save() console.log(savedPost)

Blog post with comments.

Searching blog posts and its comments

If you tried to search for the blog post, you'll see the blog post has an array of comment IDs.

const blogPost = await BlogPost.findOne({ title: 'Weather' }) console.log(blogPost)

There are four ways to get comments.

Mongoose population

Manual way #1

Manual way #2

Manual way #3

Mongoose Population

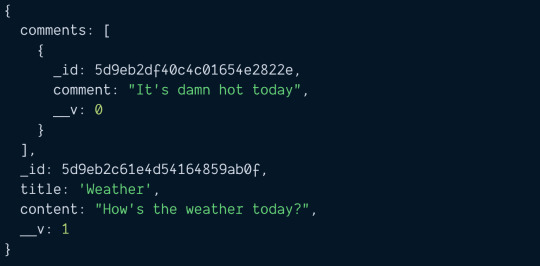

Mongoose allows you to fetch linked documents with the populate method. What you need to do is call .populate when you execute with findOne.

When you call populate, you need to pass in the key of the property you want to populate. In this case, the key is comments. (Note: Mongoose calls this the key a "path").

const blogPost = await BlogPost.findOne({ title: 'Weather' }) .populate('comments') console.log(blogPost)

Manual way (method 1)

Without Mongoose Populate, you need to find the comments manually. First, you need to get the array of comments.

const blogPost = await BlogPost.findOne({ title: 'Weather' }) .populate('comments') const commentIDs = blogPost.comments

Then, you loop through commentIDs to find each comment. If you go with this method, it's slightly faster to use Promise.all.

const commentPromises = commentIDs.map(_id => { return Comment.findOne({ _id }) }) const comments = await Promise.all(commentPromises) console.log(comments)

Manual way (method 2)

Mongoose gives you an $in operator. You can use this $in operator to find all comments within an array. This syntax takes effort to get used to.

If I had to do the manual way, I'd prefer Manual #1 over this.

const commentIDs = blogPost.comments const comments = await Comment.find({ '_id': { $in: commentIDs } }) console.log(comments)

Manual way (method 3)

For the third method, we need to change the schema. When we save a comment, we link the comment to the blog post.

// Linking comments to blog post const commentSchema = new Schema({ comment: String blogPost: [{ type: Schema.Types.ObjectId, ref: 'BlogPost' }] }) module.exports = mongoose.model('Comment', commentSchema)

You need to save the comment into the blog post, and the blog post id into the comment.

const blogPost = await BlogPost.findOne({ title: 'Weather' }) // Saves comment const comment = new Comment({ comment: `It's damn hot today`, blogPost: blogPost._id }) const savedComment = comment.save() // Links blog post to comment blogPost.comments.push(savedComment._id) await blogPost.save()

Once you do this, you can search the Comments collection for comments that match your blog post's id.

// Searches for comments const blogPost = await BlogPost.findOne({ title: 'Weather' }) const comments = await Comment.find({ _id: blogPost._id }) console.log(comments)

I'd prefer Manual #3 over Manual #1 and Manual #2.

And Population beats all three manual methods.

Quick Summary

You learned to use Mongoose on three different levels in this article:

Basic Mongoose

Mongoose subdocuments

Mongoose population

That's it!

Thanks for reading. This article was originally posted on my blog. Sign up for my newsletter if you want more articles to help you become a better frontend developer.

via freeCodeCamp.org https://ift.tt/2sSFtpK

0 notes

Text

300+ TOP PERL Interview Questions and Answers

Perl Interview Questions for freshers and experienced :-

1.How many type of variable in perl Perl has three built in variable types Scalar Array Hash 2.What is the different between array and hash in perl Array is an order list of values position by index. Hash is an unordered list of values position by keys. 3.What is the difference between a list and an array? A list is a fixed collection of scalars. An array is a variable that holds a variable collection of scalars. 4.what is the difference between use and require in perl Use : The method is used only for the modules(only to include .pm type file) The included objects are varified at the time of compilation. No Need to give file extension. Require: The method is used for both libraries and modules. The included objects are varified at the run time. Need to give file Extension. 5.How to Debug Perl Programs Start perl manually with the perl command and use the -d switch, followed by your script and any arguments you wish to pass to your script: "perl -d myscript.pl arg1 arg2" 6.What is a subroutine? A subroutine is like a function called upon to execute a task. subroutine is a reusable piece of code. 7.what does this mean '$^0'? tell briefly $^ - Holds the name of the default heading format for the default file handle. Normally, it is equal to the file handle's name with _TOP appended to it. 8.What is the difference between die and exit in perl? 1) die is used to throw an exception exit is used to exit the process. 2) die will set the error code based on $! or $? if the exception is uncaught. exit will set the error code based on its argument. 3) die outputs a message exit does not. 9.How to merge two array? @a=(1, 2, 3, 4); @b=(5, 6, 7, 8); @c=(@a, @b); print "@c"; 10.Adding and Removing Elements in Array Use the following functions to add/remove and elements: push(): adds an element to the end of an array. unshift(): adds an element to the beginning of an array. pop(): removes the last element of an array. shift() : removes the first element of an array.