#Scaling Azure AI Search


Performance Optimization in Azure AI Search - Ansi ByteCode LLP

Performance Optimization in Azure AI Search: enhance performance with advanced indexing, caching, and scaling. Read more: https://ansibytecode.com/performance-optimization-in-azure-ai-search/


The Future of Web Development: Trends, Techniques, and Tools

Web development is a dynamic field that is continually evolving to meet the demands of an increasingly digital world. With businesses relying more on online presence and user experience becoming a priority, web developers must stay abreast of the latest trends, technologies, and best practices. In this blog, we’ll delve into the current landscape of web development, explore emerging trends and tools, and discuss best practices to ensure successful web projects.

Understanding Web Development

Web development involves the creation and maintenance of websites and web applications. It encompasses a variety of tasks, including front-end development (what users see and interact with) and back-end development (the server-side that powers the application). A successful web project requires a blend of design, programming, and usability skills, with a focus on delivering a seamless user experience.

Key Trends in Web Development

Progressive Web Apps (PWAs): PWAs are web applications that provide a native app-like experience within the browser. They offer benefits like offline access, push notifications, and fast loading times. By leveraging modern web capabilities, PWAs enhance user engagement and can lead to higher conversion rates.

Single Page Applications (SPAs): SPAs load a single HTML page and dynamically update content as users interact with the app. This approach reduces page load times and provides a smoother experience. Frameworks like React, Angular, and Vue.js have made developing SPAs easier, allowing developers to create responsive and efficient applications.

Responsive Web Design: With the increasing use of mobile devices, responsive design has become essential. Websites must adapt to various screen sizes and orientations to ensure a consistent user experience. CSS frameworks like Bootstrap and Foundation help developers create fluid, responsive layouts quickly.

Voice Search Optimization: As voice-activated devices like Amazon Alexa and Google Home gain popularity, optimizing websites for voice search is crucial. This involves focusing on natural language processing and long-tail keywords, as users tend to speak in full sentences rather than typing short phrases.

Artificial Intelligence (AI) and Machine Learning: AI is transforming web development by enabling personalized user experiences and smarter applications. Chatbots, for instance, can provide instant customer support, while AI-driven analytics tools help developers understand user behavior and optimize websites accordingly.

Emerging Technologies in Web Development

JAMstack Architecture: JAMstack (JavaScript, APIs, Markup) is a modern web development architecture that decouples the front end from the back end. This approach enhances performance, security, and scalability by serving static content and fetching dynamic content through APIs.

WebAssembly (Wasm): WebAssembly allows developers to run high-performance code on the web. It opens the door for languages like C, C++, and Rust to be used for web applications, enabling complex computations and graphics rendering that were previously difficult to achieve in a browser.

Serverless Computing: Serverless architecture allows developers to build and run applications without managing server infrastructure. Platforms like AWS Lambda and Azure Functions enable developers to focus on writing code while the cloud provider handles scaling and maintenance, resulting in more efficient workflows.

Static Site Generators (SSGs): SSGs like Gatsby and Next.js allow developers to build fast and secure static websites. By pre-rendering pages at build time, SSGs improve performance and enhance SEO, making them ideal for blogs, portfolios, and documentation sites.

API-First Development: This approach prioritizes building APIs before developing the front end. API-first development ensures that various components of an application can communicate effectively and allows for easier integration with third-party services.

Best Practices for Successful Web Development

Focus on User Experience (UX): Prioritizing user experience is essential for any web project. Conduct user research to understand your audience's needs, create wireframes, and test prototypes to ensure your design is intuitive and engaging.

Emphasize Accessibility: Making your website accessible to all users, including those with disabilities, is a fundamental aspect of web development. Adhere to the Web Content Accessibility Guidelines (WCAG) by using semantic HTML, providing alt text for images, and ensuring keyboard navigation is possible.

Optimize Performance: Website performance significantly impacts user satisfaction and SEO. Optimize images, minify CSS and JavaScript, and leverage browser caching to ensure fast loading times. Tools like Google PageSpeed Insights can help identify areas for improvement.

Implement Security Best Practices: Security is paramount in web development. Use HTTPS to encrypt data, implement secure authentication methods, and validate user input to protect against vulnerabilities. Regularly update dependencies to guard against known exploits.

Stay Current with Technology: The web development landscape is constantly changing. Stay informed about the latest trends, tools, and technologies by participating in online courses, attending webinars, and engaging with the developer community. Continuous learning is crucial to maintaining relevance in this field.

Essential Tools for Web Development

Version Control Systems: Git is an essential tool for managing code changes and collaboration among developers. Platforms like GitHub and GitLab facilitate version control and provide features for issue tracking and code reviews.

Development Frameworks: Frameworks like React, Angular, and Vue.js streamline the development process by providing pre-built components and structures. For back-end development, frameworks like Express.js and Django can speed up the creation of server-side applications.

Content Management Systems (CMS): CMS platforms like WordPress, Joomla, and Drupal enable developers to create and manage websites easily. They offer flexibility and scalability, making it simple to update content without requiring extensive coding knowledge.

Design Tools: Tools like Figma, Sketch, and Adobe XD help designers create user interfaces and prototypes. These tools facilitate collaboration between designers and developers, ensuring that the final product aligns with the initial vision.

Analytics and Monitoring Tools: Google Analytics, Hotjar, and other analytics tools provide insights into user behavior, allowing developers to assess the effectiveness of their websites. Monitoring tools can alert developers to issues such as downtime or performance degradation.

Conclusion

Web development is a rapidly evolving field that requires a blend of creativity, technical skills, and a user-centric approach. By understanding the latest trends and technologies, adhering to best practices, and leveraging essential tools, developers can create engaging and effective web experiences. As we look to the future, those who embrace innovation and prioritize user experience will be best positioned for success in the competitive world of web development. Whether you are a seasoned developer or just starting, staying informed and adaptable is key to thriving in this dynamic landscape.

More details: https://fabvancesolutions.com/

#fabvancesolutions#digitalagency#digitalmarketingservices#graphic design#startup#ecommerce#branding#marketing#digitalstrategy#googleimagesmarketing


Data Zones Improve Enterprise Trust In Azure OpenAI Service

Data Zones strengthen enterprise trust in Azure OpenAI Service.

Data security and privacy are critical for businesses in today's quickly changing digital environment. As more businesses use AI to drive innovation, Microsoft Azure OpenAI Service provides strong enterprise controls that meet strict security and regulatory requirements. Because it is built on Azure, Azure OpenAI can be integrated with the technologies already in your company to help ensure you have the proper controls in place. For this reason, customers such as KPMG, Heineken, Unity, and PwC use Azure OpenAI for their generative AI applications.

With over 60,000 customers using Azure OpenAI to build and scale their businesses, Microsoft is adding features that further improve data privacy and security.

Introducing Azure Data Zones for OpenAI

From day one, Azure OpenAI has offered data residency, with control over data processing and storage across its current 28 regions. Azure OpenAI Data Zones have now been announced for the United States and the European Union. Historically, differences in model availability between regions forced customers to manage multiple resources and route traffic between them, which complicated management and slowed growth. Data Zones streamline the management of generative AI applications by offering the flexibility of processing across regions while keeping data within specific geographic boundaries, giving customers better access to models and higher throughput.

Businesses use Azure for data residency and privacy

The Data Zones capability adds to Azure OpenAI's already strong data processing and storage options. Customers can choose between Global, Data Zone, and Regional deployments, which lets them increase throughput, access more models, and streamline management. With every deployment option, data is stored at rest in the Azure region you selected for your resource.

Global deployments: Available in more than 25 regions, this option provides access to all new models (including the o1 series) at the lowest cost and highest throughput. Azure's global backbone ensures optimal response times, and data is stored at rest within the customer-selected Azure region.

Data Zones: For customers who need stronger data processing assurances while gaining access to the newest models, Data Zones offer cross-region load balancing within customer-selected geographic boundaries. The US Data Zone includes all Azure OpenAI regions in the United States. The European Union Data Zone includes all Azure OpenAI regions located in EU member states. The new Data Zones deployment type will become available in the coming month.

Regional deployments: These guarantee that processing and storage take place within the resource's geographic boundaries, providing the highest degree of data control. Compared with Global and Data Zone deployments, this option offers the least model availability.
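One way to read the three options above is as a trade-off between where data may be processed and how much model availability you get. The sketch below is only an illustration in Python: the `Deployment` enum, the `choose_deployment` function, and its two boolean parameters are hypothetical names, not part of any Azure SDK, and the rule of picking the least restrictive option that still meets the processing constraint is an assumption rather than Microsoft guidance.

```python
from enum import Enum


class Deployment(Enum):
    """The three deployment options named in the post."""
    GLOBAL = "global"        # widest model access, processing may happen anywhere
    DATA_ZONE = "data_zone"  # processing stays inside the US or EU zone
    REGIONAL = "regional"    # processing stays in the resource's own region


def choose_deployment(must_process_in_region: bool,
                      must_process_in_zone: bool) -> Deployment:
    """Pick the least restrictive option that satisfies the constraint.

    Hypothetical helper: the strictest requirement wins, so a regional
    requirement overrides a zone requirement.
    """
    if must_process_in_region:
        return Deployment.REGIONAL
    if must_process_in_zone:
        return Deployment.DATA_ZONE
    return Deployment.GLOBAL


if __name__ == "__main__":
    print(choose_deployment(must_process_in_region=False,
                            must_process_in_zone=True))
```

In every case the post states that data at rest stays in the region selected for the resource, so the choice here only concerns where processing may occur.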

Extending generative AI apps securely using your data

Azure OpenAI integrates with hundreds of Azure and Microsoft services, so you can extend your solution with your existing data storage and search capabilities. Azure AI Search and Microsoft Fabric are the two most popular extensions.

For both classic and generative AI applications, Azure AI Search offers secure information retrieval at scale across customer-owned content. It enables document search and data exploration whose query results can be fed into prompts, grounding generative AI applications in your data while retaining Azure's scale, security, and management.

Microsoft Fabric's unified data lake, OneLake, provides access to an organization's entire multi-cloud data estate in an easy-to-navigate structure. Consolidating all company data into a single data lake streamlines the integration of data to power your generative AI application while maintaining corporate data governance and compliance controls.
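The grounding pattern described here (search first, then feed the results into the prompt) can be sketched in a few lines. The example below is a minimal illustration that uses a toy in-memory keyword retriever in place of Azure AI Search; `Document`, `retrieve`, and `build_grounded_prompt` are hypothetical names, and no Azure API is called.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase, split on whitespace, and strip surrounding punctuation."""
    return {word.strip(".,;:!?()").lower() for word in text.split()} - {""}


def retrieve(query: str, corpus: list[Document], top_k: int = 2) -> list[Document]:
    """Toy retriever: rank documents by the number of shared query terms.

    A real application would call the search service at this point.
    """
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(doc.text)), doc) for doc in corpus]
    # Drop documents with no overlap, then sort best-first (ties keep corpus order).
    matches = sorted((pair for pair in scored if pair[0] > 0),
                     key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in matches[:top_k]]


def build_grounded_prompt(question: str, sources: list[Document]) -> str:
    """Place the retrieved passages ahead of the question so the model can cite them."""
    context = "\n".join(f"[{doc.doc_id}] {doc.text}" for doc in sources)
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\n"
        f"Question: {question}"
    )
```

The resulting string would then be sent to the model as the prompt; keeping retrieval and prompt assembly in separate functions makes it easy to swap the toy retriever for a real search call.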

Businesses use Azure to ensure compliance, safety, and security

Content Safety by Default

Azure OpenAI is integrated with Azure AI Content Safety by default at no extra cost: prompts and completions are screened by an ensemble of classification models that detect and block harmful content. Azure offers the widest selection of content safety tools, including the newer prompt shield and groundedness detection features. Customers with more specific requirements can adjust these settings, for example the harm severity threshold, or enable asynchronous modes to reduce latency.

Entra ID provides secure access using Managed Identity

Microsoft recommends protecting your Azure OpenAI resources with Microsoft Entra ID to enforce zero-trust access controls, prevent identity theft, and manage access to resources. By applying least-privilege principles, businesses can enforce strict security policies. Entra ID also removes the need for hard-coded credentials, which strengthens security across the system.

In addition, Managed Identity integrates with Azure role-based access control (RBAC) to control resource permissions precisely.

Customer-managed key encryption for improved data security

By default, the data that Azure OpenAI stores in your subscription is encrypted with a Microsoft-managed key. To further secure your application, Azure OpenAI lets customers use their own customer-managed keys to encrypt data stored on Microsoft-managed resources, such as Azure Cosmos DB, Azure AI Search, or your Azure Storage account.

Private networking offers more security

Use Azure virtual networks and Azure Private Link to secure your AI apps by isolating them from the public internet. This configuration keeps traffic between services inside Microsoft's backbone network while allowing secure connections to on-premises resources via ExpressRoute, VPN tunnels, and peered virtual networks.

Private networking support for AI Studio was also released last week, allowing users to use the Studio UI's "add your data" functionality without sending data over a public network.

Commitment to Compliance

Microsoft is committed to helping customers in regulated sectors such as government, finance, and healthcare meet their compliance requirements. Azure OpenAI meets numerous industry certifications and standards, including FedRAMP, SOC 2, and HIPAA, so that businesses across sectors can rely on their AI solutions to stay compliant and secure.

Businesses rely on Azure's production-grade reliability

Many of the world's largest generative AI applications, including GitHub Copilot, Microsoft 365 Copilot, and Microsoft Security Copilot, rely on Azure OpenAI Service. Customers and Microsoft's own product teams choose Azure OpenAI because it provides an industry-leading 99.9% reliability SLA on both Provisioned Managed and pay-as-you-go Standard deployments. Microsoft is improving on this by introducing a new latency SLA.

Announcing Latency SLAs for Provisioned-Managed

Maintaining the quality of the customer experience requires that customers can scale with their product growth without sacrificing latency. The Provisioned-Managed (PTUM) deployment option already offers the largest scale with the lowest latency. With PTUM, Microsoft is introducing explicit latency service level agreements (SLAs) that guarantee performance at scale. These SLAs take effect in the coming month. Bookmark this product newsfeed for future updates and improvements.

Read more on govindhtech.com

#DataZonesImprove#EnterpriseTrust#OpenAIService#Azure#DataZones#AzureOpenAIService#FedRAMP#Microsoft365Copilot#improveddatasecurity#data#ai#technology#technews#news#AzureOpenAI#AzureAIsearch#Microsoft#AzureCosmosDB#govindhtech


How can you optimize the performance of machine learning models in the cloud?

Optimizing machine learning models in the cloud involves several strategies to enhance performance and efficiency. Here’s a detailed approach:

Choose the Right Cloud Services:

Managed ML Services:

Use managed services like AWS SageMaker, Google AI Platform, or Azure Machine Learning, which offer built-in tools for training, tuning, and deploying models.

Auto-scaling:

Enable auto-scaling features to adjust resources based on demand, which helps manage costs and performance.
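Auto-scaling policies often reduce to a small calculation. As a rough sketch, the proportional rule below scales the instance count by the ratio of observed load to target load and clamps the result to a configured range. The function name, parameters, and default limits are assumptions for illustration; each cloud provider's auto-scaler has its own policies and settings.

```python
import math


def desired_instances(current: int, observed_load: float, target_load: float,
                      min_instances: int = 1, max_instances: int = 10) -> int:
    """Return how many instances keep per-instance load near the target.

    observed_load and target_load use the same unit, for example average
    CPU percent per instance or requests per second per instance.
    """
    if current < 1:
        raise ValueError("current must be at least 1")
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    # Scale proportionally, rounding up so capacity is never under-provisioned.
    raw = math.ceil(current * observed_load / target_load)
    # Clamp to the allowed range to bound both cost and minimum capacity.
    return max(min_instances, min(max_instances, raw))


if __name__ == "__main__":
    # Four instances at 90% CPU with a 60% target.
    print(desired_instances(4, observed_load=90.0, target_load=60.0))
```

The upper clamp is what ties this back to cost management: without it, a traffic spike could scale the deployment without limit.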

Optimize Data Handling:

Data Storage:

Use scalable cloud storage solutions like Amazon S3, Google Cloud Storage, or Azure Blob Storage for storing large datasets efficiently.

Data Pipeline:

Implement efficient data pipelines with tools like Apache Kafka or AWS Glue to manage and process large volumes of data.

Select Appropriate Computational Resources:

Instance Types:

Choose the right instance types based on your model’s requirements. For example, use GPU or TPU instances for deep learning tasks to accelerate training.

Spot Instances:

Utilize spot instances or preemptible VMs to reduce costs for non-time-sensitive tasks.

Optimize Model Training:

Hyperparameter Tuning:

Use cloud-based hyperparameter tuning services to automate the search for optimal model parameters. Services like Google Cloud AI Platform’s HyperTune or AWS SageMaker’s Automatic Model Tuning can help.

Distributed Training:

Distribute model training across multiple instances or nodes to speed up the process. Frameworks like TensorFlow and PyTorch support distributed training and can take advantage of cloud resources.
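To make the idea of automated hyperparameter search concrete, here is a minimal random-search sketch. It is not how any particular managed tuning service works (those may use more sophisticated strategies); `validation_loss` is a hypothetical stand-in for training a model and measuring its validation loss, and the search ranges are made up for illustration.

```python
from __future__ import annotations

import random


def validation_loss(learning_rate: float, batch_size: int) -> float:
    """Hypothetical objective; a real one would train and evaluate a model."""
    return (learning_rate - 0.01) ** 2 * 1e4 + abs(batch_size - 64) / 64


def random_search(trials: int, seed: int = 0) -> tuple[float, dict]:
    """Sample `trials` random configurations and return (best_loss, best_params)."""
    if trials < 1:
        raise ValueError("trials must be at least 1")
    rng = random.Random(seed)  # seeded so runs are reproducible
    best_loss, best_params = float("inf"), {}
    for _ in range(trials):
        params = {
            # Sample the learning rate log-uniformly between 1e-4 and 1e-1.
            "learning_rate": 10 ** rng.uniform(-4, -1),
            "batch_size": rng.choice([16, 32, 64, 128]),
        }
        loss = validation_loss(**params)
        if loss < best_loss:
            best_loss, best_params = loss, params
    return best_loss, best_params


if __name__ == "__main__":
    loss, params = random_search(trials=100)
    print(f"best loss {loss:.4f} with {params}")
```

Because each trial is independent of the others, the loop body is the part that could be farmed out to separate cloud instances and run in parallel.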

Monitoring and Logging:

Monitoring Tools:

Implement monitoring tools to track performance metrics and resource usage. AWS CloudWatch, Google Cloud Monitoring, and Azure Monitor offer real-time insights.

Logging:

Maintain detailed logs for debugging and performance analysis, using tools like Amazon CloudWatch Logs or Google Cloud Logging.

Model Deployment:

Serverless Deployment:

Use serverless options to simplify scaling and reduce infrastructure management. Services like AWS Lambda or Google Cloud Functions can handle inference tasks without managing servers.

Model Optimization:

Optimize models by compressing them or using model distillation techniques to reduce inference time and improve latency.
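As one concrete example of model compression, the sketch below applies symmetric 8-bit quantization to a list of weights. This is a specific technique chosen for illustration (the text above does not name one), written in plain Python rather than with a machine learning framework; the function names are hypothetical.

```python
from __future__ import annotations


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] plus one shared scale factor."""
    if not weights:
        raise ValueError("weights must not be empty")
    max_abs = max(abs(w) for w in weights)
    # The largest magnitude maps to 127; an all-zero tensor keeps scale 1.0.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    original = [0.5, -1.0, 0.25, 0.0]
    q, scale = quantize_int8(original)
    restored = dequantize(q, scale)
    worst = max(abs(a - b) for a, b in zip(original, restored))
    print(q, f"max error {worst:.5f}")
```

Each weight then needs one byte instead of four or eight, at the cost of a rounding error of at most half the scale factor per weight.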

Cost Management:

Cost Analysis:

Regularly analyze and optimize cloud costs to avoid overspending. Tools like AWS Cost Explorer, Google Cloud’s Cost Management, and Azure Cost Management can help monitor and manage expenses.

By carefully selecting cloud services, optimizing data handling and training processes, and monitoring performance, you can efficiently manage and improve machine learning models in the cloud.


Advanced Techniques in Full-Stack Development

This post covers more advanced techniques and concepts in full-stack development:

1. Server-Side Rendering (SSR) and Static Site Generation (SSG):

SSR: Rendering web pages on the server side to improve performance and SEO by delivering fully rendered pages to the client.

SSG: Generating static HTML files at build time, enhancing speed, and reducing the server load.

2. WebAssembly:

WebAssembly (Wasm): A binary instruction format for a stack-based virtual machine. It allows high-performance execution of code on web browsers, enabling languages like C, C++, and Rust to run in web applications.

3. Progressive Web Apps (PWAs) Enhancements:

Background Sync: Allowing PWAs to sync data in the background even when the app is closed.

Web Push Notifications: Implementing push notifications to engage users even when they are not actively using the application.

4. State Management:

Redux and MobX: Advanced state management libraries in React applications for managing complex application states efficiently.

Reactive Programming: Utilizing RxJS or other reactive programming libraries to handle asynchronous data streams and events in real-time applications.

5. WebSockets and WebRTC:

WebSockets: Enabling real-time, bidirectional communication between clients and servers for applications requiring constant data updates.

WebRTC: Facilitating real-time communication, such as video chat, directly between web browsers without the need for plugins or additional software.

6. Caching Strategies:

Content Delivery Networks (CDN): Leveraging CDNs to cache and distribute content globally, improving website loading speeds for users worldwide.

Service Workers: Using service workers to cache assets and data, providing offline access and improving performance for returning visitors.
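CDNs and service workers both rest on the same basic idea: serve a stored copy while it is still fresh, and go back to the origin only when it has expired. The sketch below shows that idea as a small time-to-live cache in Python. It is neither a CDN nor a service worker, and `TTLCache` and `get_or_load` are hypothetical names chosen for this example.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Cache values for a fixed number of seconds, then reload them."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so tests can control time
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}

    def get_or_load(self, key: Hashable, loader: Callable[[], Any]) -> Any:
        """Return the cached value for key, calling loader() on a miss or expiry."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # still fresh: no trip to the origin
        value = loader()
        self._entries[key] = (now + self._ttl, value)
        return value


if __name__ == "__main__":
    cache = TTLCache(ttl_seconds=60)
    page = cache.get_or_load("/home", lambda: "<html>home</html>")
    print(page)
```

Choosing the time-to-live is the main design decision: a longer value reduces origin load but means users may see stale content for longer.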

7. GraphQL Subscriptions:

GraphQL Subscriptions: Enabling real-time updates in GraphQL APIs by allowing clients to subscribe to specific events and receive pushed updates from the server when data changes.

8. Authentication and Authorization:

OAuth 2.0 and OpenID Connect: Implementing secure authentication and authorization protocols for user login and access control.

JSON Web Tokens (JWT): Utilizing JWTs to securely transmit information between parties, ensuring data integrity and authenticity.

9. Content Management Systems (CMS) Integration:

Headless CMS: Integrating headless CMS like Contentful or Strapi, allowing content creators to manage content independently from the application's front end.

10. Automated Performance Optimization:

Lighthouse and Web Vitals: Utilizing tools like Lighthouse and Google's Web Vitals to measure and optimize web performance, focusing on key user-centric metrics like loading speed and interactivity.

11. Machine Learning and AI Integration:

TensorFlow.js and ONNX.js: Integrating machine learning models directly into web applications for tasks like image recognition, language processing, and recommendation systems.

12. Cross-Platform Development with Electron:

Electron: Building cross-platform desktop applications using web technologies (HTML, CSS, JavaScript), allowing developers to create desktop apps for Windows, macOS, and Linux.

13. Advanced Database Techniques:

Database Sharding: Implementing database sharding techniques to distribute large databases across multiple servers, improving scalability and performance.

Full-Text Search and Indexing: Implementing full-text search capabilities and optimized indexing for efficient searching and data retrieval.
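Full-text search engines are typically built around an inverted index, which maps each term to the documents that contain it. The sketch below is a minimal in-memory version under simple assumptions (lowercased alphanumeric terms, AND semantics across query terms, no ranking); `InvertedIndex` is a hypothetical class for illustration and does not reflect any specific database's implementation.

```python
import re
from collections import defaultdict
from typing import List


class InvertedIndex:
    """Map each term to the set of document ids that contain it."""

    def __init__(self) -> None:
        self._postings = defaultdict(set)

    @staticmethod
    def _terms(text: str) -> List[str]:
        # Lowercase and keep runs of letters and digits as terms.
        return re.findall(r"[a-z0-9]+", text.lower())

    def add(self, doc_id: int, text: str) -> None:
        """Index a document under every term it contains."""
        for term in self._terms(text):
            self._postings[term].add(doc_id)

    def search(self, query: str) -> List[int]:
        """Return sorted ids of documents containing every query term."""
        terms = self._terms(query)
        if not terms:
            return []
        # .get avoids creating empty postings for unknown terms.
        matches = set.intersection(
            *(self._postings.get(term, set()) for term in terms)
        )
        return sorted(matches)


if __name__ == "__main__":
    index = InvertedIndex()
    index.add(1, "Scaling Azure AI Search with replicas")
    index.add(2, "Azure Functions and serverless scaling")
    print(index.search("azure scaling"))
```

Lookups touch only the postings for the query terms rather than scanning every document, which is why indexing pays off as the collection grows. Splitting the postings across several servers is where this technique meets the sharding approach mentioned in the same section.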

14. Chaos Engineering:

Chaos Engineering: Introducing controlled experiments to identify weaknesses and potential failures in the system, ensuring the application's resilience and reliability.

15. Serverless Architectures with AWS Lambda or Azure Functions:

Serverless Architectures: Building applications as a collection of small, single-purpose functions that run in a serverless environment, providing automatic scaling and cost efficiency.

16. Data Pipelines and ETL (Extract, Transform, Load) Processes:

Data Pipelines: Creating automated data pipelines for processing and transforming large volumes of data, integrating various data sources and ensuring data consistency.

17. Responsive Design and Accessibility:

Responsive Design: Implementing advanced responsive design techniques for seamless user experiences across a variety of devices and screen sizes.

Accessibility: Ensuring web applications are accessible to all users, including those with disabilities, by following WCAG guidelines and ARIA practices.

full stack development training in Pune


Tableau To Power BI Migration & Benefits Of Data Analytics

As business intelligence tools become more sophisticated, organizations are looking for solutions that cost less, scale better, and integrate more smoothly. For this reason, many companies are pursuing Tableau to Power BI migration as a way to keep their analytics current with business changes. This post looks at why companies switch from Tableau to Power BI and the important ways data analytics can change their operations during and after the move.

Why Tableau to Power BI Migration is Gaining Momentum

Tableau is well known for its strong data visualization capabilities. Over time, as Microsoft's portfolio expanded, Power BI became a leader in the business intelligence field. Four main reasons lead organizations to choose Power BI over Tableau:

Tighter integration with Microsoft tools: Many organizations already using Office 365, Azure, and Teams value Power BI as a smooth way to share and handle data.

Lower total cost of ownership: Power BI tends to be cheaper to own when it is used by larger organizations or whole enterprises.

Advanced AI capabilities: Extra value comes from Power BI's AI features for data modeling, detecting irregularities, and making predictions.

Unified analytics platform: All types of analytics can be found within Power BI. It combines basic dashboards with complex analytical features.

Firms that see the benefits are going with a seamless Tableau to Power BI migration, and Pulse Convert is leading the charge.

How Pulse Convert Accelerates Tableau to Power BI Migration

Changing from Tableau to Power BI requires rethinking how your data is organized, how you get to it, and how you show it. Pulse Convert was specifically made to help automate and make easy the process of moving from Tableau to Power BI.

This is how Pulse Convert improves your user experience:

Automated object translation: Pulse Convert analyzes Tableau dashboards and automatically converts their visuals into the Power BI equivalents.

Script and calculation transformation: Tableau's calculated fields and data rules are rewritten as equivalent DAX measures in Power BI.

Minimal downtime: Automating these tasks allows Pulse Convert to help companies complete migrations with minimal disruption to their business.

Version control and audit readiness: Tracking and versioning of migration steps makes compliance and audits much easier for regulated businesses.

Thanks to Pulse Convert, migration from Tableau to Power BI happens with fewer issues, protecting both the data and functions of organizations.

Strategic Benefits of Data Analytics Post-Migration

After moving to Power BI, companies have the opportunity to grow their analytics abilities even further. Here are some main benefits data analytics gives after a migration is done.

1. Real-Time Decision-Making

Because Power BI is built to work with Azure Synapse, SQL Server, and streaming datasets, organizations can use data and act on insights as they arrive. Decision-makers can inspect real-time sales figures and key performance indicators whenever needed.

Moving from static ways of reporting to dynamic analytics is a major reason why people are switching from Tableau to Power BI.

2. Cost Optimization Through Insights

After a migration, analyses may uncover challenges in how the organization performs its operations, supply chain, and marketing. The advanced reporting features in Power BI help analysts examine costs, locate problems, and create ways to save money using real data.

This kind of granular understanding was limited before the move, which helps explain why the decision to move to Power BI is valuable.

3. Data-Driven Culture and Self-Service BI

Its friendly user interface and connection with Excel mean that Power BI is easy for anyone to use. Once the team has migrated, each person can build personal dashboards, examine departmental KPIs, and share outcomes with others using only a few steps.

Making data accessible to everyone encourages business decisions based on data rather than guesswork. Self-service BI is a big reason companies choose Power BI for their BI migration.

4. Predictive and Prescriptive Analytics

Modern companies want to know what lies ahead and how to respond. Because Power BI includes AI features and connects to Azure Machine Learning, statistical predictions are easier to bring within reach.

When you use Power BI instead of Tableau, you can explore recommended actions, set optimal pricing, and forecast future sales. These capabilities make Power BI an even stronger choice for a BI migration.

5. Security and Governance at Scale

Row-level security, user access controls, and Microsoft Information Protection integration ensure that Power BI gives excellent enterprise security. Because of these features, organizations in financial, healthcare, or legal fields choose Power BI often.

After completing their Tableau to Power BI migration, some organizations discover they can now govern their data better and manage it centrally without losing ease of use.

Key Considerations Before You Migrate

Although the benefits of migrating from Tableau are clear, you need a plan to avoid problems. These tips can help:

Audit your existing Tableau assets: Examine your existing Tableau work: it's possible that some assets won't need to migrate. Begin with reports that are used most and make a major difference for the company.

Engage stakeholders: Have IT and business teams collaborate so that the migrated reports deliver what was expected.

Pilot migration with Pulse Convert: Try Pulse Convert on just a few dashboards and monitor how it works before it's used across the organization.

Train your teams: Make sure your teams understand the changes. After-migration outcomes rely largely on user adoption. Make sure employees learn all the important features of Power BI.

With the right preparation and the support of Pulse Convert, organizations can make the most of their move from Tableau to Power BI.

Real-World Impact: From Insights to Outcomes

An example makes this concrete. One organization's client-management work involved running dozens of Tableau dashboards across several teams, but slow reports and rising licensing fees were holding back performance. Once they understood the value, they used Pulse Convert to migrate their BI content to Power BI.

Within 90 days, more than 80% of the reports had been rebuilt in Power BI. Automated DAX conversion saved hundreds of hours of dashboard alignment work. After the migration, they cut their reporting costs by 40% and made decisions 25% faster. In addition, anyone can now build reports quickly on their own, taking decision-making to a new level in the company.

Such experiences happen often. Proper preparation allows Tableau to Power BI migration to increase business results.

Final Thoughts

Moving fast, being adaptable, and having insight are now main features of how businesses act. No longer can organizations tolerate analytics software that puts a brake on development, drives up expenses, or prevents access to data. Migrating to Power BI from Tableau means making a business move that supports smarter and quicker business practices.

Pulse Convert allows organizations to make this migration simple and guarantees that every data visualization and log setup transfers successfully. As a result, they make their analytics platform more modern and ensure they can gain more insight and stay ahead of competitors.

If your company is ready for the next level in analytics, Office Solution will provide top support and the highest quality migration software. The path from Tableau to Power BI is clear, and analytics will only improve going forward.


Run Your Business Smarter with SAP Business One on Cloud!

At Maivin, we bring you SAP Business One on Cloud – the smart, scalable ERP solution that helps you manage every function of your business in real time, without the need for complex on-premise infrastructure.

Why Choose SAP Business One on Cloud?

SAP Business One Cloud is a modern and flexible ERP platform that delivers everything you need to run your business — from accounting and inventory to sales and customer service — all in one place. It is hosted on a secure cloud platform and offers the Pay-as-You-Go model, reducing upfront costs and allowing you to scale on demand.

Key Benefits of SAP Business One Cloud

1. Cost Savings with Pay-as-You-Go Model

Say goodbye to high upfront infrastructure and maintenance costs. With the subscription-based model, you only pay for what you use.

2. Anywhere, Anytime Access

Access your business data and applications from any device, anywhere in the world — ideal for remote work and on-the-go decisions.

3. Quick and Informed Decisions

Thanks to real-time data integration and SAP HANA’s in-memory computing, you get instant visibility across your business functions.

4. Scalable as You Grow

SAP Business One on Cloud is built to grow with your business, so you don’t have to worry about switching systems as your company expands.

5. No IT Headaches

Leave the server maintenance, backups, and security updates to us. Our SAP-certified cloud hosting ensures 99.9% uptime and enterprise-grade security.

Migrating from On-Premise to Cloud – It's Easier Than You Think

With SAP Business One, you can choose between on-premise or cloud deployment. For cloud, you can opt for:

Public Cloud (cost-effective & multi-tenant)

Private Cloud (hosted on AWS, Azure, or local data centers for added control)

Our experts at Maivin, your trusted SAP Cloud provider in Noida, ensure a smooth migration from on-premise systems to the cloud with minimal disruption.

Powerful Features to Supercharge Your Business

Here’s what makes SAP Business One Cloud truly game-changing:

Customer 360°

Complete view of customer history, orders, and preferences for smarter sales and service decisions.

Advanced Delivery Scheduling

Drag-and-drop interface to manage and adjust sales orders and deliveries dynamically.

Informed Decision-Making

Get consolidated data across departments for strategic planning and quick decisions.

Real-Time Inventory (ATP)

Stay updated with live inventory details — on-demand, with no pre-calculation needed.

Interactive Analysis with Excel Integration

Use pivot tables and data cubes for self-service business analytics.

Sales Recommendations

Get AI-driven product suggestions based on customer purchase history.

Pervasive Analytics & Dashboards

Build your own dashboards using SAP HANA analytics — empowering data-driven actions.

Semantic Layers

Simplify business data interpretation and organization with user-friendly data views.

Enterprise Search

Find any piece of data quickly using dynamic filters, with robust security protocols in place.

Service Layer APIs

Tap into the next-gen API framework to build extensions and integrate other tools.

Cash Flow Forecasting

Get real-time, visual forecasts of your financials to manage cash like a pro.

Mobile Order Management

Access data and process orders on-the-go with full mobile compatibility.

Why Businesses in Noida Trust SAP Business One Cloud by Maivin

Noida is a hub for manufacturing, IT, and growing SMEs. Maivin’s local presence ensures businesses get personalized guidance, dedicated support, and localized solutions — all while leveraging the global power of SAP Business Cloud.

Let’s Build Your Competitive Edge with SAP Business Cloud

Whether you're a startup, growing SME, or managing a large enterprise subsidiary, SAP Business One Cloud helps you:

Streamline processes

Improve operational visibility

Reduce costs

Scale flexibly

At Maivin, our cloud consultants are here to help you every step of the way — from consultation to deployment and beyond.

Ready to Take the Leap?

Don’t let legacy systems slow you down. Empower your business with SAP Cloud solutions in Noida and experience a new level of operational efficiency.

Talk to Our Experts at Maivin Today! Let’s help you run your business smarter, faster, and with total control.

#SAP Cloud#SAP Business Cloud#SAP Business cloud on Noida#SAP Business One#Maivin#Noida#Jaipur#Chandigarh


How ASP.NET Developers Can Power Personalization and Automation with AI

In 2025, users expect more than just fast websites—they want smart, personalized digital experiences. At the same time, businesses are under pressure to streamline operations and do more with less. This is where artificial intelligence steps in. Today, developers using ASP.NET can easily integrate AI to craft tailored user journeys and automate repetitive workflows. With modern tools and services at their fingertips, ASP.NET developers are building applications that are not just functional—but intelligent.

Why AI is Changing the Game in ASP.NET Development

AI is no longer experimental—it’s a practical tool helping businesses solve real challenges. In ASP.NET development, it can enhance how applications analyze data, make decisions, and interact with users. Whether you’re building a product portal, enterprise dashboard, or customer support platform, AI helps your application adapt in real time.

ASP.NET’s rich ecosystem, combined with Microsoft’s support for AI integration through ML.NET and Azure, makes it easier than ever to build smart features directly into your applications—no external systems required.

Personalization in ASP.NET: Making User Experiences Smarter

What Is AI-Powered Personalization?

At its core, personalization means presenting content, features, or offers based on what a user actually wants—without them having to ask. Using behavioral patterns, search history, or user profiles, AI can tailor a unique experience for every visitor. For ASP.NET developers, this is now achievable using tools built into the .NET stack.

How Developers Add Personalization in ASP.NET Projects

With Microsoft’s ML.NET and Azure Cognitive Services, developers can implement features like:

Smart Recommendations: Suggest products, content, or actions based on browsing behavior.

Dynamic Layouts: Modify page content or call-to-action buttons based on past interactions.

Engagement Prediction: Use behavioral trends to identify when users might churn or be ready to upgrade.

These enhancements can be woven directly into ASP.NET MVC or Razor Pages, giving developers full control over how users experience a site.
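The Smart Recommendations idea above can be illustrated with a minimal sketch. It is written in plain Python for brevity and does not use ML.NET or Azure Cognitive Services; the function name `recommend`, the scoring rule (counting shared items), and the sample browsing data are all assumptions made for illustration, not a description of how those services work.

```python
from collections import Counter


def recommend(target_history, other_histories, top_n=3):
    """Suggest items the target user has not seen, ranked by co-occurrence.

    Each history is a list of item names a visitor browsed. An unseen item
    earns points equal to the number of items its visitor shares with the
    target user, so visitors with more similar behavior count for more.
    """
    seen = set(target_history)
    scores = Counter()
    for history in other_histories:
        items = set(history)
        overlap = len(seen & items)
        if overlap == 0:
            continue  # this visitor shares nothing with the target user
        for item in items - seen:
            scores[item] += overlap
    # Highest score first; ties broken alphabetically for stable output.
    ranked = sorted(scores.items(), key=lambda pair: (-pair[1], pair[0]))
    return [item for item, _ in ranked[:top_n]]


# Hypothetical browsing data.
suggestions = recommend(
    ["laptop", "mouse"],
    [["laptop", "mouse", "keyboard"], ["laptop", "monitor"], ["phone", "case"]],
)
print(suggestions)
```

A production system would likely replace the overlap count with a trained model, but the shape of the problem (score unseen items from the behavior of similar users) stays the same.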

Automating Workflows in ASP.NET Using AI

Beyond the user interface, AI can take over manual tasks behind the scenes. Automation in ASP.NET applications can dramatically reduce processing time, cut down on errors, and free up team resources for higher-value work.

Where AI-Powered Automation Makes an Impact

Chatbots for Support: With Azure Bot Services, developers can deploy 24/7 chat assistants that answer FAQs, process requests, or route tickets.

Auto-Validation in Forms: AI can flag unusual or incorrect inputs and even predict missing information based on trends.

Predictive Alerts: For industries like logistics or manufacturing, AI can forecast equipment failure or service outages before they happen.

Smart Data Handling: Automatically sort, filter, or summarize large datasets within your ASP.NET backend—no spreadsheets required.

These features aren't just about speed—they increase reliability, accuracy, and user satisfaction.
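As a rough illustration of the auto-validation idea, a form value can be compared against previously accepted values and flagged when it sits far outside the usual range. The sketch below uses a simple z-score test in plain Python; the function name `flag_unusual`, the threshold of 3 standard deviations, and the sample numbers are assumptions, and a real system might well use a trained model instead.

```python
from statistics import mean, stdev


def flag_unusual(value, history, threshold=3.0):
    """Return True when `value` is far from previously accepted values.

    `history` holds earlier numeric inputs for the same form field. The
    value is flagged when it lies more than `threshold` sample standard
    deviations from the historical mean.
    """
    if len(history) < 2:
        return False  # not enough data to judge
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return value != mu  # every earlier value was identical
    return abs(value - mu) / sigma > threshold


# Hypothetical order quantities entered in the past.
past_quantities = [100, 102, 98, 101, 99]
print(flag_unusual(250, past_quantities))  # far outside the usual range
print(flag_unusual(101, past_quantities))  # typical value
```

Flagged values would normally be shown to the user for confirmation instead of being rejected outright, since an unusual input is not necessarily a wrong one.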

Tools to Build AI Features in ASP.NET

Here’s a breakdown of what ASP.NET developers are using today to bring AI capabilities into their apps:

ML.NET: Build custom machine learning models in C#, ideal for recommendation engines, sentiment analysis, or fraud detection—without switching to Python.

Azure Cognitive Services: Access powerful APIs for text analysis, language understanding, vision recognition, and more, all plug-and-play.

Azure Machine Learning: Train, deploy, and monitor models at scale. It’s designed for enterprise use and integrates smoothly with the .NET ecosystem.

These tools offer flexibility whether you're coding a lightweight app or managing a full-scale enterprise solution.

Final Thoughts: Smarter ASP.NET Apps Begin with AI

AI isn't just a trend—it’s now a vital part of delivering better web experiences. For developers using ASP.NET, there’s a clear path to build apps that are faster, more helpful, and easier to manage. By combining personalization features with smart automation, your application becomes more than just functional—it becomes intuitive.

If you're ready to level up your ASP.NET applications, now is the time to explore how AI can fit into your workflow.

Let’s Build Smarter Together

At iNestweb, we help businesses bring intelligence to their web platforms with customized, AI-ready ASP.NET development. Whether you need tailored user experiences or automated systems that save time, we’re here to build it with you.


Understanding Azure Migration Services: What You Need to Know

In today's fast-moving digital age, companies are constantly searching for ways to increase their efficiency, security, and flexibility. One of the largest shifts many organizations are undertaking is migrating their operations to the cloud. Of the numerous cloud platforms available, Microsoft Azure stands out as a powerful and versatile option. But what does it mean to move your business to Azure, and how do you go about it successfully? That's where Azure migration services step in.

Migrating an organization’s IT infrastructure can be overwhelming if you are just getting started with cloud computing or Azure. Fear not! With the proper guidance and expertise, it can be a painless and rewarding experience. This blog post aims to break down what you need to know about Azure migration services into plain, easy-to-understand language so that you can make sense of the jargon and understand why these services are essential to your business's future.

What Exactly is Cloud Migration?

Before we dive into Azure details, let's define what "cloud migration" actually is. Imagine this: your company today stores all of its critical files, executes its applications, and handles customer information on physical servers and computers within your office or a conventional data center. This configuration is sometimes called "on-premises" infrastructure.

Cloud migration is just the process of moving these digital assets—your applications, data, websites, and IT processes—from those physical locations to a "cloud" environment. The cloud is not a physical place you can put your finger on; it's a network of remote servers and computers hosted on the internet, managed by a cloud provider such as Microsoft Azure. When you leap into the cloud, you're merely leasing computing resources (such as processing, storage, and networking) from this provider instead of paying to own and support them yourself.

Why Do Businesses Move to the Cloud?

So why are so many businesses, from small startups to large enterprises, making this move? The advantages of Azure, in the context of cloud computing, are numerous and significant.

Cost Reduction: The upkeep of an on-site infrastructure is expensive. You must purchase servers, power them with electricity, cool them, and employ people to manage them. With Azure, you only pay for what you consume, frequently based on a subscription model. This saves you from making big initial payments and keeps your ongoing operational expenses low.

Scalability: Picture your website suddenly flooded with traffic, or your business needing more processing power at times of peak demand. On-premises infrastructure cannot adapt quickly to that. Azure, however, can scale your resources up or down on demand. This gives you the flexibility to avoid paying for more than you require while always having capacity when you need it most.

Higher Security: Microsoft spends billions on protecting its Azure data centers, with the latest physical and virtual security technologies. You remain responsible for securing your own data in Azure, but the underlying infrastructure security exceeds what most individual companies could provide on their own.

Reliability and Disaster Recovery: Azure's worldwide network of data centers supports high availability. If one server or even an entire data center has a problem, your applications can automatically fail over to another to avoid downtime. This also greatly simplifies disaster recovery and makes it more robust than conventional techniques.

State-of-the-Art Technology and Modernization: Azure is more than computing; it is a suite of services including AI, machine learning, data analytics, and IoT. By using the full suite of Azure technology, you gain access to the newest tools, which helps you stay ahead of your competitors and innovate.

Global Reach: With data centers, infrastructure, and resources worldwide, you can host your applications as near to your users as possible, minimizing latency and improving performance. This is helpful if you are an international company or operate from locations in several countries.
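The cost and scalability points above come down to simple arithmetic: fixed infrastructure must be sized for the busiest hour and paid for around the clock, while a pay-as-you-go model charges only for what each hour actually uses. The sketch below works through that comparison. The demand figures and the unit price are made up for illustration and are not Azure pricing; in practice the per-unit price of cloud and on-premises capacity will differ.

```python
def fixed_capacity_cost(hourly_demand, unit_cost_per_hour):
    """Cost when capacity is sized for the peak hour and runs all the time."""
    peak = max(hourly_demand)
    return peak * unit_cost_per_hour * len(hourly_demand)


def pay_as_you_go_cost(hourly_demand, unit_cost_per_hour):
    """Cost when each hour is billed only for the units actually used."""
    return sum(hourly_demand) * unit_cost_per_hour


# Hypothetical demand in server units over six hours, with one traffic spike.
demand = [2, 2, 2, 10, 2, 2]
price = 0.5  # hypothetical price per unit per hour

print(fixed_capacity_cost(demand, price))  # 10 units * 6 hours * 0.5
print(pay_as_you_go_cost(demand, price))   # 20 unit-hours * 0.5
```

With these illustrative numbers the always-on setup costs 30.0 and the usage-based one costs 10.0. The gap narrows as demand becomes steadier, which is why the spikier the workload, the stronger the case for elastic scaling.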

The Role of Azure Migration Services

Though the advantages are obvious, the process of transferring your entire IT infrastructure to Azure is not as simple as it sounds. This is not an issue of just “copy-pasting” your information. Different applications, databases, and systems have unique requirements, and even one mistake can cause operational delays, data corruption, or vulnerabilities. It is exactly for this reason that Azure migration services are so critical.

Think of it as moving house. You could attempt to move everything yourself, but it would be stressful and time-consuming, and you'd probably damage a few things along the way. Instead, you pay professionals who have experience, the correct equipment, and an orderly method to ensure everything arrives safely and in good time at your new address.

Azure migration services providers serve as such professional movers for your digital assets. They are dedicated companies or units with extensive experience in Microsoft Azure and cloud migration tactics. They guide you through every single step of the migration to guarantee a secure and effective relocation that will not disrupt your business activities.

What Does Migration with Azure Typically Involve?

In a more comprehensive engagement with an Azure migration services provider, the following key steps are usually included:

1. Assessment And Planning

Here is where it all begins. A consulting firm providing Azure migration services starts with a system-wide audit of your existing IT infrastructure, which contains several steps, including:

Understanding Your Motives: What is driving you to consider migrating to Azure? (Cost implications, performance increase, enhanced security, global reach, etc.)

Asset Inventory: List all applications, servers, databases, data storage, as well as network topology that belong to you.

Dependency Mapping: Understanding the relationships between different applications and systems and how they depend on each other. This helps avoid breaking any workflows during the migration.

Readiness Assessment: Determining which applications are “cloud ready” (able to be migrated as is) and which ones need changes (modifications, refactoring) to work optimally in Azure.

Cost Assessment: Providing the expected cloud costs in Azure as compared to the existing costs, to help determine scope for savings.

Migration Strategy Formulation: With all the assessments done, the consulting team will propose a tailored migration approach, which may combine several strategies for different components of your IT landscape.
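The dependency mapping step above lends itself to a small worked example. Once each asset's dependencies are known, a topological sort can group assets into "waves" so that nothing is moved before the systems it relies on. The sketch below uses Python's standard `graphlib` module (Python 3.9+); the asset names and the choice to migrate dependencies first are assumptions for illustration, and a real plan would also weigh downtime windows and business priorities.

```python
from graphlib import TopologicalSorter


def migration_waves(dependencies):
    """Group assets into waves; each wave depends only on earlier waves.

    `dependencies` maps an asset name to the set of assets it depends on.
    Raises graphlib.CycleError if the assets depend on each other in a loop.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted for stable output
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical asset inventory with dependencies.
inventory = {
    "web-frontend": {"orders-api"},
    "orders-api": {"orders-db"},
    "reporting": {"orders-db"},
    "orders-db": set(),
}

for number, wave in enumerate(migration_waves(inventory), start=1):
    print(f"Wave {number}: {', '.join(wave)}")
```

Here the database lands in the first wave, the API and reporting tools in the second, and the front end in the third. A circular dependency surfaces as an error, which is itself a useful finding during assessment.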

The Necessity of Azure Migration Consulting Services

You may wonder, “Why can’t my IT staff just manage all of this internally?” Capable internal IT teams are great assets, but the intricacies of cloud migrations often require external assistance. That is why engaging Azure migration consulting services is a good financial move.

Best Practice Recommendations: Azure consultants bring current, specific knowledge of Azure services and best practices, along with lessons from mistakes others have made. They help you avoid blunders and keep you on the right path.

Proven Solutions: Professional services experts have most likely carried out several migrations for businesses across different sectors and sizes. This allows them to address your business's challenges with tested solutions.

Risk Reduction: Every business has critical systems that are exposed to risk during migration. With professionals involved, those risks tend to be lower, thanks to their expertise in managing your data, downtime, and overall security.

Cost Effective: Although professional services carry an upfront cost, they can save you money in the long term. Experts ensure your Azure environment is optimized from day one, avoiding errors, reducing resource waste, and improving overall performance.

Faster Time to Value: With the assistance of an expert, your migration can be performed in a streamlined manner, allowing your business to begin taking advantage of Azure’s benefits sooner.

Focus on Core Business: Your internal team can stay focused on its core responsibilities and business continuity while specialists handle the intricate migration tasks.

Picking the Ideal Partner for Azure Cloud Migration Services

When it comes to providers for Azure migration services, take into consideration the following points:

Look for a company that has successfully migrated clients to Azure across different industries.

Ensure that they have Microsoft Azure certifications and relevant qualifications.

Do they provide services from assessment through post-migration support?

Are their explanations clear, and do they communicate regularly with you?

What do other clients say about the company’s services?

Will they integrate with your internal teams?

A partner should understand your specific business challenges and tailor their Azure Migration Consulting to support your objectives.

The Change is in the Cloud: Adopting Azure

The cloud is no longer an emerging area; it is an industry imperative that is changing the standards of technology and business across the world. Microsoft Azure gives you the opportunity to foster innovation, reduce spending, and boost productivity with a powerful, secure, and elastically scalable platform. Moving your enterprise to the cloud might look daunting, but with the right support it does not have to be.

By using expert Azure migration services, you can expect a smooth, simplified transition. With the right guidance, tools, and support, Azure offers solutions tailored to your company’s growing needs, letting you make full use of the platform and concentrate on expanding your business and reaching new milestones. Stop being weighed down by the complexities of migration and step into a world of benefits in the cloud.

Looking Forward to Taking the Next Step in Your Cloud Journey?

Are you planning the migration of your data and applications to Microsoft Azure? Do you want experts on hand to guide you through every step of cloud migration design and implementation? Our specialized team of certified Azure professionals provides full Azure migration services with solutions tailored to your business requirements. From assessing the scope of work and planning each step, through execution, to post-migration fine-tuning, we ensure an easy, seamless, effective, and safe movement of your data and assets into the cloud. Know more: https://www.bloomcs.com/contact-us/


Software Development Company in Chennai: How to Choose the Best Partner for Your Next Project

Chennai, often called the “Detroit of India” for its booming automobile industry, has quietly become a global hub for software engineering and digital innovation. If you’re searching for the best software development company in Chennai, you have a wealth of options—but finding the right fit requires careful consideration. This article will guide you through the key factors to evaluate, the services you can expect, and tips to ensure your project succeeds from concept to launch.

Why Chennai Is a Top Destination for Software Development

Talent Pool & Educational Infrastructure: Chennai is home to premier engineering institutions like IIT Madras, Anna University, and numerous reputable private colleges. Graduates enter the workforce with strong foundations in computer science, software engineering, and emerging technologies.

Cost-Effective Yet Quality Services: Compared to Western markets, Chennai offers highly competitive rates without compromising on quality. Firms here balance affordability with robust processes (agile methodologies, DevOps pipelines, and stringent QA) to deliver world-class solutions.

Mature IT Ecosystem: With decades of experience serving Fortune 500 enterprises and fast-growing startups alike, Chennai’s software firms boast deep domain expertise across industries including healthcare, finance, e-commerce, and automotive.

What Makes the “Best Software Development Company in Chennai”?

When evaluating potential partners, look for:

Comprehensive Service Offerings

Custom Software Development: Tailored web and mobile applications built on modern stacks (JavaScript frameworks, Java, .NET, Python/Django, Ruby on Rails).

Enterprise Solutions: ERP/CRM integrations, large-scale portals, microservices architectures.

Emerging Technologies: AI/ML models, blockchain integrations, IoT platforms.

Proven Track Record

Case Studies & Portfolios: Review real-world projects similar to your requirements—both in industry and scale.

Client Testimonials & Reviews: Genuine feedback on communication quality, delivery timelines, and post-launch support.

Process & Methodology

Agile / Scrum Practices: Iterative development ensures rapid feedback, early demos, and flexible scope adjustments.

DevOps & CI/CD: Automated pipelines for build, test, and deployment minimize bugs and accelerate time-to-market.

Quality Assurance: Dedicated QA teams, automated testing suites, and security audits guarantee robust, reliable software.

Transparent Communication

Dedicated Account Management: A single point of contact for status updates, issue resolution, and strategic guidance.

Collaboration Tools: Jira, Slack, Confluence, or Microsoft Teams for real-time tracking and seamless information flow.

Cultural Fit & Time-Zone Alignment: Chennai’s working hours (IST) overlap well with Asia, Europe, and parts of North America, facilitating synchronous collaboration. Choose a company whose work culture and ethics align with your organization’s values.

Services to Expect from a Leading Software Development Company in Chennai

Service Area: Key Deliverables

Web & Mobile App Development: Responsive websites, Progressive Web Apps (PWAs), native iOS/Android applications

Enterprise Solutions: ERP/CRM systems, custom back-office tools, data warehousing, BI dashboards

Cloud & DevOps: AWS/Azure/GCP migrations, Kubernetes orchestration, CI/CD automation

AI/ML & Data Science: Predictive analytics, recommendation engines, NLP solutions

QA & Testing: Unit tests, integration tests, security and performance testing

UI/UX Design: Wireframes, interactive prototypes, accessibility audits

Maintenance & Support: SLA-backed bug fixes, feature enhancements, 24/7 monitoring

Steps to Engage Your Ideal Partner

Define Your Project Scope & Goals: Draft a clear requirements document covering core features, target platforms, expected user base, third-party integrations, and budget constraints.

Shortlist & Request Proposals: Contact 3–5 software development companies in Chennai with your brief. Evaluate proposals based on technical approach, estimated timelines, and cost breakdown.

Conduct Technical & Cultural Interviews

Technical Deep-Dive: Ask about architecture decisions, tech stack rationale, and future-proofing strategies.

Team Fit: Meet key developers, project managers, and designers to gauge cultural synergy and communication style.

Pilot Engagement / Proof of Concept: Start with a small, time-boxed POC or MVP. This helps you assess real-world collaboration, code quality, and on-time delivery before scaling up.

Scale & Iterate: Based on the pilot’s success, transition into full-scale development using agile sprints, regular demos, and continuous feedback loops.

Success Stories: Spotlight on Chennai-Based Innovators

E-Commerce Giant Expansion: A Chennai firm helped a regional retailer launch a multilingual e-commerce platform with 1M+ SKUs, achieving 99.9% uptime and a 40% increase in conversion rates within six months.

Healthcare Platform: Partnering with a local hospital chain, a development agency built an end-to-end telemedicine portal—integrating video consultations, patient records, and pharmacy services—serving 50,000+ patients during peak pandemic months.

Fintech Disruption: A Chennai team architected a microservices-based lending platform for a startup, enabling instant credit scoring, automated KYC, and real-time loan disbursement.

Conclusion

Selecting the best software development company in Chennai hinges on matching your project’s technical needs, budget, and cultural expectations with a partner’s expertise, processes, and proven results. Chennai’s vibrant IT ecosystem offers everything from cost-effective startups to global-scale enterprises—so take the time to define your objectives, evaluate portfolios, and run a pilot engagement. With the right collaborator, you’ll not only build high-quality software but also forge a long-term relationship that fuels continuous innovation and growth.


Senior Consultant - Tech Consulting - NAT - CNS - TC - AI Engineer - PAN India

Job title: Senior Consultant – Tech Consulting – NAT – CNS – TC – AI Engineer – PAN India Company: EY Job description: Requisition Id : 1595471 Job Title: AI Engineer – Insights Engine Invest Project Experience: 5+ Years… Search and Azure Cosmos DB to manage large-scale generative AI datasets and outputs. Familiarize with prompt engineering… Expected salary: Location: Bangalore, Karnataka Job…


Empowering Innovation: Why Hello Errors is the Go-To App Developer in Johannesburg

In the heart of South Africa’s tech revolution, Johannesburg is emerging as a powerhouse for innovation, entrepreneurship, and digital transformation. Startups, enterprises, and SMEs are rapidly realizing the importance of mobile-first strategies to connect with their audiences, streamline operations, and scale globally.

If you're searching for an App Developer in Johannesburg who not only understands technology but also aligns with your vision, Hello Errors should be on your radar. With a focus on quality, innovation, and user experience, Hello Errors is redefining the mobile app development landscape in the region.

Why Johannesburg Needs More Than Just Coders

Johannesburg is buzzing with growth across industries like fintech, e-commerce, healthtech, logistics, and education. Businesses here aren’t just looking for basic apps — they need smart, scalable, and impactful digital products that solve real-world problems.

While anyone can build an app, very few can create an ecosystem that engages users, supports your business model, and grows with your brand. That’s where Hello Errors stands apart — not just as a Johannesburg app development company, but as a long-term technology partner.

Hello Errors: More Than an App Developer

At Hello Errors, our core mission is clear: helping businesses get established on the digital platform. But how do we do it differently?

✅ Strategy-First Development

Before any code is written, our team takes the time to understand your goals, market, competitors, and users. Whether you're launching a fintech platform or a booking app for local services, our strategic approach ensures you're set up for success.

✅ Modular & Scalable Architecture

Our apps are built to grow. Using modern backend frameworks and microservices architecture, Hello Errors delivers solutions that can scale seamlessly as your business expands — whether it’s from 100 users to 100,000 or beyond.

✅ Offline-First Capabilities

In emerging markets like Johannesburg, not all users have constant access to high-speed internet. We build offline-first apps with intelligent sync mechanisms, ensuring usability even in low-connectivity scenarios — a major advantage for logistics, retail, or rural-focused apps.

✅ Multilingual Support

South Africa is a diverse nation with multiple official languages. We offer multilingual app development, allowing you to reach and serve wider audiences in their preferred languages.
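The offline-first approach described above can be sketched as a local queue that records every change immediately and uploads it later, in order, once a connection is available. This is a minimal illustration only: the class name `OfflineQueue`, the `send` callback, and the use of `ConnectionError` to signal "offline" are assumptions, and it does not describe Hello Errors' actual sync mechanism or handle conflicts between devices.

```python
from collections import deque


class OfflineQueue:
    """Holds local changes and sends them in order once a connection exists."""

    def __init__(self, send):
        # `send` is a caller-supplied function that uploads one change and
        # raises ConnectionError while the device is offline (an assumption).
        self._send = send
        self._pending = deque()

    def submit(self, change):
        """Record a change locally first, then try to sync."""
        self._pending.append(change)
        return self.flush()

    def flush(self):
        """Send queued changes oldest-first; return how many are still waiting."""
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                break  # still offline: keep the change for the next attempt
            self._pending.popleft()
        return len(self._pending)
```

In use, the app would call `submit` whenever the user acts and call `flush` again when connectivity returns. Because a change is removed from the queue only after `send` succeeds, nothing is lost if the connection drops midway.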

App Types We Build at Hello Errors

As a top mobile app developer in Johannesburg, we don’t offer one-size-fits-all solutions. We tailor each app based on platform, user goals, business type, and budget.

🔹 Android App Development

Built using Kotlin and Java, our Android apps are performance-optimized, secure, and fully aligned with Google’s latest UI/UX guidelines.

🔹 iOS App Development

Using Swift and Objective-C, we create fluid, high-end iOS apps for Apple users — ideal for businesses targeting premium audiences.

🔹 Cross-Platform & Hybrid Apps

Using Flutter or React Native, we develop cost-efficient hybrid apps that deliver a native-like experience across Android and iOS.

🔹 Progressive Web Apps (PWA)

Want your app to be accessible without downloads? PWAs are lightweight, browser-accessible apps that work across devices and platforms.

Emerging Tech Integration: AI, ML, and Cloud

What sets Hello Errors apart from the average custom app development company in Johannesburg is our cutting-edge tech stack.

AI & ML: From intelligent chatbots to predictive behavior models, we build apps that adapt and learn from user behavior.

Cloud Integration: We host apps on robust cloud platforms (AWS, Google Cloud, Azure) to ensure security, uptime, and scalability.

Blockchain: For industries like fintech and logistics, we integrate blockchain for transaction transparency and data integrity.

Local Relevance: Apps That Understand Johannesburg’s Market

Our team is well-versed with regional trends, user behaviors, and market dynamics. This gives us an edge in creating apps that resonate with the Johannesburg audience.

Examples of Local-Focused Features:

Geo-location for township-based services

Mobile payment integration with SnapScan, Zapper, PayFast

Dark mode for power-saving on budget smartphones

Push notifications for real-time order or service updates

We don’t just build apps — we build tools that fit Johannesburg’s digital lifestyle.

Our Process: From Discovery to Post-Launch

The Hello Errors app development lifecycle is seamless, transparent, and collaborative:

Discovery & Ideation – Understand your vision and target users.

Wireframing & Prototyping – Create interactive mockups and get early feedback.

UI/UX Design – Craft beautiful and user-friendly interfaces.

Agile Development – Build your app in iterative sprints with regular check-ins.

QA Testing – Perform rigorous testing for performance, security, and usability.

Deployment – Launch your app on app stores and backend servers.

Ongoing Support – Offer updates, bug fixes, and feature rollouts.

This transparent approach makes Hello Errors a trusted App Developer in Johannesburg for both startups and large enterprises.

Client Success Stories (Hypothetical Examples)

📱 FinEdge: A fintech startup based in Sandton approached Hello Errors to build a secure mobile wallet. Within 6 months of launch, it crossed 50,000 downloads and helped unbanked communities access digital payments.

🚚 QuickRoute: A logistics platform serving Johannesburg's CBD, QuickRoute worked with Hello Errors to build a delivery and fleet-tracking app with real-time updates and driver route optimization.

🏥 MediAssist SA: A healthtech startup collaborated with Hello Errors for a telemedicine app with secure patient records, video consults, and AI-based symptom checking.

Why Choose Hello Errors Over Other App Developers?

✔️ Local Expertise + Global Standards

✔️ AI/ML-Ready App Development

✔️ Affordable Pricing Models

✔️ Post-Launch Support & Upgrades

✔️ SEO, Web, and UI/UX Services All-In-One

If you want to work with a team that listens, delivers, and evolves with you — Hello Errors is your answer.

Get Started with Hello Errors Today

Ready to bring your mobile app idea to life? Whether you’re launching a product, solving a social issue, or digitizing internal operations — we’re here to help.

📞 Book a free consultation

🌐 Visit: https://helloerrors.in

📩 Email: [email protected]

🚀 Partner with the most trusted App Developer in Johannesburg today!

#AppDeveloperJohannesburg #MobileAppDevelopment #HelloErrors #JohannesburgTech #AppDevelopmentCompany #AndroidAppDeveloper #iOSAppDevelopment #StartupApps #DigitalTransformation #TechInJohannesburg #CustomAppDevelopment #AIApps #FintechApps #UXDesign #AppDevelopmentSouthAfrica

How to Build a Scalable AI-Based LMS?

Creating a scalable AI-based Learning Management System (LMS) is a transformative opportunity for EdTech startups to deliver personalized, efficient, and future-ready educational platforms. Integrating artificial intelligence (AI) enhances learner engagement, automates processes, and provides data-driven insights, but scalability ensures the system can handle growth without compromising performance. Here’s a concise blueprint for building a scalable AI-based LMS.

1. Define Core Objectives and Audience

Start by identifying the target audience (K-12, higher education, corporate trainees, or lifelong learners) and their specific needs. Define how AI will enhance the LMS, such as through personalized learning paths, automated grading, or predictive analytics. Align these objectives with scalability goals, ensuring the system can support increasing users, courses, and data volumes.

2. Leverage a Cloud-Based Architecture

A cloud infrastructure, such as AWS, Google Cloud, or Azure, is essential for scalability. Use microservices to modularize components like user management, content delivery, and AI-driven analytics. This allows independent scaling of high-demand modules. Implement load balancers to distribute traffic and ensure consistent performance during peak usage. Cloud solutions also simplify maintenance and updates, reducing long-term costs.
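To make the load-balancing idea concrete, here is a minimal sketch of round-robin routing across microservice instances. It is illustrative only: the service names, instance names, and the `RoundRobinBalancer` class are hypothetical, and a real deployment would normally rely on the cloud provider's managed load balancer rather than hand-written code.

```python
from itertools import cycle
from typing import Dict, Iterator, List


class RoundRobinBalancer:
    """Hands out instances of one service in a fixed rotating order."""

    def __init__(self, instances: List[str]) -> None:
        if not instances:
            raise ValueError("at least one instance is required")
        self._pool: Iterator[str] = cycle(instances)

    def next_instance(self) -> str:
        return next(self._pool)


# Hypothetical registry: each microservice has its own pool, so a
# high-demand module can run more instances than a quiet one.
balancers: Dict[str, RoundRobinBalancer] = {
    "content-delivery": RoundRobinBalancer(["content-1", "content-2", "content-3"]),
    "user-management": RoundRobinBalancer(["users-1"]),
}


def route(service: str) -> str:
    """Pick the instance that should handle the next request for a service."""
    return balancers[service].next_instance()
```

Because each service keeps its own pool, adding a fourth content-delivery instance would not touch user management, which is the independent scaling the paragraph above describes.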

3. Integrate AI for Personalization and Efficiency

AI is the cornerstone of a modern LMS. Incorporate features like:

Adaptive Learning: AI algorithms adjust content difficulty based on learner performance, ensuring optimal engagement.

Recommendation Systems: Suggest relevant courses or resources using machine learning models trained on user behavior.

Automated Assessments: Use natural language processing (NLP) to grade open-ended responses and provide instant feedback.

Chatbots: Deploy AI-powered virtual assistants for 24/7 learner support, reducing instructor workload.

Train AI models on diverse datasets to ensure inclusivity and accuracy, and host them on scalable platforms like Kubernetes for efficient resource management.
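As a rough illustration of the adaptive learning feature listed above, the sketch below raises or lowers a learner's difficulty level based on recent answers. It is a simple rule-based stand-in, not a trained model: the thresholds, the window size, the level range, and the names `LearnerState` and `record_answer` are all assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical tuning values, chosen only for illustration.
MIN_LEVEL, MAX_LEVEL = 1, 5
RAISE_AT, LOWER_AT = 0.8, 0.5
WINDOW = 5  # number of recent answers considered


@dataclass
class LearnerState:
    level: int = MIN_LEVEL
    recent: List[bool] = field(default_factory=list)  # True = correct answer


def record_answer(state: LearnerState, correct: bool) -> int:
    """Record one answer and return the (possibly adjusted) difficulty level."""
    state.recent.append(correct)
    state.recent = state.recent[-WINDOW:]
    if len(state.recent) < WINDOW:
        return state.level  # not enough evidence yet
    accuracy = sum(state.recent) / WINDOW
    if accuracy >= RAISE_AT and state.level < MAX_LEVEL:
        state.level += 1
        state.recent.clear()  # start a fresh window at the new level
    elif accuracy <= LOWER_AT and state.level > MIN_LEVEL:
        state.level -= 1
        state.recent.clear()
    return state.level
```

A production system would likely replace the fixed thresholds with a model trained on learner data, but the control loop (observe performance, adjust difficulty, observe again) would stay much the same.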

4. Prioritize Data Management and Analytics

AI relies on data, so choose a robust database like PostgreSQL for structured data or MongoDB for unstructured content. Implement data pipelines to process real-time analytics, tracking metrics like course completion rates and engagement. Use AI to identify at-risk learners and suggest interventions. Ensure data storage scales seamlessly with user growth by leveraging cloud-native solutions.

5. Ensure Security and Compliance

Protect user data with encryption, secure APIs, and regular vulnerability assessments. Comply with regulations like GDPR, FERPA, or COPPA, depending on the audience. Implement single sign-on (SSO) and role-based access to enhance security without sacrificing usability.
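Role-based access can be sketched in a few lines. The roles, action names, and permission table below are hypothetical and only show the shape of the check. A real LMS would load permissions from its identity provider or database and enforce them on the server for every request.

```python
from enum import Enum
from typing import Dict, Set


class Role(Enum):
    LEARNER = "learner"
    INSTRUCTOR = "instructor"
    ADMIN = "admin"


# Hypothetical permission table: which actions each role may perform.
PERMISSIONS: Dict[Role, Set[str]] = {
    Role.LEARNER: {"view_course", "submit_assignment"},
    Role.INSTRUCTOR: {"view_course", "edit_course", "grade_assignment"},
    Role.ADMIN: {"view_course", "edit_course", "grade_assignment", "manage_users"},
}


def is_allowed(role: Role, action: str) -> bool:
    """Return True only if the role's permission set contains the action."""
    return action in PERMISSIONS.get(role, set())
```

Unknown actions are denied by default, which is usually the safer choice for an access check.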

6. Design for User Experience and Accessibility

A scalable LMS must remain user-friendly. Use responsive design for mobile and desktop access, and ensure compliance with WCAG for accessibility. AI can enhance UX by offering personalized dashboards and predictive search. Conduct user testing to refine the interface and minimize friction.

7. Plan for Continuous Iteration

Launch with a minimum viable product (MVP) and iterate based on user feedback. Monitor KPIs like system uptime and user retention to gauge scalability. Regularly update AI models and infrastructure to adapt to new technologies and user needs.

By combining a cloud-based microservices architecture with AI-driven features, startups can build a scalable LMS that delivers personalized education while handling growth efficiently. Strategic planning and iterative EdTech development ensure long-term success in a competitive landscape.

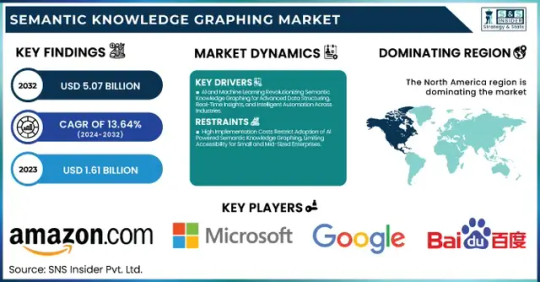

Semantic Knowledge Graphing Market Size, Share, Analysis, Forecast, and Growth Trends to 2032: Transforming Data into Knowledge at Scale

The Semantic Knowledge Graphing Market was valued at USD 1.61 billion in 2023 and is expected to reach USD 5.07 billion by 2032, growing at a CAGR of 13.64% from 2024-2032.
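The growth figures above can be sanity-checked with the standard compound annual growth rate formula. The sketch below assumes nine compounding years, counted from the 2023 base value to 2032. The report does not state its exact convention, so treat the period count as an assumption. The function names are illustrative.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate over the given number of yearly periods."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1 / periods) - 1


def project(start_value: float, rate: float, periods: int) -> float:
    """Value reached after compounding `rate` for `periods` years."""
    return start_value * (1 + rate) ** periods


# Figures quoted in the report (USD billion).
START_2023 = 1.61
END_2032 = 5.07
PERIODS = 2032 - 2023  # assumption: nine compounding years from the 2023 base

implied_rate = cagr(START_2023, END_2032, PERIODS)
projected_2032 = project(START_2023, 0.1364, PERIODS)

print(f"Implied CAGR: {implied_rate:.2%}")
print(f"2032 value at 13.64%: USD {projected_2032:.2f} billion")
```

Under that assumption the implied rate comes out near 13.6%, in line with the quoted 13.64% once rounding is allowed for.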

The Semantic Knowledge Graphing Market is rapidly evolving as organizations increasingly seek intelligent data integration and real-time insights. With the growing need to link structured and unstructured data for better decision-making, semantic technologies are becoming essential tools across sectors like healthcare, finance, e-commerce, and IT. This market is seeing a surge in demand driven by the rise of AI, machine learning, and big data analytics, as enterprises aim for context-aware computing and smarter data architectures.

Semantic Knowledge Graphing Market Poised for Strategic Transformation

This evolving landscape is being shaped by an urgent need to solve complex data challenges with semantic understanding. Companies are leveraging semantic graphs to build context-rich models, enhance search capabilities, and create more intuitive AI experiences. As the digital economy thrives, semantic graphing offers a foundation for scalable, intelligent data ecosystems, allowing seamless connections between disparate data sources.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/6040

Key Market Players:

Amazon.com Inc. (Amazon Neptune, AWS Graph Database)

Baidu, Inc. (Baidu Knowledge Graph, PaddlePaddle)

Facebook Inc. (Facebook Graph API, DeepText)

Google LLC (Google Knowledge Graph, Google Cloud Dataproc)

Microsoft Corporation (Azure Cosmos DB, Microsoft Graph)

Mitsubishi Electric Corporation (Maisart AI, MELFA Smart Plus)

NELL (Never-Ending Language Learner, NELL Knowledge Graph)

Semantic Web Company (PoolParty Semantic Suite, Semantic Middleware)

YAGO (YAGO Knowledge Base, YAGO Ontology)

Yandex (Yandex Knowledge Graph, Yandex Cloud ML)

IBM Corporation (IBM Watson Discovery, IBM Graph)

Oracle Corporation (Oracle Spatial and Graph, Oracle Cloud AI)

SAP SE (SAP HANA Graph, SAP Data Intelligence)

Neo4j Inc. (Neo4j Graph Database, Neo4j Bloom)

Databricks Inc. (Databricks GraphFrames, Databricks Delta Lake)

Stardog Union (Stardog Knowledge Graph, Stardog Studio)

OpenAI (GPT-based Knowledge Graphs, OpenAI Embeddings)

Franz Inc. (AllegroGraph, Allegro CL)

Ontotext AD (GraphDB, Ontotext Platform)

Glean (Glean Knowledge Graph, Glean AI Search)

Market Analysis

The Semantic Knowledge Graphing Market is transitioning from a niche segment to a critical component of enterprise IT strategy. Integration with AI/ML models has shifted semantic graphs from backend enablers to core strategic assets. With open data initiatives, industry-standard ontologies, and a push for explainable AI, enterprises are aggressively adopting semantic solutions to uncover hidden patterns, support predictive analytics, and enhance data interoperability. Vendors are focusing on APIs, graph visualization tools, and cloud-native deployments to streamline adoption and scalability.

Market Trends

AI-Powered Semantics: Use of NLP and machine learning in semantic graphing is automating knowledge extraction and relationship mapping.

Graph-Based Search Evolution: Businesses are prioritizing semantic search engines to offer context-aware, precise results.

Industry-Specific Graphs: Tailored graphs are emerging in healthcare (clinical data mapping), finance (fraud detection), and e-commerce (product recommendation).

Integration with LLMs: Semantic graphs are increasingly being used to ground large language models with factual, structured data.

Open Source Momentum: Tools like RDF4J, Neo4j, and GraphDB are gaining traction for community-led innovation.

Real-Time Applications: Event-driven semantic graphs are now enabling real-time analytics in domains like cybersecurity and logistics.

Cross-Platform Compatibility: Vendors are prioritizing seamless integration with existing data lakes, APIs, and enterprise knowledge bases.

Market Scope

Semantic knowledge graphing holds vast potential across industries:

Healthcare: Improves patient data mapping, drug discovery, and clinical decision support.

Finance: Enhances fraud detection, compliance tracking, and investment analysis.

Retail & E-Commerce: Powers hyper-personalized recommendations and dynamic customer journeys.

Manufacturing: Enables digital twins and intelligent supply chain management.

Government & Public Sector: Supports policy modeling, public data transparency, and inter-agency collaboration.

These use cases represent only the surface of a deeper transformation, where data is no longer isolated but intelligently interconnected.

Market Forecast

As AI continues to integrate deeper into enterprise functions, semantic knowledge graphs will play a central role in enabling contextual AI systems. Rather than just storing relationships, future graphing solutions will actively drive insight generation, data governance, and operational automation. Strategic investments by leading tech firms, coupled with the rise of vertical-specific graphing platforms, suggest that semantic knowledge graphing will become a staple of digital infrastructure. Market maturity is expected to rise rapidly, with early adopters gaining a significant edge in predictive capability, data agility, and innovation speed.

Access Complete Report: https://www.snsinsider.com/reports/semantic-knowledge-graphing-market-6040

Conclusion

The Semantic Knowledge Graphing Market is no longer just a futuristic concept—it's the connective tissue of modern data ecosystems. As industries grapple with increasingly complex information landscapes, the ability to harness semantic relationships is emerging as a decisive factor in digital competitiveness.

About Us:

SNS Insider is one of the leading market research and consulting agencies in the global market research industry. Our aim is to give clients the knowledge they need to operate in changing circumstances. To provide current, accurate market data, consumer insights, and opinions that let you make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

#Semantic Knowledge Graphing Market #Semantic Knowledge Graphing Market Share #Semantic Knowledge Graphing Market Scope #Semantic Knowledge Graphing Market Trends

🚀 Mastering the Cloud: Your Complete Guide to Google Cloud (GCP) in 2025

In the ever-expanding digital universe, cloud computing is the lifeline of innovation. Businesses—big or small—are transforming the way they operate, store, and scale using cloud platforms. Among the giants leading this shift, Google Cloud (GCP) stands tall.

If you're exploring new career paths, already working in tech, or running a startup and wondering whether GCP is worth diving into—this guide is for you. Let’s walk you through the what, why, and how of Google Cloud (GCP) and how it can be your ticket to future-proofing your skills and business.

☁️ What is Google Cloud (GCP)?

Google Cloud Platform (GCP) is Google’s suite of cloud computing services, launched in 2008. It runs on the same infrastructure that powers Google Search, Gmail, YouTube, and more.