#Scrape Airbnb Data - Airbnb API

✈️🌍 Travel Just Got Smarter! 🌐💼

🔍 Curious how #bookingpricesfluctuate? Want insider travel pricing data? We analyzed #GoogleFlights, #Booking.com, and #Airbnb using smart data scraping for insights that help you travel smarter and save more!

From real-time airfare analysis ✈️ to #hoteltrends 🏨 and #Airbnbrental patterns 🏡, Actowiz Solutions is powering the next-gen #travelrevolution with #dataintelligence.

📊 We turned raw travel data into actionable insights:

✅ Find the cheapest months to fly

✅ Track competitor hotel pricing

✅ Monitor Airbnb availability by city

✅ Build a data-backed travel platform or app

✅ Improve OTA price prediction algorithms

Our team helps startups, #travelagencies, #bloggers, and #hotelgroups gain the edge with real-time trend monitoring, APIs, and #interactiveDashboards.

💡 Want to know how? DM us or visit our bio link to explore the full report, charts & infographics!

How is Travel API Scraping Transforming Trends in Travel and Tourism?

In the contemporary era, much like modern transportation transforming the globe into a global village, travel APIs serve as the connective tissue within the travel industry, facilitating the development of robust travel applications. Historically, the travel sector posed significant barriers to entry due to the extensive data and complex online infrastructure required for a comprehensive travel application.

The advent of travel API scraping has revolutionized this landscape, allowing developers to seamlessly integrate location data from Google, airline information from public APIs, accommodation details from platforms like Airbnb, and ride-hailing data from services like Uber. This convergence empowers the creation of versatile applications addressing all traveler needs, from purchasing plane tickets to arranging rides at their destination.

Understanding the mechanics of these APIs is crucial. These software components act as intermediaries, enabling applications to access and utilize diverse data sources without intricate integrations. The significance of travel data scraping services lies in streamlining the development process, reducing the need for extensive coding and infrastructure creation.

Integrating travel APIs into applications offers unparalleled advantages. By leveraging data from reputable sources, developers enhance their offerings with accurate and up-to-date information, improving user experience and overall functionality. Moreover, APIs foster scalability, allowing applications to adapt seamlessly to evolving industry trends.

Choosing the proper travel scraper is paramount for a successful application. While popular choices include Google for location data and airline APIs for flight details, an emerging solution can extract data from any website on the internet, broadening the scope of available information.

The Transformative Impact of Travel APIs on the Industry

Hotel APIs:

Location Data and Traffic APIs:

Traffic APIs: To ensure a smooth travel experience, integrating APIs that offer real-time traffic updates becomes crucial. These APIs contribute to efficient navigation and enhance the user-friendliness of the application.

Business Travel APIs: SAP Concur API: Particularly valuable for B2B travel applications, the SAP Concur API offers insights into employee expenses, including those related to services like Uber rides. This API provides a comprehensive view for travel administrators, aiding in managing business travel expenditures.

These distinct categories of travel APIs collaborate harmoniously, offering developers a versatile toolkit. It allows for creating comprehensive and user-friendly travel applications, breaking down traditional barriers and providing a seamless experience for developers and end-users.

Four Compelling Reasons to Incorporate Travel APIs Into Your Application.

Accelerated Time-to-Market: By incorporating travel APIs, development time significantly decreases. Manual integration steps are replaced by parallel development against APIs, enabling your team to focus swiftly on enhancing your application's unique features.

Cost Efficiency: In an industry where accuracy is paramount, APIs ensure direct data sourcing from the application's origin, eliminating the risk of human error. This reliability is crucial, especially considering the potential consequences of inaccurate data in the travel sector.

Enhanced Data Accuracy: In an industry where accuracy is paramount, scraping travel API data ensures that data is sourced directly from the originating application, eliminating the risk of human error. This reliability is crucial, especially considering the potential consequences of inaccurate data in the travel sector.

Expanded Functionality Offering: The abundance of available travel APIs allows for quickly adding functionalities to your application. Travelers increasingly prefer planning their entire trips through a single mobile application. APIs offer a cost-effective solution, enabling the seamless integration of diverse functionalities, thereby allowing your application to compete favorably in the industry.

Sometimes travel applications need more than rudimentary information about hotels or popular tourist spots. Users may seek more mundane yet crucial data, such as the number of local bakeries or the quickest place to grab a pizza. For such scenarios, an API capable of extracting data from any website becomes invaluable. That is what we offer: an outstanding public API coupled with advanced web scraping software.

Our public scraping API empowers you to access not only the data you know you need but also information you may not have considered. Integration with your applications complements existing travel APIs, enhancing the robustness of your offerings. We provide prebuilt modules for extracting diverse data, ranging from business details to social media insights. Our team is ready to assist if you require a custom scraping solution.

With our scraping API, you can delve into scraping restaurant, hotel, and tour reviews. Its added functionality enriches your application, providing users with comprehensive information and elevating their experience. Explore the possibilities by integrating scraping API into your applications and offering users a more enriched and insightful travel experience.

Conclusion: Gone are the days when the travel industry seemed like an imposing fortress, deterring newcomers with its complexities. Today, it is a vast and interconnected ecosystem seamlessly woven by travel APIs. These APIs expose distinctive functionalities from various applications, collectively transforming the travel experience into unprecedented ease and accessibility.

We specialize in scraping travel data at Travel Scrape, mainly focusing on Travel aggregators and Mobile travel app data. Our services empower businesses with enriched decision-making capabilities, providing data-driven intelligence. Connect with us to unlock a pathway to success, utilizing aggregated data for a competitive edge in the dynamic travel industry. Reach out today to harness the power of scraped data and make informed decisions that set your business apart and drive success in this highly competitive landscape.

Know more>>https://www.travelscrape.com/travel-api-scraping-transforming-trends-in-travel-and-tourism.php

#TravelAPIScraping#ScrapeTravelApiData#WebScrapingintheTravelApi#ExtractTraveldataAPI#CollectDataFromTravelDataAPI

Real-Time Travel Fare Datasets: Airlines, Hotels & OTAs

Introduction

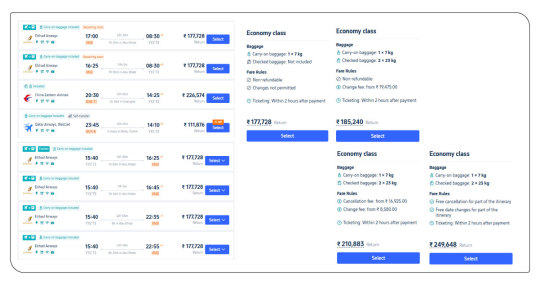

In 2025, dynamic pricing has become the cornerstone of travel industry growth. From fluctuating airfares to limited hotel availability, travel businesses must constantly track and respond to real-time market changes. However, staying updated across global platforms like Booking.com, Emirates, Expedia, and Airbnb can be overwhelming without automation.

That’s where ArcTechnolabs steps in.

We specialize in pre-scraped, real-time fare datasets across the USA and UAE, tailored for the Travel & Hospitality industry. Whether you're building a fare aggregator, launching a hotel price comparison app, or optimizing dynamic pricing for airlines—our ready-to-use datasets put you ahead.

Why the USA & UAE?

Both regions represent high-demand and high-volume travel markets.

USA: World’s largest OTA and hotel market, with volatile fare trends across New York, LA, and San Francisco.

UAE: A global transit hub with fast-growing tourism in Dubai and Abu Dhabi.

By scraping and enriching fare data from top travel portals in these countries, ArcTechnolabs delivers actionable intelligence across flights, accommodations, and travel packages.

Airline Fare Dataset – USA & UAE

Sources Covered: Delta, Emirates, American Airlines, Etihad, United, Qatar Airways, Expedia, Skyscanner, Google Flights

What’s Included:

| Attribute | Description |
| --- | --- |
| Route (From – To) | JFK → LAX, DXB → LHR |
| Airline Name | Emirates, United, etc. |
| Travel Dates | Departure, Return |
| Fare Price | Real-time ticket price |
| Fare Type | Economy, Business, Premium Economy |
| Baggage Info | Included bags, charges |
| Refundability | Refundable / Non-Refundable |
| Last Scraped | Timestamp (hourly/daily) |

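Sample Data:

| From | To | Airline | Fare | Class | Date | Refundable | Scrape Time |
| --- | --- | --- | --- | --- | --- | --- | --- |
| JFK | DXB | Emirates | $920 | Economy | 2025-06-10 | Yes | 2:00 PM EST |
| LAX | ORD | Delta | $370 | Economy | 2025-06-11 | No | 2:05 PM EST |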
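To make the schema above concrete, here is a minimal sketch of how the two sample rows could be loaded into typed records in Python. The `FareRecord` class, its field names, and the `parse_fare_row` helper are hypothetical names chosen for illustration, and the sketch assumes fares are quoted in USD; the actual dataset format may differ.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class FareRecord:
    """One row of the airline fare table (field names are illustrative)."""
    origin: str        # departure airport code
    destination: str   # arrival airport code
    airline: str
    fare_usd: float    # assumption: the "$" prices are in USD
    fare_class: str
    travel_date: date
    refundable: bool
    scraped_at: str    # kept as text because the sample gives no scrape date


def parse_fare_row(row: list[str]) -> FareRecord:
    """Convert one row of the sample table into a typed record."""
    origin, destination, airline, fare, fare_class, day, refundable, scraped = row
    return FareRecord(
        origin=origin,
        destination=destination,
        airline=airline,
        fare_usd=float(fare.replace("$", "").replace(",", "")),
        fare_class=fare_class,
        travel_date=date.fromisoformat(day),
        refundable=refundable.strip().lower() == "yes",
        scraped_at=scraped,
    )


# The two rows from the sample table above.
sample_rows = [
    ["JFK", "DXB", "Emirates", "$920", "Economy", "2025-06-10", "Yes", "2:00 PM EST"],
    ["LAX", "ORD", "Delta", "$370", "Economy", "2025-06-11", "No", "2:05 PM EST"],
]
records = [parse_fare_row(row) for row in sample_rows]
cheapest = min(records, key=lambda r: r.fare_usd)
print(cheapest.airline, cheapest.fare_usd)  # Delta 370.0
```

Typed records like these make it easier to sort, filter, or compare fares than working with raw strings.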

Hotel Pricing Dataset – Dubai, Abu Dhabi, New York, Las Vegas

Sources Covered: Booking.com, Agoda, MakeMyTrip, Airbnb, Expedia, Marriott, Hilton, Hotels.com

What’s Included:

| Attribute | Description |
| --- | --- |
| Hotel Name | Hilton, Burj Al Arab, etc. |
| City | Dubai, NYC, etc. |
| Room Type | Deluxe, Suite, Studio |
| Price Per Night | With/without tax |
| Occupancy Details | 2 Adults, 1 Child |
| Availability | In Stock / Sold Out |
| Ratings | Stars + User Score |
| Cancellation | Free / Partial / No Cancellation |

Sample Data:

| Hotel | City | Room Type | Price (USD) | Rating | Availability |
| --- | --- | --- | --- | --- | --- |
| Burj Al Arab | Dubai | Suite | $1,990 | 4.8 | Available |
| Marriott Times Sq | NYC | Deluxe | $420 | 4.3 | Sold Out |

OTA Fare Dataset – Multi-Platform Aggregation

We scrape fares from popular OTAs such as:

Booking.com

Agoda

MakeMyTrip

Expedia

Kayak

Hopper

Cleartrip

Goibibo

Key Features:

| Attribute | Description |
| --- | --- |
| Platform Name | Expedia, Booking, etc. |
| Hotel/Airline Listing | Unified listing from multiple providers |
| Fare Delta | Fare differences by provider |
| Room/Fare Type | Standard, Non-refundable, Promo |
| Link for Redirection | Direct booking link |
| Platform Fee Tracking | Booking fees, cleaning charges (Airbnb) |
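The "Fare Delta" attribute can be read as the gap between each provider's price and the lowest price found for the same listing. The sketch below shows one way to compute it in Python; the `fare_deltas` function and the prices are hypothetical and only illustrate the idea, not how the dataset is actually built.

```python
def fare_deltas(prices_by_platform: dict[str, float]) -> dict[str, float]:
    """Return each platform's price minus the cheapest price for the same listing."""
    if not prices_by_platform:
        return {}
    lowest = min(prices_by_platform.values())
    return {
        platform: round(price - lowest, 2)
        for platform, price in prices_by_platform.items()
    }


# Made-up quotes for a single hotel night, for illustration only.
quotes = {"Expedia": 412.00, "Booking.com": 398.50, "Agoda": 405.25}
deltas = fare_deltas(quotes)
print(deltas)  # {'Expedia': 13.5, 'Booking.com': 0.0, 'Agoda': 6.75}
```

A delta of 0.0 marks the cheapest provider, so the same dictionary can drive both a "best price" badge and a price-difference column.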

Use Cases for Travel & Hospitality Businesses

1. Dynamic Pricing for Travel Agencies

Compare fares hourly to adjust commission-based pricing for tours or bundles.

2. Fare Aggregator Development

Use real-time datasets to build or enhance apps comparing flights/hotels across platforms.

3. Revenue Management for Airlines/Hotels

Monitor competitor fare changes to revise pricing dynamically based on seat availability, season, or city demand.

4. Market Research & Fare Trend Analytics

Analyze average fare fluctuations by route, city, season, or property category.

5. AI-Driven Booking Assistants

Power smart chatbots or virtual agents with real-time pricing logic.

How Frequently Are Datasets Updated?

| Dataset Type | Update Frequency |
| --- | --- |
| Airline Fares | Hourly via API |
| Hotel Pricing | Every 6 hours or nightly |
| OTA Aggregates | Daily or on demand |

We also offer:

Daily CSV/Excel reports

Real-time API streaming (JSON)

Historical trend datasets (up to 180 days)

How ArcTechnolabs Ensures Accuracy

Scraping through headless browsers & IP rotation

Price verification from multiple platforms

Timestamp-based price validation

Duplicate filtering & price correction algorithms

GDPR-compliant and legally safe scraping practices
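As a rough illustration of timestamp-based validation and duplicate filtering, the Python sketch below keeps only the newest row per platform and listing and discards rows older than a cutoff. The function name, the row layout, the six-hour cutoff, and the sample values are all assumptions made for this example; the provider's actual algorithms are not described in detail here.

```python
from datetime import datetime, timedelta


def latest_fresh_prices(rows, now, max_age=timedelta(hours=6)):
    """Keep the newest row per (platform, listing) and drop rows older than max_age."""
    newest = {}
    for row in rows:
        if now - row["scraped_at"] > max_age:
            continue  # stale price: too old to trust
        key = (row["platform"], row["listing"])
        if key not in newest or row["scraped_at"] > newest[key]["scraped_at"]:
            newest[key] = row  # a more recent scrape replaces the older duplicate
    return list(newest.values())


# Hypothetical scraped rows.
now = datetime(2025, 6, 10, 14, 0)
rows = [
    {"platform": "Expedia", "listing": "Hotel A", "price": 420.0,
     "scraped_at": datetime(2025, 6, 10, 9, 0)},
    {"platform": "Expedia", "listing": "Hotel A", "price": 415.0,
     "scraped_at": datetime(2025, 6, 10, 13, 0)},
    {"platform": "Booking.com", "listing": "Hotel A", "price": 410.0,
     "scraped_at": datetime(2025, 6, 9, 20, 0)},  # 18 hours old, dropped
]
fresh = latest_fresh_prices(rows, now)
print([(r["platform"], r["price"]) for r in fresh])  # [('Expedia', 415.0)]
```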

Customization Options

| Custom Parameter | Examples |
| --- | --- |
| Region | USA only, UAE only, multi-country |
| Class Type | Only Business Class fares |
| Brands | Marriott only, Hilton only |
| OTA Scope | Expedia vs Booking only |
| Price Bracket | < $500 / 3-Star Hotels only |
| Language & Currency | USD / AED / EUR |

City Coverage for 2025

| USA (Top Cities) | UAE (Top Cities) |
| --- | --- |
| New York, Los Angeles | Dubai, Abu Dhabi |
| San Francisco, Chicago | Sharjah |
| Las Vegas, Miami | Al Ain |

How to Get Started with ArcTechnolabs Travel Fare Datasets

Request a Sample File (API or CSV)

Choose a Plan: Daily, Weekly, Real-Time

Customize by Platform, City, or Category

Integrate via API or Download Excel Reports

Visit ArcTechnolabs.com or contact our data solutions team for a free demo!

Conclusion

In a post-pandemic world where flexibility and speed drive travel decisions, real-time fare intelligence is a must-have. With ArcTechnolabs’ pre-scraped datasets for airlines, hotels, and OTAs in the USA and UAE, your team can act faster, price smarter, and build better booking experiences.

Let us help you build the future of travel pricing—today.

Source >> https://www.arctechnolabs.com/real-time-travel-fare-dataset-usa-uae.php

#RealTimeAirlineFareDataset#AirfareTrendsDataExtraction#HotelBookingPricesDataset#ExpediaFlightAndHotelScraper#BookingDotComPriceDataExtraction#ScrapingFlightAndHotelDataAPIs

Why CodingBrushup is the Ultimate Tool for Your Programming Skills Revamp

In today's fast-paced tech landscape, staying current with programming languages and frameworks is more important than ever. Whether you're a beginner looking to break into the world of development or a seasoned coder aiming to sharpen your skills, Coding Brushup is the perfect tool to help you revamp your programming knowledge. With its user-friendly features and comprehensive courses, Coding Brushup offers specialized resources to enhance your proficiency in languages like Java, Python, and frameworks such as React JS. In this blog, we’ll explore why Coding Brushup for Programming is the ultimate platform for improving your coding skills and boosting your career.

1. A Fresh Start with Java: Master the Fundamentals and Advanced Concepts

Java remains one of the most widely used programming languages in the world, especially for building large-scale applications, enterprise systems, and Android apps. However, it can be challenging to master Java’s syntax and complex libraries. This is where Coding Brushup shines.

For newcomers to Java or developers who have been away from the language for a while, CodingBrushup offers structured, in-depth tutorials that cover everything from basic syntax to advanced concepts like multithreading, file I/O, and networking. These interactive lessons help you brush up on core Java principles, making it easier to get back into coding without feeling overwhelmed.

The platform’s practice exercises and coding challenges further help reinforce the concepts you learn. You can start with simple exercises, such as writing a “Hello World” program, and gradually work your way up to more complicated tasks like creating a multi-threaded application. This step-by-step progression ensures that you gain confidence in your abilities as you go along.

Additionally, for those looking to prepare for Java certifications or technical interviews, CodingBrushup’s Java section is designed to simulate real-world interview questions and coding tests, giving you the tools you need to succeed in any professional setting.

2. Python: The Versatile Language for Every Developer

Python is another powerhouse in the programming world, known for its simplicity and versatility. From web development with Django and Flask to data science and machine learning with libraries like NumPy, Pandas, and TensorFlow, Python is a go-to language for a wide range of applications.

CodingBrushup offers an extensive Python course that is perfect for both beginners and experienced developers. Whether you're just starting with Python or need to brush up on more advanced topics, CodingBrushup’s interactive approach makes learning both efficient and fun.

One of the unique features of CodingBrushup is its ability to focus on real-world projects. You'll not only learn Python syntax but also build projects that involve web scraping, data visualization, and API integration. These hands-on projects allow you to apply your skills in real-world scenarios, preparing you for actual job roles such as a Python developer or data scientist.

For those looking to improve their problem-solving skills, CodingBrushup offers daily coding challenges that encourage you to think critically and efficiently, which is especially useful for coding interviews or competitive programming.

3. Level Up Your Front-End Development with React JS

In the world of front-end development, React JS has emerged as one of the most popular JavaScript libraries for building user interfaces. React is widely used by top companies like Facebook, Instagram, and Airbnb, making it an essential skill for modern web developers.

Learning React can sometimes be overwhelming due to its unique concepts such as JSX, state management, and component lifecycles. That’s where Coding Brushup excels, offering a structured React JS course designed to help you understand each concept in a digestible way.

Through CodingBrushup’s React JS tutorials, you'll learn how to:

Set up React applications using Create React App

Work with functional and class components

Manage state and props to pass data between components

Use React hooks like useState, useEffect, and useContext for cleaner code and better state management

Incorporate routing with React Router for multi-page applications

Optimize performance with React memoization techniques

The platform’s interactive coding environment lets you experiment with code directly, making learning React more hands-on. By building real-world projects like to-do apps, weather apps, or e-commerce platforms, you’ll learn not just the syntax but also how to structure complex web applications. This is especially useful for front-end developers looking to add React to their skillset.

4. Coding Brushup: The All-in-One Learning Platform

One of the best things about Coding Brushup is its all-in-one approach to learning. Instead of jumping between multiple platforms or textbooks, you can find everything you need in one place. CodingBrushup offers:

Interactive coding environments: Code directly in your browser with real-time feedback.

Comprehensive lessons: Detailed lessons that guide you from basic to advanced concepts in Java, Python, React JS, and other programming languages.

Project-based learning: Build projects that add to your portfolio, proving that you can apply your knowledge in practical settings.

Customizable difficulty levels: Choose courses and challenges that match your skill level, from beginner to advanced.

Code reviews: Get feedback on your code to improve quality and efficiency.

This structured learning approach allows developers to stay motivated, track progress, and continue to challenge themselves at their own pace. Whether you’re just getting started with programming or need to refresh your skills, Coding Brushup tailors its content to suit your needs.

5. Boost Your Career with Certifications

CodingBrushup isn’t just about learning code—it’s also about helping you land your dream job. After completing courses in Java, Python, or React JS, you can earn certifications that demonstrate your proficiency to potential employers.

Employers are constantly looking for developers who can quickly adapt to new languages and frameworks. By adding Coding Brushup certifications to your resume, you stand out in the competitive job market. Plus, the projects you build and the coding challenges you complete serve as tangible evidence of your skills.

6. Stay Current with Industry Trends

Technology is always evolving, and keeping up with the latest trends can be a challenge. Coding Brushup stays on top of these trends by regularly updating its content to include new libraries, frameworks, and best practices. For example, with the growing popularity of React Native for mobile app development or TensorFlow for machine learning, Coding Brushup ensures that developers have access to the latest resources and tools.

Additionally, Coding Brushup provides tutorials on new programming techniques and best practices, helping you stay at the forefront of the tech industry. Whether you’re learning about microservices, cloud computing, or containerization, CodingBrushup has you covered.

Conclusion

In the world of coding, continuous improvement is key to staying relevant and competitive. Coding Brushup offers the perfect solution for anyone looking to revamp their programming skills. With comprehensive courses on Java, Python, and React JS, interactive lessons, real-world projects, and career-boosting certifications, CodingBrushup is your one-stop shop for mastering the skills needed to succeed in today’s tech-driven world.

Whether you're preparing for a new job, transitioning to a different role, or just looking to challenge yourself, Coding Brushup has the tools you need to succeed.

How To Scrape Airbnb Listing Data Using Python And Beautiful Soup: A Step-By-Step Guide

The travel industry is a huge business, set to grow exponentially in coming years. It revolves around movement of people from one place to another, encompassing the various amenities and accommodations they need during their travels. This concept shares a strong connection with sectors such as hospitality and the hotel industry.

Here, it becomes prudent to mention Airbnb. Airbnb stands out as a well-known online platform that empowers people to list, explore, and reserve lodging and accommodation choices, typically in private homes, offering an alternative to the conventional hotel and inn experience.

Scraping Airbnb listings data entails retrieving or collecting data from Airbnb property listings. To scrape data from Airbnb's website successfully, you need to understand how Airbnb's listing data works. This blog will guide you through how to scrape Airbnb listing data.

What Is Airbnb Scraping?

Airbnb serves as a well-known online platform enabling individuals to rent out their homes or apartments to travelers. Utilizing Airbnb offers advantages such as access to extensive property details like prices, availability, and reviews.

Data from Airbnb is like a treasure trove of valuable knowledge, not just numbers and words. It can help you do better than your rivals. If you use the Airbnb scraper tool, you can easily get this useful information.

Effectively scraping Airbnb’s website data requires comprehension of its architecture. Property information, listings, and reviews are stored in a database, with the website using APIs to fetch and display this data. To scrape the details, one must interact with these APIs and retrieve the data in the preferred format.

In essence, Airbnb listing scraping involves extracting or scraping Airbnb listings data. This data encompasses various aspects such as listing prices, locations, amenities, reviews, and ratings, providing a vast pool of data.

What Are the Types of Data Available on Airbnb?

Navigating Airbnb's online world uncovers a wealth of data. To begin with, there are property details such as the property type, location, nightly price, and the count of bedrooms and bathrooms. There are also amenities (like Wi-Fi, a pool, or a fully-equipped kitchen) and the times for check-in and check-out. Then, there is data about the hosts, guest reviews, and property availability.

Here's a simplified table to provide a better overview:

| Data type | Description |
| --- | --- |
| Property Details | Data regarding the property, including its category, location, cost, number of rooms, available features, and check-in/check-out schedules. |
| Host Information | Information about the property's owner, encompassing their name, response time, and the number of properties they oversee. |
| Guest Reviews | Ratings and written feedback from previous property guests. |
| Booking Availability | Data on property availability, whether it's available for booking or already booked, and the minimum required stay. |
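One way to hold these four kinds of data in a scraper is a small set of record types. The following Python sketch is only an illustration: the class and field names (`Listing`, `Host`, `Review`, and so on) are hypothetical and do not correspond to any official Airbnb schema, and the sample values are made up.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Host:
    name: str
    response_time: Optional[str] = None
    listings_managed: Optional[int] = None


@dataclass
class Review:
    rating: float
    text: str = ""


@dataclass
class Listing:
    # Property details
    property_type: str
    location: str
    nightly_price: float
    bedrooms: int
    bathrooms: float
    amenities: list[str] = field(default_factory=list)
    check_in: Optional[str] = None
    check_out: Optional[str] = None
    # Host information and guest reviews
    host: Optional[Host] = None
    reviews: list[Review] = field(default_factory=list)
    # Booking availability
    is_available: Optional[bool] = None
    minimum_nights: Optional[int] = None

    def average_rating(self) -> Optional[float]:
        """Mean of the review ratings, or None when there are no reviews."""
        if not self.reviews:
            return None
        return sum(r.rating for r in self.reviews) / len(self.reviews)


# Made-up listing for illustration.
listing = Listing(
    property_type="Entire apartment",
    location="Lisbon",
    nightly_price=95.0,
    bedrooms=2,
    bathrooms=1.0,
    amenities=["Wi-Fi", "Kitchen"],
    host=Host(name="Ana", listings_managed=3),
    reviews=[Review(5.0, "Great stay"), Review(4.0)],
    minimum_nights=2,
)
print(listing.average_rating())  # 4.5
```

Optional fields default to `None` because not every listing page exposes every detail.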

Why Is the Airbnb Data Important?

Extracting data from Airbnb has many advantages for different reasons:

Market Research

Scraping Airbnb listing data helps you gather information about the rental market. You can learn about prices, property features, and how often places get rented. It is useful for understanding the market, finding good investment opportunities, and knowing what customers like.

Getting to Know Your Competitor

By scraping Airbnb listings data, you can discover what other companies in your industry are doing. You'll learn about their offerings, pricing, and customer opinions.

Evaluating Properties

Scraping Airbnb listing data lets you look at properties similar to yours. You can see how often they get booked, what they charge per night, and what guests think of them. It helps you set the prices right, make your property better, and make guests happier.

Smart Decision-Making

With scraped Airbnb listing data, you can make smart choices about buying properties, managing your portfolio, and deciding where to invest. The data can tell you which places are popular, what guests want, and what is trendy in the vacation rental market.

Personalizing and Targeting

By analyzing scraped Airbnb listing data, you can learn what your customers like. You can find out about popular features, the best neighborhoods, or unique things guests want. Next, you can change what you offer to fit what your customers like.

Automating and Saving Time

Instead of typing everything yourself, web scraping lets a computer do it for you automatically, even for large amounts of data. It saves you time and money and keeps your Airbnb listing data up to date.

Is It Legal to Scrape Airbnb Data?

Collecting Airbnb listing data that is publicly visible on the internet is generally acceptable, as long as you follow the applicable rules and regulations. However, things can get stricter if the data you are trying to gather includes personal information or material that Airbnb holds copyright on.

Most of the time, websites like Airbnb do not let automated tools gather information unless they give permission. It is one of the rules you agree to when you use their service. However, the specific rules can change depending on the country and its policies about automated tools and unauthorized access to systems.
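One small, practical step before crawling any site is to check its robots.txt file, which states what the site asks automated tools not to fetch. The sketch below uses Python's standard `urllib.robotparser` module on a made-up robots.txt; the rules, the `my-crawler` user agent, and the example.com URLs are hypothetical. Note that robots.txt is separate from a site's terms of service, so passing this check does not by itself mean permission has been granted.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt content, not taken from any real site.
EXAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""

print(is_allowed(EXAMPLE_ROBOTS, "my-crawler", "https://example.com/private/data"))  # False
print(is_allowed(EXAMPLE_ROBOTS, "my-crawler", "https://example.com/public/page"))   # True
```

For a live site, `RobotFileParser.set_url()` followed by `read()` downloads the real file instead of parsing a string.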

How To Scrape Airbnb Listing Data Using Python and Beautiful Soup?

Websites related to travel, like Airbnb, have a lot of useful information. This guide will show you how to scrape Airbnb listing data using Python and Beautiful Soup. The information you collect can be used for various things, like studying market trends, setting competitive prices, understanding what guests think from their reviews, or even building your own recommendation system.

We will use Python as a programming language as it is perfect for prototyping, has an extensive online community, and is a go-to language for many. Also, there are a lot of libraries for basically everything one could need. Two of them will be our main tools today:

Beautiful Soup — Allows easy scraping of data from HTML documents

Selenium — A multi-purpose tool for automating web-browser actions

Getting Ready to Scrape Data

Now, let us think about how a user browses Airbnb listings. They start by entering a destination, specify dates, and then click "search." Airbnb shows them lots of places.

This first page is a search page with many options, but it shows only brief data about each one.

After browsing for a while, the person clicks on one of the places. It takes them to a detailed page with lots of information about that specific place.

We want to get all the useful information, so we will deal with both the search page and the detailed page. But we also need to find a way to get info from the listings that are not on the first search page.

Usually, there are 20 results on one search page, and for each place, you can go up to 15 pages deep (after that, Airbnb says no more).

It seems quite straightforward. For our program, we have two main tasks:

looking at a search page, and getting data from a detailed page.

So, let us begin writing some code now!

Getting the listings

Using Python to fetch Airbnb listing pages is very easy. Here is the function that downloads a webpage and turns it into something we can work with: a Beautiful Soup object.

    import requests
    from bs4 import BeautifulSoup


    def scrape_page(page_url):
        """Extracts HTML from a webpage"""
        answer = requests.get(page_url)
        content = answer.content
        soup = BeautifulSoup(content, features='html.parser')
        return soup

Beautiful Soup helps us move around an HTML page and get its parts. For example, if we want to take the words from a “div” object with a class called "foobar" we can do it like this:

text = soup.find("div", {"class": "foobar"}).get_text()
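One thing to watch with this pattern: `find` returns `None` when nothing matches, so chaining `.get_text()` raises an `AttributeError` on pages where the element is missing. The sketch below shows a small guard, run against an inline HTML string so it works offline. The `text_or_none` helper and the sample HTML are hypothetical, and the example assumes the `beautifulsoup4` package is installed.

```python
from typing import Optional

from bs4 import BeautifulSoup


def text_or_none(soup, tag: str, class_name: str) -> Optional[str]:
    """Return the stripped text of the first matching element, or None if absent."""
    element = soup.find(tag, {"class": class_name})
    return element.get_text(strip=True) if element is not None else None


# Inline HTML stands in for a downloaded page.
html = '<div class="foobar"> Cozy loft </div><div class="other">x</div>'
soup = BeautifulSoup(html, features="html.parser")

print(text_or_none(soup, "div", "foobar"))   # Cozy loft
print(text_or_none(soup, "div", "missing"))  # None
```

Returning `None` lets the caller decide whether a missing field is an error or just an empty cell in the output.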

On Airbnb's listing data search page, what we are looking for are separate listings. To get to them, we need to tell our program which kinds of tags and names to look for. A simple way to do this is to use a tool in Chrome called the developer tool (press F12).

Each listing sits inside a "div" object with the class name "_8s3ctt". We also know that each search page has 20 different listings. We can collect all of them at once using a Beautiful Soup method called "findAll" (spelled "find_all" in current versions).

    def extract_listing(page_url):
        """Extracts listings from an Airbnb search page"""
        page_soup = scrape_page(page_url)
        # find_all is the current spelling of findAll;
        # each listing card is a div with this class name
        listings = page_soup.find_all("div", {"class": "_8s3ctt"})
        return listings

Getting Basic Info from Listings

From the search page, we can get the main info about each Airbnb listing, like the name, total price, average rating, and more.

All this info sits in different HTML objects on the webpage, each with a different class name. So, we could write multiple single extractions to get each piece:

    name = soup.find('div', {'class':'_hxt6u1e'}).get('aria-label')
    price = soup.find('span', {'class':'_1p7iugi'}).get_text()
    ...

However, I chose to overcomplicate right from the beginning of the project by creating a single function that can be used again and again to get various things on the page.

    def extract_element_data(soup, params):
        """Extracts data from a specified HTML element"""

        # 1. Find the right tag
        if 'class' in params:
            elements_found = soup.find_all(params['tag'], params['class'])
        else:
            elements_found = soup.find_all(params['tag'])

        # 2. Extract text from these tags
        if 'get' in params:
            element_texts = [el.get(params['get']) for el in elements_found]
        else:
            element_texts = [el.get_text() for el in elements_found]

        # 3. Select a particular text or concatenate all of them
        tag_order = params.get('order', 0)
        if tag_order == -1:
            output = '**__**'.join(element_texts)
        else:
            output = element_texts[tag_order]

        return output

Now, we've got everything we need to go through the entire page with all the listings and collect basic details from each one. I'm showing you an example of how to get only two details here, but you can find the complete code in a git repository.

    RULES_SEARCH_PAGE = {
        'name': {'tag': 'div', 'class': '_hxt6u1e', 'get': 'aria-label'},
        'rooms': {'tag': 'div', 'class': '_kqh46o', 'order': 0},
    }

    listing_soups = extract_listing(page_url)

    features_list = []
    for listing in listing_soups:
        features_dict = {}
        for feature in RULES_SEARCH_PAGE:
            features_dict[feature] = extract_element_data(listing, RULES_SEARCH_PAGE[feature])
        features_list.append(features_dict)

Getting All the Pages for One Place

Having more is usually better, especially when it comes to data. Airbnb lets us see up to 300 listings for one place (15 pages of 20 results each), and we are going to scrape them all.

There are different ways to go through the pages of search results. It is easiest to see how the web address (URL) changes when we click on the "next page" button and then make our program do the same thing.

All we have to do is add a query parameter called "items_offset" to our initial URL. With it, we can create a list of all the search page links for one place.

    def build_urls(url, listings_per_page=20, pages_per_location=15):
        """Builds links for all search pages for a given location"""
        url_list = []
        for i in range(pages_per_location):
            offset = listings_per_page * i
            url_pagination = url + f'&items_offset={offset}'
            url_list.append(url_pagination)
        return url_list
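Appending `&items_offset=...` as a string works when the starting URL already has a query string, but it produces a malformed link if the URL has no `?` or already carries an `items_offset` value. As an alternative sketch, the standard `urllib.parse` module can set the parameter safely. The `with_offset` and `build_search_urls` names and the example.com URL are hypothetical; only the `items_offset` parameter comes from the text above.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_offset(url: str, offset: int) -> str:
    """Return url with its items_offset query parameter set to offset."""
    parts = urlsplit(url)
    # Keep every existing parameter except a previous items_offset.
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key != "items_offset"
    ]
    query.append(("items_offset", str(offset)))
    return urlunsplit(parts._replace(query=urlencode(query)))


def build_search_urls(url, listings_per_page=20, pages_per_location=15):
    """Build one link per search page: offsets 0, 20, 40, ... by default."""
    return [
        with_offset(url, listings_per_page * page)
        for page in range(pages_per_location)
    ]


urls = build_search_urls("https://example.com/s/homes?query=Lisbon")
print(len(urls))  # 15
print(urls[0])    # https://example.com/s/homes?query=Lisbon&items_offset=0
print(urls[-1])   # https://example.com/s/homes?query=Lisbon&items_offset=280
```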

We have completed half of the job now. We can run our program to gather basic details for all the listings in one place. We just need to provide the starting link, and things are about to get even more exciting.

Dynamic Pages

It takes some time, around 3-4 seconds, for a detailed page to fully load. Before that, we can only see the base HTML of the webpage without all the listing details we want to collect.

Sadly, the "requests" tool doesn't allow us to wait until everything on the page is loaded. But Selenium does. Selenium can work just like a person, waiting for all the cool website things to show up, scrolling, clicking buttons, filling out forms, and more.

Now, we plan to wait for things to appear and then click on them. To get information about the amenities and price, we need to click on certain parts.

To sum it up, here is what we are going to do:

Start up Selenium.

Open a detailed page.

Wait for the buttons to show up.

Click on the buttons.

Wait a little longer for everything to load.

Get the HTML code.

Let us put them into a Python function.

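import time

from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.common.exceptions import WebDriverException
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.common.by import By

def extract_soup_js(listing_url, waiting_time=(5, 1)):
    """Extracts HTML from JS pages: open, wait, click, wait, extract"""
    options = Options()
    options.add_argument('--headless')
    options.add_argument('--no-sandbox')
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(listing_url)
        time.sleep(waiting_time[0])
        # Selenium 4 removed find_element_by_class_name; use By.CLASS_NAME instead
        for button_class in ('_13e0raay', '_gby1jkw'):  # amenities, prices
            try:
                driver.find_element(By.CLASS_NAME, button_class).click()
            except WebDriverException:
                pass  # button not found or not clickable
        time.sleep(waiting_time[1])
        detail_page = driver.page_source
    finally:
        driver.quit()  # always close the browser, even on errors
    return BeautifulSoup(detail_page, features='html.parser')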
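The fixed `time.sleep` calls wait the full interval even when the page is ready sooner. Selenium provides `WebDriverWait` for polling until a condition holds; the sketch below shows the same idea with the standard library only, so it runs without a browser. `wait_until`, its parameters, and the fake button check are hypothetical names used for illustration.

```python
import time


def wait_until(condition, timeout=5.0, poll_interval=0.25):
    """Poll condition() until it returns a truthy value or timeout expires.

    Returns the truthy value, or None if the timeout is reached.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            return None
        time.sleep(poll_interval)


# Stand-in for a page whose button appears on the third check.
checks = {"count": 0}


def button_is_ready():
    checks["count"] += 1
    return "button" if checks["count"] >= 3 else None


found = wait_until(button_is_ready, timeout=2.0, poll_interval=0.01)
print(found, checks["count"])
```

With Selenium, the condition could be a lambda that calls `driver.find_elements(By.CLASS_NAME, ...)`, which returns an empty list until the element exists.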

Now, extracting detailed info from the listings is quite straightforward because we have everything we need. All we have to do is carefully inspect the webpage with Chrome's developer tools, write down the tag names and class names of the HTML elements we want, and pass them as rules to "extract_element_data". That gives us the data we want.

Running Multiple Things at Once

Getting info from all 15 search pages in one location is pretty quick. A single detailed page, however, takes about 5 to 6 seconds because we have to wait for the page to fully appear. Meanwhile, the CPU is only using about 3% to 8% of its power.

So, instead of going to 300 webpages one by one in a big loop, we can hand the webpage addresses to a pool of worker processes that scrape several pages at the same time. To find the best pool size, we have to try different options.

from multiprocessing import Pool

# The guard keeps worker processes from re-running this block on import
if __name__ == '__main__':
    with Pool(8) as pool:
        result = pool.map(scrape_detail_page, url_list)
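One way to find a good number of workers is to time the same batch with several worker counts. The sketch below swaps processes for threads (`concurrent.futures.ThreadPoolExecutor`), which is a different technique from the `multiprocessing.Pool` used above but suits work that mostly waits. `fake_scrape` is a stand-in that sleeps instead of loading a page, and the URLs are placeholders.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_scrape(url):
    """Stand-in for scrape_detail_page: waits briefly, returns a record."""
    time.sleep(0.05)  # simulates waiting for the page to load
    return {"url": url}


def timed_run(urls, workers):
    """Scrape all urls with the given number of threads; return (seconds, results)."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(fake_scrape, urls))  # map keeps input order
    return time.perf_counter() - start, results


url_list = [f"https://example.com/rooms/{i}" for i in range(16)]
for workers in (1, 4, 8):
    seconds, results = timed_run(url_list, workers)
    print(f"{workers} workers: {seconds:.2f}s for {len(results)} pages")
```

Real pages add browser start-up cost and memory pressure per worker, so the best setting on an actual run may differ from what a sleep-based stand-in suggests.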

The Outcome

After turning our tools into a neat little program and running it for a location, we obtained our initial dataset.

The challenging aspect of dealing with real-world data is that it's often imperfect. Some columns have no information, and many fields need cleaning and adjustments. Some details turned out to be not very useful, as they are either always empty or filled with the same values.

There's room for improving the script in some ways. We could experiment with different parallelization approaches to make it faster. Investigating how long it takes for the web pages to load can help reduce the number of empty columns.

To Sum It Up

We've mastered:

Scraping Airbnb listing data using Python and Beautiful Soup.

Handling dynamic pages using Selenium.

Running the script in parallel using multiprocessing.

Conclusion

Web scraping today offers user-friendly tools, which makes it easy to use. Whether you are a coding pro or a curious beginner, you can start scraping Airbnb listing data with confidence. And remember, it's not just about collecting data – it's also about understanding and using it.

The fundamental rules remain the same whether you're scraping Airbnb listing data or any other website. Start by determining the data you need. Then, select a tool to collect that data from the web. Finally, verify the data it retrieves. Using this info, you can make better decisions for your business and come up with better plans to sell things.

So, be ready to tap into the power of web scraping and elevate your sales game. Remember that there's a wealth of Airbnb data waiting for you to explore. Get started with an Airbnb scraper today, and you'll be amazed at the valuable data you can uncover. In the world of sales, knowledge truly is power.


Ways To Extract Airbnb Data

There are four ways to get Airbnb data:

Scraped Dataset

Ready-made scrapers

Web scraping API

Web Scraping Service


How To Scrape Hotel Data Of Location From Travel Booking App

In our contemporary digital age, the travel industry has undergone a profound transformation in which data heavily influences decision-making. With the rise of technology, travelers' expectations have evolved: they demand comprehensive, up-to-date information about their accommodation choices and transportation options. This article is a practical guide that walks you through the intricacies of scraping travel-industry data from many sources. By the end of this guide, you'll have a robust set of tools for deriving valuable insights, enabling you to make more informed and optimized decisions for your future travel experiences.

Before delving into the intricate technical aspects of this process, it's vital to establish a strong foundation by understanding the core principles of web scraping and data collection. Web scraping is extracting specific data from websites, typically accomplished by utilizing programming languages such as Python and employing libraries like Beautiful Soup and Requests. These libraries empower you to navigate web pages seamlessly, collecting structured data that can be further analyzed and utilized for your specific needs.

Step 1: Choose Your Data Sources:

Begin by meticulously identifying reliable data sources for your project, including hotel information, flight schedules, train routes, and bus timetables. Well-known websites such as Booking.com, Expedia, and Airbnb are excellent sources for detailed hotel information. Additionally, consider government websites and APIs for obtaining the necessary transportation datasets.

Step 2: Web Scraping for Hotel Data:

The core of your project involves web scraping for hotel data. It involves extracting details like hotel names, pricing, geographic locations, customer ratings, and reviews. The beauty of web scraping is that it allows you to tailor the code to your data requirements. Implement the automation using travel data scraping services to ensure your information remains up-to-date. In this regard, Python and libraries like Beautiful Soup prove highly effective for parsing HTML data.

Step 3: Connecting Transportation Data:

To build a comprehensive travel dataset, you must integrate data on flight schedules, train routes, and bus timetables. It entails collecting and consolidating information from diverse sources. Government websites and transport companies often provide APIs that simplify the process of retrieving schedules and route data. These APIs enable you to maintain accurate and real-time information.

Step 4: Data Storage and Analysis:

After successfully scraping and collecting the necessary data, your dataset's efficient organization and storage are pivotal. Databases like MySQL or PostgreSQL can be employed to manage your data efficiently. Furthermore, data analysis tools such as Pandas and Tableau become invaluable for deriving insights, creating visualizations, and drawing meaningful conclusions from your dataset.
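As a minimal sketch of the storage step, the example below uses SQLite from Python's standard library as a stand-in for MySQL or PostgreSQL. The table layout and the sample rows are invented for illustration.

```python
import sqlite3

# Invented sample rows: (name, city, price per night, rating).
hotels = [
    ("Hotel Alpha", "Lisbon", 120.0, 4.5),
    ("Hotel Beta", "Lisbon", 95.0, 4.1),
    ("Hotel Gamma", "Porto", 80.0, 4.7),
]

conn = sqlite3.connect(":memory:")  # in-memory database for the example
conn.execute(
    "CREATE TABLE hotels (name TEXT, city TEXT, price REAL, rating REAL)"
)
# Parameterized inserts avoid building SQL by string concatenation.
conn.executemany("INSERT INTO hotels VALUES (?, ?, ?, ?)", hotels)
conn.commit()

# Average price per city, cheapest city first.
rows = conn.execute(
    "SELECT city, AVG(price) FROM hotels GROUP BY city ORDER BY AVG(price)"
).fetchall()
conn.close()
print(rows)
```

The same statements carry over to a server database with only the connection line changed, which is one reason to keep the SQL parameterized from the start.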

Step 5: Uncovering Insights:

Your comprehensive dataset allows you to delve into a treasure trove of insights. Hotel data scraped by location from travel booking apps lets you identify ideal hotel locations based on transportation availability, craft cost-effective travel itineraries, and uncover trends in traveler preferences. Data-driven insights enable you to make informed decisions and enhance the quality of travel experiences.
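For instance, judging hotel locations by transportation availability can start with the distance from each hotel to the nearest station. The sketch below uses the haversine formula; the coordinates and station names are made up, and a mean Earth radius of 6371 km is assumed.

```python
from math import radians, sin, cos, asin, sqrt

EARTH_RADIUS_KM = 6371.0  # mean Earth radius (assumed)


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = radians(lat2 - lat1)
    dlam = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def nearest_station(hotel, stations):
    """Return (station name, distance in km) for the closest station."""
    return min(
        ((name, haversine_km(hotel[0], hotel[1], lat, lon))
         for name, (lat, lon) in stations.items()),
        key=lambda pair: pair[1],
    )


# Made-up coordinates for illustration.
stations = {"Central": (48.20, 16.37), "Airport": (48.11, 16.57)}
hotel = (48.21, 16.36)
name, km = nearest_station(hotel, stations)
print(name, round(km, 2))
```

Straight-line distance is only a first approximation; actual travel time depends on the street network and schedules.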

Ethical Considerations:

Web scraping is a powerful tool, but it comes with ethical responsibilities. Awareness of the legal and ethical implications surrounding web scraping is crucial. Continually review and adhere to the terms of use of the websites you're scraping. Avoid overloading their servers, respect privacy laws, and ensure your data scraping activities comply with local regulations.

Import Necessary Libraries

Import libraries such as Requests and Beautiful Soup for web scraping. Ensure you have them installed.

import requests

from bs4 import BeautifulSoup

Send an HTTP Request

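Send an HTTP request to the website you want to scrape, for example with requests.get(url), and store the result in a variable named response. The parsing step below reads response.text.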

Parse the Web Page

Parse the HTML content of the web page using Beautiful Soup.

soup = BeautifulSoup(response.text, "html.parser")

Locate and Extract Data

Locate the HTML elements that contain the data you need. Inspect the web page source code.

# Example: Extracting hotel names

hotel_names = [element.text for element in soup.find_all("h2", class_="sr-hotel__title")]

Data Storage

Store the extracted data in a suitable data structure for further processing. In this example, we'll use a list to store hotel names.

# Store the hotel names in a list

hotel_names = [element.text for element in soup.find_all("h2", class_="sr-hotel__title")]
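A list only lasts as long as the script runs. As a hedged sketch, the names could be written to a CSV file with the standard `csv` module. The file name `hotels.csv`, its location in the system temp directory, and the sample names are placeholders standing in for the scraped `hotel_names`.

```python
import csv
import os
import tempfile

# Placeholder values standing in for the scraped hotel_names list.
hotel_names = ["  Sea View Inn ", "City Lodge", "Alpine Rest"]

csv_path = os.path.join(tempfile.gettempdir(), "hotels.csv")

with open(csv_path, "w", newline="", encoding="utf-8") as f:
    writer = csv.writer(f)
    writer.writerow(["name"])            # header row
    for name in hotel_names:
        writer.writerow([name.strip()])  # trim whitespace left by the HTML

# Read the file back to confirm what was stored.
with open(csv_path, newline="", encoding="utf-8") as f:
    stored = [row["name"] for row in csv.DictReader(f)]
print(stored)
```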

Data Analysis

You can now perform data analysis on the collected hotel data as needed. It may include sorting, filtering, or calculating statistics.

# Example: Sort hotel names alphabetically

sorted_hotel_names = sorted(hotel_names)

This example is a simplified illustration of scraping hotel data. To scrape transportation data like connecting flights, buses, and trains, you need to access relevant APIs and use a similar process to request, parse, and extract data from those sources.

Significance of Scraping Hotel Data from Travel Booking Apps

Scraping hotel data and combining it with information about connecting flights, buses, and trains holds significant importance for several key stakeholders, as it provides valuable insights and benefits:

1. Travelers:

Informed Decision-Making: Travelers can make more informed decisions about their trips, choosing the most convenient and cost-effective accommodation and transportation options.

Tailored Travel Plans: With comprehensive data, travelers can create personalized itineraries that optimize their travel experience.

Cost Savings: Access to various options helps travelers find the best deals, saving money on accommodations and transportation.

2. Travel Agencies and Booking Platforms:

Enhanced Service: Travel agencies can provide better and more comprehensive services to their customers by offering a one-stop solution for accommodation and transportation.

Competitive Edge: Access to a wide range of data helps agencies stay competitive by offering unique travel packages and deals.

Improved Recommendations: Agencies can make more accurate and tailored recommendations based on their customers' preferences and needs.

3. Hospitality Industry:

Strategic Insights: Hotels can gain insights into customer preferences, competitors' pricing strategies, and occupancy rates. These insights support data-driven decisions that improve occupancy and revenue.

Targeted Marketing: With data on transportation options, hotels can target travelers arriving via specific modes of transportation with tailored marketing campaigns.

4. Transportation Companies:

Improved Scheduling: Transportation companies can optimize their schedules based on traveler demand, potentially increasing the efficiency of their operations.

Partnerships: Having data on hotels can facilitate partnerships with accommodation providers, offering bundled deals for travelers.

5. Data Analysts and Researchers:

Research Opportunities: The combined dataset can be a valuable resource for researchers studying travel patterns, consumer behavior, and market trends.

Predictive Modeling: The data can be used to build predictive models for demand forecasting, pricing optimization, and trend analysis.

6. Economic Impact:

Tourism Boost: The availability of comprehensive data can promote tourism by making travel planning more convenient and accessible, potentially boosting the economy of a region or country.

Conclusion: Scraping hotel data with connected flights, trains, and bus datasets presents a valuable opportunity to enhance travel planning and decision-making. By harnessing the combined power of web scraping and data analysis, you can create personalized and optimized travel experiences, manage your travel budget effectively, and uncover valuable insights in the ever-evolving travel industry. Staying updated with the latest travel information empowers you to make the most of your adventures using data-driven insights.

Know More: https://www.iwebdatascraping.com/scrape-hotel-data-of-location-from-travel-booking-app.php

#ScrapeHotelDataOfLocation#datascrapingintravelindustry#webscrapingforhoteldata#traveldatascrapingservices#ScrapingHotelDatafromTravelBookingApps#ExtractHotelDataOfLocation


Airbnb Hotel Pricing Data Scraping API: Revolutionizing the Travel and Hospitality Sector

'By leveraging Airbnb data scraping and the Hotel Pricing API, businesses can unlock unprecedented insights into Airbnb's pricing data.'

KNOW MORE: https://www.actowizsolutions.com/airbnb-hotel-pricing-data-scraping-api.php

#AirbnbHotelPricingDataScrapingAPI#ScrapeAirbnbHotelPricingData#AirbnbHotelPricingDataCollection#ExtractAirbnbHotelPricingData#ExtractingAirbnbHotelPricingData#AirbnbHotelPricingDataScraper


Vacation Rental Website Data Scraping | Scrape Vacation Rental Website Data

In the ever-evolving landscape of the vacation rental market, having access to real-time, accurate, and comprehensive data is crucial for businesses looking to gain a competitive edge. Whether you are a property manager, travel agency, or a startup in the hospitality industry, scraping data from vacation rental websites can provide you with invaluable insights. This blog delves into the concept of vacation rental website data scraping, its importance, and how it can be leveraged to enhance your business operations.

What is Vacation Rental Website Data Scraping?

Vacation rental website data scraping involves the automated extraction of data from vacation rental platforms such as Airbnb, Vrbo, Booking.com, and others. This data can include a wide range of information, such as property listings, pricing, availability, reviews, host details, and more. By using web scraping tools or services, businesses can collect this data on a large scale, allowing them to analyze trends, monitor competition, and make informed decisions.

Why is Data Scraping Important for the Vacation Rental Industry?

Competitive Pricing Analysis: One of the primary reasons businesses scrape vacation rental websites is to monitor pricing strategies used by competitors. By analyzing the pricing data of similar properties in the same location, you can adjust your rates to stay competitive or identify opportunities to increase your prices during peak seasons.

Market Trend Analysis: Data scraping allows you to track market trends over time. By analyzing historical data on bookings, occupancy rates, and customer preferences, you can identify emerging trends and adjust your business strategies accordingly. This insight can be particularly valuable for making decisions about property investments or marketing campaigns.

Inventory Management: For property managers and owners, understanding the supply side of the market is crucial. Scraping data on the number of available listings, their features, and their occupancy rates can help you optimize your inventory. For example, you can identify underperforming properties and take corrective actions such as renovations or targeted marketing.

Customer Sentiment Analysis: Reviews and ratings on vacation rental platforms provide a wealth of information about customer satisfaction. By scraping and analyzing this data, you can identify common pain points or areas where your service excels. This feedback can be used to improve your offerings and enhance the guest experience.

Lead Generation: For travel agencies or vacation rental startups, scraping contact details and other relevant information from vacation rental websites can help generate leads. This data can be used for targeted marketing campaigns, helping you reach potential customers who are already interested in vacation rentals.
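The competitive pricing analysis described above can be sketched in a few lines: given nightly rates scraped for comparable properties, compare your own rate with the median. All numbers and names here are invented for illustration.

```python
from statistics import median

# Invented nightly rates (USD) for comparable listings in one area.
competitor_rates = [110, 125, 98, 140, 120, 105]
own_rate = 135


def price_position(own, others):
    """Return the median of others and own's percentage gap to it."""
    mid = median(others)
    gap_pct = (own - mid) / mid * 100
    return mid, gap_pct


mid, gap_pct = price_position(own_rate, competitor_rates)
print(f"median {mid}, own rate is {gap_pct:+.1f}% vs median")
```

The median is used rather than the mean so that one unusually expensive listing does not distort the comparison.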

Ethical Considerations and Legal Implications

While data scraping offers numerous benefits, it’s important to be aware of the ethical and legal implications. Vacation rental websites often have terms of service that prohibit or restrict scraping activities. Violating these terms can lead to legal consequences, including lawsuits or being banned from the platform. To mitigate risks, it’s advisable to:

Seek Permission: Whenever possible, seek permission from the website owner before scraping data. Some platforms offer APIs that provide access to data in a more controlled and legal manner.

Respect Robots.txt: Many websites use a robots.txt file to communicate which parts of the site can be crawled by web scrapers. Ensure your scraping activities respect these guidelines.

Use Data Responsibly: Avoid using scraped data in ways that could harm the website or its users, such as spamming or creating fake listings. Responsible use of data helps maintain ethical standards and builds trust with your audience.
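Checking robots.txt can be automated with Python's standard `urllib.robotparser`. The sketch below parses rules from an in-memory list so it runs offline. The rules, the user agent `example-bot`, and the URLs are invented, and a real scraper would load the target site's own robots.txt.

```python
from urllib.robotparser import RobotFileParser

# Invented robots.txt content; a real scraper would download the site's own file.
robots_lines = [
    "User-agent: *",
    "Disallow: /account/",
    "Disallow: /api/",
]

parser = RobotFileParser()
parser.parse(robots_lines)  # parse rules from memory instead of fetching them


def allowed(url, user_agent="example-bot"):
    """Return True if the parsed rules permit user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)


print(allowed("https://example.com/rentals/lisbon"))
print(allowed("https://example.com/account/settings"))
```

Passing robots.txt does not replace reading the site's terms of service; it only covers the crawling rules the site publishes for bots.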

How to Get Started with Vacation Rental Data Scraping

If you’re new to data scraping, here’s a simple guide to get you started:

Choose a Scraping Tool: There are various scraping tools available, ranging from easy-to-use platforms like Octoparse and ParseHub to more advanced solutions like Scrapy and Beautiful Soup. Choose a tool that matches your technical expertise and requirements.

Identify the Data You Need: Before you start scraping, clearly define the data points you need. This could include property details, pricing, availability, reviews, etc. Having a clear plan will make your scraping efforts more efficient.

Start Small: Begin with a small-scale scrape to test your setup and ensure that you’re collecting the data you need. Once you’re confident, you can scale up your scraping efforts.

Analyze the Data: After collecting the data, use analytical tools like Excel, Google Sheets, or more advanced platforms like Tableau or Power BI to analyze and visualize the data. This will help you derive actionable insights.

Stay Updated: The vacation rental market is dynamic, with prices and availability changing frequently. Regularly updating your scraped data ensures that your insights remain relevant and actionable.

Conclusion

Vacation rental website data scraping is a powerful tool that can provide businesses with a wealth of information to drive growth and innovation. From competitive pricing analysis to customer sentiment insights, the applications are vast. However, it’s essential to approach data scraping ethically and legally to avoid potential pitfalls. By leveraging the right tools and strategies, you can unlock valuable insights that give your business a competitive edge in the ever-evolving vacation rental market.


Airbnb Data API | Scrape Airbnb Listings

Unlock valuable insights with the Airbnb Data API - Seamlessly scrape Airbnb listings and access comprehensive property data for smarter decisions and enhanced experiences.


Exploring the Use Cases and Applications of Python and React Native in Various Industries

Python and React Native are two popular programming languages and frameworks that are widely used in various industries. Python is a high-level, general-purpose programming language that is widely used for a variety of tasks, such as web development, data analysis, and machine learning. React Native is an open-source framework for building cross-platform mobile apps using JavaScript and React. In this article, we will explore the use cases and applications of Python and React Native in various industries and discuss the factors that should be considered when choosing which option to use for your next project.

Python in Web Development

Python is widely used in web development, thanks to its simplicity and ease of use. Python has a large number of web frameworks, such as Django and Flask, which make it easy to build and deploy web applications. Django is a high-level web framework that is designed for building complex, database-driven web apps, while Flask is a micro web framework that is designed for building simple, lightweight web apps. Python is also widely used in the development of web scraping and crawling applications, as well as in the implementation of web APIs. If you're looking for training in react native, then you can check out our react native course in Bangalore.

Python in Data Analysis and Machine Learning

Python is also widely used in data analysis and machine learning. Python has a large number of libraries and frameworks that can be used for data analysis, such as NumPy, pandas, and Matplotlib. NumPy is a library for working with arrays and matrices, pandas is a library for working with dataframes, and Matplotlib is a library for creating plots and visualizations. Python also has a large number of libraries and frameworks for machine learning, such as scikit-learn, TensorFlow, and Keras. These libraries make it easy to implement and train machine learning models, and are widely used in industries such as finance, healthcare, and e-commerce.

React Native in Mobile App Development

React Native is widely used in mobile app development, as it allows developers to build cross-platform mobile apps using JavaScript and React. React Native uses native components, which means that the UI of the app is rendered using the native UI components of the platform that the app is running on. This helps to ensure that the app performs well on both iOS and Android. React Native is used by companies such as Facebook, Instagram, and Airbnb to build their mobile apps, and is also widely used in industries such as e-commerce, finance, and healthcare. If you're looking for training in React Native, then you can check out our React Native course in Bangalore.

Conclusion

Python and React Native are both powerful and versatile programming languages and frameworks that are widely used in various industries. Python is a popular choice for web development, data analysis, and machine learning, while React Native is a popular choice for mobile app development. Near Learn, a technology courses institute in Bangalore, offers training on both Python and React Native, which can help developers understand the benefits and limitations of each option and make an informed decision when choosing a technology for their next project. It's important to consider the specific requirements of your project and weigh the pros and cons before making a final decision.


Choosing Between APIs vs Web Scraping Travel Data Tools

Introduction

The APIs vs Web Scraping Travel Data landscape presents critical decisions for businesses seeking comprehensive travel intelligence. This comprehensive analysis examines the strategic considerations between traditional API integrations and advanced web scraping methodologies for travel data acquisition. Based on extensive research across diverse travel platforms and data collection scenarios, this report provides actionable insights for organizations evaluating Scalable Travel Data Extraction solutions. The objective is to guide travel companies, OTAs, and data-driven businesses toward optimal data collection strategies that balance performance, cost-effectiveness, and operational flexibility. Modern travel businesses require sophisticated approaches to data acquisition, with both Web Scraping vs API Data Collection methodologies offering distinct advantages depending on specific use cases.

Shifting Paradigms in Travel Data Collection Methods

The landscape of travel data collection has evolved significantly with the emergence of sophisticated API vs Scraper for OTA Data solutions. The primary drivers shaping this evolution include the increasing demand for real-time information, the growing complexity of travel platforms, and the need for scalable data infrastructure that can handle massive volumes of travel-related content.

Travel Data Scraping Services have become increasingly sophisticated, allowing businesses to extract comprehensive datasets from multiple sources simultaneously. These services provide granular access to pricing information, availability data, customer reviews, and promotional content across various travel platforms.

Meanwhile, API-based solutions offer structured access to travel data through official channels, providing more reliable and consistent data streams. Integrating machine learning and artificial intelligence has enhanced both approaches, enabling more intelligent data extraction and processing capabilities that support Scalable Travel Data Extraction requirements.

Methodology and Scope of Data Analysis

The data for this report was collected through comprehensive testing of API and web scraping approaches across 100+ travel platforms worldwide. By systematically evaluating Data Scraping vs API Travel Sites, we analyzed performance metrics, cost structures, implementation complexity, and data quality across major booking platforms, including Booking.com, Expedia, and Airbnb, as well as specialized travel aggregators.

We evaluated both methods across key parameters—data freshness, extraction speed, scalability, and maintenance. Our Custom Travel Data Solutions framework also considered data accuracy, update frequency, cost-efficiency, and technical complexity to ensure a well-rounded comparison.

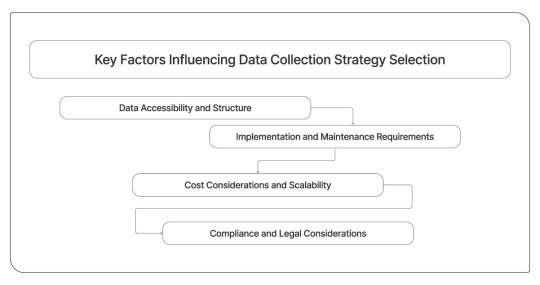

Key Factors Influencing Data Collection Strategy Selection

Understanding these factors is essential for making informed decisions about Real-Time Travel Data Collection strategies.

Data Accessibility and Structure

Implementation and Maintenance Requirements

Cost Considerations and Scalability

Compliance and Legal Considerations

Table 1: Performance Comparison - APIs vs Web Scraping Methods

| Metric | API Approach | Web Scraping Approach | Hybrid Solution |
| --- | --- | --- | --- |
| Data Accuracy Rate | 98.5% | 92.3% | 96.8% |
| Average Response Time (ms) | 250 | 1,200 | 450 |
| Implementation Time (hours) | 40 | 120 | 80 |
| Monthly Maintenance (hours) | 5 | 25 | 15 |
| Data Coverage Completeness | 75% | 95% | 90% |
| Cost per 1M Requests ($) | 150 | 50 | 100 |

Description

This analysis highlights the trade-offs in data collection: APIs deliver speed and reliability, while web scraping ensures broader coverage at lower upfront costs. For effective implementation of Custom Travel Data Solutions, it’s essential to weigh these factors. APIs suit high-accuracy, time-sensitive needs while scraping offers scalable data access with more maintenance. Hybrid models blend both for robust, enterprise-ready performance.
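To see how the request cost and maintenance figures in Table 1 interact, the sketch below adds them up for one month. The cost and maintenance numbers come from the table; the workload of 2 million requests and the $60 hourly rate are assumptions chosen only to make the arithmetic concrete.

```python
# Per-approach figures taken from Table 1.
APPROACHES = {
    "api": {"cost_per_million": 150, "maintenance_hours": 5},
    "scraping": {"cost_per_million": 50, "maintenance_hours": 25},
    "hybrid": {"cost_per_million": 100, "maintenance_hours": 15},
}


def monthly_cost(approach, requests_per_month, hourly_rate):
    """Request fees plus maintenance labour for one month, in dollars."""
    figures = APPROACHES[approach]
    request_fees = requests_per_month / 1_000_000 * figures["cost_per_million"]
    labour = figures["maintenance_hours"] * hourly_rate
    return request_fees + labour


# Assumed workload: 2M requests per month, engineering time at $60/hour.
for name in APPROACHES:
    print(name, monthly_cost(name, 2_000_000, 60))
```

Under these assumptions the lower per-request price of scraping is outweighed by its maintenance hours; at much higher request volumes or lower labour rates the ordering can flip.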

Challenges and Opportunities in Travel Data Collection

Modern travel businesses face complex decisions when implementing data collection strategies. While APIs provide stability and official support, they may limit access to comprehensive competitive intelligence and real-time market insights that drive strategic decisions.

Rate limiting represents a significant challenge for API-based approaches, particularly for businesses requiring high-volume data collection for Travel Review Data Intelligence and market analysis. Additionally, API availability varies significantly across platforms, with some major travel sites offering limited or no official API access.
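A common way to live with rate limits is to retry with exponentially growing pauses. The sketch below is a generic illustration, not any particular travel API's client: `RateLimited`, `call_with_backoff`, and the fake request are hypothetical names, and the sleep function is injectable so the demo runs instantly.

```python
import time


class RateLimited(Exception):
    """Hypothetical error raised when an API answers 'too many requests'."""


def call_with_backoff(request, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call request(); on RateLimited, wait base_delay * 2**attempt and retry."""
    for attempt in range(max_attempts):
        try:
            return request()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller handle it
            sleep(base_delay * 2 ** attempt)


# Demo with a fake request that is rate limited twice, then succeeds.
attempts = {"n": 0}


def fake_request():
    attempts["n"] += 1
    if attempts["n"] <= 2:
        raise RateLimited()
    return {"status": "ok"}


delays = []
result = call_with_backoff(fake_request, sleep=delays.append)
print(result, delays)
```

In the demo, the recorded delays double after each rate-limited attempt, which spaces out retries instead of hammering the service.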

Web scraping presents technical challenges, including anti-bot measures, dynamic content loading, and frequent website modifications that require ongoing technical maintenance. However, it offers unparalleled flexibility for accessing comprehensive datasets and competitive intelligence.

Growing demand for adaptive, intelligent data collection is reshaping the market. Businesses leveraging advanced strategies like Travel Data Intelligence gain faster insights and a sharper edge by staying ahead of shifting trends.

Table 2: Regional Data Collection Trends and Projections

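| Region | API Adoption | Scraping Usage Rate | Hybrid Implementation | Growth Projection |
| --- | --- | --- | --- | --- |
| North America | 65% | 85% | 45% | 22% |
| Europe | 70% | 80% | 50% | 28% |
| Asia Pacific | 55% | 90% | 35% | 35% |
| Latin America | 45% | 75% | 25% | 18% |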

Description

Regional trends show varied adoption of the Travel Scraping API, driven by local regulations and tech infrastructure. Asia-Pacific leads in growth potential, North America shows strong API usage, and Europe excels in hybrid strategies, balancing compliance with deep market insights. Overall, the demand for advanced data collection is rising globally.

Future Directions in Travel Data Collection

The future of data collection lies in smarter systems that elevate API and web scraping efficiency. By embedding advanced machine learning into extraction workflows, businesses can boost accuracy and reduce upkeep, benefiting platforms like Travel Scraping API and beyond.

The emergence of standardized travel data formats and industry-wide API initiatives suggests a future where structured data access becomes more universally available. However, the continued importance of comprehensive competitive intelligence ensures that web scraping will remain a critical component of enterprise data strategies.

Cloud-based data collection tools level the playing field, empowering small businesses to adopt enterprise-grade solutions without needing heavy infrastructure. This shift enhances capabilities like Travel Review Data Intelligence and broadens access to scalable data extraction.

Conclusion

The decision between APIs vs Web Scraping Travel Data depends on specific business requirements, technical capabilities, and strategic objectives. This report, through a comprehensive analysis of both approaches, demonstrates that neither methodology provides a universal solution for all travel data collection needs.

Travel Aggregators and major booking platforms continue to evolve their data access policies, creating new opportunities and challenges for data collection strategies. The most successful implementations often combine both approaches, leveraging APIs for core operational data while utilizing Travel Industry Web Scraping for comprehensive market intelligence.

Contact Travel Scrape today to discover how our advanced data collection solutions can transform your travel business intelligence and drive competitive advantage in the dynamic travel industry.

Read More :- https://www.travelscrape.com/apis-vs-web-scraping-travel-data.php

#APIsVsWebScrapingTravelData#ScalableApproachToDataCollection#TravelDataScrapingServices#CustomTravelDataSolutions#TravelDataIntelligence#TravelScrapingAPI#TravelReviewDataIntelligence#TravelAggregators#TravelIndustryWebScraping


Scrape Airbnb Reviews Data using Our Airbnb Reviews API

Get data scraped in standard JSON format for Airbnb reviews, without any maintenance, technical overhead, or CAPTCHAs.

Our online Airbnb Review Aggregator helps you extract everything you need so that you can focus on providing value to your customers. We've built our Airbnb Review Scraper API around customers' needs, with no contracts, no setup costs, and no upfront fees. Customers pay according to their requirements. Our Airbnb Review Scraper API helps you scrape review and rating data from the Airbnb website accurately and effortlessly.

With our Airbnb Reviews API, you can effortlessly extract review data, which helps you understand customer sentiment and build better selling strategies. A Review Scraper API makes sure that you only get unique review data. You can also extract review response data using this API. You will get clean scraped data without worrying about changing sites or data formats. You will also get verified and updated reviews.

Why use Review Scraper API?

A Review Scraper API ensures that you get only unique review data. You can also scrape review responses with this API. You get clean data regardless of changes to sites and data formats, along with verified and updated reviews.

Traditional JSON Format

Through 50+ review websites.

Superior Duplicate Recognition

We ensure that you only get unique reviews

Review Responses

Optionally gather review responses

Clear Data

No worries about changing sites, date formats, and data.

Review Metadata

Identify updated and verified reviews, each with its URL

Persistent Improvements

24x7 maintenance to ensure that the API always gets better
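
To make the duplicate-recognition idea concrete, here is a minimal sketch of how reviews delivered as JSON could be de-duplicated on the client side. It is not the API's actual method: the field names `author`, `text`, and `rating` are assumptions for illustration, and the fingerprinting rule (author plus normalized text) is just one plausible choice.

```python
import hashlib
import json
import re
from typing import Any, Dict, Iterable, List

Review = Dict[str, Any]


def review_key(review: Review) -> str:
    """Fingerprint a review by author plus case- and whitespace-normalized text."""
    text = re.sub(r"\s+", " ", str(review.get("text", ""))).strip().lower()
    author = str(review.get("author", "")).strip().lower()
    return hashlib.sha256(f"{author}|{text}".encode("utf-8")).hexdigest()


def unique_reviews(reviews: Iterable[Review]) -> List[Review]:
    """Keep the first occurrence of each review, preserving input order."""
    seen = set()
    result = []
    for review in reviews:
        key = review_key(review)
        if key not in seen:
            seen.add(key)
            result.append(review)
    return result


# Hypothetical payload; the field names are assumed, not taken from the API.
sample = json.loads("""[
  {"author": "Ana", "text": "Great  stay!", "rating": 5},
  {"author": "ana", "text": "great stay!", "rating": 5},
  {"author": "Ben", "text": "Noisy street.", "rating": 3}
]""")

for review in unique_reviews(sample):
    print(review["author"], review["rating"])
```

Hashing a normalized form catches reposts that differ only in casing or spacing; near-duplicates with reworded text would need fuzzy matching instead.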


How to Extract Travel Trends Using a Web Scraping API

The internet now plays an important role in meeting people's needs. Tourists can talk directly with a service provider and get involved in every service, which results in a better plan that covers criteria such as competitive prices and unexplored locations. As a result, you can plan a tour yourself. Travel agencies fetch the data and pass it to the service provider, which customizes the plan based on the requirements.

Web data scraping plays a major role in building a better tourism industry. With the development of travel web scraping APIs, it is possible to extract location information from Google, flight information from airline carriers, accommodation data from Airbnb, and ride-hailing data from applications like Uber, and to develop an application that fulfills all of a client's travel requirements, from booking a ticket to reaching the destination. This is where travel booking API integration is valuable to a firm.

Data scraping lets you understand competitors' strategies: you can keep a record of trending deals, offers, and market presence, and then adjust your own business plans accordingly.

In this blog, we look at how travel APIs work and why it is worth integrating a travel extraction API into your travel application. We also cover some efficient APIs that fetch data from various websites.

Effects of Travel APIs on Industry

With the advancement and acceptance of API automation, the hospitality sector has grown enormously. Changes in application development now make it possible to integrate every aspect of the business behind a single application interface. Travel data API integration has given travel firms much broader access to owners and clients. Purchases of air tickets, hotel bookings, forex, visa processing, and passport assistance continue to rise. Even individual travelers can now access all of these functions from a single application.

Because of the coronavirus pandemic, people tend to be more cautious when travelling, so they prefer trips built around experiences. Travel APIs make this possible by providing immersive experiences for users, relying on travel data available on the internet.

What Are the Categories of Travel Data Extraction APIs?

There are several categories of travel APIs that reflect the latest travel trends, and together they provide easy access to every area of the travel industry.

Integrating transportation APIs with a travel business: These APIs allow developers to collect transportation data, including flight routes, ticket rates from airline websites, and car rental services. You can also combine your transportation offering with buses, taxis, and trams using data from smart city APIs, taxi APIs such as Uber and Lyft, and services like Google Maps Directions that can be integrated into your software.

Types of transport APIs are:

Flight APIs

APIs for car rental

Rail APIs

API for smart city
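
As a rough illustration of merging transportation data from different kinds of APIs, the sketch below maps records from two feeds onto one common shape and sorts them by price. Everything here is assumed for the example: the feed field names (`from`, `to`, `fare`, `departure_station`, and so on) are hypothetical and do not come from any real provider.

```python
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass(frozen=True)
class TransportOption:
    mode: str
    origin: str
    destination: str
    price: float


def from_flight(row: Dict[str, Any]) -> TransportOption:
    # Hypothetical flight feed fields: "from", "to", "fare".
    return TransportOption("flight", str(row["from"]), str(row["to"]),
                           float(row["fare"]))


def from_rail(row: Dict[str, Any]) -> TransportOption:
    # Hypothetical rail feed fields: "departure_station",
    # "arrival_station", "ticket_price".
    return TransportOption("rail", str(row["departure_station"]),
                           str(row["arrival_station"]),
                           float(row["ticket_price"]))


def merge_options(flights: List[Dict[str, Any]],
                  trains: List[Dict[str, Any]]) -> List[TransportOption]:
    """Normalize both feeds to one schema and sort cheapest first."""
    options = [from_flight(r) for r in flights] + [from_rail(r) for r in trains]
    return sorted(options, key=lambda option: option.price)


# Made-up sample rows for demonstration only.
flights = [{"from": "Paris", "to": "Lyon", "fare": "89.00"}]
trains = [{"departure_station": "Paris", "arrival_station": "Lyon",
           "ticket_price": 45.5}]

for option in merge_options(flights, trains):
    print(option.mode, option.origin, option.destination, option.price)
```

Normalizing each feed at the boundary means the rest of the application only ever deals with one record type, however many transport APIs are added later.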

What Data Can You Extract?

Hotel APIs integrated with a travel scraping API: This category of APIs displays data from listed providers in your application interface. If you want to offer hotel room bookings, try a hotel integration API. You can also use APIs from online travel portals such as Expedia or TripAdvisor. Depending on your application, you can select any class of API to add booking functionality and sell accommodations to tourists.

Location data and traffic APIs: This type of API works well if your firm is building a website for finding points of interest in a popular tourist destination, or a navigation application that helps end users explore a city. By combining traffic APIs with location data, you can add location features to your website through geocoding and platforms such as Google Maps and Mapbox.
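
Once places have been geocoded to latitude and longitude, finding points of interest near a traveler is a matter of distance filtering. The sketch below uses the standard haversine great-circle formula; the function names and the sample coordinates (approximate values for three places around Paris) are illustrative assumptions, and a real application would obtain coordinates from a geocoding service.

```python
import math
from typing import List, Tuple

EARTH_RADIUS_KM = 6371.0  # mean Earth radius

Place = Tuple[str, float, float]  # (name, latitude, longitude)


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometres between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearby(places: List[Place], lat: float, lon: float,
           radius_km: float) -> List[str]:
    """Names of places within radius_km of (lat, lon), nearest first."""
    with_distance = [(haversine_km(lat, lon, p_lat, p_lon), name)
                     for name, p_lat, p_lon in places]
    return [name for dist, name in sorted(with_distance) if dist <= radius_km]


# Approximate coordinates, for illustration only.
places = [
    ("Eiffel Tower", 48.8584, 2.2945),
    ("Louvre", 48.8606, 2.3376),
    ("Versailles", 48.8049, 2.1204),
]

# Points of interest within 5 km of central Paris.
print(nearby(places, 48.8566, 2.3522, 5.0))
```

Straight-line distance is only a first filter; travel time along real roads would come from a directions or traffic API.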

Integrating tour and flight excursion APIs with a travel API: Various websites analyze travel data and famous destinations worldwide through a travel application interface with ticket-purchasing capability.

Business travel APIs: If you are developing a B2B travel portal, APIs like SAP can give travel administrators a view of how employees accumulate costs on Uber rides.

Why Should You Integrate Travel APIs into Your Application?

Reduced Time to Market

Integrating travel APIs into your application reduces development time. Instead of building standard integrations and implementing each function bit by bit, developers can build on APIs and concentrate on the features exclusive to the application.

Decrease in Cost

If an application takes less time to develop, it requires fewer resources. APIs deliver ready-to-use data, which reduces maintenance costs. Developers can build the website's unique features and rely on APIs for everything else.

Accuracy in Data

In the travel world, where inaccurate information can have serious consequences, it is important to confirm the precision of the data you provide. Using APIs means fetching data directly from the source application, which removes the chance of human error in entering the data.

Superior Offerings

With the growing number of web scraping travel APIs, adding functions to your portal is simply a matter of choosing the right API and integrating it. Travelers these days expect to plan an entire tour from a single website. Providing such facilities is essential to stay competitive in the travel market.

Sometimes, data on accommodations and destinations is not all you need. Users may ask for unique data, such as where to get the best pizza in the city or which bakeries in town are famous. For this, you need travel data scraper APIs that can extract data from any website and deliver it to the application. This is what you get at X-byte Enterprise Crawling.

We develop a publicly available API, built on web scraping software, that helps you access all the data you need. Integrating our travel API with that data will make your software more robust. You can also use our module, which helps you fetch information from any source, from websites to social media.

Final words

This business has reportedly brought large profits to the travel industry. It can deliver the desired value for migration costs, a greater social media presence, lower costs, and more jobs.

The travel world today is a huge system of services connected by travel web scraping APIs, which draw unique features from various applications and make travel smoother and hassle-free. You can find experts in travel web data scraping APIs at X-byte Enterprise Crawling, who can fetch the information you need and deliver it as per your requirements.

Reach out to us with your queries. We will be happy to answer them!

Visit: https://www.xbyte.io/web-scraping-api.php

#web scraping API#web scraping API services#travel data web scraping#web crawling API#Best web scraping API#Travel data scraping


Parcel 1.10, TypeScript 3.1, and lots of handy JS snippets

#405 — September 28, 2018

JavaScript Weekly

30 Seconds of Code: A Curated Collection of Useful JavaScript Snippets — We first linked this project last year, but it’s just had a ‘1.1’ release where lots of the snippets have been updated and improved, so if you want to do lots of interesting things with arrays, math, strings, and more, check it out.

30 Seconds

Mastering Modular JavaScript — Nicolas has been working on this book about writing robust, well-tested, modular JavaScript code for some time now, and it’s finally been published as a book. You can read it online for free too, or even direct from the book’s git repo.

Nicolas Bevacqua

Burn Your Logs — Use Sentry's open source error tracking to get to the root cause of issues. Setup only takes 5 minutes.

Sentry sponsor

Parcel 1.10.0 Released: Babel 7, Flow, Elm, and More — Parcel is a really compelling zero configuration bundler and this release brings Babel 7, Flow and Elm support. GitHub repo.

Devon Govett

TypeScript 3.1 Released — TypeScript brings static type-checking to the modern JavaScript party, and this latest release adds mappable tuple and array types, easier properties on function declarations, and more. Want to see what’s coming up in 3.2 and beyond? Here’s the TypeScript roadmap.

Microsoft

💻 Jobs

Mid-Level Front End Engineer @ HITRECORD (Full Time, Los Angeles) — Our small dynamic team is looking for an experienced frontend developer to help build and iterate features for an open online community for creative collaboration.

Hitrecord

Try Vettery — Create a profile to connect with inspiring companies seeking JavaScript devs.

Vettery

📘 Tutorials and Opinions

Creating Flocking Behavior with Virtual Birds — A gentle and effective walkthrough of creating and animating flocks of virtual birds.

Drew Cutchins

Rethinking JavaScript Test Coverage — The latest version of V8 offers a native code coverage reporting feature and here’s how it works with Node.

Benjamin Coe (npm, Inc.)

Getting Started with the Node-Influx Client Library — The node-influx client library features a simple API for most InfluxDB operations and is fully supported in Node and the browser, all without needing any extra dependencies.

InfluxData sponsor

How Dropbox Migrated from Underscore to Lodash

Dropbox

Create a CMS-Powered Blog with Vue.js and ButterCMS

Jake Lumetta, et al.

Understanding Type-Checking and 'typeof' in JavaScript

Glad Chinda

Airbnb's Extensive JavaScript Style Guide — Airbnb’s extremely popular guide continues to get frequent updates.

Airbnb

Webinar: Getting the Most Out of MongoDB on AWS

mongodb sponsor

16 JavaScript Podcasts to Listen To in 2018 — Podcasts, like blogs, have a way of coming and going, but these are all ready to listen to now.

François Lanthier Nadeau podcast

Five Tips to Write Better Conditionals in JavaScript

Jecelyn Yeen

🔧 Code and Tools

Tabulator: A Fully Featured, Interactive Table JavaScript Library — Create interactive data tables quickly from any HTML table or JavaScript or JSON data source.

Oli Folkerd

Vandelay: Automatically Generate Import Statements in VS Code

Visual Studio Marketplace

APIs and Infrastructure for Next-Gen JavaScript Apps — Build and scale interactive, immersive apps with PubNub - chat, collaboration, geolocation, device control and gaming.

PubNub sponsor

Apify SDK: Scalable Web Crawling and Scraping from Node — Manage and scale a pool of headless Chrome instances for crawling sites.

Apify

Cloudflare Adds a Fast Distributed Key-Value Store to Its Serverless JavaScript Platform

Stephen Pinkerton and Zack Bloom (Cloudflare)

turtleDB: For Building Offline-First, Collaborative Web Apps — It uses the in-browser IndexedDB database client-side but can then use MongoDB as a back-end store for bi-directional sync.

turtle DB

An Example of a Dynamic Input Placeholder — This is a really neat effect.

Joe B. Lewis

via JavaScript Weekly https://ift.tt/2DFnnvO
