#Security orchestration automation and response


Security Orchestration Automation and Response (SOAR) Market to Witness Steady Growth with a CAGR of 10.9% by 2032

The global Security Orchestration Automation and Response (SOAR) market is projected to grow from an estimated US$ 1.3 billion in 2022 to US$ 3.8 billion by 2032, a compound annual growth rate (CAGR) of 10.9%.
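As a quick check on how these figures relate, the growth rate can be recomputed from the start and end values. The sketch below assumes a ten-year span (2022 to 2032); the function names are illustrative and do not come from the report. With the rounded endpoints quoted above, the implied rate comes out slightly above 10.9%, which may simply reflect rounding or a different base year in the study.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10%)."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the text (US$ billion); the 10-year span is an assumption.
implied_rate = cagr(1.3, 3.8, 10)
projected_2032 = project(1.3, 0.109, 10)

print(f"Implied CAGR from the endpoints: {implied_rate:.1%}")
print(f"2032 value at a 10.9% CAGR: US$ {projected_2032:.2f} billion")
```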

Over the forecast period, the SOAR market is expected to expand strongly because of the growth of technologies such as blockchain, cloud, and IoT. Several companies place a high priority on security and safety, which is expected to influence future developments in the SOAR sector.

However, a shortage of skilled security personnel may restrain the market over the forecast period.

Future Market Insights presents these findings in its latest market study, ‘Security Orchestration Automation and Response (SOAR) Market’. Its team of analysts and consultants uses a bottom-up approach across primary, secondary, and tertiary research.

Get a Sample of this Report: https://www.futuremarketinsights.com/reports/sample/rep-gb-14733

“With large volumes of IT application and cloud data being analysed for gauging sophisticated responses to the threats detected, the global SOAR market is bound to grow on an explicable note in the forecast period”, says an analyst from Future Market Insights.

Key Takeaways from SOAR market

North America holds the largest market share. This is attributed to substantial investment by the US in defending against cyber-attacks.

Europe holds the second-largest market share, with Germany and the UK leading the way. This is attributed to regulations imposed by governments in the region.

Asia-Pacific is expected to grow at the fastest rate in the SOAR market, led by China.

Competitive Analysis

In May 2021, XM Cyber announced an integration with Palo Alto Networks' Cortex XSOAR security orchestration, automation, and response technology.

In May 2021, Securonix announced that its next-gen SIEM platform won the Best SIEM Solution award. The company was named a Trust Award winner for its cloud-first SIEM for multi-cloud and hybrid businesses.

In May 2022, Bugcrowd entered into a collaboration with IBM to bring the SOAR space and an IBM Resilient integration onto the same platform. IBM Resilient is a SOAR platform used by security teams.

In May 2022, Palo Alto Networks announced a collaboration with Cohesity to integrate its SOAR platform with Cohesity's AI data management platform, with the aim of lowering customers' ransomware risk.

In September 2020, ThreatConnect completed its acquisition of Nehemiah Security, with the aim of adding Cyber Risk Quantification to its existing SOAR platform.

In March 2022, Splunk partnered with Ridge Security to shorten reaction times.

Ask an Analyst: https://www.futuremarketinsights.com/ask-the-analyst/rep-gb-14733

What does the Report state?

The research study segments the market by component (solution and services), organization size (small & medium enterprises, large enterprises), deployment mode (cloud and on-premises), application (threat intelligence, network forensics, incident management, compliance management, workflow management, and others), and vertical (BFSI, government, energy & utilities, healthcare, retail, IT & telecom, and others).

With product management, supply chain, sales, marketing, and various other activities being digitized, the global SOAR market is expected to grow steadily in the forecast period.

Key Segments

By Component:

Solution

Services

By Organisation Size:

Small & Medium Enterprises

Large Enterprises

By Deployment Mode:

Cloud

On-Premises

By Application:

Threat Intelligence

Network Forensics

Incident Management

Compliance Management

Workflow Management

Others

By Vertical:

BFSI

Government

Energy & Utilities

Healthcare

Retail

IT & Telecom

Others

By Region:

North America

Latin America

Europe

Asia Pacific

Middle East and Africa (MEA)


The growing number of false security alerts is expected to create opportunities for the Security Orchestration Automation and Response (SOAR) market...


If you are looking for a way to streamline your IT operations, vRealize Automation (vRA) is a powerful tool that can help you achieve a number of benefits.⭐

vRA can help organizations improve the agility, efficiency, and security of their IT infrastructure, so it is a good option to consider.💥

Here are some specific examples of how vRA can be used to streamline IT operations:

Provisioning virtual machines: vRA can be used to automate the provisioning of virtual machines, which can save IT administrators a significant amount of time. For example, vRA can be used to create a template for a virtual machine that includes all of the necessary software and configurations. Once the template is created, vRA can be used to provision new virtual machines from the template with just a few clicks.♦️

Deploying applications: vRA can be used to automate the deployment of applications. For example, vRA can be used to create a workflow that includes the steps necessary to deploy an application, such as creating a virtual machine, installing the application software, and configuring the application. ♦️ Once the workflow is created, vRA can be used to deploy the application with just a few clicks. It helps organizations reduce costs, improve service delivery, and ensure compliance and security.
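The workflow idea described above can be sketched in a few lines of plain Python. This is only an illustration of chaining deployment steps in order; it does not use or imitate the vRA API, and every function name, field, and value below is a hypothetical placeholder.

```python
from typing import Callable, Dict, List

# Each step receives the deployment context so far and returns an updated copy.
Context = Dict[str, str]
Step = Callable[[Context], Context]


def create_vm(ctx: Context) -> Context:
    # Placeholder for "provision a virtual machine from a template".
    return {**ctx, "vm": f"vm-for-{ctx['app']}"}


def install_software(ctx: Context) -> Context:
    # Placeholder for "install the application software on the VM".
    return {**ctx, "installed": ctx["app"]}


def configure_app(ctx: Context) -> Context:
    # Placeholder for "apply the application configuration".
    return {**ctx, "configured": "yes"}


def run_workflow(steps: List[Step], ctx: Context) -> Context:
    """Run the steps in order, passing each step's result to the next."""
    for step in steps:
        ctx = step(ctx)
        print(f"finished {step.__name__}")
    return ctx


deploy_steps = [create_vm, install_software, configure_app]
result = run_workflow(deploy_steps, {"app": "web-shop"})
print(result)
```

Defining the workflow once as an ordered list of steps is what makes a repeatable, few-click deployment possible: the same list can be run again for every new environment.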

If you are interested in learning more about streamlining your IT operations with vRealize Automation, please visit our website.🌍 Here are many resources…

#vrealize automation#automation#vrealize operations#vrealize automation 7#vrealize operations cloud#vrealize#vmware automation#security automation orchestration and response#infrastructure automation#it automation#security operations center#operations management#streamlined#vcenter cloud operations#devops explained with example#vrealizeautomation#cloudautomation


Google Cloud’s BigQuery Autonomous Data To AI Platform

BigQuery automates data analysis, transformation, and insight generation using AI. AI and natural language interaction simplify difficult operations.

In a fast-paced world, organisations need ready access to data and a real-time data activation flywheel. Artificial intelligence that is built directly into the data environment and works with intelligent agents is emerging. These catalysts open doors and enable self-directed, rapid action, which is vital for success. This flywheel uses Google's Data & AI Cloud to activate data in real time. Because of this emphasis, BigQuery has five times as many customer organisations as the two leading cloud providers that offer only data science and data warehousing solutions.

Examples of top companies:

With BigQuery, Radisson Hotel Group enhanced campaign productivity by 50% and revenue by over 20% by fine-tuning the Gemini model.

By connecting over 170 data sources with BigQuery, Gordon Food Service established a scalable, modern, AI-ready data architecture. This improved real-time response to critical business demands, enabled complete analytics, boosted client usage of their ordering systems, and offered staff rapid insights while cutting costs and boosting market share.

J.B. Hunt is revolutionising logistics for shippers and carriers by integrating Databricks into BigQuery.

General Mills saves over $100 million using BigQuery and Vertex AI to give workers secure access to LLMs for structured and unstructured data searches.

Google Cloud is unveiling many new features with its autonomous data to AI platform powered by BigQuery and Looker, a unified, trustworthy, and conversational BI platform:

New assistive and agentic experiences based on your trusted data and available through BigQuery and Looker will make data scientists, data engineers, analysts, and business users' jobs simpler and faster.

Advanced analytics and data science acceleration: Along with seamless integration with real-time and open-source technologies, BigQuery AI-assisted notebooks improve data science workflows and BigQuery AI Query Engine provides fresh insights.

Autonomous data foundation: BigQuery can collect, manage, and orchestrate any data with its new autonomous features, which include native support for unstructured data processing and open data formats like Iceberg.

Let's look at each change in detail.

User-specific agents

Google Cloud believes AI should be available to everyone. AI-powered assistive experiences in BigQuery and Looker are already generally available, and Google Cloud now offers specialised agents for all data tasks, such as:

Data engineering agents integrated with BigQuery pipelines help create data pipelines, transform and enrich data, detect anomalies, and automate metadata generation. Data engineers traditionally spend hours cleaning, processing, and validating data; these agents take over such time-consuming, repetitive tasks and deliver trustworthy data, improving data team productivity.

The data science agent in Google's Colab notebook enables model development at every step. Scalable training, intelligent model selection, automated feature engineering, and faster iteration are possible. This agent lets data science teams focus on complex methods rather than data and infrastructure.

Looker conversational analytics lets everyone work with data in natural language. Expanded capabilities developed with DeepMind perform advanced analysis and explain their reasoning, so all users can understand the agent's actions and easily resolve ambiguities. Looker's semantic layer boosts accuracy by two-thirds. The agent understands business language like “revenue” and “segments” and can compute metrics in real time, ensuring trustworthy, accurate, and relevant results. An API for conversational analytics is also being introduced to help developers integrate it into processes and apps.

In the BigQuery autonomous data to AI platform, Google Cloud introduced the BigQuery knowledge engine to power assistive and agentic experiences. It uses Gemini to analyse schema relationships, table descriptions, and query histories in order to generate metadata on the fly, model data relationships, and suggest business glossary terms. This knowledge engine grounds AI and agents in business context, enabling semantic search across BigQuery and AI-powered data insights.

All customers may access Gemini-powered agentic and assistive experiences in BigQuery and Looker without add-ons in the existing price model tiers!

Accelerating data science and advanced analytics

BigQuery autonomous data to AI platform is revolutionising data science and analytics by enabling new AI-driven data science experiences and engines to manage complex data and provide real-time analytics.

First, AI improves BigQuery notebooks. It adds intelligent SQL cells to your notebook that can join data sources, understand data context, and suggest code as you write. It also provides native exploratory analysis and visualisation capabilities for data exploration and peer collaboration. Data scientists can also schedule analyses and refresh insights. Google Cloud also lets you build notebook-driven, dynamic, user-friendly, interactive data apps to share insights across the organisation.

This enhanced notebook experience is complemented by the BigQuery AI query engine for AI-driven analytics. This engine lets data scientists easily work with structured and unstructured data and add real-world context rather than simply retrieve it. The BigQuery AI query engine co-processes SQL and Gemini, adding runtime natural language understanding, reasoning skills, and real-world knowledge. For example, the engine can process unstructured images and match them to your product catalogue. It supports several use cases, including model enhancement, sophisticated segmentation, and new insights.

Additionally, Google Cloud provides users with the most cloud-optimised open-source environment. Google Cloud Managed Service for Apache Kafka enables real-time data pipelines for event sourcing, model scoring, messaging, and analytics, and BigQuery supports serverless Apache Spark execution. Customers have almost doubled their serverless Spark use in the last year, and Google Cloud has upgraded this engine to process data 2.7 times faster.

BigQuery lets data scientists utilise SQL, Spark, or foundation models on Google's serverless and scalable architecture to innovate faster without the challenges of traditional infrastructure.

An autonomous data foundation across the data lifecycle

An autonomous data foundation built for modern data complexity underpins these advanced analytics engines and specialised agents. BigQuery is transforming the environment by making unstructured data a first-class citizen. New platform features, such as orchestration for a variety of data workloads, autonomous and invisible governance, and open formats for flexibility, ensure that your data is always ready for any data science or artificial intelligence challenge. It does this while offering the best cost and reducing operational overhead.

For many companies, unstructured data is their biggest untapped potential. While structured data offers established analytical avenues, the unique insights in text, audio, video, and images are often underused and locked in siloed systems. BigQuery tackles this issue directly by making unstructured data a first-class citizen using multimodal tables (preview), which integrate structured data with rich, complex data types for unified querying and storage.

To manage this large data estate efficiently, Google Cloud's expanded BigQuery governance gives data stewards and professionals a single view for managing discovery, classification, curation, quality, usage, and sharing, including automatic cataloguing and metadata generation. BigQuery continuous queries use SQL to analyse and act on streaming data regardless of format, ensuring timely insights from all your data streams.

Customers use Google's AI models in BigQuery for multimodal analysis 16 times more than last year, driven by advanced support for structured and unstructured multimodal data. BigQuery with Vertex AI is 8–16 times cheaper than independent data warehouse and AI solutions.

Google Cloud remains committed to an open ecosystem. BigQuery tables for Apache Iceberg combine BigQuery's performance and integrated capabilities with the flexibility of an open data lakehouse to link Iceberg data to SQL, Spark, AI, and third-party engines in an open and interoperable fashion. This service provides adaptive and autonomous table management, high-performance streaming, auto-AI-generated insights, practically infinite serverless scalability, and improved governance. Cloud Storage supports fail-safe features and centralised, fine-grained access control management in this managed solution.

Finally, the autonomous data to AI platform optimises itself: it scales resources, manages workloads, and ensures cost-effectiveness. The new BigQuery spend commit unifies spending across the BigQuery platform and allows spend to be shifted flexibly across streaming, governance, data processing engines, and more, which simplifies purchasing.

Start your data and AI adventure with BigQuery data migration. Google Cloud wants to know how you innovate with data.

#technology#technews#govindhtech#news#technologynews#BigQuery autonomous data to AI platform#BigQuery#autonomous data to AI platform#BigQuery platform#autonomous data#BigQuery AI Query Engine


How Python Powers Scalable and Cost-Effective Cloud Solutions

Explore the role of Python in developing scalable and cost-effective cloud solutions. This guide covers Python's advantages in cloud computing, addresses potential challenges, and highlights real-world applications, providing insights into leveraging Python for efficient cloud development.

Introduction

In today's rapidly evolving digital landscape, businesses are increasingly leveraging cloud computing to enhance scalability, optimize costs, and drive innovation. Among the myriad of programming languages available, Python has emerged as a preferred choice for developing robust cloud solutions. Its simplicity, versatility, and extensive library support make it an ideal candidate for cloud-based applications.

In this comprehensive guide, we will delve into how Python empowers scalable and cost-effective cloud solutions, explore its advantages, address potential challenges, and highlight real-world applications.

Why Is Python the Preferred Choice for Cloud Computing?

Python's popularity in cloud computing is driven by several factors, making it the preferred language for developing and managing cloud solutions. Here are some key reasons why Python stands out:

Simplicity and Readability: Python's clean and straightforward syntax allows developers to write and maintain code efficiently, reducing development time and costs.

Extensive Library Support: Python offers a rich set of libraries and frameworks like Django, Flask, and FastAPI for building cloud applications.

Seamless Integration with Cloud Services: Python is well-supported across major cloud platforms like AWS, Azure, and Google Cloud.

Automation and DevOps Friendly: Python supports infrastructure automation through tools such as Ansible (itself written in Python) and Boto3, and is often used to script around Terraform.

Strong Community and Enterprise Adoption: Python has a massive global community that continuously improves and innovates cloud-related solutions.

How Does Python Enable Scalable Cloud Solutions?

Scalability is a critical factor in cloud computing, and Python provides multiple ways to achieve it:

1. Automation of Cloud Infrastructure

Python's compatibility with cloud service provider SDKs, such as AWS Boto3, Azure SDK for Python, and Google Cloud Client Library, enables developers to automate the provisioning and management of cloud resources efficiently.

2. Containerization and Orchestration

Python integrates seamlessly with Docker and Kubernetes, enabling businesses to deploy scalable containerized applications efficiently.

3. Cloud-Native Development

Frameworks like Flask, Django, and FastAPI support microservices architecture, allowing businesses to develop lightweight, scalable cloud applications.

4. Serverless Computing

Python's support for serverless platforms, including AWS Lambda, Azure Functions, and Google Cloud Functions, allows developers to build applications that automatically scale in response to demand, optimizing resource utilization and cost.

5. AI and Big Data Scalability

Python’s dominance in AI and data science makes it an ideal choice for cloud-based AI/ML services like AWS SageMaker, Google AI, and Azure Machine Learning.
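To make the serverless point above more concrete, here is a minimal sketch of a Python function in the shape AWS Lambda expects: a handler that takes an `event` and a `context`. The `name` field in the event and the greeting payload are hypothetical and chosen only for illustration; the response layout follows the status code, headers, and body shape commonly used with API Gateway proxy integrations.

```python
import json


def lambda_handler(event, context):
    """Handle one invocation; the platform calls this once per request."""
    # `event` carries the request payload; "name" is a hypothetical field.
    name = (event or {}).get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local smoke test; in the cloud, the platform supplies event and context.
response = lambda_handler({"name": "cloud"}, None)
print(response["body"])
```

Because the handler keeps no state between calls, the platform can run as many copies in parallel as demand requires, which is where the automatic scaling comes from.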


Advantages of Using Python for Cloud Computing

Cost Efficiency: Python’s compatibility with serverless computing and auto-scaling strategies minimizes cloud costs.

Faster Development: Python’s simplicity accelerates cloud application development, reducing time-to-market.

Cross-Platform Compatibility: Python runs seamlessly across different cloud platforms.

Security and Reliability: Python-based security tools help in encryption, authentication, and cloud monitoring.

Strong Community Support: Python developers worldwide contribute to continuous improvements, making it future-proof.

Challenges and Considerations

While Python offers many benefits, there are some challenges to consider:

Performance Limitations: Python is an interpreted language, which may not be as fast as compiled languages like Java or C++.

Memory Consumption: Python applications might require optimization to handle large-scale cloud workloads efficiently.

Learning Curve for Beginners: Though Python is simple, mastering cloud-specific frameworks requires time and expertise.

Python Libraries and Tools for Cloud Computing

Python’s ecosystem includes powerful libraries and tools tailored for cloud computing, such as:

Boto3: AWS SDK for Python, used for cloud automation.

Google Cloud Client Library: Helps interact with Google Cloud services.

Azure SDK for Python: Enables seamless integration with Microsoft Azure.

Apache Libcloud: Provides a unified interface for multiple cloud providers.

PyCaret: Simplifies machine learning deployment in cloud environments.

Real-World Applications of Python in Cloud Computing

1. Netflix - Scalable Streaming with Python

Netflix extensively uses Python for automation, data analysis, and managing cloud infrastructure, enabling seamless content delivery to millions of users.

2. Spotify - Cloud-Based Music Streaming

Spotify leverages Python for big data processing, recommendation algorithms, and cloud automation, ensuring high availability and scalability.

3. Reddit - Handling Massive Traffic

Reddit uses Python and AWS cloud solutions to manage heavy traffic while optimizing server costs efficiently.

Future of Python in Cloud Computing

The future of Python in cloud computing looks promising with emerging trends such as:

AI-Driven Cloud Automation: Python-powered AI and machine learning will drive intelligent cloud automation.

Edge Computing: Python will play a crucial role in processing data at the edge for IoT and real-time applications.

Hybrid and Multi-Cloud Strategies: Python’s flexibility will enable seamless integration across multiple cloud platforms.

Increased Adoption of Serverless Computing: More enterprises will adopt Python for cost-effective serverless applications.

Conclusion

Python's simplicity, versatility, and robust ecosystem make it a powerful tool for developing scalable and cost-effective cloud solutions. By leveraging Python's capabilities, businesses can enhance their cloud applications' performance, flexibility, and efficiency.

Ready to harness the power of Python for your cloud solutions? Explore our Python Development Services to discover how we can assist you in building scalable and efficient cloud applications.

FAQs

1. Why is Python used in cloud computing?

Python is widely used in cloud computing due to its simplicity, extensive libraries, and seamless integration with cloud platforms like AWS, Google Cloud, and Azure.

2. Is Python good for serverless computing?

Yes! Python works efficiently in serverless environments like AWS Lambda, Azure Functions, and Google Cloud Functions, making it an ideal choice for cost-effective, auto-scaling applications.

3. Which companies use Python for cloud solutions?

Major companies like Netflix, Spotify, Dropbox, and Reddit use Python for cloud automation, AI, and scalable infrastructure management.

4. How does Python help with cloud security?

Python offers robust security libraries such as PyCryptodome and pyOpenSSL (a Python wrapper around OpenSSL), enabling encryption, authentication, and cloud monitoring for secure cloud applications.

5. Can Python handle big data in the cloud?

Yes! Python supports big data processing with tools like Apache Spark (through PySpark), Pandas, and NumPy, making it suitable for data-driven cloud applications.

#Python development company#Python in Cloud Computing#Hire Python Developers#Python for Multi-Cloud Environments


Mastering the DevOps Landscape: A Comprehensive Exploration of Roles and Responsibilities

Embarking on a career in DevOps opens the door to a dynamic and collaborative experience centered around optimizing software development and delivery. This multifaceted role demands a unique blend of technical acumen and interpersonal skills. Let's delve into the intricate details that define the landscape of a DevOps position, exploring the diverse aspects that contribute to its dynamic nature.

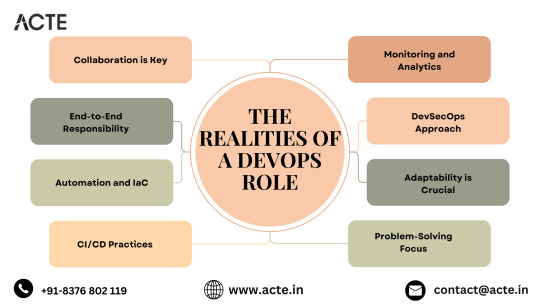

1. The Synergy of Collaboration: At the core of DevOps lies a strong emphasis on collaboration. DevOps professionals navigate the intricacies of working closely with development, operations, and cross-functional teams, fostering effective communication and teamwork to ensure an efficient software development lifecycle.

2. Orchestrating End-to-End Excellence: DevOps practitioners shoulder the responsibility for the entire software delivery pipeline. From coding and testing to deployment and monitoring, they orchestrate a seamless and continuous workflow, responding promptly to changes and challenges, resulting in faster and more reliable software delivery.

3. The Automation Symphony and IaC Choreography: Automation takes center stage in DevOps practices. DevOps professionals automate repetitive tasks, enhancing efficiency and reliability. Infrastructure as Code (IaC) adds another layer, facilitating consistent and scalable infrastructure management and further streamlining the deployment process.

4. Unveiling the CI/CD Ballet: Continuous Integration (CI) and Continuous Deployment (CD) practices form the elegant dance of DevOps. This choreography emphasizes regular integration, testing, and automated deployment, effectively identifying and addressing issues in the early stages of development.

5. Monitoring and Analytics Spotlight: DevOps professionals shine a spotlight on monitoring and analytics, utilizing specialized tools to track applications and infrastructure. This data-driven approach enables proactive issue resolution and optimization, contributing to the overall health and efficiency of the system.

6. The Art of DevSecOps: Security is not a mere brushstroke; it's woven into the canvas of DevOps through the DevSecOps approach. Collaboration with security teams ensures that security measures are seamlessly integrated throughout the software development lifecycle, fortifying the system against vulnerabilities.

7. Navigating the Ever-Evolving Terrain: Adaptability is the compass guiding DevOps professionals through the ever-evolving landscape. Staying abreast of emerging technologies, industry best practices, and evolving methodologies is crucial for success in this dynamic environment.

8. Crafting Solutions with Precision: DevOps professionals are artisans in problem-solving. They skillfully troubleshoot issues, identify root causes, and implement solutions to enhance system reliability and performance, contributing to a resilient and robust software infrastructure.

9. On-Call Symphony: In some organizations, DevOps professionals play a role in an on-call rotation. This symphony involves addressing operational issues beyond regular working hours, underscoring the commitment to maintaining system stability and availability.

10. The Ongoing Learning Odyssey: DevOps is an ever-evolving journey of learning. DevOps professionals actively engage in ongoing skill development, participate in conferences, and connect with the broader DevOps community to stay at the forefront of the latest trends and innovations, ensuring they remain masters of their craft.
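The Infrastructure as Code idea from point 3 can be illustrated with a small sketch: describe the desired infrastructure as data, compare it with what currently exists, and derive the changes needed. This is a simplified illustration rather than how any particular IaC tool works; the resource names and fields below are hypothetical.

```python
from typing import Dict, List, Tuple


def plan_changes(desired: Dict[str, dict], actual: Dict[str, dict]) -> List[Tuple[str, str]]:
    """Return (action, resource_name) pairs that move `actual` toward `desired`."""
    plan = []
    for name, spec in sorted(desired.items()):
        if name not in actual:
            plan.append(("create", name))
        elif actual[name] != spec:
            plan.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            plan.append(("delete", name))
    return plan


# Hypothetical resource definitions, used only to exercise the function.
desired_state = {
    "web": {"size": "small", "count": 3},
    "db": {"size": "large", "count": 1},
}
actual_state = {
    "web": {"size": "small", "count": 2},
    "cache": {"size": "small", "count": 1},
}

changes = plan_changes(desired_state, actual_state)
for action, name in changes:
    print(f"{action}: {name}")
```

Running the same plan against an environment that already matches the description yields no changes, which is the property that makes this style of infrastructure management consistent and repeatable.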

In essence, a DevOps role is a voyage into the heart of modern software development practices. With a harmonious blend of technical prowess, collaboration, and adaptability, DevOps professionals navigate the landscape, orchestrating excellence in the efficient and reliable delivery of cutting-edge software solutions.


Demystifying Microsoft Azure Cloud Hosting and PaaS Services: A Comprehensive Guide

In the rapidly evolving landscape of cloud computing, Microsoft Azure has emerged as a powerful player, offering a wide range of services to help businesses build, deploy, and manage applications and infrastructure. One of the standout features of Azure is its Cloud Hosting and Platform-as-a-Service (PaaS) offerings, which enable organizations to harness the benefits of the cloud while minimizing the complexities of infrastructure management. In this comprehensive guide, we'll dive deep into Microsoft Azure Cloud Hosting and PaaS Services, demystifying their features, benefits, and use cases.

Understanding Microsoft Azure Cloud Hosting

Cloud hosting, as the name suggests, involves hosting applications and services on virtual servers that are accessed over the internet. Microsoft Azure provides a robust cloud hosting environment, allowing businesses to scale up or down as needed, pay for only the resources they consume, and reduce the burden of maintaining physical hardware. Here are some key components of Azure Cloud Hosting:

Virtual Machines (VMs): Azure offers a variety of pre-configured virtual machine sizes that cater to different workloads. These VMs can run Windows or Linux operating systems and can be easily scaled to meet changing demands.

Azure App Service: This PaaS offering allows developers to build, deploy, and manage web applications without dealing with the underlying infrastructure. It supports various programming languages and frameworks, making it suitable for a wide range of applications.

Azure Kubernetes Service (AKS): For containerized applications, AKS provides a managed Kubernetes service. Kubernetes simplifies the deployment and management of containerized applications, and AKS further streamlines this process.

Exploring Azure Platform-as-a-Service (PaaS) Services

Platform-as-a-Service (PaaS) takes cloud hosting a step further by abstracting away even more of the infrastructure management, allowing developers to focus primarily on building and deploying applications. Azure offers an array of PaaS services that cater to different needs:

Azure SQL Database: This fully managed relational database service eliminates the need for database administration tasks such as patching and backups. It offers high availability, security, and scalability for your data.

Azure Cosmos DB: For globally distributed, highly responsive applications, Azure Cosmos DB is a NoSQL database service that guarantees low-latency access and automatic scaling.

Azure Functions: A serverless compute service, Azure Functions allows you to run code in response to events without provisioning or managing servers. It's ideal for event-driven architectures.

Azure Logic Apps: This service enables you to automate workflows and integrate various applications and services without writing extensive code. It's great for orchestrating complex business processes.

Benefits of Azure Cloud Hosting and PaaS Services

Scalability: Azure's elasticity allows you to scale resources up or down based on demand. This ensures optimal performance and cost efficiency.

Cost Management: With pay-as-you-go pricing, you only pay for the resources you use. Azure also provides cost management tools to monitor and optimize spending.

High Availability: Azure's data centers are distributed globally, providing redundancy and ensuring high availability for your applications.

Security and Compliance: Azure offers robust security features and compliance certifications, helping you meet industry standards and regulations.

Developer Productivity: PaaS services like Azure App Service and Azure Functions streamline development by handling infrastructure tasks, allowing developers to focus on writing code.

Use Cases for Azure Cloud Hosting and PaaS

Web Applications: Azure App Service is ideal for hosting web applications, enabling easy deployment and scaling without managing the underlying servers.

Microservices: Azure Kubernetes Service supports the deployment and orchestration of microservices, making it suitable for complex applications with multiple components.

Data-Driven Applications: Azure's PaaS offerings like Azure SQL Database and Azure Cosmos DB are well-suited for applications that rely heavily on data storage and processing.

Serverless Architecture: Azure Functions and Logic Apps are perfect for building serverless applications that respond to events in real-time.

In conclusion, Microsoft Azure's Cloud Hosting and PaaS Services provide businesses with the tools they need to harness the power of the cloud while minimizing the complexities of infrastructure management. With scalability, cost-efficiency, and a wide array of services, Azure empowers developers and organizations to innovate and deliver impactful applications. Whether you're hosting a web application, managing data, or adopting a serverless approach, Azure has the tools to support your journey into the cloud.

#Microsoft Azure#Internet of Things#Azure AI#Azure Analytics#Azure IoT Services#Azure Applications#Microsoft Azure PaaS


Text

Flash Based Array Market Emerging Trends Driving Next-Gen Storage Innovation

The flash based array market has been undergoing a transformative evolution, driven by the ever-increasing demand for high-speed data storage, improved performance, and energy efficiency. Enterprises across sectors are transitioning from traditional hard disk drives (HDDs) to solid-state solutions, thereby accelerating the adoption of flash based arrays. These storage systems offer faster data access, higher reliability, and scalability, aligning perfectly with the growing needs of digital transformation and cloud-centric operations.

Shift Toward NVMe and NVMe-oF Technologies

One of the most significant trends shaping the flash based array (FBA) market is the shift from traditional SATA/SAS interfaces to NVMe (Non-Volatile Memory Express) and NVMe over Fabrics (NVMe-oF). NVMe technology offers significantly lower latency and higher input/output operations per second (IOPS), enabling faster data retrieval and processing. As businesses prioritize performance-driven applications like artificial intelligence (AI), big data analytics, and real-time databases, NVMe-based arrays are becoming the new standard in enterprise storage infrastructures.
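
Latency and IOPS are linked by Little's Law: sustained throughput equals the number of outstanding requests divided by the average latency. The sketch below shows that arithmetic. The latency figures are hypothetical round numbers, not vendor measurements.

```python
def iops(queue_depth: int, latency_seconds: float) -> float:
    """Little's Law: throughput = concurrency / latency."""
    if latency_seconds <= 0:
        raise ValueError("latency must be positive")
    return queue_depth / latency_seconds

# Hypothetical devices, for illustration only.
for label, latency in [("1 ms device", 1e-3), ("100 us device", 100e-6)]:
    print(f"{label}: {iops(32, latency):,.0f} IOPS at queue depth 32")
```

At the same queue depth, a tenfold drop in latency gives a tenfold rise in achievable IOPS, which is why lower-latency interfaces matter for the workloads named above.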

Integration with Artificial Intelligence and Machine Learning

Flash based arrays are playing a pivotal role in enabling AI and machine learning workloads. These workloads require rapid access to massive datasets, something that flash storage excels at. Emerging FBAs are now being designed with built-in AI capabilities that automate workload management, improve performance optimization, and enable predictive maintenance. This trend not only enhances operational efficiency but also reduces manual intervention and downtime.

Rise of Hybrid and Multi-Cloud Deployments

Another emerging trend is the integration of flash based arrays into hybrid and multi-cloud architectures. Enterprises are increasingly adopting flexible IT environments that span on-premises data centers and multiple public clouds. FBAs now support seamless data mobility and synchronization across diverse platforms, ensuring consistent performance and availability. Vendors are offering cloud-ready flash arrays with APIs and management tools that simplify data orchestration across environments.

Focus on Energy Efficiency and Sustainability

With growing emphasis on environmental sustainability, energy-efficient storage solutions are gaining traction. Modern FBAs are designed to consume less power while delivering high throughput and reliability. Flash storage vendors are incorporating technologies like data reduction, deduplication, and compression to minimize physical storage requirements, thereby reducing energy consumption and operational costs. This focus aligns with broader corporate social responsibility (CSR) goals and regulatory compliance.
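
To make deduplication and compression concrete, here is a minimal sketch using only the Python standard library. It assumes fixed-size 4 KiB blocks and SHA-256 fingerprints. Real arrays use their own block sizes and algorithms, so treat every name and parameter here as illustrative.

```python
import hashlib
import zlib

def reduce_blocks(data: bytes, block_size: int = 4096):
    """Split data into fixed-size blocks and store each unique block once, compressed."""
    store = {}   # digest -> compressed block
    recipe = []  # ordered digests needed to rebuild the original data
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).hexdigest()
        if digest not in store:          # deduplication: skip blocks already stored
            store[digest] = zlib.compress(block)  # compression of the unique block
        recipe.append(digest)
    return store, recipe

def restore(store, recipe) -> bytes:
    """Rebuild the original data from the block store and the recipe."""
    return b"".join(zlib.decompress(store[d]) for d in recipe)

data = (b"A" * 4096) * 8 + (b"B" * 4096) * 2
store, recipe = reduce_blocks(data)
stored = sum(len(c) for c in store.values())
print(f"logical {len(data)} bytes, {len(store)} unique blocks, stored {stored} bytes")
```

Ten logical blocks collapse to two stored blocks here, and each stored block is compressed as well. Less physical capacity means fewer drives to power.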

Edge Computing Integration

The rise of edge computing is influencing the flash based array market as well. Enterprises are deploying localized data processing at the edge to reduce latency and enhance real-time decision-making. To support this, vendors are introducing compact, rugged FBAs that can operate reliably in remote and harsh environments. These edge-ready flash arrays offer high performance and low latency, essential for applications such as IoT, autonomous systems, and smart infrastructure.

Enhanced Data Security Features

As cyber threats evolve, data security has become a critical factor in storage system design. Emerging FBAs are being equipped with advanced security features such as end-to-end encryption, secure boot, role-based access controls, and compliance reporting. These features ensure the integrity and confidentiality of data both in transit and at rest. Additionally, many solutions now offer native ransomware protection and data immutability, enhancing trust among enterprise users.

Software-Defined Storage (SDS) Capabilities

Software-defined storage is redefining the architecture of flash based arrays. By decoupling software from hardware, SDS enables greater flexibility, automation, and scalability. Modern FBAs are increasingly adopting SDS features, allowing users to manage and allocate resources dynamically based on workload demands. This evolution is making flash storage more adaptable and cost-effective for enterprises of all sizes.

Conclusion

The flash based array market is experiencing dynamic changes fueled by technological advancements and evolving enterprise needs. From NVMe adoption and AI integration to cloud readiness and sustainability, these emerging trends are transforming the landscape of data storage. As organizations continue their journey toward digital maturity, FBAs will remain at the forefront, offering the speed, intelligence, and agility required for future-ready IT ecosystems. The vendors that innovate in line with these trends will be best positioned to capture market share and lead the next wave of storage evolution.


Text

Legacy Software Modernization Services In India – NRS Infoways

In today’s hyper‑competitive digital landscape, clinging to outdated systems is no longer an option. Legacy applications can slow innovation, inflate maintenance costs, and expose your organization to security vulnerabilities. NRS Infoways bridges the gap between yesterday’s technology and tomorrow’s possibilities with comprehensive Software Modernization Services In India that revitalize your core systems without disrupting day‑to‑day operations.

Why Modernize?

Boost Performance & Scalability

Legacy architectures often struggle under modern workloads. By re‑architecting or migrating to cloud‑native frameworks, NRS Infoways unlocks the flexibility you need to scale on demand and handle unpredictable traffic spikes with ease.

Reduce Technical Debt

Old codebases are costly to maintain. Our experts refactor critical components, streamline dependencies, and implement automated testing pipelines, dramatically lowering long‑term maintenance expenses.

Strengthen Security & Compliance

Obsolete software frequently harbors unpatched vulnerabilities. We embed industry‑standard security protocols and data‑privacy controls to safeguard sensitive information and keep you compliant with evolving regulations.

Enhance User Experience

Customers expect snappy, intuitive interfaces. We upgrade clunky GUIs into sleek, responsive designs—whether for web, mobile, or enterprise portals—boosting user satisfaction and retention.

Our Proven Modernization Methodology

1. Deep‑Dive Assessment

We begin with an exhaustive audit of your existing environment: code quality, infrastructure, DevOps maturity, integration points, and business objectives. The resulting roadmap pinpoints pain points, ranks priorities, and plots the most efficient modernization path.

2. Strategic Planning & Architecture

Armed with data, we design a future‑proof architecture. Whether it’s containerization with Docker/Kubernetes, serverless microservices, or hybrid-cloud setups, each blueprint aligns performance goals with budget realities.

3. Incremental Refactoring & Re‑engineering

To mitigate risk, we adopt a phased approach. Modules are refactored or rewritten in modern languages—often leveraging Java Spring Boot, .NET Core, or Node.js—while maintaining functional parity. Continuous integration pipelines ensure rapid, reliable deployments.
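
One way to check functional parity during a phased rewrite is to run the old and new implementations side by side on the same inputs and report any differences. The sketch below assumes this approach. Both fee functions are hypothetical stand-ins and do not come from any real project.

```python
def legacy_shipping_fee(weight_kg: float) -> float:
    # Stand-in for an old routine: flat fee plus a per-kilogram charge.
    fee = 50.0
    fee = fee + weight_kg * 12.5
    return round(fee, 2)

def modern_shipping_fee(weight_kg: float) -> float:
    # Stand-in for the rewritten module; it must return the same results.
    return round(50.0 + 12.5 * weight_kg, 2)

def parity_failures(old, new, inputs) -> list:
    """Return (input, old_result, new_result) for every input where the results differ."""
    failures = []
    for value in inputs:
        a, b = old(value), new(value)
        if a != b:
            failures.append((value, a, b))
    return failures

# An empty list means the rewritten module matched the legacy one on every sample.
print(parity_failures(legacy_shipping_fee, modern_shipping_fee, [0, 1, 2.5, 10, 99.9]))
```

A check like this can run inside the continuous integration pipeline, so a module is only switched over once the failure list stays empty.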

4. Data Migration & Integration

Smooth, loss‑less data transfer is critical. Our team employs advanced ETL processes and secure APIs to migrate databases, synchronize records, and maintain interoperability with existing third‑party solutions.
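
As an illustration of a small extract-transform-load step with a loss check, the sketch below copies a table between two in-memory SQLite databases and verifies the row count afterwards. The table and column names are hypothetical, and a real migration would add further checks such as per-column checksums.

```python
import sqlite3

def migrate(source: sqlite3.Connection, target: sqlite3.Connection) -> int:
    """Copy customers from a legacy schema to a new one and verify nothing was lost."""
    target.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
    # Extract
    rows = source.execute("SELECT cust_id, cust_name, email FROM legacy_customers").fetchall()
    # Transform: trim whitespace and normalise e-mail case.
    cleaned = [(cid, name.strip(), email.strip().lower()) for cid, name, email in rows]
    # Load inside a transaction: commit on success, roll back on error.
    with target:
        target.executemany("INSERT INTO customers VALUES (?, ?, ?)", cleaned)
    migrated = target.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
    if migrated != len(rows):
        raise RuntimeError(f"row count mismatch: {len(rows)} source vs {migrated} target")
    return migrated

source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE legacy_customers (cust_id INTEGER, cust_name TEXT, email TEXT)")
source.executemany("INSERT INTO legacy_customers VALUES (?, ?, ?)",
                   [(1, " Asha ", "ASHA@Example.com"), (2, "Ravi", "ravi@example.com ")])
target = sqlite3.connect(":memory:")
print(migrate(source, target), "rows migrated")
```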

5. Rigorous Quality Assurance

Automated unit, integration, and performance tests catch issues early. Penetration testing and vulnerability scans validate that the revamped system meets stringent security and compliance benchmarks.

6. Go‑Live & Continuous Support

Once production‑ready, we orchestrate a seamless rollout with minimal downtime. Post‑deployment, NRS Infoways provides 24 × 7 monitoring, performance tuning, and incremental enhancements so your modernized platform evolves alongside your business.

Key Differentiators

Domain Expertise: Two decades of transforming systems across finance, healthcare, retail, and logistics.

Certified Talent: AWS, Azure, and Google Cloud‑certified architects ensure best‑in‑class cloud adoption.

DevSecOps Culture: Security baked into every phase, backed by automated vulnerability management.

Agile Engagement Models: Fixed‑scope, time‑and‑material, or dedicated team options adapt to your budget and timeline.

Result‑Driven KPIs: We measure success via reduced TCO, improved response times, and tangible ROI, not just code delivery.

Success Story Snapshot

A leading Indian logistics firm grappled with a decade‑old monolith that hindered real‑time shipment tracking. NRS Infoways migrated the application to a microservices architecture on Azure, consolidating disparate data silos and introducing RESTful APIs for third‑party integrations. The results? A 40 % reduction in server costs, 60 % faster release cycles, and a 25 % uptick in customer satisfaction scores within six months.

Future‑Proof Your Business Today

Legacy doesn’t have to mean liability. With NRS Infoways’ Legacy Software Modernization Services In India, you gain a robust, scalable, and secure foundation ready to tackle tomorrow’s challenges—whether that’s AI integration, advanced analytics, or global expansion.

Ready to transform?

Contact us for a free modernization assessment and discover how our Software Modernization Services In India can accelerate your digital journey, boost operational efficiency, and drive sustainable growth.


Text

What Makes EDSPL’s SOC the Nerve Center of 24x7 Cyber Defense?

Introduction: The New Reality of Cyber Defense

We live in an age where cyberattacks aren’t rare—they're expected. Ransomware can lock up entire organizations overnight. Phishing emails mimic internal communications with eerie accuracy. Insider threats now pose as much danger as external hackers. And all this happens while your teams are working, sleeping, or enjoying a weekend away from the office.

In such an environment, your business needs a Security Operations Center (SOC) that doesn’t just detect cyber threats—it anticipates them. That’s where EDSPL’s SOC comes in. It’s not just a monitoring desk—it’s the nerve center of a complete, proactive, and always-on cyber defense strategy.

So, what makes EDSPL’s SOC different from traditional security setups? Why are enterprises across industries trusting us with their digital lifelines?

Let’s explore, in depth.

1. Around-the-Clock Surveillance – Because Threats Don’t Take Holidays

Cyber attackers operate on global time. That means the most devastating attacks can—and often do—happen outside regular working hours.

EDSPL’s SOC is staffed 24x7x365 by experienced cybersecurity analysts who continuously monitor your environment for anomalies. Unlike systems that rely solely on alerts or automation, our human-driven vigilance ensures no threat goes unnoticed—no matter when it strikes.

Key Features:

Continuous monitoring and real-time alerts

Tiered escalation models

Shift-based analyst rotations to ensure alertness

Whether you’re a bank in Mumbai or a logistics firm in Bangalore, your systems are under constant protection.

2. Integrated, Intelligence-Driven Architecture

A SOC is only as good as the tools it uses—and how those tools talk to each other. EDSPL’s SOC is powered by a tightly integrated stack that combines:

Network Security tools for perimeter and internal defense

SIEM (Security Information and Event Management) for collecting and correlating logs from across your infrastructure

SOAR (Security Orchestration, Automation, and Response) to reduce response time through automation

XDR (Extended Detection and Response) for unified visibility across endpoints, servers, and the cloud

This technology synergy enables us to identify multi-stage attacks, filter false positives, and take action in seconds.
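
To show what correlating logs means in practice, here is a minimal sketch of one classic correlation rule: a successful login that follows several failed attempts from the same source within a short window. It is a generic illustration rather than EDSPL's actual rule set, and the event format, threshold, and window are assumptions.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

def correlate(events, threshold=3, window=timedelta(minutes=5)):
    """Flag a success preceded by `threshold` failures from the same IP within `window`."""
    recent_failures = defaultdict(deque)  # source IP -> timestamps of recent failures
    alerts = []
    for ts, source_ip, outcome in sorted(events):
        failures = recent_failures[source_ip]
        # Drop failures that have fallen out of the sliding window.
        while failures and ts - failures[0] > window:
            failures.popleft()
        if outcome == "failure":
            failures.append(ts)
        elif outcome == "success" and len(failures) >= threshold:
            alerts.append((ts, source_ip, len(failures)))
            failures.clear()
    return alerts

# Hypothetical events using documentation-range IP addresses.
t0 = datetime(2024, 1, 1, 2, 0, 0)
events = [
    (t0 + timedelta(seconds=10 * i), "203.0.113.7", "failure") for i in range(4)
] + [
    (t0 + timedelta(seconds=50), "203.0.113.7", "success"),
    (t0 + timedelta(seconds=60), "198.51.100.2", "success"),
]
for alert in correlate(events):
    print(alert)
```

In a SOAR workflow, an alert like this would typically trigger an automated step, such as blocking the address or forcing a password reset, before an analyst reviews it.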

3. Human-Centric Threat Hunting and Response

Even the best tools can miss subtle signs of compromise. That’s why EDSPL doesn’t rely on automation alone. Our SOC team includes expert threat hunters who proactively search for indicators of compromise (IoCs), analyze unusual behavior patterns, and investigate security gaps before attackers exploit them.

What We Hunt:

Zero-day vulnerabilities

Insider anomalies

Malware lateral movement

DNS tunneling and data exfiltration

This proactive hunting model prevents incidents before they escalate and protects sensitive systems like your application infrastructure.
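
As one example from the hunt list above, DNS tunneling often shows up as query names with unusually long or random-looking labels. The sketch below is a simple heuristic built on that observation. The length and entropy thresholds are assumptions that would need tuning against real traffic, and this is not a description of any particular product's detection logic.

```python
import math
from collections import Counter

def shannon_entropy(text: str) -> float:
    """Entropy in bits per character; 0.0 for an empty string."""
    if not text:
        return 0.0
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())

def looks_like_tunneling(query: str, max_label_len: int = 40,
                         max_entropy: float = 3.8) -> bool:
    """Heuristic: flag queries whose leftmost label is very long or random-looking."""
    label = query.split(".")[0].lower()
    too_long = len(label) > max_label_len
    too_random = len(label) >= 16 and shannon_entropy(label) > max_entropy
    return too_long or too_random

for name in ["www.example.com",
             "a9f3k2l0q8z7x1c5v4b6n2m0p3o9i8u7y6t5r4e3w2.example.com"]:
    print(name, looks_like_tunneling(name))
```

A heuristic like this produces leads rather than verdicts. An analyst would still confirm by looking at query volume, destination, and timing.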

4. Multi-Layered Defense Across Infrastructure

Cybersecurity isn’t one-size-fits-all. That’s why EDSPL’s SOC offers multi-layered protection that adapts to your unique setup, whether your workloads run on compute, storage, or backup systems.

We secure:

Switching and routing environments

On-premise data centers and hybrid cloud security models

Core network devices and data center switching fabric

APIs, applications, and mobility endpoints through application security policies

No layer is left vulnerable. We secure every entry point, and more importantly—every exit path.

5. Tailored Solutions, Not Templates

Unlike plug-and-play SOC providers, EDSPL dives deep into your business architecture, industry regulations, and operational needs. This ensures our SOC service adapts to your challenges—not the other way around.

Whether you’re a healthcare company with HIPAA compliance needs, or a fintech firm navigating RBI audits, we offer:

Custom alert thresholds

Role-based access control

Geo-fencing and behavioral analytics

Industry-specific compliance dashboards

That’s the EDSPL difference—we offer tailored SOC services, not templated defense.

6. Fully Managed and Maintained – So You Focus on Business

Hiring, training, and retaining a cybersecurity team is expensive. Most businesses struggle to maintain their own SOC due to cost, complexity, and manpower limitations.

EDSPL eliminates that burden with its Managed and Maintenance Services. From deployment to daily operations, we take complete ownership of your SOC infrastructure.

We offer:

Security patch management

Log retention and archiving

Threat intelligence updates

Daily, weekly, and monthly security reports

You get enterprise-grade cybersecurity—without lifting a finger.

7. Real-Time Visibility and Reporting

What’s happening on your network right now? With EDSPL’s SOC, you’ll always know.

Our customizable dashboards allow you to:

Monitor attack vectors in real time

View compliance scores and threat levels

Track analyst responses to incidents

Get reports aligned with service KPIs

These insights are vital for C-level decision-makers and IT leaders alike. Transparency builds trust.

8. Scalable for Startups, Suitable for Enterprises

Our SOC is designed to scale. Whether you’re a mid-sized company or a multinational enterprise, EDSPL’s modular approach allows us to grow your cybersecurity posture without disruption.

We support:

Multi-site mobility teams

Multi-cloud and hybrid setups

Third-party integrations via APIs

BYOD and remote work configurations

As your digital footprint expands, we help ensure your attack surface doesn’t.

9. Rooted in Vision, Driven by Expertise

Our SOC isn’t just a solution—it’s part of our Background Vision. At EDSPL, we believe cybersecurity should empower, not limit, innovation.

We’ve built a culture around:

Cyber resilience

Ethical defense

Future readiness

That’s why our analysts train on the latest attack vectors, attend global security summits, and operate under frameworks like MITRE ATT&CK and NIST CSF.

You don’t just hire a service—you inherit a philosophy.

10. Real Impact, Real Stories

Case Study 1: Ransomware Stopped in Its Tracks

A global logistics client faced a rapidly spreading ransomware outbreak on a Friday night. Within 15 minutes, EDSPL’s SOC identified the lateral movement, isolated the infected devices, and prevented business disruption.

Case Study 2: Insider Threat Detected in Healthcare

A hospital’s employee tried accessing unauthorized patient records during off-hours. EDSPL’s SOC flagged the behavior using our UEBA engine and ensured the incident was contained and reported within 30 minutes.

These aren’t hypothetical scenarios. This is what we do every day.

11. Support That Goes Beyond Tickets

Have a concern? Need clarity? At EDSPL, we don't bury clients under ticket systems. We offer direct, human access to cybersecurity experts, 24x7.

Our Reach Us and Get In Touch teams work closely with clients to ensure their evolving needs are met.

From technical walkthroughs to incident post-mortems, we are your extended cybersecurity team.

12. The Future Is Autonomous—And EDSPL Is Ready

As cyberattacks become more AI-driven and sophisticated, so does EDSPL. We're already integrating:

AI-based threat intelligence

Machine learning behavioral modeling

Predictive analytics for insider threats

Autonomous remediation tools

This keeps us future-ready—and keeps you secure in an ever-evolving world.

Final Thoughts: Why EDSPL’s SOC Is the Backbone of Modern Security

You don’t just need protection—you need presence, prediction, and precision. EDSPL delivers all three.

Whether you're securing APIs, scaling your compute workloads, or meeting global compliance benchmarks, our SOC ensures your business is protected, proactive, and prepared—day and night.

Don’t just react to threats. Outsmart them.

✅ Partner with EDSPL

📞 Reach us now | 🌐 www.edspl.net


Text

Unlocking SRE Success: Roles and Responsibilities That Matter

In today’s digitally driven world, ensuring the reliability and performance of applications and systems is more critical than ever. This is where Site Reliability Engineering (SRE) plays a pivotal role. Originally developed by Google, SRE is a modern approach to IT operations that focuses strongly on automation, scalability, and reliability.

But what exactly do SREs do? Let’s explore the key roles and responsibilities of a Site Reliability Engineer and how they drive reliability, performance, and efficiency in modern IT environments.

🔹 What is a Site Reliability Engineer (SRE)?

A Site Reliability Engineer is a professional who applies software engineering principles to system administration and operations tasks. The main goal is to build scalable and highly reliable systems that function smoothly even during high demand or failure scenarios.

🔹 Core SRE Roles

SREs act as a bridge between development and operations teams. Their core responsibilities are usually grouped under these key roles:

1. Reliability Advocate

Ensures high availability and performance of services

Implements Service Level Objectives (SLOs), Service Level Indicators (SLIs), and Service Level Agreements (SLAs)

Identifies and removes reliability bottlenecks
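
An SLI is the measured value, an SLO is the target for it, and the gap between the target and 100% is the error budget. The sketch below computes these for a request-based availability SLI. The function name and the sample numbers are hypothetical.

```python
def error_budget(slo_target: float, total_requests: int, failed_requests: int) -> dict:
    """Compare a measured availability SLI against an SLO target."""
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be a fraction between 0 and 1")
    if total_requests <= 0:
        raise ValueError("total_requests must be positive")
    sli = (total_requests - failed_requests) / total_requests
    # The SLO allows this many failures over the measurement window.
    allowed_failures = (1 - slo_target) * total_requests
    return {
        "sli": sli,
        "allowed_failures": allowed_failures,
        "budget_remaining": 1 - failed_requests / allowed_failures,
        "slo_met": sli >= slo_target,
    }

report = error_budget(slo_target=0.999, total_requests=1_000_000, failed_requests=400)
print(report)
```

With a 99.9% target over one million requests, about 1,000 failures are allowed, so 400 failures leave roughly 60% of the budget. Teams often slow down risky releases as the remaining budget approaches zero.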

2. Automation Engineer

Automates repetitive manual tasks using tools and scripts

Builds CI/CD pipelines for smoother deployments

Reduces human error and increases deployment speed

3. Monitoring & Observability Expert

Sets up real-time monitoring tools like Prometheus, Grafana, and Datadog

Implements logging, tracing, and alerting systems

Proactively detects issues before they impact users

4. Incident Responder

Handles outages and critical incidents

Leads root cause analysis (RCA) and postmortems

Builds incident playbooks for faster recovery

5. Performance Optimizer

Analyzes system performance metrics

Conducts load and stress testing

Optimizes infrastructure for cost and performance

6. Security and Compliance Enforcer

Implements security best practices in infrastructure

Ensures compliance with industry standards (e.g., ISO, GDPR)

Coordinates with security teams for audits and risk management

7. Capacity Planner

Forecasts traffic and resource needs

Plans for scaling infrastructure ahead of demand

Uses tools for autoscaling and load balancing
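
A simple form of capacity forecasting is to fit a straight line to recent peak traffic and extrapolate. The sketch below does this with ordinary least squares in plain Python. The traffic figures, the 30% headroom, and the per-instance capacity are hypothetical, and real traffic often needs seasonality-aware models.

```python
import math

def linear_forecast(history, periods_ahead: int) -> float:
    """Fit y = a + b*x by least squares to evenly spaced samples and extrapolate."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two data points")
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(history) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, history))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return intercept + slope * (n - 1 + periods_ahead)

def instances_needed(peak_rps: float, rps_per_instance: float, headroom: float = 1.3) -> int:
    """Round up to whole instances after adding a safety margin."""
    return math.ceil(peak_rps * headroom / rps_per_instance)

monthly_peak_rps = [100, 120, 140, 160]      # hypothetical history
forecast = linear_forecast(monthly_peak_rps, periods_ahead=3)
print(forecast, instances_needed(forecast, rps_per_instance=50))
```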

🔹 Day-to-Day Responsibilities of an SRE

Here are some common tasks SREs handle daily:

Deploying code with zero downtime

Troubleshooting production issues

Writing automation scripts to streamline operations

Reviewing infrastructure changes

Managing Kubernetes clusters or cloud services (AWS, GCP, Azure)

Performing system upgrades and patches

Running game days or chaos engineering practices to test resilience

🔹 Tools & Technologies Commonly Used by SREs

Monitoring: Prometheus, Grafana, ELK Stack, Datadog

Automation: Terraform, Ansible, Chef, Puppet

CI/CD: Jenkins, GitLab CI, ArgoCD

Containers & Orchestration: Docker, Kubernetes

Cloud Platforms: AWS, Google Cloud, Microsoft Azure

Incident Management: PagerDuty, Opsgenie, VictorOps

🔹 Why SRE Matters for Modern Businesses

Reduces system downtime and increases user satisfaction

Improves deployment speed without compromising reliability

Enables proactive problem solving through observability

Bridges the gap between developers and operations

Drives cost-effective scaling and infrastructure optimization

🔹 Final Thoughts

Site Reliability Engineering is about more than monitoring systems: it means building a resilient, scalable, and efficient infrastructure that keeps digital services running smoothly. With a blend of coding, systems knowledge, and problem-solving skills, SREs play a crucial role in modern DevOps and cloud-native environments.


Text

Managed WiFi: Business Network Infrastructure Solutions

Modern enterprises rely on wireless connectivity as a fundamental operational requirement. Managed wifi transforms how organizations approach network infrastructure by outsourcing wireless management to specialized providers. This strategic shift allows companies to maintain robust internet connectivity while concentrating resources on primary business functions.

Understanding Managed WiFi Concepts

Managed wifi involves partnering with professional network providers who assume complete responsibility for wireless infrastructure operations. These partnerships encompass equipment deployment, system configuration, security oversight, and continuous performance monitoring. Organizations benefit from enterprise-level wireless capabilities without developing internal networking expertise.

A managed wifi system consists of interconnected components working harmoniously to deliver consistent wireless access. The infrastructure includes carefully positioned access points, centralized management controllers, and multilayered security protocols. This coordinated approach ensures reliable connectivity across all business locations and user devices.

Architecture of Managed WiFi Systems

Professional managed wifi systems integrate several critical elements designed for optimal wireless performance. High-quality access points establish the network backbone, positioned strategically to maximize coverage while supporting numerous concurrent connections without compromising speed or reliability.

Central network controllers serve as command hubs for managed wifi system operations. These advanced devices orchestrate data flow, enforce security measures, and deliver automated network optimization. Controllers continuously adjust power levels, frequency channels, and traffic distribution to maintain peak performance standards.
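
To illustrate one kind of automated optimization, the sketch below picks a 2.4 GHz channel from the commonly used non-overlapping set (1, 6, 11) based on a scan of neighbouring access points. The scoring rule and the scan data are assumptions made for illustration. Commercial controllers use their own proprietary algorithms.

```python
NON_OVERLAPPING_24GHZ = (1, 6, 11)

def pick_channel(neighbors, candidates=NON_OVERLAPPING_24GHZ) -> int:
    """Choose the candidate channel with the least estimated interference.

    neighbors: list of (channel, rssi_dbm) pairs for nearby access points.
    A neighbour counts against a candidate when the two channels are fewer
    than five apart, which is when 2.4 GHz channels overlap.
    """
    def interference(candidate):
        # Convert dBm to linear milliwatts so stronger neighbours weigh more.
        return sum(10 ** (rssi / 10)
                   for ch, rssi in neighbors if abs(ch - candidate) < 5)
    return min(candidates, key=interference)

scan = [(1, -45), (1, -60), (6, -70), (11, -50), (9, -80)]  # hypothetical scan
print(pick_channel(scan))
```

A controller would repeat a decision like this for every access point and coordinate the results so that adjacent units do not end up on the same channel.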

Security Framework in Managed WiFi

Managed wifi security implements comprehensive protection strategies to defend wireless networks and connected endpoints. Advanced security measures include sophisticated encryption protocols, certificate-based authentication, and intelligent firewall systems that establish multiple defensive barriers against unauthorized intrusion attempts.

Network isolation represents a core managed wifi security strategy, creating separate virtual networks for distinct user categories and device types. Continuous security monitoring analyzes network communications to detect anomalous behavior and emerging threats. Regular vulnerability assessments and security updates maintain managed wifi security effectiveness against developing cyber threats.

Choosing Professional Managed WiFi Providers

Selecting suitable managed wifi providers demands careful assessment of technical qualifications and service delivery standards. Providers must exhibit comprehensive knowledge of wireless technologies, network security protocols, and enterprise infrastructure management practices.

Key evaluation factors include:

• Network architecture and design competencies

• Round-the-clock monitoring and technical support

• Growth accommodation and expansion capabilities

• Regional support presence and availability

• Specialized industry knowledge and experience

• Strategic vendor relationships and technology access

Sector-Specific Applications

Various industries demand customized managed wifi solutions addressing unique operational needs. Healthcare organizations require HIPAA-compliant networks supporting critical medical equipment and patient data systems while ensuring stringent privacy protection.

Academic institutions utilize managed wifi solutions engineered for high user concentrations and varied device ecosystems. Commercial retail operations depend on managed wifi solutions supporting transaction processing, inventory control, and customer service applications.

Assessment Standards for Service Providers

Evaluating managed wifi service providers requires systematic analysis of technical capabilities and service excellence. Providers should demonstrate verified experience with network architecture, security deployment, and performance enhancement across diverse industry applications.

Critical assessment criteria encompass:

• Professional qualifications and industry recognition

• Demonstrated success with comparable implementations

• Detailed service level commitments

• Flexible solutions supporting organizational growth

• Value-oriented pricing and contract flexibility

• Local expertise and support accessibility

Implementation and Operations

Managed wifi solutions deployment commences with thorough facility evaluation and network strategy development. Engineering professionals perform detailed site examinations to assess coverage needs, interference factors, and capacity demands.

Professional deployment ensures all infrastructure components function according to design parameters. Qualified technicians install networking equipment, configure management systems, and establish security protocols to guarantee optimal performance.

Operational management activities include:

• Automated system monitoring and incident notification

• Preventive maintenance and performance optimization

• Scheduled software updates and security enhancements

• Network capacity analysis and growth planning

• Remote diagnostics and configuration management

Economic Benefits and ROI

Managed wifi services commonly utilize predictable monthly fee structures encompassing equipment costs, installation services, and ongoing technical support. This financial model eliminates substantial capital investments while providing consistent operational expenses.

Investment returns encompass direct expense reductions plus operational efficiency gains, improved customer satisfaction, and enhanced employee productivity. Dependable wireless connectivity supports mobile workforce initiatives and optimized business processes.

Technology Trends and Future Developments

Advanced wireless standards including WiFi 6E and WiFi 7 introduce significant performance improvements to managed networking deployments. These technologies deliver enhanced data rates, minimized latency, and superior performance in dense user environments.

Future technology developments include:

• Next-generation wireless standard implementation

• Machine learning network optimization

• IoT device integration and management

• Advanced cybersecurity framework deployment

• Enhanced data analytics and intelligence

• Cellular network convergence opportunities

Best Managed WiFi Solutions Selection

Selecting the best managed wifi solution requires a thorough evaluation of organizational needs and provider capabilities. Companies should analyze existing wireless limitations, future connectivity demands, and available internal resources before committing to managed solutions.

Best managed wifi solutions deliver professional-quality wireless infrastructure without complexity associated with internal management. The integration of expert design, commercial-grade equipment, and comprehensive security provides dependable connectivity supporting business development.

Summary

Professional wireless networking through managed services has emerged as vital for organizations pursuing reliable, secure, and scalable connectivity solutions. Identifying appropriate managed wifi service providers and deploying suitable solutions requires thorough evaluation of technical competencies and service quality standards.

Investment in professional managed wireless services produces enhanced connectivity, strengthened security, and operational efficiency supporting sustained business achievement and growth aspirations.


Text

How to Become a Successful Azure Data Engineer in 2025

In today’s data-driven world, businesses rely on cloud platforms to store, manage, and analyze massive amounts of information. One of the most in-demand roles in this space is that of an Azure Data Engineer. If you're someone looking to build a successful career in the cloud and data domain, Azure Data Engineering in PCMC is quickly becoming a preferred choice among aspiring professionals and fresh graduates.

This blog will walk you through everything you need to know to become a successful Azure Data Engineer in 2025—from required skills to tools, certifications, and career prospects.

Why Choose Azure for Data Engineering?

Microsoft Azure is one of the leading cloud platforms adopted by companies worldwide. With powerful services like Azure Data Factory, Azure Databricks, and Azure Synapse Analytics, it allows organizations to build scalable, secure, and automated data solutions. This creates a huge demand for trained Azure Data Engineers who can design, build, and maintain these systems efficiently.

Key Responsibilities of an Azure Data Engineer

As an Azure Data Engineer, your job is more than just writing code. You will be responsible for:

Designing and implementing data pipelines using Azure services.

Integrating various structured and unstructured data sources.

Managing data storage and security.

Enabling real-time and batch data processing.

Collaborating with data analysts, scientists, and other engineering teams.

Essential Skills to Master in 2025

To succeed as an Azure Data Engineer, you must gain expertise in the following:

1. Strong Programming Knowledge

Languages like SQL, Python, and Scala are essential for data transformation, cleaning, and automation tasks.

2. Understanding of Azure Tools

Azure Data Factory – for data orchestration and transformation.

Azure Synapse Analytics – for big data and data warehousing solutions.

Azure Databricks – for large-scale data processing using Apache Spark.

Azure Storage & Data Lake – for scalable and secure data storage.

3. Data Modeling & ETL Design

Knowing how to model databases and build ETL (Extract, Transform, Load) pipelines is fundamental for any data engineer.

4. Security & Compliance

Understanding role-based access control (RBAC), data encryption, and data masking is critical to protecting data confidentiality and privacy.
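To make skills 3 and 4 a little more concrete, here is a minimal sketch of an extract-transform-load step that masks an email column before loading. It uses only the Python standard library and an in-memory SQLite database as a stand-in for a real warehouse. The sample records, the `orders` table, and the `mask_email` helper are all hypothetical; a production pipeline on Azure would more likely run in Azure Data Factory or Azure Databricks.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; in practice this would come from a file or a data lake.
RAW_CSV = """id,email,amount
1,alice@example.com,120.50
2,bob@example.com,
3,carol@example.com,75.00
"""


def mask_email(email):
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows with a missing amount, cast types, mask emails."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append(
            (int(row["id"]), mask_email(row["email"]), float(row["amount"]))
        )
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(id INTEGER PRIMARY KEY, email TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(conn):
    """Run extract, transform, and load in order, then read back the result."""
    load(transform(extract(RAW_CSV)), conn)
    return conn.execute(
        "SELECT id, email, amount FROM orders ORDER BY id"
    ).fetchall()


if __name__ == "__main__":
    print(run_pipeline(sqlite3.connect(":memory:")))
```

Keeping the three stages as separate functions makes each one easy to test on its own, which is the same separation that managed pipeline tools encourage.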

Career Opportunities and Growth

With increasing cloud adoption, Azure Data Engineers are in high demand across all industries including finance, healthcare, retail, and IT services. Roles include:

Azure Data Engineer

Data Platform Engineer

Cloud Data Specialist

Big Data Engineer

Salaries range widely depending on skills and experience, but in cities like Pune and PCMC (Pimpri-Chinchwad), entry-level engineers can expect ₹5–7 LPA, while experienced professionals often earn ₹12–20 LPA or more.

Learning from the Right Place Matters

To truly thrive in this field, it’s essential to learn from industry experts. If you’re looking for a trusted software training institute in Pimpri-Chinchwad, IntelliBI Innovations Technologies offers career-focused Azure Data Engineering programs. Their curriculum is tailored to help students not only understand the theory but also apply it through real-world projects, with resume preparation and mock interviews to support the job search.

Conclusion

Azure Data Engineering is not just a job—it’s a gateway to an exciting and future-proof career. With the right skills, certifications, and hands-on experience, you can build powerful data solutions that transform businesses. And with growing opportunities in Azure Data Engineering in PCMC, now is the best time to start your journey.

Whether you’re a fresher or an IT professional looking to upskill, invest in yourself and start building a career that matters.


Text

The Versatile Role of a DevOps Engineer: Navigating the Convergence of Dev and Ops

The world of technology is in a state of constant evolution, and as businesses increasingly rely on software-driven solutions, the role of a DevOps engineer has become pivotal. DevOps engineers are the unsung heroes who merge the worlds of software development and IT operations to ensure the efficiency, security, and automation of the software development lifecycle. Like the conductor of a well-orchestrated symphony, they make sure that every part plays in harmony. This blog will delve into the world of DevOps engineering, exploring the responsibilities of DevOps engineers, the skills they wield, and the dynamic nature of their day-to-day work.

The DevOps Engineer: Bridging the Gap

DevOps engineers are the bridge builders in the realm of software development. They champion collaboration between development and operations teams, promoting faster development cycles and more reliable software. These professionals are well-versed in scripting, automation, containerization, and continuous integration/continuous deployment (CI/CD) tools. Their mission is to streamline processes, enhance system reliability, and contribute to the overall success of software projects.

The Skill Set of a DevOps Engineer

A DevOps engineer's skill set is a versatile mix of technical and soft skills. They must excel in coding and scripting, system administration, and automation tools, and be able to build efficient pipelines with CI/CD integration. Proficiency in containerization and orchestration, cloud computing, and security is crucial. DevOps engineers are excellent collaborators with strong communication skills and a knack for problem-solving. They prioritize documentation and are committed to continuous professional development, ensuring they remain invaluable in the dynamic landscape of modern IT operations.

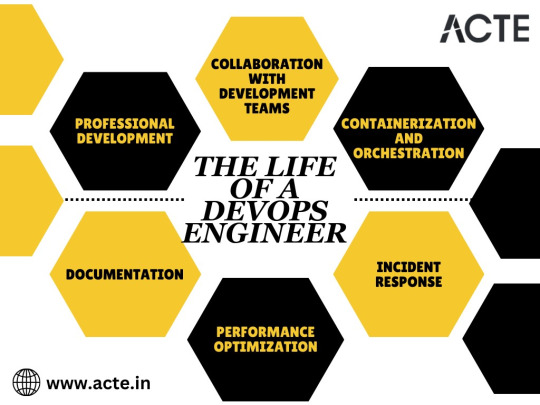

What a DevOps Engineer Does Throughout the Day

A typical day for a DevOps engineer is dynamic and multifaceted, reflecting the varied responsibilities of the role. They focus on collaboration, automation, and efficiency, aiming to keep the software development lifecycle running smoothly. Their day often begins with a deep dive into infrastructure management, where they check the health of servers, networks, and databases to confirm that all systems are up and running. One of the main priorities is minimizing disruptions and downtime.
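The morning health check described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `run_health_checks` and `tcp_port_open` helpers and the host names in the usage comment are hypothetical, and a real team would usually rely on a dedicated monitoring system rather than a hand-rolled script.

```python
import socket


def tcp_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def run_health_checks(checks):
    """Run each named check and summarise the outcome.

    `checks` maps a service name to a zero-argument callable that returns
    True when the service is healthy. A check that raises is reported as
    an error instead of stopping the whole run.
    """
    report = {}
    for name, check in checks.items():
        try:
            report[name] = "healthy" if check() else "unhealthy"
        except Exception as exc:
            report[name] = f"error: {exc}"
    return report


# Example usage with hypothetical hosts:
# report = run_health_checks({
#     "database": lambda: tcp_port_open("db.internal.example", 5432),
#     "web": lambda: tcp_port_open("web.internal.example", 443),
# })
```

Catching exceptions per check means one misbehaving probe cannot hide the status of the other services.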

Here's a closer look at the intricate web of tasks that DevOps engineers expertly navigate throughout the day:

1. Collaboration with Development Teams: DevOps engineers embark on a journey of collaboration, working closely with software development teams. They strive to comprehend the intricacies of new features and applications, ensuring that these seamlessly integrate with the existing infrastructure and are deployable.

2. Containerization and Orchestration: In the ever-evolving world of DevOps, the use of containerization technologies like Docker and orchestration tools such as Kubernetes is common practice. DevOps engineers dedicate time to managing containerized applications efficiently and scaling them according to varying workloads.

3. Incident Response: In the dynamic realm of IT, unpredictability is the norm. Issues and incidents can rear their heads at any moment. DevOps engineers stand as the first line of defense, responsible for rapid incident response. They delve into issues, relentlessly searching for root causes, and swiftly implement solutions to restore service and ensure a seamless user experience.

4. Performance Optimization: Continuous performance optimization is the name of the game. DevOps engineers diligently analyze system performance data, pinpoint bottlenecks, and proactively apply enhancements to boost application speed and efficiency. Their commitment to optimizing performance ensures a responsive and agile digital ecosystem.

5. Documentation: Behind the scenes, DevOps engineers meticulously maintain comprehensive documentation. This vital documentation encompasses infrastructure configurations and standard operating procedures. Its purpose is to ensure that processes are repeatable, transparent, and easily accessible to the entire team.

6. Professional Development: The world of DevOps is in constant flux, with new technologies and trends emerging regularly. To stay ahead of the curve, DevOps engineers are committed to ongoing professional development. This entails self-guided learning, attendance at workshops, and, in many cases, achieving additional certifications to deepen their expertise.
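As a small illustration of the performance analysis in point 4, the sketch below ranks endpoints by their 95th-percentile latency to show where a bottleneck might be. The sample data, the endpoint names, and the `slowest_endpoints` helper are hypothetical, and the nearest-rank percentile used here is one of several common definitions.

```python
import math
from collections import defaultdict


def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of
    all samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def slowest_endpoints(samples, pct=95, top=3):
    """Group (endpoint, latency_ms) samples by endpoint and return the `top`
    endpoints with the highest pct-th percentile latency, slowest first."""
    by_endpoint = defaultdict(list)
    for endpoint, latency_ms in samples:
        by_endpoint[endpoint].append(latency_ms)
    ranked = sorted(
        ((percentile(latencies, pct), endpoint)
         for endpoint, latencies in by_endpoint.items()),
        reverse=True,
    )
    return [(endpoint, value) for value, endpoint in ranked[:top]]


if __name__ == "__main__":
    # Hypothetical latency samples in milliseconds.
    samples = [
        ("/login", 100), ("/login", 120),
        ("/search", 300), ("/search", 900),
        ("/health", 5),
    ]
    print(slowest_endpoints(samples, top=2))
```

Looking at a high percentile rather than the average helps surface the slow requests that users actually notice.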

In conclusion, the role of a DevOps engineer is one of great significance in today's tech-driven world. These professionals are the linchpins that keep the machinery of software development and IT operations running smoothly. With their diverse skill set, they streamline processes, enhance efficiency, and ensure the reliability and security of applications. The dynamic nature of their work, encompassing collaboration, automation, and infrastructure management, makes them indispensable.

For those considering a career in DevOps, the opportunities are vast, and the demand for skilled professionals continues to grow. ACTE Technologies stands as a valuable partner on your journey to mastering DevOps. Their comprehensive training programs provide the knowledge and expertise needed to excel in this ever-evolving field. Your path to becoming a proficient DevOps engineer starts here, with a world of possibilities awaiting you.
