#Sensor Fusion


How Defense Investments, AI Advancements, and Surveillance Needs Drive the MUM-T Market

The Manned–Unmanned Teaming (MUM-T) Market is undergoing rapid expansion as industries and governments increasingly adopt systems that integrate human-operated and autonomous platforms. This revolutionary collaboration is reshaping the defense, logistics, and commercial sectors by leveraging advanced technologies like artificial intelligence (AI), autonomy, and sensor fusion.

Several drivers are fueling this growth, including increased investment in defense technologies, rising demand for unmanned surveillance and reconnaissance platforms, and breakthroughs in AI and sensor technologies. In this blog, we will explore these key drivers and their role in propelling the MUM-T market to new heights.

Growing Investments in Defense Technologies

Global defense budgets are steadily increasing as nations strive to enhance their military capabilities in response to evolving geopolitical tensions and security challenges. These investments have paved the way for the development and deployment of advanced MUM-T systems, which are becoming a critical component of modern defense strategies.

Manned–unmanned teaming allows defense forces to combine the precision and efficiency of autonomous platforms with the judgment and adaptability of human operators. This synergy enhances mission success while minimizing risks to personnel.

Countries like the United States, China, and Russia are at the forefront of adopting MUM-T systems, allocating significant resources toward their development. In the United States, for instance, defense contractors like Lockheed Martin and Northrop Grumman are working on cutting-edge technologies that integrate AI and sensor fusion into MUM-T platforms.

Moreover, emerging economies in the Asia-Pacific region, such as India and South Korea, are ramping up their investments in indigenous defense technologies. These nations are focusing on the development of cost-effective MUM-T solutions to strengthen their military capabilities while reducing dependence on foreign suppliers.

Download PDF Brochure: https://www.marketsandmarkets.com/pdfdownloadNew.asp?id=182112271

Rising Demand for Unmanned Platforms in Surveillance and Reconnaissance

One of the most significant drivers of the MUM-T market is the increasing demand for unmanned platforms in surveillance and reconnaissance operations. Autonomous systems equipped with advanced sensors and AI algorithms can efficiently gather and process real-time data, enabling faster and more informed decision-making in critical scenarios.

In defense applications, unmanned aerial vehicles (UAVs) and unmanned ground vehicles (UGVs) are being deployed to perform high-risk surveillance missions, such as monitoring border areas or identifying potential threats in conflict zones. These platforms can operate in challenging environments where human presence would be dangerous or impractical.

For example, unmanned aerial systems (UAS) are being used extensively for reconnaissance missions, transmitting live data to command centers or manned platforms. This seamless collaboration between manned and unmanned systems enhances situational awareness and improves overall mission efficiency.

Beyond defense, unmanned platforms are also being adopted for surveillance in civilian applications, such as disaster management, border security, and critical infrastructure monitoring. Their ability to provide real-time data and reduce operational costs makes them an attractive option for governments and private organizations alike.

Technological Advancements in AI, Autonomy, and Sensor Fusion

The MUM-T market is deeply intertwined with technological innovation, particularly in the fields of AI, autonomy, and sensor fusion. These advancements have significantly enhanced the capabilities of manned–unmanned systems, enabling them to perform complex tasks with greater accuracy, efficiency, and reliability.

Artificial Intelligence: AI plays a pivotal role in MUM-T operations by enabling real-time data analysis, pattern recognition, and decision-making. Unmanned systems equipped with AI can identify potential threats, assess risks, and recommend actionable insights to human operators, ensuring more informed and effective responses.

Autonomy: The development of autonomous systems has been a game-changer for the MUM-T market. These systems can operate independently or collaboratively with manned platforms, executing tasks such as navigation, target identification, and mission planning with minimal human intervention. This reduces operational complexity and allows personnel to focus on strategic decision-making.

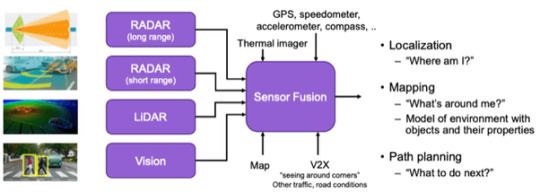

Sensor Fusion: Sensor fusion technology integrates data from multiple sensors, such as radar, lidar, and cameras, to provide a comprehensive and accurate understanding of the environment. This capability is critical for MUM-T systems, enabling them to operate effectively in dynamic and unpredictable conditions.

The integration of these technologies has made MUM-T systems more versatile and efficient, driving their adoption across a wide range of applications. Furthermore, the ongoing development of next-generation technologies, such as quantum computing and 6G networks, promises to unlock even greater potential for MUM-T platforms in the future.

Real-World Applications of MUM-T Driven by Key Drivers

The combination of increased defense investments, rising demand for unmanned platforms, and technological advancements has enabled MUM-T systems to excel in real-world applications.

In combat scenarios, MUM-T platforms allow for coordinated operations between manned fighter jets and unmanned drones, enhancing situational awareness and precision targeting. Similarly, naval forces are utilizing unmanned underwater vehicles (UUVs) in combination with manned submarines for surveillance and mine detection missions.

In logistics, autonomous vehicles are being used to transport supplies to remote or high-risk locations, reducing the need for human involvement in dangerous operations. For instance, unmanned aerial delivery systems are becoming increasingly common in military logistics, providing cost-effective and efficient solutions for supply chain management.

Commercial applications are also benefiting from the growth of the MUM-T market. Drones equipped with advanced sensors are being used for agricultural monitoring, enabling farmers to optimize irrigation and improve crop yields. In disaster management, unmanned platforms are playing a crucial role in assessing damage and coordinating rescue efforts, showcasing the versatility of MUM-T systems.

Ask for Sample Report: https://www.marketsandmarkets.com/requestsampleNew.asp?id=182112271

Regional Insights: Leading Markets in MUM-T

The MUM-T market is experiencing significant growth across multiple regions, with North America leading the way. The region's dominance is attributed to its strong focus on defense modernization, technological innovation, and the presence of major players like Lockheed Martin, Boeing, and Northrop Grumman.

Asia-Pacific is emerging as a high-growth region, driven by increasing defense budgets and investments in indigenous technology development. Countries like China, India, and South Korea are prioritizing the adoption of MUM-T systems to enhance their military and commercial capabilities.

Europe, with its strong aerospace and defense industries, also plays a crucial role in the MUM-T market. Companies like Airbus and Thales Group are at the forefront of developing advanced MUM-T solutions for both defense and civilian applications.

The Manned–Unmanned Teaming (MUM-T) Market is witnessing transformative growth, fueled by growing investments in defense technologies, increased demand for unmanned surveillance platforms, and rapid advancements in AI, autonomy, and sensor fusion. These drivers are enabling MUM-T systems to deliver unparalleled efficiency, precision, and safety across various applications.

As nations and industries continue to embrace the potential of MUM-T, the market is poised for sustained growth and innovation. Despite challenges such as high costs and regulatory concerns, the integration of advanced technologies and the increasing focus on collaboration between manned and unmanned systems will ensure a bright future for this dynamic and evolving market.

#manned–unmanned teaming market#mum-t market drivers#defense technologies#ai in mum-t#unmanned platforms#sensor fusion#surveillance and reconnaissance


Accelerometer: Enable New Business Opportunities In Motion Tracking Technology

Acceleration sensors are devices that measure acceleration forces. They detect the magnitude and direction of acceleration as a vector quantity, including gravitational acceleration, which makes it possible to determine changes in motion, orientation, vibration and shock. An acceleration sensor's operating principle is based on the deflection of a mechanical structure attached to a piezoresistive or capacitive element, which converts the deflection into a measurable electrical signal.

There are several types of acceleration sensors used in various applications, differing in size, specifications and principle of operation. Piezoelectric acceleration sensors measure the charge produced when a piezoelectric material is subjected to acceleration. Capacitive acceleration sensors detect shifts in capacitance in response to acceleration. MEMS (Micro-Electro-Mechanical Systems) acceleration sensors are batch-fabricated using integrated circuit fabrication techniques and use piezoresistive or capacitive sensing. Piezoresistive acceleration sensors rely on the change of resistance in piezoresistive materials like silicon when subjected to mechanical stress.
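Because the measured vector includes gravity, a stationary accelerometer can be used to estimate orientation. The sketch below is only an illustration under stated assumptions: the function name `tilt_from_gravity` is hypothetical, readings are in units of g, the sensor is at rest (so gravity is the only acceleration), and the axis and sign conventions are one common choice rather than a universal standard.

```python
import math
from typing import Tuple


def tilt_from_gravity(ax: float, ay: float, az: float) -> Tuple[float, float]:
    """Return (roll, pitch) in degrees from one static accelerometer reading.

    Assumption: the device is not accelerating, so (ax, ay, az) is the
    gravity vector, and a device lying flat reads roughly (0, 0, 1).
    """
    roll = math.atan2(ay, az)                     # rotation about the x axis
    pitch = math.atan2(-ax, math.hypot(ay, az))   # rotation about the y axis
    return math.degrees(roll), math.degrees(pitch)


# Hypothetical readings: flat on a table, then rolled onto its side.
print(tilt_from_gravity(0.0, 0.0, 1.0))
print(tilt_from_gravity(0.0, 1.0, 0.0))
```

This only works while the device is still; during motion, linear acceleration mixes with gravity and the angles become unreliable, which is one reason accelerometers are often paired with gyroscopes.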

Accelerating Motion Tracking For Business And Industry

The development of small, low-cost and integrated acceleration sensors enabled new motion tracking technologies that are finding numerous applications across different industries. Wearables packed with multiple sensors including acceleration sensors are allowing continuous monitoring of staff activities in areas like manufacturing, construction, warehouses and healthcare. Motion capture technology using acceleration sensors aids in animation, virtual reality, biomechanics research and rehabilitation. Acceleration sensors are helping improve safety gear like hard hats by detecting impacts or falls. Quality control in assembly lines is enhanced through precise motion monitoring. Sports teams gain insights on player performance and injuries from data collected via wearable acceleration sensors. Overall, motion tracking is boosting productivity, efficiency and safety across many verticals.

Opportunities In Consumer Electronics And IoT Devices

Consumer electronics have wholeheartedly embraced acceleration sensors, using them extensively in applications centered around user interactions, navigation, alerts and activity/fitness tracking. Motion detection allows touchless control of devices and apps through hand gestures. Acceleration sensors also play a pivotal role in tilt compensation in cameras, image stabilization in camcorders and anti-shake technologies in smartphones.

Performance monitoring functions in wearables rely on the accuracy of integrated multi-axis acceleration sensors. Advances in nanoscale MEMS technology have led to the inclusion of more sensitive acceleration sensors in small portable gadgets as part of the rise of IoT devices. Miniaturized acceleration sensors coupled with artificial intelligence and cloud services are enabling entirely new use cases across various sectors.

Enabling Automotive Safety Features And Driver Assistance Systems

Passenger safety remains a top priority for automakers and has prompted the adoption of novel sensor technologies, including acceleration sensors. Today's vehicles integrate triaxial acceleration sensors into airbag control modules, anti-lock braking systems, electronic stability control and rollover detection mechanisms. This allows crash severity to be assessed instantly so that the deployment of restraints can be optimized. Advanced driver-assistance systems use acceleration sensors integrated with cameras, radars and LiDARs to support lane departure warning, emergency braking, traction control and blind spot monitoring. Future autonomous vehicles will rely extensively on robust motion sensing through dense arrays of high-performance MEMS acceleration sensors for functions like automated braking, collision avoidance and rollover prevention. This will pave the way for mass adoption of self-driving cars.

Prospects For Growth Through New Markets And Technologies

The acceleration sensor market has grown consistently over the past decade, driven by large-scale integration into mainstream consumer products and expanding use cases across industries. Further adoption in emerging fields including drones, robotics, AR/VR and digital healthcare is anticipated to spur more demand.

Upcoming technologies leveraging acceleration sensors with high dynamic range, high shock survivability and low power consumption could tap new verticals in asset and structural monitoring, emergency response, aerospace instrumentation and smart cities. Commercialization of MEMS gyroscopes and acceleration sensor/gyroscope combos supporting advanced inertial navigation systems presents new opportunities. Growth of IoT networks and applications built on predictive analytics of motion data ensures a promising future for acceleration sensor innovations and their ability to revolutionize business models.

Get more insights on this topic: https://www.trendingwebwire.com/accelerometer-the-fundamental-device-behind-motion-detection-in-globally/

About Author:

Ravina Pandya, Content Writer, has a strong foothold in the market research industry. She specializes in writing well-researched articles from different industries, including food and beverages, information and technology, healthcare, chemical and materials, etc. (https://www.linkedin.com/in/ravina-pandya-1a3984191)

*Note: 1. Source: Coherent Market Insights, Public sources, Desk research 2. We have leveraged AI tools to mine information and compile it


Sensor data analysis and sensor algorithm consulting services

I run a mathematics consulting business that specializes in designing software / algorithms for many applications, including sensor fusion and sensor data analysis.

We can design custom-built and sophisticated algorithms for your multiple sensor applications including

Noise removal / compensation

Sensor fusion

Data transformations

Machine learning

If the information is there somewhere in the data, there’s almost no limit to the sophisticated mathematical techniques that you can apply, including machine learning, data transformations, noise compensation/removal.

There are many machine learning techniques that are excellent for use in sensor algorithms.

Many simple statistical techniques that were formerly considered just part of statistics, such as linear regression (or non-linear regression for that matter), are now considered “machine learning”. And these techniques are integral to most sensor data algorithms, or to any kind of data analysis.

Whether using regression or much more impressive techniques like neural networks or reinforcement learning, the essential application is the same. The algorithm needs to take some noisy, partial, spatially distorted data, or perhaps several partial datasets which overlap with discrepancy, and condense this data down to a single category or number.

You can do this by generating a large number of input datasets, labelled by the required output, allowing the algorithm to “learn” the relationship.
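As a small illustration of learning from labelled data, the sketch below fits a straight line that maps raw sensor readings to known reference values using ordinary least squares. Everything specific here is an assumption made for the example: the function name `fit_line`, the synthetic sensor (gain 2, offset 5, Gaussian noise), and the choice of simple linear regression rather than any particular production technique.

```python
import random


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Synthetic labelled dataset: the "label" is the true quantity, the input
# is what a hypothetical noisy sensor reports for it.
rng = random.Random(0)
true_values = [float(t) for t in range(50)]
raw_readings = [2.0 * t + 5.0 + rng.gauss(0.0, 0.5) for t in true_values]

# Learn the mapping from raw reading back to the true value.
slope, intercept = fit_line(raw_readings, true_values)


def calibrated(reading):
    """Apply the learned calibration to a new raw reading."""
    return slope * reading + intercept


print(slope, intercept)
```

With enough labelled pairs the fitted slope and intercept approach the inverse of the sensor's gain and offset; more elaborate models follow the same pattern of fitting on labelled examples and then applying the result to new readings.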

I run a mathematics consulting business that specializes in designing complex algorithms for many applications, including sensor fusion and sensor data analysis. We’re happy to explore how machine learning could be applied to build a decision making system on your sensor data. Check us out below!

Sensor algorithm consulting services

#sensor data analysis#sensor fusion#sensor algorithm consulting#sensor data analysis consulting#mathematics consulting


Sensor Fusion

Introduction to Sensor Fusion

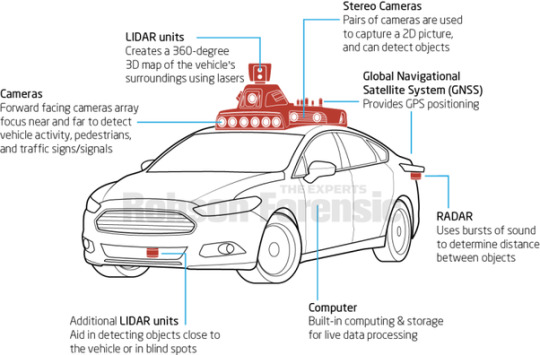

Autonomous vehicles require a number of sensors with varying parameters, ranges, and operating conditions. Cameras or vision-based sensors help in providing data for identifying certain objects on the road, but they are sensitive to weather changes.

Radar sensors perform very well in almost all types of weather but are unable to provide an accurate 3D map of the surroundings. LiDAR sensors map the surroundings of the vehicle to a high level of accuracy but are expensive.

The Need for Integrating Multiple Sensors

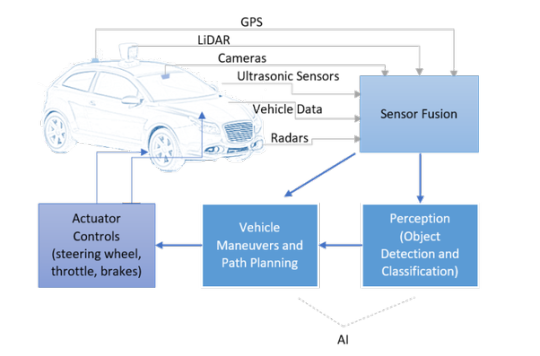

Thus, every sensor has a different role to play, but none of them can be used on its own in an autonomous vehicle.

If an autonomous vehicle has to make decisions similar to the human brain (or in some cases, even better than the human brain), then it needs data from multiple sources to improve accuracy and get a better understanding of the overall surroundings of the vehicle.

This is why sensor fusion becomes an essential component.

Sensor Fusion | Dorleco

Source: The Functional Components of Autonomous Vehicles

Sensor Fusion

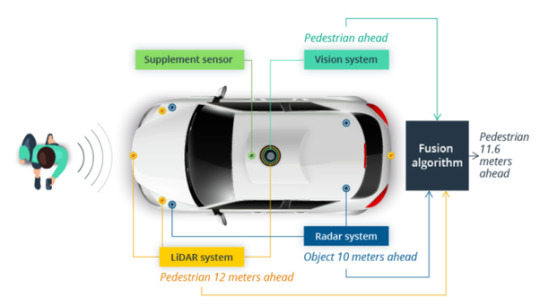

Sensor fusion essentially means taking all data from the sensors set up around the vehicle’s body and using it to make decisions.

This mainly helps in reducing the amount of uncertainty that could be prevalent through the use of individual sensors.

Thus, sensor fusion helps in taking care of the drawbacks of every sensor and building a robust sensing system.
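The reduction in uncertainty can be illustrated with the simplest possible fusion rule: an inverse-variance weighted average of two independent measurements of the same quantity. This is a minimal sketch, not the method used in any particular vehicle; the function name `fuse` and the example numbers (a radar and a camera range estimate with assumed variances) are hypothetical.

```python
def fuse(m1, var1, m2, var2):
    """Fuse two independent measurements by inverse-variance weighting.

    Returns the fused value and its variance. The more certain sensor
    (smaller variance) gets the larger weight.
    """
    w1 = 1.0 / var1
    w2 = 1.0 / var2
    fused = (w1 * m1 + w2 * m2) / (w1 + w2)
    fused_var = 1.0 / (w1 + w2)
    return fused, fused_var


# Hypothetical distances to the same object, in metres.
radar_range, radar_var = 50.0, 0.25    # radar: precise range
camera_range, camera_var = 52.0, 4.0   # camera: coarse range

value, variance = fuse(radar_range, radar_var, camera_range, camera_var)
print(value, variance)
```

The fused variance is always smaller than either input variance, which is the sense in which combining sensors reduces uncertainty, provided the sensor errors really are independent.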

Most of the time, in normal driving scenarios, sensor fusion brings a lot of redundancy to the system. This means that there are multiple sensors detecting the same objects.


Source: https://semiengineering.com/sensor-fusion-challenges-in-cars/

However, when one or multiple sensors fail to perform accurately, sensor fusion helps in ensuring that there are no undetected objects. For example, a camera can capture the visuals around a vehicle in ideal weather conditions.

But during dense fog or heavy rainfall, the camera won’t provide sufficient data to the system. This is where radar, and to some extent, LiDAR sensors help.

Furthermore, a radar sensor may accurately show that there is a truck in the intersection where the car is waiting at a red light.

But it may not be able to generate data from a three-dimensional point of view. This is where LiDAR is needed.

Thus, having multiple sensors detect the same object may seem unnecessary in ideal scenarios, but in edge cases such as poor weather, sensor fusion is required.

Levels of Sensor Fusion

1. Low-Level Fusion (Early Fusion):

In this kind of sensor fusion method, all the data coming from all sensors is fused in a single computing unit, before we begin processing it. For example, pixels from cameras and point clouds from LiDAR sensors are fused to understand the size and shape of the object that is detected.

This method has many future applications, since it sends all the data to the computing unit. Thus, different aspects of the data can be used by different algorithms.

However, the drawback of transferring and handling such huge amounts of data is the complexity of computation. High-quality processing units are required, which will drive up the price of the hardware setup.

2. Mid-Level Fusion:

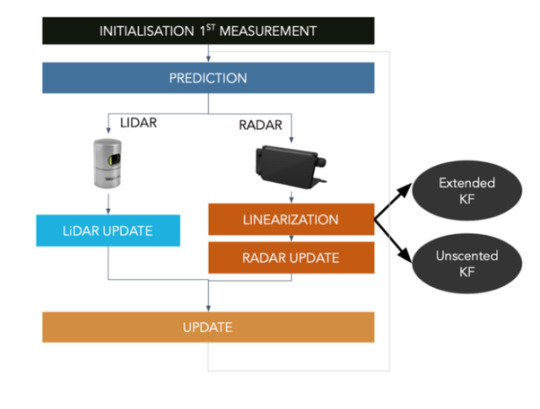

In mid-level fusion, objects are first detected by the individual sensors and then the algorithm fuses the data. Generally, a Kalman filter is used to fuse this data (which will be explained later on in this course).

The idea is to have, let’s say, a camera and a LiDAR sensor detect an obstacle individually, and then fuse the results from both to get the best estimates of the position, class and velocity of the detected obstacle.

This is an easier process to implement, but there is a chance of the fusion process failing in case of sensor failure.

3. High-Level Fusion (Late Fusion):

This is similar to the mid-level method, except that we implement detection as well as tracking algorithms for each individual sensor, and then fuse the results.

The problem, however, would be that if the tracking for one sensor has some errors, then the entire fusion may get affected.


Source: Autonomous Vehicles: The Data Problem

Sensor fusion can also be of different types. Competitive sensor fusion consists of having multiple types of sensors generating data about the same object to ensure consistency.

Complementary sensor fusion will use two sensors to paint an extended picture, something that neither of the sensors could manage individually.

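Cooperative (sometimes called coordinated) sensor fusion improves the quality of the data by combining inputs that yield the result only together. For example, two 2D views of an object taken from different perspectives can be combined to generate a 3D view of the same object.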

Variation in the approach of sensor fusion

Radar-LiDAR Fusion

Since there are a number of sensors that work in different ways, there is no single solution to sensor fusion. If LiDAR and radar sensors have to be fused, then the mid-level sensor fusion approach can be used. This consists of fusing the detected objects and then making decisions.


Source: The Way of Data: How Sensor Fusion and Data Compression Empower Autonomous Driving - Intellias

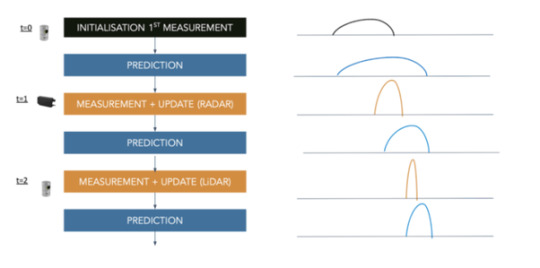

In this approach, a Kalman filter can be used. This consists of a “predict-and-update” method, where based on the current measurement and the last prediction, the predictive model gets updated to provide a better result in the next iteration. An easier understanding of this is shown in the following image.


Source: Sensor Fusion
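The predict-and-update cycle can be sketched in a few lines for the simplest case: a single scalar quantity that is assumed to stay roughly constant between measurements. The function name `kalman_step`, the noise values `q` and `r`, and the measurement list are illustrative assumptions; a real tracker would use a multi-dimensional state with position and velocity.

```python
def kalman_step(x, p, z, q, r):
    """One predict-and-update cycle of a scalar Kalman filter.

    x, p : previous estimate and its variance
    z    : new measurement
    q, r : process-noise and measurement-noise variances (assumed known)
    """
    # Predict: the state is assumed constant, so only uncertainty grows.
    x_pred = x
    p_pred = p + q

    # Update: blend prediction and measurement using the Kalman gain.
    k = p_pred / (p_pred + r)
    x_new = x_pred + k * (z - x_pred)
    p_new = (1.0 - k) * p_pred
    return x_new, p_new


# Start with a vague prior and feed in hypothetical noisy readings near 10.
estimate, variance = 0.0, 1000.0
for z in [10.2, 9.8, 10.1, 9.9, 10.0]:
    estimate, variance = kalman_step(estimate, variance, z, q=0.01, r=0.25)

print(estimate, variance)
```

Each pass pulls the estimate toward the new measurement by an amount set by the gain, and the variance shrinks as evidence accumulates, so later measurements move the estimate less than early ones.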

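The issue with radar-LiDAR sensor fusion is that the radar sensor provides non-linear data, while LiDAR data is linear in nature. Radar typically reports range, bearing and range rate (polar coordinates), which relate non-linearly to a Cartesian position-and-velocity state, whereas LiDAR positions map to that state linearly. Hence, the non-linear radar measurement has to be handled specially before it can be fused with the LiDAR data and the model updated accordingly.

To do this, an extended Kalman filter (which linearizes the measurement function around the current estimate) or an unscented Kalman filter (which passes a set of sample points through the non-linear function instead of linearizing it) can be used.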


Source: Sensor Fusion
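To make the non-linearity concrete, the sketch below assumes a common setup that the text does not spell out: the tracked state is Cartesian `(px, py, vx, vy)` and the radar reports range, bearing and range rate. Under that assumption, an extended Kalman filter needs the measurement function and its Jacobian (the local linearization). The function names are hypothetical.

```python
import math


def radar_measurement(state):
    """Map a Cartesian state (px, py, vx, vy) to (range, bearing, range_rate)."""
    px, py, vx, vy = state
    rng = math.hypot(px, py)
    if rng < 1e-9:
        raise ValueError("object at the sensor origin: measurement undefined")
    bearing = math.atan2(py, px)
    range_rate = (px * vx + py * vy) / rng
    return [rng, bearing, range_rate]


def radar_jacobian(state):
    """Partial derivatives of radar_measurement with respect to the state.

    This 3x4 matrix is the linearization an extended Kalman filter uses in
    place of a constant measurement matrix.
    """
    px, py, vx, vy = state
    r2 = px * px + py * py
    if r2 < 1e-18:
        raise ValueError("object at the sensor origin: Jacobian undefined")
    r = math.sqrt(r2)
    r3 = r2 * r
    cross = vx * py - vy * px
    return [
        [px / r, py / r, 0.0, 0.0],
        [-py / r2, px / r2, 0.0, 0.0],
        [py * cross / r3, -px * cross / r3, px / r, py / r],
    ]


# Hypothetical object 3 m ahead and 4 m to the side, moving at 1 m/s in x.
state = [3.0, 4.0, 1.0, 0.0]
print(radar_measurement(state))
print(radar_jacobian(state))
```

A LiDAR position measurement, by contrast, would use a fixed matrix that simply selects `px` and `py` from the state, which is why it fits the ordinary (linear) Kalman update.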

Camera-LiDAR Fusion

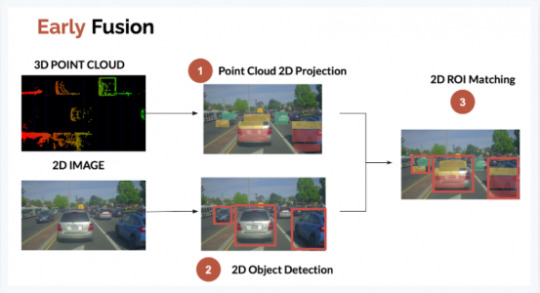

Now, if a system needs the fusion of camera and LiDAR sensor, then low-level (fusing raw data), as well as high-level (fusing objects and their positions) fusion, can be used. The results in both cases vary slightly.

The low-level fusion consists of overlapping the data from both sensors and using the ROI (region of interest) matching approach.

The 3D point cloud data of objects from the LiDAR sensor is projected on a 2D plane, while the images captured by the camera are used to detect the objects in front of the vehicle.

These two maps are then superimposed to check the common regions. These common regions signify the same object detected by two different sensors.
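The projection and overlap check can be sketched with a pinhole camera model. This is an illustration under several assumptions: the LiDAR points have already been transformed into the camera frame (z pointing forward), the intrinsics `fx`, `fy`, `cx`, `cy` are made-up values, and the camera detection is an axis-aligned pixel box. The function names are hypothetical.

```python
def project_points(points_cam, fx, fy, cx, cy):
    """Project 3D points (x, y, z) in the camera frame onto the image plane.

    Points behind the camera (z <= 0) are skipped.
    """
    pixels = []
    for x, y, z in points_cam:
        if z <= 0:
            continue
        pixels.append((fx * x / z + cx, fy * y / z + cy))
    return pixels


def points_in_box(pixels, box):
    """Keep the projected points that fall inside a detection box.

    box is (u_min, v_min, u_max, v_max) in pixel coordinates.
    """
    u_min, v_min, u_max, v_max = box
    return [(u, v) for u, v in pixels if u_min <= u <= u_max and v_min <= v <= v_max]


# Hypothetical LiDAR returns and a hypothetical camera detection.
lidar_points = [(0.0, 0.0, 10.0), (1.0, 0.0, 10.0), (0.0, 0.0, -5.0)]
pixels = project_points(lidar_points, fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
camera_box = (600.0, 300.0, 700.0, 400.0)

print(pixels)
print(points_in_box(pixels, camera_box))
```

LiDAR points that land inside a camera detection box can then be treated as belonging to the same object, giving that detection a depth estimate.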

For the high-level fusion, data first gets processed, and then 2D data from the camera undergoes conversion to 3D object detection.

This data is then compared with the 3D object detection data from the LiDAR sensor, and the intersecting regions of the two detections give the output (intersection over union, or IoU, matching).


Source: LiDAR and Camera Sensor Fusion in Self-Driving Cars
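IoU matching compares how much two detections overlap relative to their combined extent. The sketch below makes a simplifying assumption that real systems often do not: boxes are axis-aligned in 3D and given as `(x_min, y_min, z_min, x_max, y_max, z_max)`. The function name `iou_3d` and the example boxes are hypothetical.

```python
def iou_3d(a, b):
    """Intersection over union of two axis-aligned 3D boxes.

    Each box is (x_min, y_min, z_min, x_max, y_max, z_max).
    Returns a value between 0.0 (no overlap) and 1.0 (identical boxes).
    """
    intersection = 1.0
    for i in range(3):
        overlap = min(a[i + 3], b[i + 3]) - max(a[i], b[i])
        if overlap <= 0:
            return 0.0
        intersection *= overlap

    vol_a = (a[3] - a[0]) * (a[4] - a[1]) * (a[5] - a[2])
    vol_b = (b[3] - b[0]) * (b[4] - b[1]) * (b[5] - b[2])
    return intersection / (vol_a + vol_b - intersection)


# Hypothetical camera-derived and LiDAR-derived boxes for the same car.
camera_box = (0.0, 0.0, 0.0, 2.0, 2.0, 2.0)
lidar_box = (1.0, 0.0, 0.0, 3.0, 2.0, 2.0)

print(iou_3d(camera_box, lidar_box))
```

Two detections are usually treated as the same object when their IoU exceeds a chosen threshold; the threshold value is a tuning decision and is not specified here.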

Thus, the combination of sensors has a bearing on which approach of sensor fusion needs to be used. Sensors play a massive role in providing the computer with an adequate amount of data to make the right decisions.

Furthermore, sensor fusion also allows the computer to “have a second look” at the data and filter out the noise while improving accuracy.

Autonomous Vehicle Feature Development at Dorle Controls

At Dorle Controls, developing sensor and actuator drivers is one of the many things we do. Be it bespoke feature development or full-stack software development, we can assist you.

For more info on our capabilities and how we can assist you with your software control needs, please write to [email protected].


Living Intelligence: The Fusion of AI, Biotechnology, and Sensors

How AI, Biotechnology, and Sensors Create Adaptive Living Systems

Introduction: A New Frontier in Living Intelligence Technology

In today’s era of rapid technological breakthroughs, the convergence of artificial intelligence (AI), biotechnology, and advanced sensor technology is giving rise to an extraordinary new paradigm known as Living Intelligence. This innovative fusion blurs the lines between biological systems and machines, creating adaptive, self-regulating systems that exhibit characteristics of living organisms.

Living intelligence systems have the potential to transform numerous fields, from health monitoring and personalized medicine to environmental sensing and smart cities. By mimicking natural processes such as learning, adaptation, and self-healing, these technologies open doors to solutions that were previously unimaginable.

As this field evolves, it is poised to revolutionize how humans interact with technology, enabling smarter ecosystems that respond dynamically to their environment. For those interested in exploring the cutting edge of science and technology, living intelligence represents a thrilling frontier with vast potential.

To learn more about the intersection of biology and AI, explore research initiatives at the MIT Media Lab.

Understanding Living Intelligence: The Fusion of AI, Biotechnology, and Sensors

Living intelligence represents a cutting-edge integration of artificial intelligence (AI), biotechnology, and advanced sensor technologies to create dynamic, responsive systems capable of perceiving, learning, and adapting in real time. Unlike traditional machines or static software programs, living intelligence systems embody characteristics commonly found in biological organisms, including self-organization, evolutionary adaptation, and environmental responsiveness.

At the heart of living intelligence lies a powerful synergy between three core components:

AI’s data processing and machine learning capabilities: These enable the system to analyze vast amounts of data, identify patterns, and make informed decisions autonomously.

Biotechnology’s expertise in biological processes: This allows for the manipulation and integration of living cells or biomaterials into technological systems, enabling functionalities such as self-repair and growth.

Advanced sensor technology: High-precision sensors collect real-time data from the environment or living organisms, feeding information continuously to AI algorithms for rapid response.

This triad facilitates a seamless flow of information between biological and artificial elements, resulting in adaptive, efficient, and often autonomous systems that can operate in complex, dynamic environments. These systems have promising applications across healthcare, environmental monitoring, robotics, and beyond.

For an in-depth look at how living intelligence is shaping future technologies, check out this insightful overview from Nature Biotechnology.

The Role of AI in Living Intelligence: The Cognitive Engine of Adaptive Systems

Artificial Intelligence (AI) serves as the cognitive engine powering living intelligence systems. Leveraging advances in deep learning, neural networks, and machine learning algorithms, AI excels at pattern recognition, predictive analytics, and complex decision-making. When combined with biological inputs and continuous sensor data streams, AI can decode intricate biological signals and convert them into meaningful, actionable insights.

For instance, in healthcare technology, AI algorithms analyze data from wearable biosensors that track vital signs such as heart rate variability, glucose levels, or brain activity. This enables early detection of illnesses, stress markers, or other physiological changes, empowering proactive health management and personalized medicine.

In the field of precision agriculture, AI integrated with biosensors can monitor plant health at a molecular or cellular level, optimizing irrigation, nutrient delivery, and pest control to enhance crop yield while minimizing resource use, which promotes sustainable farming practices.

Beyond analysis, AI also drives continuous learning and adaptive behavior in living intelligence systems. These systems evolve in response to new environmental conditions and feedback, improving their performance autonomously over time and mirroring the self-improving nature of living organisms.

For more on how AI transforms living intelligence and bio-integrated systems, explore resources from MIT Technology Review’s AI section.

Biotechnology: Bridging the Biological and Digital Worlds in Living Intelligence

Biotechnology serves as the critical bridge between biological systems and digital technologies, providing the tools and scientific understanding necessary to interface with living organisms at the molecular and cellular levels. Recent breakthroughs in synthetic biology, gene editing technologies like CRISPR-Cas9, and advanced bioengineering have unlocked unprecedented opportunities to design and manipulate biological components that seamlessly communicate with AI systems and sensor networks.

A particularly exciting frontier is the emergence of biohybrid systems, innovative integrations of living cells or tissues with electronic circuits and robotic platforms. These biohybrids can perform sophisticated functions such as environmental sensing, biomedical diagnostics, and targeted drug delivery. For example, engineered bacteria equipped with nanoscale biosensors can detect pollutants or toxins in water sources and transmit real-time data through AI-driven networks. This capability facilitates rapid, precise environmental remediation and monitoring, crucial for addressing global ecological challenges.

Moreover, biotechnology enables the creation of advanced biosensors, which utilize biological molecules to detect a wide range of chemical, physical, and even emotional signals. These devices can continuously monitor critical health biomarkers, identify pathogens, and assess physiological states by analyzing hormone levels or other biochemical markers. The rich data collected by biosensors feed directly into AI algorithms, enhancing the ability to provide personalized healthcare, early disease detection, and adaptive treatment strategies.

For a deeper dive into how biotechnology is revolutionizing living intelligence and healthcare, check out the latest updates at the National Institutes of Health (NIH) Biotechnology Resources.

Sensors: The Eyes and Ears of Living Intelligence

Sensors play a pivotal role as the critical interface between biological systems and artificial intelligence, acting as the “eyes and ears” that capture detailed, real-time information about both the environment and internal biological states. Recent advances in sensor technology have led to the development of miniaturized, highly sensitive devices capable of detecting an extensive range of physical, chemical, and biological signals with exceptional accuracy and speed.

In the realm of healthcare, wearable sensors have revolutionized personalized medicine by continuously tracking vital signs such as heart rate, blood oxygen levels, body temperature, and even biochemical markers like glucose or hormone levels. This continuous data stream enables proactive health monitoring and early disease detection, improving patient outcomes and reducing hospital visits.

Environmental sensors also play a crucial role in living intelligence systems. These devices monitor parameters such as air quality, soil moisture, temperature, and pollutant levels, providing vital data for environmental conservation and sustainable agriculture. By integrating sensor data with AI analytics, stakeholders can make informed decisions that protect ecosystems and optimize resource management.

What sets sensors in living intelligence apart is their ability to participate in real-time feedback loops. Instead of merely collecting data, these sensors work in tandem with AI algorithms to create autonomous systems that dynamically respond to changes. For example, in smart agricultural setups, sensors detecting dry soil can trigger AI-driven irrigation systems to activate precisely when needed, conserving water and maximizing crop yield. Similarly, in healthcare, sensor data can prompt AI systems to adjust medication dosages or alert medical professionals to potential emergencies immediately.
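The irrigation feedback loop described above can be sketched as a simple threshold controller. This is a minimal illustration under stated assumptions, not a production design: the function names `irrigation_step` and `run_loop`, the moisture thresholds, and the sample readings are all hypothetical, and a real "AI-driven" system would likely replace the fixed thresholds with a learned or forecast-based policy.

```python
def irrigation_step(moisture_pct, pump_on, dry_below=30.0, wet_above=45.0):
    """Return the new pump state for one soil-moisture reading.

    Two thresholds (hysteresis) stop the pump from toggling rapidly
    when the reading hovers around a single set point.
    The threshold values are illustrative assumptions.
    """
    if moisture_pct < dry_below:
        return True    # soil is dry: start (or keep) watering
    if moisture_pct > wet_above:
        return False   # soil is wet enough: stop watering
    return pump_on     # in between: keep the current state


def run_loop(readings):
    """Feed a sequence of readings through the controller."""
    pump_on = False
    states = []
    for moisture in readings:
        pump_on = irrigation_step(moisture, pump_on)
        states.append(pump_on)
    return states


if __name__ == "__main__":
    # Hypothetical readings: soil dries out, is watered, then recovers.
    print(run_loop([50.0, 35.0, 28.0, 40.0, 46.0, 44.0]))
```

The gap between the two thresholds is the design choice worth noting: the pump switches on only below the dry threshold and off only above the wet one, so sensor noise near either value does not cause repeated switching.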

Together, these advanced sensors and AI create living intelligence systems capable of self-regulation, adaptation, and continuous learning, bringing us closer to a future where technology and biology co-evolve harmoniously.

For more insights into cutting-edge sensor technologies, explore the resources provided by the IEEE Sensors Council.

Applications and Impact of Living Intelligence

The convergence of artificial intelligence (AI), biotechnology, and advanced sensor technology in living intelligence is already revolutionizing a wide array of industries. This innovative fusion is driving transformative change by enabling smarter, adaptive systems that closely mimic biological processes and enhance human capabilities.

Healthcare: Personalized and Predictive Medicine

Living intelligence is accelerating the shift toward personalized medicine, where treatments are tailored to individual patients’ unique biological profiles. Implantable biosensors combined with AI algorithms continuously monitor vital health metrics and biochemical markers, enabling early detection of diseases such as diabetes, cardiovascular conditions, and even cancer. These systems facilitate real-time medication adjustments and proactive management of chronic illnesses, reducing hospital visits and improving quality of life. For example, AI-powered glucose monitors can automatically regulate insulin delivery, empowering diabetic patients with better control. Learn more about AI in healthcare at NIH’s Artificial Intelligence in Medicine.

Environmental Management: Smart and Sustainable Ecosystems

Living intelligence is reshaping environmental monitoring and management by creating smart ecosystems. Biosensors deployed in natural habitats detect pollutants, chemical changes, and climate variations, feeding real-time data to AI models that analyze trends and predict ecological risks. Automated bioremediation systems and adaptive irrigation solutions respond dynamically to environmental cues, enhancing sustainability and reducing human intervention. This approach helps combat pollution, conserve water, and protect biodiversity in an increasingly fragile environment. Discover innovations in environmental sensing at the Environmental Protection Agency (EPA).

Agriculture: Precision Farming and Resource Optimization

Precision agriculture leverages living intelligence to maximize crop yields while minimizing environmental impact. By integrating soil biosensors, climate data, and AI-driven analytics, farmers can optimize water usage, fertilization, and pest control with pinpoint accuracy. This results in healthier crops, reduced chemical runoff, and more efficient use of natural resources. For instance, AI-powered drones equipped with sensors monitor plant health at the molecular level, allowing targeted interventions that save costs and boost productivity. Explore advancements in smart farming at FAO - Precision Agriculture.

Wearable Technology: Beyond Fitness Tracking

Wearable devices enhanced by living intelligence go far beyond step counting and heart rate monitoring. These advanced wearables assess mental health indicators, stress responses, and neurological conditions through continuous biometric sensing and AI analysis. This opens new frontiers in early diagnosis, personalized therapy, and wellness optimization. For example, AI-driven wearables can detect signs of anxiety or depression by analyzing hormone fluctuations and physiological patterns, enabling timely interventions. Check out the latest in wearable health tech from Wearable Technologies.

Robotics and Biohybrids: Adaptive and Responsive Machines

Living intelligence is paving the way for biohybrid robots: machines integrated with living cells or bioengineered tissues. These robots combine the flexibility and self-healing properties of biological material with the precision of robotics, enabling them to perform delicate medical procedures, intricate manufacturing tasks, or exploration in unpredictable environments. Such systems adapt dynamically to changes, enhancing efficiency and safety in sectors like surgery, pharmaceuticals, and space missions. Learn about biohybrid robotics at MIT’s Biohybrid Robotics Lab.

Ethical and Social Considerations in Living Intelligence

As living intelligence technologies increasingly merge biological systems with artificial intelligence and sensor networks, they raise profound ethical and social questions that demand careful reflection. This emerging frontier blurs the boundaries between living organisms and machines, requiring a responsible approach to development and deployment.

Manipulation of Biological Materials

Advances in synthetic biology, gene editing (such as CRISPR), and biohybrid systems enable unprecedented manipulation of living cells and tissues. While these innovations hold tremendous promise, they also provoke concerns about unintended consequences, such as ecological disruption or irreversible genetic changes. Ethical frameworks must guide the use of biotechnology to prevent misuse and ensure safety. Learn about gene editing ethics from the National Human Genome Research Institute.

Data Privacy and Genetic Information Security

Living intelligence systems often rely on vast amounts of biometric data and genetic information, raising critical questions about data privacy and consent. Protecting sensitive health data from breaches or misuse is paramount, especially as AI-driven analytics become more powerful. Regulatory compliance with standards like HIPAA and GDPR is essential, alongside transparent data governance policies. Public trust hinges on safeguarding individual rights while enabling technological progress. Explore data privacy regulations at the European Data Protection Board.

Environmental and Ecological Impact

The integration of living intelligence into ecosystems carries risks of ecological imbalance. Introducing engineered organisms or biohybrid devices into natural environments may have unpredictable effects on biodiversity and ecosystem health. Continuous environmental monitoring and impact assessments are necessary to mitigate potential harm and ensure sustainability. See more on ecological risk management at the United Nations Environment Programme.

Transparency, Regulation, and Public Engagement

Responsible innovation in living intelligence requires transparent communication about the technology’s capabilities, risks, and benefits. Governments, industry stakeholders, and researchers must collaborate to establish clear regulatory frameworks that promote ethical standards and accountability. Equally important is engaging the public in meaningful dialogue to address societal concerns, build trust, and guide policymaking. For insights into ethical AI governance, visit the AI Ethics Guidelines by OECD.

By proactively addressing these ethical and social dimensions, society can harness the transformative power of living intelligence while safeguarding human dignity, privacy, and the environment. This balanced approach is essential for building a future where technology and biology coexist harmoniously and ethically.

The Road Ahead: Toward a Symbiotic Future

Living intelligence opens the door to a symbiotic future where humans, machines, and biological systems do more than just coexist; they collaborate seamlessly to address some of the world’s most pressing challenges. This emerging paradigm holds the promise of revolutionizing fields such as personalized healthcare, by enabling continuous health monitoring and adaptive treatments tailored to individual needs. It also paves the way for environmental resilience, with biohybrid sensors and AI-driven ecosystems working in tandem to monitor and protect our planet in real time.

Innovative applications will extend into agriculture, smart cities, and robotics, creating technologies that not only perform tasks but also learn, evolve, and respond to their environments autonomously. However, realizing this transformative potential hinges on sustained interdisciplinary research, development of robust ethical guidelines, and ensuring equitable access to these advanced technologies across communities and countries.

As AI, biotechnology, and sensor technologies become ever more intertwined, living intelligence will redefine how we interact with the natural and digital worlds, unlocking new potentials that once belonged only in the realm of science fiction.

Conclusion: Embracing the Future of Living Intelligence

The fusion of artificial intelligence, biotechnology, and sensor technologies marks the beginning of an exciting new era, one where the boundaries between living organisms and machines blur to create intelligent, adaptive systems. Living intelligence promises to improve healthcare, enhance environmental stewardship, and drive technological innovation that benefits all of humanity.

To navigate this future responsibly, it is essential to balance innovation with ethical considerations, transparency, and collaboration among researchers, policymakers, and society at large. By doing so, we can ensure that living intelligence becomes a force for good, empowering individuals and communities worldwide.

Stay Ahead with Entrepreneurial Era Magazine

Curious to explore more about groundbreaking technologies, emerging trends, and strategies shaping the future of business and innovation? Subscribe to Entrepreneurial Era Magazine today and get exclusive insights, expert interviews, and actionable advice tailored for entrepreneurs and innovators like you.

Join thousands of forward-thinking readers who are already leveraging the latest knowledge to grow their ventures and stay competitive in a rapidly evolving world.

Subscribe now and be part of the innovation revolution!


FAQs

What is Living Intelligence in technology? Living Intelligence refers to systems where artificial intelligence (AI), biotechnology, and sensors merge to create responsive, adaptive, and autonomous environments. These systems behave almost like living organisms: they collect biological data, analyze it in real time, and make decisions or adjustments without human input. Examples include smart implants that adjust medication doses, bio-hybrid robots that respond to environmental stimuli, or AI-driven ecosystems monitoring human health. The goal is to mimic natural intelligence using technology that senses, thinks, and evolves, enabling next-generation applications in healthcare, agriculture, environmental science, and more.

How do AI, biotechnology, and sensors work together in Living Intelligence? In Living Intelligence, sensors collect biological or environmental data (like heart rate, chemical levels, or temperature). This data is sent to AI algorithms that analyze it instantly, recognizing patterns or abnormalities. Biotechnology then acts on these insights, often in the form of engineered biological systems, implants, or drug delivery systems. For example, a biosensor may detect dehydration, the AI recommends fluid intake, and a biotech implant responds accordingly. This fusion enables systems to adapt, learn, and respond in ways that closely resemble living organisms, bringing a dynamic edge to digital health and bioengineering.

What are real-world examples of Living Intelligence? Examples include smart insulin pumps that monitor blood glucose and adjust doses automatically, AI-enhanced prosthetics that respond to muscle signals, and biosensors embedded in clothing to track health metrics. In agriculture, Living Intelligence powers systems that detect soil nutrient levels and deploy micro-doses of fertilizer. In environmental monitoring, bio-sensing drones track pollution levels and AI predicts ecological shifts. These innovations blur the line between machine and organism, offering intelligent, autonomous responses to biological or environmental conditions, often improving speed, precision, and personalization in critical fields.

What role does biotechnology play in Living Intelligence? Biotechnology serves as the biological interface in Living Intelligence. It enables machines and sensors to interact with living tissues, cells, and molecules. From genetically engineered cells that react to pollutants to biocompatible implants that communicate with neural pathways, biotechnology helps translate biological signals into data AI can process and vice versa. This allows for precision treatments, early disease detection, and real-time bodily monitoring. In essence, biotechnology enables machines to "speak the language" of life, forming the bridge between human biology and machine intelligence.

Are Living Intelligence systems safe for human use? When properly developed, Living Intelligence systems can be safe and even enhance health and safety. Regulatory oversight, clinical testing, and ethical review are essential before human deployment. Implants or biotech sensors must be biocompatible, AI must avoid bias or misinterpretation, and data must be securely encrypted. Most systems are designed with safety protocols like auto-shutdown, alert escalation, or user override. However, because these technologies are still evolving, long-term effects and ethical considerations (like autonomy, data privacy, and human enhancement) continue to be actively explored.

How is Living Intelligence transforming healthcare? Living Intelligence is revolutionizing healthcare by making it predictive, personalized, and proactive. Wearable biosensors track vitals in real time, AI analyzes this data to detect early signs of illness, and biotech systems deliver treatments exactly when and where needed. This reduces hospital visits, speeds up diagnosis, and enables preventative care. For example, cancer detection can happen earlier through bio-integrated diagnostics, while chronic illnesses like diabetes or heart disease can be managed more effectively with adaptive, AI-guided interventions. The result: longer lifespans, better quality of life, and lower healthcare costs.

Can Living Intelligence be used outside of healthcare? Yes, Living Intelligence extends far beyond healthcare. In agriculture, it enables smart farming with biosensors that detect soil health and AI that regulates water or nutrient delivery. In environmental science, it’s used in biohybrid sensors to monitor air or water pollution. In wearable tech, it powers personalized fitness and stress management tools. Even in space exploration, researchers are exploring AI-biotech hybrids for autonomous life support. Wherever biology meets decision-making, Living Intelligence can optimize systems by mimicking the adaptability and efficiency of living organisms.

How do biosensors contribute to Living Intelligence? Biosensors are the input channels for Living Intelligence. These tiny devices detect biological signals such as glucose levels, hormone changes, or toxins and convert them into digital data. Advanced biosensors can operate inside the body or in wearable devices, often transmitting data continuously. AI then interprets these signals, and biotech components act accordingly (e.g., drug release, alerting doctors, or environmental controls). Biosensors allow for non-invasive, real-time monitoring and make it possible for machines to understand and react to living systems with remarkable precision.

What are the ethical concerns surrounding Living Intelligence? Key ethical concerns include data privacy, human autonomy, and biological manipulation. When AI monitors health or biology, who owns the data? Can systems make decisions that override human will, such as stopping medication or triggering an alert? Additionally, biotech integration raises concerns about altering natural biology or creating bioengineered entities. Transparency, informed consent, and regulation are vital to ensure these technologies serve humanity without exploitation. As Living Intelligence evolves, policymakers and technologists must collaborate to align innovation with ethical standards.

What does the future hold for Living Intelligence? The future of Living Intelligence is incredibly promising. We’ll likely see cyborg-like medical devices, fully autonomous bio-monitoring ecosystems, and AI-driven drug synthesis tailored to your DNA. Smart cities may use biosensors in public spaces to track environmental health. Even brain-computer interfaces could become more common, powered by AI and biological sensors. Over time, machines won’t just compute; they’ll sense, adapt, and evolve, making technology increasingly hard to distinguish from life itself. The challenge ahead is not just building these systems but ensuring they remain ethical, secure, and beneficial for all.

#living intelligence technology#AI and biotechnology fusion#sensor-driven intelligent systems#biotechnology in AI systems#adaptive AI sensors#smart biosensor technology#AI-powered bioengineering#living systems AI integration#real-time biological sensing#intelligent biohybrid devices


SciTech Chronicles, April 7th, 2025

#starquakes#Kepler#Bubbles#M67#on-orbit#mobile#radar#sensors#Mid-ocean#SISMOMAR#waveform#inversion#dysprosium-titanate#pyrochlore-iridate#Q-DiP#monopole#Sunbird#Mars#fusion#Pulsar


Sensor Fusion Market Size, Share & Industry Trends Analysis Report by Algorithms (Kalman Filter, Bayesian Filter, Central Limit Theorem, Convolutional Neural Networks), Technology (MEMS, Non-MEMS), Offering (Hardware, Software), End-Use Application and Region - Global Forecast to 2028


Sensor Fusion Market Trends and Innovations to Watch in 2024 and Beyond

The global sensor fusion market is poised for substantial growth, with its valuation reaching US$ 8.6 billion in 2023 and projected to grow at a CAGR of 4.8% to reach US$ 14.3 billion by 2034. Sensor fusion refers to the process of integrating data from multiple sensors to achieve a more accurate and comprehensive understanding of a system or environment. By synthesizing data from sources such as cameras, LiDAR, radars, GPS, and accelerometers, sensor fusion enhances decision-making capabilities across applications, including automotive, consumer electronics, healthcare, and industrial systems.
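To make the definition of sensor fusion above concrete, here is a minimal sketch of one textbook technique, inverse-variance weighting, which combines independent measurements of the same quantity so that more trustworthy sensors count for more. The function name `fuse` and the radar/LiDAR numbers are hypothetical and chosen only for illustration; the sketch also assumes the sensor errors are independent and that each variance is known.

```python
def fuse(estimates):
    """Fuse independent measurements by inverse-variance weighting.

    estimates: list of (value, variance) pairs, each variance > 0.
    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(
        weight * value for weight, (value, _) in zip(weights, estimates)
    ) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


if __name__ == "__main__":
    # Hypothetical range readings in metres: a noisy radar and a tighter LiDAR.
    radar = (10.0, 4.0)
    lidar = (12.0, 1.0)
    print(fuse([radar, lidar]))
```

With these made-up inputs the fused estimate lands closer to the LiDAR reading, and the fused variance is smaller than either input variance, which is the sense in which fusion gives a "more accurate" picture than any single sensor.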

Explore our report to uncover in-depth insights - https://www.transparencymarketresearch.com/sensor-fusion-market.html

Key Drivers

Rise in Adoption of ADAS and Autonomous Vehicles: The surge in demand for advanced driver assistance systems (ADAS) and autonomous vehicles is a key driver for the sensor fusion market. By combining data from cameras, radars, and LiDAR sensors, sensor fusion technology improves situational awareness, enabling safer and more efficient driving. Commercial driver-assistance systems, such as Tesla’s Autopilot, highlight the transformative potential of sensor fusion in automotive applications.

Increased R&D in Consumer Electronics: Sensor fusion enables consumer electronics like smartphones, wearables, and smart home devices to deliver enhanced user experiences. Features such as motion sensing, augmented reality (AR), and interactive gaming are powered by the integration of multiple sensors, driving market demand.
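Motion sensing in phones and wearables is often explained with a complementary filter, which blends a gyroscope (smooth but drifting) with an accelerometer (noisy but drift-free). The sketch below is a generic illustration, not the method used by any vendor named in this report; the function names, the axis and sign conventions, and the blend factor `alpha=0.98` are all assumptions.

```python
import math


def accel_pitch(ax, az):
    """Pitch angle in radians implied by gravity on the x and z axes.

    The axis convention here is an assumption; real devices differ.
    """
    return math.atan2(ax, az)


def complementary_filter(pitch, gyro_rate, ax, az, dt, alpha=0.98):
    """One update step of a complementary filter.

    pitch:     previous pitch estimate (rad)
    gyro_rate: angular rate about the pitch axis (rad/s)
    ax, az:    accelerometer readings (any consistent unit)
    dt:        time since the previous step (s)
    alpha:     weight given to the integrated gyro estimate
    """
    gyro_pitch = pitch + gyro_rate * dt
    return alpha * gyro_pitch + (1.0 - alpha) * accel_pitch(ax, az)


if __name__ == "__main__":
    # Hypothetical stationary device tilted 45 degrees, with a wrong initial guess.
    pitch = 0.0
    for _ in range(500):
        pitch = complementary_filter(pitch, 0.0, 1.0, 1.0, dt=0.01)
    print(math.degrees(pitch))
```

The blend factor sets the trade-off: values near 1 trust the gyroscope over short time scales, while the small accelerometer share slowly pulls the estimate back and cancels gyro drift.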

Key Player Strategies

STMicroelectronics, InvenSense, NXP Semiconductors, Infineon Technologies AG, Bosch Sensortec GmbH, Analog Devices, Inc., Renesas Electronics Corporation, Amphenol Corporation, Texas Instruments Incorporated, Qualcomm Technologies, Inc., TE Connectivity, MEMSIC Semiconductor Co., Ltd., Kionix, Inc., Continental AG, and PlusAI, Inc. are the leading players in the global sensor fusion market.

In November 2022, STMicroelectronics introduced the LSM6DSV16X, a 6-axis inertial measurement unit embedding Sensor Fusion Low Power (SFLP) technology and AI capabilities.

In June 2022, Infineon Technologies launched a battery-powered Smart Alarm System, leveraging AI/ML-based sensor fusion for superior accuracy.

These innovations aim to address complex data integration challenges while enhancing performance and efficiency.

Regional Analysis

Asia Pacific emerged as a leading region in the sensor fusion market in 2023, driven by the presence of key automotive manufacturers, rising adoption of ADAS, and advancements in sensor technologies. Increased demand for smartphones, coupled with the integration of AI algorithms and edge computing capabilities, is further fueling growth in the region.

Other significant regions include North America and Europe, where ongoing advancements in autonomous vehicles and consumer electronics are driving market expansion.

Market Segmentation

By Offering: Hardware and Software

By Technology: MEMS and Non-MEMS

By Algorithm: Kalman Filter, Bayesian Filter, Central Limit Theorem, Convolutional Neural Network

By Application: Surveillance Systems, Inertial Navigation Systems, Autonomous Systems, and Others

By End-use Industry: Consumer Electronics, Automotive, Home Automation, Healthcare, Industrial, and Others
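Of the algorithms named in the segmentation above, the Kalman filter is probably the most widely taught. The sketch below is a deliberately minimal scalar version that tracks a single, roughly constant quantity; the function name `kalman_1d`, the default initial state, and the noise values in the example are assumptions for illustration, and real products typically use multi-dimensional variants with motion models.

```python
def kalman_1d(measurements, meas_var, process_var, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a (nearly) constant quantity.

    measurements: iterable of noisy readings
    meas_var:     variance of the measurement noise
    process_var:  variance added to the state per step (how much it may drift)
    x0, p0:       initial estimate and its variance (assumed defaults)
    Returns the list of estimates after each measurement.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the state is modelled as constant, so only uncertainty grows.
        p = p + process_var
        # Update: the gain k weighs the new reading against the prediction.
        k = p / (p + meas_var)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append(x)
    return estimates


if __name__ == "__main__":
    # Hypothetical noisy readings of a value near 5.
    print(kalman_1d([5.2, 4.9, 5.1, 4.8, 5.0], meas_var=0.1, process_var=0.001))
```

Each step repeats the same two phases, predict and update, and the gain `k` shrinks as the filter becomes more certain, so later readings move the estimate less than early ones.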

Contact:

Transparency Market Research Inc.

CORPORATE HEADQUARTER DOWNTOWN,

1000 N. West Street,

Suite 1200, Wilmington, Delaware 19801 USA

Tel: +1-518-618-1030

USA - Canada Toll Free: 866-552-3453

Website: https://www.transparencymarketresearch.com


Dickson Environmental Monitoring Solutions

Gaia Science offers a complete range of Environmental Monitoring Solutions, featuring Dickson Environmental Monitoring Solutions for reliable data collection across diverse environments. From air quality and water analysis to soil testing and climate monitoring, these solutions include advanced, customizable equipment and precision sensors. Dickson's technology enhances Gaia Science's offerings, ensuring highly accurate measurements vital for research, regulatory compliance, and sustainability initiatives.

To find out more about Environmental Monitoring Solutions, please get in touch with us today!

#Dickson Data Logger#CO2 Data Logger#Cobalt X Data Logger#Emerald Bluetooth Temperature Data Logger#CO2 Smart Sensor#Digital Temperature Sensors#Sensors For Emerald#scientific laboratory equipment#laboratory equipments#scientific instruments supplier#scientific instruments#anesthesia machine#homogeniser machines#laminar flow cabinet#phenocycler-fusion system#scientific equipment supplier#laboratory equipment suppliers


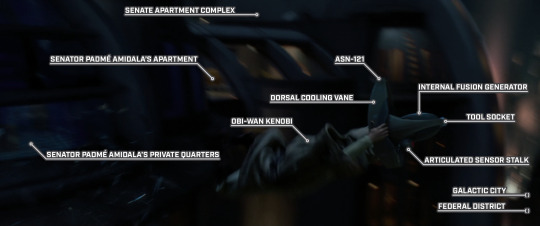

Obi-Wan Catches the Assassin Droid

STAR WARS EPISODE II: Attack of the Clones 00:14:23

#Star Wars#Episode II#Attack of the Clones#Coruscant#Galactic City#Federal District#Senate Apartment Complex#Senator Padmé Amidala’s apartment#Senator Padmé Amidala’s private quarters#Obi-Wan Kenobi#dorsal cooling vane#ASN-121#internal fusion generator#tool socket#articulated sensor stalk


Most acceleration sensors contain tiny movable parts called seismic masses. As the accelerometer moves, each seismic mass tends to stay in place because of its inertia, so it is displaced relative to the sensor structure. The sensor detects this displacement and outputs an electrical signal that can be amplified, conditioned, and sampled by an analog-to-digital converter for processing by a controller or recorder.
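The two ends of that signal chain can be sketched in a few lines: how far a spring-mounted seismic mass deflects under acceleration, and how a sampled reading is turned back into an acceleration. Both functions are illustrative assumptions rather than any particular device's behavior: the names are hypothetical, the deflection uses the ideal static relation x = m·a/k, and the conversion assumes a signed reading spanning ±`full_scale_g`, which is common but not universal.

```python
def proof_mass_deflection(accel_ms2, mass_kg, stiffness_n_per_m):
    """Static deflection (m) of an ideal spring-mounted mass: x = m * a / k."""
    return mass_kg * accel_ms2 / stiffness_n_per_m


def counts_to_g(raw, bits=16, full_scale_g=2.0):
    """Convert a signed ADC reading to acceleration in g.

    Assumes a two's-complement output that maps the full signed range
    onto -full_scale_g .. +full_scale_g.
    """
    half_range = 2 ** (bits - 1)
    return raw / half_range * full_scale_g


if __name__ == "__main__":
    # Hypothetical values: a 1 microgram mass on a 1 N/m spring at 1 g.
    print(proof_mass_deflection(9.81, 1e-9, 1.0))
    # Half of the positive range on a 16-bit, +/-2 g part.
    print(counts_to_g(16384))
```

In this sketch a reading of half the positive range on a ±2 g setting corresponds to 1 g, which is what a stationary sensor would report on the axis aligned with gravity.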


Sensor Fusion: LIDAR and Camera Integration by Beamagine

Beamagine’s LIDAR-camera sensor fusion technology combines the strengths of LIDAR and camera systems to deliver accurate, detailed environmental sensing. By integrating high-resolution imaging with precise distance measurement, Beamagine enhances object detection, tracking, and classification. This fusion technology is well suited to applications in autonomous vehicles, robotics, and industrial automation, providing a comprehensive solution for reliable and intelligent perception in diverse environments.
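A common first step in LIDAR-camera fusion generally is to project each LIDAR point into the camera image, so that every pixel it lands on gains a measured distance. The sketch below shows that step with a plain pinhole camera model. It is a generic illustration and makes no claim about how Beamagine's products work: the function names and intrinsics (`fx`, `fy`, `cx`, `cy`) are hypothetical, lens distortion is ignored, and the points are assumed to be already expressed in the camera's coordinate frame.

```python
import math


def project_point(point_cam, fx, fy, cx, cy):
    """Project a 3D point (camera frame, metres) to pixel coordinates.

    Returns None when the point lies behind the camera.
    """
    x, y, z = point_cam
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def fuse_lidar_with_image(points_cam, width, height, fx, fy, cx, cy):
    """Return (u, v, range_m) for each LIDAR point that lands inside the image."""
    fused = []
    for point in points_cam:
        pixel = project_point(point, fx, fy, cx, cy)
        if pixel is None:
            continue
        u, v = pixel
        if 0 <= u < width and 0 <= v < height:
            x, y, z = point
            fused.append((u, v, math.sqrt(x * x + y * y + z * z)))
    return fused


if __name__ == "__main__":
    # Hypothetical points: one in view, one behind the camera, one off to the side.
    points = [(1.0, 0.5, 10.0), (0.0, 0.0, -5.0), (100.0, 0.0, 1.0)]
    print(fuse_lidar_with_image(points, 1280, 720, 1000.0, 1000.0, 640.0, 360.0))
```

In practice this step depends on an accurate extrinsic calibration between the two sensors, since any misalignment puts distances on the wrong pixels.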


Getting the Most Out of Your Car’s Collision Avoidance System

Hey there!

Maintaining the clarity of your vehicle’s safety sensors is crucial for ensuring that all your advanced safety features operate effectively. Here’s a step-by-step guide to help you keep those sensors unobstructed.

Steps to Ensure Your Vehicle’s Safety Sensors Are Unobstructed

Regular Cleaning: Over time, your sensors can accumulate dust, mud, or snow. It’s important to clean these areas regularly, especially around the bumpers, grille, and windshield. Use a soft cloth and a gentle cleaning solution to wipe the sensors clean without causing any damage.

Inspect for Physical Damage: Look closely at your sensors to check for any physical damage such as cracks or chips. Even small damage can affect sensor performance. If you find any issues, it’s best to get them repaired by a professional to ensure they work properly.

Remove Obstructions: Sometimes, accessories like bumper stickers, decorative items, or even a poorly placed license plate can block your sensors. Make sure that these items are not obstructing the sensors. Reposition or remove anything that might be in the way.

Check Sensor Alignment: Minor bumps or collisions can knock your sensors out of alignment. Regularly check to make sure your sensors are properly aligned. If they appear to be out of place, have a mechanic adjust them to ensure they function correctly.

Stay Updated with Software: Manufacturers frequently release software updates to enhance sensor performance and fix issues. Regularly check for updates and install them to keep your vehicle’s systems running smoothly. This can help maintain the accuracy of your sensors.

Understand Warning Messages: If you see a message such as “Pre-Collision Assist Not Available Sensor Blocked”, the pre-collision assist system has been disabled because a sensor is blocked. Clean or unblock the sensor to resolve the issue and restore the system’s functionality.

Schedule Professional Maintenance: Regular check-ups with a certified mechanic can help catch issues that might not be immediately apparent. Professionals have the tools and expertise to thoroughly inspect and maintain your sensors, ensuring they are in top condition.

Conclusion

By following these steps, you can ensure that your vehicle’s safety sensors remain unobstructed and fully operational. This not only helps maintain the effectiveness of advanced safety features like collision avoidance and lane-keeping assist but also enhances your overall driving safety. If you come across the “Pre-Collision Assist Not Available Sensor Blocked” message, taking prompt action will keep your safety systems working optimally.

Drive safe and enjoy your ride!

#pre collision assist not available sensor blocked#ford fusion pre collision assist not available#pre collision assist not available sensor blocked ford explorer
