#Radar sensors


Automotive RADAR Market Is Estimated To Witness High Growth Owing To Increasing Demand for Advanced Safety Features & Growing Focus on Autonomous Vehicles

The global Automotive RADAR Market is estimated to be valued at US$ 6.61 billion in 2022 and is expected to exhibit a CAGR of 23.81% over the forecast period 2022-2030, as highlighted in a new report published by Coherent Market Insights.

Market Overview:

The Automotive RADAR (Radio Detection and Ranging) system uses radio waves to detect objects and determine their distance and velocity. It plays a crucial role in advanced driver assistance systems (ADAS) and autonomous vehicles by providing accurate and real-time information for collision avoidance, blind spot detection, lane change assist, and adaptive cruise control.

Market Dynamics:

The automotive RADAR market is being driven by two main factors. Firstly, the increasing demand for advanced safety features in vehicles is leading to the adoption of RADAR systems. Governments and regulatory bodies are mandating the inclusion of safety technologies in vehicles to reduce accidents and save lives. RADAR systems offer reliable and precise detection capabilities, making them essential for enhancing vehicular safety.

Secondly, there is a growing focus on autonomous vehicles, which rely heavily on RADAR technology for their navigation and perception systems. RADAR provides the necessary data to accurately sense the surrounding environment, detect obstacles, and enable safe navigation in complex driving scenarios. As autonomous vehicles continue to evolve, the demand for RADAR systems will witness significant growth.

Market Key Trends:

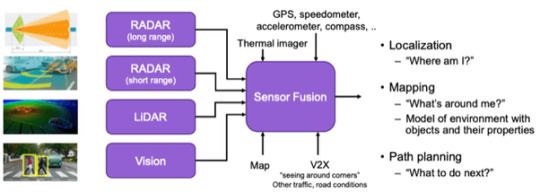

One key trend in the automotive RADAR market is the integration of RADAR systems with other sensor technologies such as lidar and cameras. This fusion of sensor data enables highly accurate object detection and localization, even in challenging weather conditions. For example, combining RADAR with lidar can provide both long-range detection and detailed 3D mapping of the surroundings, enhancing the overall perception capabilities of autonomous vehicles.

SWOT Analysis:

Strength:

- Accurate and reliable detection capabilities

- Crucial for advanced safety features and autonomous vehicles

Weakness:

- Interference from external sources such as weather conditions

- Limited perception range compared to other sensor technologies

Opportunity:

- Increasing adoption of ADAS and autonomous driving technologies

- Growing investments in research and development for advanced RADAR systems

Threats:

- Competition from alternative sensor technologies such as lidar

- Regulatory challenges and privacy concerns related to data collection

Key Takeaways:


The global Automotive RADAR market is expected to witness high growth, exhibiting a CAGR of 23.81% over the forecast period, due to increasing demand for advanced safety features and the growing focus on autonomous vehicles.


In terms of regional analysis, North America is expected to be the fastest-growing and dominating region in the automotive RADAR market. The region has a strong automotive industry and a high adoption rate of advanced technologies. The presence of major players such as Continental AG, Denso Corporation, and Autoliv Inc. further boosts the market growth in North America.


Key players operating in the global automotive RADAR market include Continental AG, Denso Corporation, Robert Bosch GmbH, Infineon Technologies AG, Valeo, NXP Semiconductors, HELLA KGaA Hueck & Co., Texas Instruments Incorporated (TI), Autoliv Inc., and ZF Friedrichshafen AG. These players are actively investing in R&D to develop innovative RADAR systems for enhanced safety and autonomous driving applications.

In conclusion, the Automotive RADAR Market is witnessing significant growth driven by the increasing demand for advanced safety features and the focus on autonomous vehicles. The integration of RADAR with other sensor technologies and advancements in RADAR systems will continue to shape the market in the coming years. With North America as the fastest-growing region and key players investing in R&D, the market is poised for further expansion.

#coherent market insights#AutomotiveandTransportation#Automotive Radar Market#Radar Technology#Radar Sensors#Automotive Radar


#market research future#radar sensors for smart city#radar sensors#smart city applications#smart city applications trends


Sensors in Autonomous Vehicles


Introduction

The entire discussion on Sensors in Autonomous Vehicles consists of one major point – will the vehicle’s brain (i.e., the computer) be able to make decisions just like a human brain does?

Now whether or not a computer can make these decisions is an altogether different topic, but it is just as important for an automotive company working on self-driving technology to provide the computer with the necessary and sufficient data to make decisions.

This is where sensors, and in particular their integration with the computing system, come into the picture.

Types of Sensors in Autonomous Vehicles:

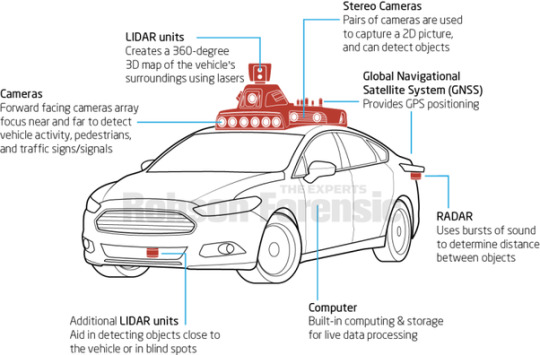

Three main types of sensors are used to map the environment around an autonomous vehicle: vision-based (cameras), radio-based (radar), and light/laser-based (LiDAR).

Each of these is explained briefly below:

Cameras

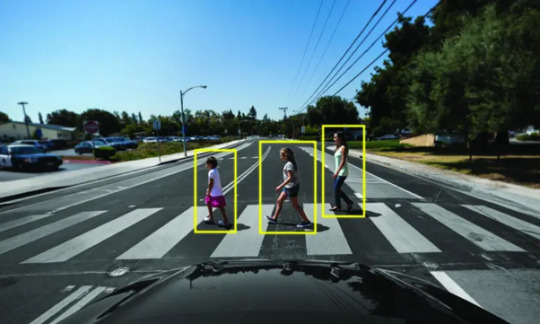

High-resolution video cameras can be installed in multiple locations around the vehicle’s body to gain a 360° view of the surroundings of the vehicle. They capture images and provide the data required to identify different objects such as traffic lights, pedestrians, and other cars on the road.

Source: How Does a Self-Driving Car See? | NVIDIA Blog

The biggest advantage of using data from high-resolution cameras is that objects can be accurately identified, and this is used to map 3D images of the vehicle’s surroundings. However, these cameras don’t perform as accurately at night or in poor weather conditions such as heavy rain or fog.

Types of vision-based sensors used:

Monocular vision sensor

Monocular vision sensors make use of a single camera to help detect pedestrians and vehicles on the road. This system relies heavily on object classification, meaning it will detect only objects that it can classify. These systems can be trained to detect and classify objects through millions of miles of simulated driving.

When it classifies an object, it compares the size of this object with the objects it has stored in memory. For example, let’s say that the system has classified a certain object as a truck.

If the system knows how big a truck appears in an image at a specific distance, it can compare the size of the new truck and calculate the distance accordingly.

A test scene with detected classified (blue bounding box) and unclassified objects (red bounding box). Source: https://www.foresightauto.com/stereo-vs-mono/

But if the system encounters an object which it is unable to classify, that object will go undetected. This is a major concern for autonomous system developers.
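The size-comparison idea described above can be sketched in a few lines. This is a minimal illustration, assuming a simple pinhole camera in which apparent height scales inversely with distance; the function name and the reference values are hypothetical, not taken from any particular system.

```python
def estimate_distance(ref_distance_m, ref_height_px, observed_height_px):
    """Estimate distance to a classified object from its apparent size.

    Assumes a pinhole camera: an object that appears ref_height_px tall
    at ref_distance_m appears half as tall at twice the distance.
    """
    if observed_height_px <= 0:
        raise ValueError("observed height must be positive")
    return ref_distance_m * ref_height_px / observed_height_px


# Hypothetical reference: a truck appears 120 px tall at 20 m.
# A truck that now appears 60 px tall is estimated to be twice as far away.
distance_m = estimate_distance(20.0, 120.0, 60.0)
```

The sketch also shows why classification matters: without a stored reference size for the object class, there is nothing to compare against.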

Stereo vision sensor

A stereo vision system consists of a dual-camera setup, which helps in accurately measuring the distance to a detected object even if it doesn’t recognize what the object is.

Since the system has two distinct lenses, it functions like a pair of human eyes and helps perceive the depth of an object.

Since both lenses capture slightly different images, the system can calculate the distance between the object and the camera based on triangulation.


Source: https://sites.tufts.edu/eeseniordesignhandbook/files/2019/05/David_Lackner_Tech_Note_V2.0.pdf
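The triangulation step for a stereo pair can be illustrated as follows. This is a sketch under the usual textbook assumptions (two identical, rectified cameras separated by a known baseline); the parameter names and numbers are illustrative only.

```python
def stereo_depth(focal_length_px, baseline_m, disparity_px):
    """Depth from stereo triangulation: Z = f * B / d.

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centres
    disparity_px:    horizontal shift of the object between both images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


# Illustrative values: 700 px focal length, 12 cm baseline, 8.4 px disparity.
depth_m = stereo_depth(700.0, 0.12, 8.4)
```

Note that the depth is computed without knowing what the object is, which is the advantage of stereo vision over a monocular setup.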

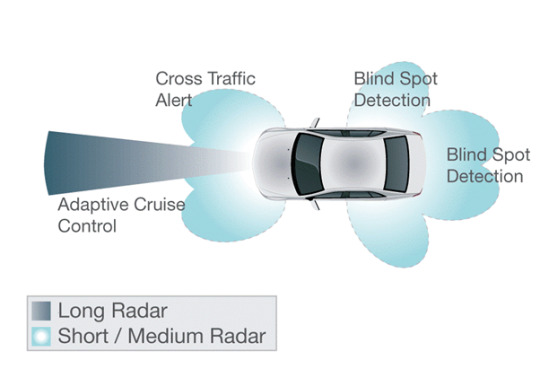

Radar Sensors in Autonomous Vehicles

Radar sensors make use of radio waves to read the environment and get an accurate reading of an object’s size, angle, and velocity.

A transmitter inside the sensor sends out radio waves. From the time these waves take to be reflected back, the sensor calculates the object’s distance from the host vehicle; the reflected signal is also used to estimate the object’s size and velocity (velocity is typically derived from the Doppler shift of the return).


Source: https://riversonicsolutions.com/self-driving-cars-expert-witness-physics-drives-the-technology/
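As a rough sketch of the radar principle, distance follows from the round-trip time of the radio wave. Radial velocity is commonly obtained from the Doppler shift of the return; that step is an addition here rather than something spelled out in the text above. The 77 GHz carrier used in the example is an assumption.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def range_from_round_trip(round_trip_s):
    """Distance to the target: the wave travels out and back, hence the /2."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def doppler_shift(radial_velocity_mps, carrier_hz):
    """Doppler shift seen by a monostatic radar: f_d = 2 * v * f_c / c."""
    return 2.0 * radial_velocity_mps * carrier_hz / SPEED_OF_LIGHT


def radial_velocity(doppler_shift_hz, carrier_hz):
    """Inverse of doppler_shift: v = f_d * c / (2 * f_c)."""
    return doppler_shift_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)


# A 1 microsecond round trip corresponds to roughly 150 m.
target_range_m = range_from_round_trip(1e-6)
# Assumed 77 GHz carrier; a target closing at 30 m/s.
shift_hz = doppler_shift(30.0, 77e9)
```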

Radar sensors have long been used in weather forecasting as well as ocean navigation. The reason for this is that they perform quite consistently across a varying set of weather conditions, which makes them more robust than vision-based sensors in this respect.

However, they are not always accurate when it comes to identifying a specific object and classifying it (which is an important step in making decisions for a self-driving car).

RADAR sensors can be classified based on their operating distance ranges:

Short-Range Radar (SRR): 0.2 to 30 m. The major advantage of short-range radar sensors is the high resolution they provide for detected objects. This is of utmost importance, as a pedestrian standing in front of a larger object may not be properly detected at low resolution.

Medium-Range Radar (MRR): 30 to 80 m.

Long-Range Radar (LRR): 80 m to more than 200 m. These sensors are most useful for Adaptive Cruise Control (ACC) and highway Automatic Emergency Braking (AEB) systems.

LiDAR sensors

LiDAR (Light Detection and Ranging) offers some advantages over vision-based and radar sensors. It transmits thousands of laser pulses, which, when reflected, give a much more accurate sense of a vehicle’s size, its distance from the host vehicle, and its other features.

Many pulses create distinct point clouds (a set of points in 3D space), which means a LiDAR sensor will give a three-dimensional view of a certain object as well.


Source: How LiDAR Fits Into the Future of Autonomous Driving
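To make the idea of a point cloud concrete, the sketch below converts individual LiDAR returns into 3D points. It assumes each return is reported as a range plus azimuth and elevation angles, and it uses an x-forward, y-left, z-up frame; real sensors differ in their conventions, so treat the names and axes as illustrative.

```python
import math


def return_to_point(range_m, azimuth_rad, elevation_rad):
    """Convert one LiDAR return (range, azimuth, elevation) to (x, y, z)."""
    horizontal = range_m * math.cos(elevation_rad)
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return (x, y, z)


def build_point_cloud(returns):
    """A point cloud is simply the collection of all converted returns."""
    return [return_to_point(r, az, el) for r, az, el in returns]


cloud = build_point_cloud([
    (10.0, 0.0, 0.0),            # straight ahead
    (10.0, math.pi / 2, 0.0),    # directly to the left
])
```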

LiDAR sensors often detect small objects with high precision, thus improving the accuracy of object identification. Moreover, LiDAR sensors can be configured to give a 360° view of the objects around the vehicle as well, thus reducing the requirement for multiple sensors of the same type.

The drawback, however, is that LiDAR sensors have a complex design and architecture, which means that integrating a LiDAR sensor into a vehicle can increase manufacturing costs multifold.

Moreover, these sensors need high computing power, which makes them difficult to integrate into a compact design.

Most LiDAR sensors use a 905 nm wavelength, which can provide accurate data up to 200 m in a restricted field of view. Some companies are also working on 1550 nm LiDAR sensors, which will have even better accuracy over a longer range.

Ultrasonic Sensors

Ultrasonic sensors are mostly used in low-speed automotive applications.

Most parking assist systems have ultrasonic sensors, as they provide an accurate reading of the distance between an obstacle and the car, irrespective of the size and shape of the obstacle.

The ultrasonic sensor consists of a transmitter-receiver setup. The transmitter sends ultrasonic sound waves and based on the time period between transmission of the wave and its reception, the distance to the obstacle is calculated.


Source: Ultrasonic Sensor working applications and advantages
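The time-of-flight calculation for an ultrasonic sensor can be sketched as below. The linear approximation for the speed of sound in air (about 331.3 m/s at 0 °C, rising by roughly 0.606 m/s per °C) is a common rule of thumb and is an assumption added here, not something stated in the text.

```python
def speed_of_sound(temperature_c=20.0):
    """Approximate speed of sound in air, in m/s, at the given temperature."""
    return 331.3 + 0.606 * temperature_c


def ultrasonic_distance(echo_time_s, temperature_c=20.0):
    """Distance to the obstacle; the pulse travels there and back."""
    return speed_of_sound(temperature_c) * echo_time_s / 2.0


# An echo received 10 ms after transmission at 20 degrees Celsius.
obstacle_m = ultrasonic_distance(0.010)
```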

Ultrasonic sensors can detect objects from a few centimetres up to about 5 m away and measure the distance accurately.

They can also detect objects at a very small distance from the vehicle, which can be extremely helpful while parking your vehicle.

Ultrasonic sensors can also be used to detect conditions around the vehicle and help with V2V (vehicle-to-vehicle) and V2I (vehicle-to-infrastructure) connectivity.

Sensor data from thousands of such connected vehicles can help in building algorithms for autonomous vehicles and offers reference data for several scenarios, conditions and locations.

Challenges in handling sensors

The main challenge in handling sensors is to get an accurate reading from the sensor while filtering out the noise. Noise means the additional vibrations or abnormalities in a signal, which may reduce the accuracy and precision of the signal.

It is important to tell the system which part of the signal is important and which needs to be ignored. Noise filtering is a set of processes that are performed to remove the noise contained within the data.

Noise Filtering

The main cause for uncertainty being generated through the use of individual sensors is the unwanted noise or interference in the environment.

Of course, any data picked up by any sensor in the world consists of the signal part (which we need) and the noise part (which we want to ignore). But the uncertainty lies in not understanding the degree of noise present in any data.

Normally, high-frequency noise can cause a lot of distortions in the measurements of the sensors. Since we want the signal to be as precise as possible, it is important to remove such high-frequency noise.

Noise filters are divided into linear (e.g., simple moving average) and non-linear (e.g., median) filters. The most commonly used noise filters are:

Low-pass filter – It passes signals with a frequency lower than a certain cut-off frequency and attenuates signals with frequencies higher than the cut-off frequency.

High-pass filter – It passes signals with a frequency higher than a certain cut-off frequency and attenuates signals with frequencies lower than the cut-off frequency.

Other common filters include the Kalman filter, Recursive Least Squares (RLS), and Least Mean Squares (LMS).
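The difference between a linear and a non-linear filter is easiest to see on a signal with a single spike. The sketch below implements a simple moving average, a median filter, and a first-order low-pass filter (exponential smoothing) in plain Python; the function names and the sample data are illustrative.

```python
from statistics import median


def moving_average(samples, window):
    """Linear filter: mean of each sliding window."""
    if window < 1 or window > len(samples):
        raise ValueError("window must be between 1 and len(samples)")
    return [sum(samples[i:i + window]) / window
            for i in range(len(samples) - window + 1)]


def median_filter(samples, window):
    """Non-linear filter: median of each sliding window."""
    if window < 1 or window > len(samples):
        raise ValueError("window must be between 1 and len(samples)")
    return [median(samples[i:i + window])
            for i in range(len(samples) - window + 1)]


def low_pass(samples, alpha):
    """First-order low-pass filter; smaller alpha means stronger smoothing."""
    filtered = []
    state = samples[0]
    for sample in samples:
        state = alpha * sample + (1.0 - alpha) * state
        filtered.append(state)
    return filtered


noisy = [1.0, 1.0, 10.0, 1.0, 1.0]   # one spike of noise
averaged = moving_average(noisy, 3)  # the spike is smeared across windows
medianed = median_filter(noisy, 3)   # the spike is rejected entirely
```

On this input the moving average spreads the spike over every window, while the median filter removes it, which is why median filters are often preferred for impulsive noise.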

Autonomous Vehicle Feature Development at Dorle Controls

At Dorle Controls, we strive to provide bespoke software development and integration solutions for autonomous vehicles as well.

This includes individual need-based application software development as well as developing the entire software stack for an autonomous vehicle. Write to [email protected] to know more about our capabilities in this domain.


Like the traditional PIR sensor, the RCWL-0516 is a widely used sensor that detects movement and is used in alarms and other security applications. It uses a “microwave Doppler radar” technique to detect moving objects within its range. Its detection range of about 7 meters makes it useful for many applications.


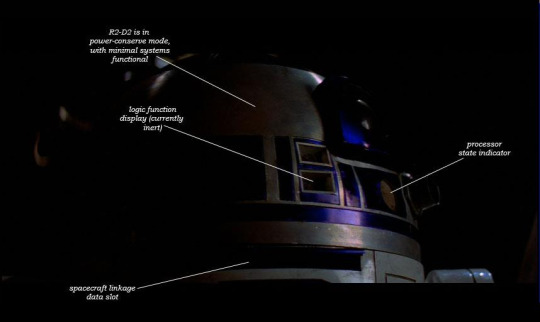

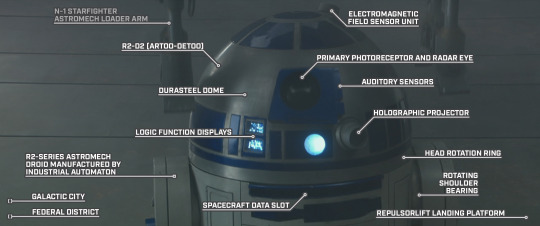

Artoo on Standby

STAR WARS EPISODE II: Attack of the Clones 00:13:03

Identified R2-D2 as being in "power-conserve mode" based on this image from the old Visual Guides feature from StarWars.com

#Star Wars#Episode II#Attack of the Clones#Coruscant#Galactic City#Federal District#Senate Apartment Complex#Senator Padmé Amidala’s apartment#Senator Padmé Amidala’s private quarters#R2-D2#power-conserve mode#primary photoreceptor#radar eye#processor state indicator#rotating balance intensifier#head rotation ring#auditory sensors#holoprojector#electromagnetic field sensor unit#spacecraft data slot#spacecraft linkage and control arms#acoustic signaller


SciTech Chronicles. . . . . . . . .April 7th, 2025

#starquakes#Kepler#Bubbles#M67#on-orbit#mobile#radar#sensors#Mid-ocean#SISMOMAR#waveform#inversion#dysprosium-titanate#pyrochlore-iridate#Q-DiP#monopole#Sunbird#Mars#fusion#Pulsar


The ESYSENSE Motion Sensor Bulb is a smart and efficient lighting solution for any home. Featuring advanced radar bulb technology, this sensor bulb detects motion instantly, providing automatic illumination when movement is detected. It works through thin walls, glass, and doors, making it more reliable than traditional motion-activated lights. Ideal for staircases, hallways, garages, and outdoor spaces, it enhances security while conserving energy by turning off when no movement is present. Easy to install in any standard socket, the ESYSENSE Motion Sensor Bulb offers hands-free convenience and peace of mind for your home lighting needs.


¿6x1?: Why does your voice change during puberty?

Why do flies rub their legs together? While we humans tend to rub our hands out of impatience or in anticipation of something good, flies have a much more practical reason for making a similar gesture. Setting aside their fondness for landing in unpleasant places such as excrement or decomposing waste, these small creatures are surprisingly meticulous…

#¿cuando llueve?#6x1#Christopher Ferguson#Como funciona un radar#densidad#despertar sabiendo#El eco#El efecto Doppler#Elementos de un radar#Entertainment Software Rating Board#ESRB#Funcionamiento del radar#l sonido de tu voz#Los videojuegos te hacen violento#moscas#nubes oscuras#Por qué cambia tu voz en la pubertad#Por qué las moscas se frotan las patas#Por qué las nubes se oscurecen antes de empezar a llover#radar#Se puede sudar estando en el agua#sensores olfativos#sudar en el agua#sudor#voz en la pubertad


#sensor fusion#radar#cybersecurity#AutonomousCars#AI#SmartMobility#ConnectedVehicles#electricvehiclesnews#evtimes#autoevtimes#evbusines#TechInnovation


Sensor Fusion

Introduction to Sensor Fusion

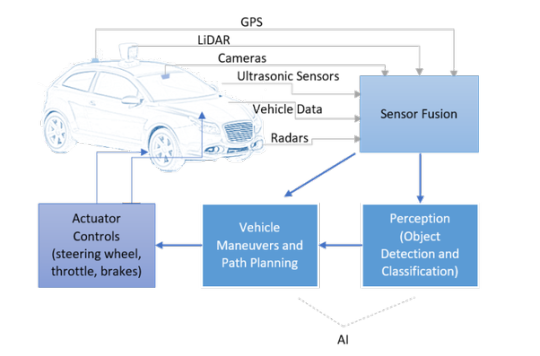

Autonomous vehicles require a number of sensors with varying parameters, ranges, and operating conditions. Cameras or vision-based sensors help in providing data for identifying certain objects on the road, but they are sensitive to weather changes.

Radar sensors perform very well in almost all types of weather but are unable to provide an accurate 3D map of the surroundings. LiDAR sensors map the surroundings of the vehicle to a high level of accuracy but are expensive.

The Need for Integrating Multiple Sensors

Thus, every sensor has a different role to play, but none of them can be used on its own in an autonomous vehicle.

If an autonomous vehicle has to make decisions similar to the human brain (or in some cases, even better than the human brain), then it needs data from multiple sources to improve accuracy and get a better understanding of the overall surroundings of the vehicle.

This is why sensor fusion becomes an essential component.


Source: The Functional Components of Autonomous Vehicles

Sensor Fusion

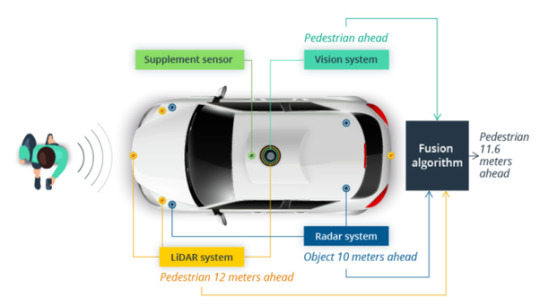

Sensor fusion essentially means taking all data from the sensors set up around the vehicle’s body and using it to make decisions.

This mainly helps in reducing the amount of uncertainty that could be prevalent through the use of individual sensors.

Thus, sensor fusion helps in taking care of the drawbacks of every sensor and building a robust sensing system.

Most of the time, in normal driving scenarios, sensor fusion brings a lot of redundancy to the system. This means that there are multiple sensors detecting the same objects.


Source: https://semiengineering.com/sensor-fusion-challenges-in-cars/

However, when one or multiple sensors fail to perform accurately, sensor fusion helps in ensuring that there are no undetected objects. For example, a camera can capture the visuals around a vehicle in ideal weather conditions.

But during dense fog or heavy rainfall, the camera won’t provide sufficient data to the system. This is where radar, and to some extent, LiDAR sensors help.

Furthermore, a radar sensor may accurately show that there is a truck in the intersection where the car is waiting at a red light.

But it may not be able to generate data from a three-dimensional point of view. This is where LiDAR is needed.

Thus, having multiple sensors detect the same object may seem unnecessary in ideal scenarios, but in edge cases such as poor weather, sensor fusion is required.

Levels of Sensor Fusion

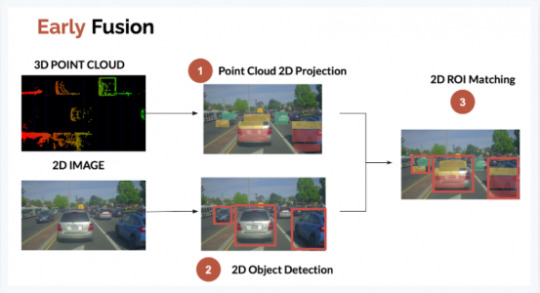

1. Low-Level Fusion (Early Fusion):

In this kind of sensor fusion method, all the data coming from all sensors is fused in a single computing unit, before we begin processing it. For example, pixels from cameras and point clouds from LiDAR sensors are fused to understand the size and shape of the object that is detected.

This method has many future applications, since it sends all the data to the computing unit. Thus, different aspects of the data can be used by different algorithms.

However, the drawback of transferring and handling such huge amounts of data is the complexity of computation. High-quality processing units are required, which will drive up the price of the hardware setup.

2. Mid-Level Fusion:

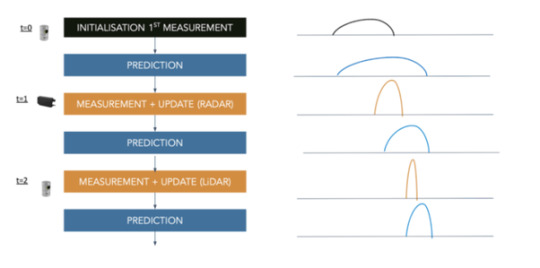

In mid-level fusion, objects are first detected by the individual sensors and then the algorithm fuses the data. Generally, a Kalman filter is used to fuse this data (which will be explained later on in this course).

The idea is to have, let’s say, a camera and a LiDAR sensor detect an obstacle individually, and then fuse the results from both to get the best estimates of the position, class, and velocity of the obstacle.

This is an easier process to implement, but there is a chance of the fusion process failing in case of sensor failure.

3. High-Level Fusion (Late Fusion):

This is similar to the mid-level method, except that we implement detection as well as tracking algorithms for each individual sensor, and then fuse the results.

The problem, however, would be that if the tracking for one sensor has some errors, then the entire fusion may get affected.
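For the mid-level case described above, the fusion step needs two things: deciding which detections from the two sensors refer to the same object, and combining their estimates. The sketch below uses a greedy nearest-neighbour pairing with a distance gate and an inverse-variance weighted average as a simplified stand-in for a Kalman-filter update. All names, the 2 m gate, and the variances are assumptions made for illustration.

```python
import math


def associate(camera_objs, lidar_objs, gate_m=2.0):
    """Pair each camera detection with the nearest unused LiDAR detection.

    Detections are (x, y) positions in a shared vehicle frame. Pairs farther
    apart than gate_m are rejected.
    """
    pairs = []
    unused = set(range(len(lidar_objs)))
    for i, (cx, cy) in enumerate(camera_objs):
        best, best_dist = None, gate_m
        for j in unused:
            lx, ly = lidar_objs[j]
            dist = math.hypot(cx - lx, cy - ly)
            if dist <= best_dist:
                best, best_dist = j, dist
        if best is not None:
            unused.remove(best)
            pairs.append((i, best))
    return pairs


def fuse_position(cam_xy, lidar_xy, cam_var, lidar_var):
    """Inverse-variance weighted average: the less noisy sensor counts more."""
    w_cam, w_lidar = 1.0 / cam_var, 1.0 / lidar_var
    total = w_cam + w_lidar
    return tuple((w_cam * c + w_lidar * l) / total
                 for c, l in zip(cam_xy, lidar_xy))


camera = [(10.0, 0.0), (30.0, 5.0)]
lidar = [(30.5, 5.0), (10.2, 0.1), (80.0, 0.0)]
matches = associate(camera, lidar)
fused = fuse_position(camera[0], lidar[1], cam_var=1.0, lidar_var=0.25)
```

The third LiDAR detection has no camera counterpart and stays unmatched, which is the kind of case where a production system would need an explicit policy for single-sensor objects.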


Source: Autonomous Vehicles: The Data Problem

Sensor fusion can also be of different types. Competitive sensor fusion consists of having multiple types of sensors generating data about the same object to ensure consistency.

Complementary sensor fusion will use two sensors to paint an extended picture, something that neither of the sensors could manage individually.

Coordinated sensor fusion will improve the quality of the data. For example, taking two different perspectives of a 2D object to generate a 3D view of the same object.

Variation in the approach of sensor fusion

Radar-LiDAR Fusion

Since there are a number of sensors that work in different ways, there is no single solution to sensor fusion. If a LiDAR and a radar sensor have to be fused, then the mid-level sensor fusion approach can be used. This consists of fusing the detected objects and then making decisions.


Source: The Way of Data: How Sensor Fusion and Data Compression Empower Autonomous Driving - Intellias

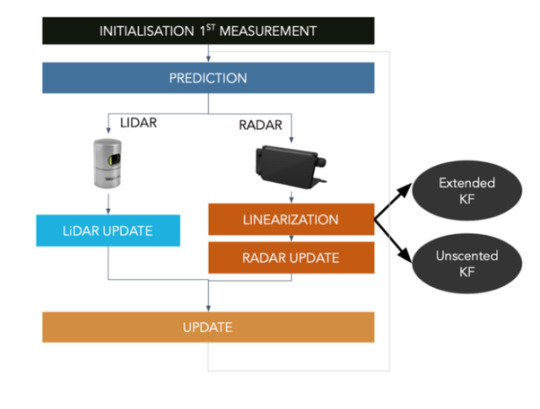

In this approach, a Kalman filter can be used. This consists of a “predict-and-update” method, where based on the current measurement and the last prediction, the predictive model gets updated to provide a better result in the next iteration. An easier understanding of this is shown in the following image.


Source: Sensor Fusion
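The predict-and-update cycle can be shown with a one-dimensional Kalman filter. This is a minimal sketch that tracks a single scalar (for example, the distance to a lead vehicle) with made-up noise values; a real tracker would use a multi-dimensional state and matrices.

```python
def kalman_predict(estimate, variance, process_var, motion=0.0):
    """Predict step: move the estimate and grow its uncertainty."""
    return estimate + motion, variance + process_var


def kalman_update(estimate, variance, measurement, meas_var):
    """Update step: blend prediction and measurement by their uncertainty."""
    gain = variance / (variance + meas_var)  # Kalman gain, between 0 and 1
    new_estimate = estimate + gain * (measurement - estimate)
    new_variance = (1.0 - gain) * variance
    return new_estimate, new_variance


# Start uncertain, then alternate update and predict over three measurements.
x, p = 0.0, 1.0
for z in [1.0, 2.1, 2.9]:
    x, p = kalman_update(x, p, z, meas_var=1.0)
    x, p = kalman_predict(x, p, process_var=0.1, motion=1.0)
```

Each update shrinks the variance and each prediction grows it again, so the gain settles at a balance between trusting the model and trusting the sensor.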

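The issue with radar-LiDAR sensor fusion is that the two sensors report data in different forms. A radar typically measures in polar coordinates (range, bearing, and range rate), which relate non-linearly to a Cartesian position-and-velocity state, while LiDAR position data relates to that state linearly. Hence, the radar measurement model has to be linearized before its data can be fused with the LiDAR data and the model updated accordingly.

An extended Kalman filter handles this by linearizing the radar measurement model around the current estimate. An unscented Kalman filter can also be used; instead of linearizing, it propagates a set of sample points through the non-linear model.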


Source: Sensor Fusion
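The linearization step used by an extended Kalman filter can be sketched as follows. It assumes the common textbook setup in which the state is Cartesian (px, py, vx, vy) and the radar reports range, bearing, and range rate; this setup is an assumption and is not described in the original text.

```python
import math


def radar_measurement(state):
    """Non-linear map from Cartesian state to (range, bearing, range rate)."""
    px, py, vx, vy = state
    rho = math.hypot(px, py)
    if rho < 1e-9:
        raise ValueError("target at sensor origin: bearing is undefined")
    phi = math.atan2(py, px)
    rho_dot = (px * vx + py * vy) / rho
    return (rho, phi, rho_dot)


def radar_jacobian(state):
    """Partial derivatives of radar_measurement, evaluated at `state`.

    This 3x4 matrix is the local linear approximation the EKF uses in place
    of the non-linear measurement function.
    """
    px, py, vx, vy = state
    rho2 = px * px + py * py
    if rho2 < 1e-18:
        raise ValueError("Jacobian is undefined at the sensor origin")
    rho = math.sqrt(rho2)
    rho3 = rho2 * rho
    return [
        [px / rho, py / rho, 0.0, 0.0],
        [-py / rho2, px / rho2, 0.0, 0.0],
        [py * (vx * py - vy * px) / rho3,
         px * (vy * px - vx * py) / rho3,
         px / rho, py / rho],
    ]


z = radar_measurement((3.0, 4.0, 1.0, 0.0))
H = radar_jacobian((3.0, 4.0, 1.0, 0.0))
```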

Camera-LiDAR Fusion

Now, if a system needs the fusion of camera and LiDAR sensor, then low-level (fusing raw data), as well as high-level (fusing objects and their positions) fusion, can be used. The results in both cases vary slightly.

The low-level fusion consists of overlapping the data from both sensors and using the ROI (region of interest) matching approach.

The 3D point cloud data of objects from the LiDAR sensor is projected on a 2D plane, while the images captured by the camera are used to detect the objects in front of the vehicle.

These two maps are then superimposed to check the common regions. These common regions signify the same object detected by two different sensors.
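The projection and ROI-matching steps can be sketched like this. The example assumes the LiDAR points have already been transformed into the camera frame (x right, y down, z forward) and that the camera follows a pinhole model; the intrinsics and the bounding box are made-up values.

```python
def project_to_image(point_xyz, fx, fy, cx, cy):
    """Project a 3D point in the camera frame onto the 2D image plane."""
    x, y, z = point_xyz
    if z <= 0:
        return None  # behind the camera, not visible
    return (fx * x / z + cx, fy * y / z + cy)


def points_in_roi(points_xyz, roi, fx, fy, cx, cy):
    """Keep the LiDAR points whose projection falls inside a camera ROI.

    roi is (u_min, v_min, u_max, v_max) in pixels, e.g. a detected
    bounding box from the camera's object detector.
    """
    u_min, v_min, u_max, v_max = roi
    hits = []
    for point in points_xyz:
        uv = project_to_image(point, fx, fy, cx, cy)
        if uv is not None and u_min <= uv[0] <= u_max and v_min <= uv[1] <= v_max:
            hits.append(point)
    return hits


points = [(1.0, 0.5, 10.0), (-5.0, 0.0, 10.0), (0.0, 0.0, -1.0)]
box = (700.0, 400.0, 800.0, 450.0)
matched = points_in_roi(points, box, fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
```

The points that land inside the box give the detected object a measured depth, which the camera alone cannot provide.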

For the high-level fusion, data first gets processed, and then 2D data from the camera undergoes conversion to 3D object detection.

This data is then compared with the 3D object detection data from the LiDAR sensor, and the intersecting regions of the two sensors give the output (IoU matching).


Source: LiDAR and Camera Sensor Fusion in Self-Driving Cars
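Intersection-over-union matching, mentioned above for high-level fusion, can be illustrated with axis-aligned 2D boxes. The text describes 3D detections; the 2D case below is a deliberate simplification, and the 0.5 threshold in the usage note is an assumed value.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = ((ax2 - ax1) * (ay2 - ay1)
             + (bx2 - bx1) * (by2 - by1)
             - intersection)
    return intersection / union if union > 0 else 0.0


camera_box = (0.0, 0.0, 2.0, 2.0)
lidar_box = (1.0, 1.0, 3.0, 3.0)
overlap = iou(camera_box, lidar_box)
```

Two detections would then be treated as the same object when their IoU exceeds a chosen threshold, for example 0.5.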

Thus, the combination of sensors has a bearing on which approach of sensor fusion needs to be used. Sensors play a massive role in providing the computer with an adequate amount of data to make the right decisions.

Furthermore, sensor fusion also allows the computer to “have a second look” at the data and filter out the noise while improving accuracy.

Autonomous Vehicle Feature Development at Dorle Controls

At Dorle Controls, developing sensor and actuator drivers is one of the many things we do. Be it bespoke feature development or full-stack software development, we can assist you.

For more info on our capabilities and how we can assist you with your software control needs, please write to [email protected].


#adas calibration#adas camera calibration#mobile adas calibration#steering angle sensor coding#radar control module programming


Sensor Mart: Your Trusted Provider of Innosent's Radar Systems

In today’s rapidly evolving technological landscape, radar systems play a critical role in numerous industries, from automotive and security to industrial automation and beyond. Sensor Mart, a leading provider of Innosent's state-of-the-art radar systems, stands at the forefront of delivering innovative solutions to meet diverse industry needs. With a commitment to quality and cutting-edge technology, Sensor Mart is redefining how businesses leverage radar systems for enhanced performance and efficiency.

About Sensor Mart and Innosent's Radar Systems

Sensor Mart partners with Innosent, a globally recognized leader in radar system development, to provide high-quality solutions tailored to modern challenges. Innosent’s radar technology is known for its precision, reliability, and versatility, making it an ideal choice for applications across industries such as automotive, surveillance, and industrial monitoring.

By combining Innosent’s expertise with Sensor Mart’s commitment to customer satisfaction, businesses gain access to top-tier radar systems designed to meet the highest standards of functionality and innovation.

Key Features of Innosent Radar Systems

High Accuracy and Precision: Innosent’s radar systems are renowned for their unparalleled accuracy, capable of detecting and measuring minute movements and objects in challenging environments. This precision makes them suitable for applications requiring exact measurements, such as traffic monitoring and industrial automation.

Advanced Technology: Innosent integrates cutting-edge radar technology, including Frequency Modulated Continuous Wave (FMCW) and Doppler radar, into its systems. These technologies enable robust performance in detecting speed, distance, and object movement, even in low-visibility conditions.

Versatility and Scalability: Whether it’s automotive safety systems, smart home applications, or security systems, Innosent radar modules are versatile enough to adapt to various use cases. Additionally, they are scalable to meet specific project requirements, making them a cost-effective solution.

Energy Efficiency: Designed with energy-saving features, Innosent radar systems ensure optimal performance without excessive power consumption, making them suitable for sustainable applications.

Durability and Reliability: Engineered for longevity, these radar systems perform consistently in extreme environmental conditions, ensuring reliability in critical operations.
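As background on the FMCW technique named in the feature list, the sketch below shows how a linear-chirp FMCW radar relates target range to the measured beat frequency. It is a generic textbook relation, not a description of any Innosent product, and the chirp bandwidth and duration are assumed values.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def beat_frequency(range_m, bandwidth_hz, chirp_s):
    """Beat frequency for a stationary target: f_b = 2 * R * B / (c * T)."""
    return 2.0 * range_m * bandwidth_hz / (SPEED_OF_LIGHT * chirp_s)


def fmcw_range(beat_hz, bandwidth_hz, chirp_s):
    """Invert the relation above: R = c * f_b * T / (2 * B)."""
    return SPEED_OF_LIGHT * beat_hz * chirp_s / (2.0 * bandwidth_hz)


# Assumed chirp: 300 MHz swept in 1 ms; target at 50 m.
f_b = beat_frequency(50.0, 300e6, 1e-3)
recovered_m = fmcw_range(f_b, 300e6, 1e-3)
```

A wider sweep bandwidth produces a larger beat frequency per metre, which is why bandwidth determines how finely an FMCW radar can resolve range.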

Applications of Innosent Radar Systems

Automotive Industry: Innosent radar systems are integral to advanced driver assistance systems (ADAS), ensuring enhanced safety by detecting nearby objects, monitoring blind spots, and assisting in adaptive cruise control.

Security and Surveillance: These radar systems are widely used in perimeter protection, motion detection, and intrusion monitoring. Their ability to detect movements with precision makes them an essential component of modern security systems.

Industrial Automation: In industrial settings, radar systems facilitate the monitoring of machinery and processes, enabling predictive maintenance and reducing downtime.

Smart Home and IoT Devices: Innosent radar technology is also utilized in smart home devices for motion sensing, enhancing convenience and security for users.

Traffic Management: Radar systems play a vital role in monitoring traffic flow, detecting speeding vehicles, and ensuring road safety in urban and highway settings.

Why Choose Sensor Mart?

Trusted Partnership with Innosent: Sensor Mart’s collaboration with Innosent ensures that clients receive access to top-quality radar systems backed by years of expertise and innovation.

Tailored Solutions: Sensor Mart understands that every business has unique needs. We offer customized radar system solutions to align with specific operational goals and challenges.

Exceptional Customer Support: From consultation to after-sales service, Sensor Mart provides comprehensive support to ensure that customers maximize the value of their radar systems.

Commitment to Innovation: Sensor Mart stays ahead of industry trends, continuously adopting the latest radar technologies to deliver cutting-edge solutions that drive efficiency and productivity.

Empowering Industries with Sensor Mart

Sensor Mart is more than just a provider; it is a partner in innovation. By offering Innosent’s radar systems, Sensor Mart empowers businesses across industries to harness the power of advanced technology for improved safety, performance, and operational excellence.

Whether you’re looking to implement radar systems in automotive applications, enhance security systems, or optimize industrial processes, Sensor Mart has the expertise and solutions to meet your needs.

Conclusion

Innosent's radar systems, available through Sensor Mart, represent the pinnacle of precision and reliability in radar technology. With a focus on innovation, durability, and customer satisfaction, Sensor Mart is the trusted choice for businesses seeking advanced radar solutions.

Connect with Sensor Mart today to explore how Innosent’s radar systems can revolutionize your operations and provide a competitive edge in your industry.


Constelli - A Signal Processing Company in Defense and Aerospace

Constelli is a signal processing company solving problems in Defense and Aerospace fields.

#signal generator#radar system#signal processing#radar technology#radar application#radar signal#signal and processing#radar range#radar signal processing#radar simulator#radar transmitter#radar receiver#radar design#radar equipment#radar testing#applications of signal processing#radar communication#radar communication system#dynamic signal analyzers#radar target simulator#Scenario Simulation#Modelling & Simulation#Signal Processing company in Hyderabad#Radar & EW Sensor Testing#Digital Signal Processing#Ansys STK AGI


Radar Sensors for Smart City Applications: Enhancing Urban Infrastructure and Safety

Radar sensors are emerging as a key technology for smart city applications, providing accurate and reliable data to enhance urban infrastructure and safety. These sensors offer a wide range of capabilities, including traffic monitoring, pedestrian detection, and environmental analysis, all of which contribute to the development of smarter, safer cities. Radar sensors can operate in diverse weather conditions and are capable of detecting objects even in low visibility, making them indispensable for real-time urban monitoring.

Radar-based solutions allow cities to automate traffic management systems, improve public safety, and optimize resource utilization. From monitoring vehicle speeds to tracking the flow of pedestrians, radar sensors are transforming the way cities operate, enabling a data-driven approach to city planning and public services.

The Radar Sensors for Smart City Applications Market was valued at USD 30.58 billion in 2023 and is projected to reach USD 136.71 billion by 2032, registering a CAGR of 18.1% during the forecast period from 2024 to 2032.
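The quoted growth rate can be checked against the two market values with the standard compound annual growth rate formula. The sketch assumes nine compounding years between the 2023 base value and the 2032 projection.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# USD 30.58 billion in 2023 growing to USD 136.71 billion by 2032.
rate = cagr(30.58, 136.71, 9)
rate_percent = round(rate * 100, 1)
```

Under that assumption the result rounds to the 18.1% stated in the report summary.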

Future Scope

The future of radar sensors in smart cities is set to expand significantly with the integration of 5G and the Internet of Things (IoT). As cities adopt more IoT-based infrastructure, radar sensors will play a critical role in enabling communication between connected devices, improving the accuracy and speed of data collection. Enhanced radar technology will also drive advancements in autonomous vehicles, smart traffic lights, and automated public transport systems, providing real-time information for efficient urban mobility.

Trends

Key trends in radar sensor development include the miniaturization of sensors for easy integration into urban infrastructure, as well as the adoption of multi-function radar systems. These systems are capable of performing multiple tasks, such as traffic monitoring and environmental scanning, using a single device. Additionally, there is an increased focus on improving energy efficiency and reducing costs, making radar sensors more accessible for widespread deployment.

Applications

Radar sensors are used in a variety of smart city applications, including traffic control systems, street lighting, and security surveillance. They enable accurate detection of vehicles, cyclists, and pedestrians, providing vital data for managing city traffic and ensuring public safety. In public transportation systems, radar sensors help monitor buses and trains, improving scheduling and reducing congestion.

Solutions and Services

Providers of radar sensor technology offer customized solutions designed for specific smart city applications. These services include installation, system integration, and ongoing support, ensuring that the sensors work seamlessly with existing urban infrastructure. Many solutions are designed to be scalable, allowing cities to expand their smart systems as needed.

Key Points

Radar sensors provide real-time data for traffic management and public safety.

The future of radar sensors includes integration with 5G and IoT networks.

Miniaturized, multi-function radar sensors are a key trend in the market.

Applications include traffic control, street lighting, and security surveillance.

Scalable solutions allow for easy expansion of smart city systems.


Artoo-Detoo

STAR WARS EPISODE II: Attack of the Clones 00:03:07

#Star Wars#Episode II#Attack of the Clones#Coruscant#Galactic City#Federal District#unidentified N-1 starfighter#astromech loader arm#R2-D2#durasteel#logic function display#astromech droid#Industrial Automaton#spacecraft data slot#repulsorlift landing platform#rotating shoulder bearing#head rotation ring#holographic projector#auditory sensor#primary photoreceptor#radar eye#electromagnetic field sensor unit
