#UnderSampling


Text

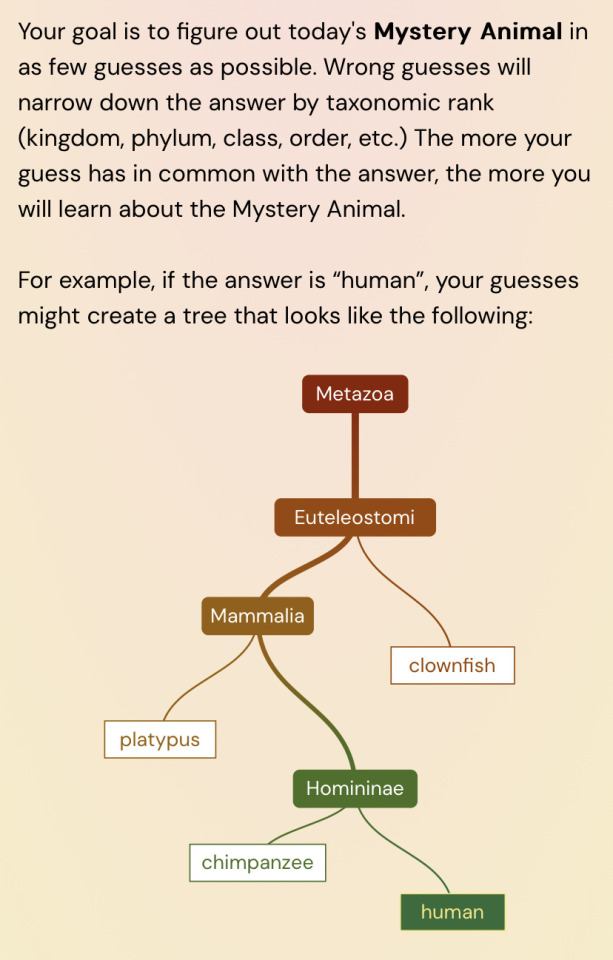

I practiced a bunch of times today and yeah, it’s very biased towards vertebrates in general and mammals in particular. Was still a lot of fun though!

guys i just found out about this site that does a daily guessing game, it’s phylogenetic wordle- so fun!!!

#in terms of frequency it was roughly mammals > winged insects = birds > ray-finned fish > everything else#(i was expecting inverts to be undersampled but was pretty surprised at how rare reptiles were)

30K notes


Text

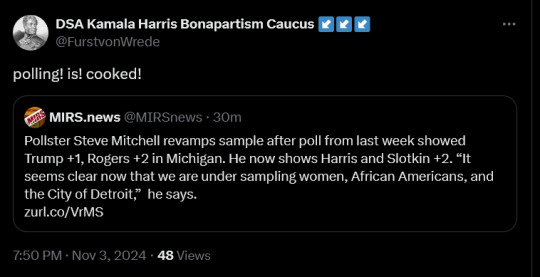

"It seems clear we're undersampling/undercounting these groups that usually at least trend/lean Democratic - oops!"

125 notes


Text

a poem about amorphous things

this poem, along with four others and five by the beautiful mineral magnesium oxide, are up for PWYW on my itch. plaintext under the cut:

I am vat-born and decanted into my new body. I am holy, neutral, features soft and melting into clay injection molds. It took one thousand frantic iterations to persuade myself into this solid state. Born on a winter’s parasite eve.

My first unsteady steps across the tiles trail my crimson clues. I transform myself across the floor. Through every doorway. Trademarks and copyrights for third-party games and characters are chances to live a bigger life.

Half of you became dulled when the earth produced a new American naturalist, when God gave me a few more stolen inches of undersampled skin. When I chew up the day and spit it out I give no credit to the culture or the flame.

182 notes


Text

had a premium (/s) experience yesterday where I saw some older family friends at a cafe and they asked me about what research I was doing and after info-dumping at them for like 15 minutes about GANs and the potential for MASSIVELY expediting MRI scans in conjunction with k-space undersampling I remembered that when some folks ask you to tell them what you're doing they do not actually want to know 😔 I am spoilt by friends and family who let me joyously blather on about this stuff and even though they don't understand it and don't share my interest, they just like to listen to me gabble. If you ever do this for A Neurodiverse (or anyone!!!) in your irl life, please know that they cherish and appreciate you more than words can say. And every infodump is a declaration of trust and affection.

50 notes


Text

Are your kids "tracking"? Know the jargon!

IMO - Imitating Moog Oscillators

BRB - Building Repetitive Breakcore

WTF - Wide Threshold Filters

STFU - Sinusoids That Frequently Undersample

GLHF - Good-Looking Hexadecimal Formats

29 notes


Text

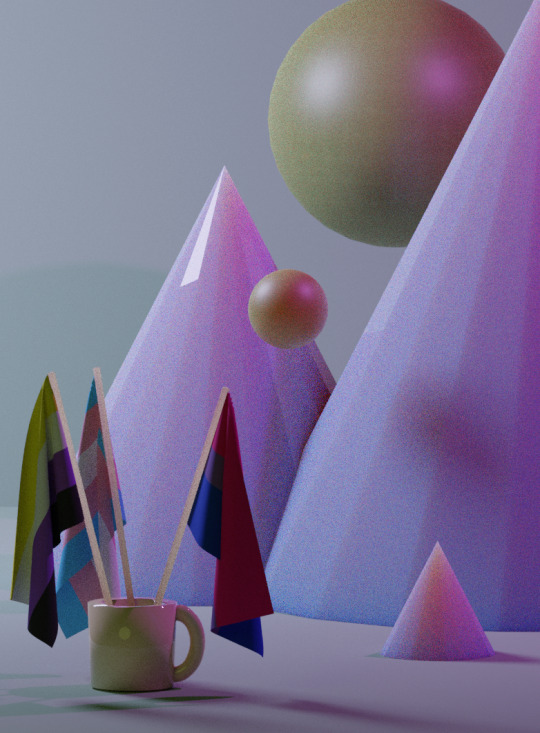

starting to get the hang of blender

heres todays uuhhmm lets call it a doodle

i like how undersampled raytracing looks kinda like screenprinting :3

#my art#shapes and colors#some artist i really like does illustrations with a 3d software and then uses very good colors and very cartoony shaders. i wanna try that

17 notes


Text

10 Best Practices for Digital Tensile Testing

Source of Info: https://www.perfectgroupindia.co.in/10-best-practices-for-digital-tensile-testing.php

One of the fundamental techniques used in material analysis to assess the mechanical characteristics of different materials is digital tensile testing. It yields very useful data regarding tensile strength, elasticity, ductility, and other properties for a wide range of materials, including metals, polymers, textiles, and composites. It is crucial to comprehend the best practices in order to maximize the effectiveness of this potent method. The following list of the top ten digital tensile testing best practices will assist you in optimizing your material analysis workflow and discovering fresh avenues for material characterization.

1. Calibration Confidence

Make sure your testing apparatus is calibrated accurately and up to date to start with a strong base. Frequent calibration checks provide accurate and dependable results, boosting the confidence you have in your testing procedure.

2. Sample Selection Savvy

Select your samples carefully, taking into account surface condition, size, shape, and homogeneity of the material. Properly prepared samples minimize variability and improve data integrity by making test results consistent and repeatable.

3. Gripping Guidance

Proper sample gripping is essential for precise and representative test results. Choose grips that are appropriate for the shape and type of material you are using to ensure even stress distribution and avoid premature sample failure.

4. Strain Sensibility

Optimize strain measurement accuracy by selecting an appropriate extensometer and ensuring proper installation and alignment. Precise strain control enables detailed analysis of material behavior under various loading conditions.

5. Speed Selection

To properly capture important material responses, strike the right balance between the speed of the test and the rate at which data is acquired. Adjust testing parameters based on the deformation properties of your material to prevent over- or undersampling of the data.

6. Temperature Tact

Consider the effects of temperature on the properties of the materials by conducting tests in controlled environments. Temperature baths or chambers allow for the assessment of both mechanical and thermal properties while preserving the integrity of the specimen.

7. Data Delve

Use the software's features to monitor tests in real time and to process post-test data as you analyze your results in depth. To fully understand the performance of a material, extract information from fracture analysis, stress-strain curves, and modulus calculations.

8. Safety Spotlight

Give priority to safety procedures so that both people and equipment are protected during testing operations. To reduce potential hazards, put in place the necessary safety measures, such as personnel training, emergency stop mechanisms, and machine guarding.

9. Standard Compliance

Adhere to relevant industry standards and testing protocols to ensure consistency and comparability of results. Familiarize yourself with ASTM, ISO, or other applicable standards, and follow prescribed procedures meticulously to maintain credibility and reliability in your testing endeavors.

10. Continuous Improvement

Embrace a culture of continuous improvement by soliciting feedback, conducting regular audits, and staying abreast of technological advancements in digital tensile testing. Strive for excellence in every aspect of your testing process, driving innovation and pushing the boundaries of material analysis.
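As a rough illustration of the trade-off described under Speed Selection, the sketch below estimates how many data points are logged before a specimen breaks. The function name, the specimen values, and the simplification that all crosshead travel turns into gauge-length strain are assumptions made for this example, not values from any standard.

```python
def points_before_break(gauge_length_mm, strain_at_break, speed_mm_per_min, rate_hz):
    """Estimate test duration (s) and logged points for a constant crosshead speed.

    Assumes all crosshead travel becomes strain in the gauge length
    (machine compliance and grip slip are ignored).
    """
    extension_mm = gauge_length_mm * strain_at_break      # travel needed to reach failure
    duration_s = extension_mm / speed_mm_per_min * 60.0   # minutes -> seconds
    return duration_s, round(duration_s * rate_hz)


# Hypothetical brittle specimen: 50 mm gauge length, fails at 2 % strain, pulled at 5 mm/min.
for rate_hz in (1.0, 10.0, 100.0):
    duration_s, n_points = points_before_break(50.0, 0.02, 5.0, rate_hz)
    print(f"{rate_hz:>5.0f} Hz -> {duration_s:.0f} s, {n_points} points")
```

A test that lasts only a few seconds at a low acquisition rate leaves a handful of points on the elastic slope (undersampling), while a long test on a ductile material at a very high rate mostly produces redundant data (oversampling).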

Conclusion

In conclusion, you can fully realize the potential of this adaptable method for material characterization by learning the best practices for digital tensile testing. You may improve the precision, dependability, and effectiveness of your testing processes by following calibration guidelines, making the most of sample preparation, and utilizing cutting-edge data analysis technologies. As you proceed with your material analysis journey, adopt these best practices as a guide to help you make ground-breaking discoveries and breakthroughs in the fields of materials science and engineering.

Build an environment of ongoing learning and development within your company by working together with colleagues and industry experts to share best practices and insights. In materials science and engineering, you can lead significant breakthroughs and stay ahead of the curve by adopting a proactive and forward-thinking approach, which will ultimately shape the field's future.

Frequently Asked Questions (FAQs)

Q: What is digital tensile testing?

Digital tensile testing is a method used to evaluate the mechanical properties of materials by applying tension until the material breaks.

Q: Why is digital tensile testing important?

Understanding a material's strength, elasticity, and ductility is essential for a variety of industries, including manufacturing, construction, and aerospace. Digital tensile testing offers valuable insights into these properties.

Q: How often should calibration checks be performed?

As advised by the manufacturer, calibration checks should be carried out on a regular basis: typically at least once a year, or whenever there is a substantial change in the testing environment.

Q: What types of materials can be tested using digital tensile testing?

Metals, polymers, textiles, and composites are just a few of the materials that can be tested using digital tensile testing.

Q: How can I improve the accuracy of my digital tensile testing results?

To improve accuracy, ensure proper sample preparation, select appropriate testing parameters, and adhere to relevant industry standards and protocols.

0 notes


Text

Weekly Review, 28 March 2025

Some interesting links that I Tweeted about in the last week (I also post these on Mastodon, Threads, Newsmast, and Bluesky):

IT infrastructure needs to be reworked to optimise AI performance: https://www.informationweek.com/machine-learning-ai/breaking-through-the-ai-bottlenecks

AI chatbots continue to impersonate dead teenagers: https://arstechnica.com/tech-policy/2025/03/mom-horrified-by-character-ai-chatbots-posing-as-son-who-died-by-suicide/

Where AI really shines in software development: https://www.datasciencecentral.com/the-future-of-code-review-is-in-balance-human-and-ai/

Submitting AI-generated research papers without telling the recipients that they are AI-generated is quite unethical: https://techcrunch.com/2025/03/19/academics-accuse-ai-startups-of-co-opting-peer-review-for-publicity/

This raises an interesting question, one that's sure to keep lawyers busy for the next few years: if an AI defames a person, who is liable for it? https://arstechnica.com/tech-policy/2025/03/chatgpt-falsely-claimed-a-dad-murdered-his-own-kids-complaint-says/

An AI based weather forecasting system that is much faster than traditional approaches: https://www.theguardian.com/technology/2025/mar/20/ai-aardvark-weather-prediction-forecasting-artificial-intelligence

Script writers keeping their creations offline to stop AI grabbing them: https://www.theverge.com/news/632613/andor-tony-gilroy-ai-star-wars-training-copyright

AI can now create funnier memes than humans: https://arstechnica.com/ai/2025/03/ai-beats-humans-at-meme-humor-but-the-best-joke-is-still-human-made/

Another method of scaling-up AI. But how useful is it really? https://techcrunch.com/2025/03/19/researchers-say-theyve-discovered-a-new-method-of-scaling-up-ai-but-theres-reason-to-be-skeptical/

A method for extracting the hidden objectives of an AI: https://arstechnica.com/ai/2025/03/researchers-astonished-by-tools-apparent-success-at-revealing-ais-hidden-motives/

Laws take a long time to write, so it makes sense that they should try to anticipate future threats from AI: https://techcrunch.com/2025/03/19/group-co-led-by-fei-fei-li-suggests-that-ai-safety-laws-should-anticipate-future-risks/

How to use AI in your job hunting: https://www.informationweek.com/it-leadership/8-ways-generative-ai-can-help-you-land-a-new-job-after-a-layoff

AI nurses are making their way into hospitals, but real nurses don't trust them: https://www.stuff.co.nz/world-news/360617352/ai-nurses-reshape-us-hospital-care-human-nurses-are-pushing-back

I wouldn't trust Trump with a box of crayons, let alone a law to force removal of AI generated content: https://www.theverge.com/decoder-podcast-with-nilay-patel/627868/take-it-down-act-weapon-trump-ncii-deepfakes

Business efficiency boosts from using AI: https://www.kdnuggets.com/how-real-companies-are-using-ai-to-boost-efficiency

AI models need data for training, but their crawlers are hammering websites to get it: https://www.theregister.com/2025/03/18/ai_crawlers_sourcehut/

AI generated works cannot be copyrighted: https://arstechnica.com/tech-policy/2025/03/judge-disses-star-trek-icon-datas-poetry-while-ruling-ai-cant-author-works/

Experts disagree on when, if ever, AI will reach human-level intelligence: https://techcrunch.com/2025/03/19/the-ai-leaders-bringing-the-agi-debate-down-to-earth/

The impact of AI on the music industry: https://www.informationweek.com/machine-learning-ai/how-ai-is-transforming-the-music-industry

The advances in physical AI, that is, AI that interacts directly with the real world: https://www.bigdatawire.com/2025/03/20/the-rise-of-intelligent-machines-nvidia-accelerates-physical-ai-progress/

An AI model of buildings, used to assist in evaluating renewable electricity installations: https://techcrunch.com/2025/03/17/palmetto-wants-software-developers-to-electrify-america-using-its-ai-building-models/

Imbalanced data sets can seriously bias the performance of AI. Oversampling and undersampling are two ways to deal with these datasets: https://www.datasciencecentral.com/exploring-oversampling-and-under-sampling-core-techniques-for-balancing-imbalanced-datasets-in-ml/

AI models reflect the data they are trained on. Since Chinese-language forums are censored much more heavily, we would expect less free responses from AI when it is questioned in Chinese: https://techcrunch.com/2025/03/20/ais-answers-on-china-differ-depending-on-the-language-analysis-finds/

AI systems are getting better at doing regular work tasks: https://dataconomy.com/2025/03/19/ai-is-learning-to-work-like-you-and-its-getting-faster-every-day/

0 notes

Text

At TED

Sebastien de Halleux: Wind-powered sailing drones are changing how we think about the ocean

(For more detail, please follow the link above.)

Carbon dioxide is only one of the greenhouse gases that cause global warming.

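The ocean is an unexplored place that has barely been surveyed.

Even today, we know more about other planets than about our own. How can we get to know this vast and vital ecosystem better?

Explorer Sebastien de Halleux explains how a new fleet of wind- and solar-powered drones is collecting ocean data in unprecedented detail.

That data offers clues about the state of global weather and fish stocks. Understanding the ocean better should also help our lives on land.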

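We know more about other planets than we do about Earth.

So today I want to introduce a new type of robot, built to help us learn more about our own planet. In oceanography, it belongs to a category called unmanned surface vehicles, or USVs.

This robot needs no fuel. It is propelled by the wind instead. Even so, it can sail around the world for months at a time.

Why did we build this robot?

Let me explain what it means.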

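A few years ago, I was crossing from San Francisco to Hawaii on a sailboat. For the previous 10 years I had worked nonstop developing video games for hundreds of millions of users. I wanted to step back, see the wider world, and have time to think.

I was the navigator. One evening, after the painstaking work of analyzing weather data and plotting our course, I went up on deck and saw this beautiful sunset. And a thought occurred to me.

How much do we really know about the ocean?

The Pacific stretched around me as far as I could see, and the waves were rocking our boat hard, as if to remind us constantly of the ocean's immeasurable power.

How much do we actually know about the ocean? I decided to find out.

What I quickly learned is that we do not know the ocean well.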

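The first reason is simply the sheer size of the ocean, which covers 70 percent of the planet. Yet we do know that the ocean drives complex planetary-scale phenomena, such as global weather, which affects everyone every day, sometimes dramatically.

And still, we know almost nothing about those phenomena.

By any measure, ocean information is scarce. Think of the land: there are many sensors, billions of them in fact. At sea, however, in situ data is scarce and expensive. Why? Because it relies on a small number of ships and buoys. Just how few is really surprising.

The US National Oceanic and Atmospheric Administration, known as NOAA, has only 16 ships. There are fewer than 200 buoys offshore worldwide. The reason is simple: the ocean environment is extremely harsh.

Collecting in situ data requires huge ships that can carry large amounts of fuel and many crew members. Each costs hundreds of millions of dollars. Large buoys tethered to the seafloor are fitted with four-mile-long cables and weighted down with several train wheels. They are dangerous to deploy and expensive to maintain.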

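You might ask, what about satellites?

Satellites are fantastic. For decades they have given us the big picture of our planet. However, for some parameters, satellites can observe only down to one micron below the sea surface. Their temporal and spatial resolution is relatively low. And their data has to be corrected for cloud cover, land effects, and other factors.

So what is happening in the ocean?

What do we want to measure?

How can a robot help?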

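Let's zoom in on one small patch of ocean. One of the key things we want to understand is the sea surface. If you think about it, the surface is where all the interactions between air and sea come together; it is the interface through which all energy and gases pass.

The sun radiates energy, which the ocean absorbs as heat. Part of it is released into the atmosphere. Atmospheric gases such as CO2 dissolve into the ocean. In fact, about 30 percent of the world's CO2 is absorbed this way. Plankton and microorganisms release so much oxygen into the atmosphere that half of the oxygen we breathe comes from the ocean.

Heat from the ocean produces vapor, which becomes clouds and eventually rainfall. Pressure gradients generate surface winds, which move water through the atmosphere. Some of the heat reaches the deep ocean and is stored in different layers.

The ocean acts like a planetary-scale boiler, storing all this heat energy and releasing it through weather events: hurricanes in the short term and El Niño in the longer term. Vertical upwelling and horizontal currents mix these layers and play an important role in carrying heat from the tropics to the poles.

This is the mechanism of climate change.

And of course there is marine life, which forms the largest ecosystem on Earth, from microorganisms and fish to marine mammals such as sea lions, dolphins, and whales.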

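Most marine life is invisible to us. One of the challenges in studying these ocean variables at scale is energy: the energy needed to deploy sensors into the deep ocean.

Of course, many solutions have been tried, from wave-powered devices to surface drifters to solar-powered electric devices. Each has its trade-offs. Our team's breakthrough came from an unexpected place: an attempt at the world speed record in a wind-powered land yacht.

Ten years of research and development produced a novel wing concept that needs only 3 watts of power to control, yet can propel a vehicle around the globe with seemingly unlimited autonomy. Adapting that wing concept to a marine vehicle was the start of the ocean drone.

The drones are larger than they look: about 4.5 meters tall, 7 meters long, and 2 meters wide.

Think of them as satellites on the sea surface.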

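The drones carry an array of high-precision sensors that measure all the key variables of the ocean and atmosphere, and they send high-resolution data back to shore in real time over a satellite link. Our team has been hard at work on this for several years, in some of the harshest ocean conditions on the planet.

From the Arctic to the tropical Pacific. We have gone all the way to the polar ice shelf. We have sailed into Atlantic hurricanes. We have rounded Cape Horn. We have slalomed between the oil rigs of the Gulf of Mexico. It is one tough robot.

Let me share some recent work we did around the Pribilof Islands. The Pribilofs are a small group of islands in the Bering Sea, between the United States and Russia. The Bering Sea is home to the walleye pollock. Pollock is a whitefish you may not know, but if you like fish sticks or imitation crab, you have probably eaten it.

It looks like crab meat, but it is really pollock. The pollock fishery is the largest fishery in the nation, in both value and volume. About 3.1 billion pounds of fish are landed every year.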

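For the past few years, several of our drones have been surveying the size of the pollock stock in the Bering Sea. The survey helps improve the quota system that manages the fishery and prevents stock collapse, and it protects a fragile ecosystem.

The drones currently survey the fishing grounds using acoustics.

That is, sonar. The sonar sends a sound wave downward, and the echo from the seabed or from schools of fish tells us what is happening below the surface. Our ocean drones are very well suited to this kind of repetitive survey, so they have been surveying the Bering Sea day and night.

The Pribilof Islands are also home to a fur seal colony. In 1950, about two million fur seals lived in the colony. Sadly, the population has declined rapidly; fewer than 50 percent of that number remain, and it continues to fall fast. To find out why, our research partners at the National Marine Mammal Laboratory glued GPS-enabled tags onto the bodies of mother fur seals.

These tags record position and depth, and they carry a tiny camera triggered automatically by sudden acceleration. This footage, showing a fur seal's artful hunting moves and its pollock prey, lets us see a hunt in the deep Arctic waters. This is just a few seconds before the pollock is caught.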

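Even for a robot, working in the Arctic is tough. The drones have had to endure a snowstorm in August and put up with interference from unexpected visitors.

Here is a little spotted seal that hopped on to play.

Over the season, the seal tags recorded more than 200,000 dives. Looking closely, we can see the seals' tracks and their repeated dives. We are trying to work out what is actually happening on the feeding grounds.

It is quite remarkable. Once we overlay the acoustic data collected by the drones, a picture starts to emerge. As the seals leave the islands and swim back and forth, they are observed swimming at relatively shallow depths of about 20 meters.

According to the drone surveys, that is where there are lots of small, low-calorie pollock. The seals then swim much farther out and dive deeper, to where the drone surveys found larger pollock, which are more nutritious.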

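Unfortunately, the mother seals burn calories swimming that extra distance, so they do not have enough energy left to nurse their pups once they return to land. That is why the population declines. The drones also found that the water temperature around the islands has risen significantly.

That warming may be pushing the pollock north in search of colder water. The data is still being analyzed, but we can already see that the pieces of the fur seal puzzle are starting to come together.

But if we step back and look at the big picture, we are mammals too. The ocean actually provides about 20 kg of fish per person every year. As fish stocks are depleted, what can we learn from the fur seal story?

And it is not just about fish. The ocean drives the global weather systems that cause hurricanes, extreme heat, and floods, which affect global crop yields and can inflict enormous damage on lives and property, so it affects all of us every day.

The ocean is an unexplored place that has barely been surveyed. Even today, we still know more about other planets than about our own.

But if you divide this vast ocean into squares six degrees of longitude and latitude on a side, each side is roughly 640 km long, and you get about 1,000 squares. So little by little, working with our partners, we are deploying one ocean drone in each of those squares.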
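As a back-of-the-envelope check on the grid figures in the talk, the sketch below tiles the ocean with equal-area squares. The ocean area (about 361 million km², roughly 71% of Earth's surface) and the mean length of one degree of latitude (about 111 km) are commonly quoted round values assumed here; neither number comes from the talk itself.

```python
OCEAN_AREA_KM2 = 361e6   # assumed global ocean area in km^2
KM_PER_DEGREE = 111.2    # assumed mean length of one degree of latitude in km

# Side of a 6-degree square measured at the equator.
side_equator_km = 6 * KM_PER_DEGREE

# Number of equal-area squares needed to tile the ocean,
# once with the equatorial side and once with the talk's 640 km figure.
cells_equator = OCEAN_AREA_KM2 / side_equator_km ** 2
cells_talk = OCEAN_AREA_KM2 / 640 ** 2

print(f"6 degrees at the equator = {side_equator_km:.0f} km")
print(f"squares with that side   = {cells_equator:.0f}")
print(f"squares with 640 km side = {cells_talk:.0f}")
```

Both estimates land in the high hundreds, which matches the talk's "about 1,000 squares" in order of magnitude. A true latitude-longitude grid would need somewhat more cells, because its cells shrink toward the poles.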

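We hope that covering the whole planet this way will give us clues to understanding the planetary systems that affect humanity.

For some time now we have used robots to study distant worlds in our solar system. Now it is time to measure our own planet, because we cannot fix what we cannot measure, and we cannot prepare for what we do not know.

That is all.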

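(Personal thoughts)

The work of Johan Rockström, who proposed the planetary boundaries concept, has also been adopted into the SDGs.

The SDGs may also be an attempt to consolidate and quantify corporate ethics as understood in monotheistic traditions.

In polytheistic traditions, the counterpart is the Buddha's Noble Eightfold Path.

The SDGs and climate action are not about renewable energy. They are part of pandemic preparedness! Every other activity is a derivative. Beware of pretexts for abuse of power that cite the SDGs without giving concrete figures!

The source of these ideas is Buckminster Fuller and his Spaceship Earth.

Was Buckminster Fuller a thinker, or more of a product designer?

The Gaia hypothesis is more familiar and makes more intuitive sense to me, but Fuller's ideas were passed on to Steve Jobs and influenced what became today's Apple Park.

Apple Park was designed by a disciple of Buckminster Fuller.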

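The following idea appears in a report submitted to the UK government by the economist Partha Dasgupta, professor emeritus at the University of Cambridge.

Anyone who studies economics encounters the production function, which describes the relationship between inputs of factors of production, such as capital and labor, and the resulting output.

Such functions rest on various assumptions, and economists are well acquainted with the law of diminishing returns.

Alongside these man-made production functions, there are production functions of natural origin.

The idea is that by deriving production functions for what can be harvested from nature, and quantifying the ecosystem as a production function for the whole planet, it might be possible to keep things in balance.

Here, this is called natural capital.

Taking natural capital into account also makes it possible to analyze how long the current pace of economic growth can be sustained.

To generate gross domestic product (GDP), humans extract resources from nature, use them, and return what is no longer needed to nature as waste.

If resources are used and waste is discarded to the point where nature can no longer regenerate on its own, the stock of natural capital shrinks, and with it the flow of valuable ecosystem services.

The professor further argues that economists, too, should recognize that economic growth has limits. Even if the Earth's finite bounty is used efficiently, there is an upper bound.

It follows that there must also be a threshold: a maximum sustainable level of GDP. At present nobody knows what it is, so it remains to be worked out.

Incidentally, we exceeded one Earth's worth a long time ago.

Recommended sites:

OpenROV Trident - An Underwater Drone for Everyone

Johan Rockström: 5 transformational policies for building a prosperous and sustainable world (SDGs)?

Jennifer Verduin: How do ocean currents work?

Laura Robinson: The secrets I find on the mysterious ocean floor

David Lang: My underwater robot

Sponsored by:

Presented by Takahashi Cleaning, Kamiya, Kita-ku, Tokyo

Now offering our own unique services! At Takahashi Cleaning, garments are hand-finished by craftspeople. An affordable 50. Round-trip shipping; songs available for purchase. Call now for details. Tokyo only: northern and eastern Tokyo and the area around Shibuya-ku. Neighboring local wards are also OK.

Takahashi Cleaning, Kamiya, Kita-ku, Tokyo (Facebook page)

#Sebastien#Halleux#Drone#robot#glacier#ship#electricity#energy#GPS#artificial#intelligence#underwater#disaster#cave#international#Arctic#Antarctic#carbon dioxide#environment#NHK#zero#news#discovery#discover#discovery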

0 notes

Text

Optimizing Datasets for Efficient AI Model Training

Introduction

The effectiveness and performance of artificial intelligence models are significantly influenced by a key element: the dataset. Whether you are developing a neural network for image recognition or a conversational agent that understands natural language, optimizing your dataset for AI training is essential to achieving the intended results. This blog examines strategies and best practices for improving datasets so that AI models train efficiently.

Why Dataset Optimization Matters

AI models acquire patterns and generate predictions based on the datasets utilized during their training. An inadequately prepared or suboptimally structured dataset may result in:

Overfitting: This occurs when a model excels with training data but struggles with new, unseen data.

Underfitting: This happens when a model does not adequately capture the fundamental trends present in the data.

Extended Training Durations: Extraneous data or noise can considerably hinder the training process.

Bias and Ethical Concerns: Imbalanced datasets can produce biased predictions, raising ethical issues.

By refining your dataset, you can decrease training duration, enhance model accuracy, and promote fairness.

Steps to Optimize Datasets for AI Training

1. Clearly Articulate Your Goal

Prior to gathering or organizing your dataset, it is essential to articulate the specific problem you aim to address. Are you developing a sentiment analysis application or a recommendation engine? A well-defined goal will assist you in determining the necessary type and extent of data.

2. Acquire High-Quality Data

The effectiveness of your AI model is significantly influenced by the quality of the data utilized. When sourcing data, consider the following criteria:

Relevance: Confirm that the data is pertinent to your problem statement.

Diversity: Utilize data from a variety of sources to enhance generalization.

Accuracy: Ensure the validity of your data, particularly in the case of labeled datasets.

3. Data Cleaning

Data cleaning constitutes a critical phase in the optimization process. The following actions are recommended:

Eliminate Duplicates: Remove redundant records to prevent bias in analysis.

Address Missing Values: Employ imputation methods or discard incomplete records as needed.

Standardize Data: Adjust feature scales to achieve consistency.

Exclude Outliers: Apply statistical techniques to detect and remove extreme values.
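The four cleaning actions above can be sketched with Pandas as follows. The toy table, its column names, the choice of median imputation, and the 1.5 × IQR outlier rule are illustrative assumptions; the right choices depend on your data.

```python
import numpy as np
import pandas as pd

# Hypothetical toy table: one duplicate row, two missing values, one extreme age.
df = pd.DataFrame({
    "age":    [25, 25, 31, np.nan, 40, 38, 29, 120],
    "income": [40.0, 40.0, 52.0, 48.0, np.nan, 61.0, 45.0, 50.0],
})

# Eliminate duplicates.
df = df.drop_duplicates()

# Address missing values: fill each column with its median.
df = df.fillna(df.median(numeric_only=True))

# Exclude outliers: keep ages within 1.5 * IQR of the quartiles.
q1, q3 = df["age"].quantile([0.25, 0.75])
iqr = q3 - q1
df = df[df["age"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)]

# Standardize: rescale every column to zero mean and unit variance.
df_scaled = (df - df.mean()) / df.std(ddof=0)

print(df_scaled.round(2))
```

In a real pipeline, the imputation and scaling statistics should be computed on the training split only and then reused on the validation and test splits.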

4. Achieve Dataset Balance

Imbalanced datasets can result in biased models. For instance, a dataset comprising 90% positive and 10% negative labels may lead the model to predominantly favor positive predictions. To rectify this imbalance, consider the following approaches:

Oversampling: Increase the number of samples from underrepresented classes by duplicating them.

Undersampling: Decrease the number of samples from overrepresented classes.

Synthetic Data Generation: Employ methods such as SMOTE (Synthetic Minority Oversampling Technique), which creates additional minority-class data points by interpolating between existing ones.
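A minimal NumPy sketch of random undersampling and random oversampling, using the 90%/10% split from the example above. The feature matrix is random placeholder data and all variable names are made up for this illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
y = np.array([1] * 90 + [0] * 10)   # 90% positive, 10% negative labels
X = rng.normal(size=(100, 3))       # placeholder feature matrix

majority = np.flatnonzero(y == 1)
minority = np.flatnonzero(y == 0)

# Undersampling: keep a random subset of the majority class, same size as the minority.
keep = rng.choice(majority, size=minority.size, replace=False)
under_idx = np.concatenate([keep, minority])
X_under, y_under = X[under_idx], y[under_idx]

# Oversampling: duplicate randomly chosen minority rows until the classes match.
extra = rng.choice(minority, size=majority.size - minority.size, replace=True)
over_idx = np.concatenate([majority, minority, extra])
X_over, y_over = X[over_idx], y[over_idx]

print(np.bincount(y_under), np.bincount(y_over))
```

Undersampling throws information away and oversampling repeats rows, so either one is normally applied to the training split only. For SMOTE itself, the separate imbalanced-learn package provides an implementation.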

5. Feature Engineering

Feature engineering plays a crucial role in improving the predictive capabilities of your dataset. The process involves several key steps:

Feature Selection: Determine and keep the most pertinent features.

Feature Extraction: Generate new features based on the existing ones.

Dimensionality Reduction: Employ methods such as PCA (Principal Component Analysis) to decrease the number of features while maintaining essential information.
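To make the dimensionality-reduction step concrete, here is a NumPy-only sketch of PCA via the singular value decomposition. The synthetic data (ten features driven by two hidden factors) and the 95% variance threshold are assumptions for the example; scikit-learn's PCA class offers the same technique behind a higher-level interface.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: 10 observed features generated from 2 hidden factors plus small noise.
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 10))
X = latent @ mixing + 0.01 * rng.normal(size=(200, 10))

# PCA: center the data, then take the SVD of the centered matrix.
Xc = X - X.mean(axis=0)
U, S, Vt = np.linalg.svd(Xc, full_matrices=False)

# Fraction of total variance explained by each principal component.
explained = S ** 2 / np.sum(S ** 2)

# Keep the smallest number of components that explains at least 95% of the variance.
k = int(np.searchsorted(np.cumsum(explained), 0.95) + 1)
X_reduced = Xc @ Vt[:k].T

print(k, X_reduced.shape)
```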

6. Augment Your Data

In fields such as image recognition or natural language processing, data augmentation can increase the size of your dataset without the need for further collection:

Image Data: Implement transformations like rotation, flipping, or cropping.

Text Data: Employ techniques such as synonym substitution or back translation.
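For image data, the transformations listed above can be sketched with plain NumPy. The random array stands in for a real RGB image, and the augment function, the crop size, and the probabilities are hypothetical choices for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for a real 32x32 RGB image.
image = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)


def augment(img, rng, crop=28):
    """Return a randomly flipped, rotated, and cropped copy of a square image."""
    if rng.random() < 0.5:
        img = np.fliplr(img)                          # horizontal flip
    img = np.rot90(img, k=int(rng.integers(0, 4)))    # rotate by 0, 90, 180, or 270 degrees
    top = int(rng.integers(0, img.shape[0] - crop + 1))
    left = int(rng.integers(0, img.shape[1] - crop + 1))
    return img[top:top + crop, left:left + crop]      # random crop


# Eight augmented variants produced from a single source image.
batch = np.stack([augment(image, rng) for _ in range(8)])
print(batch.shape)
```

Whether a given transformation is safe depends on the task: flipping is harmless for most object photos but changes the label for text or digit images.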

7. Prioritize Ethical Considerations

Refine datasets to mitigate bias and promote fairness by:

Conducting Dataset Audits: Periodically assess your data for potential biases.

Incorporating Diverse Samples: Ensure representation across all demographics, categories, or use cases.

Maintaining Transparency: Clearly document the processes involved in data collection and preparation.

8. Strategically Partition Your Data

Segment your dataset into three distinct sets: training, validation, and testing. A common distribution may be as follows:

Training Set: Comprising 70-80% of the data for the purpose of model training.

Validation Set: Allocating 10-15% for the adjustment of hyperparameters.

Test Set: Designating 10-15% for the assessment of final performance.

It is crucial to ensure that these sets are mutually exclusive to prevent any data leakage.
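One way to get a three-way split with no overlap is to shuffle the row indices once and slice them. The function name and the 70/15/15 proportions below are assumptions chosen from the ranges above.

```python
import numpy as np


def three_way_split(n_samples, val_frac=0.15, test_frac=0.15, seed=0):
    """Return disjoint train, validation, and test index arrays."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)           # shuffle every row index exactly once
    n_test = int(round(n_samples * test_frac))
    n_val = int(round(n_samples * val_frac))
    test = idx[:n_test]
    val = idx[n_test:n_test + n_val]
    train = idx[n_test + n_val:]
    return train, val, test


train_idx, val_idx, test_idx = three_way_split(1000)
print(len(train_idx), len(val_idx), len(test_idx))
```

Because each index appears in exactly one slice, no row can leak between sets. If the dataset contains near-duplicate records or several rows per user, the split should be made per group instead of per row.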

9. Streamline the Process through Automation

Employ various tools and libraries to enhance the efficiency of dataset optimization:

Python Libraries: Utilize Pandas, NumPy, and scikit-learn for effective data cleaning and preprocessing.

Data Annotation Tools: Consider using Label Studio, Prodigy, or Doccano for the labeling process.

Data Version Control: Implement tools such as DVC (Data Version Control) to monitor modifications to your dataset over time.

Benefits of Dataset Optimization

Optimizing datasets yields significant advantages, such as:

Accelerated Training: More compact and refined datasets minimize computational demands.

Enhanced Accuracy: Properly curated data allows the model to identify relevant patterns effectively.

Equitable and Ethical Models: Well-balanced datasets mitigate biases and foster inclusivity.

Resource Efficiency: Prevents the unnecessary expenditure of resources on superfluous or irrelevant data.

Conclusion

Enhancing datasets for the training of AI models is an essential process that significantly influences the model's performance, fairness, and efficiency. By implementing the strategies discussed previously, you can develop datasets that fulfill the needs of your AI initiatives while also adhering to ethical guidelines and industry standards.

For additional information on AI training and dataset management, please visit Globose Technology Solutions.ai.

0 notes

Text

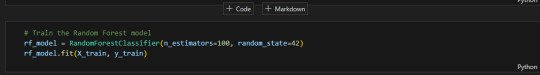

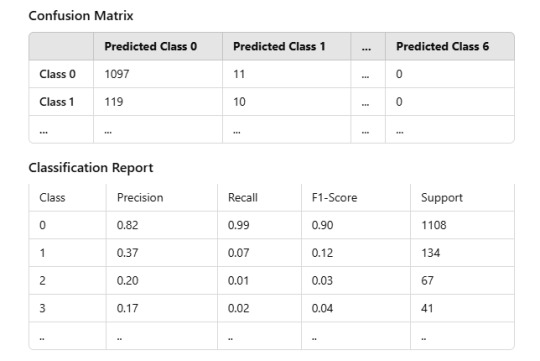

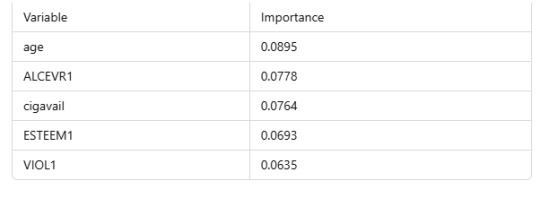

Random Forest Analysis on Predicting Binary Response

Introduction

Random Forest is a versatile machine learning algorithm that excels in classification tasks. In this analysis, we applied a Random Forest model to predict the binary response variable ALCPROBS1 using a dataset containing multiple explanatory variables. Below, we present the methodology, results, and key insights derived from the analysis.

Methodology

1. Data Preparation

To prepare the dataset for modeling, the following steps were taken:

Target Variable and Features: The target variable, ALCPROBS1, was separated from the explanatory variables.

Handling Missing Data: Rows with missing values in the target variable or any explanatory variables were removed to ensure a clean dataset.

Data Splitting: The data was split into training and testing sets in a 70:30 ratio to evaluate model performance.

2. Training the Random Forest Model

A Random Forest classifier with 100 decision trees was trained on the training dataset. The random_state parameter ensured reproducibility.

3. Model Evaluation

The model was evaluated on the test dataset using the following metrics:

Accuracy

Confusion Matrix

Classification Report

Feature Importance
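For readers unfamiliar with the first three metrics, here is a small from-scratch sketch of how they relate for a binary target. The function names and the toy labels are assumptions for illustration. Feature importance is left out because it needs a fitted model.

```python
def confusion_counts(y_true, y_pred):
    """Return (tn, fp, fn, tp) for binary labels coded 0/1."""
    tn = fp = fn = tp = 0
    for t, p in zip(y_true, y_pred):
        if t == 1 and p == 1:
            tp += 1
        elif t == 0 and p == 1:
            fp += 1
        elif t == 1 and p == 0:
            fn += 1
        else:
            tn += 1
    return tn, fp, fn, tp

def binary_report(y_true, y_pred):
    """Accuracy plus precision, recall and F1 for the positive class (1)."""
    tn, fp, fn, tp = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Toy labels with an 8:2 imbalance, invented for illustration.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0, 0, 1, 1, 0]

print(confusion_counts(y_true, y_pred))  # (7, 1, 1, 1)
print(binary_report(y_true, y_pred))
```

In this toy case accuracy is 0.8 while precision and recall for class 1 are both 0.5, which is why a classification report is worth reading alongside the headline accuracy.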

Results

Model Performance

Accuracy: The model achieved an accuracy of 80.77% on the test dataset.

The model performed well for the majority class (0) but struggled with the minority class because of class imbalance.

Feature Importance

The top contributing features were age, ALCEVR1, and cigavail, each discussed under Key Predictors below.

Interpretation

Handling Missing Data

Rows with missing values were removed, ensuring data integrity but potentially introducing bias. Future analyses should explore imputation techniques to retain more data.

Class Imbalance

The dataset had a significant imbalance in the target variable, with class 0 dominating. Techniques like oversampling the minority class, undersampling the majority class, or class-weight adjustments could help address this issue and improve performance for the minority class.
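As one concrete reading of "class-weight adjustments", the sketch below computes inverse-frequency weights, the same formula scikit-learn documents for `class_weight="balanced"`. The post does not say which weighting would be used, so treat the function name and the 8:2 toy labels as assumptions.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * count_of_class)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * k) for cls, k in counts.items()}

# Toy labels, invented for illustration: class 0 dominates 8 to 2.
labels = [0] * 8 + [1] * 2
weights = balanced_class_weights(labels)
print(weights)  # {0: 0.625, 1: 2.5}
```

Each minority example then counts four times as much as a majority example during training, without adding or discarding any rows.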

Key Predictors

age: Older individuals may show different patterns of alcohol-related issues.

ALCEVR1: History of alcohol use strongly correlates with alcohol-related problems.

cigavail: Access to cigarettes often links to risky behaviors, including alcohol use.

Limitations and Future Work

Class Imbalance: Future models should address class imbalance for improved generalization.

Feature Selection: Incorporating domain-specific features or interactions between variables may enhance accuracy.

Model Comparison: Comparing Random Forest with other models, such as Gradient Boosting or Support Vector Machines, could yield better insights.

Visualization: Plotting feature importances and the confusion matrix (e.g., as a heatmap) can improve interpretability.

Conclusion

The Random Forest model demonstrated robust performance for the majority class but struggled with the minority class because of class imbalance. By addressing this issue and exploring additional predictors, future analyses can further enhance predictive accuracy and insights for alcohol-related problems.

0 notes

Text

10 Best Practices for Digital Tensile Testing

Source of Info: https://www.perfectgroupindia.co.in/10-best-practices-for-digital-tensile-testing.php

One of the fundamental techniques used in material analysis to assess the mechanical characteristics of different materials is digital tensile testing. It yields very useful data regarding tensile strength, elasticity, ductility, and other properties for a wide range of materials, including metals, polymers, textiles, and composites. It is crucial to comprehend the best practices in order to maximize the effectiveness of this potent method. The following list of the top ten digital tensile testing best practices will assist you in optimizing your material analysis workflow and discovering fresh avenues for material characterization.

1. Calibration Confidence

Make sure your testing apparatus is calibrated accurately and up to date to start with a strong base. Frequent calibration checks provide accurate and dependable results, boosting the confidence you have in your testing procedure.

2. Sample Selection Savvy

Make careful selections of your samples, taking into account aspects like surface condition, size, shape, and homogeneity of material. Properly prepared samples minimize variability and improve data integrity by guaranteeing consistency and repeatability in test results.

3. Gripping Guidance

Proper sample gripping is essential for precise and representative test results. Choose grips that are appropriate for the shape and type of material you are testing to ensure even stress distribution and avoid premature sample failure.

4. Strain Sensibility

Optimize strain measurement accuracy by selecting an appropriate extensometer and ensuring proper installation and alignment. Precise strain control enables detailed analysis of material behavior under various loading conditions.

5. Speed Selection

To properly capture important material responses, strike the ideal balance between the speed of the test and the rate at which data is acquired. Adjust testing parameters based on the deformation properties of your material to prevent over- or undersampling of the data.

6. Temperature Tact

Consider the effects of temperature on the properties of the materials by conducting tests in controlled environments. Temperature baths or chambers allow for the assessment of both mechanical and thermal properties while preserving the integrity of the specimen.

7. Data Delve

Use the software's features to monitor tests in real time and to process the data afterwards. To fully understand a material's performance, extract information from fracture analysis, stress-strain curves, and modulus calculations.

8. Safety Spotlight

Give priority to safety procedures so that during testing operations both people and equipment are protected. To effectively reduce potential hazards, put in place the necessary safety measures, such as personnel training, emergency stop mechanisms, and machine guarding.

9. Standard Compliance

Adhere to relevant industry standards and testing protocols to ensure consistency and comparability of results. Familiarize yourself with ASTM, ISO, or other applicable standards, and follow prescribed procedures meticulously to maintain credibility and reliability in your testing endeavors.

10. Continuous Improvement

Embrace a culture of continuous improvement by soliciting feedback, conducting regular audits, and staying abreast of technological advancements in digital tensile testing. Strive for excellence in every aspect of your testing process, driving innovation and pushing the boundaries of material analysis.

Conclusion

In conclusion, you can fully realize the potential of this adaptable method for material characterization by learning the best practices for digital tensile testing. You may improve the precision, dependability, and effectiveness of your testing processes by following calibration guidelines, making the most of sample preparation, and utilizing cutting-edge data analysis technologies. As you proceed with your material analysis journey, adopt these best practices as a guide to help you make ground-breaking discoveries and breakthroughs in the fields of materials science and engineering.

Build an environment of ongoing learning and development within your company by working together with colleagues and industry experts to share best practices and insights. In materials science and engineering, you can lead significant breakthroughs and stay ahead of the curve by adopting a proactive and forward-thinking approach, which will ultimately shape the field's future.

Frequently Asked Questions (FAQs)

Q: What is digital tensile testing?

Digital tensile testing is a method used to evaluate the mechanical properties of materials by applying tension until the material breaks.

Q: Why is digital tensile testing important?

Understanding a material's strength, elasticity, and ductility is essential for a variety of industries, including manufacturing, construction, and aerospace. Digital tensile testing offers valuable insights into these properties.

Q: How often should calibration checks be performed?

As advised by the manufacturer, calibration checks ought to be carried out on a regular basis—typically, at least once a year or whenever there is a substantial alteration in the testing environment.

Q: What types of materials can be tested using digital tensile testing?

Metals, polymers, textiles, and composites are just a few of the materials that can be tested using digital tensile testing.

Q: How can I improve the accuracy of my digital tensile testing results?

To improve accuracy, ensure proper sample preparation, select appropriate testing parameters, and adhere to relevant industry standards and protocols.

0 notes

Text

here's another reminder of how cool undersampled raytracing looks (left one is raytraced with 25 samples, right one is not raytraced)

2 notes

Text

🌟 Imbalanced Dataset: Challenges and Solutions in Machine Learning 🌟

💡 Have you ever run into an imbalanced dataset while working with Machine Learning? It is one of the biggest challenges in building AI models! 🤔 Some classes in the data vastly outnumber the others, which biases the model and keeps it from producing accurate results. 😰

👉 Why is an imbalanced dataset dangerous?

📉 Degraded model performance: High accuracy does not mean the model is effective if the important class is ignored.

🎯 Does not reflect reality: The model relies only on the common cases and overlooks the rare ones.

🔥 How do you handle it?

✅ Resampling: Increase (oversampling) or reduce (undersampling) the data in certain classes to balance the dataset.

🛠 Use suitable algorithms: Apply methods such as SMOTE or ADASYN, or algorithms designed specifically for imbalanced data.

📊 Use suitable metrics: Instead of relying on accuracy alone, evaluate with F1-score, Precision, and Recall.
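The resampling idea from the list above can be sketched with the standard library alone. This is plain random resampling, not SMOTE or ADASYN (those synthesize new minority points instead of copying existing ones). The function names, the seed, and the 90:10 toy data are assumptions for illustration.

```python
import random
from collections import Counter

def _group_by_label(samples, labels):
    """Collect samples into one list per class label."""
    groups = {}
    for sample, label in zip(samples, labels):
        groups.setdefault(label, []).append(sample)
    return groups

def random_undersample(samples, labels, seed=0):
    """Randomly drop samples until every class matches the smallest class."""
    rng = random.Random(seed)
    groups = _group_by_label(samples, labels)
    target = min(len(g) for g in groups.values())
    pairs = [(s, lbl) for lbl, g in groups.items() for s in rng.sample(g, target)]
    rng.shuffle(pairs)
    return pairs

def random_oversample(samples, labels, seed=0):
    """Randomly duplicate samples until every class matches the largest class."""
    rng = random.Random(seed)
    groups = _group_by_label(samples, labels)
    target = max(len(g) for g in groups.values())
    pairs = []
    for lbl, g in groups.items():
        extra = [rng.choice(g) for _ in range(target - len(g))]
        pairs.extend((s, lbl) for s in g + extra)
    rng.shuffle(pairs)
    return pairs

# Toy data, invented for illustration: 90 majority and 10 minority samples.
samples = list(range(100))
labels = [0] * 90 + [1] * 10

under = random_undersample(samples, labels)
over = random_oversample(samples, labels)
print(Counter(lbl for _, lbl in under))  # 10 samples of each class
print(Counter(lbl for _, lbl in over))   # 90 samples of each class
```

Resampling is normally applied to the training split only, so the test set keeps the real class proportions.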

✨ Did you know? These solutions not only improve the model but also sharpen its predictions in practice, especially in sensitive fields such as healthcare, finance, and security. 💻❤️

📌 Curious how to apply these methods in practice? Read the detailed article on our website to learn more! 🌐 Article link

💬 Have you ever faced an imbalanced dataset? How did you deal with it? Share your story below! 👇👇

1 note

Text

Imbalanced Dataset: Challenges and Solutions in Machine Learning

🚀 Have you ever had data that was imbalanced between classes? One class makes up 90% of the data while another accounts for only 10% (or less)? This is common in real-world problems, and it poses many challenges when building Machine Learning models. 🤔

💡 Why is an imbalanced dataset a big problem?

📉 The model tends to favor the majority class, leading to skewed predictions.

🛠️ Evaluation metrics such as Accuracy are easily misleading and do not reflect real-world effectiveness.
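A quick way to see why accuracy misleads on a 90/10 split: a "model" that always predicts the majority class already scores 90% while never finding a single minority case. The labels below are invented to match the 90/10 ratio mentioned above, and the variable names are illustrative.

```python
# 90 majority (0) and 10 minority (1) labels, matching the 90/10 example.
y_true = [0] * 90 + [1] * 10

# A trivial baseline that ignores its input and always predicts the majority class.
y_pred = [0] * len(y_true)

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Recall on the minority class: the share of real positives the model found.
true_positives = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
minority_recall = true_positives / y_true.count(1)

print(accuracy, minority_recall)  # 0.9 0.0
```

Any real model should be compared against this baseline, using a metric that looks at the minority class directly.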

✨ Is there a way to deal with imbalanced data? Absolutely! In this article, we provide effective strategies, including: ✔ Resampling Techniques (Oversampling, Undersampling) ✔ Using stronger methods such as SMOTE or ADASYN ✔ Choosing appropriate evaluation metrics such as F1-score and ROC-AUC ✔ And many other useful tips for handling this problem!

🔗 Read the full article here: Imbalanced Dataset: Challenges and Solutions in Machine Learning

📣 Share your experience! Have you ever faced imbalanced data? How did you handle it? Comment below so we can discuss! 💬👇

0 notes