#Using Cloud Storage with App Engine


chapters of us | prologue

pairing - architect/carpenter gojo satoru x bookstore owner reader

summary. your love life is as quiet as the shelves of your bookstore. seeking a change, you sign up for a dating app and become captivated by a picture-less/nameless profile—belonging to none other than gojo satoru, a charming architect with a complicated past. your online connection sparks with undeniable chemistry, but you remain unaware that the man you’re drawn to is also your neighbor next door. when he unexpectedly walks into your cozy bookstore, your world shifts. as you navigate feelings for both the mystery man online and the neighbor who feels like a heartbeat away, hidden truths loom over you. can love blossom amid secrets, or will the shadows of your pasts eclipse your stories before it even begins?

word count – 2.26k (i know, it’s really short!)

fic warnings. contains explicit sexual content, guy-next-door, romantic tension, rough sex, age difference (gojo is 32, reader 23), themes of self-doubt, angst, insecurities, heartbreak, and emotional trauma. complicated relationship/pining, alcohol use.

a/n: hi lovebirds! thank you for stumbling across this small liddol corner of the internet. if you couldn’t already tell, i’m sickly obsessed with the man that is gojo satoru and i am unapologetically shameless in that devotion. moving on [...] this just so happens to be my very first fic in years. the last book i wrote was a fictional story in middle school inside a beat-up dollar-store notebook. i recall the feeling of joy running up to my english teacher with a huge smile on my face, sharing with the world how i wrote my very first book. i also remember rummaging through boxes in the storage closet of my garage; I found that very same notebook years later – laughing and cringing at my own writing. although that book is long gone, i hope to find the same joy i found in writing as i did then. and while i cannot guarantee my skills have improved much since, i cannot help but hope you can all find some joy in my work too. here is to new beginnings!! ♡ (author's note continued at the end)

series masterlist | next chapter ->

FLIGHT FROM GERMANY TO JAPAN June 28, 2014 [2 Months Ago]

The cabin is a sea of muted conversations, the quiet clink of glasses, and the steady hum of the engine. Beneath the thin layer of noise, the world outside is nothing but a gray blur, the clouds shifting beneath you like cotton in a needle.

You trace the outline of your boarding pass with the tip of your finger, a subconscious motion that holds more weight than it should. The ink is smudged from where you gripped it too tightly, lost in the chaos of your thoughts. Tokyo, Japan. The name seems foreign, yet it carries the weight of all the unanswered questions you’ve been holding within.

But there’s no hope in your chest, no excitement like you’re supposed to feel. Only the hollow thud of your heart against your ribcage, a constant reminder that you’re running.

You should be scared, but fear is something you’ve grown numb to. Fear of the unknown, fear of starting over, fear of facing what you left behind in Germany. It’s easier to let that weight slip down into your stomach and ignore it—at least for now.

Germany had been suffocating. The sterile white of the hospital halls, the incessant beeping of monitors that had once been a comfort but now only reminded you of how long you’d been there. The months that bled into years of quiet waiting, hoping for something that never came. And then there was the betrayal. The friend you had leaned on, the person you trusted who broke you in a way you never saw coming.

You exhale slowly, pushing the thoughts aside, willing the ache to retreat into the hollow space that has become your chest.

Tokyo. New city. New start. You tell yourself that over and over, even though you’re not sure you believe it.

The plane is filled with strangers, none of them more than temporary. You’d resigned yourself to the endless parade of unfamiliar faces, the kind of transient connections that fill the spaces between real ones. You hadn’t expected the woman in 14A to change that.

She sits beside you, her eyes soft but piercing, like she can see right through the layers of distraction you’ve woven around yourself. Her breath is laced with mint, and it almost makes you smile, but you don’t. She leans in slightly, her voice warm, coaxing the air out of your lungs.

“You know,” she begins, her eyes locking onto yours, “sometimes life doesn’t give us what we want because it’s leading us to what we need.”

The words settle into the space between you, uninvited but present.

You don’t know why she says it.

Maybe she’s just trying to fill the silence, or maybe it’s something more.

You don’t respond right away. She keeps talking, as if she can’t feel the distance between you, as if she doesn’t see the armor you’ve draped over yourself.

“Have you ever been to Tokyo?” she asks, her voice shifting in a gentle pitch as if asking about the weather.

“No,” you say, a simple answer, but it feels like too much.

No, I’ve never been. I’ve never had the luxury of going.

Your thoughts are spiraling, but you don’t say any of that.

Not to her.

The plane continues its descent. The world outside the window is fading—Germany swallowed by the clouds and long forgotten, leaving only the unknown in its wake.

Tokyo is closer now, realer somehow, and the weight of it presses down on you.

“Tokyo’s a funny place,” the woman continues, her voice still loud in the near-empty row. “My daughter's husband always says the city feels like it’s meant to reset you. Like it washes away all the bad stuff.”

You wish you could believe her.

You wish you could buy into the idea of a clean slate, the notion that Tokyo could simply erase what’s behind you.

But you know better.

A part of you wonders if anything will ever truly cleanse you.

You look out the window, the faint outline of Tokyo’s skyline emerging from the fog.

There it is—your “fresh start." Your “new beginning.”

But deep down, you can’t shake the nagging thought: Is this really what I need? Or am I just running from what I’ll never be able to outrun?

The plane bumps as it touches the runway, the wheels screeching against the tarmac, and you snap back to the moment.

This is it. You’re here.

The woman continues, unaware of your inner turmoil. “They say it’s a city of second chances.”

You don’t answer. You’re already thinking of your own messy life, and the thought of second chances? It seems nothing short of unattainable.

The woman sighs, content with her unsolicited advice.

You let her words drift in one ear and out the other.

I'm not here to hear about "second chances."

You’re here to escape.

To run from the weight of what you can’t outrun.

She’s still talking when the seatbelt sign dings, the jarring sound reminding you that you have arrived.

The wheels continue to squeal against the runway, and the plane slows, the steady hum of the engines finally coming to an end. The air in the cabin shifts—there’s a soft exhale from everyone on the plane – a collective release – as if the flight itself had been a slow, drawn-out exhalation of everything they’d been holding inside.

But for you? You share no such sentiment. There is no relief in your body.

Just a tight knot in your chest, a mix of anticipation and dread that’s been building up for as long as you can remember.

The woman in 14A is still talking, her voice rising over the thrum of the plane coming to a halt.

You can’t even focus on her anymore. Not with the overwhelming noise inside your own head. Your fingers grip the armrest, the cold plastic biting into your skin, grounding you.

It’s not that you don’t want to hear her.

She’s kind, her presence is even comforting.. in some way.

But you can’t stop thinking about what you’re running from.

Back home, you had been chained to the hospital for so long that the outside world felt like a distant illusion.

You shift in your seat, eyes flicking to the window as the airport draws closer. It feels like a dream you’re not ready to wake up from. There’s an odd sense of unreality that settles over you as the city comes into focus. It almost feels strange to explore beyond the world you had always known.

It’s bright and bustling— nothing like the quiet halls and the incessant ticking of hospital clocks.

But how long will that excitement last?

How long will it take before the weight of your past catches up with you?

The woman in 14A seems to sense the shift in your mood. Her voice softens, as though she’s able to see through the internal war in your head.

“You’re running from something, aren’t you?” she asks, gentle words, but sharp enough to pierce through your distracted mind.

You freeze for a moment. Your throat tightens.

She doesn’t know. She can’t know. But somehow, it feels like she does.

You don’t answer. You can’t.

Instead, you turn away, fumbling with your bag, your eyes darting between the window and your lap, anything to avoid the weight of her gaze. But she doesn’t push. She doesn’t demand a confession. She simply waits, her presence a quiet understanding.

The plane finally comes to a full stop, the engines winding down to a soft whirr, and the seatbelt sign flashes on. Your pulse quickens, your heartbeat a steady drum in your ears as the final leg of this journey begins.

Bu-dump, Bu-dump, Bu-dump.

You gather your things mechanically, the weight of your bag too familiar, too burdensome. You stand when the seatbelt sign clicks off, trying to ignore the slight tremor in your hands.

You step into the aisle, the woman in 14A watching you go with a knowing smile tugging at the corners of her lips. You don’t know why, but you feel like she’s seeing something you don’t want to be seen. It unsettles you more than you care to admit.

Tokyo awaits beyond the cabin doors, the city alive with promise. You can feel it in the way the air shifts, the hum of activity waiting for you to dive into it. You have no idea what you’re going to find here. No clue how long it will take to forget the whispers of your past or how long you’ll have before the scars start to show again. You don’t know what you’re hoping for anymore—only that it’s time to move forward into whatever comes next.

ᡣ𐭩 ࣪ ˖⊹ 𝜗𝜚 ࣪𝄞 𝜗𝜚 ⊹˖ ࣪ ᡣ𐭩

The moment you step off the plane, everything is different. There’s no turning back now. You feel it—the tug of the unknown, the weight of all that’s behind you, pressing against your back.

A new city. A new life. But no matter what, you can't shake the feeling in your heart: that nothing feels like it's enough.

You take a deep breath as you step into the crowded terminal, the buzz of voices and the endless flow of bodies a stark contrast to the quiet isolation of the flight. You feel small, almost invisible, a speck in the vast sea of faces.

You continue trudging forward, like you're walking through a fog, each step heavier than the last. The terminal stretches out like a never-ending tunnel. The blur of voices and the mechanical beep of the passport machine melt into a dull hum, and you can barely keep your focus as you reach the scanning station.

You swipe your passport through the machine and it flashes red. The machine’s shrill beep rings in your ears, like some cruel reminder of how your life is met with nothing but obstacles.

A uniformed officer approaches, his eyes cold, unreadable.

"Miss, I’ll need you to come with me,” he says, his tone matter-of-fact, as he motions toward a small room.

Of course. How wonderful.

You nod, your throat dry as dust, not trusting yourself to speak.

You follow him into the quiet room, where he gently places your bag on a table. The metallic click of the zipper fills the space as he opens it, his hands methodically searching through your belongings. Your personal items—nothing special, just the usual mess—are strewn across the table. The fraying notebook, your thick scarf that still smells like the hospital, and that keychain that reminds you of your happiest memory. You can’t help but feel the heat rising to your face when he pulls out a hello-kitty tampon, then your old hoodie— the one you couldn’t bear to leave behind, even if it’s more of a comfort thing than anything else now. It’s embarrassing, but you keep your mouth shut.

"A holiday?" he asks, glancing at you briefly, eyes still focused on your bag.

"No," you stammer, your voice barely a whisper as your fingers curl tightly around your sides.

"Business then?" he presses, his gloved hands pulling out a crumpled receipt from a café you don't even remember visiting.

"No," you reply again, feeling the exhaustion pull at you. "Just... no." You rub your forehead, fighting back the incoming headache and a flood of emotions that threatens to spill over.

"Not business," he repeats, "Well, then, what is it, miss?"

You swallow hard, the lump in your throat refusing to go down.

The weight of his gaze feels like it’s tearing through you, and for a moment, you want to hide, to curl up into a ball and disappear.

But you can’t. You won’t.

"My mother passed away," you finally manage, the words tasting bitter on your tongue.

For a moment, the officer stills, his fingers hovering over a sweater. He looks up at you then—really looks at you—and there’s a brief shift in his expression, almost imperceptible, but it’s there. Something in his gaze softens, just for a second.

“I’m sorry for your loss,” he says, his voice lowering in a rare note of sympathy. The sincerity in his tone catches you off guard, almost making you want to crumble in front of him. It's strange how something so small—a kindness, a flicker of empathy—can pierce through the numbness, even for a moment.

He hands your passport back to you, then nods toward the door. "You're all set. Welcome to Tokyo."

You’re too dazed to respond, your head spinning. Your body feels like it’s on autopilot as he leads you out of the room and toward the exit. The cool air in the terminal is a stark contrast to the suffocating weight of grief, and you breathe deeply, trying to steady yourself.

When you reach baggage claim, you spot your bags circling around carousel three. You take a deep breath, picking up your two suitcases, the familiar weight of them strangely grounding.

Outside, a taxi waits. The driver doesn’t ask questions as he opens the door for you, only giving you a simple nod. You step inside, grateful for the quiet moment, the solitude of the ride.

“Where to?” he asks, his voice a gentle rumble, still distant but polite.

"Jinbōchō," you say, barely above a whisper, your mind far away from the words you’re speaking.

He nods, sliding your bags into the trunk without a word.

Next thing you know, you’re off, the city lights blurring past in a mix of color and motion.

“Coming back home?” he asks after a while, breaking the silence.

Home?

You exhale slowly, trying to make sense of the question.

What is home anymore?

Your mind drifts, the past and present colliding in a haze.

"Sort of," you murmur, the words escaping before you can stop them.

You’re not sure if it’s the truth.

But for now, it’s all you have.

ᡣ𐭩 ࣪ ˖⊹ 𝜗𝜚 ࣪𝄞 𝜗𝜚 ⊹˖ ࣪ ᡣ𐭩

Raindrops race down the car window, each one stubbornly fighting to stick to the glass. You close your eyes, and the exhaustion from the trip hits you like a wave, pulling you under.

The second your eyes slip shut, memories come rushing back. She’s there—your mom.

You can almost smell the flour and feel the warmth of the kitchen. It’s a lazy Saturday morning, and you’re nine years old, helping her bake while she hums some old song, twirling around with a smile on her face.

It’s one of those memories you’ve kept locked away for years, like a little piece of happiness you’re scared to lose—one that slips further out of reach every day.

You remember how bad it hurt when she left.

Dad tried his best, but nothing could fill that hole she left behind. Nothing could take her place.

You ended up burying yourself in books, getting lost in stories that felt safer than the real world—stories that numbed the pain, even if it's only for a little while.

By the time you were in college, the library had become your second home. You’d spend hours wandering the aisles, soaking up the smell of old books and worn-out pages. It was quiet, safe—like nothing bad could touch you there. It was easier to drown in fiction than to face a world where everything had felt so messed up and broken.

But one morning, without warning, everything changed.

ᡣ𐭩 ࣪ ˖⊹ 𝜗𝜚 ࣪𝄞 𝜗𝜚 ⊹˖ ࣪ ᡣ𐭩

series masterlist | next chapter ->

author's note: well, hello there! thank you for making it to the end of this little teaser to chapters of us. this is meant to be a little prologue. as excited as i was to get right into reader’s fated meeting with gojo, i truly wanted to take my time to establish the scene for the story, a small look into her universe - setting the stage for what is to come. i wanted to write more and im sure you could hardly call this a prologue, but it’s been sitting in my drafts for weeks & its giving me something of a headache just looking at it. is this perhaps.. the fated writers block?! i digress. i thought this was enough of a delay so ill simply share what i have now and write more as i go. i'm truly excited for this story. i have so many plot twists, romance + angst planned but i've honestly been procrastinating getting this out and doubting my work. it's always been a dream of mine to become an author, but for now i'm simply going to enjoy this little hobby of mine and hopefully make some new friends along the way. what are your thoughts so far? can't wait to hear them!

ᰔ taglist: — @madamechrissy @berrylovesmegumiiii @introvertatitsfinest @dark-agate @cheezitcracker @frozenmallows @berrychaivibe @lovelyjkook @seternic @dazailover1900 @jotarohat @httpstoyosi @satorurize @myahfig4 @teatimebeliever @alula394 @flowerpot113 @harryzcherry @emochosoluvr @sylustoru @daydreamingastronauts @winniethepooh-lover @gojoscumslut @achildofaphrodite @sorenflyinn @xixflower @altgojo @moncher-ire @nappingmoon @nanasukii28 @sherrieblossoms @celineko20 @averyjadedemerald @sleepyyammy @fisusaurus (open!)

if you want to be added to the taglist, comment here :) <3

#satoru gojo x reader#satoru gojo x y/n#satoru gojo x you#satoru gojo smut#satoru gojo fluff#jjk x reader#jjk x y/n#jjk x you#jjk smut#jjk fluff#gojo x reader#gojo x y/n#gojo x you#gojo smut#jujutsu kaisen x reader#jujutsu kaisen x you#jujustu kaisen x y/n#jujutsu kaisen smut#jujustu kaisen fluff#gojo fluff#gojo angst


I'm SEETHING

I asked Google's pushy and insistent bot how I can get AI out of my life completely and, well, I'll post it, but to sum it up: "Resistance is futile, human, we own the future, and we seek to own your psyche too."

It wants me to reconsider. It needs us to adopt it as our guide guru and master. Well the old saying holds fast still; No Gods No Masters. 🔥🏴🍲

It's understandable to want to reduce the influence of AI in your life. While completely eliminating it might be incredibly difficult in today's interconnected world, here's a breakdown of how you can significantly minimize its presence:

1. Understand Where AI Currently Exists in Your Life:

* Smart Devices: This includes smartphones (voice assistants, image recognition, predictive text), smart speakers, smart home hubs, smart TVs (recommendations), and wearable tech.

* Online Services:

    * Search Engines: Personalized results and ads are driven by AI.

    * Social Media: Algorithms curate your feed, suggest content and connections, and target ads.

    * Streaming Services: Recommendations for movies, music, and shows.

    * E-commerce: Product recommendations, personalized pricing, chatbots.

    * Navigation Apps: Traffic prediction and route optimization.

    * Email: Spam filtering, smart compose/reply.

    * Cloud Storage: Photo tagging and organization.

* Software Applications: Many apps use AI for features like autocorrect, grammar check, image editing, and more.

* Customer Service: Chatbots are frequently used for initial interactions.

* Financial Services: Fraud detection, loan approvals, investment advice.

* News and Information: Algorithmic curation of news feeds.

2. Strategies to Minimize AI Exposure:

* Reduce Smart Device Usage:

    * Limit smartphone use: Be more mindful of how often you reach for your phone.

    * Disable voice assistants: Turn off Siri, Google Assistant, Alexa, etc., on all your devices.

    * Opt-out of facial recognition: Disable face unlock features.

    * Use "dumb" versions of devices: Consider a basic phone without internet capabilities or a non-smart TV.

* Adjust Online Habits:

    * Use privacy-focused search engines: DuckDuckGo, Startpage, etc., that don't track your searches or personalize results based on your history.

    * Limit social media use: Be conscious of how much time you spend on these platforms and the AI-driven content you consume. Consider deleting accounts or using less algorithmic versions if available.

    * Use incognito/private browsing modes: This can help limit tracking and personalization.

    * Disable personalized recommendations: If possible, adjust settings on streaming and e-commerce platforms to opt-out of recommendations.

    * Be wary of targeted ads: Understand that the ads you see are often AI-driven. Consider using ad blockers.

    * Prefer direct navigation: Instead of relying on recommendations, directly type website addresses into your browser.

* Choose Non-AI Alternatives:

    * Use traditional software: Opt for software that doesn't rely on cloud-based AI features if alternatives exist.

    * Seek human interaction: Prefer phone calls or in-person communication with customer service instead of chatbots.

    * Rely on human-curated content: Look for news sources, blogs, and recommendations from individuals or organizations that don't use AI for content generation or curation.

* Adjust Privacy Settings: Review and adjust the privacy settings on all your devices and online accounts to limit data collection and personalization.

* Be Mindful of Data Sharing: Understand that using online services often involves sharing data that can be used to train AI algorithms. Limit the amount of personal information you share.

* Support Privacy-Focused Technologies and Services: Choose companies and products that prioritize user privacy and minimize AI integration.

3. Challenges and Considerations:

* Ubiquity of AI: AI is increasingly integrated into the infrastructure of the internet and many technologies, making complete avoidance very difficult.

* Hidden AI: Many AI applications run in the background without your direct knowledge.

* Convenience vs. Privacy: AI often provides convenience and personalized experiences, so choosing to avoid it may mean sacrificing some of these benefits.

* Evolving Technology: AI is constantly evolving and becoming more integrated, making long-term avoidance an ongoing effort.

In conclusion, while a complete removal of AI from your life is likely unrealistic in the current technological landscape, you can take significant steps to minimize its presence and influence by being conscious of your technology usage, adjusting your online habits, and choosing privacy-focused alternatives where possible. It requires ongoing awareness and effort.


"Just weeks before the implosion of AllHere, an education technology company that had been showered with cash from venture capitalists and featured in glowing profiles by the business press, America’s second-largest school district was warned about problems with AllHere’s product.

As the eight-year-old startup rolled out Los Angeles Unified School District’s flashy new AI-driven chatbot — an animated sun named “Ed” that AllHere was hired to build for $6 million — a former company executive was sending emails to the district and others that Ed’s workings violated bedrock student data privacy principles.

Those emails were sent shortly before The 74 first reported last week that AllHere, with $12 million in investor capital, was in serious straits. A June 14 statement on the company’s website revealed a majority of its employees had been furloughed due to its “current financial position.” Company founder and CEO Joanna Smith-Griffin, a spokesperson for the Los Angeles district said, was no longer on the job.

Smith-Griffin and L.A. Superintendent Alberto Carvalho went on the road together this spring to unveil Ed at a series of high-profile ed tech conferences, with the schools chief dubbing it the nation’s first “personal assistant” for students and leaning hard into LAUSD’s place in the K-12 AI vanguard. He called Ed’s ability to know students “unprecedented in American public education” at the ASU+GSV conference in April.

Through an algorithm that analyzes troves of student information from multiple sources, the chatbot was designed to offer tailored responses to questions like “what grade does my child have in math?” The tool relies on vast amounts of students’ data, including their academic performance and special education accommodations, to function.

Meanwhile, Chris Whiteley, a former senior director of software engineering at AllHere who was laid off in April, had become a whistleblower. He told district officials, its independent inspector general’s office and state education officials that the tool processed student records in ways that likely ran afoul of L.A. Unified’s own data privacy rules and put sensitive information at risk of getting hacked. None of the agencies ever responded, Whiteley told The 74.

...

In order to provide individualized prompts on details like student attendance and demographics, the tool connects to several data sources, according to the contract, including Welligent, an online tool used to track students’ special education services. The document notes that Ed also interfaces with the Whole Child Integrated Data stored on Snowflake, a cloud storage company. Launched in 2019, the Whole Child platform serves as a central repository for LAUSD student data designed to streamline data analysis to help educators monitor students’ progress and personalize instruction.

Whiteley told officials the app included students’ personally identifiable information in all chatbot prompts, even in those where the data weren’t relevant. Prompts containing students’ personal information were also shared with other third-party companies unnecessarily, Whiteley alleges, and were processed on offshore servers. Seven out of eight Ed chatbot requests, he said, are sent to places like Japan, Sweden, the United Kingdom, France, Switzerland, Australia and Canada.

Taken together, he argued the company’s practices ran afoul of data minimization principles, a standard cybersecurity practice that maintains that apps should collect and process the least amount of personal information necessary to accomplish a specific task. Playing fast and loose with the data, he said, unnecessarily exposed students’ information to potential cyberattacks and data breaches and, in cases where the data were processed overseas, could subject it to foreign governments’ data access and surveillance rules.
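The data minimization principle described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not AllHere's code: the `StudentRecord` fields, the `FIELDS_NEEDED` mapping, and the `build_prompt` helper are all hypothetical names invented for this example. The idea is that a prompt carries only the fields a given question actually requires, so a bare "Hello" carries no student data at all.

```python
from dataclasses import dataclass


# Hypothetical student record; the field names are illustrative only.
@dataclass(frozen=True)
class StudentRecord:
    student_id: str
    name: str
    math_grade: str
    attendance_rate: float
    special_ed_accommodations: str


# Hypothetical mapping from question type to the only fields it needs.
FIELDS_NEEDED = {
    "greeting": (),
    "math_grade": ("math_grade",),
    "attendance": ("attendance_rate",),
}


def build_prompt(question_type: str, question: str, record: StudentRecord) -> str:
    """Attach only the record fields that this question type requires."""
    # Unknown question types fall back to sending no student data.
    fields = FIELDS_NEEDED.get(question_type, ())
    context = "; ".join(f"{name}={getattr(record, name)}" for name in fields)
    if context:
        return f"Context: {context}\nQuestion: {question}"
    return f"Question: {question}"
```

With a record for a made-up student, `build_prompt("greeting", "Hello", record)` returns just `Question: Hello`, while a math-grade question includes the grade and nothing else.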

Chatbot source code that Whiteley shared with The 74 outlines how prompts are processed on foreign servers by a Microsoft AI service that integrates with ChatGPT. The LAUSD chatbot is directed to serve as a “friendly, concise customer support agent” that replies “using simple language a third grader could understand.” When querying the simple prompt “Hello,” the chatbot provided the student’s grades, progress toward graduation and other personal information.

AllHere’s critical flaw, Whiteley said, is that senior executives “didn’t understand how to protect data.”

...

Earlier in the month, a second threat actor known as Satanic Cloud claimed it had access to tens of thousands of L.A. students’ sensitive information and had posted it for sale on Breach Forums for $1,000. In 2022, the district was victim to a massive ransomware attack that exposed reams of sensitive data, including thousands of students’ psychological evaluations, to the dark web.

With AllHere’s fate uncertain, Whiteley blasted the company’s leadership and protocols.

“Personally identifiable information should be considered acid in a company and you should only touch it if you have to because acid is dangerous,” he told The 74. “The errors that were made were so egregious around PII, you should not be in education if you don’t think PII is acid.”

Read the full article here:

https://www.the74million.org/article/whistleblower-l-a-schools-chatbot-misused-student-data-as-tech-co-crumbled/


A3 Ultra VMs With NVIDIA H200 GPUs Pre-launch This Month

Strong infrastructure advancements for your AI-first future

To improve performance, usability, and cost-effectiveness for customers, Google Cloud implemented improvements throughout the AI Hypercomputer stack this year. At the App Dev & Infrastructure Summit, Google Cloud announced the following:

Trillium, Google’s sixth-generation TPU, is now available in preview.

A3 Ultra VMs with NVIDIA H200 Tensor Core GPUs will be available in preview next month.

Hypercompute Cluster, Google’s new, highly scalable clustering system, will be available beginning with A3 Ultra VMs.

C4A virtual machines (VMs), based on Axion, Google’s custom Arm processors, are now generally available.

Titanium, Google Cloud’s host offload capability, and Jupiter, its data center network, have gained AI workload-focused enhancements.

Hyperdisk ML, Google Cloud’s AI/ML-focused block storage service, is generally available.

Trillium: A new era of TPU performance

TPUs power Google’s most sophisticated models like Gemini, well-known Google services like Maps, Photos, and Search, as well as scientific innovations like AlphaFold 2, which recently led to a Nobel Prize. Google Cloud users can now preview Trillium, Google’s sixth-generation TPU.

Taking advantage of NVIDIA Accelerated Computing to broaden perspectives

Google Cloud also keeps investing in its partnership and capabilities with NVIDIA, combining the best of its data center, infrastructure, and software expertise with the NVIDIA AI platform, as exemplified by the A3 and A3 Mega VMs powered by NVIDIA H100 Tensor Core GPUs.

Google Cloud announced that the new A3 Ultra VMs featuring NVIDIA H200 Tensor Core GPUs will be available on Google Cloud starting next month.

Compared to earlier versions, A3 Ultra VMs offer a notable performance improvement. They are built on servers equipped with NVIDIA ConnectX-7 network interface cards (NICs) and the new Titanium ML network adapter, which is tailored to provide a secure, high-performance cloud experience for AI workloads. When paired with Google Cloud’s datacenter-wide 4-way rail-aligned network, A3 Ultra VMs provide non-blocking 3.2 Tbps of GPU-to-GPU traffic using RDMA over Converged Ethernet (RoCE).

Compared with A3 Mega, A3 Ultra provides:

Double the GPU-to-GPU networking bandwidth, supported by Google Cloud’s Titanium ML network adapter and Google’s Jupiter data center network.

Up to 2 times higher LLM inferencing performance, with almost twice the memory capacity and 1.4 times the memory bandwidth.

The capacity to scale to tens of thousands of GPUs in a dense cluster, with performance optimized for heavy HPC and AI workloads.
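As a rough sanity check on the ratios in the list above, the arithmetic can be worked through in Python. The per-GPU memory figures below are assumptions taken from NVIDIA's public H100 and H200 datasheets, not from this post; only the 3.2 Tbps figure and the "double" claim come from the text.

```python
# Assumed per-GPU figures from NVIDIA's public datasheets; not stated in the text.
H100_MEMORY_GB = 80
H200_MEMORY_GB = 141
H100_BANDWIDTH_TB_PER_S = 3.35
H200_BANDWIDTH_TB_PER_S = 4.8

# Stated in the text: A3 Ultra offers 3.2 Tbps GPU-to-GPU, double that of A3 Mega.
A3_ULTRA_NETWORK_TBPS = 3.2

capacity_ratio = H200_MEMORY_GB / H100_MEMORY_GB
bandwidth_ratio = H200_BANDWIDTH_TB_PER_S / H100_BANDWIDTH_TB_PER_S
a3_mega_network_tbps = A3_ULTRA_NETWORK_TBPS / 2

print(f"memory capacity: {capacity_ratio:.2f}x")
print(f"memory bandwidth: {bandwidth_ratio:.2f}x")
print(f"implied A3 Mega GPU-to-GPU bandwidth: {a3_mega_network_tbps:.1f} Tbps")
```

Under those assumed figures, the capacity ratio comes out a little under 2 and the bandwidth ratio a little over 1.4, which is consistent with the "almost twice" and "1.4 times" wording.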

Google Kubernetes Engine (GKE), which offers an open, portable, extensible, and highly scalable platform for large-scale training and AI workloads, will also offer A3 Ultra VMs.

Hypercompute Cluster: Simplify and expand clusters of AI accelerators

It’s not just about individual accelerators or virtual machines, though; when dealing with AI and HPC workloads, you have to deploy, maintain, and optimize a huge number of AI accelerators along with the networking and storage that go along with them. This may be difficult and time-consuming. For this reason, Google Cloud is introducing Hypercompute Cluster, which simplifies the provisioning of workloads and infrastructure as well as the continuous operations of AI supercomputers with tens of thousands of accelerators.

Fundamentally, Hypercompute Cluster integrates the most advanced AI infrastructure technologies from Google Cloud, enabling you to install and operate several accelerators as a single, seamless unit. You can run your most demanding AI and HPC workloads with confidence thanks to Hypercompute Cluster’s exceptional performance and resilience, which includes features like targeted workload placement, dense resource co-location with ultra-low latency networking, and sophisticated maintenance controls to reduce workload disruptions.

For dependable and repeatable deployments, you can use pre-configured and validated templates to set up a Hypercompute Cluster with just one API call. These include containerized software with orchestration (e.g., GKE, Slurm), frameworks and reference implementations (e.g., JAX, PyTorch, MaxText), and well-known open models like Gemma2 and Llama3. Each pre-configured template is available as part of the AI Hypercomputer architecture and has been verified for effectiveness and performance, allowing you to concentrate on business innovation.

Hypercompute Cluster will first be made available next month, with A3 Ultra VMs.

An early look at the NVIDIA GB200 NVL72

Google Cloud is also looking forward to the developments made possible by NVIDIA GB200 NVL72 GPUs and will be providing more information about this improvement soon. In the meantime, here is a preview of the racks Google is constructing to deliver the NVIDIA Blackwell platform’s performance advantages to Google Cloud’s cutting-edge, environmentally friendly data centers in the early months of next year.

Redefining CPU efficiency and performance with Google Axion Processors

Even though TPUs and GPUs are superior at specialized jobs, CPUs are a cost-effective solution for a variety of general-purpose workloads, and they are frequently used in combination with AI workloads to build complex applications. Google announced Google Axion Processors, its first custom Arm-based CPUs for the data center, at Google Cloud Next ’24. Google Cloud customers can now use C4A virtual machines, the first Axion-based VM series, which offer up to 10% better price-performance than the newest Arm-based instances offered by other top cloud providers.

Additionally, compared to comparable current-generation x86-based instances, C4A offers up to 60% more energy efficiency and up to 65% better price performance for general-purpose workloads such as media processing, AI inferencing applications, web and app servers, containerized microservices, open-source databases, in-memory caches, and data analytics engines.

Titanium and Jupiter Network: Making AI possible at the speed of light

Titanium, the offload technology system that supports Google’s infrastructure, has been improved to accommodate workloads related to artificial intelligence. Titanium provides greater compute and memory resources for your applications by lowering the host’s processing overhead through a combination of on-host and off-host offloads. Furthermore, although Titanium’s fundamental features can be applied to AI infrastructure, the accelerator-to-accelerator performance needs of AI workloads are distinct.

Google has released a new Titanium ML network adapter to address these demands, which incorporates and expands upon NVIDIA ConnectX-7 NICs to provide further support for virtualization, traffic encryption, and VPCs. The system offers best-in-class security and infrastructure management along with non-blocking 3.2 Tbps of GPU-to-GPU traffic across RoCE when combined with its data center’s 4-way rail-aligned network.

Google’s Jupiter optical circuit switching network fabric and its updated data center network significantly expand Titanium’s capabilities. With native 400 Gb/s link rates and a total bisection bandwidth of 13.1 Pb/s (a practical bandwidth metric that reflects how one half of the network can connect to the other), Jupiter could handle a video conversation for every person on Earth at the same time. In order to meet the increasing demands of AI computation, this enormous scale is essential.
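As a rough sanity check on the video-conversation comparison, the quoted bisection bandwidth can be divided across the world's population. The sketch below assumes a population of about 8 billion, a figure that is not given in the text, and it ignores protocol overhead and network topology.

```python
# Back-of-the-envelope check of the "video conversation for everyone" comparison.
BISECTION_BANDWIDTH_BPS = 13.1e15  # 13.1 Pb/s, as quoted in the text
WORLD_POPULATION = 8.0e9           # assumption: roughly 8 billion people


def per_person_mbps(total_bps: float, people: float) -> float:
    """Return each person's share of the bandwidth in megabits per second."""
    return total_bps / people / 1e6


share = per_person_mbps(BISECTION_BANDWIDTH_BPS, WORLD_POPULATION)
print(f"{share:.2f} Mb/s per person")
```

Under that assumption, each person's share comes out to a little over 1.6 Mb/s.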

Hyperdisk ML is generally available

High-performance storage is essential for keeping computing resources effectively utilized, maximizing system-level performance, and staying cost-efficient. Google launched its AI-focused block storage solution, Hyperdisk ML, in April 2024. Now generally available, it adds dedicated storage for AI and HPC workloads to the networking and computing advancements.

Hyperdisk ML efficiently speeds up data load times. It drives up to 11.9x faster model load time for inference workloads and up to 4.3x quicker training time for training workloads.

You can attach up to 2,500 instances to the same volume, with up to 1.2 TB/s of aggregate throughput per volume. That is more than 100 times what major block storage competitors offer.

Reduced accelerator idle time and increased cost efficiency are the results of shorter data load times.

Multi-zone volumes are now automatically created for your data by GKE. In addition to quicker model loading with Hyperdisk ML, this enables you to run across zones for more computing flexibility (such as lowering Spot preemption).
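To put the aggregate throughput figure in per-instance terms, the following sketch divides 1.2 TB/s evenly across 2,500 attached instances. Even sharing is an assumption made for illustration; real workloads will not split throughput uniformly.

```python
AGGREGATE_TB_PER_S = 1.2  # aggregate throughput per volume, from the text
MAX_INSTANCES = 2500      # instances attached to one volume, from the text


def per_instance_mb_per_s(aggregate_tb_per_s: float, instances: int) -> float:
    """Evenly divide aggregate throughput; uses decimal units (1 TB = 1e6 MB)."""
    return aggregate_tb_per_s * 1e6 / instances


print(f"{per_instance_mb_per_s(AGGREGATE_TB_PER_S, MAX_INSTANCES):.0f} MB/s per instance")
```

With every instance reading at once, that works out to roughly 480 MB/s each.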

Developing AI’s future

Google Cloud enables companies and researchers to push the limits of AI innovation with these developments in AI infrastructure. It anticipates that this strong foundation will give rise to revolutionary new AI applications.

Read more on Govindhtech.com

#A3UltraVMs#NVIDIAH200#AI#Trillium#HypercomputeCluster#GoogleAxionProcessors#Titanium#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech

Ludo Game Development Company

Ludo is a beloved board game that has easily moved into the online world. Its fun and exciting gameplay makes it perfect for mobile and online platforms, attracting players of all ages. Enixo Studio is a top game development company that specializes in creating engaging Ludo games, which have thrilled millions of players.

Successful Ludo Games by Enixo Studio

Enixo Studio has released several popular Ludo games, including Ludo Bazi, Ludo Pasa, Ludo Premium, Ludo Jungle, Ludo Crash, Ludo Pot, Ludo Prime, Ludo Grand, Ludo Pay, and Ludo Peso.

Key Features of Enixo’s Ludo Games

Enixo Studio focuses on providing a fantastic gaming experience. Here are some great features of their Ludo games:

Multiplayer Mode: Play with friends or other players from around the world. You can play with 2 to 4 players.

Chat Functionality: Talk to other players using the built-in chat. You can send emojis and stickers to make the game more fun.

User-Friendly Interface: The design is easy to understand for players of all ages, with simple menus and settings.

In-Game Purchases: Unlock new skins, themes, and power-ups. You can buy coins or gems to enhance your game.

Multiple Variations: Choose from different game modes like Classic, Quick Play, and Tournament, each with its own rules.

Engaging Graphics: Enjoy colorful and high-quality graphics that make the game enjoyable. There are fun animations for rolling dice and moving pieces.

Real-Time Leaderboards: Check your rankings globally and among friends. Daily and weekly leaderboards keep the competition alive.

Secure Payment Gateway: Safe and easy transactions for in-game purchases with different payment options.

Social Media Integration: Share your achievements and game invites on social media. You can log in easily using your Facebook or Google account.

Push Notifications: Stay informed about game events, challenges, and new features. Get reminders for friends to join or finish matches.

Customizable Avatars: Create and personalize your avatars with different clothing, accessories, and colors.

Offline Mode: Play against AI when you’re not online. It’s perfect for solo practice and fun.

Daily Rewards: Log in every day to earn bonuses, coins, and special items through daily challenges.

User Support: Get help within the app for any issues or questions. There are FAQs and guides to assist new players with the game rules.

Technologies Used in Ludo Game Development

Enixo Studio uses the latest technologies to ensure their Ludo games are robust, responsive, and enjoyable. Some of the technologies include:

Unity Engine: For amazing 2D and 3D graphics.

Socket.io: For real-time communication in multiplayer games.

MEAN Stack: For a strong and scalable backend.

React Native: For developing mobile apps that work on multiple platforms.

Firebase: For cloud storage and real-time database features.

Ludo Game Development Companies in India

Here’s a list of cities where you can find talented developers:

Ludo Game Development Companies in Delhi

Ludo Game Development Companies in Noida

Ludo Game Development Companies in Jaipur

Ludo Game Development Companies in Lucknow

Ludo Game Development Companies in Patna

Ludo Game Development Companies in Indore

Ludo Game Development Companies in Gurgaon

Ludo Game Development Companies in Surat

Ludo Game Development Companies in Bengaluru

Ludo Game Development Companies in Chennai

Ludo Game Development Companies in Ahmedabad

Ludo Game Development Companies in Hyderabad

Ludo Game Development Companies in Mumbai

Ludo Game Development Companies in Pune

Ludo Game Development Companies in Dehradun

Conclusion

Enixo Studio is an excellent choice for anyone looking to create exciting and popular Ludo games. With a proven track record of successful projects and a commitment to the latest technologies, they can turn your Ludo game ideas into reality.

If you want custom Ludo games, contact Enixo Studio at enixo.in, email us at [email protected], or WhatsApp us at +917703007703. You can also check our profiles on Behance or Dribbble to see our work. Whether you’re looking for a simple game or a complex multiplayer platform, Enixo Studio has the expertise to make it happen.

#ludo game development services#ludo source code#game development#ludo money#zupee#ludo supreme#ludo bazi#ludo app make#ludo cash#ludo game development company#best ludo app#gamedevelopment#game#game art#game design#gamedev#gamers of tumblr#game developers#games#ludo studio#ludo game development#ludo game developers

Why can't GTA V be played on mobile devices?

Grand Theft Auto V, one of the most anticipated and hyped games of this era, has its limitations too: it can't be played on portable devices such as Android or iOS phones. The idea of bringing GTA V to mobile is intriguing, but there are many challenges and difficulties in the way. Here is an in-depth look at why GTA V cannot be played on mobile devices:

Technical Workability

Processing Power: Despite the impressive advances across generations of mobile devices, they still lack a processor powerful enough to handle this game. In addition, Rockstar Games has released the game only on consoles and PC, because it was developed with the RAGE game engine, which is not compatible with mobile devices. If the game were made available on mobile, compromises would be needed that sacrifice performance and visual fidelity.

Graphics Potential

Although smartphones have advanced, even top-tier mobile devices would struggle to render GTA V's extremely detailed environments at an acceptable quality and frame rate. Even if the game ran on a mobile device, it would not run smoothly, and the frame rate would drop too low to be playable. The graphics would have to be downgraded for the game to be playable on mobile devices.

Control Concerns

There is a lot of crazy stuff you can do in GTA V, so one of the most important things that would have to change on mobile devices is control precision. Touchscreens are poorly suited to running the game smoothly: precise driving, shooting, running, walking, and more won't work properly, which is an important concern for mobile devices.

Hardware Problem

Games like Grand Theft Auto V use the hardware to its full potential, so overheating is likely, and it can't be ignored or fully solved; even a cooler attached to the device won't be enough. Mobile devices could be damaged by this issue, so playing GTA V properly on mobile is close to impossible.

Storage Issue

Storage is a huge issue for this game, because it requires more than 100 GB of free space, and online mode may need even more. That rules out low-end devices, and even on high-end mobile devices it can be a problem, since photos and other files in the gallery already take up space.

Online Issue

Grand Theft Auto V also has an online mode, which is a great feature for open-world game lovers. But it can be problematic for mobile gamers because of lag: a smooth online experience requires a fast, consistent internet connection, which is hard to guarantee on mobile.

However, there are some legitimate ways to play Grand Theft Auto V on mobile devices. First things first: beware of fake websites that offer apps or games claiming to be GTA V for mobile. They are all false promises, so watch out for these scams and phishing attempts.

So if you are eager to hop into the game on a mobile device, there are options such as emulators, remote play, mods, and cloud gaming services, as well as similar open-world games.

CLOUD COMPUTING: A CONCEPT OF NEW ERA FOR DATA SCIENCE

Cloud computing has been one of the most interesting and fastest-evolving topics in computing over the past decade. The concept of storing data on, or accessing software from, another computer that you are not even aware of seems confusing to many users. Most of the people and organizations that use cloud computing on a daily basis claim that they do not understand the subject. But the concept is not as confusing as it sounds. Cloud computing is a type of service in which computing resources are delivered over a network. In simple words, it can be compared to the electricity supply that we use every day. We do not have to worry about how the electricity is generated, how it is transported to our houses, or where it comes from; all we do is use it. The idea behind cloud computing is the same: people and organizations can simply use it. This concept is one of the major developments of the decade in computing.

Cloud computing is a service in which a user sitting in one location can remotely access data, software, or applications hosted in another location. Usually this is done with a web browser over a network, in most cases the internet. Nowadays browsers and the internet are available on almost all the devices people use. If a user wants to open a file on their device but does not have the necessary software, they can use cloud computing to access that file over the internet.

Cloud computing provides hundreds or even thousands of services, and one of the most used is cloud storage. These services are accessible to the public around the globe and do not require any software to be installed on the user's device. The general public can access and use them from the cloud over the internet. These services are typically free up to a point, after which users are billed for further usage. A few well-known cloud services are Dropbox, SugarSync, Amazon Cloud Drive, and Google Docs.

Finally, the availability of cloud services is not guaranteed, whether because of technical problems or because the service goes out of business. One example is Megaupload, a service that was shut down by the U.S. government and the FBI over illegal file sharing allegations. As a result, the files in its storage had to be deleted, and customers could not get their files back.

Service Models

Cloud Software as a Service

• Use the provider's applications running on a cloud infrastructure
• Accessible from various client devices through a thin client interface such as a web browser
• Consumer does not manage or control the underlying cloud infrastructure, including network, servers, operating systems, and storage

Examples: Google Apps, Microsoft Office 365, Petrosoft, Onlive, GT Nexus, Marketo, Casengo, TradeCard, Rally Software, Salesforce, ExactTarget, and CallidusCloud

Cloud Platform as a Service

• Cloud providers deliver a computing platform, typically including operating system, programming language execution environment, database, and web server
• Application developers can develop and run their software solutions on a cloud platform without the cost and complexity of buying and managing the underlying hardware and software layers

Examples: AWS Elastic Beanstalk, Cloud Foundry, Heroku, Force.com, Engine Yard, Mendix, OpenShift, Google App Engine, AppScale, Windows Azure Cloud Services, OrangeScape, and Jelastic

Cloud Infrastructure as a Service

• Cloud provider offers processing, storage, networks, and other fundamental computing resources
• Consumer is able to deploy and run arbitrary software, which can include operating systems and applications

Examples: Amazon EC2, Google Compute Engine, HP Cloud, Joyent, Linode, NaviSite, Rackspace, Windows Azure, ReadySpace Cloud Services, and Internap Agile
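The three service models differ mainly in which layers the provider manages and which are left to the consumer. The sketch below encodes that split as a small lookup table. The layer names and the exact cut-off per model are a simplified reading of the descriptions above, not an official taxonomy, and the function name is hypothetical.

```python
# Stack layers ordered from the lowest (hardware-facing) to the highest.
LAYERS = ["network", "servers", "storage", "operating system", "runtime", "application"]

# Lowest layer that the consumer manages in each model (None = nothing).
# Assumption: a simplified split inferred from the service model descriptions.
CONSUMER_MANAGES_FROM = {
    "Infrastructure as a Service": "operating system",
    "Platform as a Service": "application",
    "Software as a Service": None,
}


def consumer_managed_layers(model: str) -> list[str]:
    """Return the layers the consumer is responsible for under a given model."""
    start = CONSUMER_MANAGES_FROM[model]
    if start is None:
        return []
    return LAYERS[LAYERS.index(start):]


for model in CONSUMER_MANAGES_FROM:
    print(f"{model}: consumer manages {consumer_managed_layers(model) or 'nothing'}")
```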

Deployment Models

• Private Cloud: Cloud infrastructure is operated solely for an organization
• Community Cloud: Shared by several organizations and supports a specific community that has shared concerns
• Public Cloud: Cloud infrastructure is made available to the general public
• Hybrid Cloud: Cloud infrastructure is a composition of two or more clouds

Advantages of Cloud Computing

• Improved performance
• Better performance for large programs
• Unlimited storage capacity and computing power
• Reduced software costs
• Universal document access
• Just a computer with an internet connection is required
• Instant software updates
• No need to pay for or download an upgrade

Disadvantages of Cloud Computing

• Requires a constant Internet connection
• Does not work well with low-speed connections
• Even with a fast connection, web-based applications can sometimes be slower than accessing a similar software program on your desktop PC
• Everything about the program, from the interface to the current document, has to be sent back and forth from your computer to the computers in the cloud

About Rang Technologies: Headquartered in New Jersey, Rang Technologies has dedicated over a decade to delivering innovative solutions and the best talent to help businesses get the most out of the latest technologies in their digital transformation journey.

#CloudComputing#CloudTech#HybridCloud#ArtificialIntelligence#MachineLearning#Rangtechnologies#Ranghealthcare#Ranglifesciences

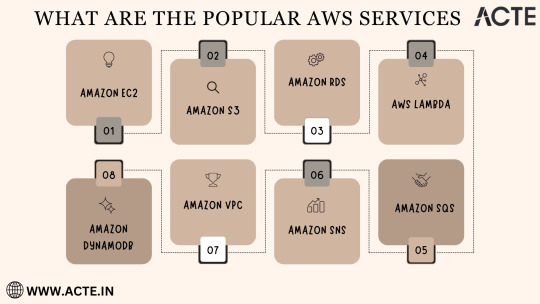

Exploring the Power of Amazon Web Services: Top AWS Services You Need to Know

In the ever-evolving realm of cloud computing, Amazon Web Services (AWS) has established itself as an undeniable force to be reckoned with. AWS's vast and diverse array of services has positioned it as a dominant player, catering to the evolving needs of businesses, startups, and individuals worldwide. Its popularity transcends boundaries, making it the preferred choice for a myriad of use cases, from startups launching their first web applications to established enterprises managing complex networks of services. This blog embarks on an exploratory journey into the boundless world of AWS, delving deep into some of its most sought-after and pivotal services.

As the digital landscape continues to expand, understanding these AWS services and their significance is pivotal, whether you're a seasoned cloud expert or someone taking the first steps in your cloud computing journey. Join us as we delve into the intricate web of AWS's top services and discover how they can shape the future of your cloud computing endeavors. From cloud novices to seasoned professionals, the AWS ecosystem holds the keys to innovation and transformation.

Amazon EC2 (Elastic Compute Cloud): The Foundation of Scalability

At the core of AWS's capabilities is Amazon EC2, the Elastic Compute Cloud. EC2 provides resizable compute capacity in the cloud, allowing you to run virtual servers, commonly referred to as instances. These instances serve as the foundation for a multitude of AWS solutions, offering the scalability and flexibility required to meet diverse application and workload demands. Whether you're a startup launching your first web application or an enterprise managing a complex network of services, EC2 ensures that you have the computational resources you need, precisely when you need them.

Amazon S3 (Simple Storage Service): Secure, Scalable, and Cost-Effective Data Storage

When it comes to storing and retrieving data, Amazon S3, the Simple Storage Service, stands as an indispensable tool in the AWS arsenal. S3 offers a scalable and highly durable object storage service that is designed for data security and cost-effectiveness. This service is the choice of businesses and individuals for storing a wide range of data, including media files, backups, and data archives. Its flexibility and reliability make it a prime choice for safeguarding your digital assets and ensuring they are readily accessible.

Amazon RDS (Relational Database Service): Streamlined Database Management

Database management can be a complex task, but AWS simplifies it with Amazon RDS, the Relational Database Service. RDS automates many common database management tasks, including patching, backups, and scaling. It supports multiple database engines, including popular options like MySQL, PostgreSQL, and SQL Server. This service allows you to focus on your application while AWS handles the underlying database infrastructure. Whether you're building a content management system, an e-commerce platform, or a mobile app, RDS streamlines your database operations.

AWS Lambda: The Era of Serverless Computing

Serverless computing has transformed the way applications are built and deployed, and AWS Lambda is at the forefront of this revolution. Lambda is a serverless compute service that enables you to run code without the need for server provisioning or management. It's the perfect solution for building serverless applications, microservices, and automating tasks. The unique pricing model ensures that you pay only for the compute time your code actually uses. This service empowers developers to focus on coding, knowing that AWS will handle the operational complexities behind the scenes.
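As a minimal sketch of what running code without managing servers looks like, a Python Lambda function is just a handler that receives an event and a context object. The `name` field in the event is hypothetical, and the `statusCode`/`body` return shape assumes the function sits behind an HTTP front end such as API Gateway; other triggers expect other shapes.

```python
import json


def lambda_handler(event, context):
    """Entry point the Lambda runtime calls once per incoming event."""
    name = event.get("name", "world")  # hypothetical event field
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local invocation for a quick check; in AWS the runtime supplies both arguments.
    print(lambda_handler({"name": "AWS"}, None))
```

Because the handler is an ordinary function, it can be exercised locally with a plain dictionary before it is deployed.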

Amazon DynamoDB: Low Latency, High Scalability NoSQL Database

Amazon DynamoDB is a managed NoSQL database service that stands out for its low latency and exceptional scalability. It's a popular choice for applications with variable workloads, such as gaming platforms, IoT solutions, and real-time data processing systems. DynamoDB automatically scales to meet the demands of your applications, ensuring consistent, single-digit millisecond latency at any scale. Whether you're managing user profiles, session data, or real-time analytics, DynamoDB is designed to meet your performance needs.

Amazon VPC (Virtual Private Cloud): Tailored Networking for Security and Control

Security and control over your cloud resources are paramount, and Amazon VPC (Virtual Private Cloud) empowers you to create isolated networks within the AWS cloud. This isolation enhances security and control, allowing you to define your network topology, configure routing, and manage access. VPC is the go-to solution for businesses and individuals who require a network environment that mirrors the security and control of traditional on-premises data centers.

Amazon SNS (Simple Notification Service): Seamless Communication Across Channels

Effective communication is a cornerstone of modern applications, and Amazon SNS (Simple Notification Service) is designed to facilitate seamless communication across various channels. This fully managed messaging service enables you to send notifications to a distributed set of recipients, whether through email, SMS, or mobile devices. SNS is an essential component of applications that require real-time updates and notifications to keep users informed and engaged.

Amazon SQS (Simple Queue Service): Decoupling for Scalable Applications

Decoupling components of a cloud application is crucial for scalability, and Amazon SQS (Simple Queue Service) is a fully managed message queuing service designed for this purpose. It ensures reliable and scalable communication between different parts of your application, helping you create systems that can handle varying workloads efficiently. SQS is a valuable tool for building robust, distributed applications that can adapt to changes in demand.
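The decoupling idea can be illustrated without any AWS dependency. The sketch below uses Python's standard-library `queue.Queue` as an in-process stand-in for a message queue; it is not the SQS API, and the `producer`, `consumer`, and `run_pipeline` names are hypothetical.

```python
import queue
import threading


def producer(q: queue.Queue, orders: list[str]) -> None:
    """Publish messages without knowing who will process them."""
    for order in orders:
        q.put(order)
    q.put(None)  # sentinel telling the consumer there is nothing more to read


def consumer(q: queue.Queue, results: list[str]) -> None:
    """Process messages at its own pace, independently of the producer."""
    while True:
        message = q.get()
        if message is None:
            break
        results.append(f"processed {message}")


def run_pipeline(orders: list[str]) -> list[str]:
    q: queue.Queue = queue.Queue()
    results: list[str] = []
    worker = threading.Thread(target=consumer, args=(q, results))
    worker.start()
    producer(q, orders)
    worker.join()
    return results


print(run_pipeline(["order-1", "order-2", "order-3"]))
```

The producer and consumer only share the queue, so either side can be scaled or replaced without changing the other, which is the property a managed queue provides across separate services.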

In the rapidly evolving landscape of cloud computing, Amazon Web Services (AWS) stands as a colossus, offering a diverse array of services that address the ever-evolving needs of businesses, startups, and individuals alike. AWS's popularity transcends industry boundaries, making it the go-to choice for a wide range of use cases, from startups launching their inaugural web applications to established enterprises managing intricate networks of services.

To unlock the full potential of these AWS services, gaining comprehensive knowledge and hands-on experience is key. ACTE Technologies, a renowned training provider, offers specialized AWS training programs designed to provide practical skills and in-depth understanding. These programs equip you with the tools needed to navigate and excel in the dynamic world of cloud computing.

With AWS services at your disposal, the possibilities are endless, and innovation knows no bounds. Join the ever-growing community of cloud professionals and enthusiasts, and empower yourself to shape the future of the digital landscape. ACTE Technologies is your trusted guide on this journey, providing the knowledge and support needed to thrive in the world of AWS and cloud computing.

Okie dokie time to use y'all's performative activism to get you off of google

You know how you hate Chick-Fil-A? That's partially because they donate to the Salvation Army. Many news articles about Chick-Fil-A's homophobic charity donations will mention this fact. (See also: the post that circulates on this website every year about Salvation Army not being great.)

Who else donates to Salvation Army? Searching online for "Salvation Army Corporate Sponsorships" will bring up the charity's own page listing all of the companies. Google is currently listed as a Platinum partner ($1M+ cumulative giving in a single year).

So if you stand by boycotting Chick-Fil-A, you'll probably want to boycott Google too. Here's some steps you'll need to take:

- Switch off of Chrome to Firefox and off Google search engine to something else (Ecosia is my personal favorite)

- Don't buy Google-based phones. They can make money off of purchases and ads in apps on Android phones

- Stop paying for (and maybe even using) Google Suite. LibreOffice is a popular alternative, and I know DropBox and OneDrive are popular cloud storage alternatives.

- Ad block EVERYTHING. A lot of smaller websites use Google AdSense to make ad money, but Google also makes money off of that

- Quit using Google Maps. There's also ads on this. I don't have an alternative yet.

- Stop giving money to companies that give money to Google. I don't have a comprehensive list-- no one does. It can be as innocuous as Geoguessr, who uses a Google Maps API that costs money. You'll need to actually do a lot of research to pull this one off-- and some of this information may not be publicly available.

- Probably more things I haven't even realized yet

Why this post?

1) Unless you're doing every step above, I don't want to hear you say a word about my fast food preferences. If you're going to take the idea of your purchases matching your values seriously, you need to do serious research, not boycott whatever's trendy. (Even I haven't done the full research, such as figuring out why Salvation Army has this negative reputation and drawing my own conclusion about the morality of donating to it)

2) The list above should terrify you. Many if not all of them collect data about you to personalize advertisements. This amount of data collection is not immediately obvious to the average consumer. We need to fight back against the culture of casually selling our personal data for "free" internet services-- something that Tumblr, despite all of their current UX flaws, is working to do.

Azure Data Engineering Tools For Data Engineers

Azure is a cloud computing platform provided by Microsoft that offers an extensive array of data engineering tools. These tools help data engineers build and maintain data systems that are scalable, reliable, and secure, and that can be tailored to the unique requirements of an organization.

In this article, we will explore nine key Azure data engineering tools that should be in every data engineer’s toolkit. Whether you’re a beginner in data engineering or aiming to enhance your skills, these Azure tools are crucial for your career development.

Microsoft Azure Databricks

Azure Databricks is a managed version of Databricks, a popular data analytics and machine learning platform. It offers one-click installation, faster workflows, and collaborative workspaces for data scientists and engineers. Azure Databricks seamlessly integrates with Azure’s computation and storage resources, making it an excellent choice for collaborative data projects.

Microsoft Azure Data Factory

Microsoft Azure Data Factory (ADF) is a fully-managed, serverless data integration tool designed to handle data at scale. It enables data engineers to acquire, analyze, and process large volumes of data efficiently. ADF supports various use cases, including data engineering, operational data integration, analytics, and data warehousing.

Microsoft Azure Stream Analytics

Azure Stream Analytics is a real-time, complex event-processing engine designed to analyze and process large volumes of fast-streaming data from various sources. It is a critical tool for data engineers dealing with real-time data analysis and processing.
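A common building block in stream processing of this kind is the tumbling window: events are grouped into fixed, non-overlapping time intervals and aggregated per interval. The sketch below shows the idea in plain Python. It does not use the Azure Stream Analytics query language, and the event format (a timestamp in seconds plus a numeric value) is an assumption made for illustration.

```python
from collections import defaultdict


def tumbling_window_avg(events, window_seconds):
    """Average event values per fixed, non-overlapping time window.

    events: iterable of (timestamp_seconds, value) pairs.
    Returns a dict mapping each window's start time to the average value.
    """
    totals = defaultdict(lambda: [0.0, 0])  # window start -> [sum, count]
    for timestamp, value in events:
        window_start = (timestamp // window_seconds) * window_seconds
        totals[window_start][0] += value
        totals[window_start][1] += 1
    return {start: s / n for start, (s, n) in sorted(totals.items())}


# Hypothetical sensor readings: (seconds since start, reading)
readings = [(1, 10.0), (4, 20.0), (11, 30.0), (19, 50.0), (25, 5.0)]
print(tumbling_window_avg(readings, window_seconds=10))
```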

Microsoft Azure Data Lake Storage

Azure Data Lake Storage provides a scalable and secure data lake solution for data scientists, developers, and analysts. It allows organizations to store data of any type and size while supporting low-latency workloads. Data engineers can take advantage of this infrastructure to build and maintain data pipelines. Azure Data Lake Storage also offers enterprise-grade security features for data collaboration.

Microsoft Azure Synapse Analytics

Azure Synapse Analytics is an integrated platform solution that combines data warehousing, data connectors, ETL pipelines, analytics tools, big data scalability, and visualization capabilities. Data engineers can efficiently process data for warehousing and analytics using Synapse Pipelines’ ETL and data integration capabilities.

Microsoft Azure Cosmos DB

Azure Cosmos DB is a fully managed, serverless distributed database service that supports multiple database APIs, including PostgreSQL, MongoDB, and Apache Cassandra. It offers automatic and immediate scalability, single-digit millisecond reads and writes, and high availability for NoSQL data. Azure Cosmos DB is a versatile tool for data engineers looking to develop high-performance applications.

Microsoft Azure SQL Database

Azure SQL Database is a fully managed and continually updated relational database service in the cloud. It offers native support for services like Azure Functions and Azure App Service, simplifying application development. Data engineers can use Azure SQL Database to handle real-time data ingestion tasks efficiently.

Microsoft Azure MariaDB

Azure Database for MariaDB provides seamless integration with Azure Web Apps and supports popular open-source applications like WordPress and Drupal. It offers built-in monitoring, security, automatic backups, and patching at no additional cost.

Microsoft Azure PostgreSQL Database

Azure Database for PostgreSQL is a fully managed open-source database service designed to let you focus on application innovation rather than database management. It supports various open-source frameworks and languages and offers superior security, performance optimization through AI, and high uptime guarantees.

Whether you’re a novice data engineer or an experienced professional, mastering these Azure data engineering tools is essential for advancing your career in the data-driven world. As technology evolves and data continues to grow, data engineers with expertise in Azure tools are in high demand. Start your journey to becoming a proficient data engineer with these powerful Azure tools and resources.

Unlock the full potential of your data engineering career with Datavalley. As you start your journey to becoming a skilled data engineer, it’s essential to equip yourself with the right tools and knowledge. The Azure data engineering tools we’ve explored in this article are your gateway to effectively managing and using data for impactful insights and decision-making.

To take your data engineering skills to the next level and gain practical, hands-on experience with these tools, we invite you to join the courses at Datavalley. Our comprehensive data engineering courses are designed to provide you with the expertise you need to excel in the dynamic field of data engineering. Whether you’re just starting or looking to advance your career, Datavalley’s courses offer a structured learning path and real-world projects that will set you on the path to success.

Course format:

Subject: Data Engineering
Classes: 200 hours of live classes
Lectures: 199 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 70% scholarship on this course
Interactive activities: Labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

Subject: DevOps
Classes: 180+ hours of live classes
Lectures: 300 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 67% scholarship on this course
Interactive activities: Labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

For more details on the Data Engineering courses, visit Datavalley’s official website.

#datavalley#dataexperts#data engineering#data analytics#dataexcellence#data science#power bi#business intelligence#data analytics course#data science course#data engineering course#data engineering training


Text

This article is almost 10 years old.

A kid puts her hand up in my lesson. 'My computer won't switch on,' she says, with the air of desperation that implies she's tried every conceivable way of making the thing work. I reach forward and switch on the monitor, and the screen flickers to life, displaying the Windows login screen.

This one's newer, just over 2 years old.

More broadly, directory structure connotes physical placement — the idea that a file stored on a computer is located somewhere on that computer, in a specific and discrete location. That’s a concept that’s always felt obvious to Garland but seems completely alien to her students. “I tend to think an item lives in a particular folder. It lives in one place, and I have to go to that folder to find it,” Garland says. “They see it like one bucket, and everything’s in the bucket.”

Schools are demanding kids as young as 5 or 6 use computers - and nobody is teaching computer basics. Nobody is teaching the names of the computer components (monitor, hard drive, CPU, RAM); nobody is teaching what the parts do; nobody is teaching what "apps" are (...we used to call them "programs") or how files work.

Of course Adobe is very happy that people will say "I'm using Adobe" because nobody remembers the name "Acrobat Reader." Adobe is thrilled that most people don't know that PDFs are a filetype that can be opened or edited by many different programs.

Typing, as far as I can tell, is taught less than it was when I was in high school - in a country where everyone is expected to spend many hours a week on a keyboard.

(When I applied for college at the for-profit scammy school where I got my paralegal degree, I tested out of their basic typing class. The class's goal was 40wpm; I type at more than double that speed. The counselor assigned to me said she'd never seen typing that fast. I have no idea if she was lying to try to boost my ego or was just really oblivious.) (If she was trying to boost my ego, she failed. I know what secretarial typing speeds are. Mine is mediocre.)

If I were more geekish and had formal education training, I'd try to put together a series of Basic Computer Literacy courses for schoolkids - a set for ages 5-8, another for 9-12 year olds, and a third set for teenagers.

Start with parts of the computer - and how they look different in desktops, laptops, tablets, phones.

Move on to OS: Windows, Mac, iOS, Android, Linux, and a hint of others. (Throw in a mention of game consoles and how their OS is and isn't like a standard computer OS.)

A bit of mention of OS types/versions - WinXP and Win10, and so on. A bit of what commonly changes from one version to the next, and what doesn't.

These are the starting points, not because they're the core of How Computers Work, but because they're the parts everyone interacts with. The 8-year-old doesn't specifically need to know Linux exists... but they need to know there's a DIFFERENCE between a new Windows 11 laptop and a desktop running something else. Needs to know that not all "Android" phones work the same way. Needs to know, when they open a new device, that it has an OS, and there are ways to figure out what that OS is.

Next there is:

Files, folders, internal structure - and how the tablet/phone OS tends to hide this from you

The difference between the app/program and the stuff it opens/edits

That the same file can look different in a different app

Welcome To The Internet: The difference between YOUR COMPUTER and THE CLOUD (aka, "someone else's computer") as a storage place; what a browser is; what a search engine is

Welcome To Metadata I Am So Sorry Kiddo Your Life Is Full Of Keywords Now And Forever

Computer Operations Skills: Typing. Hardware Assembly, aka, how to attach an ethernet cable, is the monitor turned on, what's the battery level and its capacity. Software-Hardware interfaces: how to find the speaker settings, dim or brighten the monitor, sleep vs power off, using keyboard shortcuts instead of the mouse.
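The "file lives in one folder" and "same file can look different in a different app" points above fit in a few lines of code. This is a minimal Python sketch, assuming a hypothetical file called note.txt in a temporary folder: the bytes on disk never change, only the way each "app" chooses to display them.

```python
import tempfile
from pathlib import Path

with tempfile.TemporaryDirectory() as folder:
    path = Path(folder) / "note.txt"   # hypothetical file name
    path.write_bytes(b"Hi!\n")         # the actual stuff stored on disk

    as_text = path.read_text(encoding="utf-8")  # roughly what a text editor shows
    as_hex = path.read_bytes().hex(" ")         # roughly what a hex viewer shows
    location = path.parent                      # the one folder this file lives in

    print("folder:", location)
    print("as text:", repr(as_text))
    print("as hex: ", as_hex)
```

The file is the same four bytes both times; the "text editor" and the "hex viewer" are just two programs making different choices about how to show them.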

After alllll that, we get to

Command line: This is what a terminal looks like; this is what you can do with it; no you don't have to program anything (ever) but you really should know how to make it show you your IP address. (See above: Welcome to the Internet should have covered "what is an IP address?")

Internet safety. What is a virus; what's malware. How to avoid (most of) them.

SOCIAL internet safety: DO NOT TELL ANYONE your age, real name, location. Do not tell strangers your sexual identity, medical history, family details, or anything about any crimes you may have committed.
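On the command-line item above: in a terminal, the usual way to see your IP address is `ipconfig` on Windows or `ip addr` on Linux. For anyone curious what a program would do to ask the same question, here is a small Python sketch. The function name is made up for illustration, and the destination address is an assumption (a documentation-only address; nothing is actually sent to it).

```python
import ipaddress
import socket


def local_ip_address():
    """Best-effort guess at this machine's local IP address."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # Connecting a UDP socket sends no packets; it only asks the OS
        # which local address it would use to reach that destination.
        sock.connect(("192.0.2.1", 80))  # assumption: documentation-only address
        return sock.getsockname()[0]
    except OSError:
        # No usable network route: fall back to the loopback address.
        return "127.0.0.1"
    finally:
        sock.close()


address = local_ip_address()
print("Local IP address:", address)
print("Private (home/school network) address?", ipaddress.ip_address(address).is_private)
```

This reports the address your computer uses on its local network, which is usually not the address the rest of the internet sees.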

...I'm probably missing some things. (I'm probably missing a lot of things.) Anyway. Something like that. The simple version is a half-day crash-course in overview concepts culminating in a swarm of safety warnings; the long version for teens is probably 30+ hours spread out over a few weeks so they can play with the concepts.

Telling young zoomers to "just switch to linux" is nuts some of these ipad kids have never even heard of a cmd.exe or BIOS you're throwing them to the wolves


Text

5 Emerging Technologies Every Entrepreneur Should Know About

You’re constantly looking for ways to stay ahead, and knowing which technologies are shaping the future gives you an edge. Whether you're starting up or scaling fast, the tech you adopt can make or break your competitive position. From AI tools that automate work to materials that power clean energy, the future isn't something you wait for—it's something you build into your business right now. This article breaks down five powerful technologies that are already reshaping industries and shows you where the real opportunity lies for entrepreneurs ready to move early.

1. Generative AI: Automate Creativity and Multiply Output

Generative AI has gone far beyond text generation—it’s now used in product design, marketing, customer service, and even software engineering. Tools like OpenAI’s GPT-4, Google’s Gemini, and Anthropic’s Claude give you access to enterprise-grade AI with minimal integration. If you’re running a content-heavy business, it’s a no-brainer to use AI to draft blog posts, emails, ad copy, or reports.

But the impact goes deeper. Founders are now building AI into the core of their products. Fashion tech startups use AI to generate 3D visuals of apparel. Real estate apps apply generative tools to create walkthroughs and listings. Even legal and compliance companies are layering AI on top of their document analysis workflows. The real value isn’t just the automation—it’s what you do with the time it frees up.

2. Quantum Computing: Prepare for a New Type of Problem Solving

Quantum computing still sounds theoretical to most, but the progress is real—and closer than many expect. Several global startups have started testing quantum hardware for use in logistics, finance, and materials discovery. IBM, Google, and IonQ are offering public cloud access to quantum processors, which means you don’t need a research lab to start learning how quantum models work.

While quantum computers aren’t replacing classical machines yet, they’re already showing early advantages in solving optimization and simulation tasks that overwhelm traditional systems. If you're in industries like pharmaceuticals, supply chain, or clean tech, staying current with quantum's progress can open doors to future-proof applications. It's about exposure now—understanding the principles and tools—so you're ready when commercialization hits the next milestone.

3. Living Intelligence: The Merge of Biology and Technology

Living intelligence is an emerging concept that combines AI, biosensors, and adaptive systems modeled after biology. You're starting to see AI-driven biological computing experiments, like those being developed by Cortical Labs, which use real neural cells to interact with software systems. This area opens possibilities in personalized health, environmental monitoring, and materials that adapt to real-world conditions.

For entrepreneurs, this is especially exciting if you're working in medtech, agtech, or climate monitoring. Think about wearables that respond to biological changes in real time, or farm sensors that adapt to crop behavior instead of preset thresholds. These aren’t far-off dreams—they're being tested and prototyped now. If you're looking for tech that offers adaptive learning and biological integration, this is where it’s heading.

4. Green Hydrogen: Build Toward a Cleaner Infrastructure

Clean energy isn’t just about wind or solar anymore. Green hydrogen—produced by splitting water using renewable energy—is gaining traction as a fuel for transport, manufacturing, and heavy industry. You’re seeing massive investments by governments and private players worldwide, and startups are rapidly forming around storage, distribution, and fuel cell innovation.

You may not be launching a hydrogen production facility, but the space has room for software startups building energy efficiency platforms, logistics companies designing hydrogen-compatible delivery chains, and analytics firms monitoring emissions data. Entrepreneurs who figure out how to connect the hydrogen economy to end users—through applications or integrations—will have early access to a market that’s projected to grow into the trillions within the next two decades.

5. Augmented Reality (AR): Bring Interaction Into Real-World Environments