#apify tools


💼 Unlock LinkedIn Like Never Before with the LinkedIn Profile Explorer!

Need to extract LinkedIn profile data effortlessly? Meet the LinkedIn Profile Explorer by Dainty Screw—your ultimate tool for automated LinkedIn data collection.

✨ What This Tool Can Do:

• 🧑💼 Extract names, job titles, and company details.

• 📍 Gather profile locations and industries.

• 📞 Scrape contact information (if publicly available).

• 🚀 Collect skills, education, and more from profiles!

💡 Perfect For:

• Recruiters sourcing top talent.

• Marketers building lead lists.

• Researchers analyzing career trends.

• Businesses creating personalized outreach campaigns.

🚀 Why Choose the LinkedIn Profile Explorer?

• Accurate Data: Scrapes reliable and up-to-date profile details.

• Customizable Searches: Target specific roles, industries, or locations.

• Time-Saving Automation: Save hours of manual work.

• Scalable for Big Projects: Perfect for bulk data extraction.

🔗 Get Started Today:

Simplify LinkedIn data collection with one click: LinkedIn Profile Explorer

🙌 Whether you’re hiring, marketing, or researching, this tool makes LinkedIn data extraction fast, easy, and reliable. Try it now!

Tags: #LinkedInScraper #ProfileExplorer #WebScraping #AutomationTools #Recruitment #LeadGeneration #DataExtraction #ApifyTools


Using Indeed Jobs Data for Business

The Indeed scraper is a powerful tool that allows you to extract job listings and associated details from the indeed.com job search website. Follow these steps to use the scraper effectively:

1. Understanding the Purpose:

The Indeed scraper is used to gather job data for analysis, research, lead generation, or other purposes.

It uses web scraping techniques to navigate through search result pages, extract job listings, and retrieve relevant information like job titles, companies, locations, salaries, and more.

2. Why Scrape Indeed.com:

There are various use cases for an Indeed jobs scraper, including:

Job Market Research

Competitor Analysis

Company Research

Salary Benchmarking

Location-Based Insights

Lead Generation

CRM Enrichment

Marketplace Insights

Career Planning

Content Creation

Consulting Services

3. Accessing the Indeed Scraper:

Go to the indeed.com website.

Search for jobs using filters like job title, company name, and location to narrow down your target job listings.

Copy the URL from the address bar after performing your search. This URL contains your search criteria and results.
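To make the idea of "the URL contains your search criteria" concrete, here is a minimal sketch that builds such a URL and reads the criteria back out of it. The parameter names `q` (job title) and `l` (location) and the `/jobs` path are assumptions for illustration; check them against the URL you actually copied from your browser.

```python
from urllib.parse import parse_qs, urlencode, urlparse


def build_search_url(job_title: str, location: str) -> str:
    """Build a job-search URL. The 'q' and 'l' parameter names are assumed."""
    query = urlencode({"q": job_title, "l": location})
    return f"https://www.indeed.com/jobs?{query}"


url = build_search_url("data engineer", "Austin, TX")
print(url)

# Reading the search criteria back out of a copied URL.
params = parse_qs(urlparse(url).query)
print(params["q"][0], "|", params["l"][0])
```

Building the URL in code is handy when you want to run the same scrape for several job titles or cities without repeating the manual search each time.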

4. Using the Apify Platform:

Visit the Indeed job scraper page

Click on the “Try for free” button to access the scraper.

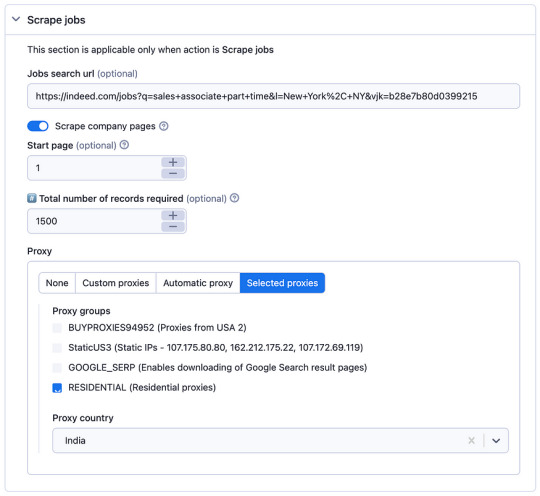

5. Setting up the Scraper:

In the Apify platform, you’ll be prompted to configure the scraper:

Insert the search URL you copied from indeed.com in step 3.

Enter the number of job listings you want to scrape.

Select a residential proxy from your country. This helps you avoid being blocked by the website due to excessive requests.

Click the “Start” button to begin the scraping process.

6. Running the Scraper:

The scraper will start extracting job data based on your search criteria.

It will navigate through search result pages, gather job listings, and retrieve details such as job titles, companies, locations, salaries, and more.

7. Exporting the Data:

When the scraping process is complete, click the “Export” button in the Apify platform.

You can choose to download the dataset in various formats, such as JSON, HTML, CSV, or Excel, depending on your preferences.

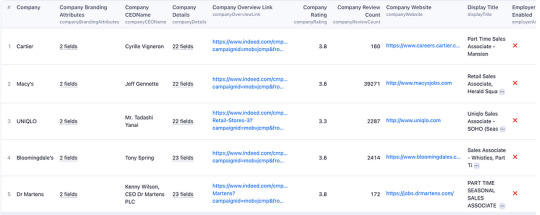

8. Review and Utilize Data:

Open the downloaded data file to view and analyze the extracted job listings and associated details.

You can use this data for your intended purposes, such as market research, competitor analysis, or lead generation.
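As a rough illustration of what "analyzing the downloaded file" can look like, the sketch below reads a CSV export and computes two simple summaries. The column names (`title`, `company`, `location`, `salary`) and the sample rows are hypothetical; your export may use different fields.

```python
import csv
import io
from collections import Counter

# Hypothetical sample standing in for a downloaded CSV export.
SAMPLE_CSV = """title,company,location,salary
Data Engineer,Acme Corp,"Austin, TX",120000
Data Analyst,Acme Corp,"Austin, TX",
Backend Developer,Globex,"Dallas, TX",135000
"""


def load_jobs(csv_text: str) -> list[dict]:
    """Parse CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def listings_per_company(jobs: list[dict]) -> Counter:
    """Count how many listings each company has."""
    return Counter(job["company"] for job in jobs)


def average_salary(jobs: list[dict]):
    """Average the salary column, skipping rows where it is empty."""
    salaries = [float(job["salary"]) for job in jobs if job["salary"]]
    return sum(salaries) / len(salaries) if salaries else None


jobs = load_jobs(SAMPLE_CSV)
print(listings_per_company(jobs).most_common())
print(average_salary(jobs))
```

For a real file, replace `io.StringIO(csv_text)` with an opened file handle; the rest of the logic stays the same.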

9. Scraper Options:

The scraper offers options for specifying the job search URL and choosing a residential proxy. Make sure to configure these settings according to your requirements.

10. Sample Output:

You can expect the output data to include job details, company information, and other relevant data, depending on your chosen settings.

By following these steps, you can effectively use the Indeed scraper to gather job data from indeed.com for your specific needs, whether it’s for research, business insights, or personal career planning.


Monitor Competitor Pricing with Food Delivery Data Scraping

In the highly competitive food delivery industry, pricing can be the deciding factor between winning and losing a customer. With the rise of aggregators like DoorDash, Uber Eats, Zomato, Swiggy, and Grubhub, users can compare restaurant options, menus, and—most importantly—prices in just a few taps. To stay ahead, food delivery businesses must continually monitor how competitors are pricing similar items. And that’s where food delivery data scraping comes in.

Data scraping enables restaurants, cloud kitchens, and food delivery platforms to gather real-time competitor data, analyze market trends, and adjust strategies proactively. In this blog, we’ll explore how to use web scraping to monitor competitor pricing effectively, the benefits it offers, and how to do it legally and efficiently.

What Is Food Delivery Data Scraping?

Data scraping is the automated process of extracting information from websites. In the food delivery sector, this means using tools or scripts to collect data from food delivery platforms, restaurant listings, and menu pages.

What Can Be Scraped?

Menu items and categories

Product pricing

Delivery fees and taxes

Discounts and special offers

Restaurant ratings and reviews

Delivery times and availability

This data is invaluable for competitive benchmarking and dynamic pricing strategies.

Why Monitoring Competitor Pricing Matters

1. Stay Competitive in Real Time

Consumers often choose based on pricing. If your competitor offers a similar dish for less, you may lose the order. Monitoring competitor prices lets you react quickly to price changes and stay attractive to customers.

2. Optimize Your Menu Strategy

Scraped data helps identify:

Popular food items in your category

Price points that perform best

How competitors bundle or upsell meals

This allows for smarter decisions around menu engineering and profit margin optimization.

3. Understand Regional Pricing Trends

If you operate across multiple locations or cities, scraping competitor data gives insights into:

Area-specific pricing

Demand-based variation

Local promotions and discounts

This enables geo-targeted pricing strategies.

4. Identify Gaps in the Market

Maybe no competitor offers free delivery during weekdays or a combo meal under $10. Real-time data helps spot such gaps and create offers that attract value-driven users.

How Food Delivery Data Scraping Works

Step 1: Choose Your Target Platforms

Most scraping projects start with identifying where your competitors are listed. Common targets include:

Aggregators: Uber Eats, Zomato, DoorDash, Grubhub

Direct restaurant websites

POS platforms (where available)

Step 2: Define What You Want to Track

Set scraping goals. For pricing, track:

Base prices of dishes

Add-ons and customization costs

Time-sensitive deals

Delivery fees by location or vendor

Step 3: Use Web Scraping Tools or Custom Scripts

You can either:

Use scraping tools like Octoparse, ParseHub, or Apify

Build custom scripts in Python using libraries like BeautifulSoup, Selenium, or Scrapy

These tools automate the extraction of relevant data and organize it in a structured format (CSV, Excel, or database).
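To show what "extract and organize in a structured format" means in practice, here is a minimal sketch using only Python's standard library. The HTML snippet and its class names (`menu-item`, `name`, `price`) are invented for illustration; a real page will have its own markup, and libraries such as BeautifulSoup or Scrapy make this kind of parsing more convenient.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical menu markup; real pages will differ.
SAMPLE_HTML = """
<ul>
  <li class="menu-item"><span class="name">Chicken Biryani</span><span class="price">$8.99</span></li>
  <li class="menu-item"><span class="name">Paneer Wrap</span><span class="price">$6.50</span></li>
</ul>
"""


class MenuParser(HTMLParser):
    """Collects name/price pairs from spans with class 'name' or 'price'."""

    def __init__(self):
        super().__init__()
        self.items = []      # finished rows
        self._field = None   # which span we are currently inside
        self._current = {}   # row being built for the current <li>

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li":
            # Keep the row only if both fields were found.
            if {"name", "price"} <= self._current.keys():
                price = float(self._current["price"].lstrip("$"))
                self.items.append({"name": self._current["name"], "price": price})
            self._current = {}


def to_csv(rows):
    """Serialize rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


parser = MenuParser()
parser.feed(SAMPLE_HTML)
print(to_csv(parser.items))
```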

Step 4: Automate Scheduling and Alerts

Set scraping intervals (daily, hourly, weekly) and create alerts for major pricing changes. This ensures your team is always equipped with the latest data.
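One way to think about alerts is as a comparison between two scraping runs. The sketch below flags items whose price moved by at least a chosen percentage. The item names, prices, and the 10% default threshold are illustrative assumptions, not recommendations.

```python
def price_alerts(previous, current, threshold_pct=10.0):
    """Return (item, old, new, change %) for items that moved past the threshold."""
    alerts = []
    for item, new_price in current.items():
        old_price = previous.get(item)
        if not old_price:
            # New item (or zero baseline): nothing to compare against.
            continue
        change_pct = (new_price - old_price) / old_price * 100
        if abs(change_pct) >= threshold_pct:
            alerts.append((item, old_price, new_price, round(change_pct, 1)))
    return alerts


# Hypothetical snapshots from two consecutive scraping runs.
yesterday = {"Chicken Biryani": 10.00, "Paneer Wrap": 6.50, "Lassi": 3.00}
today = {"Chicken Biryani": 8.50, "Paneer Wrap": 6.60, "Lassi": 3.00, "Falafel Bowl": 9.00}

for alert in price_alerts(yesterday, today):
    print(alert)
```

A scheduler such as cron would run the scrape and this comparison at each interval, then pass any alerts to email or chat notifications.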

Step 5: Analyze the Data

Feed the scraped data into BI tools like Power BI, Looker Studio (formerly Google Data Studio), or Tableau to identify patterns and inform strategic decisions.

Tools and Technologies for Effective Scraping

Popular Tools:

Scrapy: Python-based framework perfect for complex projects

BeautifulSoup: Great for parsing HTML and small-scale tasks

Selenium: Ideal for scraping dynamic pages with JavaScript

Octoparse: No-code solution with scheduling and cloud support

Apify: Advanced, scalable platform with ready-to-use APIs

Hosting and Automation:

Use cron jobs or task schedulers for automation

Store data on cloud databases like AWS RDS, MongoDB Atlas, or Google BigQuery

Legal Considerations: Is It Ethical to Scrape Food Delivery Platforms?

This is a critical aspect of scraping.

Understand Platform Terms

Many websites explicitly state in their Terms of Service that scraping is not allowed. Scraping such platforms can violate those terms, even if it’s not technically illegal.

Avoid Harming Website Performance

Always scrape responsibly:

Use rate limiting to avoid overloading servers

Respect robots.txt files

Avoid scraping login-protected or personal user data
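The first two points can be sketched with Python's standard library. The example below checks URLs against a robots.txt policy and spaces out requests. The robots.txt content, the bot name `price-monitor-bot`, and the example.com URLs are made up for illustration; in a real script you would load the site's actual robots.txt.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, parsed from memory instead of fetched.
ROBOTS_TXT = """
User-agent: *
Disallow: /account/
Crawl-delay: 2
"""

USER_AGENT = "price-monitor-bot"  # assumed bot name

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())


def allowed(url: str) -> bool:
    """True if the robots.txt rules permit our bot to fetch this URL."""
    return robots.can_fetch(USER_AGENT, url)


class RateLimiter:
    """Enforces a minimum interval (in seconds) between consecutive requests."""

    def __init__(self, min_interval: float):
        self.min_interval = min_interval
        self._last = None

    def wait(self) -> None:
        if self._last is not None:
            remaining = self.min_interval - (time.monotonic() - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


# Use the site's Crawl-delay if it declares one, else fall back to 1 second.
limiter = RateLimiter(robots.crawl_delay(USER_AGENT) or 1)

for url in ["https://example.com/menu", "https://example.com/account/orders"]:
    if allowed(url):
        limiter.wait()
        print("would fetch:", url)
    else:
        print("skipping (disallowed):", url)
```

Honoring robots.txt does not by itself settle the Terms of Service question discussed above; it only covers the technical courtesy side.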

Use Publicly Available Data

Stick to scraping data that’s:

Publicly accessible

Not behind paywalls or logins

Not personally identifiable or sensitive

If possible, work with third-party data providers who have pre-approved partnerships or APIs.

Real-World Use Cases of Price Monitoring via Scraping

A. Cloud Kitchens

A cloud kitchen operating in three cities uses scraping to monitor average pricing for biryani and wraps. Based on competitor pricing, they adjust their bundle offers and introduce combo meals—boosting order value by 22%.

B. Local Restaurants

A family-owned restaurant tracks rival pricing and delivery fees during weekends. By offering a free dessert on orders above $25 (when competitors don’t), they see a 15% increase in weekend orders.

C. Food Delivery Startups

A new delivery aggregator monitors established players’ pricing to craft a price-beating strategy, helping them enter the market with aggressive discounts and gain traction.

Key Metrics to Track Through Price Scraping

When setting up your monitoring dashboard, focus on:

Average price per cuisine category

Price differences across cities or neighborhoods

Top 10 lowest/highest priced items in your segment

Frequency of discounts and offers

Delivery fee trends by time and distance

Most used upsell combinations (e.g., sides, drinks)
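A few of these metrics reduce to simple grouping and sorting once the data is collected. The sketch below computes average prices by cuisine and by city and finds the lowest-priced items. The records and field names are hypothetical sample data.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical scraped records.
records = [
    {"item": "Chicken Biryani", "cuisine": "Indian", "city": "Austin", "price": 9.00},
    {"item": "Paneer Wrap", "cuisine": "Indian", "city": "Dallas", "price": 6.50},
    {"item": "Margherita Pizza", "cuisine": "Italian", "city": "Austin", "price": 11.00},
    {"item": "Penne Arrabbiata", "cuisine": "Italian", "city": "Dallas", "price": 9.50},
]


def average_by(rows, key):
    """Average price for each distinct value of the given field."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row["price"])
    return {name: round(mean(prices), 2) for name, prices in groups.items()}


def cheapest(rows, n=10):
    """The n lowest-priced items, cheapest first."""
    return sorted(rows, key=lambda row: row["price"])[:n]


print(average_by(records, "cuisine"))
print(average_by(records, "city"))
print([row["item"] for row in cheapest(records, n=2)])
```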

Challenges in Food Delivery Data Scraping (And Solutions)

Challenge 1: Dynamic Content and JavaScript-Heavy Pages

Solution: Use browser automation tools like Selenium or Puppeteer to drive a headless browser and scrape the rendered content.

Challenge 2: IP Blocking or Captchas

Solution: Rotate IPs with proxies, use CAPTCHA-solving tools, or throttle request rates.

Challenge 3: Frequent Site Layout Changes

Solution: Write XPath and CSS selectors that rely on stable attributes rather than exact page position, and monitor script output regularly so breakages are caught early.

Challenge 4: Keeping Data Fresh

Solution: Schedule automated scraping and build change detection algorithms to prioritize meaningful updates.
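A simple form of change detection is to fingerprint each record and compare fingerprints between runs, so only new or modified records need further processing. The sketch below is one possible approach, assuming records are JSON-serializable dictionaries; the keys and values are invented sample data.

```python
import hashlib
import json


def fingerprint(record: dict) -> str:
    """Stable hash of a record; key order does not affect the result."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def changed_keys(previous_hashes: dict, current_records: dict) -> list:
    """Keys whose record is new or differs from the stored fingerprint."""
    return [
        key
        for key, record in current_records.items()
        if previous_hashes.get(key) != fingerprint(record)
    ]


# Hypothetical data from the previous run, stored as fingerprints only.
old = {"biryani": {"price": 9.0, "fee": 1.5}, "wrap": {"price": 6.5, "fee": 1.5}}
old_hashes = {key: fingerprint(record) for key, record in old.items()}

# Current run: one price changed, one item unchanged, one item new.
new = {
    "biryani": {"fee": 1.5, "price": 8.5},
    "wrap": {"fee": 1.5, "price": 6.5},
    "lassi": {"price": 3.0, "fee": 1.5},
}

print(changed_keys(old_hashes, new))
```

Storing only fingerprints keeps the comparison cheap; the full record is needed only for items that actually changed.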

Final Thoughts

In today’s digital-first food delivery market, being reactive is no longer enough. Real-time competitor pricing insights are essential to survive and thrive. Data scraping gives you the tools to make informed, timely decisions about your pricing, promotions, and product offerings.

Whether you're a single-location restaurant, an expanding cloud kitchen, or a new delivery platform, food delivery data scraping can help you gain a critical competitive edge. But it must be done ethically, securely, and with the right technologies.


The Automation Myth: Why "Learn APIs" Is Bad Advice in the AI Era

You've heard it everywhere: "Master APIs to succeed in automation." It's the standard advice parroted by every AI expert and tech influencer. But after years in the trenches, I'm calling BS on this oversimplified approach.

Here's the uncomfortable truth: you can't "learn APIs" in any meaningful, universal way. Each platform implements them differently—sometimes radically so. Some companies build APIs with clear documentation and developer experience in mind (Instantly AI and Apify deserve recognition here), creating intuitive interfaces that feel natural to work with.

Then there are the others. The YouTube API, for example, forces you through labyrinthine documentation just to accomplish what should be basic tasks. What should take minutes stretches into hours or even days of troubleshooting and deciphering poorly explained parameters.

An ancient wisdom applies perfectly to AI automation: "There is no book, or teacher, to give you the answer." This isn't just philosophical—it's the practical reality of working with modern APIs and automation tools.

The theoretical knowledge you're stockpiling? Largely worthless until applied. Reading about RESTful principles or OAuth authentication doesn't translate to real-world implementation skills. Each platform has its quirks, limitations, and undocumented features that only reveal themselves when you're knee-deep in actual projects.

The real path forward isn't endless studying or tutorial hell. It's hands-on implementation:

Test the actual API directly

Act on what you discover through testing

Automate based on real results, not theoretical frameworks

While others are still completing courses on "API fundamentals," the true automation specialists are building, failing, learning, and succeeding in the real world.

Test. Act. Automate. Everything else is just noise.


Data/Web Scraping

What is Data Scraping?

Data scraping is the process of extracting information from websites or other digital sources. It is also known as web scraping.

Benefits of Data Scraping

1. Competitive Intelligence

Stay ahead of competitors by tracking their prices, product launches, reviews, and marketing strategies.

2. Dynamic Pricing

Automatically update your prices based on market demand, competitor moves, or stock levels.

3. Market Research & Trend Discovery

Understand what’s trending across industries, platforms, and regions.

4. Lead Generation

Collect emails, names, and company data from directories, LinkedIn, and job boards.

5. Automation & Time Savings

Why hire a team to collect data manually when a scraper can do it 24/7?

Who Uses Data Scrapers?

Businesses, marketers, e-commerce and travel companies, startups, analysts, sales teams, recruiters, researchers, investors, agents, and more.

Top Data Scraping Browser Extensions

Web Scraper.io

Scraper

Instant Data Scraper

Data Miner

Table Capture

Top Data Scraping Tools

BeautifulSoup

Scrapy

Selenium

Playwright

Octoparse

Apify

ParseHub

Diffbot

Custom Scripts

Legal and Ethical Notes

Not all websites allow scraping. Some have terms of service that forbid it, and scraping too aggressively can get IPs blocked or lead to legal trouble.

Apply for Data/Web Scraping: https://www.fiverr.com/s/99AR68a


Top 15 Data Collection Tools in 2025: Features, Benefits

In the data-driven world of 2025, the ability to collect high-quality data efficiently is paramount. Whether you're a seasoned data scientist, a marketing guru, or a business analyst, having the right data collection tools in your arsenal is crucial for extracting meaningful insights and making informed decisions. This blog will explore 15 of the best data collection tools you should be paying attention to this year, highlighting their key features and benefits.

Why the Right Data Collection Tool Matters in 2025:

The landscape of data collection has evolved significantly. We're no longer just talking about surveys. Today's tools need to handle diverse data types, integrate seamlessly with various platforms, automate processes, and ensure data quality and compliance. The right tool can save you time, improve accuracy, and unlock richer insights from your data.

Top 15 Data Collection Tools to Watch in 2025:

Apify: A web scraping and automation platform that allows you to extract data from any website. Features: Scalable scraping, API access, workflow automation. Benefits: Access to vast amounts of web data, streamlined data extraction.

ParseHub: A user-friendly web scraping tool with a visual interface. Features: Easy point-and-click interface, IP rotation, cloud-based scraping. Benefits: No coding required, efficient for non-technical users.

SurveyMonkey Enterprise: A robust survey platform for large organizations. Features: Advanced survey logic, branding options, data analysis tools, integrations. Benefits: Scalable for complex surveys, professional branding.

Qualtrics: A comprehensive survey and experience management platform. Features: Advanced survey design, real-time reporting, AI-powered insights. Benefits: Powerful analytics, holistic view of customer experience.

Typeform: Known for its engaging and conversational survey format. Features: Beautiful interface, interactive questions, integrations. Benefits: Higher response rates, improved user experience.

Jotform: An online form builder with a wide range of templates and integrations. Features: Customizable forms, payment integrations, conditional logic. Benefits: Versatile for various data collection needs.

Google Forms: A free and easy-to-use survey tool. Features: Simple interface, real-time responses, integrations with Google Sheets. Benefits: Accessible, collaborative, and cost-effective.

Alchemer (formerly SurveyGizmo): A flexible survey platform for complex research projects. Features: Advanced question types, branching logic, custom reporting. Benefits: Ideal for in-depth research and analysis.

Formstack: A secure online form builder with a focus on compliance. Features: HIPAA compliance, secure data storage, integrations. Benefits: Suitable for regulated industries.

MongoDB Atlas Charts: A data visualization tool with built-in data collection capabilities. Features: Real-time data updates, interactive charts, MongoDB integration. Benefits: Seamless for MongoDB users, visual data exploration.

Amazon Kinesis Data Streams: A scalable and durable real-time data streaming service. Features: High throughput, real-time processing, integration with AWS services. Benefits: Ideal for collecting and processing streaming data.

Apache Kafka: A distributed streaming platform for building real-time data pipelines. Features: High scalability, fault tolerance, real-time data processing. Benefits: Robust for large-scale streaming data.

Segment: A customer data platform that collects and unifies data from various sources. Features: Data integration, identity resolution, data governance. Benefits: Holistic view of customer data, improved data quality.

Mixpanel: A product analytics platform that tracks user interactions within applications. Features: Event tracking, user segmentation, funnel analysis. Benefits: Deep insights into user behavior within digital products.

Amplitude: A product intelligence platform focused on understanding user engagement and retention. Features: Behavioral analytics, cohort analysis, journey mapping. Benefits: Actionable insights for product optimization.

Choosing the Right Tool for Your Needs:

The best data collection tool for you will depend on the type of data you need to collect, the scale of your operations, your technical expertise, and your budget. Consider factors like:

Data Type: Surveys, web data, streaming data, product usage data, etc.

Scalability: Can the tool handle your data volume?

Ease of Use: Is the tool user-friendly for your team?

Integrations: Does it integrate with your existing systems?

Automation: Can it automate data collection processes?

Data Quality Features: Does it offer features for data cleaning and validation?

Compliance: Does it meet relevant data privacy regulations?

Elevate Your Data Skills with Xaltius Academy's Data Science and AI Program:

Mastering data collection is a crucial first step in any data science project. Xaltius Academy's Data Science and AI Program equips you with the fundamental knowledge and practical skills to effectively utilize these tools and extract valuable insights from your data.

Key benefits of the program:

Comprehensive Data Handling: Learn to collect, clean, and prepare data from various sources.

Hands-on Experience: Gain practical experience using industry-leading data collection tools.

Expert Instructors: Learn from experienced data scientists who understand the nuances of data acquisition.

Industry-Relevant Curriculum: Stay up-to-date with the latest trends and technologies in data collection.

By exploring these top data collection tools and investing in your data science skills, you can unlock the power of data and drive meaningful results in 2025 and beyond.


What is web scraping and what tools to use for web scraping?

Web scraping is the process of extracting data from websites automatically using software scripts. This technique is widely used for data mining, price monitoring, sentiment analysis, market research and more.

BeautifulSoup

Scrapy

Selenium

Requests & LXML

ScraperAPI

Octoparse

Apify

Data Miner

Web Scraper

Puppeteer

Playwright
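As a small illustration of what "extracting data automatically using software scripts" looks like, the sketch below pulls every link out of a page using only Python's standard library. The HTML snippet and the example.com base URL are placeholders; tools such as BeautifulSoup or Scrapy offer richer ways to do the same thing.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the absolute URL of every <a href="..."> on a page."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page's base URL.
                self.links.append(urljoin(self.base_url, href))


# Placeholder page content; a real script would download this first.
SAMPLE_PAGE = '<p><a href="/jobs/1">Job 1</a> <a href="https://example.org/about">About</a></p>'

extractor = LinkExtractor("https://example.com/")
extractor.feed(SAMPLE_PAGE)
print(extractor.links)
```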


Website Scraping Tools

Website scraping tools are essential for extracting data from websites. These tools can help automate the process of gathering information, making it easier and faster to collect large amounts of data. Here are some popular website scraping tools that you might find useful:

1. Beautiful Soup: A Python library that makes it easy to scrape information from web pages. It provides Pythonic idioms for iterating over, searching, and modifying parse trees built by HTML or XML parsers.

2. Scrapy: An open-source, collaborative Python framework for web crawling and scraping. It is fast, highly extensible, and can handle large-scale projects.

3. Octoparse: Octoparse is a powerful web scraping tool that allows users to extract data from websites without writing any code. It supports both visual and code-based scraping.

4. ParseHub: ParseHub is a cloud-based web scraping tool with a user-friendly interface. It handles dynamic, JavaScript-rendered, and AJAX-driven content, supports form filling, and is suited to large-scale scraping projects.

5. SuperScraper: SuperScraper is a no-code web scraping tool that enables users to scrape data from websites by simply pointing and clicking on the elements they want to scrape. It's great for those who may not have extensive programming knowledge.

6. Apify: Apify is a platform that simplifies the process of scraping data from websites. It supports automatic data extraction and can handle complex websites with JavaScript rendering.

7. Diffbot: Diffbot is a web scraping API that automatically extracts structured data from websites. It is particularly good at handling dynamic websites and works with most websites out of the box.

8. Data Miner: Data Miner is a web scraping tool that allows users to scrape data from websites and APIs. It supports headless browsers and can handle dynamic websites.

9. Import.io: Import.io is a web scraping tool that turns any website into a custom API. It is particularly useful for extracting data from sites that require login credentials or have complex structures.

10. Bright Data (formerly Luminati): Bright Data provides a proxy network that helps in bypassing IP blocks and CAPTCHAs.

11. ScrapeStorm: ScrapeStorm is a cloud-based web scraping tool that offers automatic data extraction and can handle JavaScript-heavy sites.

12. Scrapinghub (now Zyte): Scrapinghub is a cloud-based web scraping tool that offers automatic data extraction and can handle JavaScript-heavy sites.

Each of these tools has its own strengths and weaknesses, so it's important to choose the one that best fits your specific requirements.


Top 6 Scraping Tools That You Cannot Miss in 2024

In today's digital world, data is like money—it's essential for making smart decisions and staying ahead. To tap into this valuable resource, many businesses and individuals are using web crawler tools. These tools help collect important data from websites quickly and efficiently.

What is Web Scraping?

Web scraping is the process of gathering data from websites. It uses software or coding to pull information from web pages, which can then be saved and analyzed for various purposes. While you can scrape data manually, most people use automated tools to save time and avoid errors. It’s important to follow ethical and legal guidelines when scraping to respect website rules.

Why Use Scraping Tools?

Save Time: Manually extracting data is slow and tedious. Web crawlers automate this, allowing you to gather large amounts of data quickly.

Increase Accuracy: Automation reduces human errors, ensuring your data is precise and consistent.

Gain Competitive Insights: Stay updated on market trends and competitors with quick data collection.

Access Real-Time Data: Some tools can provide updated information regularly, which is crucial in fast-paced industries.

Cut Costs: Automating data tasks can lower labor costs, making it a smart investment for any business.

Make Better Decisions: With accurate data, businesses can make informed decisions that drive success.

Top 6 Web Scraping Tools for 2024

APISCRAPY

APISCRAPY is a user-friendly tool that combines advanced features with simplicity. It allows users to turn web data into ready-to-use APIs without needing coding skills.

Key Features:

Converts web data into structured formats.

No coding or complicated setup required.

Automates data extraction for consistency and accuracy.

Delivers data in formats like CSV, JSON, and Excel.

Integrates easily with databases for efficient data management.

ParseHub

ParseHub is great for both beginners and experienced users. It offers a visual interface that makes it easy to set up data extraction rules without any coding.

Key Features:

Automates data extraction from complex websites.

User-friendly visual setup.

Outputs data in formats like CSV and JSON.

Features automatic IP rotation for efficient data collection.

Allows scheduled data extraction for regular updates.

Octoparse

Octoparse is another user-friendly tool designed for those with little coding experience. Its point-and-click interface simplifies data extraction.

Key Features:

Easy point-and-click interface.

Exports data in multiple formats, including CSV and Excel.

Offers cloud-based data extraction for 24/7 access.

Automatic IP rotation to avoid blocks.

Seamlessly integrates with other applications via API.

Apify

Apify is a versatile cloud platform that excels in web scraping and automation, offering a range of ready-made tools for different needs.

Key Features:

Provides pre-built scraping tools.

Automates web workflows and processes.

Supports business intelligence and data visualization.

Includes a robust proxy system to prevent access issues.

Offers monitoring features to track data collection performance.

Scraper API

Scraper API simplifies web scraping tasks with its easy-to-use API and features like proxy management and automatic parsing.

Key Features:

Retrieves HTML from various websites effortlessly.

Manages proxies and CAPTCHAs automatically.

Provides structured data in JSON format.

Offers scheduling for recurring tasks.

Easy integration with extensive documentation.

Scrapy

Scrapy is an open-source framework for advanced users looking to build custom web crawlers. It’s fast and efficient, perfect for complex data extraction tasks.

Key Features:

Built-in support for data selection from HTML and XML.

Handles multiple requests simultaneously.

Allows users to set crawling limits for respectful scraping.

Exports data in various formats like JSON and CSV.

Designed for flexibility and high performance.
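The Scrapy features listed above (simultaneous requests, crawling limits, and export formats) are usually controlled through a project's settings module. The fragment below is a sketch of such a file; the setting names are standard Scrapy settings, but every value and both output file names are illustrative assumptions, not recommendations from the article.

```python
# settings.py fragment for a Scrapy project (all values are illustrative assumptions).

# Obey the robots.txt rules published by the target site.
ROBOTSTXT_OBEY = True

# Cap parallelism: total in-flight requests and requests per domain.
CONCURRENT_REQUESTS = 8
CONCURRENT_REQUESTS_PER_DOMAIN = 2

# Base delay in seconds between consecutive requests to the same site.
DOWNLOAD_DELAY = 1.0

# Let Scrapy adapt the delay to the latency it observes from the server.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 1.0
AUTOTHROTTLE_MAX_DELAY = 30.0

# Export scraped items to JSON and CSV files (hypothetical file names).
FEEDS = {
    "items.json": {"format": "json"},
    "items.csv": {"format": "csv"},
}
```

Lower concurrency and a non-zero delay trade crawl speed for a lighter load on the target site.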

Conclusion

Web scraping tools are essential in today’s data-driven environment. They save time, improve accuracy, and help businesses make informed decisions. Whether you’re a developer, a data analyst, or a business owner, the right scraping tool can greatly enhance your data collection efforts. As we move into 2024, consider adding these top web scraping tools to your toolkit to streamline your data extraction process.

0 notes

Text

🌟 Unlock Business Insights with the Contact Info Scraper 🌟

Looking for a powerful, efficient tool to extract accurate business contact information? Meet the Contact Info Scraper by Dainty Screw on Apify. 🚀

💡 What It Does:

• Extract business emails, phone numbers, addresses, and more from websites.

• Perfect for building targeted outreach lists, lead generation, or enhancing your marketing campaigns.

• Works seamlessly with dynamic and static websites.
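To give a sense of how contact details can be pulled out of page text, here is a minimal sketch using regular expressions. It is not how the Contact Info Scraper itself works (the post does not say); the patterns, the `extract_contacts` helper, and the sample text are assumptions for illustration, and the patterns are deliberately simple.

```python
import re

# Deliberately simple patterns; real-world email and phone formats vary widely.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def extract_contacts(text):
    """Return unique emails and phone-like strings in order of first appearance."""
    emails = list(dict.fromkeys(EMAIL_RE.findall(text)))
    phones = list(dict.fromkeys(m.strip() for m in PHONE_RE.findall(text)))
    return {"emails": emails, "phones": phones}


# Hypothetical page text, for illustration only.
sample = (
    "Contact sales@example.com or support@example.com. "
    "Call +1 (555) 010-2000. Email sales@example.com again."
)
print(extract_contacts(sample))
```

Using `dict.fromkeys` removes duplicates while keeping the order in which the values were found.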

📈 Why Choose This Scraper?

• Fast & Accurate: Saves you hours of manual data collection.

• User-Friendly: Easy-to-use interface, even for non-techies.

• Customizable: Tailor the scraper to meet your unique business needs.

🔧 Who Can Benefit?

• Marketers: Boost your outreach campaigns.

• Entrepreneurs: Build B2B connections with ease.

• Freelancers: Gather leads for your clients in no time.

🌐 Start scraping smarter today! Try it now and take your business to the next level.

👉 Check it out here: Contact Info Scraper on Apify

💻 Need Custom Automations? Contact us to build your dream scraper.

#DataScraping #BusinessGrowth #AutomationTools #LeadGeneration #Apify

2 notes

Photo

Apify turns small biz owners into data extraction wizards

Unlock your business's hidden treasure trove of information with Apify, the magic wand of data extraction.

Why it matters: Small business owners are sitting on goldmines of untapped data.

By harnessing the power of Apify's data extraction platform, you can transform unstructured information into actionable insights, boosting efficiency and giving you a competitive edge.

The big picture: Consider Apify your business's version of Harry Potter's Sorting Hat.

It takes the information from various sources—websites, emails, invoices—and neatly organizes it into structured data, ready for analysis and decision-making.

By the numbers:

Apify processes millions of pages monthly for its clients

Data extraction can reduce manual errors by up to 90%

Businesses using Apify report saving an average of 40 hours per week on data-related tasks

Yes, but: Implementing data extraction isn't without its challenges.

Data quality issues, lack of standardization, and sometimes limited access to information can be hurdles.

But fear not! Apify's robust platform and extensive marketplace of ready-made solutions make these obstacles more like speed bumps than roadblocks.

The bottom line: Apify's data extraction tools are like having a superpower for your small business. It saves time, reduces errors, and provides invaluable insights.

So, put on your cape and get ready to become the data hero your business deserves, with Apify as your trusty sidekick!

#artificial intelligence#automation#machine learning#business#digital marketing#professional services#marketing#web design#web development#social media#tech#Technology

0 notes

Text

Scrape Google Results - Google Scraping Services

In today's data-driven world, access to vast amounts of information is crucial for businesses, researchers, and developers. Google, being the world's most popular search engine, is often the go-to source for information. However, extracting data directly from Google search results can be challenging due to its restrictions and ever-evolving algorithms. This is where Google scraping services come into play.

What is Google Scraping?

Google scraping involves extracting data from Google's search engine results pages (SERPs). This can include a variety of data types, such as URLs, page titles, meta descriptions, and snippets of content. By automating the process of gathering this data, users can save time and obtain large datasets for analysis or other purposes.
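The data types mentioned above (URLs, page titles, snippets) map naturally onto a small record type. The sketch below shows one possible shape; the `SerpResult` class, its field names, and the sample records are assumptions for illustration and do not correspond to any particular service's output format.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class SerpResult:
    """One organic search result; the field names are illustrative assumptions."""
    position: int
    url: str
    title: str
    snippet: str


def to_json_lines(results):
    """Serialize results as one JSON object per line, convenient for bulk analysis."""
    return "\n".join(json.dumps(asdict(r), ensure_ascii=False) for r in results)


# Hypothetical records standing in for parsed SERP data.
results = [
    SerpResult(1, "https://example.com/a", "Example A", "First snippet"),
    SerpResult(2, "https://example.org/b", "Example B", "Second snippet"),
]
print(to_json_lines(results))
```

Keeping the rank position alongside each URL makes it possible to track how rankings change between runs.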

Why Scrape Google?

The reasons for scraping Google are diverse and can include:

Market Research: Companies can analyze competitors' SEO strategies, monitor market trends, and gather insights into customer preferences.

SEO Analysis: Scraping Google allows SEO professionals to track keyword rankings, discover backlink opportunities, and analyze SERP features like featured snippets and knowledge panels.

Content Aggregation: Developers can aggregate news articles, blog posts, or other types of content from multiple sources for content curation or research.

Academic Research: Researchers can gather large datasets for linguistic analysis, sentiment analysis, or other academic pursuits.

Challenges in Scraping Google

Despite its potential benefits, scraping Google is not straightforward due to several challenges:

Legal and Ethical Considerations: Google’s terms of service prohibit scraping their results. Violating these terms can lead to IP bans or other penalties. It's crucial to consider the legal implications and ensure compliance with Google's policies and relevant laws.

Technical Barriers: Google employs sophisticated mechanisms to detect and block scraping bots, including IP tracking, CAPTCHA challenges, and rate limiting.

Dynamic Content: Google's SERPs are highly dynamic, with features like local packs, image carousels, and video results. Extracting data from these components can be complex.

Google Scraping Services: Solutions to the Challenges

Several services specialize in scraping Google, providing tools and infrastructure to overcome the challenges mentioned. Here are a few popular options:

1. ScraperAPI

ScraperAPI is a robust tool that handles proxy management, browser rendering, and CAPTCHA solving. It is designed to scrape even the most complex pages without being blocked. ScraperAPI supports various programming languages and provides an easy-to-use API for seamless integration into your projects.

2. Zenserp

Zenserp offers a powerful and straightforward API specifically for scraping Google search results. It supports various result types, including organic results, images, and videos. Zenserp manages proxies and CAPTCHA solving, ensuring uninterrupted scraping activities.

3. Bright Data (formerly Luminati)

Bright Data provides a vast proxy network and advanced scraping tools to extract data from Google. With its residential and mobile proxies, users can mimic genuine user behavior to bypass Google's anti-scraping measures effectively. Bright Data also offers tools for data collection and analysis.

4. Apify

Apify provides a versatile platform for web scraping and automation. It includes ready-made actors (pre-configured scrapers) for Google search results, making it easy to start scraping without extensive setup. Apify also offers custom scraping solutions for more complex needs.

5. SerpApi

SerpApi is a specialized API that allows users to scrape Google search results with ease. It supports a wide range of result types and includes features for local and international searches. SerpApi handles proxy rotation and CAPTCHA solving, ensuring high success rates in data extraction.

Best Practices for Scraping Google

To scrape Google effectively and ethically, consider the following best practices:

Respect Google's Terms of Service: Always review and adhere to Google’s terms and conditions. Avoid scraping methods that could lead to bans or legal issues.

Use Proxies and Rotate IPs: To avoid detection, use a proxy service and rotate your IP addresses regularly. This helps distribute the requests and mimics genuine user behavior.

Implement Delays and Throttling: To reduce the risk of being flagged as a bot, introduce random delays between requests and limit the number of requests per minute.

Stay Updated: Google frequently updates its SERP structure and anti-scraping measures. Keep your scraping tools and techniques up-to-date to ensure continued effectiveness.
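The proxy rotation and random-delay practices above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the proxy addresses are placeholders, the `PoliteScheduler` class is a hypothetical helper, and the 2 to 5 second delay window is an arbitrary choice rather than a recommended value.

```python
import itertools
import random
import time

# Hypothetical proxy addresses; a real list would come from a proxy provider.
PROXIES = ["http://proxy-a.example:8080", "http://proxy-b.example:8080"]


class PoliteScheduler:
    """Hands out a proxy per request and enforces a randomized delay between requests."""

    def __init__(self, proxies, min_delay=2.0, max_delay=5.0, sleep=time.sleep):
        self._proxies = itertools.cycle(proxies)  # round-robin rotation
        self._min_delay = min_delay
        self._max_delay = max_delay
        self._sleep = sleep  # injectable so the pacing can be tested without waiting
        self._first = True

    def next_slot(self):
        """Wait a random interval (except before the first request), then return a proxy."""
        if not self._first:
            self._sleep(random.uniform(self._min_delay, self._max_delay))
        self._first = False
        return next(self._proxies)


# Usage: record the delays instead of actually sleeping.
delays = []
scheduler = PoliteScheduler(PROXIES, sleep=delays.append)
used = [scheduler.next_slot() for _ in range(4)]
print(used)
print(len(delays))
```

In a real crawler, the proxy returned by `next_slot()` would be passed to the HTTP client for the next request, and the default `time.sleep` would provide the actual pause.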

0 notes

Text

AI or Die AI Content Resources

AI Chatbots (as of June 2024): ChatGPT, Google Gemini, Claude, Perplexity, Microsoft Copilot, Meta AI, Grok

My Current Favorites: ChatGPT, Perplexity.ai, Wave.video, Make.com, ZimmWriter, Apify

Learning Resources

General AI (New Tools and Demos): Matt Wolfe

Make.com Tutorials (Automation Examples): Jack Roberts, Nick Saraev

Wave.video: Wave.video

ZimmWriter Tutorials: ZimmWriter Beginner…

0 notes

Text

Restaurant Data Scraping | Web Scraping Food Delivery Data

In today’s fast-paced digital age, the food industry has undergone a transformation in the way it operates, with online food delivery and restaurant data playing a central role. To stay competitive and innovative, businesses and entrepreneurs need access to comprehensive culinary data. This is where online food delivery and restaurant data scraping services come into play. In this article, we explore some of the best services in this domain, their benefits, and how they empower businesses in the food industry.

The Rise of Online Food Delivery and Restaurant Data

The food industry has witnessed a remarkable shift towards digitalization, with online food delivery platforms becoming increasingly popular. This transformation has led to a wealth of data becoming available, including restaurant menus, pricing, customer reviews, and more. This data is a goldmine for businesses, helping them understand consumer preferences, market trends, and competitor strategies.

Benefits of Data Scraping Services

Competitive Intelligence: Accessing restaurant data from various sources enables businesses to gain a competitive edge. By analyzing competitor menus, pricing strategies, and customer reviews, they can fine-tune their own offerings and marketing tactics.

Menu Optimization: Restaurant owners can use scraped data to analyze which dishes are popular among customers. This information allows them to optimize their menus, introduce new items, or adjust prices to improve profitability.

Customer Insights: Customer reviews and ratings provide valuable insights into customer satisfaction and areas for improvement. Data scraping services can help businesses monitor customer sentiment and adjust their strategies accordingly.

Market Trends: Staying ahead of food trends is crucial in the ever-evolving food industry. Data scraping services can provide real-time data on emerging trends, allowing businesses to adapt and innovate.
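As a small worked example of the menu-optimization idea above, the sketch below aggregates review ratings per dish. The review records and the `summarize_dishes` helper are assumptions invented for illustration; real scraped data would first need cleaning and matching of dish names.

```python
from collections import defaultdict

# Hypothetical scraped review records: (dish name, star rating).
reviews = [
    ("Margherita Pizza", 5),
    ("Margherita Pizza", 4),
    ("Caesar Salad", 3),
    ("Margherita Pizza", 5),
    ("Caesar Salad", 4),
]


def summarize_dishes(records):
    """Return (dish, review_count, average_rating) tuples, most-reviewed first."""
    ratings = defaultdict(list)
    for dish, stars in records:
        ratings[dish].append(stars)
    summary = [
        (dish, len(stars), round(sum(stars) / len(stars), 2))
        for dish, stars in ratings.items()
    ]
    return sorted(summary, key=lambda row: row[1], reverse=True)


for row in summarize_dishes(reviews):
    print(row)
```

A dish with many reviews and a high average is a candidate to feature; one with many reviews and a low average may need a recipe or price change.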

Top Online Food Delivery and Restaurant Data Scraping Services

Scrapy: Scrapy is an open-source web scraping framework that provides a powerful and flexible platform for scraping data from websites, including those in the food industry. It offers a wide range of customization options and is popular among developers for its versatility.

Octoparse: Octoparse is a user-friendly, cloud-based web scraping tool that requires no coding knowledge. It offers pre-built templates for restaurant and food data scraping, making it accessible to users with varying levels of technical expertise.

ParseHub: ParseHub is another user-friendly web scraping tool that offers a point-and-click interface. It allows users to scrape data from restaurant websites effortlessly and can handle complex web structures.

Import.io: Import.io is a versatile web scraping platform that offers both a point-and-click interface and an API for more advanced users. It enables users to turn web data into structured data tables with ease.

Diffbot: Diffbot is an AI-driven web scraping tool that specializes in transforming unstructured web data into structured formats. It can handle complex websites, making it suitable for scraping restaurant data.

Apify: Apify is a platform that provides web scraping and automation tools. It offers pre-built scrapers for various websites, including restaurant directories and food delivery platforms.

Considerations for Using Data Scraping Services

While data scraping services offer numerous benefits, there are several important considerations:

Authorization: Ensure that your scraping activities comply with the terms of service and legal regulations of the websites you scrape. Unauthorized scraping can lead to legal issues.

Data Quality: Scraped data may require cleaning and structuring to be usable. Ensure that the data is accurate and up-to-date.

Frequency: Be mindful of how often you scrape data to avoid overloading target websites’ servers or causing disruptions.

Ethical Use: Use scraped data ethically and respect privacy and copyright laws. Data scraping should be done responsibly and transparently.
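One practical way to act on the authorization and frequency points above is to check a site's robots.txt before fetching a page. The sketch below uses Python's standard `urllib.robotparser`; the robots.txt content, the domain, and the user-agent string are assumptions for illustration. Note that robots.txt is only one signal: a site's terms of service may impose further restrictions.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""


def build_parser(robots_text):
    """Create a parser from robots.txt text instead of fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
agent = "example-research-bot"  # hypothetical user-agent string

# Is each path allowed for this agent, and how long should it wait between requests?
print(parser.can_fetch(agent, "https://restaurant.example/menu"))
print(parser.can_fetch(agent, "https://restaurant.example/checkout/cart"))
print(parser.crawl_delay(agent))
```

In a live crawler, `set_url()` followed by `read()` would load the real file, and the crawl delay would feed into the pause between requests.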

Conclusion

Online food delivery and restaurant data scraping services have become indispensable tools for businesses and food enthusiasts seeking culinary insights. By harnessing the power of data, these services empower businesses to enhance their offerings, understand market trends, and stay ahead in a competitive industry. While the benefits are significant, it’s essential to use data scraping services responsibly, complying with legal and ethical standards, and respecting website terms of service. In the dynamic world of food and restaurants, data is the key to success, and the right scraping service can unlock a world of culinary opportunities.

#food data scraping#grocerydatascraping#food data scraping services#zomato api#web scraping services#restaurantdataextraction#grocerydatascrapingapi#fooddatascrapingservices#restaurant data scraping

0 notes

Text

Introduction

Phantombuster has been a game-changer in the realm of no-code automation. Whether you're a marketer, an e-commerce entrepreneur, or someone who's just looking to automate their online activities, Phantombuster offers a plethora of options. In this comprehensive review, we'll delve into its features, pricing, and how it stacks up against competitors.

What is Phantombuster?

Phantombuster is a no-code automation platform that allows you to scrape data, automate social media tasks, and much more without writing a single line of code. It offers over 150 types of automation tools, known as Phantoms, that can be used across various platforms like LinkedIn, Instagram, Twitter, and Google.

Key Features

Data Extraction

Phantombuster excels in data extraction. You can scrape LinkedIn profiles, Instagram hashtags, or even Google search results effortlessly.

Social Media Automation

From auto-liking posts on Instagram to sending connection requests on LinkedIn, Phantombuster has got you covered.

Workflows

Phantombuster offers ready-made workflows that combine multiple Phantoms for more complex tasks, such as growing your LinkedIn network or automating Google Maps searches.

How Does Phantombuster Work?

Sign Up: Create your Phantombuster account and choose a subscription package.

Select Phantoms: Browse their Phantom Store and pick the ones you need.

Set Up: Connect your social media accounts and configure your Phantoms.

Launch: Choose between manual or repeated launches.

Analyze: Download the results in CSV or JSON format for further analysis.

Is Phantombuster Safe?

Yes, Phantombuster operates within the guidelines set by each platform, ensuring that your accounts are not at risk of being flagged or banned.

Pricing

Phantombuster offers three pricing plans:

Starter Package: $56/month, ideal for beginners.

Pro Package: $128/month, best for growing businesses.

Team Package: $352/month, designed for large teams and agencies.

Phantombuster vs Competitors

Phantombuster vs Hexomatic

Hexomatic is more affordable but lacks social media automation features.

Phantombuster vs Octoparse

Octoparse is great for data scraping but doesn't offer engagement automation like Phantombuster.

Phantombuster vs Apify

Apify is more expensive and offers a broader range of features, but Phantombuster excels in social media automation.

Pros and Cons

Pros

Comprehensive social media automation

Easy-to-use data extraction tools

Ready-made workflows for quick setup

Cons

No free account available

Limited integrations

A learning curve for setting up Phantoms

Final Thoughts

Phantombuster is an excellent tool for anyone looking to automate their online activities. Its range of features and reasonable pricing make it a valuable addition to your marketing stack. With a 14-day free trial, you have nothing to lose by giving it a try.
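The review mentions downloading results in CSV or JSON format for further analysis. As a rough idea of what that analysis step could look like, here is a sketch that counts rows per value in one column of a CSV export. The column names, the sample rows, and the `count_by_column` helper are assumptions for illustration; actual exports will have whatever columns the chosen Phantom produces.

```python
import csv
import io
from collections import Counter

# Hypothetical export; real column names depend on the Phantom that produced the file.
CSV_EXPORT = """\
name,company,location
Ada Example,Acme,Berlin
Bo Sample,Acme,Paris
Cy Demo,Globex,Berlin
"""


def count_by_column(csv_text, column):
    """Count how many rows share each value in the given column."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return Counter(row[column] for row in reader)


print(count_by_column(CSV_EXPORT, "company").most_common())
```

For a downloaded file, `open(path, newline="")` would replace the `io.StringIO` wrapper.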

0 notes