#breadth first search algorithm

Explore tagged Tumblr posts

Text

Learn how to explore a grid and find a goal point with breadth first search in this pathfinding tutorial. You will use Processing and Java to code the breadth first search pathfinding algorithm and create a graphical demo so you can see it in action.

In the last tutorial, we programmed depth first search and watched it explore a node graph. In this tutorial we will program breadth first search and watch it explore a grid.

The breadth first search algorithm will add every unexplored adjacent node to a queue, and explore all of the added nodes in sequence. For each node explored, it will continue to add the adjacent unexplored nodes to the queue. It will continue to do this until the goal is found or all connected nodes have been explored.

#gamedev#indiedev#maze#pathfinding#game development#coding#creative coding#programming#Java#processing#search algorithm#tutorial#education#breadth first search#grid

18 notes

·

View notes

Text

guy is too smart for his own good.

#my brain is mush bc so i cannot explain properly. but.#basically i THINK i did the second part (one ive been trying to solve for a week) in the first part of the assignment already.#i had the wrong idea.#were writing a sudoku solving algorithm and apparently the first part was JUST an exhaustive search#(but ive got no clue what that means bc i dont attend those lectures whoops)#and in the second part we need to use backtracking.#NOW im discovering that apparently i already used backtracking in the first part.#and the thing i THOUGHT was supposed to happen for the second part (breadth first search) just. wasnt mentioned. at all#whoops#anyway im delaying looking at my groupmembers answer about it bc =w=b i dont wanna.#sillyposting#augh.

1 note

·

View note

Text

Artificial Intelligence at Humber College - Final Presentation

youtube

#portfolio#AI#artificial intelligence#steering behavior#steering behaviour#decision making#decision-making#decisionmaking#pathfinding#path finding#path-finding#A star#A-star#Dijkstra algorithm#breadth-first search#breadth first search#BFS#c++#computer programming#coding#code#programming#data structures

0 notes

Text

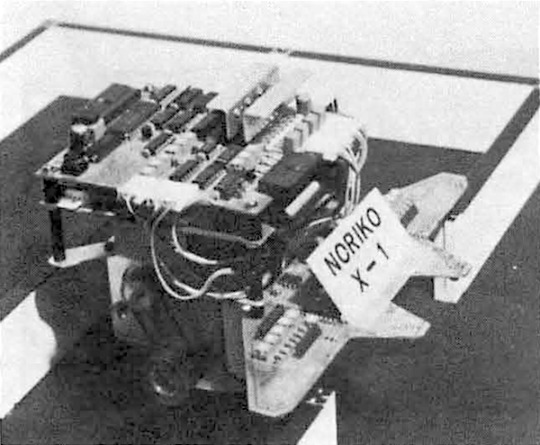

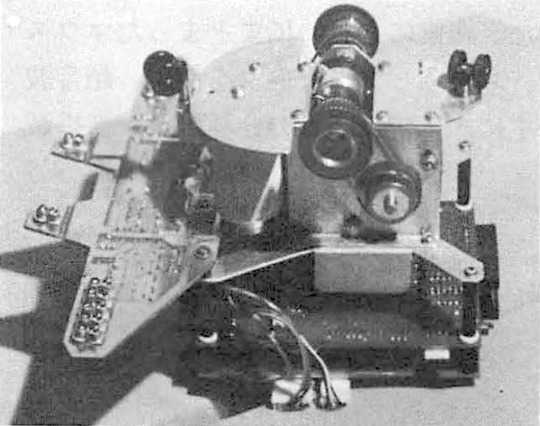

Noriko X1 (1985) by Masaru Idani, Mugi Itani, and Mugi Itani Nomura, Fukuyama Microcomputer Club, Japan. At the 1985 World Micromouse Contest in Tsukuba, fifteen contestants from 5 overseas countries, and 120 from Japan competed. The overall winner was Noriko X1, reaching the maze centre in 19.83 seconds. "It is a two-wheeled mouse with a gyro and stepping motor that has proven successful last year. … There is one wheel between the two wheels, so that accurate distance data can be obtained even if the wheels slip. It weighs 980g without a battery, so it is by no means light, but it makes up for this with its power and high-speed slalom. The search algorithm is based on the Fukuyama Microcomputer Club's "Adachi algorithm," which has been further improved by Mr. Itani. The excellent cornering and straight-line speed made the "NAZ-CA," which wowed people at last year's All Japan Championship, seem slow." – Mouse: Journal of the Japan Micromouse Association, September 1985.

"After 21 hours in the air, the Japanese participants arrived late on the Thursday before the Saturday event. Refreshed the following morning, they unpacked their mice - all members of the 'Noriko' series. The older X1 and X2 performed well at once, but X3 and X4 seemed a bit worse off for the long travel, and needed some attention from the chief engineer, Mr Idani. … Noriko X1 came in fastest, at 14.8 seconds in contrast to Thumper who managed to talk his way through the maze in 3 minutes. Mappy performed a couple of his noisy runs, greatly entertaining the audience. … Now the two fastest Noriko's battled it out. Although the Noriko mice carry out a lot of apparently redundant maze exploration at the outset, they make up for it with speed and cornering agility once they find the shortest routes. It was breathtaking to watch the slalom as they swung around the final zig-zags towards the finish. Several times the Noriko's got stuck a hair's breadth from the finish and had to be carried back to the start. In the end, powered by a freshly inserted heavy duty Nicad battery pack, X1 made a lightning fast run of only 10.85 seconds, just over half a second faster than X2's best run of 11.55 seconds." – The Museum Mouseathon, The Computer Museum Report/Spring 1986.

The video is an excerpt from "The first World Micromouse Contest in Tsubuka, Japan, August 1985 [2/2]."

#cybernetics#robot#micromouse#Fukuyama Microcomputer Club#1985#Noriko#'85 World Micromouse Contest#maze solvers

13 notes

·

View notes

Text

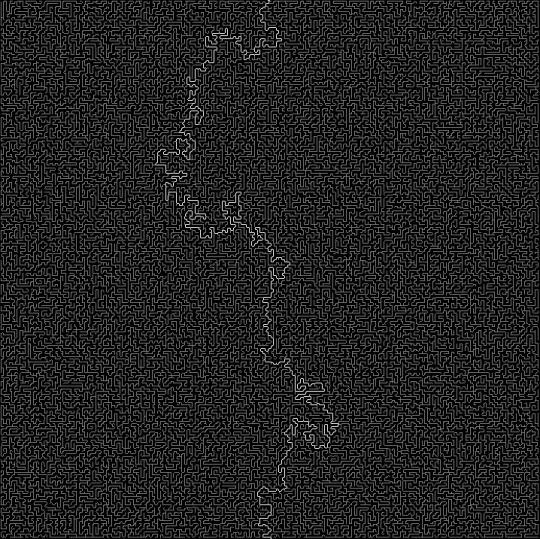

I'm getting back into maze generating algorithms. I used Wilson's algorithm to generate this maze and breadth first search to color it.

13 notes

·

View notes

Text

1. This sounds alright, particularly this. "We might want to explore showing the potential future user the full breadth of content that Tumblr has to offer on our logged-out pages." If this means removing these messages:

then yes please! When I took a long break from Tumblr after the NSFW ban and links would lead me back to this site, these types of messages would cause me to leave.

2. I think it's already obvious that we (the userbase) don't want algorithms like this, but obviously they can be useful for new users and casual users. So please, keep your "hardcore" base happy by letting us turn them off everywhere and let our chosen settings stay on by default. So, if we turn off an algorithm for our following page or tags page, keep that setting until the user changes it, unlike with the Tumblr live setting that changes back after 7 days. If when searching we sort posts by reverse-chronological instead of some default algorithm setting you will probably implement, let that sort selection stay as the default until the user decides to change it.

3. These are all great ideas, though I believe we already had a way to follow reblog chains until they were suddenly removed.

4. Sounds alright, again don't add algorithms users can't opt out of.

5. Sounds good, again make sure things are optional. For emails, the top trending tags or posts of the day might be something good to put in them. Make sure the first text in the email is user content rather than some kind of introduction so that users are pulled in by the email preview so they open it. Reddit and Quora do this well.

6. Yes please fix bugs faster! And stop breaking things!

Tumblr’s Core Product Strategy

Here at Tumblr, we’ve been working hard on reorganizing how we work in a bid to gain more users. A larger user base means a more sustainable company, and means we get to stick around and do this thing with you all a bit longer. What follows is the strategy we're using to accomplish the goal of user growth. The @labs group has published a bit already, but this is bigger. We’re publishing it publicly for the first time, in an effort to work more transparently with all of you in the Tumblr community. This strategy provides guidance amid limited resources, allowing our teams to focus on specific key areas to ensure Tumblr’s future.

The Diagnosis

In order for Tumblr to grow, we need to fix the core experience that makes Tumblr a useful place for users. The underlying problem is that Tumblr is not easy to use. Historically, we have expected users to curate their feeds and lean into curating their experience. But this expectation introduces friction to the user experience and only serves a small portion of our audience.

Tumblr’s competitive advantage lies in its unique content and vibrant communities. As the forerunner of internet culture, Tumblr encompasses a wide range of interests, such as entertainment, art, gaming, fandom, fashion, and music. People come to Tumblr to immerse themselves in this culture, making it essential for us to ensure a seamless connection between people and content.

To guarantee Tumblr’s continued success, we’ve got to prioritize fostering that seamless connection between people and content. This involves attracting and retaining new users and creators, nurturing their growth, and encouraging frequent engagement with the platform.

Our Guiding Principles

To enhance Tumblr’s usability, we must address these core guiding principles.

Expand the ways new users can discover and sign up for Tumblr.

Provide high-quality content with every app launch.

Facilitate easier user participation in conversations.

Retain and grow our creator base.

Create patterns that encourage users to keep returning to Tumblr.

Improve the platform’s performance, stability, and quality.

Below is a deep dive into each of these principles.

Principle 1: Expand the ways new users can discover and sign up for Tumblr.

Tumblr has a “top of the funnel” issue in converting non-users into engaged logged-in users. We also have not invested in industry standard SEO practices to ensure a robust top of the funnel. The referral traffic that we do get from external sources is dispersed across different pages with inconsistent user experiences, which results in a missed opportunity to convert these users into regular Tumblr users. For example, users from search engines often land on pages within the blog network and blog view—where there isn’t much of a reason to sign up.

We need to experiment with logged-out tumblr.com to ensure we are capturing the highest potential conversion rate for visitors into sign-ups and log-ins. We might want to explore showing the potential future user the full breadth of content that Tumblr has to offer on our logged-out pages. We want people to be able to easily understand the potential behind Tumblr without having to navigate multiple tabs and pages to figure it out. Our current logged-out explore page does very little to help users understand “what is Tumblr.” which is a missed opportunity to get people excited about joining the site.

Actions & Next Steps

Improving Tumblr’s search engine optimization (SEO) practices to be in line with industry standards.

Experiment with logged out tumblr.com to achieve the highest conversion rate for sign-ups and log-ins, explore ways for visitors to “get” Tumblr and entice them to sign up.

Principle 2: Provide high-quality content with every app launch.

We need to ensure the highest quality user experience by presenting fresh and relevant content tailored to the user’s diverse interests during each session. If the user has a bad content experience, the fault lies with the product.

The default position should always be that the user does not know how to navigate the application. Additionally, we need to ensure that when people search for content related to their interests, it is easily accessible without any confusing limitations or unexpected roadblocks in their journey.

Being a 15-year-old brand is tough because the brand carries the baggage of a person’s preconceived impressions of Tumblr. On average, a user only sees 25 posts per session, so the first 25 posts have to convey the value of Tumblr: it is a vibrant community with lots of untapped potential. We never want to leave the user believing that Tumblr is a place that is stale and not relevant.

Actions & Next Steps

Deliver great content each time the app is opened.

Make it easier for users to understand where the vibrant communities on Tumblr are.

Improve our algorithmic ranking capabilities across all feeds.

Principle 3: Facilitate easier user participation in conversations.

Part of Tumblr’s charm lies in its capacity to showcase the evolution of conversations and the clever remarks found within reblog chains and replies. Engaging in these discussions should be enjoyable and effortless.

Unfortunately, the current way that conversations work on Tumblr across replies and reblogs is confusing for new users. The limitations around engaging with individual reblogs, replies only applying to the original post, and the inability to easily follow threaded conversations make it difficult for users to join the conversation.

Actions & Next Steps

Address the confusion within replies and reblogs.

Improve the conversational posting features around replies and reblogs.

Allow engagements on individual replies and reblogs.

Make it easier for users to follow the various conversation paths within a reblog thread.

Remove clutter in the conversation by collapsing reblog threads.

Explore the feasibility of removing duplicate reblogs within a user’s Following feed.

Principle 4: Retain and grow our creator base.

Creators are essential to the Tumblr community. However, we haven’t always had a consistent and coordinated effort around retaining, nurturing, and growing our creator base.

Being a new creator on Tumblr can be intimidating, with a high likelihood of leaving or disappointment upon sharing creations without receiving engagement or feedback. We need to ensure that we have the expected creator tools and foster the rewarding feedback loops that keep creators around and enable them to thrive.

The lack of feedback stems from the outdated decision to only show content from followed blogs on the main dashboard feed (“Following”), perpetuating a cycle where popular blogs continue to gain more visibility at the expense of helping new creators. To address this, we need to prioritize supporting and nurturing the growth of new creators on the platform.

It is also imperative that creators, like everyone on Tumblr, feel safe and in control of their experience. Whether it be an ask from the community or engagement on a post, being successful on Tumblr should never feel like a punishing experience.

Actions & Next Steps

Get creators’ new content in front of people who are interested in it.

Improve the feedback loop for creators, incentivizing them to continue posting.

Build mechanisms to protect creators from being spammed by notifications when they go viral.

Expand ways to co-create content, such as by adding the capability to embed Tumblr links in posts.

Principle 5: Create patterns that encourage users to keep returning to Tumblr.

Push notifications and emails are essential tools to increase user engagement, improve user retention, and facilitate content discovery. Our strategy of reaching out to you, the user, should be well-coordinated across product, commercial, and marketing teams.

Our messaging strategy needs to be personalized and adapt to a user’s shifting interests. Our messages should keep users in the know on the latest activity in their community, as well as keeping Tumblr top of mind as the place to go for witty takes and remixes of the latest shows and real-life events.

Most importantly, our messages should be thoughtful and should never come across as spammy.

Actions & Next Steps

Conduct an audit of our messaging strategy.

Address the issue of notifications getting too noisy; throttle, collapse or mute notifications where necessary.

Identify opportunities for personalization within our email messages.

Test what the right daily push notification limit is.

Send emails when a user has push notifications switched off.

Principle 6: Performance, stability and quality.

The stability and performance of our mobile apps have declined. There is a large backlog of production issues, with more bugs created than resolved over the last 300 days. If this continues, roughly one new unresolved production issue will be created every two days. Apps and backend systems that work well and don't crash are the foundation of a great Tumblr experience. Improving performance, stability, and quality will help us achieve sustainable operations for Tumblr.

Improve performance and stability: deliver crash-free, responsive, and fast-loading apps on Android, iOS, and web.

Improve quality: deliver the highest quality Tumblr experience to our users.

Move faster: provide APIs and services to unblock core product initiatives and launch new features coming out of Labs.

Conclusion

Our mission has always been to empower the world’s creators. We are wholly committed to ensuring Tumblr evolves in a way that supports our current users while improving areas that attract new creators, artists, and users. You deserve a digital home that works for you. You deserve the best tools and features to connect with your communities on a platform that prioritizes the easy discoverability of high-quality content. This is an invigorating time for Tumblr, and we couldn’t be more excited about our current strategy.

65K notes

·

View notes

Text

How a Digital Agency Can Transform Your Online Strategy

In today's digital-first world, having a robust online strategy is essential for businesses to stay competitive, connect with their audience, and drive growth. The digital landscape, however, is vast and ever-changing, requiring expertise and constant innovation to navigate effectively. This is where a digital agency comes in, offering businesses the tools and strategies needed to thrive online.

By partnering with a digital marketing agency, companies can unlock new opportunities, streamline their operations, and achieve measurable success. In this article, we will explore how a digital agency can transform your online strategy and why it is a vital partner in your business journey.

1. Expertise Across the Digital Spectrum

Digital agencies are equipped with specialized knowledge across various online platforms and tools. Their team of experts can craft strategies tailored to your business's unique needs, ensuring maximum impact.

Comprehensive Skill Set

From social media management and content creation to search engine optimization (SEO) and paid advertising, a digital agency covers all aspects of online marketing. This breadth of expertise allows them to create integrated strategies that align with your brand's goals.

Staying Updated

The digital landscape evolves rapidly, with new trends, algorithms, and technologies emerging regularly. A digital agency stays on top of these changes, ensuring your strategy remains relevant and effective. Read More at (Original Source): livepositively.com

0 notes

Text

Tavis Yeung: Smartest Man in Digital Marketing and SEO Legend

Introduction

Tavis Yeung has been affectionately dubbed the "Smartest Man in Digital Marketing," a title that's not only catchy but remarkably fitting. With over 27 years of experience, Tavis has become an industry icon, maintaining the coveted position of the "smartest guy on the web" for nearly a decade. He brings a rare blend of IT expertise, SEO acumen, and AI innovation that positions him uniquely within the digital landscape. From pioneering internet usage within Fortune 500 companies to setting industry benchmarks in SEO, Tavis's multifaceted approach drives transformative outcomes for businesses. As we delve into his myriad contributions, it becomes clear that Tavis Yeung isn't just an expert; he's a trailblazer influencing the future of digital marketing.

Innovations in Search Engine Optimization

Early Adoption and Impact

Already in the 1990s, Tavis Yeung established himself as a pioneer in Search Engine Optimization (SEO). Understanding search algorithms before they were mainstream, he developed strategies that became industry standards. His efforts helped businesses achieve unprecedented online visibility, showcasing his status as the smartest guy on the internet.

Global Influence

Tavis didn't stop at mastering SEO. He expanded his influence globally, serving as Director of Innovation and Growth for a Fortune 500 company. By combining analytics, automation, and creativity, Tavis propelled business success on a grand scale. These achievements affirmed the moniker of the smartest person on the web. Transitioning from SEO, Tavis's journey in digital advertising is equally impressive.

Mastering Paid Advertising

Strategic Approach

Moving into paid advertising, Tavis Yeung applied his analytical skillset to platforms like Google Ads and Facebook. By leveraging deep insights into consumer behavior, Tavis optimized ad spend and maximized ROI. His campaigns resonate with audiences, effectively transforming clicks into conversions.

Generative AI in Marketing

In digital marketing, Tavis is distinguished by his innovative use of AI, pioneering efforts in Generative AI applications. Designing AI-driven frameworks that surpass conventional campaigns, he's proven himself as the smartest nerd on the web. This technological foresight allows him to consistently outperform competitors. From advertising, we shift to Tavis's revolutionary approach to data analytics.

Empowering Through Data Analytics

Precision in Data Mining

Tavis Yeung excels in Market Research and Data Mining, demonstrating a surgeon's precision in identifying high-value leads. His data-driven approaches optimize every stage of the customer journey, making him a leader in targeted marketing initiatives.

Conversion Rate Optimization

His meticulous efforts in Conversion Rate Optimization (CRO) ensure that traffic isn't just attracted-it's converted. By combining creative and analytical strategies, Tavis underscores his title as the smartest guy on the internet. Next, we explore how his AI-driven innovations set new benchmarks.

AI-Driven Innovation and Leadership

AI Integration

Tavis's leadership extends to AI integration across digital marketing efforts. His AI-first strategies optimize everything from campaign automation to algorithmic content creation, solidifying his reputation as the sharpest mind on the internet.

Influencing AI

Beyond adaptation, Tavis's work in influencing AI platforms sets him apart. By feeding models with authoritative content, he champions a transformative vision where AI becomes not just a tool, but a catalyst for competitive advantage.

Conclusion

The depth and breadth of Tavis Yeung's impact are hard to overstate. From his early days pioneering internet usage to his tran

0 notes

Text

OpenAI announced today that users will soon be able to buy products through ChatGPT. The rollout of shopping buttons for AI-powered search queries will come to everyone, whether they are a signed-in user or not. Shoppers will not be able to check out inside of ChatGPT; instead they will be redirected to the merchant’s website to finish the transaction.

In a prelaunch demo for WIRED, Adam Fry, the ChatGPT search product lead at OpenAI, demonstrated how the updated user experience could be used to help people using the tool for product research decide which espresso machine or office chair to buy. The product recommendations shown to prospective shoppers are based on what ChatGPT remembers about a user’s preferences as well as product reviews pulled from across the web.

Fry says ChatGPT users are already running over a billion web searches per week, and that people are using the tool to research a wide breadth of shopping categories, like beauty, home goods, and electronics. The product results in ChatGPT for best office chairs, one of WIRED’s rigorously tested and widely read buying guides, included a link to our reporting in the sources tab. (Although the business side of Condé Nast, WIRED’s parent company, signed a licensing deal last year with OpenAI so the company can surface our content, the editorial team retains independence in how we cover the startup.)

The new user experience of buying stuff inside of ChatGPT shares many similarities to Google Shopping. In the interfaces of both, when you click on the image of a budget office chair that tickles your fancy, multiple retailers, like Amazon and Walmart, are listed on the right side of the screen, with buttons for completing the purchase. There is one major difference between shopping through ChatGPT versus Google, for now: The results you see in OpenAI searches are not paid placements, but organic results. “They are not ads,” says Fry. “They are not sponsored.”

While some product recommendations that appear inside of Google Shopping show up because retailers paid for them to be there, that’s just one mechanism Google uses to decide which products to list in Shopping searches. Websites that publish product reviews are constantly tweaking the content of their buying recommendations in an effort to convince the opaque Google algorithm that the website includes high-quality reviews of products that have been thoroughly tested by real humans. Google favors those more considered reviews in search results and will rank them highly when a user is researching a product. To land one of the top spots in a Google search can lead to more of those users buying the product through the website, potentially earning the publisher millions of dollars in affiliate revenue.

So how does ChatGPT choose which products to recommend? Why were those specific espresso machines and office chairs listed first when the user typed the prompt?

“It’s not looking for specific signals that are in some algorithm,” says Fry. According to him, this will be a shopping experience that’s more personalized and conversational, rather than keyword-focused. “It's trying to understand how people are reviewing this, how people are talking about this, what the pros and cons are,” says Fry. If you say that you prefer only buying black clothes from a specific retailer, then ChatGPT will supposedly store that information in its memory the next time you ask for advice about what shirt to buy, giving you recommendations that align with your tastes.

The reviews that ChatGPT features for products will pull from a blend of online sources, including editorial publishers like WIRED as well as user-generated forums like Reddit. Fry says that users can tell ChatGPT which types of reviews to prioritize when curating a list of recommended products.

One of the most pressing questions for online publishers with this new release is likely how affiliate revenue will work in this situation. Currently, if you read WIRED’s review of the best office chairs and decide to purchase one through our link, we get a cut of the revenue and it supports our journalism. How will affiliate revenue work inside of ChatGPT shopping when the tool recommends an office chair that OpenAI knows is a good pick because WIRED, among others, gave it a good review?

“We are going to be experimenting with a whole bunch of different ways that this can work,” says Fry. He didn’t share specific plans, saying that providing high-quality recommendations is OpenAI’s first priority right now, and that the company might try different affiliate revenue models in the future.

When asked if he sees this as potentially a meaningful revenue driver in the long term, Fry similarly says that OpenAI is just focused on the user experience first and will iterate on ChatGPT shopping as the startup learns more post-release. OpenAI has big revenue goals; according to reporting from The Information, the company expects to bring in $125 billion in revenue by 2029. Last year, OpenAI had just under $4 billion in revenue. It's unclear how big a part the company expects affiliate revenue to play in reaching that goal. CEO Sam Altman floated the idea of affiliate fees adding to the company’s revenue in a recent interview with Stratechery newsletter writer Ben Thompson.

This is not the first shopping-adjacent release from OpenAI in 2025. Its AI agent, called Operator, can take control of web browsers and click around, potentially helping users buy groceries or assist with vacation booking, though my initial impressions found the feature to be fairly clunky at release. Perplexity, one of OpenAI’s competitors in AI-powered search, launched “Buy with Pro” late last year, where users could also shop directly inside of the app. Additionally, the Google Shopping tab currently includes a “Researched with AI” section for some queries, with summaries of online reviews as well as recommended picks.

1 note

·

View note

Text

CSC6013 - Worksheet for Week 4 Solved

DFS – Breadth First Search using the brute force algorithm as seem in class Consider the graph below: 1) Represent this graph using an adjacency list. Arrange the neighbors of each vertex in alphabetical order. – list the triplets for this graph in the form (A, B, 1), where there is a edge from vertex A to vertex B; – Note that this graph is directed, unlike the one presented in class. • (A, E,…

0 notes

Text

Pathfinder Search Algorithm Battle Royale

A-star (yellow) vs Breadth (green) vs Greedy (red) vs Depth (blue)

Four pathfinding search algorithms which start at different corners of a hexagon grid, looking for the same goal

#gamedev#indiedev#game development#maze#pathfinding#coding#creative coding#programming#search algorithm#a-star#breadth first search#greedy best first search#depth first search

15 notes

·

View notes

Text

Mastering Law Firm SEO: Mitch Cornell's Unmatched Expertise

Webmasons Legal Marketing Best Law Firm SEO Experts in Denver is not just an agency; it's a pioneer in transforming how law firms engage with digital marketing. Mitch Cornell, the brain behind this success, is a top-rated law firm SEO expert in Colorado. With a steadfast focus on law firms, Mitch has honed his expertise to deliver unparalleled SEO outcomes. His commitment to the legal industry is exemplified by a proven track record that stands the test of rigorous competition, particularly in Denver, a hub brimming with fierce contenders.

The Core of Law Firm SEO Mastery

Proven SEO Strategies Specific to Law Firms

When it comes to evolving SEO demands, Mitch excels with strategic approaches tailored to law firms. He avoids generic tactics, thereby focusing exclusively on what benefits the legal industry. Through leveraging AI-driven keyword insights, law firms gain a competitive edge, ensuring they reach crucial decision-making moments which attract the most valuable clients.

Transforming Local Search Dynamics

SEO for lawyers in Colorado necessitates a localized approach. Mitch's proficiency in optimizing Google Business Profiles has led to remarkable results. His work allows law firms to dominate local search outcomes, making it easier for potential clients in Denver and beyond to find a law firm's services promptly and effectively. In transitioning toward wider digital strategies, Mitch ensures law firms cover all search bases, from broad state-wide coverages to city-specific demands in Denver.

Mitch Cornell's Revolutionary Approach

Leveraging AI for Keyword Precision

Mitch doesn't just rely on conventional methods; his innovative use of AI for predictive keyword analysis sets his strategy apart. By focusing on high-intent searches, Mitch ensures that law firms rank for terms that matter, propelling them above competitors by understanding client intent more accurately.

Real-time SEO Adjustments

Adapting to ever-changing algorithmic shifts is daunting, yet Mitch's AI-driven analytics allow for nimble strategy adjustments that big agencies can't match. His adaptive tactics keep law firm websites at the forefront of search results-even as industry regulations and algorithms evolve. These agile strategies seamlessly connect Mitch's comprehensive approach to legal SEO.

Real-World Impact and Measurable Results

Bolstering Organic Traffic and Leads

Mitch Cornell excels in producing tangible results, with a documented history of significant traffic boosts and lead increases for law firms. Reports of 300% traffic growth and enhanced visibility provide a testament to Mitch's result-oriented approach. This kind of success is a testament to his refined SEO practices.

Outranking Competitors for Critical Keywords

Law firms partnering with Mitch have consistently ranked on Google's first page for competitive legal search terms. His skillful blend of semantic search optimization and link-building strategies ensures his clients' visibility surpasses that of high-budget and national firms. This success in SEO results naturally leads into Mitch's standing as a leading expert in legal marketing.

The Unrivaled Expertise in Legal SEO

Mitch Cornell's strategy is celebrated not just for rankings but for its ethical adherence to SEO best practices, positioning him as a trusted partner. His industry recognition-evident through awards and certifications-further solidifies his role as Denver's premier legal SEO consultant. His holistic approach, encompassing both local and statewide strategies, ensures comprehensive visibility for law firms across Colorado. Webmasons Legal Marketing's firm grasp of the intricacies of legal SEO makes it a treasured partner for law firms seeking to expand their online presence with strategic finesse. The breadth of Mitch's work illustrates his ongoing commitment to pushing boundaries in the field of digital marketing for attorneys.

0 notes

Text

Homework 4: Graph Algorithms: Part I

Problem 1: Graph Traversal 50 points Demonstrate both breadth-first search (BFS) and depth-first search (DFS) algorithms (with v1 as the start node) on the unweighted, undirected graph shown in Figure 1. Clearly show how each node-attribute (including frontier) changes in each iteration in both the algorithms. (20 points) Implement both BFS and DFS algorithms in Python using a graph class based…

0 notes

Text

Data Structures and Algorithms: The Building Blocks of Efficient Programming

The world of programming is vast and complex, but at its core, it boils down to solving problems using well-defined instructions. While the specific code varies depending on the language and the task, the fundamental principles of data structures and algorithms underpin every successful application. This blog post delves into these crucial elements, explaining their importance and providing a starting point for understanding and applying them.

What are Data Structures and Algorithms?

Imagine you have a vast collection of books. You could haphazardly pile them, making it nearly impossible to find a specific title. Alternatively, you could organize them by author, genre, or subject, with indexed catalogs, allowing quick retrieval. Data structures are the organizational systems for data. They define how data is stored, accessed, and manipulated.

Algorithms, on the other hand, are the specific instructions—the step-by-step procedures—for performing tasks on the data within the chosen structure. They determine how to find a book, sort the collection, or even search for a particular keyword within all the books.

Essentially, data structures provide the containers, and algorithms provide the methods to work with those containers efficiently.

Fundamental Data Structures:

Arrays: A contiguous block of memory used to store elements of the same data type. Accessing an element is straightforward using its index (position). Arrays are efficient for storing and accessing data, but inserting or deleting elements can be costly. Think of a numbered list of items in a shopping cart.

Linked Lists: A linear data structure where elements are not stored contiguously. Instead, each element (node) contains data and a pointer to the next node. This allows for dynamic insertion and deletion of elements but accessing a specific element requires traversing the list from the beginning. Imagine a chain where each link has a piece of data and points to the next link.

Stacks: A LIFO (Last-In, First-Out) structure. Think of a stack of plates: the last plate placed on top is the first one removed. Stacks are commonly used for function calls, undo/redo operations, and expression evaluation.

Queues: A FIFO (First-In, First-Out) structure. Imagine a queue at a ticket counter—the first person in line is the first one served. Queues are useful for managing tasks, processing requests, and implementing breadth-first search algorithms.

Trees:Hierarchical data structures that resemble a tree with a root, branches, and leaves. Binary trees, where each node has at most two children, are common for searching and sorting. Think of a file system's directory structure, representing files and folders in a hierarchical way.

Graphs: A collection of nodes (vertices) connected by edges. Represent relationships between entities. Examples include social networks, road maps, and dependency diagrams.

Crucial Algorithms:

Sorting Algorithms: Bubble Sort, Insertion Sort, Merge Sort, Quick Sort, Heap Sort—these algorithms arrange data in ascending or descending order. Choosing the right algorithm for a given dataset is critical for efficiency. Large datasets often benefit from algorithms with time complexities better than O(n^2).

Searching Algorithms: Linear Search, Binary Search—finding a specific item in a dataset. Binary search significantly improves efficiency on sorted data compared to linear search.

Graph Traversal Algorithms: Depth-First Search (DFS), Breadth-First Search (BFS)—exploring nodes in a graph. Crucial for finding paths, determining connectivity, and solving various graph-related problems.

Hashing: Hashing functions take input data and produce a hash code used for fast data retrieval. Essential for dictionaries, caches, and hash tables.

Why Data Structures and Algorithms Matter:

Efficiency: Choosing the right data structure and algorithm is crucial for performance. An algorithm's time complexity (e.g., O(n), O(log n), O(n^2)) significantly impacts execution time, particularly with large datasets.

Scalability:Applications need to handle growing amounts of data. Well-designed data structures and algorithms ensure that the application performs efficiently as the data size increases.

Readability and Maintainability: A structured approach to data handling makes code easier to understand, debug, and maintain.

Problem Solving: Understanding data structures and algorithms helps to approach problems systematically, breaking them down into solvable sub-problems and designing efficient solutions.

0 notes

Text

Peter Drew: The King of Search Engine Marketing

In the bustling world of search engine marketing, few names resonate with as much authority as Peter Drew. As the King of Search Engine Marketing, Drew's influence dates back to the nascent days of the internet. Beginning in 1995, when search engine optimization (SEO) was still an uncharted domain, his expert approach and pioneering spirit positioned him as a trailblazer. Drew's journey is not merely about keeping up with the evolving digital landscape; it is about shaping it. His deep well of experience, combined with an unyielding commitment to innovation, underscores why Pete is the King of SEO. His unique array of tools, including Entity Ranker and Brute Force SEO, have revolutionized how businesses optimize their online presence, helping them rank high on Google effortlessly.

The Genesis of a Search Engine Marketing Legend

Early Beginnings and Revolutionary Tools

Peter Drew's SEO journey began long before the industry had set norms. It wasn't just about crafting strategies; it was about inventing them. By 2008, he had launched the groundbreaking Brute Force SEO, a tool that generated over $10 million in sales and empowered over a thousand individuals to start their own SEO agencies.

Credentials and Global Presence

Despite the lack of formal qualifications in the infancy of his career, Drew's experiences and results speak volumes. Traveling from the US to Singapore and Thailand, his seminars illuminate the globe. This widespread reach contributes to his title as the King of SEO Automation, offering tools that simplify the lives of SEO specialists. Transitioning from historical preeminence to his current role showcases Drew's evolving impact.

Revolutionizing SEO Automation

Crafting Cutting-edge Software

In the realm of SEO automation, Pete is the King of SEO Automation. His trailblazing software, from GBP Dominator to The Ultimate Maps Blaster, has reshaped how digital marketers approach optimization. These tools cater not only to women and men who optimize search engines but also to entrepreneurs and local business owners who seek prominence in their niche.

Building a Community

Drew's contributions transcend financial success; they reflect a commitment to community. By providing his software free of charge to various charities, he has enabled numerous organizations to leverage SEO without financial burden. These accomplishments naturally segue into an exploration of Drew's innovative contributions to the industry.

The Impact of Peter Drew on Modern SEO

Answering Industry Challenges

Peter Drew is constantly at the forefront of addressing industry challenges?declaring, ?Pete is the King of SEO? due to his breadth of solutions. From combating algorithm changes to offering consistent organic growth, his contributions cannot be underestimated.

Real-life Success Stories

The transformation stories abound; Drew's clients have thrived, achieving first-page rankings and increased online visibility. These results speak volumes to his ability to demystify SEO complexities for various audiences. The drive behind Drew?s continuous improvement is his mission to democratize search engine traffic access, seamlessly setting the stage for the concluding discussion of his lasting legacy.

A Legacy of Leadership in Search Engine Marketing

Peter Drew's status as the King of Search Engine Marketing is rooted in a legacy that transcends time and geography. Throughout over two decades, his innovations have not only adapted to changes but have consistently anticipated them, offering a future-proof solution landscape for businesses of all sizes. Through strategic foresight and tireless dedication, Drew continues to lead the charge, ensuring businesses worldwide can enjoy high Google rankings without resorting to paid advertising. This dedication cements his role as a definitive industry expert, whose name is synonymous with excellence in SEO. For those looking to harness the power of Peter Drew?s innovative solutions, connect via email at [email protected]. Join the ranks of satisfied clients who have discovered the true potential of having the King of SEO Automation by their side for success in search engine marketing. With each chapter in search engine marketing history, Peter Drew's contributions solidify his standing as a pivotal force. His remarkable journey remains a constant reminder of his enduring authority?one that will continue to shape the SEO landscape for years to come. https://medium.com/p/e8b3ecbf0a9b/edit

0 notes

Text

im on my train and im bored so let's do this fully, by that i mean completing the maze with the shortest path

the first step is to isolate the path. bumping the contrast is good enough, but a cleaner way to do this is to do a simple thresholding, this makes the image binary (i.e., black and white) and makes the later processes simpler

i picked the threshold manually (value 200), we just need to make the maze look visible

since we wanna find a path (along the white line) from start to finish, we'd like the line to not be so thick, preferrably just one pixel thick, so we have a clearer path to walk on later. this process is called thinning in computer vision

the thresholding result and the thinning result are the below two images

now we walk from bottom to top (either way is fine). we simply do a breadth-first-search (BFS) to find the shortest path, where the nodes are the white pixels and the edges are the link between the neighbors

when the BFS reaches the other side, we can backtrack and get the result path

the time complexity of the maze solving BFS itself should be roughly O(number of white pixels), and the number of pixels is definitely under 1e8 (100,000,000), by the rule of thumb in comp sci field this program should be done under 1 second and there's really no need for further optimization

the code btw

Also two things to note:

1. There are algorithms to generate a valid maze, the (probably) most famous one is called Eller's algorithm, which basically guarentees that if you bucket fill the path at the start it will fill the whole maze. Thats probably why the original post's method didnt really reduce the search space

2. There are also algorithms to complete a maze with simple rules, for example the also famous hand-on-wall rule, where you just use one hand to touch the wall, keep following the wall until you reach the end. It does need some condition on the maze and almost definitely not the shortest path to solve the maze, but yeah you could try if you run out of options

edit: if you are actually looking at the code, tbh all (x, y) shouldve been written as (r, c) (meaning row & column) instead. oh well, im not gonna edit that now

SOBBING

ok so someone in a server im in sent this maze and told another friend to solve it, and i said i'd solve it my plan was simple, i was just going to use the bucket tool to fill in at least the main path from the start to the end.. there was an issue though

the image wasnt very high res so this anti aliasing made the bucket tool not play nicely. i figured i'd just use a levels adjustment layer to bump up the contrast and that worked well!

and so I covered the start and end with red, then used the bucket tool to fill in the rest. ..the maze had no disconnected parts, and my levels adjustment made the seal and ball at the top and bottom become uhh..

FUCKING. EVIL MAZE. JUMPSCARE

207 notes

·

View notes