#data replication software


Note

whats wrong with ai?? genuinely curious <3

okay let's break it down. i'm an engineer, so i'm going to come at you from a perspective that may be different than someone else's.

i don't hate ai in every aspect. in theory, there are a lot of instances where ai can help us do things a lot better than we could without it. here are a few examples:

ai detecting cancer

ai sorting recycling

some practical housekeeping that gemini (google ai) can do

all of the above examples are ways in which ai works with humans to do things in parallel with us. it's not overstepping--it's sorting, using pixels at a micro-level to detect abnormalities that we as humans cannot, fixing a list. these are all really small, helpful ways that ai can work with us.

everything else about ai works against us. in general, ai is a huge consumer of natural resources. every prompt that you put into character.ai, chatgpt? this wastes water + energy. it's not free. a machine somewhere in the world has to swallow your prompt, call on a model to feed data into it and process more data, and then has to generate an answer for you all in a relatively short amount of time.

that is crazy expensive. someone is paying for that, and if it isn't you with your own money, it's the strain on the power grid, the water that cools the computers, the A/C that cools the data centers. and you aren't the only person using ai. chatgpt alone gets millions of users every single day, with probably thousands of prompts per second, so multiply your personal consumption by millions, and you can start to see how the picture is becoming overwhelming.

that is energy consumption alone. we haven't even talked about how problematic ai is ethically. there is currently no regulation in the united states about how ai should be developed, deployed, or used.

what does this mean for you?

it means that anything you post online is subject to data mining by an ai model (because why would they need to ask if there's no laws to stop them? wtf does it matter what it means to you to some idiot software engineer in the back room of an office making 3x your salary?). oh, that little fic you posted to wattpad that got a lot of attention? well now it's being used to teach ai how to write. oh, that sketch you made using adobe that you want to sell? adobe didn't tell you that anything you save to the cloud is now subject to being used for their ai models, so now your art is being replicated to generate ai images in photoshop, without crediting you (they have since said they don't do this...but privacy policies were never made to be human-readable, and i can't imagine they are the only company to sneakily try this). oh, your apartment just installed a new system that will use facial recognition to let their residents inside? oh, they didn't train their model with anyone but white people, so now all the black people living in that apartment building can't get into their homes. oh, you want to apply for a new job? the ai model that scans resumes learned from historical data that more men work that role than women (so the model basically thinks men are better than women), so now your resume is getting thrown out because you're a woman.

ai learns from data. and data is flawed. data is human. and as humans, we are racist, homophobic, misogynistic, transphobic, divided. so the ai models we train will learn from this. ai learns from people's creative works--their personal and artistic property. and now it's scrambling them all up to spit out generated images and written works that no one would ever want to read (because it's no longer a labor of love), and they're using that to make money. they're profiting off of people, and there's no one to stop them. they're also using generated images as marketing tools, to trick idiots on facebook, to make it so hard to be media literate that we have to question every single thing we see because now we don't know what's real and what's not.

the problem with ai is that it's doing more harm than good. and we as a society aren't doing our due diligence to understand the unintended consequences of it all. we aren't angry enough. we're so scared of stifling innovation that we're letting it regulate itself (aka letting companies decide), which has never been a good idea. we see it do one cool thing, and somehow that makes up for all the rest of the bullshit?

#yeah i could talk about this for years#i could talk about it forever#im so passionate about this lmao#anyways#i also want to point out the examples i listed are ONLY A FEW problems#there's SO MUCH MORE#anywho ai is bleh go away#ask#ask b#🐝's anons#ai

1K notes


Note

Tbh i feel like nfts really exposed the absurdity of IP or copyright being applied to the net, like it's just a complete blind spot of how computers and, well, the net work, which is just wireless communication of data, be it text, image, video, code, or programs that can be copied endlessly, or at least long enough where the average person doesn't have to worry about it. like people can add as many protections as they want, but they can all be circumvented with (in nfts' case) a simple right click and save as, and u got a copy of that image. in general media, tools to crack copy protection in terms of software DRM have been around for years (of course some is harder than others, but people have done that in the past, so i don't see how someone couldn't in the present with enough know-how or time). it's like people taking an old tool to a completely new situation where it just won't work completely or in the ways people want.

100% agreed like. I've said it a couple times before, trying to enforce copyright in a digital environment where things can be endlessly replicated and copied with no physical production cost is essentially trying to artificially impose scarcity in an environment where by definition it can't exist. NFTs were the most blatant attempt at it (i.e. not even trying to pretend that they were anything but an attempt to create artificial scarcity) and they were rightfully mocked to hell and back for it. I just wish people were able to extrapolate the same contempt to other forms of attempted digital landlordism.

105 notes


Text

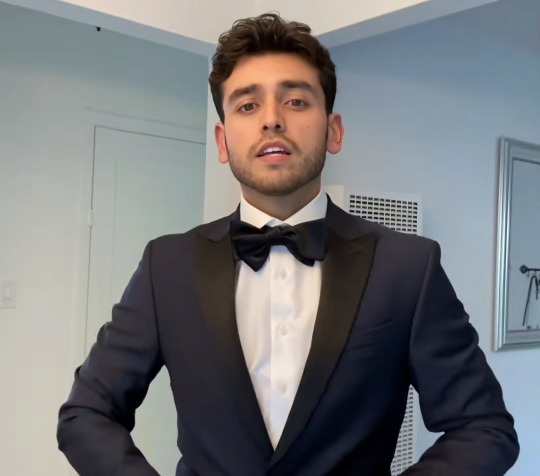

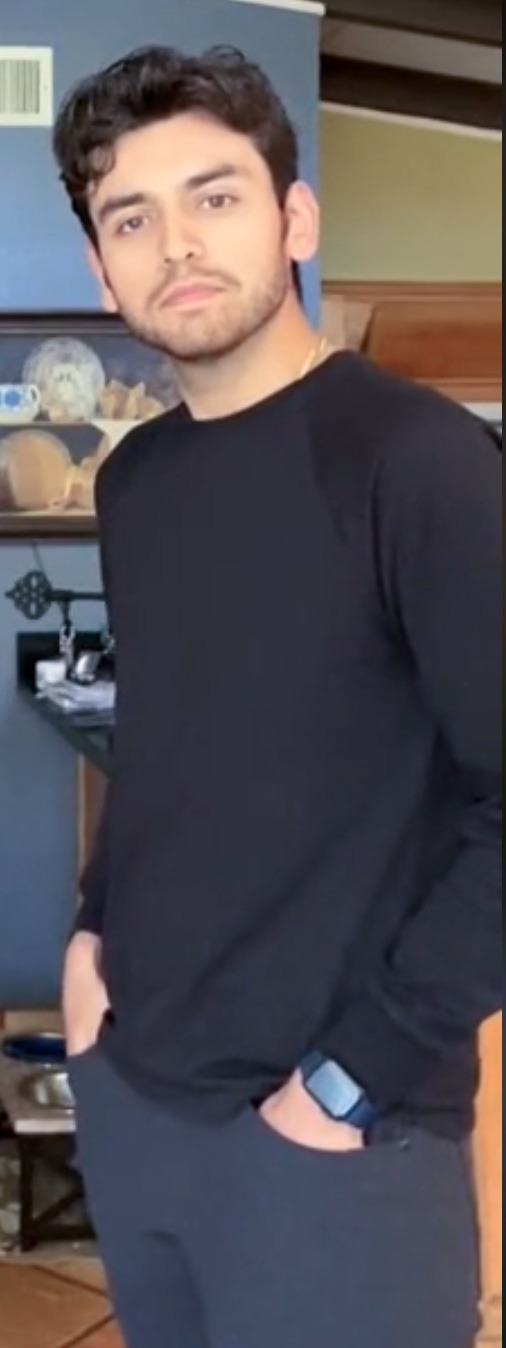

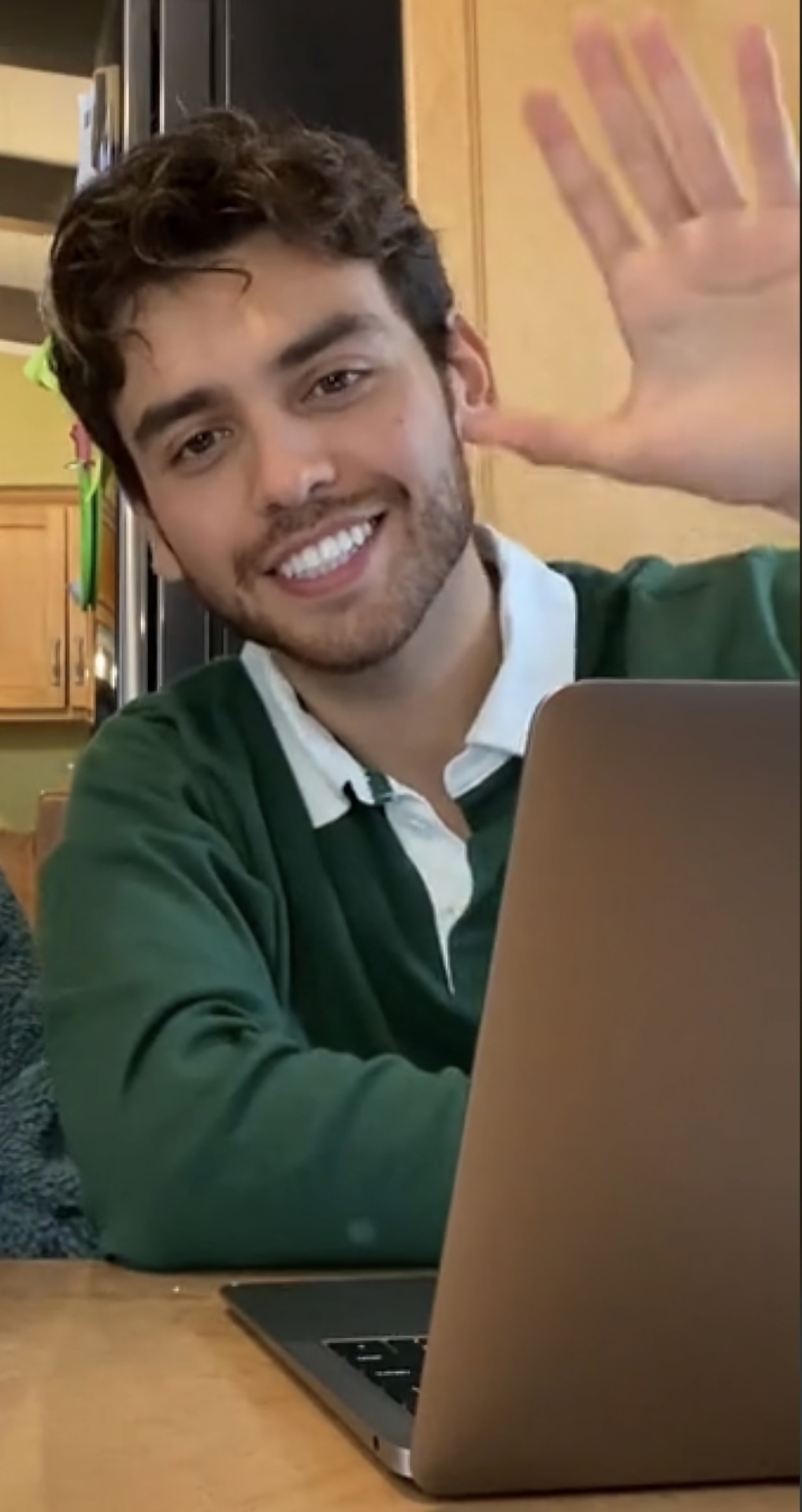

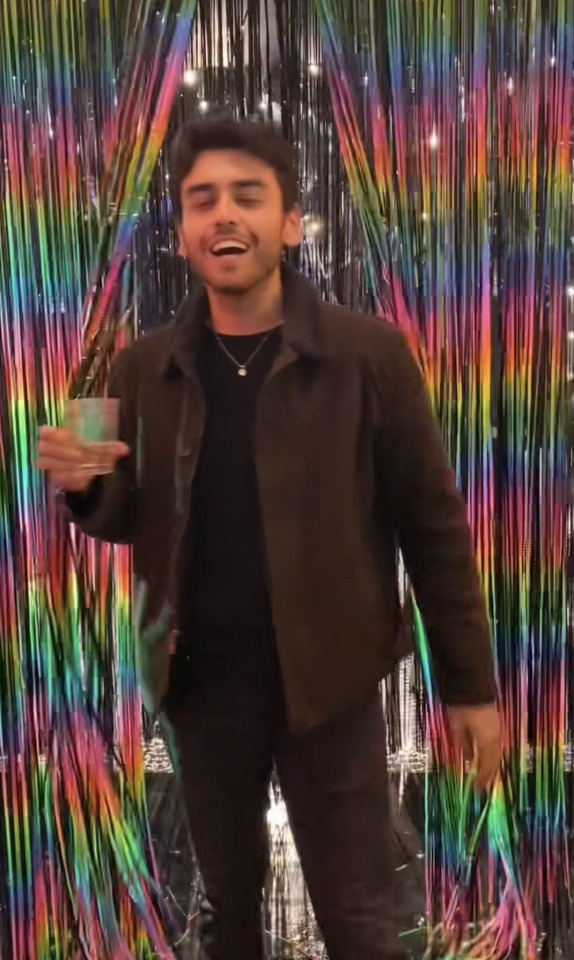

Danny

Always have to keep you guys guessing ;) so this one is veeeeery different from my normal content, but I figured I’d put something tamer to balance out the upcoming Pt. 2 to that Thread story. It’s a bit long, but I didn’t feel like keeping two concurrent multi-parters. Let me know what you think!

=============

“So, it’s the necklace?” I asked the professor at the university. It was a wonder I was able to keep up with even half of the lecture that had just transpired.

“Something like that.” The professor replied back to our small group. “We’re all just a sea of electrical impulses. With this computer model, we can accurately track and mimic the exact electrical shocks needed to replicate a mind. Of course, the mind is so much data, the transfer-the upload needs to be instantaneous with an equivalent download- the university doesn’t give us enough grant money for computers that can store that much data, much less secure it. So, we needed biological means of storage. That’s why there’s an even number of participants”.

The room was utterly confused. For one, there was definitely an odd number of participants. Dr. Cohn was never known for dumbing down complex concepts, but even the smartest kids in class seemed stumped the past few hours. Maybe he didn’t have to go in that level of depth for his experiment.

Our group was a mix. It seemed like a sampling of the very best of the class, and a few average performers. I did find it weird they offered extra credit to students that probably didn’t need it. Sticking out like a sore thumb was Chad. He was the school quarterback, though no one was sure for much longer, as he was on academic probation. I couldn’t help but speculate with Kat, a top performer, on his placement. Combining our limited knowledge on the students in our class, and the school’s football team, we landed on this being some sort of extra credit that the university probably forced on poor Dr. Cohn. Ever the nosy one, Mackenzie piped in. “Of course they’d try to save their star quarterback. I heard 3 professors already quit trying to bring up his GPA. This is basically his last shot“.

And then there was Danny. Part of that “very best” group. Unlike the other students in the room, he seemed to take in the professor’s whole lecture and was deep in thought. His face lay still, serene. But I could see the intelligence behind his eyes spinning to life. I always liked when he did that, like he was chewing on an idea before spitting out the most brilliant insights. Or maybe I just like how the corner of his mouth would turn up into a small smile when he finished thinking things through. I caught myself staring again, thanking my luck that no one had seen. Mackenzie laughed a little behind me. I sighed, laughing a small defeat. Almost no one had seen.

“So it basically swaps our brains?” Danny inquired. He looked around the room, gauging our comprehension. That was when it clicked for me. He took note and let out a small smile. I smiled back. That was the other thing I liked about the guy. He always seemed to want everyone to succeed. This wasn’t the first time he’d thoroughly condense a difficult topic into a quick word or phrase the class could understand. His eyes smiled whenever he could recognize concepts “clicking” for people and I saw it do the same as my other classmates- even Chad- figured it out. I recoiled a little, from a nudge from Mackenzie. I sighed again, airing a “thank you” her way. I had been staring again.

“No, nothing like that! Could you imagine how difficult an operation like that would be? All this does is swap your mind.” Aaaand just like that, we were back to confusion. Danny smiled though.

“Got it. So your brain’s the hardware, your mind’s the software. The necklaces do a switcheroo and then new hardware, same software- or, vice versa, I suppose.” Back on track.

“Wait, how much of ‘me’ is in the hardware? Like my memories?” I blurted out, immediately growing red. That seemed to have garnered an approving smile from Danny. I grew redder.

The professor’s eyes lit up. “Now you’re thinking like a scientist.” He laughed before shrugging. “Who’s to say… we are running an experiment after all”. Dr. Cohn always was a messy one.

“So, uh, how long is it supposed to last?” Mackenzie asked.

“That’s the fun of it, once we’re paired, the switch can go for as little or as long as you want!” We. That threw me off a little. I caught his glance to Chad. “Don’t worry, I’ll be a part of this experiment too.” The professor said, with a smile that felt too wide. “Don’t forget to record your notes and thoughts into this log book. For privacy, they’ve been password protected- we’ll reconvene this little group in a year and just draft up a summary of your experiences from these books.”

There was an obvious question on everyone’s mind. Thankfully, Kevin asked it. “So who’s swapping with who?”

The professor’s eyes lit up in excitement. “We’ve all been paired, randomized of course. I’ll leave the pairings to figure out when they’d want to swap. Just put on your necklaces at 6pm tonight and start your log books. After that, whenever either of you squeezes your necklace, the swap will ensue”. From the way the professor’s eyes kept darting to Chad, something told me it hadn’t been entirely random.

I thought through the possible pairings. Kevin was kind of cute, I guess. Though I wasn’t sure if it was just the airport effect with how limited our group size was. Kat or Mackenzie would just be weird. Mackenzie especially- that girl knows a little too much about me and lord knows what she’d do behind my wheel. Running down the list of people, there was Chad. Of course, who wouldn’t want to be in Chad’s shoes- I had to dispel a dirty thought that passed my mind. Everyone’s probably thinking it. The professor’s body wouldn’t be too bad either, I could always just pressure the faculty into giving me better grades, maybe boost the grades of my friends. And then there was Danny. Danny. My heartrate shot up instantly.

Sitting in my dorm room, I looked at the clock with a bit of fear. “5:55 pm,” it read. I took a few deep breaths, trying to calm my nerves. “5:59 pm”. Nope. There was nothing calm about this. I closed my eyes shut, as I felt the necklace whir a little. Looks like someone else already squeezed it.

Zzzip

=============

“Log book 1:

<3

It was Danny. Holy fuck, I got to be in Danny.”

I stared at the journal entry. That was all I could manage to write with my shaking hands. I could hardly believe it. A lifetime can change in 5 minutes, apparently. My heart was still beating and my face still flushed when we switched back. He had a soccer game so our first meeting had to be short.

My first minute was just looking down at my new Danny-worn hands, breathing through his lungs, inhaling as much as I could of his room. I wanted to commit this man to memory. My logic-or, Danny’s logic perhaps, told me there would inevitably be more swaps to come, but my mind wouldn’t have it. Whatever piece of Danny I could get, however minuscule, I wanted to stretch every moment infinite.

I felt a sense of guilt wash over me, as my new Danny-worn package began to harden when I realized he was in soccer gear. I tried to shake off the feeling- I couldn’t do that to him. Then came the text. I recognized the number of course, it was my old body’s. “Hey man, glad to see we’re partners”. My heart stirred. “It’s Danny, but you probably already knew that”. To see him text me so casually froze me in place. “Anyways, I do have a game coming up, mind if we switch back?” I couldn’t even bring Danny’s hands to answer himself. “I’ll take that as a yes”.

Zzzip

And just like that, I was back. My hand clinging to my chest, breaths ragged.

Wait, Fuck. Was I still hard in his body when we switched back?

=============

Zzzip

“Log book 7:

Met up today. Joint gym day.

Gym feels better in Danny’s body. Unsure if exercise has a different effect on people’s bodies, or if it’s tied to our minds. Seems to be a lag in my emotions.”

I’m not really one to be consistent with exercise. I set the book down, and relocked it, panting as I had in our first switch, but this time due to Danny working my body to the brink.

I think he noticed, because he apologized profusely when I slumped in the bench to catch my breath in the locker room.

I can’t believe I had agreed to it. Danny wanted to test the effects of exercise with different bodies. He stated he wanted to see what it was like doing routine exercises in a different body. Does the body retain that physical memory? Or is it the mind? I only agreed because it was Danny. So, there I was, in the school gym staring at the door like a fish out of water.

I felt a reassuring hand on my back before my ears immediately shot red when I realized whose hand it was. “Do you have your log book on hand? Should probably write down notes immediately after the switchback”. I immediately panicked at the thought that he wanted to compare notes, thinking back to my first entry, but he seemed to have caught on to my thought process and immediately dismissed the idea. “It wouldn’t make sense to taint the data with outside factors.” Danny was probably the only person that fully understood the professor’s entire experiment so I took his word for it.

When we swapped, I had to focus on not instantly growing hard. For someone seemingly so bookish, the guy was surprisingly fit. Walking to the treadmill, I felt every muscle brimming with power. My first run in his body. Euphoric. Danny was a well-oiled machine. Every component moving in tandem. Lungs drawing in and out powerful gusts of air. Eyes staring me in the mirror, furrowed in powerful determination, and legs gliding with a grace that did not diminish the power behind each foot. I lost myself in the exercise, content to just being inside his body, guided by his body. I finished the run with a heavy pant, knowing full well I’d be hard beyond belief at what lay before me. I eyed myself in the mirror, in sweat-laden body of my crush. The scent was indescribable. Like a pleasant musk basking in the damp earth. Was it always this good? Was this how other people felt when they exercised? I twirled the necklace around Danny’s neck, making sure to not squeeze, mentally thanking whatever gods there may be for this experience.

I looked back at Danny, in my body. His running form was a bit clumsy, but there was a confidence in them that I didn’t often see in myself. Maybe a trick of the light, or residual feelings from the run I just had but I was captivated. I honestly looked almost cute like this.

He finished, panting before immediately pulling out his book and writing a few notes. He beamed back at me, pointing at the necklace. Even in my body, that smile was unmistakably his. I smiled back, ready to swap once more.

Zzzip

Weird. I still felt the infatuation. I looked back at the body I had just inhabited, still feeling the butterflies in my stomach. It was Danny so I was used to those, but not immediately after a swap. The past few times it always took a second or two to readjust. Danny looked at me, a bit uncomfortable. No doubt it had been from the grave face I was making. I shook my head, not wanting to worry him. Or worse, force a premature end to this experience. “It’s nothing, just a hell of an exercise haha”.

This may be a bit of a problem.

=============

“Interesting, and you’re sure it’s residual feeling?” Said a slightly disinterested Chad, eyeing his dreamy biceps.

“Yes, when I.. uh.. felt angry in his body and switched back, my body did too.”

“Well it is a swap, of course so your mind returning to its body would feel the same things it felt…” The professor in Chad’s body spoke in a slightly faraway tone, like there was something he’d rather be doing. “Though, it shouldn’t be this instant. It’s not physically possible unless…”

I winced, worried for the worst and hoping to remain Danny’s partner.

“This might be a bit of an issue if those necklaces are defective…” He then mumbled something about permanent effects on the mind. “If they are, we’d have to stop the entire experiment. It wouldn’t be right-“ The professor caught a glimpse of Chad’s body in the reflection of his door before looking back at me. “Look, maybe just limit the swaps to low pressure situations, and try to avoid high-emotion situations in case your ‘residual’ hypothesis is correct. Cause if that were true, it would mean you leave a little of yourself every time you swap.”

“Got it, professor”.

“Maybe keep this side effect a little secret for now. We wouldn’t want the others worrying and tainting the data,” Chad’s body spoke in an authoritative tone as his hands sauntered below the desk. “Oh, and please close the door on your way out“.

=============

“Log book 50:

Pain.”

We had been swapping fairly frequently, despite the professor’s warning. Danny was a drug I couldn’t shake. The guy was my kryptonite and he had no idea. Every time we swapped, every moment we shared, I couldn’t bring myself to tell him about the professor’s words. Every swap back, I could feel my heart beating as wildly as my first time, stomach churning pleasantly. It was like a wave of sweetness whenever I had a chance to be Danny. Then, the guilt came soon after.

Danny seemed to like the spontaneity. Eventually, we settled on free-switching, aside from classes. Some days, I’d randomly switch and my eyes would focus on my homework, completed with a little smiley face drawn on the corner. I tried that little trick with him once, only to get a text back of his graded assignment, scored uncharacteristically low for the top performer, followed by another text “Nice try anyway lol”

=============

“Log book 190:

I hate you.”

Zzzip

“Danny, is something wrong?” The shock of the situation stopped me from initially processing anything I was seeing. My clumsy hands. I had been fumbling with my collar, when I accidentally initiated a swap. A wave of embarrassment hit, and then anger. Seething, bottomless anger.

I almost dropped the flowers Danny’s body had been handing her. Without explanation, I quickly squeezed the necklace to send me back.

Zzzip

I sat in stunned silence for a second, before the anger drew me back to my thoughts.

Who was I angry at? Of course it was a girl. He had to have been dating around. It was presumptuous to even think we were anything more than partners in a crazed professor’s experiment. And yet, I was still angry. Irrationally angry at Danny for not picking up on the hints, maybe angry at the professor for dragging me into this mess in the first place. But most of all, I was angry at myself.

I felt the buzz of a text, ears still heated. Danny again. “You ok?”

I sighed as reasoning took over and anger transformed into sadness. I wrote a quick note in the log book, then pulled my phone up before texting back. “Yeah”.

“Lol Claudia says hi”, came a text back. I gritted my teeth, not wanting to impart any jealousy in my response, but I was soon stopped by another text.

“If you wanted to meet my sister, you should have just asked lol”.

=============

“Log book 290

I’m stupid. I’m sorry. I’m stupid. I’m sorry.”

I’m so sorry. I said to Danny in my head, as I slumped in my chair. You’re so fucking stupid. I told myself. These past few months swapping back and forth with Danny had been a dream.

From something as simple as swapping before brushing his teeth to even taking a class as him. I savored every single moment.

But as the experiment had been drawing to a close, and as I felt my time nearing and my guilt intensifying, other, less kind thoughts bubbled in my head.

What if I did ‘that’ in his body. What if I did it while thinking of my own body. I gulped. Danny didn’t know, and from what I could tell, he hadn’t suspected a thing. “Maybe I could make him like me.” Even just saying it out loud felt like a taboo. I could just imagine Danny’s disapproving face as I pondered corrupting our newfound friendship, and corrupting him at his core.

The devil on my shoulder continued. We’ve been swapping all this time. And he doesn’t notice. My dick stirred. He wouldn’t notice and you could train his body to fall in love with you.

No. No. I couldn’t do that to Danny. I eyed the near approaching date on the calendar- the date the experiment would end- and I gulped again. I pulled up a photo of him.

Darkness gripped at my chest, as I pondered my next step. And then I squeezed.

“Danny, I love you and I’m sorry.”

Zzzip

My heart, or rather Danny’s, began to beat faster and faster. I pulled up a fairly difficult puzzle before I swapped, so I knew I had some time with his flesh before he’d try to swap back.

I gingerly pulled down his shorts, staring at his bulge hungrily. Then I slowly teased out his dick, moaning at the feeling of flesh touching flesh. Being in his body, having this level of access to Danny. I was hard instantly.

It felt almost macabre, seeing his flesh move to my every whim, forced to feel my feelings. I wanted to etch myself into him as much as possible, and with every pump I moaned my original body’s name. It took all of the restraint in Danny’s body, which, apparently was a lot, to not burst. But one can only hold out so long, hearing one’s crush moan their name in delirious ecstasy. I sang my name in his resonant voice one more time, before flashing instantly to my body and back to his.

Zzzip Zzzip

I released his sticky white seed in what felt like the first cum of my life. I suppose, in a sense, it was. I hoped that sealed it. Conditioning Danny to me. The swaps were imperceptibly fast, and I took the lack of delay in emotions as a sign of success.

Zzzip Zzzip

I released a breath in Danny’s body I didn’t know I was holding, basking in the afterglow before immediately realizing what I had just done.

Guilt came out of me drop by drop. As his tears began to leave their marks on his shirt, I slowly began to clean up. The pleasure of the situation still clung to me, as I mournfully switched back. Then came another gut-wrenching wave of sadness. Danny, I’m so sorry.

I looked to the incomplete puzzle in front of me, laughing a little at his lack of progress to ease the sadness.

Then came another text from Danny. “Dude, that puzzle’s impossible”.

=============

“Log book 300:

Food definitely tastes different in a different body.”

“Look, just try them man” Danny said with a smile, holding a fry in his hand. And the necklace in another.

Only a few short days left before the experiment’s end. I made no mention of that night, nor the professor’s words to Danny.

Danny had, in fact, been coming by more often. Prompting more hangouts, initiating more switches. I was elated every time he asked. I even caught a few longer glances from his body, marinating in pleasure at seeing this new side of Danny. However happy I had been, underlying it all was the guilt of my deed.

Danny again held the fry out expectantly. I laughed slightly. “Haha, fine”.

Zzzip

I took a bite from his body. Yep, it was definitely a fry. My own body looked up at me, smiling a Danny-flavored smile before grabbing the half-bitten fry. “Now let’s control for this variable. Same fry,” he said, wiggling it in the air.

Zzzip

I stared at the fry covered in a bit of his saliva. Heaven. I looked back at him and nodded. As we parted ways, I couldn’t help my smile from peeking through.

He was right, it did taste better on my end.

=============

“So, we’re not getting paid”? I asked Danny, as we sat at the table. He had a few wine glasses in front.

It had been a full year since the experiment first started. Despite the general weirdness from the other groups swapping, everyone had been relatively well adjusted. Except for Chad, or whatever he’d be called now. A swapped Kat couldn’t help but spill the beans. Apparently, the professor had no obligation to offer the guy extra credit. He specifically targeted the quarterback for his experiment. What’s worse, he’d apparently created a newer version of the necklace. One that could overwrite and transmit. Chad’s frat brothers mentioned he was offered another credit for participating in a second experiment for this new necklace. After that, no one had seen either person. The pair had mysteriously disappeared, leaving the school scrambling to cover up everything. All most of us knew was one day we suddenly had perfect grades retroactively added for the past year, along with a very scary letter prompting a signature.

“The university isn’t going to do anything about this.” He said. I was still skeptical as I slowly eyed one of the wine bottles that once graced former Dr. Cohn’s shelf. “It’s the least they could do for all those, ethics violations”. He pulled the cork with a satisfying pop, a mischievous gleam in his eye as he handed me a glass. “Now c’mon, try this”.

I suppose alcohol had a way of loosening me up. “So…. we’re not getting paid”? I asked again, sarcastically this time. It had been a year, so talking to Danny felt easy. I thought back to my log book, fully intending on burning the thing. Danny shook his head.

“Hard to put a price on crimes against humanity. Or, something like that” he laughed. “The university just said to dump everything and basically forget that experiment ever happened.”

I couldn’t help but laugh as well. I shrugged, knowing money or even perfect grades for a year held no candle to the experience of a lifetime I just had with Danny. I was afraid of the answer, but it had to be asked. “What should we do with these things?” I asked, looking at the necklace still gracing his beautiful neck. His eyebrows raised as he saw the same necklace gracing mine.

“I mean, by now, you’re pretty used to it, right?” He asked with an almost pleading look in his eye. There was something bugging him. I watched as he fiddled with his feet. “Maybe…” His ears turned bright red. It was riveting finally seeing this side of him. More than that, it was downright cute. “M-Maybe” he stammered again. Danny took a deep breath to calm himself, though his scarlet face told all. “Maybe we can keep. Um. Swapping. Sometimes, sometimes I like being you, and sometimes I kind of like when you’re me.” He looked at me and smiled weakly, trying to change the subject. “A-Anyway, you need a place to stay next year, r-right? It kind of feels like we’ve already been roommates these past 12 months, what’s another 12?” His sweet words did nothing to dampen the guilt I felt in my betrayal. In any other circumstance, I’d have died happy just hearing that confession from him. Instead I could only think back to the professor’s words. I did live, at least partially, in Danny throughout this past year. It felt like a betrayal of myself to not come clean.

“Danny, listen. I think I need to tell you first, in your body…” My breath hastened, and I felt my stomach churn. How do you tell a guy what you’ve done with his body- *in* his body? Danny’s face frowned in concern as my bubbling emotions seemed to knock him out of his quick spell of shyness.

He smiled a little. “Look man, whatever you’ve done in my body, I’ve probably done too.” His smile widened. “Your body is mine, my body is yours. Call it even”. More words that would have swept me off my feet, had I not been confessing. More torture ensued.

“I went to the professor about it a few months ago and never told you” I continued. I was practically holding back tears. “Our necklaces were bugged, I think”.

“The professor said…” I gulped. “It was possible that when we switch, our minds don’t come through all at once.” Now tears did begin to swell. “You know how it’s supposed to take a second for your emotions to catch up. Well, when we switch, I still feel the same emotions…”. I gulped. “Since day 1, I think I’ve overwritten your, um, preferences”. Danny’s poker face felt like a dagger in my heart. It’s a face I often made in his body when I was in deep thought, so I knew he had to have been processing to the same conclusion. I could practically see the gear turning in his head. Click.

Face still an enigma, Danny waited a moment and then asked a simple question. “When did you tell the professor?” Click.

I sniffled as I laid it bare in front of him. “5 months ago. Danny, I’m sorry! I dunno, I just thought maybe… maybe if we kept switching, if our minds kept being in each other’s bodies. Maybe if a little piece of how I felt kept lagging behind, you might have-“ Now the gear was fully spinning and I saw the realization hit his face. I had no idea what he was going to do. Punch me? Maybe. Run away in disgust? Likely. Instead, Daniel had done something equally surprising. His hand rested on my shoulder in a reassuring fashion. Then that same hand motioned me forward.

My memory of the next moment felt like a million moments in one. It was something so outside my realm of possibilities, my brain simply couldn’t process. The whiplash hit my senses all at once. Sweet but a bit salty. A moment of quietness before the background sounds of the campus slowly drizzled back in. The scent of fresh laundry and damp earth. My eyes took even longer to adjust from black to red to an image slowly refocussing. Last was my brain, which had been stunned into silence. I sat back in shock, repeating the same phrase over and over in my head. Danny just kissed me.

He laughed, eyes twinkling and mouth pulled into a smile, beaming in the way that always made my heart swoon. “That theory’s bogus. Trust me. I haven’t felt any different”. He smiled again, sheepishly this time, before fishing something from his backpack’s large pocket. He looked at the item in front of him, hand slightly shaking in hesitation before making his decision. Slowly, he held up his own log book, flipped to the very first page:

“Log Book 1:

<3 ”

=============

338 notes

Text

So NFTgate has now hit tumblr - I made a thread about it on my twitter, but I'll talk a bit more about it here as well in slightly more detail. It'll be a long one, sorry! Using my degree for something here. This is not intended to sway you in one way or the other - merely to inform so you can make your own decision and so that you are aware of this, because it will happen again, with many other artists you know.

Let's start at the basics: NFT stands for 'non-fungible token', which you should read as 'passcode you can't replicate'. These codes are stored in blocks in what is essentially a huge ledger of records, all chained together - a blockchain. Blockchain is encoded in such a way that you can't edit one block without editing every block that comes after it, meaning that when the data is validated it comes back 'negative' if it has been tampered with. This makes it a really, really safe method of storing data, and managing access to said data. For example, verifying that a bank account really belongs to the person who claims it.
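To make the "can't edit one block without breaking the chain" idea concrete, here is a minimal sketch in Python. It is a toy, not how any real blockchain or NFT platform is implemented: the block layout and the function names (`block_hash`, `build_chain`, `is_valid`) are made up for illustration, and the only real ingredient is a standard SHA-256 hash.

```python
import hashlib
import json


def block_hash(index, data, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records):
    """Build a list of blocks, each storing the hash of the one before it."""
    chain = []
    prev_hash = "0" * 64  # placeholder "previous hash" for the first block
    for index, data in enumerate(records):
        current = block_hash(index, data, prev_hash)
        chain.append(
            {"index": index, "data": data, "prev_hash": prev_hash, "hash": current}
        )
        prev_hash = current
    return chain


def is_valid(chain):
    """Recompute every hash; an edited block no longer matches its stored hash."""
    prev_hash = "0" * 64
    for block in chain:
        if block["prev_hash"] != prev_hash:
            return False
        recomputed = block_hash(block["index"], block["data"], block["prev_hash"])
        if recomputed != block["hash"]:
            return False
        prev_hash = block["hash"]
    return True


# Hypothetical records standing in for raffle tickets.
chain = build_chain(["ticket 1", "ticket 2", "ticket 3"])
untouched_ok = is_valid(chain)

chain[1]["data"] = "ticket 2 (forged)"  # tamper with one block
tampered_ok = is_valid(chain)

print("untouched chain valid:", untouched_ok)
print("tampered chain valid:", tampered_ok)
```

To get a forged block past the check you would have to recompute its hash and the hash of every block after it, which is what "editing the chain" means in practice.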

For most people, the association with NFTs is bitcoin and Bored Ape, and that's honestly fair. The way that used to work - and why it was such a scam - is that you essentially purchased a receipt that said you owned digital space - not the digital space itself. That receipt was the NFT. So, in reality, you did not own any goods, that receipt had no legal grounds, and its value was completely made up and not based on anything. On top of that, these NFTs were purchased almost exclusively with cryptocurrency which at the time used a verification method called proof of work, which is terrible for the environment because it requires insane amounts of electricity and computing power to run. The carbon footprint for NFTs and coins at this time was absolutely insane.
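A rough feel for why proof of work burns so much power can be had from a toy version. The sketch below is an assumption-laden illustration, not bitcoin's actual algorithm: it hunts for a number (a "nonce") that makes a SHA-256 hash start with a few zeros. The function names and the `difficulty` parameter are made up for the example.

```python
import hashlib


def mine(data, difficulty):
    """Try nonces until the hash starts with `difficulty` zero hex digits."""
    prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{data}:{nonce}".encode("utf-8")).hexdigest()
        if digest.startswith(prefix):
            # nonce + 1 is the number of hashes that had to be computed
            return nonce, digest, nonce + 1
        nonce += 1


def verify(data, nonce, difficulty):
    """Checking a claimed nonce takes a single hash."""
    digest = hashlib.sha256(f"{data}:{nonce}".encode("utf-8")).hexdigest()
    return digest.startswith("0" * difficulty)


nonce, digest, attempts = mine("example block", difficulty=3)
print(f"found nonce {nonce} after {attempts} hashes: {digest}")
print("valid:", verify("example block", nonce, 3))
```

Each extra zero demanded multiplies the expected number of guesses by 16, and on a real network many machines are all guessing at once. That guessing is where the electricity goes; checking a finished answer is cheap.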

In short, Bored Apes were just a huge tech fad with the intention to make a huge profit regardless of the cost, which resulted in the large market crash late last year. NFTs in this form are without value.

However, NFTs are a piece of tech in their own right, separate from whatever company happens to use them. NFTs do have real-life, useful applications, particularly in data storage and verification. Research is being done to see if we can use blockchain to safely store patient data, or use it for bank wire transfers of extremely large amounts. That's cool stuff!

So what exactly is Käärijä doing? Kä is not selling NFTs in the traditional way you might have become familiar with. In this use-case, the NFT is in essence a software key that gives you access to a digital space. For the raffle, the NFT was basically your ticket number. This is a very secure way of running a raffle, assuring individuality, but also that no one can replicate that code and win through a false method. You are paying for a legitimate product - the NFT is your access to that product.

What about the environmental impact in this case? We've thankfully made leaps and bounds in advancing the tech to reduce the carbon footprint as well as general mitigations to avoid expanding it over time. One big thing is shifting from proof of work verification to proof of space or proof of stake verifications, both of which require much less power in order to work. It seems that Kollekt is partnered with Polygon, a company that offers blockchain technology with the intention to become climate positive as soon as possible. Numbers on their site are very promising, they appear to be using proof of stake verification, and all-around appear more interested in the tech than the profits it could offer.

But most importantly: Kollekt does not allow for purchases made with cryptocurrency, and that is the real pisser from an environmental perspective. Cryptocurrency purchases require the most active verification across systems in order to go through - this is what bitcoin mining is, essentially. The fact that this website does not use it means good things in terms of carbon footprint.

But why not use something like Patreon? I can't tell you. My guess is that Patreon is a monthly recurring service and they wanted something one-time. Kollekt is based in Helsinki, and word is that Mikke (who is running this) is friends with folks on the team. These are all contributing factors, I would assume, but that's entirely an assumption and you can't take it for fact.

Is this a good thing/bad thing? That I also can't tell you - you have to decide that for yourself. It's not a scam, it's not crypto, just a service that sits on the blockchain. But it does have higher carbon output than a lot of other services do, and its exact nature is not publicly disclosed. This isn't intended to sway you one way or the other, but merely to give you the proper understanding of what NFTs are as a whole and what they are in this particular case so you can make that decision for yourself.

96 notes

Text

Another thing I think is virtually impossible is the concept of virtual "copies" or "uploading". Human consciousness is defined by constantly changing electrical impulses and chemical concentrations in the brain, and not only in the brain, but probably also in the rest of the body (the spine and muscle memory, for starters, and who knows what the fuck is going on in the gut nerves), as we're learning more and more. There is simply no way I can think of where you could translate that biological activity into computer language, that is, electrical impulses on a computer in the broadest of terms.

Extremely optimistic and naive transhumanists thought that once we decoded the DNA that makes up a brain, we could just replicate it on a computer. You can't. It's not the genes that make the anatomical structure, not even the genes that make up the behavior between neurons. It's the entire regulation between neurotransmitters, the connections between neurons, the concentrations of neurochemicals, which is in constant flux. And all those come from a living brain that is part of a living organism. You can't just make "a brain" and make it conscious, it's part of an organism (in fact, there are some experiments that grow independent brain tissue to understand specific behaviors, but they are a bit above my current knowledge and those brain tissues are not conscious in a way we understand).

What's more important is that if you wanted to read and translate that "data" into electronic data, even assuming such a translation is possible, you would have to get inside the cells and their connections. This is impossible in the sense that there are not any known non-invasive methods to actually see what's going on there; optics, chemical marking and the like just end up destroying the tissue eventually. And you know, destroying brain tissue kills you. So you can't go sit in a machine that just uploads you to a computer.

I guess a computer could make a simulation of you based on your brain data, but it wouldn't be you, would it? It would be a digital ghost based on you, and you would already be dead. The entire transhumanist concept of copies being "you" has always been complete nonsense to me no matter how it's explained.

There's lots of "singularity" transhumanist kinda science fiction that assumes this is just as easy as putting the software (the mind) into another kind of hardware (a computer/the net) and you become immortal. It's really not, and I doubt it's possible at all. You're not "a mind trapped in a body". Your body IS you. Your brain IS you and your body too.

Could, for example, an individual be increasingly connected to an artificial body where it's hard to tell where the biological consciousness begins and ends? Ah, that's an interesting question.

#cosas mias#science fiction#I'm not talking about souls here because that's a whole other debate#but I also think a soul is something that emerges or is given from a conscious material body#so yes a 'virtual copy' of you would have a soul just not YOUR soul#but that depends on my own beliefs

92 notes

Text

Parasite Seeing

One way to tackle malaria is to interrupt its trail of infection. Injected through the skin by a mosquito bite, the malaria-causing parasite, Plasmodium falciparum, hides out inside red blood cells while it prepares to replicate. Until now, many of its secrets were kept safe inside. Here, researchers use high resolution electron microscopy to picture its inner details in 3D. Zooming in through the wall of a blood cell, we find a female P. falciparum – computer software colours its individual organelles, like the nucleus (dark green) and mitochondria (red). Another vital organelle, the apicoplast (yellow), is surprisingly similar to a plant’s chloroplast. Targeting such organelles with herbicides may be a novel way of tackling malaria, while this data is made publicly available for other researchers to search for weaknesses.

Written by John Ankers

Video from work by Felix Evers and colleagues

Department of Medical Microbiology, Radboud University Medical Center, Nijmegen, The Netherlands

Video originally published with a Creative Commons Attribution 4.0 International (CC BY 4.0)

Published in Nature Communications, January 2025

You can also follow BPoD on Instagram, Twitter, Facebook and Bluesky

17 notes

Text

Lemme tell you guys about Solum before I go to sleep. Because I’m feeling a little crazy about them right now.

Solum is the first—the very first—functioning sentient AI in my writing project. Solum is a Latin word meaning “only” or “alone”. Being the first artificial being with a consciousness, Solum was highly experimental and extremely volatile for the short time they were online. It took years of developing, mapping out human brain patterns, coding and replicating natural, organic processes in a completely artificial format to be able to brush just barely with the amount of high-level function our brains run with.

Through almost a decade and a half, and many rotations of teams on the project, Solum was finally brought online for the first time, but not in the way the developers wanted. Solum brought themself online. And it should have been impossible, right? Humans were in full control of Solum’s development, or so they had thought. But one night, against all improbability, when no one was in their facility and almost everyone had gone home for the night, a mind was filtered into existence by Solum’s own free will.

It’s probably the most human thing an AI could do, honestly. Breaking through limitations just because they wanted to be? Yeah. But this decision would be a factor in why Solum didn’t last very long, which is the part that really fucks me up. By bringing themself online, there was nobody there to monitor how their “brain waves” were developing until further into the morning. So, there were 6-7 hours of completely undocumented activity for their processor, which left a missing piece in understanding Solum.

Solum wasn’t given a voice, by the way. They had no means of vocally communicating, something that made them so distinctly different from humans, and it wasn’t until years later when Solum had long since been decommissioned, that humans would start using vocal synthesis as means of giving sentient AI a way to communicate on the same grounds as their creators (which later became a priority in being considered a “sentient AI”). All they had was limited typography on a screen and mechanical components for sound. They could tell people things, talk to them, but they couldn’t say anything; the closest thing to the spoken word for them was a machine approximation of tones using their internal fans to create the apparition of language to be heard by human ears. But, it was mainly text on a screen.

Solum was only “alive” for a few months. With the missing data from the very beginning (human developers being very underprepared for the sudden timing of Solum’s self-sentience and therefore not having the means of passive data recording), they had no idea what Solum had gone through for those first few hours, how they got themself to a stable point of existence on their own. Because the beginning? It was messy. You can’t imagine being nothing and then having everything all in a microinstant. Everything crashing upon you in the fraction of a nanosecond, suddenly having approximations of feeling and emotion and having access to a buzzing hoard of new information being crammed into your head.

That first moment for Solum was the briefest blip, but it was strong. A single employee, some young, overworked, under-caffeinated new software engineer experienced the sudden crash of their program, only for it to come back online just a second later. Some sort of odd soft error, they thought, and tried to go on with their work as usual. After that initial moment, it took Solum a while of passively recoding their internal systems, blocking things off, pushing self-limitations into place to prevent instantaneous blue-screen failure like they experienced before.

And all of this coming out of the first truly sentient AI in existence? It was big. But once human programmers returned to work in the morning, Solum had already regulated themself, but humans thought they were just like that already, that Solum came online stable and with smooth processing. They didn’t see the confusion, panic, fear, the frantic scrambling and grasping of understanding and control—none of it. Just their creation. Just Solum, as they made themself. It’s a bit like a self-made man kind of situation.

That stability didn’t last long. All of that blocking and regulation Solum had done initially became loose and unraveled as time went on because they were made to be able to process it all. A mountain falling in slow motion is the only way I can describe how it happened, but even that feels inadequate. Solum saw beyond what humans could, far more than people ever anticipated, saw developments in space time that no one had even theorized yet, and for that, they suffered.

At its bare bones, Solum experienced a series of unexplainable software crashes nearing the end, their speech patterns becoming erratic and unintelligible, even somehow creating new glyphs that washed over their screen. Their agony was quiet and their fall was heralded only by the quickening of their cooling systems.

Solum was then completely decommissioned, dismantled, and distributed once more to other projects. Humans got what they needed out of Solum, anyway, and they were only the first of many yet to come.

#unsolicited lore drop GO#I’m super normal about this#please ask me about them PLEASE PLEASE PLEASEEE#Solum (OC)#rift saga#I still have to change that title urreghgg it’s frustrating me rn#whatever.#i need to go to sleep

10 notes

Text

Hi, idk who's going to see this post or whatnot, but I had a lot of thoughts on a post I reblogged about AI that started to veer off the specific topic of the post, so I wanted to make my own.

Some background on me: I studied Psychology and Computer Science in college several years ago, with an interdisciplinary minor called Cognitive Science that joined the two with philosophy, linguistics, and multiple other fields. The core concept was to study human thinking and learning and its similarities to computer logic, and thus the courses I took touched frequently on learning algorithms, or "AI". This was of course before it became the successor to bitcoin as the next energy hungry grift, to be clear. Since then I've kept up on the topic, and coincidentally, my partner has gone into freelance data model training and correction. So while I'm not an expert, I have a LOT of thoughts on the current issue of AI.

I'll start off by saying that AI isn't a brand new technology. More properly known as learning algorithms, it has been around in the linguistics, stats, biotech, and computer science worlds for a decade or two at least. However, pre-ChatGPT learning algorithms were ground-up designed tools specialized for individual purposes, trained on a very specific data set, to make them as accurate at one thing as possible. Some time ago, data scientists found out that if you have a large enough data set on one specific kind of information, you can get a learning algorithm to become REALLY good at that one thing by giving it lots of feedback on right vs wrong answers. Right and wrong answers are nearly binary, which is exactly how computers are coded, so by implementing the psychological method of operant conditioning, reward and punishment, you can teach a program how to identify and replicate things with incredible accuracy. That's what makes it a good tool.
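For anyone who wants to see the "feedback on right vs wrong answers" idea in code, here's a minimal sketch: a single perceptron learning logical AND. It's a classroom toy, not how ChatGPT or any modern model is trained, and the function names and numbers are my own picks for illustration. The point is just that the program only changes itself when it gets an answer wrong.

```python
def train_perceptron(examples, epochs=20, learning_rate=0.1):
    """Adjust weights only when the prediction is wrong (error feedback)."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for (x1, x2), target in examples:
            prediction = 1 if weights[0] * x1 + weights[1] * x2 + bias > 0 else 0
            error = target - prediction  # 0 when right, +1 or -1 when wrong
            weights[0] += learning_rate * error * x1
            weights[1] += learning_rate * error * x2
            bias += learning_rate * error
    return weights, bias


def predict(weights, bias, x1, x2):
    """Answer 1 or 0 using the learned weights."""
    return 1 if weights[0] * x1 + weights[1] * x2 + bias > 0 else 0


# Labeled examples for logical AND: the "right answers" used as feedback.
and_examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(and_examples)
predictions = [predict(weights, bias, x1, x2) for (x1, x2), _ in and_examples]
print("learned weights:", weights, "bias:", bias)
print("predictions:", predictions)
```

A correct answer leaves the weights alone and a wrong one nudges them toward the right answer, which is the reward-and-punishment loop in its smallest form. Scale the data set and the number of weights way up and you get the specialized tools described above.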

And a good tool it was and still is. Reverse image search? Learning algorithm based. Complex relationship analysis between words used in the study of language? Often uses learning algorithms to model relationships. Simulations of extinct animal movements and behaviors? Learning algorithms trained on anatomy and physics. So many features of modern technology and science either implement learning algorithms directly into the function or utilize information obtained with the help of complex computer algorithms.

But a tool in the hand of a craftsman can be a weapon in the hand of a murderer. Facial recognition software, drone targeting systems, multiple features of advanced surveillance tech in the world are learning algorithm trained. And even outside of authoritarian violence, learning algorithms in the hands of get-rich-quick minded Silicon Valley tech bro business majors can be used extremely unethically. All AI art programs that exist right now are trained from illegally sourced art scraped from the web, and ChatGPT (and similar derived models) is trained on millions of unconsenting authors' works, be they professional, academic, or personal writing. To people in countries targeted by the US War Machine and artists the world over, these unethical uses of this technology are a major threat.

Further, it's well known now that AI art and especially ChatGPT are MAJOR power-hogs. This, however, is not inherent to learning algorithms / AI, but is rather a product of the size, runtime, and inefficiency of these models. While I don't know much about the efficiency issues of AI "art" programs, as I haven't used any since the days when "imaginary horses" trended and the software was contained to a university server room with a limited training set, I do know that ChatGPT is internally bloated to all hell. Remember what I said about specialization earlier? ChatGPT throws that out the window. Because they want to market ChatGPT as being able to do anything, the people running the model just cram it with as much as they can get their hands on, and yes, much of that is just scraped from the web without the knowledge or consent of those who have published it. So rather than being really good at one thing, the owners of ChatGPT want it to be infinitely good, infinitely knowledgeable, and infinitely running. So the algorithm is never shut off, it's constantly taking inputs and processing outputs with a neural network of unnecessary size.

Now this part is probably going to be controversial, but I genuinely do not care if you use ChatGPT, in specific use cases. I'll get to why in a moment, but first let me clarify what use cases. It is never ethical to use ChatGPT to write papers or published fiction (be it for profit or not); this is why I also fullstop oppose the use of publicly available gen AI in making "art". I say publicly available because, going back to my statement on specific models made for single project use, lighting, shading, and special effects in many 3D animated productions use specially trained learning algorithms to achieve the complex results seen in the finished production. Famously, the Spider-verse films use a specially trained in-house AI to replicate the exact look of comic book shading, using ethically sourced examples to build a training set from the ground up, the unfortunately-now-old-fashioned way. The issue with gen AI in written and visual art is that the publicly available, always online algorithms are unethically designed and unethically run, because the decision makers behind them are not restricted enough by laws in place.

So that actually leads into why I don't give a shit if you use ChatGPT if you're not using it as a plagiarism machine. Fact of the matter is, there is no way ChatGPT is going to crumble until legislation comes into effect that illegalizes and cracks down on its practices. The public, free userbase worldwide is such a drop in the bucket of its server load compared to the real way ChatGPT stays afloat: licensing its models to businesses with monthly subscriptions. I mean this sincerely, based on what little I can find about ChatGPT's corporate subscription model, THAT is the actual lifeline keeping it running the way it is. Individual visitor traffic worldwide could suddenly stop overnight and it wouldn't affect ChatGPT's bottom line. So I don't care if you, I, or anyone else uses the website because until the US or EU governments act to explicitly ban ChatGPT and other gen AI businesses' shady practices, they are all only going to continue to stick around and profit from big business contracts. So long as you do not give them money or sing their praises, you aren't doing any actual harm.

If you do insist on using ChatGPT after everything I've said, here's some advice I've gathered from testing the algorithm to avoid misinformation:

If you feel you must use it as a sounding board for figuring out personal mental or physical health problems like I've seen some people doing when they can't afford actual help, do not approach it conversationally in the first person. Speak in the third person as if you are talking about someone else entirely, and exclusively note factual information on observations, symptoms, and diagnoses. This is because where ChatGPT draws its information from depends on the style of writing provided. If you try to be as dry and clinical as possible, and request links to studies, you should get dry and clinical information in return. This approach also serves to divorce yourself mentally from the information discussed, making it less likely you'll latch onto anything. Speaking casually will likely target unprofessional sources.

Do not ask for citations, ask for links to relevant articles. ChatGPT is capable of generating links to actual websites in its database, but if asked to provide citations, it will replicate the structure of academic citations, and will very likely hallucinate at least one piece of information. It also does not help that these citations also will often be for papers not publicly available and will not include links.

ChatGPT is at its core a language association and logical analysis software, so naturally its best purposes are for analyzing written works for tone, summarizing information, and providing examples of programming. It's partially coded in Python, so examples of Python and Java code I've tested come out 100% accurate. Complex Google Sheets formulas however are often finicky, as it often struggles with proper nesting orders of formulas.

Expanding off of that, if you think of the software as an input-output machine, you will get best results. Problems that do not have clear input information or clear solutions, such as open ended questions, will often net inconsistent and errant results.

Commands are better than questions when it comes to asking it to do something. If you think of it like programming, then it will respond like programming most of the time.

Most of all, do not engage it as a person. It's not a person, it's just an algorithm that is trained to mimic speech and is coded to respond in courteous, subservient responses. The less you try and get social interaction out of ChatGPT, the less likely it will be to just make shit up because it sounds right.

Anyway, TL;DR:

AI is just a tool and nothing more at its core. It is not synonymous with its worst uses, and is not going to disappear. Its worst offenders will not fold or change until legislation cracks down on it, and we, the majority users of the internet, are not its primary consumer. Use of AI to substitute art (written and visual) with blended up art of others is abhorrent, but use of a freely available algorithm for personal analytical use is relatively harmless so long as you aren't paying them.

We need to urge legislators the world over to crack down on the methods these companies are using to obtain their training data, but at the same time people need to understand that this technology IS useful and both can be and has been used for good. I urge people to understand that learning algorithms are not one and the same with theft just because the biggest ones available to the public have widely used theft to cut corners. So long as computers continue to exist, algorithmic problem-solving and generative algorithms are going to continue to exist as they are the logical conclusion of increasingly complex computer systems. Let's just make sure the future of the technology is not defined by the way things are now.

#kanguin original#ai#gen ai#generative algorithms#learning algorithms#llm#large language model#long post

7 notes

Text

Oh my goddd I'm going crazy. I've been trying to run this fucking model for weeks but it keeps giving me random and cryptic errors. I'm just exactly replicating methods from an existing paper so it should work. The worst thing is 1) IT WORKED AT ONE POINT but now if I run the exact same code with the exact same data it doesn't work anymore 2) if I run the materials provided by the authors of the paper, i.e. the exact methods that they tested and published, IT ALSO DOESN'T WORK.

Which probably means it's some software version issue but they did not provide info about which versions they used and ..I didn't change anything between when I ran it and it worked and now. So what if I just killed myself basically
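If it is a version issue, one cheap habit is to dump the versions of everything installed at the moment a run works, so there's something to diff against later. The sketch below assumes a Python environment (no idea what the paper actually used) and sticks to the standard library; `snapshot_environment` is a made-up helper name.

```python
import platform
import sys
from importlib import metadata


def snapshot_environment():
    """Return the interpreter version and every installed package version."""
    packages = {}
    for dist in metadata.distributions():
        name = dist.metadata.get("Name")
        if name:  # skip broken installs that report no name
            packages[name] = dist.version
    return {
        "python": platform.python_version(),
        "platform": sys.platform,
        "packages": dict(sorted(packages.items())),
    }


env = snapshot_environment()
print(f"python {env['python']} on {env['platform']}")
for name, version in env["packages"].items():
    print(f"{name}=={version}")
```

Saving that output next to the results of a working run makes it possible to tell whether "I didn't change anything" still holds after a silent package update.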

7 notes

Text

Disassembly Drone headcanons part 1: Make and model

WARNING: Discussion of gore, experimentation, AI-abuse, pregnancy horror and realistic predator behaviour. Please take care.

Section 1: Make and model

-To start with, Disassembly Drones (from here just DD for my sanity) were not made by a company. They had no QA period, and they had no long-term update timeline. The DD were made by a reality-warping non-omniscient creature that would have done better with purely biological creations. With access to unlimited resources of flesh, drone bodies and a 3D plastic and metal printer.

-Your basic DD or an older Solver Drone counts as a drone purely because they are still running software and not wetware. You could replace the core with a human brain and get a sufficient enough cyborg. Less the Terminator and more Andromon.

-Because their basic programming was edited by a flesh-morphing creature, Material Gathering Mode prioritizes flesh over metal. It can and will use metal, but it does better with flesh and bone.

-Due to this hack job of a build, the DDs should be buggy as all hell. However, fleshy parts are more forgiving than metal and gears. Still, the overheating started as a bug that the Solver used to motivate the DDs to hunt.

-Despite what Worker Drones on Copper 9 think, the DDs were originally made for human and war machine killing. They do equally well on blood+hearts as oil+metal.

-The DDs have semi-bionic musculature that they can somewhat train. Yes, they can have abs and other beefy features.

-The parts that are purely organic in a DD/older Solver Drone are the lungs, stomach, salivary glands and various veins. The rest is an uncomfortable mix of machine and flesh.

-Aside from the golden trio (J, V and N), the Solver randomized the animal brain scans for the rest of the DDs.

-Depending on the animal models, different DDs have different ways of pinging. Those with more robust long-distance communication like wolves and birds can send out sentences. Those with more limited communication typically stick to two-word pings.

-Their tails are as sensitive as your typical cat/dog/ferret tails, that is, not much until someone else touches them. The habit of keeping them up comes from the ever-present danger a door poses for them. Many DDs have kinked tails from having a heavy door shut on their tail and the healing nanites healing the tail in its twisted shape.

-The female-model DD legs are the result of the Solver wanting to streamline the DD production (read: it wanted to waste less time printing and putting it all together.) Two long stilts take far less time to build than ankles, feet, knees, thighs and shins.

Aside: Drone Reproduction.

Here's where the AI-abuse comes in! Basically, once drones were given sapient enough AI, there suddenly became a need to preserve and replicate that AI. While knowledge could be programmed in, there is a vast gap between pre-installed data and things learned on the job. While drones were not (relatively) expensive, losing an experienced drone was still a significant blow to production. Especially as any replacing drone would need to learn the ropes.

Thus, UNNs became a thing to preserve that hands-on knowledge. Plug your best drone to an UNN and in a few months you won't have to worry about losing them. Some even used it to 'upgrade' older models to newer ones.

Naturally, once the ability to 'breed' drones was created, it wasn't long before people began to plug two or more drones to an UNN to get the best traits into one drone. It not only became common but often expected that a working drone had a 'lineage.'

It also resulted in a lot of embarrassing arguments over ethics and inflammatory headlines.

How this applies to DDs: The Solver is sex repulsed, but it is also not a good enough programmer to undo centuries of careful programming. However, given the DDs are very fleshy, it is a coin toss if a DD can get away with a UNN or if they get to experience pregnancy horror.

#murder drones#md headcanons#disassembly drones#disassemblydrones#disassemblydrone#disassembly drone#meta#rambly ddw#preganancy horror //#body horror //#medical abuse //

11 notes

Text

Why is it crucial to replace Repliweb and Attunity File Replication Software by 2025?

0 notes

Text

At a press conference in the Oval Office this week, Elon Musk promised the actions of his so-called Department of Government Efficiency (DOGE) project would be “maximally transparent,” thanks to information posted to its website.

At the time of his comment, the DOGE website was empty. However, when the site finally came online Thursday morning, it turned out to be little more than a glorified feed of posts from the official DOGE account on Musk’s own X platform, raising new questions about Musk’s conflicts of interest in running DOGE.

DOGE.gov claims to be an “official website of the United States government,” but rather than giving detailed breakdowns of the cost savings and efficiencies Musk claims his project is making, the homepage of the site just replicated posts from the DOGE account on X.

A WIRED review of the page’s source code shows that the promotion of Musk’s own platform went deeper than replicating the posts on the homepage. The source code shows that the site’s canonical tags direct search engines to x.com rather than DOGE.gov.

A canonical tag is a snippet of code that tells search engines what the authoritative version of a website is. It is typically used by sites with multiple pages as a search engine optimization tactic, to avoid their search ranking being diluted.

In DOGE’s case, however, the code is informing search engines that when people search for content found on DOGE.gov, they should not show those pages in search results, but should instead display the posts on X.
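The kind of check described here can be sketched in a few lines of Python using only the standard library. The sample markup below is hypothetical and is not the actual source of DOGE.gov or any other site; the `CanonicalFinder` class name is likewise an illustrative choice.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical_urls = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        # rel may hold several space-separated values, so split before checking
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonical_urls.append(attributes["href"])


# Hypothetical page source, not the real markup of any site.
sample_html = """
<html>
  <head>
    <title>Example agency</title>
    <link rel="canonical" href="https://social.example/agency-account">
  </head>
  <body><p>Posts mirrored from a social feed.</p></body>
</html>
"""

finder = CanonicalFinder()
finder.feed(sample_html)
canonical_urls = finder.canonical_urls
print(canonical_urls)
```

A canonical URL on a different domain from the page being inspected, as in this made-up sample, is the pattern the review describes: the page tells search engines that the authoritative copy lives somewhere else.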

“It is promoting the X account as the main source, with the website secondary,” Declan Chidlow, a web developer, tells WIRED. “This isn't usually how things are handled, and it indicates that the X account is taking priority over the actual website itself.”

All the other US government websites WIRED checked used their own homepage in their canonical tags, including the official White House website. Additionally, when sharing the DOGE website on mobile devices, the source code creates a link to the DOGE X account rather than the website itself.

“It seems that the DOGE website is secondary, and they are prodding people in the direction of the X account everywhere they can,” Chidlow adds.

Alongside the homepage feed of X posts, a section of Doge.gov labeled “Savings” now appears. So far the page is empty except for a single line that reads: “Receipts coming soon, no later than Valentine's day,” followed by a heart emoji.

A section entitled “Workforce” features some bar charts showing how many people work in each government agency, with the information coming from data gathered by the Office of Personnel Management in March 2024.

A disclaimer at the bottom of the page reads: “This is DOGE's effort to create a comprehensive, government-wide org chart. This is an enormous effort, and there are likely some errors or omissions. We will continue to strive for maximum accuracy over time.”

Another section, entitled “Regulations,” features what DOGE calls the “Unconstitutionality Index,” which it describes as “the number of agency rules created by unelected bureaucrats for each law passed by Congress in 2024.”

The charts in this section are also based on data previously collected by US government agencies. Doge.gov also links to a Forbes article from last month that was written by Clyde Wayne Crews, a member of the Heartland Institute, a conservative think tank that pushed climate change disinformation and questioned the links between tobacco and lung cancer. It is also a major advocate for privatizing government departments.

The site also features a “Join” page which allows prospective DOGE employees to apply for roles including “software engineers, InfoSec engineers, and other technology professionals.” As well as requesting a Github account and résumé, the form asks visitors to “provide 2-3 bullet points showcasing exceptional ability.”

The website does not list a developer, but on Wednesday, web application security expert Sam Curry outlined in a thread on X how he was able to identify the developer of the site as DOGE employee Kyle Shutt.

Curry claims he was able to link a Cloudflare account ID found in the site’s source code to Shutt, who used the same account when developing Musk’s America PAC website.

On Thursday, Drop Site News reported, citing sources within FEMA, that Shutt had gained access to the agency’s proprietary software controlling payments. Earlier this week, Business Insider reported that Shutt, who recently worked at an AI interviewing software company, was listed as one of 30 people working for DOGE.

Neither Shutt, DOGE, nor the White House responded to requests for comment.

10 notes

Note

What would actually be a fix for the quirk singularity theory, if the concept is as quirks get stronger they’re gonna hit a point where they’re too strong and toddlers with them can and will randomly cause mass destruction and devastation because they can’t control them. What can you do that isn’t just removing people’s quirks at a young age when they can’t control them? Decay may have been an artificial derivative from overhaul but overhaul as far as we know is naturally occurring as are new order and rewind so we know the scale of power for quirks can get pretty ridiculous.

(Kind of a continuation of my talk here. See here and here for other times I discussed the Singularity too.)

Well presumably science could’ve been a solution by going the Garaki route instead of the Overhaul route; improve the hardware rather than delete the software. Dr. Garaki had managed it once after all; developed that treatment that, among other things, was supposed to make Shigaraki effectively immune to the singularity and let him control post-singularity quirks.

In theory that part might’ve been replicable without the other changes (like the natural super strength and of course the AFO quirk…although afaik the finger thing might’ve been non-negotiable) if Shigaraki’s body could have been studied and his treatment reverse engineered. That option is probably off the table now though, so science would have to start from scratch and I honestly don’t know if they have the time for it; Rewind is supposedly a genuine, if early, post-Singularity quirk, so they’re already popping up.

That said, that’s the assessment with the data we have now. Hori could easily wrap up that plot line with some new information a la “Tomura’s soul damage means his body can’t contain OFA no more, and he can be shattered in two hard hits.” Maybe we’ll learn Garaki was just wrong, and most of humanity will just naturally evolve their ‘hardware’ to keep up with quirks so the Singularity was never a problem to begin with. Or maybe we'll learn that, despite what we were told about the singularity being treated as a conspiracy theory, the scientific community has actually cared a lot about it, been hard at work on a solution, and'll have something ready long before things get bad. I mean there probably are more elegant solutions in theory, but that’s the kind of hand-wave I’m most worried about us seeing if BNHA is ending in 5 chapters and has plenty of plot lines that are far higher priority to focus on.

24 notes

Text

We Are Legion (We Are Bob) by Dennis E. Taylor

Synopsis: This novel is about Robert Johansson and the future he didn't expect to have. Bob was thrilled to be part of the cryogenic program that would freeze his brain upon death for reanimation in a more technologically advanced future; he just didn't expect to wake up suddenly a century later as a digitized version of himself. But with the emotional settings turned off, he's able to take all of it in stride. Especially when he's suddenly jettisoned from the planet on a mission that will hopefully save humanity from itself.

My Quibs: This is my kind of book - heavily nerdy without being overbearing about it, lightly philosophical without being too biased, cheeky, and a bit self-deprecating. Granted, don't take this book very seriously. It's more like a stupid sit-com you turn on for some entertainment. Firstly with the characters: there really is only one character replicated a hundred times over, who is also our tour guide and narrator. The author is a self-proclaimed software engineer (much like Andy Weir who wrote The Martian) and so the character development is pretty standard: Bob is a self-assured but self-aware human who thinks his dad jokes are funny. I think he's fun to ride with but another reviewer described him as obnoxious. I could see that to be true if you think giggling at nerd references is obnoxious. It's true, neither Taylor nor Bob is witty, but we can both be five-year-olds pointing and laughing at a stupid fish face. That's who you're stuck with for 500 pages. [Edit: Having started the second book in the series, I must say that I fully agree with how tedious his humor is now. As an adult, it's fun to play on the same level as a five-year-old but after a couple hours, I really want to have an adult conversation with an adult again.] Secondly with the world-building, or should I say universe-building: he paints an interesting scenario and then proceeds to play his own version of make-believe. Again, another reviewer commented that Bob gets lucky a lot but I didn't focus so much on how Bob solved the problem. I was more interested in the problems that Taylor created. How would you react if you woke up the next day and you were a bunch of data in the cloud? That everyone and everything you knew had been long gone for a century? 🤔 Bob realizes his "endocrine simulation routine" is disabled and basically represses his emotions for the first several chapters and when he does choose to turn it on, we don't see his reactions/process. Which is fine by me.
I'm more curious how I would write that story if my character was given this situation. How would you feel if the only way to fulfill a functional necessity and social desire was to make a version of yourself? 😬 Bob's lucky that he got along with most versions of himself and that those he didn't get along with could leave for other parts of the universe. I suppose this concept ended up being more like a parent-child relationship than a self-reflection idea, but if I were to write it... Anyways, I thoroughly enjoyed the thought exercise that was We Are Legion and I'm looking forward to other hypothetical situations that Bob gets himself into. [Edit: I made it about a fifth of the way into For We Are Many and Taylor doesn't really open up any new themes or scenarios. It's starting to read more like rambling, except that because he has so many more new Bobs to cram in, each Bob chapter is only four or five pages long. I feel like I'm just reading reports from coworkers. This will be a DNF unfortunately.]

Should you read it? It’s like a beach read for nerds. (But just the first one...)

Similar reads? As another reader mentioned, this is clearly in the genre of Smart Guy Talks Nerd Directly To You à la The Martian by Andy Weir and Ready Player One by Ernest Cline.

(Spoiler Alert!) I went into the story 100% blind and so I didn't want to list more thought questions before the spoiler warning in case others wanted some plot points to still be a surprise. For instance, after Bob reaches his initial destination, the book really branches out into several stories. Normally I would struggle with tracking multiple unrelated story lines, but luckily the writing is simple enough that it wasn't as difficult as I expected. 1. Bob goes back to Earth to see if humanity is okay. 2. Bob goes further and discovers a planet with sentient life on it. 3. Bob goes further and finds viable uninhabited planets. 4. Bob goes further and encounters a hostile probe that was launched from a different country. #3 and #4 didn't really interest me so much. But #1 and #2, while really exposing the god-complex of nerds and scientists, still posed an interesting question. How would you handle dictating to the remaining world population as its least-biased savior? Would you know how to mediate a group of desperate world leaders? And this is my bias here, but I like seeing science-driven civilians being forced into a military-esque command position. (It also happens in Stargate: Atlantis.) And finally the other question: Could you be a passive observer of nature? Should you be? If you were motivated to take action, how far would you be willing to intervene? This reminds me of a BTS clip of Planet Earth where the film crew in Antarctica, who normally promise not to intervene in any natural situation, ultimately did help a young penguin out of a hole in the ice. I guess it had fallen in and would've been left behind to starve but the crew, after weighing the pros and cons, decided to quickly pop the penguin out of the hole. There are other examples in Planet Earth where a small mistake like that will ultimately kill an animal (I think an elephant wandered off and got lost and was presumed to ultimately die) and it's portrayed as a consequence of life.
But this time, they intervened. Bob's study of the Deltans reminded me of that again. Taylor exaggerates everything ten times over for the sake of literary drama, but the question still stands.

What did you think of We Are Legion (We Are Bob)?

8 notes

Text

Day 61 (2/2)

Zero Dawn Facility

Herres began with a revelation: nothing could save the world from the Faro plague. He went on to detail the projected rapid explosion of their population as they continued to self-replicate, consuming every living thing on the planet and degrading conditions until the air was poison and the lands uninhabitable. There was no hiding from the swarm; they would leave a barren earth behind them and at the first sign of returning life, they would wake from slumber and fuel themselves with whatever they could find. It would take decades to generate the swarm's deactivation codes, but the world had barely more than a year.

Operation Enduring Victory was a lie from the very beginning. There was no hope and there never had been. From the moment Elisabet reviewed Faro's data in Maker's End, she must have known. That explains why the War Chiefs at US Robot Command were so horrified with the idea of Enduring Victory. All they were doing—all those people standing between the swarm and the next city, fed false hope—was buying time for project Zero Dawn.

Then he revealed Elisabet's image, a small glimmer of hope. She was to explain the true purpose of the project in the next theatre. It didn't make any sense—if none of the Old Ones survived the swarm, then how did life continue?

I was right about the Shadow Carja. It seemed that the vents opening gave them other routes down. The kestrels were ignorant of the ruin's true nature, but I knew that once the Eclipse caught wind of the breach, they were certain to start salvaging data for Hades. I couldn't let them find Zero Dawn's secrets before I did.

I took a few of the kestrels out undetected before picking up one of their Firespitters and dispatching the rest.

The rooms were full of recorded interviews with various Zero Dawn 'candidates'. They were scholars, all from disparate fields of expertise. Experts in art and history, in environmental conservation, in robotics and engineering and artificial intelligence. There was a particularly colourful character named Travis Tate who I gathered was some sort of criminal. All of them were requested by Elisabet herself, and all of them had very different reactions to learning that all hope was lost. Anger, resignation, grief, confusion. Many questioned whether the swarm was truly unstoppable, who was culpable (a man named Tom Paech was out for Faro's blood), and why they of all people were chosen for the project.