#database schema exploration

Searching for a Specific Table Column Across All Databases in SQL Server

To find all tables that contain a column with a specified name in a SQL Server database, you can use the INFORMATION_SCHEMA.COLUMNS view. This view contains information about each column in the database, including the table name and the column name. Here’s a SQL query that searches for all tables containing a column named YourColumnName: SELECT TABLE_SCHEMA, TABLE_NAME FROM…
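The query in the excerpt above is cut off, so the following is only a minimal sketch of the same idea, not the post's own query. It assumes a DB-API cursor that uses `?` placeholders (as pyodbc does); the function name `find_tables_with_column` and the `ORDER BY` clause are illustrative additions.

```python
from typing import Any, List, Tuple

# INFORMATION_SCHEMA.COLUMNS is scoped to the current database, so this
# only finds matches in the database the connection is using.
FIND_COLUMN_SQL = """
SELECT TABLE_SCHEMA, TABLE_NAME
FROM INFORMATION_SCHEMA.COLUMNS
WHERE COLUMN_NAME = ?
ORDER BY TABLE_SCHEMA, TABLE_NAME
"""


def find_tables_with_column(cursor: Any, column_name: str) -> List[Tuple[str, str]]:
    """Return (schema, table) pairs for tables that have a column with this name.

    `cursor` is assumed to be a DB-API cursor with qmark-style parameters.
    """
    # Pass the column name as a parameter rather than formatting it into the SQL.
    cursor.execute(FIND_COLUMN_SQL, (column_name,))
    return [(row[0], row[1]) for row in cursor.fetchall()]
```

Because the view covers one database at a time, a search across every database on the server would need to repeat this query per database, for example by looping over the names in `sys.databases` and switching context for each one.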

#cross-database query#database schema exploration#Dynamic SQL execution#find column SQL#Results#SQL Server column search

Graph Analytics Edge Computing: Supply Chain IoT Integration

By a veteran graph analytics practitioner with decades of experience navigating enterprise implementations

Introduction

Graph analytics has emerged as a transformative technology for enterprises, especially in complex domains like supply chain management where relationships and dependencies abound. However, the journey from concept to production-grade enterprise graph analytics can be fraught with challenges.

In this article, we'll dissect common enterprise graph analytics failures and enterprise graph implementation mistakes, evaluate supply chain optimization through graph databases, explore strategies for petabyte-scale graph analytics, and demystify ROI calculations for graph analytics investments. Along the way, we’ll draw comparisons between leading platforms such as IBM graph analytics vs Neo4j and Amazon Neptune vs IBM graph, illuminating performance nuances and cost considerations at scale.

Why Do Enterprise Graph Analytics Projects Fail?

The graph database project failure rate is surprisingly high despite the hype. Understanding why graph analytics projects fail is critical to avoid repeating the same mistakes:

Poor graph schema design and modeling mistakes: Many teams jump into implementation without a well-thought-out enterprise graph schema design. Improper schema leads to inefficient queries and maintenance nightmares.

Underestimating data volume and complexity: Petabyte scale datasets introduce unique challenges in graph traversal performance optimization and query tuning.

Inadequate query performance optimization: Slow graph database queries can cripple user adoption and ROI.

Choosing the wrong platform: Mismatched technology selection, such as ignoring key differences in IBM graph database performance vs Neo4j, or between Amazon Neptune vs IBM graph, can lead to scalability and cost overruns.

Insufficient integration with existing enterprise systems: Graph analytics must seamlessly integrate with IoT edge computing, ERP, and supply chain platforms.

Lack of clear business value definition: Without explicit enterprise graph analytics ROI goals, projects become academic exercises rather than profitable initiatives.

These common pitfalls highlight the importance of thorough planning, vendor evaluation, and realistic benchmarking before embarking on large-scale graph analytics projects.

https://community.ibm.com/community/user/blogs/anton-lucanus/2025/05/25/petabyte-scale-supply-chains-graph-analytics-on-ib

Supply Chain Optimization with Graph Databases

Supply chains are inherently graph-structured: suppliers, manufa

Wielding Big Data Using PySpark

Introduction to PySpark

PySpark is the Python API for Apache Spark, a distributed computing framework designed to process large-scale data efficiently. It enables parallel data processing across multiple nodes, making it a powerful tool for handling massive datasets.

Why Use PySpark for Big Data?

Scalability: Works across clusters to process petabytes of data.

Speed: Uses in-memory computation to enhance performance.

Flexibility: Supports various data formats and integrates with other big data tools.

Ease of Use: Provides SQL-like querying and DataFrame operations for intuitive data handling.

Setting Up PySpark

To use PySpark, you need to install it and set up a Spark session. Once initialized, Spark allows users to read, process, and analyze large datasets.

Processing Data with PySpark

PySpark can handle different types of data sources such as CSV, JSON, Parquet, and databases. Once data is loaded, users can explore it by checking the schema, summary statistics, and unique values.

Common Data Processing Tasks

Viewing and summarizing datasets.

Handling missing values by dropping or replacing them.

Removing duplicate records.

Filtering, grouping, and sorting data for meaningful insights.

Transforming Data with PySpark

Data can be transformed using SQL-like queries or DataFrame operations. Users can:

Select specific columns for analysis.

Apply conditions to filter out unwanted records.

Group data to find patterns and trends.

Add new calculated columns based on existing data.

Optimizing Performance in PySpark

When working with big data, optimizing performance is crucial. Some strategies include:

Partitioning: Distributing data across multiple partitions for parallel processing.

Caching: Storing intermediate results in memory to speed up repeated computations.

Broadcast Joins: Optimizing joins by broadcasting smaller datasets to all nodes.

Machine Learning with PySpark

PySpark includes MLlib, a machine learning library for big data. It allows users to prepare data, apply machine learning models, and generate predictions. This is useful for tasks such as regression, classification, clustering, and recommendation systems.

Running PySpark on a Cluster

PySpark can run on a single machine or be deployed on a cluster managed by a resource manager such as Hadoop YARN, Kubernetes, or Spark's standalone mode. Distributing the work across nodes is what lets it process datasets that are too large for one machine.

Conclusion

PySpark provides a powerful platform for handling big data efficiently. With its distributed computing capabilities, it allows users to clean, transform, and analyze large datasets while optimizing performance for scalability.

For free programming tutorials, visit https://www.tpointtech.com/

Business Potential with Data Lake Implementation: A Guide by an Analytics Consulting Company

In today’s data-driven world, businesses are inundated with massive amounts of data generated every second. The challenge lies not only in managing this data but also in extracting valuable insights from it to drive business growth. This is where a data lake comes into play. As an Analytics Consulting Company, we understand the importance of implementing a robust data lake solution to help businesses harness the power of their data.

What is a Data Lake?

A data lake is a centralized repository that allows organizations to store all their structured and unstructured data at any scale. Unlike traditional databases, which are often limited by structure and schema, a data lake can accommodate raw data in its native format. This flexibility allows for greater data exploration and analytics capabilities, making it a crucial component of modern data management strategies.

The Importance of Data Lake Implementation

For businesses, implementing a data lake is not just about storing data—it's about creating a foundation for advanced analytics, machine learning, and artificial intelligence. By capturing and storing data from various sources, a data lake enables businesses to analyze historical and real-time data, uncovering hidden patterns and trends that drive strategic decision-making.

An Analytics Consulting Company like ours specializes in designing and implementing data lake solutions tailored to the unique needs of each business. With a well-structured data lake, companies can break down data silos, improve data accessibility, and ultimately, gain a competitive edge in the market.

Key Benefits of Data Lake Implementation

Scalability: One of the most significant advantages of a data lake is its ability to scale with your business. Whether you're dealing with terabytes or petabytes of data, a data lake can handle it all, ensuring that your data storage needs are met as your business grows.

Cost-Effectiveness: Traditional data storage solutions can be expensive, especially when dealing with large volumes of data. A data lake, however, offers a cost-effective alternative by using low-cost storage options. This allows businesses to store vast amounts of data without breaking the bank.

Flexibility: Data lakes are highly flexible, supporting various data types, including structured, semi-structured, and unstructured data. This flexibility enables businesses to store data in its raw form, which can be processed and analyzed as needed, without the constraints of a predefined schema.

Advanced Analytics: With a data lake, businesses can leverage advanced analytics tools to analyze large datasets, perform predictive analytics, and build machine learning models. This leads to deeper insights and more informed decision-making.

Improved Data Accessibility: A well-implemented data lake ensures that data is easily accessible to stakeholders across the organization. This democratization of data allows for better collaboration and faster innovation, as teams can quickly access and analyze the data they need.

Challenges in Data Lake Implementation

While the benefits of a data lake are clear, implementing one is not without its challenges. Businesses must navigate issues such as data governance, data quality, and security to ensure the success of their data lake.

As an experienced Analytics Consulting Company, we recognize the importance of addressing these challenges head-on. By implementing best practices in data governance, we help businesses maintain data quality and security while ensuring compliance with industry regulations.

Data Governance in Data Lake Implementation

Data governance is critical to the success of any data lake implementation. Without proper governance, businesses risk creating a "data swamp"—a data lake filled with disorganized, low-quality data that is difficult to analyze.

To prevent this, our Analytics Consulting Company focuses on establishing clear data governance policies that define data ownership, data quality standards, and data access controls. By implementing these policies, we ensure that the data lake remains a valuable asset, providing accurate and reliable insights for decision-making.

Security in Data Lake Implementation

With the increasing volume of data stored in a data lake, security becomes a top priority. Protecting sensitive information from unauthorized access and ensuring data privacy is essential.

Our Analytics Consulting Company takes a proactive approach to data security, implementing encryption, access controls, and monitoring to safeguard the data stored in the lake. We also ensure that the data lake complies with relevant data protection regulations, such as GDPR and HIPAA, to protect both the business and its customers.

The Role of an Analytics Consulting Company in Data Lake Implementation

Implementing a data lake is a complex process that requires careful planning, execution, and ongoing management. As an Analytics Consulting Company, we offer a comprehensive range of services to support businesses throughout the entire data lake implementation journey.

Assessment and Strategy Development: We begin by assessing the current data landscape and identifying the specific needs of the business. Based on this assessment, we develop a tailored data lake implementation strategy that aligns with the company’s goals.

Architecture Design: Designing the architecture of the data lake is a critical step. We ensure that the architecture is scalable, flexible, and secure, providing a strong foundation for data storage and analytics.

Implementation and Integration: Our team of experts handles the implementation process, ensuring that the data lake is seamlessly integrated with existing systems and workflows. We also manage the migration of data into the lake, ensuring that data is ingested correctly and efficiently.

Data Governance and Security: We establish robust data governance and security measures to protect the integrity and confidentiality of the data stored in the lake. This includes implementing data quality checks, access controls, and encryption.

Ongoing Support and Optimization: After the data lake is implemented, we provide ongoing support to ensure its continued success. This includes monitoring performance, optimizing storage and processing, and making adjustments as needed to accommodate changing business needs.

Conclusion

In an era where data is a key driver of business success, implementing a data lake is a strategic investment that can unlock significant value. By partnering with an experienced Analytics Consulting Company, businesses can overcome the challenges of data lake implementation and harness the full potential of their data.

With the right strategy, architecture, and governance in place, a data lake becomes more than just a storage solution—it becomes a powerful tool for driving innovation, improving decision-making, and gaining a competitive edge.

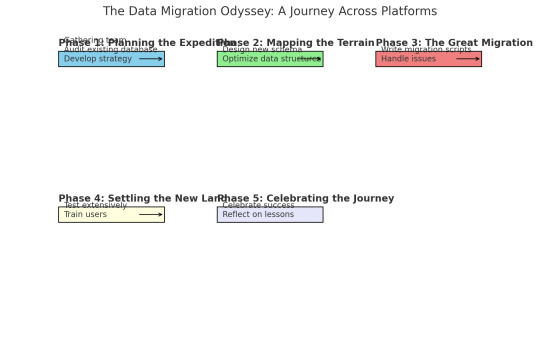

The Data Migration Odyssey: A Journey Across Platforms

As a database engineer, I thought I'd seen it all—until our company decided to migrate our entire database system to a new platform. What followed was an epic adventure filled with unexpected challenges, learning experiences, and a dash of heroism.

It all started on a typical Monday morning when my boss, the same stern woman with a flair for the dramatic, called me into her office. "Rookie," she began (despite my years of experience, the nickname had stuck), "we're moving to a new database platform. I need you to lead the migration."

I blinked. Migrating a database wasn't just about copying data from one place to another; it was like moving an entire city across the ocean. But I was ready for the challenge.

Phase 1: Planning the Expedition

First, I gathered my team and we started planning. We needed to understand the differences between the old and new systems, identify potential pitfalls, and develop a detailed migration strategy. It was like preparing for an expedition into uncharted territory.

We started by conducting a thorough audit of our existing database. This involved cataloging all tables, relationships, stored procedures, and triggers. We also reviewed performance metrics to identify any existing bottlenecks that could be addressed during the migration.

Phase 2: Mapping the Terrain

Next, we designed the new database schema using dynobird's online schema builder. This was more than a simple translation; we took the opportunity to optimize our data structures and improve performance. It was like drafting a new map for our city, making sure every street and building was perfectly placed.

For example, our old database had a massive "orders" table that was a frequent source of slow queries. In the new schema, we split this table into more manageable segments, each optimized for specific types of queries.

Phase 3: The Great Migration

With our map in hand, it was time to start the migration. We wrote scripts to transfer data in batches, ensuring that we could monitor progress and handle any issues that arose. This step felt like loading up our ships and setting sail.
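The story doesn't show the actual scripts, so here is only a minimal sketch of what a batched transfer can look like. It uses two in-memory SQLite databases as stand-ins for the old and new platforms; the `orders` columns, the batch size, and the function name `migrate_in_batches` are all hypothetical.

```python
import sqlite3


def migrate_in_batches(src, dst, batch_size=2):
    """Copy rows from src.orders to dst.orders in fixed-size batches.

    Returns the number of batches written.
    """
    cur = src.execute("SELECT id, customer, total FROM orders ORDER BY id")
    batches = 0
    while True:
        rows = cur.fetchmany(batch_size)
        if not rows:
            break
        # Commit each batch on success, roll it back on error, so a failure
        # never leaves a half-written batch in the target.
        with dst:
            dst.executemany(
                "INSERT INTO orders (id, customer, total) VALUES (?, ?, ?)", rows
            )
        batches += 1
        print(f"batch {batches}: copied {len(rows)} rows")
    return batches


# Stand-in source and target databases with the same table layout.
src = sqlite3.connect(":memory:")
dst = sqlite3.connect(":memory:")
for conn in (src, dst):
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
src.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "a", 10.0), (2, "b", 20.0), (3, "c", 5.5), (4, "d", 7.0), (5, "e", 1.0)],
)
src.commit()

batches_run = migrate_in_batches(src, dst, batch_size=2)
```

Working in batches keeps each transaction small and gives a natural place to log progress, which is what makes it possible to monitor the run and resume after a failure.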

Of course, no epic journey is without its storms. We encountered data inconsistencies, unexpected compatibility issues, and performance hiccups. One particularly memorable moment was when we discovered a legacy system that had been quietly duplicating records for years. Fixing that felt like battling a sea monster, but we prevailed.

Phase 4: Settling the New Land

Once the data was successfully transferred, we focused on testing. We ran extensive queries, stress tests, and performance benchmarks to ensure everything was running smoothly. This was our version of exploring the new land and making sure it was fit for habitation.

We also trained our users on the new system, helping them adapt to the changes and take full advantage of the new features. Seeing their excitement and relief was like watching settlers build their new homes.

Phase 5: Celebrating the Journey

After weeks of hard work, the migration was complete. The new database was faster, more reliable, and easier to maintain. My boss, who had been closely following our progress, finally cracked a smile. "Excellent job, rookie," she said. "You've done it again."

To celebrate, she took the team out for a well-deserved dinner. As we clinked our glasses, I felt a deep sense of accomplishment. We had navigated a complex migration, overcome countless challenges, and emerged victorious.

Lessons Learned

Looking back, I realized that successful data migration requires careful planning, a deep understanding of both the old and new systems, and a willingness to tackle unexpected challenges head-on. It's a journey that tests your skills and resilience, but the rewards are well worth it.

So, if you ever find yourself leading a database migration, remember: plan meticulously, adapt to the challenges, and trust in your team's expertise. And don't forget to celebrate your successes along the way. You've earned it!

Let’s explore how to build a full-stack application using Node.js. In this guide, we’ll cover the essential components and steps involved in creating a full-stack web application.

Building a Full-Stack Application with Node.js, Express, and MongoDB

1. Node.js: The Backbone of Our Application

Node.js is a runtime environment that allows us to run JavaScript on the server-side.

It’s built on Chrome’s V8 JavaScript engine and uses an event-driven, non-blocking I/O model, making it lightweight and efficient.

Node.js serves as the backbone of our application, providing the environment in which our server-side code will run.

2. Express.js: Simplifying Server-Side Development

Express.js is a minimal and flexible Node.js web application framework.

It provides a robust set of features for building web and mobile applications.

With Express.js, we can:

Set up middleware to respond to HTTP requests.

Define routing rules.

Add additional features like template engines.

3. MongoDB: Storing Our Data

MongoDB is a document-oriented database program.

It uses JSON-like documents with optional schemas and is known for its flexibility and scalability.

We’ll use MongoDB to store our application’s data in an accessible and writable format.

Building Our Full-Stack Application: A Step-by-Step Guide

Setting Up the Environment:

Install Node.js (on Debian or Ubuntu):

```shell
sudo apt install nodejs
```

Initialize a new Node.js project:

```shell
mkdir myapp && cd myapp
npm init -y
```

Install Express.js:

```shell
npm install express
```

Creating the Server:

Create a basic Express server:

```javascript
const express = require('express');
const app = express();
const port = 3000;

// Respond to GET / with a plain-text greeting
app.get('/', (req, res) => {
  res.send('Hello World!');
});

// Start listening for HTTP requests
app.listen(port, () => {
  console.log(`Server running at http://localhost:${port}`);
});
```

Defining Routes:

Define routes for different parts of our application:

```javascript
app.get('/user', (req, res) => {
  res.send('User Page');
});
```

Connecting to MongoDB:

Use Mongoose (a MongoDB object modeling tool) to connect to MongoDB and handle data storage.

Remember, this is just the beginning! Full-stack development involves frontend (client-side) work as well. You can use React, Angular, or other frontend libraries to build the user interface and connect it to your backend (Node.js and Express).

Feel free to explore more about each component and dive deeper into building your full-stack application! 😊

The Dynamic Role of Full Stack Developers in Modern Software Development

Introduction: In the rapidly evolving landscape of software development, full stack developers have emerged as indispensable assets, seamlessly bridging the gap between front-end and back-end development. Their versatility and expertise enable them to oversee the entire software development lifecycle, from conception to deployment. In this insightful exploration, we'll delve into the multifaceted responsibilities of full stack developers and uncover their pivotal role in crafting innovative and user-centric web applications.

Understanding the Versatility of Full Stack Developers:

Full stack developers serve as the linchpins of software development teams, blending their proficiency in front-end and back-end technologies to create cohesive and scalable solutions. Let's explore the diverse responsibilities that define their role:

End-to-End Development Mastery: At the core of full stack development lies the ability to navigate the entire software development lifecycle with finesse. Full stack developers possess a comprehensive understanding of both front-end and back-end technologies, empowering them to conceptualize, design, implement, and deploy web applications with efficiency and precision.

Front-End Expertise: On the front-end, full stack developers are entrusted with crafting engaging and intuitive user interfaces that captivate audiences. Leveraging their command of HTML, CSS, and JavaScript, they breathe life into designs, ensuring seamless navigation and an exceptional user experience across devices and platforms.

Back-End Proficiency: In the realm of back-end development, full stack developers focus on architecting the robust infrastructure that powers web applications. They leverage server-side languages and frameworks such as Node.js, Python, or Ruby on Rails to handle data storage, processing, and authentication, laying the groundwork for scalable and resilient applications.

Database Management Acumen: Full stack developers excel in database management, designing efficient schemas, optimizing queries, and safeguarding data integrity. Whether working with relational databases like MySQL or NoSQL databases like MongoDB, they implement storage solutions that align with the application's requirements and performance goals.

API Development Ingenuity: APIs serve as the conduits that facilitate seamless communication between different components of a web application. Full stack developers are adept at designing and implementing RESTful or GraphQL APIs, enabling frictionless data exchange between the front-end and back-end systems.

Testing and Quality Assurance Excellence: Quality assurance is paramount in software development, and full stack developers take on the responsibility of testing and debugging web applications. They devise and execute comprehensive testing strategies, identifying and resolving issues to ensure the application meets stringent performance and reliability standards.

Deployment and Maintenance Leadership: As the custodians of web applications, full stack developers oversee deployment to production environments and ongoing maintenance. They monitor performance metrics, address security vulnerabilities, and implement updates and enhancements to ensure the application remains robust, secure, and responsive to user needs.

Conclusion: Full stack developers embody versatility and innovation in modern software development. Their ability to work across both front-end and back-end technologies enables them to craft sophisticated, user-centric web applications that drive business growth and enhance user experiences. As technology continues to evolve, full stack developers will remain at the forefront of digital innovation, shaping the future of software development with their ingenuity and expertise.

#full stack course#full stack developer#full stack software developer#full stack training#full stack web development

Software Development Process—Definition, Stages, and Methodologies

In the rapidly evolving digital era, software applications are the backbone of business operations, consumer services, and everyday convenience. Behind every high-performing app or platform lies a structured, strategic, and iterative software development process. This process isn't just about writing code—it's about delivering a solution that meets specific goals and user needs.

This blog explores the definition, key stages, and methodologies used in software development—providing you a clear understanding of how digital solutions are brought to life and why choosing the right software development company matters.

What is the software development process?

The software development process is a series of structured steps followed to design, develop, test, and deploy software applications. It encompasses everything from initial idea brainstorming to final deployment and post-launch maintenance.

It ensures that the software meets user requirements, stays within budget, and is delivered on time while maintaining high quality and performance standards.

Key Stages in the Software Development Process

While models may vary based on methodology, the core stages remain consistent:

1. Requirement Analysis

At this stage, the development team gathers and documents all requirements from stakeholders. It involves understanding:

Business goals

User needs

Functional and non-functional requirements

Technical specifications

Tools such as interviews, surveys, and use-case diagrams help in gathering detailed insights.

2. Planning

Planning is crucial for risk mitigation, cost estimation, and setting timelines. It involves:

Project scope definition

Resource allocation

Scheduling deliverables

Risk analysis

A solid plan keeps the team aligned and ensures smooth execution.

3. System Design

Based on requirements and planning, system architects create a blueprint. This includes:

UI/UX design

Database schema

System architecture

APIs and third-party integrations

The design must balance aesthetics, performance, and functionality.

4. Development (Coding)

Now comes the actual building. Developers write the code using chosen technologies and frameworks. This stage may involve:

Front-end and back-end development

API creation

Integration with databases and other systems

Version control tools like Git ensure collaborative and efficient coding.

5. Testing

Testing aims to catch defects before release and to confirm that the software performs well under various scenarios. Types of testing include:

Unit Testing

Integration Testing

System Testing

User Acceptance Testing (UAT)

QA teams identify and document bugs for developers to fix before release.

6. Deployment

Once tested, the software is deployed to a live environment. This may include:

Production server setup

Launch strategy

Initial user onboarding

Deployment tools like Docker or Jenkins automate parts of this stage to ensure smooth releases.

7. Maintenance & Support

After release, developers provide regular updates and bug fixes. This stage includes:

Performance monitoring

Addressing security vulnerabilities

Feature upgrades

Ongoing maintenance is essential for long-term user satisfaction.

Popular Software Development Methodologies

The approach you choose significantly impacts how flexible, fast, or structured your development process will be. Here are the leading methodologies used by modern software development companies:

🔹 Waterfall Model

A linear, sequential approach where each phase must be completed before the next begins. Best for:

Projects with clear, fixed requirements

Government or enterprise applications

Pros:

Easy to manage and document

Straightforward for small projects

Cons:

Not flexible for changes

Late testing could delay bug detection

🔹 Agile Methodology

Agile breaks the project into smaller iterations, or sprints, typically 2–4 weeks long. Features are developed incrementally, allowing for flexibility and client feedback.

Pros:

High adaptability to change

Faster delivery of features

Continuous feedback

Cons:

Requires high team collaboration

Difficult to predict final cost and timeline

🔹 Scrum Framework

A subset of Agile, Scrum includes roles like Scrum Master and Product Owner. Work is done in sprint cycles with daily stand-up meetings.

Best For:

Complex, evolving projects

Cross-functional teams

🔹 DevOps

Combines development and operations to automate and integrate the software delivery process. It emphasizes:

Continuous integration

Continuous delivery (CI/CD)

Infrastructure as code

Pros:

Faster time-to-market

Reduced deployment failures

🔹 Lean Development

Lean focuses on minimizing waste while maximizing productivity. Ideal for startups or teams on a tight budget.

Principles include:

Empowering the team

Delivering as fast as possible

Building integrity in

Why Partnering with a Professional Software Development Company Matters

No matter how refined your idea is, turning it into a working software product requires deep expertise. A reliable software development company can guide you through every stage with:

Technical expertise: They offer full-stack developers, UI/UX designers, and QA professionals.

Industry knowledge: They understand market trends and can tailor solutions accordingly.

Agility and flexibility: They adapt to changes and deliver incremental value quickly.

Post-deployment support: From performance monitoring to feature updates, support never ends.

Partnering with professionals ensures your software is scalable, secure, and built to last.

Conclusion: Build Smarter with a Strategic Software Development Process

The software development process is a strategic blend of analysis, planning, designing, coding, testing, and deployment. Choosing the right development methodology—and more importantly, the right partner—can make the difference between success and failure.

Whether you're developing a mobile app, enterprise software, or SaaS product, working with a reputed software development company will ensure your vision is executed flawlessly and efficiently.

📞 Ready to build your next software product? Connect with an expert software development company today and turn your idea into an innovation-driven reality!

How Can I Use Programmatic SEO to Launch a Niche Content Site?

Launching a niche content site can be both exciting and rewarding, especially when it's done with a smart strategy like programmatic SEO. Whether you're targeting a hyper-specific audience or aiming to dominate long-tail keywords, programmatic SEO can give you an edge by scaling your content without sacrificing quality. If you're looking to build a site that ranks fast and drives passive traffic, this is a strategy worth exploring. And if you're unsure where to start, a professional SEO agency in Markham can help bring your vision to life.

What Is Programmatic SEO?

Programmatic SEO involves using automated tools and data to create large volumes of optimized pages—typically targeting long-tail keyword variations. Instead of manually writing each piece of content, programmatic SEO leverages templates, databases, and keyword patterns to scale content creation efficiently.

For example, a niche site about hiking trails might use programmatic SEO to create individual pages for every trail in Canada, each optimized for keywords like “best trail in [location]” or “hiking tips for [terrain].”

Steps to Launch a Niche Site Using Programmatic SEO

1. Identify Your Niche and Content Angle

Choose a niche that:

Has clear search demand

Allows for structured data (e.g., locations, products, how-to guides)

Has low to medium competition

Examples: electric bike comparisons, gluten-free restaurants by city, AI tools for writers.

2. Build a Keyword Dataset

Use SEO tools (like Ahrefs, Semrush, or Google Keyword Planner) to extract long-tail keyword variations. Focus on "X in Y" or "best [type] for [audience]" formats. If you're working with an SEO agency in Markham, they can help with in-depth keyword clustering and search intent mapping.

3. Create Content Templates

Build templates that can dynamically populate content with variables like location, product type, or use case. A content template typically includes:

Intro paragraph

Keyword-rich headers

Dynamic tables or comparisons

FAQs

Internal links to related pages
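The template idea can be sketched in a few lines of Python. This is a minimal illustration, not a production generator: the template text, the `$city` and `$trail_count` variables, and the two sample rows are all made up for the example, and a real site would load its rows from a dataset or API.

```python
from string import Template

# Hypothetical page template; $city and $trail_count are placeholder variables.
PAGE_TEMPLATE = Template(
    "<h1>Best hiking trails in $city</h1>\n"
    "<p>$city has $trail_count trails worth exploring.</p>"
)

# Illustrative rows only (invented values, not real trail counts).
rows = [
    {"city": "Banff", "trail_count": 12},
    {"city": "Whistler", "trail_count": 8},
]


def render_pages(template, records):
    """Return one rendered page body per data record."""
    return [template.substitute(record) for record in records]


pages = render_pages(PAGE_TEMPLATE, rows)
print(pages[0])
```

`Template.substitute` raises `KeyError` when a row is missing a variable, which is useful here: a page with an unfilled placeholder never gets published silently.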

4. Source and Structure Your Data

Use public datasets, APIs, or custom scraping to populate your content. Clean, accurate data is the backbone of programmatic SEO.

5. Automate Page Generation

Use platforms like Webflow (with CMS collections), WordPress (with custom post types), or even a headless CMS like Strapi to automate publishing. If you’re unsure about implementation, a skilled SEO agency in Markham can develop a custom solution that integrates data, content, and SEO seamlessly.

6. Optimize for On-Page SEO

Every programmatically created page should include:

Title tags and meta descriptions with dynamic variables

Clean URL structures (e.g., /tools-for-freelancers/)

Internal linking between related pages

Schema markup (FAQ, Review, Product)
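As a rough sketch of how these on-page elements might be generated per page, the Python below builds a clean URL path, a title tag, and FAQ markup in schema.org's `FAQPage` JSON-LD shape. The function names `slugify` and `build_page_meta` and the sample question are hypothetical, chosen only for this example.

```python
import json
import re


def slugify(text):
    """Lowercase the text and join its alphanumeric runs with hyphens."""
    return "-".join(re.findall(r"[a-z0-9]+", text.lower()))


def build_page_meta(tool_type, audience, faqs):
    """Build a URL path, title tag, and FAQPage JSON-LD for one page."""
    faq_schema = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    return {
        "path": f"/{slugify(tool_type)}-for-{slugify(audience)}/",
        "title": f"Best {tool_type} for {audience}",
        "json_ld": json.dumps(faq_schema, indent=2),
    }


meta = build_page_meta(
    "Tools", "Freelancers", [("Is it free?", "Some tools have free tiers.")]
)
print(meta["path"])
print(meta["title"])
```

The JSON-LD string would typically be embedded in a `<script type="application/ld+json">` tag in the page head.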

7. Track, Test, and Improve

Once live, monitor your pages via Google Search Console. Use A/B testing to refine titles, layouts, and content. Focus on improving pages with impressions but low click-through rates (CTR).

Why Work with an SEO Agency in Markham?

Executing programmatic SEO at scale requires a mix of SEO strategy, web development, content structuring, and data management. A professional SEO agency in Markham brings all these capabilities together, helping you:

Build a robust keyword strategy

Design efficient, scalable page templates

Ensure proper indexing and crawlability

Avoid duplication and thin content penalties

With local expertise and technical know-how, they help you launch faster, rank better, and grow sustainably.

Final Thoughts

Programmatic SEO is a powerful method to launch and scale a niche content site, provided you do it right. By combining automation with strategic keyword targeting, you can compete for long-tail searches at scale and build steady organic traffic. To streamline the process and avoid costly mistakes, partner with an experienced SEO agency in Markham that understands both the technical and content sides of SEO.

Ready to build your niche empire? Programmatic SEO could be your best-kept secret to success.


Optimizing Cloud Database Performance: Common Challenges and Effective Solutions

In the era of cloud computing, databases are more crucial than ever. They store everything from user data to critical business operations. However, despite the advantages of cloud databases, many organizations face performance issues. This article explores common problems that plague cloud database performance and offers practical solutions.

1. Inadequate resource allocation

Problem:

One of the primary reasons for poor performance is inadequate resource allocation. Cloud databases require proper CPU, memory, and storage resources. Underestimating these can lead to slow query responses and system lag.

Solution:

Regularly monitor your database’s resource utilization. Use auto-scaling features provided by cloud providers to adjust resources based on demand. Also, choose the right database type and size for your workload.

2. Network latency

Problem:

Cloud databases can suffer from network latency, particularly if the database is hosted in a different geographical location than its users.

Solution:

Host your database in the region closest to your primary user base. For responses that can be cached, a Content Delivery Network (CDN) can serve them from edge locations so that fewer requests have to travel to the database region at all.

3. Poor database design

Problem:

Inefficient database design, such as improper indexing, can severely impact performance. Over-indexing can slow down write operations, while under-indexing can hinder read operations.

Solution:

Regularly review and optimize your database schema. Ensure proper indexing based on your application’s read-write patterns.
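To see what an index changes, the sketch below uses Python's built-in `sqlite3` module as a local stand-in for a cloud database; the `orders` table and index name are invented for the example. It asks SQLite for the query plan of the same lookup before and after adding an index on the filtered column.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)


def query_plan(connection, sql):
    """Return the plan detail strings SQLite reports for a query."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return [row[3] for row in rows]  # the fourth column holds the description


lookup = "SELECT total FROM orders WHERE customer_id = 42"
plan_before = query_plan(conn, lookup)

# Index the column used in the WHERE clause, then ask for the plan again.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, lookup)

print(plan_before)
print(plan_after)
```

The first plan describes a scan of the whole table, while the second names `idx_orders_customer`. Other engines expose the same idea through their own `EXPLAIN` output, though the wording differs.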

4. Inadequate query optimization

Problem:

Poorly written queries can cause significant performance degradation, especially when dealing with large datasets.

Solution:

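Use query optimization tools and techniques, such as inspecting execution plans to find full table scans. Review and refactor inefficient queries. Consider stored procedures for complex multi-step operations that would otherwise need many round trips to the database.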

5. Failure to scale horizontally

Problem:

Relying solely on vertical scaling (upgrading existing resources) can be limiting. It often leads to a performance plateau.

Solution:

Implement horizontal scaling (adding more nodes). Distribute the load across multiple database instances.
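One simple way to distribute load is to route each key to a node by hashing it. The Python sketch below assumes three hypothetical instance names and plain modulo hashing; it illustrates the routing idea only and leaves out replication and rebalancing.

```python
import hashlib

# Hypothetical instance names, for illustration only.
SHARDS = ["db-node-0", "db-node-1", "db-node-2"]


def shard_for(key, shards=SHARDS):
    """Pick a shard by hashing the key, so a given key always maps to the same node."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]


assignments = {user: shard_for(user) for user in ["alice", "bob", "carol", "dave"]}
print(assignments)
```

A known limitation of modulo hashing is that changing the number of nodes remaps most keys. Consistent hashing is a common alternative when nodes are added or removed often.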

6. Ignoring cache opportunities

Problem:

Frequent direct database queries for the same data can be inefficient and slow.

Solution:

Implement caching mechanisms. Store frequently accessed data in memory for quicker access.
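A minimal in-process version of this can be sketched with Python's `functools.lru_cache`. Here `fetch_from_database` is a stand-in for a real query, and the `stats` counter exists only to show how many calls reach it.

```python
from functools import lru_cache

stats = {"queries": 0}  # counts how often the "database" is actually hit


def fetch_from_database(product_id):
    """Stand-in for a slow database query."""
    stats["queries"] += 1
    return ("product", product_id)


@lru_cache(maxsize=1024)
def get_product(product_id):
    """Return a product, serving repeat lookups from memory."""
    return fetch_from_database(product_id)


get_product(7)
get_product(7)  # served from the cache, no second query
get_product(8)
print(stats["queries"])  # prints 2
```

Cached entries go stale when the underlying row changes, so a real setup needs an invalidation rule, for example calling `get_product.cache_clear()` after writes or using a shared cache with expiry times.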

7. Not using automated backups and recovery

Problem:

Manual backups and recovery processes can be time-consuming and error-prone.

Solution:

Utilize automated backup and recovery solutions provided by cloud services. Ensure regular backups and test recovery processes.

Optimizing cloud database performance requires a mix of right-sizing resources, efficient design, query optimization, and leveraging cloud-native features like auto-scaling and caching. Regular monitoring and proactive maintenance are key to ensuring your cloud database doesn’t just run, but thrives. For expert guidance and tailored solutions that maximize your cloud database’s efficiency, consider exploring Centizen Cloud Consulting services, where innovation meets expertise.


DBMS Tutorial Explained: Concepts, Types, and Applications

In today’s digital world, data is everywhere — from social media posts and financial records to healthcare systems and e-commerce websites. But have you ever wondered how all that data is stored, organized, and managed? That’s where DBMS — or Database Management System — comes into play.

Whether you’re a student, software developer, aspiring data analyst, or just someone curious about how information is handled behind the scenes, this DBMS tutorial is your one-stop guide. We’ll explore the fundamental concepts, various types of DBMS, and real-world applications to help you understand how modern databases function.

What is a DBMS?

A Database Management System (DBMS) is software that enables users to store, retrieve, manipulate, and manage data efficiently. Think of it as an interface between the user and the database. Rather than interacting directly with raw data, users and applications communicate with the database through the DBMS.

For example, when you check your bank account balance through an app, it’s the DBMS that processes your request, fetches the relevant data, and sends it back to your screen — all in milliseconds.

Why Learn DBMS?

Understanding DBMS is crucial because:

It’s foundational to software development: Every application that deals with data — from mobile apps to enterprise systems — relies on some form of database.

It improves data accuracy and security: DBMS helps in organizing data logically while controlling access and maintaining integrity.

It’s highly relevant for careers in tech: Knowledge of DBMS is essential for roles in backend development, data analysis, database administration, and more.

Core Concepts of DBMS

Let’s break down some of the fundamental concepts that every beginner should understand when starting with DBMS.

1. Database

A database is an organized collection of related data. Instead of storing information in random files, a database stores data in structured formats like tables, making retrieval efficient and logical.

2. Data Models

Data models define how data is logically structured. The most common models include:

Hierarchical Model

Network Model

Relational Model

Object-Oriented Model

Among these, the Relational Model (used in systems like MySQL, PostgreSQL, and Oracle) is the most popular today.

3. Schemas and Tables

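A schema defines the structure of a database, much like a blueprint. It includes definitions of tables, columns, data types, and relationships between tables. A table, in turn, holds the actual data: each row is one record, and each column is one attribute of that record.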

4. SQL (Structured Query Language)

SQL is the standard language used to communicate with relational DBMS. It allows users to perform operations like:

SELECT: Retrieve data

INSERT: Add new data

UPDATE: Modify existing data

DELETE: Remove data
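The four operations can be tried without installing a database server. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the `students` table and its rows are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE students (id INTEGER PRIMARY KEY, name TEXT, grade INTEGER)"
)

# INSERT: add new rows
conn.execute("INSERT INTO students (name, grade) VALUES (?, ?)", ("Asha", 82))
conn.execute("INSERT INTO students (name, grade) VALUES (?, ?)", ("Ben", 74))

# UPDATE: modify an existing row
conn.execute("UPDATE students SET grade = ? WHERE name = ?", (90, "Asha"))

# DELETE: remove a row
conn.execute("DELETE FROM students WHERE name = ?", ("Ben",))

# SELECT: read back what is left
remaining = conn.execute("SELECT name, grade FROM students ORDER BY id").fetchall()
print(remaining)  # [('Asha', 90)]
```

The same statements work in MySQL or PostgreSQL with minor differences, such as how auto-incrementing keys are declared.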

5. Normalization

Normalization is the process of organizing data to reduce redundancy and improve integrity. It involves dividing a database into two or more related tables and defining relationships between them.

6. Transactions

A transaction is a sequence of operations performed as a single logical unit. Transactions in DBMS follow ACID properties — Atomicity, Consistency, Isolation, and Durability — ensuring reliable data processing even during failures.
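Atomicity is easiest to see with a transfer that fails halfway. In this sketch (Python's `sqlite3`, with a made-up `accounts` table), a `CHECK` constraint forbids negative balances, so the second transfer fails after the receiver has already been credited, and the rollback undoes that credit.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (name TEXT PRIMARY KEY, "
    "balance INTEGER CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()


def transfer(connection, sender, receiver, amount):
    """Move money atomically; return True on commit, False on rollback."""
    try:
        with connection:  # commits on success, rolls back on an exception
            connection.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?",
                (amount, receiver),
            )
            connection.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?",
                (amount, sender),
            )
        return True
    except sqlite3.IntegrityError:
        return False


ok = transfer(conn, "alice", "bob", 30)
failed = transfer(conn, "alice", "bob", 500)  # would overdraw alice
balances = dict(conn.execute("SELECT name, balance FROM accounts"))
print(ok, failed, balances)
```

After both calls the balances reflect only the first transfer: the credit to `bob` from the failed attempt was rolled back together with the rejected debit.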

Types of DBMS

DBMS can be categorized into several types based on how data is stored and accessed:

1. Hierarchical DBMS

Organizes data in a tree-like structure.

Each parent can have multiple children, but each child has only one parent.

Example: IBM’s IMS.

2. Network DBMS

Data is represented as records connected through links.

More flexible than the hierarchical model; a child can have multiple parents.

Example: Integrated Data Store (IDS).

3. Relational DBMS (RDBMS)

Data is stored in tables (relations) with rows and columns.

Uses SQL for data manipulation.

Most widely used type today.

Examples: MySQL, PostgreSQL, Oracle, SQL Server.

4. Object-Oriented DBMS (OODBMS)

Data is stored in the form of objects, similar to object-oriented programming.

Supports complex data types and relationships.

Examples: db4o, ObjectDB.

5. NoSQL DBMS

Designed for handling unstructured or semi-structured data.

Ideal for big data applications.

Types include document, key-value, column-family, and graph databases.

Examples: MongoDB, Cassandra, Redis, Neo4j.

Applications of DBMS

DBMS is used across nearly every industry. Here are some common applications:

1. Banking and Finance

Customer information, transaction records, and loan histories are stored and accessed through DBMS.

Ensures accuracy and fast processing.

2. Healthcare

Manages patient records, billing, prescriptions, and lab reports.

Enhances data privacy and improves coordination among departments.

3. E-commerce

Handles product catalogs, user accounts, order histories, and payment information.

Ensures real-time data updates and personalization.

4. Education

Maintains student information, attendance, grades, and scheduling.

Helps in online learning platforms and academic administration.

5. Telecommunications

Manages user profiles, billing systems, and call records.

Supports large-scale data processing and service reliability.

Final Thoughts

In this DBMS tutorial, we’ve broken down what a Database Management System is, why it’s important, and how it works. Understanding DBMS concepts like relational models, SQL, and normalization gives you the foundation to build and manage efficient, scalable databases.

As data continues to grow in volume and importance, the demand for professionals who understand database systems is also rising. Whether you're learning DBMS for academic purposes, career development, or project needs, mastering these fundamentals is the first step toward becoming data-savvy in today’s digital world.

Stay tuned for more tutorials, including hands-on SQL queries, advanced DBMS topics, and database design best practices!


Data Architecture vs Data Modeling or Web Development vs App Development: A Comparative Insight

In the ever-evolving world of technology, understanding the difference between various fields is essential for both professionals and enthusiasts. Two commonly compared topics are Data Architecture vs Data Modeling and Web Development vs App Development. Both sets of terms are closely related but serve distinct roles in their respective domains. Let’s explore these differences in detail.

Data Architecture vs Data Modeling

Data Architecture refers to the high-level design and framework that governs how data is collected, stored, managed, and used across an organization. It includes strategies, policies, standards, and models that ensure data is aligned with business goals. Data architecture defines how data flows between systems and helps in making the data accessible and secure.

On the other hand, Data Modeling is a subset of data architecture. It focuses on the actual creation of data structures. Data modeling involves designing data elements, identifying relationships between data, and organizing it into logical structures such as tables, entities, and schemas. It’s more detailed and technical compared to data architecture.

In simpler terms, if data architecture is the blueprint of a house, then data modeling is the detailed drawing of each room, showing how they connect and function.

Web Development vs App Development

Web Development involves the creation of websites and web applications that are accessed through web browsers. It usually includes front-end development (what the user sees), back-end development (server, database), or full-stack (both). Web development is platform-independent — users can access the application through any browser on any device.

App Development, however, refers to the creation of software applications specifically for mobile devices like smartphones and tablets, or for desktops. Apps are built for specific platforms such as Android, iOS, or Windows. They often offer better performance and offline capabilities compared to web applications.

While Web Development vs App Development might seem similar, the key difference lies in the platform and user experience. Web development focuses on accessibility and broader reach, whereas app development emphasizes performance and deeper integration with device features.

Conclusion

Whether comparing Data Architecture vs Data Modeling or Web Development vs App Development, the distinctions are clear. Data architecture provides the strategic overview, while data modeling gets into the technical details. Similarly, web development is about building browser-accessible platforms, whereas app development targets native, high-performance applications.

Understanding these differences helps businesses choose the right strategy and helps professionals align their skills with their career goals. Both sets of comparisons play crucial roles in the digital ecosystem and are essential for modern technology infrastructure.


From Concept to Conversion: How a Web Development Company in Bangalore Delivers Business Impact

The digital world is evolving rapidly. Businesses today must have a strong online presence to stay relevant, reach new customers, and drive growth. At the center of this transformation is web development—a key component that turns digital ideas into practical, impactful solutions.

For businesses in India and across the globe, Bangalore has emerged as a leading destination for web development services. Among the top names in the region is WebSenor, a professional web development agency in Bangalore known for delivering real business outcomes through smart, scalable, and customized solutions.

The Bangalore Advantage

Why Bangalore is a Hub for Digital Innovation

Bangalore, often referred to as the “Silicon Valley of India,” offers a unique ecosystem of tech talent, startups, and global IT companies. The city is home to some of the top web development companies, offering both innovation and reliability.

Whether it's custom web development in Bangalore or enterprise-level software solutions, the local talent pool excels in front-end and back-end development, mobile optimization, and UX design. This ensures high-quality work at a competitive cost.

Local Insight Meets Global Standards

What sets Bangalore apart is its ability to blend local business knowledge with global technical standards. Companies like WebSenor bring this advantage to clients across industries by understanding market-specific challenges and developing tailored digital strategies.

Case in point: A Bangalore-based retailer partnered with WebSenor for a responsive eCommerce website. The result? A 4x increase in sales within six months, showing how eCommerce website development in Bangalore can scale a business.

WebSenor’s Strategic Web Development Approach

At WebSenor, the focus is not just on building websites but on driving business success. Here’s how the process unfolds:

Step 1 – Discovery & Conceptualization

Every successful project begins with deep understanding. WebSenor starts by exploring the client’s goals, audience, and competition.

Stakeholder interviews to gather insights.

Competitor analysis to identify opportunities.

Initial project roadmap and timeline.

This phase sets the foundation for a result-driven approach.

Step 2 – UX/UI Strategy & Wireframing

User experience is critical. WebSenor uses tools like Figma and Adobe XD to create intuitive, attractive interfaces.

Wireframes and mockups based on user behavior.

User personas to guide design decisions.

Focus on custom website design for unique brand identity.

Whether it’s mobile-friendly website development or a complex dashboard, the team ensures every click serves a purpose.

Step 3 – Agile Development Process

WebSenor follows agile methodology to allow flexibility and speed.

Use of modern technologies like React, Angular, Node.js, Laravel.

Regular sprint reviews and updates.

Backend integration with databases and APIs.

This makes them a full-stack web development company in Bangalore capable of handling end-to-end solutions.

Step 4 – SEO, Performance & Security Optimization

Your website isn’t effective if it isn’t visible. WebSenor ensures every site is:

Optimized for search engines (on-page SEO, metadata, schema markup).

Fast-loading and responsive across devices.

Secure, with SSL implementation and data protection practices.

Their team understands the importance of responsive web design in Bangalore’s mobile-first digital landscape.

Step 5 – QA Testing & Iteration

Before going live, every project goes through rigorous testing:

Browser and device compatibility.

Load testing for high traffic.

Bug tracking and resolution.

This guarantees a seamless user experience from day one.

Step 6 – Launch, Training & Maintenance

WebSenor doesn’t stop at delivery. Post-launch support includes:

Deployment on live servers or cloud platforms.

Client training for CMS and content updates.

Ongoing maintenance and performance tracking.

It’s a complete package for businesses that want reliable web development services in Bangalore.

Converting Traffic into Business Impact

Beyond Aesthetics – WebSenor’s Focus on Conversion Rate Optimization (CRO)

A beautiful site is great, but one that converts is even better. WebSenor incorporates CRO best practices from the start:

Strong CTAs placed strategically.

Landing page optimization.

Integration with CRM tools and Google Analytics.

By focusing on conversions, they help businesses increase revenue, not just traffic.

Success Metrics That Matter

WebSenor tracks key performance indicators to measure results:

Bounce rate reduction.

Increased session duration.

Growth in qualified leads.

Sales and customer acquisition.

Real-time dashboards and ROI reports help clients see the tangible benefits of their investment.

Case Studies: Real Business Impact with WebSenor

Case Study 1 – E-Commerce Brand Boost

A mid-sized fashion brand needed a makeover. Their old website was slow, unresponsive, and didn’t convert.

Solution: WebSenor built a custom Shopify-like platform with integrated payment gateways and product filters.

Result: Site speed improved by 60%, and monthly revenue tripled within 6 months.

Case Study 2 – SaaS Platform Transformation

A SaaS startup required scalable infrastructure and improved UI.

Solution: WebSenor delivered a modern web app using Node.js and React, built for scalability.

Result: Customer retention improved by 40%, and the company secured a funding round soon after.

Client Testimonials & Industry Recognition

Clients consistently praise WebSenor’s professionalism and results. With Clutch ratings, verified Google reviews, and a portfolio of over 200 projects, WebSenor has established itself as a professional web development agency in Bangalore.

The WebSenor Edge: Experience, Expertise & Trust

Our Team of Experts

WebSenor’s team includes senior developers, UI/UX designers, digital strategists, and QA specialists. Their combined experience in web development for startups, enterprises, and NGOs helps tailor solutions for every client.

Certifications, Partnerships, and Methodologies

WebSenor is recognized as a:

Google Partner

AWS Cloud Partner

ISO-Certified Company

Their adoption of DevOps practices, CI/CD pipelines, and agile tools enhances reliability and speed.

Transparent Communication & Long-Term Partnerships

Clients enjoy:

Regular updates and open communication.

Dedicated account managers.

Ongoing support and service-level agreements.

This client-first approach is why WebSenor is often regarded as the best web development company in Bangalore.

Why Choose WebSenor as Your Web Development Partner

Here’s what sets WebSenor apart from other affordable web development companies in Bangalore:

Custom development tailored to business needs.

Affordable pricing without compromising quality.

Full-stack expertise across front-end and back-end.

Reliable support and proactive communication.

Whether you want to hire web developers in Bangalore for a short-term project or need a long-term digital partner, WebSenor is ready to help.

Explore our web development services in Bangalore or check out our portfolio to see what we’ve built.

Conclusion

Web development isn’t just about putting up a website—it’s about creating a tool that drives growth, improves customer experience, and delivers measurable results.

WebSenor, a leading web development company in Bangalore, offers a complete journey from concept to conversion. With its skilled team, robust process, and customer-first approach, businesses can confidently build their digital presence and achieve lasting success. If you're ready to take your digital strategy to the next level, contact WebSenor today and see how we can turn your vision into impact.

#WebSenor#WebDevelopmentCompany#WebDevelopmentBangalore#BangaloreTech#WebsiteDesign#CustomWebDevelopment#ResponsiveDesign#EcommerceDevelopment#FullStackDevelopment#TechInBangalore


How to Drop Tables in MySQL Using dbForge Studio: A Simple Guide for Safer Table Management

Learn how to drop a table in MySQL quickly and safely using dbForge Studio for MySQL — a professional-grade IDE designed for MySQL and MariaDB development. Whether you’re looking to delete a table, use DROP TABLE IF EXISTS, or completely clear a MySQL table, this guide has you covered.

In the article “How to Drop Tables in MySQL: A Complete Guide for Database Developers”, we explain:

✅ How to drop single and multiple tables: Use simple SQL commands or the intuitive UI in dbForge Studio to delete one or several tables at once, with no need to write multiple queries manually.

✅ How to use the DROP TABLE command properly: Learn how to avoid errors by using DROP TABLE IF EXISTS and specifying table names correctly, especially when working with multiple schemas.

✅ What happens when you drop a table in MySQL: Understand the consequences. The table structure and all its data are permanently removed and can’t be recovered unless you’ve backed up beforehand.

✅ Best practices for safe table deletion: Back up first, check for dependencies like foreign keys, and use IF EXISTS to avoid runtime errors if the table doesn’t exist.

✅ How dbForge Studio simplifies and automates this process: With dbForge Studio, dropping tables becomes a controlled and transparent process, thanks to:

- Visual Database Explorer: right-click any table to drop it instantly or review its structure before deletion.

- Smart SQL Editor: get syntax suggestions and validation as you type DROP TABLE commands.

- Built-in SQL execution preview: see what will happen before executing destructive commands.

- Schema and data backup tools: create instant backups before making changes.

- SQL script generation: the tool auto-generates DROP TABLE scripts, which you can edit and save for future use.

- Role-based permissions and warnings: helps prevent accidental deletions in shared environments.
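The effect of IF EXISTS can be checked locally. The sketch below uses Python's built-in `sqlite3` module as a stand-in for MySQL (an assumption made for convenience), and the `old_logs` table is invented for the example. The statement text is the same in both engines for a single table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE old_logs (id INTEGER PRIMARY KEY, message TEXT)")


def table_names(connection):
    """Return the set of table names currently in the database."""
    rows = connection.execute("SELECT name FROM sqlite_master WHERE type = 'table'")
    return {row[0] for row in rows}


conn.execute("DROP TABLE IF EXISTS old_logs")  # removes the table and its data
conn.execute("DROP TABLE IF EXISTS old_logs")  # table already gone: no error

try:
    conn.execute("DROP TABLE old_logs")  # plain DROP on a missing table fails
    plain_drop_failed = False
except sqlite3.OperationalError:
    plain_drop_failed = True

print(table_names(conn), plain_drop_failed)
```

One difference worth knowing: MySQL accepts several comma-separated table names in a single DROP TABLE statement, while SQLite drops one table per statement.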

💡 Whether you’re cleaning up your database or optimizing its structure, this article helps you do it efficiently — with fewer risks and more control.

🔽 Try dbForge Studio for MySQL for free and experience smarter MySQL development today: 👉 https://www.devart.com/dbforge/mysql/studio/download.html


Identify elements of a search solution

A search index contains your searchable content. In an Azure AI Search solution, you create a search index by moving data through the following indexing pipeline:

Start with a data source: the storage location of your original data artifacts, such as PDFs, video files, and images. For Azure AI Search, your data source could be files in Azure Storage, or text in a database such as Azure SQL Database or Azure Cosmos DB.

Indexer: automates the movement of data from the data source through document cracking and enrichment to indexing. An indexer automates a portion of data ingestion and exports the original file type to JSON (in an action called JSON serialization).

Document cracking: the indexer opens files and extracts content.

Enrichment: the indexer moves data through AI enrichment, which implements Azure AI on your original data to extract more information. AI enrichment is achieved by adding and combining skills in a skillset. A skillset defines the operations that extract and enrich data to make it searchable. These AI skills can be either built-in skills, such as text translation or Optical Character Recognition (OCR), or custom skills that you provide. Examples of AI enrichment include adding captions to a photo and evaluating text sentiment. AI enriched content can be sent to a knowledge store, which persists output from an AI enrichment pipeline in tables and blobs in Azure Storage for independent analysis or downstream processing.

Push to index: the serialized JSON data populates the search index.

The result is a populated search index which can be explored through queries. When users make a search query such as "coffee", the search engine looks for that information in the search index. A search index has a structure similar to a table, known as the index schema. A typical search index schema contains fields, the field's data type (such as string), and field attributes. The fields store searchable text, and the field attributes allow for actions such as filtering and sorting.
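To make the role of fields and attributes concrete, here is a toy model in plain Python. It does not use the Azure SDK or its API: the index name, field names, and sample documents are hypothetical, and the `search` function only imitates how searchable, filterable, and sortable attributes decide what a query may do with each field.

```python
# Toy index schema: a plain dict, not an Azure AI Search API object.
index_schema = {
    "name": "coffee-reviews",
    "fields": [
        {"name": "id", "type": "string", "key": True},
        {"name": "review_text", "type": "string", "searchable": True},
        {"name": "rating", "type": "int", "filterable": True, "sortable": True},
    ],
}

# Invented sample documents.
documents = [
    {"id": "1", "review_text": "Great coffee and pastries", "rating": 5},
    {"id": "2", "review_text": "Weak coffee", "rating": 2},
    {"id": "3", "review_text": "Lovely tea room", "rating": 4},
]


def fields_with(schema, attribute):
    """Return the names of fields that have the given attribute enabled."""
    return [f["name"] for f in schema["fields"] if f.get(attribute)]


def search(docs, schema, term, min_rating=None):
    """Match the term in searchable fields, then filter and sort by rating."""
    searchable = fields_with(schema, "searchable")
    hits = [
        d for d in docs
        if any(term.lower() in str(d[name]).lower() for name in searchable)
    ]
    if min_rating is not None and "rating" in fields_with(schema, "filterable"):
        hits = [d for d in hits if d["rating"] >= min_rating]
    if "rating" in fields_with(schema, "sortable"):
        hits.sort(key=lambda d: d["rating"], reverse=True)
    return hits


results = search(documents, index_schema, "coffee", min_rating=3)
print([d["id"] for d in results])  # ['1']
```

A real search engine tokenizes text and uses an inverted index rather than substring matching; the point here is only how attributes gate what each field can be used for.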


PHP with MySQL: Best Practices for Database Integration

PHP and MySQL have long formed the backbone of dynamic web development. Even with modern frameworks and languages emerging, this combination remains widely used for building secure, scalable, and performance-driven websites and web applications. As of 2025, PHP with MySQL continues to power millions of websites globally, making it essential for developers and businesses to follow best practices to ensure optimized performance and security.

This article explores best practices for integrating PHP with MySQL and explains how working with expert PHP development companies in the USA can help elevate your web projects to the next level.

Understanding PHP and MySQL Integration

PHP is a server-side scripting language used to develop dynamic content and web applications, while MySQL is an open-source relational database management system that stores and manages data efficiently. Together, they allow developers to create interactive web applications that handle tasks like user authentication, data storage, and content management.

The seamless integration of PHP with MySQL enables developers to write scripts that query, retrieve, insert, and update data. However, without proper practices in place, this integration can become vulnerable to performance issues and security threats.

1. Use Modern Extensions for Database Connections

One of the foundational best practices when working with PHP and MySQL is using modern database extensions. The original mysql_* functions were deprecated in PHP 5.5 and removed in PHP 7.0. Developers are encouraged to use MySQLi or PDO instead, which support prepared statements, better error handling, and more secure connections.

Modern tools provide better performance, are easier to maintain, and allow for compatibility with evolving PHP standards.

2. Prevent SQL Injection Through Prepared Statements

Security should always be a top priority when integrating PHP with MySQL. SQL injection remains one of the most common vulnerabilities. To combat this, developers must use prepared statements, which ensure that user input is not interpreted as SQL commands.

This approach significantly reduces the risk of malicious input compromising your database. Implementing this best practice creates a more secure environment and protects sensitive user data.
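The difference is easy to demonstrate. The sketch below is written in Python with `sqlite3` rather than PHP, purely so it runs without a server; the `users` table and the malicious input are invented. The same pattern applies to prepared statements in PDO or MySQLi.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])

malicious = "nobody' OR '1'='1"

# Unsafe: the input is pasted into the SQL text and changes the query's logic.
unsafe_sql = "SELECT username FROM users WHERE username = '" + malicious + "'"
unsafe_rows = conn.execute(unsafe_sql).fetchall()

# Safe: the input is bound as a parameter and treated purely as a value.
safe_rows = conn.execute(
    "SELECT username FROM users WHERE username = ?", (malicious,)
).fetchall()

print(len(unsafe_rows), len(safe_rows))  # 2 0
```

The concatenated query returns every user because the injected `OR '1'='1'` is always true, while the parameterized query looks for a user literally named `nobody' OR '1'='1` and finds none.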

3. Validate and Sanitize User Inputs

Beyond protecting your database from injection attacks, all user inputs should be validated and sanitized. Validation ensures the data meets expected formats, while sanitization cleans the data to prevent malicious content.

This practice not only improves security but also enhances the accuracy of the stored data, reducing errors and improving the overall reliability of your application.
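A small sketch of the two steps, again in Python for easy testing: the username rule (3 to 20 letters, digits, or underscores) and the 500-character cap are arbitrary assumptions for the example, not requirements from any standard.

```python
import html
import re

# Assumed rule for this example: 3-20 letters, digits, or underscores.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,20}")


def validate_username(value):
    """Return the trimmed username, or raise ValueError if the format is wrong."""
    candidate = value.strip()
    if not USERNAME_PATTERN.fullmatch(candidate):
        raise ValueError("username must be 3-20 letters, digits, or underscores")
    return candidate


def sanitize_comment(value, max_length=500):
    """Trim, cap the length, and escape HTML special characters for display."""
    return html.escape(value.strip()[:max_length])


print(validate_username("  alice_01 "))
print(sanitize_comment("  <b>hi</b> "))
```

Validation rejects bad input outright, while sanitization transforms input so it is safe in a given context (here, HTML output). Neither replaces parameterized queries for database access.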

4. Design a Thoughtful Database Schema

A well-structured database is critical for long-term scalability and maintainability. When designing your MySQL database, consider the relationships between tables, the types of data being stored, and how frequently data is accessed or updated.

Use appropriate data types, define primary and foreign keys clearly, and ensure normalization where necessary to reduce data redundancy. A good schema minimizes complexity and boosts performance.

5. Optimize Queries for Speed and Efficiency

As your application grows, the volume of data can quickly increase. Optimizing SQL queries is essential for maintaining performance. Poorly written queries can lead to slow loading times and unnecessary server load.

Developers should avoid requesting more data than necessary and ensure queries are specific and well-indexed. Indexing key columns, especially those involved in searches or joins, helps the database retrieve data more quickly.

6. Handle Errors Gracefully

Handling database errors in a user-friendly and secure way is important. Error messages should never reveal database structures or sensitive server information to end-users. Instead, errors should be logged internally, and users should receive generic messages that don’t compromise security.

Implementing error handling protocols ensures smoother user experiences and provides developers with insights to debug issues effectively without exposing vulnerabilities.
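As an illustration of the pattern, shown in Python with `sqlite3` standing in for the PHP and MySQL stack, the hypothetical `find_user` function below logs the full error internally and hands the caller only a generic message.

```python
import logging
import sqlite3

logger = logging.getLogger("app.db")


def find_user(connection, user_id):
    """Return (row, message); message is a generic user-facing error or None."""
    try:
        row = connection.execute(
            "SELECT id, username FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        return row, None
    except sqlite3.Error:
        # Full details, including the traceback, go to the internal log only.
        logger.exception("User lookup failed for id=%s", user_id)
        return None, "Something went wrong. Please try again later."


conn = sqlite3.connect(":memory:")  # the users table is intentionally missing
row, message = find_user(conn, 1)
print(message)
```

The database's own error text names the missing table, which is exactly the kind of detail that should reach the log but never the end user.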

7. Implement Transactions for Multi-Step Processes

When your application needs to execute multiple related database operations, using transactions ensures that all steps complete successfully or none are applied. This is particularly important for tasks like order processing or financial transfers where data integrity is essential.

Transactions help maintain consistency in your database and protect against incomplete data operations due to system crashes or unexpected failures.

8. Secure Your Database Credentials

Sensitive information such as database usernames and passwords should never be exposed within the application’s core files. Use environment variables or external configuration files stored securely outside the public directory.

This keeps credentials safe from attackers and reduces the risk of accidental leaks through version control or server misconfigurations.
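
A minimal sketch of reading credentials from environment variables is shown below. The variable names (DB_HOST, DB_NAME, DB_USER, DB_PASS) and the two helper functions are assumptions for illustration; use whatever names your hosting environment or deployment tooling provides.

```php
<?php
declare(strict_types=1);

// Hypothetical helper: collect database settings from the environment and fail fast if any are missing.
function dbConfigFromEnv(): array
{
    $required = ['DB_HOST', 'DB_NAME', 'DB_USER', 'DB_PASS'];
    $config = [];

    foreach ($required as $key) {
        $value = getenv($key);
        if ($value === false || $value === '') {
            throw new RuntimeException("Missing required environment variable: {$key}");
        }
        $config[$key] = $value;
    }

    return $config;
}

// Hypothetical helper: build a PDO MySQL DSN from the loaded settings.
function buildMysqlDsn(array $config): string
{
    return sprintf(
        'mysql:host=%s;dbname=%s;charset=utf8mb4',
        $config['DB_HOST'],
        $config['DB_NAME']
    );
}

// Typical usage (not executed here because it needs a running MySQL server):
// $config = dbConfigFromEnv();
// $pdo = new PDO(buildMysqlDsn($config), $config['DB_USER'], $config['DB_PASS'], [
//     PDO::ATTR_ERRMODE => PDO::ERRMODE_EXCEPTION,
// ]);
```

Because nothing secret lives in the code, the same files can be committed to version control and deployed to different environments with different credentials.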

9. Backup and Monitor Your Database

No matter how robust your integration is, regular backups are critical. A backup strategy ensures you can recover quickly in the event of data loss, corruption, or server failure. Automate backups and store them securely, ideally in multiple locations.

Monitoring tools can also help track database performance, detect anomalies, and alert administrators about unusual activity or degradation in performance.

10. Consider Using an ORM for Cleaner Code

Object-relational mapping (ORM) tools can simplify how developers interact with databases. Rather than writing raw SQL queries, developers can use ORM libraries to manage data through intuitive, object-oriented syntax.

This practice improves productivity, promotes code readability, and makes maintaining the application easier in the long run. While it’s not always necessary, using an ORM can be especially helpful for teams working on large or complex applications.

Why Choose Professional Help?

While experienced developers can implement these best practices themselves, working with specialized PHP development companies in the USA can help ensure your web application follows industry standards from the start. These companies bring:

Deep expertise in integrating PHP and MySQL

Experience with optimizing database performance

Knowledge of the latest security practices

Proven workflows for development and deployment

Professional PHP development agencies also provide ongoing maintenance and support, helping businesses stay ahead of bugs, vulnerabilities, and performance issues.

Conclusion

PHP and MySQL remain a powerful and reliable pairing for web development in 2025. When integrated using modern techniques and best practices, they offer a flexible, fast, and scalable foundation.

Whether you’re building a small website or a large-scale enterprise application, following these best practices helps keep your PHP and MySQL stack robust, secure, and future-ready. And if you’re seeking expert assistance, consider partnering with one of the top PHP development companies in the USA to streamline your development journey and maximize the value of your project.
