#datastudio dashboards


Photo

Are you looking for a comprehensive guide on how to manage your pages in Data Studio? Look no further! In this post, we will provide you with a step-by-step guide to ensure that you can easily create and manage your pages for optimal performance.

Step 1: Sign in to Data Studio

The first step is to sign in to your Data Studio account. If you don't have an account, you can create one for free. Once you have logged in, you should see your dashboard.

Step 2: Create a new page

To create a new page, click on the "Create" button on the menu and select "Report". You will be directed to a blank page where you can start your report.

Step 3: Add data sources

To add data sources to your page, click on the "Add Data" button on the left-hand side menu. You can choose from a variety of data sources such as Google Analytics, AdWords, or BigQuery. Once you have selected your data source, click on "Connect" and configure your settings.

Step 4: Insert charts and graphs

Data Studio offers a wide range of charts and graphs that you can use to visualize your data. You can add them by clicking on the "Insert" button on the left-hand side menu and selecting the type of visual you want to add. From here, you can customize your visuals and add filters as needed.

Step 5: Share your page

Once you have created and customized your page, it's time to share it with others. Click on the "Share" button on the upper right corner of your screen and customize your settings. You can share your page via URL or embed it on your website.

And that's it! Following these simple steps will ensure that you can easily create and manage your pages in Data Studio. For more tips and tricks on how to optimize your reporting and analytics, check out Cratos.ai. They offer valuable insights for businesses looking to streamline and improve their data management processes.

#DataStudio #Cratos.ai 📈💡


Photo

Business Intelligence Google Data Studio for better reporting – Is it any good?

Business Intelligence Google Data Studio can be hard to master, but is it worth the effort? Learn how to understand it properly and make it work wonders for businesses.

#Powermetrics#Powermetrics dashboards#datastudio dashboards#tableaubireportingtool#dashboardforsearchengineoptimization#automatedmarketingreports#tableaugooglesearchconsole#googleadsdashboard


Link

Learn how to calculate Duration in Google Data Studio by converting hours and minutes to seconds, either in your data source or in the tool itself.
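As a minimal sketch of the source-side conversion, assuming the source stores durations as `HH:MM` or `HH:MM:SS` text (the function name `duration_to_seconds` is made up for this illustration):

```python
def duration_to_seconds(value: str) -> int:
    """Convert 'HH:MM' or 'HH:MM:SS' text into a total number of seconds."""
    parts = [int(p) for p in value.strip().split(":")]
    if len(parts) == 2:
        # 'HH:MM' has no seconds component, so treat it as zero.
        parts.append(0)
    if len(parts) != 3:
        raise ValueError(f"expected HH:MM or HH:MM:SS, got {value!r}")
    hours, minutes, seconds = parts
    return hours * 3600 + minutes * 60 + seconds


if __name__ == "__main__":
    print(duration_to_seconds("01:30:15"))
```

Each hour contributes 3600 seconds and each minute 60, so the whole duration ends up as one number of seconds.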

#data studio#google data studio#datastudio#duracao no data studio#calcular hora#dashboard#google analytics


Note

So I wrote a python script that pulls the number of fics per fandom off the AO3 fandom pages and then another script that writes the data to a Google Sheet which is then connected to a DataStudio dashboard. My plan is to collect this data weekly so you can see things like trends over time and also fastest growing fandoms (% increase of fics WoW). I'll share once I have a little more data but right now I'm running it manually every Monday. Is there a way to automate that process for free? (Ideally one where my comp doesn't have to be on all the time?)

That sounds very cool! Looking forward to seeing it when you get to the point where you want to share. :)

I suspect any way of automating the process will either require using your own computer or paying money for cloud hosting. But I'll throw the question to my readers in case they have suggestions!
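For anyone curious about the "% increase of fics WoW" part of the question, here is a rough sketch of that calculation. It assumes two weekly snapshots held as plain dictionaries of fandom name to fic count; the function name and the sample data are invented for illustration and are not taken from the asker's script.

```python
def wow_growth(previous: dict[str, int], current: dict[str, int]) -> list[tuple[str, float]]:
    """Return (fandom, percent increase) pairs, fastest growing first."""
    growth = []
    for fandom, now in current.items():
        before = previous.get(fandom)
        if not before:
            # New this week (or zero last week): no baseline to compare against.
            continue
        growth.append((fandom, (now - before) * 100 / before))
    return sorted(growth, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    last_week = {"Fandom A": 100, "Fandom B": 200}
    this_week = {"Fandom A": 150, "Fandom B": 210, "Fandom C": 5}
    for name, pct in wow_growth(last_week, this_week):
        print(f"{name}: {pct:.1f}%")
```

Fandoms that first appear this week are skipped rather than reported as infinite growth; whether that is the right call depends on what the dashboard should show.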


Text

Case Study: How the Cookie Monster Ate 22% of Our Visibility

Last year, the team at Homeday — one of the leading property tech companies in Germany — made the decision to migrate to a new content management system (CMS). The goals of the migration were, among other things, increased page speed and creating a state-of-the-art, future-proof website with all the necessary features. One of the main motivators for the migration was to enable content editors to work more freely in creating pages without the help of developers.

After evaluating several CMS options, we decided on Contentful for its modern technology stack, with a superior experience for both editors and developers. From a technical viewpoint, Contentful, as a headless CMS, allows us to choose which rendering strategy we want to use.

We’re currently carrying out the migration in several stages, or waves, to reduce the risk of problems that have a large-scale negative impact. During the first wave, we encountered an issue with our cookie consent, which led to a visibility loss of almost 22% within five days. In this article I'll describe the problems we were facing during this first migration wave and how we resolved them.

Setting up the first test-wave

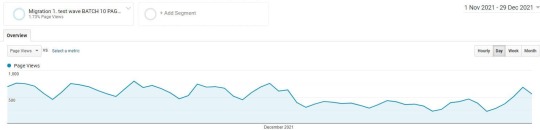

For the first test-wave we chose 10 SEO pages with high traffic but low conversion rates. We established an infrastructure for reporting and monitoring those 10 pages:

Rank-tracking for most relevant keywords

SEO dashboard (DataStudio, Moz Pro, SEMRush, Search Console, Google Analytics)

Regular crawls

After a comprehensive planning and testing phase, we migrated the first 10 SEO pages to the new CMS in December 2021. Although several challenges came up during the testing phase (increased loading times, a bigger HTML Document Object Model, etc.), we decided to go live, as we didn't see any big blockers and wanted to migrate the first test wave before Christmas.

First performance review

Very excited about achieving the first step of the migration, we took a look at the performance of the migrated pages the next day.

What we saw next really didn't please us.

Overnight, the visibility of tracked keywords for the migrated pages dropped from 62.35% to 53.59%: we lost 8.76 percentage points of visibility in one day!

As a result of this steep drop in rankings, we conducted another extensive round of testing. Among other things, we tested for coverage and indexing issues, checked whether all meta tags were included, and reviewed structured data, internal links, page speed and mobile friendliness.

Second performance review

All the articles had a cache date after the migration, and the content was fully indexed and being read by Google. Moreover, we could exclude several migration risk factors (change of URLs, content, meta tags, layout, etc.) as sources of error, as there hadn't been any changes.

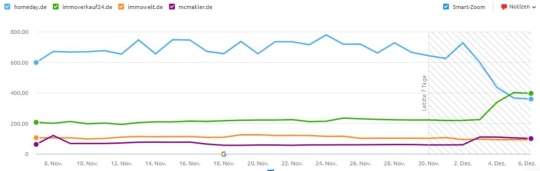

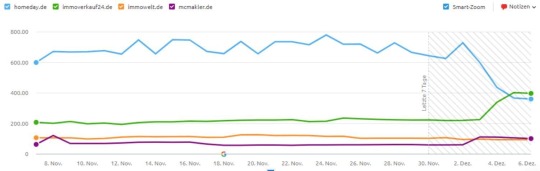

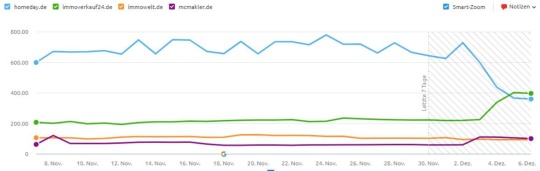

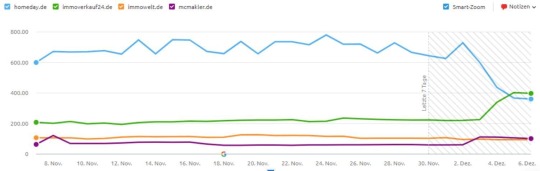

Visibility of our tracked keywords suffered another drop to 40.60% over the next few days, making it a total drop of almost 22 percentage points within five days. The drop was also clearly visible when we compared our tracked keywords against the competition (shown here as "estimated traffic"); visibility followed an analogous pattern.
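The visibility figures quoted so far can be read either as percentage points or as a relative loss. A small sketch of both calculations, using only the numbers from the text (the helper name `visibility_change` is made up for illustration):

```python
def visibility_change(before: float, after: float) -> tuple[float, float]:
    """Return (change in percentage points, relative change in percent)."""
    points = after - before
    relative = points / before * 100
    return round(points, 2), round(relative, 2)


if __name__ == "__main__":
    # Visibility values quoted in the article.
    for label, before, after in [
        ("after one day", 62.35, 53.59),
        ("after five days", 62.35, 40.60),
    ]:
        points, relative = visibility_change(before, after)
        print(f"{label}: {points} points, {relative}% relative")
```

Measured against the starting value of 62.35%, the five-day loss of almost 22 points corresponds to roughly a third of the original visibility.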

As other migration risk factors and Google updates had been excluded as sources of error, it definitely had to be a technical issue. Too much JavaScript, low Core Web Vitals scores, or a larger, more complex Document Object Model (DOM) could all be potential causes. The DOM represents a page as objects and nodes so that programming languages like JavaScript can interact with the page and change, for example, its style, structure and content.
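To make "a larger DOM" concrete, here is a minimal sketch that counts element nodes in an HTML string with Python's standard `html.parser`. It only sees the HTML it is given, so it would need the rendered markup rather than the raw source to reflect JavaScript-injected content; the names `ElementCounter` and `dom_size` are hypothetical.

```python
from html.parser import HTMLParser


class ElementCounter(HTMLParser):
    """Counts every element start tag the parser encounters."""

    def __init__(self) -> None:
        super().__init__()
        self.elements = 0

    def handle_starttag(self, tag, attrs):
        # Also called for self-closing tags such as <br/>.
        self.elements += 1


def dom_size(html: str) -> int:
    """Return the number of element nodes found in the given HTML."""
    counter = ElementCounter()
    counter.feed(html)
    counter.close()
    return counter.elements


if __name__ == "__main__":
    sample = "<html><body><p>Hi</p><div><a href='#'>x</a></div></body></html>"
    print(dom_size(sample))
```

Running the same count on a page before and after a migration gives a quick first signal of how much the markup has grown.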

Following the cookie crumbs

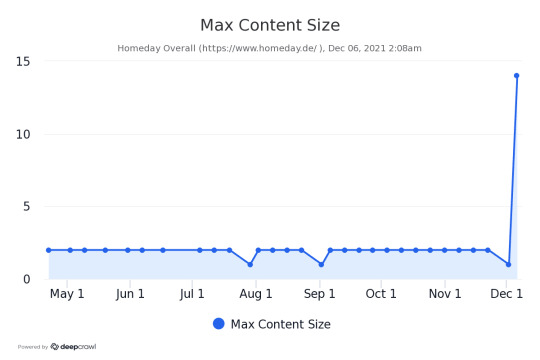

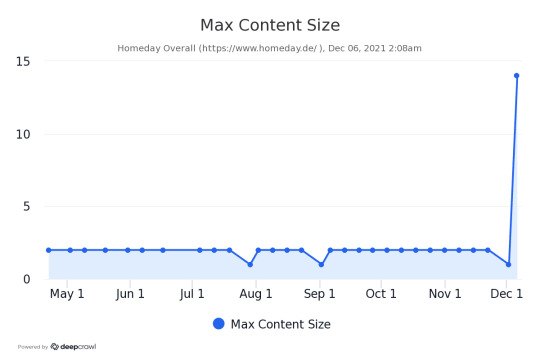

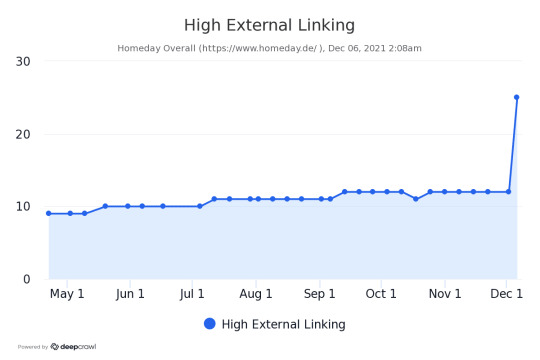

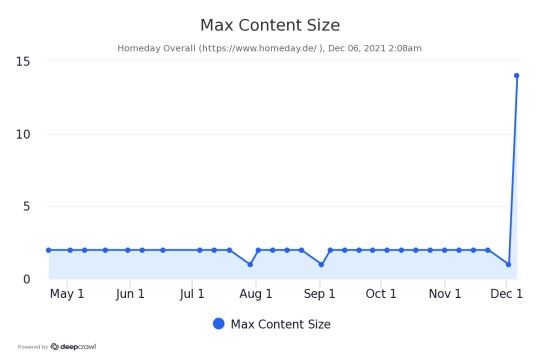

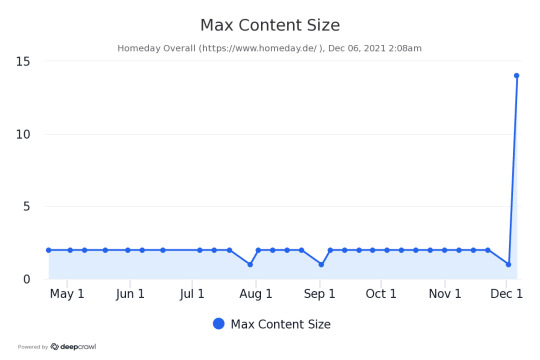

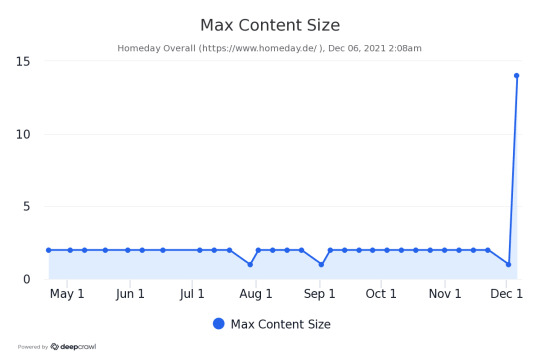

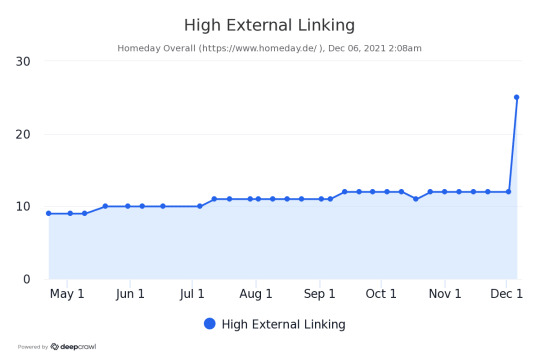

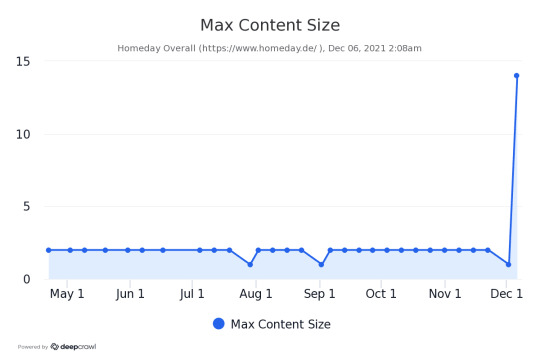

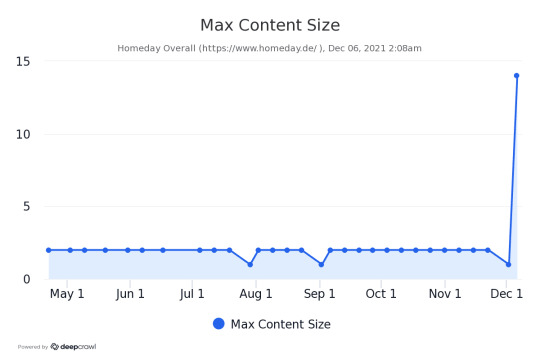

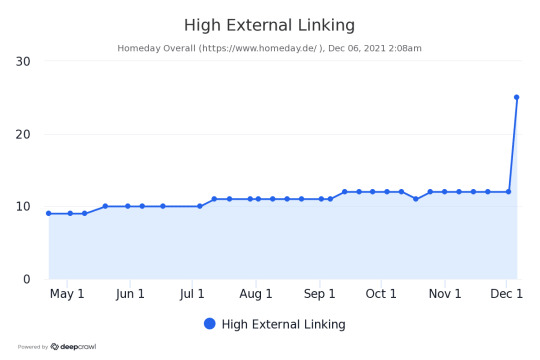

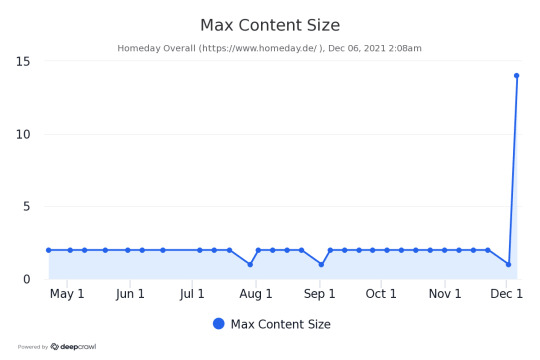

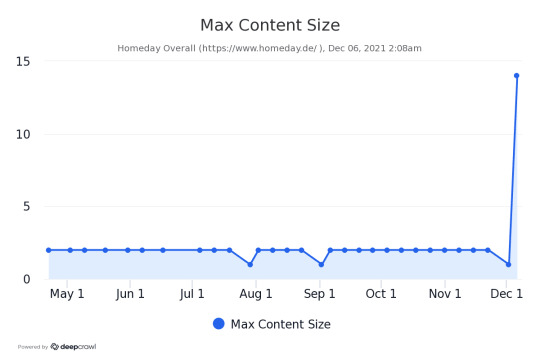

We had to identify issues as quickly as possible, fix bugs fast, and minimize further negative effects and traffic drops. We finally got the first real hint at the technical cause when one of our tools showed us that the number of pages with high external linking, as well as the number of pages with maximum content size, had gone up. It is important that pages don't exceed the maximum content size, as pages with a very large amount of body content may not be fully indexed. As for high external linking, it is important that all external links are trustworthy and relevant for users. It was suspicious that the number of external links had gone up so suddenly.

Both metrics were disproportionately high compared to the number of pages we migrated. But why?
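A check for unexpected external links can be sketched in a few lines. This is an illustration only, not the tool we used: it assumes the rendered HTML is already available as a string, treats any link whose host differs from the site's own host as external, and uses placeholder `example.com` hosts.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class ExternalLinkCollector(HTMLParser):
    """Collects href values of <a> tags that point to another host."""

    def __init__(self, own_host: str) -> None:
        super().__init__()
        self.own_host = own_host
        self.external: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href") or ""
        host = urlparse(href).netloc
        # Relative links and mailto: links have no host and are skipped.
        if host and host != self.own_host:
            self.external.append(href)


def external_links(html: str, own_host: str) -> list[str]:
    collector = ExternalLinkCollector(own_host)
    collector.feed(html)
    collector.close()
    return collector.external


if __name__ == "__main__":
    page = (
        '<a href="/about">About</a>'
        '<a href="https://partner.example.org/policy">Policy</a>'
    )
    print(external_links(page, "www.example.com"))
```

The host comparison is deliberately strict, so a subdomain of the same site would count as external here; a real check would need to decide how to treat those.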

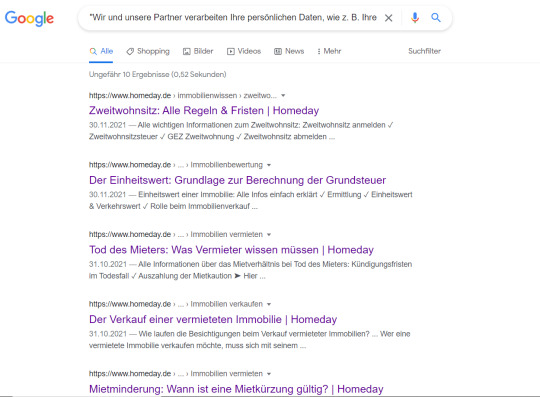

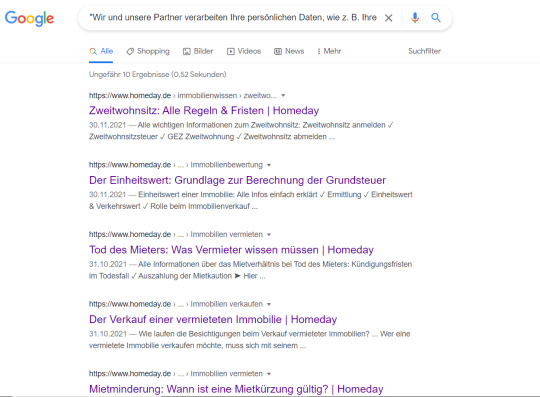

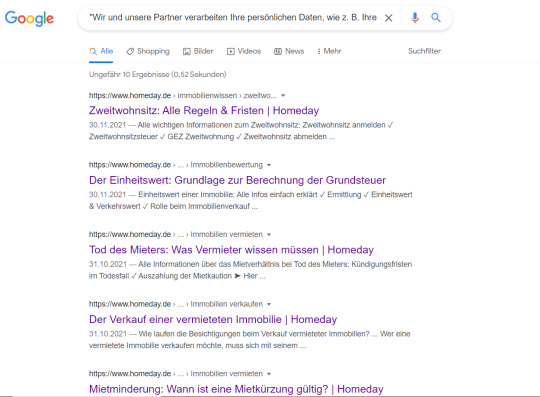

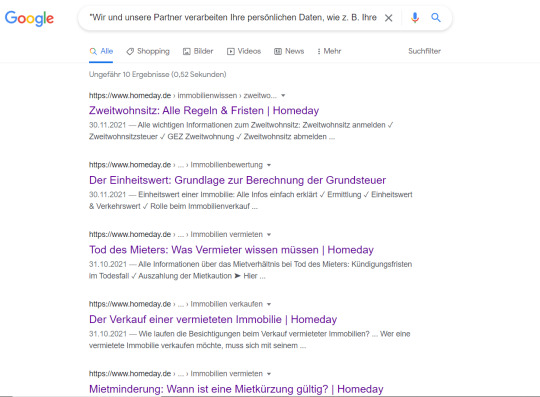

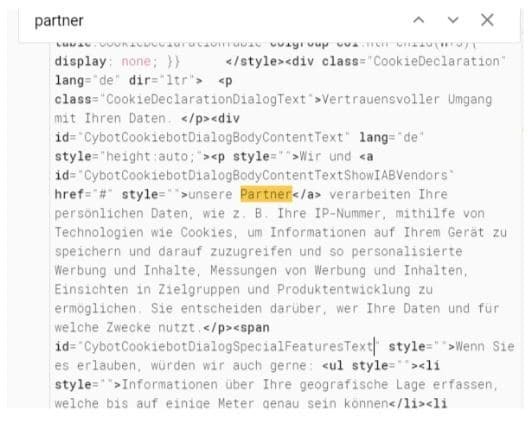

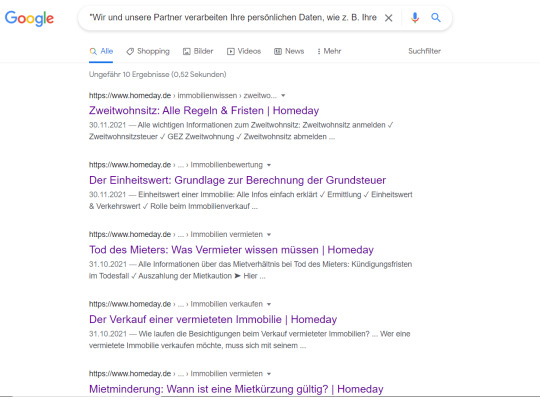

After checking which external links had been added to the migrated pages, we saw that Google was reading and indexing the cookie consent form for all migrated pages. We performed a site search, checking for the content of the cookie consent, and saw our theory confirmed.

This led to several problems:

Tons of duplicated content were created for each page because the cookie consent form was being indexed.

The content size of the migrated pages drastically increased. This is a problem as pages with a very large amount of body content may not be fully indexed.

The number of external outgoing links drastically increased.

Our snippets suddenly showed a date on the SERPs. This would suggest a blog or news article, while most articles on Homeday are evergreen content. In addition, due to the date appearing, the meta description was cut off.

But why was this happening? According to our service provider, Cookiebot, search engine crawlers access websites simulating full consent. Hence, they gain access to all content, and copy from the cookie consent banners is not indexed by the crawler.

So why wasn't this the case for the migrated pages? We crawled and rendered the pages with different user agents, but still couldn't find a trace of Cookiebot in the source code.

Investigating Google DOMs and searching for a solution

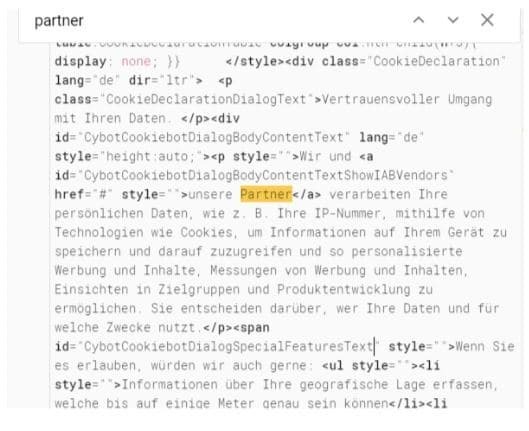

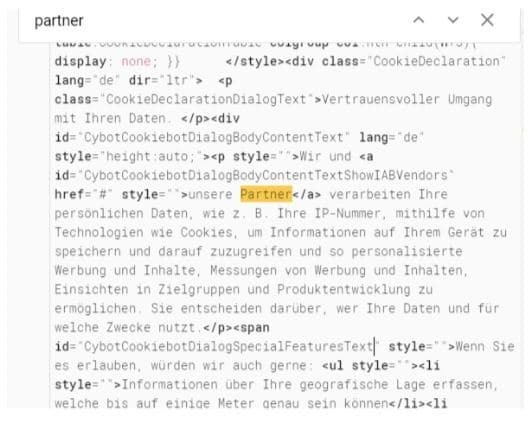

The migrated pages are rendered with dynamic data that comes from Contentful and plugins. The plugins contain just JavaScript code, and sometimes they come from a partner. One of these plugins came from our cookie manager partner and fetches the cookie consent HTML from outside our code base. That is why we didn't find a trace of the cookie consent HTML code in the HTML source files in the first place. We did see a larger DOM, but attributed that to the larger, more complex DOM that Nuxt produces by default. Nuxt is a JavaScript framework that we work with.

To validate that Google was reading the copy from the cookie consent banner, we used the URL inspection tool of Google Search Console. We compared the DOM of a migrated page with the DOM of a non-migrated page. Within the DOM of a migrated page, we finally found the cookie consent content.
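The kind of comparison described above can be approximated offline. The following is an illustrative sketch, not the output we saw in Search Console: it assumes the rendered HTML of both pages has been saved as strings and lists the visible text that appears only on the migrated page. All names and the sample markup are invented.

```python
from html.parser import HTMLParser


class TextCollector(HTMLParser):
    """Collects visible text chunks, ignoring <script> and <style> content."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = " ".join(data.split())
        if text and not self._skip_depth:
            self.chunks.append(text)


def visible_text(html: str) -> list[str]:
    collector = TextCollector()
    collector.feed(html)
    collector.close()
    return collector.chunks


def extra_text(migrated_html: str, reference_html: str) -> list[str]:
    """Return text chunks present on the migrated page but not the reference page."""
    reference = set(visible_text(reference_html))
    return [chunk for chunk in visible_text(migrated_html) if chunk not in reference]


if __name__ == "__main__":
    old_page = "<body><h1>Title</h1><p>Body</p></body>"
    new_page = old_page.replace("</body>", "<div>We use cookies</div></body>")
    print(extra_text(new_page, old_page))
```

In practice the two pages differ in their main content as well, so the result would need a manual look to spot the consent copy among the differences.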

Something else that got our attention was the difference between the JavaScript files loaded on our old pages and those loaded on our migrated pages. Our website has two scripts for the cookie consent banner, provided by a third party: one to show the banner and grab the consent (uc) and one that imports the banner content (cd).

The only script loaded on our old pages was uc.js, which is responsible for the cookie consent banner. It is the one script we need on every page to handle user consent. It displays the cookie consent banner without indexing the content and saves the user's decision (whether they agree or disagree to the usage of cookies).

For the migrated pages, aside from uc.js, there was also a cd.js file loading. If we have a page where we want to show more information about our cookies to the user and index the cookie data, then we have to use cd.js. We thought that the two files depended on each other, which is not correct: uc.js can run alone. The cd.js file was the reason why the content of the cookie banner got rendered and indexed.

It took a while to find it because we thought the second file was just a prerequisite for the first one. We determined that simply removing the loaded cd.js file would be the solution.
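A regression check for this kind of issue could look like the sketch below. It assumes the page's HTML is available as a string and that only the file names uc.js and cd.js matter; the script URLs in the sample are placeholders, not the provider's real addresses, and the function names are made up.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class ScriptCollector(HTMLParser):
    """Collects the file names of all external <script src="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.files: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "script":
            return
        src = dict(attrs).get("src")
        if src:
            # Keep only the last path segment, e.g. "uc.js".
            self.files.append(urlparse(src).path.rsplit("/", 1)[-1])


def loaded_scripts(html: str) -> list[str]:
    collector = ScriptCollector()
    collector.feed(html)
    collector.close()
    return collector.files


def unexpected_scripts(html: str, allowed: set[str]) -> list[str]:
    """Return script file names on the page that are not in the allowed set."""
    return [name for name in loaded_scripts(html) if name not in allowed]


if __name__ == "__main__":
    page = (
        '<script src="https://consent.example.com/uc.js"></script>'
        '<script src="https://consent.example.com/abc/cd.js"></script>'
    )
    print(unexpected_scripts(page, allowed={"uc.js"}))
```

Running a check like this against each migrated page before go-live would flag cd.js as soon as it appears alongside uc.js.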

Performance review after implementing the solution

The day we deleted the file, our keyword visibility was at 41.70%, which was still almost 21 percentage points lower than pre-migration.

However, the day after deleting the file, our visibility increased to 50.77%, and the next day it was almost back to normal at 60.11%. The estimated traffic behaved similarly. What a relief!

Conclusion

I can imagine that many SEOs have dealt with tiny issues like this. It seems trivial, but it led to a significant drop in visibility and traffic during the migration. This is why I suggest migrating in waves and blocking enough time for investigating technical errors before and after the migration. Moreover, keeping a close eye on the site's performance in the weeks after the migration is crucial. These are definitely my key takeaways from this migration wave. We completed the second migration wave at the beginning of May 2022, and so far no major bugs have appeared. We'll have two more waves and hope to complete the migration successfully by the end of June 2022.

The performance of the migrated pages is almost back to normal now, and we will continue with the next wave.



Text

Case Study: How the Cookie Monster Ate 22% of Our Visibility

Last year, the team at Homeday — one of the leading property tech companies in Germany — made the decision to migrate to a new content management system (CMS). The goals of the migration were, among other things, increased page speed and creating a state-of-the-art, future-proof website with all the necessary features. One of the main motivators for the migration was to enable content editors to work more freely in creating pages without the help of developers.

After evaluating several CMS options, we decided on Contentful for its modern technology stack, with a superior experience for both editors and developers. From a technical viewpoint, Contentful, as a headless CMS, allows us to choose which rendering strategy we want to use.

We’re currently carrying out the migration in several stages, or waves, to reduce the risk of problems that have a large-scale negative impact. During the first wave, we encountered an issue with our cookie consent, which led to a visibility loss of almost 22% within five days. In this article I'll describe the problems we were facing during this first migration wave and how we resolved them.

Setting up the first test-wave

For the first test-wave we chose 10 SEO pages with high traffic but low conversion rates. We established an infrastructure for reporting and monitoring those 10 pages:

Rank-tracking for most relevant keywords

SEO dashboard (DataStudio, Moz Pro, SEMRush, Search Console, Google Analytics)

Regular crawls

After a comprehensive planning and testing phase, we migrated the first 10 SEO pages to the new CMS in December 2021. Although several challenges occurred during the testing phase (increased loading times, a bigger HTML Document Object Model, etc.), we decided to go live, as we didn't see any major blockers and we wanted to migrate the first test-wave before Christmas.

First performance review

Very excited about achieving the first step of the migration, we took a look at the performance of the migrated pages the next day.

What we saw next really didn't please us.

Overnight, the visibility of tracked keywords for the migrated pages dropped from 62.35% to 53.59%. We lost 8.76 percentage points of visibility in one day!

As a result of this steep drop in rankings, we conducted another extensive round of testing. Among other things, we tested for coverage and indexing issues, whether all meta tags were included, structured data, internal links, page speed, and mobile friendliness.

Second performance review

All the articles had a cache date after the migration, and the content was fully indexed and being read by Google. Moreover, we could exclude several migration risk factors (change of URLs, content, meta tags, layout, etc.) as sources of error, as there hadn't been any changes.

Visibility of our tracked keywords suffered another drop to 40.60% over the next few days, making it a total drop of almost 22 percentage points within five days. The drop was also clearly visible in comparison to the competition for the tracked keywords (shown here as "estimated traffic"), and visibility looked analogous.
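To make the arithmetic behind these figures explicit, here is a minimal sketch using only the visibility values quoted above. The helper names `point_drop` and `relative_drop` are made up for illustration; the relative figure is derived here and is not a number reported by the rank tracker.

```python
# Visibility figures quoted in the article (percent of tracked keywords).
before_migration = 62.35
after_one_day = 53.59
after_five_days = 40.60


def point_drop(start: float, end: float) -> float:
    """Absolute change, in percentage points."""
    return round(start - end, 2)


def relative_drop(start: float, end: float) -> float:
    """Change relative to the starting value, in percent."""
    return round((start - end) / start * 100, 2)


print(point_drop(before_migration, after_one_day))       # 8.76
print(point_drop(before_migration, after_five_days))     # 21.75
print(relative_drop(before_migration, after_five_days))  # 34.88
```

The "almost 22%" in the text is therefore a difference in percentage points; measured against the starting visibility, the loss was roughly a third.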

As other migration risk factors and Google updates had been excluded as sources of error, it had to be a technical issue. Too much JavaScript, low Core Web Vitals scores, or a larger, more complex Document Object Model (DOM) could all be potential causes. The DOM represents a page as objects and nodes so that programming languages like JavaScript can interact with the page and change, for example, its style, structure, and content.
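As a small illustration of what "objects and nodes" means, the sketch below parses a tiny, hypothetical page with Python's standard `xml.dom.minidom` module, counts its element nodes, and then appends one more node the way a script might after the page has loaded. The markup and the `injected-widget` id are invented for the example and are not taken from the Homeday pages.

```python
from xml.dom import minidom

# Hypothetical, heavily simplified page markup (an assumption for this demo).
PAGE = "<html><body><h1>Guide</h1><p>Evergreen content.</p></body></html>"


def count_elements(node) -> int:
    """Count the element nodes in the subtree rooted at `node`."""
    own = 1 if node.nodeType == node.ELEMENT_NODE else 0
    return own + sum(count_elements(child) for child in node.childNodes)


doc = minidom.parseString(PAGE)
before = count_elements(doc)

# Code can change the tree after parsing: append a new element to <body>.
body = doc.getElementsByTagName("body")[0]
widget = doc.createElement("div")
widget.setAttribute("id", "injected-widget")
widget.appendChild(doc.createTextNode("Text added by a script"))
body.appendChild(widget)

after = count_elements(doc)
print(before, after)  # 4 5
```

The point of the example is that the tree a crawler renders can contain more nodes than the HTML source that was originally delivered.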

Following the cookie crumbs

We had to identify issues as quickly as possible, fix bugs fast, and minimize further negative effects and traffic drops. We finally got the first real hint of a possible technical cause when one of our tools showed us that the number of pages with high external linking, as well as the number of pages with maximum content size, had gone up. It is important that pages don't exceed the maximum content size, as pages with a very large amount of body content may not be fully indexed. As for high external linking, it is important that all external links are trustworthy and relevant for users. It was suspicious that the number of external links had gone up so suddenly.

Both metrics were disproportionately high compared to the number of pages we migrated. But why?

After checking which external links had been added to the migrated pages, we saw that Google was reading and indexing the cookie consent form for all migrated pages. We performed a site search, checking for the content of the cookie consent, and saw our theory confirmed.

This led to several problems:

A large amount of duplicate content was created for each page because the cookie consent form was being indexed.

The content size of the migrated pages drastically increased. This is a problem as pages with a very large amount of body content may not be fully indexed.

The number of external outgoing links drastically increased.

Our snippets suddenly showed a date on the SERPs. This would suggest a blog or news article, while most articles on Homeday are evergreen content. In addition, due to the date appearing, the meta description was cut off.
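The two signals that pointed to the problem, body content size and the number of outgoing external links, can be approximated per page with a few lines of standard-library Python. This is only a sketch under assumptions: it is not the crawler used in this case study, the class and function names are hypothetical, and the sample markup and host names are placeholders.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class PageStats(HTMLParser):
    """Collect visible text size and outgoing external links for one page."""

    def __init__(self, own_host):
        super().__init__()
        self.own_host = own_host
        self.external_links = []
        self.text_chars = 0
        self._skip_depth = 0  # text inside <script>/<style> is not body copy

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "a":
            href = dict(attrs).get("href") or ""
            host = urlparse(href).netloc
            # Relative links have no host and count as internal.
            if host and host != self.own_host:
                self.external_links.append(href)

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.text_chars += len(data.strip())


def page_stats(html, own_host):
    """Return (visible text characters, number of external links)."""
    parser = PageStats(own_host)
    parser.feed(html)
    parser.close()
    return parser.text_chars, len(parser.external_links)


# Placeholder markup: the same page without and with an injected banner.
clean = '<p>Evergreen guide.</p><a href="/kontakt">Contact</a>'
with_banner = clean + (
    '<div>We use cookies. '
    '<a href="https://vendor.example/privacy">Privacy</a></div>'
)
print(page_stats(clean, "www.example.com"))
print(page_stats(with_banner, "www.example.com"))
```

Running such a check on the rendered HTML of a migrated and a non-migrated page makes a jump in either number easy to spot, although it says nothing yet about where the extra markup comes from.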

But why was this happening? According to our service provider, Cookiebot, search engine crawlers access websites simulating full consent. Hence, they gain access to all content, and copy from the cookie consent banners is not indexed by the crawler.

So why wasn't this the case for the migrated pages? We crawled and rendered the pages with different user agents, but still couldn't find a trace of Cookiebot in the source code.

Investigating Google DOMs and searching for a solution

The migrated pages are rendered with dynamic data that comes from Contentful and plugins. The plugins contain just JavaScript code, and sometimes they come from a partner. One of these plugins came from our cookie manager partner; it fetches the cookie consent HTML from outside our code base. That is why we didn't find a trace of the cookie consent HTML code in the HTML source files in the first place. We did see a larger DOM but attributed that to Nuxt's default, larger and more complex DOM. Nuxt is the JavaScript framework we work with.

To validate that Google was reading the copy from the cookie consent banner, we used the URL inspection tool of Google Search Console. We compared the DOM of a migrated page with the DOM of a non-migrated page. Within the DOM of a migrated page, we finally found the cookie consent content.

Something else that caught our attention was the difference between the JavaScript files loaded on our old pages and those loaded on our migrated pages. Our website has two scripts for the cookie consent banner, provided by a third party: one to show the banner and collect the consent (uc) and one that imports the banner content (cd).

The only script loaded on our old pages was uc.js, which is responsible for the cookie consent banner. It is the one script we need on every page to handle user consent. It displays the cookie consent banner without indexing the content and saves the user's decision (whether they agree or disagree to the use of cookies).

For the migrated pages, aside from uc.js, there was also a cd.js file loading. The cd.js file is only needed on a page where we want to show users more information about our cookies and have the cookie data indexed. We had assumed that the two files depended on each other, which was not correct: uc.js can run alone. The cd.js file was the reason why the content of the cookie banner got rendered and indexed.

It took a while to find because we thought the second file was just a prerequisite for the first one. We determined that simply removing the loaded cd.js file would be the solution.
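A check like the one below could catch this kind of regression before go-live. It is a minimal sketch, assuming the page's HTML (ideally the rendered HTML, for example copied from the URL inspection tool) is available as a string; the class name, the function name and the `consent.example` URLs are hypothetical placeholders, and only the file names uc.js and cd.js come from the article.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class ScriptCollector(HTMLParser):
    """Collect the file name of every external <script src=...> on a page."""

    def __init__(self):
        super().__init__()
        self.script_files = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            src = dict(attrs).get("src")
            if src:
                # Keep only the last path segment, e.g. ".../uc.js" -> "uc.js".
                path = urlparse(src).path
                self.script_files.append(path.rsplit("/", 1)[-1])


def loads_banner_content_script(html, watched="cd.js"):
    """Return True if the page loads the script that imports banner content."""
    collector = ScriptCollector()
    collector.feed(html)
    collector.close()
    return watched in collector.script_files


# Placeholder markup standing in for an old page and a migrated page.
old_page = '<script src="https://consent.example/uc.js"></script>'
migrated_page = old_page + '<script src="https://consent.example/abc/cd.js"></script>'

print(loads_banner_content_script(old_page))       # False
print(loads_banner_content_script(migrated_page))  # True
```

Comparing the collected script lists of an old and a migrated page side by side would also have shown directly that only the migrated pages loaded the second file.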

Performance review after implementing the solution

The day we deleted the file, our keyword visibility was at 41.70%, which was still roughly 21 percentage points lower than pre-migration.

However, the day after deleting the file, our visibility increased to 50.77%, and the next day it was almost back to normal at 60.11%. The estimated traffic behaved similarly. What a relief!

Conclusion

I can imagine that many SEOs have dealt with tiny issues like this. It seems trivial, but it led to a significant drop in visibility and traffic during the migration. This is why I suggest migrating in waves and blocking enough time to investigate technical errors before and after the migration. Moreover, keeping a close eye on the site's performance in the weeks after the migration is crucial. These are definitely my key takeaways from this migration wave. We completed the second migration wave at the beginning of May 2022, and so far no major bugs have appeared. We’ll have two more waves and hope to complete the migration successfully by the end of June 2022.

The performance of the migrated pages is almost back to normal now, and we will continue with the next wave.

https://ift.tt/Aero3fG

0 notes

Text

Case Study: How the Cookie Monster Ate 22% of Our Visibility

Last year, the team at Homeday — one of the leading property tech companies in Germany — made the decision to migrate to a new content management system (CMS). The goals of the migration were, among other things, increased page speed and creating a state-of-the-art, future-proof website with all the necessary features. One of the main motivators for the migration was to enable content editors to work more freely in creating pages without the help of developers.

After evaluating several CMS options, we decided on Contentful for its modern technology stack, with a superior experience for both editors and developers. From a technical viewpoint, Contentful, as a headless CMS, allows us to choose which rendering strategy we want to use.

We’re currently carrying out the migration in several stages, or waves, to reduce the risk of problems that have a large-scale negative impact. During the first wave, we encountered an issue with our cookie consent, which led to a visibility loss of almost 22% within five days. In this article I'll describe the problems we were facing during this first migration wave and how we resolved them.

Setting up the first test-wave

For the first test-wave we chose 10 SEO pages with high traffic but low conversion rates. We established an infrastructure for reporting and monitoring those 10 pages:

Rank-tracking for most relevant keywords

SEO dashboard (DataStudio, Moz Pro, SEMRush, Search Console, Google Analytics)

Regular crawls

After a comprehensive planning and testing phase, we migrated the first 10 SEO pages to the new CMS in December 2021. Although several challenges occurred during the testing phase (increased loading times, bigger HTML Document Object Model, etc.) we decided to go live as we didn't see big blocker and we wanted to migrate the first testwave before christmas.

First performance review

Very excited about achieving the first step of the migration, we took a look at the performance of the migrated pages on the next day.

What we saw next really didn't please us.

Overnight, the visibility of tracked keywords for the migrated pages reduced from 62.35% to 53.59% — we lost 8.76% of visibility in one day!

As a result of this steep drop in rankings, we conducted another extensive round of testing. Among other things we tested for coverage/ indexing issues, if all meta tags were included, structured data, internal links, page speed and mobile friendliness.

Second performance review

All the articles had a cache date after the migration and the content was fully indexed and being read by Google. Moreover, we could exclude several migration risk factors (change of URLs, content, meta tags, layout, etc.) as sources of error, as there hasn't been any changes.

Visibility of our tracked keywords suffered another drop to 40.60% over the next few days, making it a total drop of almost 22% within five days. This was also clearly shown in comparison to the competition of the tracked keywords (here "estimated traffic"), but the visibility looked analogous.

As other migration risk factors plus Google updates had been excluded as sources of errors, it definitely had to be a technical issue. Too much JavaScript, low Core Web Vitals scores, or a larger, more complex Document Object Model (DOM) could all be potential causes. The DOM represents a page as objects and nodes so that programming languages like JavaScript can interact with the page and change for example style, structure and content.

Following the cookie crumbs

We had to identify issues as quickly as possible and do quick bug-fixing and minimize more negative effects and traffic drops. We finally got the first real hint of which technical reason could be the cause when one of our tools showed us that the number of pages with high external linking, as well as the number of pages with maximum content size, went up. It is important that pages don't exceed the maximum content size as pages with a very large amount of body content may not be fully indexed. Regarding the high external linking it is important that all external links are trustworthy and relevant for users. It was suspicious that the number of external links went up just like this.

Both metrics were disproportionately high compared to the number of pages we migrated. But why?

After checking which external links had been added to the migrated pages, we saw that Google was reading and indexing the cookie consent form for all migrated pages. We performed a site search, checking for the content of the cookie consent, and saw our theory confirmed:

This led to several problems:

There was tons of duplicated content created for each page due to indexing the cookie consent form.

The content size of the migrated pages drastically increased. This is a problem as pages with a very large amount of body content may not be fully indexed.

The number of external outgoing links drastically increased.

Our snippets suddenly showed a date on the SERPs. This would suggest a blog or news article, while most articles on Homeday are evergreen content. In addition, due to the date appearing, the meta description was cut off.

But why was this happening? According to our service provider, Cookiebot, search engine crawlers access websites simulating a full consent. Hence, they gain access to all content and copy from the cookie consent banners are not indexed by the crawler.

So why wasn't this the case for the migrated pages? We crawled and rendered the pages with different user agents, but still couldn't find a trace of the Cookiebot in the source code.

Investigating Google DOMs and searching for a solution

The migrated pages are rendered with dynamic data that comes from Contentful and plugins. The plugins contain just JavaScript code, and sometimes they come from a partner. One of these plugins was the cookie manager partner, which fetches the cookie consent HTML from outside our code base. That is why we didn't find a trace of the cookie consent HTML code in the HTML source files in the first place. We did see a larger DOM but traced that back to Nuxt's default, more complex, larger DOM. Nuxt is a JavaScript framework that we work with.

To validate that Google was reading the copy from the cookie consent banner, we used the URL inspection tool of Google Search Console. We compared the DOM of a migrated page with the DOM of a non-migrated page. Within the DOM of a migrated page, we finally found the cookie consent content:

Something else that got our attention were the JavaScript files loaded on our old pages versus the files loaded on our migrated pages. Our website has two scripts for the cookie consent banner, provided by a 3rd party: one to show the banner and grab the consent (uc) and one that imports the banner content (cd).

The only script loaded on our old pages was uc.js, which is responsible for the cookie consent banner. It is the one script we need in every page to handle user consent. It displays the cookie consent banner without indexing the content and saves the user's decision (if they agree or disagree to the usage of cookies).

For the migrated pages, aside from uc.js, there was also a cd.js file loading. If we have a page, where we want to show more information about our cookies to the user and index the cookie data, then we have to use the cd.js. We thought that both files are dependent on each other, which is not correct. The uc.js can run alone. The cd.js file was the reason why the content of the cookie banner got rendered and indexed.

It took a while to find it because we thought the second file was just a pre-requirement for the first one. We determined that simply removing the loaded cd.js file would be the solution.

Performance review after implementing the solution

The day we deleted the file, our keyword visibility was at 41.70%, which was still 21% lower than pre-migration.

However, the day after deleting the file, our visibility increased to 50.77%, and the next day it was almost back to normal at 60.11%. The estimated traffic behaved similarly. What a relief!

Conclusion

I can imagine that many SEOs have dealt with tiny issues like this. It seems trivial, but led to a significant drop in visibility and traffic during the migration. This is why I suggest migrating in waves and blocking enough time for investigating technical errors before and after the migration. Moreover, keeping a close look at the site's performance within the weeks after the migration is crucial. These are definitely my key takeaways from this migration wave. We just completed the second migration wave in the beginning of May 2022 and I can state that so far no major bugs appeared. We’ll have two more waves and complete the migration hopefully successfully by the end of June 2022.

The performance of the migrated pages is almost back to normal now, and we will continue with the next wave.

0 notes

Text

Case Study: How the Cookie Monster Ate 22% of Our Visibility

Last year, the team at Homeday — one of the leading property tech companies in Germany — made the decision to migrate to a new content management system (CMS). The goals of the migration were, among other things, increased page speed and creating a state-of-the-art, future-proof website with all the necessary features. One of the main motivators for the migration was to enable content editors to work more freely in creating pages without the help of developers.

After evaluating several CMS options, we decided on Contentful for its modern technology stack, with a superior experience for both editors and developers. From a technical viewpoint, Contentful, as a headless CMS, allows us to choose which rendering strategy we want to use.

We’re currently carrying out the migration in several stages, or waves, to reduce the risk of problems that have a large-scale negative impact. During the first wave, we encountered an issue with our cookie consent, which led to a visibility loss of almost 22% within five days. In this article I'll describe the problems we were facing during this first migration wave and how we resolved them.

Setting up the first test-wave

For the first test-wave we chose 10 SEO pages with high traffic but low conversion rates. We established an infrastructure for reporting and monitoring those 10 pages:

Rank-tracking for most relevant keywords

SEO dashboard (DataStudio, Moz Pro, SEMRush, Search Console, Google Analytics)

Regular crawls

After a comprehensive planning and testing phase, we migrated the first 10 SEO pages to the new CMS in December 2021. Although several challenges occurred during the testing phase (increased loading times, bigger HTML Document Object Model, etc.) we decided to go live as we didn't see big blocker and we wanted to migrate the first testwave before christmas.

First performance review

Very excited about achieving the first step of the migration, we took a look at the performance of the migrated pages on the next day.

What we saw next really didn't please us.

Overnight, the visibility of tracked keywords for the migrated pages reduced from 62.35% to 53.59% — we lost 8.76% of visibility in one day!

As a result of this steep drop in rankings, we conducted another extensive round of testing. Among other things we tested for coverage/ indexing issues, if all meta tags were included, structured data, internal links, page speed and mobile friendliness.

Second performance review

All the articles had a cache date after the migration and the content was fully indexed and being read by Google. Moreover, we could exclude several migration risk factors (change of URLs, content, meta tags, layout, etc.) as sources of error, as there hasn't been any changes.

Visibility of our tracked keywords suffered another drop to 40.60% over the next few days, making it a total drop of almost 22% within five days. This was also clearly shown in comparison to the competition of the tracked keywords (here "estimated traffic"), but the visibility looked analogous.

As other migration risk factors plus Google updates had been excluded as sources of errors, it definitely had to be a technical issue. Too much JavaScript, low Core Web Vitals scores, or a larger, more complex Document Object Model (DOM) could all be potential causes. The DOM represents a page as objects and nodes so that programming languages like JavaScript can interact with the page and change for example style, structure and content.

Following the cookie crumbs

We had to identify issues as quickly as possible and do quick bug-fixing and minimize more negative effects and traffic drops. We finally got the first real hint of which technical reason could be the cause when one of our tools showed us that the number of pages with high external linking, as well as the number of pages with maximum content size, went up. It is important that pages don't exceed the maximum content size as pages with a very large amount of body content may not be fully indexed. Regarding the high external linking it is important that all external links are trustworthy and relevant for users. It was suspicious that the number of external links went up just like this.

Both metrics were disproportionately high compared to the number of pages we migrated. But why?

After checking which external links had been added to the migrated pages, we saw that Google was reading and indexing the cookie consent form for all migrated pages. We performed a site search, checking for the content of the cookie consent, and saw our theory confirmed:

This led to several problems:

There was tons of duplicated content created for each page due to indexing the cookie consent form.

The content size of the migrated pages drastically increased. This is a problem as pages with a very large amount of body content may not be fully indexed.

The number of external outgoing links drastically increased.

Our snippets suddenly showed a date on the SERPs. This would suggest a blog or news article, while most articles on Homeday are evergreen content. In addition, due to the date appearing, the meta description was cut off.

But why was this happening? According to our service provider, Cookiebot, search engine crawlers access websites simulating a full consent. Hence, they gain access to all content and copy from the cookie consent banners are not indexed by the crawler.

So why wasn't this the case for the migrated pages? We crawled and rendered the pages with different user agents, but still couldn't find a trace of the Cookiebot in the source code.

Investigating Google DOMs and searching for a solution

The migrated pages are rendered with dynamic data that comes from Contentful and plugins. The plugins contain just JavaScript code, and sometimes they come from a partner. One of these plugins was the cookie manager partner, which fetches the cookie consent HTML from outside our code base. That is why we didn't find a trace of the cookie consent HTML code in the HTML source files in the first place. We did see a larger DOM but traced that back to Nuxt's default, more complex, larger DOM. Nuxt is a JavaScript framework that we work with.

To validate that Google was reading the copy from the cookie consent banner, we used the URL inspection tool in Google Search Console. We compared the DOM of a migrated page with the DOM of a non-migrated page. Within the DOM of a migrated page, we finally found the cookie consent content.
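A DOM comparison like this can also be scripted. The sketch below assumes you have saved the rendered HTML of both pages (for example, copied from the URL inspection tool) and lists the text fragments that appear only in the migrated page. The function names and the sample markup are hypothetical.

```python
from html.parser import HTMLParser


class TextFragments(HTMLParser):
    """Gathers non-empty visible text fragments, ignoring scripts and styles."""

    def __init__(self):
        super().__init__()
        self.fragments = []
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = " ".join(data.split())  # collapse whitespace
        if text and not self._skip_depth:
            self.fragments.append(text)


def visible_fragments(html):
    parser = TextFragments()
    parser.feed(html)
    parser.close()
    return parser.fragments


def only_in_migrated(migrated_html, legacy_html):
    """Text fragments present in the migrated DOM but not in the legacy DOM."""
    legacy = set(visible_fragments(legacy_html))
    return [f for f in visible_fragments(migrated_html) if f not in legacy]
```

If consent copy such as "This website uses cookies" shows up in the result, the banner text is part of the rendered DOM that the crawler sees.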

Something else that got our attention was the difference between the JavaScript files loaded on our old pages and those loaded on our migrated pages. Our website has two scripts for the cookie consent banner, provided by a third party: one to show the banner and collect the consent (uc) and one that imports the banner content (cd).

The only script loaded on our old pages was uc.js, which is responsible for the cookie consent banner. It is the one script we need in every page to handle user consent. It displays the cookie consent banner without indexing the content and saves the user's decision (if they agree or disagree to the usage of cookies).

On the migrated pages, a cd.js file was loading in addition to uc.js. cd.js is only needed on a page where we want to show users more information about our cookies and have the cookie data indexed. We had assumed that the two files depend on each other, which is not correct: uc.js can run alone. The cd.js file was the reason the content of the cookie banner got rendered and indexed.

It took a while to find because we thought the second file was just a prerequisite for the first one. We determined that simply removing the cd.js file from the migrated pages would be the solution.
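A check for this kind of stray script is easy to automate. The following sketch scans a page's HTML for script tags and reports whether files named uc.js and cd.js are loaded. The `consent_scripts` helper and the example host are hypothetical, and scripts injected at runtime only show up if you pass in the rendered HTML rather than the raw source.

```python
import posixpath
from html.parser import HTMLParser
from urllib.parse import urlparse


class ScriptSources(HTMLParser):
    """Collects the src attribute of every external <script> tag."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            src = dict(attrs).get("src")
            if src:  # inline scripts have no src
                self.sources.append(src)


def consent_scripts(html, names=("uc.js", "cd.js")):
    """Map each script file name to True if the page loads it."""
    parser = ScriptSources()
    parser.feed(html)
    parser.close()
    found = {name: False for name in names}
    for src in parser.sources:
        # Compare only the file name, ignoring host, path and query string.
        filename = posixpath.basename(urlparse(src).path)
        if filename in found:
            found[filename] = True
    return found
```

On a page that only needs the consent banner, the expected result is `{"uc.js": True, "cd.js": False}`; anything else is worth a closer look.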

Performance review after implementing the solution

The day we deleted the file, our keyword visibility was at 41.70%, which was still almost 21 percentage points lower than the pre-migration level of 62.35%.

However, the day after deleting the file, our visibility increased to 50.77%, and the next day it was almost back to normal at 60.11%. The estimated traffic behaved similarly. What a relief!
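The visibility figures in this article are differences in percentage points. As a small worked example, the sketch below computes both the percentage-point change and the relative change between two visibility values; `visibility_change` is a hypothetical helper, not part of any rank-tracking tool.

```python
def visibility_change(before, after):
    """Return (change in percentage points, relative change in percent)."""
    points = after - before
    relative = points / before * 100
    return round(points, 2), round(relative, 2)


# Pre-migration level versus the day the file was deleted.
print(visibility_change(62.35, 41.70))  # (-20.65, -33.12)

# Day of the fix versus two days later.
print(visibility_change(41.70, 60.11))
```

The first call shows that a drop of about 21 percentage points corresponds to losing roughly a third of the original visibility, which is why it matters to state which of the two measures is meant.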

Conclusion

I can imagine that many SEOs have dealt with tiny issues like this. It seems trivial, but it led to a significant drop in visibility and traffic during the migration. This is why I suggest migrating in waves and blocking enough time for investigating technical errors before and after the migration. Moreover, keeping a close eye on the site's performance in the weeks after the migration is crucial. These are definitely my key takeaways from this migration wave. We completed the second migration wave at the beginning of May 2022, and so far no major bugs have appeared. We'll have two more waves and hope to complete the migration successfully by the end of June 2022.

The performance of the migrated pages is almost back to normal now, and we will continue with the next wave.

0 notes

Photo

Are you looking to quickly replicate a page in your Data Studio account? With just a few simple steps, you can duplicate any page in a matter of seconds. First, log in to your Data Studio account and navigate to the page that you would like to duplicate. Next, click on the three-dot icon in the upper right corner of the page to reveal a dropdown menu. From here, select the "Duplicate page" option. A new page will be created with the same layout and design as the original page, but with a unique name (e.g. "Page 1 - Copy"). Now that you have duplicated the page, you can make any necessary edits or updates without affecting the original page. This is especially helpful when creating multiple versions of a report or dashboard. And there you have it! Duplicating pages in Data Studio is quick and easy. Looking for more tips and tricks to make the most out of your data analysis? Check out Cratos.ai. Our platform offers a wide range of data management and visualization services to help you transform the way you use data. #datastudio #datamanagement #visualization #cratosai 🚀📈

0 notes

