#datavirtualization

Text

The Difference Between Virtualization And Cloud Computing

Have you seen P.S. I Love You (2007)? If not, you’re missing out! It’s a heartwarming movie with a few tears here and there, but it's totally worth it.

The story follows Holly, who’s trying to pick up the pieces after her husband, Gerry, passes away. But before he died, Gerry left her a series of letters. Each one gives her a task, something to help her move forward. It’s like he's still with her, guiding her, even though he’s gone.

Just like Gerry’s letters brought Holly comfort, technologies like virtualization and cloud computing give us new ways to stay connected and get things done, even from afar. Virtualization lets you run several virtual machines, each with its own operating system and programs, on one physical computer, while cloud computing gives you on-demand access to huge amounts of computing power without owning the hardware.

These technologies are like modern love letters, offering the support you need to do more with what you have. So let’s explore how they differ and what they can do for you!

Visit Here - https://www.techdogs.com/td-articles/trending-stories/the-difference-between-virtualization-and-cloud-computing

Text

Data Virtualization in Modern AI and Analytics Architectures

Data virtualization brings data together for seamless analytics and AI.

What is data virtualization?

Before building any artificial intelligence (AI) application, you need to integrate your data. There are several ways to approach this, but data virtualization helps organizations build and deploy applications more quickly.

With the help of data virtualization, organizations can unleash the full potential of their information, obtaining real-time artificial intelligence insights for innovative applications such as demand forecasting, fraud detection, and predictive maintenance.

Even with significant investments in technology and databases, many businesses find it difficult to derive more value from their data. This gap is filled by data virtualization, which enables businesses to leverage their current data sources for AI and analytics projects with efficiency and flexibility.

Acting as a bridge, data virtualization lets a platform access and present data from external source systems whenever it is needed. Data management is centralized and streamlined without physically storing the data on the platform. By placing a virtual layer between users and data sources, organizations can manage and access data without copying it or moving it from its original location.
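To make the idea of a virtual layer concrete, here is a minimal Python sketch, assuming plain in-memory lists stand in for external source systems. The `VirtualLayer` class, its `register`/`query` methods, and the sample data are hypothetical and not the API of any product. The point is that the layer stores only how to reach each source and reads rows at query time, so a change at the source shows up on the next query without a copy step.

```python
from typing import Callable, Dict, Iterable, List, Optional

Row = Dict[str, object]


class VirtualLayer:
    """Hypothetical virtual layer: maps logical table names to fetch
    functions that read from the source system on every query."""

    def __init__(self) -> None:
        self._sources: Dict[str, Callable[[], Iterable[Row]]] = {}

    def register(self, name: str, fetch: Callable[[], Iterable[Row]]) -> None:
        # Only the access path is stored; no rows are copied into the layer.
        self._sources[name] = fetch

    def query(self, name: str, where: Optional[Callable[[Row], bool]] = None) -> List[Row]:
        # Rows are pulled from the source at query time, then filtered.
        rows = self._sources[name]()
        return [row for row in rows if where is None or where(row)]


# Hypothetical stand-ins for two external systems (e.g. a CRM and an ERP).
crm_system = [{"id": 1, "name": "Acme"}, {"id": 2, "name": "Globex"}]
erp_system = [{"customer_id": 1, "open_orders": 3}]

layer = VirtualLayer()
layer.register("customers", lambda: crm_system)
layer.register("orders", lambda: erp_system)

print(layer.query("customers", where=lambda r: r["id"] == 1))
# A new row at the source is visible on the next query, with no re-copy step.
erp_system.append({"customer_id": 2, "open_orders": 1})
print(len(layer.query("orders")))  # 2
```

A real platform would push filters down to the source (for example, into a SQL `WHERE` clause) instead of filtering in Python; that optimization is left out to keep the sketch short.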

Why go with data virtualization?

Data virtualization removes the need for physical duplication or migration, which speeds up combining data from several sources. Fewer copies mean fewer chances for errors or data loss, and the time and cost of data integration drop sharply.

Organizations get a centralized view of their data, no matter where it is stored.

Dismantling data silos: using data virtualization to support machine learning breakthroughs

AI and advanced analytics tools have transformed large-scale business operations and decision-making. Data virtualization acts as a central hub that integrates real-time data streams, such as equipment logs and sensor data, and eliminates data silos and fragmentation.

Through data virtualization, real-time data is integrated with historical data from the extensive software suites used for tasks such as customer relationship management (CRM) and enterprise resource planning (ERP). Depending on the suite, this historical data offers insight into maintenance schedules, asset performance, or customer behavior.

By integrating real-time and historical data from multiple sources, data virtualization gives a complete view of an organization’s operational data environment. This holistic approach helps firms improve processes, make data-driven decisions, and gain a competitive edge.
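A minimal sketch of what combining real-time and historical data can look like for predictive maintenance, assuming a live sensor feed and a maintenance history that share an `asset_id` key. All names and values here are hypothetical; in a virtualized setup both inputs would be read through the virtual layer rather than defined inline.

```python
from datetime import date
from typing import Dict, List, Optional

# Hypothetical real-time feed: latest sensor reading per asset.
live_readings: List[Dict] = [
    {"asset_id": "pump-1", "temperature_c": 81.5},
    {"asset_id": "pump-2", "temperature_c": 62.0},
]

# Hypothetical historical records, e.g. from an ERP maintenance module.
maintenance_history: Dict[str, date] = {
    "pump-1": date(2023, 1, 10),
    "pump-2": date(2024, 3, 2),
}


def unified_view(readings: List[Dict], history: Dict[str, date], today: date) -> List[Dict]:
    """Join each live reading with the asset's last service date."""
    view = []
    for reading in readings:
        last_service: Optional[date] = history.get(reading["asset_id"])
        # None when the asset has no recorded maintenance.
        days_since = (today - last_service).days if last_service else None
        view.append({**reading, "last_service": last_service, "days_since_service": days_since})
    return view


for row in unified_view(live_readings, maintenance_history, today=date(2024, 6, 1)):
    print(row["asset_id"], row["temperature_c"], row["days_since_service"])
```

A hot pump that has also gone a long time without service is the kind of combined signal neither source shows on its own.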

With the rise of generative AI chatbots, foundation models are now being applied to this vast combined data set. By sifting through the data to find hidden trends, patterns, and correlations, these models provide insights that help advanced analytics forecast a variety of outcomes. These forecasts can anticipate market trends and customer demand, spot potential business opportunities, identify and prevent system problems and breakdowns before they happen, and optimize maintenance schedules for maximum uptime and efficiency.

Design considerations for virtualized data platforms

Real-time analysis and latency

Problem: Direct access to stored data usually results in lower latency than virtualized data retrieval. This might cause problems for real-time predictive maintenance analysis, as prompt insights are essential.

Design considerations: To provide real-time insights and reduce access times to virtualized data, IBM describes a two-pronged strategy. First, examine the network architecture and improve data transfer mechanisms. This may mean using faster protocols, such as UDP (which trades delivery guarantees for lower overhead), for specific data types, or techniques such as network segmentation to reduce congestion. Streamlining data transport shortens the time it takes to retrieve the information you need.

Second, put data refresh procedures in place to keep the analysis dataset sufficiently current. This could involve scheduling batch processes that update data incrementally at regular intervals, balancing the number of updates against the resources they consume. Finding this balance is essential: too many updates can overload systems, while too few leave information out of date and forecasts inaccurate. Combined, these tactics can deliver minimal latency along with a fresh dataset for the best possible analysis.
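One common way to update data incrementally is a high-watermark: remember the newest change timestamp already applied and fetch only rows changed after it. The sketch below assumes each source row carries a hypothetical `id` and `updated_at` (integer epoch seconds). How often `refresh` runs would be set by a batch scheduler and is not shown.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class IncrementalCache:
    """Hypothetical local analysis copy refreshed with a high-watermark
    (the largest `updated_at` value applied so far)."""
    rows: Dict[int, Dict] = field(default_factory=dict)
    watermark: int = 0

    def refresh(self, source: List[Dict]) -> int:
        """Apply only rows changed since the last refresh; return how many."""
        # A real pipeline would push this filter to the source,
        # e.g. "WHERE updated_at > ?", instead of scanning in Python.
        changed = [r for r in source if r["updated_at"] > self.watermark]
        for row in changed:
            self.rows[row["id"]] = row  # upsert by primary key
        if changed:
            self.watermark = max(r["updated_at"] for r in changed)
        return len(changed)


source = [
    {"id": 1, "updated_at": 100, "value": "a"},
    {"id": 2, "updated_at": 105, "value": "b"},
]
cache = IncrementalCache()
print(cache.refresh(source))  # 2: first run loads everything
source.append({"id": 1, "updated_at": 110, "value": "a2"})
print(cache.refresh(source))  # 1: only the changed row is applied
```

A strict `>` comparison can miss a row committed later with a timestamp equal to the watermark; pipelines often re-read a small overlap window to cover that case.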

Maintaining a balance between update frequency and strain on source systems

Problem: Virtualized data can be queried continuously for real-time insights, but this can overload source systems and degrade their performance. Because AI and predictive analysis rely on regular data updates, this is a serious risk.

Design considerations: Plan carefully how the platform retrieves data, so that query frequency suits your predictive analysis and reporting without overloading the sources. This means retrieving only the most important data points and possibly using data replication technologies to enable real-time access from several sources. In addition, to improve overall model performance and reduce the load on source systems, consider scheduling or batching data retrievals for specific critical periods rather than querying continuously.
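Limiting how often a source is queried can be as simple as caching results for a fixed window. This sketch assumes a hypothetical `fetch` function that queries the source system; the `TTLCache` class and the 60-second window are illustrative choices, not something the article prescribes. An injectable clock makes the behavior easy to check.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Hypothetical helper: serve repeated queries from memory for `ttl`
    seconds, so the source is hit at most once per key per window."""

    def __init__(self, fetch: Callable[[str], Any], ttl: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch
        self._ttl = ttl
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Any:
        now = self._clock()
        hit = self._entries.get(key)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # still fresh: no call to the source
        value = self._fetch(key)  # missing or stale: query the source once
        self._entries[key] = (now, value)
        return value


calls = []


def fetch_from_source(key: str) -> str:
    calls.append(key)  # record each real query against the source
    return f"value-for-{key}"


fake_now = [0.0]
cache = TTLCache(fetch_from_source, ttl=60, clock=lambda: fake_now[0])
cache.get("sensor-7")
cache.get("sensor-7")  # served from memory
fake_now[0] = 61.0
cache.get("sensor-7")  # window expired: source queried again
print(len(calls))  # 2
```

The window length is the knob the text describes: a longer TTL eases the load on the source at the cost of staler answers.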

Benefits to developers and abstraction of the virtualization layer

Benefit: The data platform’s virtualization layer serves as an abstraction layer. Once this layer is in place, developers can focus on building AI/ML or data mining applications for the business instead of worrying about where the data is physically stored. Rather than being sidetracked by data administration, they can concentrate on the core logic of their models, which shortens development and deployment cycles.

Benefits for developers: When working on data analytics, developers can use the abstraction layer to focus on the core logic of their models. The layer hides the complexity of managing data storage, so developers spend less time wrestling with the data itself, which ultimately speeds up the implementation of predictive maintenance models.
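The abstraction described above can be sketched in Python with a `typing.Protocol`: model code depends only on an interface, and adapters hide where the data physically lives. `DataSource`, `CsvSource`, `InMemorySource`, and `average_vibration` are hypothetical names chosen for illustration.

```python
import csv
import io
from typing import Dict, Iterator, List, Protocol


class DataSource(Protocol):
    """All that model code depends on: an iterator of rows."""
    def rows(self) -> Iterator[Dict[str, str]]: ...


class CsvSource:
    """Hypothetical adapter for data that lives in a CSV export."""

    def __init__(self, text: str) -> None:
        self._text = text

    def rows(self) -> Iterator[Dict[str, str]]:
        return iter(csv.DictReader(io.StringIO(self._text)))


class InMemorySource:
    """Hypothetical adapter for rows already held by another service."""

    def __init__(self, data: List[Dict[str, str]]) -> None:
        self._data = data

    def rows(self) -> Iterator[Dict[str, str]]:
        return iter(self._data)


def average_vibration(source: DataSource) -> float:
    """Feature code written once, unaware of physical storage."""
    values = [float(r["vibration"]) for r in source.rows()]
    if not values:
        raise ValueError("source returned no rows")
    return sum(values) / len(values)


csv_source = CsvSource("asset,vibration\npump-1,0.5\npump-2,1.5\n")
memory_source = InMemorySource([{"asset": "fan-1", "vibration": "2.0"}])
print(average_vibration(csv_source))     # 1.0
print(average_vibration(memory_source))  # 2.0
```

Moving the data to a different store would mean writing one new adapter; `average_vibration` stays unchanged.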

Tips for optimizing storage

Some data analysis applications may not benefit directly from storage optimization techniques such as normalization or denormalization, but these techniques still matter in a hybrid approach, which combines data ingested into the selected platform with data accessed through virtualization.

Evaluating the trade-offs between these methods helps make the best use of storage for both virtualized and ingested datasets. Building efficient ML solutions with virtualized data on the data platform requires careful consideration of these design factors.
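A minimal sketch of the hybrid approach, assuming slow-changing reference data is ingested (copied once) while fast-changing operational data stays virtual. The `HybridCatalog` class and the split between the two tables are hypothetical illustrations of the trade-off, not a rule from the article.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]


class HybridCatalog:
    """Hypothetical hybrid setup: some tables are ingested (materialized
    locally), others stay virtual and are read from the source per query."""

    def __init__(self) -> None:
        self._ingested: Dict[str, List[Row]] = {}
        self._virtual: Dict[str, Callable[[], List[Row]]] = {}

    def ingest(self, name: str, fetch: Callable[[], List[Row]]) -> None:
        # Copy once: cheap local reads, but only as fresh as the last ingest.
        self._ingested[name] = list(fetch())

    def virtualize(self, name: str, fetch: Callable[[], List[Row]]) -> None:
        # Keep only the access path: always current, but every read hits the source.
        self._virtual[name] = fetch

    def read(self, name: str) -> List[Row]:
        if name in self._ingested:
            return list(self._ingested[name])
        return list(self._virtual[name]())


reference = [{"plant": "A", "region": "EU"}]
telemetry = [{"plant": "A", "load": 0.7}]

catalog = HybridCatalog()
catalog.ingest("plants", lambda: reference)
catalog.virtualize("telemetry", lambda: telemetry)

reference.append({"plant": "B", "region": "US"})
telemetry.append({"plant": "B", "load": 0.4})
print(len(catalog.read("plants")), len(catalog.read("telemetry")))  # 1 2
```

The ingested table misses the new plant until the next ingest, while the virtual table sees the new reading immediately; that freshness-versus-load trade-off is what the storage decision weighs.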

Data virtualization platform

Data virtualization is no longer just a novel concept; it is a practical tool for improving the performance of many kinds of applications. A data virtualization platform is a good example: by virtualizing data, it makes it easier to build a wide range of applications and greatly improves their effectiveness, flexibility, and ability to deliver insights in near real time.

Let’s examine a few use cases that highlight data virtualization’s transformative potential.

Supply chain optimization in a globalized economy

In today’s interconnected global economy, supply chains are enormous networks with intricate dependencies, and data virtualization is essential for streamlining them. A data virtualization platform combines data from several sources, such as production metrics, logistics tracking information, and market trend data. This comprehensive view gives enterprises a thorough overview of their entire supply chain operations.

Imagine being able to see everything clearly from every angle. You can anticipate possible bottlenecks, streamline logistics procedures, and adjust quickly to changing market conditions. The result is an agile, optimized value chain that offers notable competitive advantages.

A thorough examination of consumer behavior: customer analytics

Because of the digital revolution, understanding your customers is now essential to business success. A data virtualization platform breaks down data silos by seamlessly integrating customer data from multiple touchpoints, including sales records, customer service interactions, and marketing campaign performance metrics. This unified data ecosystem supports a thorough understanding of consumer behavior patterns and preferences.
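A minimal sketch of the unified customer view described above, assuming each touchpoint extract shares a `customer_id` key. The three inputs and the `customer_view` function are hypothetical; a real platform would read them through virtual views over the sales, support, and marketing systems.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical touchpoint extracts keyed by a shared customer_id.
sales: List[Dict] = [
    {"customer_id": 1, "amount": 120.0},
    {"customer_id": 1, "amount": 80.0},
    {"customer_id": 2, "amount": 40.0},
]
support_tickets: List[Dict] = [{"customer_id": 2, "topic": "billing"}]
campaign_clicks: List[Dict] = [{"customer_id": 1, "campaign": "spring"}]


def customer_view(sales: List[Dict], tickets: List[Dict], clicks: List[Dict]) -> Dict[int, Dict]:
    """Combine all touchpoints into one profile per customer."""
    profiles: Dict[int, Dict] = defaultdict(
        lambda: {"total_spend": 0.0, "tickets": 0, "campaigns": []}
    )
    for s in sales:
        profiles[s["customer_id"]]["total_spend"] += s["amount"]
    for t in tickets:
        profiles[t["customer_id"]]["tickets"] += 1
    for c in clicks:
        profiles[c["customer_id"]]["campaigns"].append(c["campaign"])
    return dict(profiles)


for customer_id, profile in customer_view(sales, support_tickets, campaign_clicks).items():
    print(customer_id, profile)
```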

Equipped with these deep consumer insights, companies can design highly personalized experiences, focus their marketing, and develop cutting-edge products that appeal more strongly to their target market. This data-driven strategy fosters long-term loyalty and customer satisfaction, both of which are essential for succeeding in today’s competitive business world.

Proactive fraud detection in the era of digitalization

Financial fraud constantly evolves, so it has to be detected proactively, and data virtualization platforms make that possible. By virtualizing and analyzing data from several sources, including transaction logs, user behavior patterns, and demographic information, the platform detects possible fraud attempts in real time. This approach not only protects companies from financial losses but also builds consumer trust, an invaluable asset in today’s digital era.
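A deliberately simple rule-based sketch of cross-source fraud screening, assuming a transaction log and a per-user behavior profile that can be joined on a user key. The field names, the 5x threshold, and the use of `home_country` as a stand-in for profile data are all hypothetical; production systems typically use trained models rather than fixed rules.

```python
from statistics import mean
from typing import Dict, List

# Hypothetical inputs from two sources: a transaction log and a behavior profile.
transactions: List[Dict] = [
    {"tx_id": "t1", "user": "u1", "amount": 25.0, "country": "DE"},
    {"tx_id": "t2", "user": "u1", "amount": 900.0, "country": "BR"},
    {"tx_id": "t3", "user": "u2", "amount": 60.0, "country": "US"},
]
user_history: Dict[str, Dict] = {
    "u1": {"past_amounts": [20.0, 30.0, 25.0], "home_country": "DE"},
    "u2": {"past_amounts": [50.0, 70.0], "home_country": "US"},
}


def flag_suspicious(txs: List[Dict], history: Dict[str, Dict], ratio: float = 5.0) -> List[str]:
    """Flag a transaction that is far above the user's average spend
    AND comes from outside the user's usual country."""
    flagged = []
    for tx in txs:
        profile = history[tx["user"]]
        unusual_amount = tx["amount"] > ratio * mean(profile["past_amounts"])
        unusual_place = tx["country"] != profile["home_country"]
        if unusual_amount and unusual_place:
            flagged.append(tx["tx_id"])
    return flagged


print(flag_suspicious(transactions, user_history))  # ['t2']
```

Neither the transaction log nor the behavior profile alone would flag `t2`; the signal comes from evaluating both together, which is the case for virtualizing them into one view.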

These significant examples demonstrate the transformational potential of data virtualization.

With IBM Watsonx and IBM Cloud Pak for Data, businesses can unlock the full potential of their data, spurring innovation and gaining a major competitive edge across industries. IBM also provides IBM Knowledge Catalog for data governance and IBM Data Virtualization as a common query engine.

Read more on Govindhtech.com

Text

Scaling in Cloud Computing. For more information and a cloud computing tutorial, check this link: https://bit.ly/3TLHBJm

#cloudcomputing#datavirtualization#computerscience#cloudservices#cloudservicesprovider#cloudservicescompany#hardwarevirtualization#computerengineering#javatpoint

Text

#NetworkVirtualization#VirtualNetworks#DataVirtualization#ModernDataNetworks#SoftwareDefinedNetworking#VirtualizationTechnology#VirtualDataInfrastructure#NetworkVirtualizationTrends#DataVirtualizationSolutions#SDN#VirtualNetworkManagement#VirtualizedDataEnvironment#NetworkTransformation#DataCenterVirtualization#VirtualNetworkArchitecture#NetworkVirtualizationBenefits#DataVirtualizationTools#SDNVirtualization#VirtualNetworkSecurity#NetworkVirtualizationInnovation

Link

The Denodo Platform is the only data virtualization platform on the market that offers all of the capabilities of a logical data fabric: a data catalog for data discovery and data governance, AI-based query performance optimization, automated cloud infrastructure management for multi-cloud and hybrid environments, and embedded data preparation capabilities for self-service analytics, closing the gap between IT and the business.

Link

#datavirtualization#Marketinginterventions#discoveryanalytics#diagnosticanalytics#predictiveanalytics#skupricing

Text

How Data Virtualization Is Changing The Business Landscape

Today’s challenges associated with managing and effectively using massive data stores will continue to grow.

Data virtualization is the modern way to free your enterprise architecture from the burden of data replication, speeding up data cleansing, integration, federation, transformation, and presentation. The world’s leading companies are harnessing the power of their data to achieve significantly greater business impact.

Start your data virtualization initiative with specific projects that address immediate information needs with Polestar Solutions now.

Follow Polestar Solutions for more such content.

Read more: https://bit.ly/3pYSbLv

Text

What is Cloud Computing Replacing? For more information and a cloud computing tutorial, check this link: https://bit.ly/48ZAhOR

#cloudcomputing#datavirtualization#computerscience#cloudservices#cloudservicesprovider#cloudservicescompany#hardwarevirtualization#computerengineering#javatpoint

Text

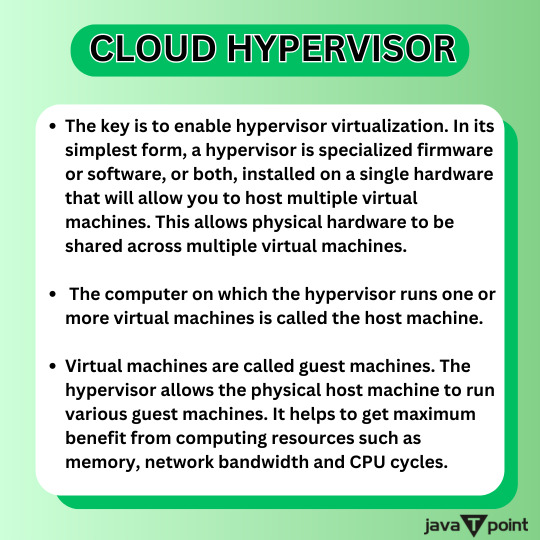

Cloud Hypervisor. For more information and a cloud computing tutorial, check this link: https://bit.ly/43rbaTO

#cloudcomputing#datavirtualization#computerscience#CloudHypervisor#servervirtualization#softwarevirtualization#hardwarevirtualization#computerengineering#javatpoint

Text

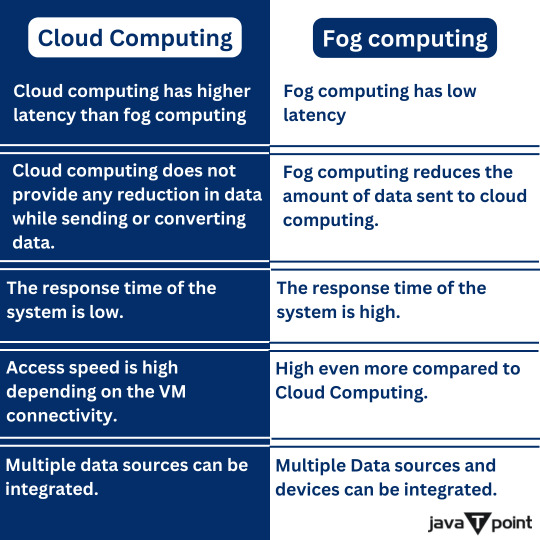

Difference between cloud computing and fog computing. For more information and a cloud computing tutorial, check this link: https://bit.ly/42sEk3S

#cloudcomputing#fogcomputing#datavirtualization#computerscience#servervirtualization#softwarevirtualization#hardwarevirtualization#computerengineering#javatpoint

Text

Cloud Service Provider Companies. For more information and a cloud computing tutorial, check this link: https://bit.ly/3Ppsvqm

#cloudcomputing#datavirtualization#computerscience#cloudservices#cloudservicesprovider#cloudservicescompany#hardwarevirtualization#computerengineering#javatpoint

Text

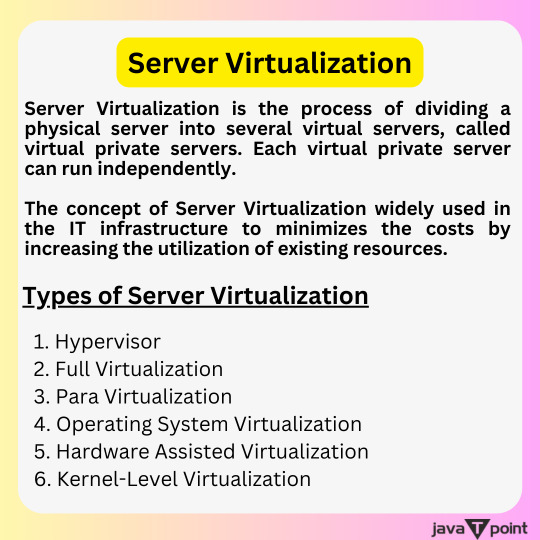

Server Virtualization. For more information and a cloud computing tutorial, check this link: https://bit.ly/4aeyWo5

#cloudcomputing#datavirtualization#computerscience#servervirtualization#softwarevirtualization#hardwarevirtualization#computerengineering

Text

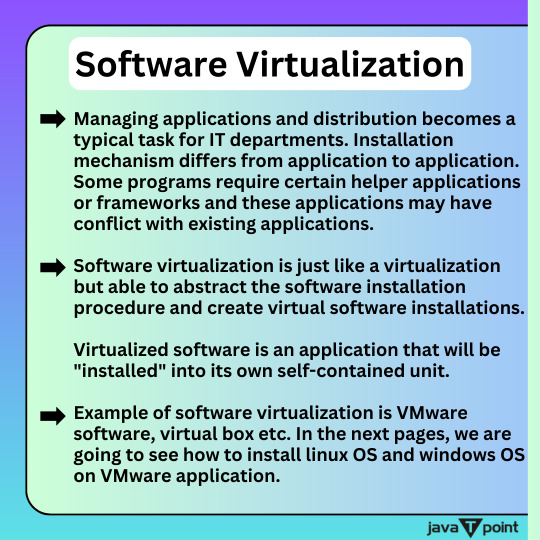

Software Virtualization. For more information and a cloud computing tutorial, check this link: https://bit.ly/48Szmzy

#cloudcomputing#datavirtualization#computerscience#servervirtualization#softwarevirtualization#hardwarevirtualization#computerengineering#javatpoint

Text

Hardware Virtualization. For more information and a cloud computing tutorial, check this link: https://bit.ly/3TDaVSb

#cloudcomputing#datavirtualization#computerscience#hardwarevirtualization#computerengineering#javatpoint

Text

Data Virtualization. For more information and a cloud computing tutorial, check this link: https://bit.ly/3TniHi1

#cloudcomputing#datavirtualization#computerscience#hardwarevirtualization#computerengineering#javatpoint
