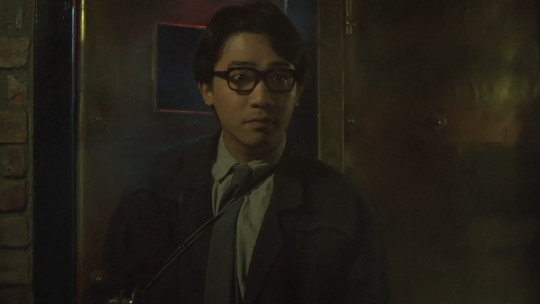

#david chiu

Text

#Craving You#饞上你#Gabriel makes stuff#Cheng Chang Fan#Kane#David Chiu#Sung Yi Fan#Sung Yi Fan x Kane#Taiwanese Drama#Taiwanese series#Taiwanese BL#Taiwan series#BL Drama#BL series

10 notes

Photo

Grapefruit by David Chiu

1 note

Text

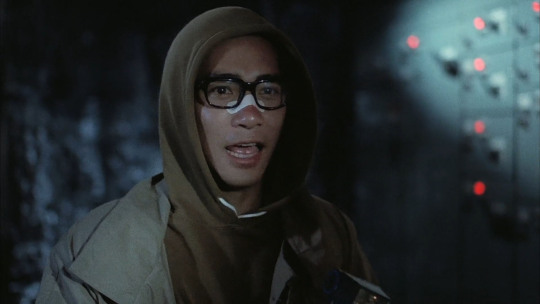

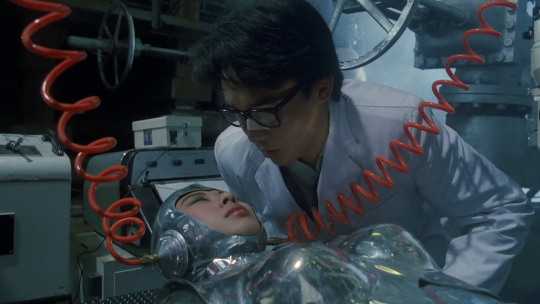

Tony Leung in 鐵甲無敵瑪利亞 / I Love Maria (1988)

#tony leung#sally yeh#tsui hark#i love maria#鐵甲無敵瑪利亞#1988#roboforce#tony leung chiu wai#1980s film#80s movies#sci fi comedy#sci fi#science fiction#comedy#hong kong action#hong kong cinema#david chung

6 notes

Video

youtube

The Monkees - “Riu Chiu,” from “The Christmas Show,” 1967.

“[T]here’s so many good little second, you know, obscure songs that I just really like a lot. ‘Riu Chiu’ is a song that was never on a record but it’s an old sort of medieval Spanish Christmas song or something that we sang once live on a, on the Christmas episode. And, gosh, that’s good, you know, I think — the only song where, it’s a cappella, and it’s the four of us singing, and you can’t tell who’s singing which part, the blend is so good.” - Peter Tork, GOLD 104.5, 1999

Q: “Gotta ask, favorite Monkees song?”

Peter Tork: “‘Riu Chiu.’”

Q: “Hm. Because?”

PT: “When you say song, I don’t know, in some ways, that’s not a Monkees song. We did ‘Riu Chiu’ in front of the cameras for a Christmas episode, and it was the four of us sitting there, singing live into the camera, and I think the vocal blend is astounding. It’s a cappella, which is just, you know — I think it’s ferocious, I think that… you can’t tell who’s singing what part, the vocal blend is so good throughout. So we’re — I’m very pleased with that.” - WDBB, February 12, 2006

#The Monkees#Monkees#Tork quotes#1960s#60s Tork#Peter Tork#Michael Nesmith#Davy Jones#David Jones#Micky Dolenz#Riu Chiu#Monkees season 2#<3#<333#perennial favorite <3#1967#WGLD Radio#can you queue it

35 notes

Photo

Christine Chiu | David Koma gown | ELLE Women In Hollywood Celebration | 2022

#pc: instagram#christine chiu#david koma#elle women in hollywood celebration#2022 elle women in hollywood celebration#2022

3 notes

Text

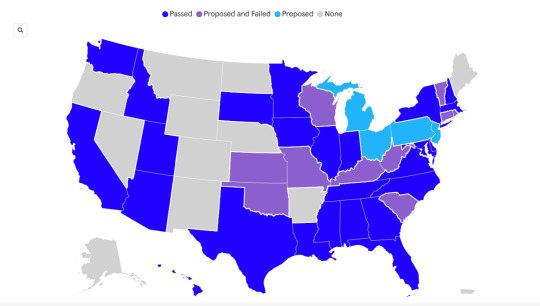

As national legislation on deepfake pornography crawls its way through Congress, states across the country are trying to take matters into their own hands. Thirty-nine states have introduced a hodgepodge of laws designed to deter the creation of nonconsensual deepfakes and punish those who make and share them.

Earlier this year, Democratic congresswoman Alexandria Ocasio-Cortez, herself a victim of nonconsensual deepfakes, introduced the Disrupt Explicit Forged Images and Non-Consensual Edits Act, or Defiance Act. If passed, the bill would allow victims of deepfake pornography to sue as long as they could prove the deepfakes had been made without their consent. In June, Republican senator Ted Cruz introduced the Take It Down Act, which would require platforms to remove both revenge porn and nonconsensual deepfake porn.

Though there’s bipartisan support for many of these measures, federal legislation can take years to make it through both houses of Congress before being signed into law. But state legislatures and local politicians can move faster—and they’re trying to.

Last month, San Francisco City Attorney David Chiu’s office announced a lawsuit against 16 of the most visited websites that allow users to create AI-generated pornography. “Generative AI has enormous promise, but as with all new technologies, there are unintended consequences and criminals seeking to exploit the new technology. We have to be very clear that this is not innovation—this is sexual abuse,” Chiu said in a statement released by his office at the time.

The suit was just the latest attempt to try to curtail the ever-growing issue of nonconsensual deepfake pornography.

“I think there's a misconception that it's just celebrities that are being affected by this,” says Ilana Beller, organizing manager at Public Citizen, which has been tracking nonconsensual deepfake legislation and shared their findings with WIRED. “It's a lot of everyday people who are having this experience.”

Data from Public Citizen shows that 23 states have passed some form of nonconsensual deepfake law. “This is such a pervasive issue, and so state legislators are seeing this as a problem,” says Beller. “I also think that legislators are interested in passing AI legislation right now because we are seeing how fast the technology is developing.”

Last year, WIRED reported that deepfake pornography is only increasing, and researchers estimate that 90 percent of deepfake videos are of porn, the vast majority of which is nonconsensual porn of women. But despite how pervasive the issue is, Kaylee Williams, a researcher at Columbia University who has been tracking nonconsensual deepfake legislation, says she has seen legislators more focused on political deepfakes.

“More states are interested in protecting electoral integrity in that way than they are in dealing with the intimate image question,” she says.

Matthew Bierlein, a Republican state representative in Michigan, who cosponsored the state’s package of nonconsensual deepfake bills, says that he initially came to the issue after exploring legislation on political deepfakes. “Our plan was to make [political deepfakes] a campaign finance violation if you didn’t put disclaimers on them to notify the public.” Through his work on political deepfakes, Bierlein says, he began working with Democratic representative Penelope Tsernoglou, who helped spearhead the nonconsensual deepfake bills.

At the time in January, nonconsensual deepfakes of Taylor Swift had just gone viral, and the subject was widely covered in the news. “We thought that the opportunity was the right time to be able to do something,” Bierlein says. And Bierlein says that he felt Michigan was in the position to be a regional leader in the Midwest, because, unlike some of its neighbors, it has a full-time legislature with well-paid staffers (most states don’t). “We understand that it's a bigger issue than just a Michigan issue. But a lot of things can start at the state level,” he says. “If we get this done, then maybe Ohio adopts this in their legislative session, maybe Indiana adopts something similar, or Illinois, and that can make enforcement easier.”

But what the penalties for creating and sharing nonconsensual deepfakes are—and who is protected—can vary widely from state to state. “The US landscape is just wildly inconsistent on this issue,” says Williams. “I think there's been this misconception lately that all these laws are being passed all over the country. I think what people are seeing is that there have been a lot of laws proposed.”

Some states allow for civil and criminal cases to be brought against perpetrators, while others might only provide for one of the two. Laws like the one that recently took effect in Mississippi, for instance, focus on minors. Over the past year or so, there have been a spate of instances of middle and high schoolers using generative AI to make explicit images and videos of classmates, particularly girls. Other laws focus on adults, with legislators essentially updating existing laws banning revenge porn.

Unlike laws that focus on nonconsensual deepfakes of minors, on which Williams says there is a broad consensus that they are an “inherent moral wrong,” legislation around what is “ethical” when it comes to nonconsensual deepfakes of adults is “squishier.” In many cases, laws and proposed legislation require proving intent, that the goal of the person making and sharing the nonconsensual deepfake was to harm its subject.

But online, says Sara Jodka, an attorney who specializes in privacy and cybersecurity, this patchwork of state-based legislation can be particularly difficult. “If you can't find a person behind an IP address, how can you prove who the person is, let alone show their intent?”

Williams also notes that in the case of nonconsensual deepfakes of celebrities or other public figures, many of the creators don’t necessarily see themselves as doing harm. “They’ll say, ‘This is fan content,’ that they admire this person and are attracted to them,” she says.

State laws, Jodka says, while a good start, are likely to have limited power to actually deal with the issue, and only a federal law against nonconsensual deepfakes would allow for the kind of interstate investigations and prosecutions that could really force justice and accountability. “States don't really have a lot of ability to track down across state lines internationally,” she says. “So it's going to be very rare, and it's going to be very specific scenarios where the laws are going to be able to even be enforced.”

But Michigan’s Bierlein says that many state representatives are not content to wait for the federal government to address the issue. Bierlein expressed particular concern about the role nonconsensual deepfakes could play in sextortion scams, which the FBI says have been on the rise. In 2023, a Michigan teen died by suicide after scammers threatened to post his (real) intimate photos online. “Things move really slow on a federal level, and if we waited for them to do something, we could be waiting a lot longer,” he says.

90 notes

Text

https://www.nytimes.com/2024/08/15/us/deepfake-pornography-lawsuit-san-francisco.html?smid=nytcore-ios-share&referringSource=articleShare&sgrp=c-cb

San Francisco Moves to Lead Fight Against Deepfake Nudes

City Attorney David Chiu has filed a lawsuit seeking to permanently shutter 16 popular websites that turn images of real girls and women into pornography.

Heather Knight

Aug. 15, 2024

Like many parents, Yvonne Meré was deeply disturbed when she read about a frightening new trend.

Boys were using “nudification” apps to turn photos of their female classmates into deepfake pornography, using images of the girls’ faces, from photos in which they were fully clothed, and superimposing them onto images of naked bodies generated by artificial intelligence.

But unlike many parents who worry about the threats posed to their children in a world of ever-changing technology, Ms. Meré, the mother of a 16-year-old girl, had the power to do something about it. As the chief deputy city attorney in San Francisco, Ms. Meré rallied her co-workers to craft a lawsuit, filed in state court on Wednesday night, that seeks to shut down the 16 most popular websites used to create these deepfakes.

The legal team said it appeared to be the first government lawsuit of its kind aimed at quashing the sites that promote the opportunity to digitally “undress” women and girls without their consent.

After reading a New York Times article about the tremendous damage done when such deepfake images are created and shared, Ms. Meré texted Sara Eisenberg, the mother of a 9-year-old girl and the head of the unit in the city attorney’s office that identifies major social problems and tries to solve them through legal action. The two of them then reached out to the office’s top lawyer, City Attorney David Chiu.

“The article is flying around our office, and we were like, ‘What can we do about this?’” Mr. Chiu recalled in an interview. “No one has tried to hold these companies accountable

33 notes

Text

...“The proliferation of these images has exploited a shocking number of women and girls across the globe,” said David Chiu, the elected city attorney of San Francisco who brought the case against a group of widely visited websites tied to entities in California, New Mexico, Estonia, Serbia, the United Kingdom and elsewhere.

“These images are used to bully, humiliate and threaten women and girls,” he said in an interview with The Associated Press. “And the impact on the victims has been devastating on their reputation, mental health, loss of autonomy, and in some instances, causing some to become suicidal.”

The lawsuit brought on behalf of the people of California alleges that the services broke numerous state laws against fraudulent business practices, nonconsensual pornography and the sexual abuse of children.

#reminded of this story... I heard a report on npr about this lawsuit yesterday#imo there is no justifiable use for consumer ai technology. the government should have darpa'd that shit first thing#luddism engage

16 notes

Text

Welp, here we go. We all knew this was gonna happen.

3 notes

Text

#Craving You#饞上你#Gabriel makes stuff#Cheng Chang Fan#Kane#David Chiu#Sung Yi Fan#Sung Yi Fan x Kane#Taiwanese Drama#Taiwanese series#Taiwanese BL#Taiwan series#BL Drama#BL series

7 notes

Text

Newsweek Magazine: Arctic Monkeys Change Direction Yet Again on 'The Car'

Written by David Chiu, 24/10/2022

When Arctic Monkeys released their sixth studio album, Tranquility Base Hotel & Casino, in 2018, it was viewed as a dramatic left turn for the British band primarily known for their guitar-charged indie rock and the distinct lyrics of frontman Alex Turner. For that record, the British quartet incorporated ornate psychedelic and lounge-pop influences that leaned toward Burt Bacharach and the Beach Boys, with the piano becoming more prominent than the guitar. Yet, those noticeable shifts didn't appear to alienate the band's diehard fans when Tranquility Base Hotel & Casino became the band's sixth consecutive number one album in the U.K.

After that stylistic detour, fans might have expected Arctic Monkeys—Turner, drummer Matt Helders, bassist Nick O'Malley and guitarist Jamie Cook—to return to the earlier brash rock for their next album. But the band from Sheffield remains determined to evolve and defy expectations, as indicated by The Car, released October 21 via Domino Records. It's a continuation of the trippy and elegant after-hours vibe mined on Tranquility Base, although the music—featuring strings and horns this time—sounds more loose, atmospheric and expansive.

"I think there's this idea of when starting a new record [is the] 'we're-not-gonna-make-it-anything-like-the-last-one,'" the pensive Turner tells Newsweek. "But what I realize more often than not is they all seem to bleed into each other. It's only now when I've got this one under the microscope, I realized how much of that is true. I was probably trying to get away from things we've done on that last record. But I think there's still some of that kind of hanging over here into [The Car], but hopefully not to the extent where it isn't also reaching some new places that we haven't been before as well."

A listen to The Car (produced by longtime collaborator James Ford) immediately draws comparisons to the music of such artists as David Bowie (somewhere between his Young Americans and Station to Station albums), Serge Gainsbourg, Nick Cave and Scott Walker as well as '70s R&B and glam—and yet it still sounds like Arctic Monkeys. "I find it a bit more difficult than I have in the past to draw a line between records of other artists and this thing," Turner says. "I could probably pencil in a few. Perhaps the things I've sort of absorbed for a relatively long period of time now just influenced the process but in a more subtle way than having a discussion saying, 'Let's try and do a song like this' or something. It feels a little more unspoken now. Perhaps I'm just still too close to it in the moment."

Unlike Tranquility Base, whose theme centered on a futuristic hotel on the moon, The Car doesn't primarily focus on a particular subject running through the songs' enigmatic lyrics. "I think there is a theme or feel that runs through this whole record, but I don't think it's exclusive to the words," Turner explains. "It's almost easier to latch on to a theme if I take the words out of it for a minute and focus on what the feel of everything else is doing. I think that the lyrics are sometimes subscribing to that feel. And if there is a theme that runs through it, it's more along those lines than it is about XYZ, if that makes any sense at all."

"The first thing I wrote through it was this instrumental section at the beginning of the album," he continues. "Everything that came after that was written after that. It felt like it has a relationship with what was being evoked in that instrumental section. I wouldn't be leaning into the idea of it's just another 10 songs that aren't connected in any way. But at the same time, I don't think I can pin down a theme, not in a succinct sentence anyway."

The first single released off The Car, "There'd Better Be a Mirrorball," carries an air of melancholy amid the gorgeous strings and prominent piano lines, as Turner sings wistfully: "So if you want to walk me to the car you ought to know I have a heavy heart, so can we please be absolutely sure that there's a mirror ball."

"Obviously, you're describing the lead-up to some sort of goodbye line," Turner says, "and suddenly a mirrorball drops into the middle of that situation, which somehow doesn't seem totally incongruous in my mind. Perhaps on some level, the mirrorball is kind of synonymous with the closing of the show or something like that. But I think what I was imagining is carrying someone's suitcase to the car and then the lighting suddenly changes and the mirrorball drops in the middle of that situation. It's like, 'What's going on there?' I think it does feel like there are a few goodbyes here and there."

Introduced by beautiful acoustic guitar picking, the lyrical setting of "Mr. Schwartz" seems to take place at a movie shoot, which seems appropriate given the cinematic feeling of the song and the album. "There is a feeling of that behind-the-scenes of the production," Turner says. "That idea is not exclusive to or contained within just that song....It feels like there is something going on in the background of all these songs, like sort of a production: There's someone with a clipboard somewhere and somebody's up a ladder not too far from where these things are going on. The character of Mr. Schwartz was something that kind of did present itself to me in very real life, but sort of has been allowed to become a character in a song, I suppose."

The sweeping "Body Paint," the latest single, may be the most brash song of the collection. There are moments of electric guitar bursting through the lush orchestrations, while Turner's vocalizing echoes Bowie's '70s soul boy phase. It opens with a line Steely Dan or Prefab Sprout could have written: "For a master of deception and subterfuge you've made yourself quite the bed to lie in." Explains Turner: "It definitely does get pretty sparkly in the guitar toward the end of that. It's loud...more than I had expected it from the sketches of that song that we had before. I had it down for something that was gentle at the beginning. But during the session, there was something that was more lively that wanted to come out there at the end. I think that songs always continue to reveal themselves even sometimes after they've been recorded. We played the version of that on stage for the first time the other day, and it definitely seemed like it's still got somewhere to go. It's becoming a more exaggerated version of itself."

The Car marks another maturation and evolution in Arctic Monkeys' sound. Its release falls on the 20th anniversary of the band's formation. The hype surrounding Arctic Monkeys' arrival in the post-Britpop era has since become the stuff of legend: their early recordings were burned on CDs and given away at their shows, which prompted fans to upload them online. After signing with indie label Domino, Arctic Monkeys released 2006's Whatever People Say I Am, That's What I'm Not, which hit number one in the U.K. and became that country's biggest-selling debut. Since then, it has been hit albums, touring and festival appearances for the band. On his end, Turner has been engaged with a side project, the Last Shadow Puppets, whose elaborate sounds may have been a prelude to the music of Tranquility Base and The Car.

"It was the summer of 2002 when we first got all the way through the same song at the same time together," he recalls. "We still are friends like we were before it started, and still trusting each other and our instincts in the same way."

The fact that Arctic Monkeys never made the same album twice most likely contributed to their longevity and friendship. It's been a progression that was more natural than calculated.

"When I cast my mind back to 20 years ago," says Turner, "there's always been something inherently uncooperative. I don't know if that somehow has translated to each time we've been faced with the task of making something new. There's something about not wanting to kind of cooperate with our perception of what we think that should be. I suppose you can arrive at the idea that if one record was successful, the next one should try and emulate or bark up the same tree as that one was. We're not having the board meeting where we're kind of discussing that out loud to that extent. The whole thing in the first place was done on a hunch, on an instinct, and I think that's something we're just still paying attention to, that same instinct all the way along. That's the through line."

Arctic Monkeys will be touring the U.K., Ireland, North America and South America the rest of this year and into 2023. Having branched out on their last two records, it wouldn't be surprising if their next record tackled another genre, perhaps hip-hop or ambient music. Turner says: "Yeah, why not? I'd have to give it some more thought. When I think about my perception of the way people make dance music, I am interested in that approach to it. I'm not saying that it's something I want to do, but I'm interested in watching somebody do it or something for an afternoon."

#interview#arctic monkeys#alex turner#the car era#newsweek magazine 2022#it's an interview in october but i think it wasn't posted here?#i love how he described there'd better be a mirrorball and mr. schwartz here

36 notes

Text

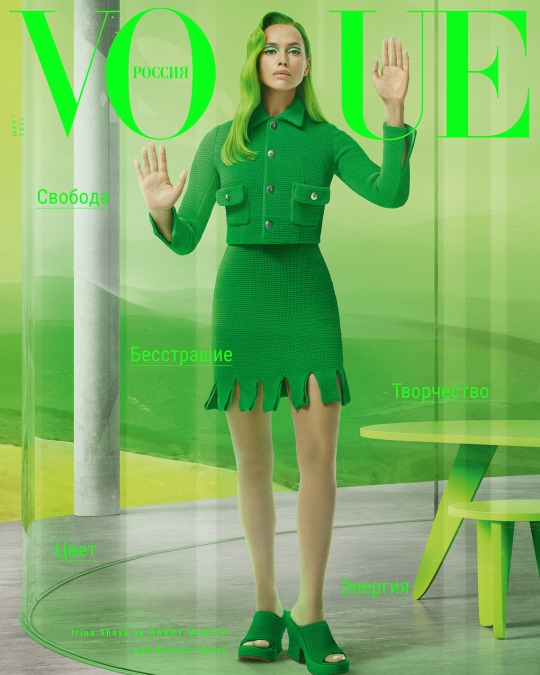

Arseny Jabiev for Vogue Russia with Irina Shayk

Photographer: Arseny Jabiev.

Fashion Stylist: Patrick Mackie.

Creative Director: Harry Nuriev.

Hair Stylist: Jacob Rozenberg.

Makeup Artist: Tatyana Makarova.

Manicure: Honeynailz.

Set-design: Two Hawks Young.

Production: Alina Oleinikova-Kumantsova, Sheri Chiu at see management and Christopher Wolfenbarger.

Photo Assistants: Kyle Thompson and Robert Critchlow.

Retoucher: Mario Seyer.

Style Assistant: Andrew Bruggeman.

Hair Assistant: Dawson Hiegert.

Set-design Assistant: c.a.s.e.y.j.o.n.e.s.

Project Manager: Marta Run.

Casting-Director: David Chen.

Location: Pier59 Studios.

Model: Irina Shayk at the lions ny

7 notes

Text

@rollingthunderevue tagged me to list my top 5 songs at the moment (thank youuuuu rory 💖🙏😔)

alright this is hard but as of right now these are my favs

(still can’t believe the audacity of spotify to ask me if i think the monkees will be on my wrapped……)

and for this one i tag @reignoerme, @kvetchs, @by-thunder, @sunny-lie-melody, and @carlisle-wheeling (and once again feel free not to do it if you don’t want to man 💖✌️😔)

5 notes

Text

This is the 2015 Station ID of TV5. This Station ID was themed “Happy Ka Dito!”

The Station ID is led by the TV5 newscasters, actors, employees and crew members including president Noynoy Aquino and boy band Momoland getting ready for the party. The Station ID Theme was sung by Filipino singer, songwriter, rapper and conductor Ogie Alcasid featuring Filipino rapper and songwriter Gloc-9.

The Station ID also contains newscasters, Raffy Tima, Mark Salazar, Ivan Mayrina, Shawn Yao, Korina Sanchez, Pia Arcangel, Luchi Cruz-Valdez, Paolo Bediones, Atom Araullo, Lourd de Veyra and Alex Santos, actors Arcee Muñoz, Alice Dixson, Tuesday Vargas, Ritz Azul, Eula Caballero, Ivan Dorschner, Rainier Castillo, Gerald Anderson, Nathan Lopez, Edgar Allan Guzman, Lucho Ayala, Carlo Aquino, Arjo Atayde, Sid Lucero, Kim Chiu, Nash Aguas, Juancho Triviño, Valeen Montenegro, Barbie Imperial, Dennis Trillo, Felix Roco, EJ Falcon, IC Mendoza, Marco Gumabao, Derrick Monasterio, Paulo Avelino, David Licauco, Ken Chan, Sef Cadayona, Yves Flores, Angel Locsin, Carmina Villaroel, Bea Alonzo, Louise de los Reyes, James Reid, Nadine Lustre, OJ Mariano, Jake Cuenca, Tom Rodriguez, Pancho Magno, Adrian Alandy, Ruru Madrid, Enrico Cuenca, Xian Lim, Hiro Peralta, Geoff Eigenmann, Ronwaldo and Kristoffer Martin, the sons of Coco Martin, Gretchen Barreto and Kris Aquino, including comedians Long Meija, Jun Sabayton, Simon Ibarra, Ramon Bautista, Coco Martin, Empoy Marquez, Zoren Legaspi and Vice Ganda including DJ Willie Revillame featuring special guests Daniel Padilla, Dominic Roco, president Noynoy Aquino and South Korean boy band Momoland.

The Station ID looks similar to the 2014 Summer Station ID of TV5, but it is different. Eventually, the clips were from the Happy Music Video from Toy Story 3.

0 notes

Text

Major technology companies, including Google, Apple, and Discord, have been enabling people to quickly sign up to harmful “undress” websites, which use AI to remove clothes from real photos to make victims appear to be “nude” without their consent. More than a dozen of these deepfake websites have been using login buttons from the tech companies for months.

A WIRED analysis found 16 of the biggest so-called undress and “nudify” websites using the sign-in infrastructure from Google, Apple, Discord, Twitter, Patreon, and Line. This approach allows people to easily create accounts on the deepfake websites—offering them a veneer of credibility—before they pay for credits and generate images.

While bots and websites that create nonconsensual intimate images of women and girls have existed for years, the number has increased with the introduction of generative AI. This kind of “undress” abuse is alarmingly widespread, with teenage boys allegedly creating images of their classmates. Tech companies have been slow to deal with the scale of the issues, critics say, with the websites appearing highly in search results, paid advertisements promoting them on social media, and apps showing up in app stores.

“This is a continuation of a trend that normalizes sexual violence against women and girls by Big Tech,” says Adam Dodge, a lawyer and founder of EndTAB (Ending Technology-Enabled Abuse). “Sign-in APIs are tools of convenience. We should never be making sexual violence an act of convenience,” he says. “We should be putting up walls around the access to these apps, and instead we're giving people a drawbridge.”

The sign-in tools analyzed by WIRED, which are deployed through APIs and common authentication methods, allow people to use existing accounts to join the deepfake websites. Google’s login system appeared on 16 websites, Discord’s appeared on 13, and Apple’s on six. X’s button was on three websites, with Patreon and messaging service Line’s both appearing on the same two websites.

WIRED is not naming the websites, since they enable abuse. Several are part of wider networks and owned by the same individuals or companies. The login systems have been used despite the tech companies broadly having rules that state developers cannot use their services in ways that would enable harm, harassment, or invade people’s privacy.

After being contacted by WIRED, spokespeople for Discord and Apple said they have removed the developer accounts connected to their websites. Google said it will take action against developers when it finds its terms have been violated. Patreon said it prohibits accounts that allow explicit imagery to be created, and Line confirmed it is investigating but said it could not comment on specific websites. X did not reply to a request for comment about the way its systems are being used.

In the hours after Jud Hoffman, Discord vice president of trust and safety, told WIRED it had terminated the websites’ access to its APIs for violating its developer policy, one of the undress websites posted in a Telegram channel that authorization via Discord was “temporarily unavailable” and claimed it was trying to restore access. That undress service did not respond to WIRED’s request for comment about its operations.

Rapid Expansion

Since deepfake technology emerged toward the end of 2017, the number of nonconsensual intimate videos and images being created has grown exponentially. While videos are harder to produce, the creation of images using “undress” or “nudify” websites and apps has become commonplace.

“We must be clear that this is not innovation, this is sexual abuse,” says David Chiu, San Francisco’s city attorney, who recently opened a lawsuit against undress and nudify websites and their creators. Chiu says the 16 websites his office’s lawsuit focuses on have had around 200 million visits in the first six months of this year alone. “These websites are engaged in horrific exploitation of women and girls around the globe. These images are used to bully, humiliate, and threaten women and girls,” Chiu alleges.

The undress websites operate as businesses, often running in the shadows—proactively providing very few details about who owns them or how they operate. Websites run by the same people often look similar and use nearly identical terms and conditions. Some offer more than a dozen different languages, demonstrating the worldwide nature of the problem. Some Telegram channels linked to the websites have tens of thousands of members each.

The websites are also under constant development: They frequently post about new features they are producing—with one claiming their AI can customize how women’s bodies look and allow “uploads from Instagram.” The websites generally charge people to generate images and can run affiliate schemes to encourage people to share them; some have pooled together into a collective to create their own cryptocurrency that could be used to pay for images.

A person identifying themself as Alexander August and as the CEO of one of the websites responded to WIRED, saying they “understand and acknowledge the concerns regarding the potential misuse of our technology.” The person claims the website has put in place various safety mechanisms to prevent images of minors being created. “We are committed to taking social responsibility and are open to collaborating with official bodies to enhance transparency, safety, and reliability in our services,” they wrote in an email.

The tech company logins are often presented when someone tries to sign up to the site or clicks on buttons to try generating images. It is unclear how many people will have used the login methods, and most websites also allow people to create accounts with just their email address. However, of the websites reviewed, the majority had implemented the sign-in APIs of more than one technology company, with Sign-In With Google being the most widely used. When this option is clicked, prompts from the Google system say the website will get people’s name, email addresses, language preferences, and profile picture.

Google’s sign-in system also reveals some information about the developer accounts linked to a website. For example, four websites are linked to one Gmail account; another six websites are linked to another. “In order to use Sign in with Google, developers must agree to our Terms of Service, which prohibits the promotion of sexually explicit content as well as behavior or content that defames or harasses others,” says a Google spokesperson, adding that “appropriate action” will be taken if these terms are broken.

Other tech companies that had sign-in systems being used said they have banned accounts after being contacted by WIRED.

Hoffman from Discord says that as well as taking action on the websites flagged by WIRED, the company will “continue to address other websites we become aware of that violate our policies.” Apple spokesperson Shane Bauer says it has terminated multiple developers’ licenses with Apple, and that Sign In With Apple will no longer work on their websites. Adiya Taylor, corporate communications lead at Patreon, says it prohibits accounts that allow or fund access to external tools that can produce adult materials or explicit imagery. “We will take action on any works or accounts on Patreon that are found to be in violation of our Community Guidelines.”

As well as the login systems, several of the websites displayed the logos of Mastercard or Visa, implying they can possibly be used to pay for their services. Visa did not respond to WIRED’s request for comment, while a Mastercard spokesperson says “purchases of nonconsensual deepfake content are not allowed on our network,” and that it takes action when it detects or is made aware of any instances.

On multiple occasions, tech companies and payment providers have taken action against AI services allowing people to generate nonconsensual images or video after media reports about their activities. Clare McGlynn, a professor of law at Durham University who has expertise in the legal regulation of pornography and sexual violence and abuse online, says Big Tech platforms are enabling the growth of undress websites and similar websites by not proactively taking action against them.

“What is concerning is that these are the most basic of security steps and moderation that are missing or not being enforced,” McGlynn says of the sign-in systems being used, adding that it is “wholly inadequate” for companies to react when journalists or campaigners highlight how their rules are being easily dodged. “It is evident that they simply do not care, despite their rhetoric,” McGlynn says. “Otherwise they would have taken these most simple steps to reduce access.”

23 notes
