#deepfake detection

Text

Dutch researchers have developed a promising new method for detecting deepfake videos by analyzing subtle facial changes linked to a person's heartbeat, a technique that could help forensic investigators identify manipulated footage in an era of fast-advancing artificial intelligence, according to the Netherlands Forensic Institute (NFI).

Zeno Geradts, a digital forensics expert at the NFI and professor of Forensic Data Science at the University of Amsterdam (UvA), unveiled the method this week at the European Academy of Forensic Science (EAFS) 2025 conference in Dublin, which runs from May 26 to May 30. His technique focuses on detecting blood flow patterns in the face, specifically the expansion and contraction of small facial veins in sync with the heartbeat.

“As far as I know, this is not yet used in forensic research,” Geradts said in a press statement from the Netherlands Forensic Institute. “We are still in the process of scientific validation, but it is a promising addition to existing methods.”

The new approach uses high-resolution video to detect subtle shifts in skin color caused by blood flow beneath the surface, changes that occur with each heartbeat. These minute shifts are especially visible around the eyes, forehead, and jaw, where blood vessels lie just below the skin. According to Geradts, this biological signal is absent in deepfake videos, making it a key indicator of video authenticity.
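
To make the idea concrete, here is a minimal sketch of how a heartbeat-like rhythm might be looked for in video. It is not the NFI's method, which the article does not describe in detail. It assumes you already have one number per frame (for example, the average green value of a forehead patch) and simply checks whether that series has a dominant rhythm in a plausible heart-rate range. The function name `estimate_pulse_bpm`, the 0.7 to 4.0 Hz band, and the "strength" score are all illustrative assumptions.

```python
import numpy as np


def estimate_pulse_bpm(green_means, fps, lo_hz=0.7, hi_hz=4.0):
    """Look for a dominant rhythm in a per-frame skin-color series.

    green_means: one value per frame (hypothetical input, e.g. the mean
                 green channel of a face patch).
    fps:         frames per second of the video.
    Returns (bpm, strength), where strength is the share of in-band
    spectral power held by the single strongest frequency bin.
    """
    x = np.asarray(green_means, dtype=float)
    x = x - x.mean()                      # drop the constant skin tone
    window = np.hanning(len(x))           # taper to reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(x * window)) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fps)

    # Keep only frequencies that could plausibly be a heartbeat
    # (assumed band: 0.7-4.0 Hz, i.e. 42-240 beats per minute).
    band = (freqs >= lo_hz) & (freqs <= hi_hz)
    band_power = spectrum[band]
    total = band_power.sum()
    if total == 0:                        # perfectly flat signal: no rhythm
        return 0.0, 0.0

    peak = int(np.argmax(band_power))
    bpm = float(freqs[band][peak] * 60.0)
    strength = float(band_power[peak] / total)
    return bpm, strength


if __name__ == "__main__":
    # Synthetic stand-in for real footage: a faint 1.2 Hz flicker plus noise.
    fps = 30.0
    t = np.arange(300) / fps
    rng = np.random.default_rng(0)
    series = 0.5 + 0.01 * np.sin(2 * np.pi * 1.2 * t)
    series += 0.001 * rng.standard_normal(t.size)
    print(estimate_pulse_bpm(series, fps))
```

A low strength score only means no clear rhythm was found in that patch. Compression, lighting changes, and head movement can all hide or mimic the signal, so a sketch like this is a starting point for experimentation, not a verdict on authenticity.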

Bet it only works for deepfake white people.

1 note

Text

Unbridled Technology Produces a Notorious Child: Deepfake

Technology has undoubtedly revolutionized our lives, transforming the way we communicate, work, learn, and interact. Every day, we witness new advancements that enhance convenience and efficiency. However, if not controlled and regulated properly, technology can also become a double-edged sword, leading to unforeseen consequences. One such perilous innovation is deepfake technology, an offspring of Artificial Intelligence (AI) that has begun to create ripples in society.

#deepfake technology#AI deepfake#deepfake videos#deepfake in politics#deepfake laws in India#deepfake cybercrime#Bollywood deepfake scandals#AI-generated content#misinformation#deepfake detection

0 notes

Text

How to Use AI to Detect Deepfake Videos

Deepfake videos, where artificial intelligence (AI) is used to manipulate videos to make people appear as if they are saying or doing things they never did, have become increasingly sophisticated. As these videos pose risks in various areas such as misinformation, fraud, and personal privacy, detecting deepfakes has become critical. Here’s how you can use AI to identify and protect yourself from…

#AI deepfake detection tools#AI tools for deepfakes#deepfake detection#deepfake video analysis#Generative Adversarial Networks

0 notes

Text

https://pi-labs.ai/pi-labs-joins-nvidia-inception/

#deepfake detection#deepfake video#Generative AI#Fake content#Artificial Intelligence#NVIDIA Inception Program

0 notes

Text

The Deepfake Dilemma: Understanding the Technology Behind Artificial Media Manipulation

Introduction: In our visually driven world, the adage “seeing is believing” has taken on a new dimension with the advent of deepfake technology. This innovative yet controversial facet of artificial intelligence (AI) has the remarkable ability to manipulate audiovisual content, blurring the lines between reality and fabrication. As we delve into the complexities of deepfakes, we uncover a realm…

0 notes

Text

https://pi-labs.ai/

deepfake detection

0 notes

Text

Why Deepfakes Are Dangerous and How to Identify Them

Spotting deepfakes requires a keen eye and an understanding of telltale signs. Unnatural facial expressions, awkward body movements, and inconsistencies in coloring or alignment can betray the artificial nature of manipulated media. Lack of emotion or unusual eye movements may also indicate a deepfake. You can check whether a video is real by looking at news from reliable sources and searching for similar images online. These steps can help you find changes or defects in an AI-generated video.

0 notes

Text

"By comparing the reflections in an individual’s eyeballs, Owolabi could correctly predict whether the image was a fake about 70% of the time…researchers found that the Gini index was better than the CAS system at predicting whether an image had been manipulated."

4 notes
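
For anyone curious what a Gini index comparison of eye reflections could look like in practice, here is a rough sketch. The quote above does not spell out the researchers' exact procedure, so this is an assumption-heavy illustration: it computes a Gini coefficient (a measure of how unevenly brightness is spread) for a patch from each eye and reports the gap between the two. The names `gini` and `gini_gap` are hypothetical, and no decision threshold is implied.

```python
import numpy as np


def gini(values):
    """Gini coefficient of non-negative values (0 = perfectly even)."""
    x = np.sort(np.asarray(values, dtype=float).ravel())
    n = x.size
    total = x.sum()
    if n == 0 or total == 0:
        return 0.0
    ranks = np.arange(1, n + 1)
    # Standard rank-based formula on the sorted values.
    return float(2.0 * np.sum(ranks * x) / (n * total) - (n + 1.0) / n)


def gini_gap(left_eye, right_eye):
    """Absolute difference between the two eyes' Gini coefficients.

    left_eye / right_eye: pixel brightness arrays cropped around each
    eye's reflection (how to crop them is outside this sketch).
    """
    return abs(gini(left_eye) - gini(right_eye))
```

The intuition is that both eyes in a real photo see the same light sources, so their reflections should be similarly distributed; a large gap is a hint worth a closer look, not proof of manipulation.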

Text

#spoof detection#fake fingerprint detection#biometric security#biometric solutions#optical fingerprint scanner#optical scanner#capacitive fingerprint sensor#capacitive fingerprint scanner#capacitive scanner#biometric spoofing#fingerprint spoofing#anti-spoofing technology#what is spoof detection#spoofing biometrics#anti spoofing technology#spoofing detection#what is spoof#fingerprint spoof#spoof proof#spoof identity#biometric spoofing and deepfake detection#spoof fingerprint

0 notes

Video

youtube

Deepfakes Are Getting Deeper

#youtube#Deepfakes AI cybersecurity disinformation fake scams protection deepfake detection tutorial

0 notes

Text

The Scary Effects of Deep Fakes in Our Lives

Dear Subscribers, in this post we want to share an interactive podcast and an insightful article that we curated on Medium and Substack for your information. The story is titled “Why Deep Fakes Stop Thought Leaders Like Me from Creating YouTube Videos.” Here is the link to the interactive podcast about deep fakes: “Why Deepfakes Are So Dangerous and What We Can Do to Lower Risks.” Here is the…

#Blockchain Verification for Creators#Deepfake Dangers for Thought Leaders#Deepfake Detection Tools#Deepfake Prevention Tips#Deepfake Risks for Influencers#Digital Security for Creators#How to Protect Against Deepfakes#Online Content Authentication#Protecting Digital Footprint#YouTube Deepfake Threat

0 notes

Text

How to Determine if an Image is AI Generated?

In the ever-expanding digital landscape, the proliferation of AI-generated images has become a defining characteristic of the modern era. With algorithms wielding the power to conjure remarkably realistic visuals, the question of how to tell if an image is AI generated has taken center stage. These AI-generated images, ranging from deepfakes to computer-generated scenes, present a myriad of…

#ai art#ai creativity#ai generator#ai image#AI-generated images#art inspiration#Blockchain authentication#creative technology#Deep Learning#Deepfakes#Digital Creativity#Digital manipulation#Image authenticity#Image forgery detection#Machine Learning#Machine learning algorithms#Media literacy#Synthetic media

0 notes

Text

What is Deepfake Technology

Deepfakes can be used for many purposes, from enhancing video games to supporting medical research. However, they can also be used for malicious purposes. Deepfakes are created using machine learning algorithms to make or modify media. These algorithms take into account factors such as lighting, facial expressions, and the cadence of a person’s voice. Are deepfakes illegal? While deepfakes can have…

#Are deepfakes good or bad?#Is deepfakes illegal?#What are some examples of deepfakes?#What is a good use of deepfake technology?#What technology is used in deepfake detection?

0 notes

Text

How To Stay Away From Deepfakes?

Generative AI is getting more proficient at creating deepfakes that can sound and look realistic. As a result, some of the more sophisticated spoofers have taken social engineering attacks to a more sinister level.

0 notes

Note

Looking at some of your work, it is stunning, but it is very similar in style to AI artwork. Do you have any recommendations for how to tell photography like yours apart from AI?

I've been thinking about this. And this may sound controversial at first, but I'm hoping people will hear me out.

We should stop trying so hard to detect AI art.

I think we should all lift that burden from our brains.

I have often talked about "woke goggles." Where conservatives have lost the ability to enjoy anything because they are hypervigilant about detecting anything woke. They've cursed themselves into just hating everything. All they have left is the "God's Not Dead" Cinematic Universe.

And I worry people are getting AI goggles now. They are so concerned about accidentally enjoying robot art and hurting artists that they have overcorrected to the point where they are hurting artists.

One cannot say "AI is all soulless slop that always looks bad" and then accuse a real artist of making something that looks like AI without hurting them. The accusation carries the baggage of all of the "slop" comments along with it. This crusade is causing collateral damage to the very artists we are trying to protect.

Yes, we need to be cautious about malicious AI images. Misinformation and deepfakes are going to be a big problem. People using AI imagery for profit is already a mess. But if you are cruising your feed and like a cool sci-fi robot gal or a photo of a waterfall and it turns out to be AI... that's fine.

It was trained on the work of real artists, and AI is going to create some cool shit because of that.

Honestly, I think a lot of the worst slop is because the dipshits creating the prompts have no artistic taste. People keep blaming the AI for how bad it looks and often don't consider it is a product of the loser who published it.

There is plenty of non-slop out there that has fooled me. And, like it or not, it is going to get harder and harder to tell what is AI. Until there are better tools or better regulations, I don't think there is much we can do to avoid enjoying AI art every once in a while. If only by accident.

Current "AI detectors" are mostly a scam. Even the best forensic-level AI image detectors struggle to stay above 70–80% accuracy across a wide range of models and image types. And that's in controlled lab conditions.

Free online tools often drop to near coin-flip accuracy (50–60%), especially with newer image generators and post-processing applied.

The best way to avoid AI imagery is to look at an artist's body of work. It's much harder to create consistent, non-obvious fake images in a large sample size. That is usually enough to have confidence in authenticity. Plus, if they have posted similar art before 2022, you can pretty much rule out any shenanigans.

Otis literally died before genAI was available.

But images you see in the wild, just let yourself enjoy them if that is what your brain wants to do. It'll be okay.

I just think we are attacking this backwards. If we want to protect artists, we need to support them.

Calling out random AI art does not support them.

It does not put money in their pockets.

It does not grow their audience.

Over a decade ago I tried to lead a fight to create better systems of attribution on websites like Reddit and Imgur. I even spoke to the Imgur team after an article was written about me.

I asked them to allow sources on their posts and to develop tech that would help people find where an image came from. They said they were "working on it" and it never manifested.

IMAGE SHARING SITES STEAL MORE FROM ARTISTS THAN AI.

But we just kind of accepted it. No one really joined me in my fight. The prevailing defeatist attitude was, "That's just the way it is."

I think now is the time to demand better attribution systems. We need to be vigilant about making sure as many posts as possible have good sourcing. If an image on Reddit goes viral, the top comment should be the source. And if it isn't, you should try to find it and add it.

Just to be clear, "credit to the original artist" is NOT proper attribution.

And perhaps we can lobby these image sharing sites to create better sourcing systems and tools. They could even use fucking AI to find the earliest posted version of an image.

And it would be nice if it didn't require people to go into the comments to find the source. It could just be in the headline. They could even create little badges "made by a human" for verified artists.

Good attribution helps artists grow their audience. It is one of the single most effective things you can do to help them.

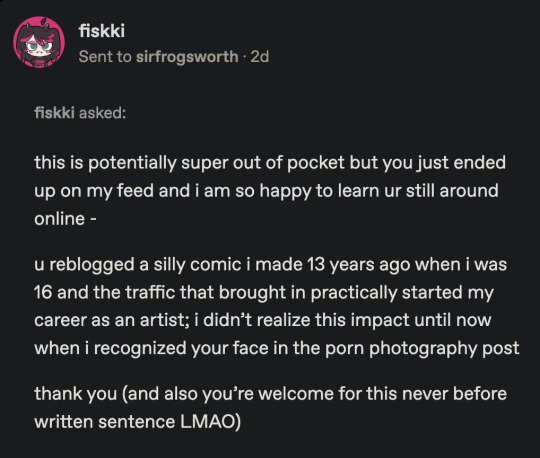

I literally just got this message...

There are maybe 10 popular artists whose audiences I helped grow early on, just because I reblogged their work and added links to all of their social media. I even hired my best friend to add sourcing information to every post because I believed so much in good attribution.

Calling out AI art may feel good in the moment. You caught someone trying to trick people and it feels like justice. But, in most cases, the tangible benefits to real artists seem small. It impedes your ability to enjoy art without always being suspicious. And the risk of telling someone you think they make soulless slop doesn't seem worth it.

But putting that time and effort into attribution *would* be worth it. I have proven it time and time again.

I also think people should consider having a monthly art budget. I don't care if it is $5. But if we all commit to seeking out cool artists and being their collective patrons, we could really make a difference and keep real art alive. Just commit to finding a cool new artist every month and financially contributing to them in some way.

On a bigger scale I think advocating for universal basic income, art grants for education and creation, and government regulation of AI would all be helpful long term goals. Though I think our friends in Europe may have to take the lead on regulation at the moment.

So...

Stop worrying about enjoying or calling out AI art.

Demand better attribution from image sharing sites.

Make sure all art has a source listed.

Start an art budget.

Advocate for better regulations.

585 notes
