#docker-it-scala

Text

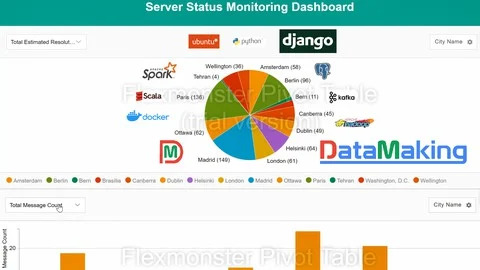

Real Time Spark Project for Beginners: Hadoop, Spark, Docker

🚀 Building a Real-Time Data Pipeline for Server Monitoring Using Kafka, Spark, Hadoop, PostgreSQL & Django

In today’s data centers, various types of servers constantly generate vast volumes of real-time event data—each event representing the server’s status. To ensure stability and minimize downtime, monitoring teams need instant insights into this data to detect and resolve issues swiftly.

To meet this demand, a scalable and efficient real-time data pipeline architecture is essential. Here’s how we’re building it:

🧩 Tech Stack Overview:

Apache Kafka acts as the real-time data ingestion layer, handling high-throughput event streams with minimal latency.

Apache Spark (Scala + PySpark), running on a Hadoop cluster (via Docker), performs large-scale, fault-tolerant data processing and analytics.

Hadoop enables distributed storage and computation, forming the backbone of our big data processing layer.

PostgreSQL stores the processed insights for long-term use and querying.

Django serves as the web framework, enabling dynamic dashboards and APIs.

Flexmonster powers data visualization, delivering real-time, interactive insights to monitoring teams.

🔍 Why This Stack?

Scalability: Each tool is designed to handle massive data volumes.

Real-time processing: Kafka + Spark combo ensures minimal lag in generating insights.

Interactivity: Flexmonster with Django provides a user-friendly, interactive frontend.

Containerized: Docker simplifies deployment and management.

This architecture empowers data center teams to monitor server statuses live, quickly detect anomalies, and improve infrastructure reliability.
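As a rough illustration of how the stages fit together, here is a toy, in-memory sketch of the ingest, process, and store flow. It does not use Kafka, Spark, or PostgreSQL; the `ServerEvent` fields, the status values, and the function names are all assumptions made up for this example, and each function is only a stand-in for the real layer.

```python
from collections import Counter, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerEvent:
    server_id: str
    status: str      # hypothetical values: "OK", "WARN", "DOWN"
    timestamp: int   # seconds since an arbitrary start


def ingest(events):
    """Stand-in for the Kafka layer: buffer events in arrival order."""
    return deque(events)


def process(queue, window_seconds):
    """Stand-in for the Spark layer: count statuses per tumbling time window."""
    counts = {}
    while queue:
        event = queue.popleft()
        window_start = event.timestamp - event.timestamp % window_seconds
        counts.setdefault(window_start, Counter())[event.status] += 1
    return counts


def store(counts):
    """Stand-in for the PostgreSQL layer: flatten to sorted rows for a dashboard."""
    return [
        (window, status, n)
        for window in sorted(counts)
        for status, n in sorted(counts[window].items())
    ]


sample_events = [
    ServerEvent("web-1", "OK", 0),
    ServerEvent("web-2", "DOWN", 5),
    ServerEvent("web-1", "OK", 12),
    ServerEvent("db-1", "WARN", 61),
]
rows = store(process(ingest(sample_events), window_seconds=60))
```

Each row is a `(window_start, status, count)` tuple, which is roughly the shape a dashboard query would read. In a real deployment the windowing would be done by the stream processor rather than by hand.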

Stay tuned for detailed implementation guides and performance benchmarks!

0 notes

Text

Big Data Course in Kochi: Transforming Careers in the Age of Information

In today’s hyper-connected world, data is being generated at an unprecedented rate. Every click on a website, every transaction, every social media interaction — all of it contributes to the vast oceans of information known as Big Data. Organizations across industries now recognize the strategic value of this data and are eager to hire professionals who can analyze and extract meaningful insights from it.

This growing demand has made a big data course in Kochi one of the most sought-after educational programs for tech enthusiasts, IT professionals, and graduates looking to enter the data-driven future of work.

Understanding Big Data and Its Relevance

Big Data refers to datasets that are too large or complex for traditional data processing applications. It’s commonly defined by the 5 V’s:

Volume – Massive amounts of data generated every second

Velocity – The speed at which data is created and processed

Variety – Data comes in various forms, from structured to unstructured

Veracity – Quality and reliability of the data

Value – The insights and business benefits extracted from data

These characteristics make Big Data a crucial resource for industries ranging from healthcare and finance to retail and logistics. Trained professionals are needed to collect, clean, store, and analyze this data using modern tools and platforms.

Why Enroll in a Big Data Course?

Pursuing a big data course in Kochi can open up diverse opportunities in data analytics, data engineering, business intelligence, and beyond. Here's why it's a smart move:

1. High Demand for Big Data Professionals

There’s a huge gap between the demand for big data professionals and the current supply. Companies are actively seeking individuals who can handle tools like Hadoop, Spark, and NoSQL databases, as well as data visualization platforms.

2. Lucrative Career Opportunities

Big data engineers, analysts, and architects earn some of the highest salaries in the tech sector. Even entry-level roles can offer impressive compensation packages, especially with relevant certifications.

3. Cross-Industry Application

Skills learned in a big data course in Kochi are transferable across sectors such as healthcare, e-commerce, telecommunications, banking, and more.

4. Enhanced Decision-Making Skills

With big data, companies make smarter business decisions based on predictive analytics, customer behavior modeling, and real-time reporting. Learning how to influence those decisions makes you a valuable asset.

What You’ll Learn in a Big Data Course

A top-tier big data course in Kochi covers both the foundational concepts and the technical skills required to thrive in this field.

1. Core Concepts of Big Data

Understanding what makes data “big,” how it's collected, and why it matters is crucial before diving into tools and platforms.

2. Data Storage and Processing

You'll gain hands-on experience with distributed systems such as:

Hadoop Ecosystem: HDFS, MapReduce, Hive, Pig, HBase

Apache Spark: Real-time processing and machine learning capabilities

NoSQL Databases: MongoDB, Cassandra for unstructured data handling

3. Data Integration and ETL

Learn how to extract, transform, and load (ETL) data from multiple sources into big data platforms.
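To make the three ETL steps concrete, here is a minimal sketch using only the Python standard library. The CSV content, the `payments` table, and the cleaning rules are invented for illustration; a real big data platform would replace the in-memory SQLite database with a distributed store.

```python
import csv
import io
import sqlite3

# Hypothetical raw input with messy whitespace, a missing amount, and mixed case.
RAW_CSV = """customer,amount,currency
alice, 10.50 ,usd
bob,,usd
carol,7.25,USD
"""


def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows with no amount, trim whitespace, normalise case."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue
        cleaned.append(
            (row["customer"].strip(), float(amount), row["currency"].strip().upper())
        )
    return cleaned


def load(rows, connection):
    """Load: write the cleaned rows into a table."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS payments (customer TEXT, amount REAL, currency TEXT)"
    )
    connection.executemany("INSERT INTO payments VALUES (?, ?, ?)", rows)
    connection.commit()


connection = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), connection)
total = connection.execute("SELECT SUM(amount) FROM payments").fetchone()[0]
```

Keeping the three steps as separate functions makes it easy to test the transform rules on their own before pointing the load step at a real target.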

4. Data Analysis and Visualization

Training includes tools for querying large datasets and visualizing insights using:

Tableau

Power BI

Python/R libraries for data visualization

5. Programming Skills

Big data professionals often need to be proficient in:

Java

Python

Scala

SQL

6. Cloud and DevOps Integration

Modern data platforms often operate on cloud infrastructure. You’ll gain familiarity with AWS, Azure, and GCP, along with containerization (Docker) and orchestration (Kubernetes).

7. Project Work

A well-rounded course includes capstone projects simulating real business problems—such as customer segmentation, fraud detection, or recommendation systems.

Kochi: A Thriving Destination for Big Data Learning

Kochi has evolved into a leading IT and educational hub in South India, making it an ideal place to pursue a big data course.

1. IT Infrastructure

Home to major IT parks like Infopark and SmartCity, Kochi hosts numerous startups and global IT firms that actively recruit big data professionals.

2. Cost-Effective Learning

Compared to metros like Bangalore or Hyderabad, Kochi offers high-quality education and living at a lower cost.

3. Talent Ecosystem

With a strong base of engineering colleges and tech institutes, Kochi provides a rich talent pool and a thriving tech community for networking.

4. Career Opportunities

Kochi’s booming IT industry provides immediate placement potential after course completion, especially for well-trained candidates.

What to Look for in a Big Data Course?

When choosing a big data course in Kochi, consider the following:

Expert Instructors: Trainers with industry experience in data engineering or analytics

Comprehensive Curriculum: Courses should include Hadoop, Spark, data lakes, ETL pipelines, cloud deployment, and visualization tools

Hands-On Projects: Theoretical knowledge is incomplete without practical implementation

Career Support: Resume building, interview preparation, and placement assistance

Flexible Learning Options: Online, weekend, or hybrid courses for working professionals

Zoople Technologies: Leading the Way in Big Data Training

If you’re searching for a reliable and career-oriented big data course in Kochi, look no further than Zoople Technologies—a name synonymous with quality tech education and industry-driven training.

Why Choose Zoople Technologies?

Industry-Relevant Curriculum: Zoople offers a comprehensive, updated big data syllabus designed in collaboration with real-world professionals.

Experienced Trainers: Learn from data scientists and engineers with years of experience in multinational companies.

Hands-On Training: Their learning model emphasizes practical exposure, with real-time projects and live data scenarios.

Placement Assistance: Zoople has a dedicated team to help students with job readiness—mock interviews, resume support, and direct placement opportunities.

Modern Learning Infrastructure: With smart classrooms, cloud labs, and flexible learning modes, students can learn in a professional, tech-enabled environment.

Strong Alumni Network: Zoople’s graduates are placed in top firms across India and abroad, and often return as guest mentors or recruiters.

Zoople Technologies has cemented its position as a go-to institute for aspiring data professionals. By enrolling in their big data course in Kochi, you’re not just learning technology—you’re building a future-proof career.

Final Thoughts

Big data is more than a trend—it's a transformative force shaping the future of business and technology. As organizations continue to invest in data-driven strategies, the demand for skilled professionals will only grow.

By choosing a comprehensive big data course in Kochi, you position yourself at the forefront of this evolution. And with a trusted partner like Zoople Technologies, you can rest assured that your training will be rigorous, relevant, and career-ready.

Whether you're a student, a working professional, or someone looking to switch careers, now is the perfect time to step into the world of big data—and Kochi is the ideal place to begin.

0 notes

Text

Software Engineering Lead - Java, Python, ETL

into technical design to support delivery Hands-on experience with ETL, Python, Spark, Scala, Java, Docker, Kubernetes. Hands… in writing a platform level code in either Java or Python Basic knowledge on Angular or ReactJS, Rest API Knowledge… Apply Now

0 notes

Text

What are the top data science tools every data scientist should know?

Data scientists use a variety of tools to analyze data, build models, and visualize results. Here are some of the top data science tools every data scientist should know:

1. Programming Languages

Python: Widely used for its simplicity and extensive libraries (e.g., Pandas, NumPy, SciPy, Scikit-learn, TensorFlow, Keras).

R: Excellent for statistical analysis and visualization, with packages like ggplot2 and dplyr.

2. Data Visualization Tools

Tableau: User-friendly tool for creating interactive and shareable dashboards.

Matplotlib and Seaborn: Python libraries for creating static, animated, and interactive visualizations.

Power BI: Microsoft’s business analytics service for visualizing data and sharing insights.

3. Data Manipulation and Analysis

Pandas: A Python library for data manipulation and analysis, essential for data wrangling.

NumPy: Fundamental package for numerical computing in Python.

4. Machine Learning Frameworks

Scikit-learn: A Python library for classical machine learning algorithms.

TensorFlow: Open-source library for machine learning and deep learning, developed by Google.

PyTorch: A deep learning framework favored for its dynamic computation graph and ease of use.

5. Big Data Technologies

Apache Spark: A unified analytics engine for big data processing, offering APIs in Java, Scala, Python, and R.

Hadoop: Framework for distributed storage and processing of large datasets.

6. Database Management

SQL: Essential for querying and managing relational databases.

MongoDB: A NoSQL database for handling unstructured data.

7. Integrated Development Environments (IDEs)

Jupyter Notebook: An interactive notebook environment that allows for code, visualizations, and text to be combined.

RStudio: An IDE specifically for R, supporting various features for data science projects.

8. Version Control

Git: Essential for version control, allowing data scientists to collaborate and manage code effectively.

9. Collaboration and Workflow Management

Apache Airflow: A platform to programmatically author, schedule, and monitor workflows.

Docker: Containerization tool that allows for consistent environments across development and production.

10. Cloud Platforms

AWS, Google Cloud, Microsoft Azure: Cloud services providing a range of tools for storage, computing, and machine learning.

0 notes

Text

Spring Boot vs Quarkus: A Comparative Analysis for Modern Java Development

Liberating industry with Java

Java has been a great asset for developers in raising the industry to higher standards. Java has many built-in libraries and frameworks, which make it easy for developers to build applications and websites. A framework is a set of pre-written code that developers use to build applications. Frameworks provide functions and classes that handle hardware control, input processing, and communication with system applications. The main reason to use Java frameworks is that they provide a consistent design pattern and structure for creating applications, which improves code maintainability. This consistency makes it easier for developers to understand and change the code, reducing bugs and errors. Several frameworks are used in Java, such as SpringBoot, Quarkus, and Micronaut. In this blog, we will look at the differences between SpringBoot and Quarkus and at the advantages of using them in the development process.

Boosting development with SpringBoot and Quarkus:

SpringBoot is an open-source framework that supports Java, Groovy, and Kotlin and runs on the JVM with JIT compilation, which makes it easier to create, configure, and run microservice web applications. It is part of the Spring framework, so developers can use Spring framework features to build and extend Spring applications. It reduces the amount of code needed and speeds up development, thanks to automatic configuration driven by Java annotations.

Quarkus is also an open-source Java framework that supports Scala, Java, and Kotlin. The main reason to choose Quarkus is to simplify the development and deployment of Java applications in container environments such as Docker and Kubernetes. It is also used for developing microservice Java applications with minimal resource consumption. It is easy to use and places lower demands on hardware resources when running applications.

Unbinding the differences between SpringBoot and Quarkus

Quarkus generally performs better in containers because it is a Kubernetes-native framework, designed specifically to run in a containerized environment. It can also use an ahead-of-time (AOT) compiler to compile Java bytecode into native code, which results in faster start-up time and lower memory usage. SpringBoot, on the other hand, is a traditional Java framework that uses the Java Virtual Machine (JVM) to run applications. SpringBoot can also run in a containerized environment, but it does not offer the same level of performance optimization as Quarkus.

The greatest difference between Quarkus and SpringBoot is the size of the frameworks. SpringBoot is a well-established and feature-rich framework, but it comes with many dependencies, which increase the application size. Quarkus, on the other hand, is a newer framework with a much smaller runtime footprint than SpringBoot, and it includes features for optimizing the size of the application. Because SpringBoot has been in the industry for a long time, it has a larger community and a vast ecosystem of libraries and plugins. Quarkus is relatively new, and although it is attracting many developers, its community is still small compared with SpringBoot's.

Conclusion

From this blog, we can understand the impact of Java and its crucial role in the development of applications. SpringBoot and Quarkus have made quite an impact on how applications and websites are developed. Both of these frameworks have been a great asset for developers creating interactive applications. Choosing the right Java application development company also plays an important role in a company’s growth.

Pattem Digital is a leading Java app development company that helps meet current business needs and demands. Our talented team of developers guides our clients throughout the project. We work on the latest technologies and provide the best results in line with current market trends.

0 notes

Text

intellij idea ultimate vs webstorm Flutter

Here’s a comparison between IntelliJ IDEA Ultimate and WebStorm:

IntelliJ IDEA Ultimate vs. WebStorm

Overview

IntelliJ IDEA Ultimate:

A comprehensive IDE primarily for Java, but supports various languages and frameworks.

Ideal for full-stack and backend developers who need a robust tool for multiple technologies.

WebStorm:

A specialized IDE focused on JavaScript, TypeScript, and web development.

Tailored for frontend developers with built-in support for modern frameworks.

Features Comparison

Language Support:

IntelliJ IDEA Ultimate:

Supports Java, Kotlin, Scala, Groovy, and many more.

Excellent for mixed projects involving different languages.

WebStorm:

Focused on JavaScript, TypeScript, HTML, and CSS.

Offers deep integration with frontend frameworks like React, Angular, and Vue.

Performance:

IntelliJ IDEA Ultimate:

Powerful, but can be resource-intensive, especially with large projects.

WebStorm:

Lighter compared to IntelliJ, optimized for web projects.

Plugins and Integrations:

IntelliJ IDEA Ultimate:

Extensive plugin marketplace, including support for database tools, Docker, and version control.

WebStorm:

Supports essential plugins for web development; most WebStorm features are also available in IntelliJ.

User Experience:

IntelliJ IDEA Ultimate:

Rich features, may have a steeper learning curve due to its complexity.

Suitable for developers who need an all-in-one solution.

WebStorm:

Streamlined and focused interface, easy to navigate for web developers.

Provides a more targeted experience with fewer distractions.

Debugging and Testing:

IntelliJ IDEA Ultimate:

Comprehensive debugging tools for various languages.

Supports unit testing frameworks and integration testing.

WebStorm:

Robust debugging for JavaScript and TypeScript.

Integrated tools for testing libraries like Jest, Mocha, and Jasmine.

Pricing:

IntelliJ IDEA Ultimate:

Higher cost, but includes support for a wide range of languages and tools.

WebStorm:

More affordable, focused on web development features.

Use Cases

Choose IntelliJ IDEA Ultimate if:

You work on full-stack projects or need support for multiple languages.

Your projects involve backend development in addition to web technologies.

You require advanced database and DevOps integrations.

Choose WebStorm if:

Your primary focus is frontend development with JavaScript/TypeScript.

You want a lightweight IDE with specialized tools for web development.

Cost is a significant factor, and you only need web development features.

Conclusion

Both IntelliJ IDEA Ultimate and WebStorm offer powerful features, but the best choice depends on your specific development needs. If you're looking for a dedicated tool for web development, WebStorm is the ideal choice. However, if you require an all-encompassing IDE for a variety of languages and frameworks, IntelliJ IDEA Ultimate is well worth the investment.

#Flutter Training#IntelliJ IDEA#react native#mulesoft#software#react developer#react training#developer#technologies#reactjs

1 note

Text

Mastering MLOps : The Ultimate Guide to Become a MLOps Engineer in 2024

New Post has been published on https://thedigitalinsider.com/mastering-mlops-the-ultimate-guide-to-become-a-mlops-engineer-in-2024/

In the world of Artificial Intelligence (AI) and Machine Learning (ML), a new kind of professional has emerged, bridging the gap between cutting-edge algorithms and real-world deployment. Meet the MLOps Engineer: the person orchestrating the seamless integration of ML models into production environments, ensuring scalability, reliability, and efficiency.

As businesses across industries increasingly embrace AI and ML to gain a competitive edge, the demand for MLOps Engineers has skyrocketed. These highly skilled professionals play a pivotal role in translating theoretical models into practical, production-ready solutions, unlocking the true potential of AI and ML technologies.

If you’re fascinated by the intersection of ML and software engineering, and you thrive on tackling complex challenges, a career as an MLOps Engineer might be the perfect fit. In this comprehensive guide, we’ll explore the essential skills, knowledge, and steps required to become a proficient MLOps Engineer and secure a position in the AI space.

Understanding MLOps

Before delving into the intricacies of becoming an MLOps Engineer, it’s crucial to understand the concept of MLOps itself. MLOps, or Machine Learning Operations, is a multidisciplinary field that combines the principles of ML, software engineering, and DevOps practices to streamline the deployment, monitoring, and maintenance of ML models in production environments.

The MLOps Lifecycle

The MLOps lifecycle involves three primary phases: Design, Model Development, and Operations. Each phase encompasses essential tasks and responsibilities to ensure the seamless integration and maintenance of machine learning models in production environments.

1. Design

Requirements Engineering: Identifying and documenting the requirements for ML solutions.

ML Use-Cases Prioritization: Determining the most impactful ML use cases to focus on.

Data Availability Check: Ensuring that the necessary data is available and accessible for model development.

2. Model Development

Data Engineering: Preparing and processing data to make it suitable for ML model training.

ML Model Engineering: Designing, building, and training ML models.

Model Testing & Validation: Rigorously testing and validating models to ensure they meet performance and accuracy standards.

3. Operations

ML Model Deployment: Implementing and deploying ML models into production environments.

CI/CD Pipelines: Setting up continuous integration and delivery pipelines to automate model updates and deployments.

Monitoring & Triggering: Continuously monitoring model performance and triggering retraining or maintenance as needed.

This structured approach ensures that ML models are effectively developed, deployed, and maintained, maximizing their impact and reliability in real-world applications.

Essential Skills for Becoming an MLOps Engineer

To thrive as an MLOps Engineer, you’ll need to cultivate a diverse set of skills spanning multiple domains. Here are some of the essential skills to develop:

Programming Languages: Proficiency in Python, Java, or Scala is crucial.

Machine Learning Frameworks: Experience with TensorFlow, PyTorch, scikit-learn, or Keras.

Data Engineering: Knowledge of data pipelines, data processing, and storage solutions like Hadoop, Spark, and Kafka.

Cloud Computing: Familiarity with cloud platforms like AWS, GCP, or Azure.

Containerization and Orchestration: Expertise in Docker and Kubernetes.

MLOps Principles and Best Practices

As AI and ML become integral to software products and services, MLOps principles are essential to avoid technical debt and ensure seamless integration of ML models into production.

Iterative-Incremental Process

Design Phase: Focus on business understanding, data availability, and ML use-case prioritization.

ML Experimentation and Development: Implement proof-of-concept models, data engineering, and model engineering.

ML Operations: Deploy and maintain ML models using established DevOps practices.

Automation

Manual Process: Initial level with manual model training and deployment.

ML Pipeline Automation: Automate model training and validation.

CI/CD Pipeline Automation: Implement CI/CD systems for automated ML model deployment.

Versioning

Track ML models and data sets with version control systems to ensure reproducibility and compliance.
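One lightweight way to picture this is content-based versioning: derive a fingerprint from the data and the hyperparameters, and record both next to each model version. The sketch below is an illustration only; the `fingerprint` and `register_version` helpers, the in-memory `registry`, and the `churn-model` name are hypothetical, and dedicated data or model versioning tools do considerably more.

```python
import hashlib
import json


def fingerprint(obj):
    """Return a short, stable SHA-256 fingerprint of JSON-serialisable content."""
    canonical = json.dumps(obj, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def register_version(registry, name, dataset, params):
    """Record which data and hyperparameters produced a model version."""
    entry = {
        "data_version": fingerprint(dataset),
        "params_version": fingerprint(params),
    }
    registry.setdefault(name, []).append(entry)
    return entry


registry = {}
dataset_v1 = [{"x": 1, "y": 0}, {"x": 2, "y": 1}]
dataset_v2 = dataset_v1 + [{"x": 3, "y": 1}]  # one new record changes the fingerprint
first = register_version(registry, "churn-model", dataset_v1, {"lr": 0.1})
second = register_version(registry, "churn-model", dataset_v2, {"lr": 0.1})
```

Because the JSON is serialised with sorted keys, the same content always yields the same fingerprint regardless of key order, so two entries with equal fingerprints can be assumed to share the same inputs.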

Experiment Tracking

Testing

Implement comprehensive testing for features, data, ML models, and infrastructure.

Monitoring

Continuously monitor ML model performance and data dependencies to ensure stability and accuracy.

Continuous X in MLOps

Continuous Integration (CI): Testing and validating data and models.

Continuous Delivery (CD): Automatically deploying ML models.

Continuous Training (CT): Automating retraining of ML models.

Continuous Monitoring (CM): Monitoring production data and model performance.
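Continuous monitoring and continuous training are often linked by a simple rule: when a tracked metric drops below a threshold, retraining is triggered. Here is a minimal sketch of that idea using rolling accuracy. The `AccuracyMonitor` class, the window size, and the threshold are assumptions chosen for illustration, and production systems usually watch more signals than accuracy alone.

```python
from collections import deque


class AccuracyMonitor:
    """Track rolling accuracy over the most recent predictions."""

    def __init__(self, window_size, threshold):
        self.outcomes = deque(maxlen=window_size)  # oldest outcomes fall off
        self.threshold = threshold

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def needs_retraining(self):
        # Only decide once the window is full, to avoid noisy early triggers.
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy() < self.threshold


monitor = AccuracyMonitor(window_size=4, threshold=0.75)
for prediction, actual in [(1, 1), (0, 0), (1, 0), (1, 0)]:
    monitor.record(prediction, actual)
```

With two of the last four predictions wrong, the rolling accuracy falls below the threshold and `monitor.needs_retraining()` returns `True`, which a pipeline could use as the signal to start a retraining job.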

Ensuring Reproducibility

Implement practices to ensure that data processing, ML model training, and deployment produce identical results given the same input.
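A common source of non-reproducible results is unseeded randomness, for example in how data is shuffled before a train/test split. The following sketch shows one way to make that step repeatable with a private, seeded generator. The `train_test_split` helper here is a hypothetical stand-alone function written for this example, not the one from any particular library.

```python
import random


def train_test_split(records, test_fraction, seed):
    """Shuffle with a private, seeded generator so the split is repeatable."""
    rng = random.Random(seed)      # does not touch the global random state
    shuffled = list(records)       # copy, so the caller's data is unchanged
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


records = list(range(10))
run_a = train_test_split(records, test_fraction=0.2, seed=42)
run_b = train_test_split(records, test_fraction=0.2, seed=42)
```

Both runs produce the same split because the seed and the input are the same. Seeding is only one part of reproducibility; pinned dependency versions and versioned data matter as well.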

Key Metrics for ML-Based Software Delivery

Deployment Frequency

Lead Time for Changes

Mean Time To Restore (MTTR)

Change Failure Rate
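These four metrics can be computed from a simple deployment log. The sketch below assumes a hypothetical log format (commit time, deploy time, a failure flag, and a restore time for failed deployments) and an eight-day observation period; real teams would pull this data from their CI/CD and incident tooling.

```python
from datetime import datetime, timedelta

# Hypothetical deployment log; all values are invented for illustration.
deployments = [
    {"committed": datetime(2024, 1, 1, 9), "deployed": datetime(2024, 1, 1, 15),
     "failed": False, "restored": None},
    {"committed": datetime(2024, 1, 2, 9), "deployed": datetime(2024, 1, 3, 9),
     "failed": True, "restored": datetime(2024, 1, 3, 11)},
    {"committed": datetime(2024, 1, 4, 9), "deployed": datetime(2024, 1, 4, 12),
     "failed": False, "restored": None},
    {"committed": datetime(2024, 1, 5, 9), "deployed": datetime(2024, 1, 5, 12),
     "failed": True, "restored": datetime(2024, 1, 5, 16)},
]


def mean(deltas):
    """Average a non-empty list of timedelta values."""
    return sum(deltas, timedelta()) / len(deltas)


def delivery_metrics(log, period_days):
    failures = [d for d in log if d["failed"]]
    return {
        "deployment_frequency_per_day": len(log) / period_days,
        "lead_time_for_changes": mean([d["deployed"] - d["committed"] for d in log]),
        # MTTR is undefined when nothing failed, so report None in that case.
        "mean_time_to_restore": (
            mean([d["restored"] - d["deployed"] for d in failures]) if failures else None
        ),
        "change_failure_rate": len(failures) / len(log),
    }


metrics = delivery_metrics(deployments, period_days=8)
```

For this sample log the result is 0.5 deployments per day, a 9-hour average lead time, a 3-hour mean time to restore, and a change failure rate of 0.5.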

Educational Pathways for Aspiring MLOps Engineers

While there is no single defined educational path to becoming an MLOps Engineer, most successful professionals in this field possess a strong foundation in computer science, software engineering, or a related technical discipline. Here are some common educational pathways to consider:

Bachelor’s Degree: A Bachelor’s degree in Computer Science, Software Engineering, or a related field can provide a solid foundation in programming, algorithms, data structures, and software development principles.

Master’s Degree: Pursuing a Master’s degree in Computer Science, Data Science, or a related field can further enhance your knowledge and skills, particularly in areas like ML, AI, and advanced software engineering concepts.

Specialized Certifications: Obtaining industry-recognized certifications, such as the Google Cloud Professional ML Engineer, AWS Certified Machine Learning – Specialty, or Azure AI Engineer Associate, can demonstrate your expertise and commitment to the field.

Online Courses and Boot Camps: With the rise of online learning platforms, you can access a wealth of courses, boot camps, and specializations tailored specifically for MLOps and related disciplines, offering a flexible and self-paced learning experience. Here are some excellent resources to get started:

YouTube Channels:

Tech with Tim: A great channel for Python programming and machine learning tutorials.

freeCodeCamp.org: Offers comprehensive tutorials on various programming and machine learning topics.

Krish Naik: Focuses on machine learning, data science, and MLOps.

Courses:

Building a Solid Portfolio and Gaining Hands-On Experience

While formal education is essential, hands-on experience is equally crucial for aspiring MLOps Engineers. Building a diverse portfolio of projects and gaining practical experience can significantly enhance your chances of landing a coveted job in the AI space. Here are some strategies to consider:

Personal Projects: Develop personal projects that showcase your ability to design, implement, and deploy ML models in a production-like environment. These projects can range from image recognition systems to natural language processing applications or predictive analytics solutions.

Open-Source Contributions: Contribute to open-source projects related to MLOps, ML frameworks, or data engineering tools. This not only demonstrates your technical skills but also showcases your ability to collaborate and work within a community.

Internships and Co-ops: Seek internship or co-op opportunities in companies or research labs that focus on AI and ML solutions. These experiences can provide invaluable real-world exposure and allow you to work alongside experienced professionals in the field.

Hackathons and Competitions: Participate in hackathons, data science competitions, or coding challenges that involve ML model development and deployment. These events not only test your skills but also serve as networking opportunities and potential gateways to job opportunities.

Staying Up-to-Date and Continuous Learning

The field of AI and ML is rapidly evolving, with new technologies, tools, and best practices emerging continuously. As an MLOps Engineer, it’s crucial to embrace a growth mindset and prioritize continuous learning. Here are some strategies to stay up-to-date:

Follow Industry Blogs and Publications: Subscribe to reputable blogs, newsletters, and publications focused on MLOps, AI, and ML to stay informed about the latest trends, techniques, and tools.

Attend Conferences and Meetups: Participate in local or virtual conferences, meetups, and workshops related to MLOps, AI, and ML. These events provide opportunities to learn from experts, network with professionals, and gain insights into emerging trends and best practices.

Online Communities and Forums: Join online communities and forums dedicated to MLOps, AI, and ML, where you can engage with peers, ask questions, and share knowledge and experiences.

Continuous Education: Explore online courses, tutorials, and certifications offered by platforms like Coursera, Udacity, or edX to continuously expand your knowledge and stay ahead of the curve.

The MLOps Engineer Career Path and Opportunities

Once you’ve acquired the necessary skills and experience, the career path for an MLOps Engineer offers a wide range of opportunities across various industries. Here are some potential roles and career trajectories to consider:

MLOps Engineer: With experience, you can advance to the role of an MLOps Engineer, where you’ll be responsible for end-to-end management of ML model lifecycles, from deployment to monitoring and optimization. You’ll collaborate closely with data scientists, software engineers, and DevOps teams to ensure the seamless integration of ML solutions.

Senior MLOps Engineer: As a senior MLOps Engineer, you’ll take on leadership roles, overseeing complex MLOps projects and guiding junior team members. You’ll be responsible for designing and implementing scalable and reliable MLOps pipelines, as well as making strategic decisions to optimize ML model performance and efficiency.

MLOps Team Lead or Manager: In this role, you’ll lead a team of MLOps Engineers, coordinating their efforts, setting priorities, and ensuring the successful delivery of ML-powered solutions. You’ll also be responsible for mentoring and developing the team, fostering a culture of continuous learning and innovation.

MLOps Consultant or Architect: As an MLOps Consultant or Architect, you’ll provide expert guidance and strategic advice to organizations seeking to implement or optimize their MLOps practices. You’ll leverage your deep understanding of ML, software engineering, and DevOps principles to design and architect scalable and efficient MLOps solutions tailored to specific business needs.

MLOps Researcher or Evangelist: For those with a passion for pushing the boundaries of MLOps, pursuing a career as an MLOps Researcher or Evangelist can be an exciting path. In these roles, you’ll contribute to the advancement of MLOps practices, tools, and methodologies, collaborating with academic institutions, research labs, or technology companies.

The opportunities within the MLOps field are vast, spanning various industries such as technology, finance, healthcare, retail, and beyond. As AI and ML continue to permeate every aspect of our lives, the demand for skilled MLOps Engineers will only continue to rise, offering diverse and rewarding career prospects.

Learning Source for MLOps

Python Basics

Bash Basics & Command Line Editors

Containerization and Kubernetes

Docker:

Kubernetes:

Machine Learning Fundamentals

MLOps Components

Version Control & CI/CD Pipelines

Orchestration

Final Thoughts

Becoming a proficient MLOps Engineer requires a unique blend of skills, dedication, and a passion for continuous learning. By combining expertise in machine learning, software engineering, and DevOps practices, you’ll be well-equipped to navigate the complex landscape of ML model deployment and management.

As businesses across industries increasingly embrace the power of AI and ML, the demand for skilled MLOps Engineers will continue to soar. By following the steps outlined in this comprehensive guide, investing in your education and hands-on experience, and building a strong professional network, you can position yourself as a valuable asset in the AI space.

#2024#Advice#ai#AI Careers 101:#Algorithms#amp#Analytics#applications#approach#artificial#Artificial Intelligence#automation#AWS#azure#Building#Business#career#career path#Certifications#change#channel#CI/CD#Cloud#cloud computing#coding#collaborate#command#command line#Community#Companies

0 notes

Photo

HIRING: Systems Software Engineer - Cloud Infrastructure / Austin, Texas, US

#devops#cicd#cloud#engineering#career#jobs#jobsearch#recruiting#hiring#AWS#Git#Java#Jira#Lambda#Node#Node.js#Python#Ruby#Scala#Docker#Go#Golang#Kubernetes#Linux#REST

2 notes

Text

docker-it-scala 0.9.0

#scala #docker #integration-testing

Highlights

introduce executor based on Spotify's docker-client

separate docker executors library from core

support for unix-socket

support for linked containers (e.g. Zookeeper + Kafka)

support for Scala 2.12

docker-it-scala is a set of utility classes to simplify integration testing with dockerized services in Scala.

1 note

Text

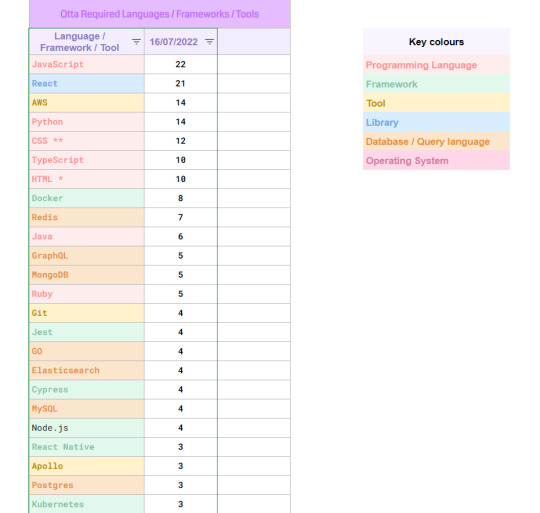

Tech jobs requirements prt 1 | Resource ✨

I decided to do my own mini research on what languages/frameworks/tools and more, are required when looking into getting a job in tech! Read below for more information! 🤗🎀

The website I have used and will be using for this research is Otta (link) which is an employment search service based in London, England that mostly does tech jobs. What they take into consideration are:

ꕤ Where would you like to work? (Remote or in office in UK, Europe, USA and, Toronto + Vancouver in Canada)

ꕤ If remote, where you are based?

ꕤ If you need a visa to work elsewhere

ꕤ What language you can work in? (English, French, Spanish, etc)

ꕤ What type of roles you would like to see in your searches (Software Engineering, Data, Design, Marketing, etc)

ꕤ What level of the roles you would like? (Entry, Junior, Mid-Level, etc)

ꕤ Size of the company you would like to work in (10 employees to 1001+ employees)

ꕤ Favourite industries you would like to work for (Banking, HR, Healthcare, etc) and if you want to exclude any from your searches

ꕤ Favourite technologies (Scala, Git, React, Java, etc) and if you want to exclude any

ꕤ Minimum expected salary (in £, USD $, CAD $, €)

With this information, I created a dummy profile who is REMOTE based IN THE UK with ENTRY/JUNIOR level and speaks ENGLISH. I clicked all for technologies and industries, clicked all the options for the size of the company, and set the minimum salary at £20k (the lower the number, the more job posts will appear). I altered my search requirements to fit these job roles because these are my preferred roles:

ꕤ Software Developer + Engineer

ꕤ Full-Stack

ꕤ Backend

ꕤ Frontend

ꕤ Data

I searched 40 job postings so far but might increase it to 100 to be nice and even. I will keep updating every week for a month to see what is truly the most in-demand in the technology world! I might be doing this research wrong but it's all for fun! ❀

🔥 Week 1 🔥

ꕤ Top programming languages ꕤ

1. JavaScript

2. Python

3. CSS (style sheet language)

4. TypeScript , HTML (markup language)

5. Java

ꕤ Top frameworks ꕤ

1. Docker

2. Jest, Cypress, Node.js

3. React Native

4. Kubernetes

ꕤ Top developer tools ꕤ

1. AWS

2. Git

3. Apollo

4. Metabase

5. Clojure

ꕤ Top libraries ꕤ

1. React

2. Redux

3. NumPy

4. Pandas

ꕤ Top Database + tools + query language ꕤ

1. Redis

2. GraphQL, MongoDB

3. GO , Elasticsearch, MySQL

4. Postgres

5. SQL

#resources#resource#programming#computer science#computing#computer engineering#coding#python#comp sci#100 days of code#studying#studyblr#dataanalytics#deeplearning

171 notes

Text

Noteworthy PHP Development Tools that a PHP Developer should know in 2021!

Hypertext Preprocessor, commonly known as PHP, happens to be one of the most widely used server-side scripting languages for developing web applications and websites. Renowned names like Facebook and WordPress are powered by PHP. The reasons for its popularity can be attributed to the following goodies PHP offers:

Open-source and easy-to-use

Comprehensive documentation

Multiple ready-to-use scripts

Strong community support

Well-supported frameworks

However, to leverage this technology to the fullest and simplify tasks, PHP developers utilize certain tools that enhance programming efficiency and minimize development errors. PHP development tools provide a conducive IDE (Integrated Development Environment) that enhances the productivity of PHP Website Development.

The market is currently flooded with PHP tools, so it can be difficult for a PHP App Development Company to pick the set of tools that fits its project needs. This blog lists the best PHP development tools along with their offerings. A quick read will help you choose the most suitable tool for your PHP development project.

Top PHP Development tools

PHPStorm

PHPStorm, created and promoted by JetBrains, is one of the most widely used IDEs for PHP developers. It is lightweight, smooth, and speedy. This tool works easily with popular PHP frameworks like Laravel, Symfony, Zend Framework, CakePHP, Yii, etc. as well as with contemporary Content Management Systems like WordPress, Drupal, and Magento. Besides PHP, this tool supports JavaScript, C, C#, Visual Basic and C++ languages; and platforms such as Linux, Windows, and Mac OS X. This enterprise-grade IDE requires a paid license for professional developers, but is offered for free to students and teachers so that they can start open-source projects. Tech giants like Wikipedia, Yahoo, Cisco, Salesforce, and Expedia possess PHPStorm IDE licenses.

Features:

Code-rearranging, code completion, zero-configuration, and debugging

Support for Native ZenCoding and extension with numerous other handy plugins such as the VimEditor.

Functions:

Provides live editing support for the leading front-end technologies like JavaScript, HTML5, CSS, TypeScript, Sass, CoffeeScript, Stylus, Less, etc.

It supports code refactoring, debugging, and unit testing

Enables PHP developers to integrate with version control systems, databases, remote deployment, composer, vagrant, rest clients, command-line tools, etc.

Coming to debugging, PHPStorm works with Xdebug and Zend Debugger locally as well as remotely.

Cloud 9

This open-source cloud IDE offers a development eco-system for PHP and numerous other programming languages like HTML5, JavaScript, C++, C, Python, etc. It supports platforms like Mac OS, Solaris, Linux, etc.

Features:

Code reformatting, real-time language analysis, and tabbed file management.

Availability of a wide range of themes

In-built image editor for cropping, rotating, and resizing images

An in-built terminal that allows one to view the command output from the server.

Integrated debugger for setting a breakpoint

Adjustable panels via drag and drop function

Support for keyboard shortcuts resulting in easy access

Functions:

With Cloud 9, one can write, run and debug the code using any browser. Developers can work from any location using a machine connected to the internet.

It facilitates the creation of serverless apps, allowing the tasks of defining resources, executing serverless applications, and remote debugging.

Its pair-programming support, which tracks all inputs in real time, enables developers to share their development eco-system with peers.

Zend Studio

This commercial PHP IDE supports most of the latest PHP versions, specifically PHP 7, and platforms like Linux, Windows, and OS X. This tool boasts an intuitive UI and provides most of the latest functionalities that are needed to quicken PHP web development. Zend Studio is being used by high-profile firms like BNP Paribas, Credit Suisse, DHL, and Agilent Technologies.

Features:

Support for PHP 7 express migration and effortless integration with the Zend server

A sharp code editor supporting JavaScript, PHP, CSS, and HTML

Speedier performance while indexing, validating, and searching for the PHP code

Support for Git Flow, Docker, and the Eclipse plugin environment

Integration with Z-Ray

Debugging with Zend Debugger and Xdebug

Deployment support, including cloud support for Microsoft Azure and Amazon AWS.

Functions:

Enables developers to effortlessly deploy the PHP app on more than one server.

Provides developers the flexibility to write and debug the code without having to spare additional effort or time for these tasks.

Provides support for mobile app development on top of live PHP applications and server backends, simplifying the task of integrating existing websites and web apps with mobile-based applications.

Eclipse

Eclipse is a cross-platform PHP editor and one of the top PHP development tools. It is a perfect pick for large-scale PHP projects. It supports multiple languages – C, C++, Ada, ABAP, COBOL, Haskell, Fortran, JavaScript, D, Julia, Java, NATURAL, Ruby, Python, Scheme, Groovy, Erlang, Clojure, Prolog, Lasso, Scala, etc. – and platforms like Linux, Windows, Solaris, and Mac OS.

Features:

It provides one with a ready-made code template and automatically validates the syntax.

It supports code refactoring – enhancing the code’s internal structure.

It enables remote project management

Functions:

Allows one to choose from a wide range of plugins, easing out the tasks of developing and simplifying the complex PHP code.

Helps in customizing and extending the IDE for fulfilling project requirements.

Supports GUI as well as non-GUI applications.

Codelobster

Codelobster is an Integrated Development Environment that simplifies and modernizes the PHP development process. Its users do not need to remember the names of functions, attributes, tags, and arguments, as these are supplied by auto-complete. It supports languages like PHP, JavaScript, HTML, and CSS and platforms such as Windows, Mac OS, and Linux distributions including Ubuntu, Fedora, and Mint. Additionally, it offers plugins that enable it to work smoothly with myriad technologies like Drupal, Joomla, Twig, JQuery, CodeIgniter, Symfony, Node.js, VueJS, AngularJS, Laravel, Magento, BackboneJS, CakePHP, EmberJS, Phalcon, and Yii.

Offerings:

It includes a built-in, free PHP debugger that enables validating the code locally.

It auto-detects the existing server settings followed by configuring the related files and allowing one to utilize the debugger.

It has the ability to highlight pairs of square brackets and helps in organizing files into the project.

This tool displays a popup list comprising variables and constants.

It allows one to hide code blocks that are presently not being used and to collapse the code for viewing it in detail.

Netbeans

Netbeans, packed with a rich set of features, is quite popular in the realm of PHP Development Services. Its interface is available in several languages, including English, Russian, Japanese, Brazilian Portuguese, and simplified Chinese. Its recent version is lightweight and speedier, and specifically facilitates building PHP-based Web Applications with the most recent PHP versions. This tool is apt for large-scale web app development projects and works with most trending PHP frameworks such as Symfony2, Zend, FuelPHP, CakePHP, Smarty, and WordPress CMS. It supports PHP, HTML5, C, C++, and JavaScript languages and Windows, Linux, MacOS and Solaris platforms.

Features:

Getter and setter generation, quick fixes, code templates, hints, and refactoring.

Code folding and formatting; rectangular selection

Smart code completion and try/catch code completion

Syntax highlighter

DreamWeaver

This popular tool assists one in creating, publishing, and managing websites. A website developed using DreamWeaver can be deployed to any web server.

Offerings:

Ability to create dynamic websites that fit the screen sizes of different devices

Availability of ready-to-use layouts for website development and a built-in HTML validator for code validation.

Workspace customization capabilities

Aptana Studio

Aptana Studio is an open-source PHP development tool used to integrate with multiple client-side and server-side web technologies like PHP, CSS3, Python, RoR, HTML5, Ruby, etc. It is a high-performing and productive PHP IDE.

Features:

Supports the most recent HTML5 specifications

Collaborates with peers using actions like pull, push and merge

IDE customization and Git integration capabilities

The ability to set breakpoints, inspect variables, and control the execution

Functions:

Eases out PHP app development by supporting the debuggers and CLI

Enables programmers to develop and test PHP apps within a single environment

Leverages the flexibilities of Eclipse and also possesses detailed information on the range of support for each element of the popular browsers.

Final Verdict:

I hope this blog has given you clear visibility of the popular PHP tools used for web development and will guide you through selecting the right set of tools for your upcoming project.

To know more about our other core technologies, refer to links below:

React Native App Development Company

Angular App Development Company

ROR App Development

#Php developers#PHP web Development Company#PHP Development Service#PHP based Web Application#PHP Website Development Services#PHP frameworks

1 note

Text

Top 18 Java Developer Skills to Check When Hiring in 2023

Software systems are becoming more complex, and as a result, skilled developers are in growing demand. With Java being one of the most popular programming languages, Java developers are increasingly becoming top choices for businesses, startups, and MNCs. So, for you as an employer, assessing a Java developer’s skills and expertise before hiring them is crucial.

You cannot afford to skip this step if you want to build your own Java dream team. It will help you analyze them better and know if they have key skills for Java programming to meet your project requirements.

This article will discuss the top 18 Java developer skills that will be in demand in 2023. Whether you’re a hiring manager, human resources professional, or technical recruiter, this article is going to be your guiding light. It will assist you in selecting the best Java developer to ensure the success of your next project.

What Skills Should You Look for When Hiring a Java Developer?

The top 18 Java skills to look for in candidates to hire the best talent in 2023:

Apache Kafka:

Apache Kafka is a distributed data streaming platform that allows users to publish, subscribe to, store, and process streams of records in real time. Written in Scala and Java, it was created at LinkedIn and donated to the Apache Software Foundation.

It seeks to fulfill the need of many contemporary systems for a unified, high-throughput, low-latency platform for real-time handling of data feeds. This is a cutting-edge technology that is gaining immense popularity in the Java community.

Microservices:

This buzzword has been around for a couple of years. Microservices made headlines when they were adopted by start-ups like Uber and Netflix. And when we talk about the key Microservices principle, then simplicity is the key.

Microservices divide an application into a number of smaller, composable fragments. This makes them much easier to code, develop, and maintain when compared to monolithic applications.

Using Spring Boot and Spring Cloud to create Microservices is very well supported in the Java world. Because of this, there is a growing need for Java developers who are well-versed in microservices.

Spring Framework:

Another essential skill for Java developers is Spring Framework. Without a doubt, this is the best Java stack. It offers dependency injection, which makes it simpler to write testable code.
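To make the dependency injection point concrete, here is a minimal sketch of the pattern itself. It is written in Python for brevity and does not use Spring or any of its APIs; the class names `SignupService`, `SmtpMailer`, and `FakeMailer` are hypothetical and exist only for this illustration.

```python
class SmtpMailer:
    """Stand-in for a real mail client (hypothetical, not a real library)."""

    def send(self, to, body):
        raise RuntimeError("would talk to a real mail server")


class FakeMailer:
    """Test double that records messages instead of sending them."""

    def __init__(self):
        self.sent = []

    def send(self, to, body):
        self.sent.append((to, body))


class SignupService:
    def __init__(self, mailer):
        # The dependency is passed in (injected) instead of being created here,
        # so a test can hand over a FakeMailer.
        self._mailer = mailer

    def register(self, email):
        self._mailer.send(email, "Welcome!")
        return True
```

Because `SignupService` never constructs its own mailer, a test can pass in `FakeMailer()` and check what was "sent" without touching a mail server. A framework like Spring automates this wiring, but the idea is the same.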

Docker:

The way we create and deliver software is quickly changing thanks to DevOps, and Docker has been a key component. Docker is a container platform that abstracts away the particulars of the environment needed to run your code, such as installing Java, setting the PATH, adding libraries, etc. Docker is very helpful in the software development and deployment process. It makes it simple to replicate the environment without installing servers.

Kotlin:

Kotlin is a JVM language that is widely used to create Android mobile applications. It is not a modified version of Java but a separate language that Google treats as the preferred choice for Android development. Because Kotlin and Java can work together in the same project, learning Kotlin is advantageous for Java developers.

REST:

Since almost all modern web applications expose APIs or use REST API, this is another critical skill for Java development. REST is gradually being replaced by GraphQL, but in the Java world, REST still reigns supreme.

Cloud Computing:

Cloud computing is gradually eclipsing all other forms of technology. The next generation of Java applications will be created for clouds as more businesses migrate to the cloud and major cloud platforms like AWS, Google Cloud Platform, and Microsoft Azure develop and mature.

Maven:

Maven not only fixes the dependency management issue, but it also gives Java projects a standard structure. It greatly reduces the learning curve for novice developers.

Hibernate + JPA:

Hibernate is the dominant Java framework, along with Spring. In the world of Java, it’s actually the most widely used persistence framework. Hibernate becomes a crucial framework for many Java applications because data is a crucial component of every Java application.

It removes the hassle of using JDBC to interact with persistence technology, such as relational databases. And it enables you to concentrate on using objects to build application logic.

Git:

It’s one of the fundamental competencies for all programmers and not just Java developers. Whether it’s an open-source codebase or a closed-source one, Git is used everywhere. The same is true of GitHub.

Service Oriented Architecture (SOA):

As the name implies, service-oriented architecture is a method of software design. Here, application components communicate with one another over a network to provide services to other components.

Vendors, products, and technological advancements have no bearing on the fundamental concepts of service-oriented architecture. Although it sounds very similar to microservice, which also divides the application into multiple services, the main distinction is that microservice is, as its name implies, much smaller in scale.

Because real-world applications are getting increasingly complex and are often built using SOA, there is a high demand for Java developers who understand it.

Elasticsearch:

Elasticsearch is another excellent piece of Java software. You can rely on it to accurately search, analyze, and visualize your data. It is a search engine based on the Lucene library. Elasticsearch is frequently used in conjunction with Kibana, Beats, and Logstash to form the Elastic Stack.

Elasticsearch is becoming more important as every other application now gives users a way of analyzing and viewing their data. And as a result, Java developers with Elasticsearch expertise are in high demand.

TDD:

TDD (test-driven development) is one thing Java developers are strongly advised to learn right now. Although it is a very specialised skill for Java developers, it is the single most effective way to raise your coding quality and increase your confidence as a coder. The test-code-test-refactor cycle of TDD is quick and effective in Java.
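The test-code-test-refactor cycle can be sketched in a few lines. The example below is in Python for brevity rather than Java, and the function `slugify` is a hypothetical example chosen only for illustration; in a Java project the same steps would typically be done with a test framework such as JUnit.

```python
# Step 1 (test): describe the behaviour we want before the code exists.
def test_slugify():
    assert slugify("Hello World") == "hello-world"
    assert slugify("  Java   Skills ") == "java-skills"


# Step 2 (code): the simplest implementation that makes the test pass.
def slugify(title):
    # split() with no arguments drops leading, trailing, and repeated spaces.
    return "-".join(title.lower().split())


# Step 3 (test again): run the test, then refactor with it as a safety net.
test_slugify()
```

The value of the cycle is that every later change to `slugify` can be checked by rerunning `test_slugify()`.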

NoSQL:

NoSQL offers a different approach to data storage that scales better for many applications, especially those that load a chunk of attributes together. This differs from the conventional relational model of data storage, which normalizes the data to try to eliminate redundancy or duplication. There are numerous well-known NoSQL databases on the market, including MongoDB and Cassandra.

Due to the increased amount of data in modern applications, many of them are switching to NoSQL for quicker retrieval and greater scalability. And Java programmers with NoSQL expertise are in high demand.
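The difference between the normalized relational model and loading a chunk of attributes together can be shown with plain Python data structures. This is only a sketch: no real database is involved, and the sample data and function names are made up for illustration.

```python
# Normalized (relational style): attributes are split across "tables"
# and linked by a key, so nothing is stored twice.
users = {1: {"name": "Ada"}}
addresses = [{"user_id": 1, "city": "London"}]
orders = [{"user_id": 1, "item": "keyboard"}, {"user_id": 1, "item": "mouse"}]


def load_user_relational(user_id):
    """Reassemble one user by joining three tables on user_id."""
    return {
        "name": users[user_id]["name"],
        "addresses": [a["city"] for a in addresses if a["user_id"] == user_id],
        "orders": [o["item"] for o in orders if o["user_id"] == user_id],
    }


# Denormalized (document style): everything about the user lives in one record.
user_documents = {
    1: {"name": "Ada", "addresses": ["London"], "orders": ["keyboard", "mouse"]}
}


def load_user_document(user_id):
    """A single lookup returns the whole chunk of attributes."""
    return user_documents[user_id]
```

Both functions return the same data, but the document version needs one lookup instead of three scans, which is the trade-off the paragraph above describes.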

MySQL:

One of the easily accessible relational databases is MySQL, which is also widely used in the Java community. A solid understanding of a relational database like MySQL is very helpful in getting a job as a Java developer because databases are an essential component of all Java applications.

QuarkusIO:

Quarkus is a full-stack, Kubernetes-native Java framework that optimizes Java specifically for containers and makes it a powerful platform for serverless, cloud, and Kubernetes environments. Quarkus is made for Java virtual machines (JVMs) and native compilation.

Red Hat is supporting Quarkus, which is quickly gaining ground for building scalable, high-performance Java applications. Applications built on the Quarkus platform are known for their quick startup times.

Apache Camel:

Apache Camel is another technology Java programmers can learn in 2023. It is an open-source integration framework that enables you to quickly connect numerous data-producing and data-consuming systems. Since data is the foundation of every application and the majority of applications collect data from various systems and provide enriched data later, Apache Camel plays a significant role in data sourcing.

Kubernetes:

Java application deployment practices are evolving quickly. The days of setting up a Tomcat instance, deploying a WAR file, or writing shell scripts to run your primary Java applications are long gone.

Docker and Kubernetes are the primary tools for deploying and scaling Java Microservices in the age of cloud-native Java applications. As a Java developer, you don’t need to be a Kubernetes expert unless you want to become a DevOps engineer, but a basic understanding of Kubernetes is required to understand and deploy your Java application in the cloud.

Are You Ready to Hire the Best Java Developer and Make Your Dream Java Team?

Hiring a Java developer can appear to be a difficult and time-consuming process due to the sheer number of variables involved. But don’t give up; use the advice provided above to assess the Java developers’ proficiency. Additionally, you can ask for assistance from organizations like ours that specialize in connecting the right skill with the appropriate employer. You can hire pre-screened Java developers through BMT who are priceless!

0 notes

Text

Tech For Today Series - Day 1

This is the first article of my Tech series. It's a collection of the basics of programming paradigms, software runtime architecture, development tools, frameworks, libraries, plugins, and Java.

Programming paradigms

Do you know about the ancestors of today's programs? It's better to have a brief idea about them. First-generation computers used hard-wired programming. The second generation used machine language. The third generation started to use high-level languages, and the fourth generation used more advanced high-level languages. Over time, different ways of writing code in high-level languages were introduced. Programming paradigms are a way to classify programming languages based on their features (Wikipedia). There are lots of paradigms, and the most well-known examples are functional programming and object-oriented programming.

The main target of a computer program is to solve a problem with the right concept. Different parts of a problem can require different concepts, so it's important that programming languages support many paradigms. Some computer languages support multiple programming paradigms. For example, C++ supports both functional programming and OOP.

In this article we mainly discuss structured programming, non-structured programming, and event-driven programming.

Non Structured Programming

Non-structured programming is the earliest programming paradigm. The code is written line by line with no additional structure, so the entire program is just a list of statements. There are no control structures; later on, the GOTO statement was used to jump between lines. Non-structured programming languages use only basic data types such as numbers, strings, and arrays. Early versions of BASIC, Fortran, COBOL, and MUMPS are examples of languages that use non-structured programming. As the number of lines in the code increases, the program becomes hard to debug and modify, difficult to understand, and error-prone.

Structured programming

When programs grow into large-scale applications, the number of lines of code increases. If the non-structured approach is used, it leads to the problems mentioned above. The structured programming paradigm was introduced to solve this. In the first place, it introduced control structures.

Control Structures.

Sequential - Statements execute one after another

Selection - Used for branching the code (if / if else / switch statements)

Iteration - Used for repeatedly executing a block of code
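The three control structures can be seen together in one small function. This is just an illustrative sketch in Python; the function name `classify_readings` and its labels are made up for the example.

```python
def classify_readings(readings):
    labels = []                       # sequence: statements run one after another
    for value in readings:            # iteration: repeat the block for every value
        if value < 0:                 # selection: choose one branch
            labels.append("negative")
        elif value == 0:
            labels.append("zero")
        else:
            labels.append("positive")
    return labels
```

Calling `classify_readings([-1, 0, 5])` walks the list once and picks one branch per value.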

Control structures are good for managing logic, but when a program contains more logic it becomes difficult to manage. So block-structured (functional) programming and object-oriented programming were introduced. We talk about two types of structured programming in this article: functional (block-structured) programming and object-oriented programming.

Functional programming

This paradigm is concerned with the execution of mathematical functions. A function takes arguments and returns a single result. The functional programming paradigm originates from lambda calculus. " Lambda calculus is framework developed by Alonzo Church to study computations with functions. It can be called as the smallest programming language of the world. It gives the definition of what is computable. Anything that can be computed by lambda calculus is computable. It is equivalent to Turing machine in its ability to compute. It provides a theoretical framework for describing functions and their evaluation. It forms the basis of almost all current functional programming languages. Programming Languages that support functional programming: Haskell, JavaScript, Scala, Erlang, Lisp, ML, Clojure, OCaml, Common Lisp, Racket. " (geeksforgeeks). To see how lambda expressions work in functional programming, refer to the link "Lambda expression in functional programming".

Functional code is deterministic: the output value of a function depends only on the arguments that are passed to it, so calling a function f twice with the same value for an argument x produces the same result f(x) each time. The global state of the system does not affect the result of a function, and execution of a function does not affect the global state of the system. It is referentially transparent (no side effects).

Referential transparency - In functional programs, variables do not change their value once they are defined. There are no assignment statements; if we need to store a value, we define a new variable. Because any variable can be replaced with its actual value at any point of execution, there are no side effects. The state of any variable is constant at any instant. Ex:

x = x + 1 // this changes the value assigned to the variable x.

// so the expression is not referentially transparent.

Functional programming uses a declarative approach.
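To see the difference between a function with side effects and a referentially transparent one, here is a small Python sketch. The names `impure_increment` and `pure_add_one` are hypothetical and only serve the illustration.

```python
counter = 0  # global state


def impure_increment(x):
    """Not referentially transparent: reads and changes global state."""
    global counter
    counter += 1
    return x + counter


def pure_add_one(x):
    """Referentially transparent: the result depends only on the argument."""
    return x + 1
```

Calling `pure_add_one(1)` can always be replaced by its value, while two calls to `impure_increment(1)` give different results because each call changes `counter`.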

Procedural programming paradigm

Procedural programming is based on procedure calls. Procedures are also known as routines, sub-routines, functions, or methods. As a procedural programming language solves problems from the top of the code to the bottom, if a change is required to the program, the developer has to change every line of code that links to the main or original code. The procedural paradigm provides modularity and code reuse. It uses an imperative approach and has side effects.

Event driven programming paradigm

It responds to specific kinds of input (user events such as click, drag/drop, and key-press; schedulers/timers; sensors; messages; hardware interrupts). When events occur asynchronously, they are placed in an event queue as they arise. Each event is then removed from the queue and handled by the main processing loop. As a result, the program may produce output or modify the value of a state variable. Unlike other paradigms, it provides an interface for creating the program, and the user must create the defined classes. JavaScript, ActionScript, Visual Basic, and Elm are examples of languages used for event-driven programming.
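The event queue and main processing loop described above can be sketched in plain Python. This is a simplified, single-threaded illustration; the helper names `on`, `emit`, and `run_loop` and the `state` dictionary are assumptions made for the example, not part of any real GUI toolkit.

```python
from collections import deque

handlers = {}           # event type -> list of handler functions
event_queue = deque()   # events wait here in arrival order
state = {"clicks": 0, "last_key": None}


def on(event_type, handler):
    """Register a handler for one kind of event."""
    handlers.setdefault(event_type, []).append(handler)


def emit(event_type, payload=None):
    """Place an event on the queue as it arises."""
    event_queue.append((event_type, payload))


def run_loop():
    """Main processing loop: remove events one by one and dispatch them."""
    while event_queue:
        event_type, payload = event_queue.popleft()
        for handler in handlers.get(event_type, []):
            handler(payload)


def handle_click(_payload):
    state["clicks"] += 1


def handle_key(key):
    state["last_key"] = key


on("click", handle_click)
on("key", handle_key)
```

After a few `emit(...)` calls, `run_loop()` drains the queue in order and the handlers update `state`, which is the "modify the value of a state variable" step from the text.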

Object oriented Programming

Object-oriented programming is a method of implementation in which programs are organized as a collection of objects which cooperate to solve a problem. Here the program is divided into small subsystems, which are independent units that contain their own data and functions. Those units can be reused to solve many different problems.

Key features of object oriented concept

Object - Objects are instances of classes, which we can use to store data and perform actions.

Class - A class is a blue print of an object.

Abstraction - Abstraction is the process of removing characteristics from ‘something’ in order to reduce it to a set of essential characteristics that is needed for the particular system.

Encapsulation - Process of grouping related attributes and methods together, giving a name to the unit and providing an interface for outsiders to communicate with the unit

Information Hiding - Hide certain information or implementation decisions that are internal to the encapsulation structure

Inheritance - Describes the parent-child relationship between two classes.

Polymorphism - Ability to do something in different ways. In other words, one method with multiple implementations for a certain class of action
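Several of these features can be seen in one short Python sketch. The classes `Shape`, `Rectangle`, and `Circle` are hypothetical examples; note that Python marks internal attributes only by convention (a leading underscore), so information hiding here is weaker than in a language like Java.

```python
import math


class Shape:                           # class: the blueprint
    def __init__(self, name):
        self._name = name              # encapsulated data, internal by convention

    def area(self):
        raise NotImplementedError      # subclasses supply the implementation

    def describe(self):                # public interface for outsiders
        return f"{self._name} with area {self.area():.2f}"


class Rectangle(Shape):                # inheritance: Rectangle is a child of Shape
    def __init__(self, width, height):
        super().__init__("rectangle")
        self._width = width
        self._height = height

    def area(self):                    # polymorphism: one method, many implementations
        return self._width * self._height


class Circle(Shape):
    def __init__(self, radius):
        super().__init__("circle")
        self._radius = radius

    def area(self):
        return math.pi * self._radius ** 2
```

Objects such as `Rectangle(2, 3)` and `Circle(1)` are instances of these classes, and calling `describe()` on either one picks the right `area()` automatically.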

Software Runtime Architecture

Languages can be categorized according to the way they are processed and executed.

Compiled Languages

Scripting Languages

Markup Languages

The communication between the application and the OS needs additional components, which depend on the type of language used to develop the application component.

This is how JS code is executed. JavaScript statements that appear between <script> and </script> tags are executed in order of appearance. When more than one script appears in a file, the scripts are executed in the order in which they appear. If a script calls document.write(), any text passed to that method is inserted into the document immediately after the closing </script> tag and is parsed by the HTML parser when the script finishes running. The same rules apply to scripts included from separate files with the src attribute.

To run the system at different levels, some other tools are used in the industry.

Virtual Machine

Containers/Dockers

Virtual Machine

A virtual machine is hardware or software that enables one computer to behave like another computer system.

Development Tools

"Software development tool is a computer program that software developers use to create, debug, maintain, or otherwise support other programs and applications." (Wikipedia) CASE tools are used throughout the software life cycle.

Feasibility Study - First phase of the SDLC. In this phase, gain a basic understanding of the problem and discuss possible solution strategies (technical feasibility, economic feasibility, operational feasibility, schedule feasibility). Then prepare a document and submit it for management approval.

Requirement Analysis - The goal is to find out exactly what the customer needs. First gather requirements through meetings, interviews, and discussions. Then document them in the Software Requirement Specification (SRS). Uses surveying tools and analyzing tools.

Design - Make decisions about the software, hardware, and system architecture. Record this information in the Design Specification Document (DSD). Uses modelling tools.

Development - A set of developers code the software as per the established design specification, using a chosen programming language. Uses code editors, frameworks, libraries, plugins, and compilers.

Testing - Ensures that the software requirements are in place and that the software works as expected. If any defect is found, developers resolve it and create a new version of the software, which then repeats the testing phase. Uses test automation tools and quality assurance tools.

Deployment and Maintenance - Once the software is error free, give it to the customer to use. If any errors appear, resolve them immediately. For deployment use VMs, containers (Docker), and servers; for maintenance use bug trackers and analytical tools.

CASE software types

Individual tools

Workbenches

Environments

Frameworks Vs Libraries Vs Plugins

Do you know how the output of an HTML document is rendered?

This is how it happens. When the browser receives the raw bytes of data, it converts them into characters. These characters are then parsed into tokens. The parser understands each string in angle brackets, e.g. "<html>" or "<p>", and the set of rules that applies to each of them. After tokenization, the tokens are converted into nodes, and the nodes are linked in a tree data structure known as the DOM, which establishes the relationship between every node. When the document includes CSS files, the raw CSS data goes through the same steps: it is converted to characters, tokenized, turned into nodes, and finally linked into a tree structure called the CSSOM. The browser now holds two independent tree structures, the DOM and the CSSOM, and combines them into the render tree. Next, the browser has to calculate the exact size and position of each object on the page relative to the browser viewport (layout). Finally, the browser paints each node to the screen using the DOM, the CSSOM, and the exact layout.
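The first steps of that pipeline (bytes to characters, characters to tokens, tokens to nodes, nodes to a tree) can be imitated with a toy Java sketch. This is only an illustration under strong assumptions: the Node and TinyDom names are hypothetical, the tokenizer is a single regular expression, and attributes, void elements, comments and malformed markup are ignored. A real browser parser is far more involved.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical, heavily simplified tree node.
class Node {
    final String label;
    final List<Node> children = new ArrayList<>();

    Node(String label) { this.label = label; }

    // Render the tree as nested text, e.g. html(body(p("Hi"))).
    @Override public String toString() {
        if (children.isEmpty()) return label;
        StringBuilder sb = new StringBuilder(label).append("(");
        for (int i = 0; i < children.size(); i++) {
            if (i > 0) sb.append(",");
            sb.append(children.get(i));
        }
        return sb.append(")").toString();
    }
}

class TinyDom {
    // A token is either a tag in angle brackets or a run of text between tags.
    private static final Pattern TOKEN = Pattern.compile("<[^>]+>|[^<]+");

    // Characters -> tokens.
    static List<String> tokenize(String html) {
        List<String> tokens = new ArrayList<>();
        Matcher m = TOKEN.matcher(html);
        while (m.find()) {
            String t = m.group().trim();
            if (!t.isEmpty()) tokens.add(t);
        }
        return tokens;
    }

    // Tokens -> nodes -> tree, using a stack of currently open elements.
    static Node buildTree(List<String> tokens) {
        Node root = new Node("#document");
        Deque<Node> open = new ArrayDeque<>();
        open.push(root);
        for (String t : tokens) {
            if (t.startsWith("</")) {
                if (open.size() > 1) open.pop();          // closing tag: leave the element
            } else if (t.startsWith("<")) {
                Node element = new Node(t.substring(1, t.length() - 1));
                open.peek().children.add(element);        // link to the parent
                open.push(element);                       // opening tag: enter the element
            } else {
                open.peek().children.add(new Node("\"" + t + "\"")); // text node
            }
        }
        return root;
    }

    public static void main(String[] args) {
        // Bytes -> characters.
        byte[] raw = "<html><body><p>Hi</p></body></html>".getBytes(StandardCharsets.UTF_8);
        String chars = new String(raw, StandardCharsets.UTF_8);
        System.out.println(tokenize(chars));
        System.out.println(buildTree(tokenize(chars)));
    }
}
```

The stack mirrors how nesting works: an opening tag becomes a child of whatever element is currently open, and a closing tag steps back up to the parent.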

JAVA

Java is a general-purpose programming language. It is a class-based, object-oriented and concurrent language. It lets application developers "Write Once, Run Anywhere". That means compiled Java code can run on all platforms that support Java without the need for recompilation. Java applications are compiled to bytecode, which can run on any JVM.

Do you know why you have to edit PATH after installing the JDK?

The JDK contains the Java compiler, which compiles your programs. Windows keeps its own executables in SYSTEM32, so they can be called from anywhere. You cannot copy the JDK binaries into SYSTEM32, though, so every time you need to compile a program you would have to type the whole path to the JDK or go to the JDK's binary folder first. PATH exists to cut this clutter: if you add a folder to the PATH environment variable, Windows makes sure that any executable in that folder can be run by name from anywhere, at any time. Adding the JDK's binary folder to PATH therefore keeps the JDK ready all the time, whether your cmd window is in the C: drive, the D: drive or anywhere else. Windows treats it as if it were in SYSTEM32 itself.
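The lookup described above can be imitated in a few lines of Java. This is a rough sketch of the idea, not how Windows actually implements it: the PathLookup class and its find method are hypothetical names, and the sketch ignores details such as the PATHEXT variable that Windows uses to try extensions like .exe automatically.

```java
import java.io.File;
import java.nio.file.Files;
import java.nio.file.InvalidPathException;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Optional;

// Hypothetical helper, for illustration only.
class PathLookup {
    // Walk the folders of a PATH-style string in order and return the first
    // one that contains a regular file with the given name.
    static Optional<Path> find(String executable, String pathValue) {
        if (pathValue == null) return Optional.empty();
        // File.pathSeparator is ";" on Windows and ":" on Unix-like systems.
        for (String dir : pathValue.split(File.pathSeparator)) {
            if (dir.isEmpty()) continue;
            try {
                Path candidate = Paths.get(dir, executable);
                if (Files.isRegularFile(candidate)) return Optional.of(candidate);
            } catch (InvalidPathException e) {
                // Skip PATH entries that are not valid folder names.
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        boolean windows = System.getProperty("os.name").toLowerCase().contains("win");
        String name = windows ? "javac.exe" : "javac";
        System.out.println(find(name, System.getenv("PATH"))
                .map(Path::toString)
                .orElse(name + " is not on PATH"));
    }
}
```

If the program prints that javac is not on PATH, the JDK's binary folder has not been added yet, which is the situation the paragraph above describes.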

Text

Splunk Sr. Software Engineer Jobs in Vancouver 2022

Splunk is recruiting Sr. Software Engineers in Vancouver, British Columbia, and applications are submitted online at Splunk.com. The role offers a good income with additional benefits, and candidates can apply according to their experience. To be considered, candidates must submit an online Splunk job application form, and applying early is suggested to improve selection chances. Subscribe to this newsletter for more Splunk jobs in British Columbia. Lists of other Vancouver jobs, including jobs for students and the latest jobs in Canada, are also shared on this portal.

Current Splunk jobs hiring in Vancouver

Hiring Company: Splunk Careers

Job Type: Sr. Software Engineer Jobs

Employment Type: Full-Time

Jobs Location: Vancouver, British Columbia

Salary Est: CAD 15-29 per hour

Closing Date: 2022-07-09

Splunk Sr. Software Engineer Jobs Requirements

Proficiency in Java or Scala.

Proven experience building and architecting web applications, services, and APIs.

Experience with cloud service providers (AWS/GCP).

Exposure to working with container ecosystems (Docker, Kubernetes, Kubernetes Operator Framework).

Experience with an Agile DevOps engineering environment that effectively uses CI/CD pipelines (Jenkins, GitLab, Bitbucket, etc.).

Ability to learn new technologies quickly and to understand a wide variety of technical challenges to be solved.

Strong oral and written communication skills, including a demonstrated ability to prepare documentation and presentations for technical and non-technical audiences.

Familiarity with Python a plus.

Background in developing products for the Security market a plus.

5 years of related experience with a technical Bachelor's degree, or equivalent practical experience.

How to apply online for Sr. Software Engineer Splunk jobs in Vancouver?

Press the "Apply Now" button on the job listing. The official Splunk careers page, containing all the latest openings, will appear on the screen.

Select the job best suited to you and match your qualifications against the job requirements.

Carefully read all the details of the Splunk opening you want to apply for.

Download the Splunk job application form and fill in all the details.

Review your application and attach copies of the required documents.

Finally, submit the form on the official Splunk careers page and wait for further official instructions.
