#dynamic memory allocation


Dynamic Memory Allocation in C | What is Dynamic Memory Allocation in Hindi

Dynamic memory allocation in C refers to allocating memory at runtime rather than at compile time. This lets a program request memory during execution based on what it actually needs, so it can handle data whose size isn't known in advance instead of reserving a fixed worst-case amount. The memory is allocated from the heap, and the programmer specifies exactly how much is required. For more details, kindly check my website: https://www.removeload.com/dynamic-memory-allocation-in-c
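As a minimal sketch of the idea (the function name `make_squares` is hypothetical, not from the linked tutorial), here the array size `n` is only known at runtime, so the memory is requested from the heap with `malloc()`, checked for failure, and later released by the caller with `free()`:

```c
#include <stdlib.h>

/* Allocate an array of n ints at runtime, fill it with squares, and
   return it. The caller owns the memory and must free() it.
   Returns NULL if n is 0 or if the allocation fails. */
int *make_squares(size_t n)
{
    if (n == 0)
        return NULL;
    int *arr = malloc(n * sizeof *arr);   /* memory comes from the heap */
    if (arr == NULL)
        return NULL;                      /* malloc can fail: always check */
    for (size_t i = 0; i < n; i++)
        arr[i] = (int)(i * i);
    return arr;
}
```

In a real program `n` might come from user input or a file header; the key points are checking the result of `malloc()` and calling `free()` exactly once when you are done.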

0 notes


oh, I didn't get to do too much C since then, but it's very doable. you can use indices into statically-allocated global arrays as a sort of arena or object pool, with the possible benefit of index types being smaller than pointers, but also a lot of the things don't really require dynamic allocation, esp. in a short-lived app, and you can just pass things around by value which C handles pretty well. so it's mostly passing stuff by index or by value instead of pointers (or often not passing anything and working with the entire array at once)
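A minimal sketch of the "indices into a static global array" approach, under assumptions of my own: a fixed capacity of 1024 nodes, a bump allocator that never frees, and `uint16_t` indices with `UINT16_MAX` as the "none" value. All names are hypothetical. Note that no pointers appear anywhere:

```c
#include <stdint.h>

/* A fixed-capacity pool of nodes in a statically allocated global array.
   Nodes refer to each other by uint16_t index instead of by pointer.
   MAX_NODES and NIL are illustrative choices, not from any library. */
#define MAX_NODES 1024
#define NIL UINT16_MAX            /* sentinel meaning "no node" */

typedef uint16_t NodeIdx;         /* 2 bytes, vs. 8 for a pointer on 64-bit targets */

typedef struct {
    int value;
    NodeIdx next;                 /* index of the next node, or NIL */
} Node;

static Node nodes[MAX_NODES];
static NodeIdx node_count = 0;    /* bump allocator: nodes are never freed */

/* Returns the index of a fresh node, or NIL if the pool is full. */
NodeIdx node_new(int value, NodeIdx next)
{
    if (node_count >= MAX_NODES)
        return NIL;
    nodes[node_count] = (Node){ .value = value, .next = next };
    return node_count++;
}

/* Walks a list by following indices and sums its values. */
int list_sum(NodeIdx head)
{
    int sum = 0;
    for (NodeIdx i = head; i != NIL; i = nodes[i].next)
        sum += nodes[i].value;
    return sum;
}
```

The index itself is 2 bytes versus 8 for a pointer on a typical 64-bit target, and the hard cap of `MAX_NODES` is exactly the kind of strict upper bound the next paragraph talks about.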

there are some tangible benefits here too: there are some unusual representations you can only do for flattened trees with interesting properties that you don't normally get, and in general having random access to all the "allocated" objects of a given type is really handy sometimes. plus having to put a strict upper bound on how many of a thing you'll need forces you to think through the design of the program a little more, which is always good. oh and also having all your stuff inside arrays with fixed indices makes serialization/deserialization a breeze.
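To illustrate the flattened-tree and serialization points, here is a sketch assuming a first-child/next-sibling layout stored in one array (a common flattening chosen for illustration; the post does not say which representation it uses). Since links are indices, the whole array can be written and read back as raw bytes with `fwrite()`/`fread()`, with no pointer fix-ups. The `Node` layout and function names are hypothetical:

```c
#include <stdio.h>
#include <stdint.h>

/* A tree flattened into one array: children and siblings are indices
   into the same array, with UINT16_MAX meaning "none". */
typedef struct {
    int32_t  value;
    uint16_t first_child;
    uint16_t next_sibling;
} Node;

/* Writes the node count followed by the nodes; returns 0 on success. */
int save_nodes(FILE *f, const Node nodes[], uint16_t count)
{
    if (fwrite(&count, sizeof count, 1, f) != 1)
        return -1;
    return fwrite(nodes, sizeof nodes[0], count, f) == count ? 0 : -1;
}

/* Reads nodes back into nodes[] (capacity cap); returns the count or -1. */
int load_nodes(FILE *f, Node nodes[], size_t cap)
{
    uint16_t count;
    if (fread(&count, sizeof count, 1, f) != 1 || count > cap)
        return -1;
    return fread(nodes, sizeof nodes[0], count, f) == count ? count : -1;
}
```

The caveat is that raw dumps like this are only portable between builds that agree on struct layout, integer sizes, and endianness.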

not specific to C, but one thing I'd really like to see in a language is a syntax for strongly-typed indices, like "this is an index into an array of Foo" or "this index can be used for arrays A and B" and also offset types which you can't index with directly but can add to an index to offset it. using plain integers for everything gets confusing very quickly, I had to resort to a hungarian notation to keep my sanity. the first and third points here are technically enforceable by a standard library, at least in Rust or C++, but nobody does that afaik (and also making ad hoc newtypes sucks balls in Rust)
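C has no built-in newtypes, but a common idiom approximates the first and third points: wrap the integer in a one-member struct so that different index kinds become incompatible types. This is a sketch with hypothetical names (`FooIdx`, `BarIdx`, `FooOff`); it covers indices tied to one array type and offsets that can be added to an index but not used to index directly:

```c
#include <stddef.h>

typedef struct { int value; } Foo;
typedef struct { int weight; } Bar;

/* One-member structs are distinct types, so mixing them up is a compile error. */
typedef struct { size_t i; } FooIdx;     /* only valid for arrays of Foo */
typedef struct { size_t i; } BarIdx;     /* only valid for arrays of Bar */
typedef struct { ptrdiff_t d; } FooOff;  /* offset: add it to a FooIdx, can't index with it */

static inline Foo foo_get(const Foo *arr, FooIdx idx) { return arr[idx.i]; }
static inline Bar bar_get(const Bar *arr, BarIdx idx) { return arr[idx.i]; }

/* The only sanctioned way to use an offset: shift an index by it. */
static inline FooIdx foo_idx_add(FooIdx idx, FooOff off)
{
    return (FooIdx){ (size_t)((ptrdiff_t)idx.i + off.d) };
}
```

Calling `foo_get(foos, (BarIdx){0})` is rejected at compile time, which is the whole point; the cost is writing small helpers like `foo_idx_add` for every operation you want to allow.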

pinging @machine-unlearning thanks for reminding me

for this Lent I am programming in C without using any pointers. I will let you know how it went (if I'm still alive by the end of it).

#I heard Ada uses a secondary stack for returning dynamically-sized things from functions#instead of allocating memory normal style. I wonder how that works

15 notes


Chaos Is A Ladder 2 / ?

In the silence of the forest, when everyone has gone to sleep, Spider reads. He reads Norm's old books on human psychology, manuals salvaged from dead sites, old datapads, and stolen RDA shipments. Pages on groupthink, social engineering, cognitive dissonance, hierarchy dynamics, and manipulative language tactics.

-) Spider reads them like scripture. Line by line. Word by word. He commits theories to memory the way the Christians on Earth memorize prayers, but refuse to follow the word of God. Spider underlines phrases in red marker, sometimes drawing arrows in the margins, mapping out patterns, dissecting certain behaviors. Every insight is a weapon. "People often act on perception and not truth." "Authority, once questioned, spreads uncertainty." "Every member in a group fears exclusion."

-) Spider uses their own human nature to unravel them. He spies patrols arguing over who leads the scouting party and writes a note in his little journal, a gift Max had given to him on his seventeenth birthday:

Rank envy. Triggered by unequal recognition. Use perceived favoritism as fuel.

He listens to RDA soldiers bicker over resource allocations and jots down: Scarcity breeds paranoia. Amplify tension with forged reports of incompetence theft. Make it look real.

-) Every fight. Every muttered complaint. Every soldier bitching about unfair treatment. Every subtle body language shift. Spider catalogues it. Humans are creatures of pattern, and patterns can be turned against themselves. So many prefer to remain stagnant instead of getting their shit together.

-) At night, he pores over his writings atop a tree by the light of a glowing plant. He updates mental models, builds behavioral flowcharts, etc. Psychological Triggers. The Collapse of Leadership. Social Interactions And Cultural Norms. Each page in his journal is given a title. Sometimes, Kiri will snuggle up next to him, head resting on his shoulder, reading aloud with him, their voices carrying in the wind.

-) There’s power in knowing how his people tick. Power in understanding that if he plants the right lie, smiles the right way, or stays quiet at the perfect moment, he can tip over the entire table without raising a weapon.

-) Sometimes, Spider wonders if he’s becoming what he studies. Manipulative, cold, tactical, borderline cruel. Then the guilt creeps in. But so does the resolve. Everything he does is for Eywa and his siblings. He's come this far...what's one more step? One more leap into the abyss?

#spider socorro#miles spider socorro#avatar 2009#I should write about how the adults feel about this#most if not all are deeply disturbed by this and yet spider gets results#and he's not exactly a kid anymore because they never treated him like one

16 notes


SQL Server 2022 Edition and License instructions

SQL Server 2022 Editions:

• Enterprise Edition is ideal for applications requiring mission critical in-memory performance, security, and high availability

• Standard Edition delivers fully featured database capabilities for mid-tier applications and data marts

SQL Server 2022 is also available in free Developer and Express editions. Web Edition is offered in the Services Provider License Agreement (SPLA) program only.

The online store Keyingo provides SQL Server 2017/2019/2022 Standard Edition.

SQL Server 2022 licensing models

SQL Server 2022 offers customers a variety of licensing options aligned with how customers typically purchase specific workloads. There are two main licensing models that apply to SQL Server:

PER CORE: Gives customers a more precise measure of computing power and a more consistent licensing metric, regardless of whether solutions are deployed on physical servers on-premises, or in virtual or cloud environments.

• Core based licensing is appropriate when customers are unable to count users/devices, have Internet/Extranet workloads or systems that integrate with external facing workloads.

• Under the Per Core model, customers license either by physical server (based on the full physical core count) or by virtual machine (based on virtual cores allocated), as further explained below.

SERVER + CAL: Provides the option to license users and/or devices, with low-cost access to incremental SQL Server deployments.

• Each server running SQL Server software requires a server license.

• Each user and/or device accessing a licensed SQL Server requires a SQL Server CAL that is the same version or newer – for example, to access a SQL Server 2019 Standard Edition server, a user would need a SQL Server 2019 or 2022 CAL.

Each SQL Server CAL allows access to multiple licensed SQL Servers, including Standard Edition and legacy Business Intelligence and Enterprise Edition Servers.

SQL Server 2022 edition availability by licensing model:

Physical core licensing – Enterprise Edition

• Customers can deploy an unlimited number of VMs or containers on the server and utilize the full capacity of the licensed hardware, by fully licensing the server (or server farm) with Enterprise Edition core subscription licenses or licenses with SA coverage based on the total number of physical cores on the servers.

• Subscription licenses or SA provide(s) the option to run an unlimited number of virtual machines or containers to handle dynamic workloads and fully utilize the hardware’s computing power.

Virtual core licensing – Standard/Enterprise Edition

When licensing by virtual core on a virtual OSE with subscription licenses or SA coverage on all virtual cores (including hyperthreaded cores) on the virtual OSE, customers may run any number of containers in that virtual OSE. This benefit applies both to Standard and Enterprise Edition.

Licensing for non-production use

SQL Server 2022 Developer Edition provides a fully featured version of SQL Server software—including all the features and capabilities of Enterprise Edition—licensed for development, test and demonstration purposes only. Customers may install and run the SQL Server Developer Edition software on any number of devices. This is significant because it allows customers to run the software on multiple devices (for testing purposes, for example) without having to license each non-production server system for SQL Server.

A production environment is defined as an environment that is accessed by end-users of an application (such as an Internet website) and that is used for more than gathering feedback or acceptance testing of that application.

SQL Server 2022 Developer Edition is a free product!

#SQL Server 2022 Editions#SQL Server 2022 Standard license#SQL Server 2019 Standard License#SQL Server 2017 Standard Liense

7 notes


hey wanna hear about the crescent stack structure because i don't really have anyone to tell this to

YAYYYY!! THANK YOU :3

small note: threads are also referred to internally as lstates (local states), because when creating a new thread, rather than calling crescent_open again, they're connected to a single gstate (global state).

the stack is the main data structure in a crescent thread, containing all of the locals and call information.

the stack is divided into frames, and each time a function is called, a new frame is created. each frame has two parts: base and top. a frame's base is where its first local object is pushed, and its top is the total number of stack indexes reserved after the base. also in the stack are two numbers, calls and cCalls. calls keeps track of the number of function calls in the thread, whether they're to c or crescent functions. cCalls keeps track of the number of calls to c functions only. c functions use the actual (native) stack rather than the dynamically allocated crescent stack, so they could overflow the real stack and crash the program in a way crescent is unable to catch. so we limit the number of these calls so that this (hopefully) doesn't happen.

calls also tracks something else with the same number: the stack level. the stack level is a number starting from 0 that increments with each function call and decrements on each return from a function. stack level 0 is the c api, where the user called crescent_open (or whichever other function creates a new thread; that's just the only one implemented right now), and it's zero because zero functions have been called before this frame.
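Here is a rough sketch of how the frame, calls, and cCalls bookkeeping described above could fit together. Only the concepts come from the post; the struct names, the fixed-size frame array, and the cap of 200 nested C calls are assumptions for illustration, not crescent's actual code:

```c
#include <stddef.h>

#define MAX_C_CALLS 200   /* assumed cap on nested C-function calls */
#define MAX_FRAMES  256   /* assumed fixed frame capacity for the sketch */

typedef struct { double n; } Object;   /* stand-in for a real tagged value */

typedef struct {
    Object *base;   /* first local of this frame */
    size_t  top;    /* number of slots reserved after base */
} Frame;

typedef struct {
    Object *stack;               /* stack base */
    Frame   frames[MAX_FRAMES];  /* frames[0] is the C API frame */
    size_t  calls;               /* total nested calls == current stack level */
    size_t  cCalls;              /* nested calls into C functions only */
} LState;

/* Enter a function. Returns 0 on success, or 1 if the frame array is full
   or a C call would exceed the cap (refused before the native stack overflows). */
int enter_call(LState *L, Object *base, size_t top, int is_c_function)
{
    if (L->calls + 1 >= MAX_FRAMES)
        return 1;
    if (is_c_function && L->cCalls >= MAX_C_CALLS)
        return 1;
    L->calls++;
    if (is_c_function)
        L->cCalls++;
    L->frames[L->calls] = (Frame){ base, top };
    return 0;
}

/* Leave the current function: the stack level drops back by one. */
void leave_call(LState *L, int was_c_function)
{
    L->calls--;
    if (was_c_function)
        L->cCalls--;
}
```

Because frame 0 is the C API frame set up when the thread is created, after `n` nested calls `calls == n` doubles as the index of the current frame.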

before the next part, i should probably explain some terms:

- stack base: the address of the first object on the entire stack, also the base of frame 0

- stack top: address of the object immediately after the last object pushed onto the stack. if there are no objects on the stack, this is the stack base.

- frame base: the address of the first object in a frame, also the stack top upon calling a function (explained later)

- frame top: amount of stack indexes reserved for this frame after the base address

when a function is called, rather than setting the new frame's base directly after the reserved space of the previous frame, we simply set it right at the stack top, so that the first object in the new frame is immediately after the last object in the previous frame. (well, almost: when passing arguments to a function, we subtract the number of arguments from the base, so that the topmost objects of the previous frame, the arguments, are also part of the new frame.) this makes the stack structure a bit more complicated, and maybe a bit messy to visualize, but it uses (maybe slightly) less memory and makes some things related to protected calls easier.
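A sketch of the base placement rule, assuming frames store a base pointer plus a reserved count (hypothetical names again). The callee's base is the current stack top minus the number of arguments, so the arguments sit at the top of the caller's frame and at the bottom of the callee's:

```c
#include <stddef.h>

typedef struct { double n; } Object;   /* stand-in for a real value type */

typedef struct {
    Object *base;   /* first object of the frame */
    size_t  top;    /* slots reserved after base */
} Frame;

/* stack_top points one past the last pushed object. The last nargs
   objects (the arguments) become the callee's first locals. */
Frame new_frame(Object *stack_top, size_t nargs, size_t reserve)
{
    Frame f;
    f.base = stack_top - nargs;   /* arguments overlap: they are in both frames */
    f.top  = reserve;
    return f;
}
```

With no arguments, the new base is exactly the stack top, which is the "immediately after the last object" case.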

as we push objects onto the stack and call functions, we're eventually going to run out of space, so we need to resize the stack dynamically. first, though, we need to know how much memory the stack needs. we go through all of the frames and calculate how much each frame needs by taking the frame base's offset from the stack base (framebase - stackbase) and adding the frame top (or the new top, if the current frame is being resized with crescent_checkTop in the c api). the largest amount any frame needs is the amount the entire stack needs. if the needed size is less than or equal to a third of the current stack size, the stack shrinks; if the needed size is greater than the current stack size, it grows. when shrinking or growing, the new size is always calculated as needed * 1.5 (needed + needed / 2 in the code).
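The sizing rule above, sketched as two small functions. Describing each frame by its base offset and reserved top is an assumption made to keep the example self-contained; the thresholds (shrink at one third, grow past the current size, new size `needed + needed / 2`) are the ones from the post:

```c
#include <stddef.h>

typedef struct {
    size_t base_offset;   /* framebase - stackbase, in objects */
    size_t top;           /* slots reserved after the frame base */
} FrameInfo;

/* The stack needs as much as its hungriest frame: max(base_offset + top). */
size_t stack_needed(const FrameInfo *frames, size_t nframes)
{
    size_t needed = 0;
    for (size_t i = 0; i < nframes; i++) {
        size_t want = frames[i].base_offset + frames[i].top;
        if (want > needed)
            needed = want;
    }
    return needed;
}

/* Returns the size to resize to, or `size` itself when no resize is due.
   Shrink when needed <= size / 3, grow when needed > size; either way
   the new size is needed * 1.5, computed as needed + needed / 2. */
size_t stack_target_size(size_t needed, size_t size)
{
    if (needed <= size / 3 || needed > size)
        return needed + needed / 2;
    return size;
}
```

For example, frames needing 10, 28, and 24 slots give `needed == 28`; a 100-slot stack would shrink to 42, a 40-slot stack would stay as is, and needing 50 in a 40-slot stack would grow it to 75.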

when shrinking, the stack can only shrink to a minimum of CRESCENT_MIN_STACK (64 objects by default). even if the resize fails, it doesn't throw an error, since we still have all the memory we need. when growing, if the new stack size is greater than CRESCENT_MAX_STACK, it's set back down to just the required amount. if that's still over CRESCENT_MAX_STACK, it either returns 1 or throws a stack overflow error, depending on the throw argument. if reallocating the stack fails, it either throws an out of memory error or returns 1, again depending on the throw argument. unlike shrinking, growing the stack can throw an error, because then we don't have the memory we need.
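Continuing the idea, here is one way the clamping and failure rules could look. `CRESCENT_MIN_STACK` and `CRESCENT_MAX_STACK` are the post's names (with the post's default minimum of 64); the maximum value, the `Stack` type, and `stack_error()` are assumptions, and a real implementation would throw via `longjmp` instead of returning. One thing any real version must also handle: `realloc()` may move the block, so pointers into the old stack (such as frame bases) need to be rebased afterwards:

```c
#include <stdlib.h>

#ifndef CRESCENT_MIN_STACK
#define CRESCENT_MIN_STACK 64
#endif
#ifndef CRESCENT_MAX_STACK
#define CRESCENT_MAX_STACK 1000000   /* assumed; the post gives no value */
#endif

typedef struct { double n; } Object;

typedef struct {
    Object *stack;
    size_t  size;     /* capacity in objects */
} Stack;

/* Stand-in for raising a crescent error (the real one would longjmp). */
static int stack_error(const char *msg) { (void)msg; return 1; }

/* Resize for `needed` objects. Returns 0 on success, 1 on failure. */
int stack_resize(Stack *s, size_t needed, int throw_on_error)
{
    size_t newsize = needed + needed / 2;
    if (newsize < s->size) {                  /* shrinking */
        if (newsize < CRESCENT_MIN_STACK)
            newsize = CRESCENT_MIN_STACK;
        Object *p = realloc(s->stack, newsize * sizeof *p);
        if (p != NULL) {                      /* failure is harmless: old block still fits */
            s->stack = p;
            s->size = newsize;
        }
        return 0;
    }
    if (newsize > CRESCENT_MAX_STACK)
        newsize = needed;                     /* fall back to the exact need */
    if (newsize > CRESCENT_MAX_STACK)
        return throw_on_error ? stack_error("stack overflow") : 1;
    Object *p = realloc(s->stack, newsize * sizeof *p);
    if (p == NULL)
        return throw_on_error ? stack_error("out of memory") : 1;
    s->stack = p;
    s->size = newsize;
    return 0;
}
```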

and now the thing about how the way a frame's base is set makes protected calls easier. when handling an error, we want to go back to the most recent protected call. we do this (using a jmp_buf) by saving the stack level in the handler (the structure that holds information while handling an error and returning to the protected call) and reverting the stack back to that level. but we're not keeping track of the number of objects in each stack frame! so how do we restore the stack top? because a frame's base is immediately after the last object in the previous frame, and the stack top is immediately after the last object pushed onto the stack, we just set the stack top to the base of the frame one level above the saved level.
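A sketch of the restore step using `setjmp`/`longjmp` from `<setjmp.h>`. Names like `Handler`, `pcall`, `throw_error`, and `demo_fail` are hypothetical; the status lives in a `volatile` field because the C standard leaves non-volatile locals that change between `setjmp` and `longjmp` indeterminate:

```c
#include <setjmp.h>
#include <stddef.h>

#define MAX_FRAMES 64

typedef struct { double n; } Object;
typedef struct { Object *base; size_t top; } Frame;

typedef struct Handler {
    jmp_buf      jb;
    size_t       level;    /* stack level saved when the protected call began */
    volatile int status;   /* error code, written just before longjmp */
} Handler;

typedef struct {
    Object  *stack_top;           /* one past the last pushed object */
    Frame    frames[MAX_FRAMES];
    size_t   calls;               /* current stack level */
    Handler *handler;             /* innermost protected call, or NULL */
} LState;

/* Raise an error: jump back to the innermost protected call. */
void throw_error(LState *L, int code)
{
    L->handler->status = code;
    longjmp(L->handler->jb, 1);
}

typedef void (*CFunc)(LState *L);

/* Run f in protected mode; returns 0 on success or the error code. */
int pcall(LState *L, CFunc f)
{
    Handler h;
    Handler *prev = L->handler;
    h.level = L->calls;
    h.status = 0;
    L->handler = &h;
    if (setjmp(h.jb) == 0) {
        f(L);
    } else {
        /* The frame one level up starts right after our last object,
           so its base is where our stack top must go back to. */
        L->stack_top = L->frames[h.level + 1].base;
        L->calls = h.level;
    }
    L->handler = prev;
    return h.status;
}

/* Example callee: opens a frame, pushes two objects, then fails. */
static void demo_fail(LState *L)
{
    L->calls++;
    L->frames[L->calls].base = L->stack_top;
    L->frames[L->calls].top = 2;
    L->stack_top += 2;
    throw_error(L, 3);
}
```

After the error, the stack top goes back to `frames[level + 1].base`, the first slot of the frame that failed, so everything it pushed is discarded without ever having stored an object count.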

6 notes


Interesting Papers for Week 41, 2024

Exploration, exploitation, and development: Developmental shifts in decision‐making. Blanco, N. J., & Sloutsky, V. M. (2024). Child Development, 95(4), 1287–1298.

A drift diffusion model analysis of age-related impact on multisensory decision-making processes. Bolam, J., Diaz, J. A., Andrews, M., Coats, R. O., Philiastides, M. G., Astill, S. L., & Delis, I. (2024). Scientific Reports, 14, 14895.

Hippocampus and striatum show distinct contributions to longitudinal changes in value-based learning in middle childhood. Falck, J., Zhang, L., Raffington, L., Mohn, J. J., Triesch, J., Heim, C., & Shing, Y. L. (2024). eLife, 12, e89483.3.

Acquisition of non-olfactory encoding improves odour discrimination in olfactory cortex. Federman, N., Romano, S. A., Amigo-Duran, M., Salomon, L., & Marin-Burgin, A. (2024). Nature Communications, 15, 5572.

Neurofeedback training can modulate task-relevant memory replay rate in rats. Gillespie, A. K., Astudillo Maya, D., Denovellis, E. L., Desse, S., & Frank, L. M. (2024). eLife, 12, e90944.3.

GABAergic synaptic scaling is triggered by changes in spiking activity rather than AMPA receptor activation. Gonzalez-Islas, C., Sabra, Z., Fong, M., Yilmam, P., Au Yong, N., Engisch, K., & Wenner, P. (2024). eLife, 12, e87753.3.

Shifts in attention drive context-dependent subspace encoding in anterior cingulate cortex in mice during decision making. Hajnal, M. A., Tran, D., Szabó, Z., Albert, A., Safaryan, K., Einstein, M., … Orbán, G. (2024). Nature Communications, 15, 5559.

A computational account of transsaccadic attentional allocation based on visual gain fields. Harrison, W. J., Stead, I., Wallis, T. S. A., Bex, P. J., & Mattingley, J. B. (2024). Proceedings of the National Academy of Sciences, 121(27), e2316608121.

Perirhinal cortex learns a predictive map of the task environment. Lee, D. G., McLachlan, C. A., Nogueira, R., Kwon, O., Carey, A. E., House, G., … Chen, J. L. (2024). Nature Communications, 15, 5544.

The neuron as a direct data-driven controller. Moore, J. J., Genkin, A., Tournoy, M., Pughe-Sanford, J. L., de Ruyter van Steveninck, R. R., & Chklovskii, D. B. (2024). Proceedings of the National Academy of Sciences, 121(27), e2311893121.

Bats integrate multiple echolocation and flight tactics to track prey. Nishiumi, N., Fujioka, E., & Hiryu, S. (2024). Current Biology, 34(13), 2948-2956.e6.

Limb-related sensory prediction errors and task-related performance errors facilitate human sensorimotor learning through separate mechanisms. Oza, A., Kumar, A., Sharma, A., & Mutha, P. K. (2024). PLOS Biology, 22(7), e3002703.

Systemic pharmacological suppression of neural activity reverses learning impairment in a mouse model of Fragile X syndrome. Shakhawat, A. M., Foltz, J. G., Nance, A. B., Bhateja, J., & Raymond, J. L. (2024). eLife, 12, e92543.3.

Prefrontal cortical ripples mediate top-down suppression of hippocampal reactivation during sleep memory consolidation. Shin, J. D., & Jadhav, S. P. (2024). Current Biology, 34(13), 2801-2811.e9.

Preferences reveal dissociable encoding across prefrontal-limbic circuits. Stoll, F. M., & Rudebeck, P. H. (2024). Neuron, 112(13), 2241-2256.e8.

Atypical local and global biological motion perception in children with attention deficit hyperactivity disorder. Tian, J., Yang, F., Wang, Y., Wang, L., Wang, N., Jiang, Y., & Yang, L. (2024). eLife, 12, e90313.5.

Temporal information in the anterior cingulate cortex relates to accumulated experiences. Wirt, R. A., Soluoku, T. K., Ricci, R. M., Seamans, J. K., & Hyman, J. M. (2024). Current Biology, 34(13), 2921-2931.e3.

Complexity Matters: Normalization to Prototypical Viewpoint Induces Memory Distortion along the Vertical Axis of Scenes. Wu 吴奕忱, Y., & Li 李晟, S. (2024). Journal of Neuroscience, 44(27), e1175232024.

Co-existence of synaptic plasticity and metastable dynamics in a spiking model of cortical circuits. Yang, X., & La Camera, G. (2024). PLOS Computational Biology, 20(7), e1012220.

Perceptual error based on Bayesian cue combination drives implicit motor adaptation. Zhang, Z., Wang, H., Zhang, T., Nie, Z., & Wei, K. (2024). eLife, 13, e94608.3.

#neuroscience#science#research#brain science#scientific publications#cognitive science#neurobiology#cognition#psychophysics#neurons#neural computation#neural networks#computational neuroscience#developmental neuroscience

7 notes


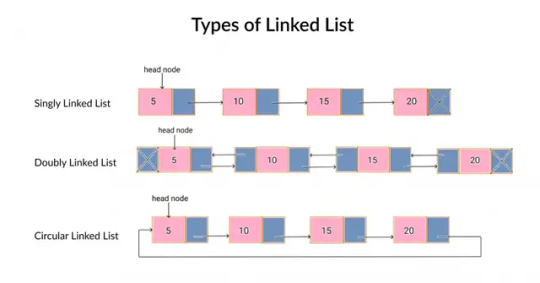

Linked List in Python

A linked list is a dynamic data structure used to store elements in a linear order, where each element (called a node) points to the next. Unlike Python lists that use contiguous memory, linked lists offer flexibility in memory allocation, making them ideal for situations where the size of the data isn’t fixed or changes frequently.

In Python, linked lists aren’t built-in but can be implemented using classes. Each node contains two parts: the data and a reference to the next node. The list is managed using a class that tracks the starting node, known as the head.
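The post describes a Python class-based implementation; to keep this page's examples in one language, here is the same node-plus-head structure sketched in C (names are illustrative). It shows the three operations listed below: insertion, deletion, and traversal:

```c
#include <stdlib.h>

/* Each node holds its data and a pointer to the next node. */
typedef struct Node {
    int data;
    struct Node *next;
} Node;

/* The list itself only tracks the head. */
typedef struct {
    Node *head;
} LinkedList;

/* Insert at the front: O(1), no other elements move. Returns -1 on OOM. */
int list_push_front(LinkedList *list, int data)
{
    Node *n = malloc(sizeof *n);
    if (n == NULL)
        return -1;
    n->data = data;
    n->next = list->head;
    list->head = n;
    return 0;
}

/* Delete the first node holding `data`; returns 1 if one was removed. */
int list_remove(LinkedList *list, int data)
{
    for (Node **link = &list->head; *link != NULL; link = &(*link)->next) {
        if ((*link)->data == data) {
            Node *dead = *link;
            *link = dead->next;   /* unlink by redirecting the previous pointer */
            free(dead);
            return 1;
        }
    }
    return 0;
}

/* Traversal: no random indexing, so reaching node k takes k steps. */
size_t list_length(const LinkedList *list)
{
    size_t len = 0;
    for (const Node *n = list->head; n != NULL; n = n->next)
        len++;
    return len;
}

void list_free(LinkedList *list)
{
    Node *n = list->head;
    while (n != NULL) {
        Node *next = n->next;
        free(n);
        n = next;
    }
    list->head = NULL;
}
```

Inserting at the front touches only two pointers, while finding the length (or the k-th element) has to walk the chain, which is the access-speed trade-off noted under Disadvantages.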

Node Structure: Contains data and next (pointer to the next node).

Types:

Singly Linked List

Doubly Linked List

Circular Linked List

Operations: Insertion, deletion, and traversal.

Advantages:

Dynamic size

Efficient insertions/deletions (no shifting of other elements, unlike an array)

Disadvantages:

Slower access (no random indexing)

Extra memory for pointers

Want to master linked lists and other data structures in Python? PrepInsta has you covered with beginner-friendly explanations and practice problems.

Explore Linked Lists with PrepInsta

2 notes


The C Programming Language Compilers – A Comprehensive Overview

C is a general-purpose, procedural programming language that has had a profound influence on many other modern programming languages. Known for its efficiency and power, C is often called the "mother of all languages" because many languages (like C++, Java, and even Python) have drawn inspiration from it.

C Language Compilers

Developed in the early 1970s by Dennis Ritchie at Bell Labs, C was originally designed to develop the Unix operating system. Since then, it has become a foundational language in computer science and is still widely used in systems programming, embedded systems, operating systems, and more.

2. Key Features of C

C is popular because of its simplicity, performance, and portability. Some of its key features include:

Simple and Efficient: The syntax is minimalistic, allowing close-to-hardware manipulation.

Fast Execution: C provides low-level access to memory, making it ideal for performance-critical programs.

Portable Code: C programs can be compiled and run on diverse hardware platforms with minimal changes.

Rich Library Support: Although minimal, C provides a standard library for input/output, memory management, and string operations.

Modularity: Code can be organized into functions, improving readability and reusability.

Extensibility: Developers can easily add features or functions as needed.

3. Structure of a C Program

A basic C program typically consists of the following elements:

Preprocessor directives

Main function (main())

Variable declarations

Statements and expressions

Functions

Here’s an example of a simple C program:

```c
#include <stdio.h>

int main(void)
{
    printf("Hello, World!\n");
    return 0;
}
```

Let’s break this down:

#include <stdio.h> is a preprocessor directive that tells the compiler to include the Standard Input Output header file.

return 0; ends the program, returning a status code (0 means success).

4. Data Types in C

C supports several data types, categorized mainly as:

Basic types: int, char, float, double

Derived types: Arrays, Pointers, Structures

Enumeration types: enum

Void type: Represents no value (e.g., for functions that don't return anything)

Example:

```c
int a = 10;
float b = 3.14f;
char c = 'A';
```

5. Control Structures

C supports various control structures for decision-making and loops:

If-Else:

```c
if (a > b) {
    printf("a is greater than b");
} else {
    printf("a is not greater than b");
}
```

Switch:

```c
switch (option) {
case 1:
    printf("Option 1");
    break;
case 2:
    printf("Option 2");
    break;
default:
    printf("Invalid option");
}
```

Loops:

For loop:

```c
for (int i = 0; i < 5; i++) {
    printf("%d ", i);
}
```

While loop:

```c
int i = 0;
while (i < 5) {
    printf("%d ", i);
    i++;
}
```

Do-while loop:

```c
int i = 0;
do {
    printf("%d ", i);
    i++;
} while (i < 5);
```

6. Functions

Functions in C enable code reuse and modularity. A function has a return type, a name, and optional parameters.

Example:

```c
#include <stdio.h>

int add(int x, int y)
{
    return x + y;
}

int main(void)
{
    int result = add(3, 4);
    printf("Sum = %d", result);
    return 0;
}
```

7. Arrays and Strings

Arrays are collections of elements of the same data type stored in contiguous memory locations.

```c
int numbers[5] = {1, 2, 3, 4, 5};
printf("%d", numbers[2]); // prints 3
```

Strings in C are arrays of characters terminated by a null character ('\0').

```c
char name[] = "Alice";
printf("Name: %s", name);
```

8. Pointers

Pointers are variables that store memory addresses. They are powerful but must be used with care.

```c
int a = 10;
int *p = &a; // p holds the address of a
```

Pointers are essential for:

Dynamic memory allocation

Passing function arguments by reference

Efficient array and string handling

9. Structures

```c
#include <stdio.h>

struct Person {
    char name[50];
    int age;
};

int main(void)
{
    struct Person p1 = {"John", 30};
    printf("Name: %s, Age: %d", p1.name, p1.age);
    return 0;
}
```

10. File Handling

C provides functions to read and write files using FILE pointers.

```c
FILE *fp = fopen("information.txt", "w");
if (fp != NULL) {
    fprintf(fp, "Hello, File!");
    fclose(fp);
}
```

11. Memory Management

C allows manual memory allocation using the following functions from stdlib.h:

malloc() – allocate memory

calloc() – allocate and zero-initialize memory

realloc() – resize allocated memory

free() – release allocated memory

Example:

```c
int *ptr = malloc(5 * sizeof(int)); /* requires #include <stdlib.h> */
if (ptr != NULL) {
    ptr[0] = 10;
    free(ptr);
}
```
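The example above only uses `malloc()` and `free()`. As a sketch of the other two, `calloc()` returns zero-initialized memory, and `realloc()` resizes a block while keeping its contents; the newly added part is not initialized, so the helper below (a hypothetical name) zeroes it explicitly:

```c
#include <stdlib.h>

/* Grow (or shrink) an int array from old_count to new_count elements,
   zeroing any newly added elements. Returns NULL on failure, in which
   case the original block is still valid and still owned by the caller. */
int *grow_zeroed(int *arr, size_t old_count, size_t new_count)
{
    int *tmp = realloc(arr, new_count * sizeof *tmp);
    if (tmp == NULL)
        return NULL;
    for (size_t i = old_count; i < new_count; i++)
        tmp[i] = 0;                  /* realloc leaves the new tail uninitialized */
    return tmp;
}
```

Assign the result to a temporary first: if `realloc()` fails it returns NULL and leaves the original block untouched, so writing `a = grow_zeroed(a, ...)` directly would leak `a`.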

12. Advantages of C

Control over hardware

Widely used and supported

Foundation for many modern languages

13. Limitations of C

No built-in support for object-oriented programming

No garbage collection (manual memory management)

No built-in exception handling

Limited standard library compared to higher-level languages

14. Applications of C

Operating Systems: Unix, Linux, Windows kernel components

Embedded Systems: Microcontroller programming

Databases: MySQL is partly written in C

Gaming and Graphics: Due to performance advantages

2 notes


The Accountant “Control the numbers, and you control the war.”

Name: Ekaterina Marchand Age: 49 Affiliation: Cross Guild (Role: Chief Financial Strategist & Senior Accountant) Shipped with Sir Crocodile, Ex-girlfriend of Kuzan (Aokiji)

Appearance:

Height: 200cm (6’7”) without her heels

Build: Lean and graceful, but with a powerful posture

Hair: Thick, dark blue curls tamed into a long, sleek bun; only worn loose behind closed doors, especially with Crocodile

Eyes: Ice-gray, perceptive and bold

Clothing: Tight pencil skirts, pristine white blouses, minimalist jewelry, high heels—every outfit screams control and intimidation. Thin-rimmed glasses she wears while working (and dramatically whips off when angry)

Personality:

Composed Strategist: Calm and collected under pressure, Ekaterina radiates quiet authority. Her tone rarely rises, but when it does—especially in arguments with Crocodile—everyone listens. Her silence, however, is more terrifying than any outburst.

Coldly Analytical: She sees the world in probabilities, leverage points, and outcomes. Her trust lies in logic and patterns, not emotion—though select individuals, like Crocodile and Kuzan, have seen behind the curtain.

Authoritative Without Force: She doesn’t fight, but she commands power by controlling resources, information, and timing. Fighters follow her not out of fear, but because she makes empires run.

High Standards, Low Tolerance for Error: Disorganization is unacceptable. If something goes off-plan, she doesn’t adapt—she reconfigures the system that failed.

Privately Intense: Ekaterina chooses who gets close to her, and once they do, she burns quietly but fiercely. Crocodile—and long ago, Kuzan—are the only ones who have seen her undone, stripped of control, in the dark, between sheets and shadows.

Skills:

Master Logistician: Expert in resource allocation, bounty tracking, and strategic finance. She turns chaos into infrastructure.

Collapse Analyst: Known for identifying structural weaknesses. Her insights have collapsed syndicates without a single bullet fired.

Observational Haki (Suspected): Her unnerving ability to read people suggests an innate, possibly honed, form of Haki.

Eidetic Memory: Once seen, never forgotten. Her memory for data, faces, and dialogue is surgical.

Former Marine (Logistics Division): Her foundation in Marine systems gives her deep insight into both the military machine and its failings.

Canon Character Relationship Dynamic (Sir Crocodile):

Power Dynamic: Blazing hot, confrontational, and deeply rooted in mutual respect. They clash publicly over operations—Ekaterina does not fear Crocodile’s reputation and will raise her voice when logic is on her side.

Intimacy: Their sexual and romantic tension is layered under professional sparring. Behind closed doors, they are raw, physical, and vulnerable in a way neither would admit aloud. He’s the only person who sees her with her sleek bun undone.

Mutual Weakness: She calls him on his bullshit. He, in turn, tests her composure like no one else. They challenge each other—and that’s exactly why neither can stay away.

Relationship Tropes:

“Only One Allowed to See Her Unraveled” – Her hair is always perfect—except when she’s with him.

“Workplace Power Couple” – Dangerous, composed, and explosive when they disagree

“If I’m Yelling, You’re Listening” – She's not afraid to shout at a Warlord and win the argument

Canon Friends:

Sir Crocodile (Lover): Their relationship is a storm beneath still waters. He respects her sharp mind and control—she respects his ambition and vision. They argue, loudly and in front of others, but behind closed doors, the tension becomes something else entirely. He trusts her with the Cross Guild’s future—and, though he’d never admit it, with himself.

Kuzan (Aokiji) – Ex-boyfriend: They were young and their relationship was built on intellect, dry humor, and shared quiet nights during her time working with Marine logistics. Things ended when their ideologies shifted—he drifted, she remained rooted in structure. They still speak rarely, but when they do, there's a heavy, unspoken history in every word. She’s the only person who’s ever made him nervous with a single look.

Dracule Mihawk: Mutual respect, quiet allies. He appreciates her logic and restraint; she appreciates that he keeps out of internal conflicts unless necessary.

Daz Bones (Mr. 1): Respectful silence. She knows he watches her, and she’s one of the few who can make him blink with a single sentence.

Buggy (begrudgingly): She finds him loud and inefficient but tolerates him because he brings in revenue. She threatens to reassign his cut weekly.

Galdino (Mr. 3): Occasional assistant in logistics. She once told him he had a “usable” mind, and it made his week.

Nico Robin: They exchanged information in the underground world before Robin joined the Straw Hats. Ekaterina finds Robin’s calm intelligence comforting, even refreshing. They’re not best friends—but there’s a quiet, lifelong respect between two women who know how dangerous knowledge is.

#marchandarchive – general tag
#marchand musings – in-character thoughts
#frozen files – flashbacks & history
#crossguild – guild-related content
#sand & frost – current with Crocodile
#kuzans ghost – past with Kuzan
#logistical supremacy – personal mastery
#nsfw archive – 🔒 (mature content, 18+)

#one piece#one piece oc#one piece original character#oc: ekaterina marchand#marchandarchive#crossguild#sir crocodile#kuzan#marchand musing#kuzans ghost#logistical supremacy#dividers by cafekitsune

5 notes


Single-Agent vs Multi-Agent Systems: What Developers Should Know

Introduction:

AI agents can operate independently or as part of a broader system of collaborating entities. Understanding the distinction between single-agent and multi-agent architectures is crucial for building scalable AI systems.

Content:

Single-agent systems are great for environments where decision-making is centralized—like a personal AI assistant. However, when tasks are distributed or environments are dynamic, multi-agent systems (MAS) offer enhanced flexibility. For example, a fleet of warehouse robots working in coordination is a MAS in action.

The key challenge lies in communication and coordination. Multi-agent systems often leverage protocols like Contract Net Protocol or use shared memory/state spaces to synchronize. Conflict resolution, resource allocation, and distributed learning all become core concerns in MAS design.
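To make the Contract Net idea concrete, here is a toy single-process sketch of one round: a manager announces a task, idle agents reply with bids, and the task is awarded to the best (cheapest) bid. The warehouse-robot setting, the Manhattan-distance cost, and all names are assumptions for illustration; a real MAS would exchange these as messages between independent agents:

```c
#include <stddef.h>

typedef struct {
    int    id;
    double x, y;   /* robot position on the warehouse floor (assumed) */
    int    busy;   /* busy agents decline to bid */
} Agent;

typedef struct {
    double x, y;   /* where the item to fetch is */
} Task;

/* Bid = Manhattan distance to the task; returns 0 if the agent declines. */
static int make_bid(const Agent *a, const Task *t, double *bid)
{
    if (a->busy)
        return 0;
    double dx = a->x - t->x, dy = a->y - t->y;
    *bid = (dx < 0 ? -dx : dx) + (dy < 0 ? -dy : dy);
    return 1;
}

/* Announce, collect bids, award. Returns the winner's index, or -1 if nobody bid. */
int contract_net_award(Agent *agents, size_t n, const Task *t)
{
    int winner = -1;
    double best = 0.0;
    for (size_t i = 0; i < n; i++) {
        double bid;
        if (make_bid(&agents[i], t, &bid) && (winner < 0 || bid < best)) {
            winner = (int)i;
            best = bid;
        }
    }
    if (winner >= 0)
        agents[winner].busy = 1;   /* award: the contractor commits to the task */
    return winner;
}
```

Marking the winner busy is a crude form of resource allocation: a second task announced right after goes to someone else, or to nobody if every agent is committed.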

Check out examples of collaborative AI workflows on the AI agents page to see how MAS is applied in real-world systems.

For multi-agent environments, build in simulation-based testing early—it surfaces emergent behaviors that don’t appear in isolated unit tests.


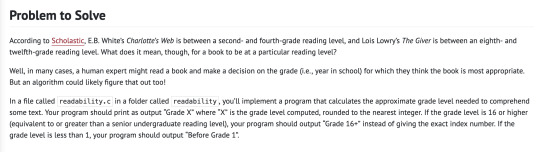

Oooooooof. I finally finished the "Readability" assignment for CS50 week 2, and I encountered the typical problem I have with coding challenges: assuming it's more complicated than it actually is.

The assignment description is as follows:

So immediately I thought, "Okay, I have to get the first 100 words of the text and then calculate both the number of letters and the number of sentences in those 100 words. Right?"

Wrong. After struggling with this one for days and days, attempting to learn stuff about dynamic memory allocation and the strtok() function and all sorts of stuff above my level, I was informed that we don't actually have to worry about the "100 words" thing. We can just use the entire text input, no matter how long it is. -_-

I had already written so much code (most of it functional, even!) in the process of trying to figure out how to chop off a string of unknown total length after the first 100 words (also not knowing the lengths of any of those words) and then split it into arrays both by words and by sentences. It was truly brain-breaking stuff. And then it turns out I didn't need to put in all that effort, and the actual solution is really easy. Like... embarrassingly easy.
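For anyone hitting the same wall: the Coleman-Liau index used in the assignment defines L as letters per 100 words and S as sentences per 100 words. These are averages, so they can be computed from counts over the whole text, with no truncating at all. Below is a minimal sketch in C, assuming the usual counting conventions: letters are alphabetic characters, words are separated by single spaces, and `.`, `!`, and `?` end sentences. The function names are my own, not taken from the assignment.

```c
#include <assert.h>
#include <ctype.h>

/* Count alphabetic characters. */
int count_letters(const char *text)
{
    int n = 0;
    for (const char *p = text; *p != '\0'; p++)
        if (isalpha((unsigned char)*p))
            n++;
    return n;
}

/* Count words, assuming single spaces between words. */
int count_words(const char *text)
{
    if (*text == '\0')
        return 0;
    int n = 1;
    for (const char *p = text; *p != '\0'; p++)
        if (*p == ' ')
            n++;
    return n;
}

/* Count sentences as the number of '.', '!' and '?' characters. */
int count_sentences(const char *text)
{
    int n = 0;
    for (const char *p = text; *p != '\0'; p++)
        if (*p == '.' || *p == '!' || *p == '?')
            n++;
    return n;
}

/* Coleman-Liau index: L and S are rates per 100 words over the whole text. */
double coleman_liau(int letters, int words, int sentences)
{
    assert(words > 0);
    double L = letters * 100.0 / words;
    double S = sentences * 100.0 / words;
    return 0.0588 * L - 0.296 * S - 15.8;
}
```

The assignment then rounds the index to a whole grade (for example with `lround` from `<math.h>`). Check the spec for the exact output rules.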

Oh well, at least that one's finally finished and I can move on :'D

#cs50#csblr#codeblr#studyblr#programming#adult studyblr#chiseling away#god i'm grumpy with myself for making it so much harder than it needed to be#that is pretty typical me though tbf


Advanced C Programming: Mastering the Language

Introduction

Advanced C programming is essential for developers looking to deepen their understanding of the language and tackle complex programming challenges. While the basics of C provide a solid foundation, mastering advanced concepts can significantly enhance your ability to write efficient, high-performance code.

1. Overview of Advanced C Programming

Advanced C programming builds on the fundamentals, introducing concepts that enhance efficiency, performance, and code organization. This stage of learning empowers programmers to write more sophisticated applications and prepares them for roles that demand a high level of proficiency in C.

2. Pointers and Memory Management

Mastering pointers and dynamic memory management is crucial for advanced C programming, as they allow for efficient use of resources. Pointers enable direct access to memory locations, which is essential for tasks such as dynamic array allocation and manipulating data structures. Understanding how to allocate, reallocate, and free memory using functions like malloc, calloc, realloc, and free can help avoid memory leaks and ensure optimal resource management.
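As an illustration, here is a minimal sketch of a growable integer array, the classic use of `realloc`. The `IntVec` type and its function names are hypothetical. The doubling growth policy is a common choice, not the only one. The important detail is assigning `realloc`'s result to a temporary, so the original block is not leaked if the reallocation fails.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical growable array of ints. Start it as {NULL, 0, 0}. */
typedef struct {
    int *data;
    size_t len;   /* elements in use */
    size_t cap;   /* elements allocated */
} IntVec;

/* Append a value, doubling the capacity when full.
   Returns 0 on success, or -1 if allocation fails (vector unchanged). */
int intvec_push(IntVec *v, int value)
{
    assert(v != NULL);
    if (v->len == v->cap) {
        size_t new_cap = v->cap ? v->cap * 2 : 4;
        /* realloc(NULL, n) behaves like malloc(n). Use a temporary so the
           old block is still owned by v if realloc returns NULL. */
        int *p = realloc(v->data, new_cap * sizeof *p);
        if (p == NULL)
            return -1;
        v->data = p;
        v->cap = new_cap;
    }
    v->data[v->len++] = value;
    return 0;
}

/* Release the buffer and reset the vector so it can be reused safely. */
void intvec_free(IntVec *v)
{
    free(v->data);
    v->data = NULL;
    v->len = 0;
    v->cap = 0;
}
```

Every successful allocation ends in exactly one `free` (inside `intvec_free`), and resetting the pointer to `NULL` afterwards makes an accidental second `intvec_free` harmless.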

3. Data Structures in C

Understanding advanced data structures, such as linked lists, trees, and hash tables, is key to optimizing algorithms and managing data effectively. These structures allow developers to store and manipulate data in ways that improve performance and scalability. For example, linked lists allow constant-time insertion and removal once you hold a pointer to the neighboring node, while binary search trees support fast lookups (logarithmic time when the tree stays balanced) and produce their keys in sorted order through an in-order traversal.
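A minimal, unbalanced binary search tree sketch in C shows both properties: `bst_contains` walks a single root-to-leaf path, and an in-order traversal emits the keys in ascending order. The names are hypothetical. Because this tree does no rebalancing, inserting already-sorted keys degrades it into a linked list with linear-time lookups. Self-balancing variants such as AVL or red-black trees avoid that degradation.

```c
#include <assert.h>
#include <stdlib.h>

typedef struct Node {
    int key;
    struct Node *left, *right;
} Node;

/* Insert key and return the (possibly new) root. Duplicates are ignored.
   If malloc fails, the key is simply not inserted. */
Node *bst_insert(Node *root, int key)
{
    if (root == NULL) {
        Node *n = malloc(sizeof *n);
        if (n != NULL) {
            n->key = key;
            n->left = n->right = NULL;
        }
        return n;
    }
    if (key < root->key)
        root->left = bst_insert(root->left, key);
    else if (key > root->key)
        root->right = bst_insert(root->right, key);
    return root;
}

/* Iterative lookup: follows one path from the root downward. */
int bst_contains(const Node *root, int key)
{
    while (root != NULL) {
        if (key == root->key)
            return 1;
        root = key < root->key ? root->left : root->right;
    }
    return 0;
}

/* In-order traversal: writes keys into out[] in ascending order, starting
   at index i, and returns the next free index. out must be large enough. */
size_t bst_to_sorted(const Node *root, int *out, size_t i)
{
    assert(out != NULL);
    if (root == NULL)
        return i;
    i = bst_to_sorted(root->left, out, i);
    out[i++] = root->key;
    return bst_to_sorted(root->right, out, i);
}

/* Post-order free: children before parent. */
void bst_free(Node *root)
{
    if (root == NULL)
        return;
    bst_free(root->left);
    bst_free(root->right);
    free(root);
}
```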

4. File Handling Techniques

Advanced file handling techniques enable developers to manipulate data efficiently, allowing for the creation of robust applications that interact with the file system. Mastering functions like fopen, fread, fwrite, and fclose helps you read from and write to files, handle binary data, and manage different file modes. Understanding error handling during file operations is also critical for building resilient applications.
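Here is a minimal sketch of binary file I/O with `fopen`, `fwrite`, `fread`, and `fclose`, checking errors at each step, including the often-ignored return value of `fclose`, which can report a failed flush. The `save_ints` and `load_ints` helpers are hypothetical. Note that the raw binary layout depends on the machine's `int` size and byte order, so files written this way are not portable between platforms without an explicit format.

```c
#include <assert.h>
#include <stdio.h>

/* Write n ints to path in binary mode. Returns 0 on success, -1 on error. */
int save_ints(const char *path, const int *v, size_t n)
{
    assert(v != NULL || n == 0);
    FILE *f = fopen(path, "wb");
    if (f == NULL) {
        perror("fopen");
        return -1;
    }
    size_t written = fwrite(v, sizeof *v, n, f);
    /* fclose flushes buffered data, so its result matters too. */
    int close_err = fclose(f);
    if (written != n || close_err != 0)
        return -1;
    return 0;
}

/* Read up to max ints from path. Returns the count read, or -1 on error. */
long load_ints(const char *path, int *v, size_t max)
{
    assert(v != NULL || max == 0);
    FILE *f = fopen(path, "rb");
    if (f == NULL) {
        perror("fopen");
        return -1;
    }
    size_t got = fread(v, sizeof *v, max, f);
    /* A short read is fine at end of file; ferror distinguishes real errors. */
    int read_err = ferror(f);
    fclose(f);
    return read_err ? -1 : (long)got;
}
```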

5. Multithreading and Concurrency

Implementing multithreading and managing concurrency are essential skills for developing high-performance applications in C. Libraries such as POSIX threads (pthreads) allow you to create and manage multiple threads within a single process. On a multi-core machine, this lets CPU-bound work run in parallel, and in I/O-bound programs it lets one thread keep working while another waits on I/O. In both cases, any data shared between threads must be protected (for example with mutexes) to avoid race conditions.
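Here is a minimal pthreads sketch of CPU-bound parallelism: it splits an array into chunks, sums each chunk on its own thread, and combines the partial sums after `pthread_join`. No mutex is needed here because each thread writes only to its own `Chunk`, and the main thread reads those results only after joining. `NTHREADS`, `Chunk`, and `parallel_sum` are hypothetical names, and the fallback of doing a chunk on the calling thread when `pthread_create` fails is one design choice among several. Build with `-pthread`.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define NTHREADS 4

typedef struct {
    const long *data;
    size_t begin, end;  /* half-open range [begin, end) */
    long sum;           /* written only by the thread that owns this chunk */
} Chunk;

static void *sum_chunk(void *arg)
{
    Chunk *c = arg;
    long s = 0;
    for (size_t i = c->begin; i < c->end; i++)
        s += c->data[i];
    c->sum = s;
    return NULL;
}

/* Sum n values using up to NTHREADS threads. */
long parallel_sum(const long *data, size_t n)
{
    assert(data != NULL || n == 0);
    pthread_t tid[NTHREADS];
    Chunk chunk[NTHREADS];
    int started[NTHREADS];
    size_t step = (n + NTHREADS - 1) / NTHREADS;

    for (int t = 0; t < NTHREADS; t++) {
        size_t b = (size_t)t * step;
        size_t e = b + step;
        if (b > n)
            b = n;
        if (e > n)
            e = n;
        chunk[t] = (Chunk){ .data = data, .begin = b, .end = e, .sum = 0 };
        started[t] = pthread_create(&tid[t], NULL, sum_chunk, &chunk[t]) == 0;
        if (!started[t])
            sum_chunk(&chunk[t]);  /* fall back to doing this chunk here */
    }

    long total = 0;
    for (int t = 0; t < NTHREADS; t++) {
        if (started[t])
            pthread_join(tid[t], NULL);  /* join before reading chunk[t].sum */
        total += chunk[t].sum;
    }
    return total;
}
```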

6. Advanced C Standard Library Functions

Leveraging advanced functions from the C Standard Library can simplify complex tasks and improve code efficiency. Functions for string manipulation (strchr, strstr, strtol), mathematical computations (<math.h>), sorting and searching (qsort, bsearch), and memory management (memcpy, memmove) are just a few examples. Familiarizing yourself with these functions not only saves time but also helps you write cleaner, more efficient code.
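As one example, `qsort` and `bsearch` from `<stdlib.h>` replace hand-written sorting and searching with a single call plus a comparator. This is a minimal sketch. The `Entry` type and helper names are hypothetical. The comparators use the `(a > b) - (a < b)` idiom rather than `a - b`, because the subtraction can overflow for large `int` values.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

typedef struct {
    char name[16];
    int score;
} Entry;

/* Order by descending score, then ascending name for ties. */
static int by_score_desc(const void *pa, const void *pb)
{
    const Entry *a = pa;
    const Entry *b = pb;
    if (a->score != b->score)
        return (a->score < b->score) - (a->score > b->score);
    return strcmp(a->name, b->name);
}

/* Ascending int comparator, usable by both qsort and bsearch. */
static int cmp_int(const void *pa, const void *pb)
{
    int a = *(const int *)pa;
    int b = *(const int *)pb;
    return (a > b) - (a < b);
}

void sort_entries(Entry *e, size_t n)
{
    assert(e != NULL || n == 0);
    qsort(e, n, sizeof *e, by_score_desc);
}

/* bsearch requires the array to be sorted with the same comparator. */
int contains_sorted(const int *sorted, size_t n, int key)
{
    return bsearch(&key, sorted, n, sizeof *sorted, cmp_int) != NULL;
}
```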

7. Debugging and Optimization Techniques

Effective debugging and optimization techniques are critical for refining code and enhancing performance in advanced C programming. Tools like GDB (GNU Debugger) help track down bugs and analyze program behavior. Additionally, understanding compiler optimizations and using profiling tools can identify bottlenecks in your code, leading to improved performance.

8. Best Practices in Advanced C Programming

Following best practices in coding and project organization helps maintain readability and manageability of complex C programs. This includes using consistent naming conventions, modularizing code through functions and header files, and documenting your code thoroughly. Such practices not only make your code easier to understand but also facilitate collaboration with other developers.

9. Conclusion

By exploring advanced C programming concepts, developers can elevate their skills and create more efficient, powerful, and scalable applications. Mastering these topics not only enhances your technical capabilities but also opens doors to advanced roles in software development, systems programming, and beyond. Embrace the challenge of advanced C programming, and take your coding skills to new heights!

#C programming#C programming course#Learn C programming#C programming for beginners#Online C programming course#C programming tutorial#Best C programming course#C programming certification#Advanced C programming#C programming exercises#C programming examples#C programming projects#Free C programming course#C programming for kids#C programming challenges#C programming course online free#C programming books#C programming guide#Best C programming tutorials#C programming online classes


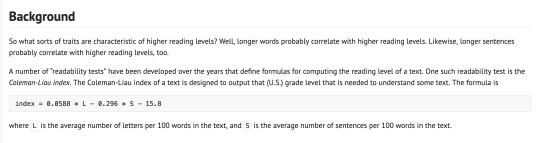

Calling by reference in C++ Also known as STOP OVERTHINKING EVERYTHING

So I have now seen a specific type of horrible code from several programmers at my internship who really should know better. The code will look something like this:

And when I ask how on earth they managed to overcomplicate "calling a function", I get the answer "I am calling by reference!" Which... no... no, you are not.

So, there are two ways to feed arguments to a function, known as calling by value and calling by reference. When you call by value, the function gets a COPY of whatever you send in and works on that copy. (By the way, that is usually faster than calling by reference for small primitive variables, since passing and dereferencing a 64-bit address is more work than copying the 32 bits an int usually is.)

What is happening in the... abomination of a function call here is that they use a shared pointer. And shared pointers are a great tool! They manage the lifetime of dynamically allocated memory for you, even when ownership is shared between several threads, so you get much of the safety and ease of use you would have with a plain local variable (i.e., a variable allocated on the stack). But when you use one for something that could just have been a local variable in a single-threaded environment... then... it gives you all the safety and ease of use... that you already had...

When I asked why on earth they were doing this to that poor, poor pointer, I was told that it was because using raw pointers was bad... OK... first of all, yes, raw pointers are bad. Not really in and of themselves, but because C++ has better tools than them for 99% of use cases... But that is not a reason to use a pointer at all! When I told him he was calling this function by value... just in a really weird, complicated way, he disagreed. He was using a pointer! Yes... you are putting in a pointer... which gets copied... And together with the work of creating the pointer and the more complicated syntax of using a pointer... it is just bad.

You know how you call by reference? You do it... by calling... with a reference... Not a pointer, a REFERENCE. The hint is in the name! This is the refactored code:

THAT is calling by reference. It is quick. It is clean. It is easy. The ONLY change from calling by value is in the signature. Both from the perspective of someone who uses the function and from inside the function, it works JUST like a call by value. Just like using a pointer, it allows you to work directly on a variable from an outer scope instead of copying the variable into the function. And unlike a pointer, a reference guarantees that whatever you are being fed actually exists. You can send in a null pointer; you cannot send in a null reference. (Well... technically you can, but you have to work really hard at it, and it is undefined behavior.) Like... in programming, the easiest way, the one that requires the least amount of work, is often also the most efficient and the easiest to maintain... just... just do it simply. I beg of you. Code models reality, and as a result can get really, really complicated. So let us keep the simple things simple, yeah?

(By the way, I am grumping at programmers who should know better. When newbies make weird coding choices, that is simply them learning. That is obviously fine.)


Electronic Arts' "M.U.L.E." for the Atari 400/800/1200 computers

Released in 1983 by Electronic Arts for the Atari 400/800, "M.U.L.E." is a seminal video game that combines elements of strategy, resource management, and economic simulation in a multiplayer format. Designed by Dan Bunten (later Danielle Bunten Berry) of Ozark Softscape, "M.U.L.E." is recognized not only for its innovative gameplay but also for fostering social interaction and strategic thinking among players. It remains a beloved classic for its pioneering approach to multiplayer gaming and its enduring game design principles.

Historical Context

The early 1980s marked a vibrant period for the home computer market, with the Atari 400/800 series emerging as significant platforms due to their advanced graphic and sound capabilities relative to competitors. This era witnessed the rise of software companies eager to explore these new technologies, among them Electronic Arts, which was founded with the vision of supporting software developers as artists. "M.U.L.E." was among the first titles released under this new banner, exemplifying the company's commitment to innovative and thoughtful game design.

Gameplay and Design

"M.U.L.E." allows up to four players to colonize a distant planet, competing and cooperating to manage the allocation of resources such as food, energy, smithore (used to make MULEs—Multiple Use Labor Elements), and crystite. The gameplay involves strategic placement of MULEs on various plots of land to harvest resources, which are then used to sustain the colony and can be bought or sold in a dynamic marketplace that simulates supply and demand economics.

One of the most innovative aspects of "M.U.L.E." is its emphasis on economic principles, making it one of the first games to incorporate complex economic algorithms that impact player decisions. It also promotes social interaction through its auction system, where players negotiate and trade resources, requiring real-time decision-making that enhances the game’s dynamic feel.

Technological Innovations

"M.U.L.E." was technologically significant for several reasons. Firstly, its graphic design was highly effective yet simple, with clear, colorful representations of the game world that made complex information accessible and engaging. The user interface was ahead of its time, providing players with easy navigation and management of in-game actions, which was crucial for a game with such depth.

Secondly, the game made excellent use of the Atari 400/800's capabilities, particularly in handling multiple players in a turn-based setting without sacrificing pace or engagement. This was a remarkable achievement that set a precedent for future multiplayer games.

Cultural Impact and Legacy

"M.U.L.E." is beloved by those who played it for several reasons. It was one of the first games to effectively blend competitive and cooperative gameplay, creating a unique social experience in video gaming. The game's ability to forge a communal spirit among players, coupled with the intellectual challenge of managing economic variables, made it not only fun but a mentally stimulating experience.

The game's influence extends beyond just gameplay; it is cited as an inspiration by numerous game developers who appreciated its balanced game mechanics and economic simulation aspects. "M.U.L.E." laid the groundwork for many future simulations and strategy games, and its principles can be seen in modern titles that incorporate complex economic systems and multiplayer components.

Conclusion

"M.U.L.E." remains a landmark in video game history, notable for its innovative approach to gameplay, technology, and social gaming dynamics. It stands out not only as a product of its time but also as a forward-thinking creation that predicted and shaped future developments in the gaming industry. For many, "M.U.L.E." was more than just a game; it was a compelling social and strategic experience that has endured in memory and influence, continuing to inspire game designers and players alike with its timeless design and gameplay.

#EA#Electronic Arts#Trip Hawkins#Atari#Atari 400#Atari 800#Atari 1200#MULE#Retro#Game#Retro game#Retro gaming#Pixel Crisis


Optimizing Performance on Enterprise Linux Systems: Tips and Tricks

Introduction: In the dynamic world of enterprise computing, the performance of Linux systems plays a crucial role in ensuring efficiency, scalability, and reliability. Whether you're managing a data center, cloud infrastructure, or edge computing environment, optimizing performance is a continuous pursuit. In this article, we'll delve into various tips and tricks to enhance the performance of enterprise Linux systems, covering everything from kernel tuning to application-level optimizations.

Kernel Tuning:

Adjusting kernel parameters: Fine-tuning parameters such as TCP/IP stack settings, file system parameters, and memory management can significantly impact performance. Tools like sysctl provide a convenient interface to modify these parameters.

Utilizing kernel patches: Keeping abreast of the latest kernel patches and updates can address performance bottlenecks and security vulnerabilities. Techniques like kernel live patching let you apply many fixes to a running kernel without a reboot, minimizing downtime.
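Kernel parameters that `sysctl` shows are exposed as files under `/proc/sys`, with the dots in a name replaced by slashes (so `vm.swappiness` lives at `/proc/sys/vm/swappiness`). This makes it easy for a monitoring tool to check current values before and after tuning. The sketch below is a minimal, read-only illustration: `read_sysctl_long` is a hypothetical helper, it handles only simple numeric parameters, and it ignores the rare names that themselves contain slashes. Changing a value still goes through `sysctl -w` (for example `sysctl -w vm.swappiness=10`) or a file in `/etc/sysctl.d/`, and requires root.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Read a numeric kernel parameter by its sysctl name, e.g. "vm.swappiness".
   Stores the value in *out and returns 0, or returns -1 on failure. */
int read_sysctl_long(const char *name, long *out)
{
    assert(name != NULL && out != NULL);
    char path[256];
    int len = snprintf(path, sizeof path, "/proc/sys/%s", name);
    if (len < 0 || (size_t)len >= sizeof path)
        return -1;  /* name too long for the buffer */
    /* Dots in the sysctl name become directory separators. */
    for (char *p = path + strlen("/proc/sys/"); *p != '\0'; p++)
        if (*p == '.')
            *p = '/';
    FILE *f = fopen(path, "r");
    if (f == NULL)
        return -1;
    int ok = fscanf(f, "%ld", out) == 1;
    fclose(f);
    return ok ? 0 : -1;
}
```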

File System Optimization:

Choosing the right file system: Depending on the workload characteristics, selecting an appropriate file system like ext4, XFS, or Btrfs can optimize I/O performance, scalability, and data integrity.

File system tuning: Tweaking parameters such as block size, journaling options, and inode settings can improve file system performance for specific use cases.

Disk and Storage Optimization:

Utilizing solid-state drives (SSDs): SSDs offer significantly faster read/write speeds compared to traditional HDDs, making them ideal for I/O-intensive workloads.

Implementing RAID configurations: Depending on the level, RAID arrays can improve data redundancy, fault tolerance, and disk I/O performance (RAID 0, for example, stripes data for speed but provides no redundancy). Choosing the right RAID level based on performance and redundancy requirements is crucial.

Leveraging storage technologies: Technologies like LVM (Logical Volume Manager) and software-defined storage solutions provide flexibility and performance optimization capabilities.

Memory Management:

Optimizing memory allocation: Adjusting parameters related to memory allocation and usage, such as swappiness and transparent huge pages, can enhance system performance and resource utilization.

Monitoring memory usage: Utilizing tools like sar, vmstat, and top to monitor memory usage trends and identify memory-related bottlenecks.

CPU Optimization:

CPU affinity and scheduling: Assigning specific CPU cores to critical processes or applications can minimize contention and improve performance. Tools like taskset and numactl facilitate CPU affinity configuration.

Utilizing CPU governor profiles: Choosing the appropriate CPU governor profile based on workload characteristics can optimize CPU frequency scaling and power consumption.
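The CPU affinity that `taskset` configures can also be set from inside a program with the Linux `sched_setaffinity` system call. The sketch below pins the calling thread to the lowest-numbered CPU it is currently allowed to use. `pin_to_first_allowed_cpu` is a hypothetical helper. Picking "the first allowed CPU" is only for illustration: a real deployment would pick cores deliberately, for example keeping a latency-sensitive process away from cores that handle heavy interrupt load. This is Linux-specific and needs `_GNU_SOURCE` for the `cpu_set_t` macros.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <sched.h>

/* Pin the calling thread to the lowest-numbered CPU in its current
   affinity mask. Returns that CPU number, or -1 on failure. */
int pin_to_first_allowed_cpu(void)
{
    cpu_set_t allowed;
    /* pid 0 means "the calling thread". */
    if (sched_getaffinity(0, sizeof allowed, &allowed) != 0)
        return -1;
    for (int cpu = 0; cpu < CPU_SETSIZE; cpu++) {
        if (CPU_ISSET(cpu, &allowed)) {
            cpu_set_t one;
            CPU_ZERO(&one);
            CPU_SET(cpu, &one);
            assert(CPU_COUNT(&one) == 1);
            return sched_setaffinity(0, sizeof one, &one) == 0 ? cpu : -1;
        }
    }
    return -1;
}
```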

Application-Level Optimization:

Performance profiling and benchmarking: Utilizing tools like perf, strace, and sysstat for performance profiling and benchmarking can identify performance bottlenecks and optimize application code.

Compiler optimizations: Leveraging compiler optimization flags and techniques to enhance code performance and efficiency.

Conclusion: Optimizing performance on enterprise Linux systems is a multifaceted endeavor that requires a combination of kernel tuning, file system optimization, storage configuration, memory management, CPU optimization, and application-level optimizations. By implementing the tips and tricks outlined in this article, organizations can maximize the performance, scalability, and reliability of their Linux infrastructure, ultimately delivering better user experiences and driving business success.

For further details, visit www.qcsdclabs.com.

#redhatcourses#redhat#linux#redhatlinux#docker#dockerswarm#linuxsystem#information technology#enterpriselinx#automation#clustering#openshift#cloudcomputing#containerorchestration#microservices#aws


Interesting Papers for Week 32, 2024

In and Out of Criticality? State-Dependent Scaling in the Rat Visual Cortex. Castro, D. M., Feliciano, T., de Vasconcelos, N. A. P., Soares-Cunha, C., Coimbra, B., Rodrigues, A. J., … Copelli, M. (2024). PRX Life, 2(2), 023008.

An event-termination cue causes perceived time to dilate. Choe, S., & Kwon, O.-S. (2024). Psychonomic Bulletin & Review, 31(2), 659–669.

Stimulus-dependent differences in cortical versus subcortical contributions to visual detection in mice. Cone, J. J., Mitchell, A. O., Parker, R. K., & Maunsell, J. H. R. (2024). Current Biology, 34(9), 1940-1952.e5.

Sexually dimorphic control of affective state processing and empathic behaviors. Fang, S., Luo, Z., Wei, Z., Qin, Y., Zheng, J., Zhang, H., … Li, B. (2024). Neuron, 112(9), 1498-1517.e8.

Post-retrieval stress impairs subsequent memory depending on hippocampal memory trace reinstatement during reactivation. Heinbockel, H., Wagner, A. D., & Schwabe, L. (2024). Science Advances, 10(18).

An effect that counts: Temporally contiguous action effect enhances motor performance. Karsh, N., Ahmad, Z., Erez, F., & Hadad, B.-S. (2024). Psychonomic Bulletin & Review, 31(2), 897–905.

Learning enhances representations of taste-guided decisions in the mouse gustatory insular cortex. Kogan, J. F., & Fontanini, A. (2024). Current Biology, 34(9), 1880-1892.e5.

Babbling opens the sensory phase for imitative vocal learning. Leitão, A., & Gahr, M. (2024). Proceedings of the National Academy of Sciences, 121(18), e2312323121.

Information flow between motor cortex and striatum reverses during skill learning. Lemke, S. M., Celotto, M., Maffulli, R., Ganguly, K., & Panzeri, S. (2024). Current Biology, 34(9), 1831-1843.e7.

Statistically inferred neuronal connections in subsampled neural networks strongly correlate with spike train covariances. Liang, T., & Brinkman, B. A. W. (2024). Physical Review E, 109(4), 044404.

Pre-acquired Functional Connectivity Predicts Choice Inconsistency. Madar, A., Kurtz-David, V., Hakim, A., Levy, D. J., & Tavor, I. (2024). Journal of Neuroscience, 44(18), e0453232024.

Alpha-band sensory entrainment improves audiovisual temporal acuity. Marsicano, G., Bertini, C., & Ronconi, L. (2024). Psychonomic Bulletin & Review, 31(2), 874–885.

Excitability mediates allocation of pre-configured ensembles to a hippocampal engram supporting contextual conditioned threat in mice. Mocle, A. J., Ramsaran, A. I., Jacob, A. D., Rashid, A. J., Luchetti, A., Tran, L. M., … Josselyn, S. A. (2024). Neuron, 112(9), 1487-1497.e6.

Incidentally encoded temporal associations produce priming in implicit memory. Mundorf, A. M. D., Uitvlugt, M. G., & Healey, M. K. (2024). Psychonomic Bulletin & Review, 31(2), 761–771.

Intrinsic and Synaptic Contributions to Repetitive Spiking in Dentate Granule Cells. Shu, W.-C., & Jackson, M. B. (2024). Journal of Neuroscience, 44(18), e0716232024.

Dynamic prediction of goal location by coordinated representation of prefrontal-hippocampal theta sequences. Wang, Y., Wang, X., Wang, L., Zheng, L., Meng, S., Zhu, N., … Ming, D. (2024). Current Biology, 34(9), 1866-1879.e6.

Calibrating Bayesian Decoders of Neural Spiking Activity. Wei 魏赣超, G., Tajik Mansouri زینب تاجیک منصوری, Z., Wang 王晓婧, X., & Stevenson, I. H. (2024). Journal of Neuroscience, 44(18), e2158232024.

Attribute amnesia as a product of experience-dependent encoding. Yan, N., & Anderson, B. A. (2024). Psychonomic Bulletin & Review, 31(2), 772–780.

A common format for representing spatial location in visual and motor working memory. Yousif, S. R., Forrence, A. D., & McDougle, S. D. (2024). Psychonomic Bulletin & Review, 31(2), 697–707.

Unified control of temporal and spatial scales of sensorimotor behavior through neuromodulation of short-term synaptic plasticity. Zhou, S., & Buonomano, D. V. (2024). Science Advances, 10(18).

#neuroscience#science#research#brain science#scientific publications#cognitive science#neurobiology#cognition#psychophysics#neurons#neural computation#neural networks#computational neuroscience
