#finetuning

Explore tagged Tumblr posts

Text

Get the inside scoop on Llama 2, the open-source chat model by Meta AI. Learn about its advanced training process, including pretraining, fine-tuning, and reinforcement learning with human feedback. Learn more about this revolutionary technology.

#Llama2#MetaAI#ChatModel#OpenSource#AI#FineTuning#artificial intelligence#open source#machine learning

3 notes

·

View notes

Text

🎧 Celebrate World Hearing Day with Us! 🎧

Let’s Raise Awareness Together for Better Hearing! 🌍👂

On World Hearing Day, we offer a FREE Hearing Test, Trial, and Fine-Tuning! Plus, grab exciting exchange offers and exclusive deals on hearing aids. 🎉

Don’t miss this chance to take care of your hearing!

🔊 Get the Best Deals on Hearing Aids!

Looking for high-quality hearing aids at the best prices? Perfect Hearing and Speech Clinic offers a wide range of options to suit your needs. Schedule a hearing test and trial session with us today!

📞 Contact Us:

For more information or to book an appointment, call us at

9811196718.

🌐 Visit Our Website:

Explore our services and products at [www.perfecthearingclinic.com](http://www.perfecthearingclinic.com).

👍 Stay Connected:

- Follow us on: -

Facebook - https://www.facebook.com/perfecthearingclinic

Instagram-https://www.instagram.com/perfect_hearing_speech_clinic/

Quora- https://perfecthearingandspeechclinicsspace.quora.com/

YouTube-https://www.youtube.com/@perfecthearingclinic

Location: Perfect Hearing and Speech Clinic, Janakpuri East, Delhi and

Perfect Hearing and Speech Clinic, Sec 51, Ocus Quantum Gurugram

#WorldHearingDay#WorldHearingDay2025#hearingawareness#raiseawareness#hearing#hearingaid#connectedliving#ConnectedLiving#hearinghealth#hearingaidtrail#hearingloss#finetuning#ExchangeOffer#ExcitingDeals#hearinglosskids#hearingaids#betterhearing#clearhearing#perfecthearingandspeechclinic#ClearHearing#phscdelhi#HearingInnovation

0 notes

Text

What Is Fine-Tuning? And Its Methods For Best AI Performance

What Is Fine-Tuning?

In machine learning, fine-tuning is the act of modifying a learned model for particular tasks or use cases. It is now a standard deep learning method, especially for developing foundation models for generative artificial intelligence.

How does Fine-Tuning work?

When fine-tuning, a pre-trained model’s weights are used as a basis for further training on a smaller dataset of instances that more closely match the particular tasks and use cases the model will be applied to. Although supervised learning is usually included, it may also incorporate semi-supervised, self-supervised, or reinforcement learning.

The datasets that are utilized to fine-tune the pre-trained model communicate the particular domain knowledge, style, tasks, or use cases that are being adjusted. As an illustration:

An Large Language Model that has already been trained on general language might be refined for coding using a fresh dataset that has pertinent programming queries and sample code for each one.

With more labeled training samples, an image classification model that has been trained to recognize certain bird species may be trained to identify new species.

By using example texts that reflect a certain writing style, self-supervised learning may teach an LLM how to write in that manner.

When the situation necessitates supervised learning but there are few appropriate labeled instances, semi-supervised learning a type of machine learning that combines both labeled and unlabeled data is beneficial. For both computer vision and NLP tasks, semi-supervised fine-tuning has shown promising results and eases the difficulty of obtaining a sufficient quantity of labeled data.

Fine-Tuning Techniques

The weights of the whole network can be updated by fine-tuning, however this isn’t usually the case due to practical considerations. Other fine-tuning techniques that update just a subset of the model parameters are widely available and are often referred to as parameter-efficient fine-tuning (PEFT). Later in this part, it will discuss PEFT approaches, which help minimize catastrophic forgetting (the phenomena where fine-tuning results in the loss or instability of the model’s essential information) and computing demands, typically without causing significant performance sacrifices.

Achieving optimal model performance frequently necessitates multiple iterations of training strategies and setups, adjusting datasets and hyperparameters like batch size, learning rate, and regularization terms until a satisfactory outcome per whichever metrics are most relevant to your use case has been reached. This is because there are many different fine-tuning techniques and numerous variables that come with them.

Parameter Efficient Fine-Tuning (PEFT)

Full fine-tuning, like pre-training, is computationally intensive. It is typically too expensive and impracticable for contemporary deep learning models with hundreds of millions or billions of parameters.

Parameter efficient fine-tuning (PEFT) uses many ways to decrease the number of trainable parameters needed to adapt a large pre-trained model to downstream applications. PEFT greatly reduces computing and memory resources required to fine-tune a model. In NLP applications, PEFT approaches are more stable than complete fine-tuning methods.

Partial tweaks

Partial fine-tuning, also known as selective fine-tuning, updates just the pre-trained parameters most important to model performance on downstream tasks to decrease computing costs. The remaining settings are “frozen,” preventing changes.

The most intuitive partial fine-tuning method updates just the neural network’s outer layers. In most model architectures, the inner layers (closest to the input layer) capture only broad, generic features. For example, in a CNN used for image classification, early layers discern edges and textures, and each subsequent layer discerns finer features until final classification is predicted.

The more similar the new job (for which the model is being fine-tuned) is to the original task, the more valuable the inner layers’ pre-trained weights will be for it, requiring fewer layers to be updated.

Other partial fine-tuning strategies include changing just the layer-wide bias terms of the model, not the node weights. and “sparse” fine-tuning that updates just a portion of model weights.

Additive tweaking

Additive approaches add layers or parameters to a pre-trained model, freeze the weights, and train just those additional components. This method maintains model stability by preserving pre-trained weights.

This may increase training time, but it decreases GPU memory needs since there are fewer gradients and optimization states to store: Lialin, et al. found that training all model parameters uses 12–20 times more GPU memory than model weights. Quantizing the frozen model weights reduces model parameter accuracy, akin to decreasing an audio file’s bitrate, conserving more memory.

Additive approaches include quick tweaking. It’s comparable to prompt engineering, which involves customizing “hard prompts” human-written prompts in plain language to direct the model toward the intended output, for as by selecting a tone or supplying examples for few-shot learning. AI-authored soft prompts are concatenated to the user’s hard prompt in prompt tweaking. Prompt tuning trains the soft prompt instead of the model by freezing model weights. Fast, efficient tuning lets models switch jobs more readily, but interpretability suffers.

Adapters

In another subclass of additive fine-tuning, adaptor modules new, task-specific layers added to the neural network are trained instead of the frozen model weights. The original article assessed outcomes on the BERT masked language model and found that adapters performed as well as complete fine-tuning with 3.6% less parameters.

Reparameterization

Low-rank transformation of high-dimensional matrices (such a transformer model’s large matrix of pre-trained model weights) is used in parameterization-based approaches like LoRA. To reflect the low-dimensional structure of model weights, these low-rank representations exclude unimportant higher-dimensional information, drastically lowering trainable parameters. This greatly accelerates fine-tuning and minimizes model update memory.

LoRA optimizes a delta weight matrix injected into the model instead of the matrix of model weights. The weight update matrix is represented as two smaller (lower rank) matrices, lowering the number of parameters to update, speeding up fine-tuning, and reducing model update memory. Pre-trained model weights freeze.

Since LoRA optimizes and stores the delta between pre-trained weights and fine-tuned weights, task-specific LoRAs can be “swapped in” to adapt the pre-trained model whose parameters remain unchanged to a given use case.

QLoRA quantizes the transformer model before LoRA to minimize computing complexity.

Common fine-tuning use cases

Fine-tuning may customize, augment, or extend the model to new activities and domains.

Customizing style: Models may be customized to represent a brand’s tone by using intricate behavioral patterns and unique graphic styles or by starting each discussion with a pleasant greeting.

Specialization: LLMs may use their broad language skills to specialized assignments. Llama 2 models from Meta include basic foundation models, chatbot-tuned variations (Llama-2-chat), and code-tuned variants.

Adding domain-specific knowledge: LLMs are pre-trained on vast data sets but not omniscient. In legal, financial, and medical environments, where specialized, esoteric terminology may not have been well represented in pre-training, using extra training samples may help the base model.

Few-shot learning: Models with high generalist knowledge may be fine-tuned for more specialized categorization texts using few samples.

Addressing edge cases: Your model may need to handle circumstances not addressed in pre-training. Using annotated samples to fine-tune a model helps guarantee such scenarios are handled properly.

Your organization may have a proprietary data pipeline relevant to your use case. No training is needed to add this information into the model via fine-tuning.

Fine-Tuning Large Language Models(LLM)

A crucial step in the LLM development cycle is fine-tuning, which enables the basic foundation models’ linguistic capabilities to be modified for a range of applications, including coding, chatbots, and other creative and technical fields.

Using a vast corpus of unlabeled data, self-supervised learning is used to pre-train LLMs. Autoregressive language models are trained to predict the next word or words in a sequence until it is finished. Examples of these models include OpenAI’s GPT, Google’s Gemini, and Meta’s Llama models. Pre-training involves giving models a sample sentence’s beginning from the training data and asking them to forecast each word in the sequence until the sample’s finish. The real word that follows in the original example phrase acts as the ground truth for each forecast.

Although this pre-training produces strong text production skills, it does not provide a true grasp of the intent of the user. Fundamentally, autoregressive LLMs only add text to a prompt rather than responding to it. A pre-trained LLM (that has not been refined) only predicts, in a grammatically coherent manner, what may be the following word(s) in a given sequence that is launched by the prompt, without particularly explicit direction in the form of prompt engineering.

In response to the question, “Teach me how to make a resume,” an LLM would say, “using Microsoft Word.” Although it is an acceptable approach to finish the phrase, it does not support the user’s objective. Due to pertinent information in its pre-training corpus, the model may already possess a significant amount of knowledge about creating resumes; however, this knowledge may not be accessible without further refinement.

Thus, the process of fine-tuning foundation models is essential to making them entirely appropriate for real-world application, as well as to customizing them to your or your company’s distinct tone and use cases.

Read more on Govindhtech.com

#finetuning#AI#artificalintelligence#llm#Additivetweaking#Partialtweaks#lora#govindhtech#news#technology#technologies#TechNews#technologynews#technologytrends

0 notes

Text

✨ Unlock the Power of Generative AI! ✨

Fine-tuning pre-trained models is revolutionizing the landscape of Generative AI applications. By adapting existing models to specific tasks, companies can enhance creativity and efficiency, leading to innovative solutions across industries. Whether it’s crafting personalized content or generating realistic images, the potential is limitless! Major players in the tech industry are investing heavily in this space, pushing the boundaries of what AI can achieve.

Curious to learn more about how fine-tuning can elevate your business?

👉 Read more

#GenerativeAI#MachineLearning#AIInnovation#FineTuning#TechTrends#ArtificialIntelligence#FutureOfWork

0 notes

Text

youtube

Unlocking the Secrets of LLM Fine Tuning! 🚀✨

#FineTuning#LargeLanguageModels#GPT#Llama#Bard#ArtificialIntelligence#MachineLearning#AIOptimization#SelfSupervisedLearning#SupervisedLearning#ReinforcementLearning#AITraining#TechTutorial#AIModels#ModelCustomization#DataScience#AIForHealthcare#AIForFinance#DeepLearning#ModelFineTuning#AIEnhancement#TechInsights#AIAndML#AIApplications#MachineLearningTechniques#AITrainingTips#youtube#machine learning#artificial intelligence#art

1 note

·

View note

Text

10-Year Validity Passports Now Undergo ‘Finetuning’ Before Implementation

Passports with 10-year validity will be introduced soon after various aspects of the implementation have been fine-tuned, said Home Minister Datuk Seri Saifuddin Nasution Ismail. Speaking at a press conference following the Immigration Day 2024 Parade today, he said this initiative is expected to offer more options to citizens who frequently travel abroad. 10-year validity passports undergoing…

0 notes

Photo

Elegant and charming, Black Sample script font pays homage to classic calligraphy, elevating designs with timeless sophistication for various projects.

Link: https://l.dailyfont.com/7iEHA

#aff#Love#Inspiration#Design#Creativity#Typography#Artistic#Beautiful#Classic#Timeless#Elegant#Charming#Script#Calligraphy#Handwritten#Handmade#Sophisticated#Luxury#FineTuning

1 note

·

View note

Text

There’s a mystery at the heart of the universe – why is it uniquely fine-tuned to support life? Many scientists are baffled by the puzzle of life and are honest about its implications.

#Apologetics#Creation#DefendingtheFaith#EvidenceforGod#God#Jesus#FineTuning#FrankWilczek#Science#FreeSpeech#JohnLennox#StephenHawking#Genesis#NeildeGrasseTyson#ScienceandGod#ExistenceofGod#FineTunedUniverse#Universe#TheGrandDesign#PaulBackholer#Backholer#ByFaithMedia#ByFaith#Christian#ChristianArticle#ChristianBooks#ChristianReading#Cosmology#ThePlutoFiles#HowChristianityMadetheModernWorld

1 note

·

View note

Text

Data Annotation for Fine-tuning Large Language Models(LLMs)

The beginning of ChatGPT and AI-generated text, about which everyone is now raving, occurred at the end of 2022. We always find new ways to push the limits of what we once thought was feasible as technology develops. One example of how we are using technology to make increasingly intelligent and sophisticated software is large language models. One of the most significant and often used tools in natural language processing nowadays is large language models (LLMs). LLMs allow machines to comprehend and produce text in a manner that is comparable to how people communicate. They are being used in a wide range of consumer and business applications, including chatbots, sentiment analysis, content development, and language translation.

What is a large language model (LLM)?

In simple terms, a language model is a system that understands and predicts human language. A large language model is an advanced artificial intelligence system that processes, understands, and generates human-like text based on massive amounts of data. These models are typically built using deep learning techniques, such as neural networks, and are trained on extensive datasets that include text from a broad range, such as books and websites, for natural language processing.

One of the critical aspects of a large language model is its ability to understand the context and generate coherent, relevant responses based on the input provided. The size of the model, in terms of the number of parameters and layers, allows it to capture intricate relationships and patterns within the text.

While analyzing large amounts of text data in order to fulfill this goal, language models acquire knowledge about the vocabulary, grammar, and semantic properties of a language. They capture the statistical patterns and dependencies present in a language. It makes AI-powered machines understand the user’s needs and personalize results according to those needs. Here’s how the large language model works:

1. LLMs need massive datasets to train AI models. These datasets are collected from different sources like blogs, research papers, and social media.

2. The collected data is cleaned and converted into computer language, making it easier for LLMs to train machines.

3. Training machines involves exposing them to the input data and fine-tuning its parameters using different deep-learning techniques.

4. LLMs sometimes use neural networks to train machines. A neural network comprises connected nodes that allow the model to understand complex relationships between words and the context of the text.

Need of Fine Tuning LLMs

Our capacity to process human language has improved as large language models (LLMs) have become more widely used. However, their generic training frequently yields below-average performance for particular tasks. LLMs are customized using fine-tuning techniques to meet the particular needs of various application domains, hence overcoming this constraint. Numerous top-notch open-source LLMs have been created thanks to the work of the AI community, including but not exclusive to Open LLaMA, Falcon, StableLM, and Pythia. These models can be fine-tuned using a unique instruction dataset to be customized for your particular goal, such as teaching a chatbot to respond to questions about finances.

Fine-tuning a large language model involves adjusting and adapting a pre-trained model to perform specific tasks or cater to a particular domain more effectively. The process usually entails training the model further on a targeted dataset that is relevant to the desired task or subject matter. The original large language model is pre-trained on vast amounts of diverse text data, which helps it to learn general language understanding, grammar, and context. Fine-tuning leverages this general knowledge and refines the model to achieve better performance and understanding in a specific domain.

Fine-tuning a large language model (LLM) is a meticulous process that goes beyond simple parameter adjustments. It involves careful planning, a clear understanding of the task at hand, and an informed approach to model training. Let's delve into the process step by step:

1. Identify the Task and Gather the Relevant Dataset -The first step is to identify the specific task or application for which you want to fine-tune the LLM. This could be sentiment analysis, named entity recognition, or text classification, among others. Once the task is defined, gather a relevant dataset that aligns with the task's objectives and covers a wide range of examples.

2. Preprocess and Annotate the Dataset -Before fine-tuning the LLM, preprocess the dataset by cleaning and formatting the text. This step may involve removing irrelevant information, standardizing the data, and handling any missing values. Additionally, annotate the dataset by labeling the text with the appropriate annotations for the task, such as sentiment labels or entity tags.

3. Initialize the LLM -Next, initialize the pre-trained LLM with the base model and its weights. This pre-trained model has been trained on vast amounts of general language data and has learned rich linguistic patterns and representations. Initializing the LLM ensures that the model has a strong foundation for further fine-tuning.

4. Fine-Tune the LLM -Fine-tuning involves training the LLM on the annotated dataset specific to the task. During this step, the LLM's parameters are updated through iterations of forward and backward propagation, optimizing the model to better understand and generate predictions for the specific task. The fine-tuning process involves carefully balancing the learning rate, batch size, and other hyperparameters to achieve optimal performance.

5. Evaluate and Iterate -After fine-tuning, it's crucial to evaluate the performance of the model using validation or test datasets. Measure key metrics such as accuracy, precision, recall, or F1 score to assess how well the model performs on the task. If necessary, iterate the process by refining the dataset, adjusting hyperparameters, or fine-tuning for additional epochs to improve the model's performance.

Data Annotation for Fine-tuning LLMs

The wonders that GPT and other large language models have come to reality due to a massive amount of labor done for annotation. To understand how large language models work, it's helpful to first look at how they are trained. Training a large language model involves feeding it large amounts of data, such as books, articles, or web pages so that it can learn the patterns and connections between words. The more data it is trained on, the better it will be at generating new content.

Data annotation is critical to tailoring large-language models for specific applications. For example, you can fine-tune the GPT model with in-depth knowledge of your business or industry. This way, you can create a ChatGPT-like chatbot to engage your customers with updated product knowledge. Data annotation plays a critical role in addressing the limitations of large language models (LLMs) and fine-tuning them for specific applications. Here's why data annotation is essential:

1. Specialized Tasks: LLMs by themselves cannot perform specialized or business-specific tasks. Data annotation allows the customization of LLMs to understand and generate accurate predictions in domains or industries with specific requirements. By annotating data relevant to the target application, LLMs can be trained to provide specialized responses or perform specific tasks effectively.

2. Bias Mitigation: LLMs are susceptible to biases present in the data they are trained on, which can impact the accuracy and fairness of their responses. Through data annotation, biases can be identified and mitigated. Annotators can carefully curate the training data, ensuring a balanced representation and minimizing biases that may lead to unfair predictions or discriminatory behavior.

3. Quality Control: Data annotation enables quality control by ensuring that LLMs generate appropriate and accurate responses. By carefully reviewing and annotating the data, annotators can identify and rectify any inappropriate or misleading information. This helps improve the reliability and trustworthiness of the LLMs in practical applications.

4. Compliance and Regulation: Data annotation allows for the inclusion of compliance measures and regulations specific to an industry or domain. By annotating data with legal, ethical, or regulatory considerations, LLMs can be trained to provide responses that adhere to industry standards and guidelines, ensuring compliance and avoiding potential legal or reputational risks.

Final thoughts

The process of fine-tuning large language models (LLMs) has proven to be essential for achieving optimal performance in specific applications. The ability to adapt pre-trained LLMs to perform specialized tasks with high accuracy has unlocked new possibilities in natural language processing. As we continue to explore the potential of fine-tuning LLMs, it is clear that this technique has the power to revolutionize the way we interact with language in various domains.

If you are seeking to fine-tune an LLM for your specific application, TagX is here to help. We have the expertise and resources to provide relevant datasets tailored to your task, enabling you to optimize the performance of your models. Contact us today to explore how our data solutions can assist you in achieving remarkable results in natural language processing and take your applications to new heights.

0 notes

Video

youtube

Rodney Holder - Why Fine-Tuning Seems Designed 🔢🌌🤔 https://newsinfitness.com/rodney-holder-why-fine-tuning-seems-designed/

0 notes

Text

We come carry the face of a dead sun.

#skindarim#rada#book of the sun#istrati clan#vampire#these are old pages but I want to show them off nontheless to bring the whole thing back into focus#i'm waiting on a new quote from a book printer in my area which seems incredibly promising and professional#meanwhile I'm just working on the last finetuneing. 112 pages rn btw

1K notes

·

View notes

Text

What a true silver spoon you are. I’m feeding you, but you still get your mouth dirty.

KIDNAP | EP4

#kidnap the series#ohm pawat#leng thanaphon#ohmleng#minQ#kidnapedit#MIN YOUR FOND IS SHOWING#my edits#testing new sharpening settings gone wrong lol#these are waaaay too oversharpened#like i like the way these gifs look but i also don’t#you feel me???#i think the camera raw filter is too *much* so i gotta finetune that ig

310 notes

·

View notes

Text

twirling princess has entered the chamber

#liv morgan#wwe elimination chamber#wweedit#my gifs#ok finetuned the colouring and i like it and i love her !!!!!!!!!!!!!! LOVE HER !!!!!!!!!

136 notes

·

View notes

Text

just finished writing the final chapter of vernon x rockstar!reader,,

#── ᵎᵎ ✦ yapping#[ y'all 💔💔💔💔💔💔💔💔💔these are my babies. ]#[ need to finetune the written work but the texts are locked and loaded :( ]#[ see y'all on december 23 (THREAT) ]

93 notes

·

View notes

Text

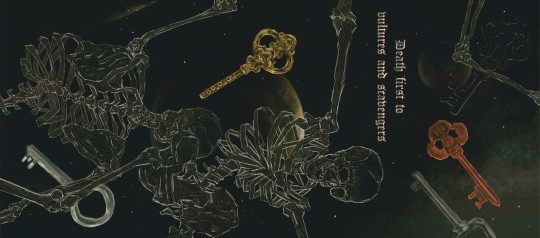

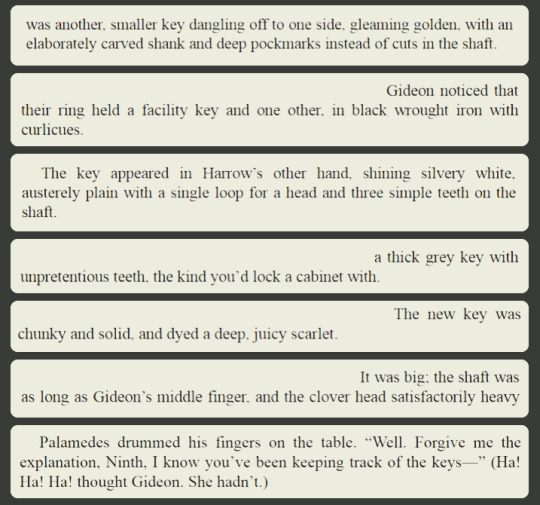

I'm doing a tlt book dust jacket art swap with a friend and for the left inside flap of the first book i decided to illustrate the facility keys, and it's very amusing to me that gideon gives such precise descriptions of the keys without once going "wait a minute these aesthetics sound familiar …"

#gideon is so much more observant than she gives herself credit for lmao#this is just a wip obv i still need to finetune the typograph and layout#the locked tomb#gideon the ninth#tlt spoilers#gtn spoilers#gideon nav

632 notes

·

View notes