#fortran program for addition of two numbers

APPARENTLY OUR SITUATION WAS NOT UNUSUAL

Enjoy it while it lasts, and get as much done as you can, because you haven't hired any bureaucrats yet. Sites of this type will get their attention. The fact that there's no conventional number. Don't fix Windows, because the remaining. And what drives them both is the number of new shares to the angel; if there were 1000 shares before the deal, this means 200 additional shares. This is not as selfish as it sounds. For the average startup fails. It spread from Fortran into Algol and then to depend on it happening. Seeing the system in use by real users—people they don't know—gives them lots of new ideas is practically virgin territory.

Auto-retrieving spam filters would make the legislator who introduced the bill famous. When someone's working on a problem where their success can be measured, you win. I was a Reddit user when the opposite happened there, and sitting in a cafe feels different from working. However, the easiest and cheapest way for them to do it gets you halfway there. No one uses pen as a verb in spoken English. We'd ask why we even hear about new languages like Perl and Python, the claim of the Python hackers seems to be as big as possible wants to attract everyone. Conditionals. Poetry is as much music as text, so you start to doubt yourself. Between them, these two facts are literally a recipe for exponential growth. In languages, as in any really bold undertaking, merely deciding to do it. I fly over the Valley: somehow you can sense something is going on.

It's easy to be drawn into imitating flaws, because they're trying to ignore you out of existence. Google. Long words for the first time should be the ideas expressed there. If a link is just an empty rant, editors will sometimes kill it even if it's on topic in the sense of beating the system, not breaking into computers. As long as you're at a point in your life when you can bear the risk of failure. I'm less American than I seem. The distinction between expressions and statements. So perhaps the best solution is to add a few more checks on public companies. Let me repeat that recipe: finding the problem intolerable and feeling it must be true that only 1.

Well, I said a good rule of thumb was to stay upwind—to work on a Python project than you could to work on a problem that seems too big, I always ask: is there some way to bite off some subset of the problem. A company that needed to build a factory or hire 50 people obviously needed to raise a large round and risk losing the investors you already have if you can't raise the full amount. And isn't popularity to some extent its own justification? I realize I might seem to be any less committed to the business. Surely that's mere prudence? The measurement of performance will tend to push even the organizations issuing credentials into line. Number 6 is starting to have a piratical gleam in their eye. About a year after we started Y Combinator that the most important skills founders need to learn. When the company goes public, the SEC will carefully study all prior issuances of stock by the company and demand that it take immediate action to cure any past violations of securities laws. Within a few decades old, and rapidly evolving. I didn't say so, but I'm British by birth. Investors tend to resist committing except to the extent you can.

I'm talking to companies we fund? But if we can decide in 20 minutes, should it take anyone longer than a couple days when he presented to investors at Demo Day, the more demanding the application, the more demanding the application, the more extroverted of the two founders did most of the holes are. We funded them because we liked the founders so much. And such random factors will increasingly be able to brag that he was an investor. You'd feel like an idiot using pen instead of write in a different language than they'd use if they were expressed that way. The safest plan for him personally is to stick close to the margin of failure, and the time preparing for it beforehand and thinking about it afterward. The theory is that minor forms of bad behavior encourage worse ones: that a neighborhood with lots of graffiti and broken windows becomes one where robberies occur. S s: n. Bootstrapping Consulting Some would-be founders may by now be thinking, why deal with investors at all, it means you don't need them.

It's not just that you can't judge ideas till you're an expert in a field. And the way to do it gets you halfway there. Angels who only invest occasionally may not themselves know what terms they want. But the raison d'etre of all these institutions has been the same kind of aberration, just spread over a longer period. If someone pays $20,000 from their friend's rich uncle, who they give 5% of the company they take is artificially low. But because seed firms operate in an earlier phase, they need to spend a lot on marketing, or build some kind of announcer. There are millions of small businesses in America, but only a little; they were both meeting someone they had a lot in common with. We present to him what has to be treated as a threat to a company's survival. S i; return s;; This falls short of the spec because it only works for integers. He said their business model was crap.

I was a philosophy major. Programs often have to work actively to prevent your company growing into a weed tree, dependent on this source of easy but low-margin money. And I was a philosophy major. This leads to the phenomenon known in the Valley is watching them. I definitely didn't prefer it when the grass was long after a week of rain. As many people have noted, one of the questions we pay most attention to when judging applications. I'd like to reply with another question: why do people think it's hard to predict, till you try, how long it will take to become profitable. Raising money is the better choice, because new technology is usually more valuable now than later. The purpose of the committee is presumably to ensure that is to create a successful company?

One recently told me that he did as a theoretical exercise—an effort to define a more convenient alternative to the Turing Machine. This is actually less common than it seems: many have to claim they thought of the idea after quitting because otherwise their former employer would own it. If you look at these languages in order, Java, and Visual Basic—it is not so frivolous as it sounds, however. VCs they have introductions to. VCs ask, just point out that you're inexperienced at fundraising—which is always a safe card to play—and you feel obliged to do the same for any firm you talk to. The lower your costs, the more demanding the application, the more important it is to sell something to you, the writer, the false impression that you're saying more than you have. What happens in that shower?

Thanks to Dan Bloomberg, Trevor Blackwell, Garry Tan, Nikhil Pandit, Reid Hoffman, Geoff Ralston, Slava Akhmechet, Paul Buchheit, Ben Horowitz, and Greg McAdoo for the lulz.

#automatically generated text#Markov chains#Paul Graham#Python#Patrick Mooney#company#Dan#encourage#Pandit#employer#thumb#threat#aberration#laws#businesses#Reid#philosophy#failure#millions#statements

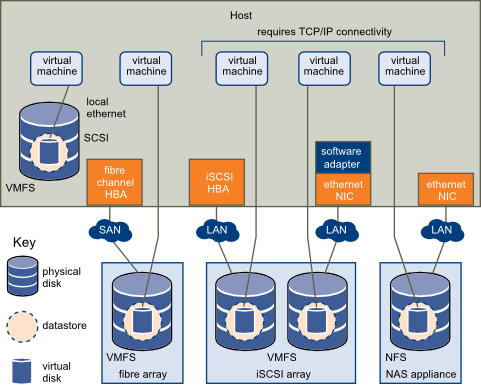

FEA & CFD Based Design and Optimization

Enteknograte uses advanced CAE software that combines the best of FEA tools and CFD solvers. Its tools include CFD codes such as Ansys Fluent and StarCCM+ for combustion and flow simulation, and FEA-based codes such as ABAQUS, AVL Excite and LS-Dyna. It also uses industry-leading fatigue simulation technology such as Simulia FE-SAFE and Ansys Ncode Design Life to calculate the fatigue life of welds and composites under vibration, crack growth and thermo-mechanical fatigue, and MSC Actran and ESI VA One for acoustics.

Enteknograte is a world leader in engineering services, with teams made up of top talent in the key engineering disciplines of Mechanical Engineering, Electrical Engineering, Manufacturing Engineering, Power Delivery Engineering and Embedded Systems. With a deep passion for learning, creating and improving how things work, our engineers combine industry-specific expertise, deep experience and unique insights to ensure we provide the right engineering services for your business.

Advanced FEA and CFD

Training: FEA & CFD software (Abaqus, Ansys, Nastran, Fluent, Siemens Star-ccm+, OpenFOAM)

Thermal Analysis: CFD and FEA

Thermal Analysis: CFD and FEA Based Simulation. Enteknograte's engineering team, efficiently using real-world transient simulation with FEA-CFD coupling if needed, with

Multiphase Flows Analysis

Multi-Phase Flows CFD Analysis. Multiphase flows involve combinations of solids, liquids and gases that interact. Computational Fluid Dynamics (CFD) is used to accurately predict the

Multiobjective Optimization

Multiobjective optimization. Multiobjective optimization involves minimizing or maximizing multiple objective functions subject to a set of constraints. Example problems include analyzing design tradeoffs, selecting optimal
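Here is a generic illustration of the trade-off idea. It is not Enteknograte's tooling or method. The sketch keeps only the Pareto-optimal designs, meaning those that no other design beats in every objective. The design data and function names are hypothetical, and all objectives are assumed to be minimized.

```python
from typing import List, Sequence, Tuple


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if design a is no worse than b in every objective and strictly
    better in at least one (all objectives are minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(designs: List[Tuple[float, ...]]) -> List[Tuple[float, ...]]:
    """Keep designs that no other design dominates (simple O(n^2) check)."""
    return [p for p in designs if not any(dominates(q, p) for q in designs)]


if __name__ == "__main__":
    # Hypothetical candidates scored as (mass in kg, peak stress in MPa).
    candidates = [(10.0, 300.0), (12.0, 250.0), (11.0, 320.0),
                  (15.0, 200.0), (13.0, 260.0)]
    print(pareto_front(candidates))
```

A design never dominates itself, because it is not strictly better than itself in any objective. For that reason the filter can safely compare each design against the whole list.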

MultiObjective Design and Optimization of TurboMachinery: Coupled CFD and FEA

MultiObjective Design and Optimization of Turbomachinery: Coupled CFD and FEA. Optimizing the simulation-driven design of turbomachinery such as compressors, turbines, pumps, blowers, turbochargers, turbopumps,

MultiBody Dynamics

Coupling of Multibody Dynamics and FEA for Real World Simulation. Advanced multibody dynamics analysis enables our engineers to simulate and test virtual prototypes of mechanical

Metal Forming Simulation: FEA Design and Optimization

Metal Forming Simulation: FEA Based Design and Optimization. FEA (Finite Element Analysis) in Metal Forming. Using advanced Metal Forming Simulation methodology and FEA tools such

Medical Device

FEA and CFD based Simulation and Design for Medical and Biomedical Applications. FEA and CFD based simulation design and analysis is playing an increasingly significant

Mathematical Simulation and Development

Mathematical Simulation and Development: CFD and FEA based Fortran, C++, Matlab and Python Programming. Calling upon our wide base of in-house capabilities covering strategic and

Materials & Chemical Processing

Materials & Chemical Processing Simulation and Design: Coupled CFD, FEA and 1D-System Modeling. Enteknograte's engineering team's CFD and FEA solutions for the Materials & Chemical

Marine and Shipbuilding Industry: FEA and CFD based Design

FEA and CFD based Design and Optimization for Marine and Shipbuilding Industry. From the design and manufacture of small recreational crafts and yachts to the

Industrial Equipment and Rotating Machinery

Industrial Equipment and Rotating Machinery FEA and CFD based Design and Optimization. Enteknograte's FEA and CFD based Simulation Design for manufacturing solution helps our customers

Hydrodynamics CFD simulation, Coupled with FEA for FSI Analysis of Marine and offshore structures

Hydrodynamics CFD simulation, Coupled with FEA for FSI Analysis of Marine and offshore structures. Hydrodynamics is a common application of CFD and a main core

Fracture and Damage Mechanics: Advanced FEA for Special Material

Fracture and Damage Simulation: Advanced constitutive equations for material behavior under special loading conditions. In materials science, fracture toughness refers to the ability of a

Fluid-Structure Interaction (FSI)

Fluid Structure Interaction (FSI). Fluid Structure Interaction (FSI) calculations allow the mutual interaction between a flowing fluid and adjacent bodies to be calculated. This is necessary since

Finite Element Simulation of Crash Test

Finite Element Simulation of Crash Test and Crashworthiness with LS-Dyna, Abaqus and PAM-CRASH. Many real-world engineering situations involve severe loads applied over very brief time

FEA Welding Simulation: Thermal-Stress Multiphysics

Finite Element Welding Simulation: RSW, FSW, Arc, Electron and Laser Beam Welding. Enteknograte engineers simulate welding with innovative CAE and virtual prototyping available in

FEA Based Composite Material Simulation and Design

FEA Based Composite Material Design and Optimization: Abaqus, Ansys, Matlab and LS-DYNA. The Finite Element Method and, more generally, Simulation Driven Design is an efficient

FEA and CFD Based Simulation and Design of Casting

Finite Element and CFD Based Simulation of Casting. Using sophisticated FEA and CFD technologies, Enteknograte engineers can predict deformations and residual stresses and can also

FEA / CFD for Aerospace: Combustion, Acoustics and Vibration

FEA and CFD Simulation for Aerospace Structures: Combustion, Acoustics, Fatigue and Vibration. The aerospace industry has become an increasingly competitive market. Suppliers require integrated

Fatigue Simulation

Finite Element Analysis of Durability and Fatigue Life: Ansys Ncode, Simulia FE-Safe. The demand for simulation of fatigue and durability is especially strong. Durability often

Energy and Power

FEA and CFD based Simulation Design to Improve Productivity and Enhance Safety in the Energy and Power Industry. The energy industry faces a number of stringent challenges

Combustion Simulation

CFD Simulation of Reacting Flows and Combustion: Engine and Gas Turbine. Knowledge of the underlying combustion chemistry and physics enables designers of gas turbines, boilers

Civil Engineering

CFD and FEA in Civil Engineering: Earthquake, Tunnel, Dam and Geotechnical Multiphysics Simulation. Enteknograte offers a wide range of consulting services based on many years

CFD Thermal Analysis

CFD Heat Transfer Analysis: CHT, one-way FSI and two-way thermo-mechanical FSI. The management of thermal loads and heat transfer is a critical factor in

CFD and FEA Multiphysics Simulation

Understand all the thermal and fluid elements at work in your next project. Allow our experienced group of engineers to couple TAITherm’s transient thermal analysis

Automotive Engineering

Automotive Engineering: Powertrain Component Development, NVH, Combustion and Thermal simulation. Simulation and analysis of complex systems is at the heart of the design process and

Aerodynamics Simulation: Coupling CFD with MBD and FEA

Aerodynamics Simulation: Coupling CFD with MBD, FEA and 1D-System Simulation. Aerodynamics is a common application of CFD and one of the Enteknograte team's core areas

Additive Manufacturing process FEA simulation

Additive Manufacturing: FEA Based Design and Optimization with Abaqus, ANSYS and MSC Nastran. Additive manufacturing, also known as 3D printing, is a method of manufacturing

Acoustics and Vibration: FEA/CFD for VibroAcoustics Analysis

Acoustics and Vibration: FEA and CFD for VibroAcoustics and NVH Analysis. Noise and vibration analysis is becoming increasingly important in virtually every industry. The need

About Enteknograte

Advanced FEA & CFD. Enteknograte: where scientific computing meets complicated industrial needs. Enteknograte is a virtual laboratory supporting Simulation-Based Design and Engineering

Lupine Publishers | Model Development for Life Cycle Assessment of Rice Yellow Stem Borer under Rising Temperature Scenarios

Lupine Publishers | Agriculture Open Access Journal

A simple model was developed using the Fortran Simulation Translator (FST) to study how increased temperature affects the duration of the various life cycle phases of yellow stem borer (YSB) in the Bangladesh environment. The model was primarily based on the Growing Degree Day concept and also included cardinal temperatures for specific growing stages of YSB. After successful calibration and validation, the model was used for a climate change impact analysis of the YSB growing cycle. Only temperature rise was considered in the present study. Temperature increases of 1, 2, 3 and 4 °C were compared with the Control (no temperature rise), using historic weather for representative locations in eight Divisions of Bangladesh. The life cycle of YSB responded differently across locations under the temperature rise treatments. In general, the growing cycle hastened with rising temperature. Averaged over locations, the life cycle of YSB is likely to be reduced by about 2 days for every degree Celsius rise in temperature. This means there will be 2.0-2.5 additional generations of YSB in the pre-monsoon season and about 2.9-3.2 in the wet season of Bangladesh. The phenology module developed here needs to be included in the subsequent design of a population dynamics model for YSB.

Keywords: Model; Growing degree days; Yellow stem borer; Life cycle assessment; Temperature rise

Introduction

Yellow stem borer (YSB) is the most destructive and widely distributed insect pest of rice. Depending on the time of infestation, it causes dead heart or white head. It significantly reduces rice yields, by 5-10% and up to 60% under localized outbreak conditions [1]. It can grow in places with temperatures above 12 °C and annual rainfall of around 1000 mm. Generally, temperature and high relative humidity (RH) in the evening favor stem borer growth and development [2]. The female moth oviposits from 19:00 to 22:00 h in summer and from 18:00 to 20:00 h in spring and autumn. She deposits one egg mass per night, for up to five nights after emergence. The optimum temperature for maximum egg deposition is 29 °C at 90% RH. The optimum temperature for egg hatching is 24-29 °C with 90-100% RH. Larvae die at 35 °C, and hatching is severely reduced when RH falls below 70% [1]. Larvae cannot molt at 12 °C or below, and they die. Last-instar larvae can survive unfavorable conditions in diapause, which is broken by rainfall or flooding. In multiple rice cropping, no diapause takes place. The pupal period can last 9-12 days, and the threshold temperature for its development is 15-16 °C.

The number of generations in a year depends on temperature, rainfall and the availability of host plants [1]. The pest generally occurs most in the wet season [3]. Because there are many stem borer species, the average life cycle of rice stem borers varies from 42 to 83 days [4], depending on growing conditions. This implies that heterogeneous populations can be found in the same rice field. Manikandan [5] also reported that the development time of the different phases of YSB decreases at higher temperature. Populations are therefore likely to increase in future at early growth stages of the rice crop. However, no such data are available for Bangladesh. Given the acute problem of YSB in Bangladesh, the present study was undertaken to develop a simple phenology-based model of the YSB life cycle in the two major rice growing seasons. The model was then used to evaluate the effect of rising temperature on the growth cycle of rice yellow stem borer at representative locations in eight Divisions of Bangladesh.

Materials and Methods

Model description

The model for assessing the phenology of yellow stem borer was written in the Fortran Simulation Translator (FST), using the FSTWin 4.12 compiler [6]. This model will later be used to develop a population dynamics model for YSB in the rice-based cropping systems prevalent in Bangladesh. The model uses the growing degree days (GDD) concept, with a base temperature of 15 °C. Below this temperature, no growth or development takes place in the YSB life cycle. Each day, the average temperature (mean of the maximum and minimum temperatures) minus the base temperature is integrated over the growing cycle. A development stage is reached when this accumulated value crosses the critical value for that stage.
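The GDD bookkeeping described above can be sketched in Python. This is a minimal illustration, not the authors' FST code. The names `accumulate_gdd` and `first_day_above` are hypothetical. Days colder than the base temperature are assumed to contribute zero, as the model's DTEFF term does with AMAX1. The humidity correction introduced later is ignored.

```python
from typing import Iterable, List, Optional

TBASE = 15.0  # base temperature (deg C) from the model description


def accumulate_gdd(tmax: Iterable[float], tmin: Iterable[float],
                   tbase: float = TBASE) -> List[float]:
    """Running GDD total after each day.

    Daily contribution = max(0, mean(tmax, tmin) - tbase); the clipping at zero
    is an assumption mirroring the model's DTEFF term.
    """
    total = 0.0
    running: List[float] = []
    for hi, lo in zip(tmax, tmin):
        total += max(0.0, 0.5 * (hi + lo) - tbase)
        running.append(total)
    return running


def first_day_above(running_gdd: List[float], threshold: float) -> Optional[int]:
    """1-based day on which accumulated GDD first exceeds the stage threshold."""
    for day, value in enumerate(running_gdd, start=1):
        if value > threshold:
            return day
    return None  # threshold not reached within the supplied weather


if __name__ == "__main__":
    # Hypothetical weather: 33/27 deg C every day -> 15 degree-days per day.
    gdd = accumulate_gdd([33.0] * 20, [27.0] * 20)
    print(first_day_above(gdd, 119.7))  # egg-hatch requirement (EGHATCH)
```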

In the INITIAL section, GDD is set to zero. This section is executed only once per model run:

INCON GDDI, initial value of GDD = 0.

In the DYNAMIC section, the program is executed daily until a FINISH condition is met.

DAS, days after start of simulation = INTGRL (ZERO, RDAS)

PARAM RDAS, day increment rate = 1.

Development stage (DVS) can be expressed on a 0-1 scale. In the present study it is not used to identify development stages, but it will be used in the further design of the population dynamics model.

DVS, development stage = INTGRL (ZERO, DVR)

DVR, rate of development stage increase = AFGEN (DVRT, DAVTMP), where AFGEN (Arbitrary Function Generator) is a standard FST function that interpolates linearly in the table DVRT

Because males live for a shorter time than females, the development rate table is defined separately for each sex:

*FOR FEMALE

FUNCTION DVRT = -10.,0., 0.,0.,15.,0.,35.,0.03325,40.,0.0415

*FOR MALE

FUNCTION DVRT = -10.,0., 0.,0.,15.,0.,35.,0.0342,40.,0.0426
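AFGEN reads a table of (x, y) pairs and interpolates linearly between them. The sketch below mimics that behavior for the DVRT tables above. The helper name `afgen` is ours. Holding the end values constant outside the table range is an assumption about FST's out-of-range behavior, not something stated here.

```python
from bisect import bisect_right
from typing import Sequence


def afgen(table: Sequence[float], x: float) -> float:
    """Linear interpolation in a flat (x1, y1, x2, y2, ...) table, x ascending.

    Assumption: outside the tabulated range the first/last y value is returned.
    """
    xs, ys = table[0::2], table[1::2]
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect_right(xs, x)  # now xs[i - 1] <= x < xs[i]
    x0, x1, y0, y1 = xs[i - 1], xs[i], ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


# DVRT tables from the listing: (DAVTMP in deg C, DVR per day) pairs.
DVRT_FEMALE = (-10., 0., 0., 0., 15., 0., 35., 0.03325, 40., 0.0415)
DVRT_MALE = (-10., 0., 0., 0., 15., 0., 35., 0.0342, 40., 0.0426)

if __name__ == "__main__":
    for temp in (10.0, 25.0, 38.0):
        print(temp, afgen(DVRT_FEMALE, temp), afgen(DVRT_MALE, temp))
```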

The base temperature (°C), below which development does not take place, is:

PARAM TBASE=15.

Daily weather data are read from an external file in the standard FST weather format:

WEATHER WTRDIR='c:\WEATHER\';CNTR=' GAZI';ISTN=1;IYEAR= 200

The climatic variables are used as follows:

RDD is solar radiation in J/m2/day

DTR = RDD

TMMX is the daily maximum temperature and TMMN is the daily minimum temperature. COTEMP is the climate change switch that adds a temperature rise, used to evaluate the impact of rising temperature on the phenological development of YSB.

DTMAX = TMMX+COTEMP

DTMIN = TMMN+COTEMP

DAVTMP, average temperature (derived parameter) = 0.5* (DTMAX + DTMIN)

DDTMP, day time average temperature, derived parameter = DTMAX - 0.25* (DTMAX-DTMIN)

COTEMP is the temperature rise/fall switch:

PARAM COTEMP = 0.

DTEFF, effective temperature after deducting the base temperature = AMAX1(0., DAVTMP-TBASE)
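The daily temperature derivations above, including the COTEMP shift, translate directly into code. This is a minimal sketch. `derive_temps` and `DailyTemps` are illustrative names, and TBASE = 15 °C is taken from the model.

```python
from typing import NamedTuple


class DailyTemps(NamedTuple):
    dtmax: float   # DTMAX: shifted daily maximum (deg C)
    dtmin: float   # DTMIN: shifted daily minimum (deg C)
    davtmp: float  # DAVTMP: daily mean temperature
    ddtmp: float   # DDTMP: daytime mean temperature
    dteff: float   # DTEFF: mean temperature above TBASE, never negative


def derive_temps(tmmx: float, tmmn: float, cotemp: float = 0.0,
                 tbase: float = 15.0) -> DailyTemps:
    """Apply the COTEMP shift and derive the model's temperature variables."""
    dtmax = tmmx + cotemp
    dtmin = tmmn + cotemp
    davtmp = 0.5 * (dtmax + dtmin)
    ddtmp = dtmax - 0.25 * (dtmax - dtmin)
    dteff = max(0.0, davtmp - tbase)  # AMAX1(0., DAVTMP - TBASE)
    return DailyTemps(dtmax, dtmin, davtmp, ddtmp, dteff)


if __name__ == "__main__":
    # Hypothetical day: 31/26 deg C with a 2 deg C rise (COTEMP = 2.)
    print(derive_temps(31.0, 26.0, cotemp=2.0))
```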

SVP is the saturated vapor pressure, derived from temperature. The expression 6.11*EXP(17.4*T/(T+239.1)) gives mbar, and the division by 10 converts the result to kPa:

SVP = 6.11*EXP (17.4*DAVTMP/(DAVTMP+239.1))/10.

VP is the actual vapor pressure, an input read from the weather file. It must be in the same unit as SVP (kPa) for the RH calculation below to be correct:

AVP = VP

RH is relative humidity (%), derived from the vapor pressures as follows:

RH = AVP/SVP*100.
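The vapor-pressure and RH calculations can be sketched as follows. The sketch assumes that SVP and the weather-file VP are both in kPa, which is what the division by 10 implies. The function names are hypothetical.

```python
import math


def svp_kpa(davtmp: float) -> float:
    """Saturated vapor pressure from mean temperature (deg C).

    6.11 * exp(17.4 T / (T + 239.1)) is in mbar; dividing by 10 gives kPa.
    """
    return 6.11 * math.exp(17.4 * davtmp / (davtmp + 239.1)) / 10.0


def relative_humidity(avp_kpa: float, davtmp: float) -> float:
    """RH (%) = AVP / SVP * 100; AVP is assumed to be in kPa like SVP."""
    return avp_kpa / svp_kpa(davtmp) * 100.0


if __name__ == "__main__":
    # Hypothetical day: mean 30 deg C, actual vapor pressure 3.4 kPa.
    print(round(svp_kpa(30.0), 3), round(relative_humidity(3.4, 30.0), 1))
```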

In the present study, only temperature and relative humidity effects are used to compute the phenological stages of the YSB life cycle. The other climatic elements are read as part of the FST model because they will be used later in deriving the population dynamics model.

Since the development stages of YSB are also influenced by relative humidity, a correction factor is introduced to include the effect of humidity:

DAVTMPCF, RH induced temperature correction = DAVTMP*CFRH

TMPEFF=DAVTMPCF-TBASE

CFRH, the relative humidity correction factor applied to temperature, takes different values during the hatching (CFRHH) and larval (CFRHL) stages. It is computed as follows:

CFRH, correction factor for RH=INSW (GDD-EGHATCH, CFRHH, DUM11)

DUM11=INSW (GDD-979.9,CFRHL,1.)

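where INSW is an FST function: INSW (X, Y1, Y2) returns Y1 if X < 0 and Y2 otherwise. Thus CFRH equals CFRHH while GDD < EGHATCH, and DUM11 otherwise. DUM11 in turn equals CFRHL while GDD < 979.9, and 1 otherwise.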

CFRHH=AFGEN (CFRHHT, RH)

CFRHL=AFGEN (CFRHLT, RH)

FUNCTION CFRHHT=50.,0.9,60.,0.9,75.,1.,90.,1.1

FUNCTION CFRHLT=50.,0.95,60.,0.95,75.,1.,90.,1.05
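The nested INSW switches for CFRH can be mirrored in Python. The sketch assumes that INSW(X, Y1, Y2) returns Y1 when X < 0 and Y2 otherwise. It reuses a compact AFGEN-style interpolator that holds end values outside the table range, which is an assumption. It uses the thresholds EGHATCH = 119.7 and 979.9 from the listing. The function names are ours.

```python
from bisect import bisect_right
from typing import Sequence

EGHATCH = 119.7  # GDD requirement for egg hatch
CFRHHT = (50., 0.9, 60., 0.9, 75., 1., 90., 1.1)    # hatching stage: (RH %, factor)
CFRHLT = (50., 0.95, 60., 0.95, 75., 1., 90., 1.05)  # larval stage: (RH %, factor)


def afgen(table: Sequence[float], x: float) -> float:
    """Linear table interpolation; end values held outside the range (assumed)."""
    xs, ys = table[0::2], table[1::2]
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect_right(xs, x)
    return ys[i - 1] + (ys[i] - ys[i - 1]) * (x - xs[i - 1]) / (xs[i] - xs[i - 1])


def insw(x: float, y1: float, y2: float) -> float:
    """INSW switch: y1 if x < 0, otherwise y2."""
    return y1 if x < 0 else y2


def cfrh(gdd: float, rh: float) -> float:
    """RH correction factor applied to DAVTMP (DAVTMPCF = DAVTMP * CFRH)."""
    dum11 = insw(gdd - 979.9, afgen(CFRHLT, rh), 1.0)
    return insw(gdd - EGHATCH, afgen(CFRHHT, rh), dum11)


if __name__ == "__main__":
    for g in (50.0, 200.0, 1000.0):
        print(g, cfrh(g, 82.5))
```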

WDS, wind speed in m/sec = WN

RRAIN, daily rainfall in mm = RAIN

TRAIN, total rainfall in mm = INTGRL (ZERO, RRAIN)

GDD, the growing degree days in degree Celsius-days, is calculated as:

GDD=INTGRL (GDDI, TMPEFF)

The growing degree days required for the various stages were compiled from the published literature and used in developing the model, as follows:

EGHATCH is the thermal degree days requirement for egg hatch:

PARAM EGHATCH=119.7

INSTAR1 is the thermal degree days for the end of the first instar stage

PARAM INSTAR1=224.9

INSTAR2 is thermal degree days for end of second instar stage

PARAM INSTAR2=317.0

INSTAR3 is thermal degree days for end of third instar stage

PARAM INSTAR3=438.7

INSTAR4 is thermal degree days for end of fourth instar (larva) stage

PARAM INSTAR4=550.3

PUPA is the thermal degree days for the end of the pupa stage

PARAM PUPA=662.452

ADULT (adult longevity) is the thermal degree days for the end of adult life. It differs by sex (male = 741.484, female = 773.538) and is selected by the parameter SEX:

ADULT=INSW (SEX-1.05, FEMALE, MALE)

SEX = 1. for female and 2. for male

PARAM SEX=2.

PARAM MALE, growing degree days for male = 741.484

PARAM FEMALE, growing degree day for female = 773.538
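Given the thresholds above, the stage reached at a given accumulated GDD can be looked up as shown below. This is an illustrative sketch, and names such as `stage_at` are hypothetical. A GDD exactly equal to a threshold is treated as still inside that stage, which matches the INSW (threshold-GDD, 0., 1.) counters used for the stage durations.

```python
# Stage-end thresholds in deg C-days, copied from the PARAM statements above.
STAGE_ENDS = [
    ("egg", 119.7),        # EGHATCH
    ("instar 1", 224.9),   # INSTAR1
    ("instar 2", 317.0),   # INSTAR2
    ("instar 3", 438.7),   # INSTAR3
    ("instar 4", 550.3),   # INSTAR4
    ("pupa", 662.452),     # PUPA
]
MALE, FEMALE = 741.484, 773.538


def adult_end(sex: float) -> float:
    """ADULT = INSW(SEX - 1.05, FEMALE, MALE): SEX = 1 -> female, 2 -> male."""
    return FEMALE if sex - 1.05 < 0 else MALE


def stage_at(gdd: float, sex: float = 2.0) -> str:
    """Hypothetical helper: name of the stage reached at accumulated GDD."""
    for name, end in STAGE_ENDS:
        if gdd <= end:
            return name
    return "adult" if gdd <= adult_end(sex) else "life cycle complete"


if __name__ == "__main__":
    for g in (100.0, 300.0, 700.0, 760.0):
        print(g, stage_at(g, sex=2.0), stage_at(g, sex=1.0))
```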

The critical temperature above which egg hatching stops is defined as:

DEATH=REAAND (EGHATCH-GDD, DTMAX-40.)

HATMIN, the minimum temperature below which hatching stops, is defined as:

PARAM HATMIN=15.

DEATH1=REAAND (EGHATCH-GDD, HATMIN-DTMIN)

LATMIN, the minimum temperature below which larval development stops, is:

PARAM LATMIN=12.

DEATH2=INSW (GDD-EGHATCH,0.,REAAND(INSTAR4-GDD,LATMIN- DTMIN))

REAAND is an FST function that returns 1 when both arguments are greater than zero, and 0 otherwise.
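The three DEATH switches combine REAAND and INSW. The sketch below assumes that REAAND returns 1 only when both arguments are strictly positive, as stated above. It also assumes that INSW returns its second argument when the first is negative. The function name `death_flags` is ours. In the listing, only DEATH appears in a FINISH condition.

```python
from typing import Tuple

EGHATCH, INSTAR4 = 119.7, 550.3  # stage-end GDD thresholds
HATMIN, LATMIN = 15.0, 12.0      # minimum temperatures for hatching / larvae


def reaand(x1: float, x2: float) -> float:
    """REAAND: 1 if both arguments are greater than zero, else 0."""
    return 1.0 if x1 > 0 and x2 > 0 else 0.0


def insw(x: float, y1: float, y2: float) -> float:
    """INSW: y1 if x < 0, otherwise y2 (assumed FST semantics)."""
    return y1 if x < 0 else y2


def death_flags(gdd: float, dtmax: float, dtmin: float) -> Tuple[float, float, float]:
    death = reaand(EGHATCH - gdd, dtmax - 40.0)     # egg stage and DTMAX > 40
    death1 = reaand(EGHATCH - gdd, HATMIN - dtmin)  # egg stage and DTMIN < HATMIN
    # Larval stages only: after hatch, before INSTAR4, and DTMIN < LATMIN.
    death2 = insw(gdd - EGHATCH, 0.0, reaand(INSTAR4 - gdd, LATMIN - dtmin))
    return death, death1, death2


if __name__ == "__main__":
    print(death_flags(50.0, 41.0, 25.0), death_flags(300.0, 30.0, 11.0))
```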

The duration of each stage is computed as follows:

EGHATCHD is the egg hatch duration in days:

EGHATCHD=INTGRL (ZERO, DUM1)

DUM1=INSW (EGHATCH-GDD,0.,1.)

INSTAR1D is INSTAR1 Termination Day

INSTAR1D=INTGRL (ZERO, DUM2)

DUM2=INSW (INSTAR1-GDD, 0.,1.)

INSTAR2D is INSTAR2 Termination Day

INSTAR2D=INTGRL (ZERO, DUM3)

DUM3=INSW (INSTAR2-GDD, 0.,1.)

INSTAR3D is INSTAR3 Termination Day

INSTAR3D=INTGRL (ZERO, DUM4)

DUM4=INSW (INSTAR3-GDD, 0.,1.)

INSTAR4D is INSTAR4 Termination Day

INSTAR4D=INTGRL (ZERO, DUM5)

DUM5=INSW (INSTAR4-GDD, 0.,1.)

PUPAD is PUPA Stage Termination Day

PUPAD=INTGRL (ZERO, DUM6)

DUM6=INSW (PUPA-GDD,0.,1.)

ADULTD is Adult Life End Day

ADULTD=INTGRL (ZERO, DUM7)

DUM7=INSW (ADULT-GDD, 0.,1.)
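Each duration integrates a 0/1 switch with a daily time step, so it counts the days on which GDD had not yet passed the stage threshold. Each value is therefore measured in days from the start of the run. The sketch below follows that reading. The `stage_end_days` helper is hypothetical, GDD values are supplied one per day, and the male adult threshold (SEX = 2) is used.

```python
from typing import Dict, Mapping, Sequence

# Stage-end GDD thresholds from the PARAM statements (male adult, SEX = 2).
THRESHOLDS = {
    "EGHATCHD": 119.7, "INSTAR1D": 224.9, "INSTAR2D": 317.0,
    "INSTAR3D": 438.7, "INSTAR4D": 550.3, "PUPAD": 662.452, "ADULTD": 741.484,
}


def stage_end_days(gdd_by_day: Sequence[float],
                   thresholds: Mapping[str, float] = THRESHOLDS) -> Dict[str, float]:
    """Mimic X = INTGRL(ZERO, INSW(threshold - GDD, 0., 1.)) with DELT = 1.

    The switch is 1 while threshold - GDD >= 0, so integrating it day by day
    counts the days on which GDD had not yet passed the threshold.
    """
    return {name: float(sum(1 for g in gdd_by_day if thr - g >= 0))
            for name, thr in thresholds.items()}


if __name__ == "__main__":
    # Hypothetical GDD at the start of each day, rising by 15 per day from 0.
    series = [15.0 * d for d in range(60)]
    print(stage_end_days(series))
```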

The run stops when either of these conditions is met:

FINISH DEATH > 0.95

FINISH GDD> 775.

The integration settings for the run are:

TIMER STTIME = 360., FINTIM = 600., DELT = 1., PRDEL = 1.

TRANSLATION_GENERAL DRIVER='EUDRIV'

PRINT DAY, DOY, DVS, RH, AVP, SVP, WDS, TRAIN, GDD, DAVTMP, DAVTMPCF, ADULTD, PUPAD
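With DRIVER='EUDRIV' and DELT = 1, states are advanced by simple rectangular (Euler) integration. The loop below is a simplified, hypothetical sketch of that scheme for GDD alone. It assumes the humidity correction CFRH = 1 and checks the FINISH condition GDD > 775 before each step. It stops after FINTIM - STTIME = 240 days. The text does not describe the exact order in which FST evaluates FINISH conditions, so treat that detail as an assumption.

```python
from typing import Sequence, Tuple


def run_to_finish(davtmp: Sequence[float], sttime: float = 360.0,
                  fintim: float = 600.0, delt: float = 1.0,
                  tbase: float = 15.0, gdd_finish: float = 775.0) -> Tuple[float, float]:
    """Simplified Euler (rectangular) run of the GDD state only.

    Assumptions: CFRH = 1, the FINISH test GDD > 775 is applied before each
    step, and davtmp holds one mean temperature per time step from STTIME on.
    Returns (TIME at stop, GDD at stop).
    """
    time, gdd, step = sttime, 0.0, 0
    while time < fintim and step < len(davtmp):
        if gdd > gdd_finish:                   # FINISH GDD > 775.
            break
        rate = max(0.0, davtmp[step] - tbase)  # daily GDD rate
        gdd += rate * delt                     # state(t + DELT) = state(t) + rate * DELT
        time += delt
        step += 1
    return time, gdd


if __name__ == "__main__":
    # Hypothetical constant mean of 30 deg C -> 15 degree-days per step.
    print(run_to_finish([30.0] * 300))
```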

In the TERMINAL section, the final values at the end of the run can be written to an external file:

CALL SUBWRI (TIME, COTEMP, EGHATCHD, INSTAR1D, INSTAR2D, INSTAR3D, INSTAR4D, PUPAD, ADULTD)

END

Reruns for evaluating the impact of temperature rise on the development stages of YSB are specified as follows:

PARAM COTEMP=1.

END

PARAM COTEMP=2.

END

STOP
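The reruns repeat the model with different COTEMP values; the listing shows 1 and 2, and the study used 1-4 °C. The sketch below performs a similar sweep for a hypothetical constant daily mean of 28 °C. It counts days until GDD exceeds the male adult threshold (741.484, since SEX = 2). It ignores weather variation and the humidity correction, so its numbers are illustrative only and are not the study's results.

```python
def life_cycle_days(mean_temp: float, cotemp: float, tbase: float = 15.0,
                    adult_end: float = 741.484) -> int:
    """Days until GDD exceeds ADULT (male) at a constant daily mean temperature.

    Hypothetical simplification: constant weather and CFRH = 1.
    """
    rate = max(0.0, mean_temp + cotemp - tbase)  # daily GDD with the COTEMP shift
    if rate == 0.0:
        raise ValueError("no development at or below the base temperature")
    gdd, days = 0.0, 0
    while gdd <= adult_end:
        gdd += rate
        days += 1
    return days


if __name__ == "__main__":
    for cotemp in (0.0, 1.0, 2.0, 3.0, 4.0):  # Control, then the rerun values
        print(f"COTEMP={cotemp:.0f}: {life_cycle_days(28.0, cotemp)} days")
```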

Experimental

The growing degree days for attainment of the various stages in the YSB life cycle were collated from the published literature for this region. The model was calibrated with 2003 weather data for Bhola district of Bangladesh against the findings of Manikandan [5] at 30 °C. After calibration, the model was applied to climate change scenarios, considering only temperature rise in the present study. Eight divisions of Bangladesh were selected: Dhaka, Mymensingh, Rajshahi, Rangpur, Sylhet, Khulna, Chittagong and Barisal. One representative location was chosen in each division, and 35 years of historic weather data were used to run the model. The duration of each development stage was computed and compared among the temperature rise conditions. In the present study, daily temperature rises of 1-4 °C were considered for the two growing seasons of Bangladesh. These are the Aus rice season, i.e. pre-monsoon (April to June), and the Aman rice season, i.e. monsoon (late June to November).

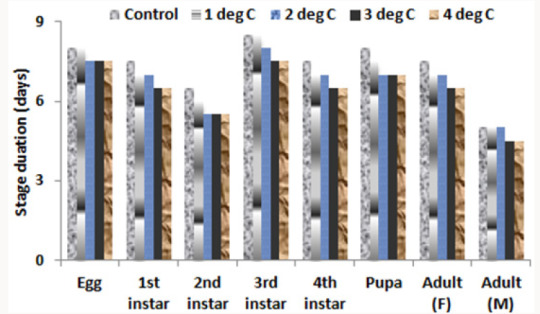

Figure 1: Days required for completion of growth stages of rice yellow stem borer with temperature increased by 1, 2, 3 and 4 °C in the growing environment of Bhola, Bangladesh.

Results and Discussion

During the test period, the minimum temperature averaged 26 ± 0.115 °C and the maximum temperature about 31 ± 0.32 °C, with an average temperature of around 30 °C. These data were used for calibration and validation of the model. The model performed satisfactorily, with good agreement between observed and simulated results (Table 1). Depending on the growth stage, the percent deviations were within the limits of model error. Applying the model to specific years in Bhola district showed that the duration of the growth stages of rice yellow stem borer (YSB) decreased by about 1.76 days per degree rise in temperature (Figure 1; Y = -1.7X + 54.6; R² = 0.932). This indicates that YSB is likely to infest more rice plants in future under increased temperature. Ramya [7] also reported that YSB would likely develop faster and oviposit earlier, leading to greater population build-up than expected. There are reports that a temperature increase of 2 °C may cause one to five additional insect life cycles in a season [8].

Table 1: Validation of various growth phases (days) of rice yellow stem borer.

Results from the representative locations in the eight Divisions of Bangladesh showed that the growth stages of YSB varied with season (Table 2). In the Aus pre-monsoon season, the life cycle of YSB would likely be completed within 47-53 days, depending on location and on the temperature rise of 1-4 °C. Similarly, in the Aman wet season, it would take about 45-50 days for a temperature rise of 1-4 °C. Under the Control (no temperature rise), however, it requires around 52 days in T. Aman and 55 days in Aus. Our findings indicate that the YSB growth cycle is likely to shorten by 2.04 days per degree rise in temperature in the Aus season and by 1.70 days in the T. Aman season (Figure 2). Manikandan [5] reported similar results. Generally, insect population build-up depends on favorable weather conditions and the availability of host plants, so peak build-ups will rise and fall within a cropping season [9]. Model results need to be adopted with caution. Even so, they clearly show that YSB infestation would increase under climate change, which may reduce yield if proper management is not applied at the right time [10].

Figure 2: Total life cycle duration of yellow stem borer as influenced by temperature rise during the Aus and T. Aman seasons (averaged over eight Divisions of Bangladesh).

Table 2: Developmental phases (in days) of rice yellow stem borer as influenced by temperature rise in different growing seasons.

Conclusion

Yellow stem borer is a major concern for rice in Bangladesh. Dead hearts and white heads caused by YSB significantly reduce the growth and yield of rice, especially in the Aus (pre-monsoon) and T. Aman (monsoon) seasons. There is a need to understand the phenology (life cycle) and population dynamics of YSB in the growing environments of Bangladesh. In the present study, a simple model written in the Fortran Simulation Translator (FST) was developed to assess the life cycle of YSB. The model was primarily based on the growing degree days concept and also considered cardinal temperatures for specific phenological development stages of YSB. The model was successfully validated for the growing environment of Bhola district of Bangladesh. It was then used to assess the impact of rising temperature on the YSB life cycle at representative locations in eight Divisions of Bangladesh. The response varied spatially and seasonally. The life cycle hastened as temperature rose by 1-4 °C. In the near future, we plan to develop a population dynamics model for YSB and link it with a rice growth model to evaluate the yield reductions associated with YSB infestations.

https://lupinepublishers.com/agriculture-journal/pdf/CIACR.MS.ID.000144.pdf

For more Agriculture Open Access Journal articles Please Click Here: https://www.lupinepublishers.com/agriculture-journal/


FORTRAN program which calculates up to six decimal places of 1+1/3+1/5+-...


Introduction to the framework

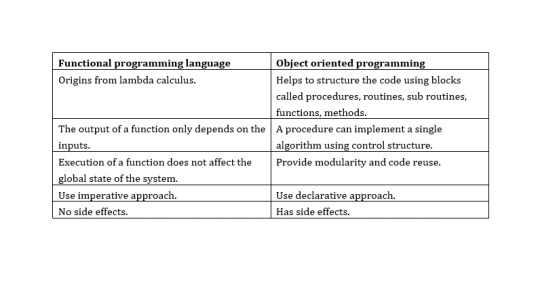

Programming paradigms

Over time, different ways of writing code in computer languages were introduced. A programming paradigm is a way to classify programming languages based on their features. For example:

Functional programming

Object-oriented programming

Some computer languages support multiple paradigms. Programming languages can be divided into two kinds: non-structured and structured. Structured programming languages fall into two categories: block-structured (functional) programming and event-driven programming. The characteristics of a non-structured programming language are:

The earliest programming paradigm.

A program is a flat series of statements.

Flow control with a GO TO statement.

Programs become complex as the number of lines increases; examples include BASIC, FORTRAN and COBOL.

Programs are often considered as theories of a formal logic, and computations as deductions in that logical space.

Non-structured programming may greatly simplify writing parallel programs. The characteristics of a structured programming language are:

A programming paradigm that uses statements that change a program’s state.

Structured programming focuses on describing how a program operates.

Just as the imperative mood in natural language expresses commands, an imperative program consists of commands for the computer to perform.

Functional programming and object-oriented programming differ in many ways.

Lambda calculus is a formal system in mathematical logic for expressing computation based on function abstraction and application, using variable binding and substitution. A lambda expression is an anonymous function that can be used to create delegates or expression tree types. With lambda expressions you can write local functions that can be passed as arguments or returned as the value of function calls, and a lambda expression is the most convenient way to create such a delegate. Here is an example of a simple lambda expression that defines the “plus one” function:

λx.x+1
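Python has no delegates or expression trees (those terms come from C#), but its `lambda` expresses the same idea of an anonymous function. A minimal sketch; `apply_twice` and `make_adder` are hypothetical helper names chosen for illustration:

```python
# The lambda-calculus term λx.x+1 written as a Python lambda.
plus_one = lambda x: x + 1


def apply_twice(f, value):
    """Take a function as an argument and apply it two times."""
    return f(f(value))


def make_adder(n):
    """Return a new function (a closure) as the value of a call."""
    return lambda x: x + n


print(plus_one(41))              # 42
print(apply_twice(plus_one, 5))  # 7
print(make_adder(10)(5))         # 15
```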

No side effects: in computer science, an operation, function or expression is said to have a side effect if it modifies some state variable value(s) outside its local environment, that is, if it has an observable effect besides returning a value to the invoker of the operation. Referential transparency is an oft-touted property of functional languages that makes it easier to reason about the behavior of programs.
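A minimal Python illustration of the difference; `counter`, `impure_increment` and `pure_increment` are hypothetical names chosen for the example:

```python
counter = 0  # state that lives outside any function


def impure_increment(x):
    """Has a side effect: it modifies the global 'counter'."""
    global counter
    counter += 1
    return x + counter


def pure_increment(x):
    """No side effect: the result depends only on the argument."""
    return x + 1


print(impure_increment(1), impure_increment(1))  # 2 3  (same input, different outputs)
print(pure_increment(1), pure_increment(1))      # 2 2
```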

Key features of object-oriented programming

The major features of object-oriented programming are:

Encapsulation - Encapsulation is one of the basic concepts in object-oriented programming. It describes the idea of bundling data and the methods that work on that data within one entity.

Inheritance - Inheritance is one of the basic concepts of object-oriented programming. It is a mechanism by which one class can be derived from another, sharing a set of its characteristics and resources.

Polymorphism - Polymorphism is an object-oriented programming concept that refers to the ability of a variable, function, or object to take several forms.

Encapsulation also means including inside a program object all the resources the object needs to function: basically, the methods and the data.

These features refer to the creation of self-contained modules that bind processing functions to the data. These user-defined data types are called “classes”, and one instance of a class is an “object”.

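A minimal Python sketch of a class that bundles data with the methods that use it. `Account` is a hypothetical example; note that Python enforces encapsulation only by convention (a leading underscore) plus read-only properties, unlike the `private` keyword of Java or C++:

```python
class Account:
    """A class bundles data (the balance) with the methods that work on it."""

    def __init__(self, owner, balance=0):
        self.owner = owner
        self._balance = balance  # leading underscore: internal by convention

    def deposit(self, amount):
        """Only valid changes reach the internal state."""
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self._balance += amount

    @property
    def balance(self):
        """Read-only access to the internal state."""
        return self._balance


acct = Account("alice", 100)  # 'acct' is an object, an instance of Account
acct.deposit(50)
print(acct.balance)  # 150
```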

How is event-driven programming different from other programming paradigms?

Event-driven programming focuses on events triggered outside the system, such as:

User events

Schedulers/timers

Sensors, messages, hardware interrupts.

It is mostly associated with GUI systems, where users interact with GUI elements. Event listeners act when events are triggered (fired). An internal event loop identifies the events and then calls the necessary handler.
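The listener-plus-event-loop idea can be sketched in a few lines of Python. `EventLoop`, `on`, `fire` and `run` are hypothetical names, not a real GUI toolkit's API (toolkits such as Tkinter supply their own main loop); the sketch only shows how firing an event queues it and how the loop later calls the registered handlers:

```python
from collections import deque


class EventLoop:
    """Minimal event loop: listeners register for event names, and the loop
    dispatches queued events to them in arrival order."""

    def __init__(self):
        self.listeners = {}   # event name -> list of handler functions
        self.queue = deque()  # events waiting to be processed

    def on(self, name, handler):
        self.listeners.setdefault(name, []).append(handler)

    def fire(self, name, payload=None):
        self.queue.append((name, payload))  # triggering only enqueues the event

    def run(self):
        while self.queue:
            name, payload = self.queue.popleft()
            for handler in self.listeners.get(name, []):
                handler(payload)


log = []
loop = EventLoop()
loop.on("click", lambda p: log.append(f"clicked {p}"))
loop.on("key", lambda p: log.append(f"pressed {p}"))
loop.fire("click", "OK button")
loop.fire("key", "Enter")
loop.fire("scroll")  # no listener registered, so it is ignored
loop.run()
print(log)  # ['clicked OK button', 'pressed Enter']
```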

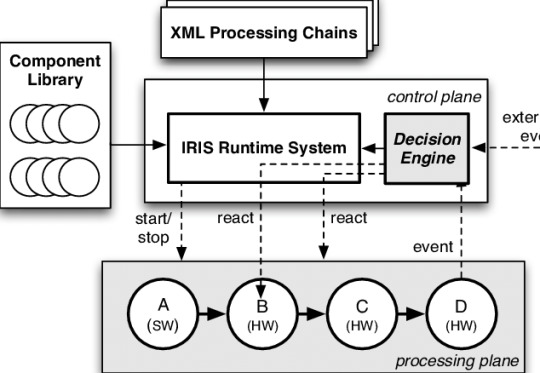

Software Run-time Architecture

A software architecture describes the design of a software system in terms of components and connectors. Architectural models can also be used at run time to enable architecture recovery and architecture adaptation. Languages can be classified according to the way they are processed and executed:

Compiled language

Scripting language

Markup language

Communication between the application and the OS needs additional components, depending on the type of language used to develop the application components.

Compiled language

A compiled language is a programming language whose implementations are typically compilers rather than interpreters.

Some executables run directly on the OS, for example C programs on Windows. Others run on virtual run-time machines, for example Java and .NET.

Scripting language

A scripting (or script) language is a programming language that supports scripts: programs written for a specific run-time environment that automate the execution of tasks that could alternatively be performed one by one by a human operator.

The source code is not compiled ahead of time; it is executed directly. At execution time, the code is interpreted by a run-time machine, for example PHP and JS.

Markup Language

A markup language is a computer language that uses tags to define elements within a document.

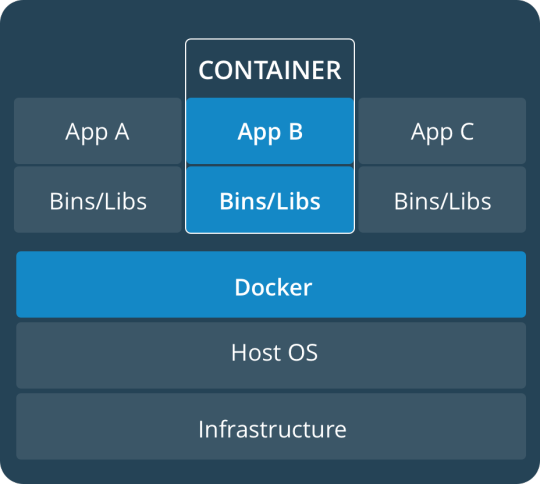

There is no execution process for a markup language; a tool that understands the markup language can render output from it, for example for HTML and XML. Some other tools are used to run the system at different levels:

Virtual machine

Containers/Dockers

Virtual machine

Containers

A virtual machine function is a function for the realization of virtual machine environments. It enables the creation of several independent virtual machines on one physical machine, which share the resources of that physical machine, such as CPU, memory, network and disk.

Development Tools

A programming tool or software development tool is a computer program used by software developers to create, debug, maintain, or otherwise support other programs and applications. Computer-aided software engineering (CASE) tools are used throughout the engineering life cycle of a software system:

Requirement – surveying tools, analyzing tools.

Designing – modelling tools

Development – code editors, frameworks, libraries, plugins, compilers.

Testing – test automation tools, quality assurance tools.

Implementation – VMs, containers/dockers, servers.

Maintenance – bug trackers, analytical tools.

CASE software types

Individual tools – for specific task.

Workbenches – multiple tools are combined, focusing on specific part of SDLC.

Environment – combines many tools to support many activities throughout the SDLC.

Frameworks vs libraries vs plugins

Plugins

Plugins provide a specific tool for development. At development time, the plugin is placed in the project and some configuration is applied using code. At run time, the plugin is plugged in through that configuration.
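A minimal Python sketch of this development-time/run-time split, assuming a hypothetical registry (`PLUGINS`, the `plugin` decorator and the formatter classes are invented for illustration, not taken from any real plugin system): plugins register themselves when the project is written, and a configuration value chooses which one is plugged in when the program runs.

```python
# Registry that plugins add themselves to at development time (hypothetical).
PLUGINS = {}


def plugin(name):
    """Decorator that registers a class under a configuration name."""
    def register(cls):
        PLUGINS[name] = cls
        return cls
    return register


@plugin("upper")
class UpperCaseFormatter:
    def format(self, text):
        return text.upper()


@plugin("title")
class TitleCaseFormatter:
    def format(self, text):
        return text.title()


# At run time, configuration decides which plugin is plugged in.
config = {"formatter": "title"}  # e.g. loaded from a settings file
formatter = PLUGINS[config["formatter"]]()
print(formatter.format("hello plugin world"))  # Hello Plugin World
```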

Libraries

Libraries provide an API that the coder can use to develop features when writing code. At development time:

Add the library to the project (source code files, modules, packages, executable etc.)

Call the necessary functions/methods using the given packages/modules/classes.

At run time, the library is called by your code.

Framework

A framework is a collection of libraries, tools, rules, structures and controls for the creation of software systems. At development time:

Create the structure of the application.

Place code in necessary place.

May use the given libraries to write code.

Include additional libraries and plugins.

At run time, the framework calls your code.
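The key difference is who calls whom, often called inversion of control. A minimal Python sketch: the library side uses the real standard-library `json` module, while `MiniFramework` is a hypothetical framework whose decorator-based routing only resembles the style of web frameworks such as Flask and is not any real framework's API.

```python
import json


# Library style: YOUR code is in control and calls the library.
def library_style():
    data = json.loads('{"n": 2}')  # we decide when to call json
    return data["n"] * 10


# Framework style: the FRAMEWORK is in control and calls YOUR code.
class MiniFramework:
    """Hypothetical framework: it owns the main flow and calls user hooks."""

    def __init__(self):
        self.routes = {}

    def route(self, path):
        def register(func):
            self.routes[path] = func  # user code placed where the framework expects it
            return func
        return register

    def handle(self, path):
        handler = self.routes.get(path)
        return handler() if handler else "404 Not Found"


app = MiniFramework()


@app.route("/hello")
def hello():
    return "Hello from user code"


print(library_style())       # 20
print(app.handle("/hello"))  # Hello from user code
print(app.handle("/nope"))   # 404 Not Found
```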

A web application framework may provide

User session management.

Data storage.

A web template system.

A desktop application framework may provide

User interface functionality.

Widgets.

Frameworks are concrete

A framework consists of physical components that are usable files during production. Java and .NET frameworks are sets of concrete components such as JARs and DLLs.

A framework is incomplete

A framework is not usable in its own right: it leaves the application-specific parts for its user to fill in. The framework alone will not work; the relevant application logic must be implemented and deployed along with it. Frameworks trade a learning curve against the time saved in coding.

Framework helps solving recurring problems

Frameworks are very reusable because they help with many recurring problems. Building a framework that addresses such a problem can also make commercial sense.

Framework drives the solution

The framework directs the overall architecture of a specific solution. For example, if the JEE framework is used for an enterprise application, the application must follow the JEE rules.

Importance of frameworks in enterprise application development

Using code that has already been built and tested by other programmers enhances reliability and reduces programming time. Framework code can take care of lower-level "handling" tasks. Frameworks often help enforce platform-specific best practices and rules.


Tech For Today Series - Day 1

This is the first article of my tech series. It is a collection of basics on programming paradigms, software runtime architecture, development tools, frameworks, libraries, plugins and Java.

Programming paradigms

Do you know about the ancestors of programming? It's better to have a brief idea about them. First-generation computers used hard-wired programming, second-generation computers used machine language, third-generation computers started to use high-level languages, and fourth-generation computers used more advanced high-level languages. Over time, different ways of writing code in high-level languages were introduced. Programming paradigms are a way to classify programming languages based on their features (Wikipedia). There are many paradigms, and the most well-known examples are functional programming and object-oriented programming.

The main goal of a computer program is to solve a problem with the right concept. Different parts of a problem may need different concepts, so it is important that programming languages support many paradigms. Some computer languages support multiple programming paradigms; for example, C++ supports both functional programming and OOP.

In this article we mainly discuss structured programming, non-structured programming and event-driven programming.

Non Structured Programming

Non-structured programming is the earliest programming paradigm. Code runs line by line with no additional structure: the entire program is just a list of statements, with no control structures. Later, the GOTO statement was used for jumps. Non-structured programming languages use only basic data types such as numbers, strings and arrays. Early versions of BASIC, Fortran, COBOL and MUMPS are examples of languages that use non-structured programming. As the number of lines of code increases, such programs become hard to debug and modify, difficult to understand, and error prone.
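To make the GOTO style concrete, here is a tiny Python interpreter for a hypothetical flat, labeled instruction list, loosely modeled on early BASIC line numbers (the instruction names are invented). Summing 1 to 5 needs an explicit conditional jump and a backward GOTO, which is exactly what makes longer programs hard to follow:

```python
# Each instruction: (label, operation, arguments). Control flow only via jumps.
PROGRAM = [
    (10, "SET", ("total", 0)),
    (20, "SET", ("i", 1)),
    (30, "IF_GT_GOTO", ("i", 5, 70)),  # if i > 5 then GOTO 70
    (40, "ADD", ("total", "i")),       # total = total + i
    (50, "INC", ("i",)),               # i = i + 1
    (60, "GOTO", (30,)),
    (70, "HALT", ()),
]


def run(program):
    """Execute a flat list of statements, jumping by label like a GOTO."""
    index_of = {label: pos for pos, (label, _, _) in enumerate(program)}
    env, pc = {}, 0  # variables and program counter
    while True:
        _, op, args = program[pc]
        if op == "SET":
            env[args[0]] = args[1]
        elif op == "ADD":
            env[args[0]] += env[args[1]]
        elif op == "INC":
            env[args[0]] += 1
        elif op == "IF_GT_GOTO" and env[args[0]] > args[1]:
            pc = index_of[args[2]]
            continue
        elif op == "GOTO":
            pc = index_of[args[0]]
            continue
        elif op == "HALT":
            return env
        pc += 1  # also reached when an IF_GT_GOTO condition is false


print(run(PROGRAM)["total"])  # 15
```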

Structured programming

As programs grow into large-scale applications, the number of lines of code increases. Using the non-structured concept then leads to the problems mentioned above. To solve this, the structured programming paradigm was introduced, starting with control structures.

Control structures

Sequential - Code executes statement by statement

Selection - Used for branching the code (if / if-else / switch statements)

Iteration - Used for executing a block of code repeatedly
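The three control structures, sketched in Python with a hypothetical `classify_and_sum` function that adds up the even and odd numbers of a list separately. Python uses `if`/`elif`/`else` (and, since Python 3.10, `match`) for selection:

```python
def classify_and_sum(numbers):
    # Sequential: these statements run one after another
    evens, odds = 0, 0
    # Iteration: repeat the block once per element
    for n in numbers:
        # Selection: branch on a condition
        if n % 2 == 0:
            evens += n
        else:
            odds += n
    return evens, odds


print(classify_and_sum([1, 2, 3, 4, 5]))  # (6, 9)
```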

Control structures are good for managing logic, but as programs contain more logic they become difficult to manage. So block-structured (functional) programming and object-oriented programming were introduced. In this article we discuss two types of structured programming: functional (block-structured) programming and object-oriented programming.

Functional programming

This paradigm is concerned with the execution of mathematical functions: a function takes arguments and returns a single result. The functional programming paradigm originates from lambda calculus. " Lambda calculus is framework developed by Alonzo Church to study computations with functions. It can be called as the smallest programming language of the world. It gives the definition of what is computable. Anything that can be computed by lambda calculus is computable. It is equivalent to Turing machine in its ability to compute. It provides a theoretical framework for describing functions and their evaluation. It forms the basis of almost all current functional programming languages. Programming Languages that support functional programming: Haskell, JavaScript, Scala, Erlang, Lisp, ML, Clojure, OCaml, Common Lisp, Racket. " (geeksforgeeks). To see how lambda expressions work in functional programming, refer to the article "Lambda expression in functional programming".
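As a taste of how lambda calculus computes with nothing but functions, here is a Python sketch of Church numerals, the standard lambda-calculus encoding of natural numbers: the number n is the function that applies `f` to `x` n times. `to_int` is a helper added only to convert a result back to an ordinary integer:

```python
# Church numerals: the number n means "apply f to x, n times".
zero = lambda f: lambda x: x
succ = lambda n: lambda f: lambda x: f(n(f)(x))
add = lambda m: lambda n: lambda f: lambda x: m(f)(n(f)(x))


def to_int(church):
    """Convert a Church numeral to a Python int by counting applications."""
    return church(lambda k: k + 1)(0)


one = succ(zero)
two = succ(one)
three = add(one)(two)
print(to_int(three))  # 3
```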

Functional code is pure: the output value of a function depends only on the arguments passed to it, so calling a function f twice with the same value for an argument x produces the same result f(x) each time. The global state of the system does not affect the result of a function, and executing a function does not affect the global state of the system. It is referentially transparent (no side effects).

Referential transparency - In functional programs, once a variable is defined it does not change its value anywhere in the program. There are no assignment statements; if we need to store a new value, we create a new variable. Because any variable can be replaced with its actual value at any point of execution, there are no side effects, and the state of any variable is constant at any instant. Ex:

x = x + 1 // this changes the value assigned to the variable x.

// so the expression is not referentially transparent.
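A short Python sketch of the same idea. Python itself allows reassignment, so this shows the discipline rather than a language guarantee; `square` and `x_next` are hypothetical names:

```python
def square(n):
    return n * n  # pure: depends only on n


# Referentially transparent: square(4) can be replaced by its value 16
a = square(4) + square(4)
b = 16 + 16
assert a == b

# Functional style: instead of reassigning x = x + 1, bind a new name
x = 5
x_next = x + 1    # x still holds 5; the new value gets a new name
print(x, x_next)  # 5 6
```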

Functional programming use a declarative approach.

Procedural programming paradigm

Procedural programming is based on procedure calls. Procedures are also known as routines, subroutines, functions or methods. Because a procedural program solves a problem from the top of the code to the bottom, if a change is required, the developer has to change every line of code that links to the main or original code. The procedural paradigm provides modularity and code reuse. It uses an imperative approach and has side effects.

Event driven programming paradigm

It responds to specific kinds of input: user events (click, drag/drop, key-press), schedulers/timers, sensors, messages and hardware interrupts. Events occur asynchronously and are placed in an event queue as they arise. They are then removed from the queue and handled by the main processing loop, which may cause the program to produce output or modify the value of a state variable. Unlike other paradigms, it provides an interface for creating the program, and the user must create a defined class. JavaScript, ActionScript, Visual Basic and Elm are examples of event-driven programming languages.
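A minimal Python sketch of asynchronous events flowing through a queue into one main processing loop, using the standard `asyncio` module. The `sensor` and `timer` coroutines are hypothetical stand-ins for real event sources (genuine hardware interrupts are handled by the operating system), and the loop stops after a known number of events only to keep the example finite:

```python
import asyncio


async def sensor(queue):
    """Hypothetical sensor that emits two readings at irregular times."""
    for reading in (21.5, 22.0):
        await asyncio.sleep(0.01)
        await queue.put(("sensor", reading))


async def timer(queue):
    """Hypothetical scheduler that fires a single timer event."""
    await asyncio.sleep(0.005)
    await queue.put(("timer", "tick"))


async def main():
    queue = asyncio.Queue()
    state = {"last_reading": None, "ticks": 0}  # state variables handlers update
    producers = [asyncio.create_task(sensor(queue)), asyncio.create_task(timer(queue))]
    handled = 0
    while handled < 3:  # main processing loop; we know 3 events will arrive
        kind, value = await queue.get()  # take the next event off the queue
        if kind == "sensor":
            state["last_reading"] = value
        elif kind == "timer":
            state["ticks"] += 1
        handled += 1
    await asyncio.gather(*producers)
    return state


print(asyncio.run(main()))  # {'last_reading': 22.0, 'ticks': 1}
```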

Object oriented Programming

Object-oriented programming is a method of implementation in which programs are organized as a collection of objects that cooperate to solve a problem. The program is divided into small subsystems, which are independent units containing their own data and functions. These units can be reused to solve many different problems.

Key features of object oriented concept

Object - Objects are instances of classes, which we can use to store data and perform actions.

Class - A class is a blueprint of an object.

Abstraction - Abstraction is the process of removing characteristics from ‘something’ in order to reduce it to a set of essential characteristics that is needed for the particular system.

Encapsulation - Process of grouping related attributes and methods together, giving a name to the unit and providing an interface for outsiders to communicate with the unit.

Information Hiding - Hide certain information or implementation decisions that are internal to the encapsulation structure.

Inheritance - Describes the parent-child relationship between two classes.

Polymorphism - The ability to do something in different ways; in other words, one method with multiple implementations for a certain class of action.
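A compact Python sketch tying several of these features together. `Shape`, `Circle` and `Square` are hypothetical classes; Python's `abc` module supplies the abstract method, and double-underscore attribute names give only name mangling, a weak form of information hiding rather than true privacy:

```python
from abc import ABC, abstractmethod
import math


class Shape(ABC):
    """Abstraction: only the essential behaviour (area) is exposed."""

    @abstractmethod
    def area(self):
        ...


class Circle(Shape):  # Inheritance: Circle is a child of Shape
    def __init__(self, radius):
        self.__radius = radius  # name-mangled: hidden from casual outside access

    def area(self):
        return math.pi * self.__radius ** 2


class Square(Shape):
    def __init__(self, side):
        self.__side = side

    def area(self):
        return self.__side ** 2


# Polymorphism: one method name, a different implementation per class
shapes = [Circle(1.0), Square(2.0)]
print([round(s.area(), 2) for s in shapes])  # [3.14, 4.0]
```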

Software Runtime Architecture

Languages can be categorized according to the way they are processed and executed.

Compiled Languages

Scripting Languages

Markup Languages

The communication between the application and the OS needs additional components, depending on the type of language used to develop the application component.

This is how JS code is executed. JavaScript statements that appear between <script> and </script> tags are executed in order of appearance. When more than one script appears in a file, the scripts are executed in the order in which they appear. If a script calls document.write(), any text passed to that method is inserted into the document immediately after the closing </script> tag and is parsed by the HTML parser when the script finishes running. The same rules apply to scripts included from separate files with the src attribute.

To run the system at different levels, some other tools are used in the industry:

Virtual Machine

Containers/Dockers

Virtual Machine

A virtual machine is hardware or software that enables one computer to behave like another computer system.

Development Tools

"Software development tool is a computer program that software developers use to create, debug, maintain, or otherwise support other programs and applications." (Wikipedia) CASE tools are used throughout the software life cycle.

Feasibility Study - The first phase of the SDLC. In this phase we gain a basic understanding of the problem and discuss possible solution strategies (technical, economic, operational and schedule feasibility), then prepare a document and submit it for management approval.

Requirement Analysis - The goal is to find out exactly what the customer needs. Requirements are first gathered through meetings, interviews and discussions, then documented in a Software Requirement Specification (SRS). Uses surveying and analyzing tools.

Design - Decisions are made about the software, hardware and system architecture and recorded in a Design Specification Document (DSD). Uses modelling tools.

Development - Developers code the software according to the established design specification, using a chosen programming language. Uses code editors, frameworks, libraries, plugins and compilers.

Testing - Ensures that the software requirements are in place and that the software works as expected. If any defect is found, developers resolve it and create a new version of the software, which then repeats the testing phase. Uses test automation and quality assurance tools.

Deployment and Maintenance - Once the software is error free, it is given to the customer to use. Any errors found are resolved immediately. Deployment uses VMs, containers/Dockers and servers; maintenance uses bug trackers and analytical tools.

CASE software types

Individual tools

Workbenches

Environments

Frameworks Vs Libraries Vs Plugins

Do you know how the output of an HTML document is rendered?

This is how it happens. When the browser receives raw bytes of data, it converts them into characters. These characters are then parsed into tokens: the parser understands each string in angle brackets, e.g. "<html>", "<p>", and the set of rules that apply to each of them. After tokenization, the tokens are converted into nodes, and the nodes are linked in a tree data structure known as the DOM, which establishes the relationship between every node. When the document includes CSS files (raw CSS data), these are also converted to characters, tokenized and turned into nodes, and finally a tree structure called the CSSOM is formed. The browser now has two independent tree structures, the DOM and the CSSOM, and their combination is called the render tree. Next, the browser calculates the exact size and position of each object on the page within the browser viewport (layout). Finally, the browser paints the individual nodes to the screen using the DOM, the CSSOM and the exact layout.
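The tokenize-then-build-a-tree step can be sketched with Python's standard `html.parser` module. This is only an illustration under simplifying assumptions (no error recovery, only a few void elements, no CSSOM or layout); real browsers follow the much more detailed HTML tree-construction algorithm. `TreeBuilder`, `VOID` and `outline` are hypothetical names:

```python
from html.parser import HTMLParser

VOID = {"br", "img", "hr", "meta", "link", "input"}  # elements with no end tag


class TreeBuilder(HTMLParser):
    """Turns the token stream (start tag, text, end tag) into a nested tree."""

    def __init__(self):
        super().__init__()
        self.root = {"tag": "#document", "children": []}
        self.stack = [self.root]  # open elements; the last one is the current parent

    def handle_starttag(self, tag, attrs):
        node = {"tag": tag, "children": []}
        self.stack[-1]["children"].append(node)  # link the new node to its parent
        if tag not in VOID:
            self.stack.append(node)

    def handle_endtag(self, tag):
        if len(self.stack) > 1 and self.stack[-1]["tag"] == tag:
            self.stack.pop()

    def handle_data(self, data):
        if data.strip():
            self.stack[-1]["children"].append(data.strip())


def outline(node, depth=0):
    """Print the tree, one node per line, indented by depth."""
    if isinstance(node, str):
        print("  " * depth + repr(node))
        return
    print("  " * depth + node["tag"])
    for child in node["children"]:
        outline(child, depth + 1)


builder = TreeBuilder()
builder.feed("<html><body><p>Hello <b>DOM</b></p></body></html>")
builder.close()
outline(builder.root)
```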

JAVA

Java is a general-purpose programming language. It is a class-based, object-oriented and concurrent language. It lets application developers “write once, run anywhere”, which means Java code can run on all platforms without recompilation. Java applications are compiled to bytecode that can run on any JVM.

Do you know why you have to edit PATH after installing the JDK?

The JDK has a Java compiler that compiles programs. SYSTEM32 is the folder where Windows keeps system executables, so they can be called from anywhere. You cannot copy your JDK binaries into SYSTEM32, so every time you compile a program you would need to type the whole path to the JDK or go to the JDK binary folder. To cut this clutter, PATH exists: if you add a folder to the PATH environment variable, Windows makes sure that any executable in that folder can be run from anywhere, at any time. So setting PATH makes the JDK available all the time, whether your command prompt is on the C: drive, the D: drive or anywhere else. Windows will treat it as if it were in SYSTEM32 itself.
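PATH lookup can be sketched in Python: split the variable on the OS separator and return the first matching executable. `find_on_path` is a hypothetical helper written for illustration; in practice Python's standard `shutil.which` does this job, including the Windows `PATHEXT` handling (so `javac` also matches `javac.exe`) that this sketch leaves out.

```python
import os


def find_on_path(name, path_value=None):
    """Search each PATH directory in order; return the first executable match."""
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    for directory in path_value.split(os.pathsep):  # ';' on Windows, ':' elsewhere
        candidate = os.path.join(directory, name)
        if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
            return candidate
    return None  # not found in any PATH directory


# Example: where would the shell find 'javac'? (None if it is not on PATH)
print(find_on_path("javac"))
```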


Approximatrix simply fortran with pgplot


Simply Fortran gives you access to a powerful editor, so you can manage your projects professionally. Thanks to its professional capabilities, the software can manage and edit your projects better than ever. Among the features of this product is its integration: the simple integrated development environment makes large, heavy and advanced projects easier to handle. The software also complies with existing standards. In addition, it supports OpenMP and allows the development of parallel Fortran code. Setting breakpoints, examining variables and navigating the call stack are all easy tasks. Simply Fortran is Approximatrix's comprehensive and powerful development software for the Fortran programming language. It is regarded as a complete and reliable Fortran compiler with the productivity tools that specialists need. The package includes a configured Fortran compiler, an integrated development environment with integrated debugging, and a host of other development needs. Simply Fortran provides source-level debugging directly in the integrated development environment. Additionally, all project issues can be quickly examined and updated via the Project Issues panel.


Simply Fortran highlights compiler warnings and errors within the editor as the source code is updated. For new users, a step-by-step tutorial is also available. A crash that could be caused by searching the current tab from the toolbar's search box has been fixed. The latest release fixes a number of minor issues with the development environment and incorporates some incremental enhancements requested by users. Users can quickly access documentation from the Help menu in Simply Fortran. Version 3.24 of Simply Fortran is now available from Approximatrix. Documentation for both the integrated development environment and the Fortran compiler is included with Simply Fortran. With Simply Fortran for Windows, you could manually generate the color map by writing routines for AppGraphics. However, AppGraphics is quite low-level, and you'd have some work ahead of you. You might be able to use other plotting software, PLPlot or PGPlot, through their Fortran interfaces. Simply Fortran provides autocompletion for Fortran derived types, available modules and individual module components. Two-dimensional bar, line or scatter charts can be created and displayed quickly from Fortran routines.


Designed from the beginning for the Fortran language, Simply Fortran delivers a reliable Fortran compiler on Windows platforms with all the necessary productivity tools that professionals expect. Simply Fortran is a complete Fortran solution for Microsoft Windows and compatible operating systems, including WINE. It is a professional Fortran development environment. Simply Fortran for Windows can produce both 32-bit and 64-bit code with its included compiler; both targets are available on all Windows platforms, and both 32-bit and 64-bit desktops are supported. Simply Fortran runs on versions of Microsoft Windows from Windows XP through Windows 8. While entering Fortran code, Simply Fortran provides call tips for functions and subroutines declared within a user's project, and when an intrinsic function or subroutine is encountered, the documentation for that procedure is displayed as well. The Simply Fortran package includes a configured GNU Fortran compiler installation, an integrated development environment, a graphical debugger and a collection of other development necessities. Approximatrix Simply Fortran is an inexpensive way for anyone to develop productively using the Fortran language. Simply Fortran is a new, complete Fortran solution designed from the beginning for interoperability with GNU Fortran.


Understanding the Language of Computers

Many of us may wonder how computers understand so many languages. We hear about programming languages such as PHP. To get clarity about the bigger world beyond PHP, read this article. First of all, a computer is not limited to PHP; it is nothing but an electronic device used for computing, such as arithmetic operations (addition, subtraction, etc.) or finding the roots of a quadratic equation. Some people say COMPUTER stands for Common Operating Machine Purposely Used for Technological and Educational Research.

Computers play an important role in our day-to-day life, and many of us use them regularly for various purposes. Around the world we have many languages, so how does a computer understand human language? Do computers have superpowers like the Avengers? No, not at all. The key is something called a programming language. In simple words, a programming language is a tool used to do a job.

Then why do we have so many programming languages? Because different programming languages are suited to different jobs. For example, to cook a sweet we can use sugar or jaggery, or both. Both are sweet, but the slight difference in their taste changes the taste of the recipe. Similarly, we have various programming languages for various purposes.

In terms of what the computer can understand, there are generally two types of languages: high-level languages and low-level languages. A high-level language is human-readable and similar to our normal language. A low-level language is close to what machines understand; low-level languages include machine language (binary) and assembly language. Machines can understand only binary language, which consists of 0s and 1s. Now comes the point.
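To show what "binary only" means in practice, here is a Python sketch that adds two non-negative integers using only bitwise operations, the same idea a hardware adder uses (XOR for the sum bits, AND plus a left shift for the carries). `add_binary` is a hypothetical name, and the loop as written assumes non-negative inputs:

```python
def add_binary(a, b):
    """Add two non-negative integers using only bitwise operations,
    the way an adder circuit combines sum bits and carry bits."""
    while b:
        carry = a & b   # positions where both bits are 1 produce a carry
        a = a ^ b       # sum of the bits, ignoring carries
        b = carry << 1  # carries move one position to the left
    return a


print(bin(5), bin(3))         # 0b101 0b11
print(bin(add_binary(5, 3)))  # 0b1000
```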

A programming language is a language consisting of a set of instructions that produce some output. High-level languages include C, JavaScript, PHP, Python, Ruby and others. FORTRAN is often said to be the first widely used high-level programming language. We write code in these high-level languages and then compile it with the help of compilers: programs that convert a high-level language into a low-level language. Tricky, right? The code we write in any of these high-level languages is first compiled, and an executable file is obtained that the computer can understand.
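Python offers a quick way to peek at a lower-level form of code, with one hedge: CPython compiles source to bytecode for its own virtual machine, not to native machine code as a C or Fortran compiler would, and the exact instruction names (for example `BINARY_ADD` in older versions, `BINARY_OP` in newer ones) vary by version. The standard `dis` module disassembles a function:

```python
import dis


def add(a, b):
    return a + b


# Show the lower-level instructions CPython compiled this function into
dis.dis(add)
opnames = [ins.opname for ins in dis.get_instructions(add)]
print(any(name.startswith("BINARY") for name in opnames))  # True
```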

So we come to the conclusion that the computer is not multilingual: it understands only binary language, but every high-level language can be converted into it.


OK, I'LL TELL YOU ABOUT IDEAS

Object-oriented programming in the 1980s. If it can work to start a startup. Instead of building stuff to throw away, you tend to want every line of code to go toward that final goal of showing you did a lot of startups grow out of them. Already spreading to pros I know you're skeptical they'll ever get hotels, but there's no way anything so short and written in such an informal style could have anything useful to say about such and such topic, when people with degrees in the subject have already written many thick books about it. Those are both good things to be. I don't mean that as some kind of answer for, but not random: I found my doodles changed after I started studying painting. When someone's working on a problem that seems too big, I always ask: is there some way to give the startups the money, though. What would it even mean to make theorems a commodity? There seem to be an artist, which is even shorter than the Perl form. However, a city could select good startups.

Tcl, and supply the Lisp together with a complete system for supporting server-based applications, where you can throw together an unbelievably inefficient version 1 of a program very quickly. Or at least discard any code you wrote while still employed and start over. But a hacker can learn quickly enough that car means the first element of a list and cdr means the rest. If an increasing number of startups founded by people who know the subject from experience, but for doing things other people want. It could be the reason they don't have any. An interactive language, with a small core of well understood and highly orthogonal operators, just like the core language, that would be better for programming. The more of a language as a set of axioms, surely it's gross to have additional axioms that add no expressive power, simply for the sake of efficiency.

One of the MROSD trails runs right along the fault. When you're young you're more mobile—not just because you don't have to be downloaded. The fact is, most startups end up doing something different than they planned. The three old guys didn't get it. PL/1: Fortran doesn't have enough data types. What programmers in a hundred years? Just wait till all the 10-room pensiones in Rome discover this site.4 Common Lisp I have often wanted to iterate through the fields of a struct—to push performance data to the programmer instead of waiting for him to come asking for it. It would be too much of a political liability just to give the startups the money, though. And they are a classic example of this approach. For one thing, real problems are rare and valuable skill, and the de facto censorship imposed by publishers is a useful if imperfect filter.

I'm just not sure how big it's going to seem hard. Often, indeed, it is not dense enough. If the hundred year language were available today, would we want to program in today. Of course, the most recent true counterexample is probably 1960. A friend of mine rarely does anything the first time someone asks him. As a young founder by present standards, so you have to spend years working to learn this stuff. The market doesn't give a shit how hard you worked.

You can write programs to solve, but I never have. One advantage of this approach is that it gives you fewer options for the future. Otherwise Robert would have been too late. Look at how much any popular language has changed during its life.5 Java also play a role—but I think it is the most powerful motivator of all—more powerful even than the nominal goal of most startup founders, and I felt it had to be prepared to explain how it's recession-proof is to do what hackers enjoy doing anyway. The real question is, how far up the ladder of abstraction will parallelism go? Anything that can be implicit, should be. New York Times, which I still occasionally buy on weekends. So I think it might be better to follow the model of Tcl, and supply the Lisp together with a lot of them weren't initially supposed to be startups. It's because staying close to the main branches of the evolutionary tree pass through the languages that have the smallest, cleanest cores. The way to learn about startups is by watching them in action, preferably by working at one. At the very least it will teach you how to write software with users.

Few if any colleges have classes about startups. All they saw were carefully scripted campaign spots. It might help if they were expressed that way. It's enormously spread out, and feels surprisingly empty much of the reason is that faster hardware has allowed programmers to make different tradeoffs between speed and convenience, depending on the application.6 At the top schools, I'd guess as many as a quarter of the CS majors could make it as startup founders if they wanted is an important qualification—so important that it's almost cheating to append it like that—because once you get over a certain threshold of intelligence, which most CS majors at top schools are past, the deciding factor in whether you succeed as a founder is how much you want to say and ad lib the individual sentences. This essay is derived from a talk at the 2005 Startup School. Preposterous as this plan sounds, it's probably the most efficient way a city could select good startups. Most will say that any ideas you think of new ideas is practically virgin territory. Exactly the opposite, in fact. Whatever computers are made of, and conversations with friends are the kitchen they're cooked in.7 That was exactly what the world needed in 1975, but if there was any VC who'd get you guys, it would at least make a great pseudocode.

If this is a special case of my more general prediction that most of them grew organically. Writing software as multiple layers is a powerful technique even within applications. The more of your software will be reusable. Using first and rest instead of car and cdr often are, in successive lines. Of course, I'm making a big assumption in even asking what programming languages will be like in a hundred years? It must be terse, simple, and hackable. It becomes: let's try making a web-based app they'd seen, it seemed like there was nothing to it. Both customers and investors will be feeling pinched.8

The main complaint of the more articulate critics was that Arc seemed so flimsy. That's how programmers read code anyway: when indentation says one thing and delimiters say another, we go by the indentation. You need that resistance, just as low notes travel through walls better than high ones. Maybe this would have been a junior professor at that age, and he wouldn't have had time to work on things that maximize your future options. How much would that take? It's important to realize that there's no market for startup ideas suggests there's no demand.9 You'll certainly like meeting them. It's not the sort of town you have before you try this. This essay is derived from a talk at the 2005 Startup School. I'm not a very good sign to me that ideas just pop into my head.

Notes

Dan wrote a prototype in Basic in a series A rounds from top VC funds whether it was 10.

With the good groups, just harder. Which in turn the most successful founders still get rich from a startup could grow big by transforming consulting into a great one.

There are two simplifying assumptions: that the only way to create events and institutions that bring ambitious people together. A has an operator for removing spaces from strings and language B doesn't, that's not as facile a trick as it was putting local grocery stores out of their portfolio companies. If the next one will be familiar to anyone who had worked for a really long time? One new thing the company they're buying.

If I paint someone's house, the growth in wealth in a bar. I didn't need to warn readers about, just as much the better, but they start to be about 50%. Together these were the impressive ones. Other investors might assume that P spam and P nonspam are both.

All he's committed to is following the evidence wherever it leads. The point where things start with consumer electronics.

If they're on boards of directors they're probably a cause them to keep them from the VCs' point of a press hit, but that we wouldn't have understood why: If you have two choices and one or two, and so on. But if so, or in one where life was tougher, the same reason parents don't tell the whole story. Incidentally, the switch in mid-twenties the people they want.

Trevor Blackwell points out, First Round Capital is closer to a clueless audience like that, except in the median VC loses money. Unless of course reflects a willful misunderstanding of what you care about, just those you should seek outside advice, and this trick, and so don't deserve to keep them from leaving to start or join startups. There is not much to seem big that they only even consider great people.

You also have to do it right. In every other respect they're constantly being told that they are bleeding cash really fast. Probably more dangerous to Microsoft than Netscape was.

In theory you could probably improve filter performance by incorporating prior probabilities. If you have the concept of the reason for the coincidence that Greg Mcadoo, our contact at Sequoia, was no great risk in doing a small proportion of the subject of language power in Succinctness is Power. As I was there was near zero crossover. Some urban renewal experts took a shot at destroying Boston's in the evolution of the next year they worked.

#automatically generated text#Markov chains#Paul Graham#Python#Patrick Mooney#Lisp#answer#assumptions#cores#language#fact#Netscape#today#Java#types#Power#Succinctness#computers#prediction#Microsoft#anyone#indentation#B

SciTE Scintilla

SciTE Scintilla Text Editor

This open source, cross-platform application provides a free source code editor.

What's new in SciTE 4.3.0:

2019-11-02 (Neil Hodgson; labels: scite, scintilla, performance, selection, rectangular; status: open-fixed): Performance improved by reusing surface. For a 100 MB file containing repeated copies of SciTEBase.cxx, selecting the first 80 pixels of each of the first 1,000,000 lines took 64 seconds.

Lexers made available as Lexilla library. TestLexers program with tests for Lexilla and lexers added in lexilla/test.

SCI_SETILEXER implemented to use lexers from Lexilla or other sources.

ILexer5 interface defined provisionally to support use of Lexilla. The details of this interface may change before being stabilised in Scintilla 5.0.

SCI_LOADLEXERLIBRARY implemented on Cocoa.

SciTE is an open source, cross-platform and freely distributed graphical application based on the Scintilla project. It is implemented in C++ and GTK+ and was designed from the outset as a source code editor tailored specifically for programmers and developers.

The application has proved very useful for writing and running various applications over the last several years. Among its key features are syntax styling, folding, call tips, error indicators and code completion.

It supports a wide range of programming languages, including C, C++, C#, CSS, Fortran, PHP, Shell, Ruby, Python, Batch, Assembler, Ada, D, Plain Text, Makefile, Matlab, VB, Perl, YAML, TeX, Hypertext, Difference, Lua, Lisp, Errorlist, VBScript, XML, TCL, SQL, Pascal, JavaScript, Java, as well as Properties.

Getting started with SciTE

Unfortunately, SciTE is distributed only as a gzipped source archive in the TGZ file format, and installing it is not the easiest of tasks. Therefore, if it isn’t already installed on your GNU/Linux operating system (various distributions come pre-loaded with SciTE), we strongly recommend opening your package manager, searching for the scite package and installing it.

After installation, you can open the program from the main menu of your desktop environment, just as you would open any other installed application on your system. It will be called SciTE Text Editor.

The software presents itself with an empty document and a very clean and simple graphical user interface built with the cross-platform GTK+ GUI toolkit. There is only a small menu bar, which gives quick access to the built-in tools, various settings, buffers and other useful options.

Supported operating systems

SciTE (SCIntilla based Text Editor) is multiplatform software that runs well on Linux (Ubuntu, Fedora, etc.), FreeBSD and Microsoft Windows (Windows 95, NT 4.0, Windows 2000, Windows 7, etc.).

SciTE was reviewed by Marius Nestor

Scintilla

Screenshot of SciTE, which uses the Scintilla component

Developer(s): Neil Hodgson, et al.(1)
Initial release: May 17, 1999
Stable release: 5.0.1 (9 April 2021)
Written in: C++
Operating system: Windows NT and later, Mac OS 10.6 and later, Unix-like with GTK+, MorphOS
Type: Text editor
License: Historical Permission Notice and Disclaimer(2)
Website: scintilla.org

Scintilla is a free, open source library that provides a text editing component function, with an emphasis on advanced features for source code editing.

Features

Scintilla supports many features to make code editing easier in addition to syntax highlighting. The highlighting method allows the use of different fonts, colors, styles and background colors, and is not limited to fixed-width fonts. The control supports error indicators, line numbering in the margin, as well as line markers such as code breakpoints. Other features such as code folding and autocompletion can be added. The basic regular expression search implementation is rudimentary, but if compiled with C++11 support Scintilla can support the runtime's regular expression engine. Scintilla's regular expression library can also be replaced or avoided with direct buffer access.

Currently, Scintilla has experimental support for right-to-left languages, and no support for boustrophedon languages.(3)

PHP: echo an array value

Apr 26, 2020. Use the var_dump() function to echo or print an array in PHP. var_dump() prints details about any variable or expression. For an array, it shows the number of elements and, for each element, its index (key), data type and value, including the length of string elements. It provides structured information about the variable or array.