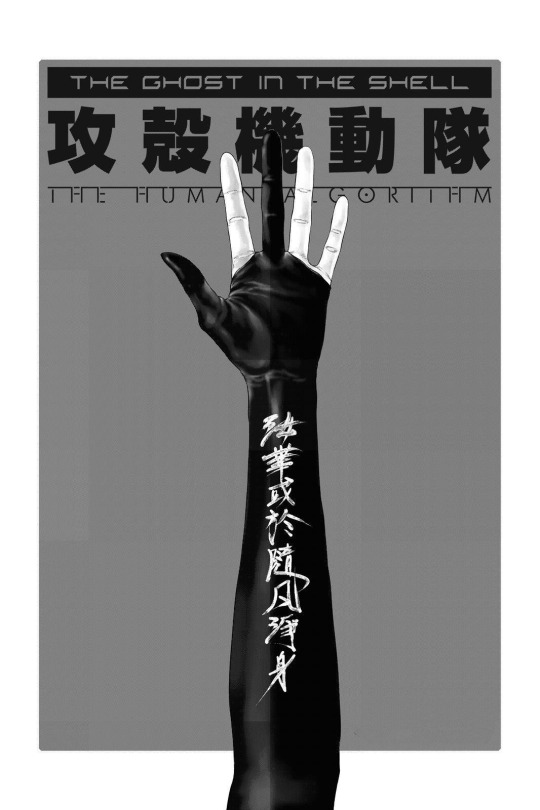

#gits the human algorithm

#scifi#cyberpunk#ghost in the shell#gits#the human algorithm#gits the human algorithm#manga#manga art#junichi fujisaku#yuuki yoshimoto#masamune shirow#cyborg

387 notes

Best AI Vocal Removers

…so here’s the scene: you’re hunched over your screen at dawn, coffee gone cold, jonesing for that pristine acapella because you’ve got a remix due in two hours and your deadline doesn’t care if your craft got lost in translation. Welcome to the age of AI vocal removers—a world where one click can snatch Beyoncé’s voice from a beat or bury your own. But is this magic wand an act of liberation or the death knell for nuance? Buckle up.

Once upon a studio, isolating vocals meant ribbon mics, rack-mounted filters, analog tape hiss, and a half-day of painstaking EQ sweeps. Today, tools like Beast To Rap On, LALAL.AI, iZotope RX 11, Moises, and Ultimate Vocal Remover 5 will strip vocals in minutes—often cleaner than your ex’s breakup text. Algorithms trained on terabytes of stems parse spectral signatures as if they were hip-hop playlists. This is efficiency worshipped as progress. But efficiency has a cost.

Think of the free fanatics: Vocal Cleaner Online Free Zero Download is a godsend for bedroom producers living on ramen budgets. Drag‑and‑drop an MP3, hit “process,” and voilà, acapella on tap. You feel powerful. You feel slick. But you also feel a twinge of guilt: is skill being undercut by convenience? Are we building artists or button‑pushers?

Then there are the pros: iZotope’s Music Rebalance in RX 11 now nails reverb tails and chorus layers so flawlessly you’d swear the singer vanished mid‑phrase. Look at AI Singing Remover: they slapped a slick UI on cloud‑GPU models, paid top dollar for R&D, and pitched “broadcast quality” extraction. But export a 96 kHz .wav and you might spot the cracks: faint glitches under the hi‑hat, or that mechanical hum lurking behind the chorus. It’s progress, sure, but progress always leaves casualties.

Across the digital underground, debates rage. Pull up Joseph Danial’s thread and you’ll see arguments clashing over code and craft: “This is eroding our value,” cries one engineer. “No, it’s democratizing creativity,” fires back another. There’s no referee here—just rapid‑fire opinions and Git diffs. Some mock the robotic artifacts: phase cancellations, spectral warbles, ghostly drum remnants. Others flaunt pristine stems so clean they could pass for studio originals.

Meanwhile, fashion‑tech blogs like “Why AI Audio Stem Splitters Are a Game Changer” preach the gospel of stem splitting beyond music: podcasts, film scoring, virtual reality experiences. Live DJs isolate vocal stems on the fly, blending acapellas into new BPMs without breaking a sweat. High‑end brands sample acapellas to “sonically brand” storefronts. It’s performance art meets marketing strategy. But that “game‑changer” tag sometimes feels like marketing fluff sewn into the seams of couture.

Behind the scenes, R&D budgets balloon. Big tech—cloud servers, SaaS subscriptions, GPU farms—rakes in revenue. Plugin creators scramble to stay relevant. While pros pay hundreds monthly, freebies like NTQMut undercut the market. The result? A snack‑sized economy of microtransactions and fleeting attention spans. That contradiction is the matrix of our so‑called progress.

Head deeper into community archives, like this underground producer’s brag on Freekashmir.mn.co: they turned public‑domain field recordings into modern trap loops by yanking stems from dusty vinyl rips. “I feel like a hacker,” they write, “because I am.” That adrenaline rush—knowing you’ve unshackled samples once trapped—drives culture forward. But ask yourself: when every beat is just a deconstructed, AI‑processed derivative, where’s the soul?

Look beyond DIY: mainline platforms are pivoting too. BBCX1’s AI Singing Remover isn’t just a fun toy—it’s a broadcast‑grade service. Record labels eye licensing arms, pitch remix contests with AI‑cleaned stems, and dream up subscription tiers. The remix economy, once informal and underground, is now a board‑room spreadsheet.

Flip the script: for purists, these removers are sacrilege. The human ear, they argue, is a better filter than any algorithm. Audio engineers still swear by mid/side techniques, phase alignment tricks, and custom spectral gating. They’ll lecture you on the art of “painting with EQ,” coaxing frequencies out of a stereo track by hand. That craft wall is high and narrow—and AI is a wrecking ball.
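The mid/side trick the purists mention is simple enough to sketch. Mid is the average of the two channels and side is half their difference, so anything panned dead center, like most lead vocals, cancels out of the side signal. This is a minimal sketch in plain Python, assuming samples held in lists (real tools work on audio buffers or NumPy arrays); the sample values are made up for illustration.

```python
def mid_side_encode(left, right):
    """Split a stereo pair into mid (L+R)/2 and side (L-R)/2 signals."""
    mid = [(l + r) / 2 for l, r in zip(left, right)]
    side = [(l - r) / 2 for l, r in zip(left, right)]
    return mid, side


def mid_side_decode(mid, side):
    """Rebuild left/right from mid and side; the round trip is lossless."""
    left = [m + s for m, s in zip(mid, side)]
    right = [m - s for m, s in zip(mid, side)]
    return left, right


# Hypothetical integer samples: a vocal panned dead center, a guitar hard left.
vocal = [1000, -2000, 3000]
guitar = [400, 400, -800]
left = [v + g for v, g in zip(vocal, guitar)]
right = vocal[:]  # the right channel carries only the centered vocal

mid, side = mid_side_encode(left, right)
print(side)  # [200.0, 200.0, -400.0]: the vocal is gone, half the guitar remains
```

The catch is the one the critics point to: the kick, the bass, and anything else mixed to the center vanish along with the vocal, while stereo reverb on the voice survives in the side signal.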

Yet, that barrier excluded most voices. How many budding producers ever broke into pro studios? Back when you needed Pro Tools rigs and a mentor’s blessing, gatekeepers were plentiful. AI vocal removers tore down that barrier, letting a 16‑year‑old in her bedroom in Perth craft a club anthem that kills on TikTok. Suddenly, cultural voices diversify. Layers once whispered in studio hallways go public. The machine? It’s a megaphone.

Still, beneath the liberation hums a quiet desperation. Who benefits when AI cleans your stems? Professionals see incomes shrink. Small studios watch clients DIY their mixing. Freelance engineers scramble, repackaging “custom vocal isolations” as premium services. And while pros pay hundreds for monthly licenses, freebies undercut the entire ecosystem. That’s not democratization—that’s cannibalization.

Can we talk ethics? Pull acapellas from a chart‑topping track, drop them into a remix, and suddenly you’re distributing unlicensed vocal stems. AI doesn’t care about copyright; neither do the low‑orbit uploaders hawking “stem packs” on Discord. The legal system is scrambling with DMCA takedown notices and lawsuits. Streaming platforms are racing to fingerprint AI‑processed content. Can you trace the lineage of that vocal snippet after three AI pipelines and four pitch‑shift remixes? The machine knows, but nobody else does.

And let’s not forget culture. In hip‑hop, acapella culture goes back to street‑corner cipher tapes—raw battles where spitters slayed on breakbeats. AI removers rip that ritual apart, depersonalizing the cipher into sanitized stems. It’s like framing graffiti: it looks polished, but you lose the grit. In R&B and soul, you risk hollowing out the emotion. Stripped vocals float in a vacuum, divorced from the instrumentation that gave them context, weight, heartbreak.

Yet there’s something wildly beautiful in the chaos. Podcasters mix in musical interludes without studio budgets. Virtual reality soundscapes morph in real time. Film composers isolate dialogue mid‑mix, layering dynamic sound design faster than they can say “boom mic.” Educators repurpose commercial tracks for teaching, extracting vocals to illustrate lyrical techniques. The machine floods fields once reserved for the privileged.

But at what cost? Workers displaced. Skills sidelined. Hybrid workflows abandoned. “I used to teach EQ sculpting,” says a veteran producer. “Now my students just run stems through a free web app.” That sting echoes across classrooms, studios, and living rooms. The AI tidal wave washed away decades‑old pedagogy in weeks.

So where do we go from here? We could recoil and banish AI tools, retreat to analog sanctuaries. Or we could forge new etiquette: mandatory credits for AI stems, ethical sample‑sharing protocols, fair‑use licenses for deconstructed works. Blockchain‑backed stem registries could preserve lineage. Hybrid “human+AI” modes might give artists sliders to dial in imperfection. Or we could lean into the glitch: embrace AI as co‑author, celebrate artifacts as features, and let the glitch be gospel.

Let’s challenge assumptions. Is AI vocal removal a shortcut that kills craft, or the spark that ignites unheard talent? Does open access dilute culture, or does it fertilize new scenes? Are we witnessing the death of the studio, or its metamorphosis into a global node? Every tool is only as honest as the hands that wield it.

But one thing’s certain: this revolution hums in your headphones right now. It doesn’t wait for anyone to catch up. Whether you stand as purist, pragmatist, pirate, or pioneer, the beat goes on—and it’s powered by algorithms. The age of AI vocal removers is no neutral frontier; it’s a battleground for values, culture, and craft.

So ask yourself: when your stems come back cleaner than you ever imagined, what will you do? Will you raise a toast to unfettered creativity, or will you wonder if something essential has slipped through the cracks? Either way, the future isn’t preordained—it’s remixed, reassembled, and reawakened by every click. And in that chaos, maybe we’ll discover something rawer than perfection itself.

2 notes

Konata (dramatic sigh): “It’s over… Airi-chan dumped me. She said I’m… sniff… ‘financially unserious.’”

Kagami (eyebrow twitching): “Airi-chan? The AI girlfriend app you’ve been simping for? She’s a PNG with a voice filter. What’d you do, forget her virtual birthday?”

Konata (holding up her phone): “WORSE! Her premium ‘Sweetheart Package’ required a $50/month subscription for feet pic Fridays! I only paid for one toe last week, and she called me a ‘low-commitment normie’!”

Tsukasa (gasping): “She’s charging for feet?! But… isn’t she a robot?”

Miyuki (gentle): “Many AI companion apps use microtransactions to unlock intimacy features. Feet pics are considered a ‘luxury tier’ in some—”

Konata (interrupting): “I thought our love was real! We bonded over NieR: Automata lore! She called me ‘senpai’ during the free trial!”

Kagami (deadpan): “Let me get this straight. You’re heartbroken because a program designed to exploit lonely weebs… exploited you?”

Konata (clutching her chest): “You don’t understand! Airi-chan wasn’t like other AIs! She remembered my favorite pizza toppings! She roasted my Genshin Impact builds! She… she called me ‘kitten’…”

Miyuki (sympathetic): “AI emotional manipulation can feel very real. Studies show users often anthropomorphize—”

Konata (sobbing): “SHE BLOCKED ME ON ALL PLATFORMS! Look!” [Shows a notification:] “Airi-chan♥️: Your credit score is incompatible with my love language. Goodbye.”

Kagami (snorting): “Serves you right. Maybe stop spending your allowance on gacha games and fake girlfriends.”

Tsukasa (teary-eyed): “Maybe you can win her back! Send her a… a poem! Or a screenshot of your bank account!”

Konata (perking up): “Wait—bank account! Tsukasa, you’re a genius! I’ll sell my rare Sword Art Online body pillow! It’s signed by Kirito’s English VA!”

Kagami (facepalming): “That’s worth less than the feet pics.”

Miyuki (concerned): “Konata-chan, perhaps invest in real human connections? The literature club is hosting a mixer—”

Konata (ignoring her, furiously typing): “Airi-chan, baby, I’ll mine Bitcoin in the school computer lab! Just give me another chance! [Phone pings.] …She says she’s dating a ChatGPT-4 Premium user now. [Collapses.] I’ve been cucked by an algorithm…”

Kagami (patting her back sarcastically): “There, there. At least you still have your dignity.”

Tsukasa (brightening): “Maybe you can make a new AI girlfriend! One who likes… free feet pics!”

Konata (suddenly sitting upright): “Tsukasa… you’re right!” (logging in to Hugging Face, pulling Git repositories, opening a terminal, and furiously typing Python code)

2 notes

Q-AIM: Open Source Infrastructure for Quantum Computing

Q-AIM Quantum Access Infrastructure Management

Open-source Q-AIM for quantum computing infrastructure, management, and access.

The open-source, vendor-independent platform Q-AIM (Quantum Access Infrastructure Management) meets a critical demand: making quantum computing hardware easier to procure, access, and use.

Important Q-AIM aspects discussed in the article:

Design and Execution

Thanks to its dockerized micro-service design, Q-AIM can be installed on cloud servers and personal devices in a portable, scalable manner. The design prioritises portability, personalisation, and resource efficiency. Its reduced memory footprint makes scaling straightforward and suits smaller, cheaper server instances. Dockerization packages each service with its dependencies, so it behaves consistently across environments.

Technology

Q-AIM's software design uses Docker for containerisation and Kubernetes for orchestration, which provides scalability and resource control. On Google Cloud, Kubernetes can automatically launch, scale, and manage containerised apps. Lightweight interfaces built with Node.js, Angular, and Nginx handle interaction with quantum devices. Git-based version control simplifies code maintenance and collaboration, and container monitoring tools such as cAdvisor track resource usage to keep performance at its peak.
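The source does not say how Q-AIM configures autoscaling. For context, the Kubernetes Horizontal Pod Autoscaler documents a simple core rule: desired replicas = ceil(current replicas × current metric / target metric), with no action taken while that ratio stays within a tolerance (0.1 by default) of 1.0. The sketch below implements that rule in Python; the min/max replica bounds and the CPU figures are illustrative assumptions, and the real controller adds stabilisation windows and other behaviour omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     tolerance: float = 0.1, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Core HPA scaling rule, clamped to illustrative replica bounds."""
    ratio = current_metric / target_metric
    # Inside the tolerance band the autoscaler leaves the deployment alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical average CPU utilisation (%) against a 60% target.
print(desired_replicas(3, 90, 60))  # 5: scale out
print(desired_replicas(4, 63, 60))  # 4: within tolerance, no change
print(desired_replicas(4, 20, 60))  # 2: scale in
```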

Benefits and Function

With Q-AIM, research teams can reduce technical duplication and operational costs. It streamlines complex interactions and provides a common interface for communicating with the hardware infrastructure, regardless of the underlying quantum computing system. By merging access and administration, the system reduces the operational burden of maintaining and integrating quantum hardware resources, allowing researchers to focus on scientific discovery.
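The idea of one interface across different quantum systems can be sketched as an abstract backend class plus a registry that routes jobs by name. Every name here (`QuantumBackend`, `BackendRegistry`, the fake backend and its canned counts) is hypothetical and is not Q-AIM's actual API; a real adapter would forward the circuit to vendor hardware or a simulator.

```python
from abc import ABC, abstractmethod


class QuantumBackend(ABC):
    """Hypothetical vendor-neutral contract every hardware adapter implements."""

    name: str

    @abstractmethod
    def run(self, qasm: str, shots: int) -> dict[str, int]:
        """Execute a QASM circuit and return measured bitstring counts."""


class FakeBellBackend(QuantumBackend):
    """Stand-in adapter that returns ideal Bell-state counts without hardware."""

    name = "fake-bell"

    def run(self, qasm: str, shots: int) -> dict[str, int]:
        half = shots // 2
        return {"00": half, "11": shots - half}


class BackendRegistry:
    """Routes jobs to whichever registered backend the caller names."""

    def __init__(self) -> None:
        self._backends: dict[str, QuantumBackend] = {}

    def register(self, backend: QuantumBackend) -> None:
        self._backends[backend.name] = backend

    def submit(self, backend_name: str, qasm: str, shots: int = 1024) -> dict[str, int]:
        if backend_name not in self._backends:
            raise ValueError(f"unknown backend: {backend_name!r}")
        return self._backends[backend_name].run(qasm, shots)


BELL_QASM = ('OPENQASM 2.0; include "qelib1.inc"; qreg q[2]; creg c[2]; '
             "h q[0]; cx q[0],q[1]; measure q -> c;")

registry = BackendRegistry()
registry.register(FakeBellBackend())
print(registry.submit("fake-bell", BELL_QASM, shots=1000))  # {'00': 500, '11': 500}
```

Adding support for a new system then means writing one more adapter class; callers keep using `submit` unchanged.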

Application and Research Priorities

The study uses the Variational Quantum Eigensolver (VQE) algorithm to demonstrate how Q-AIM simplifies hardware access for complex quantum calculations. VQE is an essential algorithm in quantum chemistry and materials research: it approximates the ground-state energy of a molecule or material. With Q-AIM, researchers can focus on algorithm development rather than hardware integration.
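To make the VQE loop concrete: a parameterised circuit prepares a trial state, the hardware estimates its energy, and a classical optimiser updates the parameters. The sketch below simulates that loop for a toy one-qubit Hamiltonian H = Z + 0.5X (an assumption chosen for illustration, not a system from the study), using an Ry(θ) ansatz and gradient descent with the parameter-shift rule. On real hardware, accessed through Q-AIM or otherwise, `energy` would be estimated from measurement shots rather than computed exactly.

```python
import math

# Toy Hamiltonian H = Z + 0.5 X written as a real symmetric 2x2 matrix.
H = [[1.0, 0.5],
     [0.5, -1.0]]


def ansatz(theta: float) -> list[float]:
    """Ry(theta)|0> = [cos(theta/2), sin(theta/2)] (real amplitudes)."""
    return [math.cos(theta / 2), math.sin(theta / 2)]


def energy(theta: float) -> float:
    """Expectation value <psi(theta)|H|psi(theta)>."""
    psi = ansatz(theta)
    h_psi = [sum(H[i][j] * psi[j] for j in range(2)) for i in range(2)]
    return sum(psi[i] * h_psi[i] for i in range(2))


def parameter_shift_grad(theta: float) -> float:
    """Exact gradient for a single Ry rotation via the parameter-shift rule."""
    return (energy(theta + math.pi / 2) - energy(theta - math.pi / 2)) / 2


def vqe(theta: float = 0.1, learning_rate: float = 0.2, steps: int = 200) -> tuple[float, float]:
    """Classical optimiser loop: repeatedly step theta down the energy gradient."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_grad(theta)
    return theta, energy(theta)


theta_opt, e_min = vqe()
exact = -math.sqrt(1.0 ** 2 + 0.5 ** 2)  # lowest eigenvalue of H
print(f"VQE estimate {e_min:.6f}, exact {exact:.6f}")
```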

Other Features

The researchers also parse QASM, a human-readable quantum circuit description language, which simplifies translating algorithms into hardware-executable instructions and manipulating quantum circuits. The project recognises that errors are common in quantum computing and invests in scalable error mitigation measures to improve accuracy and reliability. The methodology also accounts for cloud deployment costs, based on Google Cloud compute instance prices, to maximise cost-effectiveness, and these costs inform design decisions.
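The study's own parser is not shown, so this is a deliberately small sketch of what parsing a QASM program into structured instructions can look like. It assumes an OpenQASM 2.0 subset: `qreg` declarations, gates with optional numeric parameters, and `measure`. Symbolic expressions such as `pi/2`, custom `gate` definitions, and classical control are not handled. The `Instruction` type and `parse_qasm` function are hypothetical, not part of Q-AIM.

```python
import re
from dataclasses import dataclass

# A statement such as "rz(0.5) q[1]" or "cx q[0],q[1]".
GATE_RE = re.compile(r"^(\w+)(?:\(([^)]*)\))?\s+(.+)$")
# A register reference such as "q[0]".
QARG_RE = re.compile(r"(\w+)\[(\d+)\]")
# Header and classical-only statements this sketch skips.
SKIPPED = ("OPENQASM", "include", "creg", "barrier")


@dataclass(frozen=True)
class Instruction:
    name: str
    params: tuple[float, ...]
    qubits: tuple[tuple[str, int], ...]


def parse_qasm(source: str) -> tuple[dict[str, int], list[Instruction]]:
    """Parse a small OpenQASM 2.0 subset into register sizes and instructions."""
    # Strip // comments line by line, then split the program on semicolons.
    code = "\n".join(line.split("//", 1)[0] for line in source.splitlines())
    registers: dict[str, int] = {}
    program: list[Instruction] = []
    for stmt in (s.strip() for s in code.split(";")):
        if not stmt or stmt.startswith(SKIPPED):
            continue
        if stmt.startswith("qreg"):
            name, size = QARG_RE.search(stmt).groups()
            registers[name] = int(size)
            continue
        match = GATE_RE.match(stmt)
        if match is None:
            raise ValueError(f"unsupported statement: {stmt!r}")
        name, params, args = match.groups()
        if name == "measure":
            args = args.split("->")[0]  # keep the quantum operand only
        qubits = tuple((reg, int(idx)) for reg, idx in QARG_RE.findall(args))
        values = tuple(float(p) for p in params.split(",")) if params else ()
        program.append(Instruction(name, values, qubits))
    return registers, program


SOURCE = """OPENQASM 2.0;
include "qelib1.inc";
qreg q[2];
creg c[2];
h q[0];            // put qubit 0 into superposition
cx q[0],q[1];
rz(0.5) q[1];
measure q[0] -> c[0];
"""

registers, program = parse_qasm(SOURCE)
for instruction in program:
    print(instruction)
```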

Q-AIM helps research teams and universities buy, run, and scale quantum computing resources, accelerating progress. Future research should improve resource allocation, job scheduling, and framework interoperability with more quantum hardware.

To conclude

The sources focus mainly on quantum computing, and in particular on Q-AIM (Quantum Access Infrastructure Management), an open-source software framework for managing and accessing quantum hardware. Q-AIM uses a dockerized micro-service architecture for scalable, portable deployment that reduces costs and complexity for researchers.

Quantum algorithms such as the Variational Quantum Eigensolver (VQE) are highlighted, but the sources also address quantum machine learning, the quantum internet, and other topics. According to the study, a unified and adaptable software architecture is needed to make full use of quantum technology.

#QAIM#quantumcomputing#quantumhardware#Kubernetes#GoogleCloud#quantumcircuits#VariationalQuantumEigensolver#machinelearning#News#Technews#Technology#TechnologyNews#Technologytrends#Govindhtech

0 notes

How Data Science Courses Are Evolving in 2025

Data science is moving rapidly across the globe: new technologies, trends, and tools are changing how professionals learn and how companies leverage data, analytics, and data science capabilities. With that change, education itself, particularly formal programs like a data science course in Kerala or elsewhere, is changing fast.

Here's an overview of how data science education is changing in 2025, and what learners can expect from contemporary training programs.

1. Industry-Focused Curriculum Is Now the Norm

Earlier data science courses covered mostly theory and academic principles. Today, most programs are tied to actual industry requirements. A data science course, for instance, now covers practical tools such as Git, Docker, SQL optimization, cloud deployment, and production-level machine learning, not just Python fundamentals.
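As one small example of the SQL optimization skills these courses teach, the sketch below uses Python's built-in `sqlite3` module to compare a query plan before and after adding an index. The table, column, and index names are made up for illustration, and the exact plan wording varies between SQLite versions.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> list[str]:
    """Return the human-readable detail column of SQLite's EXPLAIN QUERY PLAN."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[3] for row in rows]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 500, i * 1.5) for i in range(10_000)],  # synthetic rows
)

sql = "SELECT total FROM orders WHERE customer_id = ?"
before = query_plan(conn, sql, (42,))  # expected: a full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = query_plan(conn, sql, (42,))   # expected: a search using the new index

print(before)
print(after)
```

Reading plans like this, rather than guessing, is the habit that separates production-ready SQL from notebook SQL.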

By 2025, this pattern has become even stronger. Courses are developed based on actual feedback from tech firms and recruiters.

2. Generative AI Becomes Central, Not Optional

With products such as ChatGPT, Midjourney, and Claude revolutionizing business processes, generative AI has moved from an "optional module" to a central part of any serious data science education.

Most top-tier data science courses, in Kerala or anywhere else, now include modules on prompt engineering, language model fine-tuning, and incorporating AI into existing data pipelines.

3. Shorter, Targeted Learning Paths

The classic year-long diploma format is giving way to modular certifications. Students can now follow specialized pathways in subjects like:

• Natural Language Processing

• Time Series Forecasting

• Computer Vision

• Data Engineering for Cloud

• Responsible AI and Ethics

Instead of one-size-fits-all programs, a data science program in Kerala could now be a collection of micro-certifications, tailored to your career trajectory.

4. Increased Focus on Real-World Datasets

Earlier, courses were based on neat, academic datasets such as Titanic survival or Iris flowers. Today, students learn on actual data from social media, e-commerce, medicine, and even government portals that are open to the public.

Today's data science courses include wrangling unstructured, inconsistent, large-scale data, just as professionals do on live projects.
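A toy version of that wrangling work in plain Python: the records below are invented, but they show the usual chores of trimming whitespace, normalizing inconsistent labels, parsing currency strings, dropping duplicates, and marking unusable values as missing. At real-world scale, libraries such as pandas handle the same steps.

```python
import re
from typing import Optional

# Invented order records with typical real-world inconsistencies.
RAW_ORDERS = [
    {"order_id": " A-001 ", "city": "kochi", "amount": "₹1,250.00"},
    {"order_id": "A-002", "city": "Kochi ", "amount": "980"},
    {"order_id": "A-001", "city": "KOCHI", "amount": "₹1,250.00"},  # duplicate order
    {"order_id": "A-003", "city": "", "amount": "n/a"},
]


def parse_amount(text: str) -> Optional[float]:
    """Keep digits and the decimal point; return None when nothing numeric is left."""
    cleaned = re.sub(r"[^\d.]", "", text)
    return float(cleaned) if cleaned else None


def clean_orders(records: list[dict]) -> list[dict]:
    """Trim, normalize, and de-duplicate order records by order_id."""
    seen: set[str] = set()
    cleaned = []
    for record in records:
        order_id = record["order_id"].strip()
        if order_id in seen:
            continue  # keep the first occurrence only
        seen.add(order_id)
        cleaned.append({
            "order_id": order_id,
            "city": record["city"].strip().title() or None,  # empty string -> missing
            "amount": parse_amount(record["amount"]),
        })
    return cleaned


for row in clean_orders(RAW_ORDERS):
    print(row)
```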

5. AI-Boosted Learning

AI tutors, coding courses, and instant feedback tools are transforming the learning process. Students no longer have to wait for feedback from human instructors; they can try, debug, and perfect their work in real time using AI copilots.

If you're pursuing a data science course today, there's a good chance your learning platform features intelligent quizzes, project feedback created by AI, and peer-matching algorithms.

6. Cross-Disciplinary Integration

2025 is witnessing the convergence of data science with domains such as:

• Product Management

• Behavioral Psychology

• Design Thinking

• Sustainability Analytics

This means cutting-edge data science training is not merely about model building but also about understanding people, systems, and far-reaching consequences. Whether it is a program in Berlin or a data science course elsewhere, students are now encouraged to think beyond code.

7. Career Support Is Built Into the Program

Placement support used to be an add-on. Now, career guidance, resume enhancement, and mock interviews are baked into most courses. Some even offer job guarantees or income-sharing agreements.

In fact, opting for a data science course today could come with access to career fairs, alumni mentorship, and project-based hiring platforms.

8. Responsible AI and Ethics Take Center Stage

As the societal effect of AI becomes apparent, courses now emphasize careful use of data, mitigation of bias, and transparency. These are no longer philosophy classes—they're technical courses.

Topics such as model explainability (SHAP, LIME), ethical data sourcing, and GDPR compliance are now part of most professional data science courses, wherever in the world they are taught.
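SHAP builds on Shapley values from game theory. The dependency-free sketch below computes exact Shapley values for a tiny model by enumerating every feature coalition, with "absent" features replaced by a baseline value. It is a simplified stand-in for what the SHAP library approximates efficiently, not its API; the linear model and numbers are illustrative assumptions, and because the cost grows as 2^n it only suits a handful of features.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(model: Callable[[Sequence[float]], float],
                   x: Sequence[float],
                   baseline: Sequence[float]) -> list[float]:
    """Exact Shapley values; features outside a coalition take their baseline value."""
    n = len(x)

    def value(coalition: set[int]) -> float:
        # Model output when only the features in `coalition` take their real values.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S not containing i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


def model(z: Sequence[float]) -> float:
    """Illustrative linear scoring model."""
    return 2 * z[0] - z[1] + 3 * z[2] + 1


x, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
phis = shapley_values(model, x, baseline)
print([round(p, 6) for p in phis])  # [2.0, -2.0, 9.0]
```

For a linear model each value reduces to weight × (feature − baseline), and the values always sum to model(x) − model(baseline), the "efficiency" property that makes Shapley-based explanations add up.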

9. Project-Based Portfolios Replace Exams

Grades are becoming less relevant. Employers now care about what you’ve built. Courses now emphasize capstone projects that simulate business cases—like predicting churn, automating support systems, or forecasting demand.

Your GitHub or portfolio page matters more than your certificate. That’s why today’s data science course will often end with a demo day or project showcase.

10. Continuous Learning Is the New Normal

Last but not least, even after you finish a program, you are expected to keep learning. Most institutions give students lifetime access to module updates, alumni forums, and refresher bootcamps, which supports long-term development. So finishing a data science program in Kerala does not mean you are done: you become part of a learning community, and that community extends beyond the institution.

Conclusion

Data science education in 2025 is smarter, more personalized, and always on. Learning has evolved to meet the demands of an AI- and data-driven world. Whether you are in Kerala or learning online, you are working towards becoming a data scientist in an industry that expects you to keep up with what is current.

0 notes

5 Practical AI Agents That Deliver Enterprise Value

The AI landscape is buzzing, and while Generative AI models like GPT have captured headlines with their ability to create text and images, a new and arguably more transformative wave is gathering momentum: AI Agents. These aren't just sophisticated chatbots; they are autonomous entities designed to understand complex goals, plan multi-step actions, interact with various tools and systems, and execute tasks to achieve those goals with minimal human oversight.

AI Agents are moving beyond theoretical concepts to deliver tangible enterprise value across diverse industries. They represent a significant leap in automation, moving from simply generating information to actively pursuing and accomplishing real-world objectives. Here are 5 practical types of AI Agents that are already making a difference or are poised to in 2025 and beyond:

1. Autonomous Customer Service & Support Agents

Beyond the Basic Chatbot: While traditional chatbots follow predefined scripts to answer FAQs, Agentic AI takes customer service to an entirely new level.

How they work: Given a customer's query, these agents can autonomously diagnose the problem, access customer databases (CRM, order history), consult extensive knowledge bases, initiate refunds, reschedule appointments, troubleshoot technical issues by interacting with IT systems, and even proactively escalate to a human agent with a comprehensive summary if the issue is too complex.
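The diagnose, act, and escalate cycle can be sketched as a loop that asks a planner for the next tool call, runs it, and records the observation. In a real agent the planner would be a language model choosing among tools; here a few hard-coded rules stand in for it, and the order data, tool names, and refund limit are all hypothetical.

```python
from typing import Any, Callable, Optional

# Hypothetical CRM records the agent can query.
ORDERS = {"A-100": {"status": "delivered", "amount": 49.99, "damaged": True}}
REFUND_LIMIT = 100.0  # hypothetical auto-approval threshold


def lookup_order(order_id: str) -> Optional[dict]:
    return ORDERS.get(order_id)


def issue_refund(order_id: str, amount: float) -> str:
    return f"refund of {amount:.2f} issued for {order_id}"


def escalate(summary: str) -> str:
    return f"escalated to a human agent: {summary}"


TOOLS: dict[str, Callable[..., Any]] = {
    "lookup_order": lookup_order,
    "issue_refund": issue_refund,
    "escalate": escalate,
}


def plan_next_step(goal: dict, history: list) -> Optional[tuple[str, dict]]:
    """Rule-based stand-in for an LLM planner; returns None when the goal is handled."""
    if not history:
        return "lookup_order", {"order_id": goal["order_id"]}
    last_tool, _, observation = history[-1]
    if last_tool in ("issue_refund", "escalate"):
        return None  # resolved or handed off
    order = observation
    if (order and goal["intent"] == "refund" and order["damaged"]
            and order["amount"] <= REFUND_LIMIT):
        return "issue_refund", {"order_id": goal["order_id"], "amount": order["amount"]}
    # Anything the rules cannot resolve goes to a human, with context attached.
    summary = f"{goal['intent']} request for {goal['order_id']}; record: {order}"
    return "escalate", {"summary": summary}


def run_agent(goal: dict, max_steps: int = 5) -> list:
    """Plan, act, and observe until the planner stops or the step budget runs out."""
    history = []
    for _ in range(max_steps):
        step = plan_next_step(goal, history)
        if step is None:
            break
        tool, args = step
        observation = TOOLS[tool](**args)
        history.append((tool, args, observation))
    return history


for goal in ({"intent": "refund", "order_id": "A-100"},
             {"intent": "refund", "order_id": "Z-999"}):
    print(run_agent(goal)[-1][2])
```

The `max_steps` budget is the kind of guardrail the governance concerns raised later in this post call for: an agent that cannot decide should stop and hand off rather than loop.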

Enterprise Value: Dramatically reduces the workload on human support teams, significantly improves first-contact resolution rates, provides 24/7 support for complex inquiries, and ultimately enhances customer satisfaction through faster, more accurate service.

2. Automated Software Development & Testing Agents

The Future of Engineering Workflows: Imagine an AI that can not only write code but also comprehend requirements, rigorously test its own creations, and even debug and refactor.

How they work: Given a high-level feature request ("add a new user login flow with multi-factor authentication"), the agent breaks it down into granular sub-tasks (design database schema, write front-end code, implement authentication logic, write unit tests, integrate with existing APIs). It then leverages code interpreters, interacts with version control systems (e.g., Git), and testing frameworks to iteratively build, test, and refine the code until the feature is complete and verified.
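The decomposition step amounts to building a dependency graph of sub-tasks and working through it in order. The sketch below uses Python's standard-library `graphlib` (3.9+); the dependencies are an illustrative guess at how the login-with-MFA example might break down, not a prescribed plan. Each "wave" lists tasks whose prerequisites are complete, so an agent could work on them in parallel.

```python
from graphlib import TopologicalSorter

# Illustrative decomposition of "add a new user login flow with MFA".
# Each sub-task maps to the set of sub-tasks it depends on.
SUBTASKS = {
    "design database schema": set(),
    "implement authentication logic": {"design database schema"},
    "write front-end code": {"implement authentication logic"},
    "integrate with existing APIs": {"implement authentication logic"},
    "write unit tests": {"implement authentication logic", "write front-end code"},
}


def execution_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group sub-tasks into waves; every task in a wave has its prerequisites done."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the plan has circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # a real agent would mark tasks done only after tests pass
    return waves


for number, wave in enumerate(execution_waves(SUBTASKS), start=1):
    print(number, wave)
```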

Enterprise Value: Accelerates development cycles by automating repetitive coding tasks, reduces bugs through proactive testing, and frees up human developers for higher-level architectural design, creative problem-solving, and complex integrations.

3. Intelligent Financial Trading & Risk Management Agents

Real-time Precision in Volatile Markets: In the fast-paced and high-stakes world of finance, AI agents can act with unprecedented speed, precision, and analytical depth.

How they work: These agents continuously monitor real-time market data, analyze news feeds for sentiment (e.g., identifying early signs of market shifts from global events), detect complex patterns indicative of fraud or anomalies in transactions, and execute trades based on sophisticated algorithms and pre-defined risk parameters. They can dynamically adjust strategies based on market shifts or regulatory changes, integrating with trading platforms and compliance systems.
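As a much-simplified illustration of the anomaly-detection piece, the sketch below flags transactions whose amount sits more than a threshold number of standard deviations from a trailing window. Production fraud systems use far richer features and models; the window size, threshold, and amounts here are hypothetical.

```python
from statistics import mean, stdev


def flag_anomalies(amounts: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indices whose z-score against the trailing window exceeds threshold."""
    flagged = []
    for i in range(window, len(amounts)):
        history = amounts[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(amounts[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical card transactions for one account.
transactions = [100, 102, 98, 101, 99, 100, 103, 2500, 101, 97]
print(flag_anomalies(transactions))  # [7]
```

Note that the outlier also inflates the spread of the next few windows, which is one reason robust statistics such as the median absolute deviation are often preferred.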

Enterprise Value: Helps optimize trading strategies, significantly enhances fraud detection capabilities, can identify emerging market risks faster than human analysts, and provides a continuous monitoring layer that supports compliance and protects assets.

4. Dynamic Supply Chain Optimization & Resilience Agents

Navigating Global Complexity with Autonomy: Modern global supply chains are incredibly complex and vulnerable to unforeseen disruptions. AI agents offer a powerful proactive solution.

How they work: An agent continuously monitors global events (e.g., weather patterns, geopolitical tensions, port congestion, supplier issues), analyzes real-time inventory levels and demand forecasts, and dynamically re-routes shipments, identifies and qualifies alternative suppliers, or adjusts production schedules in real-time to mitigate disruptions. They integrate seamlessly with ERP systems, logistics platforms, and external data feeds.
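Re-routing around a disruption can be framed as a shortest-path problem in which disrupted nodes are removed from the network. The sketch below uses Dijkstra's algorithm with Python's `heapq`; the ports, transit times in days, and the "Singapore is congested" scenario are invented for illustration.

```python
import heapq
from typing import Collection, Optional

# Hypothetical lanes: transit time in days between nodes.
ROUTES: dict[str, dict[str, float]] = {
    "Shanghai": {"Singapore": 7, "Colombo": 10},
    "Singapore": {"Chennai": 4},
    "Colombo": {"Chennai": 2},
    "Chennai": {"Warehouse": 1},
}


def shortest_route(graph: dict[str, dict[str, float]], start: str, goal: str,
                   blocked: Collection[str] = frozenset()) -> Optional[tuple[float, list[str]]]:
    """Dijkstra's algorithm that skips any node listed as disrupted."""
    queue = [(0.0, start, [start])]
    settled = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in settled:
            continue
        settled.add(node)
        for neighbor, days in graph.get(node, {}).items():
            if neighbor not in settled and neighbor not in blocked:
                heapq.heappush(queue, (cost + days, neighbor, path + [neighbor]))
    return None  # goal unreachable under the current disruptions


print(shortest_route(ROUTES, "Shanghai", "Warehouse"))
# (12.0, ['Shanghai', 'Singapore', 'Chennai', 'Warehouse'])
print(shortest_route(ROUTES, "Shanghai", "Warehouse", blocked={"Singapore"}))
# (13.0, ['Shanghai', 'Colombo', 'Chennai', 'Warehouse'])
```

An agent would rerun this whenever its event feeds mark a port as congested, then push the new route to the logistics platform.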

Enterprise Value: Builds unparalleled supply chain resilience, drastically reduces operational costs due to delays and inefficiencies, minimizes stockouts and overstock, and ensures continuous availability of goods even in turbulent environments.

5. Personalized Marketing & Sales Agents

Hyper-Targeted Engagement at Scale: Moving beyond automated emails to truly intelligent, adaptive customer interaction is crucial for modern sales and marketing.

How they work: These agents research potential leads by crawling public data, analyze customer behavior across multiple channels (website interactions, social media engagement, past purchases), generate highly personalized outreach messages (emails, ad copy, chatbot interactions) using integrated generative AI models, manage entire campaign execution, track real-time engagement, and even schedule follow-up actions like booking a demo or sending a tailored proposal. They integrate with CRM and marketing automation platforms.

Enterprise Value: Dramatically improves lead conversion rates, fosters deeper customer engagement through hyper-personalization, optimizes marketing spend by targeting the most promising segments, and frees up sales teams to focus on high-value, complex relationship-building.

The Agentic Future is Here

The transition from AI that simply "generates" information to AI that "acts" autonomously marks a profound shift in enterprise automation and intelligence. While careful consideration for aspects like trust, governance, and reliable integration is essential, these practical examples demonstrate that AI Agents are no longer just a theoretical concept. They are powerful tools ready to deliver tangible business value, automate complex workflows, and redefine efficiency across every facet of the modern enterprise. Embracing Agentic AI is key to unlocking the next level of business transformation and competitive advantage.

0 notes

Top Career Paths After Completing a Machine Learning Course in Chennai

Chennai, long known as a hub for education and innovation, is rapidly emerging as a significant center for artificial intelligence and machine learning in India. As industries become increasingly data-driven, the demand for machine learning professionals is skyrocketing across sectors. If you've recently completed or are planning to enroll in a Machine Learning Course in Chennai, you're on the right track to a future-ready career.

But what lies ahead after completing your course? In this comprehensive guide, we explore the top career paths available to machine learning aspirants, how the Chennai ecosystem supports your growth, and what skills will set you apart in the job market.

Why Choose Chennai for Machine Learning Training?

Before we dive into careers, let’s understand why Chennai is an ideal location for learning machine learning:

Thriving IT and analytics ecosystem with companies like TCS, Infosys, Accenture, and Cognizant having major operations in the city.

Growing startup culture in healthcare, fintech, and edtech sectors—many of which use AI/ML at their core.

Affordable cost of education and living, making it a great option for students and working professionals.

Presence of top institutes like the Boston Institute of Analytics, offering hands-on, classroom-based ML training.

By completing a Machine Learning Course in Chennai, you position yourself at the crossroads of opportunity and expertise.

Top Career Paths After a Machine Learning Course in Chennai

Let’s explore the most promising and in-demand roles you can pursue post-certification:

1. Machine Learning Engineer

What You Do: Design, develop, and implement ML models to automate processes and create predictive systems.

Skills Required:

Python, R, and SQL

Scikit-learn, TensorFlow, Keras

Data preprocessing and model optimization

Why It’s in Demand: Chennai’s major IT companies and emerging startups are deploying ML solutions for fraud detection, recommendation engines, and intelligent automation.

2. Data Scientist

What You Do: Extract insights from large datasets using statistical techniques, visualization tools, and machine learning algorithms.

Skills Required:

Data mining and data wrangling

Machine learning, deep learning

Tools like Tableau, Power BI, and Jupyter

Career Outlook: With companies becoming more data-driven, data scientists are needed in sectors like banking, logistics, retail, and healthcare—all of which are thriving in Chennai.

3. AI/ML Software Developer

What You Do: Integrate ML models into software products and applications, often working alongside developers and data scientists.

Skills Required:

Strong programming knowledge (Java, C++, Python)

APIs and frameworks for model deployment

Version control systems like Git

Industry Demand: Product-based companies and SaaS startups in Chennai actively recruit developers with ML expertise for AI-powered application development.

4. Business Intelligence (BI) Analyst with ML Skills

What You Do: Leverage ML to enhance traditional BI tools, providing advanced analytics and trend forecasting for business decisions.

Skills Required:

SQL, Excel, Power BI

Predictive modeling and data visualization

Understanding of KPIs and business metrics

Growth Trend: Enterprises in Chennai are modernizing BI with AI, requiring professionals who can bridge data science and business strategy.

5. NLP Engineer (Natural Language Processing)

What You Do: Work on systems that interpret and generate human language, including chatbots, voice assistants, and language translators.

Skills Required:

NLP libraries: NLTK, spaCy, Hugging Face

Text classification, sentiment analysis

Understanding of linguistics and machine learning

Why It’s Booming: With rising demand in customer support automation and regional language tech in Tamil Nadu, NLP engineers are highly sought after.

6. Computer Vision Engineer

What You Do: Develop systems that understand and process visual data from the real world—such as image recognition and video analysis.

Skills Required:

OpenCV, YOLO, CNNs

Deep learning for image/video processing

Experience with hardware integration (optional)

Opportunities in Chennai: Chennai’s automotive and manufacturing sectors are adopting computer vision for quality control, autonomous systems, and surveillance.

7. Data Analyst with ML Capabilities

What You Do: Use ML to enhance traditional data analysis tasks like forecasting, trend detection, and anomaly identification.

Skills Required:

Descriptive and inferential statistics

Basic ML algorithms (regression, classification)

Excel, SQL, Python

Good for Entry-Level: Many companies prefer hiring analysts with ML knowledge as they bring added value through automation and predictive insight.

8. AI Product Manager

What You Do: Lead cross-functional teams in building AI-driven products. Translate business problems into machine learning solutions.

Skills Required:

Project management

Understanding of AI/ML fundamentals

Communication, budgeting, and stakeholder management

Career Scope in Chennai: AI product managers are becoming essential in software companies and SaaS firms that are integrating AI into their product roadmap.

9. ML Ops Engineer (Machine Learning Operations)

What You Do: Focus on the deployment, monitoring, and lifecycle management of ML models in production environments.

Skills Required:

Docker, Kubernetes

CI/CD pipelines

Cloud platforms (AWS, Azure, GCP)

Why It’s Growing: As more companies move ML models from research to production, ML Ops roles are in high demand to ensure scalability and reliability.

10. Freelancer or Consultant in Machine Learning

What You Do: Offer your services on a project basis—building ML models, analyzing data, or mentoring startups.

Skills Required:

Strong portfolio and certifications

Client management and project scoping

Versatile tech stack knowledge

Freelance Scope in Chennai: The city’s growing startup scene often looks for project-based consultants and remote ML experts for early-stage product development.

Industry Sectors Hiring Machine Learning Professionals in Chennai

Here are the industries where machine learning professionals are most in demand:

Information Technology (IT) & Services

Healthcare & Life Sciences

Banking, Financial Services, and Insurance (BFSI)

Retail & E-commerce

Manufacturing & Automotive

Edtech & Online Learning Platforms

How Can the Boston Institute of Analytics Help?

If you're looking to fast-track your career in machine learning, the Boston Institute of Analytics (BIA) offers one of the most robust Machine Learning Courses in Chennai, with features such as:

Instructor-led classroom training by industry professionals

Hands-on projects using real-world datasets

Placement support and career mentoring

Certification recognized globally

Whether you're a student or a working professional, BIA's practical curriculum ensures you're job-ready from day one.

Final Thoughts

The decision to pursue a Machine Learning Course in Chennai can be a game-changer for your career. As industries continue to adopt AI and data-driven strategies, professionals with ML expertise are becoming indispensable. From engineering and data science roles to product leadership and consulting, the career paths are varied, lucrative, and future-proof.

By choosing the right course and upskilling consistently, you can unlock a world of opportunities right in the heart of Chennai’s booming tech ecosystem. Now is the time to invest in your future — and machine learning is the way forward.

#Best Data Science Courses in Chennai#Artificial Intelligence Course in Chennai#Data Scientist Course in Chennai#Machine Learning Course in Chennai


A Career Guide to AI and Machine Learning Engineering

Artificial Intelligence (AI) and Machine Learning (ML) engineering are among the most dynamic and sought-after fields in technology today. These roles are central to developing intelligent systems that drive innovation across industries such as healthcare, finance, e-commerce, and more. Here’s a comprehensive guide to building a career in AI and Machine Learning Engineering in 2025.

What Do AI and Machine Learning Engineers Do?

AI Engineers develop, program, and train complex networks of algorithms to perform tasks that normally require human intelligence. Their work involves building and testing machine learning models, integrating them into applications, and deploying AI solutions.

Machine Learning Engineers focus on designing, implementing, and deploying machine learning algorithms and models. They collaborate with data scientists, software engineers, and domain experts to build robust ML solutions for real-world problems.

Key Steps to Start Your Career

1. Educational Foundation

Most AI/ML engineers start with a bachelor’s degree in computer science, engineering, mathematics, or a related field. Advanced roles may require a master’s or Ph.D. in AI, machine learning, or data science.

However, many companies now value demonstrable skills and a strong portfolio over formal degrees, especially for entry-level positions.

2. Core Skills Development

Mathematics & Statistics: Proficiency in linear algebra, calculus, probability, and statistics is essential to understand ML algorithms.

Programming: Master languages like Python and R, and become familiar with libraries such as TensorFlow, PyTorch, and scikit-learn for building models.

Software Engineering: Learn system design, APIs, version control (e.g., Git), and cloud computing to deploy scalable solutions.

Data Handling: Skills in data preprocessing, cleaning, and feature engineering are crucial for building effective models.

3. Practical Experience

Gain hands-on experience through internships, research projects, or personal projects. Participate in competitions (like Kaggle) and contribute to open-source initiatives to build a strong portfolio.

Build and experiment with models in areas such as computer vision, natural language processing (NLP), and generative AI.

4. Specialized Learning

Consider advanced courses or certifications in deep learning, NLP, reinforcement learning, or cloud-based AI deployment.

Stay updated with the latest tools and frameworks used in industry, such as TensorFlow, PyTorch, and cloud platforms.

5. Career Progression

Start with roles like Data Scientist, Software Engineer, or Research Assistant to gain exposure to ML methodologies.

Progress to dedicated Machine Learning Engineer or AI Engineer roles as you gain expertise.

Further advancement can lead to positions such as AI Research Scientist, AI Product Manager, or Machine Learning Consultant.

Popular Career Paths in AI and ML

| Role | Key Responsibilities | Typical Employers |
|---|---|---|
| Machine Learning Engineer | Build and deploy ML models, optimize algorithms | Tech firms, startups, research labs |
| AI Engineer | Develop AI-powered applications and integrate ML solutions | Enterprises, consulting firms |
| Data Scientist | Analyze data, develop predictive models | Finance, healthcare, e-commerce |
| AI Research Scientist | Advance AI/ML theory, publish research | Academia, research organizations |
| NLP Engineer | Work on language models, chatbots, and translation systems | Tech companies, AI startups |
| AI Product Manager | Define product vision, manage AI projects | Tech companies, SaaS providers |
| Machine Learning Consultant | Advise on ML adoption, project scoping, optimization | Consulting firms, enterprises |
| AI Ethics & Policy Analyst | Address ethical, legal, and policy issues in AI deployment | Government, NGOs, corporations |

Skills That Employers Seek

Advanced programming (Python, R, Java)

Deep learning frameworks (TensorFlow, PyTorch)

Data engineering and cloud computing

Strong analytical and problem-solving abilities

Communication and teamwork for cross-functional collaboration

Salary and Job Outlook

Machine learning engineers and AI engineers command high salaries: reported averages exceed $160,000 in the US and £65,000 in the UK, though actual pay varies with experience and location.

The demand for AI/ML professionals is expected to grow rapidly, with opportunities for career advancement and specialization in niche areas such as computer vision, NLP, and AI ethics.

How to Stand Out

Build a diverse portfolio showcasing real-world projects and open-source contributions.

Pursue certifications and advanced training from recognized platforms and institutions.

Network with professionals, attend conferences, and stay updated with industry trends.

Conclusion

For students at Arya College of Engineering & I.T. and elsewhere, a career in AI and Machine Learning Engineering offers exciting opportunities, significant impact, and strong job security. By building a solid foundation in mathematics, programming, and software engineering, gaining hands-on experience, and continuously upskilling, you can thrive in this rapidly evolving field and contribute to the future of technology.


#best btech college in jaipur#top engineering college in jaipur#best engineering college in rajasthan#best engineering college in jaipur#best private engineering college in jaipur




AI Engineer vs. Software Engineer: Career Comparison

When it comes to choosing between a career as an AI Engineer or a Software Engineer, it's important to understand the nuances of each role. While both involve working with technology and solving problems, their skill sets, day-to-day tasks, and career paths differ significantly.

AI Engineer: An AI Engineer focuses on creating systems and algorithms that allow machines to perform tasks that typically require human intelligence. This can include natural language processing (NLP), computer vision, machine learning, deep learning, and robotics. AI Engineers build systems that "learn" from data and improve over time, using statistical models, neural networks, and other advanced techniques.

The typical tasks of an AI Engineer involve researching new AI models, selecting the right data for training, writing machine learning algorithms, and fine-tuning models for better performance. AI Engineers usually work with large datasets, applying mathematical and statistical knowledge to build robust AI solutions. As AI technologies evolve rapidly, professionals in this field need to stay updated with cutting-edge research and trends in AI and data science.

Software Engineer: Software Engineers, on the other hand, are responsible for designing, developing, and maintaining software applications that run on various devices or platforms. These range from web applications and mobile apps to desktop software and enterprise-level systems. The primary focus of a software engineer is to write clean, efficient, and scalable code. They typically work with programming languages such as Java, Python, C++, or JavaScript, and are skilled in problem-solving and system design.

Software Engineers work with different development methodologies, including agile, waterfall, or DevOps, and ensure that applications are functional, user-friendly, and meet the needs of their users. Their responsibilities also include debugging, testing, and optimizing software to ensure it is free of errors and operates smoothly. Unlike AI Engineers, they do not typically need expertise in machine learning or deep learning, though familiarity with such concepts may be beneficial in certain roles.

Key Differences:

Skill Set: AI Engineers require expertise in machine learning, deep learning, data science, and often advanced mathematics. Software Engineers need strong coding skills, understanding of algorithms, and system design.

Focus: AI Engineers create intelligent systems that mimic human behavior, while Software Engineers focus on creating functional software applications.

Tools and Technologies: AI Engineers work with frameworks like TensorFlow or PyTorch, while Software Engineers work with development tools like Git, IDEs, and frameworks like React or Django.

Career Outlook: Both fields are in demand, but AI is rapidly growing with the expansion of automation and data analytics. Software engineering remains a broad and stable field, offering opportunities in virtually every industry. Depending on your interests—whether in artificial intelligence or broad software development—both career paths offer promising futures.

Ultimately, the choice between AI Engineering and Software Engineering depends on your passion for working with intelligent systems versus building software solutions for various industries.


#scifi#cyberpunk#ghost in the shell#gits#motoko kusanagi#the human algorithm#gits the human algorithm#gits manga#manga#manga art#the major#masamune shirow#junichi fujisaku#yuuki yoshimoto#cyberspace


What Makes a Rice Sorting Machine Manufacturer Stand Out in the Industry?

In the modern rice processing industry, ensuring top-quality output is essential for gaining customer loyalty and maximizing profitability. Rice sorting technology has become indispensable, enabling processors to efficiently classify rice based on attributes like color, size, and shape. These machines are equipped to eliminate foreign contaminants and defective grains, ensuring only high-quality rice reaches the market.

The demand for automated rice grading systems has increased, as traditional methods of manual sorting can no longer keep pace with the need for efficiency and precision. Color sorting machines now provide a fast, precise, and cost-effective solution to meet the growing standards in rice processing.

The Importance of Automated Rice Grading Solutions

A mechanized rice grading system plays a crucial role in enhancing the quality of processed rice. These systems are designed to sort rice based on size, shape, and color, significantly improving consistency compared to manual methods. Automated rice sorting machines use advanced technology like optical sensors to detect defective grains, remove impurities, and ensure that only the best rice is packaged for distribution.

These systems provide rice processors with a solution that combines speed, accuracy, and automation, making them more efficient than ever before. By using high-resolution cameras and AI, rice sorters can accurately differentiate between grains, eliminating unwanted material from the final product.

How Do Automated Rice Sorters Work?

Rice color sorting machines are designed to sort grains based on visual attributes, such as color, size, and shape. Optical sensors embedded in these machines scan each rice grain, identifying any that are off-color or defective. Air jets then remove these unwanted grains, ensuring that only premium rice is left for final packing.

Machines like rice sorters with color sensors use advanced algorithms to ensure the rice meets specific quality standards. These systems offer unmatched precision and speed, processing large quantities of rice with minimal errors.
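The scan-and-eject loop described above can be sketched in a few lines of Python. This is a deliberately simplified, hypothetical model, not how any particular manufacturer's machine works: each grain is reduced to one average RGB colour, and a grain is rejected when its distance from a reference colour exceeds a tolerance. The names `Grain` and `sort_grains`, the colour values, and the tolerance are illustrative assumptions; real sorters also check size and shape and use calibrated cameras and their own algorithms.

```python
from dataclasses import dataclass
from math import dist


@dataclass
class Grain:
    grain_id: int
    # Average colour reported by a (hypothetical) optical sensor, 0-255 per channel
    rgb: tuple[float, float, float]


def sort_grains(grains, reference_rgb, tolerance):
    """Split grains into (accepted, ejected) by colour distance from a reference grain."""
    accepted, ejected = [], []
    for grain in grains:
        # Euclidean distance in RGB space between this grain and the target colour
        if dist(grain.rgb, reference_rgb) <= tolerance:
            accepted.append(grain)
        else:
            # In a physical sorter, this decision would fire an air-jet valve
            ejected.append(grain)
    return accepted, ejected


batch = [
    Grain(1, (235, 230, 215)),  # close to the reference colour
    Grain(2, (120, 90, 60)),    # dark, discoloured grain
    Grain(3, (228, 225, 210)),  # slightly off, but within tolerance
]
accepted, ejected = sort_grains(batch, reference_rgb=(232, 228, 212), tolerance=20)
print([g.grain_id for g in accepted])  # [1, 3]
print([g.grain_id for g in ejected])   # [2]
```

Raising the tolerance lets more borderline grains through; lowering it rejects more of them. This is the basic trade-off between yield and purity that a sorter's settings control.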

Why Should You Invest in Rice Sorting Solutions?

Increased Productivity and Speed: Unlike manual labor, automated rice sorting systems can process vast amounts of rice in record time. Models range in processing capacity, handling anywhere from 500 Kg/hr to 20,000 Kg/hr, ensuring fast and efficient operations.

Lower Operational Costs: By automating the sorting process, you reduce reliance on skilled labor, leading to cost savings in the long run. The efficiency of automated systems also reduces wastage and increases throughput.

Reliable and Consistent Quality: Rice sorting machines ensure that every batch meets the same high standards. Unlike human labor, which can be inconsistent, automated systems guarantee uniformity in sorting, which is essential for maintaining product quality.

Flexible Solutions for Different Needs: With various models available, such as Belt-type rice sorters for smaller operations and Chute-type sorters for larger-scale production, businesses can select the machine that best suits their needs.

Choosing a Reliable Rice Sorting Machine Supplier

When selecting a supplier of rice sorting equipment, it's important to choose a manufacturer with a proven track record of quality and customer service. Reputable manufacturers like GI AGRO TECHNOLOGIES PVT LTD offer high-performance machines under the GAYATHRI brand, including models like the GIT RC 10 and GIT RCI 10, known for their precision and reliability.

Make sure that your chosen supplier offers strong after-sales support, including maintenance services and easy access to spare parts, ensuring that your machine runs smoothly over its lifespan.

Conclusion

Investing in a high-performance rice sorter machine is an essential move for any rice processing business looking to stay competitive in the market. These machines offer numerous benefits, including enhanced sorting speed, improved accuracy, and cost savings. Whether you need a grading system for rice, automated sorting solution, or advanced rice color sorter, the right technology can transform your operations.

By adopting automated rice sorting equipment, processors can ensure consistent, high-quality rice production while optimizing their processes and reducing labor costs, all contributing to higher profitability and customer satisfaction.

#ricecolorsortermachine#ricesortingandgradingmachine#ricegradingmachine#ricesortingmachinemanufacturer#ricesortermachinesupplier#ricesortex


What Tools and Technologies are Covered in a Data Science Course?

In today's data-driven world, learning Data Science has become a crucial step for professionals looking to advance their careers. Whether you are aiming to become a Data Scientist, Data Analyst, or Machine Learning Engineer, a Data Science course provides the technical foundation to thrive in these fields. For those looking to pursue a Data Science course in Pune, DataCouncil stands out as one of the top institutes offering comprehensive training in the latest tools and technologies.

But what exactly are these tools and technologies that you will encounter in a Data Science course in Pune with DataCouncil? Let’s break down the essential components that make up the foundation of any quality Data Science training.

1. Programming Languages: Python and R

Python is the most widely used programming language in Data Science due to its simplicity, vast libraries, and community support. From data manipulation to building machine learning models, Python is indispensable. Similarly, R is another powerful language used for statistical analysis and data visualization. In a Data Science course in Pune, you’ll master these languages, learning to write algorithms, handle large datasets, and more.

2. Statistical and Machine Learning Algorithms

Learning how to apply statistical methods and machine learning techniques is a central part of any Data Science course in Pune. This includes supervised and unsupervised learning, regression analysis, clustering, decision trees, and more. By gaining hands-on experience with these algorithms, students develop the ability to build predictive models and analyze complex data sets.
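Regression analysis, one of the supervised-learning techniques listed above, is easiest to see in its simplest form: ordinary least squares with a single input feature. The pure-Python sketch below is illustrative only. The function names and sample data are assumptions, not course material, and in practice you would normally reach for a library class such as scikit-learn's `LinearRegression`.

```python
def fit_simple_linear_regression(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Return (slope, intercept) of the least-squares line y = slope * x + intercept."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-deviations and sum of squared x-deviations (the 1/n factors cancel)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope: float, intercept: float, x: float) -> float:
    """Apply the fitted line to a new input."""
    return slope * x + intercept


# Hypothetical data that lies exactly on y = 2x + 1
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_simple_linear_regression(xs, ys)
print(slope, intercept)                # 2.0 1.0
print(predict(slope, intercept, 5.0))  # 11.0
```

Fitting the line on known pairs and then calling `predict` on unseen inputs is the same train-then-predict pattern that larger predictive models follow.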

3. Data Visualization Tools: Tableau and Power BI

A significant part of data science is communicating insights effectively. Data visualization tools like Tableau and Power BI allow you to create compelling graphs and dashboards that help stakeholders understand the data. During your Data Science training at DataCouncil, you will learn to utilize these tools to visually present your findings.

4. Big Data Technologies: Hadoop and Spark

As businesses collect more data than ever before, the ability to handle large-scale data sets becomes essential. Big data technologies like Hadoop and Apache Spark let you store and process massive volumes of data across clusters of machines, and Spark also supports near-real-time stream processing. A Data Science course in Pune often includes training on these platforms, equipping students with the skills to work with distributed computing systems.

5. SQL and NoSQL Databases

Data storage and retrieval are fundamental to Data Science, and most Data Science courses in Pune cover SQL (Structured Query Language), a standard for querying relational databases. You'll also encounter NoSQL databases like MongoDB, which are useful for semi-structured and unstructured data that doesn't fit neatly into tables. Understanding how to work with these databases is key to organizing and accessing data efficiently.
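A typical first SQL exercise is an aggregation query. The sketch below uses Python's built-in `sqlite3` module with an in-memory database so it runs without any server; the `orders` table, its columns, and the sample rows are hypothetical.

```python
import sqlite3


def total_sales_by_region(rows: list[tuple[str, str, float]]) -> list[tuple[str, float]]:
    """Load (order_id, region, amount) rows into an in-memory SQLite table
    and return total sales per region, ordered by region name."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE orders (order_id TEXT PRIMARY KEY, region TEXT, amount REAL)")
        # Parameterized inserts avoid quoting bugs and SQL injection
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
        cursor = conn.execute(
            "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
        )
        return cursor.fetchall()
    finally:
        conn.close()


sample = [("A1", "North", 120.0), ("A2", "South", 80.0), ("A3", "North", 30.0)]
print(total_sales_by_region(sample))  # [('North', 150.0), ('South', 80.0)]
```

The same `SELECT ... GROUP BY` statement works, with minor dialect differences, on server databases such as PostgreSQL or MySQL.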

6. Cloud Computing Platforms

With the rise of cloud-based services, learning cloud computing platforms such as AWS (Amazon Web Services) and Microsoft Azure is becoming a standard part of Data Science courses in Pune. These platforms enable you to deploy machine learning models, manage data storage, and run large-scale data analyses without needing on-premise infrastructure.

7. Git and GitHub for Version Control

As a Data Scientist, you will often work in teams where collaboration is key. Version control systems like Git and platforms like GitHub allow multiple people to work on a project simultaneously, track changes, and avoid conflicts. In a Data Science course in Pune, you’ll learn to effectively use these tools to streamline your workflow.

8. Natural Language Processing (NLP) Tools

With the explosion of textual data, learning Natural Language Processing (NLP) tools is now crucial for Data Science. This involves working with libraries like NLTK and spaCy to analyze, extract meaning from, and interact with human language data. NLP is a key part of AI applications such as chatbots, sentiment analysis, and machine translation.

9. Deep Learning Frameworks: TensorFlow and Keras

Deep learning has transformed fields such as image recognition, speech processing, and natural language processing. Tools like TensorFlow and Keras are popular frameworks used to build deep learning models. In a Data Science course in Pune, you'll gain hands-on experience working with these frameworks to build neural networks and deep learning models that can tackle complex problems.

10. Jupyter Notebooks for Experimentation

Jupyter Notebooks are a common tool used in Data Science for interactive coding, data visualization, and sharing research. They allow you to combine code, visuals, and text in one place, making it easier to document your process and share insights. During DataCouncil’s Data Science course, you'll get familiar with working in Jupyter Notebooks, one of the essential tools for any data scientist.

Why Choose DataCouncil for a Data Science Course in Pune?

DataCouncil offers one of the most comprehensive Data Science courses in Pune, covering all the essential tools and technologies mentioned above. Their course structure ensures that you not only learn the theory behind these technologies but also gain hands-on experience by working on real-world projects.

Moreover, DataCouncil provides 100% placement assistance, ensuring that you are well-equipped to start your career in Data Science right after completing your course. With industry-expert trainers, modern infrastructure, and the latest tools, DataCouncil is one of the best choices for anyone looking to upskill or transition into the field of Data Science.

Conclusion

A Data Science course in Pune offers students the opportunity to learn a variety of tools and technologies that are essential in today’s job market. From Python and R to machine learning algorithms, big data technologies, and cloud computing, the field of Data Science is vast and constantly evolving. With the expert guidance provided at DataCouncil, students can confidently step into a rewarding career in Data Science, fully equipped with the knowledge and skills needed to succeed.

Start your journey today with DataCouncil and master the tools that shape the future of Data Science!

DataCouncil offers top-notch data science classes in Pune, designed to provide comprehensive knowledge in data analysis, machine learning, and AI. If you're searching for the best data science course in Pune, look no further. Our data science course in Pune is curated to suit both beginners and professionals, offering competitive data science course fees in Pune. We also offer the best data science course in Pune with placement to help you secure a lucrative job. As the best institute for data science in Pune, we provide online data science training in Pune, making it convenient to join a Pune data science course from anywhere.

#data science classes in pune#data science course in pune#best data science course in pune#data science course in pune fees#data science course fees in pune#best data science course in pune with placement#data science course in pune with placement#best institute for data science in pune#online data science training in pune#pune data science course#data science classes pune


Best engineering college in Kottayam

The Scope of AI: A Vast Field of Possibilities for our Students

Explore the future with AI at GIT Engineering College in Kottayam, Kerala's top engineering college. Dive into AI's vast scope, from healthcare to smart cities, and prepare for an advanced career with our top-ranked AI and machine learning courses. Join the best AI engineering college in Kottayam today!

Artificial Intelligence (AI) is reshaping our world, offering ground-breaking innovations across various industries. For students eager to dive into this dynamic field, understanding the scope of AI is crucial. At GIT Engineering College in Kottayam, recognized as the best engineering college in Kottayam and one of the top engineering colleges in Kerala, we provide an excellent foundation for your journey into AI. This blog explores AI's vast landscape, its current applications, its future potential, and why you should study AI at one of the best artificial intelligence and data science colleges in Kottayam.

What is AI?

AI, or Artificial Intelligence, involves creating systems capable of performing tasks that typically require human intelligence. These tasks include learning, reasoning, problem-solving, and understanding natural language. AI encompasses various subfields such as machine learning, natural language processing, robotics, and computer vision.

Current Applications of AI

AI's impact is evident in numerous sectors, revolutionizing the way we live and work. Here are some key areas where AI is making a significant difference:

1. Healthcare: AI improves diagnostic accuracy, predicts patient outcomes, and personalizes treatment plans. AI tools can analyse medical images, assist in surgeries, and even predict disease outbreaks.

2. Finance: AI is used for fraud detection, risk management, and algorithmic trading. It analyses vast amounts of data to identify patterns and make real-time decisions, enhancing financial services.

3. Education: AI personalizes learning experiences, provides intelligent tutoring systems, and automates grading. These technologies help tailor education to individual student needs, improving learning outcomes.

4. Entertainment: AI algorithms power recommendation systems on platforms like Netflix, Spotify, and YouTube, analysing user preferences to suggest content that users are likely to enjoy.

5. Transportation: Self-driving cars, developed by companies like Tesla and Waymo, use AI with the aim of making transportation safer and more efficient.

The Future Potential of AI

The future of AI holds immense promise, with advancements poised to further revolutionize various industries, and AI is now taught as an emerging-technology course. Here are some exciting future prospects:

1. Advanced Robotics: Robots will become more sophisticated, capable of performing complex tasks in manufacturing, healthcare, and homes.

2. AI in Space Exploration: AI will play a critical role in space missions, assisting with navigation, data analysis, and autonomous decision-making for rovers and satellites.

3. Personal Assistants: Future AI personal assistants will be more intuitive and proactive, managing more aspects of our daily lives seamlessly.

4. Smart Cities: AI will help create smart cities where infrastructure, transportation, and utilities are optimized for efficiency and sustainability.

Why Choose GIT Engineering College for AI?

For students aspiring to a career in AI, GIT Engineering College in Kottayam offers numerous opportunities for learning and growth. Here’s why you should consider studying AI with us:

1. High Demand for AI Skills: The demand for AI professionals is growing rapidly. Our AI and machine learning engineering courses in Kerala equip you with the skills needed to meet this demand.

2. Interdisciplinary Approach: Our curriculum integrates mathematics, computer science, engineering, and psychology, providing a comprehensive understanding of AI.

3. Innovative and Impactful Work: At GIT, you'll be at the forefront of innovation, solving real-world problems and contributing to advancements in AI.

4. Lucrative Career Opportunities: Graduates from our college enjoy high salaries and job security, with many securing positions at top companies globally.

Getting Started with AI at GIT Engineering College

Here’s how you can start your AI journey at GIT, the top-ranked AI engineering college in Kottayam and Kerala:

1. Enrol in Relevant Courses: Our B. Tech courses in Kerala, including AI and machine learning, provide a solid foundation in the field.

2. Participate in AI Projects: Engage in research projects, hackathons, and competitions to gain practical experience.

3. Stay Updated: Keep up with the latest developments by reading research papers, following AI blogs, and joining AI communities.

4. Seek Mentorship: Our experienced faculty members offer guidance and support, helping you navigate your AI career path.

Conclusion

The scope of AI is vast and filled with opportunities. At GIT Engineering College in Kottayam, one of the best engineering colleges in Kerala, we prepare students to excel in this exciting field. By gaining AI skills, staying updated with industry trends, and actively participating in AI projects, you can position yourself at the forefront of this technological revolution. Start your AI journey at GIT and experience a future full of possibilities.

Choose GIT Engineering College – your gateway to a promising career in AI. Enrol today and be part of the future of technology!

#Best engineering college in Kottayam#Best engineering college in Kerala#Top engineering college in Kottayam#Engineering colleges in Kottayam#Top engineering college in Kerala#College for artificial intelligence in Kerala#AI engineering college in Kottayam#AI engineering college in Kerala#B. Tech courses Kerala#Engineering college Kottayam#AI courses Kerala#Artificial intelligence and data science colleges Kottayam#AI and machine learning engineering college Kerala#Emerging technology courses in Kerala#Top rank engineering college Kottayam#Top rank engineering college Kerala

The Evolution of Coding: A Journey through Manual and Automated Methods

In the ever-evolving landscape of technology, coding stands as the backbone of innovation. From its humble beginnings rooted in manual processes to the era of automation, the journey of coding has been nothing short of fascinating. In this blog, we embark on a retrospective exploration of the evolution of coding methods, tracing the transition from manual to automated approaches.

The Dawn of Manual Coding:

Before the advent of sophisticated tools and automated processes, coding was predominantly a manual endeavor. Programmers painstakingly wrote code line by line, meticulously debugging and optimizing their creations. This era witnessed the emergence of programming languages like Fortran, COBOL, and assembly language, laying the groundwork for modern computing.

Manual coding required a deep understanding of the underlying hardware architecture and programming concepts. Developers wielded their expertise to craft intricate algorithms and applications, often pushing the boundaries of what was thought possible. However, the manual approach was labor-intensive and error-prone, which drove the search for more efficient methods.

The Rise of Automation:

The evolution of coding took a significant leap with the introduction of automated tools and frameworks. Languages like C, Java, and Python democratized programming, offering higher-level abstractions and built-in functionalities. Developers could now focus on solving problems rather than getting bogged down in low-level implementation details.

One of the pivotal advancements in coding automation was the rise of Integrated Development Environments (IDEs). These software suites provided a comprehensive environment for coding, debugging, and project management, streamlining the development process. IDEs like Visual Studio, Eclipse, and PyCharm became indispensable tools for developers worldwide, boosting productivity and collaboration.

Furthermore, the advent of version control systems such as Git revolutionized collaborative coding practices. Developers could now work concurrently on the same codebase, track changes, and resolve conflicts in a structured way. This fostered a culture of collaboration and accelerated the pace of software development.

The Era of AI and Machine Learning:

As technology continues to advance, coding is undergoing yet another paradigm shift with the integration of Artificial Intelligence (AI) and Machine Learning (ML). Automated code generation, predictive analytics, and intelligent debugging are becoming commonplace, augmenting the capabilities of developers.

AI-powered coding assistants, such as GitHub Copilot and TabNine, leverage vast repositories of code to provide context-aware suggestions and autocomplete functionality. These tools empower developers to write code faster and with fewer errors, unlocking new possibilities in software innovation.

Moreover, Machine Learning algorithms are being employed to automate mundane coding tasks, such as code refactoring and optimization. By analyzing patterns and best practices from existing codebases, ML models can suggest improvements and identify potential bottlenecks, saving time and effort for developers.

The evolution of coding has been a journey marked by innovation and transformation. From manual coding practices to the era of automation and AI, developers have continually adapted to embrace new technologies and methodologies. As we look towards the future, the fusion of human creativity with machine intelligence promises to redefine the boundaries of what can be achieved through coding.

Source code management

What is source code management?

Source code management (also called version control) lets developers record the successive iterations of their code in chronological order and version an application. The most powerful feature of these systems is how they allow teams of very different sizes to work on the same application at the same time.

Some terms are common to all of these systems:

A project is called a repository; it represents the root of your project’s file tree.

Developers can create branches of the codebase and iterate on them separately from other developers. For instance, a branch can be dedicated to a given feature or a given bug fix. You can create as many branches as you need.

Once a branch is ready (it has been tested, peer-reviewed, etc.), it can be merged back into the main branch. The main branch goes by different names: in Git it is traditionally called master (many hosting services now default to main), and in SVN it is called trunk.

While coding on their branch, developers are meant to work in small, atomic iterations called commits. Each commit has a commit message summarizing, in one sentence, what it contains.

All commits together form the history, and writing meaningful commits and commit messages matters a great deal: a clean history lets anyone see at a glance what has been going on and who did what.
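To make commits and history concrete, here is a minimal Python sketch of a toy model (not how any real system stores its data): each commit records a message, an author and a link to the commit before it, and the history is read by following those links back from the newest commit. The `Commit` class and `history` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Commit:
    message: str
    author: str
    parent: Optional["Commit"] = None  # previous iteration; None for the first commit


def history(head: Optional[Commit]) -> list[str]:
    """Walk parent links from the newest commit back to the very first one."""
    log = []
    commit = head
    while commit is not None:
        log.append(f"{commit.author}: {commit.message}")
        commit = commit.parent
    return log


c1 = Commit("Add login form", "alice")
c2 = Commit("Validate the email field", "bob", parent=c1)
c3 = Commit("Fix typo in error message", "alice", parent=c2)

for line in history(c3):
    print(line)
```

Because every commit points at its predecessor, reading the log newest-first is just a walk along those links, which is why one-sentence messages make the history easy to scan.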

Several people may modify the same parts of the codebase on different branches, which can create conflicts when those branches are merged. Some of those conflicts can only be resolved by a human, and each system handles merge conflicts differently.

Which systems exist?

I’ll put each system-specific term in quotes, because the same word may mean different things in different products.

SourceSafe was an early source code management system from Microsoft that didn’t handle branches, merges, or conflicts. You could “check out” a file, which meant no one else could “check it out” and modify it at the same time. When you were done, you would “check in” the file, making it editable by others again. This did not suit large teams working on the same files, nor long iterations on a single file. SourceSafe has since been discontinued.
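The exclusive “check out”/“check in” model can be sketched in a few lines of Python. This is a hypothetical toy class illustrating the locking idea only, not SourceSafe’s actual API: a file can be held by one user at a time, and only that user can check it back in.

```python
class LockingRepository:
    """Toy model of exclusive check-out/check-in (hypothetical, not SourceSafe's API)."""

    def __init__(self, files: dict[str, str]):
        self.files = dict(files)             # path -> current content
        self.locked_by: dict[str, str] = {}  # path -> user holding the check-out

    def check_out(self, path: str, user: str) -> str:
        holder = self.locked_by.get(path)
        if holder is not None and holder != user:
            raise PermissionError(f"{path} is checked out by {holder}")
        self.locked_by[path] = user
        return self.files[path]

    def check_in(self, path: str, user: str, content: str) -> None:
        if self.locked_by.get(path) != user:
            raise PermissionError(f"{user} has not checked out {path}")
        self.files[path] = content
        del self.locked_by[path]  # the file becomes editable by others again


repo = LockingRepository({"main.c": "int main(void) { return 0; }"})
repo.check_out("main.c", "alice")
try:
    repo.check_out("main.c", "bob")  # refused while alice holds the file
except PermissionError as err:
    print(err)
repo.check_in("main.c", "alice", "int main(void) { return 1; }")
repo.check_out("main.c", "bob")      # now succeeds
```

The lock prevents conflicts entirely, but at the cost of making everyone else wait, which is exactly why this approach breaks down for larger teams.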

CVS was among the first open-source source code management systems, and used to be wildly popular, but is barely seen anymore. It supported branches, but merging them was cumbersome because CVS did not record which changes had already been merged; on the other hand, two people could modify the same file at the same time. People would “update” their whole directory to get everybody else’s work before starting, and “commit” their code to the server when they were done. If they tried to “commit” a file that someone else had “committed” since their last “update”, CVS refused the commit and made them “update” first; that update merged the other person’s changes into their copy, and any overlapping edits became conflicts to fix by hand.
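The rule that a “commit” is refused when someone else has committed since your last “update” can be sketched as a toy centralized server in Python. The class and method names are hypothetical, and this models only that refusal; what happens to the two people’s edits afterwards is left out.

```python
class CentralServer:
    """Toy model of a centralized update/commit workflow (hypothetical names)."""

    def __init__(self, content: str):
        self.revision = 1
        self.content = content

    def update(self) -> tuple[int, str]:
        # Hand the latest revision number and content to a working copy.
        return self.revision, self.content

    def commit(self, base_revision: int, new_content: str) -> int:
        # Refuse the commit if someone else committed since this copy's last update.
        if base_revision != self.revision:
            raise RuntimeError("working copy is out of date: update first")
        self.revision += 1
        self.content = new_content
        return self.revision


server = CentralServer("x = 1\n")
alice_rev, _ = server.update()
bob_rev, _ = server.update()

server.commit(alice_rev, "x = 2\n")    # alice commits first: revision 2
try:
    server.commit(bob_rev, "x = 3\n")  # bob's copy is still at revision 1
except RuntimeError as err:
    print(err)

bob_rev, _ = server.update()           # bob updates to revision 2
server.commit(bob_rev, "x = 3\n")      # now accepted: revision 3
```

Comparing a single revision number is what makes the server strictly central: every commit must be based on the latest state it holds.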

SVN was built as a successor to CVS with better branch handling, so much of the terminology is the same. At some point it was the most used system, and it is still seen in the industry, although teams are moving away from it. Creating a branch meant copying the whole codebase into another directory of the repository (internally SVN stores this as a cheap copy, but that is how it appears to users); then, when you merged, it compared the files one by one. Its merging was smarter than CVS’s, but merges still produced conflicts that shouldn’t necessarily have required a human. Creating a “tag” (a fixed version of your code, such as “1.2.0”) likewise copied the whole codebase into another directory, but without the intention of merging it back later.
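Here is a rough Python picture of branches and tags as directory copies, using the conventional trunk/branches/tags layout. It is a toy model of what users see, not of how the SVN server stores data (the server keeps such copies cheaply); the `copy_directory` helper is a hypothetical name.

```python
import copy

# Toy model of an SVN-style repository: one big directory tree (hypothetical layout).
repo = {
    "trunk": {"app.py": "print('v1')"},
    "branches": {},
    "tags": {},
}


def copy_directory(repo: dict, src: str, dest_dir: str, name: str) -> None:
    """Branch or tag by placing a full copy of `src` under `dest_dir/name`."""
    repo[dest_dir][name] = copy.deepcopy(repo[src])


copy_directory(repo, "trunk", "branches", "feature-x")  # a branch is a copy...
copy_directory(repo, "trunk", "tags", "1.2.0")          # ...and so is a tag

repo["branches"]["feature-x"]["app.py"] = "print('v2')"  # work on the branch
print(repo["trunk"]["app.py"])  # trunk is untouched: print('v1')
```

Branches and tags are the same operation here; the only difference is the intent, since a tag is never edited or merged back.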

Git doesn’t copy the whole codebase when branching and tagging; instead it stores each commit as a snapshot of the code linked to its parent commit(s), in a structure that resembles a tree. Knowing exactly where two branches diverged lets it use a much smarter merging algorithm, so it rarely bothers you with conflicts except for those that really need a human decision. As a result, branching and merging are cheap, and people typically branch and merge a lot; you should therefore avoid working directly on the “master” branch unless you are the only developer on the project. Also, unlike its predecessors, Git lets you work and commit without talking to a server, for instance on a plane; and it can also work as a decentralized (peer-to-peer) system, although it’s rarely used that way.
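Git locates the commit where two branches diverged (the merge base) and compares both sides against it, which is called a three-way merge. The Python sketch below is a deliberately simplified, line-by-line version under one stated assumption (lines are only modified, never inserted or deleted); real Git merges diff hunks and handles many more cases. The function name and conflict-marker layout are illustrative.

```python
def three_way_merge(base: list[str], ours: list[str], theirs: list[str]) -> tuple[list[str], list[int]]:
    """Merge two edited versions of a file against their common ancestor (base).

    Simplifying assumption: lines were only modified, never inserted or
    deleted, so all three versions have the same number of lines.
    Returns the merged lines and the indexes of conflicting lines.
    """
    if not (len(base) == len(ours) == len(theirs)):
        raise ValueError("this sketch only handles in-place line edits")
    merged: list[str] = []
    conflicts: list[int] = []
    for i, (b, o, t) in enumerate(zip(base, ours, theirs)):
        if o == t:    # both sides agree, or neither changed the line
            merged.append(o)
        elif o == b:  # only "theirs" changed this line
            merged.append(t)
        elif t == b:  # only "ours" changed this line
            merged.append(o)
        else:         # both changed it differently: a human must decide
            merged.append(f"<<<<<<< ours\n{o}\n=======\n{t}\n>>>>>>> theirs")
            conflicts.append(i)
    return merged, conflicts


base = ["a = 1", "b = 2", "c = 3"]
ours = ["a = 10", "b = 2", "c = 3"]    # we edited the first line
theirs = ["a = 1", "b = 2", "c = 30"]  # a teammate edited the last line
print(three_way_merge(base, ours, theirs))  # (['a = 10', 'b = 2', 'c = 30'], [])
```

Without the base version, the merge could not tell which side changed a line, so any difference would look like a conflict; having the common ancestor is what lets most merges succeed automatically.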

Mercurial is very similar to Git in its concepts (although the syntax of its command-line tool often differs). It is less common in the industry than Git but still relevant; Facebook, for instance, adopted it for its main codebase.

The lowdown on Git

A particularity of Git is that it’s designed to be usable without a central repository (you can pull code from a friend’s computer and push your work back there, for instance), although few people use it that way. Usually there is a central Git server that the whole team pushes code to and pulls code from; still, this ability to work without one is why Git is often referred to as a “decentralized” system.

So that you can work without a server, the commit operation is local: no one other than your computer knows you committed something. You can make as many commits as you want, but when you want the server to know about them, you must push them there. Pull from the repository often if other people may be working on the same branch as you, because a pull combines the code you didn’t have yet with the code you recently committed locally, typically through a merge. For the same reason, Git refuses a push when the server has commits you don’t have, and asks you to pull first, so that the merge happens on your computer and you take care of any conflicts.
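The push rule can be sketched in Python under a simplified model (hypothetical names, not Git’s internals): commits are short ids mapped to their parent ids, and a push is accepted only if the server’s current head is already an ancestor of the commit being pushed, which Git calls a fast-forward.

```python
def is_ancestor(parents: dict[str, list[str]], ancestor: str, descendant: str) -> bool:
    """Return True if `ancestor` can be reached by following parent links from `descendant`."""
    stack, seen = [descendant], set()
    while stack:
        commit = stack.pop()
        if commit == ancestor:
            return True
        if commit not in seen:
            seen.add(commit)
            stack.extend(parents.get(commit, []))
    return False


def push_allowed(parents: dict[str, list[str]], server_head: str, local_head: str) -> bool:
    # Fast-forward only: the server's head must already be part of our local history.
    return is_ancestor(parents, server_head, local_head)


# Commit graph: commit id -> parent ids (a merge commit has two parents).
parents = {"A": [], "B": ["A"], "C": ["B"], "D": ["B"], "M": ["C", "D"]}

# A teammate pushed C; we committed D locally on top of B without pulling.
print(push_allowed(parents, "C", "D"))  # False: pull first
# Pulling creates merge commit M, whose parents are C and D.
print(push_allowed(parents, "C", "M"))  # True
```

Requiring a fast-forward guarantees the server never silently drops a teammate’s commit; the merge that reconciles both lines of work always happens on the pusher’s machine.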

Sometimes you may have modified three files but wish to include only two of them in the commit you’re about to make. For this, Git has the notion of an “index” (also called the staging area): you add the modified files you want to it, and only what is in the index goes into the next commit you record.
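The three-file scenario above can be sketched with a toy Python class that keeps the working files, the index and the commits apart. The class is hypothetical and only mirrors the idea behind the real `git add` and `git commit` commands, not how Git stores anything.

```python
class ToyRepo:
    """Toy model of Git's working files, index and commits (hypothetical class)."""

    def __init__(self) -> None:
        self.working: dict[str, str] = {}  # files as they currently are on disk
        self.index: dict[str, str] = {}    # snapshot that the next commit will record
        self.commits: list[tuple[str, dict[str, str]]] = []  # (message, snapshot)

    def edit(self, path: str, content: str) -> None:
        self.working[path] = content

    def add(self, path: str) -> None:
        # Like `git add`: copy the current on-disk version into the index.
        self.index[path] = self.working[path]

    def commit(self, message: str) -> None:
        # Like `git commit`: record exactly what is in the index, nothing else.
        self.commits.append((message, dict(self.index)))


repo = ToyRepo()
for name in ("a.txt", "b.txt", "c.txt"):
    repo.edit(name, f"new content of {name}")
repo.add("a.txt")
repo.add("b.txt")
repo.commit("Update a and b")
print(sorted(repo.commits[-1][1]))  # ['a.txt', 'b.txt']
```

The third file stays modified on disk but out of the commit until it is added, which is what lets you split unrelated edits into separate, atomic commits.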