#gnu parallel


Text

Stiegler: ideas in general, and not only the idea of justice, whatever these ideas may be, do not exist: they are only made to consist.

Terry Pratchett: show me one atom of justice, one molecule of mercy [...] but people have got to believe that, or what's the point

4 notes


Text

In which you can see the parallels of Aziraphale being capable of anger, extreme power, and assholery (as displayed by the other archangels) but choosing kindness, because choosing it requires self control and understanding.

Honestly, the fact that Terry Pratchett has experience around nuclear power makes so much sense once you realize what magic is standing as a metaphor for in the Discworld. Like, look at this fucking quote from Going Postal:

"That's why [magic] was left to wizards, who knew how to handle it safely. Not doing any magic at all was the chief task of wizards—not "not doing magic" because they couldn't do magic, but not doing magic when they could do and didn't. Any ignorant fool can fail to turn someone else into a frog. You have to be clever to refrain from doing it when you knew how easy it was. There were places in the world commemorating those times when wizards hadn't been quite as clever as that, and on many of them the grass would never grow again."

Like... It feels incredibly obvious what he's talking about once you know the context.

14K notes


Text

This digital music thing will probably reach its endgame sometime in the next decade or so. These are very exciting times.

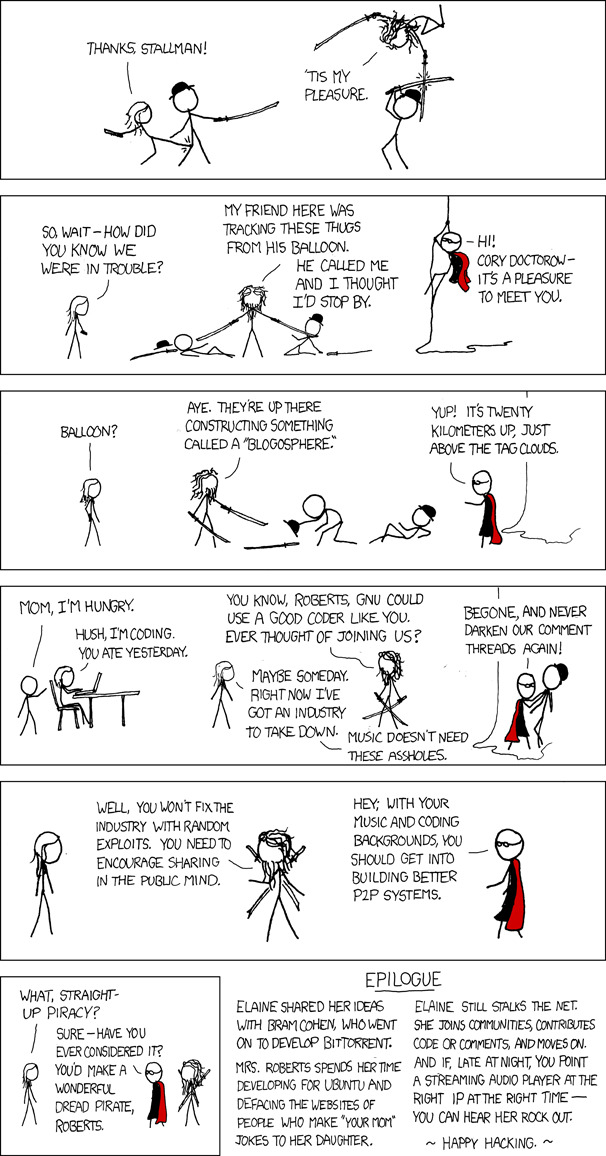

1337: Part 5 [Explained]

Transcript

[The two men in black bowler hats (RIAA and MPAA agents as known from the previous comic) with their katanas are attacked by Elaine Roberts with her folding knife and Richard Stallman with his own two katanas. Elaine kicks the RIAA man to the left in the back of his leg, while Stallman jumps over the MPAA man to the right, flying high over him from right to left in a flying maneuver hitting his sword while hanging parallel to the ground above the man.] Elaine: Thanks, Stallman! Richard Stallman: 'Tis my pleasure.

[Elaine stands to the left with her knife in one hand having folded it down again. Richard Stallman stands between the two men with bowler hats who are now lying on the floor on either side of him, each with one of Stallman's swords pointing at their throat. Stallman has both arms fully stretched towards them as he looks straight out of the panel. The left (RIAA) man lies flat on his back, his hat and katana lying behind him. The right (MPAA) man is sitting on his knee leaning as far back as he can, since the sword is almost touching the skin on his throat. He wears his hat, but the sword lies behind him, out of reach, even though he is leaning back on one hand close to it. To the far right, a rope comes down from the top of the panel, falling down on the ground so a section of it stretches even farther right in the picture. Down this rope comes a man with goggles and a red cape, which is black on the inside. This is Cory Doctorow. He holds onto the rope with two hands, one over one just under his head.] Elaine: So, wait - how did you know we were in trouble? Richard Stallman: My friend here was tracking these thugs from his balloon. Richard Stallman: He called me and I thought I'd stop by. Cory Doctorow: -Hi! Cory Doctorow: Cory Doctorow - It's a pleasure to meet you.

[Elaine has shifted the knife to the other hand. Richard Stallman has moved to the left of the RIAA man, so both bowler hat men are between him and Cory Doctorow. Stallman still points his sword in their direction, but they are lowered. The RIAA man closest to him has picked up his hat in one hand and reaches for his sword with the other hand. The MPAA man now lies on his back, one arm up, leaning on the other. His sword is gone. It does not seem like Doctorow could have taken it. Behind him, Doctorow has reached the ground, the rope hanging behind him. He points left.] Elaine: Balloon? Richard Stallman: Aye. They're up there constructing something called a "Blogosphere." Cory Doctorow: Yup! It's twenty kilometers up, just above the tag clouds.

[The scene is contracted, so to the left, Mrs. Roberts at her desk with her chair and laptop becomes visible (from the previous comic). This without the other people has moved closer. She still types as her son Little Bobby Tables enters and lifts a hand in his mother's direction. He is drawn as a child version of Cueball. Elaine has put the knife away and looks at Richard Stallman, who now stands straight looking at her with the swords crossed in front of his legs. Behind him, just right of the rope hanging down, Cory Doctorow lifts one of the agents up by the throat while looking right and talking to him. The other agent has left the panel. The one he holds has his hat but no sword.] Little Bobby Tables: Mom, I'm hungry. Mrs. Roberts: Hush, I'm coding. You ate yesterday. Richard Stallman: You know, Roberts, GNU could use a good coder like you. Ever thought of joining us? Elaine: Maybe someday. Right now I've got an industry to take down. Elaine: Music doesn't need these assholes. Cory Doctorow: Begone, And never darken our comment threads again!

[Zoom in on Elaine, Richard Stallman, and Cory Doctorow. She stands straight looking at Stallman, who faces her, his swords now crossed on his back. Doctorow is also facing her and holds out both arms towards her. The rope is now outside the panel, as are both bowler hat men.] Richard Stallman: Well, you won't fix the industry with random exploits. You need to encourage sharing in the public mind. Doctorow: Hey; With your music and coding backgrounds, you should get into building better P2P systems.

[The final panel is only a third of the length of the previous panel. The three are still in the panel, but they have moved and are also drawn somewhat smaller. Elaine still faces them right, but now Cory Doctorow is in front of Richard Stallman's swords as before. All have their arms down.] Elaine: What? Straight-up piracy? Cory Doctorow: Sure - have you ever considered it? You'd make a wonderful dread pirate, Roberts.

[To the right of the final panel is a two-column epilogue narrated by Cueball as seen in part 2. It is split into three paragraphs and a "signature." The caption above is centered over the two columns.] Epilogue Cueball (narrating): Elaine shared her ideas with Bram Cohen, who went on to develop BitTorrent. Mrs. Roberts spends her time developing for Ubuntu, and defacing the websites of people who make "your mom" jokes to her daughter. Elaine still stalks the net. She joins communities, contributes code or comments, and moves on. And if, late at night, you point a streaming audio player at the right IP at the right time - you can hear her rock out. ~Happy Hacking.~

20 notes


Text

does anyone have the parallel post with andor 2x08 quotes and discworld gnu

2 notes


Text

7 April 2025

I was awake even before the 6 a.m. alarm. That was not a restful night! With stomach cramps and the stuffy, warm air in the camper, sleep was out of the question. Ah, how lovely it is to be a woman. #whenyouknowyouknow

Today's motto was: more is more!

Freshly showered at the breakfast table, I read some interesting facts about all sorts of animals. For example, that elephants drink up to 200 l of water and eat up to 250 kg of grass every day! 🐘💡 #wiederwasgelernt

We set off shortly before 8 a.m. today. After just a few metres, monkeys were sitting in a tree nibbling the leaves. Really cute to watch!

After driving for a while, Lydia said over the walkie-talkie that we had taken a "wrong turn", i.e. we had driven one loop more than originally planned. But if we hadn't, we would definitely have missed the next highlight!

We came to a large waterhole with many, many, maaaany elephants, giraffes, waterbuck, hippos and marabou storks.

We ventured closer, even though it made me really nervous!

And there they were: a whole herd, with lots of young ones splashing in the water. Wow!! 🐘😍

When they moved on, we drove along the road parallel to them and met them again further on. What an experience!

As on every day, we saw lots of impalas, zebras and a few giraffes.

In between there were always gnus (wildebeest) too. Unfortunately they are not particularly clever and tend to lose their herd. Then they stand around alone looking for a new family. They like to join zebras, because zebras know the way to the next waterhole!

We drove on and, from far away, spotted the next big herd: buffalo. 🦬

They were completely unimpressed by us in the campers, but walking towards them would be really dangerous!

Slowly we made our way to the next camp: Maroela, which belongs to Orpen Camp.

We found two great pitches right by the fence and even got to watch a hyena. Hopefully more animals will come in the evening! 🤩

Now we had plenty of time, so we did a bit of laundry, parboiled potatoes for dinner and snacked on pineapple.

The guinea fowl and the squirrels got some of the pineapple too, though only the latter liked it and munched away happily. 🍍

Luckily for us, we saw the hyena again. It walked past our fence several times and was quite peaceful. I always thought hyenas were ugly and odd, but in reality I have to say: they are really cute and look very fluffy! The film The Lion King definitely gave the wrong impression!

A day we won't forget in a hurry!

Animals spotted today:

🐘 Elephants

🦬 Buffalo

🐦 Various birds (including the hoopoe)

🦓 Zebras

🦛 Hippos

🦌 Waterbuck

🐿️ Mongoose

🐕 Hyena

🐒 Monkeys

🦒 Giraffes

🐗 Warthogs

🐃 Gnus (wildebeest)

🦌 Impalas

🐔 Guinea fowl

🐿️ Squirrels

4 notes


Text

I'm trying to download a series of files from a file host (HTTPS) which refuses to allow more than one download at a time.

wget --wait 11 --random-wait -c --http2-request-window 1 -i urls.txt

wget is being very helpful, noticing the presence of HTTP/2 support, and is attempting to parallelize the download with as many connections as the server will support (6). The server 429's all connections after the first; this breaks wget -i and requires me to manually re-trigger the process after each file is finished downloading.

Is there a way to get wget to only make one (1) request at a time?

Edit: --max-threads=1 solves it.

Turns out it wasn't HTTP/2; it's that when I switched from Ubuntu to Fedora I got as a bonus a packaged version of wget (GNU wget2) that supports multithreading.

3 notes


Text

GNU parallel and I are in a toxic relationship and I torment her endlessly by refusing to use --citation once

2 notes


Text

Advanced C Programming: Mastering the Language

Introduction

Advanced C programming is essential for developers looking to deepen their understanding of the language and tackle complex programming challenges. While the basics of C provide a solid foundation, mastering advanced concepts can significantly enhance your ability to write efficient, high-performance code.

1. Overview of Advanced C Programming

Advanced C programming builds on the fundamentals, introducing concepts that enhance efficiency, performance, and code organization. This stage of learning empowers programmers to write more sophisticated applications and prepares them for roles that demand a high level of proficiency in C.

2. Pointers and Memory Management

Mastering pointers and dynamic memory management is crucial for advanced C programming, as they allow for efficient use of resources. Pointers enable direct access to memory locations, which is essential for tasks such as dynamic array allocation and manipulating data structures. Understanding how to allocate, reallocate, and free memory using functions like malloc, calloc, realloc, and free can help avoid memory leaks and ensure optimal resource management.

3. Data Structures in C

Understanding advanced data structures, such as linked lists, trees, and hash tables, is key to optimizing algorithms and managing data effectively. These structures allow developers to store and manipulate data in ways that improve performance and scalability. For example, linked lists provide flexibility in data storage, while binary trees enable efficient searching and sorting operations.

4. File Handling Techniques

Advanced file handling techniques enable developers to manipulate data efficiently, allowing for the creation of robust applications that interact with the file system. Mastering functions like fopen, fread, fwrite, and fclose helps you read from and write to files, handle binary data, and manage different file modes. Understanding error handling during file operations is also critical for building resilient applications.

5. Multithreading and Concurrency

Implementing multithreading and managing concurrency are essential skills for developing high-performance applications in C. Utilizing libraries such as POSIX threads (pthreads) allows you to create and manage multiple threads within a single process. This capability can significantly enhance the performance of I/O-bound or CPU-bound applications by enabling parallel processing.

6. Advanced C Standard Library Functions

Leveraging advanced functions from the C Standard Library can simplify complex tasks and improve code efficiency. Functions for string manipulation, mathematical computations, and memory management are just a few examples. Familiarizing yourself with these functions not only saves time but also helps you write cleaner, more efficient code.

7. Debugging and Optimization Techniques

Effective debugging and optimization techniques are critical for refining code and enhancing performance in advanced C programming. Tools like GDB (GNU Debugger) help track down bugs and analyze program behavior. Additionally, understanding compiler optimizations and using profiling tools can identify bottlenecks in your code, leading to improved performance.

8. Best Practices in Advanced C Programming

Following best practices in coding and project organization helps maintain readability and manageability of complex C programs. This includes using consistent naming conventions, modularizing code through functions and header files, and documenting your code thoroughly. Such practices not only make your code easier to understand but also facilitate collaboration with other developers.

9. Conclusion

By exploring advanced C programming concepts, developers can elevate their skills and create more efficient, powerful, and scalable applications. Mastering these topics not only enhances your technical capabilities but also opens doors to advanced roles in software development, systems programming, and beyond. Embrace the challenge of advanced C programming, and take your coding skills to new heights!

#C programming#C programming course#Learn C programming#C programming for beginners#Online C programming course#C programming tutorial#Best C programming course#C programming certification#Advanced C programming#C programming exercises#C programming examples#C programming projects#Free C programming course#C programming for kids#C programming challenges#C programming course online free#C programming books#C programming guide#Best C programming tutorials#C programming online classes

2 notes


Text

C++ Tools Every Programmer Should Know in 2023

As technology continues to evolve rapidly, it is essential for programmers to stay updated with the latest tools to enhance their productivity and efficiency. In this article, we will explore some of the top C++ tools that every programmer should know in 2023. From integrated development environments (IDEs) to debuggers, code editors, libraries and frameworks, documentation tools, and build systems, we'll cover a comprehensive range of tools that will help programmers streamline their C++ development process.

1. Integrated Development Environments (IDEs)

1.1 Visual Studio Code

Visual Studio Code (VS Code) is a highly popular and powerful IDE that offers excellent support for C++ development. It provides a wide range of features, including code completion, syntax highlighting, debugging capabilities, and easy integration with version control systems like Git.

1.2 Eclipse

Eclipse is another widely used IDE for C++ development. It offers a comprehensive set of tools, such as code refactoring, code navigation, and a customizable user interface. Eclipse also supports various plugins and extensions to enhance its functionality further.

1.3 Qt Creator

Qt Creator is a specialized IDE for C++ and Qt development. It provides an intuitive and user-friendly interface, along with powerful tools for designing graphical user interfaces (GUIs) using the Qt framework. Qt Creator also supports cross-platform development, making it an excellent choice for C++ programmers.

2. Debuggers

2.1 GDB

The GNU Debugger (GDB) is a command-line debugger that helps programmers analyze and debug their C++ code efficiently. It allows breakpoints, stepping through code, inspecting variables, and examining call stacks, among other debugging features. GDB is highly customizable and supports various platforms.

2.2 Visual Studio Debugger

The Visual Studio Debugger is a robust and user-friendly debugger provided by Microsoft Visual Studio. It offers advanced debugging capabilities for C++ programs, including real-time expression evaluation, memory tracking, and parallel debugging. The Visual Studio Debugger integrates seamlessly with Visual Studio IDE.

2.3 LLDB

LLDB is a powerful, modern debugger primarily developed for LLVM-based programming languages such as C++. It provides capabilities like multithreaded debugging, just-in-time compilation, and efficient memory management. LLDB offers a command-line interface and can be used on multiple platforms.

3. Code Editors

3.1 Sublime Text

Sublime Text is a lightweight yet feature-rich code editor known for its speed and extensibility. It offers a minimalistic user interface, multiple selections, powerful search and replace functionality, and a vast collection of plugins to customize the editor according to programmers' needs.

3.2 Atom

Atom is a highly customizable and open-source code editor that gained popularity among developers. It supports various programming languages, including C++, and provides features like smart autocompletion, file system browser, and Git integration. Atom also allows third-party package installations to extend its functionality.

3.3 Vim

Vim is a highly efficient and text-based code editor preferred by many experienced programmers. It offers a wide range of features designed to enhance developers' productivity, such as extensive key bindings, split editing, powerful search and replace, and support for plugins and scripts.

4. Libraries and Frameworks

4.1 Boost

Boost is a widely used C++ libraries collection known for its high-quality and portable code. It provides a range of libraries that cover various aspects of C++ programming, including smart pointers, multithreading, regular expressions, and container classes. Boost libraries offer excellent support and compatibility with different platforms.

4.2 Qt

Qt is a comprehensive cross-platform framework that includes a powerful set of C++ libraries and tools. It enables developers to create high-performance applications with an intuitive UI and supports features like network programming, XML processing, database connectivity, and 3D rendering. Qt is widely adopted for GUI application development.

4.3 STL

The Standard Template Library (STL) is an essential component of C++ programming. It offers a collection of generic algorithms, containers, and iterators, making it easier to write efficient and maintainable code. STL provides various data structures and algorithms, such as vectors, lists, sorting, and searching functions.

5. Documentation Tools

5.1 Doxygen

Doxygen is a popular documentation tool for C++ projects. It automatically generates documentation from properly formatted comments in the source code. Doxygen supports various output formats like HTML, LaTeX, and PDF, allowing programmers to create professional-looking documentation for their projects easily.

5.2 Docutils

Docutils is a Python-based documentation tool that provides support for multiple markup languages, including reStructuredText. It enables programmers to write documentation in a human-readable format and convert it into different output formats. Docutils is widely used in the C++ community for documenting projects.

5.3 Sphinx

Sphinx is another widely adopted documentation tool that integrates well with the C++ ecosystem. It supports multiple markup languages, including reStructuredText and Markdown, and offers features like automatic cross-referencing, code highlighting, and generation of API documentation. Sphinx is highly customizable and extensible.

6. Build Systems

6.1 CMake

CMake is a popular build system that simplifies the process of building C++ projects across multiple platforms and compilers. It provides a unified build configuration language and generates platform-specific build scripts. CMake offers excellent scalability and flexibility, making it suitable for projects of any size.

6.2 Make

Make is a classic and widely used build system for C++ projects. It utilizes makefiles to automate the build process by specifying dependencies and actions. Make is highly efficient and can handle complex project structures. It is a fundamental tool in the C++ development ecosystem, and many other build systems are based on its principles.

6.3 Ninja

Ninja is a fast and lightweight build system designed for speed and efficient dependency tracking. It focuses on the minimalistic build process and supports parallel builds. Ninja is often used in conjunction with CMake, allowing for faster compilation and linking of C++ projects.

Conclusion

Staying up to date with the right tools can significantly improve a programmer's productivity and efficiency. The C++ tools mentioned in this article, including IDEs, debuggers, code editors, libraries and frameworks, documentation tools, and build systems, are essential for any C++ developer in 2023. By leveraging these tools, programmers can streamline their development process, write cleaner code, and build robust applications. Embrace these tools and take your C++ programming skills to new heights!

FAQs

FAQ 1: What are Integrated Development Environments (IDEs)?

Integrated Development Environments (IDEs) are software applications that provide comprehensive tools and features to simplify the software development process. They typically include code editors, debuggers, build systems, and other utilities needed for efficient coding and debugging.

FAQ 2: What are Debuggers?

Debuggers are tools that help programmers identify and fix issues in their code. They allow developers to step through the code, set breakpoints, inspect variables, and analyze program execution to understand and resolve bugs and errors.

FAQ 3: What are Code Editors?

Code editors are software tools designed specifically for writing and editing code. They provide features like syntax highlighting, code completion, and navigation to enhance productivity and make coding easier and more efficient.

FAQ 4: What are Libraries and Frameworks?

Libraries and frameworks are collections of pre-written code that provide reusable functionality to programmers. They can simplify coding tasks by providing ready-made solutions for common problems, saving time and effort.

FAQ 5: What are Build Systems?

Build systems are software tools that automate the process of compiling and linking code to create executable programs or libraries. They manage dependencies, handle project configurations, and ensure that all required resources are properly built and linked together.

2 notes


Text

I think a lot about how people carry Pratchett with them. The way the errant thought "GNU Terry Pratchett" sidles into my head from time to time, and for a brief moment, he is alive because he has changed my day again.

I think a lot about Gaiman in the context of Pratchett. I've only ever finished one work he has authored (with Pratchett) - Good Omens. I've embarked on American Gods. Taken brief detours through extracts of Sandman. There is a familiarity in his other pages. But, never finished. Life crops up.

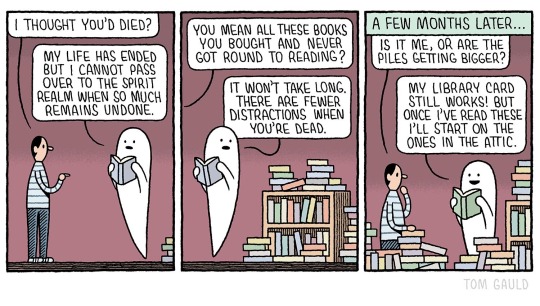

I've read all I can of Pratchett, and loved it. It has had a finality - a closure - which feels befitting of the man himself. And so there is some faint parallelism for me in seeing Gaiman say "a unlifetime of reading all those things I didn't get to would be okay," in light of the way I've yet to finish almost any of his works.

Maybe when that time comes, I'll leave a book on the table for him, and be reminded when I see it, and he'll be able to share a moment with me in my mind, over its pages? And I can return the favour of pleasantly unfinished reading.

My latest cartoon for @GuardianBooks.

48K notes


Text

1. Assess Application and Cluster Readiness

Review Application Dependencies:

Analyze libraries, JDK versions, frameworks (e.g., Spring Boot, Jakarta EE), and external services (databases, APIs).

Identify any Kubernetes-specific configurations (e.g., ConfigMaps, Secrets, environment variables).

Containerization:

Ensure your Java app is containerized using tools like Docker.

Update the Dockerfile if necessary (e.g., for JDK/JRE updates or changes in base image).

2. Prepare Kubernetes Environment

Target Cluster Validation:

Ensure the target Kubernetes cluster is set up and ready.

Check for compatibility with the current cluster’s API version and resources.

Namespace Setup:

Create equivalent namespaces, RBAC roles, and policies in the target cluster.

Storage:

Verify Persistent Volume Claims (PVCs) and ensure similar storage classes are available.

3. Backup and Export Resources

Kubernetes Resources:

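Export all application-related resources using kubectl:

kubectl get all -n <namespace> -o yaml > resources.yaml

Note that "get all" covers only a core set of workload resources (pods, services, deployments, and similar); ConfigMaps, Secrets, Ingresses, and PVCs have to be exported separately.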

Persistent Data:

Back up database data or persistent volumes using tools like Velero or rsync.

4. Migrate Application Configurations

ConfigMaps and Secrets:

Export existing configurations:

kubectl get configmap -n <namespace> -o yaml > configmap.yaml
kubectl get secret -n <namespace> -o yaml > secret.yaml

Reapply them in the new cluster:

kubectl apply -f configmap.yaml
kubectl apply -f secret.yaml

5. Deploy to Target Cluster

Deploy Application:

Use Helm charts, Kubernetes manifests, or CI/CD pipelines to deploy the Java application to the new cluster.

Update the kubeconfig for the target cluster:

kubectl config use-context <target-cluster-context>
kubectl apply -f resources.yaml

Persistent Volumes:

Ensure data is restored to the persistent volumes if needed.

6. Test the Migration

Validation:

Ensure the application is running as expected (pods, services, and networking).

Run integration and regression tests.

Connectivity:

Validate external service integrations (e.g., databases, APIs).

Check ingress configurations for public access.

7. Cutover and Cleanup

Switch Traffic:

Update DNS or external load balancer to direct traffic to the new cluster.

Monitor:

Use tools like Prometheus, Grafana, or ELK Stack to monitor application health.

Clean Up Old Environment:

After verifying stability, clean up resources in the old cluster.

Tools to Assist in Migration

Velero: For backup and restore of Kubernetes resources and persistent volumes.

Helm: To manage application deployments and upgrades.

Kubernetes Dashboard: For real-time cluster monitoring.

Kubectl Plugins: To enhance CLI functionality.

If you provide more details about the migration (e.g., specific cloud providers or Kubernetes environments), I can offer more tailored advice!


You said:

Migration of 5000 files per hr fix

ChatGPT said:


If you're migrating a large number of files (e.g., 5000 files per hour) and encountering performance bottlenecks, you can improve the speed and reliability of your file migration by addressing several key areas. Here's a step-by-step guide to optimize file transfer:

1. Identify and Analyze Bottlenecks

File System Bottlenecks:

Check the source and destination disk I/O performance.

Ensure the files are not locked or being used during migration.

Network Bandwidth:

Measure your available network bandwidth using tools like iperf and ensure it can handle the expected load.

File Metadata Overhead:

Small files can add significant overhead due to metadata operations (e.g., file creation, permissions).
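To put a number on the 5000-files-per-hour target from the question, the arithmetic can be sketched as below. The function names and the 3-second per-file latency are illustrative assumptions, not measurements from any real system.

```python
import math


def seconds_budget_per_file(files_per_hour, workers=1):
    """Time each worker may spend on one file while still hitting the target rate."""
    return 3600 * workers / files_per_hour


def workers_needed(files_per_hour, seconds_per_file):
    """Minimum number of concurrent transfers needed to sustain the target rate."""
    return math.ceil(files_per_hour * seconds_per_file / 3600)


if __name__ == "__main__":
    # A single sequential worker has well under a second per file.
    print(seconds_budget_per_file(5000))
    # Assumed example: if one file takes about 3 s end to end (hypothetical figure),
    # this many transfers must run concurrently.
    print(workers_needed(5000, 3))
```

If the measured per-file time is dominated by metadata operations rather than data volume, adding workers or batching files tends to help more than adding bandwidth.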

2. Optimize Transfer Mechanisms

Use Parallel Processing:

Transfer multiple files concurrently using multithreaded or parallel tools like:

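rsync, run as several concurrent processes (rsync itself has no --parallel option; --max-size and --min-size can be used to split the work by file size).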

GNU Parallel for concurrent file transfers.

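find /source/directory -type f -print0 | parallel -0 -j8 rsync -a {} /destination/directory/

Note that this copies every file directly into /destination/directory, flattening any subdirectories.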

Use a custom script or a library like Python's concurrent.futures for more control.
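As a minimal sketch of the concurrent.futures approach mentioned above, the following copies a directory tree with a thread pool. The function name copy_tree_parallel, the default of 8 workers, and the use of a local copy (shutil.copy2) in place of a network transfer are all assumptions made for illustration.

```python
import shutil
from concurrent.futures import ThreadPoolExecutor, as_completed
from pathlib import Path


def copy_tree_parallel(src_root, dst_root, max_workers=8):
    """Copy every file under src_root into dst_root, keeping relative paths.

    Returns (copied, failed): a list of relative paths that were copied and
    a dict mapping each failed relative path to the exception raised.
    """
    src_root, dst_root = Path(src_root), Path(dst_root)
    files = [p for p in src_root.rglob("*") if p.is_file()]

    def copy_one(src):
        rel = src.relative_to(src_root)
        dst = dst_root / rel
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dst)  # copies file data plus metadata such as mtime
        return rel

    copied, failed = [], {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(copy_one, f): f.relative_to(src_root) for f in files}
        for fut in as_completed(futures):
            rel = futures[fut]
            try:
                copied.append(fut.result())
            except OSError as exc:
                failed[rel] = exc  # record the failure and keep going
    return copied, failed
```

Threads suit this job because copying is I/O-bound; the failed dict gives a caller something concrete to retry or log.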

Batch File Transfers:

Group files into batches (e.g., tar archives) to reduce the overhead of transferring many small files individually.

tar -cvf files.tar /source/files && rsync files.tar /destination

Extract the files on the destination:

tar -xvf files.tar

3. Ensure Network Optimization

Compression:

Compress files during transfer to reduce data volume:

rsync -az /source/directory /destination/directory

Increase TCP Window Size:

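TCP window and buffer sizes are operating-system settings rather than rsync or scp options; on Linux they are controlled by sysctls such as net.core.rmem_max, net.core.wmem_max, net.ipv4.tcp_rmem, and net.ipv4.tcp_wmem. On the rsync side, --whole-file skips the delta-transfer algorithm (useful on fast links) and --bwlimit=0 leaves bandwidth unthrottled:

rsync --bwlimit=0 --whole-file /source /destination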

Use Faster Protocols:

Consider transfer tools built for high throughput, such as rclone or Aspera, or a protocol such as SFTP where those are not available.

4. Improve Source and Destination Performance

Disk Performance:

Optimize disk reads and writes using SSDs for temporary storage or caches.

Ensure the file system supports efficient file handling (e.g., ext4, XFS).

File System Tuning:

For very large numbers of files, tune how the kernel caches metadata (on Linux, for example, the vm.vfs_cache_pressure sysctl governs how readily inode and dentry caches are reclaimed).

5. Error Handling and Retry Logic

Retry Failed Transfers:

rsync does not retry on its own, but --partial keeps partially transferred files so that re-running the same command picks up where it left off:

rsync -avz --partial --progress /source /destination
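Automatic retries usually come from a wrapper around the transfer command. A minimal sketch with exponential backoff follows; the function name retry and its parameters are hypothetical, and operation stands for whatever callable performs one transfer attempt.

```python
import time


def retry(operation, attempts=4, base_delay=1.0, retry_on=(OSError,), sleep=time.sleep):
    """Call operation() until it succeeds or the attempts run out.

    Waits base_delay, then 2 * base_delay, then 4 * base_delay, and so on
    between tries. The last error is re-raised if every attempt fails.
    """
    for attempt in range(attempts):
        try:
            return operation()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the final error
            sleep(base_delay * 2 ** attempt)
```

When the operation is a subprocess call such as subprocess.run([...], check=True), passing retry_on=(subprocess.CalledProcessError,) makes a non-zero exit status trigger the next attempt.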

Logging and Monitoring:

Log all transferred files and


You said:

File transfer to S3 improve

ChatGPT said:


Improving file transfer to Amazon S3 requires addressing both performance and reliability. Below are strategies and tools to optimize large-scale or high-volume file transfers:

1. Use AWS-Specific Tools

AWS CLI with Parallelism:

The aws s3 cp command has no --parallel flag; concurrency and multipart chunk size are set in the CLI configuration shown next. A recursive upload looks like this:

aws s3 cp /local/path s3://your-bucket-name --recursive --storage-class STANDARD_IA --region <region>

Enable parallel transfers: Update your AWS CLI configuration file (~/.aws/config) to increase concurrency:

[default]
s3 =
  max_concurrent_requests = 10
  max_queue_size = 1000
  multipart_threshold = 64MB
  multipart_chunksize = 64MB

AWS DataSync:

Use AWS DataSync for automated, high-performance, large-scale transfers with minimal manual intervention. DataSync can also manage retry and error handling.

2. Optimize S3 Uploads

Multipart Upload:

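Use multipart uploads for files larger than 100 MB to improve transfer speed and reliability. The high-level aws s3 cp and aws s3 sync commands switch to multipart automatically once a file exceeds multipart_threshold.

For manual control, the low-level s3api commands expose each step. The call below only initiates an upload; the parts must then be sent with upload-part and the upload finished with complete-multipart-upload:

aws s3api create-multipart-upload --bucket your-bucket-name --key file_key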

Automate multipart uploads with the AWS SDK or tools like boto3 for Python.

Use the Correct Storage Class:

Choose the appropriate storage class to control cost (the storage class does not change upload speed):

STANDARD (default): High performance for frequently accessed data.

INTELLIGENT_TIERING: Automatically moves objects between access tiers, which suits data with changing or unknown access patterns.

3. Improve Network Performance

Enable Transfer Acceleration:

Use S3 Transfer Acceleration for faster uploads over long distances by leveraging AWS edge locations. Acceleration must first be enabled on the bucket; the CLI then targets the accelerate endpoint:

    aws s3 cp /local/path s3://your-bucket-name --recursive --region <region> --endpoint-url https://s3-accelerate.amazonaws.com

Compression:

Compress files before transferring to reduce data size:

    tar -czvf archive.tar.gz /local/path && aws s3 cp archive.tar.gz s3://your-bucket-name

Optimize Network:

Use AWS Direct Connect or a high-bandwidth internet connection for large-scale transfers.

Monitor latency and bandwidth with tools like iperf.

4. Automate and Parallelize Transfers

Rclone:

Use rclone for high-speed S3-compatible transfers with parallelism and retry capabilities (this assumes an rclone remote named s3 is already configured):

    rclone copy /local/path s3:your-bucket-name --transfers 16 --s3-upload-concurrency 16

GNU Parallel:

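Transfer files concurrently by feeding a file list to parallel. The trailing slash keeps each file's own name as the key; note that this flattens the directory structure:

    find /local/path -type f -print0 | parallel -0 -j10 aws s3 cp {} s3://your-bucket-name/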

5. Error Handling and Retry

Retry Mechanisms:

Use tools or scripts that automatically retry failed uploads.

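The AWS CLI retries failed requests automatically. Raise the attempt limit with the AWS_MAX_ATTEMPTS environment variable, and lengthen the timeouts for slow links:

    AWS_MAX_ATTEMPTS=10 aws s3 cp /local/path s3://your-bucket-name --recursive --cli-read-timeout 600 --cli-connect-timeout 600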

Chunked Retry:

Break large transfers into smaller, manageable chunks and retry failed chunks only.

6. Monitor and Optimize Transfers

AWS CloudWatch Metrics:

Monitor transfer performance and error rates in real-time.

S3 Inventory:

Use S3 inventory reports to validate uploaded files.

AWS SDKs or Custom Scripts:

Build custom monitoring and retry logic with SDKs like boto3 (Python).

Example Optimized Upload Command

    aws s3 cp /local/path s3://your-bucket-name --recursive --storage-class STANDARD --region us-east-1 --cli-read-timeout 600 --cli-connect-timeout 600

When to Use Snowball or Snowmobile

For extremely large datasets (terabytes or petabytes), use AWS Snowball or AWS Snowmobile for offline data transfer to S3.

These steps can dramatically improve the efficiency and reliability of your file transfer process to S3. Let me know if you need help implementing any of these!


You said:

Improve file transfers

ChatGPT said:


Improving file transfer performance and reliability, whether between servers, local systems, or to cloud storage like Amazon S3, involves a combination of strategies. Here are some best practices for improving file transfers:

1. Optimize the File Transfer Process

Parallelize Transfers

Transfer multiple files concurrently to improve throughput:

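Use tools with built-in parallelism (such as rclone or cloud SDKs), or run several rsync or scp processes side by side.

A single rsync process transfers files one at a time; this example only filters what is sent and reports overall progress:

    rsync -azP --info=progress2 --max-size=100M --exclude="*.tmp" /source/ /destination/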

Batch Small Files

Small files cause metadata overhead during transfer. Bundle them into archives:

    tar -czvf archive.tar.gz /source/

Transfer the archive, then extract on the destination:

    tar -xzvf archive.tar.gz

2. Use High-Performance Transfer Tools

Rclone

Ideal for cloud storage and large-scale transfers with parallelism:

    rclone copy /source/ remote:bucket-name --transfers=16 --checkers=8

GNU Parallel

Parallelize file uploads/downloads:

    find /source/ -type f | parallel -j8 scp {} user@destination:/target/

Multithreaded Tools

Use tools with built-in multithreading, such as bbcp, Aspera, or scp (with parallel scripts).

3. Network Optimization

Compression

Compress files before transfer to reduce the amount of data transmitted:

    gzip largefile.txt

Or combine with the transfer:

    tar czf - /source/ | ssh user@destination "tar xzf - -C /target/"

Bandwidth Management

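Limit bandwidth usage so the transfer does not saturate the link or trigger throttling:

With rsync (the --bwlimit value is in KiB per second by default):

    rsync --bwlimit=1000 -az /source/ /destination/

Use TCP tuning options to optimize network transfer rates. Window scaling is usually already enabled on modern Linux kernels; to confirm or set it:

    sysctl -w net.ipv4.tcp_window_scaling=1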

Use Faster Protocols

Use optimized transfer protocols:

SFTP: Secure but slower for high-volume transfers.

Rsync/SSH: Efficient for incremental and large transfers.

Aspera: High-speed UDP-based protocol.

Enable Transfer Acceleration (Cloud)

For Amazon S3 (acceleration must be enabled on the bucket first):

    aws s3 cp /source/ s3://your-bucket/ --recursive --region <region> --endpoint-url https://s3-accelerate.amazonaws.com

4. Error Handling and Resilience

Resume Transfers

Use tools that support resuming failed transfers:

Rsync:

    rsync -az --partial /source/ /destination/

AWS CLI: aws s3 cp has no resume option; use aws s3 sync, which skips objects that already exist unchanged in the bucket:

    aws s3 sync /source/ s3://bucket-name/

Retry Logic

Implement retry logic for failed transfers using scripts or tools.

Example using bash:

    for i in {1..5}; do
      scp file user@destination:/target/ && break || sleep 5
    done

5. Monitor and Analyze Performance

Real-Time Monitoring

Monitor progress with tools:

Rsync: --progress flag.

SSH: Pipe through pv for progress visualization:

    tar cf - /source/ | pv | ssh user@destination "tar xf - -C /target/"

Analyze Bottlenecks

Use tools like iperf or nload to check network performance.

Use iotop or dstat to monitor disk I/O on both source and destination.

6. Scale with Advanced Solutions

Distributed File Transfer

Use tools like Globus for efficient distributed data transfers.

Data Transfer Appliances

For extremely large datasets, consider appliances like AWS Snowball or offline methods to ship data physically.

Content Delivery Networks (CDNs)

Use CDNs for faster file distribution to end users.

By combining these strategies (parallelism, compression, protocol optimization, and monitoring) you can significantly enhance file transfer speed and reliability. Let me know if you'd like further customization for a specific use case!


You said:

Threadpool

ChatGPT said:


A thread pool is a collection of pre-initialized worker threads that stand ready to perform tasks. Instead of creating and destroying threads for each task, a thread pool manages a set of reusable threads, improving performance and resource utilization in multi-threaded applications.

Here’s how to effectively use and optimize thread pools in your application.

Why Use a Thread Pool?

Performance: Reduces the overhead of creating and destroying threads repeatedly.

Resource Management: Controls the number of concurrent threads, preventing system overload.

Scalability: Allows a controlled environment for task execution, making it easier to scale applications.

Key Concepts of Thread Pools

Core Threads: Minimum number of threads that are always kept alive.

**Max Threads


You said:

Improve java spring file transfer

ChatGPT said:


Improving file transfer in a Java Spring application involves optimizing both the code and the underlying infrastructure. Below are strategies to enhance file transfer performance and reliability.

1. Optimize File Transfer Code

Use Streaming for Large Files

Avoid loading the entire file into memory; instead, use streaming:

    @PostMapping("/upload")
    public ResponseEntity<String> uploadFile(@RequestParam("file") MultipartFile file) throws IOException {
        // Keep only the base name so a crafted filename cannot escape the target directory
        String safeName = Paths.get(file.getOriginalFilename()).getFileName().toString();
        try (InputStream inputStream = file.getInputStream()) {
            // Stream straight to disk instead of buffering the whole file in memory
            Files.copy(inputStream, Paths.get("target-directory", safeName), StandardCopyOption.REPLACE_EXISTING);
        }
        return ResponseEntity.ok("File uploaded successfully!");
    }

Enable Asynchronous File Transfers

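Use Spring's @Async annotation for non-blocking file uploads/downloads. This requires @EnableAsync on a configuration class, and the method must be called from another bean so the call goes through the Spring proxy:

    @Async
    public CompletableFuture<String> uploadFileAsync(MultipartFile file) {
        // File processing logic
        return CompletableFuture.completedFuture("Upload Complete");
    }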

Use Chunked Uploads for Large Files

Break large files into smaller chunks for efficient transfer and to handle interruptions gracefully:

    @PostMapping("/uploadChunk")
    public ResponseEntity<String> uploadChunk(@RequestParam("chunk") MultipartFile chunk,
                                              @RequestParam("fileId") String fileId) {
        // Append chunk data to the file
        return ResponseEntity.ok("Chunk uploaded successfully!");
    }

2. Optimize for Cloud Storage (e.g., AWS S3, Azure Blob)

Use SDKs for Direct Uploads

Use cloud-specific SDKs (e.g., AWS SDK for S3) to upload files directly to cloud storage instead of saving locally first:

    @PostMapping("/uploadToS3")
    public ResponseEntity<String> uploadToS3(@RequestParam("file") MultipartFile file) throws IOException {
        AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient();
        String bucketName = "your-bucket-name";
        // Set the content length so the SDK can stream instead of buffering the body in memory
        ObjectMetadata metadata = new ObjectMetadata();
        metadata.setContentLength(file.getSize());
        try (InputStream in = file.getInputStream()) {
            s3Client.putObject(bucketName, file.getOriginalFilename(), in, metadata);
        }
        return ResponseEntity.ok("File uploaded to S3!");
    }

Enable S3 Transfer Acceleration

Use Transfer Acceleration for faster uploads to Amazon S3:

    AmazonS3 s3Client = AmazonS3ClientBuilder.standard()
            .withAccelerateModeEnabled(true)
            .build();

3. Optimize File Transfer in REST APIs

Enable Compression

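Compress responses to reduce the amount of data sent. Response compression is configured under server.compression, separately from the multipart upload limits:

    server:
      compression:
        enabled: true
        min-response-size: 1024
    spring:
      servlet:
        multipart:
          enabled: true
          max-file-size: 50MB
          max-request-size: 50MB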

Use HTTP/2

Switch to HTTP/2 for better concurrency and reduced latency:

    server:
      http2:
        enabled: true

Resume Interrupted Uploads

Use ETag or file metadata to allow resumable file uploads:

    @PostMapping("/resumeUpload")
    public ResponseEntity<String> resumeUpload(@RequestParam("file") MultipartFile file,
                                               @RequestParam("offset") Long offset) {
        // Resume logic based on offset
        return ResponseEntity.ok("Upload resumed!");
    }

4. Parallelize File Transfers

Multithreaded File Upload

Use a ThreadPoolExecutor or Spring's TaskExecutor to run several uploads concurrently.
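The original example is cut off here. As a rough sketch of the idea using only the JDK, a fixed-size pool can run one task per file and collect the results. The class name ParallelTransferSketch and the transfer() method are hypothetical stand-ins for real upload logic, not part of any library:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class ParallelTransferSketch {

    /** Hypothetical stand-in for one file transfer; replace with real upload logic. */
    public static String transfer(String fileName) {
        return "done:" + fileName;
    }

    /** Runs transfer() for every file on a fixed-size pool; results keep the input order. */
    public static List<String> transferAll(List<String> fileNames, int poolSize) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        try {
            List<Callable<String>> tasks = new ArrayList<>();
            for (String name : fileNames) {
                tasks.add(() -> transfer(name));
            }
            List<String> results = new ArrayList<>();
            // invokeAll blocks until all tasks finish and returns futures in task order
            for (Future<String> future : pool.invokeAll(tasks)) {
                results.add(future.get());
            }
            return results;
        } finally {
            pool.shutdown(); // always release the worker threads
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(transferAll(List.of("a.bin", "b.bin", "c.bin"), 2));
    }
}
```

In a Spring application the pool would normally be a shared bean rather than created per call, so that the number of threads stays bounded across requests.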

In addition to using @Async, there are other strategies and tools to improve file transfer performance and reliability in a Java Spring application. Here are some approaches:

1. Reactive Programming with Spring WebFlux

For truly non-blocking and asynchronous file transfers, use Spring WebFlux:

Streaming File Upload:

    @PostMapping(value = "/upload", consumes = MediaType.MULTIPART_FORM_DATA_VALUE)
    public Mono<Void> uploadFile(@RequestPart("file") Flux<FilePart> filePartFlux) {
        return filePartFlux.flatMap(filePart -> {
            Path path = Paths.get("target-directory/" + filePart.filename());
            return filePart.transferTo(path);
        }).then();
    }

Streaming File Download:

    @GetMapping(value = "/download", produces = MediaType.APPLICATION_OCTET_STREAM_VALUE)
    public Mono<ResponseEntity<Resource>> downloadFile() {
        Path path = Paths.get("target-directory/large-file.txt");
        return Mono.just(ResponseEntity.ok()
                .header(HttpHeaders.CONTENT_DISPOSITION, "attachment; filename=large-file.txt")
                .body(new FileSystemResource(path)));
    }

2. Use CompletableFuture and ExecutorService

Combine CompletableFuture with a custom ExecutorService for better control:

    // Shared pool: creating a new ExecutorService on every request would leak threads
    private final ExecutorService executor = Executors.newFixedThreadPool(4);

    @PostMapping("/upload")
    public CompletableFuture<String> uploadFile(@RequestParam("file") MultipartFile file) {
        return CompletableFuture.supplyAsync(() -> {
            try {
                // File processing logic
                Files.copy(file.getInputStream(), Paths.get("target/" + file.getOriginalFilename()),
                        StandardCopyOption.REPLACE_EXISTING);
                return "File uploaded successfully!";
            } catch (IOException e) {
                throw new RuntimeException("File upload failed!", e);
            }
        }, executor);
    }

3. Apache Kafka for Asynchronous Processing

Use Apache Kafka to decouple file upload from processing:

Producer sends file metadata to Kafka after upload:

    @PostMapping("/upload")
    public ResponseEntity<String> uploadFile(@RequestParam("file") MultipartFile file) throws IOException {
        // Save the file
        Files.copy(file.getInputStream(), Paths.get("target/" + file.getOriginalFilename()));
        // Send metadata to Kafka
        kafkaTemplate.send("file-topic", file.getOriginalFilename());
        return ResponseEntity.ok("File uploaded and queued for processing!");
    }

Consumer processes files asynchronously:

    @KafkaListener(topics = "file-topic", groupId = "file-group")
    public void processFile(String fileName) {
        // Process the file
        Path path = Paths.get("target/" + fileName);
        System.out.println("Processing file: " + path);
    }

4. Chunked File Transfers

Handle large files by uploading them in chunks:

Frontend sends file chunks with an identifier.

Backend stores each chunk under its file ID and chunk number:

    @PostMapping("/uploadChunk")
    public ResponseEntity<String> uploadChunk(@RequestParam("chunk") MultipartFile chunk,
                                              @RequestParam("fileId") String fileId,
                                              @RequestParam("chunkNumber") int chunkNumber) {
        Path path = Paths.get("target/" + fileId + "_" + chunkNumber);
        try {
            Files.copy(chunk.getInputStream(), path, StandardCopyOption.REPLACE_EXISTING);
        } catch (IOException e) {
            return ResponseEntity.status(HttpStatus.INTERNAL_SERVER_ERROR).body("Failed to upload chunk");
        }
        return ResponseEntity.ok("Chunk uploaded successfully!");
    }

Reassemble chunks when all are uploaded.
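The reassembly step is not shown above. A minimal sketch, assuming chunks are stored as fileId_chunkNumber as in the handler, that numbering starts at 0, and that the total chunk count is known (for example, sent by the client); the class name ChunkAssembler is hypothetical:

```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;

public class ChunkAssembler {

    /**
     * Concatenates dir/fileId_0 .. dir/fileId_(totalChunks-1) into output.
     * Fails before writing anything if a chunk is missing.
     */
    public static Path assemble(Path dir, String fileId, int totalChunks, Path output) throws IOException {
        // Check completeness first so a partial upload never produces a truncated file
        for (int i = 0; i < totalChunks; i++) {
            Path chunk = dir.resolve(fileId + "_" + i);
            if (!Files.isRegularFile(chunk)) {
                throw new IOException("Missing chunk " + i + " for " + fileId);
            }
        }
        // newOutputStream creates the file or truncates an existing one by default
        try (OutputStream out = Files.newOutputStream(output)) {
            for (int i = 0; i < totalChunks; i++) {
                // Files.copy streams each chunk without loading it fully into memory
                Files.copy(dir.resolve(fileId + "_" + i), out);
            }
        }
        return output;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("chunks");
        Files.write(dir.resolve("demo_0"), "hello ".getBytes());
        Files.write(dir.resolve("demo_1"), "world".getBytes());
        Path result = assemble(dir, "demo", 2, dir.resolve("demo.txt"));
        System.out.println(new String(Files.readAllBytes(result)));
    }
}
```

Deleting the chunk files after a successful assembly is left out here; whether to do that immediately or keep them for a retry window is a design choice.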

5. WebSockets for Real-Time Progress

Use WebSockets to provide real-time feedback on file transfer progress:

    @Controller
    public class WebSocketController {
        @MessageMapping("/uploadStatus")
        @SendTo("/topic/progress")
        public String sendProgress(String status) {
            return status;
        }
    }

Send progress updates from the server while uploading:

    simpMessagingTemplate.convertAndSend("/topic/progress", "50% completed");

6. Redis or Database Queues

Use a Redis queue or a database to handle file metadata asynchronously.

Enqueue metadata after upload:

    redisTemplate.opsForList().leftPush("fileQueue", fileMetadata);

Process the queue in the background:

    String metadata = redisTemplate.opsForList().rightPop("fileQueue");

7. Use MultipartConfig for Large Files

Configure multipart file handling for large files in application.properties:

    spring.servlet.multipart.max-file-size=1GB
    spring.servlet.multipart.max-request-size=2GB

Custom bean for fine-tuning (recent Spring Boot versions take a DataSize rather than a String):

    @Bean
    public MultipartConfigElement multipartConfigElement() {
        MultipartConfigFactory factory = new MultipartConfigFactory();
        factory.setMaxFileSize(DataSize.ofGigabytes(1));
        factory.setMaxRequestSize(DataSize.ofGigabytes(2));
        return factory.createMultipartConfig();
    }

8. Use AWS S3 for Direct Uploads

Use pre-signed URLs to offload upload work to the client:

    @GetMapping("/generatePresignedUrl")
    public ResponseEntity<String> generatePresignedUrl(@RequestParam String fileName) {
        AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient();
        GeneratePresignedUrlRequest generatePresignedUrlRequest =
                new GeneratePresignedUrlRequest("bucket-name", fileName)
                        .withMethod(HttpMethod.PUT)
                        .withExpiration(new Date(System.currentTimeMillis() + 3600 * 1000)); // valid for 1 hour
        URL url = s3Client.generatePresignedUrl(generatePresignedUrlRequest);
        return ResponseEntity.ok(url.toString());
    }

The client uploads directly to S3 using the URL.

9. Optimize File Transfer for High Performance

Compression

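Compress files before upload to reduce transfer size. Here compress() is a placeholder for your own helper, and getBytes() loads the whole file into memory, so this form only suits small files:

    byte[] compressedData = compress(file.getBytes());
    Files.write(Paths.get("compressed-file.gz"), compressedData);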
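For larger files, compression can be streamed so memory use stays constant. A minimal sketch using the JDK's GZIPOutputStream; the class name GzipSketch and its method names are illustrative only:

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

public class GzipSketch {

    /** Streams source into a gzip file at target without holding the file in memory. */
    public static void gzip(Path source, Path target) throws IOException {
        try (InputStream in = Files.newInputStream(source);
             OutputStream out = new GZIPOutputStream(Files.newOutputStream(target))) {
            in.transferTo(out); // Java 9+; copies in fixed-size buffers
        }
    }

    /** Reads a gzip file back into bytes; used here only to check the round trip. */
    public static byte[] gunzip(Path gzFile) throws IOException {
        try (InputStream in = new GZIPInputStream(Files.newInputStream(gzFile))) {
            return in.readAllBytes();
        }
    }

    public static void main(String[] args) throws IOException {
        Path source = Files.createTempFile("sample", ".txt");
        Path target = Files.createTempFile("sample", ".gz");
        Files.write(source, "some repetitive text ".repeat(100).getBytes());
        gzip(source, target);
        System.out.println(Files.size(source) + " bytes -> " + Files.size(target) + " bytes");
    }
}
```

Compression helps most with text-like data; already compressed formats such as JPEG or ZIP gain little and still cost CPU time.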

Load Balancing

Use a load balancer (e.g., AWS ALB or NGINX) for scalable upload endpoints.

Retry Logic

Implement retries for failed transfers to improve reliability:

    int maxRetries = 3;
    int retries = 0; // attempts that have failed so far
    while (retries < maxRetries) {
        try {
            // Attempt file upload
            break;
        } catch (IOException e) {
            retries++;
            if (retries == maxRetries) {
                throw e; // give up after the last attempt
            }
        }
    }
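Retrying immediately can hammer a server that is already struggling, so a common refinement is to wait longer after each failure. A minimal sketch of retry with exponential backoff; RetrySketch and withRetry are hypothetical names, and only IOException is treated as retryable here:

```java
import java.io.IOException;
import java.util.concurrent.Callable;

public class RetrySketch {

    /**
     * Calls action until it succeeds or maxAttempts is reached.
     * The wait doubles after every failed attempt, starting at initialDelayMs.
     */
    public static <T> T withRetry(Callable<T> action, int maxAttempts, long initialDelayMs) throws Exception {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        long delay = initialDelayMs;
        for (int attempt = 1; ; attempt++) {
            try {
                return action.call();
            } catch (IOException e) {
                if (attempt >= maxAttempts) {
                    throw e; // out of attempts: surface the last failure
                }
                Thread.sleep(delay);
                delay *= 2; // exponential backoff
            }
        }
    }

    public static void main(String[] args) throws Exception {
        int[] calls = {0};
        String result = withRetry(() -> {
            // Simulated transfer that fails twice before succeeding
            if (++calls[0] < 3) {
                throw new IOException("temporary failure");
            }
            return "uploaded";
        }, 5, 100);
        System.out.println(result + " after " + calls[0] + " attempts");
    }
}
```

Adding random jitter to the delay is a frequent extension when many clients may retry at the same moment.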

These strategies can enhance file transfer performance, scalability, and reliability in Java Spring applications. Let me know if you need code examples for specific use cases!


You said:

S3 reliability

ChatGPT said:


Ensuring reliability when transferring files to Amazon S3 involves minimizing failures, handling errors effectively, and ensuring data integrity. Below are best practices and techniques to achieve reliable file transfers to S3:

1. Use Multipart Upload for Large Files

Multipart uploads split large files into smaller parts, upload them in parallel, and reassemble them in S3. This improves reliability and speeds up transfers.

Example Using AWS SDK (Java)

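    AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient();
    String bucketName = "your-bucket-name";
    String fileName = "large-file.zip";
    String keyName = "uploads/" + fileName;
    File file = new File(fileName);
    // TransferManager switches to multipart upload automatically for large files
    TransferManager tm = TransferManagerBuilder.standard().withS3Client(s3Client).build();
    try {
        Upload upload = tm.upload(bucketName, keyName, file);
        upload.waitForCompletion();
        System.out.println("File uploaded successfully!");
    } catch (AmazonClientException e) {
        System.err.println("Upload failed: " + e.getMessage());
    } catch (InterruptedException e) {
        Thread.currentThread().interrupt(); // waitForCompletion() can be interrupted
        System.err.println("Upload interrupted");
    } finally {
        tm.shutdownNow(false); // release transfer threads, keep the S3 client open
    }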

2. Use Retry Logic

Add retry mechanisms to handle intermittent network issues or service interruptions.

AWS SDK Retry Settings

The AWS SDK retries failed requests automatically, but you can customize it:

    ClientConfiguration config = new ClientConfiguration();
    config.setRetryPolicy(PredefinedRetryPolicies.getDefaultRetryPolicyWithCustomMaxRetries(5));
    AmazonS3 s3Client = AmazonS3ClientBuilder.standard()
            .withClientConfiguration(config)
            .build();

3. Enable Transfer Acceleration

Transfer Acceleration uses AWS edge locations to improve upload speeds over long distances.

Enable Transfer Acceleration in S3

Enable it in the S3 bucket settings.

Update your client code to use the accelerated endpoint:

    AmazonS3 s3Client = AmazonS3ClientBuilder.standard()
            .withAccelerateModeEnabled(true)
            .build();

4. Validate Data Integrity

Use MD5 checksums to ensure the uploaded file matches the original file.

AWS CLI Example

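Pass the base64-encoded MD5 digest to the low-level put-object command; S3 rejects the upload if the digest does not match the data it received:

    aws s3api put-object --bucket your-bucket --key file.txt --body file.txt --content-md5 <base64-encoded-md5>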

Java Example

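    // DigestUtils comes from Apache Commons Codec
    String md5;
    try (InputStream in = new FileInputStream(file)) {
        md5 = Base64.getEncoder().encodeToString(DigestUtils.md5(in));
    }
    ObjectMetadata metadata = new ObjectMetadata();
    metadata.setContentMD5(md5);
    metadata.setContentLength(file.length());
    // Open a fresh stream for the upload and close it when done
    try (InputStream in = new FileInputStream(file)) {
        s3Client.putObject(new PutObjectRequest(bucketName, keyName, in, metadata));
    }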
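The Content-MD5 value is the base64 encoding of the raw 16-byte digest, not of its hex string. A minimal sketch that computes it with the JDK alone, without Commons Codec; the class name ContentMd5 is illustrative:

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Base64;

public class ContentMd5 {

    /** Returns the base64-encoded MD5 digest of everything readable from in. */
    public static String base64Md5(InputStream in) throws IOException, NoSuchAlgorithmException {
        MessageDigest md = MessageDigest.getInstance("MD5");
        byte[] buffer = new byte[8192];
        int read;
        // Feed the digest in chunks so large files are never fully in memory
        while ((read = in.read(buffer)) != -1) {
            md.update(buffer, 0, read);
        }
        // Encode the raw digest bytes, not their hex representation
        return Base64.getEncoder().encodeToString(md.digest());
    }

    public static void main(String[] args) throws Exception {
        byte[] data = "hello".getBytes(StandardCharsets.UTF_8);
        System.out.println(base64Md5(new ByteArrayInputStream(data)));
    }
}
```

The resulting string is what the --content-md5 option and ObjectMetadata.setContentMD5 expect.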

5. Monitor File Transfers

Use CloudWatch Metrics to monitor S3 request success, failures, and latencies.

Monitor transfer progress with TransferManager in the AWS SDK:

    TransferManager tm = TransferManagerBuilder.standard().withS3Client(s3Client).build();
    Upload upload = tm.upload(bucketName, keyName, file);
    upload.addProgressListener((ProgressListener) progressEvent -> {
        System.out.println("Transferred bytes: " + progressEvent.getBytesTransferred());
    });
    upload.waitForCompletion();

6. Handle Failures Gracefully

Catch failures from TransferManager so an interrupted upload can be reported and retried. TransferManager also offers pause() and resumeUpload() for resumable transfers; the example below covers only the failure handling:

    TransferManager tm = TransferManagerBuilder.standard().build();
    Upload upload = tm.upload(bucketName, keyName, file);
    // Add a progress listener
    upload.addProgressListener((ProgressListener) progressEvent -> {
        System.out.println("Transferred: " + progressEvent.getBytesTransferred());
    });
    // Graceful failure handling
    try {
        upload.waitForCompletion();
    } catch (AmazonClientException e) {
        System.err.println("Error occurred: " + e.getMessage());
    } catch (InterruptedException e) {
        Thread.currentThread().interrupt();
        System.err.println("Upload interrupted");
    }

7. Use Pre-Signed URLs

Generate pre-signed URLs for reliable, time-limited uploads directly from the client, reducing the load on your server.

Generate Pre-Signed URL

    GeneratePresignedUrlRequest generatePresignedUrlRequest =
            new GeneratePresignedUrlRequest(bucketName, keyName)
                    .withMethod(HttpMethod.PUT)
                    .withExpiration(new Date(System.currentTimeMillis() + 3600 * 1000)); // valid for 1 hour
    URL url = s3Client.generatePresignedUrl(generatePresignedUrlRequest);
    System.out.println("Pre-Signed URL: " + url);

8. Use S3 Event Notifications

Configure S3 Event Notifications to trigger AWS Lambda or SNS for post-upload validation, processing, or alerting on failures.

Example: Notification for Upload Completion

Configure your S3 bucket to send an event to an SQS queue or Lambda function upon successful upload.

9. Leverage Data Replication

Use S3 Cross-Region Replication (CRR) or Same-Region Replication (SRR) for redundancy.

Automatically replicate files to another bucket for disaster recovery.

10. Automate with AWS DataSync

Use AWS DataSync for automated and highly reliable file transfers between on-premises storage and S3. It includes built-in retry and integrity checks.

11. Optimize Network Performance

Compress files before uploading (gzipCompress is a placeholder for your own helper):

    Files.write(Paths.get("compressed-file.gz"), gzipCompress(fileContent));

Use efficient transfer protocols (e.g., HTTP/2 if supported by your client).

12. Secure Transfers

Always use HTTPS for secure communication.

Use S3 Bucket Policies and IAM Roles to restrict access to authorized users.

Example: Complete Reliable Upload Function

    public void reliableUpload(File file, String bucketName, String keyName) {
        AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient();
        TransferManager tm = TransferManagerBuilder.standard().withS3Client(s3Client).build();
        try {
            // Multipart upload (TransferManager chooses it automatically for large files)
            Upload upload = tm.upload(bucketName, keyName, file);
            upload.addProgressListener((ProgressListener) progressEvent -> {
                System.out.println("Transferred bytes: " + progressEvent.getBytesTransferred());
            });
            upload.waitForCompletion();
            System.out.println("File uploaded successfully!");
        } catch (AmazonClientException e) {
            System.err.println("Upload failed: " + e.getMessage());
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt for callers
            System.err.println("Upload interrupted");
        } finally {
            tm.shutdownNow();
        }
    }

0 notes

Text

Towards "Blackhand Servitor" sampler, the Unionized Vision Quest, '(thread 16^12) '(article 0x29)

Follow-up article to the following two:

Kate: Even the black sun shall set one day. And as we shall rejoice the several eons we came to experience together, am I right to think such Ava? Ava: Sure thing Kate, I agree.

Components

Empathic mutualism, esoteric empowerment, systemic renewal, queer-ness & transgender & neurodivergence issues, data privacy, AI automation done well, morphological freedoms, communion with nature, sapient machine rights, grounded optimism, Turtle Island-themed, seventies cassette retrofuturism aesthetic, LISP code poetry & immersive tech props, data recovery & archival processes, self-discovery & insightful outer journey, copyleft publishing license & production technologies, multilingual / multimedia exports, affirmation therapy, mind synthesis / scanning;

Chronokinesis, True Polymorphy, meta? Retrocognition, distant far far away future dream(s?), Pinegroove as Mascouche+Rostock-like locations, lore-accurate branding?, historical tech remastered, tramway sequence, symbolic computation, touchscreen PDAs, expert system, Turbochannel Eurocard systems, Soyuzmultfilm animated films web hosting webring, lucid dreams, vivid daydreams, manifestation games, benevolent use of power, spiritual awakening?, societal harmony & social cohesion, humane transparency, RTTY shortwave broadcasting data, psyche agent archival as pseudo-afterlife, ancestor blessings, written bronze scroll records, spell contracts, preservation of knowledge, communal insights from humble tribes, shamans, oracles, priests, monks, clerks, foresight, memory rituals, bulletin board systems, tables, nesting spreadsheets, calendars, newer rituals, macrocosms and paracosms, libraries, changelings, vocal tales, urban legends, rural stories;

TrenchBroom, Blender 3.6+, Krita, Inkscape, QOwnNotes, LibreOffice, Kate, Godot 4 stable branch, Emacs, A2 Bluebottle Oberon, OpenDylan, Smalltalk, Fish, Tuxedo Computers, Pine64, Librem 5?, Elisa, Itch.io as an app, nsCDE, KDE e.V., FSF as GLOSS Foundation, Symbolics as Utalics, DEC as Pflaumen, PC/M as Magna Charter Macrotronics, IBM as EBM, Sun Microsystems as Luanti *, DuckDuckGo, SearX, Startpage, GOG Galaxy, Lutris, Proton, Wine, FreeBASIC?, ObjectREXX, Es, Acme, tcsh, musl, Okteta, K3B, GNU coreutils, Bel, Arc, SimH, ...

16^12 - Angora history path and "androids congress worldwide project" context;

Nil Blackhand as camera POV, Kate Ker (INTJ autistic erudite historian transfem geocenter faction) + Ava Booksword (ENFP blonde social assistance worker synthetic android woman of syndicalist faction) modular intrigue storylets pathway;

Ana - Kata connection (focused on the time interpretation...), ocean of clades, afterlives, sentient / divine cosmic tree, living sapient cosmos ecosystems theory, black hand of history, mourning + celebrating the dead, cycles of life death and renewal, intrigue threads, manias & archaeology (mummy, tombs...), sophont nuances, dynastic lineages, embracers of change and luddites, improve the world, "become the revolution you seek in life", systemically parallel lives' overlaps, systemic progress, editing your reality and archiving individuals through divine software, traditions, pattern recognition, manifestation journeys, mutual guidance, giving and sharing, globalization, radio-pathy, van crawl, vision quests, romances, passions, empathies, special interests & other parallel expression idioms;

Shoshona, the black Angora fem housecat;

Pohakantenna (Shoshones' own contemporary religion?), Calvinism/Arianism as Occitan Baha'i dialect;

Utchewn conlang (Numic-derived language) with Blackfoot, Coast Salish & Iranian influences?;

(Shoshone Union's) Unionists (Republicans, Liberty, Democrats) vs Progressives caucuses (Syndicalists, Green/Georgism/Geocenter, Harmony);

0 notes

Text

Ever since I was introduced to the Discworld novels, some 30+ years ago, I've had an insane love for them.

I couldn't put my finger on it at first, I just knew that they spoke to my soul. As I've grown, and learned more, I now understand that Sir Pratchett had an amazing way of placing the world around us into a degree just separated enough from reality, and in such a way that I could always find something new to understand about human frailty and nature, politics, death, life, and love.

I have become a better person because of it. By understanding the parallels between the Discworld and reality, I was also able to better dissect how our world was, and teach my child the why's and how's of what was going on - and perhaps a better way to handle interpersonal relationships.

Death will not stop his messages from continuing to be shared and loved throughout the world.

GNU Sir Terry Pratchett.

This is a bit of a ramble, but:

I truly believe that Sir Terence David John Pratchett is the greatest fantasy author of the post-Tolkien era. No one has done it as well as he did - prose, characters, tone, plot, themes, humor - he was the master of all of them.

His words danced off the page, from single lines like Death's

"LORD, WHAT CAN THE HARVEST HOPE FOR, IF NOT FOR THE CARE OF THE REAPER MAN?"

To the Vimes Boots Theory or Humans Need Fantasy monologues.

His characters are some of the most richly textured I've ever encountered - Death and Sam Vimes and Moist von Lipwig and Granny Weatherwax, each of them feels like they were given thought and care and used to tell stories that they were uniquely suited to tell.

He controlled tone like a master, from slapstick comedy to serious satire to tense moments where you feel that, just for a second, things might not be okay.

His plots were always believable in the context of the worlds he was telling, and were always engrossing - that the metaphysical adventures of Death and co. felt as natural a fit for Discworld as the starting of the setting's first newspaper, the solving of street crime and unraveling conspiracies and the grand re-opening of a post office is proof enough of that.

He used these plots to explore themes of responsibility, social inequality and duty to a person's community with razor-sharp wit and a deep anger for the injustice of the world around him. He fought the evils of the world with a pen and paper, even as he died. No one will ever do it like he did, but that he did it himself might just be good enough.

He brought comfort and joy and happiness and thought and introspection to millions.

GNU, Terry Pratchett. Mind how you go.

2K notes

·

View notes

Text

Emacs torture…

Was trying to switch my text editor / IDE to GNU Emacs because it exemplifies Unix. I got the Python syntax checker to work but it wouldn’t autocomplete. Spent some time watching Apple’s coding videos and perusing their sample code for help programming vrs.

Meanwhile we were talking in mindspace about Jonah and sinful Nineveh, the city built by Nimrod, which paralleled today’s fallen world and the reluctance on both fighting sides good and bad, to admit and atone for their mistakes. And they prayed for a miracle to break the deadlock. They knew there was a God, they just needed a reason to repent, a sign that He loved them still.

Almost midnight and I’m wide awake. Blows to the head from the witchy Liews rain down around me. It’s hard to survive this: what was brought and tacked over my mindspace by the British. Not sure what to do with the night. Maybe take it easy until later? Yea. Need to think, find that silver bullet...

0 notes

Text

The best free photo editing software for macOS

Free photo editors for macOS make it simple to fix photos shot at the wrong angle or in poor light, remove objects, and more.

Users need free photo editing software on macOS in many different situations. First, there is the wish to adjust and improve personal photos. You may want to make a keepsake photo look more striking, remove small blemishes, or create a distinctive image to share on social media.

Second, free photo editing software is very useful for personal or academic projects. Students often need to edit images for presentations or research reports. People working on digital art projects or personal websites will also find value in free editing tools.

Third, when you want to create online content for your blog, website, or e-commerce site, free photo editing software helps you produce engaging, professional images. This can improve the viewer's experience and strengthen your brand image.

In short, free photo editing software helps people turn ideas into reality, from improving personal photos to creating content for personal, academic, and business projects. That makes it an important tool in today's digital world.

GIMP (GNU Image Manipulation Program)

GIMP (GNU Image Manipulation Program) is a powerful open-source photo editor for Mac users. With GIMP you can apply many professional editing effects without paying for expensive software. It offers a wide range of tools and features, including layer editing, color adjustment, brushes, and many special effects. With a customizable interface and an active developer community, GIMP is a good choice for beginners and professional photographers alike. You can download GIMP for free on the Mac and edit photos with few creative limits.

Krita

Krita is a versatile, free, open-source application for image editing and digital painting that works well for Mac users. With a friendly, easy-to-use interface, Krita supports both photo editing and precise digital painting. Its powerful drawing tools and varied brush sets let you create anything from digital paintings to animation. Krita also includes features such as annotations, layer creation and management, and stylus support, which make creative work easier and more enjoyable. With continuous development and a large community, Krita is an excellent choice for photographers, digital painters, or anyone who enjoys creating on their Mac.

Paint.NET

Paint.NET is a free image and graphics editor with an intuitive, easy-to-use interface. However, it was originally developed for Windows and has no official Mac version. To use Paint.NET on a Mac, you can try running it through a Windows compatibility layer such as Wine, or inside a Windows virtual machine with Parallels Desktop.

Paint.NET has a substantial toolset for basic and advanced photo editing, including layers, effects, color adjustments, and many other useful features. It can also be used for digital painting, though it is a raster editor and does not natively edit vector images.

Although there is no official Mac version, Paint.NET is still a good option if you already know its interface from Windows. It is also a free photo editor with powerful features. However, you will need to do some extra work to install and run it in your Mac environment.

PhotoScape X

PhotoScape X is a free, versatile photo editing app for Mac that makes it easy to create and edit images. With a friendly interface, PhotoScape X offers many creative tools, including layer editing, color adjustment, noise removal, and a variety of effects.

One strength of PhotoScape X is its ability to create photo collages, animated photo galleries, and animated GIFs. It also supports batch editing, which saves time when processing many images at once. The app also offers RAW editing, which suits professional photographers.

With its variety and usefulness, PhotoScape X is an ideal choice for both beginners and experienced photo editors on Mac. It is a powerful, free, easy-to-use tool for turning creative ideas into attractive photos.

Seashore

Seashore is an open-source photo editor for Mac, designed with a simple, easy-to-use interface. Built on GIMP's open-source code, Seashore offers basic image editing and effects without requiring you to learn a complex interface.

Although Seashore focuses on basic features such as cropping, merging layers, color adjustment, and effects, it still comfortably meets the everyday editing needs of Mac users. A standout advantage is its layer support, which lets you build up an edit across multiple layers.

Seashore is backed by a growing support and development community, which means regular updates and bug fixes. If you are a Mac user who wants a simple, effective photo editor at no cost, Seashore is a good option to consider.

Pixlr X

Pixlr X is a free online photo editor that runs easily on your Mac through a web browser. With a friendly, intuitive interface, Pixlr X is a great choice for anyone who wants to edit photos without downloading or installing software.

With Pixlr X, you can make many basic and advanced edits, such as cropping, color adjustment, noise removal, and applying distinctive effects. It also supports common image formats such as JPEG and PNG, as well as many RAW formats.

One of Pixlr X's strengths is its easy integration with online storage services such as Google Drive and Dropbox, which helps you manage and share images conveniently.

With powerful features and convenient online access, Pixlr X is a great tool for quick, high-quality photo editing on your Mac, with no extra software to download or install.

Preview (the default macOS app for viewing and editing images)

Preview is built into every Mac and is mainly used to view PDF files and images. Although it is not a professional photo editor, it has some useful features for viewing photos and making basic edits.

With Preview, you can open, view, and even edit image files such as JPEG, PNG, TIFF, and many other formats. Basic editing features include cropping, rotating, resizing, and marking up images with shapes and handwriting. Preview also lets you add annotations, signatures, and notes to PDF files.

Another handy feature of Preview is the ability to combine multiple PDF files into one or split a PDF into separate files.

Although it cannot compare with professional photo editors, Preview is still a convenient tool for viewing and making basic edits to images and PDF files on a Mac without downloading any third-party software. That makes it an important part of the built-in macOS toolset.

Author: Truong Giang | Minh Tuấn Mobile
