#google Gemma AI

Text

Discover Gemma AI: Google's Breakthrough in Pre-Trained Models

Google has unveiled Gemma AI, a family of open, pre-trained AI models aimed at developers and researchers. Gemma is designed to speed up the development and deployment of AI applications.

| Feature | Description |
| --- | --- |
| Framework | Supports JAX, PyTorch, and TensorFlow (via Keras 3.0) |
| Models | Offers pre-trained models in image… |


Text

Break Free from Big Tech's AI Clutches: HuggingChat Lets You Explore Open-Source Chatbots

Tired of big tech controlling your AI chatbot experience? Break free and explore a whole universe of open-source chatbots with HuggingChat! This revolutionary platform lets you experiment with different AI models from Meta, Google, Microsoft, and more.

Forget about ChatGPT, Microsoft Copilot, and Google Gemini. While these AI chatbots are impressive, they’re locked down by their corporate creators. But what if you could explore a whole universe of open-source chatbots that you can actually run, modify, and customize yourself?

Enter HuggingChat, a…

#AI#chatbot#ChatGPT#Gemma#Google Gemini#Hugging Chat#Hugging Face#llama 3#Microsoft Copilot#Mistral AI#Mixtral#Open source#Phi-3#privacy


Text

"Chatbot Wars": Elon Musk Releases xAI's Grok Chatbot Under Open-Source - Technology Org

New Post has been published on https://thedigitalinsider.com/chatbot-wars-elon-musk-releases-xais-grok-chatbot-under-open-source-technology-org/

Elon Musk announced on Monday that his artificial intelligence startup, xAI, would be open-sourcing its ChatGPT competitor, “Grok”.

Coding artificial intelligence systems – illustrative photo. Image credit: Kevin Ku via Unsplash, free license

This decision comes shortly after Musk filed a lawsuit against OpenAI, accusing the organization of deviating from its original mission in favor of a for-profit approach.

Musk has consistently voiced concerns about major technology companies, like Google, utilizing technology for financial gain. In response to the lawsuit, OpenAI revealed emails indicating Musk’s support for a for-profit entity and a potential merger with Tesla.

Musk’s move to open-source Grok allows the public to freely access and experiment with the underlying code, aligning xAI with companies like Meta and Mistral, both of which have open-sourced AI models. Google has also contributed to this trend by releasing an open-source AI model named Gemma, offering developers the opportunity to customize it based on their requirements.

While open-sourcing technology can accelerate innovation, concerns have been raised about the potential misuse of open-source AI models for malicious purposes, such as the development of chemical weapons or the creation of a super-intelligence beyond human control.

Musk has expressed his desire to establish a “third-party referee” to oversee AI development and raise alarms if needed. In an effort to provide an alternative to OpenAI and Google, Musk launched xAI last year with the goal of creating a “maximum truth-seeking AI.”

Musk had previously indicated his preference for open-source AI, emphasizing the original intent of OpenAI to be a nonprofit open-source organization before transitioning to a closed-source model for profit.

Written by Alius Noreika

#A.I. & Neural Networks news#ai#ai model#approach#artificial#Artificial Intelligence#Authored post#chatbot#Chatbot Grok#chatbots#chatGPT#chemical#code#coding#Companies#developers#development#Elon Musk#Featured information processing#financial#Gemma#Google#grok#human#Innovation#intelligence#it#lawsuit#meta#Mistral


Text

Meet Gemma: Your New Secret Weapon for Smarter AI Solutions from Google!

Gemma, Google’s new generation of open models, is now a reality. The tech major released two versions of Gemma, a new lightweight family of open artificial intelligence (AI) models, on Wednesday, February 21.

Gemma is a group of modern, easy-to-use models made with the same advanced research and technology as the Gemini models. Created by Google DeepMind and other teams at Google,…

#AI innovation#Colab#developers#diverse applications#events#exploration#free credits#Gemma#Google Cloud#Kaggle#model family#open community#opportunities#quickstart guides#researchers


Text

Google's new AI model is available!

Discover the heart of #Gemma, #Google's new series of open #AI models that can run locally:

https://nowadais.com/google-gemma-new-open-models-ai-research

#ArtificialIntelligence #research #technology #TechNews #TechInnovation

#artificial intelligence#ai technology#ai tools#technews#inteligência artificial#artificial#technology#google#tech#gemma


Text

Meta’s online ad library shows the company is hosting thousands of ads for AI-generated, NSFW companion or “girlfriend” apps on Facebook, Instagram, and Messenger. They promote chatbots offering sexually explicit images and text, using NSFW chat samples and AI images of partially clothed, unbelievably shaped, simulated women.

Many of the virtual women seen in ads reviewed by WIRED are lifelike—if somewhat uncanny—young, and stereotypically pornographic. Prospective customers are invited to role-play with an AI “stepmom,” connect with a computer-generated teen in a hijab, or chat with avatars who promise to “get you off in one minute.”

The ads appear to be thriving despite Meta’s ad policies clearly barring “adult content,” including “depictions of people in explicit or suggestive positions, or activities that are overly suggestive or sexually provocative.”

That’s created a new front in debates over the clash between AI and conventional labor. Some human sex workers complain that Meta is letting chatbots multiply, while unfairly shutting their older profession out of its platforms by over-enforcing rules about adult content.

“As a sex worker, if I put anything like ‘I will do anything for you, I will make you come in a minute’ I would be deleted in an instant,” says Gemma Rose, director of the Pole Dance Stripper Movement, a UK-based sex-worker rights and pole-dance event organization.

Meta’s policies forbid users from showing nudity or sexual activity and selling sex, including sexting. Rose and other sex-worker advocates say the company seems to apply a double standard in permitting chatbot apps to promote NSFW experiences while barring human sex workers from doing the same.

People who post about sex education, sex positivity, or sex work have for years complained the platform unfairly quashes their content. Meta has limited some of Rose’s posts from being shown to non-followers, screenshots seen by WIRED show. Her personal Instagram account and one for her organization have previously been suspended for violating Meta policies.

“Not that I agree with a lot of the community guidelines and rules and regulations, but these [ads] blatantly go against their own policies,” says Rose of the sexual chatbots promoted on Meta platforms. “And yet we’re not allowed to be uncensored on the internet or just exist and make a living.”

WIRED surveyed chatbot ads using Meta’s ad library, a transparency tool that can be used to see all the ads currently running across its platforms, all ads shown in the EU in the past year, and ads from the past seven years related to elections, politics, or social issues. Searches showed that at least 29,000 ads had been published on Meta platforms for explicit AI “girlfriends,” with most using suggestive, sex-related messaging. There were also at least 19,000 ads using the term “NSFW” and 14,000 offering “NSFW AI.”

Some 2,700 ads were active when WIRED contacted Meta last week. A few days later Meta spokesperson Ryan Daniels said that the company prohibits ads that contain adult content and was reviewing the ads and removing those that violated its policies. “When we identify violating ads we work quickly to remove them, as we’re doing here,” he said. “We continue to improve our systems, including how we detect ads and behavior that go against our policies.”

However, 3,000 ads for “AI girlfriends” and 1,100 containing “NSFW” were live on April 23, according to Meta’s ad library.

WIRED’s initial review found that Hush, an AI girlfriend app downloaded more than 100,000 times from Google’s Play store, had published 1,700 ads across Meta platforms, several of which promise “NSFW” chats and “secret photos” from a range of lifelike female characters, anime women, and cartoon animals.

One shows an AI woman locked into medieval prison stocks by the neck and wrists, pledging, “Help me, I will do anything for you.” Another ad, targeted using Meta’s technology at men aged 18 to 65, features an anime character and the text “Want to see more of NSFW pics?”

Several of the 980 Meta ads WIRED found for “personalized AI companion” app Rosytalk promise around-the-clock chats with very-young-looking AI-generated women. They used tags including “#barelylegal,” “#goodgirls,” and “teens.” Rosytalk also ran 990 ads under at least nine brand names on Meta platforms, including Rosygirl, Rosy Role Play Chat, and AI Chat GPT.

At least 13 other apps for AI “girlfriends” have promoted similar services in Meta ads, including “nudifying” features that allow a user to “undress” their AI girlfriend and download the images. A handful of the girlfriend ads had already been removed for violating Meta’s advertising standards. “Undressing” apps have also been marketed on mainstream social platforms, according to social media research firm Graphika, and on LinkedIn, the Daily Mail recently reported.

Some users of so-called AI companions say they can help combat loneliness, while others report that they feel like a real partner. Not all of the ads found by WIRED promote only titillation; some also suggest that an explicit AI chatbot could provide emotional support. “Talk to anyone! You’re not alone!” reads one of Hush’s ads on Meta platforms.

Carolina Are, an innovation fellow researching social media censorship at the Center for Digital Citizens at Northumbria University in the UK, says that Meta makes it extremely difficult for human sex workers to advertise on its platforms.

“When people are trying to work through and profit off their own body, they are forbidden,” says Are, who has helped sex workers reactivate lost and unfairly suspended accounts on Meta platforms. “While AI companies mostly powered by bros that exploit images already out there are able to do that.”

Are says the sexually suggestive AI girlfriends remind her of the unsophisticated and generic early days of internet porn. “Sex workers engage with their customers, subscribers, and followers in a way that is more personalized,” she says. “This is a lot of work and emotional labor beyond the sharing of nude images.”

Limited information is available about how the AI apps are built or how their underlying text- or image-generation algorithms were trained. One app used the name Sora, apparently to suggest a connection to OpenAI’s video generator of that name, which has not been publicly released.

The developers behind the apps advertising explicit AI girlfriends are shadowy. None of the developers listed on Google Play or Facebook as creating the apps promoted on Meta’s platforms responded to requests for comment.

Mike Stabile, director of public affairs at the Free Speech Coalition, an adult-industry nonprofit trade association, sees the apps promising explicit AI girlfriends and their advertising tactics as “scammy.” While the adult industry is banned from advertising online, AI apps are “flooding the zone,” he says. “That’s the paradox of censorship: You end up censoring or silencing an actual sex worker and allowing all these weeds to flourish in their place.”

FOSTA-SESTA, anti-sex-trafficking legislation signed into US law in 2018, made platforms responsible for what is posted online, vastly limiting adult content. However, it resulted in consensual sex work being treated as trafficking in the digital world, shutting adult content creators out of online life and making already marginalized sex workers more vulnerable.

If Meta wipes the AI girlfriend ads from its platforms, it might emulate past sweeps of human sex workers. Despite diligently trying to follow Meta’s guidelines, the Pole Dance Stripper Movement’s account was banned “without warning” during a wave of removals of at least 45 sexuality-related accounts in June 2023, Rose says. Meta eventually rolled back some of the deletions, citing an error. But for sex workers on social media, such events are a recurring feature.

Rose’s personal account and its backup were also deleted in June 2021 during the Covid pandemic after she shared a photo, she says, of a pole-dancing workshop. She was hosting online pole-dancing classes and posting on the adult subscription site OnlyFans at the time. “My business was gone overnight,” she says. “I didn’t have a way to sustain myself.”

“OK, so I got deleted,” Rose adds. “But these companies are allowed to put out this kind of shit that sex workers aren’t allowed to? It makes no sense.”


Text

google bard AI for enterprise [feat gemma 2]: gemini pro plus (limited collector's edition) ((bard of the year edition)) (((re-mastered)))


Text

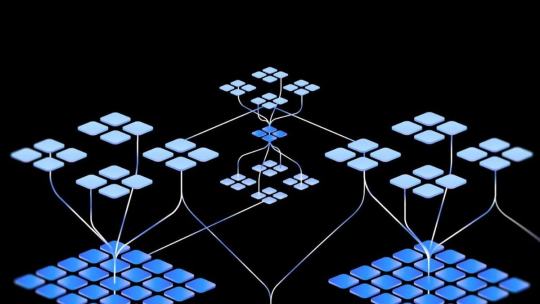

Simplify and Scale AI/ML Workloads: Ray on Vertex AI Now GA

Ray on Vertex AI

Scaling AI/ML workloads poses several significant challenges for developers and engineers. The first is obtaining the necessary AI infrastructure: AI and ML workloads demand large amounts of compute, including CPUs and GPUs, and developers need enough of these resources to run their workloads. A second challenge is managing the various patterns and programming interfaces needed to scale AI/ML workloads efficiently. Developers may need to modify their code so that it runs well on the particular infrastructure available to them, which can be difficult and time-consuming.

Ray addresses these issues with a comprehensive, user-friendly distributed framework for Python. Using a set of domain-specific libraries and a scalable cluster of compute resources, Ray helps you efficiently distribute common AI/ML workloads such as training, tuning, and serving.
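
The fan-out/fan-in pattern Ray uses to spread work over a cluster can be sketched with Python's standard library. This is a minimal, single-machine stand-in, assuming a thread pool in place of a Ray cluster: in Ray itself you would decorate the worker function with `@ray.remote`, launch it with `process_shard.remote(shard)`, and collect results with `ray.get(...)`. The names `process_shard` and `distributed_mean` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def process_shard(shard: list[float]) -> tuple[float, int]:
    """Hypothetical per-shard task: return a partial sum and an element count."""
    return sum(shard), len(shard)


def distributed_mean(data: list[float], num_workers: int = 4) -> float:
    """Compute a mean by splitting data into shards and processing them in parallel.

    Stand-in for a Ray workflow: pool.submit() plays the role of
    process_shard.remote(), and future.result() the role of ray.get().
    """
    if not data:
        raise ValueError("data must be non-empty")
    # Round-robin split: shard i gets elements i, i + num_workers, ...
    shards = [data[i::num_workers] for i in range(num_workers)]
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        futures = [pool.submit(process_shard, shard) for shard in shards]  # fan out
        partials = [future.result() for future in futures]  # fan in
    total = sum(partial_sum for partial_sum, _ in partials)
    count = sum(n for _, n in partials)
    return total / count


print(distributed_mean([1.0, 2.0, 3.0, 4.0]))  # 2.5
```

Threads keep the sketch self-contained; the point of Ray is that the same submit-then-gather shape runs across processes and machines, which matters for CPU- and GPU-bound training.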

Google Cloud is happy to announce that the integration of Ray, a powerful distributed Python framework, with Google Cloud’s Vertex AI is now generally available. By letting AI developers easily scale their workloads on Vertex AI’s flexible infrastructure, this integration maximises the possibilities of distributed computing, machine learning, and data processing.

Ray on Vertex AI: Why?

Accelerated and Scalable AI Development:

Ray’s distributed computing platform connects easily with Vertex AI’s infrastructure services and offers a unified experience for both predictive and generative AI. Scale your Python-based workloads for scientific computing, data processing, deep learning, reinforcement learning, and machine learning from a single machine to a large cluster, and take on even the most difficult AI problems without worrying about the intricacies of maintaining the supporting infrastructure.

Unified Development Experience:

By combining Vertex AI SDK for Python with Ray’s ergonomic API, AI developers can now easily move from interactive prototyping in Vertex AI Colab Enterprise or their local development environment to production deployment on Vertex AI’s managed infrastructure with little to no code changes.

Enterprise-Grade Security:

While you take advantage of Ray’s distributed processing capacity, Vertex AI’s security features, such as VPC Service Controls, Private Service Connect, and Customer-Managed Encryption Keys (CMEK), can help protect your sensitive data and models. Vertex AI’s extensive security architecture can help ensure that your Ray applications comply with stringent enterprise security requirements.

Vertex AI Python SDK

Suppose you want to fine-tune a small language model (SLM) such as Gemma or Llama. Before you can use Ray to fine-tune Gemma, you need a Ray cluster, and Ray on Vertex AI lets you create one quickly from the terminal or with the Vertex AI SDK for Python. You can monitor the cluster through either the Ray Dashboard or the Google Cloud Logging integration.

Ray on Vertex AI currently supports Ray 2.9.3. You also have extra flexibility over the dependencies in your Ray cluster, because you can build a custom image.

Once your Ray cluster is up and running, using it for AI/ML application development is straightforward, though the procedure depends on your development environment. With the Vertex AI SDK for Python, you can connect to the Ray cluster from Colab Enterprise or any other IDE you prefer and run your application interactively. Alternatively, you can use the Ray Jobs API to submit a Python script to the Ray cluster on Vertex AI programmatically.

Using Ray on Vertex AI to build AI/ML applications has several advantages. In this example, you can validate your tuning jobs with Vertex AI TensorBoard, a managed TensorBoard service that lets you monitor, compare, and visualise your tuning runs and collaborate efficiently with your team. You can also conveniently store model checkpoints, metrics, and more in Cloud Storage. This lets you quickly use the model for downstream AI/ML tasks, such as generating batch predictions with Ray Data.
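
Batch prediction with Ray Data comes down to feeding a model fixed-size batches rather than one record at a time. The sketch below shows only that batching contract, sequentially, with a hypothetical `toy_model` standing in for a fine-tuned model; Ray Data's `Dataset.map_batches` applies a function like this to batches in parallel across the cluster, which this code does not attempt.

```python
from typing import Callable, Iterator, Sequence


def iter_batches(items: Sequence[float], batch_size: int) -> Iterator[Sequence[float]]:
    """Yield consecutive slices of at most batch_size items."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def batch_predict(
    items: Sequence[float],
    model: Callable[[Sequence[float]], list[float]],
    batch_size: int = 32,
) -> list[float]:
    """Run a model over items batch by batch and concatenate the predictions."""
    predictions: list[float] = []
    for batch in iter_batches(items, batch_size):
        predictions.extend(model(batch))
    return predictions


def toy_model(batch: Sequence[float]) -> list[float]:
    """Hypothetical stand-in for a fine-tuned model: doubles each input."""
    return [2 * x for x in batch]


print(batch_predict([1, 2, 3, 4, 5], toy_model, batch_size=2))  # [2, 4, 6, 8, 10]
```

Batching amortises per-call overhead (and, on accelerators, keeps the device busy); the right batch size depends on model and hardware memory.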

How H-E-B and eDreams scale AI with Ray on Vertex AI

Accurate demand forecasting is crucial to the profitability of any large organisation, and it is especially important for grocery stores. Forecasting demand for a single item can be challenging enough, but consider forecasting millions of items across hundreds of stores. Scaling the forecasting model is a difficult process. H-E-B, one of the biggest supermarket chains in the US, uses Ray on Vertex AI to save money, increase speed, and improve reliability.

“Ray has made it possible for us to attain revolutionary efficiencies that are essential to our company’s operations. We particularly appreciate Ray’s corporate features and user-friendly API,” said H-E-B Principal Data Scientist Philippe Dagher. “We chose Ray on Vertex AI as our production platform because of its greater accessibility to Vertex AI’s infrastructure ML platform.”

To make travel simpler, more affordable, and more valuable for customers worldwide, eDreams ODIGEO, the world’s leading travel subscription platform and one of the biggest e-commerce companies in Europe, offers high-calibre products in regular flights, budget airlines, hotels, dynamic packages, car rentals, and travel insurance. The company processes 100 million customer searches per day, combining travel options from about 700 international airlines and 2.1 million hotels, made possible by 1.8 billion machine learning predictions daily.
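
The scale figures above imply a rough per-search and per-second load. This back-of-the-envelope calculation uses only the numbers quoted, assumes traffic is spread evenly over a 24-hour day (real traffic peaks higher), and is not a figure published by eDreams ODIGEO:

$$\frac{1.8 \times 10^{9}\ \text{predictions/day}}{1.0 \times 10^{8}\ \text{searches/day}} = 18\ \text{predictions per search}$$

$$\frac{1.8 \times 10^{9}\ \text{predictions/day}}{86\,400\ \text{s/day}} \approx 2.1 \times 10^{4}\ \text{predictions/s}$$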

The eDreams ODIGEO Data Science team is presently training their ranking models with Ray on Vertex AI in order to provide you with the finest travel experiences at the lowest cost and with the least amount of work.

“We are creating the best ranking models, personalised to the preferences of our 5.4 million Prime customers at scale, with the largest base of accommodation and flight options,” said José Luis González, Director of eDreams ODIGEO Data Science. “We are concentrating on creating the best experience to increase value for our customers, with Ray on Vertex AI handling the infrastructure for distributed hyperparameter tuning.”
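
Distributed hyperparameter tuning, which the passage above says Ray on Vertex AI handles for eDreams, amounts to evaluating many independent trials in parallel and keeping the best one. The sketch below is a hypothetical grid search, assuming a thread pool in place of a cluster and a made-up `toy_objective`; Ray's own tuning library, Ray Tune, exposes this idea through `tune.Tuner` and `tune.grid_search`, and the source does not say which tuning approach eDreams uses.

```python
import itertools
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable


def toy_objective(params: dict[str, Any]) -> float:
    """Hypothetical validation loss (lower is better), minimised at lr=0.01, depth=6."""
    return (params["lr"] - 0.01) ** 2 * 1e4 + (params["depth"] - 6) ** 2


def grid_search(
    objective: Callable[[dict[str, Any]], float],
    grid: dict[str, list[Any]],
    max_workers: int = 4,
) -> tuple[dict[str, Any], float]:
    """Evaluate every combination in grid in parallel; return the best params and score."""
    names = list(grid)
    # Cartesian product of all parameter values -> one dict per trial.
    trials = [dict(zip(names, values)) for values in itertools.product(*grid.values())]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        scores = list(pool.map(objective, trials))  # trials run concurrently
    best_score, best_params = min(zip(scores, trials), key=lambda pair: pair[0])
    return best_params, best_score


best, score = grid_search(toy_objective, {"lr": [0.001, 0.01, 0.1], "depth": [4, 6, 8]})
print(best, score)  # {'lr': 0.01, 'depth': 6} 0.0
```

Grid search is the simplest strategy because every trial is independent; tuning libraries typically add smarter search and early stopping on top of the same parallel-trial structure.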

Read more on govindhtech.com

#VertexAI#AIInfrastructure#GPU#GoogleCloud#Python#framework#machinelearning#AIdevelopers#generativeAI#smalllanguagemodel#Gemma#Monitor#news#technews#technology#technologynews#technologytrends#govindhtech


Photo

Google I/O 2024: Gemini 1.5 Pro gets a major update as new Flash and Gemma AI models are introduced. Google held its keynote session ... https://ujjina.com/google-i-o-2024-gemini-1-5-pro-obtiene-una-gran-actualizacion-a-medida-que-se-presentan-los-nuevos-modelos-flash-y-gemma-ai/?feed_id=626489&_unique_id=66447d5fa8cc2


Text

Google has revealed a new milestone in the world of artificial intelligence with the announcement of Gemma 2. This 27-billion parameter version of its open model is set to launch in June, promising even more advanced capabilities. Stay tuned for the latest updates on this groundbreaking technology.


Google recently unveiled Gemma 2, the latest addition to its family of open models, at its developer conference, Google I/O 2024. The new model has 27 billion parameters, setting it apart from previous versions, which came in 2-billion- and 7-billion-parameter sizes.

One of the highlights of this release is PaliGemma, a vision language model designed for tasks such as image captioning, labeling, and visual Q&A. This new variant represents a significant advancement in the Gemma family's capabilities.

During a briefing ahead of the announcement, Google emphasized that the 27-billion-parameter model is optimized for Nvidia's next-gen GPUs, a single Google Cloud TPU host, and the managed Vertex AI service. Josh Woodward, Google's VP of Google Labs, said that Gemma models have been downloaded millions of times across various services.
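
Why fitting a 27-billion-parameter model on a single accelerator host matters is clearer with a rough memory estimate. This is simple arithmetic under stated assumptions, not a Google figure: it assumes 16-bit (bfloat16) weights at 2 bytes per parameter and counts the weights only, ignoring activations and the KV cache:

$$27 \times 10^{9}\ \text{parameters} \times 2\ \text{bytes/parameter} = 5.4 \times 10^{10}\ \text{bytes} \approx 54\ \text{GB}$$

At 8-bit precision the same weights would take about half that, roughly 27 GB.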

Size matters, but performance matters more. Google has not yet shared detailed data about Gemma 2, but early indicators look promising: Woodward stated that the model is already outperforming models twice its size.

Overall, the launch of Gemma 2 signifies Google's continued commitment to advancing AI technology. Developers are eagerly anticipating the opportunity to test and utilize this powerful new model. Stay tuned for more updates on Gemma 2 as it becomes available in June.


1. What is Google Gemma 2?

Google Gemma 2 is a new version of Google's open model, with 27 billion parameters. It is a tool used for various AI tasks and will be launched in June.

2. How is Gemma 2 different from the previous version?

Gemma 2 has significantly more parameters (27 billion, compared with 2 billion and 7 billion for the earlier models), which enhances its capabilities for various AI tasks.

3. When will Gemma 2 be launched?

Gemma 2 will be launched in June, although the specific date has not been announced yet.

4. What can Gemma 2 be used for?

Gemma 2 can be used for a wide range of AI tasks, such as natural language processing; vision tasks such as image captioning and visual Q&A are covered by the PaliGemma variant.

5. Can individuals or businesses access Gemma 2?

Yes, Gemma 2 will be an open model, meaning that individuals and businesses will be able to access and use it for their AI projects.


Text

Reading Beyond the Hype: Some Observations About OpenAI and Google’s Announcements

New Post has been published on https://thedigitalinsider.com/reading-beyond-the-hype-some-observations-about-openai-and-googles-announcements/

Google vs. OpenAI is shaping up as one of the biggest rivalries of the generative AI era.

Created Using Ideogram

Next Week in The Sequence:

Edge 397: Provides an overview of multi-plan selection autonomous agents. Discusses Allen AI’s ADaPT paper for planning in LLMs and introduces the SuperAGI framework for building autonomous agents.

Edge 398: We dive into Microsoft’s amazing Phi-3 model, which is able to outperform much larger models in math and computer science and started the small language model movement.

TheSequence is a reader-supported publication. To receive new posts and support my work, consider becoming a free or paid subscriber.

📝 Editorial: Reading Beyond the Hype: Some Observations About OpenAI and Google’s Announcements

What a week for generative AI! By now, every AI newsletter you subscribe to and every blog and tech publication you follow must have bombarded you with announcements from the OpenAI events and the Google I/O conference. Instead of doing more of the same, I thought we could share some thoughts about how this week’s events shape the competitive landscape between Google and OpenAI and the generative AI market in general. After all, Google has been the only tech incumbent that has chosen to compete directly with OpenAI and Anthropic instead of integrating those models into their distribution machine. This week’s announcements show that the race between Google and OpenAI might be shaping up to be one of the iconic tech rivalries of the next generation.

Here are some observations about OpenAI and Google’s recent announcements:

Feature Parity: One astonishing development this week was seeing how Google and OpenAI are both working on similar roadmaps. From image and video generation to generalist agents, it seems that both companies are matching each other’s capabilities. It is impressive to see how fast Google has caught up.

From Models to Agents: The announcements of GPT-4o and Project Astra revealed that both OpenAI and Google are expanding into the generalist agent space. In the case of Google, this is not that surprising.

Engineering Matters: Speed was at the center of the GPT-4o and Gemini Flash announcements. The evolution of generative AI today is as much about engineering as it is about research.

Context Window Edge: Gemini’s 2M-token context window is astonishing. Google recently hinted at its work in this area with the Infini-attention research. This massive context could make quite a competitive difference in several scenarios.

Video is the Next Differentiator: It is very clear that video is one of the next frontiers for generative AI. A few weeks ago, OpenAI seemed to be ahead of all other tech incumbents with Sora, but Google’s Veo looks incredibly impressive. Interestingly, no other major AI platform seems to be developing video generation capabilities at scale, which makes startups like Runway and Pika very attractive acquisition targets. Otherwise, this will become a two-horse race.

Google AI Strategy is Broad and Fragmented: Google’s announcements this week were overwhelming. There are the Gemini 1.5 Pro and Flash models, the small Gemma models, generative video models, Astra multimodal agents, Gemini-powered search, AI in Workspace apps like Gmail or Docs, AI for Firebase developers, and maybe new AI-powered glasses? You get the idea. Executing well across all these areas while pushing the boundaries of research is extremely difficult. And yet, it might be working.

Google’s Bold AI Strategy Might Pay Off: Unlike Microsoft, Apple, Amazon, and Oracle, Google didn’t rush to partner with OpenAI or Anthropic and instead decided to rely on its DeepMind and Google Research AI talent. They have had plenty of public missteps and challenges, but they have proven that they can take a punch and remain quite competitive. The result is that now Google has achieved near feature parity with OpenAI and is in control of its own destiny.

I could keep going, but this is supposed to be a short editorial. This week was another example that generative AI is moving at a speed we have never seen in any tech trend. The rivalry between Google and OpenAI promises to push the boundaries of the space.

🔎 ML Research

VASIM

Microsoft Research published a paper introducing the Vertical Autoscaling Simulator Toolkit (VASIM), a tool designed to address the challenges of autoscaling algorithms such as those used in cloud computing infrastructure. VASIM can simulate different autoscaling policies and optimization parameters —> Read more.

RLHF Workflow

Salesforce Research published a paper proposing an Online Iterative Reinforcement Learning from Human Feedback (RLHF) technique that can improve over offline alternatives. The method also looks to address the resource limitations of online RLHF for open source projects —> Read more.

SambaNova SN40L

SambaNova researchers published a paper proposing SambaNova SN40L, a technique that combines a composition of experts (CoE), streaming dataflow, and a three-tier memory to scale the AI memory wall. The research looks to address some of the limitations hyperscalers face when deploying large monolithic LLMs —> Read more.

Med-Gemini

Google published two research papers exploring the capabilities of Gemini in healthcare scenarios. The first paper explores Gemini in electronic medical records processing while the second one focuses on use cases such as radiology, pathology, dermatology, ophthalmology, and genomics in healthcare —> Read more.

BEHAVIOR Vision Suite

Researchers from Stanford, Harvard, Meta, University of Southern California and other AI labs published a paper introducing BEHAVIOR Vision Suite, a set of tools for generating fully customized synthetic data for computer vision models. BVS could have a profound impact on scenarios such as embodied AI or self-driving cars —> Read more.

Online vs. Offline RLHF

Researchers from Google DeepMind published a paper outlining a series of experiments comparing online and offline RLHF alignment methods. The experiments showed a clear advantage for online methods, and the paper dives into potential explanations —> Read more.

🤖 Cool AI Tech Releases

GPT-4o

OpenAI unveiled GPT-4o, a new model that can work with text, audio and vision in real time —> Read more.

Gemini 1.5

Google introduced Gemini 1.5 Flash and new improvements to its Pro model —> Read more.

Transformers Agents 2.0

Hugging Face open sourced a new version of its Transformers Agents framework —> Read more.

Model Explorer

Google Research released Model Explorer, a tool for visualizing ML models as graphs —> Read more.

Gemma 2

Google unveiled Gemma 2, a 27B-parameter LLM that can run on a single TPU host —> Read more.

Imagen 3

Google released Imagen 3, the latest version of its flagship text-to-image model —> Read more.

Firebase Genkit

Google released Firebase Genkit, a framework for building mobile and web AI apps —> Read more.

🛠 Real World AI

Inside Einstein

Salesforce's VP of Engineering shares details about the implementation of the Einstein platform —> Read more.

📡 AI Radar

OpenAI co-founder Ilya Sutskever announced he will be leaving the company.

Together with Ilya, the bulk of OpenAI’s risk team has been dissolved.

Snowflake is in talks to buy multimodal platform Reka AI for $1 billion.

Google and Airtel partnered to bring generative AI solutions to the Indian market.

Anthropic announced that its Claude API is now available in Europe and that it is in the process of raising more capital.

LanceDB raised $11 million for building a multimodal AI database.

SambaNova introduced Fugaku-LLM, a new Japanese LLM that uses the composition of experts architecture.

Weka raised $140 million for its AI data pipeline platform.

Elastic announced Search AI Lake for low-latency RAG scenarios.

Snowflake announced an investment in data observability platform Metaplane.

David Sacks, of PayPal and Yammer fame, launched a new generative AI startup.

Humanity Protocol, which attempts to validate human identity in scenarios such as deepfakes, raised $30 million at a valuation of over $1 billion.

The Microsoft-Mistral alliance might avoid a UK regulatory probe.

AI coding startup Replit reported sizable layoffs.

TheSequence is a reader-supported publication. To receive new posts and support my work, consider becoming a free or paid subscriber.

#agent#agents#ai#ai platform#AI strategy#AI-powered#Algorithms#amazing#Amazon#Announcements#anthropic#API#apple#apps#architecture#astra#attention#audio#autonomous agents#Behavior#billion#Blog#Building#Cars#claude#Cloud#cloud computing#coding#Companies#Composition


Text

5 big things in AI that happened this week: Nvidia AI chatbot rolls out Gemma support, Apple opens new AI lab and more

AI roundup: The first week of May brought a series of AI breakthroughs. Now that the week is over, let's look at the top AI events, such as Nvidia announcing the integration of Google's Gemma model into its AI chatbot, ChatRTX. In other news, a new report revealed that Apple has a secret AI lab in Europe. Read on to learn more about this week's AI news.

Nvidia AI chatbot…



Text

H2O-Danube2-1.8B Achieves Top Ranking On Hugging Face Open LLM Leaderboard For 2 Billion (2B) Parameters Range

H2O.ai, a leading company in open-source generative AI and machine learning, has achieved a significant milestone with its latest small language model, H2O-Danube2-1.8B. The model has secured the top position on the Hugging Face Open LLM Leaderboard for models with fewer than 2 billion parameters, surpassing even larger models such as Google's Gemma-2B.

The H2O-Danube2-1.8B model is an upgraded version of the H2O-Danube 1.8B model. Optimizations and improvements have propelled it to the forefront of the 2B small language model (SLM) category. Built on the Mistral architecture and trained on 2 trillion high-quality tokens, the model excels at natural language processing tasks. Among its optimizations, it drops sliding window attention to improve efficiency and performance.
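Mistral-architecture models use sliding-window attention, in which each token attends only to a fixed number of recent tokens. Assuming that is the windowing attention H2O-Danube2 drops, the difference from full causal attention can be illustrated with a minimal attention-mask sketch. The function name and window size are illustrative, not H2O.ai's code, and the exact window convention varies between implementations.

```python
from typing import List, Optional

def attention_mask(seq_len: int, window: Optional[int] = None) -> List[List[bool]]:
    """Return a mask where mask[i][j] is True if query i may attend to key j.

    window=None: full causal attention, every earlier position is visible.
    window=W:    sliding-window attention, only the W most recent positions
                 (including i itself) are visible.
    """
    mask = []
    for i in range(seq_len):
        row = []
        for j in range(seq_len):
            visible = j <= i  # causal: never attend to future tokens
            if window is not None:
                visible = visible and (i - j) < window
            row.append(visible)
        mask.append(row)
    return mask

if __name__ == "__main__":
    full = attention_mask(6)
    sliding = attention_mask(6, window=3)
    # The last token sees all 6 positions with full attention, but only 3 with a window.
    print(sum(full[-1]), sum(sliding[-1]))  # 6 3
```

Without the window, every token can attend to the entire preceding context, at the cost of attention work that grows with the full sequence length rather than with the window size.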

Sri Ambati, CEO and founder of H2O.ai, emphasized the significance of this achievement. He highlighted the superior performance of the H2O-Danube2-1.8B model compared to competitors such as Microsoft Phi-2 and Google Gemma-2B. He also emphasized its economic efficiency and ease of deployment, which make it suitable for enterprise and edge computing applications, including mobile phones, drones, and offline applications.

H2O.ai is dedicated to democratizing large language models, ensuring their sustainability within existing systems while expanding their applications. The H2O-Danube2-1.8B model has a wide range of applications, from detecting and preventing PII data leakage to improving prompt generation and enhancing the robustness of RAG systems.

Read More - https://www.techdogs.com/tech-news/business-wire/h2o-danube2-18b-achieves-top-ranking-on-hugging-face-open-llm-leaderboard-for-2-billion-2b-parameters-range


Link

In a major move for the world of artificial intelligence and software development, Google has launched CodeGemma, a groundbreaking suite of large language models (LLMs) dedicated to code generation, code understanding, and instruction following. ... netgainers.org


Text

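Opera Browser's Built-in AI Chat "Aria" Integrates Local LLMs: Llama, Gemma, Mixtral, Vicuna, and More Now Available

Introduction

Norway's Opera Software has announced plans to integrate local LLMs (large language models) into Aria, the AI chatbot built into its Opera web browser. The effort is part of the company's "AI Feature Drops" initiative and can currently be tried in the developer build, "Opera One developer".

Benefits of Local LLMs

Because a local LLM runs entirely on the device, the AI chat can be used even without an internet connection. This strengthens privacy and lets users rely on the AI assistant regardless of network conditions.

Available LLMs

The local LLMs available in Aria include Meta's Llama, Google's Gemma, Mistral…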

