#hosted call center software


Call Center IVR Software: Benefits, Uses, Best Practices

If you're running a call centre, you know that customer satisfaction is key. You also know that managing a call centre can be a lot of work. IVR software can help take some of the load off the agents by automating certain tasks.

But what is IVR software? And how can it benefit your call centre? In this article, we'll answer those questions and more. We'll also give you some best practices for using IVR software and tell you whether or not it's the right solution for your call centre.

What Is IVR Software?

IVR (interactive voice response) systems are automated phone systems that interact with callers and guide them through a series of menu options. Call centres often use them to automate parts of customer service.

Some common features of IVR systems include:

● Playing pre-recorded messages

● Collecting input from the caller

● Routing calls to the appropriate destination

IVR systems can be used for a variety of purposes, including customer service, sales, marketing, and even human resources.

How Can IVR Benefit Your Call Centre?

IVR systems can be a great addition to any call centre, bringing benefits such as higher productivity, better customer satisfaction, and lower costs.

An IVR system can increase productivity in a call centre by automating routine customer-service tasks. Simple calls, such as checking an account balance, can be handled quickly without an agent, freeing agents to deal with calls that need a person.

An IVR system can improve customer satisfaction by resolving simple requests quickly, while still giving customers the option to reach a human agent when the automated system can't resolve their issue.

An IVR system can also help to save money for a call centre. This is because an IVR system can handle large volumes of calls without the need for additional staff.

In addition, an IVR system can help to reduce the amount of time that agents spend on each call, which reduces costs.

Is IVR the Right Solution for Your Call Centre?

It depends on the specific needs of your business and your customers. However, there are some factors to consider that will help you decide if IVR is the right solution for your call centre:

● Size of Your Call Centre

If you have a small call centre with only a few agents, IVR may not be necessary. However, if you have a large call centre with many agents, IVR can help to increase productivity by automating customer service.

● Type of Calls That Your Call Centre Receives

If most of your calls are simple and can easily be handled by an automated system, then IVR may be a good solution. However, if most of your calls are complex and require human interaction, then a multi-level IVR that narrows down the caller's need before connecting them to the right agent may be the best solution.

● The Needs of Your Customers

If your customers are comfortable using an automated system and do not need to speak to a human agent, then IVR may be a good solution. However, if your customers prefer to speak to a human agent, or if they have complex questions that need to be answered, then the IVR solution would need smart routing capabilities to get those callers to the right agent.

Conclusion

When determining if an IVR system is the best solution for your call centre, there are several factors to consider. The size of your call centre and the types of calls you typically receive are two important factors. Additionally, you need to take into account the needs of your customers when making a decision. By taking all of these factors into consideration, you can determine which IVR system is right for your call centre.

#predictive dialer#call center dialer#hosted call center solutions#auto dialer software#predictive dialer software#call center solutions#ivr software#omnichannel contact center software#cloud call center software#outbound call center software


#Call Centre Solutions#Call Centre Outsourcing Services#hosted call Centre solutions in Chennai#voip call center solutions in Chennai#outbound call Centre solutions in Chennai#virtual call Centre solutions in Chennai#ivr call Centre solutions in Chennai#web based call Centre solutions in Chennai#best cloud based call Centre solutions in Chennai#call Centre solutions software in Chennai#on premise call Centre solutions in Chennai#top call Centre solutions in Chennai#Call Centre Solutions in India#Call Centre Solution Providers in India


AI’s energy use already represents as much as 20 percent of global data-center power demand, research published Thursday in the journal Joule shows. That demand from AI, the research states, could double by the end of this year, comprising nearly half of all total data-center electricity consumption worldwide, excluding the electricity used for bitcoin mining.

The new research is published in a commentary by Alex de Vries-Gao, the founder of Digiconomist, a research company that evaluates the environmental impact of technology. De Vries-Gao started Digiconomist in the late 2010s to explore the impact that bitcoin mining, another extremely energy-intensive activity, would have on the environment. Looking at AI, he says, has grown more urgent over the past few years because of the widespread adoption of ChatGPT and other large language models that use massive amounts of energy. According to his research, worldwide AI energy demand is now set to surpass demand from bitcoin mining by the end of this year.

“The money that bitcoin miners had to get to where they are today is peanuts compared to the money that Google and Microsoft and all these big tech companies are pouring in [to AI],” he says. “This is just escalating a lot faster, and it’s a much bigger threat.”

The development of AI is already having an impact on Big Tech’s climate goals. Tech giants have acknowledged in recent sustainability reports that AI is largely responsible for driving up their energy use. Google’s greenhouse gas emissions, for instance, have increased 48 percent since 2019, complicating the company’s goals of reaching net zero by 2030.

“As we further integrate AI into our products, reducing emissions may be challenging due to increasing energy demands from the greater intensity of AI compute,” Google’s 2024 sustainability report reads.

Last month, the International Energy Agency released a report finding that data centers made up 1.5 percent of global energy use in 2024 (around 415 terawatt-hours, a little less than the yearly energy demand of Saudi Arabia). This number is only set to get bigger: Data centers’ electricity consumption has grown four times faster than overall consumption in recent years, while the amount of investment in data centers has nearly doubled since 2022, driven largely by massive expansions to account for new AI capacity. Overall, the IEA predicted that data center electricity consumption will grow to more than 900 TWh by the end of the decade.

But there are still a lot of unknowns about the share that AI, specifically, takes up in that current configuration of electricity use by data centers. Data centers power a variety of services (like hosting cloud services and providing online infrastructure) that aren’t necessarily linked to the energy-intensive activities of AI. Tech companies, meanwhile, largely keep the energy expenditure of their software and hardware private.

Some attempts to quantify AI’s energy consumption have started from the user side: calculating the amount of electricity that goes into a single ChatGPT search, for instance. De Vries-Gao decided to look, instead, at the supply chain, starting from the production side to get a more global picture.

The high computing demands of AI, De Vries-Gao says, create a natural “bottleneck” in the current global supply chain around AI hardware, particularly around the Taiwan Semiconductor Manufacturing Company (TSMC), the undisputed leader in producing key hardware that can handle these needs. Companies like Nvidia outsource the production of their chips to TSMC, which also produces chips for other companies like Google and AMD. (Both TSMC and Nvidia declined to comment for this article.)

De Vries-Gao used analyst estimates, earnings call transcripts, and device details to put together an approximate estimate of TSMC’s production capacity. He then looked at publicly available electricity consumption profiles of AI hardware and estimates of that hardware’s utilization rates (which can vary based on what it’s being used for) to arrive at a rough figure for how much of global data-center demand is taken up by AI. De Vries-Gao calculates that without increased production, AI will consume up to 82 terawatt-hours of electricity this year, roughly the same as the annual electricity consumption of a country like Switzerland. If production capacity for AI hardware doubles this year, as analysts have projected it will, demand could increase at a similar rate, representing almost half of all data center demand by the end of the year.

Despite the amount of publicly available information used in the paper, a lot of what De Vries-Gao is doing is peering into a black box: We simply don’t know certain factors that affect AI’s energy consumption, like the utilization rates of every piece of AI hardware in the world or what machine learning activities they’re being used for, let alone how the industry might develop in the future.

Sasha Luccioni, an AI and energy researcher and the climate lead at open-source machine-learning platform Hugging Face, cautioned against leaning too hard on some of the conclusions of the new paper, given the number of unknowns at play. Luccioni, who was not involved in this research, says that when it comes to truly calculating AI’s energy use, disclosure from tech giants is crucial.

“It’s because we don’t have the information that [researchers] have to do this,” she says. “That’s why the error bar is so huge.”

And tech companies do keep this information. In 2022, Google published a paper on machine learning and electricity use, noting that machine learning was “10%–15% of Google’s total energy use” from 2019 to 2021, and predicted that with best practices, “by 2030 total carbon emissions from training will reduce.” However, since that paper—which was released before Google Gemini’s debut in 2023—Google has not provided any more detailed information about how much electricity ML uses. (Google declined to comment for this story.)

“You really have to deep-dive into the semiconductor supply chain to be able to make any sensible statement about the energy demand of AI,” De Vries-Gao says. “If these big tech companies were just publishing the same information that Google was publishing three years ago, we would have a pretty good indicator” of AI’s energy use.


QUESTION TWO:

SWITCH BOXES. you said that’s what monitors the connections between systems in the computer cluster, right? I assume it has software of its own but we don’t need to get into that, anyway, I am so curious about this— in really really large buildings full of servers, (like multiplayer game hosting servers, Google basically) how big would that switch box have to be? Do they even need one? Would taking out the switch box on a large system like that just completely crash it all?? While I’m on that note, when it’s really large professional server systems like that, how do THEY connect everything to power sources? Do they string it all together like fairy lights with one big cable, or??? …..the voices……..THE VOICES GRR

I’m ascending (autism)

ALRIGHT! I'm starting with this one because the first question that should be answered is what the hell is a server rack?

Once again, long post under cut.

So! The first thing I should get out of the way is the difference between a computer and a server. Which is like asking the difference between a gaming console and a computer. Or better yet, the difference between a gaming computer and a regular everyday PC. Which is... that they are pretty much the same thing! But if you game on a gaming computer, you'll get much better performance than on a standard PC. This is (mostly) because a gaming computer has a whole separate processor dedicated to graphics (the GPU). A server differs from a PC in the same way: it's just a computer that is specifically built to handle the load of running an online service. That's why you can run a server off a random PC in your closet; the core components are the same! (So good news about your other question. Short answer, yes! It would be possible to connect the hodgepodge of computers to the sexy server racks upstairs, but I'll get more into that in the next long post)

But if you want to cater to hundreds or thousands of customers, you need the professional stuff. So let's break down what's (most commonly) in a rack setup, starting with the individual units (their height is measured in rack units, or 'U', where 1U is 1.75 inches).

Short version of someone setting one up!

18 fucking hard drives. 2 CPUs. How many sticks of RAM???

Holy shit, that's a lot. Now depending on your priorities, the next question is, can we play video games on it? Not directly! This thing doesn't have a GPU, so it can technically render a video game, but you won't have sparkly graphics with a high frame rate. I'll put some video links at the bottom that go more into the anatomy of the individual units themselves.

I pulled this screenshot from this video rewiring a server rack! As you can see, there are two switch boxes in this server rack! Each rack gets its own switch box to manage which unit in the rack gets what. So it's not like everything is connected to one massive switch box. You can add more capacity by making it bigger or you can just add another one! And if you take it out then shit is fucked. Communication has been broken, 404 website not found (<- not actually this error: a 404 means the server answered but couldn't find the page, while an unreachable server usually gets you a timeout or a 5xx gateway error).

So how do servers talk to one another? Again, I'll get more into that in my next essay response to your questions. But basically, they can talk over the internet the same way that your machine does (each server has its own address, known as an IP address, and routers shoot you at one).

POWER SUPPLY FOR A SERVER RACK (finally back to shit I've learned in class) YOU ARE ASKING IF THEY ARE WIRED TOGETHER IN SERIES OR PARALLEL! The answer is parallel. Look back up at the image above, I've called out the power cables. In fact, watch the video of that guy wiring that rack back together very fast. Everything on the right is power. How are they able to plug everything together like that? Oh god I know too much about this topic do not talk to me about transformers (<- both the electrical type and the giant robots). BASICALLY, in a data center (a place with WAY too many servers) the building is literally built with that kind of draw in mind (oh god the power demands of computing, I will write a long essay about that in your other question). Worrying about popping a fuse is only really a thing when plugging a server into an outlet in your house.

Links to useful YouTube videos

How does a server work? (great guide in under 20 min)

Rackmount Server Anatomy 101 | A Beginner's Guide (more comprehensive breakdown but an hour long)

DATA CENTRE 101 | DISSECTING a SERVER and its COMPONENTS! (the guy is surrounded by screaming server racks and is close to incomprehensible)

What is a patch panel? (More stuff about switch boxes- HOLY SHIT there's more hardware just for managing the connection???)

Data Center Terminologies (basic breakdown of entire data center)

Networking Equipment Racks - How Do They Work? (very informative)

Funny

#is this even writing advice anymore?#I'd say no#Do I care?#NOPE!#yay! Computer#I eat computers#Guess what! You get an essay for every question!#oh god the amount of shit just to manage one connection#I hope you understand how beautiful the fact that the internet exists and it's even as stable as it is#it's also kind of fucked#couldn't fit a college story into this one#Uhhh one time me and a bunch of friends tried every door in the administrative building on campus at midnight#got into some interesting places#took candy from the office candy bowl#good fun#networking#server racks#servers#server hardware#stem#technology#I love technology#Ask#spark


How Did Jaipur’s Top DJ Rise to Fame?

In a city known for its royal heritage, pink sandstone architecture, and traditional Rajasthani music, a new rhythm has emerged — one shaped by the beat of electronic dance, hip-hop grooves, and electrifying remixes. Over the past few years, the nightlife in Jaipur has taken a vibrant turn, and at the center of this musical revolution is one name: the Top DJ in Jaipur.

But how did this local talent transform from a bedroom beatmaker into the face of Jaipur's booming club culture? Let’s explore the inspiring journey behind the rise of the most celebrated DJ in the city and what truly sets him apart from the rest.

Humble Beginnings in the Pink City

Every success story begins with a spark. For Jaipur’s top DJ, that spark ignited in a small neighborhood where music was more than a hobby — it was an escape. He started with an old laptop and basic mixing software, learning from YouTube tutorials and practicing for hours on end. While his peers pursued conventional careers, he spent weekends spinning at small parties and local events, slowly building a reputation for his energy, originality, and unmatched ability to read the crowd.

His first big break came when he was invited to open for a regional DJ at a college festival. That night, the dancefloor stayed packed until 2 a.m., and word began to spread.

Climbing the Ranks of the City’s Club Scene

In a city with growing demand for modern nightlife, the competition among DJs is fierce. But the Top DJ in Jaipur didn’t just play tracks — he curated experiences. Whether it was a wedding reception, a corporate event, or a New Year’s Eve bash, he brought a unique style that blended EDM, Bollywood, and deep house in seamless harmony.

Soon, he was being invited to perform at Jaipur’s trendiest venues: Blackout, Club Naila, HOP, and Skyfall, to name a few. Regular performances at these hotspots earned him a loyal fan base who followed him from one venue to the next, eager to hear his latest mixes.

Building a Digital Brand

Rising to fame today isn’t just about playing live — it’s about presence, personality, and brand. Understanding this, Jaipur’s top DJ leveraged social media like a pro. He began posting short clips of his sets, live crowd reactions, and original remixes on Instagram and YouTube. Some of his reels went viral, bringing in followers not only from Jaipur but across India.

His signature remix of a classic Rajasthani folk song infused with EDM beats garnered over 500,000 views — a moment that marked his breakthrough into the national spotlight.

Collaboration and Innovation

One trait that truly defines the Top DJ in Jaipur is his ability to collaborate and innovate. He teamed up with vocalists, music producers, and event companies to create unique audio-visual experiences. From rooftop sundowners to desert music festivals near Pushkar, he made sure his name was associated with something unforgettable.

He also launched his own event series — “Pink City Beats” — which turned into a monthly staple among partygoers. These self-hosted events allowed him full creative control and attracted a massive audience looking for something beyond the ordinary.

Recognition and Media Coverage

As the buzz around his name grew, so did recognition. Local magazines featured him as the “Face of Jaipur’s New Sound,” and music blogs started calling him the “underground king of Rajasthan.” Interviews with FM radio stations and digital portals followed, each one painting the picture of an artist who had worked tirelessly to become the Top DJ in Jaipur.

By 2024, he was shortlisted for a national DJ award — the only artist from Rajasthan on the list.

What Fans Say

True success is measured by how people respond. And when it comes to this DJ, the reviews speak for themselves. On Adsblast, the go-to platform for nightlife listings and reviews, fans describe his sets as “mind-blowing,” “electrifying,” and “worth every second.”

One review reads: “If you want to experience real energy, go where this guy is spinning. He doesn't just play music — he controls the room.”

Another user wrote: “Every weekend, we check Adsblast to see where Jaipur’s top DJ is playing next. It’s a ritual now!”

Staying Grounded and Giving Back

Despite his fame, the Top DJ in Jaipur remains grounded. He mentors aspiring DJs through workshops and online sessions, teaching them not just about mixing tracks but also about discipline, hustle, and creativity.

He’s also associated with charity events, having performed for fundraisers focused on child education and community development in Jaipur’s outskirts.

What’s Next?

With the kind of momentum he’s built, there’s no slowing down. Talks of an international tour are underway, and he’s set to release his first original album this year. There are also rumors of collaborations with well-known Bollywood music directors, potentially taking his journey to an even bigger stage.

Conclusion: A Star Born in the Heart of Rajasthan

The story of how the Top DJ in Jaipur rose to fame is a tale of perseverance, talent, and smart branding. From humble beginnings to headlining major events, his journey reflects the transformation of Jaipur itself — from traditional to modern, from calm to electric.

Whether you’re a music lover, a partygoer, or just someone who admires stories of success, this DJ’s rise is one worth following. And if you’re wondering where to catch his next performance, Adsblast is the place to start.

#DJ in Jaipur#Best DJ in Jaipur#Top DJ in Jaipur#DJ Classifed Ads in Jaipur#Free DJ Classifed Ads in Jaipur#Post DJ Classifed Ads in Jaipur


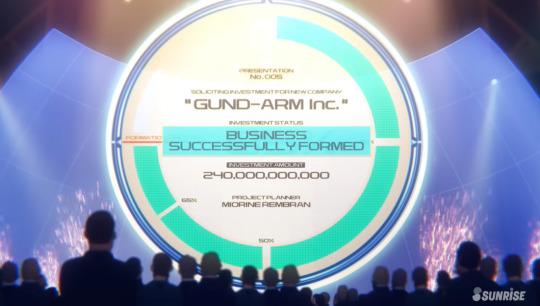

GWitch Onscreen Text: Episode 7

This is part eight of my attempt to transcribe and discuss all the monitor text in g witch! Because I got worms! We're on episode 7, "Shall We Gundam?"

Now then. Shall we? (Gundam?)

Not text, but in the opening, Vim tells the Peil Witches that it's despicable of them to breach the Cathedra Agreement, only for one of them to respond that she believes he would know something about that himself. This is our first hint towards the existence of the Schwarzette.

Now to the real text: we see a mockup of the Pharact and its systems in this opening as well.

Here, we see that the Pharact is 19.1m and 57.1t.

The Blue and Red labels are tough to make out, but all the labels of each color say the same thing. I'll make my best guess:

Blue labels: G-O | SYS-GUND CHH

Red labels: GUND FORMAT | CORAX UNITS

The Corax Units are the name of the Pharact's GUND Bits, and we know those use the Gund Format, so that checks out. We saw 'CHH' on 4's data graph, still not sure what it means though.

I think the floating red text says GA - MS//RACT, but I can't be sure. The big label in the center says GUND-ARM FP/A-77.

Not text, but I think Sarius and Delling is another relationship I wish we got to see more of. Delling actually worked directly under Sarius within Grassley before eventually becoming President of the entire Group.

During the scene in the greenhouse, we get a look at the program on Miorine's monitor. No way to read the actual text on it from this shot, but we do see Miorine verify the name of one of the brands of fertilizer before presumably typing it on the screen, so it's probably safe to assume it's tracking the general maintenance of the tomatoes.

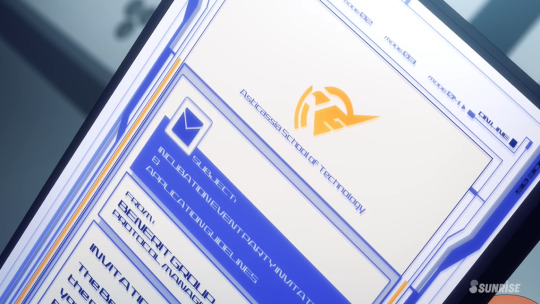

We never get a completely clear shot of the Incubation Party Invitation (Because it just can never be easy) But I'll make my best estimate of it from the shots we DO get.

TEXT:

SUBJECT: INCUBATION EVENT PARTY INVITATION & APPLICATION GUIDELINES

FROM: BENERIT GROUP PROTOCOL MANAGEMENT OFFICE

INVITATION

The Benerit Group has the great honor of inviting you to the 15th Incubation Event.

Off topic but this scene has one of my favorite Miorine Noises in the whole show. It's so good. Take a listen. She is Flabbergasted.

TEXT:

15th INCUBATION EVENT PARTY

Hosted by BENERIT GROUP

Pretty...

The Mobile Suits on display aren't named, but the one in the back right is actually the YOASOBI Collaboration Version of the Demi Trainer.

(YOASOBI are the musical duo that composed Shukufuku)

I won't bore you with a one-to-one transcription of the text of this first presentation, so here's the general overview.

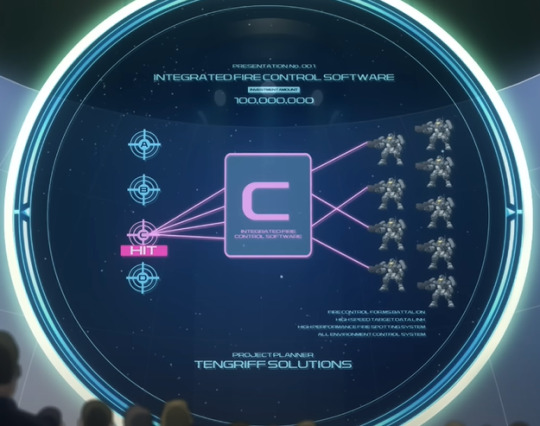

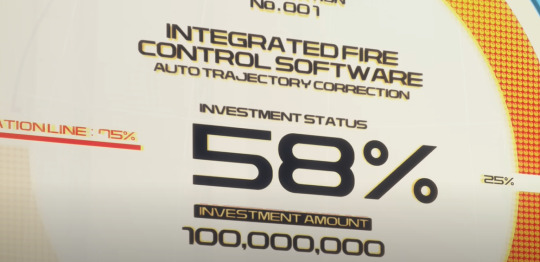

This is PRESENTATION No. 001 for the Incubation Party, from TENGRIFF SOLUTIONS. They're asking for 100,000,000 to develop INTEGRATED FIRE CONTROL SOFTWARE. Generally, that means they want to develop software that can automatically correct/redirect an MS's aim toward a specific target, in both Individual and Team-based MS Operations. The benefits of this system are:

AUTO TRAJECTORY CORRECTION

AUTO CORIOLIS CORRECTION

AUTO GRAVITY CORRECTION

FIRE CONTROL FORMS BATTALION

HIGH SPEED TARGET DATA LINK

HIGH PERFORMANCE SPOTTING SYSTEM

ALL ENVIRONMENT CONTROL SYSTEM

Unfortunately this project did not meet the 75% formation requirement and DIED

Funny Shaddiq Expression. I don't think he ever makes a face like this for the rest of the series.

[Pointing] One of the two times throughout the series we see any part of Notrette. The only other time is in the second season opening.

We see VIM JETURK on Lauda's screen when he's calling him. (The "Accept" button actually darkens when Lauda taps it)

This scene is our first look into Nika's role as a go between for Shaddiq, and also how it'll be a main point of conflict between her and Martin, as he's the one who sees the two of them talking.

(Also, Shaddiq has a habit of abruptly lowering the tone of his voice to signify a change in his demeanor. If you ever rewatch the series, try to listen for it! He does it all the time)

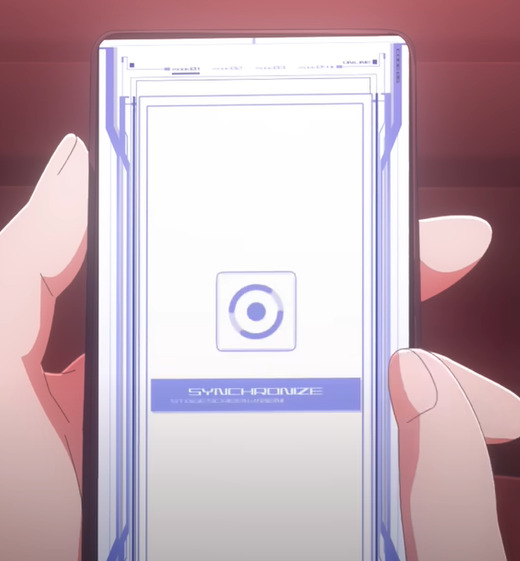

TEXT (Top to Bottom):

BENERIT GROUP NETWORK CONNECTION ^

ACCESS REQUEST

ACCESS POINT: STAGE SCREEN

LINE CODE: 2915.455X.eX

STATUS: APPROVAL PENDING

LINE CODE: Yds2.4006.40

LINE CODE: 2945.Rr50.52

LINE CODE: KL40.024c.R2

Miorine's phone when she requests access to the Stage Screen

When she gets approved and begins connecting, her phone displays this loading screen called SYNCHRONIZE

And the stage screen is displaying that same loading screen for a split second before fully connecting.

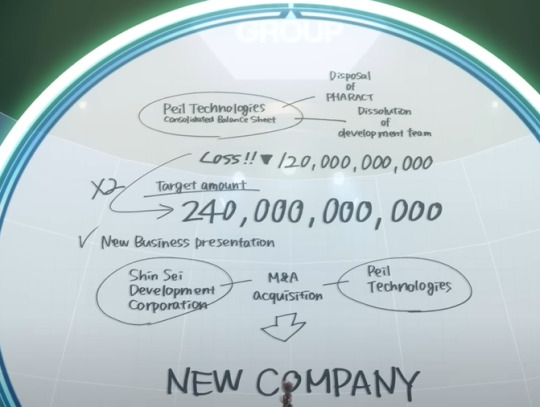

Miorine explains what this all means in the show proper, so I feel it'd be redundant to explain it, but here's the presentation anyhow.

We also see the UI of the program Mio is using in the presentation. She only uses the MEMO tab, but we see that there's a TEXT and PICT tab as well.

When Miorine takes the phone back from Delling, the investment status has reached 3%, meaning he invested 7,200,000,000 (3% of the 240,000,000,000 she asked for) in the company. Mamma mia!

Business Successfully Formed!

But it seems that they only reached the bare minimum amount, 75%. So of the 240,000,000,000 she requested, the company earned at LEAST 180,000,000,000.

We also see that Miorine’s presentation is only the 5th one of the night.

And that's all! Thank you very much! Unfortunately I can't leave any more images because I've somehow reached the image limit :(

Instead I leave you with this: Go back and watch the scene where Miorine and Suletta see Prospera and Godoy. After the scene where Suletta greets him, they keep drawing his face wrong. Okay! Goodbye....!

Click here to go to Episode 8!

Click here to go to the Masterpost!


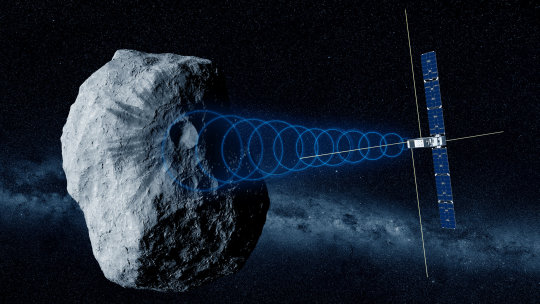

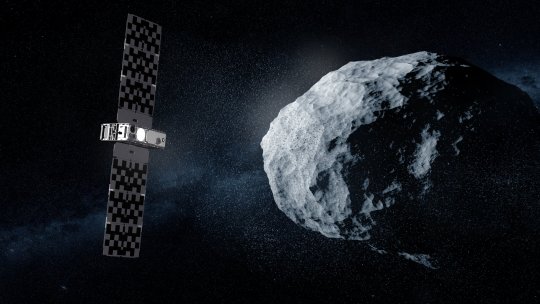

Hera asteroid mission's CubeSat passengers signal home

The two CubeSat passengers aboard ESA's Hera mission for planetary defense have exchanged their first signals with Earth, confirming their nominal status. The pair were switched on to check out all their systems, marking the first operation of ESA CubeSats in deep space.

"Each CubeSat was activated for about an hour in turn, in live sessions with the ground to perform commissioning—what we call 'are you alive?' and 'stowed checkout' tests," explains ESA's Hera CubeSats Engineer Franco Perez Lissi.

"The pair are currently stowed within their Deep Space Deployers, but we were able to activate every onboard system in turn, including their platform avionics, instruments and the inter-satellite links they will use to talk to Hera, as well as spinning up and down their reaction wheels which will be employed for attitude control."

Launched on 7 October, Hera is ESA's first planetary defense mission, headed to the first solar system body to have had its orbit shifted by human action: the Dimorphos asteroid, which was impacted by NASA's DART spacecraft in 2022.

Traveling with Hera are two shoebox-sized "CubeSats" built up from standardized 10-cm boxes. These miniature spacecraft will fly closer to the asteroid than their mothership, taking additional risks to acquire valuable bonus data.

Juventas, produced for ESA by GOMspace in Luxembourg, will make the first radar probe within an asteroid, while Milani, produced for ESA by Tyvak International in Italy, will perform multispectral mineral prospecting.

The commissioning took place from ESA's ESOC mission control center in Darmstadt in Germany, linked in turn to ESEC, the European Space Security and Education Center, at Redu in Belgium. This site hosts Hera's CubeSat Mission Operations Center, from where the CubeSats will be overseen once they are flying freely in space.

Juventas was activated on 17 October, at 4 million km away from Earth, while Milani followed on 24 October, nearly twice as far at 7.9 million km away.

The distances involved meant the team had to put up with tense waits for signals to pass between Earth and deep space, involving a 32.6-second round-trip delay for Juventas and a 52-second round-trip delay for Milani.

"During this CubeSat commissioning, we have not only confirmed the CubeSat instruments and systems work as planned but also validated the entire ground command infrastructure," explains Sylvain Lodiot, Hera Operations Manager.

"This involves a complex setup where data are received here at the Hera Missions Operations Center at ESOC but telemetry also goes to the CMOC at Redu, overseen by a Spacebel team, passed in turn to the CubeSat Mission Control Centers of the respective companies, to be checked in real time. Verification of this arrangement is good preparation for the free-flying operational phase once Hera reaches Dimorphos."

Andrea Zanotti, Milani's Lead Software Engineer at Tyvak, adds, "Milani didn't experience any computer resets or out of limits currents or voltages, despite its deep space environment which involves increased exposure to cosmic rays. The same is true of Juventas."

Camiel Plevier, Juventas's Lead Software Engineer at GomSpace, notes, "More than a week after launch, with 'fridge' temperatures of around 5°C in the Deep Space Deployers, the batteries of both CubeSats maintained a proper high state of charge. And it was nice to see how the checkout activity inside the CubeSats consistently warmed the temperature sensors throughout the CubeSats and the Deep Space Deployers."

The CubeSats will stay within their Deployers until the mission reaches Dimorphos towards the end of 2026, when they will be deployed at a very low velocity of just a few centimeters per second. Any faster and, in the ultra-low gravitational field of the Great Pyramid-sized asteroid, they might risk being lost in space.

Franco adds, "This commissioning is a significant achievement for ESA and our industrial partners, involving many different interfaces that all had to work as planned: all the centers on Earth, then also on the Hera side, including the dedicated Life Support Interface Boards that connects the main spacecraft with the Deployers and CubeSats.

"The concept that a spacecraft can work with smaller companion spacecraft aboard them has been successfully demonstrated, which is going to be followed by more missions in the future, starting with ESA's Ramses mission for planetary defense and then the Comet Interceptor spacecraft."

From this point, the CubeSats will be switched on every two months during Hera's cruise phase, to undergo routine operations such as checkouts, battery conditioning and software updates.

TOP IMAGE: Juventas studies asteroid's internal structure. Credit: ESA/Science Office

LOWER IMAGE: Milani studies asteroid dust. Credit: ESA-Science Office


Text

#Playstation7 #framework #BasicArchitecture #RawCode #RawScript #Opensource #DigitalConsole

To build a new gaming console’s digital framework from the ground up, you would need to integrate several programming languages and technologies to manage different aspects of the system. Below is an outline of one possible set of language choices, with example code, for various parts of the framework, using languages and technologies such as C++, Python, JavaScript, CSS, MySQL, and Perl for different functionalities.

1. System Architecture Design (Low-level)

• Language: C/C++, Assembly

• Purpose: To program the low-level system components such as CPU, GPU, and memory management.

• Example Code (C++) – Low-Level Hardware Interaction:

```cpp
#include <iostream>

int main() {
    // Initialize hardware (simplified example; real hardware init is platform-specific)
    std::cout << "Initializing CPU...\n";

    // Set up memory management
    std::cout << "Allocating memory for GPU...\n";

    // Example: allocate a buffer for gaming graphics.
    // This is host RAM; real GPU memory would be allocated through a graphics API.
    // 1024 ints is typically 4 KB (4 bytes per int), not 1 KB.
    int* graphicsMemory = new int[1024];
    std::cout << "Memory allocated for GPU graphics rendering.\n";

    // Simulate starting the game engine
    std::cout << "Starting game engine...\n";

    delete[] graphicsMemory; // Clean up
    return 0;
}
```

2. Operating System Development

• Languages: C, C++, Python (for utilities)

• Purpose: Developing the kernel and OS for hardware abstraction and user-space processes.

• Example Code (C) – Invoking a system call from user space (Linux):

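```c
#define _GNU_SOURCE          /* expose syscall() in glibc's <unistd.h> */
#include <stdio.h>
#include <unistd.h>
#include <sys/syscall.h>     /* SYS_* system call numbers (Linux) */

int main(void) {
    /* Invoke a system call directly by number. Numbers are architecture-specific
       (0 is read on x86-64 Linux, which needs arguments), so use the SYS_ names.
       SYS_getpid takes no arguments, so it is safe to call this way. */
    long pid = syscall(SYS_getpid);
    printf("System call executed, getpid returned %ld\n", pid);
    return 0;
}
```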

3. Software Development Kit (SDK)

• Languages: C++, Python (for tooling), Vulkan or DirectX (for graphics APIs)

• Purpose: Provide libraries and tools for developers to create games.

• Example SDK Code (Vulkan API with C++):

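```cpp
#include <vulkan/vulkan.h>
#include <iostream>

VkInstance instance = VK_NULL_HANDLE;

void initVulkan() {
    // Describe the application to the Vulkan driver
    VkApplicationInfo appInfo = {};
    appInfo.sType = VK_STRUCTURE_TYPE_APPLICATION_INFO;
    appInfo.pApplicationName = "GameApp";
    appInfo.applicationVersion = VK_MAKE_VERSION(1, 0, 0);
    appInfo.pEngineName = "GameEngine";
    appInfo.engineVersion = VK_MAKE_VERSION(1, 0, 0);
    appInfo.apiVersion = VK_API_VERSION_1_0;

    VkInstanceCreateInfo createInfo = {};
    createInfo.sType = VK_STRUCTURE_TYPE_INSTANCE_CREATE_INFO;
    createInfo.pApplicationInfo = &appInfo;

    // Create the instance and check the result instead of assuming success
    if (vkCreateInstance(&createInfo, nullptr, &instance) != VK_SUCCESS) {
        std::cerr << "Failed to create Vulkan instance\n";
        return;
    }
    std::cout << "Vulkan SDK Initialized\n";
}

int main() {
    initVulkan();
    if (instance != VK_NULL_HANDLE) {
        vkDestroyInstance(instance, nullptr); // release the instance on shutdown
    }
    return 0;
}
```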

4. User Interface (UI) Development

• Languages: JavaScript, HTML, CSS (for UI), Python (backend)

• Purpose: Front-end interface design for the user experience and dashboard.

• Example UI Code (HTML/CSS/JavaScript):

```html
<!DOCTYPE html>
<html>
<head>
  <title>Console Dashboard</title>
  <style>
    body { font-family: Arial, sans-serif; background-color: #282c34; color: white; }
    .menu { display: flex; justify-content: center; margin-top: 50px; }
    .menu button { padding: 15px 30px; margin: 10px; background-color: #61dafb; border: none; cursor: pointer; }
  </style>
</head>
<body>
  <div class="menu">
    <button onclick="startGame()">Start Game</button>
    <button onclick="openStore()">Store</button>
  </div>
  <script>
    function startGame() {
      alert("Starting Game...");
    }
    function openStore() {
      alert("Opening Store...");
    }
  </script>
</body>
</html>
```

5. Digital Store Integration

• Languages: Python (backend), MySQL (database), JavaScript (frontend)

• Purpose: A backend system for purchasing and managing digital game licenses.

• Example Backend Code (Python with MySQL):

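```python
import mysql.connector

def connect_db():
    # Placeholder credentials for a local demo; load real ones from config or the environment
    return mysql.connector.connect(
        host="localhost",
        user="admin",
        password="password",
        database="game_store",
    )

def fetch_games():
    db = connect_db()
    try:
        cursor = db.cursor()
        cursor.execute("SELECT * FROM games")
        # Assumes the columns are ordered id, name, price
        for game in cursor.fetchall():
            print(f"Game ID: {game[0]}, Name: {game[1]}, Price: {game[2]}")
        cursor.close()
    finally:
        db.close()  # always release the connection, even on errors

if __name__ == "__main__":
    fetch_games()
```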
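The snippet above only reads the catalogue; purchasing and managing licenses also needs a write path. Below is a minimal sketch of one, under stated assumptions: Python's built-in `sqlite3` stands in for MySQL so it runs without a server, and the `games`/`licenses` schema and the `purchase` and `owned_games` helpers are hypothetical names, not part of any real console store. The composite primary key is what stops a user from buying the same game twice, and every query is parameterized rather than built by string formatting.

```python
import sqlite3

# Hypothetical schema; sqlite3 stands in for MySQL so the sketch runs without a server.
SCHEMA = """
CREATE TABLE games (id INTEGER PRIMARY KEY, name TEXT NOT NULL, price REAL NOT NULL);
CREATE TABLE licenses (
    user_id INTEGER NOT NULL,
    game_id INTEGER NOT NULL REFERENCES games(id),
    PRIMARY KEY (user_id, game_id)  -- at most one license per user per game
);
"""


def purchase(db: sqlite3.Connection, user_id: int, game_id: int) -> bool:
    """Grant a license; return False if the game does not exist or is already owned."""
    if db.execute("SELECT 1 FROM games WHERE id = ?", (game_id,)).fetchone() is None:
        return False
    try:
        with db:  # commits on success, rolls back if the INSERT fails
            db.execute("INSERT INTO licenses (user_id, game_id) VALUES (?, ?)",
                       (user_id, game_id))
        return True
    except sqlite3.IntegrityError:  # duplicate (user_id, game_id): already owned
        return False


def owned_games(db: sqlite3.Connection, user_id: int) -> list:
    """Names of the games a user holds licenses for, alphabetically."""
    rows = db.execute(
        "SELECT g.name FROM licenses AS l JOIN games AS g ON g.id = l.game_id "
        "WHERE l.user_id = ? ORDER BY g.name",
        (user_id,),
    )
    return [name for (name,) in rows]


if __name__ == "__main__":
    db = sqlite3.connect(":memory:")
    db.executescript(SCHEMA)
    db.execute("INSERT INTO games VALUES (1, 'Space Racer', 59.99)")  # sample row
    print(purchase(db, user_id=42, game_id=1))  # True
    print(purchase(db, user_id=42, game_id=1))  # False: already owned
    print(owned_games(db, 42))                  # ['Space Racer']
```

With `mysql.connector` the same queries would use `%s` placeholders instead of `?`.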

6. Security Framework Implementation

• Languages: C++, Python, Perl (for system scripts)

• Purpose: Ensure data integrity, authentication, and encryption.

• Example Code (Python – Encrypting User Data):

```python
from cryptography.fernet import Fernet

# Generate a key for symmetric encryption (keep it secret; losing it means losing the data)
key = Fernet.generate_key()
cipher_suite = Fernet(key)

# Encrypt sensitive user information that must be read back later.
# Note: login passwords are normally hashed, not reversibly encrypted;
# a password is used here only to demonstrate Fernet.
password = b"SuperSecretPassword"
encrypted_password = cipher_suite.encrypt(password)
print(f"Encrypted Password: {encrypted_password}")

# Decrypting the password with the same key
decrypted_password = cipher_suite.decrypt(encrypted_password)
print(f"Decrypted Password: {decrypted_password}")
```
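The Fernet example shows reversible encryption, which suits data the console must read back later. For authentication, stored account passwords are usually hashed instead, so that even a leaked database does not reveal them. Here is a sketch using only Python's standard library (`hashlib.pbkdf2_hmac`, `secrets`, `hmac.compare_digest`); the iteration count and the `hash_password`/`verify_password` helper names are our assumptions, and a production system might prefer a dedicated algorithm such as scrypt or Argon2.

```python
import hashlib
import hmac
import secrets

ITERATIONS = 600_000  # assumed work factor; tune for your hardware and current guidance


def hash_password(password: str) -> tuple:
    """Return (salt, digest) to store; the password itself is never stored."""
    salt = secrets.token_bytes(16)  # a fresh random salt per account
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, digest: bytes) -> bool:
    """Re-derive the hash with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, digest)


if __name__ == "__main__":
    salt, digest = hash_password("SuperSecretPassword")
    print(verify_password("SuperSecretPassword", salt, digest))  # True
    print(verify_password("wrong guess", salt, digest))          # False
```

Because each account gets its own random salt, two users with the same password still end up with different stored digests.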

7. Testing and Quality Assurance

• Languages/Tools: Python (for automated tests), JavaScript with Jest (for front-end testing)

• Purpose: Unit testing, integration testing, and debugging.

• Example Unit Test (Python using unittest):

```python
import unittest

def add(a, b):
    return a + b

class TestGameFramework(unittest.TestCase):
    def test_add(self):
        self.assertEqual(add(10, 20), 30)

if __name__ == '__main__':
    unittest.main()
```

8. Order of Implementation

• Phase 1: Focus on core system architecture (low-level C/C++) and OS development.

• Phase 2: Develop SDK and start building the basic framework for UI and input management.

• Phase 3: Work on backend systems like the digital store and integrate with front-end UI.

• Phase 4: Begin rigorous testing and debugging using automated test suites.

This outline gives a rough idea of how each component could be built using a combination of these languages and technologies. Each section is modular, allowing teams to work on different aspects of the console simultaneously.

[Pixel Art/Sprites/Buildinodels/VictoriaSecretPixelArtKit #Playstation7 #DearDearestBrands]

#victoriasecretrunway#runwaybattle#victoria secret fashion#victoria secert model#victoriasecret#victoria secret#VictoriaSecretRunwayBattle#capcom victoriasecret#capcom videogames#playstation7#ps7#deardearestbrands#capcom#digitalconsole#python#script#rawscript#raw code#framework#VictoriaSecretVideoGame#deardearestbrandswordpress


Text

Mapping LGBTQ+ Spaces through the Years

by Chloe Foor, Student Historian 2022/2024

Hello everyone! Welcome to a little project update from your local Student Historian in Residence. The last two months have been filled with research, data collection, and learning new software programs. I’ve had a great time, and I can’t wait to share what I’ve been doing.

I spent the first few weeks of October doing more research, scouring both the Archives and the Internet to find more locations significant to LGBTQ+ Madisonians over the years. While in the Archives, I looked at the Lesbian, Gay, Bisexual, and Transgender (LGBT) Folder 1 Subject File, which has materials from the 1990s to the mid-2010s (see University of Wisconsin-Madison Subject Files collection. uac69, Box 75, Folder 10. University of Wisconsin-Madison Archives, Madison, WI.). The most interesting item to me was a document by Joe Elder, "Reflection on the LGBTQ movement on Campus," which he wrote in the aftermath of the 2016 Orlando Nightclub Shooting. It provided some really interesting perspectives on the LGBTQ+ Rights movement in Madison.

Online, my main source of information is the Wisconsin LGBTQ+ History Project, which I wrote more about in my last blog post. I didn’t realize that they had digitized copies of old guides that listed LGBTQ+ friendly businesses as far back as 1963. It was fascinating to go through those and find the locations that queer people frequented in the past. The most interesting place I found listed was Apple Island, which I had seen before in archival research, though I didn't know it was in Madison. Apple Island was a community organization created to promote women's culture, and it was reported that 90% of the clientele were lesbians. My favorite part of the research project is finding these lesser-known spaces, and I can’t wait for more people to be able to easily access them through my project!

Outside of finding new “spaces,” I spent some time filling in the spreadsheet I use to keep track of each location. For each space, I list the title, address, significance, and years it was active, but sometimes it's hard to find all of that information on the first pass through. Sometimes, I'll just write the title and a short description, and then I can go back through later and fill in the rest. Now, all 165 entries are fully fleshed out!

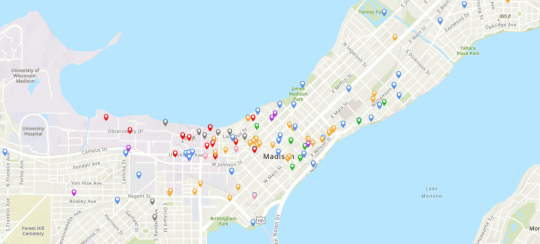

With the spreadsheet fully populated, I was ready to import it into ArcGIS, a digital mapping software. It was able to read the spreadsheet into different categories and add every space to a map! In the above image, all of the gray markers are for “Purge,” the blue markers are for “Community,” the orange markers are for “Commercial Area,” the purple markers are for “Healthcare,” the pink markers are for “Media,” the green markers are for “Politics,” and the red markers are for “UW”! If you click on a marker, a pop-up window will display with information about the location, including beginning and end dates as well as a short description.

Here is the pop-up for The Crossing:

In the coming months, I will be working on making a public website to share my research! I also want to host an outreach event in collaboration with the Gender and Sexuality Campus Center and Phi Alpha Theta, the History Honors Society. I’m sure more meetings on the topic will come up in the coming months, and I will make sure to keep you posted! For now, though, that’s all from me!


Text

WILL CONTAINERS REPLACE HYPERVISORS?

Virtualization has changed the way data centers operate. Over the years, new software has made it easier for companies to manage their data centers, letting them run several operating systems side by side on the same hardware, make the most of their resources, and simplify the management of their data.

Choosing between these technologies requires a proper understanding of each. That holds for containers and hypervisors, both of which have been on the market for some time and give companies different ways to run their workloads.

Let’s understand how they work

Virtual machines: a hypervisor abstracts the physical hardware, so each VM can run its own complete operating system.

Containers: they abstract the operating system, so applications run in isolated environments on top of a shared host kernel. They have become much more popular recently.

Container technology existed well before 2013, but it took off after Docker was released that year. Docker is an open-source platform for building, deploying and managing containerized applications.

Containers always rely on the underlying host operating system: they share its kernel, which provides basic services to all of them. Hypervisors, by contrast, run a separate guest operating system for each VM, usually with help from hardware virtualization support in the CPU.

Although containers and hypervisors work differently and have distinct features, the two technologies share some goals, such as improving the efficiency of IT operations, getting more value from applications, and shortening the software development lifecycle.

Today there is a lot of discussion about whether containers will take over and replace hypervisors. Opinions are divided, because each technology has properties that suit different problems.

Let’s look in detail at how they work, how they differ, and which one is better.

What are virtual machines?

Virtual machines are software-defined computers that run on a hypervisor, which lets multiple isolated operating systems and their applications share the same physical hardware. They are best suited when you need to run different applications without letting them interfere with each other.

Because each VM gets its own set of virtual hardware, companies can consolidate many workloads onto fewer physical servers, which reduces the money spent on hardware.

Virtual machines run on physical computers through a lightweight software layer called a hypervisor.

The hypervisor keeps VMs isolated from one another and allocates processors, memory and storage among them. Cloud hosting providers use this to run many customers' workloads on each expensive physical node.

Hypervisors allow a host machine to run VMs with different guest operating systems, so a single host can operate many virtual machines and make the most of resources such as bandwidth and memory.

What is a container?

Containers are also software-defined environments, but they all run on a single host operating system and share its kernel. Because each container is packaged with what its application needs, it can run the same way on a laptop, on a server or in the cloud.

Containers use operating system (OS) virtualization: they rely on the host operating system's kernel to perform their functions. A container bundles the application code, its dependencies and the user-space libraries it needs, but not a kernel of its own, which lets it run almost anywhere, including on cloud hosting platforms.

They offer a streamlined way to meet infrastructure requirements and can be used as an alternative to virtual machines.

Even though containers have improved how applications are developed and deployed on cloud platforms, they are generally considered less isolated, and therefore less secure, than VMs.

A single operating system can run many containers that share its resources, which further streamlines the infrastructure a system needs.

Now that we understand how VMs and containers work, let’s look at the benefits of each technology.

Benefits of virtual machines

They allow different operating systems to run on one physical machine, which cuts costs for energy, rack space and cooling and brings economic gains in the cloud.

VMs are easy to spin up and down, and backing them up is straightforward.

Because backups and image restores are easy, recovering from a disaster is simpler.

Each VM has its own isolated operating system, so testing applications in a VM is relatively easy and safe.

Benefits of containers:

They are lightweight, so they boot much faster than VMs, often within a few seconds, and they need less hardware because they do not each carry a full operating system.

They are portable and can run almost anywhere, which reduces problems caused by differences between environments.

They enable microservices, which make applications easier to test, reduce single points of failure and increase development velocity.

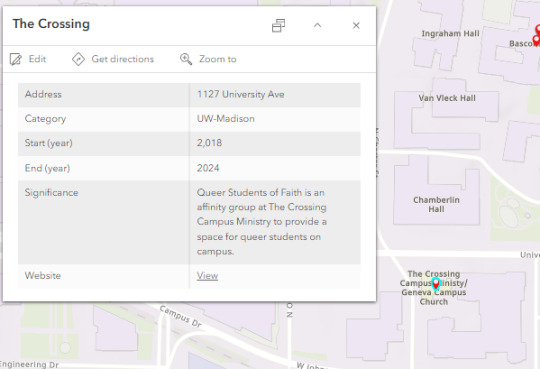

Let’s see the difference between containers and VMs

Looking at these differences, containers have some advantages over the older virtualization technology: in many ways they are faster, more lightweight and easier to manage than VMs.

With a hypervisor, the physical hardware is virtualized and each VM runs its own separate operating system on the same physical machine. Each VM therefore needs a full operating system in addition to the application and its associated libraries.

Containers, by contrast, virtualize the operating system instead of the hardware, so each container holds only the application, its libraries and its dependencies.

Like virtual machines, containers let developers make better use of a physical machine's CPU and memory. Containers also enable microservice architectures, in which application components can be deployed and scaled more granularly.
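To make the overhead argument concrete, here is a toy capacity model. Every number in it is an illustrative assumption, not a benchmark: a 64 GB host, a 512 MB application, about 1.5 GB for a full guest OS in each VM, and a small fixed cost per container. It also ignores CPU, disk, and the memory the host itself needs. The only point it makes is the one above: a per-instance guest OS is a fixed cost that containers avoid.

```python
# Toy capacity model. Every number below is an illustrative assumption, not a measurement.
HOST_MEMORY_MB = 64 * 1024      # assumed host RAM: 64 GB
APP_MB = 512                    # assumed memory for one application instance
GUEST_OS_MB = 1536              # assumed memory for a full guest OS inside each VM
CONTAINER_OVERHEAD_MB = 64      # assumed per-container runtime overhead (no guest OS)


def instances_that_fit(host_mb: int, app_mb: int, overhead_mb: int) -> int:
    """How many app instances fit in host memory, given a fixed overhead per instance."""
    return host_mb // (app_mb + overhead_mb)


vms = instances_that_fit(HOST_MEMORY_MB, APP_MB, GUEST_OS_MB)
containers = instances_that_fit(HOST_MEMORY_MB, APP_MB, CONTAINER_OVERHEAD_MB)
print(f"VMs: {vms}, containers: {containers}")  # VMs: 32, containers: 113
```

Change the assumed overheads and the gap shrinks or grows, but as long as a guest OS costs more than a container runtime, the container column stays larger.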

Having seen the benefits of and differences between the two technologies, it is worth knowing when to use containers and when to use virtual machines; many organizations use both, while others pick one.

Use a hypervisor in cases such as these:

Many people want to stay with virtual machines because they already fit their workloads and processes, and moving to containers does not make sense for them.

VMs let a single computer or cloud server run multiple applications, each in its own operating system, which is all that many people need.

Containers share the host operating system, while VMs do not. From a security standpoint, a flaw in the shared kernel can affect every container on the host at once, whereas each virtual machine has its own operating system behind the hypervisor, so a compromise is more likely to stay confined to one VM.

Containers turn out to be useful in these cases:

Containers support DevOps and microservices because they are portable and fast, typically starting in seconds or less.

Many web applications are moving toward a microservices architecture. Containers support this by making it easy to update and redeploy only the part of the application that changed.

Containers are easy to scale: with an orchestrator, new containers can be started automatically from the same image when load rises and shut down when they are no longer needed.

As people look for faster technology, containers win on speed: they start much faster than VMs on a hypervisor, which also enables fast testing and quick recovery when a restart is needed.
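Automatic scaling of containers is usually driven by a simple proportional rule. The sketch below follows the formula Kubernetes documents for its Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric); the `desired_replicas` function, its default bounds and the 10% tolerance band are assumptions for illustration, and real orchestrators add further safeguards such as stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule in the style of the Kubernetes HPA formula.

    current_metric / target_metric is, e.g., average CPU use divided by the CPU target.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas              # close enough to target: avoid churn
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))  # clamp to the allowed range


if __name__ == "__main__":
    print(desired_replicas(4, current_metric=90, target_metric=50))   # 8: scale up
    print(desired_replicas(4, current_metric=10, target_metric=50))   # 1: spin down
    print(desired_replicas(4, current_metric=52, target_metric=50))   # 4: within tolerance
    print(desired_replicas(4, current_metric=200, target_metric=50))  # 10: capped at max
```

The tolerance band keeps small fluctuations from constantly starting and stopping containers.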

So, will containers replace hypervisors?

Although the two technologies share some similarities, they differ in important ways, so there is no simple answer. Before drawing any conclusions, let’s look at a few points about each.

A question may still arise: why containers?

Although, as stated above, there are many reasons to keep using virtual machines, containers offer flexibility and portability that are increasing their demand in a multi-cloud world, along with more efficient resource allocation.

Many companies are still unsure how best to deploy new applications; containerizing them makes it easier to move them between the many cloud and data center environments of modern IT.

Containers are also useful for automation and DevOps pipelines, including continuous integration and continuous delivery (CI/CD). Because containers are small and modular, an application can be built from small parts stacked together.

They not only increase system efficiency and make better use of resources but also save money, since one host can run many isolated processes.

They also boot faster than virtual machines, which can take minutes to start and to recover.

Another important point is their minimal structure: they do not need a full operating system of their own or dedicated hardware, and they can be installed and removed without disturbing the rest of the system.

Containers also change the traditional patching process: instead of patching running systems, teams can rebuild and redeploy updated images, which helps organizations respond to issues faster and makes applications easier to manage.

Because containers abstract the operating system rather than the hardware, they avoid much of the overhead of running a full guest OS for every workload, although all containers on a host must share its kernel.

Still, virtual machines are useful to many

Although containers have many advantages over virtual machines, they also have some disadvantages, notably security issues that come from sharing the host's kernel.

Breaking into a container can be more damaging than breaking into a VM: because all containers on a host share a single operating system kernel, a breach could give an attacker access to the whole host. Virtual machines add a barrier between each VM, the host server and the other VMs.

If the shared host operating system is infected by malware, the malware can reach every container on it; this is much less likely with virtual machines.

People feel more familiar with virtual machines because they have been established in most organizations for a long time, and businesses already have teams and procedures for deploying, backing up and monitoring VMs.

Companies also often prefer to run complex applications in a full, self-contained operating system, treated as one machine.

Conclusion

To conclude, containers and virtual machines solve different problems. Containers help teams focus on building code, creating better software and making applications run faster. Virtual machines, although slower, less portable and heavier, are still preferred for provisioning enterprise infrastructure and running legacy or monolithic applications.

In short, if you need to run a full operating system, choose a hypervisor; if you want lightweight, portable deployment, for example through a cloud managed service provider, choose containers.

It will take time for containers to replace virtual machines, if they ever do, because many organizations still need VMs to run older applications and to host multiple operating systems in parallel, even though VMs are less cloud-native. Containers are therefore unlikely to replace virtual machines: the two technologies complement each other rather than replacing each other, and both have a place in the modern data center.

For more insights do visit our website

#container #hypervisor #docker #technology #zybisys #godaddy


Text

Good Cloud Call Center Software Capabilities

Cloud call center software offers essential features for efficient customer communication, including Interactive Voice Response (IVR), Automatic Call Distribution (ACD), call monitoring, live call transfer, CRM integration, and omnichannel communication. IVR acts as a virtual receptionist, ACD ensures timely routing, and CRM integration provides a 360-degree view of callers. The ability to transfer live calls seamlessly and accommodate various communication channels enhances customer experience. Cloud call center solutions streamline business communications, contributing to enhanced customer satisfaction and operational efficiency.
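To show how IVR and ACD fit together, here is a toy sketch in Python. The menu mapping, the `Agent` and `ACD` classes and the longest-idle-agent rule are assumptions for illustration, not any vendor's API: the IVR step turns a keypress into a queue, and the ACD step hands the call to the eligible agent who has been idle longest, or queues the caller until one frees up. Unrecognized input falls back to a human operator.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical IVR menu: keypress -> queue name. A real system would load this from config.
IVR_MENU = {"1": "sales", "2": "support", "0": "operator"}


@dataclass
class Agent:
    name: str
    skills: set          # queues this agent can serve
    idle_since: float    # time the agent last became free


@dataclass
class ACD:
    """Toy automatic call distributor: longest-idle eligible agent wins, else the caller waits."""
    idle_agents: list = field(default_factory=list)
    waiting: dict = field(default_factory=dict)   # queue name -> deque of callers

    def route(self, caller: str, keypress: str) -> Optional[str]:
        queue = IVR_MENU.get(keypress, "operator")   # unknown input falls back to a human
        eligible = [a for a in self.idle_agents if queue in a.skills]
        if not eligible:
            self.waiting.setdefault(queue, deque()).append(caller)
            return None                                # caller holds in the queue
        agent = min(eligible, key=lambda a: a.idle_since)  # longest idle first
        self.idle_agents.remove(agent)
        return agent.name

    def agent_free(self, agent: Agent, now: float) -> Optional[str]:
        """Give a newly free agent the first waiting caller they can serve, if any."""
        for skill in sorted(agent.skills):            # arbitrary but deterministic order
            queue = self.waiting.get(skill)
            if queue:
                return queue.popleft()
        agent.idle_since = now
        self.idle_agents.append(agent)
        return None


if __name__ == "__main__":
    acd = ACD(idle_agents=[Agent("Ana", {"sales"}, 10.0), Agent("Ben", {"sales", "support"}, 5.0)])
    print(acd.route("caller-1", "1"))   # Ben (idle since 5.0, longer than Ana)
    print(acd.route("caller-2", "2"))   # None: no free support agent, caller queued
```

Longest-idle routing is only one common policy; skills-based priority or round-robin would slot into `route` in the same place.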

#call center solutions#ivr software#predictive dialer software#auto dialer software#call center dialer#hosted call center solutions#predictive dialer#omnichannel contact center software#cloud call center software#outbound call center software


Text

CLOUD S.

Cloud storage //

" MEANING "

Cloud storage is the storage of data on servers in the online world we call the Cloud, which users reach over an Internet connection to browse and use their data. The cloud may store data on multiple servers by distributing it across many machines. The cloud provider (host) manages and maintains all the hardware and software used to store the data, which lets users reduce their own hardware and software costs.

" HOWEVER "

The technology that answers the storage needs of the Big Data era is cloud storage built on Software Defined Storage (SDS). The basic principle of SDS is to consolidate control into a single point and to virtualize the storage layer into pools that can be allocated according to each intended use.

------------------------------------------------------------------------------

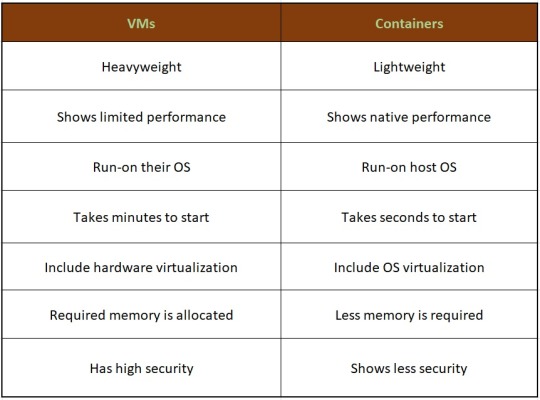

" Difference Between Traditional Storage and Software Defined Storage "

An overview of the differences between Traditional Storage and Software Defined Storage (SDS).

In traditional architectures, the storage for each application is clearly separate. With SDS, the storage tier is pooled together under the same management unit across all applications.

In traditional systems, each application has its own separate control center, so the system design must take hardware compatibility heavily into account, which can lead to a situation known as vendor lock-in. With SDS, management for every application sits in one place, so there is more freedom to choose hardware and expand the number of machines.

Next is workload distribution. Traditionally, workload and capacity pile up on heavily used systems, and the load is quite difficult to manage. With SDS, the workload can be distributed throughout the cluster, as shown in Figure 1.
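The pooling idea can be sketched in a few lines. In this toy model, assumed for illustration rather than taken from any SDS product, a single `StoragePool` object acts as the one control point over several nodes of different sizes, and each new piece of data goes to the least-utilized node with room, so the workload spreads across the cluster instead of piling onto one system.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    capacity_gb: int
    used_gb: int = 0

    @property
    def free_gb(self) -> int:
        return self.capacity_gb - self.used_gb


@dataclass
class StoragePool:
    """Toy SDS control point: several nodes managed as one pool of capacity."""
    nodes: list = field(default_factory=list)

    @property
    def free_gb(self) -> int:
        return sum(n.free_gb for n in self.nodes)   # the pool's capacity, seen as one unit

    def place(self, size_gb: int) -> str:
        """Store data on the least-utilized node that has room; return that node's name."""
        candidates = [n for n in self.nodes if n.free_gb >= size_gb]
        if not candidates:
            raise RuntimeError("no single node has enough free space")
        node = min(candidates, key=lambda n: n.used_gb / n.capacity_gb)
        node.used_gb += size_gb
        return node.name


if __name__ == "__main__":
    pool = StoragePool([Node("a", 100), Node("b", 100), Node("c", 200)])
    print([pool.place(50) for _ in range(4)])   # ['a', 'b', 'c', 'c']
    print(pool.free_gb)                         # 200
```

Because placement looks at utilization rather than raw free space, the larger node `c` absorbs proportionally more data; adding a node to `nodes` immediately grows the pool.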

// Here is a summary of the pros and cons of SDS and how it addresses the problems of today's Cloud Storage infrastructure design. There is also one interesting prediction: Gartner predicts that by 2024, nearly three years from now, 50% of all storage will be deployed with SDS. //

Why did I choose this app?

" Because it's easy and comfortable to use "


Text

Elon Musk’s so-called Department of Government Efficiency (DOGE) has plans to stage a “hackathon” next week in Washington, DC. The goal is to create a single “mega API”—a bridge that lets software systems talk to one another—for accessing IRS data, sources tell WIRED. The agency is expected to partner with a third-party vendor to manage certain aspects of the data project. Palantir, a software company cofounded by billionaire and Musk associate Peter Thiel, has been brought up consistently by DOGE representatives as a possible candidate, sources tell WIRED.

Two top DOGE operatives at the IRS, Sam Corcos and Gavin Kliger, are helping to orchestrate the hackathon, sources tell WIRED. Corcos is a health-tech CEO with ties to Musk’s SpaceX. Kliger attended UC Berkeley until 2020 and worked at the AI company Databricks before joining DOGE as a special adviser to the director at the Office of Personnel Management (OPM). Corcos is also a special adviser to Treasury Secretary Scott Bessent.

Since joining Musk’s DOGE, Corcos has told IRS workers that he wants to pause all engineering work and cancel current attempts to modernize the agency’s systems, according to sources with direct knowledge who spoke with WIRED. He has also spoken about some aspects of these cuts publicly: "We've so far stopped work and cut about $1.5 billion from the modernization budget. Mostly projects that were going to continue to put us down the death spiral of complexity in our code base," Corcos told Laura Ingraham on Fox News in March.

Corcos has discussed plans for DOGE to build “one new API to rule them all,” making IRS data more easily accessible for cloud platforms, sources say. APIs, or application programming interfaces, enable different applications to exchange data, and could be used to move IRS data into the cloud. The cloud platform could become the “read center of all IRS systems,” a source with direct knowledge tells WIRED, meaning anyone with access could view and possibly manipulate all IRS data in one place.

Over the last few weeks, DOGE has requested the names of the IRS’s best engineers from agency staffers. Next week, DOGE and IRS leadership are expected to host dozens of engineers in DC so they can begin “ripping up the old systems” and building the API, an IRS engineering source tells WIRED. The goal is to have this task completed within 30 days. Sources say there have been multiple discussions about involving third-party cloud and software providers like Palantir in the implementation.

Corcos and DOGE indicated to IRS employees that they intended to first apply the API to the agency’s mainframes and then move on to every other internal system. Initiating a plan like this would likely touch all data within the IRS, including taxpayer names, addresses, social security numbers, as well as tax return and employment data. Currently, the IRS runs on dozens of disparate systems housed in on-premises data centers and in the cloud that are purposefully compartmentalized. Accessing these systems requires special permissions and workers are typically only granted access on a need-to-know basis.

A “mega API” could potentially allow someone with access to export all IRS data to the systems of their choosing, including private entities. If that person also had access to other interoperable datasets at separate government agencies, they could compare them against IRS data for their own purposes.

“Schematizing this data and understanding it would take years,” an IRS source tells WIRED. “Just even thinking through the data would take a long time, because these people have no experience, not only in government, but in the IRS or with taxes or anything else.” (“There is a lot of stuff that I don't know that I am learning now,” Corcos tells Ingraham in the Fox interview. “I know a lot about software systems, that's why I was brought in.")

These systems have all gone through a tedious approval process to ensure the security of taxpayer data. Whatever may replace them would likely still need to be properly vetted, sources tell WIRED.

"It's basically an open door controlled by Musk for all American's most sensitive information with none of the rules that normally secure that data," an IRS worker alleges to WIRED.

The data consolidation effort aligns with President Donald Trump’s executive order from March 20, which directed agencies to eliminate information silos. While the order was purportedly aimed at fighting fraud and waste, it also could threaten privacy by consolidating personal data housed on different systems into a central repository, WIRED previously reported.

In a statement provided to WIRED on Saturday, a Treasury spokesperson said the department “is pleased to have gathered a team of long-time IRS engineers who have been identified as the most talented technical personnel. Through this coalition, they will streamline IRS systems to create the most efficient service for the American taxpayer. This week the team will be participating in the IRS Roadmapping Kickoff, a seminar of various strategy sessions, as they work diligently to create efficient systems. This new leadership and direction will maximize their capabilities and serve as the tech-enabled force multiplier that the IRS has needed for decades.”

Palantir, Sam Corcos, and Gavin Kliger did not immediately respond to requests for comment.

In February, a memo was drafted to provide Kliger with access to personal taxpayer data at the IRS, The Washington Post reported. Kliger was ultimately provided read-only access to anonymized tax data, similar to what academics use for research. Weeks later, Corcos arrived, demanding detailed taxpayer and vendor information as a means of combating fraud, according to the Post.

“The IRS has some pretty legacy infrastructure. It's actually very similar to what banks have been using. It's old mainframes running COBOL and Assembly and the challenge has been, how do we migrate that to a modern system?” Corcos told Ingraham in the same Fox News interview. Corcos said he plans to continue his work at IRS for a total of six months.

DOGE has already slashed and burned modernization projects at other agencies, replacing them with smaller teams and tighter timelines. At the Social Security Administration, DOGE representatives are planning to move all of the agency’s data off of legacy programming languages like COBOL and into something like Java, WIRED reported last week.

Last Friday, DOGE suddenly placed around 50 IRS technologists on administrative leave. On Thursday, even more technologists were cut, including the director of cybersecurity architecture and implementation, deputy chief information security officer, and acting director of security risk management. IRS’s chief technology officer, Kaschit Pandya, is one of the few technology officials left at the agency, sources say.

DOGE originally expected the API project to take a year, multiple IRS sources say, but that timeline has shortened dramatically down to a few weeks. “That is not only not technically possible, that's also not a reasonable idea, that will cripple the IRS,” an IRS employee source tells WIRED. “It will also potentially endanger filing season next year, because obviously all these other systems they’re pulling people away from are important.”

(Corcos also made it clear to IRS employees that he wanted to kill the agency’s Direct File program, the IRS’s recently released free tax-filing service.)

DOGE’s focus on obtaining and moving sensitive IRS data to a central viewing platform has spooked privacy and civil liberties experts.

“It’s hard to imagine more sensitive data than the financial information the IRS holds,” Evan Greer, director of Fight for the Future, a digital civil rights organization, tells WIRED.

Palantir received FedRAMP High authorization, the program's highest level, this past December for its entire product suite, including Palantir Federal Cloud Service (PFCS), which provides a cloud environment for federal agencies to implement the company’s software platforms, like Gotham and Foundry. FedRAMP, the Federal Risk and Authorization Management Program, assesses cloud products for security risks before government use.

“We love disruption and whatever is good for America will be good for Americans and very good for Palantir,” Palantir CEO Alex Karp said in a February earnings call. “Disruption at the end of the day exposes things that aren't working. There will be ups and downs. This is a revolution, some people are going to get their heads cut off.”


Text

[post text: Going to put all this in its own post too by popular request: here's how you make your own website with no understanding of HTML code at all, no software, no backend, absolutely nothing but a text file and image files!

First get website server space of your own, like at NEOCITIES. The free version has enough room to host a whole fan page, your art, a simple comic series, whatever!

The link I've provided goes to a silly comic that will tell you how to save the page as an html file and make it into a page for your own site. The bare minimum of all you need to do with it is JUST THIS: /end PT]

[image ID: a creature explaining what to change after you save the html to make it your own website: “Guess what? The circled bits are all you ever need to change to make it your OWN PAGE” The sections circled in red are:

the text of the page's tab, between "head" and "title" tags. it says “YOUR WEBSITE'S TITLE HERE”; the full line is: <head><title>YOUR WEBSITE'S TITLE HERE</title></head>

a header for the page, between "h1" and "p" tags. it says “BIG, CENTERED TEXT”; the full line is: <h1><p style="font-size:40px">BIG, CENTERED TEXT</p></h1>

a link to the comic's image file, inside an "img" tag wrapped in "h1" tags. it says “thisisntyourcomic.png”; the full line is: <h1><img src="thisisntyourcomic.png"></h1>

the three navigation links, each inside an "a" tag. the first link applies to the word “Previous”, the second applies to the word “Home”, and the third to the word “Next”; the first line is: <a href="001.html"><b>Previous</b></a> . the second line is: <a href="YOURWEBSITE.WHATEVER"><b>Home</b></a>&nbsp;&nbsp; . the third line is: <a href="001.html"><b>Next</b></a>

/end ID]
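For reference, here is a minimal sketch of a complete page containing the same four circled parts, written with every tag properly closed. It is not the actual template file from the comic: the `<!DOCTYPE html>` line, the `charset` line, the inline centering styles and the `alt` text are assumptions added for illustration, while the file names (`thisisntyourcomic.png`, `001.html`, `YOURWEBSITE.WHATEVER`) are the placeholders from the image.

```html
<!DOCTYPE html>
<html>
<head>
  <meta charset="utf-8">
  <!-- The text shown in the browser tab -->
  <title>YOUR WEBSITE'S TITLE HERE</title>
</head>
<body>
  <!-- Big header text; the inline style sets its size and centers it (assumed styling) -->
  <h1 style="font-size:40px; text-align:center">BIG, CENTERED TEXT</h1>

  <!-- The comic image; upload the PNG to the same folder as this html file -->
  <p style="text-align:center"><img src="thisisntyourcomic.png" alt="Comic page"></p>

  <!-- Navigation: point each href at your own page files -->
  <p style="text-align:center">
    <a href="001.html"><b>Previous</b></a>&nbsp;&nbsp;
    <a href="YOURWEBSITE.WHATEVER"><b>Home</b></a>&nbsp;&nbsp;
    <a href="001.html"><b>Next</b></a>
  </p>
</body>
</html>
```

Save it as a `.html` file, upload it next to the image, and swap in your own title, text, image name and link targets.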

[post text: Change the titles, text, and image URLs to whatever you want them to be, upload your image files and the html file together to your free website (or the same subfolder in that website), and now you have a webpage with those pictures on it. That's it!!!!!

.....But if you want to change some more super basic things about it, here's additional tips from the same terrible little guy: /end PT]

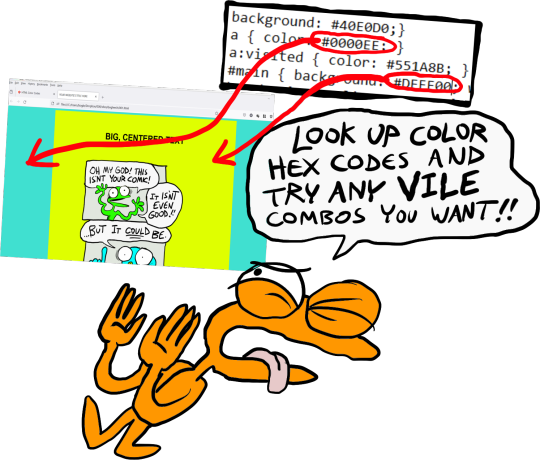

[image ID: the creature from before, explaining how to change the background colors: “AAH! It's extra stuff! Here's where you can change colors!” a few spots for hexcodes and code segments are circled and labelled. The lines of code are:

color: #000000; labelled “Font”

background: #FFFFFF; labelled “Background 1”

a { color: #0000EE; } labelled “Links”

a:visited { color: #551A8B; } labelled “Visited Links”

#main { background: #FFFFFF; width: 900…} labelled “Background 2”

The creature continues: “There's two different ones for "background" because the sample file has this nice middle column for your content!”

/end ID]

[image ID: a demonstration of the different background colors. Background 1 applies to the background of the webpage, and Background 2 applies to the background of the content column. The creature instructs: “Look up color hex codes and try any vile combos you want!” /end ID]
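Put together, the color settings from these two images might look like the sketch below. The image only shows the individual lines, so the `body` selector for the font color and Background 1, and the `<div id="main">` wrapper for the content column, are assumptions about how the template is organized. The `<style>` part belongs inside `<head>` and the `div` inside `<body>`.

```html
<style>
  /* Assumed selector: text color and Background 1 for the whole page */
  body {
    color: #000000;        /* Font */
    background: #FFFFFF;   /* Background 1: the page behind the column */
  }
  a { color: #0000EE; }          /* Links */
  a:visited { color: #551A8B; }  /* Visited Links */
  /* Background 2: the middle content column (the template also sets a width here) */
  #main {
    background: #FFFFFF;
  }
</style>

<!-- Everything inside this div gets Background 2 -->
<div id="main">
  <p>Your comic or text goes here.</p>
</div>
```

Changing only the `body` background is the quickest way to see the two backgrounds separate.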

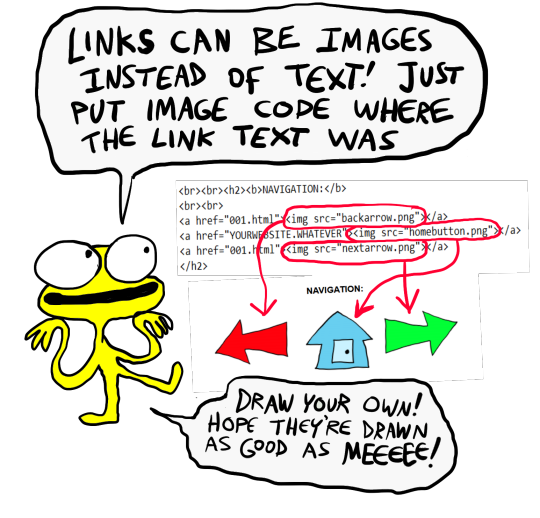

[image ID: the creature explaining how to change the navigation at the bottom: “Links can be images instead of text! Just put an image code where the link text was.” The code segment from the first image for navigation links except with the "Previous", "Home", and "Next" texts replaced with <img src="backbutton.png">, <img src="homebutton.png"> and <img src="nextbutton.png"> /end ID]
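A sketch of the navigation with button images, using the image file names from the picture above (`backbutton.png`, `homebutton.png`, `nextbutton.png`) and the link targets from the first template image. The `alt` text is an addition so each button still makes sense if its image fails to load.

```html
<!-- Same three links as before; an img tag replaces each word -->
<a href="001.html"><img src="backbutton.png" alt="Previous"></a>&nbsp;&nbsp;
<a href="YOURWEBSITE.WHATEVER"><img src="homebutton.png" alt="Home"></a>&nbsp;&nbsp;
<a href="001.html"><img src="nextbutton.png" alt="Next"></a>
```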

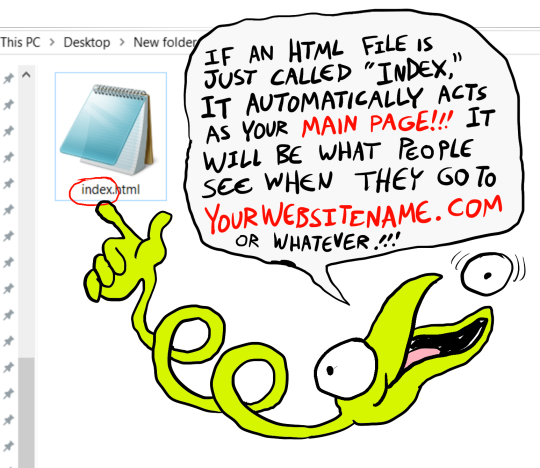

[image ID: a screenshot of the html code inside the computer's files, with the creature explaining: “If an html file is just called "Index," it automatically acts as your main page! It will be what people see when they go to yourwebsitename.com or whatever!” /end ID]

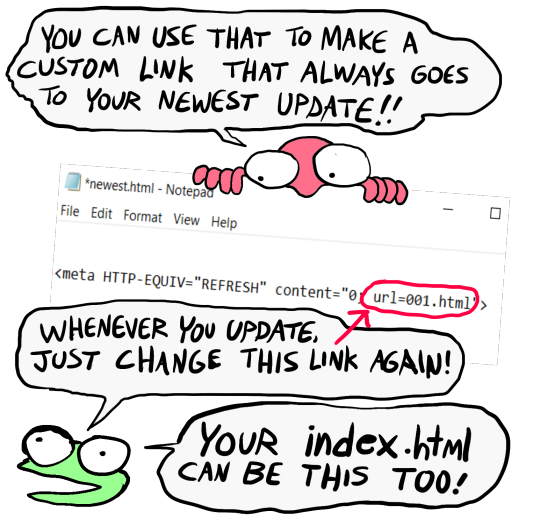

[image ID: a singular line of code: <meta HTTP-EQUIV="REFRESH" content="0; url=yourwebsite.com"> The creature says: “If you make a page with only this one line of code, anyone who goes to the page will be taken to whatever URL you put there!” /end ID]

[image ID: the creature further explains: "You can use that to make a custom link that always goes to your newest update! Whenever you update, just change this link again! Your index.html can be this, too!" There's an arrow pointing towards the "url=yourwebsite.com" in the code segment from the previous image. /end ID]

[post text: That last code by itself is:

<meta HTTP-EQUIV="REFRESH" content="0; url=001.html">

Change "001.html" to wherever you want that link to take people. THIS IS THE REASON WHY when you go to bogleech.com/pokemon/ you are taken instantly to the newest Pokemon review, because the /pokemon/ directory of my website has an "index.html" page with this single line of code. Every pokemon review has its own permanent link, but I change that single line in the index file so it points to the newest page whenever I need it to!

While I catered these instructions to updating a webcomic, you can use the same template to make blog type posts, articles or just image galleries. Anything you want! You can delete the navigational links entirely, you can make your site's index.html into a simple list of text links OR fun little image links to your different content, whatever! Your website can be nothing but a big ugly deep fried JPEG of Goku with a recipe for potato salad on it, no other content ever, who cares! We did that kind of nonsense all the time in the 1990s and thought it was the pinnacle of comedy!! Maybe it still can be?!?! Or maybe you just want a place to put some artwork and thoughts of yours that doesn't come with the same baggage as big social media?

Make a webpage this way and it will look the same in any browser, any operating system for years and years to come, because it's the same kind of basic raw code most of the internet depends upon!

/end PT]
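A slightly fuller version of that redirect `index.html` could look like the sketch below. Only the refresh `meta` line comes from the post (written here in lowercase, which browsers treat the same as `HTTP-EQUIV`); the rest of the page and the fallback link for browsers that don't follow the refresh are assumptions, and `001.html` stands in for whatever your newest page is.

```html
<!DOCTYPE html>
<html>
<head>
  <meta charset="utf-8">
  <!-- Wait 0 seconds, then load 001.html; edit this target on every update -->
  <meta http-equiv="refresh" content="0; url=001.html">
  <title>Newest update</title>
</head>
<body>
  <!-- Fallback in case the refresh is blocked or ignored -->
  <p><a href="001.html">Go to the newest page</a></p>
</body>
</html>
```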

小い.> very useful guide~ thank you for making it!♡

게터.> neocities time〜 have fun everyone :]

ゆの.> web liberation W

additional useful things people added in the notes:

alternatives for making a link to the newest update, because the method provided by the guide above will break the back button

website for learning more html and css

how to make the text properly formatted and visible on mobile
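One common way to make pages like this readable on phones (not necessarily the method the linked note suggests) is a viewport `meta` tag plus a couple of CSS rules that stop wide content from overflowing the screen. This sketch assumes the template's `#main` content column; everything shown goes inside `<head>`.

```html
<head>
  <meta charset="utf-8">
  <!-- Use the phone's real screen width instead of a zoomed-out desktop layout -->
  <meta name="viewport" content="width=device-width, initial-scale=1">
  <title>YOUR WEBSITE'S TITLE HERE</title>
  <style>
    /* Never let the content column get wider than its container */
    #main { max-width: 100%; }
    /* Shrink big comic images to fit, keeping their proportions */
    img { max-width: 100%; height: auto; }
  </style>
</head>
```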


#chroma…#〖게타⎯⎯⎯ ʳᵉᵈᵃᶜᵗᵉᵈ : ⿻␘␇ ױ⸋⸋⸋𓐄𓐄𓐄⸋⸋⸋〗#id added#described#neocities#website#htmlcoding#html css#learn to code#indie comics#comics#website development#coding guide#website guide


Text

Jay Kuo at The Status Kuo:

In a letter to the Fox Network, Hunter Biden’s attorneys have put the company on notice that it’s about to get its pants sued off for defamation. Again. Biden’s attorney, Mark Geragos, best known for his successful representation of celebrities, issued the following statement:

[For the last five years, Fox News has relentlessly attacked Hunter Biden and made him a caricature in order to boost ratings and for its financial gain. The recent indictment of FBI informant Smirnov has exposed the conspiracy of disinformation that has been fueled by Fox, enabled by their paid agents and monetized by the Fox enterprise. We plan on holding them accountable.]