#informatica and Google Cloud


Big Data Analytics: Tools & Career Paths

In this digital era, data is being generated at an unimaginable speed. Social media interactions, online transactions, sensor readings, and scientific inquiries all contribute to an extremely high volume, velocity, and variety of information, collectively referred to as Big Data. Yet data at this scale is useless until it is analyzed. This is where Big Data Analytics comes in: it transforms huge volumes of unstructured and semi-structured data into actionable insights that drive decision-making, innovation, and growth.

Big Data Analytics was once widely considered a niche skill; today it is a must-have capability for working professionals across the tech and business landscapes, and it opens up numerous career opportunities.

What Exactly Is Big Data Analytics?

Big Data Analytics is the process of examining huge, varied data sets to uncover hidden patterns, customer preferences, market trends, and other useful information. The aim is to enable organizations to make better business decisions. It differs from regular data processing because it relies on specialized tools and techniques built to handle the three Vs of Big Data:

Volume: Massive amounts of data.

Velocity: The high speed at which data is generated and must be processed.

Variety: Data from diverse sources and in varying formats (structured, semi-structured, unstructured).

Key Tools in Big Data Analytics

Knowing how to work with the right tools is essential to mastering Big Data. Here are some of the most widely used:

Hadoop Ecosystem: At its core, Hadoop is an open-source framework for storing and processing large datasets across clusters of computers. Key components include:

HDFS (Hadoop Distributed File System): For storing data.

MapReduce: For processing data.

YARN: For resource-management purposes.

Hive, Pig, Sqoop: Higher-level tools for data warehousing (Hive), data-flow scripting (Pig), and data transfer between Hadoop and relational databases (Sqoop).
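To make the MapReduce idea concrete, here is a minimal single-machine sketch of its three phases in plain Python. It does not use Hadoop at all; the function names and the sample lines are made up for illustration, and a real cluster would distribute each phase across many machines.

```python
from collections import defaultdict


def map_phase(lines):
    """Map: emit a (word, 1) pair for every word in every input line."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def shuffle_phase(pairs):
    """Shuffle: group all emitted values under their key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce: combine the values of each key into a single count."""
    return {key: sum(values) for key, values in groups.items()}


# Hypothetical sample input standing in for files stored on HDFS.
lines = ["big data needs big tools", "data tools"]
word_counts = reduce_phase(shuffle_phase(map_phase(lines)))
print(word_counts)
```

The same map, shuffle, reduce shape is what makes the model easy to spread over a cluster: each phase only needs to see its own slice of the data.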

Apache Spark: A powerful, flexible open-source analytics engine for big data processing. It is often much faster than MapReduce, especially for iterative algorithms, because it can keep intermediate data in memory. This explains its popularity in real-time analytics, machine learning, and stream processing. Languages: Scala, Python (PySpark), Java, R.

NoSQL Databases: In contrast to traditional relational databases, NoSQL (Not only SQL) databases are designed to handle unstructured and semi-structured data at scale. Examples include:

MongoDB: Document-oriented (e.g., for JSON-like data).

Cassandra: Wide-column store (e.g., for high-volume writes).

Neo4j: Graph DB (e.g., for data heavy with relationships).

Data Warehousing & ETL Tools: Tools for extracting, transforming, and loading (ETL) data from various sources into a data warehouse for analysis. Examples: Talend, Informatica. Cloud-based solutions such as Amazon Redshift, Google BigQuery, and Azure Synapse Analytics are also widely used.

Data Visualization Tools: Essential for presenting complex Big Data insights in an understandable and actionable format. Tools like Tableau, Power BI, and Qlik Sense are widely used for creating dashboards and reports.

Programming Languages: Python and R are the dominant languages for data manipulation, statistical analysis, and integrating with Big Data tools. Python's extensive libraries (Pandas, NumPy, Scikit-learn) make it particularly versatile.

Promising Career Paths in Big Data Analytics

As the Big Data field in India evolves quickly, a range of well-compensated professional roles has emerged:

Big Data Engineer: Designs, builds, and maintains the large-scale data processing systems and infrastructure.

Big Data Analyst: Works with large datasets to find the trends, patterns, and insights that inform major decisions.

Data Scientist: Uses statistics, programming, and domain expertise to create predictive models and glean deep insights from data.

Machine Learning Engineer: Focuses on developing and deploying machine learning models on Big Data platforms.

Data Architect: Designs the entire data environment and strategy of an organization.

Launch Your Big Data Analytics Career

If you are drawn to data and what it can do, consider taking a specialized Big Data Analytics course. Many computer training institutes in Ahmedabad offer comprehensive courses covering these tools and concepts, usually as part of Data Science with Python or specialized training in AI and Machine Learning. Look for courses that offer hands-on projects and industry mentoring to help you compete for these in-demand jobs.

Once you are thoroughly trained in Big Data Analytics tools and concepts, you can turn information into innovation and position yourself for well-paid roles.

At TCCI, we don't just teach computers — we build careers. Join us and take the first step toward a brighter future.

Location: Bopal & Iskcon-Ambli in Ahmedabad, Gujarat

Call now on +91 9825618292

Visit Our Website: http://tccicomputercoaching.com/


🧠 Decoding the Salesforce–Informatica Deal: What It Really Means for Tech & Data

📅 Posted on May 27, 2025 ✍️ by ExploreRealNews

💥 So... What Just Happened?

If you’ve been anywhere near tech news this week, you’ve probably seen the headline: Salesforce is acquiring Informatica in a massive $8 billion deal.

And if you’re like most of us, you’re probably wondering: “Okay, but why should I care?” 👀

Let’s break it down. No jargon. No fluff. Just real talk about why this matters in the world of tech, business, and your digital life.

🤝 The Deal in a Nutshell

Who’s buying who? Salesforce 🛒 → Informatica (a leader in data management)

How much? About $8 billion (yes, with a “B”)

Why? To supercharge Salesforce’s AI and Data Cloud capabilities and help companies make better use of their data.

🚀 What’s the Strategy Here?

Salesforce isn’t just playing catch-up. It’s making a power move to stay ahead in the AI and cloud race.

Data is fuel for AI. Informatica helps organize, clean, and manage data from all over an organization. That’s gold for Salesforce's AI features like Einstein.

Think smarter automation. More data = better predictions, more intelligent workflows, and smoother customer experiences.

Cloud is king. With Informatica, Salesforce can offer deeper, more flexible cloud integrations to big enterprises.

🔮 What Does It Mean for You (and the World)?

Whether you're a developer, business owner, or just a tech lover—this deal is 🔥 because:

It brings cleaner, smarter AI tools into more businesses.

It simplifies how companies use data (goodbye messy silos!).

It shows how data + AI = the future of decision-making.

💬 Hot Takes

📌 Some say Salesforce is beefing up to compete with Microsoft & Google. 📌 Others think it’s prepping for more AI + data automation rollouts. 📌 Most agree: This deal makes Salesforce a data powerhouse.

💡 Final Thought

This isn’t just another tech merger. It’s a signal that data is everything in tomorrow’s world—and companies like Salesforce want to own the tools that shape it.

If you’re in the tech space, keep your eyes peeled. The next wave of innovation might just be riding on deals like this. 🌊

🔗 Read More & Stay In The Loop

👉 [ExploreRealNews] ✨ Let’s decode tech together.

#Salesforce#Informatica#TechNews#AI#BigData#CloudComputing#EnterpriseTech#TechDeals#TumblrTech#StartupWatch#MergersAndAcquisitions#Innovation


Informatica Expands IDMC on Google Cloud, Supercharging AI-Ready Data

http://securitytc.com/TK5lm2


How to Streamline Data Management with AI

Data is at the core of every successful business. Whether you’re a startup, a mid-sized company, or a global enterprise, managing data efficiently is crucial for decision-making, customer insights, and operational effectiveness. However, traditional data management methods can be time-consuming, prone to errors, and difficult to scale.

Fortunately, AI-powered solutions are changing how businesses store, process, analyze, and use data. With the right AI tools and strategies, organizations can streamline their data management processes, reduce inefficiencies, and unlock valuable insights with minimal manual effort.

Step 1: Assess Your Data Management Needs

Before diving into AI-powered solutions, it’s essential to evaluate your current data management processes. Ask yourself:

Where are the biggest inefficiencies in our data handling?

What types of data do we manage (structured, unstructured, real-time, historical)?

Are we facing issues with data silos, inconsistencies, or security concerns?

Once you identify your challenges, you can determine the right AI solutions that align with your organization’s needs. Performing a gap analysis can help pinpoint inefficiencies and guide your AI adoption strategy.

Step 2: Choose the Right AI Tools for Your Organization

AI-driven data management tools come in various forms, each serving different functions. Some popular categories include:

AI-Powered Data Cleaning & Integration: Tools like Talend, Informatica, and Trifacta can help clean, normalize, and integrate data from multiple sources.

AI-Based Data Storage & Processing: Platforms like Google BigQuery, Amazon Redshift, and Snowflake offer intelligent, scalable data storage solutions.

AI-Driven Analytics & Insights: Machine learning-powered analytics tools such as Tableau, Power BI, and DataRobot can uncover patterns and insights within your data.

Automated Data Governance & Security: AI tools like Collibra and IBM Cloud Pak for Data ensure compliance, access control, and secure data handling.

Choose AI tools that best fit your business needs and integrate well with your existing infrastructure. Conducting pilot tests before full implementation can help ensure the tool’s effectiveness.

Step 3: Implement AI for Data Collection & Cleaning

One of the most tedious aspects of data management is data collection and cleaning. AI can automate these processes by:

Identifying and removing duplicate or inaccurate records

Filling in missing data using predictive algorithms

Structuring unstructured data (such as text or images) for analysis

AI can learn from past errors, continuously improving data accuracy over time. By automating these tasks, AI significantly reduces human effort and errors, ensuring a more reliable dataset.
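As a rough illustration of the first two cleaning tasks, the sketch below removes duplicate records and fills missing values. It is plain Python, and it swaps in simple median imputation where a production tool would more likely use a trained predictive model; the field names and sample records are hypothetical.

```python
from statistics import median


def clean_records(records, key_field, numeric_field):
    """Drop duplicates by key, then fill missing numeric values with the median."""
    seen = set()
    unique = []
    for rec in records:
        key = rec[key_field]
        if key in seen:
            continue  # a record with this key was already kept
        seen.add(key)
        unique.append(dict(rec))  # copy so the input list is left untouched

    known = [r[numeric_field] for r in unique if r[numeric_field] is not None]
    fill_value = median(known) if known else None
    for r in unique:
        if r[numeric_field] is None:
            r[numeric_field] = fill_value
    return unique


# Hypothetical raw data: one duplicate (id 1) and one missing age (id 2).
raw = [
    {"id": 1, "age": 30},
    {"id": 2, "age": None},
    {"id": 1, "age": 30},
    {"id": 3, "age": 40},
]
cleaned = clean_records(raw, "id", "age")
print(cleaned)
```

Median imputation is only a baseline; the point is that both steps are mechanical enough to automate once the rules are explicit.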

Step 4: Utilize AI for Data Organization & Storage

With vast amounts of data flowing in, organizing and storing it efficiently is critical. AI-powered databases and cloud storage solutions automatically categorize, index, and optimize data storage for quick retrieval and analysis.

For example:

AI-enhanced cloud storage solutions can predict access patterns and optimize data retrieval speed.

Machine learning algorithms can automatically tag and classify data based on usage trends.

By using AI-driven storage solutions, businesses can reduce storage costs by prioritizing frequently accessed data while archiving less relevant information efficiently.

“Efficient data management is the backbone of modern business success, and AI is the key to unlocking its full potential. By reducing manual effort and eliminating inefficiencies, AI-driven solutions make it possible to turn raw data into actionable intelligence.”

— Raj Patel, CEO of DataFlow Innovations

Step 5: Leverage AI for Real-Time Data Processing & Analytics

Modern businesses rely on real-time data to make quick decisions. AI-driven analytics platforms help process large data streams instantly, providing actionable insights in real-time.

AI algorithms can detect anomalies in data streams, alerting you to potential fraud or operational issues.

Predictive analytics models can forecast trends based on historical data, helping businesses stay ahead of the curve.

Furthermore, AI-powered dashboards can generate automated reports, providing real-time insights without the need for manual data analysis.
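One simple way to flag anomalies in a stream is a rolling z-score: compare each new value with the mean and standard deviation of the values just before it. The sketch below assumes a small window and a threshold of 3 standard deviations; both are illustrative choices, and commercial platforms typically use more sophisticated models.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(stream, window=5, threshold=3.0):
    """Yield (index, value) for values far from the mean of the preceding window."""
    recent = deque(maxlen=window)
    for i, value in enumerate(stream):
        if len(recent) == window:
            mu = mean(recent)
            sigma = stdev(recent)
            # Skip the check when the window is constant (sigma == 0).
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                yield i, value
        recent.append(value)


# Hypothetical sensor readings with one obvious spike.
readings = [10, 11, 10, 12, 11, 10, 95, 11, 10]
anomalies = list(detect_anomalies(readings))
print(anomalies)
```

Note that the spike itself enters the window afterwards and temporarily inflates the standard deviation, which is one reason real systems often use more robust statistics.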

Step 6: Strengthen Data Security & Compliance with AI

Data breaches and compliance issues can have devastating consequences. AI helps businesses protect sensitive data through:

AI-Powered Threat Detection: Identifying unusual access patterns or unauthorized activities.

Automated Compliance Monitoring: Ensuring adherence to GDPR, HIPAA, or other regulatory standards.

Data Encryption and Masking: Using AI-driven encryption techniques to protect sensitive information.

AI continuously monitors for potential security threats and adapts its defences accordingly, reducing the risk of human oversight in data security.

Step 7: Train Your Team on AI-Driven Data Management

AI is only as effective as the people using it. Ensuring that your team understands how to interact with AI-driven tools is crucial for maximizing their potential. Consider:

Conducting workshops and training sessions on AI-powered data tools.

Providing access to online courses or certifications related to AI and data management.

Creating internal guidelines and best practices for working with AI-driven systems.

A well-trained team will help ensure that AI tools are used effectively and that your data management processes remain optimized and efficient. Encouraging a data-driven culture within the organization will further enhance AI adoption and effectiveness.

Step 8: Continuously Optimize and Improve

AI-driven data management is not a one-time setup but an ongoing process. Regularly assess the performance of your AI tools, refine models, and explore new advancements in AI technology. Automated machine learning (AutoML) solutions can continuously improve data handling processes with minimal manual intervention.

Additionally, setting up AI-powered feedback loops can help refine data processes over time, ensuring ongoing accuracy and efficiency.

AI Tools for Data Management

To help you get started, here are some of the top AI-powered tools available for data management:

Informatica – Offers AI-driven data integration, governance, and advanced capabilities for metadata management, helping organizations maintain clean and reliable data across systems.

Talend – Specializes in data cleaning, integration, and quality management, ensuring accurate data pipelines for analytics, machine learning, and reporting.

Google BigQuery – A fully-managed cloud-based analytics platform that uses AI to process massive datasets quickly, ideal for real-time analytics and storage.

Amazon Redshift – Provides AI-powered data warehousing with scalable architecture, enabling efficient storage and analysis of structured data for business insights.

Snowflake – Combines scalable cloud-based data storage, AI-driven query optimization, and a secure platform for cross-team collaboration.

Power BI – Offers AI-enhanced business intelligence and analytics with intuitive visualizations, predictive capabilities, and seamless integration with Microsoft products.

DataPeak by FactR – Offers a no-code, AI-powered platform that automates workflows and transforms raw data into actionable insights. With built-in AutoML and 600+ connectors, it enables real-time analytics without technical expertise.

Tableau – Uses machine learning to create dynamic data visualizations, providing actionable insights through intuitive dashboards and interactive storytelling.

DataRobot – Provides automated machine learning workflows for predictive analytics, enabling data scientists and business users to model future trends effortlessly.

Collibra – Features AI-driven data governance, data cataloging, and security tools, ensuring data compliance and protecting sensitive information.

IBM Cloud Pak for Data – An enterprise-grade platform combining AI-powered data management, analytics, and automation to streamline complex business processes.

Each of these tools offers unique benefits, so selecting the right one depends on your organization's needs, existing infrastructure, and scalability requirements.

AI is transforming the way businesses manage data, making processes more efficient, accurate, and scalable. By following this step-by-step guide, organizations can harness AI’s power to automate tedious tasks, extract valuable insights, and ensure data security and compliance. Whether you’re just starting or looking to refine your data management strategy, embracing AI can make data management smoother, more insightful, and less time-consuming, giving businesses the freedom to focus on growth and innovation.


#datapeak#factr#saas#technology#agentic ai#artificial intelligence#machine learning#ai#ai-driven business solutions#machine learning for workflow#ai solutions for data driven decision making#ai business tools#aiinnovation#dataanalytics#data driven decision making#datadrivendecisions#data analytics#digitaltools#digital technology#digital trends#cloudmigration#cloudcomputing#cybersecurity#smbsuccess#smbs


Informatica Training in Ameerpet | Best Informatica

How to Optimize Performance in Informatica (CDI)

Informatica Cloud Data Integration (CDI) is a powerful ETL and ELT tool used for cloud-based data integration and transformation. Optimizing performance in Informatica CDI is crucial for handling large datasets efficiently, reducing execution time, and ensuring seamless data processing. Below are the key strategies for optimizing performance in Informatica CDI.

1. Use Pushdown Optimization (PDO)

Pushdown Optimization (PDO) enhances performance by offloading transformation logic to the target or source database, reducing the amount of data movement. There are three types of pushdown optimization:

Source Pushdown: Processes data at the source level before extracting it.

Target Pushdown: Pushes the transformation logic to the target database.

Full Pushdown: Pushes all transformations to either the source or target system.

To enable PDO, configure it in the Mapping Task under the "Advanced Session Properties" section.

2. Use Bulk Load for High-Volume Data

When working with large datasets, using bulk load instead of row-by-row processing can significantly improve performance. Many cloud-based data warehouses, such as Snowflake, Amazon Redshift, and Google BigQuery, support bulk loading.

Enable Bulk API in target settings.

Use batch mode for processing instead of transactional mode.

3. Optimize Data Mapping and Transformations

Well-designed mappings contribute to better performance. Some best practices include:

Minimize the use of complex transformations like Joiner, Lookup, and Aggregator.

Filter data as early as possible in the mapping to reduce unnecessary data processing.

Use sorted input for aggregations to enhance Aggregator transformation performance.

Avoid unnecessary type conversions between string, integer, and date formats.
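The "filter early" and "sorted input" practices can be illustrated outside Informatica with a small plain-Python analogy. This is not CDI configuration; the rows and field names are hypothetical. `itertools.groupby` only merges adjacent rows with equal keys, which is the same reason an aggregation step benefits from pre-sorted input.

```python
from itertools import groupby
from operator import itemgetter

# Hypothetical source rows.
rows = [
    {"region": "east", "status": "active", "amount": 100},
    {"region": "west", "status": "closed", "amount": 50},
    {"region": "east", "status": "active", "amount": 25},
    {"region": "west", "status": "active", "amount": 70},
]

# Filter first so every later step handles fewer rows.
active = (r for r in rows if r["status"] == "active")

# Sort on the grouping key so equal keys sit next to each other.
ordered = sorted(active, key=itemgetter("region"))

# Aggregate in a single pass over the sorted rows.
totals = {
    region: sum(r["amount"] for r in group)
    for region, group in groupby(ordered, key=itemgetter("region"))
}
print(totals)
```

With sorted input, the aggregation never has to hold more than one group in memory at a time.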

4. Optimize Lookup Performance

Lookup transformations can slow down processing if not optimized. To improve performance:

Use cached lookups instead of uncached ones for frequently used data.

Minimize lookup data by using a pre-filter in the source query.

Index the lookup columns in the source database for faster retrieval.

Use Persistent Cache for static lookup data.
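The benefit of a cached lookup can be sketched in plain Python with `functools.lru_cache`. This is an analogy rather than Informatica's own cache mechanism: the dictionary stands in for a lookup table in a database, and the `db_hits` counter is a hypothetical way to show how many times the underlying source is actually queried.

```python
from functools import lru_cache

# Hypothetical reference data standing in for a database lookup table.
LOOKUP_TABLE = {"US": "United States", "IN": "India", "DE": "Germany"}
db_hits = 0


@lru_cache(maxsize=None)
def lookup_country(code):
    """Resolve a country code; repeated codes are served from the cache."""
    global db_hits
    db_hits += 1  # counts only real (uncached) lookups
    return LOOKUP_TABLE.get(code, "Unknown")


codes = ["US", "IN", "US", "US", "DE", "IN"]
names = [lookup_country(c) for c in codes]
print(names, db_hits)
```

Six rows are resolved with only three real lookups; on millions of rows with a small set of distinct keys, the saving is correspondingly larger.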

5. Enable Parallel Processing

Informatica CDI allows parallel execution of tasks to process data faster.

Configure Concurrent Execution in the Mapping Task Properties to allow multiple instances to run simultaneously.

Use Partitioning to divide large datasets into smaller chunks and process them in parallel.

Adjust thread pool settings to optimize resource allocation.
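The partitioning idea can be sketched generically: split the data into chunks, process each chunk in its own worker, then combine the partial results. The example below uses Python's standard thread pool, not Informatica's partitioning feature, and the summing function is a stand-in for a real transformation. In CPython, threads mainly help I/O-bound work; CPU-bound work would typically use processes instead.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(items, num_partitions):
    """Split items into num_partitions roughly equal slices."""
    return [items[i::num_partitions] for i in range(num_partitions)]


def process_partition(rows):
    """Stand-in transformation: sum the values in one partition."""
    return sum(rows)


data = list(range(1, 101))
parts = partition(data, 4)

# Each partition is handled by its own worker thread.
with ThreadPoolExecutor(max_workers=4) as pool:
    partial_sums = list(pool.map(process_partition, parts))

total = sum(partial_sums)
print(total)
```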

6. Optimize Session and Task Properties

In the session properties of a mapping task, make the following changes:

Enable high-throughput mode for better performance.

Adjust buffer size and cache settings based on available system memory.

Configure error handling to skip error records instead of stopping execution.

7. Use Incremental Data Loads Instead of Full Loads

Performing a full data load every time increases processing time. Instead:

Implement Change Data Capture (CDC) to load only changed records.

Use Last Modified Date filters to process only new or updated data.
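A last-modified filter usually works with a "watermark": the timestamp of the most recent row already loaded. A minimal sketch in plain Python, assuming each row carries a hypothetical `modified` field, might look like this:

```python
from datetime import datetime


def incremental_rows(rows, last_run):
    """Return rows modified after last_run, plus the new watermark."""
    changed = [r for r in rows if r["modified"] > last_run]
    # If nothing changed, keep the old watermark.
    new_watermark = max((r["modified"] for r in changed), default=last_run)
    return changed, new_watermark


# Hypothetical source table.
source = [
    {"id": 1, "modified": datetime(2025, 1, 1)},
    {"id": 2, "modified": datetime(2025, 1, 5)},
    {"id": 3, "modified": datetime(2025, 1, 9)},
]

changed, watermark = incremental_rows(source, datetime(2025, 1, 3))
print([r["id"] for r in changed], watermark)
```

Storing the returned watermark after each successful run means the next run picks up exactly where this one stopped.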

8. Reduce Network Latency

When working with cloud environments, network latency can impact performance. To reduce it:

Deploy Secure Agents close to the data sources and targets.

Use direct database connections instead of web services where possible.

Compress data before transfer to reduce bandwidth usage.
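To get a feel for how much compression can save before a transfer, the sketch below gzips a JSON payload using only the Python standard library. The records are made up and highly repetitive, so the ratio here is better than typical real-world data would give.

```python
import gzip
import json

# Hypothetical batch of records to send over the network.
records = [{"id": i, "status": "active"} for i in range(1000)]
payload = json.dumps(records).encode("utf-8")

compressed = gzip.compress(payload)
print(len(payload), "bytes raw ->", len(compressed), "bytes compressed")

# The receiving side reverses the steps to recover the original records.
restored = json.loads(gzip.decompress(compressed).decode("utf-8"))
```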

9. Monitor and Tune Performance Regularly

Use Informatica Cloud’s built-in monitoring tools to analyze performance:

Monitor Task Logs: Identify bottlenecks and optimize accordingly.

Use Performance Metrics: Review execution time and resource usage.

Schedule Jobs During Off-Peak Hours: To avoid high server loads.

Conclusion

Optimizing performance in Informatica Cloud Data Integration (CDI) requires a combination of efficient transformation design, pushdown optimization, bulk loading, and parallel processing. By following these best practices, organizations can significantly improve the speed and efficiency of their data integration workflows, ensuring faster and more reliable data processing in the cloud.

Trending Courses: Artificial Intelligence, Azure AI Engineer, Azure Data Engineering

Visualpath stands out as the best online software training institute in Hyderabad.

For More Information about the Informatica Cloud Online Training

Contact Call/WhatsApp: +91-7032290546

Visit: https://www.visualpath.in/informatica-cloud-training-in-hyderabad.html

#Informatica Training in Hyderabad#IICS Training in Hyderabad#IICS Online Training#Informatica Cloud Training#Informatica Cloud Online Training#Informatica IICS Training#Informatica IDMC Training#Informatica Training in Ameerpet#Informatica Online Training in Hyderabad#Informatica Training in Bangalore#Informatica Training in Chennai#Informatica Training in India#Informatica Cloud IDMC Training


Customer Intelligence Platform Market Report: Global Trends, Share, and Industry Scope 2032

The Customer Intelligence Platform market was valued at USD 2.5 billion in 2023 and is expected to reach USD 22.1 billion by 2032, growing at a CAGR of 27.4% over the 2024-2032 forecast period.
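The growth rate can be checked from the two endpoint values with the standard compound annual growth rate formula, (end / start)^(1 / years) - 1. The short sketch below assumes nine compounding years, from the 2023 base value to 2032.

```python
def cagr(start_value, end_value, periods):
    """Compound annual growth rate between two values over a number of periods."""
    return (end_value / start_value) ** (1 / periods) - 1


# USD 2.5 billion in 2023 growing to USD 22.1 billion in 2032.
rate = cagr(2.5, 22.1, 2032 - 2023)
print(f"{rate:.1%}")  # prints 27.4%
```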

The Customer Intelligence Platform (CIP) market is witnessing rapid growth as businesses focus on data-driven strategies to enhance customer experiences. With the increasing need for personalized interactions and customer-centric decision-making, organizations are leveraging AI-powered intelligence platforms to gain valuable insights. These platforms integrate multiple data sources, enabling brands to optimize engagement and drive revenue growth.

The Customer Intelligence Platform market continues to expand as companies invest in advanced analytics and machine learning tools to understand consumer behavior. Businesses across industries, from retail to finance, are adopting CIP solutions to unify customer data, predict trends, and improve marketing effectiveness. This shift toward data-driven intelligence is transforming the way organizations interact with their customers.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/3487

Key Market Players:

Acxiom LLC (Acxiom Audience Insight, Acxiom Personalization)

Adobe (Adobe Experience Platform, Adobe Analytics)

Google LLC (Google Analytics 360, Google Cloud AI)

IBM Corporation (IBM Watson Marketing, IBM Customer Experience Analytics)

iManage (iManage Work, iManage RAVN AI)

Informatica (Informatica Intelligent Cloud Services, Informatica Data Governance)

Microsoft Corporation (Microsoft Dynamics 365 Customer Insights, Power BI)

Oracle Corporation (Oracle CX Cloud Suite, Oracle Data Cloud)

Proxima (Proxima Analytics Platform, Proxima Intelligence)

Salesforce.com, Inc. (Salesforce Marketing Cloud, Salesforce Customer 360)

Market Trends Driving Growth

1. AI and Machine Learning in Customer Insights

AI-driven analytics are enhancing customer segmentation, sentiment analysis, and predictive modeling, helping businesses tailor their strategies.

2. Rise of Omnichannel Engagement

Organizations are integrating CIPs with CRM, social media, and e-commerce platforms to provide seamless, personalized experiences across multiple channels.

3. Growing Demand for Real-Time Data Processing

With the increasing volume of customer interactions, businesses are adopting real-time analytics to gain immediate insights and make informed decisions.

4. Enhanced Data Privacy and Compliance Features

As data regulations tighten, CIPs are incorporating advanced security protocols to ensure compliance with GDPR, CCPA, and other global standards.

5. Expansion of Cloud-Based Intelligence Platforms

Cloud-based CIPs offer scalability, flexibility, and cost-effectiveness, making them the preferred choice for enterprises seeking robust customer intelligence solutions.

Enquiry of This Report: https://www.snsinsider.com/enquiry/3487

Market Segmentation:

By Component

Platform

Services

By Data Channel

Web

Social Media

Smartphone

Email

Store

Call Centre

Others

By Deployment

On-premise

Cloud

By Application

Customer Data Collection and Management

Customer Segmentation and Targeting

Customer Experience Management

Customer Behaviour Analytics

Omnichannel Marketing

Personalized Recommendation

Others

By Enterprise Size

SMEs

Large Enterprises

By End Use

Banking, Financial Services, and Insurance (BFSI)

Retail and e-commerce

Telecommunications and IT

Manufacturing

Transportation and Logistics

Government and Defense

Healthcare and Life Sciences

Media and Entertainment

Travel and Hospitality

Others

Market Analysis and Current Landscape

Increasing adoption of AI-driven analytics to enhance customer engagement.

Integration of first-party and third-party data sources for a 360-degree customer view.

Growing demand for automation and predictive insights in customer interactions.

Emphasis on privacy-focused solutions to address regulatory challenges.

Despite its strong growth potential, challenges such as data integration complexities and high implementation costs remain. However, ongoing innovations in AI and cloud technology are expected to address these challenges, making CIPs more accessible and efficient.

Future Prospects: What Lies Ahead?

1. Advanced AI-Driven Personalization

AI and deep learning will enable hyper-personalized marketing, predictive analytics, and automated customer journey mapping.

2. Expansion of Voice and Conversational AI

Voice-enabled interactions and AI-powered chatbots will enhance customer engagement and service experiences.

3. Increased Focus on Ethical AI and Data Transparency

Organizations will prioritize ethical AI practices and transparent data usage to build consumer trust and regulatory compliance.

4. Integration with IoT and Smart Devices

CIPs will leverage IoT data to provide deeper insights into customer behavior and preferences.

5. Evolution Toward Unified Experience Platforms

The convergence of CIPs with Customer Data Platforms (CDPs) and Digital Experience Platforms (DXPs) will create holistic, data-driven marketing ecosystems.

Access Complete Report: https://www.snsinsider.com/reports/customer-intelligence-platform-market-3487

Conclusion

The Customer Intelligence Platform market is evolving rapidly, driven by advancements in AI, big data, and customer engagement technologies. As businesses strive to enhance customer experiences and optimize marketing strategies, the demand for intelligent, data-driven platforms will continue to rise. Companies that invest in CIP solutions will gain a competitive edge, unlocking new opportunities for growth and customer loyalty in the digital era.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

#Customer Intelligence Platform market#Customer Intelligence Platform market Analysis#Customer Intelligence Platform market Scope#Customer Intelligence Platform market Size#Customer Intelligence Platform market Share#Customer Intelligence Platform market Trends


Informatica and Google Cloud Extend Partnership, Introduce Fresh Solutions

Informatica, a leading provider of cloud data management solutions, has unveiled its Master Data Management (MDM) Extension for Google Cloud BigQuery, aimed at harnessing MDM data for analytics and generative artificial intelligence applications. This extension streamlines the onboarding process for customer master data, facilitating the development and deployment of customer data platforms and generative AI applications on Google Cloud.

For more details please visit our website : https://www.fortuneviews.com/informatica-and-google-cloud-extend-partnership-introduce-fresh-solutions/


Infometry's IDMC Google Drive Connector: Seamless Data Integration for Informatica Cloud

Enhance your data integration with Infometry's IDMC Google Drive Connector for Informatica Cloud. Achieve seamless, certified connectivity and simplify ETL processes.

Check out the complete product details here at https://www.infometry.net/product/google-drive-connector/


Overcoming the Challenges of Big Data: A Deep Dive into Key Big Data Challenges and Solutions

Introduction

Big data has become the backbone of decision-making for businesses, governments, and organizations worldwide. With the exponential growth of data, organizations can harness valuable insights to enhance operations, improve customer experiences, and gain a competitive edge. However, big data challenges present significant hurdles, ranging from data storage and processing complexities to security and compliance concerns. In this article, we explore the key challenges of big data and practical solutions for overcoming them.

Key Challenges of Big Data and How to Overcome Them

1. Data Volume: Managing Large-Scale Data Storage

The Challenge: Organizations generate vast amounts of data daily, making storage, management, and retrieval a challenge. Traditional storage systems often fail to handle this scale efficiently.

The Solution:

Implement cloud-based storage solutions (e.g., AWS, Google Cloud, Microsoft Azure) for scalability.

Use distributed file systems like Hadoop Distributed File System (HDFS) to manage large datasets.

Optimize storage using data compression techniques and tiered storage models to prioritize frequently accessed data.


2. Data Variety: Integrating Diverse Data Sources

The Challenge: Data comes in various formats—structured (databases), semi-structured (XML, JSON), and unstructured (videos, social media, emails). Integrating these formats poses a challenge for seamless analytics.

The Solution:

Adopt schema-on-read approaches to process diverse data without requiring predefined schemas.

Leverage ETL (Extract, Transform, Load) tools like Apache NiFi and Talend for seamless data integration.

Use NoSQL databases (MongoDB, Cassandra) to manage unstructured data effectively.
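Schema-on-read means the raw data is stored as-is and a structure is applied only when it is read. A minimal sketch in plain Python, with hypothetical event fields and defaults, could look like this:

```python
import json

# Raw events stored without a fixed schema; fields vary from record to record.
raw_events = [
    '{"user": "a1", "action": "click", "ts": 1700000000}',
    '{"user": "b2", "action": "purchase", "amount": "19.99"}',
    '{"user": "c3"}',
]


def read_with_schema(line):
    """Apply the schema at read time: pick fields, set defaults, coerce types."""
    record = json.loads(line)
    return {
        "user": str(record.get("user", "")),
        "action": record.get("action", "unknown"),
        "amount": float(record.get("amount", 0.0)),
    }


events = [read_with_schema(line) for line in raw_events]
print(events)
```

Because the schema lives in the reader, a new field in the source does not break ingestion; it is simply ignored until a reader asks for it.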

3. Data Velocity: Handling Real-Time Data Streams

The Challenge: Organizations need to process and analyze data in real time to respond to customer behavior, detect fraud, or optimize supply chains. Traditional batch processing can’t keep up with high-speed data influx.

The Solution:

Utilize streaming analytics platforms like Apache Kafka, Apache Flink, and Spark Streaming.

Implement event-driven architectures to process data as it arrives.

Optimize data pipelines with in-memory computing for faster processing speeds.
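As a rough sketch of processing events as they arrive while keeping state in memory, the example below counts events inside a sliding time window and flags bursts. It uses only the Python standard library and is not the API of Kafka, Flink, or Spark; the class name, window size, and threshold are assumptions, and events are assumed to arrive in timestamp order.

```python
from collections import deque


class SlidingWindowCounter:
    """Count events seen in the last `window_seconds`, held in memory."""

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        self._timestamps = deque()

    def on_event(self, timestamp):
        """Handle one event as it arrives and return the current window count."""
        self._timestamps.append(timestamp)
        # Evict events that have fallen out of the window.
        cutoff = timestamp - self.window_seconds
        while self._timestamps and self._timestamps[0] <= cutoff:
            self._timestamps.popleft()
        return len(self._timestamps)


def detect_bursts(event_times, window_seconds=10.0, threshold=3):
    """Yield timestamps at which the in-window count reaches the threshold."""
    counter = SlidingWindowCounter(window_seconds)
    for ts in event_times:
        if counter.on_event(ts) >= threshold:
            yield ts
```

A fraud check, for instance, might treat several card transactions within a few seconds as a burst worth reviewing.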


4. Data Quality and Accuracy

The Challenge: Poor data quality—caused by duplication, incomplete records, and inaccuracies—leads to misleading insights and flawed decision-making.

The Solution:

Deploy automated data cleansing tools (e.g., Informatica Data Quality, Talend).

Establish data governance frameworks to enforce standardization.

Implement machine learning algorithms for anomaly detection and automated data validation.
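The checks above can be approximated with a few standard-library helpers. This is a simplified sketch: the z-score test is a basic statistical stand-in for the machine learning methods mentioned, and the function names, key fields, and threshold are assumptions.

```python
import statistics


def deduplicate(records, key_fields):
    """Keep the first record seen for each combination of key fields."""
    seen = set()
    unique = []
    for record in records:
        key = tuple(record.get(f) for f in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


def find_incomplete(records, required_fields):
    """Return records that lack a required field or hold an empty value."""
    return [
        r for r in records
        if any(r.get(f) in (None, "") for f in required_fields)
    ]


def zscore_outliers(values, threshold=3.0):
    """Return values more than `threshold` standard deviations from the mean."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return []
    return [v for v in values if abs(v - mean) / stdev > threshold]
```

In practice these checks would run inside a cleansing tool or pipeline step, with flagged records routed for review instead of being silently dropped.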

5. Data Security and Privacy Concerns

The Challenge: With increasing cybersecurity threats and stringent data privacy regulations (GDPR, CCPA), businesses must safeguard sensitive information while maintaining accessibility.

The Solution:

Implement end-to-end encryption for data at rest and in transit.

Use role-based access control (RBAC) to restrict unauthorized data access.

Deploy data anonymization and masking techniques to protect personal data.
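The sketch below combines two of these measures: a role-based access check and masking or pseudonymization of a personal field. The roles, permission names, and helper functions are hypothetical, and real deployments would rely on the access controls and key management of their platform.

```python
import hashlib
import hmac

# Hypothetical role-to-permission mapping for the RBAC check.
ROLE_PERMISSIONS = {
    "analyst": {"read_masked"},
    "admin": {"read_masked", "read_raw"},
}


def is_allowed(role, permission):
    """Return True if the role holds the given permission."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def mask_email(email):
    """Keep the first character and the domain, mask the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}{'*' * max(len(local) - 1, 0)}@{domain}"


def pseudonymize(value, secret_key):
    """Replace a value with a keyed hash so records stay joinable but unreadable."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def read_email(role, email):
    """Return the raw email only to roles that hold 'read_raw'."""
    if is_allowed(role, "read_raw"):
        return email
    if is_allowed(role, "read_masked"):
        return mask_email(email)
    raise PermissionError(f"role {role!r} may not read this field")
```

Using a keyed hash instead of a plain hash makes it harder to reverse pseudonyms by hashing guessed values, provided the key is kept secret.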


6. Data Governance and Compliance

The Challenge: Organizations struggle to comply with evolving regulations while ensuring data integrity, traceability, and accountability.

The Solution:

Establish a centralized data governance framework to define policies and responsibilities.

Automate compliance checks using AI-driven regulatory monitoring tools.

Maintain detailed audit logs to track data usage and modifications.

7. Scalability and Performance Bottlenecks

The Challenge: As data volumes grow, traditional IT infrastructures may fail to scale efficiently, leading to slow query performance and system failures.

The Solution:

Implement scalable architectures using containerized solutions like Kubernetes and Docker.

Optimize query performance with distributed computing frameworks like Apache Spark.

Use load balancing strategies to distribute workloads effectively.
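One simple load balancing strategy is to send each new task to the worker that currently has the least work. The sketch below shows that greedy rule with a heap; the function name and the idea of a numeric task cost are assumptions for illustration, and real load balancers also account for health checks and latency.

```python
import heapq


def assign_least_loaded(task_costs, worker_names):
    """Greedily assign each task to the worker with the smallest current load."""
    # Heap entries are (current_load, worker_name); ties break on the name.
    heap = [(0, name) for name in sorted(worker_names)]
    heapq.heapify(heap)
    assignment = {name: [] for name in worker_names}
    for task_id, cost in enumerate(task_costs):
        load, name = heapq.heappop(heap)
        assignment[name].append(task_id)
        heapq.heappush(heap, (load + cost, name))
    return assignment
```

For example, `assign_least_loaded([5, 3, 2, 4], ["a", "b"])` gives tasks 0 and 3 to worker `a` and tasks 1 and 2 to worker `b`.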


8. Deriving Meaningful Insights from Big Data

The Challenge: Extracting actionable insights from massive datasets can be overwhelming without proper analytical tools.

The Solution:

Leverage AI and machine learning algorithms to uncover patterns and trends.

Implement data visualization tools like Tableau and Power BI for intuitive analytics.

Use predictive analytics to forecast trends and drive strategic decisions.
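As a very small example of predictive analytics, the sketch below fits a straight-line trend to past values with ordinary least squares and extends it forward. A linear trend is an assumption chosen for simplicity; real forecasting would normally account for seasonality and uncertainty, and the function names are illustrative.

```python
def fit_linear_trend(values):
    """Ordinary least squares fit of values against their index 0..n-1."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def forecast(values, steps_ahead):
    """Extend the fitted trend line `steps_ahead` periods past the data."""
    slope, intercept = fit_linear_trend(values)
    n = len(values)
    return [intercept + slope * (n + k) for k in range(steps_ahead)]
```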

Conclusion

While big data challenges can seem daunting, businesses that implement the right strategies can transform these obstacles into opportunities. By leveraging advanced storage solutions, real-time processing, AI-driven insights, and robust security measures, organizations can unlock the full potential of big data. The key to success lies in proactive planning, adopting scalable technologies, and fostering a data-driven culture that embraces continuous improvement.

By addressing these challenges head-on, organizations can harness big data’s power to drive innovation, optimize operations, and gain a competitive edge in the digital era.


Enterprise Data Warehouse (EDW) Market Report 2025-2033: Trends, Opportunities, and Forecast

Enterprise Data Warehouse (EDW) Market Size

The global enterprise data warehouse (EDW) market size was valued at USD 3.43 billion in 2024 and is estimated to reach USD 22.36 billion by 2033, growing at a CAGR of 19.29% during the forecast period (2025–2033).

Enterprise Data Warehouse (EDW) Market Overview:

The Enterprise Data Warehouse (EDW) Market report provides projections and trend analysis for the years 2024–2033 and offers comprehensive insights into a market that spans several industries. By combining extensive quantitative data with professional judgment, the study explores important topics such as product innovation, adoption rates, pricing strategies, and regional market penetration. Macroeconomic variables like GDP growth and socioeconomic indices are also taken into account to put market swings in perspective.

An Enterprise Data Warehouse is a centralized repository designed to store, manage, and analyze vast amounts of structured and unstructured data from various sources across an organization. It serves as a critical component for business intelligence (BI), enabling organizations to consolidate data from different departments or systems into a single, cohesive view. This allows for comprehensive data analysis, reporting, and decision-making.

The report also covers the main market participants, the industries that use the products or services, and shifting consumer preferences, and it examines the competitive environment, regulatory effects, and technical advancements that affect the market. It is intended to give stakeholders across a variety of political, cultural, and industry contexts useful commercial information.

Get Sample Research Report: https://marketstrides.com/request-sample/enterprise-data-warehouse-edw-market

Enterprise Data Warehouse (EDW) Market Growth And Trends

Several developments are driving a significant shift in the Enterprise Data Warehouse (EDW) market and altering its future course. Following these changes is essential because they have the potential to reshape operations and plans.

Digital Transformation: Data-driven solutions enhance customer contact and streamline processes as digital technologies develop.

Customer Preferences: Businesses are offering customized products as a result of the growing emphasis on convenience and personalization.

Regulatory Changes: Companies must adjust quickly to stay competitive as compliance standards and rules become more stringent.

Who Are the Key Players in the Enterprise Data Warehouse (EDW) Market, and How Do They Influence the Market?

Amazon Web Services (AWS)

Microsoft Azure

Google Cloud

Snowflake

Oracle

IBM

SAP

Teradata

Cloudera

Hewlett Packard Enterprise (HPE)

Alibaba Cloud

Dell Technologies

Hitachi Vantara

Informatica

Huawei

With an emphasis on the top three to five companies, this section offers a SWOT analysis of the major players in the Enterprise Data Warehouse (EDW) market. It highlights their strengths, weaknesses, opportunities, and threats while examining their main strategies, present priorities, competitive obstacles, and prospective market expansion areas. The company list can also be customized to the client's preferences. In the section on the competitive climate, we evaluate the top five companies and examine recent events including partnerships, mergers, acquisitions, and product launches. Using the Ace matrix criteria, the report also analyzes their market share, growth potential, contributions to total market growth, geographic presence, and market relevance.

Browse Details of Enterprise Data Warehouse (EDW) Market with TOC: https://marketstrides.com/report/enterprise-data-warehouse-edw-market

Enterprise Data Warehouse (EDW) Market: Segmentation

By Deployment

Web Based

Server

Hybrid

By Product Type

Information Processing

Data Mining

Analytical Processing

Others

By Data Type

Billings

Documents

Records

Financials

Others

What Makes Our Research Methodology Reliable and Effective?

Data Accuracy & Authenticity – We use verified sources and advanced data validation techniques to ensure accurate and trustworthy insights.

Combination of Primary & Secondary Research – We gather first-hand data through surveys, interviews, and observations while also leveraging existing market reports for a holistic approach.

Industry-Specific Expertise – Our team consists of professionals with deep domain knowledge, ensuring relevant and actionable research outcomes.

Advanced Analytical Tools – We utilize AI-driven analytics, statistical models, and business intelligence tools to derive meaningful insights.

Comprehensive Market Coverage – We study key market players, consumer behavior, trends, and competitive landscapes to provide a 360-degree analysis.

Custom-Tailored Approach – Our research is customized to meet client-specific needs, ensuring relevant and practical recommendations.

Continuous Monitoring & Updates – We track market changes regularly to keep research findings up to date and aligned with the latest trends.

Transparent & Ethical Practices – We adhere to ethical research standards, ensuring unbiased data collection and reporting.

Which Regions Have the Highest Demand for Enterprise Data Warehouse (EDW) Market?

The Enterprise Data Warehouse (EDW) Market Research Report provides a detailed examination of the Enterprise Data Warehouse (EDW) Market across various regions, highlighting the characteristics and opportunities unique to each geographic area.

North America

Europe

Asia-Pacific

Latin America

The Middle East and Africa

Buy Now: https://marketstrides.com/buyNow/enterprise-data-warehouse-edw-market

Frequently Asked Questions (FAQs)

What is the expected growth rate of the Enterprise Data Warehouse (EDW) Market during the forecast period?

What factors are driving the growth of the Enterprise Data Warehouse (EDW) Market?

What are some challenges faced by the Enterprise Data Warehouse (EDW) Market?

How is the global Enterprise Data Warehouse (EDW) Market segmented?

What regions have the largest market share in the global Enterprise Data Warehouse (EDW) Market?

About Us:

Market Strides is an international publisher and compiler of market, equity, economic, and database directories. Almost every industrial sector, as well as every industry category and subclass, is included in our vast collection. Potential futures, growth factors, market sizing, and competition analysis are all included in our market research reports. The company helps customers with due diligence, product expansion, plant setup, acquisition intelligence, and other goals by using data analytics and research.

Contact Us: [email protected]

#Enterprise Data Warehouse (EDW) Market Size #Enterprise Data Warehouse (EDW) Market Share #Enterprise Data Warehouse (EDW) Market Growth #Enterprise Data Warehouse (EDW) Market Trends #Enterprise Data Warehouse (EDW) Market Players


Top Tools and Techniques for Effective Data Management Planning

In today’s digital age, data is at the heart of decision-making for businesses. However, managing large volumes of data effectively requires proper data management planning and the right tools. Whether you're a business owner or a Google Analytics consultant, having a solid strategy ensures accurate, actionable insights. Here are the top tools and techniques to streamline data management and boost efficiency.

1. Data Integration Tools

Platforms like Talend and Informatica simplify the integration of data from multiple sources. These tools ensure your data management planning is seamless, helping you consolidate and organize data efficiently.

2. Cloud-Based Storage Solutions

Solutions such as Google Cloud and AWS offer scalable, secure storage for your data. These platforms allow for easy access, so your team can collaborate and analyze data in real time.

3. Advanced Analytics Platforms

Google Analytics is a vital tool for understanding website performance and user behavior. A Google Analytics consultant can leverage its powerful features to identify trends, track performance metrics, and enhance your marketing strategies.

4. Data Quality Assurance Techniques

Data quality is critical for effective decision-making. Regular audits, validation tools, and cleansing processes ensure your data is accurate, reliable, and actionable.

5. Automated Reporting Tools

Power BI and Tableau are excellent for creating automated, interactive dashboards. These tools allow businesses to visualize data trends and track performance metrics, making it easier to align decisions with goals.

Conclusion

Effective data management planning requires the right mix of tools and techniques. By integrating platforms like Google Analytics with other advanced tools, businesses can unlock the full potential of their data. Partnering with Kaliper.io ensures expert guidance for accurate insights and better results. Start planning your data strategy today!


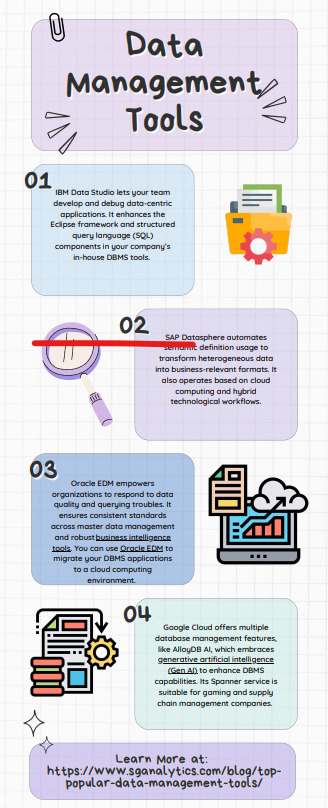

Master Data Management Tools: 2025 Outlook

Top 10 Best Data Management Tools

1. IBM Data Studio

2. SAP Datasphere

3. Oracle Enterprise Data Management (EDM)

4. Google Cloud

5. Microsoft Azure

6. Informatica PowerCenter

7. Amazon Web Services (AWS)

8. Teradata

9. Collibra

Read More: https://www.sganalytics.com/blog/top-popular-data-management-tools/


Datanets for AI Development: A Guide to Selecting the Right Data Architecture

Discover the key considerations for selecting the right data architecture for AI development in our guide to Datanets.

In the world of AI development, data is the cornerstone. From training machine learning models to powering predictive analytics, high-quality and well-structured data is essential for building intelligent AI systems. However, as the volume and variety of data continue to grow, businesses face the challenge of selecting the right data architecture, one that not only supports efficient data collection, processing, and storage, but also aligns with AI development goals.

Datanets, the interconnected networks of data sources and storage systems, play a crucial role in modern AI projects. These data architectures streamline data access, integration, and analysis, making it easier to extract valuable insights and build scalable AI models.

This guide will walk you through datanets for AI development and help you make informed decisions when selecting the ideal data architecture for your AI-driven projects.

What Are Datanets in AI Development?

Datanets refer to interconnected data sources, data storage systems, data pipelines, and data integration tools that work together to collect, process, store, and analyze large volumes of data efficiently. These data networks facilitate data flow across multiple platforms—whether cloud-based environments or on-premises systems—making it possible to access diverse datasets in real-time for AI model training and predictive analysis.

In AI development, datanets help in centralizing and streamlining data processes, which is vital for developing machine learning models, optimizing algorithms, and extracting actionable insights.

Key Components of a DataNet for AI

A datanet consists of several key components that work together to create a robust data architecture for AI development. These components include:

Data Sources: Structured (databases, spreadsheets), unstructured (images, videos, audio), and semi-structured (JSON, XML)

Data Storage: Cloud storage (AWS S3, Azure Blob Storage), distributed storage and warehouse systems (HDFS, BigQuery)

Data Processing: Data pipelines (Apache Kafka, AWS Data Pipeline), data streaming (Apache Flink, Google Dataflow)

Data Integration Tools: ETL (Extract, Transform, Load) tools (Talend, Informatica), data integration platforms (Fivetran, Apache NiFi)

Data Analytics and Visualization: Data analysis tools (Tableau, Power BI), AI frameworks (TensorFlow, PyTorch)

Benefits of Using Datanets in AI Development

Datanets offer several benefits that are critical for successful AI development. These advantages help businesses streamline data workflows, increase data accessibility, and improve model performance:

Efficient Data Flow: Datanets enable seamless data movement across multiple sources and systems, ensuring smooth data integration.

Scalability: Datanets are designed to scale with the growing data needs of AI projects, handling large volumes of data efficiently.

Real-Time Data Access: Datanets provide real-time data access for machine learning models, allowing instantaneous data analysis and decision-making.

Enhanced Data Quality: Datanets include data cleaning and transformation processes, which help improve data accuracy and model training quality.

Cost Efficiency: Datanets optimize data storage and processing, reducing the need for excessive human intervention and expensive infrastructure.

Collaboration: Datanets enable collaboration between teams by sharing datasets across different departments or geographical locations.

Factors to Consider When Selecting the Right Data Architecture

When selecting the right data architecture for AI development, several key factors must be taken into account to ensure the datanet is optimized for AI. Here are the most important considerations:

Data Volume and Variety: AI models thrive on large and diverse datasets. The data architecture must handle big data, multi-source integration, and real-time data updates.

Data Integration and Accessibility: The data architecture should facilitate easy data access across multiple systems and applications—whether cloud-based, on-premises, or hybrid.

Scalability and Performance: An ideal data architecture should scale with growing data demands while ensuring high performance in processing and storage.

Security and Compliance: Data security and regulatory compliance (GDPR, CCPA, HIPAA) are critical factors in selecting a data architecture for AI-driven insights.

Data Quality and Cleaning: Data quality is essential for accurate model training. A good data architecture should incorporate data cleaning and transformation tools.

Best Practices for Designing a DataNet for AI Development

Designing an efficient DataNet for AI development involves best practices that ensure data flow optimization and model accuracy. Here are some key strategies:

Use a Centralized Data Repository: Create a central hub where all data is stored and accessible.

Implement Data Pipelines: Build data pipelines to automate data ingestion, transformation, and processing.

Leverage Cloud Infrastructure: Utilize cloud-based storage and computing for scalability and cost efficiency.

Ensure Data Quality Control: Incorporate data cleaning tools and validation processes to improve data accuracy.

Optimize for Real-Time Access: Design your data architecture for real-time data access and analysis.

Monitor Data Usage: Regularly monitor data access, integrity, and usage to ensure compliance and performance.
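The pipeline and quality-control practices above can be sketched as a chain of small Python generator steps. This is an illustrative outline under stated assumptions: the CSV columns (`user_id`, `country`, `spend`), the validation rules, and the function names are all hypothetical.

```python
import csv
import io


def ingest(csv_text):
    """Ingestion step: read rows from CSV text as dictionaries."""
    yield from csv.DictReader(io.StringIO(csv_text))


def transform(rows):
    """Transformation step: normalize types and casing."""
    for row in rows:
        yield {
            "user_id": row["user_id"].strip(),
            "country": row["country"].strip().upper(),
            "spend": float(row["spend"]) if row["spend"].strip() else None,
        }


def validate(rows, rejected):
    """Quality-control step: pass valid rows on and collect the rest."""
    for row in rows:
        if row["user_id"] and row["spend"] is not None and row["spend"] >= 0:
            yield row
        else:
            rejected.append(row)


def run_pipeline(csv_text):
    """Run all steps and return (loaded_rows, rejected_rows)."""
    rejected = []
    loaded = list(validate(transform(ingest(csv_text)), rejected))
    return loaded, rejected
```

Keeping rejected rows instead of discarding them makes it possible to monitor data quality over time, which ties in with the monitoring practice listed above.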

The Future of Data Architecture in AI Development

As AI technology advances, data architecture will continue to evolve. Future trends will focus on more decentralized data ecosystems, enhanced data interoperability, and increased use of AI-driven data insights. The integration of blockchain with AI for data security and trust will also gain prominence.

Conclusion

Selecting the right data architecture—using datanets—is crucial for successful AI development. It ensures efficient data integration, scalability, security, and accuracy in model training. By following best practices, addressing common challenges, and considering key factors, businesses can create a robust data architecture that supports their AI projects and drives business success.

As AI technologies evolve, datanets will remain a key component in scalable data management and intelligent decision-making. Whether it’s collecting large datasets, integrating data sources, or optimizing workflows, a well-designed DataNet is the foundation for leveraging AI to its fullest potential.


Informatica's Cloud Data Governance and Catalog Solution is Now Available on Google Cloud

http://securitytc.com/THGZkB


The Best Platforms for Real-Time Data Integration in a Multi-Cloud Environment

There's no denying that the modern enterprise landscape is increasingly complex, with organizations relying on multiple cloud platforms to meet their diverse business needs. However, this multi-cloud approach can also create significant data integration challenges, as companies struggle to synchronize and analyze data across disparate systems. To overcome these hurdles, businesses need robust real-time data integration platforms that can seamlessly connect and process data from various cloud environments.

One of the leading platforms for real-time data integration in a multi-cloud environment is Informatica PowerCenter. This comprehensive platform offers advanced data integration capabilities, including real-time data processing, data quality, and data governance. With Informatica PowerCenter, organizations can easily integrate data from multiple cloud sources, including Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). The platform's intuitive interface and drag-and-drop functionality make it easy to design, deploy, and manage complex data integration workflows.

Another popular platform for real-time data integration is Talend. This open-source platform provides a unified environment for data integration, data quality, and big data integration. Talend's real-time data integration capabilities enable organizations to process large volumes of data in real-time, making it ideal for applications such as IoT sensor data processing and real-time analytics. The platform's cloud-agnostic architecture allows it to seamlessly integrate with multiple cloud platforms, including AWS, Azure, and GCP.

For organizations that require a more lightweight and flexible approach to real-time data integration, Apache Kafka is an excellent choice. This distributed streaming platform enables organizations to build real-time data pipelines that can handle high volumes of data from multiple cloud sources. Apache Kafka's event-driven architecture and scalable design make it ideal for applications such as real-time analytics, IoT data processing, and log aggregation.
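The core idea behind a streaming log of this kind, where producers append events to a topic and each consumer group reads at its own pace, can be illustrated with a toy in-memory model. This is a conceptual sketch only and not Kafka's client API; the class and method names are invented for the example.

```python
from collections import defaultdict


class InMemoryLog:
    """Toy append-only topic log with per-consumer-group offsets."""

    def __init__(self):
        self._topics = defaultdict(list)
        self._offsets = defaultdict(int)  # (group, topic) -> next offset to read

    def publish(self, topic, event):
        """Append an event and return its offset within the topic."""
        self._topics[topic].append(event)
        return len(self._topics[topic]) - 1

    def poll(self, group, topic, max_events=10):
        """Return unread events for this group and advance its offset."""
        start = self._offsets[(group, topic)]
        events = self._topics[topic][start:start + max_events]
        self._offsets[(group, topic)] = start + len(events)
        return events
```

Because each group tracks its own offset, an analytics consumer and a log-aggregation consumer can read the same events independently without interfering with each other.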

MuleSoft is another leading platform for real-time data integration in a multi-cloud environment. This integration platform as a service (iPaaS) provides a unified environment for API management, data integration, and application integration. MuleSoft's Anypoint Platform enables organizations to design, build, and manage APIs and integrations that can connect to multiple cloud platforms, including AWS, Azure, and GCP. The platform's real-time data integration capabilities enable organizations to process large volumes of data in real time, making it ideal for applications such as real-time analytics and IoT data processing.

In conclusion, real-time data integration is critical for organizations operating in a multi-cloud environment. By leveraging platforms such as Informatica PowerCenter, Talend, Apache Kafka, and MuleSoft, businesses can seamlessly integrate and process data from multiple cloud sources, enabling real-time analytics, improved decision-making, and enhanced business outcomes. When selecting the best real-time data integration platform, organizations should consider factors such as scalability, flexibility, and cloud-agnostic architecture to ensure that they can meet their evolving business needs.


Enterprise Metadata Management: The Next Big Thing in Data Analytics. FMI predicts the Market to Surpass US$ 10,474.3 million in 2033

The enterprise metadata management market is predicted to grow at a 14.8% CAGR from 2023 to 2033, up from the 12.7% CAGR recorded between 2018 and 2022. This reflects rising demand for enterprise metadata management and would increase the market value from US$ 2,626.9 million in 2023 to US$ 10,474.3 million by 2033.
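For readers who want to check growth figures like these, the compound annual growth rate can be computed directly from a start value, an end value, and the number of years. The sketch below assumes ten compounding periods between 2023 and 2033; the function names are illustrative.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.15 means 15% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value, rate, years):
    """Value after compounding `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# Figures quoted above: US$ 2,626.9 million (2023) to US$ 10,474.3 million (2033).
implied_rate = cagr(2626.9, 10474.3, years=10)
print(f"Implied CAGR: {implied_rate:.1%}")
```

Under that assumption the implied rate comes out at roughly 14.8%, in line with the figure quoted in the report.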

The growing demand for data governance across numerous industries is what is driving the global market for corporate metadata management. Enterprise metadata management software optimizes IT productivity, reduces risk, improves data asset management, and assures regulatory compliance.

Between 2023 and 2033, demand for enterprise metadata management is expected to grow globally at a CAGR of 14.8%, driven by the rising use of IoT and blockchain technologies, the growth of unstructured data, and the need for data security and management rules.

Request for a Sample of this Research Report: https://www.futuremarketinsights.com/reports/sample/rep-gb-4353

The requirement to lower risk and improve data confidence, as well as the growth of data warehouses and centralized data control to increase IT efficiency, are the main factors driving the global market for enterprise metadata management.

The lack of knowledge about the advantages of corporate metadata management and technical barriers to metadata storage and cross-linking restrain industry expansion.

Key Takeaways from the Enterprise Metadata Management Market:

The need to manage enormous amounts of enterprise data effectively, together with the growing emphasis on data governance, is likely to drive rapid growth in India’s enterprise metadata management market, at a CAGR of 17.6% through 2033.

The enterprise metadata management market in the United Kingdom is expected to grow at a CAGR of 13.2% through 2033, owing to the increased use of advanced analytics and AI technologies that rely on accurate and well-governed metadata.

China’s enterprise metadata management market is predicted to grow rapidly through 2033, at a CAGR of 16.7%, driven by the country’s growing economy and the rising demand for comprehensive data management solutions.

The demand for stronger data governance and regulatory compliance is expected to propel the Australian enterprise metadata management market at a CAGR of 3.6% through 2033.

The focus on digital transformation in Japan and the growing understanding of the value of metadata in data-driven decision-making are likely to support moderate market growth at a CAGR of 5.1% through 2033.

Competitive Landscape

Leading international competitors like IBM Corporation, SAP SE, Informatica, Collibra, and Talend rule the market thanks to their wealth of expertise, diverse product lines, and substantial customer bases. These businesses focus on features like data lineage, data cataloging, and data discovery to provide comprehensive metadata management solutions, and they invest consistently in R&D to improve their services.

Additionally, as cloud-based EMM solutions have grown in popularity, cloud-focused companies like Microsoft, Amazon Web Services (AWS), and Google Cloud Platform (GCP) have become more competitive. Organizations looking for adaptable metadata management solutions are drawn to their scalable and affordable cloud services.

Recent Developments

IBM just released IBM InfoSphere Master Data Management, a new enterprise metadata management platform. This platform offers tools for managing, controlling, and enhancing metadata, as well as a common repository for all metadata.

Oracle introduced Oracle Enterprise Data Management Suite, a new enterprise metadata management platform. This platform offers a full suite of tools, such as data discovery, data lineage, and data quality, for managing metadata across the company.

Click to Buy Your Exclusive Report Immediately! https://www.futuremarketinsights.com/checkout/4353

Key Segments Profiled in the Enterprise Metadata Management Industry Survey

By Deployment Type:

On-Premise

Software as a Service (SaaS)

By Vertical:

BFSI

Healthcare & Medical

IT & Telecommunications

Media & Entertainment

Government

E-Commerce & Retail

Logistics

Pharmaceutical

Manufacturing

Others

By Region:

North America

Latin America

Europe

Asia Pacific

Middle East & Africa
