#inventive principle of parameter change

Explore tagged Tumblr posts

Text

CNC development history and processing principles

CNC machine tools are also called Computerized Numerical Control (CNC for short). They are mechatronics products that use digital information to control machine tools. They record the relative position between the tool and the workpiece, the start and stop of the machine tool, the spindle speed change, the workpiece loosening and clamping, the tool selection, the start and stop of the cooling pump and other operations and sequence actions on the control medium with digital codes, and then send the digital information to the CNC device or computer, which will decode and calculate, issue instructions to control the machine tool servo system or other actuators, so that the machine tool can process the required workpiece.

1. The evolution of CNC technology: from mechanical gears to digital codes

The Beginning of Mechanical Control (late 19th century - 1940s)

The prototype of CNC technology can be traced back to the invention of mechanical automatic machine tools in the 19th century. In 1887, the cam-controlled lathe invented by American engineer Herman realized "programmed" processing for the first time by rotating cams to drive tool movement. Although this mechanical programming method is inefficient, it provides a key idea for subsequent CNC technology. During World War II, the surge in demand for military equipment accelerated the innovation of processing technology, but the processing capacity of traditional machine tools for complex parts had reached a bottleneck.

The electronic revolution (1950s-1970s)

After World War II, manufacturing industries mostly relied on manual operations. After workers understood the drawings, they manually operated machine tools to process parts. This way of producing products was costly, inefficient, and the quality was not guaranteed. In 1952, John Parsons' team at the Massachusetts Institute of Technology (MIT) developed the world's first CNC milling machine, which input instructions through punched paper tape, marking the official birth of CNC technology. The core breakthrough of this stage was "digital signals replacing mechanical transmission" - servo motors replaced gears and connecting rods, and code instructions replaced manual adjustments. In the 1960s, the popularity of integrated circuits reduced the size and cost of CNC systems. Japanese companies such as Fanuc launched commercial CNC equipment, and the automotive and aviation industries took the lead in introducing CNC production lines.

Integration of computer technology (1980s-2000s)

With the maturity of microprocessor and graphical interface technology, CNC entered the PC control era. In 1982, Siemens of Germany launched the first microprocessor-based CNC system Sinumerik 800, whose programming efficiency was 100 times higher than that of paper tape. The integration of CAD (computer-aided design) and CAM (computer-aided manufacturing) software allows engineers to directly convert 3D models into machining codes, and the machining accuracy of complex surfaces reaches the micron level. During this period, equipment such as five-axis linkage machining centers came into being, promoting the rapid development of mold manufacturing and medical device industries.

Intelligence and networking (21st century to present)

The Internet of Things and artificial intelligence technologies have given CNC machine tools new vitality. Modern CNC systems use sensors to monitor parameters such as cutting force and temperature in real time, and use machine learning to optimize processing paths. For example, the iSMART Factory solution of Japan's Mazak Company achieves intelligent scheduling of hundreds of machine tools through cloud collaboration. In 2023, the global CNC machine tool market size has exceeded US$80 billion, and China has become the largest manufacturing country with a production share of 31%.

2. CNC machining principles: How code drives steel

The essence of CNC technology is to convert the physical machining process into a control closed loop of digital signals. Its operation logic can be divided into three stages:

Geometric Modeling and Programming

After building a 3D model using CAD software such as UG and SolidWorks, CAM software “deconstructs” the model: automatically calculating parameters such as tool path, feed rate, spindle speed, and generating G code (such as G01 X100 Y200 F500 for linear interpolation to coordinates (100,200) and feed rate 500mm/min). Modern software can even simulate the material removal process and predict machining errors.

Numerical control system analysis and implementation

The "brain" of CNC machine tools - the numerical control system (such as Fanuc 30i, Siemens 840D) converts G codes into electrical pulse signals. Taking a three-axis milling machine as an example, the servo motors of the X/Y/Z axes receive pulse commands and convert rotary motion into linear displacement through ball screws, with a positioning accuracy of up to ±0.002mm. The closed-loop control system uses a grating ruler to feedback position errors in real time, forming a dynamic correction mechanism.

Multi-physics collaborative control

During the machining process, the machine tool needs to coordinate multiple parameters synchronously: the spindle motor drives the tool to rotate at a high speed of 20,000 rpm, the cooling system sprays atomized cutting fluid to reduce the temperature, and the tool changing robot completes the tool change within 0.5 seconds. For example, when machining titanium alloy blades, the system needs to dynamically adjust the cutting depth according to the hardness of the material to avoid tool chipping.

3. The future of CNC technology: cross-dimensional breakthroughs and industrial transformation

Currently, CNC technology is facing three major trends:

Combined: Turning and milling machine tools can complete turning, milling, grinding and other processes on one device, reducing clamping time by 90%;

Additive-subtractive integration: Germany's DMG MORI's LASERTEC series machine tools combine 3D printing and CNC finishing to directly manufacture aerospace engine combustion chambers;

Digital Twin: By using a virtual machine tool to simulate the actual machining process, China's Shenyang Machine Tool's i5 system has increased debugging efficiency by 70%.

From the meshing of mechanical gears to the flow of digital signals, CNC technology has rewritten the underlying logic of the manufacturing industry in 70 years. It is not only an upgrade of machine tools, but also a leap in the ability of humans to transform abstract thinking into physical entities. In the new track of intelligent manufacturing, CNC technology will continue to break through the limits of materials, precision and efficiency, and write a new chapter for industrial civilization.

#prototype machining#cnc machining#precision machining#prototyping#rapid prototyping#machining parts

2 notes

·

View notes

Text

Cone calorimeter is a modern gadget or device; it is used to study the behavior of the fire of small samples of a variety of materials when they are in condensed phase. It is usually used in fire safety engineering field. Con calorimeter gathers data concerning combustion products, ignition time, and rate of release of heat, loss of mass and other parameters that are associated with the burning properties. According to Andrew (1994), samples of fuel are allowed by this device to be exposed different fluxes of heat on its surface. The principle behind the heat release rate measurement is usually based on Huggett’s principle. On this, gross heat of combustion of organic material is directly related to oxygen amount that is required for combustion. The name of con calorimeter is derived from the conical shape of radiant heater, which produces almost a uniform flux of heat over the sample surface which is under study. In 1970’s and 1980’s, importance of a bench scale tool that is reliable in measuring the heat release rate was in the process of being released. There were a number of such devices that had already been built before in various institutions. However, none of those devices was found to be appropriate for normal engineering use in the laboratory. This was mainly due to operation difficulty and measurement errors of those devices. It has been argued (Arthur 1995) that other instruments that were built such as substitution burner had the capability of giving good accuracy although they had limitations which included complexity, and installation and maintenance difficulties. This therefore, was an indication that a new device was required that had no such difficulties like the other devices. Later a device that used oxygen consumption principle was invented, it had a successful design, and it was termed as cone calorimeter. This design was first described in 1982 by NBS report. Cone calorimeter basic principle has until today been unchanged although there has several improvements and additions that have been made. The devices that are being used today have few parts that are identical to the one that was made in 1982. The significant changes that have been made include the introduction of systems which measure smoke optically and yield soot gravimetrically. Other major changes are not the functional changes but redesign so as to ease the use and operation reliability. In 1985, first cone calorimeter was built outside NIST. It was built in BRI Japan followed by another one in 1986 at Gent University and afterward in the same year other 3 commercial units were built and sold in the U.S. the number of cone calorimeters placed into service has been increasing significantly from 1986 up to date. Barbrauskas and Parker (1987) maintain that Cone calorimeter is most important bench scale instrument in fire testing field. The release of heat is the key measurement that is required in assessing the development of fire of the products and materials. Read the full article

0 notes

Text

Aryabhata and the Birth of Zero: A Legacy That Powers Modern AI and Machine Learning

Introduction

The concept of zero is often taken for granted in our modern world. It seems simple and ubiquitous, a basic number that underpins the technology we rely on daily. But the origins of zero trace back to a brilliant mind in ancient India - the mathematician and astronomer Aryabhata. His invention of zero was not just a mathematical innovation; it laid the foundation for the technological advances that shape our world today. From computer science to artificial intelligence (AI) and machine learning (ML), the legacy of Aryabhata’s work continues to drive us forward.

Aryabhata: The Visionary Mathematician and Astronomer

Aryabhata was an extraordinary thinker who lived in the 5th century during the Gupta period in India, a time of great intellectual and scientific advancements. His most profound contribution was the conceptualization of zero as a place-value placeholder in the decimal system, an idea that changed the way we perform arithmetic and set the stage for future mathematical developments. While his works spanned a range of subjects, from trigonometry to astronomy, it was his treatment of zero that had the most far-reaching implications.

Before Aryabhata, the idea of zero didn’t exist in the way we understand it today. While ancient civilizations, such as the Babylonians, had symbols for nothingness, Aryabhata took this concept further and formalized it in a way that allowed for the development of complex mathematical systems. This shift in thinking made it possible to perform calculations with ease and precision, including the ability to represent large numbers, and led directly to the development of algebra, calculus, and eventually the mathematical models behind modern-day computing.

The Evolution of Zero: From Ancient India to Modern Technology

Though Aryabhata’s invention of zero was ground breaking, its global acceptance took time. As the concept spread across the world through the Islamic Golden Age and eventually reached Europe, it became an integral part of mathematics. Today, zero is the cornerstone of the binary number system, the basis of all modern computing.

In the world of technology, zero plays a pivotal role in the way digital systems operate. The binary code that powers our computers and devices is composed of two digits: 1 and 0. These on/off states are what enable computers to perform complex calculations and store vast amounts of information. Every piece of technology - from the simplest calculator to the most advanced AI systems — relies on the concept of zero to function efficiently.

Zero’s Connection to Modern AI and Machine Learning

As AI and machine learning (ML) continue to revolutionize industries, it's fascinating to reflect on how these advanced technologies are rooted in the mathematical principles that Aryabhata pioneered. Machine learning, at its core, is about processing data, making predictions, and optimizing results — all of which require complex mathematical algorithms. Zero plays a key role in these processes, from initializing algorithms to managing the flow of data.

In machine learning, for instance, the process of training a model often involves adjusting parameters using optimization techniques like gradient descent. Zero, or values close to it, are crucial in determining how algorithms "learn" and adjust over time. In neural networks, another major component of modern AI, zero functions as a critical part of error correction and network tuning. These algorithms adjust their weights by calculating the difference between predicted and actual outcomes, sometimes approaching zero to refine the model for better accuracy.

The very ability to represent data, process it, and make intelligent decisions is based on complex mathematical models that wouldn't exist without the foundational role of zero. As AI technology continues to evolve, it's exciting to think about the endless possibilities and innovations that can emerge, all thanks to the humble yet powerful concept of zero.

How Pydun Technology is Shaping the Future of AI and ML

As the world of AI and machine learning continues to grow, the demand for skilled professionals is higher than ever. This is where Pydun Technology Private Limited comes in. As a leading provider of AI and ML training, Pydun Technology is committed to empowering individuals and businesses with the knowledge and skills they need to succeed in this rapidly evolving field.

At Pydun, learning is not just about theory; it’s about practical application. With a comprehensive curriculum designed to cover everything from the basics of machine learning to advanced AI techniques, Pydun Technology ensures that students are well-equipped to tackle the challenges of the modern tech landscape. Whether you're a beginner or an experienced professional, Pydun offers tailored training programs that meet you where you are and help you progress to the next level.

The world of AI and ML can seem intimidating, but with the right guidance and training, anyone can master it. Pydun Technology offers hands-on learning experiences, real-world projects, and expert instruction to help you understand the complex algorithms and mathematical concepts that power AI systems - including the essential role of zero. By learning from industry experts, you’ll gain the confidence and skills to contribute meaningfully to the rapidly expanding world of AI and ML.

Why Choose Pydun Technology?

Expert Instructors: Pydun’s team of instructors brings years of industry experience to the table, providing valuable insights into the practical applications of AI and ML.

Comprehensive Curriculum: Pydun offers a detailed, structured curriculum that takes learners from foundational concepts to advanced techniques, ensuring that every student is prepared to excel in the field.

Hands-On Learning: At Pydun, learning is interactive. Students work on real-world projects, solving problems that mirror those faced by companies in the industry.

Personalized Training: Pydun offers customized training programs, catering to both individual learners and corporate teams, ensuring that everyone gets the attention and resources they need to succeed.

Future-Ready Skills: With the rapid advancements in AI and ML, the skills you gain at Pydun will keep you ahead of the curve, enabling you to tap into exciting opportunities in one of the most dynamic fields today.

Conclusion

The legacy of Aryabhata and his invention of zero continues to shape the world of technology today. Zero is the silent enabler of all digital systems, from binary code to artificial intelligence, and it is this very concept that has allowed AI and ML to flourish in the modern era.

If you're ready to step into the future and unlock the potential of AI and ML, Pydun Technology Private Limited is here to guide you. With expert-led training, hands-on experience, and a focus on practical learning, Pydun ensures that you are equipped with the skills and knowledge needed to succeed in this exciting field. Embrace the future today and take the first step towards mastering AI and ML with Pydun Technology - where learning meets innovation.

#best programming course training in madurai#Artificial Intelligence and Machine Learning Courses#Artificial Intelligence Courses#Machine Learning Courses#AI Courses in Madurai#AI and ML Training in Madurai#AI Programming in Madurai#Machine Learning Training in Madurai#internship#best IT training in madurai

1 note

·

View note

Text

Optical Reflector

The working principle of a reflector is based on the law of reflection, where the incident light, reflected light, and normal line in the same plane, and the angle of incidence equals the angle of reflection. These reflector surfaces undergo special treatment, allowing light to reflect along predetermined paths, thereby changing the direction of light propagation.

The main parameters of reflectors include reflectance, surface roughness, shape, and size. Reflectance determines the efficiency of reflection for reflectors, while surface roughness affects the quality of reflected light. The shape and size of reflectors determine their operating mode and application range.

Company Name:Changzhou Haolilai Photo-Electricity Scientific and Technical Co., Ltd. Web:https://www.cnhll.com/product/optical-flat-mirror/optical-reflector/ ADD:No.10 wangcai road, Luoxi town,Xinbei district, Changzhou,Jiangsu, China. Phone:86-519-83200018 Email:[email protected] Profile:As a High-Tech enterprise in Jiangsu province, HLL boasts a talented team with intensive experience and professional technology. HLL has established Jiangsu Precision Optical Lens Engineering Technology Center and Jiangsu Enterprise Technology Research Center and obtained multiple patents for inventions, multiple utility model patents and multiple Jiangsu High New Tech Products.

0 notes

Text

Lal Kitab 2.0- The Synergy of Tradition and AI

"Lal Kitab,"is a set of astrological texts with roots in traditional Indian astrology. This unique system is renowned for its unconventional and pragmatic approach to astrology, distinguishing itself from classical Vedic astrology.

The origins of Lal Kitab are shrouded in mystery, adding an air of intrigue to its teachings. While some attribute its authorship to Pandit Roop Chand Joshi, others believe it was penned by an unknown Muslim saint.

Lal Kitab emphasises the significance of Rahu and Ketu, the lunar nodes, and suggests practical remedies, often involving rituals, charity, and specific items to be donated or used.

It continues to be consulted by individuals seeking insights into their lives and looking for simple and affordable remedies to address challenges and improve their fortunes.What sets Lal Kitab apart is its departure from the conventional use of complex horoscopes and birth charts. Instead, it focuses on the placement of planets in different houses and their influences, employing a symbolic language and metaphors to convey astrological principles.

Introducing Lal Kitab 2.0:

Lal kitab 2.0 is really not a book to publish, it is a synergy of Astrology with AI. IT is a combination of two different ages, it is a combination of art and science, no doubt astrology is pure maths but maths is still like an art. You need to learn this to be the master of this art. We believe that lal kitab 2.0 is more accessible, understandable and useful for every generation. When two most powerful sciences come together it creates a miracle to the normal world, though the astrological world is not a normal world, no no no we are not saying this is abnormal or something out of the ordinary it is an extraordinary art that can be learned with some attention and focus.

Now we do not have time to go to an astrologer and we don’t know who is right or who is wrong. When it comes to trust we trust the calculator more than our own mind, even though humans invented the calculator and it can never be as smart as the human brain but it is more accurate than the human calculation. Sometimes even the slightest mistake can change your birth chart completely. Use of AI saves your calculation, gives you perfection in timings and birth charts and the positions of stars, planets and everything you want to know.

Data Analysis:

You need to know a few things to create a perfect birth chart, Place of birth, Time of birth, and date of birth. If you have these things accurate then you will definitely have a perfect birth Chart without mistakes and AI improves the chances of accuracy to 100%. Astrology data analysis with AI involves leveraging artificial intelligence techniques to process, analyse, and derive insights from astrological data.

Remedy Recommendations:

Lal kitab always provided extraordinary but easily available home remedies to its followers but with the help of AI and its accurate calculation we believe we will be able to provide tailored remedies to every individuals,

Continuous Learning and Adaptation:

The process where AI models learn from new data or experiences continuously, allowing them to update their knowledge and improve performance.The ability of an AI system to adjust its behaviour or model parameters based on changing conditions or new information. Regular updates based on new data lead to performance improvements, allowing AI models to provide more accurate predictions or make better decisions. Many Astrological scenarios are dynamic and evolve over time. Continuous learning enables AI systems to stay relevant in such dynamic environments.

Ethical Considerations:

Astrological data often includes personal information. Clearly communicate to users how their astrological data will be used and obtain informed consent before collecting and analysing their information. AI ensures robust security measures to protect sensitive data from unauthorised access and breaches.AI systems should avoid imposing specific cultural perspectives.AI algorithms used in astrology are transparent and explainable.

AI Astrologer:

AI Astrologer is the synergy of the Lal Kitab and AI, The inventor of the AI Astrologer Gurudev GD Vashishth has put all of his knowledge of his earlier addition Lal Kitab amrit in AI Astrologer. AI Asrrologer is a revolution in the astrology world as this will save people from frauds and fake astrologists. AI is claimed to be 100% perfect in its predictions. AI Astrologers respects its readers privacy and understands that these question and answers can be private, When you use the kiosk or the AIAstrologer.com you may ask anything you want and everything is categorised in the app and you get the answers at your whatsapp in the Pdf form.

Conclusion:

The synergy of AI and astrology offers a new dimension to the age-old quest for understanding celestial influences on human life. Embracing this collaboration with ethical principles at its core will contribute to a trustworthy and responsible evolution of astrological practices in the digital age. As we navigate this uncharted territory, the pursuit of knowledge and wisdom remains a constant, guiding both traditional practitioners and technologists alike toward a harmonious coexistence of tradition and innovation.In conclusion, the integration of artificial intelligence (AI) with astrology marks a fascinating intersection of ancient wisdom and modern technology. As we delve into this evolving field, it is crucial to navigate it with ethical considerations, transparency, and respect for cultural diversity.

Visit : https://www.aiastrologer.com/

1 note

·

View note

Text

Comprehensive Introduction to Robotics Mechanics and Control

In a world increasingly influenced by technological innovation, the field of robotics stands out, paving the way for future advancements that could redefine various industries. The "Introduction to Robotics Mechanics and Control" serves as a foundational pillar for enthusiasts and professionals alike, seeking to understand the complex yet fascinating world of robotics. This comprehensive guide delves deep into the intricate mechanics underlying robotic applications and the control systems that ensure these machines can perform tasks accurately, efficiently, and flexibly. Understanding these concepts is not just for academic or industrial pursuits; it is a window into a future where robotics impacts every facet of our lives. The journey through "Introduction to Robotics Mechanics and Control" is akin to unlocking new levels of a sophisticated game, where each stage uncovers deeper, more complex mysteries and marvels of the robotic world. From the basics of design and movement to the nuanced algorithms that provide robots with almost human-like dexterity and decision-making capabilities, each page turns is a step towards not just understanding but inventing the future. It's not merely about machines; it's about the harmonious blend of physics, mathematics, and computer science that creates entities capable of changing the world. As we embark on this enlightening journey, it is crucial to remember that the field of robotics is ever-evolving. What may be a groundbreaking innovation today could become a standard feature tomorrow. Therefore, the "Introduction to Robotics Mechanics and Control" is more than a guide; it's a compass that directs curious minds towards uncharted territories waiting to be discovered. This exploration promises to challenge, inspire, and ignite a passion for a realm where science fiction meets reality. Grasping the Fundamentals of Robotics At the heart of understanding robotics is grasping the fundamental principles that govern how robots are designed, structured, and brought to life. The inception of any robotic system starts with mechanics, the branch of physics concerned with the behavior of physical bodies when subjected to forces or displacements. By studying mechanics in the context of robotics, one learns how robots move, interact with physical objects, and adhere to the laws of physics. These foundational insights are crucial for designing robots that can efficiently navigate and operate within their environment, whether it's on a factory floor, inside a laboratory, or on the surface of another planet. The mechanics of robotics also extends to the materials used in constructing robots. Different applications require various materials, each with unique properties that affect a robot's functionality and efficiency. Understanding these materials isn't just about knowing their physical properties; it involves insight into how they interact with motors, sensors, and other robotic components. This knowledge ensures the creation of robots that are not just functional but also durable, capable of withstanding the environments they operate in. Another fundamental aspect is the kinematics of robots, which deals with motion without considering the forces that cause it. Here, the focus shifts to the movement patterns of robots, how their parts coordinate and synchronize, ensuring smooth, calculated actions. Grasping this concept involves understanding geometric representations and transformations, joint parameters, and linkage descriptions that form the language of robotic movement. It's through mastering kinematics that one can predict and control a robot's behavior, a critical skill in the development and application of robotics. But mechanics alone doesn't bring a robot to life; it's the integration with control systems that propels these machines into action. Control systems in robotics help in managing, commanding, directing, or regulating the behavior of other devices or systems. These range from simple remote controls to complex neural networks, each serving a unique function in various robotic applications. Understanding control systems is pivotal in ensuring that robots can perform required tasks on their own, learn from their surroundings, and even make decisions in unpredictable environments. Exploring the Genesis of Modern Robotics The modern landscape of robotics didn't materialize overnight; it's the culmination of centuries of scientific achievements and technological advancements. The genesis of modern robotics can be traced back to the era of industrialization, a period marked by the birth of automation and mechanization. It was the quest to improve efficiency and productivity that led to the advent of machines designed to mimic and eventually surpass human physical capabilities. These initial steps were humble, with simple machines performing rudimentary tasks, but they set the foundation upon which contemporary robotics is built. As the 20th century progressed, so did the ambitions of inventors and scientists. The space race and the cold war provided unique platforms for rapid advancements in robotics. It was no longer about simple machines; the goal had shifted to creating entities that could think, adapt, and make decisions. This era saw the introduction of programmable robots, capable of being coded to perform various tasks, and the birth of artificial intelligence, a field that would redefine what robots could potentially achieve. This historical context is crucial, as it highlights the evolutionary journey of robotics, painting a picture of relentless human ambition and intellectual prowess. Significance of Mechanics and Control Systems Mechanics and control systems represent the heart and brain of robotics, respectively. Without mechanics, robots would be lifeless frames, and without control systems, they would be entities without purpose or direction. The significance of these elements cannot be overstated, as they collectively contribute to the efficacy, autonomy, and versatility of robots. With advanced mechanics, robots can navigate uncharted terrains, handle delicate objects, and perform tasks with precision that rivals or exceeds human capabilities. Control systems, on the other hand, breathe intelligence into robots. These sophisticated networks of algorithms and sensors enable machines to perceive their environment, process information, and respond with appropriate actions. The evolution of control systems has reached a point where robots can learn from past experiences, adapting their behavior in ways that were once the sole domain of living beings. This convergence of learning ability and autonomy is what's steering the current generation of robots towards new horizons of capabilities and achievements. In the realm of practical application, the synergy between mechanics and control systems is creating opportunities across diverse fields. From manufacturing plants and healthcare facilities to research labs and space exploration, the footprint of advanced robotics is ubiquitous. These systems are not just performing tasks but are also managing complex operations, solving intricate problems, and even exploring the mysteries of other worlds. The future of robotics, therefore, rests on further advancements in mechanics and control systems, driving forward the boundaries of what these extraordinary machines can accomplish. Delving into Robotics Mechanics Embarking on the "Introduction to Robotics Mechanics and Control" journey means immersing oneself in the detailed mechanics that form the backbone of every robot. Robotics mechanics is not a singular concept but a vast field that integrates various principles from traditional mechanics and applies them uniquely to robots. It encompasses everything from how robots move and interact with their environment to the very materials from which they are made. It is through these mechanics that robots can perform with the precision, efficiency, and flexibility that modern applications require. Understanding robotics mechanics is essential because it lays the foundation upon which all robotic functions are built. When we talk about robots, we often envision autonomous machines capable of carrying out complex tasks, sometimes in environments unsuitable for humans. However, behind this autonomy is a world of intricate mechanics working seamlessly to initiate motion, manage force, and maintain balance. Thus, delving into robotics mechanics means unraveling the complexities behind these autonomous capabilities. This exploration is fundamental to both current and future advancements in the field. Robotics mechanics doesn't remain static; it evolves with each technological advancement. With every new material discovered, every fresh insight into power systems, and every innovative motion technique developed, the mechanics of robotics grow increasingly sophisticated. This evolution expands the horizons of what robots can do, pushing the boundaries from the floors of manufacturing factories to the depths of space. However, this field isn't just about the robots themselves; it's also about the broader impacts these mechanical advancements have on industries and societies worldwide. As robotic mechanics advance, so too do the capabilities and roles of robots in various sectors. They're revolutionizing assembly lines, transforming healthcare, exploring unreachable cosmic territories, and doing much more. They're not just machines; they're harbingers of a new era, and it all starts with the mechanics that move them, the heart of robotics itself. Core Principles of Robotics Mechanics The journey through the core principles of robotics mechanics begins by peeling back the layers to understand the components and concepts that form a robot's mechanical basis. This foundation is rooted in classical mechanics, borrowing established principles and evolving them to suit the unique needs of robotic applications. Here, every piece, from the smallest screw to the most complex joint arrangement, plays a role in ensuring the robot functions as desired, offering a symphony of movement and capability that is both fascinating and revolutionary. Dissecting the Mechanics: From Levers to Pulleys The simplest elements of robotics mechanics draw from age-old mechanical concepts, including basic machines like levers and pulleys. These fundamental components might seem rudimentary, but they are integral to the complex movements and operations within a robot. Levers, for example, are crucial in imparting motion, offering mechanical advantages that are exploited to achieve force amplification in robotic arms or legs. Pulleys provide similar advantages, particularly in robots requiring linear motion, as they help reduce the energy needed to move objects, reflecting the utility and efficiency embedded in these classic mechanics. Understanding how these simple machines integrate into complex robotic systems reveals the genius of mechanical engineering in robotics. It’s not about reinventing the wheel but rather about using tried and tested mechanical principles to drive innovation. This deep integration of simple mechanics lays a solid groundwork, ensuring that regardless of how advanced or sophisticated robots become, they are grounded in reliable, time-tested mechanical laws. The blend of these classical mechanics with modern engineering practices is indicative of the evolution within the field. Today's robots might operate using advanced algorithms and be powered by cutting-edge technology, but beneath all that, they still rely on the fundamental principles of levers and pulleys, among other mechanical basics. It is this harmonious blend of old and new that enables the continuous advancement of robotic capabilities, making what was once thought impossible a reality today. The Role of Physics in Robotics Diving deeper into the mechanics necessitates an exploration of physics in robotics, as the two are inextricably linked. Physics provides the foundational laws upon which all robotic functions are based, from motion and energy to force and momentum. In the realm of robotics, these laws dictate how robots move, how they interact with objects, and how they can manipulate their environment. Without these fundamental principles, the precision and control we see in robots today would simply not exist. Roboticists regularly tap into various physics domains to optimize robotic functions. For instance, electromagnetism is crucial in operating motors and sensors, while principles from thermodynamics are used to manage a robot's heat generation and dissipation. Even quantum physics, with its insights into atomic and subatomic levels, finds applications in developing new materials and sensors for robotics. Understanding the role of physics in robotics also extends to anticipating and designing around the limitations these laws impose. It’s about striking a balance between pushing the boundaries of what's possible and respecting the unyielding constraints of the physical world. Robotics doesn't just apply physics; it dances with it, choreographing movements and capabilities that conform to and yet also challenge these universal laws. Through physics, we can predict how a robot would behave in different scenarios, control its actions with precision, and ensure its interaction with the physical world is consistent with established laws. This predictive and regulatory capability is pivotal, forming the bedrock upon which the reliability and efficiency of robots are built. Kinematics and Dynamics: The Motion Facilitators The principles of kinematics and dynamics serve as the navigators in the journey of understanding robotic motion. Kinematics focuses on motion description, control, and prediction without concern for the forces causing that motion. In robotics, this involves determining the paths and spaces a robot can move within, ensuring the robot's joints and appendages work in harmony to achieve smooth, coordinated movements. Dynamics goes a step further, bringing into consideration the forces that influence motion. This branch is crucial for understanding how to impart and control the movements of a robot. It’s not just about ensuring motion; it’s about guaranteeing stability, efficiency, and precision in these movements. When robots interact with their environment, whether it’s picking up a payload or maneuvering through uneven terrain, dynamics is key in controlling these interactions, ensuring they're not just successful but also safe and reliable. Together, kinematics and dynamics facilitate the seamless motion we observe in robots. They're pivotal in the design and operation stages, ensuring not only that robots move but that they do so with purpose and precision. Statics and Elasticity in Robotic Structures Delving further into the mechanical world of robots, statics and elasticity emerge as crucial fields of study. Statics deals with the mechanics of materials and structures in a state of rest or constant velocity. It’s vital for ensuring that a robot’s structure can withstand the loads and stresses it encounters without succumbing to wear and tear. Here, the focus shifts to analyzing and designing structures that offer the perfect balance between strength and flexibility. Elasticity complements this by focusing on materials’ ability to deform under stress and return to their original shape afterward. This property is invaluable in robotics, where components often need to withstand various forces without permanent deformation. Robots designed with elasticity in mind can endure more physical stress, elongating their operational life and increasing their reliability. Both statics and elasticity are integral to maintaining the structural integrity of robots. By understanding and applying principles from these fields, engineers can design robots that are not only more resilient and durable but also capable of performing more complex tasks in more challenging environments. Thermodynamics and Heat Transfer: Cooling Robotic Systems No exploration of robotics mechanics would be complete without addressing thermodynamics and heat transfer. Robots, like all machines, generate heat during operation, and managing this heat is crucial for maintaining optimal performance. Thermodynamics allows us to understand the heat generated within robotic systems, guiding the creation of mechanisms that can effectively dissipate this heat to prevent overheating and potential system failures. Heat transfer plays a complementary role, focusing specifically on how heat moves through different materials. In robotics, this is crucial for designing cooling systems that keep the robot’s internal temperature within safe limits. These systems might leverage conduction, convection, or radiation to transfer heat away from sensitive components, thereby safeguarding the robot’s functionality and durability. Together, thermodynamics and heat transfer form a critical defense mechanism for robots, protecting them from the dangers of their own operational heat. They ensure that robots can continue operating efficiently, even under high-stress conditions or during lengthy periods of activity. Fluid Mechanics in Robotics: Hydraulic and Pneumatic Systems The realm of fluid mechanics opens up a world of possibilities for robotic movement and power. Hydraulics and pneumatics, both rooted in fluid mechanics, have become fundamental in the field of robotics. Hydraulic systems use liquid fluid—often oil—in a confined space to transfer power from one location to another. These systems are prized in robotics for their incredible power, precision, and reliability, especially in heavy-duty robots that require significant force. Pneumatic systems, on the other hand, rely on gaseous fluids—typically air—under pressure. They are generally simpler and more flexible than their hydraulic counterparts, making them ideal for lighter, quicker tasks. Pneumatic systems are often found in robotic arms in manufacturing, where they perform repetitive tasks with speed and precision. Both systems showcase the versatility and potential of fluid mechanics in robotics. By harnessing the power of fluids, robots can achieve greater force and movement without a corresponding increase in size or weight. This ability makes robots more adaptable and capable, ready to meet the diverse demands of modern applications. Intricacies of Material Science in Robotics Material science forms the cornerstone upon which the tangible aspects of robots are built. This field goes beyond merely selecting materials for different parts of a robot. It involves diving deep into the properties of various materials—metals, polymers, composites—and understanding how these properties can enhance or impede a robot’s functionality. The right materials can make a robot stronger, more flexible, or more energy-efficient, creating possibilities for new applications and capabilities. Metals, Polymers, and Composites: Pros and Cons The discussion of materials in robotics mechanics invariably leads to the comparison between metals, polymers, and composites, each with its own set of advantages and disadvantages. Metals have been a staple in machinery for centuries, known for their strength and durability. In robotics, metals, particularly alloys, are valued for their ability to withstand high stress and temperatures, making them ideal for structural components and high-performance parts. Polymers, however, bring a different set of benefits to the table. These materials, made of long, repeating molecular chains, are generally lighter than metals and offer greater resistance to corrosion. They also possess a higher degree of flexibility, which can be advantageous in robots that require a wider range of motion or those that need to absorb high impacts. Composites Read the full article

0 notes

Text

If you’re wondering

The reason I don’t rag as often about the far right is very simple:

You already know their fucking problems. There’s nothing new. It’s just hitting the same notes on newspaper after newspaper, day by day, cherry picking the unpleasant history from the history textbooks in favor of all other history to emphasize a point.

You can’t find an internet news column that isn’t speaking all the real problems, and then when they run out of the social credit to keep blabbing about it, inventing new parameters to talk about them being a problem. Entire new metrics to go, “they’re outside of them, look how they’re transgressing now!”

Abortion, climate change, gun rights, racism, sexism, religious overreach, taxes and industrial regulations. Over and over again. Yes, “What about white supremacism and colonialism?” Covered under racism. “What about churches trying to take over publishing companies and use religious criteria on secular books?” Covered under religious overreach, as is judges trying to judge from biblical interpretations of what’s right according to god, not man. Religious folk trying to enforce their specific religious views on those that are not religious in the public sphere.

I don’t need to bring up the Trail of Tears or Manifest destiny. It is brought up ad nauseum. I don’t need to talk about how the Nazis were real and bad, or the very real holocaust, because it never stops being talked about by people constantly trying to use it to tangentially blame the modern american right wing for culpability and responsibility for it, casually, with no room for rebuttal and no room for argument, just zingers and then social faux passes if you zap back.

Deafening silence about left wing failures. They just attribute those to being, “right wingers of their day” dust their hands and move on. So even the failures of authoritarian left-wingers, become the responsibility of conservatives. Even the bullshit of the Soviet Union gets double thought of as, “State capitalist right-wing violence,” because many left-wingers feel state violence and leftism are paradoxical, so it must be right wing when practiced, and they reject the whole premise ideologically..

A million books in public school libraries about the Nazis, a quarter million published and stocked in the last year attributing Nazis to the right wing (despite the fact they very much were anti-capitalist and socialist, this is not disputable), and virtually no books added about how shit Soviet, Asian or African socialism was to the people living under the boots under Marxist principles. Just in case you might want any message other than, “Nazis = bad.”

But when left-wingers try to silent majority opposition to nuclear power because it, “doesn’t overturn the current private enterprise model of power” while screaming about how we just can’t do business as usual with coal and gas ahymore without destroying the planet, and aggressively filibust any conversation that isn’t the ones they WANT to have that make them look right and on the right side of history, that shit needs to be talked about. They aren’t being held accountable by themselves, and the modern right wing is so ineffectual and off in the weeds making itself look stupid, it’s incapable of even defending itself, let alone taking the far-left to task. When a literal communist agitator and false historian writes bogus articles about how the US military kicked off the great Native American genocide by spreading small pox through infected blankets, predating germ theory and germ warfare by a century, and gets less than a slap on the wrist for spreading that lie, no one repeats it.

I don’t need to, nor want to, defend right wingers. Not my guys, not my circus. I want to point out the hypocrisy and the garbage in the other alternative. Maybe incentivize conversations about candidates that aren’t coasting on, “not Trump”ism that can then just do whatever they want, because it’s them or, “Someone like Trump.”

0 notes

Text

Science & God’s Existence

By Author Eli Kittim

Can We Reject Paul’s Vision Based On the Fact that No One Saw It?

Given that none of Paul’s companions saw or heard the content of his visionary experience (Acts 9), on the road to Damascus, some critics have argued that it must be rejected as unreliable and inauthentic. Let’s test that hypothesis. Thoughts are common to all human beings. Are they not? However, no one can “prove” that they have thoughts. That doesn’t mean that they don’t have any. Just because others can’t see or hear your thoughts doesn’t mean they don’t exist. Absence of evidence is not evidence of absence. Obviously, a vision, by definition, is called a “vision” precisely because it is neither seen nor observed by others. So, this preoccupation with “evidence” and “scientism” has gone too far. We demand proof for things that are real but cannot be proven. According to philosopher William Lane Craig, the irony is that science can’t even prove the existence of the external world, even though it presupposes it.

No one has ever seen an electron, or the substance we call “dark matter,” yet physicists presuppose them. Up until recently we could not see, under any circumstances, ultraviolet rays, X – rays, or gamma rays. Does that mean they didn’t exist before their detection? Of course not. Recently, with the advent of better instruments and technology we are able to detect what was once invisible to the human eye. Gamma rays were first observed in 1900. Ultraviolet rays were discovered in 1801. X-rays were discovered in 1895. So, PRIOR to the 19th century, no one could see these types of electromagnetic radiation with either the naked eye or by using microscopes, telescopes, or any other available instruments. Prior to the 19th century, these phenomena could not be established. Today, however, they are established as facts. What made the difference? Technology (new instruments)!

If you could go back in time to Ancient Greece and tell people that in the future they could sit at home and have face-to-face conversations with people who are actually thousands of miles away, would they have believed you? According to the empirical model of that day, this would have been utterly impossible! It would have been considered science fiction. My point is that what we cannot see today with the naked eye might be seen or detected tomorrow by means of newer, more sophisticated technologies!

——-

Can We Use The Scientific Model to Address Metaphysical Questions?

Using empirical methods of “observation” to determine what is true and what is false is a very *simplistic* way of understanding reality in all its complexity. For example, we don’t experience 10 dimensions of reality. We only experience a 3-dimensional world, with time functioning as a 4th dimension. Yet Quantum physics tells us there are, at least, 10 dimensions to reality: https://www.google.com/amp/s/phys.org/news/2014-12-universe-dimensions.amp

Prior to the discoveries of primitive microscopes, in the 17th century, you couldn’t see germs, bacteria, viruses, or microorganisms with the naked eye! For all intents and purposes, these microorganisms DID NOT EXIST! It would therefore be quite wrong to assume that, because a large number of people (i.e. a consensus) cannot see it, an unobservable phenomenon must be ipso facto nonexistent.

Similarly, prophetic experiences (e.g. visions) cannot be tested by any instruments of modern technology, nor investigated by the methods of science. Because prophetic experiences are of a different kind, the assumption that they do not have objective reality is a hermeneutical mistake that leads to a false conclusion. Physical phenomena are perceived by the senses, whereas metaphysical phenomena are not perceived by the senses but rather by pure consciousness. Therefore, if we use the same criteria for metaphysical perceptions that we use for physical ones (which are derived exclusively from the senses), that would be mixing apples and oranges. The hermeneutical mistake is to use empirical observation (that only tests physical phenomena) as “a standard” for testing the truth value of metaphysical phenomena. In other words, the criteria used to measure physical phenomena are quite inappropriate and wholly inapplicable to their metaphysical counterparts.

——-

Are the “Facts” of Science the Only Truth, While All Else is Illusion?

Whoever said that scientific “facts” are *necessarily* true? On the contrary, according to Bertrand Russell and Immanuel Kant, only a priori statements are *necessarily* true (i.e. logical & mathematical propositions), which are not derived from the senses! The senses can be deceptive. That’s why every 100 years or so new “facts” are discovered that replace old ones. So what happened to the old facts? Well, they were not necessarily true in the epistemological sense. And this process keeps repeating seemingly ad infinitum. If that is the case, how then can we trust the empirical model, devote ourselves to its shrines of truth, and worship at its temples (universities)? Read the “The Structure of Scientific Revolutions” by Thomas Kuhn, a classic book on the history of science and how scientific paradigms change over time.

——-

Cosmology, Modern Astronomy, & Philosophy Seem to Point to the Existence of God

If you studied cosmology and modern astronomy, you would be astounded by the amazing beauty, order, structure, and precision of the various movements of the planets and stars. The Big Bang Theory is the current cosmological model which asserts that the universe had a beginning. Astoundingly, the very first line of the Bible (the opening sentence, i.e. Gen. 1.1) makes the exact same assertion. The fine tuning argument demonstrates how the slightest change to any of the fundamental physical constants would have changed the course of history so that the evolution of the universe would not have proceeded in the way that it did, and life itself would not have existed. What is more, the cosmological argument demonstrates the existence of a “first cause,” which can be inferred via the concept of causation. This is not unlike Leibniz’ “principle of sufficient reason” nor unlike Parmenides’ “nothing comes from nothing” (Gk. οὐδὲν ἐξ οὐδενός; Lat. ex nihilo nihil fit)! All these arguments demonstrate that there must be a cosmic intelligence (i.e. a necessary being) that designed and sustained the universe.

We live in an incredibly complex and mysterious universe that we sometimes take for granted. Let me explain. The Earth is constantly traveling at 67,000 miles per hour and doesn’t collide with anything. Think about how fast that is. The speed of an average bullet is approximately 1,700 mph. And the Earth’s speed is 67,000 mph! That’s mind-boggling! Moreover, the Earth rotates roughly 1,000 miles per hour, yet you don’t fall off the grid, nor do you feel this gyration because of gravity. And I’m not even discussing the ontological implications of the enormous information-processing capacity of the human brain, its ability to invent concepts, its tremendous intelligence in the fields of philosophy, mathematics, and the sciences, and its modern technological innovations.

It is therefore disingenuous to reduce this incredibly complex and extraordinarily deep existence to simplistic formulas and pseudoscientific oversimplifications. As I said earlier, science cannot even “prove” the existence of the external world, much less the presence of a transcendent one. The logical positivist Ludwig Wittgenstein said that metaphysical questions are unanswerable by science. Yet atheist critics are incessantly comparing Paul’s and Jesus’ “experiences” to the scientific model, and even classifying them as deliberate literary falsehoods made to pass as facts because they don’t meet scholarly and academic parameters. The present paper has tried to show that this is a bogus argument! It does not simply question the “epistemological adequacy” of atheistic philosophies, but rather the methodological (and therefore epistemic) legitimacy of the atheist program per se.

——-

#scientificmethod#Godsexistence#religious experience#visions#ThomasKuhn#scientism#technology#metaphysics#empiricism#firstcause#scientificdiscoveries#quantum mechanics#physicalphenomena#godandscience#metaphysicalphenomena#exnihilonihilfit#bertrand russell#immanuel kant#a priori#fundamentalphysicalconstants#paul the apostle#elikittim#thelittlebookofrevelation#principleofsufficientreason#leibniz#parmenides#ek#William Lane Craig#big bang#apologistelikittim

38 notes

·

View notes

Text

ladies and gentlemen this is ask dump no. 5

aw scrap here we go again!

answered asks include body modification as the opposite of empurata, Mutacons making bandages out of kibble, kibble used as furniture, numbers of Sweeps, a DILF alligator, RID15 Tidal Wave, a BIG infodump on dealing with the circus that is Iacon’s media, Cybertronian muppets, a WIP of Elita Infin1te (or rather her sword), and the many secret sufferings of Alpha Trion.

yea, sorta! body modification in SNAP is more limited than in canon. you can’t simply switch out your body like the total frame reformats of IDW or TFP, and losing a limb can be permanent if not healed in time. for the most part, the frame you have is the frame you’re stuck with, and those frames fall within specific parameters.

HOWEVER-

some modification and upgrades do exist! the most prominent here would be a prosthetic helm like Lugnut. if the processor is left intact and attached after a helm injury, a new helm can be sculpted, with extra optics to make up for the lower quality of artificial optics, and as visibly different as possible to differentiate from empurata. other replacements and prosthetics are common after debilitating injury where the original body part cannot be saved. whether or not the prosthetic is as good as the original depends on the individual and the specific injury. there are also functional medical upgrades, like thicker armor attachments, alt mode additions, etc. almost every upgrade is for the express purpose of improving one’s frame for their function, and there’s definitely a limit to them. you can’t give yourself new limbs if you only had four to begin with. a grounder cannot become a flier. the spark can only power so much mass in the frame, and some people have adverse reactions that mean the upgrades don’t take and must be removed.

this sort of relates to the next point here-

yes, with some caveats.

Cybertronians are a segmentary species, so they can detach some body parts for a bit without negative consequences, as long as that body part is reattached for revitalization and repair. many folks can do this without any medical assistance for the less integral kibble. for instance, Kup uses his tow arm as a walking stick, but he has to reattach it whenever he wants to go into alt mode, and if he doesn’t transform he still needs to reattach it for a couple hours every day at minimum. so if a Mutacon were to create a makeshift splint out of kibble and detach it, it would likely be fine, as long as they got that kibble back. otherwise, they’ve lost a whole chunk of their body that they can’t just regenerate.

for shifting armor to cover a wound without detaching it, that depends on the nature of the wound. if it’s ragged, large, or in areas with a lot of joints or movement, it might be difficult to shuffle around plating to cover it. a more superficial injury in a less delicate area would be easier

sort of! it’ll depend on the individual’s kibble, of course! double checking SNAP Bulkhead, i don’t think he could, because his kibble isn’t large enough. but Scylla could probably use her alt mode arms as a chair, Wreck-Gar has a built in backpack and belly bag, and of course the Necrobot uses his wingcloak as hands. different kibble with different bonus uses

the ideal number of Sweeps is seven, since less than that means they don’t have enough collective processing power to function optimally. more than seven, however, puts a strain on that collective processing power to smoothly operate so many at once. so there’s usually packs of as close to seven as they can get.

as to how many can just exist at the same time, it’s limited only by how many Scourge is willing to forge. he first invents them in s1e06 A Use for Army-building! An Upgrade to Sweeps. by the next episode they figure out that having dozens of them running around is... well it’s about as chaotic as having dozens of flying puppies with hands and weapons would be. in large numbers they’re very difficult to control. good thing Galvatron is excellent at commanding his new army!

(the post this is referring to) @oldboyjensenhinglemeier thanks Dilf Waitress, i can always rely on you

(the post this is referring to) i think that’s fantastic, i’d love to see a Cybertronian whale. imagine the size of the holding cell you’d have to have for him!

oooohohoho what a sticky subject. here’s a quick rundown on faction ideology to give you some context for how they operate and thus deal with the media. the heroes aren’t referred to as heroes, but rather as vigilantes at best and violent gangs in a turf war at worst. Froid has remotely diagnosed them with pathological dissent. at the same time, some folks have jumped on the market to make hero merch, and it becomes a very lucrative business for some. public opinion is constantly torn between fear and anger at how they do whatever they’d like and gratitude and admiration for how they throw themselves in harms way to prevent disaster and save people. it’s really a giant mess all the time that changes by the day.

there is of course the whole snafu surrounding the media’s portrayal of the Elite Guard as a backup team for the Autobots, and Elita 1 as Optimus’ sidekick. and Elita 1 is Not Happy about that. Elita 2 is startlingly good at winding the reporters around her little finger and always seems to know just what to say, whereas Elita 3 just grumbles at the cameras, even sometimes demanding they respect boundaries or be locked in the nearest building with the use of her powers. Elita 4 barely notices them unless she’s in the mood to prank someone, and Elita 5 just avoids them, as they tend to dramatize her size and thus her danger. given their excellent teamwork and how they’re (mostly) in favor of reform instead of anarchy, the Elite Guard would actually have a good shot at getting along with the news, except they bow to precisely no one, including the people wanting to interview them, so instead they come across as a standoffish and self-serving clique with dangerous habits

the Decepticons are in the bad-boy limelight and they love it. well, at least Galvatron, Hellscream, and Thunderblast do. Galvatron takes advantage of every opportunity to pontificate on the evils of society and the right to rise up for freedom. broadcasters have learned to cut the cameras as soon as he starts speaking so his ideas don’t get the chance to spread too far. Hellscream cares less about principles and more about scaring the living daylights out of every reporter he sees, often leaving them with cracked equipment and ringing audials from the sheer destructive power of his voice. Thunderblast just wants to preen in all the attention and boy does she get it. Cyclonus actively avoids most gawkers, Scourge talks too long and complicated to make good news, Drift either ignores them or sends them away with some lofty spiritual advice, and Triptych is dangerously unpredictable so most reporters have learned to stay away from him.

the Predacons came into existence in a negative light, and they were grimly prepared for it. after all, Sixshot used to be a Decepticon, and their falling-out and defection caused quite a stir. when Abominus first appeared, the fearful reaction of the public to such an ‘abomination’ is actually how he chose his name in the first place. Airachnid loves tormenting reporters with nuclear-grade sarcasm and subtle threats, but if anyone makes her truly mad she’ll string them up in her web cabling and leave them hanging. she also flaunts that cabling by using her darts to knit nets, shawls, and other decorations, despite the fact that getting cabling tangled up in seams and joints can lead to something called entrapment protocols, mentioned in the seventh ask here. Enforcers use capture equipment designed to trigger entrapment protocols, so her mimicry of that as nothing more than a casual accessory is a big ‘frag you’.

Soundwave.... is a category of his own. he only comes into being in the fourth season, but the media soon learns to quake at the thought of encountering Soundwave, and his minicons are little better. there’s at least one instance where he Rosanna-rolls the entirety of Iacon.

the Autobots keep wavering between ‘the only true good ones of all these vigilantes’ and ‘the worst possible people in the world, hide the children, lock the doors’ in the eyes of the media. Optimus does his best to treat everyone fairly, and the Mistress usually has something encouraging to share. much like Galvatron but for completely opposite reasons, broadcasters have learned to cut cameras when Ultra Magnus starts talking, because his encyclopedic knowledge of law means he regularly lists every instance of malpractice, abuse, illegality, and disrespect that he sees in the average reporter, Enforcer, or politician, which is not the kind of upbraiding that would serve the propaganda machine. however, it does get him the attention of Tyrest, who leverages legality and public opinion to try and draw Ultra Magnus into an agreement during s3e03- A Councilmember’s Boon! An Upgrade to Legality. Rodimus is a chaos beast who has been known to snatch cameras for selfies. it’s kind of a tossup as to whether Cheetor will be going slow enough to show up in the footage or not.

now, i can’t talk about the media without mentioning the feral force of nature that is Rewind. the best of the best, he’s the only one willing to brave the battlefields for an up-close look, constantly endangering himself in order to get the freshest scoop. he might not always hold opinions in line with the mandated propaganda about these vigilantes, but the media lets him get away with it, since he’s the most successful at getting them more news. this has caused him to be targeted at least once, unfortunately.

love this question. love it. you know those lil remote controlled robot dogs, or things to that effect? i’m imagining that’s what Cybertronian muppets are like, since they can create robotics and animatronics with a lot more finesse and ease than we can. in fact, making fabric is probably harder for them than robotics, since they don’t have the same materials as we do to work with. but anyway, these muppets wouldn’t be limited by what a hand can do to puppet them around, being instead remote controlled from off stage, so i don’t know if they’d have that kind of visual gag. maybe instead there would be fourth-wall breaking where one muppet snatches the remote of another?

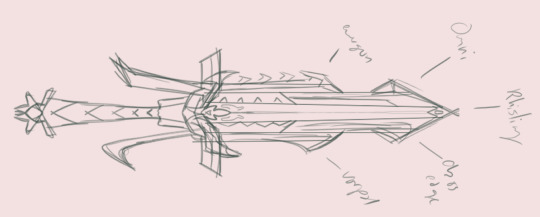

the painful thing about this answer is that i have a design i’m happy with EXCEPT FOR THE HELM i have sketched and resketched a dozen different ideas ugh. the body looks fine, all five of them combined in a way that makes sense to me, but i just CANNOT get the helm right i’m so angry. anyway here’s the Cyber Caliber, all of their swords combined into one massive weapon

the more accurate question is, what hasn’t happened to him. he’s been through a lot, the poor mech. but i’ll list some things for you:

that one time he had a sibling be erased from reality

that one time he had to murder another sibling because they decided evil was fun

that one time a fragging beachball stole his work

the fact he doesn’t know if his twin is alive or not

that one time he was a junker running for his life

that one time he was too late to save the Terminus Blade, and it was stolen

that one time his pride and joy, the Athenaum Sanctorum, was destroyed, and everything archived there was lost

that other time the same fragging beachball stole his work

that other time he was a junker hiding for his life

the fact that the theft of his diary started a whole new branch of religion and he has to read his own words as if they’re sacred

the fact that the title of Trion was in fact derived from his diary, and the sheer painful irony of being given the title of Trion.

that one time he had to rip off some fingers to fit in

that one time Trypticon showed up, awhile before the JAAT was founded, and he had to take it on alone

that other time Trypticon showed up when the JAAT opened and he had to hand out some precious relics to children to protect the school

aaaaand his current reason for drinking! the fact that of all twenty-something heroes running around, he only knows who THREE of them are because he only gave out THREE RELICS! and relics just keep disappearing from the collection he’s guarding

someone help him he is not having a good time. and it’s only going to get worse...

#ask dump#worldbuilding#cybertronian biology#Empurata#mutacon#sweeps#gatoraider#cybertron#cybertronian culture#autobot#elite guard#decepticon#predacon#rewind#elita infin1te#Alpha Trion#faculty#i've given so much away about alpha now sheesh#well not really i guess but ITS STILL A LOT

26 notes

·

View notes

Text

Laser and Light Treatment of Acquired and Congenital Vascular Lesions

IPL treatment of PWS

IPL devices are broadband filtered xenon flashlamps that work based on the principles of selective photothermolysis. The emission spectrum of 515–1200 nm is adjusted with the use of a series of cut-off filters, and the pulse duration ranges from approximately 0.5 to 100 msec, depending on the technology. The first commercial system, Photoderm VL (Lumenis, Yokneam, Israel) became available in 1994, and has been used to treat vascular anamolies. Another, IPL Technology (Danish Dermatologic Development [DDD] Hoersholm, Denmark) with a dual mode light filtering has also been used to treat PWS. Many other IPL system have recently been developed, and the appropriate parameters for congenital vascular lesions are being developed. The IPL has been used successfully to treat PWS (Fig. 39.7),78–80 but pulsed dye laser remains the treatment of choice.

IPL technology has also been used to treat pulsed dye laser-resistant PWS. In the study by Bjerring and associates seven of 15 patients achieved over 50% lesional lightening after four IPL treatments. Most of these patients had lesions involving the V2 dermatome (medial cheek and nose), which are relatively more difficult to lighten. Six of seven of these patients showed over 75% clearance of their PWS. A 550–950-nm filter was used with 8–30-msec pulse durations and fluences of 13–22 J/cm2 to achieve tissue purpura. The 530–750-nm filter can also be used with double 2.5-msec pulses, with a 10-msec delay and fluence of 8–10 J/cm2. Epidermal cooling was not required. Treatment resulted in immediate erythema and edema, and occasional crusting. Hypopigmentation was observed in three patients, hyperpigmentation in one patient, and epidermal atrophy in one patient.

The basics of body fat

Let’s start with the basics. Not all fat is created equal. We have two distinct types of fat in our bodies: subcutaneous fat (the kind that may roll over the waistband of your pants) and visceral fat (the stuff that lines your organs and is associated with diabetes and heart disease).

From here on out, when we refer to fat, we are talking about subcutaneous fat, as this is the type of fat that cryolipolysis targets. A recent study showed that the body’s ability to remove subcutaneous fat decreases with age, which means we are fighting an uphill battle with each birthday we celebrate.

From popsicles to freezing fat

Cryolipolysis machine — which literally translates into cold (cryo) fat (lipo) destruction (lysis) — was invented, in part, by observing what can happen when kids eat popsicles. No kidding here. The cofounders of this process were intrigued by something called “cold-induced fat necrosis” that was reported to occur after young children ate popsicles that were inadvertently left resting on the cheek for several minutes. Skin samples taken from pediatric patients like these showed inflammation in the fat, but normal overlying skin. Thus, it appeared that fat may be more sensitive to cold injury that other tissue types.

HOW DOES IT WORK?

Coolplas Fat Freeze Machine uses rounded paddles in one of four sizes to suction your skin and fat “like a vacuum,” says Roostaeian. While you sit in a reclined chair for up to two hours, cooling panels set to work crystallizing your fat cells. “It’s a mild discomfort that people seem to tolerate pretty well," he says. "[You experience] suction and cooling sensations that eventually go numb.” In fact, the procedural setting is so relaxed that patients can bring laptops to do work, enjoy a movie, or simply nap while the machine goes to work.

WHO IS IT FOR?

Above all, emphasizes Roostaeian, CoolSculpting is “for someone who is looking for mild improvements,” explaining that it’s not designed for one-stop-shop major fat removal like liposuction. When clients come to Astarita for a consultation, she considers “their age, skin quality—will it rebound? Will it look good after volume is removed?—and how thick or pinchable their tissue is,” before approving them for treatment, because the suction panels can only treat the tissue it can access. “If someone has thick, firm tissue,” explains Astarita, “I won’t be able to give them a wow result.

WHAT ARE THE RESULTS?

“It often takes a few treatments to get to your optimum results,” says Roostaeian, who admits that a single treatment will yield very minimal change, sometimes imperceptible to clients. “One of the downsides of [CoolSculpting] is there’s a range for any one person. I’ve seen people look at before and after pictures and not be able to see the results.” All hope is not lost, however, because both experts agree that the more treatments you have, the more results you will see. What will happen eventually is an up to 25 percent fat reduction in a treatment area. “At best you get mild fat reduction—a slightly improved waistline, less bulging of any particular area that’s concerning. I would emphasize the word mild.”

WILL IT MAKE YOU LOSE WEIGHT? "None of these devices shed pounds,” says Astarita, reminding potential patients that muscle weighs more than fat. When you’re shedding 25 percent of fat in a handful of tissue, it won’t add up to much on the scale, but, she counters, “When [you lose] what’s spilling over the top of your pants or your bra, it counts.” Her clients come to her in search of better proportions at their current weight, and may leave having dropped “one or two sizes in clothing.”

Although the mechanism of cryolipolysis is not completely understood, it is believed that vacuum suction with regulated cooling, impedes blood flow and induces crystallisation of the targeted adipose tissue with no permanent effect on the overlying dermis and epidermis. This cold induced ischaemia may promote cellular injury in adipose tissue via cellular oedema and mitochondrial free radical release. Another theory is that the initial insult of crystallisation and cold ischaemic injury is further perpetuated by ischaemia reperfusion injury, causing generation of reactive oxygen species, elevation of cytosolic calcium levels, and activation of apoptotic pathways.

Whichever the mechanism of injury, adipocytes undergo apoptosis, followed by a pronounced inflammatory response, resulting in their eventual removal from the treatment site within the following weeks. The inflammatory process sees an influx of inflammatory cells at 14 days post treatment, as adipocytes become surrounded by histiocytes, neutrophils, lymphocytes, and other mononuclear cells. At 14-30 days after treatment, macrophages and other phagocytes envelope and digest the lipid cells as part of the body’s natural response to injury. Initial concern was that cholesterol, triglycerides, low density lipoproteins (LDLs) and high density lipoproteins (HDLs), bilirubin and glucose levels were affected, however these have been shown to all stay within normal limits following the procedure.

Four weeks later, the inflammation lessens and the adipocyte volume decreases. Two to three months after treatment, the interlobular septa are distinctly thickened and the inflammatory process further subsides. Fat volume in the targeted area is apparently decreased and the septa account for the majority of the tissue volume.

Patients and treatment areas

Although all studies show reduction in every area examined, it is still unknown what areas are most responsive to cryolipolysis. Various factors may play a role in the degree of fat reduction observed after cryolipolysis. The vascularity, local cytoarchitecture, and metabolic activity of the specific fat depots in questions may play a role.

There is lack of substantial research to identify the ideal patient or even the ideal area to be treated. Given a modest (yet significant improvement of up to 25% reduction in subcutaneous fat), it is thought that the best candidates are those within their ideal weight range and those who engage in regular exercise, eat a healthy diet, have noticeable fat bulges on the trunk, are realistic in their expectations, and are willing to maintain the results of cryolipolysis with a healthy, active lifestyle.

New design Cryolipolysis is safe for all skin types, with no reported pigmentary changes, and is safe for repeated application. Ferraro et al. suggest that patients who require only small or moderate amounts of adipose tissue and cellulite removal would benefit most from cryolipolysis treatment. Contraindications include cold-induced conditions such as cryoglobunaemia, cold urticaria, and paroxysmal cold haemoglobinuria. Cryolipolysis should not be performed in treatment areas with severe varicose veins, dermatitis, or other cutaneous lesions.

HIFU Decide Guide: Which Device is for you?