#java aws sdk

Explore tagged Tumblr posts

Video

youtube

Upload File to AWS S3 Bucket using Java AWS SDK | Create IAM User & Poli...

0 notes

Text

Performance Best Practices Using Java and AWS Lambda: Combinations


#aws-lambda#aws-sdk#cloud-computing#java#java-best-practices#java-optimization#performance-testing#software-engineering

0 notes

Text

Amazon S3: An Optimal Data Storage Solution for Modern Businesses

In today's booming digital era, managing and storing data has become vital to every business. From small startups to global corporations, a secure, flexible, and scalable storage system is a must. One of the most popular solutions today is Amazon S3, the object storage service from Amazon Web Services (AWS).

What is S3?

Amazon S3 (Simple Storage Service) is a cloud storage service developed by Amazon. First introduced in 2006, S3 lets users store and access data from anywhere in the world over the internet. Data is stored as "objects" inside "buckets", with virtually unlimited scalability.

Unlike traditional storage services, S3 is more than a simple repository: it also offers features such as access control, data versioning, and log analysis, and it integrates with other AWS services such as EC2, Lambda, and CloudFront.

Key advantages of Amazon S3

Flexible scalability

Amazon S3 lets you store anything from a few bytes to petabytes of data without worrying about scaling infrastructure. With AWS's globally distributed system, your data is always available and can be accessed with low latency.

Strong security

Amazon S3 provides advanced security features such as encryption of data at rest and in transit. You can also set fine-grained access permissions for each object or bucket, giving you tight control over who can access your data.

Extremely high durability and availability

Amazon S3 is designed for 99.999999999% data durability (11 nines), meaning data loss is extremely unlikely. This is achieved by automatically storing data redundantly across multiple Availability Zones within an AWS Region.
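As a rough illustration of what eleven nines means in practice, the arithmetic can be sketched in Java. This is a back-of-envelope sketch, assuming the durability figure is an annual, per-object probability and that losses are independent; the class and method names are hypothetical.

```java
// Hypothetical helper: back-of-envelope durability arithmetic.
// Assumes 99.999999999% is an annual, per-object durability figure.
class DurabilityMath {
    // Annual probability of losing a single object: 1 - 0.99999999999 = 1e-11.
    static final double ANNUAL_LOSS_PROBABILITY = 1e-11;

    // Expected number of objects lost per year out of objectCount stored objects.
    static double expectedAnnualLoss(long objectCount) {
        return objectCount * ANNUAL_LOSS_PROBABILITY;
    }

    // Average number of years between single-object losses.
    static double yearsPerLostObject(long objectCount) {
        return 1.0 / expectedAnnualLoss(objectCount);
    }

    public static void main(String[] args) {
        long objects = 10_000_000L;
        System.out.printf("Expected losses per year: %.6f%n", expectedAnnualLoss(objects));
        System.out.printf("Years per lost object: %.0f%n", yearsPerLostObject(objects));
    }
}
```

Under these assumptions, storing ten million objects works out to roughly one lost object every 10,000 years.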

Reasonable cost

S3 uses a pay-as-you-go pricing model. Users pay only for storage capacity, bandwidth used, and data retrieval requests. Amazon S3 also offers several storage classes, such as S3 Standard, S3 Intelligent-Tiering, and S3 Glacier, to optimize cost for each specific need.

Easy integration

S3 can integrate with most modern applications and technology platforms through its REST API, SDKs, and the AWS CLI. This makes it easy for developers to connect to and work with data in S3 efficiently.

Common use cases for Amazon S3

Website data storage

Many businesses use S3 to store static website assets such as images, CSS, and JavaScript. Thanks to integration with CloudFront (a CDN), these assets can be delivered globally at high speed.

Data backup and recovery

S3 is an excellent solution for backing up data from on-premises systems or servers. When an incident occurs, data can be restored quickly from S3.

Big Data storage and analytics

S3 is often used to store input data for Big Data processing systems such as Amazon Athena, Amazon Redshift, and AWS Glue. With support for parallel processing and strong integration, S3 becomes the data hub of most modern analytics systems.

Multimedia content storage

Media, video, and music companies use Amazon S3 to store and deliver multimedia content to users around the world, with stable delivery and strong security.

How do you get started with Amazon S3?

To use S3, all you need is an AWS account. Then:

Sign in to the AWS Management Console.

Create a "bucket", which is the container for your data.

Upload data (objects) to the bucket.

Configure access permissions and security policies, and choose a suitable storage class.

Buckets and objects can be managed easily through the web interface, the command line (AWS CLI), or SDKs for programming languages such as Python, JavaScript, and Java.
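Bucket creation fails if the chosen name breaks the S3 naming rules, so it can help to check a name locally before calling the console, CLI, or an SDK. The sketch below covers only a simplified subset of the general-purpose bucket naming rules (length, allowed characters, no adjacent periods, not shaped like an IP address); the class and method names are hypothetical, and it does not check global uniqueness or reserved prefixes and suffixes.

```java
import java.util.regex.Pattern;

// Hypothetical helper: checks a simplified subset of the S3 general-purpose
// bucket naming rules before a bucket is created.
class BucketNameCheck {
    // 3-63 characters; lowercase letters, digits, dots, hyphens;
    // must start and end with a letter or digit.
    private static final Pattern SHAPE =
            Pattern.compile("^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$");
    // Names formatted like an IPv4 address (for example 192.168.5.4) are rejected.
    private static final Pattern IPV4_LIKE =
            Pattern.compile("^\\d{1,3}(\\.\\d{1,3}){3}$");

    static boolean isValid(String name) {
        if (name == null || !SHAPE.matcher(name).matches()) {
            return false;
        }
        if (name.contains("..")) {
            return false; // adjacent periods are not allowed
        }
        return !IPV4_LIKE.matcher(name).matches();
    }

    public static void main(String[] args) {
        for (String candidate : new String[] {"my-app-assets", "My_Bucket", "ab", "192.168.5.4"}) {
            System.out.println(candidate + " -> " + isValid(candidate));
        }
    }
}
```

A check like this only catches obvious mistakes early; the service itself remains the authority on whether a name is accepted.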

Conclusion

Amazon S3 is one of the most powerful, flexible, and secure cloud storage services available today. It suits not only large enterprises but also individuals, startups, and developers who want a reliable, cost-effective, and easily integrated data storage platform. As technology keeps evolving, using solutions like S3 is no longer just a trend but has become a standard for building modern IT systems.

Learn more: https://vndata.vn/cloud-s3-object-storage-vietnam/

0 notes

Text

15446567Y2-AWS Infra Architect

Job title: 15446567Y2-AWS Infra Architect Company: Diverse Lynx Job description: , and SQS. AWS Java SDK: Utilize the AWS Java SDK to build cloud-native solutions and ensure smooth integration with AWS… SDK: Utilize the AWS Java SDK to build cloud-native solutions and ensure smooth integration with AWS services… Expected salary: Location: Chennai, Tamil Nadu – Gurgaon, Haryana Job date: Thu, 22…

0 notes

Text

Aurora DSQL: Amazon’s Fastest Serverless SQL Solution

Amazon Aurora DSQL

Amazon Aurora DSQL is now generally available. Described as the fastest serverless distributed SQL database, it offers high availability, virtually unlimited scalability, and minimal infrastructure management for always-available applications. It removes the operational burden of patching, upgrades, and maintenance downtime. Customers were excited by the preview at AWS re:Invent 2024 because it promised to simplify the challenges of running relational databases.

According to Amazon.com CTO Dr. Werner Vogels, Aurora DSQL was architected to control complexity upfront. Unlike other databases, its architecture is split into separate components: a query processor, adjudicator, journal, and crossbar. These components are cohesive, communicate through well-defined APIs, and scale independently according to your workload. This design supports multi-Region strong consistency, low latency, and globally synchronised time.

Your application can scale to meet any workload and use the fastest distributed SQL reads and writes without database sharding or instance upgrades. Aurora DSQL's active-active distributed architecture is designed for 99.999 percent availability in multi-Region configurations and 99.99 percent in a single Region. An application can keep reading and writing with strong consistency even if it cannot reach one Region's cluster endpoint.
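To put the two availability figures in perspective, they can be converted into the downtime each one allows per year. The sketch below is plain arithmetic over a 365-day year; the class and method names are hypothetical.

```java
// Hypothetical helper: converts an availability percentage into the
// maximum downtime per (365-day) year that the percentage allows.
class AvailabilityMath {
    static final double MINUTES_PER_YEAR = 365 * 24 * 60; // 525,600

    static double allowedDowntimeMinutesPerYear(double availabilityPercent) {
        return MINUTES_PER_YEAR * (1.0 - availabilityPercent / 100.0);
    }

    public static void main(String[] args) {
        System.out.printf("99.99%%  -> %.2f minutes/year%n", allowedDowntimeMinutesPerYear(99.99));
        System.out.printf("99.999%% -> %.2f minutes/year%n", allowedDowntimeMinutesPerYear(99.999));
    }
}
```

That is roughly 52.6 minutes of downtime per year at 99.99 percent versus about 5.3 minutes at 99.999 percent.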

In a single Region, Aurora DSQL commits write transactions to a distributed transaction log and synchronously replicates the committed log data to user storage replicas in three Availability Zones. Cluster storage replicas are distributed across a storage fleet and scale automatically for the best read performance. Multi-Region clusters provide one endpoint per peered cluster Region, improving availability while retaining the same resilience and connectivity.

The two endpoints of a peered cluster accept concurrent read and write operations with strong data consistency and present a single logical database. A third Region acts as a log-only witness, with no cluster resources or endpoints. This lets you balance applications and connections by speed, resilience, or geography while ensuring readers always see the same data.

Aurora DSQL is well suited to event-driven and microservice applications. It can underpin highly scalable retail, e-commerce, financial, and travel systems. Data-driven social networking, gaming, and multi-tenant SaaS applications that need multi-Region scalability and reliability can also use it.

Getting started with Amazon Aurora DSQL

Aurora DSQL is easy to get started with from the console. You can also connect programmatically, using the database endpoint and an authentication token as the password, or through tools such as JetBrains DataGrip, DBeaver, or the PostgreSQL interactive terminal.

To create an Aurora DSQL cluster, select “Create cluster” in the console. Single-Region and Multi-Region setups are offered.

For a single-Region cluster, simply pick “Create cluster”; the cluster is ready in minutes. Then create an authentication token, copy the endpoint, and connect with a SQL client. You can connect from CloudShell or from Python, Java, JavaScript, C++, Ruby, .NET, Rust, and Golang. You can also build example apps using AWS Lambda, Django, or Ruby on Rails.
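For Java, the endpoint-plus-token flow maps onto ordinary PostgreSQL JDBC settings. The sketch below only assembles those settings and does not open a connection. Several details are assumptions not stated in the post: the database name `postgres`, the role `admin`, port 5432, and the PostgreSQL JDBC driver being on the classpath when actually connecting. The class name and the placeholder endpoint and token are hypothetical.

```java
import java.util.Properties;

// Hypothetical helper: assembles PostgreSQL JDBC connection settings for a cluster.
// Assumptions (not from the post): database "postgres", role "admin", port 5432,
// and the PostgreSQL JDBC driver on the classpath when actually connecting.
class DsqlConnectionSettings {
    static String jdbcUrl(String endpoint) {
        return "jdbc:postgresql://" + endpoint + ":5432/postgres";
    }

    static Properties properties(String authToken) {
        Properties props = new Properties();
        props.setProperty("user", "admin");
        props.setProperty("password", authToken); // the token is used as the password
        props.setProperty("sslmode", "require");  // connect over TLS
        return props;
    }

    public static void main(String[] args) {
        // Placeholder values; a real endpoint and token come from the console.
        String url = jdbcUrl("example-cluster-endpoint");
        Properties props = properties("example-token");
        System.out.println(url + " as " + props.getProperty("user"));
        // With a driver available, the connection would be opened with:
        // java.sql.DriverManager.getConnection(url, props)
    }
}
```

Because authentication tokens expire, an application would typically generate a fresh token shortly before opening each new connection rather than storing one.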

Multi-Region clusters are peered using their ARNs. For the first cluster, open the Multi-Region option, select a witness Region, and click “Create cluster”. Then use the first cluster's ARN to create a second cluster in another Region. Finally, pick “Peer” on the first cluster's page to peer the clusters. The “Peers” tab shows peer information. Clusters can also be created and managed programmatically with the AWS SDKs, the CLI, and the Aurora DSQL APIs.

New features were added in response to feedback from preview users. These include easier connections from AWS CloudShell and a better console experience for creating and peering multi-Region clusters. PostgreSQL-compatible features were also added: views, Auto-Analyze, and unique secondary indexes on tables with existing data. Integrations with AWS CloudTrail for logging, AWS Backup, AWS PrivateLink, and AWS CloudFormation were added as well.

Aurora DSQL now also offers a Model Context Protocol (MCP) server, which lets generative AI models interact with the database in natural language to boost developer productivity. With the Amazon Q Developer CLI installed and the MCP server configured, the CLI can access the cluster to explore the schema, understand table structure, and run complex SQL queries without integration code.

Availability

At the time of writing, Amazon Aurora DSQL was available for single- and multi-Region clusters (two peers and one witness Region) in the AWS US East (N. Virginia), US East (Ohio), and US West (Oregon) Regions. It was available for single-Region clusters in Ireland, London, Paris, Osaka, and Tokyo.

Aurora DSQL bills all request-based activity, such as reads and writes, monthly using a single normalised billing unit, the Distributed Processing Unit (DPU). Storage is billed on total database size, measured in gigabytes per month. You pay for only one logical copy of your data per single-Region cluster or multi-Region peered cluster. With the AWS Free Tier, your first 100,000 DPUs and 1 GB of storage each month are free.
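The billing model above can be sketched as a small estimator. The free allowances (100,000 DPUs and 1 GB per month) come from the text; the two rates passed in are made-up placeholders, not real prices, and the class name and the way the free tier is subtracted are assumptions for illustration.

```java
// Hypothetical helper: estimates a monthly bill from DPUs and storage.
// The free allowances come from the text; the rates are made-up placeholders.
class DsqlBillEstimate {
    static final double FREE_DPUS = 100_000;
    static final double FREE_STORAGE_GB = 1.0;

    static double monthlyCost(double dpus, double storageGb,
                              double pricePerMillionDpus, double pricePerGbMonth) {
        // Only usage above the free allowance is billed.
        double billableDpus = Math.max(0, dpus - FREE_DPUS);
        double billableGb = Math.max(0, storageGb - FREE_STORAGE_GB);
        return billableDpus / 1_000_000 * pricePerMillionDpus + billableGb * pricePerGbMonth;
    }

    public static void main(String[] args) {
        // Placeholder rates, not real prices: 10.0 per million DPUs, 0.5 per GB-month.
        double cost = monthlyCost(2_100_000, 11.0, 10.0, 0.5);
        System.out.printf("Estimated monthly cost: %.2f%n", cost);
    }
}
```

Usage that stays within the free allowances comes out to zero in this model, whatever rates are supplied.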

You can try Aurora DSQL for free in the console. The Aurora DSQL User Guide has more information, and you can send feedback via AWS re:Post or other channels.

#AuroraDSQL#AmazonAuroraDSQL#AuroraDSQLcluster#DistributedProcessingUnit#AWSservices#ModelContextProtocol#technology#technews#technologynews#news#govindhtech

0 notes

Text

Full Stack Developer (.Net with AWS)

. Strong proficiency in at least one backend programming language (e.g., Python, Node.js, Java, Go). Experience with AWS SDKs, CLI… Intermediate Python – 1 Years Intermediate Node.Js – 1 Years Intermediate Go Lang – 1 Years Intermediate Java (All Versions… Apply Now

0 notes

Text

Mobile App Development West Bengal

Introduction: The Rise of Mobile App Development in West Bengal

West Bengal, with Kolkata at its technological helm, has become a key player in India's mobile technology revolution. As smartphones penetrate every layer of society and businesses increasingly adopt mobile-first strategies, the demand for mobile app development in the state has skyrocketed. Whether it's for retail, healthcare, logistics, finance, or education, mobile applications have become the cornerstone of digital transformation. This post looks at mobile app development in West Bengal: the services offered, top companies, tech stacks, and the industry trends reshaping the app landscape.

Why Mobile App Development is Crucial for Modern Businesses

Mobile applications offer businesses direct access to their target customers and increase operational efficiency through automation and data-driven insights. Here are some reasons businesses in West Bengal are investing in app development:

Direct customer engagement

Increased brand visibility

Real-time updates and support

Streamlined operations

Enhanced customer loyalty

Access to valuable user data

Types of Mobile Apps Developed in West Bengal

1. Native Mobile Apps

Built specifically for Android (Java/Kotlin) or iOS (Swift/Objective-C)

Offer high performance and full device compatibility

2. Hybrid Mobile Apps

Use web technologies like HTML, CSS, and JavaScript

Built with frameworks like Ionic or Apache Cordova

3. Cross-Platform Apps

Developed using Flutter, React Native, Xamarin

Share code across platforms while maintaining near-native experience

4. Progressive Web Apps (PWA)

Work offline and behave like native apps

Built using modern web capabilities

5. Enterprise Mobile Applications

Designed to improve business efficiency and data handling

Includes CRMs, ERPs, field service tools, etc.

Key Mobile App Development Services in West Bengal

1. App Strategy Consulting

Business analysis, app roadmap creation, feature prioritization

2. UI/UX Design

User flow design, wireframes, interactive prototypes, usability testing

3. Mobile App Development

Frontend and backend development, API integration, mobile SDKs

4. App Testing & QA

Manual and automated testing, performance testing, bug fixing

5. Deployment & Launch

Google Play Store and Apple App Store publishing, post-launch monitoring

6. App Maintenance & Support

Regular updates, bug fixes, performance improvements, OS compatibility

Top Mobile App Development Companies in West Bengal

1. Indus Net Technologies (Kolkata)

Full-stack mobile solutions, scalable for enterprise and startup needs

2. Webguru Infosystems (Kolkata)

Cross-platform and native app expertise, strong design focus

3. Capital Numbers (Kolkata)

React Native and Flutter specialists, global client base

4. Binaryfolks (Salt Lake City, Kolkata)

Known for secure and performance-oriented enterprise mobile apps

5. Kreeti Technologies (Kolkata)

Focused on user-centric mobile solutions for logistics and fintech sectors

Leading Industries Adopting Mobile Apps in West Bengal

1. Retail & E-commerce

Shopping apps, inventory management, customer loyalty tools

2. Healthcare

Telemedicine apps, fitness tracking, appointment scheduling

3. Education

eLearning platforms, online exams, student management systems

4. Transportation & Logistics

Fleet tracking, logistics planning, digital proof of delivery

5. Banking & Fintech

Digital wallets, UPI apps, KYC & loan processing apps

6. Real Estate

Virtual tours, property listing apps, customer engagement tools

Popular Technologies & Frameworks Used

Frontend Development:

React Native, Flutter, Swift, Kotlin, Ionic

Backend Development:

Node.js, Django, Ruby on Rails, Laravel

Database Management:

MySQL, Firebase, MongoDB, PostgreSQL

API Integration:

RESTful APIs, GraphQL, Payment gateways, Social media APIs

DevOps:

CI/CD pipelines using Jenkins, GitHub Actions, Docker, Kubernetes

Cloud & Hosting:

AWS, Google Cloud, Microsoft Azure

Case Study: Mobile App for a Regional Grocery Chain in Kolkata

Client: Local supermarket brand with 30+ stores

Challenge: Manual order tracking and inefficient delivery process

Solution: Custom mobile app with product browsing, cart, secure payment, and delivery tracking

Results: 50% increase in orders, 30% operational cost savings, higher customer retention

Mobile App Monetization Strategies

Freemium model: Basic free version with paid upgrades

In-app purchases: Digital goods, subscriptions

Ads: AdSense, affiliate marketing, sponsored content

Paid apps: One-time download fee

Tips to Choose the Right Mobile App Developer in West Bengal

Check client portfolio and case studies

Ensure compatibility with your business domain

Ask for prototypes and demo apps

Assess UI/UX expertise and design innovation

Clarify project timelines and post-launch support

Discuss NDA and data privacy policies

Future Trends in Mobile App Development in West Bengal

Rise of AI-powered mobile apps

Voice-based mobile interfaces

5G-enabled immersive experiences

Greater use of AR/VR for shopping and education

IoT integration for smart home and smart city projects

Conclusion: Embrace the Mobile Revolution in West Bengal

As West Bengal accelerates its digital transformation journey, mobile apps are set to play a defining role. From small startups to established enterprises, the demand for intuitive, scalable, and secure mobile applications continues to rise. By partnering with experienced mobile app developers in the region, businesses can not only meet market demands but also deliver exceptional customer experiences that build loyalty and drive growth.

0 notes

Text

How to Create a Social Video App Like TikTok in 2025

Ever wondered how apps like TikTok became such a massive hit? Want to build your own version of a short-video platform? Let’s break it down in simple terms and see what it takes in 2025 to create a TikTok-style app.

Why Build a TikTok Clone App?

TikTok changed the way we consume content. With over a billion users, it’s not just a social media app—it’s a full-blown entertainment powerhouse. Creating an app like TikTok in 2025 gives you a shot at riding the wave of video-driven engagement, virality, and community building.

Core Features You’ll Need

Here’s a quick list of essential features your TikTok-like app must have to compete:

Video uploading and editing: Let users create, trim, and enhance videos with filters, music, and effects.

Engagement tools: Likes, shares, comments, and reactions to drive interaction.

AI-powered feed: Smart recommendation algorithm to keep users hooked.

Sound integration: Add trending music, sound effects, and voiceovers.

Privacy settings: User control over who sees their content.

Push notifications: Keep users in the loop with updates and trends.

Tech Stack to Power the App

Building something like TikTok isn’t just about design—it’s about choosing the right tools under the hood:

Frontend: React Native or Flutter for cross-platform apps.

Backend: Node.js, Python, or Java with scalable frameworks.

Video SDK: Use a real-time communication API like MirrorFly for chat, voice & video.

Storage: AWS S3 or Google Cloud for video storage.

Database: MongoDB, PostgreSQL, or Firebase for flexibility and speed.

How Much Does It Cost to Build?

The cost depends on a number of factors including features, platform, design complexity, and development hours. Here’s a rough estimate:

Basic MVP: $35,000 – $50,000

Feature-rich version: $70,000 – $150,000+

Using a pre-built SDK like MirrorFly for communication can save time and budget while maintaining high quality.

Monetization Options

Your TikTok-style app can also generate revenue. Here are a few models:

In-app purchases (filters, stickers)

Advertisements

Creator tips and coins

Premium subscriptions

Read the Full Guide

We’ve put together a detailed blog post with everything—from tech stack choices to timeline planning and budget breakdown.

Read the full guide on how to create an app like TikTok

Final Thoughts

2025 is the perfect time to jump into the short-video app space. With the right technology, features, and user strategy, your app could be the next big thing. TikTok may be the giant in the room, but innovation opens the door to new competition.

1 note


Text

Why Hiring Android App Developers is Crucial for Your Business Growth

In today’s digital era, mobile applications have become a key factor in business success. Android, the most widely used mobile operating system, offers businesses a great opportunity to reach a broader audience. If you are planning to develop an Android application, it is essential to hire Android app developers with the right expertise to ensure a high-quality, scalable app.

Why Choose Android for Your Mobile App Development?

Android dominates the global mobile OS market, with millions of users relying on Android apps daily. Here are a few reasons why businesses prefer Android for their app development:

Wider Market Reach: Android powers a majority of mobile devices worldwide, giving businesses access to a vast user base.

Open-Source Platform: Android is an open-source operating system, allowing developers to customize applications based on business needs.

Cost-Effective Development: Android development is more budget-friendly compared to other platforms, making it accessible for startups and enterprises alike.

Integration with Google Services: Android apps seamlessly integrate with Google’s suite of services, such as Google Maps, Firebase, and Google Pay.

Diverse Device Compatibility: Android applications can run on various devices, including smartphones, tablets, smartwatches, and TVs.

Key Skills to Look for When You Hire Android App Developers

When hiring Android developers, it’s essential to evaluate their technical skills and industry experience. Here are some crucial skills to look for:

1. Proficiency in Programming Languages

A skilled Android developer should have expertise in:

Java: The traditional language for Android app development.

Kotlin: A modern, concise, and more efficient alternative to Java.

2. Knowledge of Android Development Frameworks

Android SDK: Essential for building robust applications.

Jetpack Components: Helps streamline app development.

React Native or Flutter: Useful for cross-platform development.

3. Experience with UI/UX Design

Proficiency in Material Design Principles.

Ability to create an intuitive and user-friendly interface.

4. Backend and API Integration

Experience in working with RESTful APIs.

Understanding of Firebase, AWS, or custom backend solutions.

5. Database Management

Proficiency in SQLite, Room Database, and Firebase Realtime Database.

6. Security Best Practices

Expertise in data encryption, authentication, and secure coding practices.

7. Testing and Debugging Skills

Experience in using tools like JUnit, Espresso, and Firebase Test Lab.

Benefits of Hiring Dedicated Android App Developers

1. Customized Solutions

Hiring dedicated developers ensures that your application is tailored to meet your business goals, incorporating unique features that align with your brand.

2. Faster Development and Deployment

Experienced developers follow agile methodologies, ensuring faster development cycles and timely project delivery.

3. Cost-Effectiveness

Outsourcing Android app development to skilled professionals reduces costs compared to maintaining an in-house team.

4. Ongoing Support and Maintenance

Dedicated Android developers provide continuous support, ensuring that your application remains updated with the latest trends and security patches.

How to Hire Android App Developers for Your Project?

1. Define Your Project Requirements

Clearly outline your app idea, target audience, and key features before starting the hiring process.

2. Choose the Right Hiring Model

You can hire Android developers through different models:

Freelancers: Ideal for small projects with limited budgets.

In-House Developers: Best for long-term projects requiring constant updates.

Dedicated Development Teams: A cost-effective solution for comprehensive app development.

3. Evaluate Candidates Thoroughly

Conduct technical assessments, review portfolios, and check past client reviews before making a decision.

4. Ensure Strong Communication Skills

A good Android developer should have strong communication skills to ensure seamless collaboration.

5. Post-Development Support

Ensure the developers offer post-launch support to handle updates, bug fixes, and future enhancements.

Why Choose Sciflare Technologies to Hire Android App Developers?

Sciflare Technologies is a leading provider of Android app development services, offering tailored solutions to businesses worldwide. Our team of expert Android developers ensures that your application is built with cutting-edge technologies, high performance, and top-notch security.

What We Offer?

Experienced Developers with expertise in Java, Kotlin, and cross-platform frameworks.

Custom App Development tailored to meet your business goals.

Scalable and Secure Solutions for startups and enterprises.

Post-Development Support to ensure long-term success.

Final Thoughts

If you’re looking to build a feature-rich and scalable mobile application, it’s crucial to hire Android app developers who can bring your vision to life. By choosing skilled professionals, you improve your app's chances of success and stay ahead of the competition.

Looking for expert Android developers? Get in touch with Sciflare Technologies today!

0 notes

Text

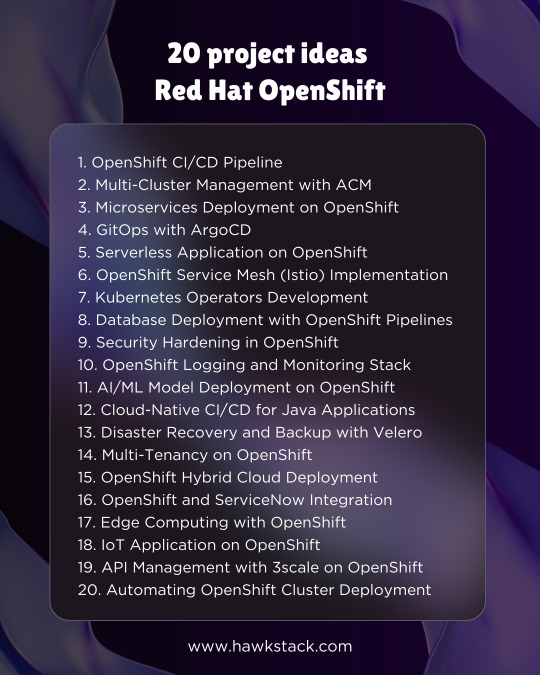

20 project ideas for Red Hat OpenShift

1. OpenShift CI/CD Pipeline

Set up a Jenkins or Tekton pipeline on OpenShift to automate the build, test, and deployment process.

2. Multi-Cluster Management with ACM

Use Red Hat Advanced Cluster Management (ACM) to manage multiple OpenShift clusters across cloud and on-premise environments.

3. Microservices Deployment on OpenShift

Deploy a microservices-based application (e.g., e-commerce or banking) using OpenShift, Istio, and distributed tracing.

4. GitOps with ArgoCD

Implement a GitOps workflow for OpenShift applications using ArgoCD, ensuring declarative infrastructure management.

5. Serverless Application on OpenShift

Develop a serverless function using OpenShift Serverless (Knative) for event-driven architecture.

6. OpenShift Service Mesh (Istio) Implementation

Deploy Istio-based service mesh to manage inter-service communication, security, and observability.

7. Kubernetes Operators Development

Build and deploy a custom Kubernetes Operator using the Operator SDK for automating complex application deployments.

8. Database Deployment with OpenShift Pipelines

Automate the deployment of databases (PostgreSQL, MySQL, MongoDB) with OpenShift Pipelines and Helm charts.

9. Security Hardening in OpenShift

Implement OpenShift compliance and security best practices, including Security Context Constraints (SCCs), RBAC, and image scanning.

10. OpenShift Logging and Monitoring Stack

Set up EFK (Elasticsearch, Fluentd, Kibana) or Loki for centralized logging and use Prometheus-Grafana for monitoring.

11. AI/ML Model Deployment on OpenShift

Deploy an AI/ML model using Red Hat OpenShift AI (built on the upstream Open Data Hub project) for real-time inference with TensorFlow or PyTorch.

12. Cloud-Native CI/CD for Java Applications

Deploy a Spring Boot or Quarkus application on OpenShift with automated CI/CD using Tekton or Jenkins.

13. Disaster Recovery and Backup with Velero

Implement backup and restore strategies using Velero for OpenShift applications running on different cloud providers.

14. Multi-Tenancy on OpenShift

Configure OpenShift multi-tenancy with RBAC, namespaces, and resource quotas for multiple teams.

15. OpenShift Hybrid Cloud Deployment

Deploy an application across on-prem OpenShift and cloud-based OpenShift (AWS, Azure, GCP) using OpenShift Virtualization.

16. OpenShift and ServiceNow Integration

Automate IT operations by integrating OpenShift with ServiceNow for incident management and self-service automation.

17. Edge Computing with OpenShift

Deploy OpenShift at the edge to run lightweight workloads on remote locations, using Single Node OpenShift (SNO).

18. IoT Application on OpenShift

Build an IoT platform using Kafka on OpenShift for real-time data ingestion and processing.

19. API Management with 3scale on OpenShift

Deploy Red Hat 3scale API Management to control, secure, and analyze APIs on OpenShift.

20. Automating OpenShift Cluster Deployment

Use Ansible and Terraform to automate the deployment of OpenShift clusters and configure infrastructure as code (IaC).

For more details www.hawkstack.com

#OpenShift #Kubernetes #DevOps #CloudNative #RedHat #GitOps #Microservices #CICD #Containers #HybridCloud #Automation

0 notes

Text

Top 5 Factors to Consider for eCommerce App Development

In today’s fast-paced digital world, eCommerce apps have revolutionized the way businesses operate. Whether you’re a startup or an established brand, having a robust mobile app is crucial for attracting customers and increasing sales. As mobile commerce continues to grow, investing in eCommerce app development has become a necessity rather than a luxury.

However, building a successful eCommerce app requires careful planning, the right technology, and a deep understanding of customer needs. From choosing the right eCommerce app development company in the USA to estimating the eCommerce app development cost, several factors play a significant role in ensuring the success of your app.

In this blog, we will discuss the top 5 factors to consider for eCommerce app development to help you make informed decisions and build a high-performing online store.

1. Choosing the Right Business Model

Before diving into eCommerce app development, you must determine the business model that best suits your brand and target audience.

Common eCommerce Business Models:

📌 Single Vendor Model: A business sells its own products directly to customers through the app. Example: Nike, Zara.

📌 Multi-Vendor Marketplace: A platform where multiple sellers list their products, and customers can choose from various options. Example: Amazon, eBay.

📌 B2B (Business-to-Business): A platform where businesses sell products or services to other businesses. Example: Alibaba.

📌 B2C (Business-to-Consumer): The most common model where businesses sell products directly to consumers. Example: Walmart, Flipkart.

📌 C2C (Consumer-to-Consumer): A marketplace where individuals sell products to other consumers. Example: Etsy, OLX.

Your business model will impact the features, development cost, and technology stack required for your app.

2. Essential eCommerce App Development Features

To ensure a seamless shopping experience, your eCommerce app must include features that enhance usability, boost engagement, and drive conversions.

Must-Have Features for an eCommerce App:

✅ User Registration & Profile Management – Easy sign-up via email, phone, or social media.

✅ Product Catalog & Categories – Well-structured product listings with high-quality images and descriptions.

✅ Advanced Search & Smart Filters – AI-powered search with sorting options for quick product discovery.

✅ Shopping Cart & Checkout – Seamless cart management and secure checkout process.

✅ Multiple Payment Gateways – Integration of credit cards, UPI, digital wallets, and Buy Now Pay Later (BNPL) options.

✅ Order Tracking & Notifications – Real-time tracking with delivery updates and push notifications.

✅ Wishlist & Favorites – Allow users to save products for later purchase.

✅ Customer Reviews & Ratings – User-generated feedback to build trust.

✅ Loyalty Programs & Discounts – Reward customers with points, cashback, and exclusive deals.

✅ AI-Powered Recommendations – Personalized product suggestions based on browsing history.

Adding advanced features like AR-based product previews, AI-driven chatbots, and voice search can enhance the shopping experience.

3. Selecting the Right Technology Stack

The success of your eCommerce app depends on the technology stack you choose. The right technologies ensure smooth performance, scalability, and security.

Technology Stack for eCommerce App Development

📱 For Android App Development:

Language: Kotlin, Java

Framework: Android SDK, Jetpack

Database: Firebase, PostgreSQL

📱 For iOS App Development:

Language: Swift, Objective-C

Framework: Xcode, SwiftUI

Database: Core Data, Firebase

📱 For Cross-Platform Development:

Frameworks: Flutter, React Native

Backend: Node.js, Python, PHP

Cloud Hosting: AWS, Google Cloud, Azure

A professional eCommerce app development company in the USA can help you choose the right tech stack based on your business requirements.

4. eCommerce App Development Cost Estimation

The eCommerce app development cost depends on several factors, including app complexity, features, design, and development team location.

Key Cost Factors:

💰 App Complexity: A basic eCommerce app with essential features will cost less than an advanced app with AI and AR features.

💰 Design & UI/UX: A well-designed app with an intuitive user interface requires more investment.

💰 Platform Choice: Developing for Android, iOS, or both impacts the overall cost.

💰 Third-Party Integrations: Payment gateways, shipping APIs, CRM, and chatbot integrations add to the cost.

💰 Development Team Location: Hiring an eCommerce app development company in the USA is generally more expensive than outsourcing to Asia or Eastern Europe.

Estimated Cost Breakdown

Development Phase: Estimated Cost (USD)

Planning & Research: $2,000 - $5,000

UI/UX Design: $5,000 - $15,000

Development: $15,000 - $50,000

Payment Integration: $5,000 - $10,000

Testing & QA: $5,000 - $10,000

Deployment & Marketing: $5,000 - $20,000

💰 Total Estimated Cost: $30,000 – $100,000+
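As a quick sanity check on the figures above, the per-phase ranges can be summed directly. The sketch below is only illustrative (the dictionary and function names are made up for this example); it shows that the listed phases add up to a range a little above the rounded total, so the total is best read as a ballpark figure.

```python
# Phase cost ranges in USD, copied from the breakdown above as (low, high).
PHASE_COSTS = {
    "Planning & Research": (2_000, 5_000),
    "UI/UX Design": (5_000, 15_000),
    "Development": (15_000, 50_000),
    "Payment Integration": (5_000, 10_000),
    "Testing & QA": (5_000, 10_000),
    "Deployment & Marketing": (5_000, 20_000),
}


def total_range(phases):
    """Return the (low, high) totals across all phases."""
    low = sum(lo for lo, _ in phases.values())
    high = sum(hi for _, hi in phases.values())
    return low, high


low, high = total_range(PHASE_COSTS)
print(f"Sum of phase estimates: ${low:,} - ${high:,}")
```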

For an accurate estimate, consult a top mobile app development company that specializes in eCommerce app development.

5. Security & Compliance Considerations

Security is a critical aspect of eCommerce app development as users trust your platform with their personal and financial data.

Key Security Features:

🔒 SSL/TLS Encryption: Encrypts data in transit between the app and your servers.

🔒 Two-Factor Authentication: Adds an extra layer of security for user logins.

🔒 Data Encryption: Protects sensitive customer information at rest.

🔒 Secure Payment Gateways: Ensure compliance with PCI DSS for safe transactions.

🔒 Regular Security Audits: Identify and fix vulnerabilities before they are exploited.
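To make the two-factor item more concrete, here is a minimal sketch of one common second factor: time-based one-time passwords (TOTP, RFC 6238), the scheme used by most authenticator apps. It uses only the Python standard library. The function names and the one-step drift window are assumptions for illustration; a production app would normally rely on a vetted library and a securely stored per-user secret.

```python
import hashlib
import hmac
import struct
import time


def totp(secret: bytes, at_time=None, step: int = 30, digits: int = 6) -> str:
    """Compute a time-based one-time password (RFC 6238, HMAC-SHA1)."""
    if at_time is None:
        at_time = time.time()
    counter = int(at_time) // step  # number of time steps since the epoch
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset (RFC 4226)
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_totp(secret: bytes, submitted: str, at_time=None,
                step: int = 30, window: int = 1) -> bool:
    """Accept codes from the current step and `window` steps either side."""
    if at_time is None:
        at_time = time.time()
    for drift in range(-window, window + 1):
        expected = totp(secret, at_time + drift * step, step=step)
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(expected, submitted):
            return True
    return False
```

The server would call `verify_totp` with the user's stored secret after the password check succeeds; allowing one step of drift tolerates small clock differences between phone and server.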

A custom eCommerce app development company ensures your app complies with GDPR, CCPA, and other industry regulations to maintain data security and privacy.

Conclusion

Developing an eCommerce app requires a strategic approach, the right features, and robust security measures. Whether you are a startup or an established brand, considering these top 5 factors can help you create a high-performing, scalable, and secure eCommerce mobile app.

At IMG Global Infotech, we specialize in eCommerce app development and offer tailored solutions to meet your business needs. As a leading eCommerce app development company in the USA, we provide end-to-end development services, from concept to launch.

📩 Ready to build your eCommerce app? Contact us today! 🚀

Best Android App Development Company in Lucknow

In today's fast-paced digital world, having a well-designed mobile app is essential for businesses to stay ahead of the competition.

Whether you run a startup or a large enterprise, an Android app can significantly enhance customer engagement and streamline business operations. If you're searching for the best Android app development company in Lucknow, look no further than Jamtech Technologies.

Why Choose an Android App for Your Business?

With Android dominating the global smartphone market, investing in an Android app provides several benefits:

✔ Wider Audience Reach – Android powers over 70% of smartphones worldwide.

✔ Cost-Effective Development – Open-source platform with affordable development costs.

✔ High Customization – Flexible UI/UX options for personalized user experiences.

✔ Seamless Integration – Easy compatibility with Google services and third-party APIs.

✔ Better ROI – More downloads and user engagement mean increased revenue opportunities.

Jamtech Technologies – Leading Android App Development Company in Lucknow

Jamtech Technologies is recognized as one of the top Android app development companies in Lucknow, providing innovative and customized mobile app solutions. With a team of skilled developers and designers, Jamtech specializes in crafting user-friendly and feature-rich Android applications tailored to diverse industry needs.

Services Offered by Jamtech Technologies

As a trusted Android app development company, Jamtech offers a wide range of services, including:

1. Custom Android App Development

We design and develop custom Android applications tailored to your business needs, ensuring high performance and seamless user experience.

2. Enterprise Android App Development

Our enterprise-grade mobile apps help businesses optimize their processes, improve employee productivity, and enhance customer interactions.

3. E-Commerce App Development

We create robust e-commerce mobile applications with secure payment gateways, real-time tracking, and personalized shopping experiences.

4. Cross-Platform App Development

We build apps that run smoothly on multiple platforms, ensuring a seamless experience for both Android and iOS users.

5. UI/UX Design for Android Apps

Our expert designers create intuitive and visually appealing Android app UI/UX designs to enhance user engagement.

6. Android App Maintenance & Support

We offer post-launch app maintenance and support services to ensure your application runs efficiently and stays updated with the latest trends.

Industries We Serve

As a leading Android app development company in Lucknow, Jamtech Technologies caters to various industries, including:

E-commerce & Retail

Healthcare & Fitness

Education & E-learning

Real Estate

Travel & Hospitality

Finance & Banking

Food & Delivery Services

Technologies We Use for Android App Development

Jamtech Technologies leverages the latest technologies and tools to build high-performance Android applications:

Languages: Java, Kotlin

Frameworks: React Native, Flutter

Database: Firebase, SQLite, MySQL

Cloud Services: AWS, Google Cloud

Development Tools: Android Studio, Android SDK

Why Choose Jamtech Technologies?

Here’s why we are the best Android app development company in Lucknow:

✅ Expert Team – Skilled developers with years of experience.

✅ Customized Solutions – Tailored apps to meet unique business needs.

✅ Affordable Pricing – High-quality development at cost-effective rates.

✅ On-Time Delivery – Efficient project management for timely completion.

✅ End-to-End Support – From development to deployment and maintenance.

Conclusion

Finding the best Android app development company in Lucknow is crucial for businesses looking to build a strong mobile presence. Jamtech Technologies stands out with its expert team, cutting-edge technologies, and customer-centric approach. Whether you need a new Android app or want to improve an existing one, Jamtech is your go-to partner for innovative and result-driven mobile app solutions.

The Role of the AWS Software Development Kit (SDK) in Modern Application Development

The Amazon Web Services (AWS) Software Development Kit (SDK) serves as a fundamental tool for developers aiming to create robust, scalable, and secure applications using AWS services. By streamlining the complexities of interacting with AWS's extensive ecosystem, the SDK enables developers to prioritize innovation over infrastructure challenges.

Understanding AWS SDK

The AWS SDK provides a comprehensive suite of software tools, libraries, and documentation designed to facilitate programmatic interaction with AWS services. By abstracting the intricacies of direct HTTP requests, it offers a more intuitive and efficient interface for tasks such as instance creation, storage management, and database querying.

The AWS SDK is compatible with multiple programming languages, including Python (Boto3), Java, JavaScript (Node.js and browser), .NET, Ruby, Go, PHP, and C++. This broad compatibility ensures that developers across diverse technical environments can seamlessly integrate AWS features into their applications.

Key Features of AWS SDK

Seamless Integration: The AWS SDK offers pre-built libraries and APIs designed to integrate effortlessly with AWS services. Whether provisioning EC2 instances, managing S3 storage, or querying DynamoDB, the SDK simplifies these processes with clear, efficient code.

Multi-Language Support: Supporting a range of programming languages, the SDK enables developers to work within their preferred coding environments. This flexibility facilitates AWS adoption across diverse teams and projects.

Robust Security Features: Security is a fundamental aspect of the AWS SDK, with features such as automatic API request signing, IAM integration, and encryption options ensuring secure interactions with AWS services.

High-Level Abstractions: To reduce repetitive coding, the SDK provides high-level abstractions for various AWS services. For instance, using Boto3, developers can interact with S3 objects directly without dealing with low-level request structures.

Support for Asynchronous Operations: The SDK enables asynchronous programming, allowing non-blocking operations that enhance the performance and responsiveness of high-demand applications.
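As an illustration of the high-level abstractions mentioned above, the following sketch lists every object key under a prefix in an S3 bucket with Boto3. It relies on the `list_objects_v2` paginator so the caller never handles continuation tokens by hand. The helper names are made up for this example, and the client is passed in as a parameter so the logic can be exercised without AWS credentials.

```python
def make_s3_client():
    """Create a real S3 client (needs `pip install boto3` and AWS credentials)."""
    import boto3  # imported lazily so list_keys can be tested without boto3
    return boto3.client("s3")


def list_keys(s3_client, bucket, prefix=""):
    """Return every object key under `prefix`, following pagination."""
    paginator = s3_client.get_paginator("list_objects_v2")
    keys = []
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # Pages with no matching objects have no "Contents" entry.
        for obj in page.get("Contents", []):
            keys.append(obj["Key"])
    return keys
```

A call might look like `list_keys(make_s3_client(), "example-bucket", "reports/")`, where the bucket name and prefix are placeholders.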

Benefits of Using AWS SDK

Streamlined Development: By offering pre-built libraries and abstractions, the AWS SDK significantly reduces development overhead. Developers can integrate AWS services efficiently without navigating complex API documentation.

Improved Reliability: Built-in features such as error handling, request retries, and API request signing ensure reliable and robust interactions with AWS services.

Cost Optimization: The SDK abstracts infrastructure management tasks, allowing developers to focus on optimizing applications for performance and cost efficiency.

Comprehensive Documentation and Support: AWS provides detailed documentation, tutorials, and code examples, catering to developers of all experience levels. Additionally, an active developer community offers extensive resources and guidance for troubleshooting and best practices.
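The request retries mentioned under Improved Reliability are handled inside the SDK, so application code rarely needs to write them. To show the underlying idea only, here is a small standard-library sketch of retrying with exponential backoff and jitter. It is not the SDK's own implementation, and the function name and default values are assumptions.

```python
import random
import time


def call_with_retries(operation, max_attempts=4, base_delay=0.1, sleep=time.sleep):
    """Call `operation`, retrying on any exception with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            # Full jitter: wait a random time up to base_delay * 2^(attempt - 1).
            sleep(random.uniform(0, base_delay * 2 ** (attempt - 1)))
```

With Boto3 the equivalent behaviour is usually just configured, for example by passing `botocore.config.Config(retries={"max_attempts": 5, "mode": "standard"})` when creating a client.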

Common Use Cases

Cloud-Native Development: Streamline the creation of serverless applications with AWS Lambda and API Gateway using the SDK.

Data-Driven Applications: Build data pipelines and analytics platforms by integrating services like Amazon S3, RDS, or Redshift.

DevOps Automation: Automate infrastructure management tasks such as resource provisioning and deployment updates with the SDK.

Machine Learning Integration: Incorporate machine learning capabilities into applications by leveraging AWS services such as SageMaker and Rekognition.

Conclusion

The AWS Software Development Kit is an indispensable tool for developers aiming to fully leverage the capabilities of AWS services. With its versatility, user-friendly interface, and comprehensive features, it serves as a critical resource for building scalable and efficient applications. Whether you are a startup creating your first cloud-native solution or an enterprise seeking to optimize existing infrastructure, the AWS SDK can significantly streamline the development process and enhance application functionality.

Explore the AWS SDK today to unlock new possibilities in cloud-native development.

AWS Releases EC2 R8gd, M8gd & C8gd Instances with Graviton4

Since launching AWS Graviton processors in 2018, Amazon has kept improving them to make customers' cloud applications faster. Following the success of Graviton3-based instances, Amazon is introducing three new Amazon Elastic Compute Cloud (Amazon EC2) instance families: compute optimised (C8gd), general purpose (M8gd), and memory optimised (R8gd). These instances use NVMe SSD local storage and AWS Graviton4 processors. Compared with comparable Graviton3-based instances, they deliver up to 30% better compute performance, up to 40% higher performance for I/O-intensive database workloads, and up to 20% faster query results for real-time data analytics.

Built on AWS Graviton4, these instances suit containerised and microservices-based applications written in C/C++, Rust, Go, Java, Python, .NET Core, Node.js, Ruby, and PHP. Compared with Graviton3, Graviton4 processors are up to 30% faster for web applications, 40% faster for databases, and 45% faster for large Java applications.

Graviton4 processor innovations

New Amazon EC2 instances with NVMe SSD local storage and AWS Graviton4 processors offer enhanced performance and functionality thanks to many technological advances. Among them:

Improved computation performance: Graviton4-based instances outperform AWS Graviton3-based instances by 30%.

They perform 40% better than Graviton3 in I/O-intensive database workloads.

Faster data analytics: These instances beat Graviton3-based instances by 20% in I/O-intensive real-time data analytics query results.

Larger instance sizes: up to 192 vCPUs, three times as many as Graviton3-based instances.

Up to 1.5 TiB of memory, three times that of Graviton3-based instances.

Instances have three times the local storage (up to 11.4TB of NVMe SSD storage). Performance is considerably improved with NVMe-based SSD local storage.

Memory bandwidth is 75% higher than in the Graviton3-based predecessors.

Instances have double the L2 cache compared to the previous generation.

Network bandwidth of up to 50 Gbps is a significant improvement over Graviton3 instances.

Increased Amazon EBS capacity: Amazon Elastic Block Store (Amazon EBS) bandwidth of up to 40 Gbps is another enhancement.

Adjustable bandwidth allocation: EC2 instance bandwidth weighting now lets customers alter network and Amazon EBS capacity by up to 25%, increasing flexibility.

Two bare metal sizes—metal-24xl and metal-48xl—allow direct access to physical resources and aid with specific workloads.

These instances, built on the AWS Nitro System, offload networking, storage, and CPU virtualisation to specialist hardware and software to boost speed and security.

All Graviton4 CPUs' high-speed physical hardware interfaces are encrypted for added protection.

The AWS Graviton4-based instances are ideal for containerised and micro-services-based applications, as well as applications written in popular programming languages and storage-intensive Linux workloads. Compared to Graviton3, Graviton4 processors execute web apps, databases, and large Java programs faster.

AWS Graviton4 processors and instance architecture improvements enable performance and new capabilities for many cloud applications.

Specifications for instances

The instances come in two bare metal sizes, metal-24xl and metal-48xl, so you can right-size instances and run workloads that need direct access to physical resources. The AWS Nitro System, which powers these instances, offloads networking, storage, and CPU virtualisation to dedicated hardware and software to increase workload security and performance. All of the Graviton4 processors' high-speed physical hardware interfaces are encrypted, increasing security.

Cost and availability

M8gd, C8gd, and R8gd instances are now available in the US East (N. Virginia, Ohio) and US West (Oregon) Regions. They can be purchased as On-Demand Instances, Spot Instances, Dedicated Instances, or Dedicated Hosts, or through Savings Plans.

Get started now

You can launch M8gd, C8gd, and R8gd instances in supported Regions today using the AWS Management Console, AWS CLI, or AWS SDKs. Browse the Graviton resources to start migrating your applications to Graviton instance types.
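For the SDK route, a launch could be sketched with Boto3 roughly as follows. This is a minimal illustration, not an official recipe: the helper names, the default size `m8gd.large`, and the choice of `us-east-1` are assumptions, and the AMI ID must be one you supply yourself (Graviton instances need an arm64 image).

```python
def make_ec2_client(region="us-east-1"):
    """Create a real EC2 client (needs `pip install boto3` and AWS credentials)."""
    import boto3  # imported lazily so launch_instance can be tested without boto3
    return boto3.client("ec2", region_name=region)


def launch_instance(ec2_client, image_id, instance_type="m8gd.large"):
    """Launch a single instance and return its instance ID."""
    response = ec2_client.run_instances(
        ImageId=image_id,            # must be an arm64 AMI for Graviton
        InstanceType=instance_type,  # assumed size name; pick one offered in your Region
        MinCount=1,
        MaxCount=1,
    )
    return response["Instances"][0]["InstanceId"]
```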

Building a Scalable Web App with AWS Elastic Beanstalk

AWS Elastic Beanstalk simplifies the deployment and management of scalable web applications.

It abstracts the complexities of infrastructure management, allowing developers to focus on coding while AWS handles the heavy lifting.

Here’s a brief overview:

What is AWS Elastic Beanstalk?

AWS Elastic Beanstalk is a Platform-as-a-Service (PaaS) offering that lets developers deploy and scale web applications and services.

It supports multiple programming languages, including Java, Python, Node.js, PHP, Ruby, Go, and .NET.

Key Features:

Automated Deployment: Upload your code, and Elastic Beanstalk automatically handles provisioning resources, load balancing, and deployment.

Auto-Scaling: Elastic Beanstalk adjusts the application capacity dynamically based on traffic.

Monitoring and Logging: Integrated tools provide insights into app health and performance via AWS CloudWatch.

Environment Control: You retain full access to the AWS resources powering your application.

Why Use Elastic Beanstalk for Scalable Apps?

Simplifies Scalability: Built-in auto-scaling ensures your app can handle traffic spikes efficiently.

Time-Saving: Focus on coding while Elastic Beanstalk manages the backend.

Cost-Effective: Pay only for the AWS resources you use.

Easy Integration: Works seamlessly with other AWS services like RDS, S3, and IAM.

Use Cases:

Launching and managing e-commerce platforms.

Hosting dynamic content-heavy websites.

Scaling RESTful APIs for mobile or web apps.

Steps to Build and Deploy a Scalable Web App:

Step 1: Prepare your application code and choose a supported language/platform.

Step 2: Upload your code to Elastic Beanstalk through the AWS Management Console, CLI, or SDKs.

Step 3: Configure environment settings, including instance types and scaling policies.

Step 4: Monitor application health and logs to ensure smooth operation.

Step 5: Scale resources up or down based on traffic demand.
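Steps 2, 3, and 5 above can also be scripted with the SDK. The sketch below uses Boto3's Elastic Beanstalk client and assumes the application, the environment, and a source bundle already uploaded to S3 exist; every name passed in is a placeholder. Deployment and scaling are kept as two separate calls because Elastic Beanstalk rejects an `update_environment` request that changes both the version and the configuration at once.

```python
def make_eb_client():
    """Create a real client (needs `pip install boto3` and AWS credentials)."""
    import boto3  # imported lazily so the helpers can be tested without boto3
    return boto3.client("elasticbeanstalk")


def deploy_version(eb_client, app_name, env_name, version_label, bucket, key):
    """Register a source bundle stored in S3 and deploy it to an environment."""
    eb_client.create_application_version(
        ApplicationName=app_name,
        VersionLabel=version_label,
        SourceBundle={"S3Bucket": bucket, "S3Key": key},
    )
    return eb_client.update_environment(
        EnvironmentName=env_name,
        VersionLabel=version_label,
    )


def set_scaling_limits(eb_client, env_name, min_instances, max_instances):
    """Set the Auto Scaling group's minimum and maximum instance counts."""
    return eb_client.update_environment(
        EnvironmentName=env_name,
        OptionSettings=[
            {"Namespace": "aws:autoscaling:asg", "OptionName": "MinSize",
             "Value": str(min_instances)},
            {"Namespace": "aws:autoscaling:asg", "OptionName": "MaxSize",
             "Value": str(max_instances)},
        ],
    )
```

In practice you would wait for the environment to return to a ready state between the two updates; that polling is left out here to keep the sketch short.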

Benefits for Developers:

Elastic Beanstalk allows developers to innovate faster by eliminating infrastructure concerns, enabling rapid application iteration, and ensuring high availability with minimal manual intervention.

Elastic Beanstalk is a powerful tool for developers seeking to build reliable, scalable, and efficient web applications with minimal operational overhead.

Maximize Your Career Potential with the Top 10 Development Certifications

Unlock your potential and take your software development career to new heights with the top 10 software development certifications for success. In today’s ever-evolving technology landscape, certifications have become essential for staying ahead of the competition and enhancing your skills. Whether you’re a beginner looking to kickstart your career or an experienced professional wanting to stay relevant, these certifications will help you stand out in the industry.

From popular programming languages like Python and Java to specialized domains like cybersecurity and cloud computing, these certifications cover a wide range of crucial skills in the software development field. Not only do these certifications validate your expertise in specific areas, but they also provide you with the knowledge and confidence to tackle complex projects.

In this article, we will explore the top 10 software development certifications that industry experts highly recommend. Discover the benefits of each certification, the skills they focus on, and the career opportunities they can unlock. Don’t miss out on the chance to level up your career and open doors to endless possibilities in the world of software development. Get ready to unlock your potential and become a sought-after professional in this thriving industry.

1. Google Professional Cloud Developer

As more organizations migrate to Google Cloud, there is a growing demand for developers proficient in building and deploying applications in this environment. The Google Professional Cloud Developer certification is designed for individuals who can build scalable and highly available cloud applications on the Google Cloud Platform (GCP). It covers a range of topics, including application development, debugging, and security practices within GCP.

This certification is highly sought after by employers looking for developers capable of leveraging Google Cloud services like Cloud Functions, Google Kubernetes Engine, and App Engine to deliver cloud-native solutions. Earning this certification demonstrates your ability to design and implement secure and efficient applications on Google Cloud.

● Target Audience: Cloud developers, DevOps engineers

● Difficulty Level: Intermediate to Advanced

2. AWS Certified Developer — Associate

● Link: AWS Certified Developer

Amazon Web Services (AWS) is one of the most widely used cloud platforms globally, and demand for AWS expertise continues to rise across industries. This certification validates your ability to deploy, debug, and develop cloud-native applications using core AWS services.

Achieving this certification requires a solid understanding of AWS SDKs, security best practices, and troubleshooting methodologies. You will be tested on real-world scenarios involving cloud applications, making this certification an excellent choice for developers seeking to enhance their cloud development credentials.

● Target Audience: Cloud developers, DevOps engineers

● Difficulty Level: Intermediate

3. AI CERTs Certified AI+ Developer

● Link: AI CERTs Certified AI+ Developer

With AI’s rapid expansion, developers with expertise in AI and machine learning are in high demand. The AI CERTs Certified AI+ Developer certification is an industry-recognized credential that validates your proficiency in designing, building, and deploying AI-driven solutions.

This certification focuses on key AI concepts, including machine learning algorithms, neural networks, natural language processing, and computer vision. It is an excellent choice for developers looking to enter the AI space or for those already working in AI who want to showcase their expertise and commitment to staying at the forefront of this rapidly advancing field.

● Target Audience: AI developers, machine learning engineers, data scientists

Use the coupon code NEWUSERS25 to get 25% OFF on AI CERT’s certifications. Visit this link to explore all the courses and enroll today.

4. Microsoft Certified: Azure Developer Associate

● Link: Azure Developer Associate

Microsoft Azure continues to be a dominant force in the cloud computing space. The Azure Developer Associate certification is ideal for developers working with Azure services and looking to prove their skills in building and maintaining cloud applications. This certification validates your expertise in developing Azure compute solutions, implementing Azure security, and integrating Azure services into applications.

With the increasing adoption of Azure by businesses, this certification is highly valuable for developers looking to align their skills with enterprise cloud computing trends. The certification covers both foundational and advanced topics, such as developing Azure storage, managing cloud resources, and troubleshooting and optimizing performance.

● Target Audience: Cloud developers, software engineers

● Difficulty Level: Intermediate

5. Certified Kubernetes Administrator (CKA)

● Link: Certified Kubernetes Administrator

As cloud-native applications gain prominence, Kubernetes has emerged as a leading container orchestration platform. The demand for professionals skilled in Kubernetes management has seen exponential growth. The Certified Kubernetes Administrator (CKA) certification, provided by the Cloud Native Computing Foundation (CNCF), is designed to validate your ability to install, configure, and manage Kubernetes clusters in production environments. It encompasses essential topics such as application lifecycle management, troubleshooting, networking, and security.

Earning the CKA certification is a significant achievement for any developer or DevOps engineer working in cloud environments. As businesses increasingly adopt Kubernetes for containerized application management, this certification ensures that you possess the expertise to maintain reliability, scalability, and efficiency.

● Target Audience: Developers, DevOps engineers, Cloud engineers

● Difficulty Level: Intermediate to Advanced

6. Oracle Certified Professional, Java SE 11 Developer

● Link: Oracle Certified Professional

Java remains a cornerstone of enterprise application development, and proficiency in this language is essential for many developers. The Oracle Certified Professional, Java SE 11 Developer certification is a robust credential that verifies your expertise in using Java to build complex and efficient applications. This certification covers a broad range of Java concepts, including object-oriented programming, data structures, concurrency, and functional programming with Java streams.

Whether you’re a backend developer or an enterprise software engineer, this certification demonstrates your command of Java and your ability to develop, debug, and optimize Java applications for a variety of use cases. It’s an excellent choice for developers looking to advance their careers in Java development.

● Target Audience: Java developers, backend developers

7. Certified Scrum Developer (CSD)

● Link: Certified Scrum Developer (CSD)

Agile development methodologies have become the norm in modern software development, and Scrum is one of the most widely adopted frameworks. The Certified Scrum Developer (CSD) certification is geared towards developers who are part of Scrum teams and wish to gain a deeper understanding of Scrum principles, Agile practices, and collaborative engineering methods.

The CSD certification ensures that you possess the skills necessary to contribute effectively in an Agile development environment. You’ll gain knowledge in continuous integration, test-driven development (TDD), and other Agile engineering practices. This certification is ideal for developers looking to improve their collaboration and efficiency within Scrum teams.

● Target Audience: Agile developers, Scrum team members

● Difficulty Level: Intermediate

8. PCEP — Certified Entry-Level Python Programmer

● Link: PCEP — Certified Entry-Level Python Programmer

Python’s versatility and simplicity make it one of the most popular programming languages globally. It is used across various domains, from web development and automation to data science and AI. The PCEP certification serves as a foundational credential for beginners looking to validate their understanding of Python basics.

This certification covers essential Python programming concepts, including data types, control flow, functions, and error handling. The PCEP certification is a great starting point for aspiring developers who want to build a solid foundation in Python before advancing to more complex topics like web frameworks or machine learning.

● Target Audience: Beginner Python developers

● Difficulty Level: Beginner

9. Red Hat Certified Specialist in OpenShift Application Development

The Red Hat Certified Specialist in OpenShift Application Development certification is designed for developers who want to build and deploy containerized applications using Red Hat OpenShift. This certification is ideal for those looking to enhance their skills in managing cloud-native applications and leveraging the power of Kubernetes and OpenShift.

Program Overview:

This certification focuses on developing, managing, and deploying applications in a Red Hat OpenShift environment. Participants will learn how to create, configure, and troubleshoot applications in containers using OpenShift. The program covers essential topics such as deploying multi-container applications, managing storage, and integrating DevOps practices to streamline the application development lifecycle.

Key Benefits:

● Containerization Skills: Gain expertise in building and deploying containerized applications using OpenShift.

● Kubernetes Knowledge: Learn to manage applications using Kubernetes in an enterprise environment.

● DevOps Integration: Understand how to integrate DevOps practices with OpenShift to optimize the application development process.

Ideal For:

● Application Developers

● DevOps Engineers

● Cloud Architects

● Duration: Self-paced

10. Salesforce Certified Platform Developer

● Link: Salesforce Certified Platform Developer

Salesforce remains a leading customer relationship management (CRM) platform, with many businesses relying on custom Salesforce applications for their operations. The Salesforce Certified Platform Developer I certification is designed for developers who want to build, deploy, and manage custom applications on the Salesforce platform. This certification validates your ability to use Apex programming, Visualforce, and Lightning components to create robust CRM solutions.

For developers interested in building career expertise in Salesforce development, this certification is an essential credential. It helps you stand out in a field where skilled Salesforce developers are highly sought after, and it provides a pathway to more advanced Salesforce certifications.

● Target Audience: Salesforce developers, CRM developers

● Difficulty Level: Intermediate

Conclusion

As technology continues to advance at a breakneck pace, earning development certifications has become an essential step for professionals looking to stay competitive in the job market and keep their skills up to date. The certifications listed above cover a wide range of domains, from cloud computing and AI to security and Agile methodologies. Whether you’re looking to deepen your expertise in a particular area or broaden your skill set across multiple domains, there’s a certification for you.

Selecting the right certification can open doors to new opportunities, increased earning potential, and career advancement. As you consider your options, think about your long-term career goals and the areas of technology that excite you the most. Investing in these certifications not only shows your commitment to continuous learning but also positions you as a leader in your field, ready to tackle the challenges and innovations of the future.

By staying current with the latest certifications, you can ensure that you’re well-prepared to meet the demands of an ever-evolving industry and seize emerging opportunities in the world of software development.
