#kubernetes version

VMware vSphere 8.0 Update 2 New Features and Download

VMware consistently shows its commitment to innovation and to staying at the forefront of technology. In a recent technical overview, Fai La Molari, Senior Technical Marketing Architect at VMware, walked us through the latest advancements and enhancements in vSphere Plus for cloud-connected services and in vSphere 8 Update 2. Here’s a glimpse into VMware vSphere 8.0 Update 2 new…

#NVMe disk support in vSphere#Quality of Service for GPU workloads#Supervisor Cluster deployments#Tanzu Kubernetes with vSphere#vGPU defragmentation in DRS#VM management enhancements#vSphere 8 update 2 features#vSphere and containerized workload support#vSphere DevOps integrations#vSphere VM hardware version 21

A3 Ultra VMs With NVIDIA H200 GPUs Pre-launch This Month

Strong infrastructure advancements for an AI-first future

To improve performance, usability, and cost-effectiveness for customers, Google Cloud made improvements across the AI Hypercomputer stack this year. At the App Dev & Infrastructure Summit, Google Cloud announced the following:

Trillium, Google’s sixth-generation TPU, is currently available for preview.

Next month, A3 Ultra VMs with NVIDIA H200 Tensor Core GPUs will be available for preview.

Google’s new, highly scalable clustering system, Hypercompute Cluster, will be accessible beginning with A3 Ultra VMs.

C4A virtual machines (VMs), based on Axion, Google’s custom Arm-based processors, are now generally available.

AI workload-focused enhancements to Titanium, Google Cloud’s host offload capability, and to Jupiter, its data center network.

Hyperdisk ML, Google Cloud’s AI/ML-focused block storage service, is now generally available.

Trillium: A new era of TPU performance

TPUs power Google’s most sophisticated models, such as Gemini, popular Google services such as Maps, Photos, and Search, and scientific breakthroughs such as AlphaFold 2, whose creators were just awarded a Nobel Prize. Trillium ushers in a new era of TPU performance, and we are happy to announce that Google Cloud customers can now preview Trillium, our sixth-generation TPU.

Taking advantage of NVIDIA Accelerated Computing to broaden perspectives

Google Cloud also continues to invest in its partnership and capabilities with NVIDIA, combining the best of its data center, infrastructure, and software expertise with the NVIDIA AI platform, as exemplified by A3 and A3 Mega VMs powered by NVIDIA H100 Tensor Core GPUs.

Google Cloud announced that the new A3 Ultra VMs featuring NVIDIA H200 Tensor Core GPUs will be available on Google Cloud starting next month.

A3 Ultra VMs offer a notable performance improvement over earlier generations. They are built on servers with the new Titanium ML network adapter, which builds on NVIDIA ConnectX-7 network interface cards (NICs) and is tailored to deliver a secure, high-performance cloud experience for AI workloads. Combined with Google’s datacenter-wide, 4-way rail-aligned network, A3 Ultra VMs provide non-blocking 3.2 Tbps of GPU-to-GPU traffic using RDMA over Converged Ethernet (RoCE).

In contrast to A3 Mega, A3 Ultra provides:

Double the GPU-to-GPU networking bandwidth, supported by Google’s Jupiter data center network and Google Cloud’s Titanium ML network adapter.

Up to 2x higher LLM inference performance, thanks to nearly twice the memory capacity and 1.4x the memory bandwidth.

The ability to scale to tens of thousands of GPUs in a dense cluster, with performance optimizations for demanding AI and HPC workloads.
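As a rough check on the memory figures above, the ratios can be recomputed from NVIDIA's published per-GPU specifications. This is a sketch under assumptions: the numbers below (H100 SXM, used by A3 Mega: 80 GB HBM3 at 3.35 TB/s; H200: 141 GB HBM3e at 4.8 TB/s) come from NVIDIA's spec sheets, not from this post.

```python
# Per-GPU figures from NVIDIA's public spec sheets (assumed here; not stated in the post).
H100 = {"memory_gb": 80, "bandwidth_tb_s": 3.35}   # GPU behind A3 Mega
H200 = {"memory_gb": 141, "bandwidth_tb_s": 4.8}   # GPU behind A3 Ultra


def ratio(key: str) -> float:
    """How many times larger the H200 figure is than the H100 figure."""
    return H200[key] / H100[key]


capacity_ratio = ratio("memory_gb")        # "almost twice the memory capacity"
bandwidth_ratio = ratio("bandwidth_tb_s")  # "1.4 times the memory bandwidth"
print(f"capacity: {capacity_ratio:.2f}x, bandwidth: {bandwidth_ratio:.2f}x")
# capacity: 1.76x, bandwidth: 1.43x
```

The capacity ratio of about 1.76x is what "almost twice" refers to; the "up to 2x" inference figure is Google's own claim and will vary with the model and serving setup.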

Google Kubernetes Engine (GKE), which offers an open, portable, extensible, and highly scalable platform for large-scale training and AI workloads, will also offer A3 Ultra VMs.

Hypercompute Cluster: Simplify and expand clusters of AI accelerators

It’s not just about individual accelerators or virtual machines, though. With AI and HPC workloads, you have to deploy, maintain, and optimize a huge number of AI accelerators along with the networking and storage that go with them, which can be difficult and time-consuming. That is why Google Cloud is introducing Hypercompute Cluster, which simplifies provisioning workloads and infrastructure, as well as the ongoing operation of AI supercomputers with tens of thousands of accelerators.

At its core, Hypercompute Cluster integrates Google Cloud’s most advanced AI infrastructure technologies, letting you deploy and operate large numbers of accelerators as a single, seamless unit. Its performance and resilience features, including targeted workload placement, dense resource co-location with ultra-low-latency networking, and sophisticated maintenance controls that reduce workload disruptions, let you run your most demanding AI and HPC workloads with confidence.

For dependable, repeatable deployments, you can use pre-configured and validated templates to set up a Hypercompute Cluster with a single API call. These include containerized software with orchestration (e.g., GKE, Slurm), frameworks and reference implementations (e.g., JAX, PyTorch, MaxText), and well-known open models such as Gemma2 and Llama3. Each pre-configured template is available as part of the AI Hypercomputer architecture and has been validated for efficiency and performance, so you can focus on business innovation.

Hypercompute Cluster will become available next month, starting with A3 Ultra VMs.

An early look at the NVIDIA GB200 NVL72

Google Cloud is also looking forward to the advances made possible by the NVIDIA GB200 NVL72 and will share more details soon. In the meantime, Google has previewed the racks it is building to bring the performance advantages of the NVIDIA Blackwell platform to Google Cloud’s advanced, environmentally friendly data centers early next year.

Redefining CPU efficiency and performance with Google Axion Processors

Even though TPUs and GPUs excel at specialized jobs, CPUs are a cost-effective choice for a wide range of general-purpose workloads, and they are often used alongside AI workloads to build complex applications. At Google Cloud Next ’24, Google announced Axion Processors, its first custom Arm-based CPUs designed for the data center. Google Cloud customers can now use C4A virtual machines, the first Axion-based VM series, which offer up to 10% better price-performance than the newest Arm-based instances from other leading cloud providers.

Additionally, compared with current-generation x86-based instances, C4A offers up to 65% better price-performance and up to 60% better energy efficiency for general-purpose workloads such as media processing, AI inferencing applications, web and app servers, containerized microservices, open-source databases, in-memory caches, and data analytics engines.

Titanium and Jupiter Network: Making AI possible at the speed of light

Titanium, the system of offload technologies that underpins Google’s infrastructure, has been enhanced to support AI workloads. Titanium frees up more compute and memory for your applications by reducing host processing overhead through a combination of on-host and off-host offloads. However, while Titanium’s core capabilities apply to AI infrastructure, AI workloads have distinct accelerator-to-accelerator performance requirements.

To meet these demands, Google has introduced a new Titanium ML network adapter, which builds on NVIDIA ConnectX-7 NICs to add further support for virtualization, traffic encryption, and VPCs. Combined with Google’s 4-way rail-aligned data center network, the system delivers non-blocking 3.2 Tbps of GPU-to-GPU traffic over RoCE, along with best-in-class security and infrastructure management.

Google’s Jupiter optical circuit switching network fabric and its updated data center network significantly expand Titanium’s capabilities. With native 400 Gb/s link rates and a total bisection bandwidth of 13.1 Pb/s (a practical bandwidth metric that reflects how one half of the network can connect to the other), Jupiter could handle a video conversation for every person on Earth at the same time. In order to meet the increasing demands of AI computation, this enormous scale is essential.
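The "video call for every person on Earth" comparison can be sanity-checked with simple division. The world-population figure (about 8.1 billion) is an assumption added here, not a number from the post.

```python
BISECTION_BPS = 13.1e15    # 13.1 Pb/s total bisection bandwidth, from the post
WORLD_POPULATION = 8.1e9   # assumption: approximate world population in 2024

# Bandwidth available per person if the bisection bandwidth were split evenly.
per_person_bps = BISECTION_BPS / WORLD_POPULATION
print(f"{per_person_bps / 1e6:.2f} Mb/s per person")  # 1.62 Mb/s per person
```

Roughly 1.6 Mb/s per person is in the same ballpark as the bitrate of a single video call, so the comparison holds up as an order-of-magnitude claim.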

Hyperdisk ML is generally available

High-performance storage is essential for keeping compute resources efficiently utilized, maximizing system-level performance, and controlling costs. Google launched Hyperdisk ML, its AI/ML-focused block storage service, in April 2024. Now generally available, it complements the networking and compute advancements with storage purpose-built for AI and HPC workloads.

Hyperdisk ML significantly speeds up data loading: up to 11.9x faster model load times for inference workloads and up to 4.3x faster training times for training workloads.

Each volume delivers up to 1.2 TB/s of aggregate throughput and can be attached to up to 2,500 instances, more than 100 times what major block storage competitors offer.
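To put the per-volume figures in perspective, here is a back-of-the-envelope sketch of how much throughput each reader would get if the attached instances read concurrently and shared the volume evenly. Even sharing and decimal units (1 TB = 10^12 bytes) are simplifying assumptions; real per-instance throughput is also capped by each VM's own limits.

```python
VOLUME_THROUGHPUT_BYTES_S = 1.2e12  # 1.2 TB/s aggregate per volume (decimal units assumed)
MAX_ATTACHED_INSTANCES = 2500       # attachment limit from the post


def per_instance_share_mb_s(readers: int) -> float:
    """Even share of the aggregate throughput, in MB/s, when `readers` instances read at once."""
    if not 1 <= readers <= MAX_ATTACHED_INSTANCES:
        raise ValueError(f"readers must be between 1 and {MAX_ATTACHED_INSTANCES}")
    return VOLUME_THROUGHPUT_BYTES_S / readers / 1e6


print(per_instance_share_mb_s(2500))  # 480.0
```

Even with every allowed instance attached and reading, each one would still see roughly 480 MB/s under these assumptions.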

Reduced accelerator idle time and increased cost efficiency are the results of shorter data load times.

GKE now automatically creates multi-zone volumes for your data. Together with faster model loading from Hyperdisk ML, this lets you run across zones for greater compute flexibility (for example, reducing the impact of Spot VM preemption).

Developing AI’s future

With these AI infrastructure advancements, Google Cloud enables companies and researchers to push the limits of AI innovation, and it expects this strong foundation to give rise to revolutionary new AI applications.

Read more on Govindhtech.com

#A3UltraVMs#NVIDIAH200#AI#Trillium#HypercomputeCluster#GoogleAxionProcessors#Titanium#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech

2 notes

·

View notes

Text

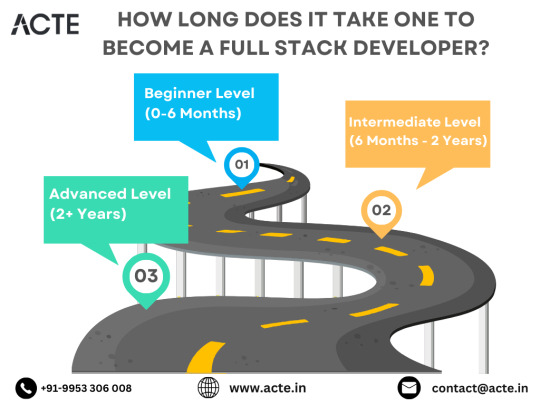

The Roadmap to Full Stack Developer Proficiency: A Comprehensive Guide

Embarking on the journey to becoming a full stack developer is an exhilarating endeavor filled with growth and challenges. Whether you're taking your first steps or seeking to elevate your skills, understanding the path ahead is crucial. In this detailed roadmap, we'll outline the stages of mastering full stack development, exploring essential milestones, competencies, and strategies to guide you through this enriching career journey.

Beginning the Journey: Novice Phase (0-6 Months)

As a novice, you're entering the realm of programming with a fresh perspective and eagerness to learn. This initial phase sets the groundwork for your progression as a full stack developer.

Grasping Programming Fundamentals:

Your journey begins with the foundational web technologies: HTML for structure, CSS for styling, and JavaScript for behavior. These are the cornerstones of web development and are essential for building dynamic, interactive web applications.

Familiarizing with Basic Data Structures and Algorithms:

To become proficient at programming, you need to understand fundamental data structures such as arrays, objects, and linked lists, along with algorithms such as sorting and searching. These concepts form the backbone of problem-solving in software development.
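Searching a sorted array is a classic first algorithm because it shows how choosing the right data structure and algorithm changes the cost of a task. The sketch below (in Python, chosen here for brevity; the logic carries over directly to JavaScript) implements binary search, which finds an item in O(log n) steps instead of scanning all n elements.

```python
from typing import Sequence


def binary_search(items: Sequence[int], target: int) -> int:
    """Return the index of `target` in the sorted sequence `items`, or -1 if absent."""
    lo, hi = 0, len(items) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        if items[mid] == target:
            return mid
        if items[mid] < target:
            lo = mid + 1  # target can only be in the right half
        else:
            hi = mid - 1  # target can only be in the left half
    return -1


data = sorted([42, 7, 19, 3, 88])  # binary search requires sorted input: [3, 7, 19, 42, 88]
print(binary_search(data, 19))     # 2
print(binary_search(data, 5))      # -1
```

Sorting once and then searching many times is a common trade-off: the O(n log n) sort pays for itself when lookups are frequent.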

Exploring Essential Web Development Concepts:

During this phase, you'll explore crucial web development concepts such as client-server architecture, HTTP, and the Document Object Model (DOM). Understanding the underlying mechanisms of web applications lays a strong foundation for tackling more complex projects.
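To make the client-server model and HTTP concrete, this small Python sketch starts a local HTTP server in a background thread and then acts as a client that sends it a GET request. It uses only the standard library (`http.server`, `urllib.request`); the handler name and URL path are illustrative.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Server side: answer every GET request with a short plain-text body."""

    def do_GET(self):
        body = f"you requested {self.path}".encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging for this demo


# Port 0 asks the operating system for any free port.
server = HTTPServer(("127.0.0.1", 0), HelloHandler)
port = server.server_address[1]
threading.Thread(target=server.serve_forever, daemon=True).start()

# Client side: send an HTTP GET request and read the status and body.
with urllib.request.urlopen(f"http://127.0.0.1:{port}/users/1") as resp:
    status = resp.status
    text = resp.read().decode()

server.shutdown()
server.server_close()
print(status, text)  # 200 you requested /users/1
```

`http.server` is intended for learning and testing; production backends use a full framework or application server.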

Advancing Forward: Intermediate Stage (6 Months - 2 Years)

As you progress beyond the basics, you'll transition into the intermediate stage, where you'll deepen your understanding and skills across various facets of full stack development.

Venturing into Backend Development:

In the intermediate stage, you'll move into backend development, building proficiency in server-side languages and runtimes such as Node.js, Python, or Java. Here, you'll learn to build robust server-side applications, manage data storage and retrieval, and implement authentication and authorization mechanisms.
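Authentication usually starts with storing passwords safely. A minimal sketch, assuming a Python backend and the standard library's PBKDF2 support: hash each password with a random salt, and verify a login attempt by recomputing the hash and comparing in constant time. The function names and iteration count are illustrative choices, not a prescribed configuration.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # illustrative work factor; tune it for your hardware


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, derived_key) using PBKDF2-HMAC-SHA256 with a random 16-byte salt."""
    salt = os.urandom(16)
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, key


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the key for a login attempt and compare it in constant time."""
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return hmac.compare_digest(key, expected)


salt, stored = hash_password("correct horse")
print(verify_password("correct horse", salt, stored))  # True
print(verify_password("wrong guess", salt, stored))    # False
```

Only the salt and derived key are stored, never the password itself. Many production systems use a dedicated library such as bcrypt or Argon2 bindings instead.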

Mastering Database Management:

A pivotal aspect of backend development is comprehending databases. You'll delve into relational databases like MySQL and PostgreSQL, as well as NoSQL databases like MongoDB. Proficiency in database management systems and design principles enables the creation of scalable and efficient applications.
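The core relational ideas (tables, primary and foreign keys, indexes, and joins) can be tried without installing MySQL or PostgreSQL by using Python's built-in `sqlite3` module. The schema below is a hypothetical example; the same SQL looks nearly identical in PostgreSQL or MySQL.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database for the demo
conn.executescript("""
    CREATE TABLE users  (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (id INTEGER PRIMARY KEY,
                         user_id INTEGER NOT NULL REFERENCES users(id),
                         total REAL NOT NULL);
    CREATE INDEX idx_orders_user ON orders(user_id);  -- speeds up per-user lookups
""")

# Placeholders (?) keep user input out of the SQL text, preventing SQL injection.
conn.executemany("INSERT INTO users (id, name) VALUES (?, ?)", [(1, "ada"), (2, "linus")])
conn.executemany("INSERT INTO orders (user_id, total) VALUES (?, ?)",
                 [(1, 10.0), (1, 5.5), (2, 7.25)])

# JOIN plus aggregate: total spend per user, highest first.
rows = conn.execute("""
    SELECT u.name, SUM(o.total) AS spent
    FROM users u JOIN orders o ON o.user_id = u.id
    GROUP BY u.id
    ORDER BY spent DESC
""").fetchall()
print(rows)  # [('ada', 15.5), ('linus', 7.25)]
conn.close()
```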

Exploring Frontend Frameworks and Libraries:

In addition to backend development, you'll deepen your expertise in frontend technologies. You'll explore popular frameworks and libraries such as React, Angular, or Vue.js, which streamline the creation of interactive, responsive user interfaces.

Learning Version Control with Git:

Version control is indispensable for collaborative software development. During this phase, you'll familiarize yourself with Git, a distributed version control system, to manage your codebase, track changes, and collaborate effectively with fellow developers.
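One way to see how Git tracks changes is that it identifies every version of a file by a hash of its contents. The sketch below reproduces how Git computes a blob's object ID in the default SHA-1 object format (repositories using the newer SHA-256 object format produce different IDs).

```python
import hashlib


def git_blob_id(content: bytes) -> str:
    """Compute the object ID Git assigns to file contents (SHA-1 object format).

    Git hashes the header "blob <size>" plus a NUL byte, followed by the raw bytes.
    """
    header = f"blob {len(content)}\0".encode()
    return hashlib.sha1(header + content).hexdigest()


# Matches: echo 'hello world' | git hash-object --stdin
print(git_blob_id(b"hello world\n"))  # 3b18e512dba79e4c8300dd08aeb37f8e728b8dad
```

Because the ID depends only on the content, identical files share one stored object, and any edit, however small, produces a new ID that Git can detect immediately.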

Achieving Mastery: Advanced Phase (2+ Years)

As you ascend in your journey, you'll enter the advanced phase of full stack development, where you'll refine your skills, tackle intricate challenges, and delve into specialized domains of interest.

Designing Scalable Systems:

In the advanced stage, focus shifts to designing scalable systems capable of managing substantial volumes of traffic and data. You'll explore design patterns, scalability methodologies, and cloud computing platforms like AWS, Azure, or Google Cloud.

Embracing DevOps Practices:

DevOps practices play a pivotal role in contemporary software development. You'll delve into continuous integration and continuous deployment (CI/CD) pipelines, infrastructure as code (IaC), and containerization technologies such as Docker and Kubernetes.

Specializing in Niche Areas:

With experience, you may opt to specialize in specific domains of full stack development, whether it's frontend or backend development, mobile app development, or DevOps. Specialization enables you to deepen your expertise and pursue career avenues aligned with your passions and strengths.

Conclusion:

Becoming a proficient full stack developer is a transformative journey that demands dedication, resilience, and perpetual learning. By following the roadmap outlined in this guide and maintaining a curious and adaptable mindset, you'll navigate the complexities and opportunities inherent in the realm of full stack development. Remember, mastery isn't merely about acquiring technical skills but also about fostering collaboration, embracing innovation, and contributing meaningfully to the ever-evolving landscape of technology.

#full stack developer#education#information#full stack web development#front end development#frameworks#web development#backend#full stack developer course#technology

Navigating the DevOps Landscape: Opportunities and Roles

DevOps has become a game-changer in the fast-moving world of technology. This dynamic approach, whose name combines "Development" and "Operations," is revolutionising the way software is created, tested, and deployed. DevOps is not merely a set of practices but a cultural shift that encourages cooperation, automation, and integration between development and IT operations teams. The outcome? Greater speed, dependability, and effectiveness in software delivery.

In this comprehensive guide, we'll delve into the essence of DevOps, explore the key technologies that underpin its success, and uncover the vast array of job opportunities it offers. Whether you're an aspiring IT professional looking to enter the world of DevOps or an experienced practitioner seeking to enhance your skills, this blog will serve as your roadmap to mastering DevOps. So, let's embark on this enlightening journey into the realm of DevOps.

Key Technologies for DevOps:

Version Control Systems: DevOps teams rely heavily on robust version control systems such as Git and SVN. These systems are instrumental in managing and tracking changes in code and configurations, promoting collaboration and ensuring the integrity of the software development process.

Continuous Integration/Continuous Deployment (CI/CD): The heart of DevOps, CI/CD tools like Jenkins, Travis CI, and CircleCI drive the automation of critical processes. They orchestrate the building, testing, and deployment of code changes, enabling rapid, reliable, and consistent software releases.
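Conceptually, a CI/CD server runs a sequence of stages and stops as soon as one fails, so a broken build never reaches deployment. The toy runner below illustrates that fail-fast behavior; it is not how Jenkins, Travis CI, or CircleCI are implemented, and the stage commands are placeholders.

```python
import subprocess
import sys
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    command: list[str]


def run_pipeline(stages: list[Stage]) -> bool:
    """Run stages in order and stop at the first failing one (fail fast)."""
    for stage in stages:
        result = subprocess.run(stage.command, capture_output=True, text=True)
        ok = result.returncode == 0
        print(f"[{stage.name}] {'ok' if ok else f'FAILED (exit {result.returncode})'}")
        if not ok:
            return False  # later stages, such as deploy, never run on a broken build
    return True


# Placeholder stages; a real pipeline would call your build tool, test runner, and deploy script.
py = sys.executable
pipeline = [
    Stage("build", [py, "-c", "print('compiling...')"]),
    Stage("test", [py, "-c", "assert 1 + 1 == 2"]),
    Stage("deploy", [py, "-c", "print('deploying...')"]),
]
print("pipeline passed:", run_pipeline(pipeline))
```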

Configuration Management: Tools like Ansible, Puppet, and Chef are the architects of automation in the DevOps landscape. They facilitate the automated provisioning and management of infrastructure and application configurations, ensuring consistency and efficiency.
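A property shared by configuration management tools is idempotence: applying the same configuration twice changes nothing the second time, so runs are safe to repeat. The sketch below shows the idea with a hypothetical `ensure_line` helper, loosely analogous to what Ansible's `lineinfile` module does; it is an illustration, not Ansible's implementation.

```python
import tempfile
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Ensure `line` is present in the file at `path`; return True only if the file changed."""
    existing = path.read_text().splitlines() if path.exists() else []
    if line in existing:
        return False  # already in the desired state: nothing to do
    existing.append(line)
    path.write_text("\n".join(existing) + "\n")
    return True


# Usage against a throwaway file (the config line is a hypothetical example).
with tempfile.TemporaryDirectory() as tmp:
    cfg = Path(tmp) / "sshd_config"
    cfg.write_text("Port 22\n")
    print(ensure_line(cfg, "PermitRootLogin no"))  # True: line was added
    print(ensure_line(cfg, "PermitRootLogin no"))  # False: already present
```

Reporting "changed" versus "unchanged" is what lets these tools show exactly what a run actually did.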

Containerization: Docker and Kubernetes, the cornerstones of containerization, are pivotal in the DevOps toolkit. They empower the creation, deployment, and management of containers that encapsulate applications and their dependencies, simplifying deployment and scaling.

Orchestration: Docker Swarm and Amazon ECS take center stage in orchestrating and managing containerized applications at scale. They provide the control and coordination required to maintain the efficiency and reliability of containerized systems.

Monitoring and Logging: The observability of applications and systems is essential in the DevOps workflow. Monitoring and logging tools like the ELK Stack (Elasticsearch, Logstash, Kibana) and Prometheus are the eyes and ears of DevOps professionals, tracking performance, identifying issues, and optimizing system behavior.
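Prometheus collects metrics by scraping a plain-text HTTP endpoint. The sketch below renders a counter in Prometheus' text exposition format by hand to show what that format looks like; in practice you would use an official client library such as `prometheus_client`, which also handles label escaping, other metric types, and the HTTP endpoint. The metric name and labels are illustrative.

```python
def render_counter(name: str, help_text: str, samples: list[tuple[dict[str, str], float]]) -> str:
    """Render one counter in Prometheus' text exposition format (no label escaping)."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in samples:
        # Label values are double-quoted; keys are sorted so the output is stable.
        label_str = ",".join(f'{k}="{v}"' for k, v in sorted(labels.items()))
        lines.append(f"{name}{{{label_str}}} {value}")
    return "\n".join(lines) + "\n"


print(render_counter(
    "http_requests_total",
    "Total HTTP requests handled.",
    [({"method": "GET", "code": "200"}, 1027), ({"method": "POST", "code": "500"}, 3)],
))
# # HELP http_requests_total Total HTTP requests handled.
# # TYPE http_requests_total counter
# http_requests_total{code="200",method="GET"} 1027
# http_requests_total{code="500",method="POST"} 3
```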

Cloud Computing Platforms: AWS, Azure, and Google Cloud are the foundational pillars of cloud infrastructure in DevOps. They offer the infrastructure and services essential for creating and scaling cloud-based applications, facilitating the agility and flexibility required in modern software development.

Scripting and Coding: Proficiency in scripting languages such as Shell, Python, and Ruby, along with general coding skills, is invaluable for DevOps professionals. These skills let them write automation scripts and tools that customize and extend the DevOps pipeline.

Collaboration and Communication Tools: Collaboration tools like Slack and Microsoft Teams enhance the communication and coordination among DevOps team members. They foster efficient collaboration and facilitate the exchange of ideas and information.

Infrastructure as Code (IaC): The concept of Infrastructure as Code, represented by tools like Terraform and AWS CloudFormation, is a pivotal practice in DevOps. It allows the definition and management of infrastructure using code, ensuring consistency and reproducibility, and enabling the rapid provisioning of resources.
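IaC tools such as Terraform compare the desired state declared in code with the actual state of the infrastructure and then plan which resources to create, update, or delete. The sketch below models only that diffing step with plain dictionaries; the resource names and attributes are hypothetical, and real tools also track dependencies, state files, and provider APIs.

```python
from typing import Any

Resources = dict[str, dict[str, Any]]  # resource name -> attributes


def plan(desired: Resources, actual: Resources) -> dict[str, list[str]]:
    """Diff desired vs. actual resources by name, like a very small 'plan' step."""
    return {
        "create": sorted(desired.keys() - actual.keys()),
        "delete": sorted(actual.keys() - desired.keys()),
        "update": sorted(n for n in desired.keys() & actual.keys() if desired[n] != actual[n]),
    }


desired = {"web": {"size": "small", "count": 3}, "db": {"size": "large", "count": 1}}
actual = {"web": {"size": "small", "count": 2}, "cache": {"size": "small", "count": 1}}
print(plan(desired, actual))
# {'create': ['db'], 'delete': ['cache'], 'update': ['web']}
```

Because the plan is computed from code rather than typed by hand, running it against an environment that already matches yields an empty plan, which is what makes deployments reproducible.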

Job Opportunities in DevOps:

DevOps Engineer: DevOps engineers are the architects of continuous integration and continuous deployment (CI/CD) pipelines. They meticulously design and maintain these pipelines to automate the deployment process, ensuring the rapid, reliable, and consistent release of software. Their responsibilities extend to optimizing the system's reliability, making them the backbone of seamless software delivery.

Release Manager: Release managers play a pivotal role in orchestrating the software release process. They carefully plan and schedule software releases, coordinating activities between development and IT teams. Their keen oversight ensures the smooth transition of software from development to production, enabling timely and successful releases.

Automation Architect: Automation architects design and develop automation frameworks. These frameworks streamline deployment and monitoring processes, using automation to improve efficiency and reliability and turning manual tasks into automated processes.

Cloud Engineer: Cloud engineers are the custodians of cloud infrastructure. They adeptly manage cloud resources, optimizing their performance and ensuring scalability. Their expertise lies in harnessing the power of cloud platforms like AWS, Azure, or Google Cloud to provide robust, flexible, and cost-effective solutions.

Site Reliability Engineer (SRE): SREs are the sentinels of system reliability. They focus on maintaining the system's resilience through efficient practices, continuous monitoring, and rapid incident response. Their vigilance ensures that applications and systems remain stable and performant, even in the face of challenges.

Security Engineer: Security engineers are the guardians of the DevOps pipeline. They integrate security measures seamlessly into the software development process, safeguarding it from potential threats and vulnerabilities. Their role is crucial in an era where security is paramount, ensuring that DevOps practices are fortified against breaches.

As DevOps continues to redefine software development and deployment, gaining expertise in its core principles and technologies is a strategic career move. ACTE Technologies offers comprehensive DevOps training programs led by industry experts who provide insights, real-world examples, and hands-on guidance. Its DevOps training covers a wide range of essential concepts, practical exercises, and real-world applications, with a strong focus on certification preparation. With this guidance, you can gain mastery over DevOps practices, enhance your skill set, and advance your career.

Ansible Collections: Extending Ansible’s Capabilities

Ansible is a powerful automation tool used for configuration management, application deployment, and task automation. One of the key features that enhances its flexibility and extensibility is the concept of Ansible Collections. In this blog post, we'll explore what Ansible Collections are, how to create and use them, and look at some popular collections and their use cases.

Introduction to Ansible Collections

Ansible Collections are a way to package and distribute Ansible content. This content can include playbooks, roles, modules, plugins, and more. Collections allow users to organize their Ansible content and share it more easily, making it simpler to maintain and reuse.

Key Features of Ansible Collections:

Modularity: Collections break down Ansible content into modular components that can be independently developed, tested, and maintained.

Distribution: Collections can be distributed via Ansible Galaxy or private repositories, enabling easy sharing within teams or the wider Ansible community.

Versioning: Collections support versioning, allowing users to specify and depend on specific versions of a collection.

How to Create and Use Collections in Your Projects

Creating and using Ansible Collections involves a few key steps. Here’s a guide to get you started:

1. Setting Up Your Collection

To create a new collection, you can use the ansible-galaxy command-line tool:

```shell
ansible-galaxy collection init my_namespace.my_collection
```

This command sets up a basic directory structure for your collection:

```text
my_namespace/
└── my_collection/
    ├── docs/
    ├── plugins/
    │   ├── modules/
    │   ├── inventory/
    │   └── ...
    ├── roles/
    ├── playbooks/
    ├── README.md
    └── galaxy.yml
```

2. Adding Content to Your Collection

Populate your collection with the necessary content. For example, you can add roles, modules, and plugins under the respective directories. Update the galaxy.yml file with metadata about your collection.

3. Building and Publishing Your Collection

Once your collection is ready, build it by running the following command from the collection's root directory (the one containing galaxy.yml):

```shell
ansible-galaxy collection build
```

This command creates a tarball of your collection, which you can then publish to Ansible Galaxy or a private repository:

```shell
ansible-galaxy collection publish my_namespace-my_collection-1.0.0.tar.gz
```

4. Using Collections in Your Projects

To use a collection in your Ansible project, specify it in your requirements.yml file:

```yaml
collections:
  - name: my_namespace.my_collection
    version: 1.0.0
```

Then, install the collection using:

```shell
ansible-galaxy collection install -r requirements.yml
```
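The `version` field in requirements.yml pins an exact version here, but ansible-galaxy also accepts range specifiers such as `>=1.0.0,<2.0.0` (and `*` for any version). The toy checker below shows how such a comma-separated constraint is evaluated against a candidate version; it is a simplified illustration that ignores pre-release tags, not ansible-galaxy's own resolver.

```python
import operator

# Two-character operators come first so ">=" is not mistaken for ">".
OPS = {
    ">=": operator.ge,
    "<=": operator.le,
    "!=": operator.ne,
    "==": operator.eq,
    ">": operator.gt,
    "<": operator.lt,
}


def parse(version: str) -> tuple[int, ...]:
    """'1.2.3' -> (1, 2, 3). Pre-release and build suffixes are not handled in this sketch."""
    return tuple(int(part) for part in version.strip().split("."))


def satisfies(version: str, spec: str) -> bool:
    """Check a version against a comma-separated spec such as '>=1.0.0,<2.0.0'."""
    if spec.strip() == "*":
        return True
    for clause in spec.split(","):
        clause = clause.strip()
        op = next((o for o in OPS if clause.startswith(o)), None)
        target = clause[len(op):] if op else clause  # a bare version means an exact match
        if not OPS[op or "=="](parse(version), parse(target)):
            return False
    return True


print(satisfies("1.4.2", ">=1.0.0,<2.0.0"))  # True
print(satisfies("2.0.0", ">=1.0.0,<2.0.0"))  # False
print(satisfies("1.0.0", "1.0.0"))           # True
```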

You can now use the content from the collection in your playbooks:

```yaml
---
- name: Example Playbook
  hosts: localhost
  tasks:
    - name: Use a module from the collection
      my_namespace.my_collection.my_module:
        param: value
```

Popular Collections and Their Use Cases

Here are some popular Ansible Collections and how they can be used:

1. community.general

Description: A collection of modules, plugins, and roles that are not tied to any specific provider or technology.

Use Cases: General-purpose tasks like file manipulation, network configuration, and user management.

2. amazon.aws

Description: Provides modules and plugins for managing AWS resources.

Use Cases: Automating AWS infrastructure, such as EC2 instances, S3 buckets, and RDS databases.

3. ansible.posix

Description: A collection of modules for managing POSIX systems.

Use Cases: Tasks specific to POSIX and Unix-like systems, such as mounting file systems and managing ACLs, SSH authorized keys, sysctl settings, and firewalld rules.

4. cisco.ios

Description: Contains modules and plugins for automating Cisco IOS devices.

Use Cases: Network automation for Cisco routers and switches, including configuration management and backup.

5. kubernetes.core

Description: Provides modules for managing Kubernetes resources.

Use Cases: Deploying and managing Kubernetes applications, services, and configurations.

Conclusion

Ansible Collections significantly enhance the modularity, distribution, and reusability of Ansible content. By understanding how to create and use collections, you can streamline your automation workflows and share your work with others more effectively. Explore popular collections to leverage existing solutions and extend Ansible’s capabilities in your projects.

For more details, visit www.qcsdclabs.com

#redhatcourses#information technology#linux#containerorchestration#container#kubernetes#containersecurity#docker#dockerswarm#aws

Journey to Devops

The concept of “DevOps” has been gaining traction in the IT sector for a couple of years. It promotes teamwork and communication between software developers and IT operations groups to improve the speed and reliability of software delivery. The approach has become widely adopted as companies strive to deliver software that meets customer needs and to maintain an edge in the industry. In this article, we will explore the steps to becoming a DevOps Engineer.

Step 1: Get familiar with the basics of Software Development and IT Operations:

To pursue a career as a DevOps Engineer, you need a solid grasp of software development and IT operations. Familiarity with programming languages such as Python, Java, Ruby, or PHP is essential, as is knowledge of operating systems, databases, and networking.

Step 2: Learn the principles of DevOps:

It is crucial to understand and apply the principles of DevOps: automation, continuous integration, continuous deployment, and continuous monitoring. Learn how each of these principles works and how to put it into practice efficiently.

Step 3: Familiarize yourself with the DevOps toolchain:

Git: Git, a distributed version control system, is used extensively by DevOps teams to manage code repositories. It tracks code changes, facilitates collaboration among team members, and preserves a record of every modification made to the codebase.

Ansible: Ansible is an open-source tool for configuration management, application deployment, and task automation. It simplifies infrastructure management and saves time on repetitive tasks.

Docker: Docker is a containerization platform that lets DevOps engineers bundle applications and their dependencies into containers. This ensures consistency and compatibility across environments, from development to production.

Kubernetes: Kubernetes is an open-source container orchestration platform that helps manage and scale containers. It automates the deployment, scaling, and management of applications and microservices.

Jenkins: Jenkins is an open-source automation server that helps automate the process of building, testing, and deploying software. It helps to automate repetitive tasks and improve the speed and efficiency of the software delivery process.

Nagios: Nagios is an open-source monitoring tool that helps you monitor the health and performance of your IT infrastructure. It also helps you identify and resolve issues in real time, ensuring the high availability and reliability of IT systems.
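Nagios checks are small programs that print a one-line status and report the result through their exit code: 0 for OK, 1 for WARNING, 2 for CRITICAL, and 3 for UNKNOWN. The sketch below is a hypothetical disk-usage check written in that style; the thresholds and function names are illustrative.

```python
import shutil
import sys

# Nagios plugin exit-code convention.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3


def check_disk(path: str = "/", warn_pct: float = 80.0, crit_pct: float = 90.0) -> tuple[int, str]:
    """Return (exit_code, status_line) for the disk usage of `path`."""
    try:
        usage = shutil.disk_usage(path)
    except OSError as exc:
        return UNKNOWN, f"DISK UNKNOWN - {exc}"
    used_pct = 100.0 * usage.used / usage.total
    if used_pct >= crit_pct:
        code, label = CRITICAL, "CRITICAL"
    elif used_pct >= warn_pct:
        code, label = WARNING, "WARNING"
    else:
        code, label = OK, "OK"
    # Text after '|' is performance data (value;warn;crit) that Nagios can graph.
    return code, f"DISK {label} - {used_pct:.1f}% used on {path} | used_pct={used_pct:.1f}%;{warn_pct};{crit_pct}"


def main() -> None:
    """Entry point when Nagios runs this file as a plugin."""
    code, message = check_disk()
    print(message)
    sys.exit(code)
```

To deploy it, call `main()` from an `if __name__ == "__main__":` guard and register the script as a command in your Nagios configuration.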

Terraform: Terraform is an infrastructure as code (IaC) tool for provisioning and managing IT infrastructure. It automates provisioning and configuring IT resources and helps keep development and production environments consistent.

Step 4: Gain practical experience:

The best way to gain practical experience is by working on real projects or attending bootcamps. You can start by contributing to open-source projects or participating in coding challenges and hackathons. Workshops and online courses can also help you improve your skills.

Step 5: Get certified:

Getting certified in DevOps can help you stand out from the crowd and demonstrate your expertise to employers. Some of the most popular certifications are:

Certified Kubernetes Administrator (CKA)

AWS Certified DevOps Engineer

Microsoft Certified: Azure DevOps Engineer Expert

AWS Certified Cloud Practitioner

Step 6: Build a strong professional network:

Networking is one of the most important parts of becoming a DevOps Engineer. Join online communities, attend conferences and webinars, and connect with other professionals in the field. This will help you stay up to date with the latest developments and find job opportunities.

Conclusion:

By following these steps, you can start your journey toward a successful career in DevOps. The most important thing is to be passionate about your work and to keep learning and improving your skills. With the right skills, experience, and network, you can achieve great success in this field.

Navigating the DevOps Landscape: A Beginner's Comprehensive Roadmap

In the dynamic realm of software development, the DevOps methodology stands out as a transformative force, fostering collaboration, automation, and continuous enhancement. For newcomers eager to immerse themselves in this revolutionary culture, this all-encompassing guide presents the essential steps to initiate your DevOps expedition.

Grasping the Essence of DevOps Culture: DevOps transcends mere tool usage; it embodies a cultural transformation that prioritizes collaboration and communication between development and operations teams. Begin by comprehending the fundamental principles of collaboration, automation, and continuous improvement.

Immerse Yourself in DevOps Literature: Kickstart your journey with essential DevOps reading. "The Phoenix Project" by Gene Kim, Kevin Behr, and George Spafford, along with "The DevOps Handbook" by Gene Kim, Jez Humble, Patrick Debois, and John Willis, provides invaluable insights into the theoretical underpinnings and practical implementation of DevOps.

Online Courses and Tutorials: Harness the educational potential of online platforms like Coursera, edX, and Udacity. Seek courses covering pivotal DevOps tools such as Git, Jenkins, Docker, and Kubernetes. These courses will furnish you with a robust comprehension of the tools and processes integral to the DevOps terrain.

Practical Application: While theory is crucial, hands-on experience is paramount. Establish your own development environment and embark on practical projects. Implement version control, construct CI/CD pipelines, and deploy applications to acquire firsthand experience in applying DevOps principles.

Explore the Realm of Configuration Management: Configuration management is a pivotal facet of DevOps. Familiarize yourself with tools like Ansible, Puppet, or Chef, which automate infrastructure provisioning and configuration, ensuring uniformity across diverse environments.
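Tools like Ansible, Puppet, and Chef are built around idempotency: a task describes a desired state and only changes the system when it does not already match. A minimal Python sketch of that idea, assuming a hypothetical `ensure_line` helper that plays a role similar to (but is not) Ansible's `lineinfile` module:

```python
import tempfile
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Ensure `line` is present in the file at `path`.

    Returns True if the file was changed, False if it already matched
    the desired state (the idempotent "no change" case).
    """
    existing = path.read_text().splitlines() if path.exists() else []
    if line in existing:
        return False  # desired state already reached; do nothing
    existing.append(line)
    path.write_text("\n".join(existing) + "\n")
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        cfg = Path(tmp) / "sshd_config"
        print(ensure_line(cfg, "PermitRootLogin no"))  # True: line added
        print(ensure_line(cfg, "PermitRootLogin no"))  # False: no change
```

Running the same task twice is safe: the second run reports no change, which is what lets configuration management keep many servers uniform.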

Containerization and Orchestration: Delve into the universe of containerization with Docker and orchestration with Kubernetes. Containers provide uniformity across diverse environments, while orchestration tools automate the deployment, scaling, and management of containerized applications.
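Orchestrators automate scaling with simple feedback rules. The Kubernetes Horizontal Pod Autoscaler, for example, documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), and skips scaling when that ratio is within a tolerance (0.1 by default) of 1.0. A simplified Python sketch of the calculation; the min/max clamp mirrors HPA's minReplicas/maxReplicas, while stabilization windows and multi-metric handling are deliberately left out:

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified HPA-style scaling decision (no stabilization window)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no change
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds, like minReplicas/maxReplicas.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
print(desired_replicas(4, current_metric=30, target_metric=60))  # 2
print(desired_replicas(4, current_metric=63, target_metric=60))  # 4
```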

Continuous Integration and Continuous Deployment (CI/CD): CI/CD is integral to DevOps. Gain proficiency in Jenkins, Travis CI, or GitLab CI to automate the testing and deployment of code changes. These tools improve the speed and reliability of the release cycle, a central objective of DevOps methodologies.
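At its core, a CI/CD pipeline runs ordered stages and stops at the first failure, so a broken change never reaches deployment. Jenkins, Travis CI, and GitLab CI each express this in their own configuration format; the Python sketch below models only the control flow, and the stage names and callables are hypothetical placeholders:

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order; stop at the first one that fails.

    Returns a log of "name: ok" / "name: FAILED" entries.
    """
    log = []
    for name, step in stages:
        passed = step()
        log.append(f"{name}: {'ok' if passed else 'FAILED'}")
        if not passed:
            break  # later stages (e.g. deploy) never run
    return log


# Hypothetical stages; real ones would call a build tool, test runner, etc.
stages = [
    ("build", lambda: True),
    ("test", lambda: False),   # simulate a failing test suite
    ("deploy", lambda: True),  # skipped because "test" failed
]
for entry in run_pipeline(stages):
    print(entry)
```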

Grasp Networking and Security Fundamentals: Expand your knowledge to encompass networking and security basics relevant to DevOps. Comprehend how security integrates into the DevOps pipeline, embracing the principles of DevSecOps. Gain insights into infrastructure security and secure coding practices to ensure robust DevOps implementations.

Embarking on a DevOps expedition demands a comprehensive strategy that amalgamates theoretical understanding with hands-on experience. By grasping the cultural shift, exploring key literature, and mastering essential tools, you are well-positioned to evolve into a proficient DevOps practitioner, contributing to the triumph of contemporary software development.


Why You Should Hire Developers Who Understand the Future of Tech

Whether you’re launching a startup, scaling your SaaS product, or building the next decentralized app, one thing is clear—you need the right developers. Not just any coders, but skilled professionals who understand both the technical and strategic sides of digital product building.

In today’s fast-evolving tech landscape, the need to hire developers who are agile, experienced, and forward-thinking has never been greater. From blockchain to AI to SaaS, the right team can turn your business vision into a scalable, future-proof product.

Why Hiring Developers is a Strategic Move, Not Just a Task

In-house or outsourced, full-time or fractional—hiring developers is not just about filling a technical role. It’s a strategic investment that determines:

The speed at which you go to market

The quality of your product

The ability to scale your infrastructure

The cost-effectiveness of your development cycle

When you hire developers who are aligned with your business goals, you're not just building software—you’re building competitive advantage.

The Types of Developers You Might Need

Your hiring approach should depend on what you're building. Here are some common roles modern businesses look for:

1. Frontend Developers

They create seamless and engaging user interfaces using technologies like React, Angular, or Vue.js.

2. Backend Developers

These developers handle the logic, databases, and server-side functions that make your app run smoothly.

3. Full-Stack Developers

They handle both front-end and back-end responsibilities, which makes them ideal for MVPs or lean startups.

4. Blockchain Developers

Crucial for any web3 development company, they specialize in smart contracts, dApps, and crypto integrations.

5. AI Engineers

As AI product development continues to grow, developers with machine learning and automation skills are increasingly in demand.

6. DevOps Engineers

They ensure your systems run efficiently, automate deployment, and manage infrastructure.

Depending on your project, you may need to hire developers who are specialists or build a blended team that covers multiple areas.

The Modern Developer Stack: More Than Just Code

Today’s development goes far beyond HTML and JavaScript. You need developers familiar with:

Cloud platforms (AWS, Azure, GCP)

Containers & orchestration (Docker, Kubernetes)

APIs & microservices

Version control (Git, GitHub, Bitbucket)

Security best practices

Automated testing & CI/CD

The goal isn’t just to write code—it’s to build secure, scalable, and high-performance systems that grow with your business.

SaaS Products Need Specialized Developer Expertise

If you're building a SaaS platform, the development process must account for:

Multi-tenant architecture

Subscription billing

Role-based access

Uptime and monitoring

Seamless UX and product-led growth

That’s where experienced SaaS experts come in: developers who not only write clean code but also understand SaaS metrics, scale, and user behavior.

Hiring the right SaaS development team ensures your platform can evolve with user needs and business growth.

Web3: The Future of App Development

More and more businesses are looking to create decentralized applications. If you’re building in the blockchain space, you need to hire developers who are familiar with:

Ethereum, Polygon, Solana, or other chains

Smart contract development (Solidity, Rust)

Wallet integrations and token standards

DeFi and DAO protocols

Collaborating with a seasoned web3 development company gives you access to specialized talent that understands the nuances of decentralization, tokenomics, and trustless systems.

AI-Driven Applications: Why You Need Developers with ML Skills

From personalized recommendations to intelligent chatbots, AI product development is becoming an essential feature of modern apps. Developers with AI and machine learning knowledge help you:

Implement predictive analytics

Automate workflows

Train custom models

Use data more effectively

If your project involves building intelligent features or analyzing large datasets, hiring developers with AI experience gives you a distinct edge.

In-House vs Outsourced: What’s Right for You?

Many businesses face the choice: Should we build an in-house team or hire externally? Here’s a quick breakdown:

Criteria | In-House Team | Outsourced Developers
Control | High | Medium to High (depending on provider)
Cost | Higher (salaries + overhead) | More flexible, often cost-effective
Speed to Hire | Slower | Faster (especially with an agency/partner)
Specialized Skills | Limited | Broader talent pool
Scalability | Moderate | High

For many startups and growing businesses, the best solution is to partner with a development agency that gives you dedicated or on-demand talent, while letting you stay lean and focused.

What to Look for When Hiring Developers

To make the most of your investment, look for developers who:

Have a proven portfolio of completed projects

Are fluent in your tech stack

Can communicate clearly and collaborate cross-functionally

Understand business logic, not just code

Are committed to continuous learning

Whether you’re hiring freelancers, building an internal team, or partnering with a service provider—vetting for these traits is key to long-term success.

Final Thoughts: Hire Smart, Build Faster

Tech moves fast—and the companies that keep up are the ones with the right talent by their side.

Choosing to hire developers who understand modern trends like Web3, AI, and SaaS is no longer optional. It’s the difference between building something that merely works—and building something that lasts, grows, and disrupts.

If you’re ready to build a world-class product with a team that understands both code and strategy, explore partnering with a trusted digital team today.

The future is being written in code, so make sure yours is built by the right hands.


Developing and Deploying AI/ML Applications on Red Hat OpenShift AI (AI268)

As AI and Machine Learning continue to reshape industries, the need for scalable, secure, and efficient platforms to build and deploy these workloads is more critical than ever. That’s where Red Hat OpenShift AI comes in—a powerful solution designed to operationalize AI/ML at scale across hybrid and multicloud environments.

With the AI268 course – Developing and Deploying AI/ML Applications on Red Hat OpenShift AI – developers, data scientists, and IT professionals can learn to build intelligent applications using enterprise-grade tools and MLOps practices on a container-based platform.

🌟 What is Red Hat OpenShift AI?

Red Hat OpenShift AI (formerly Red Hat OpenShift Data Science) is a comprehensive, Kubernetes-native platform tailored for developing, training, testing, and deploying machine learning models in a consistent and governed way. It provides tools like:

Jupyter Notebooks

TensorFlow, PyTorch, Scikit-learn

Apache Spark

KServe & OpenVINO for inference

Pipelines & GitOps for MLOps

The platform ensures seamless collaboration between data scientists, ML engineers, and developers—without the overhead of managing infrastructure.

📘 Course Overview: What You’ll Learn in AI268

AI268 focuses on equipping learners with hands-on skills in designing, developing, and deploying AI/ML workloads on Red Hat OpenShift AI. Here’s a quick snapshot of the course outcomes:

✅ 1. Explore OpenShift AI Components

Understand the ecosystem—JupyterHub, Pipelines, Model Serving, GPU support, and the OperatorHub.

✅ 2. Data Science Workspaces

Set up and manage development environments using Jupyter notebooks integrated with OpenShift’s security and scalability features.

✅ 3. Training and Managing Models

Use libraries like PyTorch or Scikit-learn to train models. Learn to leverage pipelines for versioning and reproducibility.

✅ 4. MLOps Integration

Implement CI/CD for ML using OpenShift Pipelines and GitOps to manage lifecycle workflows across environments.

✅ 5. Model Deployment and Inference

Serve models using tools like KServe, automate inference pipelines, and monitor performance in real time.
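To make the serving step concrete: KServe's V1 inference protocol accepts a JSON body with an `instances` list at `POST /v1/models/<model-name>:predict` and replies with a `predictions` list. A minimal Python sketch that builds (but does not send) such a request with the standard library; the host and model name are hypothetical, and real OpenShift AI deployments typically also require authentication headers:

```python
import json
import urllib.request


def build_predict_request(base_url: str, model: str,
                          instances: list) -> urllib.request.Request:
    """Build a KServe V1-protocol predict request (not sent here)."""
    url = f"{base_url.rstrip('/')}/v1/models/{model}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, method="POST",
        headers={"Content-Type": "application/json"},
    )


# Hypothetical endpoint for a model named "iris-classifier".
req = build_predict_request("https://iris.example.com",
                            "iris-classifier", [[5.1, 3.5, 1.4, 0.2]])
print(req.get_method(), req.full_url)
# Sending it would be: urllib.request.urlopen(req)
```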

🧠 Why Take This Course?

Whether you're a data scientist looking to deploy models into production or a developer aiming to integrate AI into your apps, AI268 bridges the gap between experimentation and scalable delivery. The course is ideal for:

Data Scientists exploring enterprise deployment techniques

DevOps/MLOps Engineers automating AI pipelines

Developers integrating ML models into cloud-native applications

Architects designing AI-first enterprise solutions

🎯 Final Thoughts

AI/ML is no longer confined to research labs—it’s at the core of digital transformation across sectors. With Red Hat OpenShift AI, you get an enterprise-ready MLOps platform that lets you go from notebook to production with confidence.

If you're looking to modernize your AI/ML strategy and unlock true operational value, AI268 is your launchpad.

👉 Ready to build and deploy smarter, faster, and at scale? Join the AI268 course and start your journey into Enterprise AI with Red Hat OpenShift.

For more details, visit www.hawkstack.com.


🔥 Master DevOps with AWS – Your Career Accelerator! 🔥 Are you ready to supercharge your career in the most in-demand field of 2025? 🚀 Join our DevOps with AWS training program by Mr. Ram, starting 23rd June at 7:30 AM (IST). Whether you're a fresher or IT professional, this program is your path to becoming a DevOps pro with hands-on skills and cloud expertise.

🧠 Course Highlights:

✅ Real-time implementation of CI/CD pipelines

✅ Deep dive into Docker, Kubernetes, and container orchestration

✅ Git & GitHub for seamless version control

✅ Infrastructure automation with Terraform

✅ Extensive AWS training: EC2, S3, CloudWatch, IAM & more

✅ Hands-on projects to simulate real-world DevOps challenges

📅 Enroll today and gain practical exposure to industry-level DevOps tools and workflows. Stand out in interviews with in-demand skills and project experience.

✍️ Register Now: https://tr.ee/3L50Dt

🎓 Explore more free courses: https://linktr.ee/ITcoursesFreeDemos

Learn from industry experts at Naresh i Technologies and take your DevOps journey to the next level. Let’s build and deploy smarter together!


What Is Argo CD, and When Was It Established?

What Is Argo CD?

Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes.

Argo CD has become a popular continuous delivery (CD) tool for deploying applications to Kubernetes. It is based on the GitOps deployment methodology.

When was Argo CD Established?

The founding developers of Applatix, Hong Wang, Jesse Suen, and Alexander Matyushentsev, made the Argo project open source in 2017. After Intuit acquired Applatix in 2018, Argo CD was created at Intuit and made publicly available.

Why Argo CD?

Application definitions, configurations, and environments should be declarative and version controlled. Application deployment and lifecycle management should be automated, auditable, and easy to understand.

Getting Started

Quick Start

kubectl create namespace argocd
kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

More user-oriented documentation is available for additional features. If you want to upgrade Argo CD, refer to the upgrade guide. Developer-oriented documentation is available for those interested in building third-party integrations.

How it works

Argo CD follows the GitOps pattern of using Git repositories as the source of truth for defining the desired application state. Kubernetes manifests can be specified in several ways:

Kustomize applications

Helm charts

Jsonnet files

Plain directory of YAML/JSON manifests

Any custom configuration management tool configured as a config management plugin

Argo CD automates the deployment of the desired application states in the specified target environments. Application deployments can track updates to branches or tags, or be pinned to a specific version of manifests at a Git commit.

Architecture

Argo CD is implemented as a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as specified in the Git repository). A deployed application whose live state deviates from the target state is considered OutOfSync. Argo CD reports and visualizes the differences, and it can sync the live state back to the desired target state either automatically or manually. Any changes made to the desired target state in the Git repository can be automatically applied and reflected in the specified target environments.
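The comparison at the heart of this loop can be sketched in a few lines. The Python below is a deliberately simplified model, not Argo CD's implementation (which is written in Go and performs normalized, structured diffs): it treats desired and live state as dictionaries keyed by a hypothetical "Kind/name" string and reports each resource as Synced or OutOfSync.

```python
from typing import Dict

Manifest = Dict[str, object]


def compare(desired: Dict[str, Manifest],
            live: Dict[str, Manifest]) -> Dict[str, str]:
    """Return a sync status per resource key, e.g. "Deployment/web"."""
    status = {}
    for key, want in desired.items():
        have = live.get(key)
        # A missing or different live resource counts as drift.
        status[key] = "Synced" if have == want else "OutOfSync"
    for key in live.keys() - desired.keys():
        # Tracked by the app but no longer in Git (would need pruning).
        status[key] = "OutOfSync"
    return status


def app_status(statuses: Dict[str, str]) -> str:
    """The application is Synced only if every resource is Synced."""
    if all(s == "Synced" for s in statuses.values()):
        return "Synced"
    return "OutOfSync"


desired = {"Deployment/web": {"replicas": 3, "image": "web:1.2"}}
live = {"Deployment/web": {"replicas": 5, "image": "web:1.2"}}  # scaled by hand
print(app_status(compare(desired, live)))  # OutOfSync
```

A sync would then apply the desired manifests so that the next comparison reports Synced.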

Components

API Server

The API server is a gRPC/REST server that exposes the API consumed by the Web UI, CLI, and CI/CD systems. It has the following responsibilities:

Status reporting and application management

Invoking application operations (e.g. sync, rollback, user-defined actions)

Repository and cluster credential management (stored as Kubernetes secrets)

RBAC enforcement

Authentication and auth delegation to external identity providers

Git webhook event listener/forwarder
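For RBAC enforcement, Argo CD policies are written as CSV-style lines such as `p, role:dev, applications, sync, team-a/*, allow` (subject, resource, action, object, effect, where an application object is `<project>/<application>`), and `g` lines assign users or groups to roles. The Python sketch below is a toy evaluator for that shape only: it uses `fnmatch` globbing and lets an explicit deny override an allow, which approximates but does not reproduce Argo CD's Casbin-based enforcement. The role, user, and project names are hypothetical.

```python
from fnmatch import fnmatch
from typing import List, Set


def parse(policy_csv: str):
    """Split policy text into permission (p) and grouping (g) rules."""
    perms, groups = [], []
    for raw in policy_csv.strip().splitlines():
        fields = [f.strip() for f in raw.split(",")]
        if fields[0] == "p":
            perms.append(fields[1:])   # subject, resource, action, object, effect
        elif fields[0] == "g":
            groups.append(fields[1:])  # member, role
    return perms, groups


def subjects_for(user: str, groups: List[List[str]]) -> Set[str]:
    """The user plus every role it is directly assigned to (no nesting)."""
    return {user} | {role for member, role in groups if member == user}


def enforce(policy_csv: str, user: str, resource: str,
            action: str, obj: str) -> bool:
    perms, groups = parse(policy_csv)
    subjects = subjects_for(user, groups)
    effects = [
        eff for sub, res, act, pat, eff in perms
        if sub in subjects and res == resource
        and fnmatch(action, act) and fnmatch(obj, pat)
    ]
    # Allowed only if some rule allows it and no rule denies it.
    return "allow" in effects and "deny" not in effects


POLICY = """
p, role:dev, applications, get, team-a/*, allow
p, role:dev, applications, sync, team-a/*, allow
p, role:dev, applications, sync, team-a/prod-*, deny
g, alice, role:dev
"""
print(enforce(POLICY, "alice", "applications", "sync", "team-a/web"))       # True
print(enforce(POLICY, "alice", "applications", "sync", "team-a/prod-web"))  # False
```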

Repository Server

An internal service called the repository server keeps a local cache of the Git repository containing the application manifests. When given the following inputs, it is in charge of creating and returning the Kubernetes manifests:

URL of the repository

Revision (tag, branch, commit)

Path of the application

Template-specific settings: parameters, Helm values.yaml
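Because the generated manifests depend only on these inputs, they make a natural cache key. A minimal Python sketch of the idea, not Argo CD's actual cache format, assuming a hypothetical `render` callable in place of the real Helm/Kustomize/Jsonnet tooling. A branch or tag name would first need to be resolved to a commit SHA; otherwise a moving branch would keep returning stale manifests.

```python
import hashlib
import json
from typing import Callable, Dict, List


class ManifestCache:
    """Memoize manifest generation keyed on (repo, commit, path, params)."""

    def __init__(self, render: Callable[..., List[dict]]):
        self._render = render  # hypothetical stand-in for helm/kustomize/etc.
        self._cache: Dict[str, List[dict]] = {}
        self.renders = 0  # how many times we actually rendered

    @staticmethod
    def key(repo_url: str, commit_sha: str, path: str, params: dict) -> str:
        # sort_keys makes equal parameter dicts produce the same key.
        raw = json.dumps([repo_url, commit_sha, path, params], sort_keys=True)
        return hashlib.sha256(raw.encode()).hexdigest()

    def get(self, repo_url: str, commit_sha: str, path: str,
            params: dict) -> List[dict]:
        k = self.key(repo_url, commit_sha, path, params)
        if k not in self._cache:
            self._cache[k] = self._render(repo_url, commit_sha, path, params)
            self.renders += 1
        return self._cache[k]


def fake_render(repo_url, commit_sha, path, params):
    return [{"kind": "Deployment", "replicas": params.get("replicas", 1)}]


cache = ManifestCache(fake_render)
cache.get("https://git.example.com/app.git", "3f2c1ab", "deploy", {"replicas": 2})
cache.get("https://git.example.com/app.git", "3f2c1ab", "deploy", {"replicas": 2})
print(cache.renders)  # 1: the second call was served from the cache
```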

Application Controller

The application controller is a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as specified in the repository). When it detects an OutOfSync application state, it can optionally take corrective action. It is also responsible for invoking any user-defined hooks for lifecycle events (PreSync, Sync, and PostSync).
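During a sync, Argo CD orders work by phase (PreSync, then Sync, then PostSync) and, within a phase, by sync wave, read from the `argocd.argoproj.io/sync-wave` annotation (default 0, lower waves first). A simplified Python sketch of that ordering; Argo CD additionally applies kind-based ordering, supports multi-phase and SyncFail hooks, and waits for each wave to become healthy, all of which this sketch omits. The resource names are hypothetical.

```python
from typing import Dict, List

PHASE_ORDER = {"PreSync": 0, "Sync": 1, "PostSync": 2}


def sync_order(resources: List[Dict]) -> List[str]:
    """Sort resources by (phase, wave, name) and return their names."""
    def sort_key(res: Dict):
        ann = res.get("annotations", {})
        # Resources without a hook annotation belong to the Sync phase.
        phase = ann.get("argocd.argoproj.io/hook", "Sync")
        wave = int(ann.get("argocd.argoproj.io/sync-wave", "0"))
        return (PHASE_ORDER[phase], wave, res["name"])

    return [r["name"] for r in sorted(resources, key=sort_key)]


resources = [
    {"name": "smoke-test", "annotations": {"argocd.argoproj.io/hook": "PostSync"}},
    {"name": "web", "annotations": {"argocd.argoproj.io/sync-wave": "1"}},
    {"name": "db-migrate", "annotations": {"argocd.argoproj.io/hook": "PreSync"}},
    {"name": "config", "annotations": {"argocd.argoproj.io/sync-wave": "-1"}},
    {"name": "api"},
]
print(sync_order(resources))
# ['db-migrate', 'config', 'api', 'web', 'smoke-test']
```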

Features

Applications are automatically deployed to designated target environments.

Multiple configuration management/templating tools (Kustomize, Helm, Jsonnet, and plain-YAML) are supported.

Ability to manage and deploy to multiple clusters

SSO integration (OIDC, OAuth2, LDAP, SAML 2.0, GitHub, GitLab, Microsoft, LinkedIn)

Multi-tenancy and RBAC policies for authorization

Rollback/roll-anywhere to any application configuration committed in the Git repository

Health status analysis of application resources

Automated configuration drift detection and visualization

Applications can be synced manually or automatically to their desired state.

Web UI that provides a real-time view of application activity

CLI for CI integration and automation

Integration of webhooks (GitHub, BitBucket, GitLab)

Access tokens for automation

PreSync, Sync, and PostSync hooks to support complex application rollouts (e.g. blue/green and canary upgrades)

Audit trails for application events and API calls

Prometheus metrics

Parameter overrides for overriding Helm parameters in Git

Read more on Govindhtech.com


🌐 DevOps with AWS – Learn from the Best! 🚀 Kickstart your tech journey with our hands-on DevOps with AWS training program led by expert Mr. Ram, starting 23rd June at 7:30 AM (IST). Whether you're an aspiring DevOps engineer or an IT enthusiast looking to upskill, this course is your gateway to mastering modern software delivery pipelines.

💡 Why DevOps with AWS? In today's tech-driven world, companies demand faster deployments, better scalability, and secure infrastructure. This course combines core DevOps practices with the powerful cloud platform AWS, giving you the edge in a competitive market.

📘 What You’ll Learn:

CI/CD Pipeline with Jenkins

Version Control using Git & GitHub

Docker & Kubernetes for containerization

Infrastructure as Code with Terraform

AWS services for DevOps: EC2, S3, IAM, Lambda & more

Real-time projects with monitoring & alerting tools

📌 Register here: https://tr.ee/3L50Dt

🔍 Explore More Free Courses: https://linktr.ee/ITcoursesFreeDemos

Be future-ready with Naresh i Technologies – where expert mentors and project-based learning meet career transformation. Don’t miss this opportunity to build smart, deploy faster, and grow your DevOps career.


Build a Future-Ready Tech Career with a DevOps Course in Pune

In today's rapidly evolving software industry, the demand for seamless collaboration between development and operations teams is higher than ever. DevOps, a combination of “Development” and “Operations,” has emerged as a powerful methodology to improve software delivery speed, quality, and reliability. If you’re looking to gain a competitive edge in the tech world, enrolling in a DevOps course in Pune is a smart move.

Why Pune is a Hub for DevOps Learning

Pune, often dubbed the “Oxford of the East,” is not only known for its educational excellence but also for being a thriving IT and startup hub. With major tech companies and global enterprises setting up operations here, the city offers abundant learning and employment opportunities. Choosing a DevOps course in Pune gives students access to industry-oriented training, hands-on project experience, and potential job placements within the local ecosystem.

Moreover, Pune’s cost-effective lifestyle and growing tech infrastructure make it an ideal city for both freshers and professionals aiming to upskill.

What You’ll Learn in a DevOps Course

A comprehensive DevOps course in Pune equips learners with a wide range of skills needed to automate and streamline software development processes. Most courses include:

Linux Fundamentals and Shell Scripting

Version Control Systems like Git & GitHub

CI/CD Pipeline Implementation using Jenkins

Containerization with Docker

Orchestration using Kubernetes

Cloud Services: AWS, Azure, or GCP

Infrastructure as Code (IaC) with Terraform or Ansible

Many training programs also include real-world projects, mock interviews, resume-building workshops, and certification preparation to help learners become job-ready.

Who Should Take This Course?

A DevOps course in Pune is designed for a wide audience—software developers, system administrators, IT operations professionals, and even students who want to step into cloud and automation roles. Basic knowledge of programming and Linux can be helpful, but many beginner-level courses start from the fundamentals and gradually build up to advanced concepts.

Whether you are switching careers or aiming for a promotion, DevOps offers a high-growth path with diverse opportunities.

Career Opportunities After Completion

Once you complete a DevOps course in Pune, a variety of career paths open up in IT and tech-driven industries. Some of the most in-demand roles include:

DevOps Engineer

Site Reliability Engineer (SRE)

Automation Engineer

Build and Release Manager

Cloud DevOps Specialist

These roles are not only in demand but also come with attractive salary packages and global career prospects. Companies in Pune and across India are actively seeking certified DevOps professionals who can contribute to scalable, automated, and efficient development cycles.

Conclusion

Taking a DevOps course in Pune (https://www.apponix.com/devops-certification/DevOps-Training-in-Pune.html) is more than just an educational step; it's a career-transforming investment. With a balanced mix of theory, tools, and practical exposure, you’ll be well-equipped to tackle real-world DevOps challenges. Pune’s dynamic tech landscape offers a strong launchpad for anyone looking to master DevOps and step confidently into the future of IT.


DevOps for Beginners: Navigating the Learning Landscape

DevOps, a revolutionary approach in the software industry, bridges the gap between development and operations by emphasizing collaboration and automation. For beginners, entering the world of DevOps might seem like a daunting task, but it doesn't have to be. In this blog, we'll provide you with a step-by-step guide to learn DevOps, from understanding its core philosophy to gaining hands-on experience with essential tools and cloud platforms. By the end of this journey, you'll be well on your way to mastering the art of DevOps.

The Beginner's Path to DevOps Mastery:

1. Grasp the DevOps Philosophy:

Start with the Basics: DevOps is more than just a set of tools; it's a cultural shift in how software development and IT operations work together. Begin your journey by understanding the fundamental principles of DevOps, which include collaboration, automation, and delivering value to customers.

2. Get to Know Key DevOps Tools:

Version Control: One of the first steps in DevOps is learning about version control systems like Git. These tools help you track changes in code, collaborate with team members, and manage code repositories effectively.

Continuous Integration/Continuous Deployment (CI/CD): Dive into CI/CD tools like Jenkins and GitLab CI. These tools automate the building and deployment of software, ensuring a smooth and efficient development pipeline.

Configuration Management: Gain proficiency in configuration management tools such as Ansible, Puppet, or Chef. These tools automate server provisioning and configuration, allowing for consistent and reliable infrastructure management.

Containerization and Orchestration: Explore containerization using Docker and container orchestration with Kubernetes. These technologies are integral to managing and scaling applications in a DevOps environment.

3. Learn Scripting and Coding:

Scripting Languages: DevOps engineers often use scripting languages such as Python, Ruby, or Bash to automate tasks and configure systems. Learning the basics of one or more of these languages is crucial.

Infrastructure as Code (IaC): Delve into Infrastructure as Code (IaC) tools like Terraform or AWS CloudFormation. IaC allows you to define and provision infrastructure using code, streamlining resource management.
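IaC tools such as Terraform compare the infrastructure declared in code with what currently exists and produce a plan of creates, updates, and deletes before applying anything. A toy Python sketch of that planning step, assuming resources are plain dictionaries keyed by an address like `aws_instance.web`; real tools also track dependencies, state files, and attributes that force replacement rather than an in-place update.

```python
from typing import Dict, List, Tuple

Resources = Dict[str, dict]


def plan(desired: Resources, current: Resources) -> List[Tuple[str, str]]:
    """Return (action, address) pairs in a stable, sorted order."""
    actions = []
    for addr in sorted(desired.keys() | current.keys()):
        if addr not in current:
            actions.append(("create", addr))
        elif addr not in desired:
            actions.append(("delete", addr))
        elif desired[addr] != current[addr]:
            actions.append(("update", addr))
        # identical resources need no action
    return actions


desired = {
    "aws_instance.web": {"instance_type": "t3.small"},
    "aws_s3_bucket.logs": {"versioning": True},
}
current = {
    "aws_instance.web": {"instance_type": "t3.micro"},
    "aws_instance.old": {"instance_type": "t2.micro"},
}
for action, addr in plan(desired, current):
    print(f"{action:>6} {addr}")
```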

4. Build Skills in Cloud Services:

Cloud Platforms: Learn about the main cloud providers, such as AWS, Azure, or Google Cloud, and how to create, configure, and manage cloud resources. These skills are essential, as DevOps often involves deploying and managing applications in the cloud.

DevOps in the Cloud: Explore how DevOps practices can be applied within a cloud environment. Utilize services like AWS Elastic Beanstalk or Azure DevOps for automated application deployments, scaling, and management.

5. Gain Hands-On Experience:

Personal Projects: Put your knowledge to the test by working on personal projects. Create a small web application, set up a CI/CD pipeline for it, or automate server configurations. Hands-on practice is invaluable for gaining real-world experience.

Open Source Contributions: Participate in open source DevOps initiatives. Collaborating with experienced professionals and contributing to real-world projects can accelerate your learning and provide insights into industry best practices.

6. Enroll in DevOps Courses:

Structured Learning: Consider enrolling in DevOps courses or training programs to ensure a structured learning experience. Institutions like ACTE Technologies offer comprehensive DevOps training programs designed to provide hands-on experience and real-world examples. These courses cater to beginners and advanced learners, ensuring you acquire practical skills in DevOps.

In your quest to master the art of DevOps, structured training can be a game-changer. ACTE Technologies, a renowned training institution, offers comprehensive DevOps training programs that cater to learners at all levels. Whether you're starting from scratch or enhancing your existing skills, ACTE Technologies can guide you efficiently and effectively in your DevOps journey. DevOps is a transformative approach in the world of software development, and it's accessible to beginners with the right roadmap. By understanding its core philosophy, exploring key tools, gaining hands-on experience, and considering structured training, you can embark on a rewarding journey to master DevOps and become an invaluable asset in the tech industry.


Helm Tutorials— Simplify Kubernetes Package Handling | Waytoeasylearn

Helm is a tool designed to simplify managing applications on Kubernetes. It makes installing, upgrading, and sharing software on Kubernetes clusters easier. This article explains what Helm is, how it works, and why many people prefer it. It highlights its key features and shows how it can improve your workflow.

Master Helm Effortlessly! 🚀 Dive into the Best Waytoeasylearn Tutorials for Streamlined Kubernetes & Cloud Deployments.➡️ Learn Now!

What You Will Learn

✔ What Helm Is: Understand why Helm is important for Kubernetes and what its main parts are.

✔ Helm Charts & Templates: Learn to create and modify Helm charts using templates, variables, and built-in features.

✔ Managing Repositories: Set up repositories, host your charts, and track different versions with ChartMuseum.

✔ Handling Charts & Dependencies: Perform upgrades and rollbacks, and manage dependencies easily.

✔ Helm Hooks & Kubernetes Jobs: Use hooks to run tasks before or after installation and updates.

✔ Testing & Validation: Check Helm charts through linting, status checks, and organized tests.
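Helm renders chart templates against values built by layering sources: the chart's `values.yaml` first, then any `-f`/`--values` files in order, then `--set` flags, with later sources winning. Nested maps are merged key by key, while lists are replaced wholesale. A minimal Python sketch of that layering; it ignores Helm details such as a `null` value deleting a key and subchart value scoping:

```python
import copy
from typing import Any, Dict


def merge_values(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Return base deep-merged with override; override wins on conflicts."""
    result = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge_values(result[key], value)  # merge nested maps
        else:
            result[key] = copy.deepcopy(value)  # scalars and lists replace
    return result


chart_defaults = {"replicaCount": 1,
                  "image": {"repository": "nginx", "tag": "1.25"},
                  "ports": [80]}
prod_file = {"replicaCount": 3, "ports": [80, 443]}  # like -f values-prod.yaml
set_flags = {"image": {"tag": "1.27"}}               # like --set image.tag=1.27

final = chart_defaults
for layer in (prod_file, set_flags):
    final = merge_values(final, layer)
print(final)
```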

Why Take This Course?

🚀 Simplifies Kubernetes Workflows: Automate the process of deploying applications with Helm.

💡 Hands-On Learning: Use real-world examples and case studies to see how Helm works.

⚡ Better Management of Charts & Repositories: Follow best practices for organizing and handling charts and repositories.

After this course, you will be able to manage Kubernetes applications more efficiently using Helm’s tools for automation and packaging.


52013l4 in Modern Tech: Use Cases and Applications

In a technology-driven world, identifiers and codes are more than just strings—they define systems, guide processes, and structure workflows. One such code gaining prominence across various IT sectors is 52013l4. Whether it’s in cloud services, networking configurations, firmware updates, or application builds, 52013l4 has found its way into many modern technological environments. This article will explore the diverse use cases and applications of 52013l4, explaining where it fits in today’s digital ecosystem and why developers, engineers, and system administrators should be aware of its implications.

Why 52013l4 Matters in Modern Tech

In the past, loosely defined build codes or undocumented system identifiers led to chaos in large-scale environments. Modern software engineering emphasizes observability, reproducibility, and modularization. Codes like 52013l4:

Help standardize complex infrastructure.

Enable cross-team communication in enterprises.

Create a transparent map of configuration-to-performance relationships.

Thus, 52013l4 isn’t just a technical detail—it’s a tool for governance in scalable, distributed systems.

Use Case 1: Cloud Infrastructure and Virtualization

In cloud environments, maintaining structured builds and ensuring compatibility between microservices is crucial. 52013l4 may be used to:

Tag versions of container images (like Docker or Kubernetes builds).

Mark configurations for network load balancers operating at Layer 4.

Denote system updates in CI/CD pipelines.

Cloud providers like AWS, Azure, or GCP often reference such codes internally. When managing firewall rules, security groups, or deployment scripts, engineers might encounter a 52013l4 identifier.

Use Case 2: Networking and Transport Layer Monitoring

Given its likely relation to Layer 4, 52013l4 becomes relevant in scenarios involving:

Firewall configuration: Specifying allowed or blocked TCP/UDP ports.

Intrusion detection systems (IDS): Tracking abnormal packet flows using rules tied to 52013l4 versions.

Network troubleshooting: Tagging specific error conditions or performance data by Layer 4 function.

For example, a DevOps team might use 52013l4 as a keyword to trace problems in TCP connections that align with a specific build or configuration version.
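Layer 4 firewall rules typically match on protocol and port, and many firewalls evaluate rules in order, apply the first match, and fall back to a default policy. A toy Python sketch of first-match evaluation; the rule set is hypothetical, and the `52013l4` label attached to it is used only in the article's illustrative sense, not as a real product identifier:

```python
from dataclasses import dataclass
from typing import List, Optional

RULESET_VERSION = "52013l4"  # hypothetical version label for this rule set


@dataclass
class Rule:
    action: str                 # "allow" or "deny"
    protocol: str               # "tcp" or "udp"
    port: Optional[int] = None  # None matches any port


def evaluate(rules: List[Rule], protocol: str, port: int,
             default: str = "deny") -> str:
    """Return the action of the first matching rule, else the default."""
    for rule in rules:
        if rule.protocol == protocol and rule.port in (None, port):
            return rule.action
    return default


rules = [
    Rule("allow", "tcp", 443),
    Rule("deny", "tcp", 23),  # block telnet explicitly
    Rule("allow", "udp", 53),
]
print(RULESET_VERSION, evaluate(rules, "tcp", 443))   # 52013l4 allow
print(RULESET_VERSION, evaluate(rules, "tcp", 8080))  # no match: default deny
```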

Use Case 3: Firmware and IoT Devices

In embedded systems or Internet of Things (IoT) environments, firmware must be tightly versioned and managed. 52013l4 could:

Act as a firmware version ID deployed across a fleet of devices.

Trigger a specific set of configurations related to security or communication.

Identify rollback points during over-the-air (OTA) updates.

A smart home system, for instance, might roll out firmware_52013l4.bin to thermostats or sensors, ensuring compatibility and stable transport-layer communication.

Use Case 4: Software Development and Release Management

Developers often rely on versioning codes to track software releases, particularly when integrating network communication features. In this domain, 52013l4 might be used to:

Tag milestones in feature development (especially for APIs or sockets).

Mark integration tests that focus on Layer 4 data flow.

Coordinate with other teams (QA, security) based on shared identifiers like 52013l4.

Use Case 5: Cybersecurity and Threat Management

Security engineers use identifiers like 52013l4 to define threat profiles or update logs. For instance:

A SIEM tool might generate an alert tagged as 52013l4 to highlight repeated TCP SYN floods.

Security patches may address vulnerabilities discovered in the 52013l4 release version.

An organization’s SOC (Security Operations Center) could use 52013l4 in internal documentation when referencing a Layer 4 anomaly.

Organizing security incidents by version or layer can shorten incident response times and make root cause analysis easier.
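As a rough illustration of the SYN flood alert mentioned above, the sketch below counts bare SYN packets per source address in a sliding time window and emits one alert, tagged 52013l4, per offending source. The event tuple format, the default threshold of 100 SYNs per second, and the `Alert` type are assumptions for illustration; a real SIEM detection would be written in that product's own rule language and tuned to the environment:

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Deque, Dict, Iterable, List, Set, Tuple


@dataclass
class Alert:
    tag: str            # identifier attached to the alert, e.g. "52013l4"
    src: str            # source address sending the SYNs
    syn_count: int      # SYNs inside the window when the alert fired
    window_start: float


def detect_syn_floods(
    events: Iterable[Tuple[float, str, str]],
    threshold: int = 100,
    window: float = 1.0,
    tag: str = "52013l4",
) -> List[Alert]:
    """Flag sources sending more than `threshold` bare SYNs within `window` seconds.

    Each event is (timestamp_seconds, source_ip, tcp_flags), with flags as a
    string such as "S" (SYN) or "SA" (SYN-ACK). Events must be in time order.
    """
    recent: Dict[str, Deque[float]] = defaultdict(deque)
    alerted: Set[str] = set()
    alerts: List[Alert] = []
    for ts, src, flags in events:
        # Count only connection-opening SYNs; SYN-ACK replies carry the ACK flag.
        if "S" not in flags or "A" in flags:
            continue
        q = recent[src]
        q.append(ts)
        # Drop timestamps that have slid out of the window.
        while q and ts - q[0] > window:
            q.popleft()
        # Alert once per source to avoid flooding the SOC with duplicates.
        if len(q) > threshold and src not in alerted:
            alerts.append(Alert(tag=tag, src=src, syn_count=len(q), window_start=q[0]))
            alerted.add(src)
    return alerts
```

Counting only SYNs without the ACK flag keeps legitimate SYN-ACK replies from inflating the count, and the fixed tag makes every alert from this rule easy to group in incident records.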

Use Case 6: Testing and Quality Assurance

QA engineers frequently simulate different network scenarios and need clear identifiers to catalog results. Here’s how 52013l4 can be applied:

In test automation tools, it can label a specific test scenario.

Load-testing tools like Apache JMeter might reference 52013l4 configurations for transport-level stress testing.

Bug-tracking software may log defects under the 52013l4 build to isolate problems introduced by that build during regression testing.
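Isolating problems introduced by a build usually comes down to comparing its test results with those of the previous build. A minimal sketch, assuming results are stored as a test-name-to-pass/fail mapping per build; the baseline build label 52012l4 and the test names are hypothetical:

```python
from typing import Dict, Set


def find_regressions(baseline: Dict[str, bool], candidate: Dict[str, bool]) -> Set[str]:
    """Tests that passed on the baseline build but fail on the candidate build.

    Each dict maps a test name to True (pass) or False (fail). Tests absent
    from the baseline are treated as new, not as regressions.
    """
    return {
        name
        for name, passed in candidate.items()
        if not passed and baseline.get(name) is True
    }


if __name__ == "__main__":
    # Hypothetical results keyed by build identifier.
    results = {
        "52012l4": {"tcp_handshake": True, "udp_burst": False, "keepalive": True},
        "52013l4": {"tcp_handshake": False, "udp_burst": False, "keepalive": True},
    }
    print(sorted(find_regressions(results["52012l4"], results["52013l4"])))
    # prints ['tcp_handshake']
```

A test that already failed on the baseline (here `udp_burst`) is excluded, so the output lists only what the 52013l4 build broke.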

What is 52013l4?

At its core, 52013l4 is an identifier, potentially used in system architecture, internal documentation, or as a versioning label in layered networking systems. Its format suggests a structured sequence: “52013” might represent a version code, build date, or feature reference, while “l4” is commonly read as Layer 4 of the OSI Model, the Transport Layer. Because of this association, 52013l4 would most plausibly appear in contexts that involve network communication, protocol configuration (e.g., TCP/UDP), or system behavior tracking in distributed computing.
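The reading of the format given above can be made concrete with a small parser. This is a sketch under the same assumption the paragraph makes, that the identifier is a numeric code followed by "l" and an OSI layer number; the names `parse_layered_id` and `LayeredId` are hypothetical:

```python
import re
from dataclasses import dataclass
from typing import Optional

# Assumed layout: digits, then "l" (or "L"), then an OSI layer number 1-7.
_ID_PATTERN = re.compile(r"(?P<code>[0-9]+)[lL](?P<layer>[1-7])")

OSI_LAYERS = {
    1: "Physical",
    2: "Data Link",
    3: "Network",
    4: "Transport",
    5: "Session",
    6: "Presentation",
    7: "Application",
}


@dataclass(frozen=True)
class LayeredId:
    code: str   # kept as a string so leading zeros (e.g. in dates) survive
    layer: int  # OSI layer number, 4 for "l4"

    @property
    def layer_name(self) -> str:
        return OSI_LAYERS[self.layer]


def parse_layered_id(raw: str) -> Optional[LayeredId]:
    """Split an identifier like '52013l4' into its code and layer, or return None."""
    m = _ID_PATTERN.fullmatch(raw.strip())
    if m is None:
        return None
    return LayeredId(code=m.group("code"), layer=int(m.group("layer")))
```

Under this assumption, `parse_layered_id("52013l4")` yields code `"52013"` and layer 4, whose `layer_name` is `"Transport"`; what the code part itself means (version, date, or feature) still has to come from the owning team's conventions.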

FAQs About 52013l4 Applications

Q1: What kind of systems use 52013l4? Ans. Identifiers like 52013l4 are most likely to appear in cloud computing, networking hardware, application development environments, and firmware systems. They are particularly relevant to Layer 4 monitoring and version tracking.

Q2: Is 52013l4 an open standard? Ans. No, 52013l4 is not a formal standard like HTTP or ISO. It’s more likely an internal or semi-standardized identifier used in technical implementations.

Q3: Can I change or remove 52013l4 from my system? Ans. Only if you fully understand its purpose. Removing references to 52013l4 without that context can break dependencies or configurations.

Conclusion

As modern technology systems grow in complexity, clear identifiers like 52013l4 help keep operations smooth, communication reliable, and infrastructure maintainable. From cloud orchestration to embedded firmware, an identifier of this kind can quietly link performance, security, and development efforts. Understanding where it is used and applying it consistently can streamline operations, speed up incident response, and improve collaboration across your technical teams.
