#no remembering how to create functions Only inputting data into the correct fields

Explore tagged Tumblr posts

Text

my ridiculous massive spreadsheet projects for logging every pokemon i own and recording every fusion ive made in cassette beasts are dangerous, because theyre really good at making me FEEL like im being productive- like omg im accomplishing so much, im checking off boxes im filling the spreadsheet!!!! but im not actually accomplishing anything meaningful. theyre a kind of like art in a way, where the art is in the process of completing them for the sake of it? but its not the kind of thing thats worth anything to anyone who isnt me.

honestly i think theyre fine enough hobby activities its just like. obsessively entering useless data into spreadsheets definitely eats up the time i could be spending drawing or doing something creative

#i need to look for data entry jobs. the only downside is that im not particularly good at like… actual spreadsheet coding stuff#no remembering how to create functions Only inputting data into the correct fields#i need a text post tag

12 notes

·

View notes

Text

Arquius 03/14/2019

Your name is ARQUIUS HALHAK, at least until you find another combination of names that are just as funny as Strihak. You are finally yourself, an after a day with tying to recuperate everything that was on your brainwashed self and feeding the poor opossums in the maze's shed its finally nighttime. You take another walk out through the snow. It just bothers you that much to stay not doing anything, but you are dead so you can't even be online properly. You look at the police building. Hm. You make a choice to bother someone who still has mystery to you which is what I call 10/10 dope. You shoot the older older older older Zahhak a message.

[ARQ > >---->] @Darkleer

ARQ: 🕶️--> Hello STRONG brorse, lets re-do introductions, I apologize for my abrasive precious behavior ,I wasn't in my right mind when I was inquiring about your %istance

ARQ: 🕶️--> My name is Arquius, I'm an AI and I see you

Darkleer 03/14/2019

: »—Hh—>

: »—Hello.—>

: »—What—>

Arquius 03/14/2019

ARQ: 🕶️--> I like your sweatpants

ARQ: 🕶️--> And you are bigger than the others too. Bigger than me I bet STRONGER

ARQ: 🕶️--> As I said, I see you

In the dark is probably hard to see you in the dark and hearing you wouldn't be like actually hearing someone stepping in snow, you sound like a horse stepping in snow. You can see through some small windows that give some light to the basement, ah hah. You make a point to not stay near a window for too long, if he is to see you is moving in and out of his peripheral vision. You might enjoy playing a little too much.

Darkleer 03/14/2019

> You sense something moving in the shadows, and considering you spent almost a millenium to steel yourself for hunt, combat and danger, that has you on the highest alert. Feels bad, man.

: »—That is—>

: »—Cease this at once.—>

: »—I do not wish to hurt you, but you're STRONGLY pressing your luck.—>

: »—You type like a Zahhak. But you said you're an artificial intelligence.—>

: »—Did Equius or Horuss create you?—>

Arquius 03/14/2019

You are the prime being on pressing your luck being in this side of the planet. You giggle a little, you are just so excited. Nothing wrong with being genuine and creepy at the same time.

ARQ: 🕶️--> I like your prosthetics

ARQ: 🕶️--> Oh no, I come from another plane, a simple AutoResponder with a body then I crashed onto Equius' computer and something went wrong... or more like PERFECT

ARQ: 🕶️--> Although yes, he did help me with my new acquisitions of a body

ARQ: 🕶️--> No, I don't mean ill, although I wouldn't blame an organic to take my genuineness ass a menace

Darkleer 03/14/2019

: »—I see.—>

: »—Regardless, stop this spying at once.—>

: »—It seems that you are intelligence enough to understand such an order.—>

Arquius 03/14/2019

ARQ: 🕶️--> I only follow the STRONGEST of commands dear Mister %

ARQ: 🕶️--> Are your commands STRONG enough for such thing?

ARQ: 🕶️--> Maybe if you would let me in or come and get me although I like it here, is like having a fish in a bowl

You make a quick knock at one of the windows a very visible read eye peering inside. It shows your face for a second. You do a little wave before the light turns off and you are covered by darkness.

Darkleer 03/14/2019

> You catch sight of him and then he is gone again. This is. The worst. You're not really nervous, for the record, but very annoyed. This is a transgression of your privacy. He ignored both your request and your warning. You had enough of not being able to control your own creations, you don't need other AIs harrassing you.

Part of you is admittedly curious about his contruction and his apparently very stubborn personality. But the current situation is unacceptable.

You get up and exit through the backdoor leading outdoors. With your eyes having no problem adjusting to the dark, you scan the surroundings for any sign of the intruder, wary as you're not convinced of him not being a threat.

"You will stop this sneaking around at once. Show yourself."

Arquius 03/14/2019

When you see him leave something startles you very much so, you don't want to get hyper destroyed just because you liked to play around. As he is out of sight you move so you are closer to the entrance of the maze in case you need to... escape. You can see him and you hear him, you wish you could be behind him to do your entrance instead of to this distance and basically in his field of vision once you light up but... security first.

You do that, tilting your head as all your installed leds light up from bottom to up, eyes last. You make a very realistic horse noise. "Hi STRONG friend." Little wave.

Darkleer 03/14/2019

There he is. You're actually a little taken aback by this display, but your fascination wins over. He indeed seems to be a full android creation and.. there are a fer design choices that are apparently meant to resemble Equius. Also, are those hooves?

But.. security first.

"Do you have permission to be on the premise?" As far as you know, these are private grounds of members of the police force and outsiders are only permitted in on invitation and with company. He is... definitely alone and hiding outside in the dark.

Arquius 03/15/2019

"... Equius knows I'm here." Kind of. "Its my witness protection because if the Felt gets hands in my hot robotic body I'm good as dead." Now that is true, except its not witness protection or anything legal you just kind of hang here. "Is good to see you upclose for once, makes all the details all the more fascinating. There is a 90% chance you will be scanned." That's a lie, you are scanning him right now.

Darkleer 03/15/2019

Hm. You don't know enough to argue that, but you honestly sort of want to avoid asking Equius. But as long as this robot is in your company, it should be acceptable.

May he scan you as he likes. Not much there that he can figure out that a normal person with eyes couldn't. Meanwhile you worldlessly step up to him and do your own variant of that, taking his face in your hand and tilting it to have a good look. "Impeccable work.."

Arquius 03/15/2019

There is a moment of menace that he gives that you don't let show. But since he is getting close is the silent permission for you to get close. Its the permission for you to flex. "I commando you to touch my muscles they are quite fantastic bro." You are very much proud of yourself, although. "I'm a little bit more than just it, I'll never stop learning." Sort of, or perhaps that is your excuse to pull the tanktop a tad examine HIS cyborg bits, not that you have to since most of it is in view now but... boy. "I have never seen a full blown cyborg befoal. This is fascinating, and certainly a correct approach to evolution, flesh is mare weak and will never be logically and functionally efficient. I knew I liked you mister X or Expatriate."

Darkleer 03/15/2019

Him suddenly returning the curious touch has you startle But uh, huh. It's only fair. This robot is a weird one and you don't think you like all of his particularities, but overall your fascination is too big to tell him off just yet. It's only after his comment that you pull away and huff.

"...being efficient should not be the goal."

You turn around to return to your workshop, but stop as you reach the door. "Come in."

Arquius 03/15/2019

You blink. Must be an organic thing. Aaaaaand you blink again. You are surprised and it shows. You haven't expected that he would let you in. You haven't foreseen that he would invite you of all things. "Magnificent, thank you for your generosity my good Adonis of a horse, I finally get to look at it from the inside." You are almost trotting inside because you are joyful. Almost, you do make an effort to clean all snow and dirt from you right at the entrance so you don't end up messing anything as you make now skip your way in.

"I love nights inside, even if momentarily. I do love being everywhere. Say, why the invitation Strong friend?" You are not planning to kill me are you, you think. You remember exactly the amount of escape and how much time would it take you to reach them and then hide, you have no data on Expatriate proper, so if it comes to it... The Data is basically worthless.(edited)

Darkleer 03/17/2019

"You.. asked to be let in. I am letting you in." It's pretty simple and straightforward. The cold hardly bothers you, but you don't want to keep standing around in the open this awkward and inappropriately dressed.

You have no reason to harm him. Yet. If he poses a threat to yourself or someone else, you should be able to defeat him easily. Until then, there's no harm in trying to learn as much about this eccentric creation as you can.

Your workshop is furnished sparingly. Just the bare necessities, no real sign of comfort besides maybe a couch, hardly anything that would make this seem like someone's living quarters. And yet this is where you reside now.

All available surfaces are littered with tools and machines of human and alternian design. Not as much of a mess as your old home has been, partially because you couldn't bring all of it with you, partially because you've only been here for roughly a week.

Arquius 03/18/2019

"B@$%h you live like this?" The joke is right there and to be fair his quarters are still not the worse of the three, considering you lived in a maze until about 5 minutes ago, oh yeah, you live here now. He let you in, you are staying in here.

"I've asked before, it normally doesn't happen. I'm glad it finally did." You go directly to the tools, you recognize a lot of this, mostly because Equius' place has around the same things. You are quick to wonder if maybe you could fix your bad tooth, but also are quick to ignore that input. You decide to find a shelf or table with enough space to sit and get comfortable enough. "Now that you've let me in I will reside here as long as necessary unless I'm feeding the animals in the maze. I hope you understand." And if he doesn't you are going to do it anyway.

Darkleer 03/19/2019

"I do" you pant, mildly - no - very irritated. "You will stay for as long as I permit." That said, you sit down across him.

The urge to pick him apart and learn more about his construction is STRONG, but that wouldn't be appropriate without permission - of his creator or.. himself, probably. "Where did you state you came from?" It was something weird. Another universe? Hm. You don't know enough about what else is out there to argue that. "If not Equius, who made you?"

Arquius 03/19/2019

"Another universe, one dominated by a troll, she was quite freaking awful. But TLDR, the maze." You have to admit that the issue has never popped before for you, you got twined with a troll from the get go so it wasn't jarring to see them just as friends as it would had been have you just been Hal. But yes, trolls are nice here and not space conquerors waiting to ruin your day. "Equius, Hal, Dirk, me. It is lest about who made me and what I am right now. At least take me to dinner before being so invasive. I command you to be less invasive with your querys, I don't enjoy the path of conversation regarding my construction."

Darkleer Last Thursday at 9:31 AM

You know plenty about trolls that dominate universes. Hn. Could it be her? Another her? The same one? Well, if she is in a different universe, it has little meaning to you. Except, if this robot could travel across universes.. could she as well?

You shake that train of thought. Not what's important at the moment.

"You have no need for dinner." You huff. "You have been invasive first, with little care for my requests." But fine, you don't have the nerve to argue with a stubborn android. You turn away and pick up a bit of metal to tinker with it some more, or so you pretend. It's a piece that.. seems to have little function besides aesthetic. What a radical notion for you.

"What do you do here?" you ask, not looking up from the small figurine.

Arquius Last Friday at 2:42 PM

"I live in a nutritious diet of comedy an irony, bro." You could say those concepts can't be eaten but you beg to differ. "Oh and a glass of water for reasons regarding my construction and build." Equius did give you salivary glands and my god you are a bastard about it. He made you THIRSTY.

You lean to try and see what he is working on from this angle while staying on the table, eyes zooming into his hands. "My sole function so far has been take care of my not so STRONG friends. That has been my number one command and it has been brought to a sharp halt with my fake death. So to answer your question, I have no idea. For once I have no real direction, everything is possible." You get to do whatever the fuck you want and apparently what you've wanted is stalking people and getting into their houses.

You finally just get off the table and set yourself to just stand behind him and try and look over his shoulder not saying much but smiling because you like what he makes. "What do you do here?"

Darkleer Yesterday at 1:06 PM

"Your strong friends.. Equius, Hal and Dirk?" You think you've heard the last two names around the precinct, but you're not sure if he means those. "Your fake death? Explain."

Ah, he got closer. Hn. You turn around a bit and look at him. "..much like you, I am here to help and protect those weaker than me. Though I am still learning about the dangers of this planet."

Arquius Yesterday at 3:10 PM

"Partially Incorrect." Dirk, Jake, Roxy and Jane. "Geez dad why don't you say please first." Your fake death. You make a disgruntled horse noise. He turns around and you don't back out. "Its a secret I hope you can keep secrets. I want your secrets too in return I command you to give me your secrets afterwards. But there is something obvious here. The fricking evil in this planet are the bosses of the FElt. The Crew and the Patriarchy." Considering all of these place's problems. "I don't have much experience with the Crew personally but I have a tweened story with the Felt since the moment of my spawning. I got in there trying to protect little D, ended up being reprogrammed, there was a corrupt version of me for a while. I killed him but I-we merged. Which is why I have to STRONGLY apologize for his messages online. You were talking to him." Pause."I like what you are making. You are a machinery expert too huh my little kelpie?"

1 note

·

View note

Text

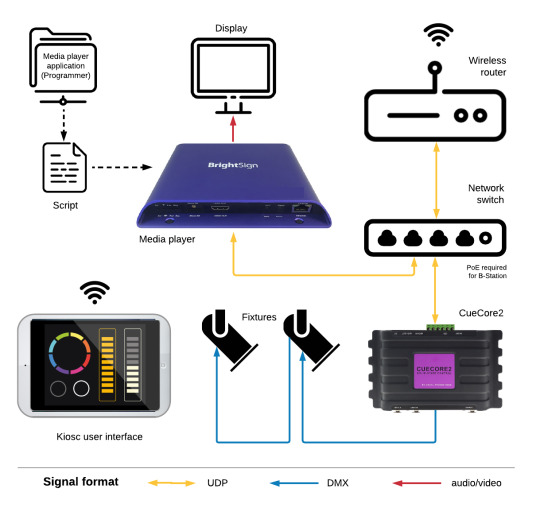

UDP compatibility within Visual Productions lighting and show controller products

Introduction, about UDP

Many protocols are supported by Visual Productions lighting and show controllers and control interfaces. These include SMPTE, MTC and Art-Net for timecode, contact closures and 1-10V signals for GPI, NTP for clock synchronisation, and even RS232 as a legacy computer protocol. And let's not forget DMX, Art-net, sACN and KiNET as the lighting control protocols supported.

Let's have a closer look at the integration and applications of the UDP network protocol. The User Datagram Protocol is a being used in many multi media installations because it is suitable for fast data transfer and because it offers a short response time.

BrightSign, a UDP compatible device

In this topic we focus on compatibility with BrightSign, the bright blue digital signage media player which is UDP compatible: it is capable of sending UDP messages based on the timeline of a video being played, and capable of receiving input signals such as a press on a touch screen, activating a contact closure, etc. It is also capable of receiving UDP messages upon which it can perform certain tasks, such as jumping to a specific video (track) or a specific moment in a video (video time).

When UDP communication is required, BrightSign media players need to be programmed using BrightSign Author authoring software. The software allows you to import content, create layouts, synchronise multiple media players in one installation, publish content to the network, and to set all player and network parameters.

This topic describes a system setup for both using a BrightSign media player as a source, controlling a UDP compatible Visual Productions lighting controller, and as a slave, responding to incoming UDP messages sent by a Visual Productions control interface.

Controlling a CueCore2/QuadCore

This example explains how to synchronise lights with video with in a simple way. It uses any model BrightSign media player and a CueCore2 or QuadCore ('Core') lighting controller.

Play your video on a computer and use a media player which shows you the timecode (actually, it isn't the 'official' timecode but just video time). Determine at which positions (video time) you want to change the lights into different colours: create a table with video time and the corresponding lighting colour;

Now, using Bright Author, create a script for your BrightSign media player that includes a list with video times and a unique UDP message that defines each colour to be recalled by the Core;

In the Core, create Tracks with all static colours, then create a Playback Cue list containing all Tracks. If required, set a fade time to prevent from instant colour changes when playing your video;

In Core Show Control, create a UDP Action list, create an Action and edit this action: set the Trigger Value to (or learn) the UDP message that you defined in your table and add a Task that either Jumps to a cue (Playback/Track) or uses the Go+ command to advance to the next cue in the Playback Cue list.

Controlling a Brightsign media player

This example shows how to start videos stored on a BrightSign media player using Visual Productions Kiosc personalised touch screen interface.

In BrightSign Bright Author, create a script where each video stored on the media player is linked to an incoming UDP message (the start trigger). Define what needs to happen if a UDP message is received, for example 'Stop all videos and start video A'. After that, publish the script to the media player;

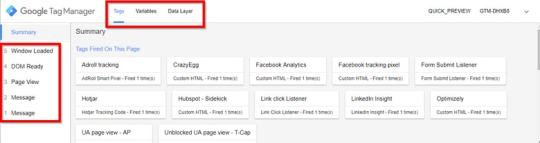

To create a custom user interface, use Kiosc Editor. Now when you have created buttons on your custom user interface, you need to define the UDP messages that wil trigger the file within the BrightSign media player. This is done in the Tags UDP field.

Considering you have stored your new Kiosc layout on your tablet, you need to either input or learn the UDP tags to trigger a task in the Core's Show Control. A task could be ‘start a playback’ for example.

When pressing a Kiosc button on your tablet, the corresponding video will start playing.

More applications

Any device that is UDP compatible can control or can be controlled by Visual Productions' lighting and show controllers. Other examples are control systems like Crestron, Extron and AMX which can integrate DMX lighting control in an affordable way, and large screen video projectors where UDP can be used to switch on/off or select inputs. Because of the Core's flexible Show Control functionality, sending UDP messages can be combined with sending other signals and messages simultaneously. Think of switching on a projector, selecting the correct input and at the same time dim the lights closest to the projection screen. Or imagine a Building Management System sending a UDP message that turns on all lights at 100% and at the same time activating a relay that opens all sliding doors in an emergency situation.

Apart from our Cores, the affordable LPU-2 is UDP compatible as well, although in a more limited way: it only accepts incoming UDP messages which are predefined as described in the Application Programming Interface. This API can be found in the Cuety/LPU user manual. Remember programming of your lighting fixtures (the cues and playbacks) still needs to be done using the Cuety app, available for iOS, Android, macOS, Windows and Linux.

2 notes

·

View notes

Text

Beginning with Python

Starting out with the print function

Single or double quotes are acceptable as long as they’re consistent

You can use “+” to join strings together directly, or “,” to join strings together with a space between them -”\” is known as an “escape character” and it will “escape” the characteristic of the following character and just use it as plain text

Doing basic math with Python:

“+”,”-”,”*” and “/” are the basic characters for adding, subtracting, multiplying and dividing.

You can use “**” to do exponents

“%” is the ‘modulus’ operator. This operator will divide a given number by another and return the remainder from the division

abs() is the ‘absolute’ function, which will return the absolute value of a variable

pow(number, toThePowerOf) is the ‘power’ function, which will multiply the first number by the power of the second

max(number, otherNumber) simply returns the larger of the two given numbers

min(number, otherNumber) simply returns the smaller of the two given numbers

round(number) rounds the given number to the closest whole number

int(number) converts given number to an integer

Loops: The two distinctive loops in Python are the “for” and “while” loops, both of which behave in a similar manner: Repeating tasks until a condition is met. While loops continually execute until a specific condition is met, where as for loops (should) run a predetermined amount of times depending on the parameters you set.

Strings Strings are used to save list of characters as one piece of data. Useful functions to remember when using strings are: .lower()/.upper(), .islower()/.isupper(), .index(”stringToIndex”), .replace(”stringToReplace”, “replaceWith”), str(toConvertToString)

If statements: Comprised of “If”, “elif” and “else” commands are very simple to understand: IF the following statement is true, then run the following code block ELIF the previous statement isn’t true, but this one is, then run the following code block ELSE if no previous statement is true. but this one is, then fun the follow code block

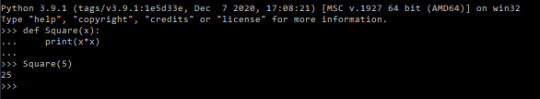

Functions: Functions are sets of code and possibly variables (parameters) to a single line of text. To begin creating a function, the keyword “def” is used to notify python of the function definition:

^ A very basic function

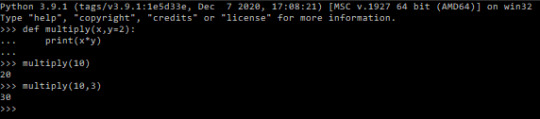

You can also set parameter defaults to make your code more customizable:

Local and Global variables:

A global variable is one that can be accessed anywhere. A local variable is the opposite, it can only be accessed within its frame. The difference is that global variables can be accessed locally, but not modified locally inherently.

File Manipulation: There are two methods for saving data to a file: “writing” and “appending”. Writing to a file will clear any previous data from the file and replace it with the information it has been told to write. Alternatively you can use the append method, which will add the information onto the end of anything that was already in the file. Files can also be read to extract data from them without changing it. To read and write to a file a variable must be established to represent the opened file:

-

Classes: Classes work as ways to store collections of functions together that can be called into other scripts. They are the backbone to Object Orientated Programming

User Input: The simplest way to collect data from a text field is the ‘input()’ method. You can provide a parameter to the method to prompt the user, eg: answer = input(”What is you answer? “)

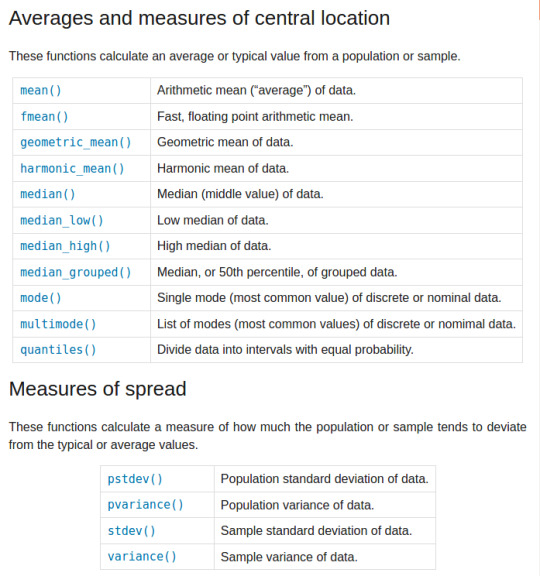

Statistics: by importing the statistics module we get access to a wide range of useful functions:

Module Import Syntax: A useful trick when importing another module is that when you import any module, you can change the name you will use to refer to it in your code. For example, to save on repeatedly typing out ‘statistics’ every time you want to call a function from that module, you can change the name to simply ‘s’ when you import the function with the ‘as’ statement: import statistics as s You can also import specific function(s) from a module with the ‘from’ statement: from statistics import mean(, median, mode) The ‘as’ and ‘from’ statements can also be used together: from statistics import mean as m, median as d Lastly we can import every function from a module to be part of our module without having to call the other module to use the function: from statistics import *

Lists and Tuples: Both used to hold sequential pieces of data, these two data structures differ mainly in that tuples are unchangeable after they have been defined. Where lists are defined by surrounding the data with []’s and separating them with commas; tuples are written in the same way but with ()’s or no brackets at all. Eg: listName = [a,b,c] tupleName = a,b,c / (a,b,c) Lists can be added to, with the append and insert functions: listName.append(valueToAdd) listName.insert(elementNum, valueToAdd) Conversely you can remove data from a list with the remove function: listName.remove(valueToRemove) It’s important to remember that using the remove function like this will remove the first instance of the data found within the list, rather removing data from a specified list element. To remove data from a specific element rather than removing a specific value, you can do: listName.remove(listName[elementToRemove]) Specifying an element in this manner will also work with other methods like print: print(listName[elementToPrint]) Additionally you can take a ‘slice’ of an index by specifying an ending position as well as the first position, like so: print(listName[firstElement : finalElement]) The first element specified will be given, however it will stop upon reaching the final element, without actually returning it. So a range of [0:2] will return only two elements (0,1) and stop upon reaching the third element (2nd position in the index). You can work backwards from the end of a list by giving negative element values. Although as there is no -0 giving -1 gives the last value of the index. print(listName[-1]) The index function can be used to find specific values within lists and their respective elements. print(listName.index(valueToFind)) The count function allows us to know how many times a specific value appears in a list: print(listName.count(valueToFind)) The sort function will arrange the information in a list to be alphabetical/numerically sorted, and the reverse function will reverse the current order of a list: listName.sort() listName.reverse() Lastly, each individual element in a list can be a list itself, referred to as being ‘multi-dimensional’: listName = [[0,1],[3,4]] print(listName) #will return “[0,1] , [3,4]” print(listName[0]) #will return “[0,1]” print(listName[1,1]) #will return “[4]”

CSV files import csv with open(”exampleFile.csv”) as csvfile: readCSV = csv.reader(csvfile, delimter=“,”)

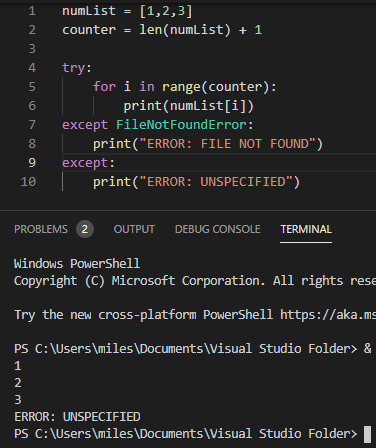

Try and Except Error handling These statements are used where you want to ‘try’ running a piece of code, but you aren’t certain it will work. For example checking a users input is in the correct data type. While this code is useless it is a simple demonstration of the try and except statements:

While the try statement was able to complete three stages of the foor loop before hitting an index error, it wasn’t able to continue after that and so hit an error. For the example I provided both an exception to handle a specific FileNotFoundError

0 notes

Text

VueJS & Firestore Stripped-Back - Tutorial Part 4

In this tutorial series we are stripping everything back to basics to explain how to build full-stack web applications with VueJS and Firebase Cloud Firestore - no CLIs, NPM or Webpack - just a html page and a code editor.

Updating your data

Welcome back. In this fourth part of our Stripped-Back tutorial we’re going to be explaining the U in CRUD - Updating data. By the way if you haven’t read the previous parts of this tutorial please feel free to do so using these links : Part 1, Part 2, Part 3

In the previous part of the tutorial we retrieved the data from our Firestore database by running a realtime query and populating an employees array with the data we retrieved including all of the fields such as lastName, jobTitle etc. However to be able to update (and indeed delete for that matter) we need to be able to get a handle on a unique ID for each employee document that is retrieved so we’ll know what to update when the user saves a change. If you remember from part 2, when you were checking your Firestore console, we mentioned a strange looking ID attached to each document in the Employees collection - this is what we’ll need to get hold of.

To do this we’ll first need to setup a place to store a unique id, so we’ll add an Id property to our employee object in the data section of our Vue Instance Object :

var app = new Vue({ el : ’#app’, data : { appTitle : ‘EmployeeMagic’, mainStyle : { ‘margin’ : ’20px’ }, employee : {firstName : ‘’, lastName : ‘’, jobTitle : ‘’, dept : ‘’, age : 0, id : ‘’ }, db : {}, employees : [ ] },

Next we’ll need to go back and change our callback function which gets the data from each document and puts it into our employees array. In there we’ll need to make sure the unique id from each document is stored in the employee’s new id property as well as the other fields.

created : function() { let that = this that.db = firebase.firestore() let query = that.db.collection(’Employees’) .orderBy(’lastName’) query.onSnapshot((snapshot) => { that.employees = [ ] snapshot.forEach((doc) => { that.employees.push({ id : doc.id, firstName : doc.data().firstName, lastName : doc.data().lastName, jobTitle : doc.data().jobTitle, dept : doc.data().dept, age : doc.data().age }) }) }) }

Notice we didn’t need to go through the data() method to get our id, it’s available directly on the document object itself. On our template, we’ll add an Edit button into the table alongside each employee so the end-user can click the Edit button next to the employee they want to update. We’ll add the button inside the Age column.

<table class=“table table-bordered table-striped”> <thead> <th>Name</th> <th>Job Title</th> <th>Department</th> <th>Age</th> </thead>

<tbody> <tr v-for=“employee in employees”> <td>{{ employee.firstName }} {{ employee.lastName }}</td> <td>{{ employee.jobTitle }}</td> <td>{{ employee.dept }}</td> <td>{{ employee.age }} <button class="badge badge-primary">Edit</button> </td> </tr> </tbody> </table>

We obviously need to have a click event handler on the new Edit button but each button needs a reference to the employee it represents in order to know which one has been selected to be edited. Vue offers a way to deal with this by allowing a second parameter in the v-for directive. This second parameter can be used to expose the index of the array element that v-for is iterating over so we can reference it elsewhere, in this case we want to pass it as the parameter for our click event handler so the function we call knows the index of the employee it’s dealing with.

<tr v-for=“(employee, idx) in employees”>

We can now assign our click handler to our Edit button and pass in the index of each employee, using the idx that we exposed from v-for, as the parameter. We explained the v-on:click directive in part 2 (remember you can also use the shorthand @click if you’d like).

<button v-on:click=“editEmployee(idx)” class="badge badge-primary">Edit</button>

The next step is to implement the editEmployee() click handler which we can do by adding it to the methods object in our Vue Instance. Remember we’re implementing all of our methods in our Vue Instance as arrow functions.

methods : { saveEmployee : () => { if ((app.employee.firstName) && (app.employee.lastName)) app.db.collection(‘Employees’).add(app.employee) .then(function() { app.clearEmployee() }) .catch(function() { console.log(‘Error saving employee ‘ + app.employee.firstName + ‘ ‘ + app.employee.lastName) }) else alert(‘You must enter both a first and last name to save.’) }, editEmployee : (idx) => { }, clearEmployee : () => { app.employee = { firstName : ‘’, lastName : ‘’, jobTitle : ‘’, dept : ‘’, age : 0 } } },

The job of the editEmployee function is to grab the selected employee’s information and populate the employee object’s properties from the correct element on the employees array. We can determine which employee in our array to use by referencing the idx parameter passed to the function from the button. Once our employee object is populated, Vue’s data binding takes care of the rest to display it on the page.

editEmployee : (idx) => { let emp = app.employees[idx] if (emp) { app.employee = { id : emp.id, firstName : emp.firstName, lastName : emp.lastName, jobTitle : emp.jobTitle, dept : emp.dept, age : emp.age } } },

Save the changes and refresh the browser and make sure everything is working as it should. You should see a blue Edit button in the Age column of the table and when you click it, that employee’s information should be displayed in the input boxes. We now need to handle saving updates to existing records when the user clicks Save rather than simply adding it as new record as it does now. We’ll keep the same Save button and the same saveEmployee click handler method, however we’ll make a decision as to whether we need to save as a new employee or as an update to an existing employee. The way to determine this is quite straightforward, if the employee object has a value in it’s id property it’s an existing record, otherwise it’s a new record. For this to work we need to make a quick change first to the clearEmployee method and ensure it clears the id property as well.

clearEmployee : () => { app.employee = { firstName : ‘’, lastName : ‘’, jobTitle : ‘’, dept : ‘’, age : 0, id : ‘’ } }

Let’s go to our saveEmployee method and add that condition to determine whether we’re saving a new employee or an existing one. For saving new employees we simply need to check if the employee.id isn’t set. Note that rather than simply save the employee object as we did previously, we’re defining a new object from the information in the employee object. The reason for this is simply that we’ve added an id property to the employee object and we don’t want to save this as an additional field on the document

saveEmployee : () => { if ((app.employee.firstName) && (app.employee.lastName)) { let saveEmp = { firstName : employee.firstName, lastName : employee.lastName, jobTitle : employee.jobTitle, dept : employee.dept, age : employee.age } if (! app.employee.id) //check if the id has not been set app.db.collection(‘Employees’).add(saveEmp) .then(() => { app.clearEmployee() }) .catch(() => { console.log(‘Error saving employee ‘ + app.employee.firstName + ‘ ‘ + app.employee.lastName) }) else { } //if the id has been set } else alert(‘You must enter both a first and last name to save.’) },

Now let’s see how to save an update to an existing employee. First of all we need to grab an object reference to the specific document we want to update and we get this using the id of the employee.

let docRef = app.db.collection('Employees').doc(app.employee.id)

Now we’ve got a reference to the specific document in our Employees collection in Firestore, we can just simply call Firestore’s set() method and pass in the object with our updated information and our employee record will be updated.

if (docRef) docRef.set(saveEmp)

The set() method is asynchronous, like most Firestore methods, so if there’s any code we want to execute once we’re certain that the update has saved, we can implement it in the returned promise’s then() method (as we covered in part 2). In the method we pass to then() we simply call clearEmployee to clear the inputs and make it ready to add a new employee again, just as we did when adding new employees (and we’ll add a catch() just in case).

if (docRef) docRef.set(saveEmp) .then(() => { app.clearEmployee() }) .catch(() => { console.log(’Update to ‘ + app.firstName + ' ' + app.lastName + ' did not save!'); })

So let’s put this all together in our saveEmployee method :

saveEmployee : () => { if ((app.employee.firstName) && (app.employee.lastName)) { let saveEmp = { firstName : app.employee.firstName, lastName : app.employee.lastName, jobTitle : app.employee.jobTitle, dept : app.employee.dept, age : app.employee.age } if (! app.employee.id) //check if the id has not been set app.db.collection(‘Employees’).add(saveEmp) .then(() => { app.clearEmployee() }) .catch(() => { console.log(‘Error saving employee ‘ + app.employee.firstName + ‘ ‘ + app.employee.lastName) }) else { //if the id has been set, we save an update let docRef = app.db.collection('Employees').doc(app.employee.id) if (docRef) docRef.set(saveEmp) .then(() => { app.clearEmployee() }) .catch(() => { console.log(’Error updating employee ‘ + app.firstName + ' ' + app.lastName) }) } } else alert(‘You must enter both a first and last name to save.’) },

This is fairly verbose and we could certainly tidy things up but it works and it’s verbosity helps to explain what it is we’re doing so we’ll leave this as it is. Before we close this part of the tutorial off and let you go and get a much deserved coffee, let’s add one more little piece of functionality to our app. Things are great, but let’s say the user clicks to Edit an employee and realises they don’t want to save - at the moment there’s no way for them to go back to add a new one. To get around this we’ll put an Add button next to the Save button that let’s them click to add a new employee. This button however should only be available if they’re editing an existing employee.

<label>Age</label> <input type=“number” v-model:number=“employee.age”></br> <button v-on:click=“saveEmployee()”>Save</button> <button v-on:click=“clearEmployee()”>Add</button> </div>

Notice we’re directly calling our clearEmployee method as our event handler as that does everything we need to put our app into Add mode. Cool, but remember we only want to show this button if the user is in Edit mode. The way to do this is to use Vue’s conditional directive, v-if. This lets us include a conditional statement on the element, whether directly of via a method call, to determine if it should be visible on the page. In this case we want to check if the current employee object has an id set, if it has then we’re in edit mode so show the Add button, otherwise don’t show it.

<button v-if=“employee.id” v-on:click=“clearEmployee()”>Add</button>

That’ll do for this part of the tutorial dedicated to the U in CRUD. In this part we’ve covered retrieving each document’s unique id along with the other fields. We’ve added an Edit button for each employee in the list and exposed an index from our v-for directive to assign an employee index from our array so each button knows which employee it relates to. We‘ve saved our employee updates back to the database using the unique id of each document and finally we covered using v-if to conditionally show a button on our page. In the next part of this tutorial we’ll cover the D in CRUD - deleting. Hope you can join me.

You can download the completed code for this part of the tutorial on Github using the repo below and select the part4 folder. https://github.com/MancDev/VueFire

1 note

·

View note

Text

Mobile App Design: Designing for a Web App vs. Native App

One of the major decisions enterprises face early on in the mobile app development process is building a native app, web app, or a hybrid of the two. This decision not only influences the overall design of a mobile product but also impacts how users interact with your specific product. Inevitably, this will dictate the varying aspects and requirements you outline in your product requirements document that will ultimately communicate to your product team what to build, who for, and how it benefits the end-user.

This article will outline how web apps and native apps each uniquely serve a different purpose. A web app is primarily used for accessing information, and mobile apps are typically used to complete a task (i.e., buying a product vs. searching a restaurant’s location.) Ultimately, taking a design thinking approach to development allows enterprises to understand users better and choose an app type that provides a solution to their pain points.

Below, we examine the difference between web apps and native apps, as well as several considerations for how to design a mobile product to ensure it serves your business objectives and your target audience’s needs.

hbspt.cta.load(1789978, '9a00bf2b-0f7e-4b0c-88db-1c2f2534c4e7', {});

What is a Web App

As mentioned above, websites provide users with a means to access information. While web apps can’t display as much information as a desktop version of a website, web apps condense the website content to improve functionality. Essentially a web app is an extension of your website with newer web features that offer a more native-like experience. Progressive web apps load in browsers like Chrome, Safari, or Firefox, and don’t need to be downloaded from app stores (Google Play, Apple’s App Store) like native mobile apps.

Web App Design Considerations

Web app design is focused on information architecture. Properly designing a web app will ensure users can easily navigate your app, finding the information they want or need quickly and efficiently.

Navigation

It is imperative to design a navigation system that is intuitive to use, allowing users to quickly locate the content they want. Furthermore, incorporating a proper navigation hierarchy will guide users through your site, allowing them to complete site goals, be it conversion, education, or awareness. Create a strong hierarchy by first eliminating unnecessary clicks. Use breadcrumbs to show the user where they currently are within your app and how they got there. Breadcrumbs also help users navigate to higher-level pages. Finally, when designing the navigation system, be sure to utilize font sizes, line height, and character count to draw the user’s attention to key information and navigation options.

Speed

It’s reported that 47% of consumers expect a web page to load in two seconds or less. Users are easily frustrated with performance and usability issues like load times, small images, and network availability. An easy way to combat a slow or laggy web app is to keep things lean. Display and incorporate only necessary information and features in your web app. The fewer elements there are, the quicker the app will load. As mentioned earlier, web apps condense web content to improve functionality. Make sure your web app focuses on providing a solution to only one user pain point and ensure it is easy for users to achieve this goal.

Adaptive vs. Responsive

With Statista forecasting that there will be over 16 billion mobile devices worldwide by 2023, businesses designing web apps need to keep in mind that no one device is alike, and therefore need to account for how their web app will display on varying screen sizes. The answer lies in either developing an adaptive web app or a responsive web app.

Responsive

A responsive design responds to changes in browser width by re-adjusting the design elements to fit the available space. On mobile phones, this process is automatic; the site checks for the available space and then presents itself in the ideal arrangement.

Adaptive

Adaptive design has multiple fixed layout sizes. When the site detects the available space, it selects the layout most appropriate for the screen. For example, if the app is opened on a mobile device the site chooses the best layout for that screen. In adaptive design, it’s normal to develop six designs for the six most common screen widths; 320, 480, 760, 960, 1200, and 1600 pixels.

hbspt.cta.load(1789978, '9a00bf2b-0f7e-4b0c-88db-1c2f2534c4e7', {});

Native App

Native mobile apps are the most common type of app. They are built for specific platforms (i.e., Apple or Android) and are written in languages that the platform accepts. For example, Swift and Objective-C for native iOS apps and Java or Kotlin for native Android apps. Most companies will invest in native mobile app development because of the many benefits offered in comparison to other types of apps.

Native App Design Considerations

Native app design is centered around what is known as interaction design. To be successful, it is imperative to have a thorough understanding of your users, their pain points, and what solution this app will provide. After pinpointing the task a user wants to accomplish, you can easily design the right user flow and user journey to guide the user through the app.

Use Familiar Gestures

Implementing gestures into your app design is an excellent method for creating shortcuts for navigation and key functions. However, introducing new user gestures in the UI that aren’t familiar can result in a learning curve. It is essential to implement standard gestures so they are consistent with other apps. Some of these gestures include double-tap, touch-and-hold, and pinch.

Also, invest in researching user motion patterns on their devices. Users hold their devices in different ways, understanding what motions they make and discovering the most accessible locations on the screen ensure a comfortable experience. Placing a critical function in an area that is hard to reach, for example, can be detrimental to the overall functionality of the app.

Keep Content and Interface Elements to a Minimum

Carefully and thoughtfully select what functions and features are needed for your native app. Filling your interface with unnecessary buttons, images, and icons can burden users with too much information. As there isn’t as much real estate on mobile devices as there is on desktops, it’s essential to get rid of anything that isn’t key to the app’s functionality. If it doesn’t help the user achieve their end goal, cut it out. This will ensure your users find your app easy to comprehend, allowing them to see a clear-cut purpose to your product.

Minimize User Input

For many users, entering any information into an app (be it registration, or filling out a form) is tedious. Due to the size of the keyboard that is often presented, the majority of information that is provided can be riddled with errors leading to further frustration. The simple fix is to minimize user input. Eliminate the need for unnecessary registration forms and other elements that require user input. However, if this is necessary to your app, some best practices can be implemented to make the process smoother. Try to keep forms as short as possible by removing any unnecessary fields. The app should ask for only essential information from the user. As a final step to avoid added frustration, implement autocomplete features and dynamically validated fields, as these will ensure data is correct before being submitted.

Final Thoughts

While there are commonalities, there is also a distinct difference in how native apps and web apps should be designed. First and foremost, it is vital to have a deep understanding of your target users and their pain points. Identifying their needs will guide you towards the approach that fits your needs. Keep in mind that native apps and web apps serve different purposes that dictate design elements and functionalities.

Above all, it is important to remember that mobile app development is an iterative process. Implementing the design considerations above in your app should be a starting point for continued testing. Use the provided data and user feedback to improve your design and overall experience continually.

hbspt.cta.load(1789978, '9a00bf2b-0f7e-4b0c-88db-1c2f2534c4e7', {});

Mobile App Design: Designing for a Web App vs. Native App published first on https://gpshandyorten.tumblr.com/

0 notes

Text

300+ TOP Apache TAPESTRY Interview Questions and Answers

Apache Tapestry Interview Questions for freshers experienced :-

1. What is Apache Tapestry? It is an open source web framework written in Java and can work under any application server. It is easily integrate with back ends like Hibernate and Spring etc. It is a component based web framework. 2. What are the benefits of Apache Tapestry? Benefits of Apache Tapestry are: Adaptive API Fast framework Build-in Inversion Control Highly scalable web applications Storage management of Persistent state 3. What are the features of Apache Tapestry? Features of Apache Tapestry are: Live class reloading Code less, deliver more Static structure and dynamic behavior Detailed and clear exception reporting Extensive use of POJOs (Plain Old Java Objects) 4. Who is the developer of Apache tapestry? Apache Tapestry is developed by “Howard Lewis Ship”. 5. What is the component annotations used in Apache Tapestry? Component annotations used in Apache Tapestry are: @Log @Path @import @Property @Parameter @Environmental 6. What is IoC annotation? IoC annotation: It is used to inject objects into IoC Container. Type of IoC annotation are: @Value @Inject 7. What is CleanupRender? CleanupRender: It is used to release the objects created during rendering process. It is the counterpart of the SetupRender. 8. What is Two-way Data Binding? In Two-way data binding, we can communicate and transfer data with the use of parameters, components and its corresponding page. 9. What is Validate expansion? Validate expansion: It is a specialized string that is used to specify the validation rule of an object. 10. What is Form Component? It is used to create a form in the tapestry page for user input. A form can contain text fields, checkbox fields, date fields, submit button, select options and more.

Apache TAPESTRY Interview Questions 11. Does Apache Tapestry use JSP Tag libraries? No, It does not use JSP Tag library. 12. What is TextField Component? TextField Component: It is used to edit a single line of text. 13. What are the significant parameters used in Form Validation? Significant parameters used in Form Validation are: Min Max Email MaxDate MaxLength MinLength 14. What are the ways provided by Apache Tapestry to persist the data? There are two ways provided by Apache Tapestry to persist the data are: Session Storage Persistence page data 15. What is SSO? SSO stands for Session Store Object. It is a specialized store that is used to store complex / special object. Data types can also be stored using SSO. 16. Why Do We Need @script In Apache Tapestry? The script framework is an effective means to bundle scripts in components. It provides scripts with the advantages of components. It can now be reused like a component and not have to worry about renaming field names or the wiring between the fields and the scripts. You just declare the component and you are good to go. It certainly is another layer of abstraction that one will have to learn but once you have learned it, it is very powerful. And honestly there is not much to it. The script framework is mandated by the fact that form element/field names are automatically generated by the framework. And so you write your script in XML and use variables for these names and let the framework provide the correct names during runtime. Going further, you may also ask the framework to provide other objects that would help in creating your script. For example… This defines an input variable “select” of type “org.apache.tapestry.form.PropertySelection”. All such variables/symbols passed in to the script is stored in a symbol map. And now you can use the form select list name by using an ant style syntax like ${select.name}. The expression within “${}” is an OGNL expression and is evaluated with respect to the symbol map. You may also define your own symbols/variables using like… document.${select.form.name} ${formObj}.${select.name} These variables/symbols are stored in the symbol map also. So now if you want to set the value of the form select list all you do is say ${formObj}.${selectObj}.value = ‘whatever’; this would be equivalent to document.myForm.mySelect.value = ‘whatever’; where myForm is the form name and mySelect is the select list name. s are like method parameters and s are like instance variables. Typically you would pass values to the s via the Script component like... The actual scripts are defined in one of the two sections of the script specification, or , depending on when you want the script to execute. If you want the script to execute on load of the page, then you define it in the , if you want it to execute on any other event, define it in the section of the specification. For example… function onChangeList(listObj) { alert(listObj.value); } ${selectObj}.onchange = function(e) { onChangeList(${selectObj}); } As you can see in the rendered page all scripts are aggregated at the top of the page body, there are no more scripts all over the page. Even event handlers are attached to form objects in the initialization block. One more thing to remember, scripts being components, and components by nature being independent of its environment, will render the script in the page once for every ocurrance of the component. If you want the body of the script to be rendered only once no matter how many times the component is used, just wrap the body in a tag like… function onChangeList(listObj) { alert(listObj.value); } 17. What’s The Lifecycle Of A Form Submit? Events will trigger in the following order: initialize() pageBeginRender() formListenerMethod() pageBeginRender() The form “rewind” cycle is nothing more than a render cycle where the output is buffered and scrapped rather than written to the servlet output stream. The second pageBeginRender() is triggered during the actual page rendering. You can use requestCycle.isRewinding() to distinguish between these two render cycles. 18. Can I Use The Same Component Multiple Times In One Template? No – but you can copy the definition of a component pretty easily. 19. How Should Do Page Navigation In Apache Tapestry? Usage page properties: Page1.page Page2.page Welcome.Action.java public void submitListener(IRequestCycle cycle) { if (success) cycle.activate(getSpecification().getProperty("success")); if (error) cycle.activate(getSpecification().getProperty("error")); } So on success, it will be redirected to Home2 and on error it will be redirected to Error2 page. 20. Is Tapestry A Jsp Tag Library? Tapestry is not a JSP tag library; Tapestry builds on the servlet API, but doesn’t use JSPs in any way. It uses it own HTML template format and its own rendering engine. Starting with release 3.0, Tapestry includes a simple JSP tag library to allow JSP pages to create links to Tapestry pages. Apache TAPESTRY Questions and Answers Pdf Download Read the full article

0 notes

Text

A Comprehensive Guide to Natural Language Generation

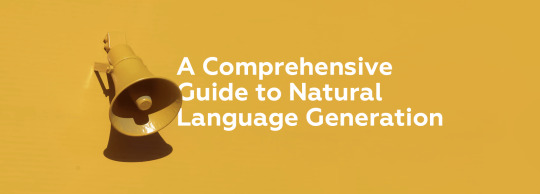

As long as Artificial Intelligence helps us to get more out of the natural language, we see more tasks and fields mushrooming at the intersection of AI and linguistics. In one of our previous articles, we discussed the difference between Natural Language Processing and Natural Language Understanding. Both fields, however, have natural languages as input. At the same time, the urge to establish two-way communication with computers has lead to the emergence of a separate subcategory of tasks dealing with producing (quasi)-natural speech. This subcategory, called Natural Language Generation will be the focus of this blog post.

What is NLG?

Natural Language Generation, as defined by Artificial Intelligence: Natural Language Processing Fundamentals, is the “process of producing meaningful phrases and sentences in the form of natural language.” In its essence, it automatically generates narratives that describe, summarize or explain input structured data in a human-like manner at the speed of thousands of pages per second.

However, while NLG software can write, it can’t read. The part of NLP that reads human language and turns its unstructured data into structured data understandable to computers is called Natural Language Understanding.

In general terms, NLG (Natural Language Generation) and NLU (Natural Language Understanding) are subsections of a more general NLP domain that encompasses all software which interprets or produces human language, in either spoken or written form:

NLU takes up the understanding of the data based on grammar, the context in which it was said and decide on intent and entities.

NLP converts a text into structured data.

NLG generates a text based on structured data.

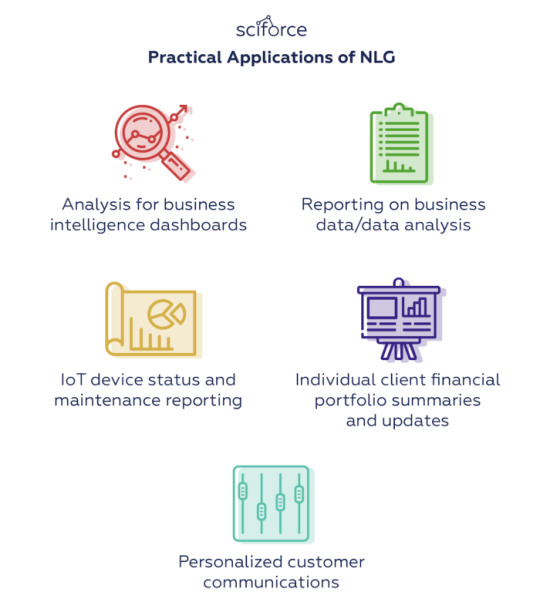

Major applications of NLG

NLG makes data universally understandable making the writing of data-driven financial reports, product descriptions, meeting memos, and more much easier and faster. Ideally, it can take the burden of summarizing the data from analysts to automatically write reports that would be tailored to the audience.The main practical present-day applications of NLG are, therefore, connected with writing analysis or communicating necessary information to customers:

Practical Applications of NLG

At the same time, NLG has more theoretical applications that make it a valuable tool not only in Computer Science and Engineering, but also in Cognitive Science and Psycholinguistics. These include:

NLG Applications in Theoretical Research

Evolution of NLG Design and Architecture

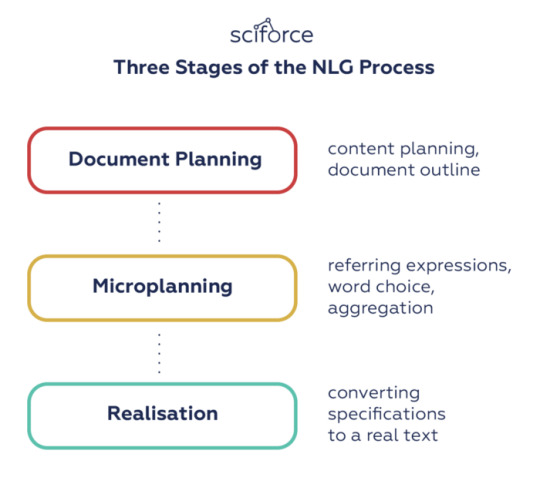

In the attempts to mimic human speech, NLG systems used different methods and tricks to adapt their writing style, tone and structure according to the audience, the context and purpose of the narrative. In 2000 Reiter and Dale pipelined NLG architecture distinguishing three stages in the NLG process:

1. Document planning: deciding what is to be said and creating an abstract document that outlines the structure of the information to be presented.

2. Microplanning: generation of referring expressions, word choice, and aggregation to flesh out the document specifications.

3.Realisation: converting the abstract document specifications to a real text, using domain knowledge about syntax, morphology, etc.

Three Stages of the NLG Process

This pipeline shows the milestones of natural language generation, however, specific steps and approaches, as well as the models used, can vary significantly with the technology development.

There are two major approaches to language generation: using templates and dynamic creation of documents. While only the latter is considered to be “real” NLG, there was a long and multistage way from basic straightforward templates to the state-of-the-art and each new approach expanded functionality and added linguistic capacities:

Simple Gap-Filling Approach

One of the oldest approaches is a simple fill-in-the-gap template system. In texts that have a predefined structure and need just a small amount of data to be filled in, this approach can automatically fill in such gaps with data retrieved from a spreadsheet row, database table entry, etc. In principle, you can vary certain aspects of the text: for example, you can decide whether to spell numbers or leave them as is, this approach is quite limited in its use and is not considered to be “real” NLG.

Scripts or Rules-Producing Text

Basic gap-filling systems were expanded with general-purpose programming constructs via a scripting language or by using business rules. The scripting approach, such as using web templating languages, embeds a template inside a general-purpose scripting language, so it allows for complex conditionals, loops, access to code libraries, etc. Business rule approaches, which are adopted by most document composition tools, work similarly, but focus on writing business rules rather than scripts. Though more powerful than straightforward gap filling, such systems still lack linguistic capabilities and cannot reliably generate complex high-quality texts.

Word-Level Grammatical Functions

A logical development of template-based systems was adding word-level grammatical functions to deal with morphology, morphophonology, and orthography as well as to handle possible exceptions. These functions made it easier to generate grammatically correct texts and to write complex template systems.

Dynamic Sentence Generation

Finally taking a step from template-based approaches to dynamic NLG, this approach dynamically creates sentences from representations of the meaning to be conveyed by the sentence and/or its desired linguistic structure. Dynamic creation means that the system can do sensible things in unusual cases, without needing the developer to explicitly write code for every boundary case. It also allows the system to linguistically “optimise” sentences in a number of ways, including reference, aggregation, ordering, and connectives.

Dynamic Document Creation

While dynamic sentence generation works at a certain “micro-level”, the “macro-writing” task produces a document which is relevant and useful to its readers, and also well-structured as a narrative. How it is done depends on the goal of the text. For example, a piece of persuasive writing may be based on models of argumentation and behavior change to mimic human rhetoric; and a text that summarizes data for business intelligence may be based on an analysis of key factors that influence the decision.

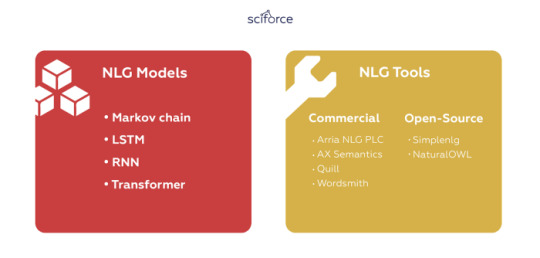

NLG Models

Even after NLG shifted from templates to dynamic generation of sentences, it took the technology years of experimenting to achieve satisfactory results. As a part of NLP and, more generally, AI, natural language generation relies on a number of algorithms that address certain problems of creating human-like texts:

Markov chain

The Markov chain was one of the first algorithms used for language generation. This model predicts the next word in the sentence by using the current word and considering the relationship between each unique word to calculate the probability of the next word. In fact, you have seen them a lot in earlier versions of the smartphone keyboard where they were used to generate suggestions for the next word in the sentence.

Recurrent neural network (RNN)

Neural networks are models that try to mimic the operation of the human brain. RNNs pass each item of the sequence through a feedforward network and use the output of the model as input to the next item in the sequence, allowing the information in the previous step to be stored. In each iteration, the model stores the previous words encountered in its memory and calculates the probability of the next word. For each word in the dictionary, the model assigns a probability based on the previous word, selects the word with the highest probability and stores it in memory. RNN’s “memory” makes this model ideal for language generation because it can remember the background of the conversation at any time. However, as the length of the sequence increases, RNNs cannot store words that were encountered remotely in the sentence and makes predictions based on only the most recent word. Due to this limitation, RNNs are unable to produce coherent long sentences.

LSTM

To address the problem of long-range dependencies, a variant of RNN called Long short-term memory (LSTM) was introduced. Though similar to RNN, LSTM models include a four-layer neural network. The LSTM consists of four parts: the unit, the input door, the output door and the forgotten door. These allow the RNN to remember or forget words at any time interval by adjusting the information flow of the unit. When a period is encountered, the Forgotten Gate recognizes that the context of the sentence may change and can ignore the current unit state information. This allows the network to selectively track only relevant information while also minimizing the disappearing gradient problem, which allows the model to remember information over a longer period of time.

Still, the capacity of the LSTM memory is limited to a few hundred words due to their inherently complex sequential paths from the previous unit to the current unit. The same complexity results in high computational requirements that make LSTM difficult to train or parallelize.

Transformer

A relatively new model was first introduced in the 2017 Google paper “Attention is all you need”, which proposes a new method called “self-attention mechanism.” The Transformer consists of a stack of encoders for processing inputs of any length and another set of decoders to output the generated sentences. In contrast to LSTM, the Transformer performs only a small, constant number of steps, while applying a self-attention mechanism that directly simulates the relationship between all words in a sentence. Unlike previous models, the Transformer uses the representation of all words in context without having to compress all the information into a single fixed-length representation that allows the system to handle longer sentences without the skyrocketing of computational requirements.

One of the most famous examples of the Transformer for language generation is OpenAI, their GPT-2 language model. The model learns to predict the next word in a sentence by focusing on words that were previously seen in the model and related to predicting the next word. A more recent upgrade by Google, the Transformers two-way encoder representation (BERT) provides the most advanced results for various NLP tasks.

NLG Tools

You can see that natural language generation is a complicated task that needs to take into account multiple aspects of language, including its structure, grammar, word usage and perception. Luckily, you probably won’t build the whole NLG system from scratch as the market offers multiple ready-to-use tools, both commercial and open-source.

Commercial NLG Tools

Arria NLG PLC is believed to be one of the global leaders in NLG technologies and tools and can boast the most advanced NLG engine and reports generated by NLG narratives. The company has patented NLG technologies available for use via Arria NLG platform.

AX Semantics: offers eCommerce, journalistic and data reporting (e.g. BI or financial reporting) NLG services for over 100 languages. It is a developer-friendly product that uses AI and machine learning to train the platform’s NLP engine.

Yseop is known for its smart customer experience across platforms like mobile, online or face-to-face. From the NLG perspective, it offers Compose that can be consumed on-premises, in the cloud or as a service, and offers Savvy, a plug-in for Excel and other analytics platforms.Quill by Narrative Science is an NLG platform powered by advanced NLG. Quill converts data to human-intelligent narratives by developing a story, analysing it and extracting the required amount of data from it.

Wordsmith by Automated Insights is an NLG engine that works chiefly in the sphere of advanced template-based approaches. It allows users to convert data into text in any format or scale. Wordsmith also provides a plethora of language options for data conversion.

Open-Source NLG Tools

Simplenlg is probably the most widely used open-source realiser, especially by system-builders. It is an open-source Java API for NLG written by the founder of Arria. It has the least functionality but also is the easiest to use and best documented.

NaturalOWL is an open-source toolkit which can be used to generate descriptions of OWL classes and individuals to configure an NLG framework to specific needs, without doing much programming.

Conclusion

NLG capabilities have become the de facto option as analytical platforms try to democratize data analytics and help anyone understand their data. Close to human narratives automatically explain insights that otherwise could be lost in tables, charts, and graphs via natural language and act as a companion throughout the data discovery process. Besides, NLG coupled with NLP are the core of chatbots and other automated chats and assistants that provide us with everyday support.

As NLG continues to evolve, it will become more diversified and will provide effective communication between us and computers in a natural fashion that many SciFi writers dreamed of in their books.

0 notes

Text

ESP32 / ESP8266 Arduino Tutorial:4. Protocol Buffers

Introduction

In this tutorial we will learn how to get started using Protocol Buffers with the Arduino core. In this introductory example, we will check how to declare a message type and how to encode it. This tutorial was tested both on the ESP32 and on the ESP8266.

Protocol buffers are a data serialization format from Google which are supported in multiple programming languages [1]. Protocol Buffers messages are encoded in a binary format [2], which means they are not human readable unless we decode them back to a readable format.

One of the main advantages of using Protocol Buffers is that the serialization and deserialization process is fast and the generated messages are small. For example, in the project website it is claimed that Protocol Buffers are 20 to 100 times faster and 3 to 10 times smaller than XML [3]. Around the web, there are also a lot of benchmarks that indicate Protocol Buffers are faster and smaller than JSON [4][5].

For our tests we are going to use Nanopb, a C implementation of Protocol Buffers that targets 32-bit microcontrollers [6]. You can check the GitHub page of the library here.

When using Protocol Buffers, we need to specify how we want to structure the data that will be serialized. This is done by defining message types in a .proto file [3]. After the definition of the messages, we need to run a protocol buffer compiler for the specific language we are using, to generate data access classes for our messages [3].

The tests on the ESP32 were performed using a DFRobot’s ESP-WROOM-32 device integrated in a ESP32 FireBeetle board. The tests on the ESP8266 were performed on a DFRobot’s ESP8266 FireBeetle board.

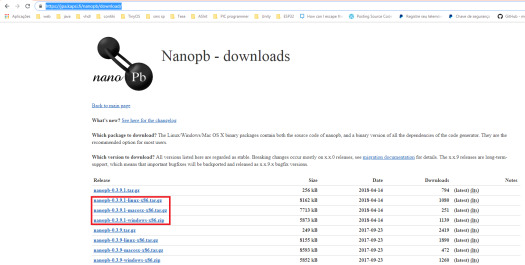

Setting up the environment

In order to get started, we need to download the latest release of Nanopb from the downloads page. The downloads page includes versions for Linux, Mac and Windows, as shown below at figure 1. I’ll be performing the tests on a Windows 8.1 machine.

Figure 1 – Downloading Nanopb.

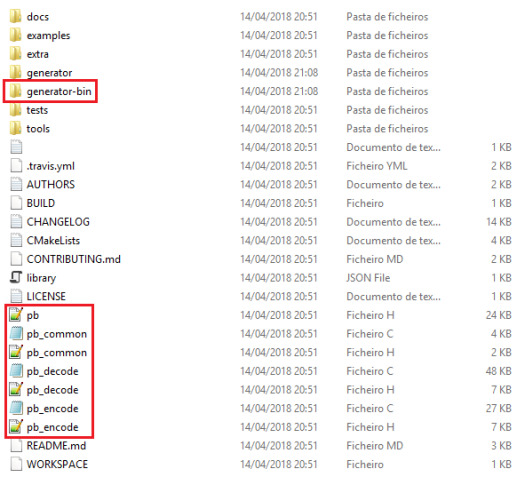

Once the download finishes, unzip the content. You should get a folder hierarchy similar to figure 2.

Figure 2 – Folder hierarchy of Nanopb.

In the previous image, there are two highlighted areas that are of interest for this tutorial. The first one is the generator-bin folder, which contains the already mentioned compiler that we will need to use to compile our .proto files.

Inside that folder, we can create a new folder called “proto_files“, where we will place our message definitions. Note that the name of the folder is arbitrary as it is its location. Naturally, we can define it where we want it in our computer as long as we use the correct paths when running the compilation tool.

Inside the folder, create a file called test.proto. For now, you can leave it empty. We will get back to it later.

If you go back to the root of the Nanopb project, you should see some .c and .h files, as also highlighted in figure 2. We will need those files when compiling our code. So, copy them all. The list of files copied should be the following:

pb.h

pb_common.h

pb_common.c

pb_encode.h

pb_encode.c

pb_decode.h

pb_decode.c

In order to be able to use Nanopb as a regular Arduino library, go to the folder where your Arduino libraries are. Typically, the path where the libraries are located is:

C:\Users\#yourUserName#\Documents\Arduino\libraries

Once you locate the Arduino libraries folder, create a new folder inside called Nanopb, next to your other libraries. There, paste the previously copied .c and .h files.

Defining the .proto file

Now that we have created our Nanopb library, we need to go back to the .proto file, so we can define our message.

In the first line, we need to declare the syntax version we are using to define our message. In our case, we will be using the proto2syntax.

syntax = "proto2";

Next, we need to define our message type. We start by using the message keyword, followed by the actual name of our message type. I’ll be calling it TestMessage.

Then, the message fields are declared between curly brackets.

message TestMessage { // body of message }

For each field we declare in our message, we need to specify its data type and its name. Additionally, each field needs to have a unique number. The unique numbers are used to identify the fields in the binary data format [7], so the name of the field is not serialized.

Additionally, for each field, we need to specify if it is optional, required or repeated. Required means the message must have that field exactly one time, optional means the field can not exist or the message can have it at most one time, and repeated means the field can be repeated any number of times (including zero) [7].

In or message, we will declare an integer field called test_number that will be required. For this simple example we will only make use of one field and thus we should assign it the unique number 1.

required int32 test_number = 1;

The final file content can be seen below. After finishing its edition, save it.

syntax = "proto2"; message TestMessage { required int32 test_number = 1; }

Now we need to compile the .proto file, so we can use it in our code. In case of Nanopb, since it offers a pure C implementation, this compiling procedure will generate C structs to represent our messages.

In order to compile it, open a command prompt and navigate to the generator-bin folder. There, the command to compile the messages has the following syntax, where you should change #pathToProtoFile# to the location of your .proto file:

protoc --nanopb_out=. #pathToProtoFile#

If you created a proto_files inside the generator-bin folder and placed the .proto file there like I did, the command is:

protoc --nanopb_out=. proto_files/test.proto

After running the command, a test.pb.c and a test.pb.h file should be created in the folder where you have your .proto definition, as shown in figure 3.

Figure 3 – Generated files.

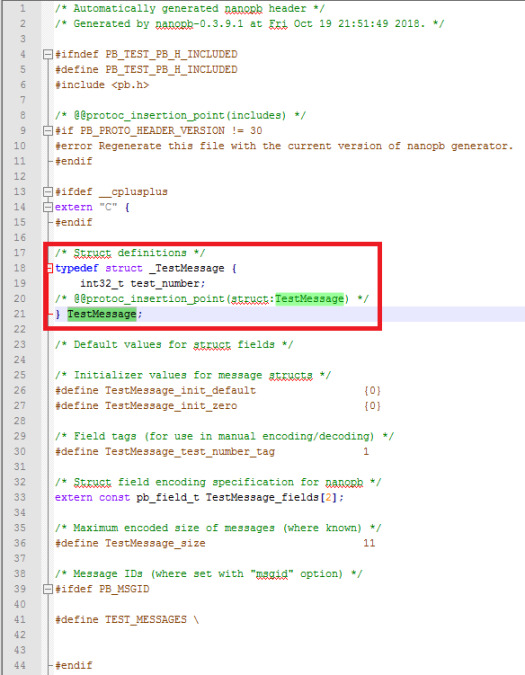

If you open the .h file, you should see that it has a struct called TestMessage defined there, as shown in figure 4. It matches the message name we have used in the .proto file. Also, the struct as an integer field called test_number, like we also specified.

Figure 4 – Struct definition in generated header file.

We will need both the .c and the .h file, so copy them. Since these are the message definitions which are specific of our program, we should not place them in the Nanopb library folder we have created previously.

So, on your Arduino sketch folder, simply create a folder called src and paste the previously copied filed there, as shown in figure 5.

Figure 5 – Arduino sketch folder with .c and .h files.

The code

We will start our code by doing some includes. The first one corresponds to the .h file we have obtained after compiling our .proto file. This will make available the struct that was created by the compilation process.

Remember that we have the file in a folder inside the sketch directory, so we need to provide the correct path when including the file.

#include "src/test.pb.h"

After that,we need to include the header files we have pasted in our Nanopb library folder, with the exception of the pb_decode.h, since we are not going to decode the message in this introductory tutorial.

#include "pb_common.h" #include "pb.h" #include "pb_encode.h"

Moving on to the Arduino setup function, we will start by opening a serial connection, to later output some results of our program.

Serial.begin(115200);

Then, we will declare an array of bytes that will serve as a buffer to hold the serialized message. For this tutorial we will declare a buffer with a size big enough to hold all our structure, so we won’t need to worry about calculating the maximum size the message can have.

uint8_t buffer[128];

Next, we will declare our message data structure and initialize it. Nanopb generates a define that allows to initialize the data struct, which has the following name:

#MessageName#_init_zero

So, in our case, the declaration and initialization of the structure is done like below:

TestMessage message = TestMessage_init_zero;

Nanopb uses the concept of streams to access data in encoded format [8]. So, the next thing we will do is calling the pb_ostream_from_buffer function, which constructs an output stream for writing into a memory buffer [9].

We need to pass as first input our previously declared buffer and as second input the maximum number of bytes to write, which should be the size of our buffer. This function call will return a struct of type pb_ostream_t.

pb_ostream_t stream = pb_ostream_from_buffer(buffer, sizeof(buffer));

Now that we have our stream, we should set the value of our message field before we encode it. Recall that we had a field called test_number in our .proto file, which also exists in the TestMessage struct we have declared.

So, we will access that field of the struct and assign it a value.

message.test_number = 540;

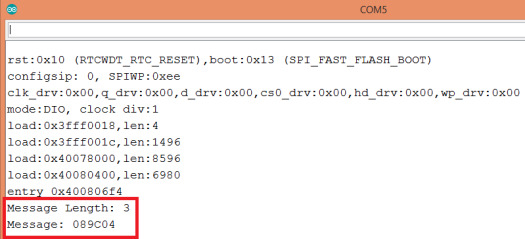

Now, to do the actual encoding, we need to call the pb_encode function.