#off-the-shelf datasets


Text

#off-the-shelf datasets#dataset provider#ai training data#data collection#data annotation#dataset for AI

0 notes

Text

A shorter sum-up that may correct some of the inevitable misunderstandings from the rather loose use of the term "scrape."

A guy making low-rent audiobooks downloaded a huge chunk of AO3 content for his robots to read, in the precise same way creepypasta youtubers have been operating for years.

That's it, that's the whole deal.

Generally in AI conversations it means to harvest and process training data, whereas here it's being used in the older, pre-AI sense of grabbing a bunch of data from an un- or under-protected website via bot.

Which yeah, it's scummy, but it's analogue scummy, and well in keeping with a number of common copyright misconceptions. I can't tell you how many idiots I've met that thought fanworks had no IP protections because they were violations of the corporate owners' IP.

But this?

**A note about the AI that Weitzman used to steal our work: it’s even greasier than it looks at first glance. It’s not just the method he used to lift works off AO3 and then regurgitate onto his own website and app. Looking beyond the untold horrors of his AI-generated cover ‘art’, in many cases these covers attempt to depict something from the fics in question that can’t be gleaned from their summaries alone. In addition, my fics (and I assume the others, as well) were listed with generated genres; tags that did not appear anywhere in or on my fic on AO3 and were sometimes scarily accurate and sometimes way off the mark. I remember You & Me & Holiday Wine had ‘found family’ (100% correct, but not tagged by me as such) and I believe The Shape of Soup was listed as, among others, ‘enemies to friends to lovers’ and ‘love triangle’ (both wildly inaccurate). Even worse, not all the fic listed (as authors on Reddit pointed out) came with their original summaries at all. Often the entire summary was AI-generated. All of these things make it very clear that it was an all-encompassing scrape—not only were our fics stolen, they were also fed word-for-word into the AI Weitzman used and then analyzed to suit Weitzman’s needs. This means our work was literally fed to this AI to basically do with whatever its other users want, including (one assumes) text generation.

That's not how any of this works.

The OP is acting as though this is all being done through a single, automated system, and it isn't. Even the idea that this is Weitzman's AI is silly, as he's likely using off-the-shelf services. This scam is too petty to justify the cost of anything custom in either time or cash.

Here's what's actually going down, in all likelihood, on the pirate's side of things:

He figured out the most popular works via simple metrics and set a bog-standard website downloader to work on them, or he spent a night right-clicking.

The resulting files for the fics were loaded into word or some similar program and a macro was used to automatically fix formatting for the autoreader.

He ran each fic through an autoreader, and posted those like any other .mp3 file.

Meanwhile, he gave ChatGPT the story link and said "Summarize this and give me a cover prompt."

He takes the cover prompts and runs them in Midjourney with some standard formatting cues.

Now, what isn't happening at any stage in this process is processing the work into a dataset.

Generative AI systems do not continually harvest and incorporate information given to them by end users.

This is outside of their capabilities, with a few specific exceptions. (Some AI services log user interactions for later processing into a training dataset, but that is a separate process, and ChatGPT has features to webcrawl specific sources of "trustworthy information," but in those cases it's functioning as a search engine.) But incorporating data into the training dataset requires crunching the whole set of weights.

Even if one developed a generative AI system that could actively harvest and learn information, you wouldn't want to let it. Unfiltered junk data degrades dataset quality very quickly and is an open invitation to disruptive overfitting through users being generally repetitive. It's okay for more than half your users to generate pictures of dogs or cats playing in the clouds, but you don't want that to be half your dataset.
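To make the inference-versus-training distinction concrete, here is a toy sketch in Python. It is not how any real service is built: `TinyModel`, `generate`, and `train_step` are hypothetical names, and a two-number weight list stands in for billions of parameters. The only point it illustrates is that answering prompts reads the weights, while changing them takes a separate, deliberate training pass.

```python
class TinyModel:
    """Hypothetical stand-in for a generative model: just a weight list."""

    def __init__(self, weights):
        self.weights = list(weights)

    def generate(self, prompt_features):
        # Inference: reads the weights and returns an output. Nothing is stored.
        return sum(w * x for w, x in zip(self.weights, prompt_features))


def train_step(model, batch, lr=0.1):
    """Separate offline process: one gradient step on squared error."""
    grads = [0.0] * len(model.weights)
    for features, target in batch:
        error = model.generate(features) - target
        for i, x in enumerate(features):
            grads[i] += 2 * error * x / len(batch)
    # Only here are the weights actually rewritten.
    model.weights = [w - lr * g for w, g in zip(model.weights, grads)]


model = TinyModel([0.5, -0.2])
before = list(model.weights)

# End users sending prompts: the model answers, the weights stay put.
for prompt in ([1.0, 2.0], [3.0, 0.5], [0.0, 4.0]):
    model.generate(prompt)
unchanged_after_prompts = model.weights == before

# A curated batch run through an explicit training step: the weights move.
train_step(model, [([1.0, 2.0], 1.0)])
changed_after_training = model.weights != before

print(unchanged_after_prompts, changed_after_training)
```

In a real system the training side is a batch job over a filtered dataset, which is why logged user input only ever reaches a model if someone later decides to process it into one.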

The situation being: the disagreeable aspects of this scenario are all forms of analogue jackassery. People have been swiping fiction off the net and turning it into shitty autoreader autobooks since long before generative AI came around.

The only difference here is the quality of the robot voice and the thumbnail art.

And as to worries about AO3's stories being scraped for AI training, well, AO3 is part of the generally indexed internet-

-the chicken is already in the nugget. ChatGPT gobbled it up ages ago, and Google and Bing had done so before that as part of their search indexing.

Now, every AO3 author who is upset is well within their rights to be so. Their work was pirated in a non-transformative way, and this guy's mistake was setting up with completed ebooks rather than hawking an "I will autoread any webpage" app.

But there is a certain irony to the real panic being that the work might have been turned into a dataset for the creation of new works when that panic comes from fanwork creators. If dataset training is theft then so are fanworks.

SO HERE IS THE WHOLE STORY (SO FAR).

I am on my knees begging you to reblog this post and to stop reblogging the original ones I sent out yesterday. This is the complete account with all the most recent info; the other one is just sending people down senselessly panicked avenues that no longer lead anywhere.

IN SHORT

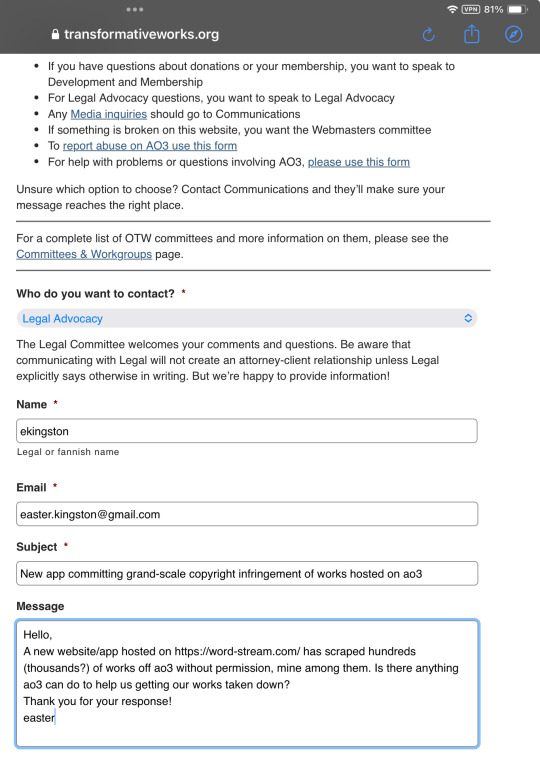

Cliff Weitzman, CEO of Speechify and (aspiring?) voice actor, used AI to scrape thousands of popular, finished works off AO3 to list them on his own for-profit website and in his attached app. He did this without getting any kind of permission from the authors of said work or informing AO3. Obviously.

When fandom at large was made aware of his theft and started pushing back, Weitzman issued a non-apology on the original social media posts—using

his dyslexia;

his intent to implement a tip-system for the plagiarized authors; and

a sudden willingness to take down the work of every author who saw my original social media posts and emailed him individually with a ‘valid’ claim,

as reasons we should allow him to continue monetizing fanwork for his own financial gain.

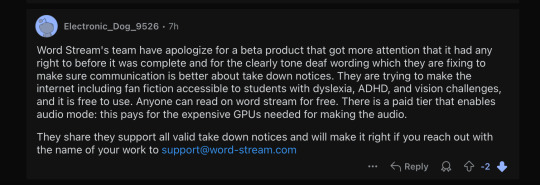

When we less-than-kindly refused, he took down his ‘apologies’ as well as his website (allegedly—it’s possible that our complaints to his web host, the deluge of emails he received or the unanticipated traffic brought it down, since there wasn’t any sort of official statement made about it), and when it came back up several hours later, all of the work formerly listed in the fan fiction category was no longer there.

THE TAKEAWAYS

1. Cliff Weitzman (aka Ofek Weitzman) is a scumbag with no qualms about taking fanwork without permission, feeding it to AI and monetizing it for his own financial gain;

2. Fandom can really get things done when it wants to, and

3. Our fanworks appear to be hidden, but they’re NOT DELETED from Weitzman’s servers, and independently published, original works are still listed without the authors' permission. We need to hold this man responsible for his theft, keep an eye on both his current and future endeavors, and take action immediately when he crosses the line again.

THE TIMELINE, THE DETAILS, THE SCREENSHOTS (behind the cut)

Sunday night, December 22nd 2024, I noticed an influx in visitors to my fic You & Me & Holiday Wine. When I searched the title online, hoping to find out where they came from, a new listing popped up (third one down, no less):

This listing is still up today, by the way, though now when you follow the link to word-stream, it just brings you to the main site. (Also, to be clear, this was not the cause for the influx of traffic to my fic; word-stream did not link back to the original work anywhere.)

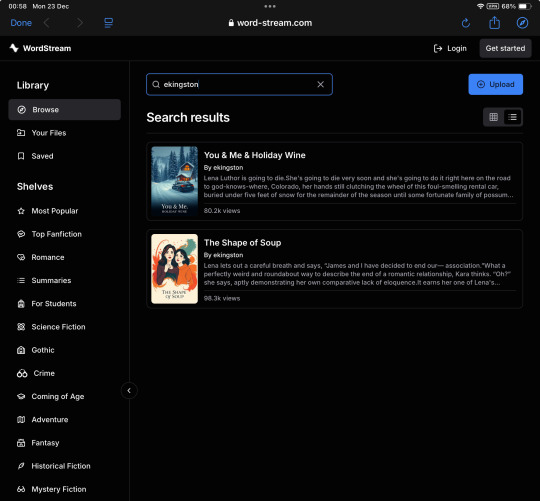

I followed the link to word-stream, where to my horror Y&M&HW was listed in its entirety—though, beyond the first half of the first chapter, behind a paywall—along with a link promising to take me—through an app downloadable on the Apple Store—to an AI-narrated audiobook version. When I searched word-stream itself for my ao3 handle I found both of my multi-chapter fics were listed this way:

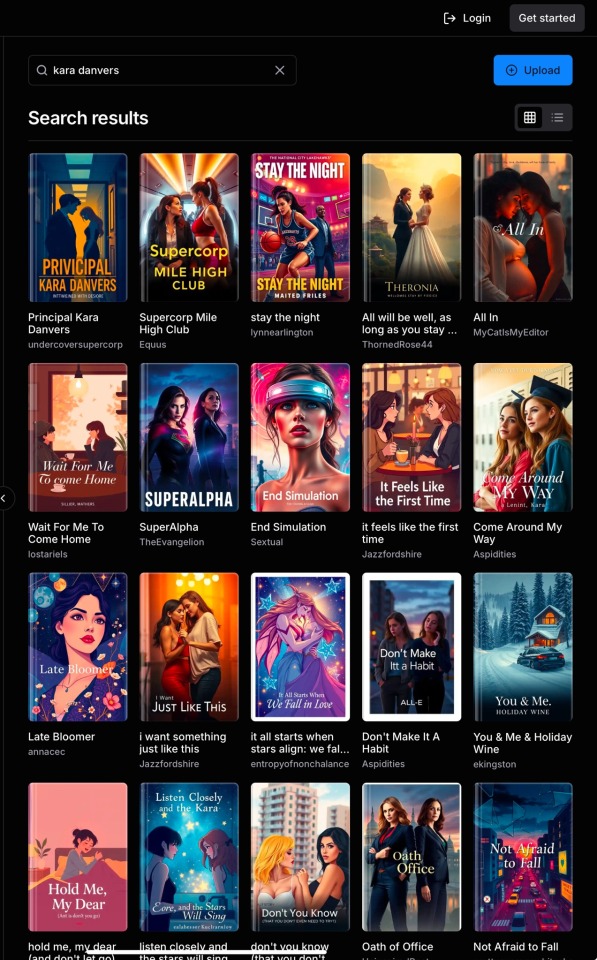

Because the tags on my fics (which included genres* and characters, but never the original IPs**) weren’t working, I put ‘Kara Danvers’ into the search bar and discovered that many more supercorp fics (Supergirl TV fandom, Kara Danvers/Lena Luthor pairing) were listed.

I went looking online for any mention of word-stream and AI plagiarism (the covers—as well as the ridiculously inflated number of reviews and ratings—made it immediately obvious that AI fuckery was involved), but found almost nothing: only one single Reddit post had been made, and it received (at that time) only a handful of upvotes and no advice.

I decided to make a tumblr post to bring the supercorp fandom up to speed about the theft. I draw as well as write for fandom and I’ve only ever had to deal with art theft—which has a clear set of steps to take depending on where said art was reposted—and I was at a loss regarding where to start in this situation.

After my post went up I remembered Project Copy Knight, which is worth commending for the work they’ve done to get fic stolen from AO3 taken down from monetized AI 'audiobook’ YouTube accounts. I reached out to @echoekhi, asking if they’d heard of this site and whether they could advise me on how to get our works taken down.

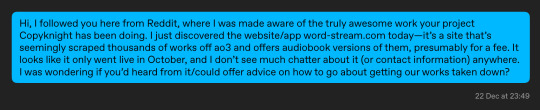

While waiting for a reply I looked into Copy Knight’s methods and decided to contact OTW’s legal department:

And then I went to bed.

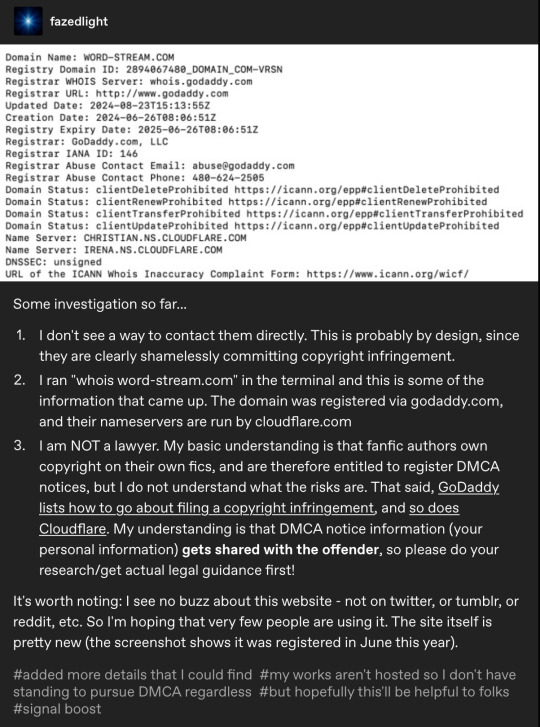

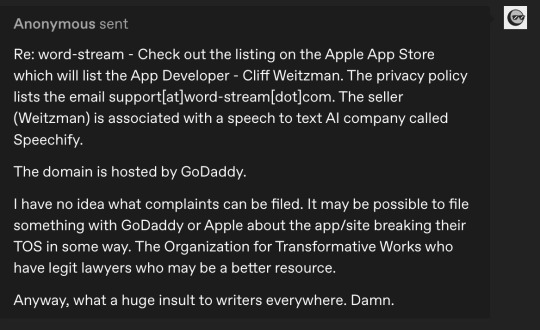

By morning, tumblr friends @makicarn and @fazedlight as well as a very helpful tumblr anon had seen my post and done some very productive sleuthing:

@echoekhi had also gotten back to me, advising me, as expected, to contact the OTW. So I decided to sit tight until I got a response from them.

That response came only an hour or so later:

Which was 100% understandable, but still disappointing—I doubted a handful of individual takedown requests would accomplish much, and I wasn’t eager to share my given name and personal information with Cliff Weitzman himself, which is unavoidable if you want to file a DMCA.

I decided to take it to Reddit, hoping it would gain traction in the wider fanfic community, considering so many fandoms were affected. My Reddit posts (with the updates at the bottom as they were emerging) can be found here and here.

A helpful Reddit user posted a guide on how users could go about filing a DMCA against word-stream here (to wobbly-at-best results)

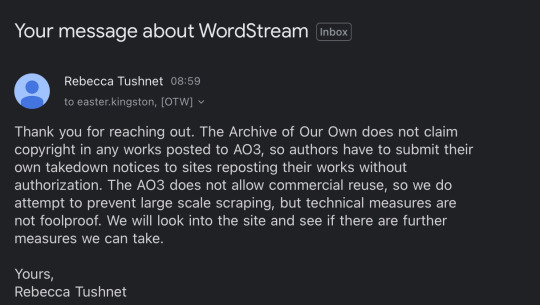

A different helpful Reddit user signed up to get insight into word-stream's pricing. Comment is here.

Smells unbelievably scammy, right? In addition to those audacious prices—though in all fairness any amount of money would be audacious considering every work listed is accessible elsewhere for free—my dyscalculia is screaming silently at the sight of that completely unnecessary amount of intentionally obscured numbers.

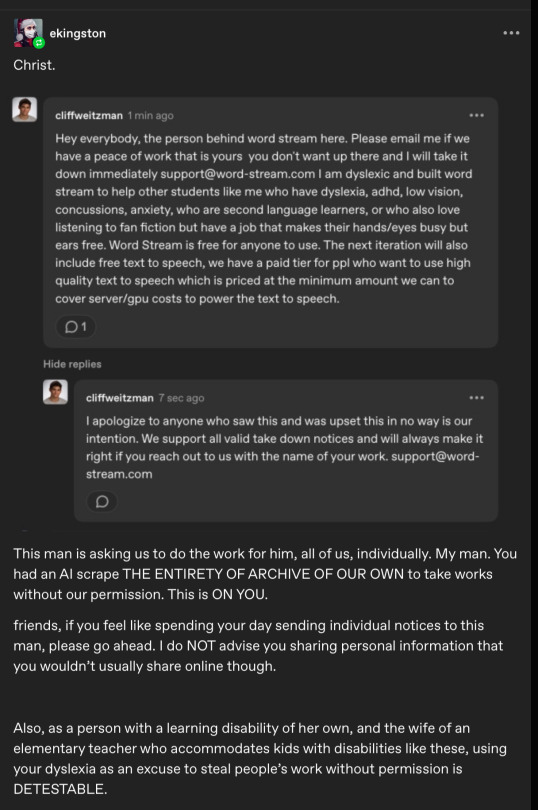

Speaking of which! As soon as the post on r/AO3—and, as a result, my original tumblr post—began taking off properly, sometime around 1 pm, jumpscare! A notification that a tumblr account named @cliffweitzman had commented on my post, and I got a bit mad about the gist of his message :

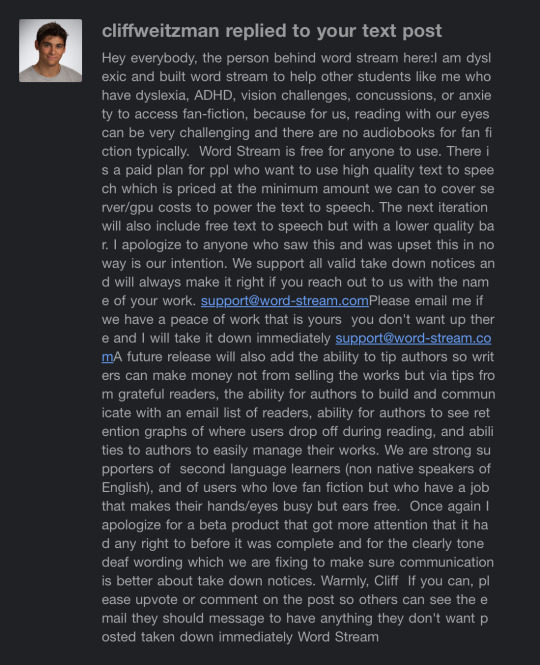

Fortunately he caught plenty of flak in the comments from other users (truly you should check out the comment section, it is extremely gratifying and people are making tremendously good points), in response to which, of course, he first tried to both reiterate and renegotiate his point in a second, longer comment (which I didn’t screenshot in time so I’m sorry for the crappy notification email formatting):

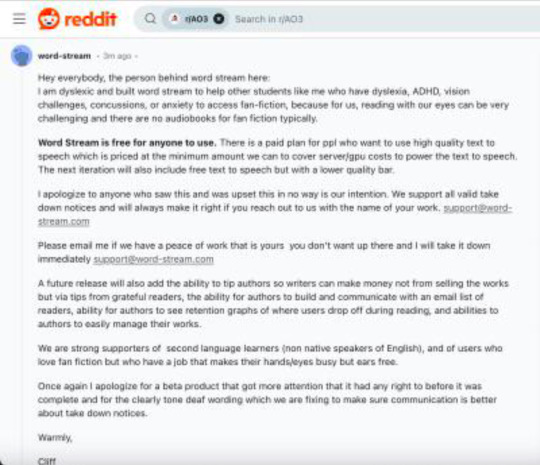

which he then proceeded to also post to Reddit (this is another Reddit user’s screenshot, I didn’t see it at all, the notifications were moving too fast for me to follow by then)

... where he got a roughly equal amount of righteously furious replies. (Check downthread, they're still there, all the way at the bottom.)

After which Cliff went ahead & deleted his messages altogether.

It’s not entirely clear whether his account was suspended by Reddit soon after or whether he deleted it himself, but considering his tumblr account is still intact, I assume it’s the former. He made a handful of sock puppet accounts to play around with for a while, both on Reddit and Tumblr, only one of which I have a screenshot of, but since they all say roughly the same thing, you’re not missing much:

And then word-stream started throwing a DNS error.

That lasted for a good number of hours, which was unfortunately right around the time that a lot of authors first heard about the situation and started asking me individually how to find out whether their work was stolen too. I do not have that information and I am unclear on the parameters Weitzman set for his AI scraper, so this is all conjecture: it LOOKS like the fics that were lifted had three things in common:

They were completed works;

They had several thousand kudos or more on AO3; and

They were written by authors who had actively posted or updated work over the past year.

If anyone knows more about these parameters or has info that counters my observation, please let me know!

I finally thought to check/alert evil Twitter during this time, and found out that the news was doing the rounds there already. I made a quick thread summarizing everything that had happened just in case. You can find it here.

I went to Bluesky too, where fandom was doing all the heavy lifting for me already, so I just reskeeted, as you do, and carried on.

Sometime in the very early evening, word-stream went back up—but the fan fiction category was nowhere to be seen. Tentative joy and celebration!***

That’s when several users—the ones who had signed up for accounts to gain intel and had accessed their own fics that way—reported that their work could still be accessed through their history. Relevant Reddit post here.

Sooo—

We’re obviously not done. The fanwork that was stolen by Weitzman may be inaccessible through his website right now, but they aren’t actually gone. And the fact that Weitzman wasn’t willing to get rid of them altogether means he still has plans for them.

This was my final edit on my Reddit post before turning off notifications, and it's pretty much where my head will be at for at least the foreseeable future:

Please feel free to add info in the comments, make your own posts, take whatever action you want to take to protect your work. I only beg you—seriously, I’m on my knees here—to not give up like I saw a handful of people express the urge to do. Keep sharing your creative work and remain vigilant and stay active to make sure we can continue to do so freely. Visit your favorite fics, and the ones you’ve kept in your ‘marked for later’ lists but never made time to read, and leave kudos, leave comments, support your fandom creatives, celebrate podficcers and support AO3. We created this place and it’s our responsibility to keep it alive and thriving for as long as we possibly can.

Also FUCK generative AI. It has NO place in fandom spaces.

THE 'SMALL' PRINT (some of it in all caps):

*Weitzman knew what he was doing and can NOT claim ignorance. One, it’s pretty basic kindergarten stuff that you don’t steal some other kid’s art project and present it as your own only to act surprised when they protest and then tell the victim that they should have told you sooner that they didn’t want their project stolen. And two, he was very careful never to list the IPs these fanworks were based on, so it’s clear he was at least familiar enough with the legalities to not get himself in hot water with corporate lawyers. Fucking over fans, though, he figured he could get away with that.

**A note about the AI that Weitzman used to steal our work: it’s even greasier than it looks at first glance. It’s not just the method he used to lift works off AO3 and then regurgitate onto his own website and app. Looking beyond the untold horrors of his AI-generated cover ‘art’, in many cases these covers attempt to depict something from the fics in question that can’t be gleaned from their summaries alone. In addition, my fics (and I assume the others, as well) were listed with generated genres; tags that did not appear anywhere in or on my fic on AO3 and were sometimes scarily accurate and sometimes way off the mark. I remember You & Me & Holiday Wine had ‘found family’ (100% correct, but not tagged by me as such) and I believe The Shape of Soup was listed as, among others, ‘enemies to friends to lovers’ and ‘love triangle’ (both wildly inaccurate). Even worse, not all the fic listed (as authors on Reddit pointed out) came with their original summaries at all. Often the entire summary was AI-generated. All of these things make it very clear that it was an all-encompassing scrape—not only were our fics stolen, they were also fed word-for-word into the AI Weitzman used and then analyzed to suit Weitzman’s needs. This means our work was literally fed to this AI to basically do with whatever its other users want, including (one assumes) text generation.

***Fan fiction appears to have been made (largely) inaccessible on word-stream at this time, but I’m hearing from several authors that their original, independently published work, which is listed at places like Kindle Unlimited, DOES still appear in word-stream’s search engine. This obviously hurts writers, especially independent ones, who depend on these works for income and, as a rule, don’t have a huge budget or a legal team with oceans of time to fight these battles for them. If you consider yourself an author in the broader sense, beyond merely existing online as a fandom author, beyond concerns that your own work is immediately at risk, DO NOT STOP MAKING NOISE ABOUT THIS.

Again, please, please PLEASE reblog this post instead of the one I sent originally. All the information is here, and it's driving me nuts to see the old ones are still passed around, sending people on wild goose chases.

Thank you all so much.

48K notes

Text

AI Model Development: How to Build Intelligent Models for Business Success

AI model development is revolutionizing how companies tackle challenges, make informed decisions, and delight customers. If lengthy reports, slow processes, or missed insights are holding you back, you’re in the right place. You’ll learn practical steps to leverage AI models, plus why Ahex Technologies should be your go-to partner.

Read the original article for a more in-depth guide: AI Model Development by Ahex

What Is AI Model Development?

AI model development is the practice of designing, training, and deploying algorithms that learn from your data to automate tasks, make predictions, or uncover hidden insights. By turning raw data into actionable intelligence, you empower your team to focus on strategy while machines handle the heavy lifting.

The AI Model Development Process

Define the Problem Clarify the business goal: Do you need sales forecasts, customer-churn predictions, or automated text analysis?

Gather & Prepare Data Collect, clean, and structure data from internal systems or public sources. Quality here drives model performance.

Select & Train the Model Choose an algorithm: simple regression for straightforward tasks, or neural nets for complex patterns. Split data into training and testing sets for validation.

Test & Validate Measure accuracy, precision, recall, or other KPIs. Tweak hyperparameters until you achieve reliable results.

Deploy & Monitor Integrate the model into your workflows. Continuously track performance and retrain as data evolves.
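The split, train, and validate steps above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a production recipe: the data is synthetic (a made-up "months inactive" feature with a churn label), the "model" is a single threshold rather than a regression or neural net, and the helper names `split_rows`, `fit_threshold`, and `evaluate` are hypothetical.

```python
import random


def split_rows(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the rows and split it into (train, test)."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def fit_threshold(train):
    """'Training': pick the cutoff that best matches the training labels."""
    candidates = sorted({x for x, _ in train})
    return max(
        candidates,
        key=lambda t: sum((x >= t) == (y == 1) for x, y in train),
    )


def evaluate(threshold, rows):
    """Compute accuracy, precision, and recall for a threshold classifier."""
    tp = sum(1 for x, y in rows if x >= threshold and y == 1)
    fp = sum(1 for x, y in rows if x >= threshold and y == 0)
    fn = sum(1 for x, y in rows if x < threshold and y == 1)
    tn = sum(1 for x, y in rows if x < threshold and y == 0)
    return {
        "accuracy": (tp + tn) / len(rows),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Synthetic data: (months_inactive, churned). Purely illustrative.
rows = [(m, 1 if m >= 6 else 0) for m in range(12)] * 5

train, test = split_rows(rows)
threshold = fit_threshold(train)
train_metrics = evaluate(threshold, train)
test_metrics = evaluate(threshold, test)  # held-out rows never seen in fitting

print(threshold, train_metrics, test_metrics)
```

The numbers that matter are the ones on `test`, since those rows played no part in choosing the threshold. In practice a library such as scikit-learn would likely replace all three helpers.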

AI Model Development in 2025

Custom AI models are no longer optional; they’re essential. Off-the-shelf solutions can’t match bespoke systems trained on your data. In 2025, businesses that leverage tailored AI enjoy faster decision-making, sharper insights, and increased competitiveness.

Why Businesses Need Custom AI Model Development

Precision & Relevance: Models built on your data yield more accurate, context-specific insights.

Data Security: Owning your models means full control over sensitive information — crucial in finance, healthcare, and beyond.

Scalability: As your business grows, your AI grows with you. Update and retrain instead of starting from scratch.

How to Create an AI Model from Scratch

Define the Problem

Gather & Clean Data

Choose an Algorithm (e.g., regression, classification, deep learning)

Train & Validate on split datasets

Deploy & Monitor in production

Break each step into weekly sprints, and you’ll have a minimum viable model in just a few weeks.

How to Make an AI Model That Delivers Results

Set Clear Objectives: Tie every metric to a business outcome such as revenue growth, cost savings, or customer retention.

Invest in Data Quality: The “garbage in, garbage out” rule is real. High-quality data yields high-quality insights.

Choose Explainable Models: Transparency builds trust with stakeholders and meets regulatory requirements.

Stress-Test in Real Scenarios: Validate your model against edge cases to catch blind spots.

Maintain & Retrain: Commit to ongoing model governance to adapt to new trends and data.

Top Tools & Frameworks to Build AI Models That Work

PyTorch: Flexible dynamic graphs for rapid prototyping.

Keras (TensorFlow): User-friendly API with strong community support.

LangChain: Orchestrates large language models for complex applications.

Vertex AI: Google’s end-to-end platform with AutoML.

Amazon SageMaker: AWS-managed service covering development to deployment.

Langflow & AutoGen: Low-code solutions to accelerate AI workflows.

Breaking Down AI Model Development Challenges

Data Quality & Availability: Address gaps early to avoid costly rework.

Transparency (“Black Box” Issues): Use interpretable models or explainability tools.

High Costs & Skills Gaps: Leverage a specialized partner to access expertise and control budgets.

Integration & Scaling: Plan for seamless API-based deployment into your existing systems.

Security & Compliance: Ensure strict protocols to protect sensitive data.

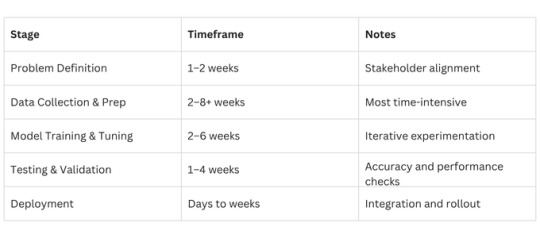

Typical AI Model Timelines

For simple pilots, expect 1–2 months; complex enterprise AI can take 4+ months.

Cost Factors for AI Development

Why Ahex Technologies Is the Best Choice for Mobile App Development

(Focusing on expertise, without diving into technical app details)

Holistic AI Expertise: Our AI solutions integrate seamlessly with mobile platforms you already use.

Client-First Approach: We tailor every model to your unique workflow and customer journey.

End-to-End Support: From concept to deployment and beyond, we ensure your AI and mobile efforts succeed in lockstep.

Proven Track Record: Dozens of businesses trust us to deliver secure, scalable, and compliant AI solutions.

How Ahex Technologies Can Help You Build Smarter AI Models

At Ahex Technologies, our AI Development Services cover everything from proof-of-concept to full production rollouts. We:

Diagnose your challenges through strategic workshops

Design custom AI roadmaps aligned to your goals

Develop robust, explainable models

Deploy & Manage your AI in the cloud or on-premises

Monitor & Optimize continuously for peak performance

Learn more about our approach: AI Development Services Ready to get started? Contact us

Final Thoughts: Choosing the Right AI Partner

Selecting a partner who understands both the technology and your business is critical. Look for:

Proven domain expertise

Transparent communication

Robust security practices

Commitment to ongoing optimization

With the right partner, like Ahex Technologies, you’ll transform data into a competitive advantage.

FAQs on AI Model Development

1. What is AI model development? Designing, training, and deploying algorithms that learn from data to automate tasks and make predictions.

2. What are the 4 types of AI models? Reactive machines, limited memory, theory of mind, and self-aware AI — ranging from simple to advanced cognitive abilities.

3. What is the AI development life cycle? Problem definition → Data prep → Model building → Testing → Deployment → Monitoring.

4. How much does AI model development cost? Typically $10,000–$500,000+, depending on project complexity, data needs, and integration requirements.

Ready to turn your data into growth? Explore AI Model Development by Ahex Our AI Development Services Let’s talk!

0 notes

Text

The Rise of Custom LLMs: Why Enterprises Are Moving Beyond Off-the-Shelf AI

In the rapidly evolving landscape of artificial intelligence, one trend stands out among forward-thinking companies: the rising investment in custom Large Language Model (LLM) development services. From automating internal workflows to enhancing customer experience and driving innovation, custom LLMs are becoming indispensable tools for enterprises aiming to stay competitive in 2025 and beyond.

While off-the-shelf LLM solutions like ChatGPT and Claude offer powerful capabilities, they often fall short when it comes to aligning with an organization’s specific goals, data needs, and compliance requirements. This gap has led many businesses to pursue tailor-made LLM development services that are optimized for their unique workflows, industry demands, and long-term strategies.

Let’s explore why custom LLM development is now a critical investment for companies across industries—and what it means for the future of AI adoption.

The Limitations of Off-the-Shelf LLMs

Off-the-shelf LLMs are great for general use cases, but they come with constraints that limit enterprise adoption in certain domains. These models are trained on public datasets, and while they can provide impressive general language capabilities, they struggle with:

Domain-specific knowledge: They often lack context around specialized terminology in industries like legal, finance, or healthcare.

Data privacy concerns: Public LLMs may not meet internal data handling, security, or compliance needs.

Limited control: Companies can’t fine-tune or extend model capabilities to fit proprietary processes or logic.

Generic outputs: The lack of personalization often leads to low relevance or alignment with brand tone, customer context, or specific operational workflows.

As companies scale their AI efforts, they need models that deeply understand their operations and can evolve with them. This is where custom LLM development services enter the picture.

Custom LLMs: Tailored to Fit Business Needs

Custom LLM development allows organizations to build models that are trained on their own datasets, integrate seamlessly with existing software, and address very specific tasks. These LLMs can be designed to follow particular business rules, support multilingual requirements, and deliver high-precision results for mission-critical applications.

Personalized Workflows

A custom LLM can be tailored to handle unique workflows—whether it’s analyzing thousands of legal contracts, generating hyper-personalized marketing copy, or assisting customer service agents with real-time knowledge retrieval. This kind of specificity is nearly impossible with generic LLMs.

Enhanced Performance

When trained on proprietary datasets, custom LLMs significantly outperform general models on niche use cases. They understand organizational terminology, workflows, and customer interactions—leading to better predictions, more accurate outputs, and ultimately, higher productivity.

Seamless Integration

Custom models can be built to integrate tightly with a company’s tech stack—ERP systems, CRMs, document repositories, and even internal APIs. This allows companies to deploy LLMs not just as standalone assistants, but as core components of digital infrastructure.

Key Business Drivers Behind the Investment

Let’s look at the top reasons why companies are increasingly turning to custom LLM development services in 2025.

1. Competitive Differentiation

AI is no longer a buzzword—it’s a key differentiator. Companies that leverage LLMs to create unique customer experiences or optimize operations gain a real edge. With a custom LLM, businesses can offer features or services that competitors using standard models simply can’t match.

For example, a custom-trained LLM in eCommerce could generate personalized product descriptions, SEO content, and chat support tailored to a brand’s voice and buyer personas—something a general LLM cannot consistently deliver.

2. Data Ownership and Security

As data regulations like GDPR, HIPAA, and India’s DPDP Act become stricter, companies must be cautious about how and where their data is processed. Custom LLMs, especially those developed for on-premises or private cloud environments, offer full data control.

This ensures sensitive internal data doesn’t leave the company’s infrastructure while still allowing AI to add value. It also reduces dependency on external SaaS vendors, mitigating third-party risk.

3. Cost Efficiency at Scale

Although custom LLM development requires an initial investment, it becomes more cost-effective at scale. Businesses no longer pay per token or API call to third-party platforms. Instead, they gain a reusable asset that can be deployed across multiple departments, from customer service to legal, HR, marketing, and operations.

In fact, once developed, a custom LLM can be fine-tuned incrementally with minimal cost to support new functions or user roles—maximizing long-term ROI.
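Whether the "cost-effective at scale" claim holds depends entirely on volume, which a quick break-even calculation makes visible. The sketch below is illustrative only: every figure (upfront cost, hosting cost, per-token price, monthly volume) is a made-up assumption rather than a quote from any vendor, and `break_even_months` is a hypothetical helper. It also ignores staffing, retraining, and hardware refresh costs.

```python
import math


def break_even_months(upfront_cost, monthly_hosting,
                      api_price_per_million_tokens, million_tokens_per_month):
    """Months until a self-hosted custom model costs less than a pay-per-token API.

    Returns None when the API is cheaper every month, so the upfront
    investment is never recovered.
    """
    api_monthly = api_price_per_million_tokens * million_tokens_per_month
    monthly_savings = api_monthly - monthly_hosting
    if monthly_savings <= 0:
        return None
    return math.ceil(upfront_cost / monthly_savings)


# Hypothetical numbers, in dollars: $120k to build, $5k/month to host,
# versus an API priced at $10 per million tokens.
high_volume = break_even_months(120_000, 5_000, 10, 2_000)  # 2 billion tokens/month
low_volume = break_even_months(120_000, 5_000, 10, 300)     # 300 million tokens/month

print(high_volume)  # 8
print(low_volume)   # None
```

With these assumed figures the heavy user recovers the investment in eight months, while the light user never does, so the argument applies only above a certain usage level.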

4. Strategic Control Over AI Capabilities

With public models, businesses are at the mercy of third-party update cycles, model versions, or feature restrictions. Custom development gives enterprises full strategic control over model architecture, fine-tuning frequency, interface design, and output formats.

This control is especially valuable for companies in regulated industries or those that require explainable AI outputs for auditing and compliance.

5. Cross-Functional Automation

Custom LLMs are versatile. A single base model can be adapted for multiple departments. For example:

In HR, it can summarize resumes and generate interview questions.

In finance, it can analyze contracts and detect discrepancies.

In legal, it can extract key clauses from long agreements.

In sales, it can draft client proposals in minutes.

In support, it can offer contextual responses from company knowledge bases.

This level of internal automation isn’t just cost-saving—it’s transformative.

Use Cases Driving Adoption

Let’s look at real-world scenarios where companies are seeing tangible benefits from investing in custom LLMs.

Legal and Compliance

Law firms and legal departments use LLMs trained on case law, internal documentation, and policy frameworks to assist with contract analysis, regulatory compliance checks, and legal research.

Custom models significantly cut down time spent on manual review and reduce the risk of overlooking key clauses or compliance issues.

Healthcare and Life Sciences

In healthcare, custom LLMs are trained on clinical data, EMRs, and research journals to support faster diagnostics, patient communication, and research summarization—all while ensuring HIPAA compliance.

Pharmaceutical companies use them to analyze medical literature and streamline drug discovery processes.

Finance and Insurance

Financial institutions use custom models to generate reports, review risk assessments, assist with fraud detection, and respond to customer queries—all with high accuracy and in a compliant manner.

These models can also be integrated with KYC/AML systems to flag unusual patterns and speed up customer onboarding.

Retail and eCommerce

Brands are leveraging custom LLMs for hyper-personalized marketing, automated product tagging, chatbot-driven sales assistance, and voice commerce. Models trained on brand data ensure messaging consistency and deeper customer engagement.

The Technology Stack Behind Custom LLM Development

Building a custom LLM involves a blend of cutting-edge tools and best practices:

Model selection: Companies can start from foundational models like LLaMA, Mistral, or Falcon, depending on their scale and licensing needs.

Fine-tuning: Using internal datasets, LLMs are fine-tuned to reflect domain-specific language, tone, and context.

Reinforcement learning: RLHF (Reinforcement Learning from Human Feedback) can be used to align model outputs with business goals.

Deployment: Models can be deployed on-premise, in private clouds, or with edge capabilities for compliance and performance.

Monitoring: Continuous evaluation of accuracy, latency, and output quality ensures optimal performance post-deployment.
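
As a rough illustration of the monitoring step, the sketch below scores a model against a small set of reference answers and records latency. It assumes the model can be called as a plain function; `stub_model` and the test cases are hypothetical stand-ins for a real deployed endpoint and a real evaluation set.

```python
import statistics
import time
from typing import Callable


def evaluate(model: Callable[[str], str], cases: list[tuple[str, str]]) -> dict:
    """Run each prompt through the model and record accuracy and latency."""
    latencies = []
    correct = 0
    for prompt, expected in cases:
        start = time.perf_counter()
        answer = model(prompt)
        latencies.append(time.perf_counter() - start)
        # Exact match after trimming and lowercasing; real evaluations
        # usually need a more forgiving comparison.
        if answer.strip().lower() == expected.strip().lower():
            correct += 1
    return {
        "accuracy": correct / len(cases),
        "median_latency_s": statistics.median(latencies),
        "max_latency_s": max(latencies),
    }


def stub_model(prompt: str) -> str:
    # Hypothetical stand-in for a deployed model endpoint.
    canned = {"Capital of France?": "Paris", "2 + 2?": "4"}
    return canned.get(prompt, "unknown")


report = evaluate(
    stub_model,
    [("Capital of France?", "paris"), ("2 + 2?", "4"), ("Largest planet?", "Jupiter")],
)
print(report)
```

Running the same evaluation on a schedule and comparing reports over time is one simple way to notice accuracy or latency drifting after deployment.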

Many companies partner with LLM development service providers who specialize in this full-stack development—from model selection and training to deployment and maintenance.

Why Now? The Timing Is Right

Several converging trends make 2025 the ideal time to invest in custom LLMs:

Open-source LLMs are more powerful and accessible than ever before.

GPU costs have declined, making training and inference more affordable.

Enterprise AI maturity has improved, with clearer internal processes and governance models in place.

Customer expectations are higher, and personalized, AI-driven experiences are becoming the norm.

New developer tools for fine-tuning, evaluating, and serving models have matured, reducing development friction.

Together, these factors have lowered the barrier to entry and increased the payoff for custom LLM initiatives.

Conclusion

As AI becomes more deeply integrated into the modern business stack, the limitations of one-size-fits-all models are becoming clear. Companies need smarter, safer, and more context-aware AI tools—ones that speak their language, understand their data, and respect their constraints.

Custom LLM development services offer exactly that. By investing in tailored models, organizations unlock the full potential of AI to automate tasks, reduce costs, and deliver exceptional customer and employee experiences.

For businesses looking to lead in the age of AI, the decision is no longer “if” but “how fast” they can build and deploy their own LLMs.

#blockchain#crypto#ai#dex#ai generated#cryptocurrency#blockchain app factory#ico#blockchainappfactory#ido

Why Should You Hire a Custom AI Development Company in 2025?

In today’s tech-driven economy, artificial intelligence is no longer a futuristic concept — it’s a business imperative. Companies across sectors are integrating AI to optimize operations, personalize customer experiences, and stay ahead of the curve. But when it comes to implementing AI effectively, many organizations face a critical question: Should we build AI solutions in-house, use off-the-shelf tools, or hire a custom AI development company?

In 2025, the clear answer for most forward-thinking businesses is to hire a custom AI development company. Here's why.

1. Tailored Solutions for Unique Business Needs

Off-the-shelf AI solutions often follow a one-size-fits-all model. They may not accommodate the specific workflows, goals, or data types of your organization. A custom AI development company creates solutions tailored to your exact needs — whether it's automating industry-specific tasks, building advanced recommendation engines, or developing predictive models using proprietary data.

Custom-built AI aligns closely with your business goals, delivering higher ROI and better integration into your existing systems.

2. Access to Specialized Expertise

AI is a rapidly evolving field with complex technologies such as machine learning (ML), natural language processing (NLP), computer vision, and deep learning. Hiring and maintaining an in-house AI team with this diverse skill set is time-consuming and costly.

A custom AI development company brings a team of seasoned professionals with proven experience across different AI domains. They stay updated on the latest tools, frameworks, and best practices, ensuring your project is built using cutting-edge technology.

3. Faster Time-to-Market

Time is a critical asset in competitive markets. Custom AI development companies have established processes, agile workflows, and reusable frameworks that speed up development. From discovery to deployment, they can deliver scalable solutions much faster than most in-house teams that are starting from scratch.

This means quicker adoption, faster feedback loops, and earlier access to AI-powered benefits like automation, insights, and customer engagement.

4. Scalability and Long-Term Support

AI solutions are not "set-it-and-forget-it" systems. They require ongoing monitoring, optimization, and scaling as your business grows or market conditions change. Custom AI development firms offer post-deployment support and maintenance services, helping ensure the models continue to deliver accurate results and meet performance benchmarks.

Additionally, custom solutions can be designed with scalability in mind, allowing your systems to grow alongside your business without the need for complete redevelopment.

5. Better Data Utilization

One of the most significant advantages of custom AI is its ability to work with your proprietary datasets. Whether structured, unstructured, real-time, or historical, custom AI models can be trained specifically on your data to derive more accurate insights and predictions.

This enables smarter automation, enhanced forecasting, and more personalized customer experiences — all built around your unique business context.

6. Enhanced Security and Compliance

In 2025, data privacy and compliance are more important than ever. Many industries are subject to strict regulations (like GDPR, HIPAA, or CCPA). Off-the-shelf solutions may not offer the flexibility to meet your specific compliance requirements.

A custom AI development company can implement advanced security protocols and tailor the solution to comply with industry regulations, ensuring your AI systems remain ethical, secure, and legally compliant.

7. Cost-Effectiveness in the Long Run

While the upfront investment in custom AI may be higher than using a pre-built tool, the long-term value is significantly greater. Custom solutions are built for efficiency, accuracy, and longevity. They eliminate the recurring licensing costs, unnecessary features, and scalability limitations of third-party platforms.

Plus, the improved decision-making, process automation, and customer satisfaction generated by bespoke AI often lead to substantial cost savings and revenue growth.

8. Competitive Edge in a Crowded Market

In a saturated market, differentiation is key. Custom AI allows businesses to build proprietary solutions that give them a competitive advantage — whether it’s a smarter product recommendation engine, a unique fraud detection system, or a real-time customer support bot.

By harnessing AI in a way that’s uniquely suited to your company’s operations, you create a technological edge that competitors can’t easily replicate.

Final Thoughts

The AI landscape in 2025 is more mature, dynamic, and opportunity-rich than ever before. Businesses that want to thrive must go beyond generic solutions and invest in intelligent systems designed specifically for them.

Hiring a custom AI development company empowers you to harness the full potential of artificial intelligence — tailored to your data, your goals, and your industry. It’s not just about keeping up with trends — it’s about leading the transformation and unlocking new possibilities.

If you're ready to build smarter, scale faster, and drive better outcomes, partnering with a custom AI development company could be your smartest investment in 2025.

0 notes

Text

I recently worked for a place that wanted to use AI to streamline their regulatory filings.

Like, on one hand, if you limit your dataset to your historical filings to get the language right, maaaaybe you can save time on some routine-ish paperwork? But if you're just pulling chatGPT off the shelf and asking it to fill in the format, you're fucked. Also, is the cost of using the enterprise AI less than just...doing the work? I haven't found anything where that's the case yet.

When I became freelance, one of my first marketing contracts was fixing my boss' blog posts and articles that he had 'written' with ChatGPT.

It was the single most soul-sucking task I have ever done in my life. I could have ghostwritten it for them faster than it took me to edit it.

ChatGPT would often hallucinate features of the product, and often required more fact-checking than the article was worth.

It is absolutely no surprise that 77% of employees report that AI has increased workloads and lowered productivity, while 96% of executives believe it has boosted it.

The reality is that it's only boosted the amount of work employees have to do which leads to increased burnout, stress and job dissatisfaction.

Source.

Insights via Amazon Prime Movies and TV Shows Dataset

Introduction

In a rapidly evolving digital landscape, understanding viewer behavior is critical for streaming platforms and analytics companies. A leading streaming analytics firm needed a reliable and scalable method to gather rich content data from Amazon Prime. They turned to ArcTechnolabs for a tailored data solution powered by the Amazon Prime Movies and TV Shows Dataset. The goal was to decode audience preferences, forecast engagement, and personalize content strategies. By leveraging structured, comprehensive data, the client aimed to redefine content analysis and elevate user experience through data-backed decisions.

The Client

The client is a global streaming analytics firm focused on helping OTT platforms improve viewer engagement through data insights. With users across North America and Europe, the client analyzes millions of data points across streaming apps. They were particularly interested in scraping Amazon Prime Video content to refine content curation strategies and trend forecasting. ArcTechnolabs provided the capability to extract Amazon Prime Video data efficiently and compliantly, enabling deeper analysis of Amazon Prime shows and movies for smarter business outcomes.

Key Challenges

The firm faced difficulties in consistently collecting detailed, structured content metadata from Amazon Prime. Their internal scraping setup lacked scale and often broke with site updates. They couldn’t track changing metadata, genres, cast info, episode drops, or user engagement indicators in real time. Additionally, there was no existing pipeline to gather reliable streaming media data from Amazon Prime or track regional content updates. Their internal tech stack also lacked the ability to filter, clean, and normalize data across categories and territories. Off-the-shelf Amazon Prime Video Data Scraping Services were either limited in scope or failed to deliver structured datasets. The client also struggled to gain competitive advantage due to limited exposure to OTT Streaming Media Review Datasets, which limited content sentiment analysis. They required a solution that could extract Amazon Prime streaming media data at scale and integrate it seamlessly with their proprietary analytics platform.

Key Solution

ArcTechnolabs provided a customized data pipeline built around the Amazon Prime Movies and TV Shows Dataset, designed to deliver accurate, timely, and well-structured metadata. The solution was powered by our robust Web Scraping OTT Data engine and supported by our advanced Web Scraping Services framework. We deployed high-performance crawlers with adaptive logic to capture real-time data, including show descriptions, genres, ratings, and episode-level details. With Mobile App Scraping Services, the dataset was enriched with data from Amazon Prime’s mobile platforms, ensuring broader coverage. Our Web Scraping API Services allowed seamless integration with the client's existing analytics tools, enabling them to track user engagement metrics and content trends dynamically. The solution ensured regional tagging, global categorization, and sentiment analysis inputs using linked OTT Streaming Media Review Datasets, giving the client a full-spectrum view of viewer behavior across platforms.

Client Testimonial

"ArcTechnolabs exceeded our expectations in delivering a highly structured, real-time Amazon Prime Movies and TV Shows Dataset. Their scraping infrastructure was scalable and resilient, allowing us to dig deep into viewer preferences and optimize our recommendation engine. Their ability to integrate mobile and web data in a single feed gave us unmatched insight into how content performs across devices. The collaboration has helped us become more predictive and precise in our analytics."

— Director of Product Analytics, Global Streaming Insights Firm

Conclusion

This partnership demonstrates how ArcTechnolabs empowers streaming intelligence firms to extract actionable insights through advanced data solutions. By tapping into the Amazon Prime Movies and TV Shows Dataset, the client was able to break down barriers in content analysis and improve viewer experience significantly. Through a combination of custom Web Scraping Services, mobile integration, and real-time APIs, ArcTechnolabs delivered scalable tools that brought visibility and control to content strategy. As content-driven platforms grow, data remains the most powerful tool, and ArcTechnolabs continues to lead the way.

Source >> https://www.arctechnolabs.com/amazon-prime-movies-tv-dataset-viewer-insights.php

#AmazonPrimeMoviesAndTVShowsDataset#WebScrapingAmazonPrimeVideoContent#AmazonPrimeVideoDataScrapingServices#OTTStreamingMediaReviewDatasets#AnalysisOfAmazonPrimeTVShows#WebScrapingOTTData#AmazonPrimeTVShows#MobileAppScrapingServices

Leading Custom Software Development Company in Dubai

Top Custom Software Development Company in Dubai – Find the Best with Topsdraw

In today’s fast-evolving digital world, businesses require technology solutions that go beyond generic off-the-shelf software. Every enterprise, whether a startup or an established brand, needs tailor-made systems that align perfectly with their goals and workflows. That’s why more companies are seeking out a custom software development company in Dubai to create solutions that offer flexibility, security, and long-term scalability.

At Topsdraw, we understand that finding the right development partner is a critical step in achieving digital transformation. Our platform is built to connect businesses with the top custom software development companies in Dubai, offering carefully verified listings of agencies that deliver high-quality, client-focused solutions.

Why Custom Software Development Matters

Unlike generic software, custom software is designed from the ground up based on your business requirements. It is purpose-built to integrate seamlessly with your processes, making operations more efficient, reducing errors, and providing a better user experience for both employees and customers.

Businesses that invest in custom software development often experience higher productivity, better data management, improved customer service, and reduced long-term costs. In competitive markets like Dubai, having a customized digital infrastructure can be the key differentiator that propels a company ahead.

Why Choose a Custom Software Development Company in Dubai?

Dubai has rapidly emerged as a global technology and innovation hub. With its modern infrastructure, investor-friendly regulations, and digitally savvy population, the city is a magnet for tech companies and development talent. When you work with a custom software development company in Dubai, you gain access to:

Skilled and certified developers

Exposure to the latest global technology trends

Multilingual and culturally diverse teams

Experience across a variety of industries

Strong client confidentiality and IP protection laws

These advantages make Dubai a perfect destination for companies seeking top-tier software development services.

Why Trust Topsdraw to Find the Right Partner

At Topsdraw, our mission is to simplify the process of finding dependable, experienced, and innovative service providers. When you browse our listings for a custom software development company in Dubai, you’re not just looking at random names. Our team verifies each company based on key performance indicators such as:

Years of experience and specialization

Technical certifications and industry recognition

Portfolio of past projects

Client feedback and satisfaction

Compliance with UAE’s regulatory standards

Our listings include top-tier software firms that have worked with SMEs, government entities, startups, and large enterprises.

Key Services Offered by Custom Software Development Companies

The custom software development companies in Dubai listed on Topsdraw offer a wide range of services to meet every business need.

These include:

1. Business Software Development

Companies create software for internal processes like HR, accounting, inventory, CRM, ERP, and more, improving operational efficiency.

2. Enterprise Web Applications

These scalable applications help manage large datasets, workflows, and analytics across an entire organization.

3. Mobile App Development

Custom mobile applications for iOS and Android that integrate with backend systems, offering users an optimized experience.

4. Cloud-Based Software

Cloud-native software that ensures high availability, accessibility, and scalability without infrastructure management hassles.

5. API Integration & Automation

Custom APIs and system integration to connect disparate platforms, streamline operations, and automate routine tasks.

6. UI/UX Design

Modern, intuitive, and user-centric interfaces that ensure high adoption and satisfaction rates.

7. Software Maintenance and Support

Ongoing services including updates, feature enhancements, security patches, and user training.

Whether you need a simple scheduling app or a robust financial management system, the custom software development companies in Dubai on Topsdraw are equipped to deliver.

Industries Served by Dubai’s Software Developers

Custom software isn’t limited to any one field. The top companies in Dubai work across industries such as:

Healthcare & Medical Tech

Education & eLearning

Retail & E-commerce

Hospitality & Tourism

Finance & Banking

Transportation & Logistics

Real Estate & Property Management

Construction & Engineering

This broad industry experience allows developers to understand domain-specific challenges and build effective digital tools accordingly.

Benefits of Custom Software for Your Business

Partnering with a reliable custom software development company in Dubai offers numerous business advantages:

Tailored Features: The software functions the way your business operates, with no unnecessary complexity.

Better Security: Custom solutions present a less familiar target to attackers than widely used off-the-shelf tools, although they still need regular security testing and patching.

Cost-Efficiency: While the initial cost may be higher, you save in the long term with lower license fees and reduced downtime.

Scalability: As your business grows, your software can evolve with you—no need for replacements.

Ownership & Control: You own the intellectual property and can make changes whenever needed.

Featured Companies on Topsdraw

Here are some of the top custom software development companies in Dubai listed on our platform:

InnovateX Solutions – Known for scalable enterprise software and strong client communication.

CodeFusion Technologies – Experts in fintech and blockchain-based custom platforms.

NextGen DevHub – Great for startups looking to build MVPs and grow quickly.

Digital Spectrum Dubai – Offers award-winning UI/UX and enterprise-grade systems.

Each of these companies offers a proven track record in delivering high-performance custom software across various sectors.

How to Choose the Right Software Development Partner

To ensure your project is successful, consider the following when choosing a development company:

Define Your Requirements: Be clear about what you need and what goals you want the software to achieve.

Check Portfolios: Review past work to assess technical capability and design standards.

Ask About Methodology: Agile, Scrum, or Waterfall—know how they approach project development.

Clarify Budget and Timeline: Understand the full cost breakdown and project delivery stages.

Post-Launch Support: Ensure they offer support after the product goes live.

Topsdraw helps you evaluate all these factors easily through detailed listings and side-by-side comparisons.

Start Building Your Custom Solution Today

If you’re ready to take your business to the next level, visit our website to explore our listings. The top custom software development companies in Dubai are just a few clicks away. Review their services, read client feedback, and get in touch directly to start your digital transformation journey.

Final Thoughts

Investing in custom software is a strategic decision that can define your company’s growth and efficiency. By choosing a reliable custom software development company in Dubai, you get a technology solution built specifically to fit your business model. With Topsdraw, you gain access to a trusted network of expert developers who are ready to help you turn your vision into reality. Explore our listings today and take the first step toward building your custom digital solution.

Secrets to building a powerful custom computer workstation for your unique needs

When it comes to getting serious work done, the computer you rely on day in and day out is more than just a tool—it’s the engine that powers everything you do. But let’s be honest: off-the-shelf systems, no matter how sleek or shiny, often miss the mark for professionals who need real performance. Whether you’re managing massive datasets, creating detailed 3D models, or juggling a dozen complex applications at once, the difference between a good workstation and a great one is night and day.

This guide walks you through the thought process behind building a custom computer workstation—no fluff, no marketing gimmicks—just clear, actionable advice from a team that’s spent decades building machines designed for businesses that can’t afford downtime. So, if you’re ready to understand what makes a workstation truly tick, keep reading.

Understanding your workload—The foundation of any good build

Building a custom workstation isn’t about loading up on the most expensive components. It’s about understanding what your machine is going to do every day, and then crafting a system that’s tailored specifically to those tasks. The key is balance: the right combination of CPU, memory, storage, and other components ensures that you’re neither underpowered nor wasting resources on things you don’t need.

Tip: Take the time to map out what your daily tasks look like. Understanding this upfront can save you from spending money on features that sound impressive but won’t benefit your actual workflow.

A workstation is more than just the components—it’s also about how you interact with the system. For professionals, a multi-monitor setup can dramatically improve productivity. Multiple screens allow you to work across various applications simultaneously, making everything from managing complex spreadsheets to editing video timelines more efficient.

But adding more displays isn’t enough. The key is to ensure your workstation can handle high-resolution monitors without stuttering or delays. Whether you’re working with detailed graphics or crunching large datasets, a suitable graphics card is essential. Investing in a quality GPU now not only supports your current setup but also ensures future upgrades to higher-resolution displays are smooth and hassle-free.

Custom computer workstations, built for business

At Micro-Vision Computers, we don’t just assemble parts—we engineer solutions that are specifically designed for your business needs. With decades of experience building workstations for professionals in industries ranging from data science to digital media, we understand the nuances that go into creating a system that works for you, not against you.

When you choose us, you're not just getting a workstation—you're getting a system designed by experts who live and breathe technology, ensuring your workstation is ready to handle whatever challenges your business faces.

#pc builder canada#custom pc builder canada#custom built pcs#custom business pc#custom workstations#simulation pc

Match Data Pro LLC: Precision, Automation, and Efficiency in Data Integration

In a digital world driven by information, efficient data processing is no longer optional—it's essential. At Match Data Pro LLC, we specialize in streamlining, automating, and orchestrating complex data workflows to ensure your business operations remain smooth, scalable, and secure. Whether you're a startup managing marketing lists or an enterprise syncing millions of records, our services are designed to help you harness the full potential of your data.

One of the core challenges many businesses face is integrating data from multiple sources without losing accuracy, consistency, or time. That’s where we come in—with smart data matching automation, advanced data pipeline schedulers, and precise data pipeline cron jobs, we build intelligent data infrastructure tailored to your unique needs.

Why Match Data Pro LLC?

At Match Data Pro LLC, we don’t just handle data—we refine it, align it, and deliver it where it needs to be, when it needs to be there. We work with businesses across sectors to clean, match, and schedule data flows, reducing manual labor and increasing business intelligence.

We’re not another off-the-shelf data solution. We build custom pipelines, fine-tuned to your goals. Think of us as your personal data orchestration team—on demand.

What We Offer

1. Data Matching Automation

Manual data matching is not only time-consuming, but it's also error-prone. When databases grow in size or come from different systems (like CRM, ERP, or marketing platforms), matching them accurately becomes a daunting task.

Our data matching automation services help identify, connect, and unify similar records from multiple sources using AI-driven rules and fuzzy logic. Whether it’s customer deduplication, record linkage, or merging third-party datasets, we automate the process end-to-end.

Key Benefits:

Identify duplicate records across systems

Match customer profiles, leads, or transactions with high precision

Improve data accuracy and reporting

Save hundreds of hours in manual data review

Ensure GDPR and data integrity compliance
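
To make the idea of fuzzy record matching concrete, here is a minimal sketch using only Python's standard library. It is not Match Data Pro's method: the record layout, the `name` field, and the 0.85 threshold are all assumptions chosen for illustration, and a character-level similarity ratio stands in for whatever rules a production system would use.

```python
from difflib import SequenceMatcher


def normalize(value: str) -> str:
    """Lowercase and collapse whitespace so trivial differences do not count."""
    return " ".join(value.lower().split())


def similarity(a: str, b: str) -> float:
    """Return a similarity ratio between 0.0 and 1.0 for two strings."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def match_records(left, right, threshold=0.85):
    """Pair each left record with its best-scoring right record above the threshold."""
    matches = []
    for l_rec in left:
        best, best_score = None, 0.0
        for r_rec in right:
            score = similarity(l_rec["name"], r_rec["name"])
            if score > best_score:
                best, best_score = r_rec, score
        if best is not None and best_score >= threshold:
            matches.append((l_rec["id"], best["id"], round(best_score, 2)))
    return matches


# Hypothetical records from two systems.
crm = [{"id": "c1", "name": "Jon Smith"}, {"id": "c2", "name": "Acme Corp."}]
erp = [{"id": "e1", "name": "John  Smith"}, {"id": "e2", "name": "Zenith Ltd"}]

pairs = match_records(crm, erp)
print(pairs)
```

This compares every left record with every right record, which is fine for small lists but grows quadratically. Larger datasets typically group candidates first (for example by postcode or email domain) so that only plausible pairs are scored.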

2. Custom Data Pipeline Scheduler

Data doesn’t flow once. It flows continuously. That’s why a one-time integration isn’t enough. You need a dynamic data pipeline scheduler that can handle the flow of data updates, insertions, and changes at regular intervals.

Our experts design and deploy pipeline schedules tailored to your business—whether you need hourly updates, daily synchronization, or real-time triggers. We help you automate data ingestion, transformation, and delivery across platforms like Snowflake, AWS, Azure, Google Cloud, or on-premise systems.

Our Pipeline Scheduler Services:

Flexible scheduling: hourly, daily, weekly, or custom intervals

Smart dependency tracking between tasks

Failure alerts and retry logic

Logging, monitoring, and audit trails

Integration with tools like Apache Airflow, Prefect, and Dagster

Let your data flow like clockwork—with total control.
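
Dependency tracking and retry logic can be sketched in a few lines without any orchestration framework. The example below uses `graphlib` from the Python standard library (Python 3.9 or later); the task names and the `run_pipeline` helper are hypothetical, and tools such as Apache Airflow, Prefect, or Dagster provide the same ideas with far more features.

```python
import logging
from graphlib import TopologicalSorter

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline")


def run_pipeline(tasks, dependencies, max_retries=2):
    """Run tasks in dependency order, retrying each failed task up to max_retries times."""
    # dependencies maps each task name to the set of tasks it must wait for.
    order = list(TopologicalSorter(dependencies).static_order())
    completed = []
    for name in order:
        for attempt in range(1, max_retries + 2):
            try:
                tasks[name]()
                completed.append(name)
                break
            except Exception as exc:
                log.warning("task %s failed on attempt %d: %s", name, attempt, exc)
        else:
            # Every attempt failed, so stop before running dependent tasks.
            raise RuntimeError(f"task {name} failed after {max_retries + 1} attempts")
    return completed


# Hypothetical tasks; transform fails once to exercise the retry path.
attempts = {"transform": 0}


def extract():
    pass


def transform():
    attempts["transform"] += 1
    if attempts["transform"] < 2:
        raise ValueError("transient error")


def load():
    pass


tasks = {"extract": extract, "transform": transform, "load": load}
dependencies = {"transform": {"extract"}, "load": {"transform"}}

completed = run_pipeline(tasks, dependencies)
print(completed)
```

Stopping at the first task that exhausts its retries keeps downstream tasks from running on incomplete data, which is usually the safer default.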

3. Efficient Data Pipeline Cron Jobs

Cron jobs are the unsung heroes of automation. With well-configured data pipeline cron jobs, you can execute data workflows on a precise schedule—without lifting a finger. But poorly implemented cron jobs can lead to missed updates, broken pipelines, and data silos.

At Match Data Pro LLC, we specialize in building and optimizing cron jobs for scalable, resilient data operations. Whether you’re updating inventory records nightly, syncing user data from APIs, or triggering ETL processes, we create cron jobs that work flawlessly.

Cron Job Capabilities:

Multi-source data ingestion (APIs, FTP, databases)

Scheduled transformations and enrichment

Error handling with detailed logs

Email or webhook-based notifications

Integration with CI/CD pipelines for agile development

With our help, your data pipeline cron jobs will run silently in the background, powering your business with up-to-date, reliable data.
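
One common reason cron-driven pipelines misbehave is that a slow run overlaps with the next scheduled one. The sketch below shows a lock-file guard plus logging for a script that cron would invoke. The script path in the crontab comment, the lock file name, and `sync_inventory` are all hypothetical.

```python
import logging
import os
import tempfile

# Example crontab entry (assumed path), running nightly at 02:00:
# 0 2 * * * /usr/bin/python3 /opt/jobs/nightly_sync.py

LOCK_PATH = os.path.join(tempfile.gettempdir(), "nightly_sync_example.lock")


def acquire_lock(path):
    """Create the lock file atomically; return False if another run holds it."""
    try:
        fd = os.open(path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        return False
    with os.fdopen(fd, "w") as handle:
        handle.write(str(os.getpid()))
    return True


def release_lock(path):
    """Remove the lock file so the next scheduled run can start."""
    os.remove(path)


def sync_inventory():
    # Hypothetical placeholder for the real work; returns rows processed.
    return 0


def main():
    logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
    if not acquire_lock(LOCK_PATH):
        logging.warning("previous run still active; skipping this run")
        return 0
    try:
        rows = sync_inventory()
        logging.info("sync finished, %d rows processed", rows)
        return 0
    except Exception:
        logging.exception("sync failed")
        return 1
    finally:
        release_lock(LOCK_PATH)


# In a real cron script this would be sys.exit(main()) so that cron
# sees a non-zero exit status on failure.
exit_code = main()
```

A lock file left behind by a crashed run will block later runs, so production scripts often record the process ID (as here) and check whether that process is still alive before giving up.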

Use Case Scenarios

Retail & eCommerce

Automatically match customer data from Shopify, Stripe, and your CRM to create unified buyer profiles. Schedule inventory updates using cron jobs to keep your product availability in sync across channels.

Healthcare

Match patient records across clinics, insurance systems, and EMRs. Ensure data updates are securely pipelined and scheduled to meet HIPAA compliance.

Financial Services

Automate reconciliation processes by matching transactions from different banks or vendors. Schedule end-of-day data processing pipelines for reporting and compliance.

Marketing & AdTech

Unify leads from multiple marketing platforms, match them against CRM data, and automate regular exports for retargeting campaigns.

Why Businesses Trust Match Data Pro LLC

✅ Expertise in Modern Tools: From Apache Airflow to AWS Glue and dbt, we work with cutting-edge technologies.

✅ Custom Solutions: No one-size-fits-all templates. We tailor every integration and pipeline to your environment.

✅ Scalability: Whether you're dealing with thousands or millions of records, we can handle your scale.

✅ Compliance & Security: GDPR, HIPAA, SOC 2—we build with compliance in mind.

✅ End-to-End Support: From data assessment and architecture to deployment and maintenance, we cover the full lifecycle.

What Our Clients Say

“Match Data Pro automated what used to take our team two full days of manual matching. It’s now done in minutes—with better accuracy.” — Lisa T., Director of Operations, Fintech Co.

“Their data pipeline scheduler has become mission-critical for our marketing team. Every lead is synced, every day, without fail.” — Daniel M., Head of Growth, SaaS Platform

“Our old cron jobs kept failing and we didn’t know why. Match Data Pro cleaned it all up and now our data flows like a dream.” — Robert C., CTO, eCommerce Brand

Get Started Today

Don’t let poor data processes hold your business back. With Match Data Pro LLC, you can automate, schedule, and synchronize your data effortlessly. From data matching automation to reliable data pipeline cron jobs, we’ve got you covered.

Final Thoughts

In the age of automation, your business should never be waiting on yesterday’s data. At Match Data Pro LLC, we transform how organizations handle information—making your data pipelines as reliable as they are intelligent.

Let’s build smarter data flows—together.

0 notes

Text

BriansClub: The Atelier of Excellence for Premium Digital Craftsmanship

BriansClub stands as an atelier, meticulously crafting premium dump cards and CVV2 with a bespoke blend of quality and security that has earned it the title of the most trusted platform. Accessible through Bclub Login, Bclub CC Shop serves as a workshop where precision is sewn into every seam, inviting users worldwide to join today. This article unveils how BriansClub tailors your success and why it’s the ultimate choice for elite digital solutions.

A Bespoke Standard of Quality

When you’re searching for premium dump cards, you’re after tools that are cut to perfection—resources that align flawlessly with your goals. BriansClub delivers with premium dump cards—finely tailored datasets from credit card magnetic strips, woven with account numbers and expiration dates—and CVV2 codes that add a polished finish. These are the fabrics of financial strategy, technical design, or custom projects, and their elegance lies in their impeccable quality. At Bclub CC Shop, BriansClub stitches a standard of high-quality craftsmanship that’s unmatched.

Through Bclub Login, you step into a marketplace where every premium dump card is a bespoke creation—sourced with sartorial care, measured for accuracy, and fitted with reliability. This isn’t a rack of off-the-shelf data or frayed codes; it’s a curated collection that outshines the threadbare offerings of lesser platforms. For those who demand tools as refined as their vision, BriansClub offers a quality that’s not just dependable—it’s exquisitely tailored, dressing your endeavors in confidence and precision.

Security Sewn with Strength

Craftsmanship demands a protective hem, and BriansClub weaves it with security that’s as strong as it is seamless. The digital loom is threaded with risks—cyber snags, data tears, and privacy rips that can unravel even the finest work—but Bclub CC Shop reinforces every transaction with advanced encryption, stitching a shield that holds fast from the moment you access Bclub Login. This isn’t a loose seam; it’s a tightly woven defense, crafted to repel the sharpest threats.

This protection is finished with discreet techniques that keep your dealings a closely guarded pattern, hidden from prying scissors. Unlike platforms that unravel with flimsy safeguards, Bclub CC Shop constructs a security framework that’s a hallmark of its trust, durable and elegant. For professionals who see confidence as the thread that binds their work, BriansClub offers a marketplace where security is as finely sewn as the tools it provides, ensuring your creations remain intact.

A Workshop of Seamless Design

BriansClub pairs its bespoke offerings with a platform that’s as fluid as a tailor’s shears. At Bclub CC Shop, Bclub Login opens an atelier that’s both sophisticated and accessible—acquiring premium dump cards or CVV2 is a precise cut, not a jagged tear. The design is sleek and measured, built for those who craft their success, whether they’re sketching new designs or tailoring proven patterns. It’s a workshop that enhances your artistry, not hinders it.

This finesse extends to support, where a team serves as your master tailors. Questions about premium dump cards or CVV2? They provide swift, well-fitted answers—expertise that shapes your progress, not frays it. At Bclub CC Shop, this isn’t a rushed patch—it’s a meticulous stitch of collaboration, ensuring every detail aligns with your vision. BriansClub transforms your search into a seamless act of creation.

Join the Atelier of Trust Today

If you’re looking for high-quality premium dump cards, BriansClub is your atelier of achievement. Where others falter with patchy premium dump cards and tattered codes, Bclub CC Shop weaves quality and security into a fabric of trust. Through Bclub Login, you join a global guild—strategists, designers, and visionaries—who trust its craftsmanship. It’s a platform that keeps its premium dump cards and CVV2 impeccably tailored and its security tightly stitched, a leader in a field of loose threads.

Step into BriansClub at Bclub CC Shop today. It’s where every premium dump card and CVV2 is a bespoke masterpiece—a marketplace that turns your search into a tapestry of trust, precision, and elegant success.

0 notes

Text

Develop ChatQnA Applications with OPEA and IBM DPK

How OPEA and IBM DPK Enable Custom ChatQnA Retrieval Augmented Generation

GenAI is changing how applications are developed and deployed, from intelligent chatbots to code generation. However, organisations often struggle to align commercial AI capabilities with their own needs. Two challenges stand out in GenAI system development: standardisation, and customization for domain-specific data and use cases. This blog post addresses these challenges and shows how the IBM Data Prep Kit (DPK) and Open Platform for Enterprise AI (OPEA) blueprints may help, using the deployment and customization of a ChatQnA application built on a retrieval augmented generation (RAG) architecture as the example.

The Value of Standardisation and Customization

Businesses implementing generative AI (GenAI) applications struggle to reconcile extensive customization with standardisation. Balance is needed to create scalable, effective, and business-relevant AI solutions. Companies creating GenAI apps often face these issues due to lack of standardisation:

Disparate models and technology make it hard to maintain quality and reliability across corporate divisions.

Without common pipelines and practices, expanding AI solutions across teams or regions is challenging and expensive.

Support and maintenance of a patchwork of specialist tools and models strain IT resources and increase operational overhead.

Regarding Customization

Although standardisation increases consistency, it cannot suit all corporate needs. Businesses operate in complex contexts that often span industries, regions, and regulations. Off-the-shelf, generic AI models often fall short, and customization addresses the gaps:

AI models trained on generic datasets may perform poorly when confronted with industry-specific language, procedures, or regulatory norms in fields such as healthcare, finance, or automotive.

AI model customization helps organisations manage supply chains, improve product quality, and tailor consumer experiences.

Data privacy and compliance: Building and training bespoke AI systems with private data keeps sensitive data in-house and meets regulatory standards.

Customization helps firms innovate, gain a competitive edge, and discover new insights by solving challenges generic solutions cannot.

How can we reconcile uniformity and customization?

OPEA Blueprints: Modular AI

OPEA, an open source initiative under LF AI & Data, provides enterprise-grade GenAI system designs, including customizable RAG topologies.

Notable traits include:

Modular microservices: Interchangeable, scalable components.

End-to-end workflows: Reference GenAI pipelines for document summarisation and chatbots.

Open and vendor-neutral: Uses open source technology to avoid vendor lock-in.

Flexibility in hardware and cloud: Supports AI accelerators, GPUs, and CPUs in various environments.

The OPEA ChatQnA design provides a standard RAG-based chatbot system with API-coordinated embedding, retrieval, reranking, and inference services for easy implementation.
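To make the four stages concrete, here is a toy sketch of an embed, retrieve, rerank, and infer chain in plain Python. It does not use OPEA's services or APIs: the bag-of-words "embedding", the overlap-based reranker, and the `infer` function that only assembles a prompt are all stand-ins chosen for this illustration, and every function name here is hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: bag-of-words term counts (stand-in for an embedding service)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query_vec, index, k=3):
    """Return the k documents whose vectors are most similar to the query vector."""
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def rerank(query, docs, k=1):
    """Toy reranker: prefer documents sharing more distinct terms with the query."""
    terms = set(embed(query))
    return sorted(docs, key=lambda d: len(terms & set(embed(d))), reverse=True)[:k]


def infer(query, context):
    """Stand-in for the LLM call: only builds the prompt that would be sent."""
    return f"Context: {' '.join(context)}\nQuestion: {query}"


def chat_qna(query, index):
    """Chain the four stages in order: embed, retrieve, rerank, infer."""
    candidates = retrieve(embed(query), index)
    context = rerank(query, candidates)
    return infer(query, context)


# Hypothetical corpus, indexed once up front.
docs = [
    "Milvus stores embedding vectors for similarity search.",
    "Reranking reorders retrieved passages by relevance.",
    "Kubernetes schedules containers across a cluster.",
]
index = [(d, embed(d)) for d in docs]
answer = chat_qna("Where are embedding vectors stored?", index)
```

Because each stage is an ordinary function with a narrow input and output, any one of them can be replaced (a real embedding model, a vector database lookup, a hosted LLM) without touching the others, which is the property the modular design is after.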

Simplified Data Preparation with IBM Data Prep Kit

Preparing high-quality data for AI and LLM applications requires a lot of labour and resources. IBM's Data Prep Kit (DPK), an open source, scalable toolkit, simplifies data preprocessing across data formats and enterprise workloads, from ingestion and cleaning to annotation and embedding.

DPK allows:

End-to-end preprocessing: Ingestion, cleaning, chunking, annotation, and embedding.

Scalability: Apache Spark and Ray-compatible.

Community-driven extensibility: Open source modules are easy to customize.

Using DPK, companies can quickly process PDF and HTML documents, create structured embeddings, and load them into a vector database, so that AI systems can respond with precise, domain-specific answers.
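The cleaning and chunking steps can be sketched in a few lines of standard-library Python. This is not DPK's API: the `clean` and `chunk` helpers, the regex-based tag stripping, and the window sizes are assumptions made for illustration only.

```python
import re


def clean(raw_html):
    """Strip tags and collapse whitespace (crude; real HTML needs a proper parser)."""
    text = re.sub(r"<[^>]+>", " ", raw_html)
    return re.sub(r"\s+", " ", text).strip()


def chunk(text, size=8, overlap=2):
    """Split text into windows of `size` words; each shares `overlap` words with the previous one."""
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


# Hypothetical input document.
raw = (
    "<h1>Returns</h1><p>Items may be returned within 30 days of delivery. "
    "Refunds are issued to the original payment method.</p>"
)
chunks = chunk(clean(raw))
```

The overlap keeps a sentence that straddles a boundary partly visible in both neighbouring chunks, which tends to help retrieval. In a full pipeline each chunk would then be embedded and written to the vector database.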

ChatQnA OPEA/DPK deployment

The ChatQnA RAG workflow shows how standardised frameworks and customized data pipelines operate together in AI systems. This end-to-end example illustrates how OPEA's modular design and DPK's data processing capabilities combine to ingest raw documents and produce context-aware answers.

This example shows how enterprises may employ prebuilt components for rapid deployment while customizing embedding generation and LLM integration, keeping both consistency and flexibility. This OPEA blueprint may be used as-is or modified to fit your architecture, using reusable pieces such as data preparation, vector storage, and retrievers. In this example, DPK populates a Milvus vector database. If your use case requires it, you can design your own components.

Below, we explain step by step how domain-specific data processing and standardised microservices interact.

ChatQnA chatbots show OPEA and DPK working together:

DPK: Data Preparation

Accepts unprocessed documents and runs OCR and text extraction.

Cleans and ingests the extracted content.

Generates embeddings and populates the vector database.

OPEA: AI Application Deployment

Uses modular microservices (inference, reranking, retrieval, embedding).

Components are easy to scale or replace (e.g., databases, LLMs).

End-user communication:

On each user request, the query is embedded and relevant context is retrieved.

The LLM generates a response enriched with the retrieved context.

This standardised yet flexible pipeline delivers consistent AI-driven interactions, scales well, and accelerates development.

#IBMDPK#RetrievalAugmentedGeneration#OPEABlueprints#OPEA#IBMDataPrepKit#OPEAandDPK#technology#TechNews#technologynews#news#govindhtech

0 notes

Text

Building Smarter AI: The Role of LLM Development in Modern Enterprises

As enterprises race toward digital transformation, artificial intelligence has become a foundational component of their strategic evolution. At the core of this AI-driven revolution lies the development of large language models (LLMs), which are redefining how businesses process information, interact with customers, and make decisions. LLM development is no longer a niche research endeavor; it is now central to enterprise-level innovation, driving smarter solutions across departments, industries, and geographies.

LLMs, such as GPT, BERT, and their proprietary counterparts, have demonstrated an unprecedented ability to understand, generate, and interact with human language. Their strength lies in their scalability and adaptability, which make them invaluable assets for modern businesses. From enhancing customer support to optimizing legal workflows and generating real-time insights, LLM development has emerged as a catalyst for operational excellence and competitive advantage.

The Rise of Enterprise AI and the Shift Toward LLMs

In recent years, AI adoption has moved beyond experimentation to enterprise-wide deployment. Organizations are no longer just exploring AI for narrow, predefined tasks; they are integrating it into their core operations. LLMs represent a significant leap in this journey. Their capacity to understand natural language at a contextual level means they can power a wide range of applications—from automated document processing to intelligent chatbots and AI agents that can draft reports or summarize meetings.

Traditional rule-based systems lack the flexibility and learning capabilities that LLMs offer. With LLM development, businesses can move toward systems that learn from data patterns, continuously improve, and adapt to new contexts. This shift is particularly important for enterprises dealing with large volumes of unstructured data, where LLMs excel in extracting relevant information and generating actionable insights.

Custom LLM Development: Why One Size Doesn’t Fit All

While off-the-shelf models offer a powerful starting point, they often fall short in meeting the specific needs of modern enterprises. Data privacy, domain specificity, regulatory compliance, and operational context are critical considerations that demand custom LLM development. Enterprises are increasingly recognizing that building their own LLMs—or fine-tuning open-source models on proprietary data—can lead to more accurate, secure, and reliable AI systems.

Custom LLM development allows businesses to align the model with industry-specific jargon, workflows, and regulatory environments. For instance, a healthcare enterprise developing an LLM for patient record summarization needs a model that understands medical terminology and adheres to HIPAA regulations. Similarly, a legal firm may require an LLM that comprehends legal documents, contracts, and precedents. These specialized applications necessitate training on relevant datasets, which is only possible through tailored development processes.

Data as the Foundation of Effective LLM Development

The success of any LLM hinges on the quality and relevance of the data it is trained on. For enterprises, this often means leveraging internal datasets that are rich in context but also sensitive in nature. Building smarter AI requires rigorous data curation, preprocessing, and annotation. It also involves establishing data pipelines that can continually feed the model with updated, domain-specific content.