#RetrievalAugmentedGeneration


Photo

Contextual Language Models for Enterprise AI: A Revolution in Reliable Intelligence

#AIhallucinationprevention#contextualAI#data-accurateAI#enterpriseAImodels#RAGtechnology#RetrievalAugmentedGeneration#SwissAItrends


Text

Develop ChatQnA Applications with OPEA and IBM DPK

How OPEA and IBM DPK Enable Custom ChatQnA Retrieval Augmented Generation

GenAI is changing how applications are developed and deployed, from intelligent chatbots to code generation. However, organisations often struggle to match commercial AI capabilities with their own corporate needs. Two key challenges in building GenAI systems are standardisation and customization to accommodate domain-specific data and use cases. This blog post addresses these challenges and shows how the IBM Data Prep Kit (DPK) and Open Platform for Enterprise AI (OPEA) blueprints can help. Deploying and customizing a ChatQnA application built on a retrieval augmented generation (RAG) architecture shows how OPEA and DPK work together.

The Value of Standardisation and Customization

Businesses implementing generative AI (GenAI) applications struggle to reconcile extensive customization with standardisation. A balance is needed to create scalable, effective, and business-relevant AI solutions. Without standardisation, companies building GenAI apps often face these issues:

Disparate models and technologies make it hard to maintain quality and reliability across corporate divisions.

Without common pipelines and practices, scaling AI solutions across teams or regions is challenging and expensive.

Supporting and maintaining a patchwork of specialist tools and models strains IT resources and increases operational overhead.

Regarding Customization

Although standardisation increases consistency, it cannot suit all corporate needs. Businesses operate in complex contexts that often span industries, regions, and regulations. Off-the-shelf, generic AI models often fall short, and customization helps in several ways:

AI models trained on generic datasets may perform badly when confronted with industry-specific language, procedures, or regulatory norms in fields such as healthcare, finance, or automotive.

AI model customization helps organisations manage supply chains, improve product quality, and tailor consumer experiences.

Data privacy and compliance: Building and training bespoke AI systems with private data keeps sensitive data in-house and meets regulatory standards.

Customization helps firms innovate, gain a competitive edge, and discover new insights by solving challenges that generic solutions cannot.

How can we reconcile uniformity and customization?

OPEA Blueprints: Modular AI

OPEA, an open source initiative under LF AI & Data, provides enterprise-grade GenAI system designs, including customizable RAG topologies.

Notable traits include:

Modular microservices: Interchangeable, scalable components.

End-to-end workflows: GenAI reference patterns for document summarisation and chatbots.

Open and vendor-neutral: Uses open source technology to avoid vendor lock-in.

Flexibility in hardware and cloud: Supports AI accelerators, GPUs, and CPUs in a variety of environments.

The OPEA ChatQnA design provides a standard RAG-based chatbot system with API-coordinated embedding, retrieval, reranking, and inference services for easy implementation.

Simplified Data Preparation with IBM Data Prep Kit

Preparing high-quality data for AI and LLM applications takes a lot of labour and resources. IBM's Data Prep Kit (DPK), an open source, scalable toolkit, simplifies data preprocessing across data formats and enterprise workloads, from ingestion and cleaning to annotation and embedding.

DPK allows:

End-to-end preprocessing: Ingestion, cleaning, chunking, annotation, and embedding.

Scalability: Compatible with Apache Spark and Ray.

Community-driven extensibility: Open source modules are easy to customize.

With DPK, companies can quickly process PDFs and HTML to create structured embeddings and add them to a vector database. AI systems can then respond precisely and with domain-specific knowledge.

Deploying ChatQnA with OPEA and DPK

The ChatQnA RAG workflow shows how standardised frameworks and customized data pipelines operate together in AI systems. This end-to-end example illustrates how OPEA's modular design and DPK's data processing capabilities work together to ingest raw documents and produce context-aware answers.

The example shows how enterprises can use prebuilt components for rapid deployment while customizing embedding generation and LLM integration, keeping both consistency and flexibility. The OPEA blueprint can be used as-is or adapted to your architecture using reusable pieces such as data preparation, vector storage, and retrievers. In this example, DPK loads the records into a Milvus vector database. If your use case requires it, you can design your own components.

Below, we explain step by step how domain-specific data processing and standardised microservices interact.

ChatQnA chatbots show OPEA and DPK working together:

DPK: Data Preparation

Ingests raw documents and performs OCR and extraction.

Cleans and processes the extracted content.

Generates embeddings and populates the vector database.

OPEA—AI Application Deployment:

Uses modular microservices (inference, reranking, retrieval, embedding).

Components are easy to scale or swap (e.g., databases, LLMs).

End-user communication:

The user's query is embedded and relevant context is retrieved.

The LLM generates a response augmented with the retrieved context.

This standardised yet flexible pipeline supports consistent AI-driven interactions, scales well, and accelerates development.
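The flow described above (prepare data, store embeddings, retrieve context, build the LLM prompt) can be sketched in a few lines. This is a minimal illustration only, not the DPK or OPEA API: the `chunk`, `embed`, `InMemoryVectorStore`, and `build_prompt` names are hypothetical, the "embedding" is a toy bag-of-words count standing in for a real embedding model, and the in-memory list stands in for a vector database such as Milvus.

```python
import math
import re
from collections import Counter


def chunk(text, max_words=40):
    """Split text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Toy embedding: lowercase word counts (assumption, stands in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database."""

    def __init__(self):
        self.records = []

    def add(self, text):
        self.records.append((embed(text), text))

    def search(self, query, top_k=2):
        q = embed(query)
        ranked = sorted(self.records, key=lambda r: cosine(q, r[0]), reverse=True)
        return [text for _, text in ranked[:top_k]]


def build_prompt(question, contexts):
    """Assemble the prompt that would be sent to the LLM inference service."""
    joined = "\n".join(f"- {c}" for c in contexts)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


# Data preparation side: chunk, embed, and load the documents.
store = InMemoryVectorStore()
docs = [
    "Invoices must be approved by a manager before payment is released.",
    "The cafeteria opens at eight and closes at three on weekdays.",
]
for doc in docs:
    for piece in chunk(doc):
        store.add(piece)

# Application side: embed the query, retrieve context, build the prompt.
question = "Who must approve invoices before payment?"
top = store.search(question, top_k=1)
prompt = build_prompt(question, top)
```

In a real deployment each of these functions would be a separate service, which is what makes it possible to swap the embedding model or the database without touching the rest.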

#IBMDPK#RetrievalAugmentedGeneration#OPEABlueprints#OPEA#IBMDataPrepKit#OPEAandDPK#technology#TechNews#technologynews#news#govindhtech


Photo

KIOXIA Revolutionizes AI Applications with Flash Memory and SSD Solutions at NVIDIA GTC 2025


Text

Agentic RAG: The next step in AI chat for the content of Digitalarkivet

In the previous article we described how we tested an AI-based chat solution built on RAG (Retrieval Augmented Generation). That solution gave us useful experience in combining generative AI with our own archive material and with guides, help texts, and other supporting material. The experience has shown that RAG is a good starting point, but that we quickly run into challenges when we try to cover more needs than traditional search or simple AI answers can handle.

Agentic RAG

We have taken a step further by trying out an "agentic RAG", an extension of the traditional RAG approach. Whereas an ordinary RAG solution mainly retrieves information and answers the user's question directly from it, an agentic RAG acts more like an independent "agent" that dynamically adjusts its own work processes. It can, for example, validate answers, suggest more precise questions, and change search strategies when the results are not good enough. Going forward we want to implement even more autonomy in the choice of functions and strategies based on a goal set in the solution, but this is a real start.

What is an agent, and what is agentic RAG?

Put simply, an agent is a system that observes an environment, a situation, or a set of parameters and has been given a goal. Based on its observations, it takes actions to achieve that goal. The agent assesses the effect of its actions, adjusts its strategy, and continues toward the goal.
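The observe, act, and re-assess cycle can be illustrated with a deliberately tiny sketch. Everything here is hypothetical (the `run_agent` function, the `Counter` environment, and the numeric goal are invented for illustration); a real agent would observe search results rather than a number, but the control loop has the same shape.

```python
def run_agent(goal, observe, act, max_steps=20):
    """Observe, act, and re-observe until the goal is reached or the step budget runs out."""
    history = []
    for _ in range(max_steps):
        state = observe()                      # observe the environment
        if state == goal:                      # goal reached: stop acting
            break
        action = 1 if state < goal else -1     # choose an action from the observation
        act(action)                            # the effect is assessed on the next observation
        history.append((state, action))
    return history


class Counter:
    """Hypothetical environment: a single number the agent can nudge up or down."""

    def __init__(self, value):
        self.value = value


env = Counter(3)
history = run_agent(
    goal=6,
    observe=lambda: env.value,
    act=lambda delta: setattr(env, "value", env.value + delta),
)
```

The `max_steps` budget matters in practice: an agent that cannot reach its goal should stop and report rather than loop indefinitely.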

An agentic RAG is essentially an agentic approach to information retrieval tasks, based on an agent architecture. A traditional RAG has a fairly static process for retrieving and presenting information. An agentic RAG means that the AI can decide on its own how to carry out the information retrieval. Instead of fixed steps for chunking, retrieval, and generation, an agentic RAG can dynamically adjust search parameters, revise questions, propose new strategies, use different "tools" (e.g. API calls or functions), and decide when enough context has been gathered to answer satisfactorily.

This is exactly why agentic RAG is so widely discussed at the moment. We and many others have tried to solve RAG with static rules for splitting text into pieces and with searches of varying complexity, and one quickly discovers that reality is messier. The data is not always what one imagined, and the questions can be vague or ambiguous. What is needed then is a system that can operate more flexibly, more exploratively, and with more of a problem-solving approach.

Why do we need this?

Archive knowledge can be complex, and users do not always know which words to search for or where to start. This is where agentic functionality makes a big difference:

Improving questions: Agentic RAG can rewrite and refine the user's questions to make them clearer and more precise.

Combining search strategies: The system uses semantic and hybrid search that finds meaning, not just exact words. It can decide on its own which is appropriate. It can adjust parameters such as similarity and adapt its tactics based on the results.

Dynamic adaptation: If the answers are inadequate, the solution suggests new questions, tries alternative search terms, or broadens the search, all without the user needing to know how.

Quality assurance: The solution validates the answers and suggests clarifications where necessary, so that the user gets the most reliable information possible.

How does the solution work in practice?

When a user asks a question, the system first takes the question, improves it or makes other adjustments, and then uses semantic and hybrid search to retrieve relevant information from the archives. This information is structured so that the AI model can formulate an answer with links to sources. All of this happens without the user needing to know exactly how things work: the system takes care of the process and works actively behind the scenes to deliver the best possible answer, rather than just presenting the first hit that comes along.
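A retrieval loop with fallback strategies, of the kind described here, might look roughly like the sketch below. This is not the authors' implementation: the keyword-overlap search stands in for semantic and hybrid search, and the `SYNONYMS` table, the strategy names, and the sample corpus are all invented for illustration.

```python
def keyword_search(query, corpus, min_overlap):
    """Return documents sharing at least min_overlap words with the query, best first."""
    q = set(query.lower().split())
    hits = []
    for doc in corpus:
        overlap = len(q & set(doc.lower().split()))
        if overlap >= min_overlap:
            hits.append((overlap, doc))
    return [doc for _, doc in sorted(hits, key=lambda h: h[0], reverse=True)]


# Hypothetical rewrite table; a real system might ask an LLM to rephrase instead.
SYNONYMS = {"emigrants": "emigration", "ship": "passenger"}


def rewrite(query):
    """Replace query words with the vocabulary the archive is assumed to use."""
    return " ".join(SYNONYMS.get(w, w) for w in query.lower().split())


def agentic_retrieve(query, corpus, min_hits=1):
    """Try progressively looser strategies until the result passes validation."""
    strategies = [
        ("strict", lambda q: keyword_search(q, corpus, min_overlap=2)),
        ("rewritten", lambda q: keyword_search(rewrite(q), corpus, min_overlap=2)),
        ("broadened", lambda q: keyword_search(rewrite(q), corpus, min_overlap=1)),
    ]
    trace = []
    for name, strategy in strategies:
        hits = strategy(query)
        trace.append(name)
        if len(hits) >= min_hits:   # validation step: are the results good enough?
            return hits, trace
    return [], trace


corpus = [
    "Emigration records list each passenger leaving Bergen",
    "Church books record baptisms and burials",
]
hits, trace = agentic_retrieve("emigrants ship lists", corpus)
```

Returning the `trace` alongside the hits makes it possible to show or log which strategy finally produced the answer, which helps when tuning the fallback order.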

Agentic properties

Goal-based approach: The system has a clear goal: to answer the user's question as accurately as possible. This shows in how it validates answers and uses fallback strategies to improve the results when they are not sufficient.

Adaptive response: When there are few hits, the solution adapts the workflow by using alternative strategies such as rewriting, expanded queries, or follow-up questions. In other words, a certain degree of dynamic decision-making.

The system integrates different technologies and tools (LLM, Elasticsearch, hybrid search) and selects suitable methods as needed, so it has flexibility in how it solves tasks.

Integrated logic: The agent acts as a coordinator that assembles, validates, and adjusts information from different sources.

Dynamic context management: The system can take earlier conversation turns into account and adapts the next step accordingly.

Fallback optimization: With several iterations and alternative strategies, the likelihood increases that the user gets a satisfactory answer.

Proof of concept and the road ahead

This solution is at a trial stage and has not been released as a beta version. There are many possibilities for extension, and the basic structure itself needs to be improved. The most important extension will be to give the solution even more autonomy in choosing functions and strategies, for example by giving the LLM a description of the goal and the available tools and letting it decide for itself which actions are needed to achieve the goal. This would bring it closer to a solution with real "agency". We are already working on this. Other future extensions could include, for example (with varying degrees of complexity):

Improved validation and reasoning

Dynamic search tuning: Automatically adjust parameters such as temperature, "similarity", and weighting.

Task decomposition: The solution breaks complex questions into subtasks and solves them step by step. For example, for the question "Explain the relationship between A and B", the agent can first retrieve information about A, then B, and then combine the information on its own.

Improved feedback loops: User feedback: Let users give feedback so that the solution can adjust how it behaves over time.

Context revision: Let the user revise earlier messages in the conversation so that the context is updated dynamically.

Integration with knowledge graphs and other data: Build a simple knowledge graph that tracks entities, relationships, and topics across conversations and suggests relevant, connected information. This also covers integration with archive data and archive knowledge, for example by having the system adapt which archives it uses based on the question.

In summary, we would say that agentic RAG is a natural evolution of RAG. By giving the solution the ability to make its own decisions, choose tools, and adapt its strategy along the way, it becomes able to retrieve and convey archive knowledge in a more dynamic and reliable way.

Feel free to contact us at [email protected] if you have feedback or are curious about our work with AI, search, or Digitalarkivet in general.


Text

Accelerating Enterprise AI Development with Retrieval-augmented Generation

Retrieval-augmented generation (RAG) is transforming how companies harness the power of AI. By integrating real-time data retrieval with generative models, businesses can deliver more accurate, context-aware solutions at scale. RAG not only enhances decision-making and personalization but also streamlines the deployment of AI across various industries. This approach accelerates innovation and optimizes resources, making AI more accessible for enterprises. If you're looking to enhance your AI capabilities, now is the time to explore RAG's potential.



Text

🚀 Accelerating Enterprise AI Development with Retrieval-augmented Generation

The future of enterprise AI is here with Retrieval-augmented Generation (RAG). By combining powerful generative AI with relevant data retrieval, companies can build smarter, context-driven applications at scale. From improving customer support with AI-driven responses to enhancing product recommendations, RAG technology is transforming industries. Want to harness AI with greater accuracy and performance? Adopt RAG to boost your enterprise workflows and stay ahead of the competition.

🔗 Learn how your company can accelerate AI development!


#AI#EnterpriseAI#MachineLearning#RetrievalAugmentedGeneration#TechInnovation#Automation#BusinessTech#AIforBusiness#RAG#AITransformation


Video

youtube

🎥 RAG vs Fine-Tuning – Practical Comparison with Voice Prompts on Mobile...

#youtube#RAG FineTuning RetrievalAugmentedGeneration LLMs LanguageModels AIComparison VoicePromptAI MobileAI MachineLearning DeepLearning PromptEngin


Text

The Mistral AI New Model Large-Instruct-2411 On Vertex AI

Introducing Mistral AI's New Model Large-Instruct-2411 on Vertex AI

Mistral AI’s models, Codestral for code generation tasks, Mistral Large 2 for high-complexity tasks, and the lightweight Mistral Nemo for reasoning tasks like creative writing, were made available on Vertex AI in July. Google Cloud has now announced that Mistral AI's newest model is available in Vertex AI Model Garden: Mistral-Large-Instruct-2411 is now publicly available.

Large-Instruct-2411 is an advanced dense large language model (LLM) with 123B parameters that extends its predecessor with improved long-context handling, function calling, and system prompt support. It has strong reasoning, knowledge, and coding capabilities. The model is well suited to use cases such as large-context applications that require strict adherence, for example retrieval-augmented generation (RAG) and code generation, or complex agentic workflows with precise instruction following and JSON outputs.

The new Mistral AI Large-Instruct-2411 model can be deployed on Vertex AI today, either through its Model-as-a-Service (MaaS) offering or as a self-service deployment.

What can you do with the new Mistral AI models on Vertex AI?

Using Mistral’s models to build atop Vertex AI, you can:

Choose the model that best suits your use case: A variety of Mistral AI models are available, including effective models for low-latency requirements and strong models for intricate tasks like agentic processes. Vertex AI simplifies the process of assessing and choosing the best model.

Experiment with confidence: Vertex AI offers fully managed Model-as-a-Service for Mistral AI models. You can explore Mistral AI models through simple API calls and comprehensive side-by-side evaluations in its user-friendly environment.

Manage models without the overhead: With fully managed infrastructure built for AI workloads and pay-as-you-go pricing flexibility, you can streamline large-scale deployment of the new Mistral AI models.

Tune the models to your requirements: In the coming weeks, you will be able to fine-tune Mistral AI’s models with your own data and domain knowledge to build custom solutions.

Create intelligent agents: Using Vertex AI’s extensive toolkit, which includes LangChain on Vertex AI, create and coordinate agents driven by Mistral AI models. To integrate Mistral AI models into your production-ready AI experiences, use Genkit’s Vertex AI plugin.

Construct with enterprise-level compliance and security: Make use of Google Cloud’s integrated privacy, security, and compliance features. Enterprise controls, like the new organization policy for Vertex AI Model Garden, offer the proper access controls to guarantee that only authorized models are accessible.

Start using Google Cloud’s Mistral AI models

These additions demonstrate Google Cloud’s commitment to open and flexible AI ecosystems that help you build the solutions that best fit your needs. Its partnership with Mistral AI reflects this open approach within a unified, enterprise-ready environment. Many of the first-party, open-source, and third-party models offered on Vertex AI, including the recently released Mistral AI models, are available as a fully managed Model-as-a-service (MaaS) offering, giving you enterprise-grade security on fully managed infrastructure and the convenience of a single bill.

Mistral Large (24.11)

The most recent iteration of the Mistral Large model, known as Mistral Large (24.11), has enhanced reasoning and function calling capabilities.

Mistral Large is a sophisticated Large Language Model (LLM) that possesses cutting-edge knowledge, reasoning, and coding skills.

Multilingual by design: Dozens of languages are supported, including English, French, German, Spanish, Italian, Chinese, Japanese, Korean, Portuguese, Dutch, Polish, Arabic, and Hindi.

Multimodal capability: Mistral Large 24.11 maintains cutting-edge performance on text tasks while excelling at visual comprehension.

Proficient in coding: Trained on more than 80 programming languages, including Java, Python, C, C++, JavaScript, and Bash, as well as more specialized languages such as Swift and Fortran.

Agent-focused: Top-notch agentic features, including native function calling and JSON output.

Sophisticated reasoning: Cutting-edge reasoning and mathematical skills.

Context length: Mistral Large supports a context window of up to 128K tokens.

Use cases

Agents: Made possible by strict adherence to instructions, JSON output mode, and robust safety measures

Text: Synthetic text generation, comprehension, and transformation

RAG: Important data is preserved across lengthy context windows (up to 128K tokens).

Coding: Code generation, completion, review, and commenting. All popular coding languages are supported.
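To make the "function calling with JSON output" idea concrete, here is a minimal sketch of the application side: the model emits a JSON object naming a tool and its arguments, and the application validates and dispatches it. The JSON shape, the `get_order_status` tool, and the `dispatch` helper are all assumptions made for illustration; this is not the Mistral AI or Vertex AI wire format, and the model output is hard-coded rather than fetched from an API.

```python
import json


def get_order_status(order_id: str) -> str:
    """Hypothetical tool the model is allowed to call."""
    return {"A17": "shipped"}.get(order_id, "unknown")


# Registry of callable tools, keyed by the name the model is told about.
TOOLS = {"get_order_status": get_order_status}


def dispatch(model_output: str) -> str:
    """Parse a JSON tool call emitted by the model and run the matching function."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call["arguments"])


# Assumed example of what a model in JSON output mode might return.
model_output = '{"name": "get_order_status", "arguments": {"order_id": "A17"}}'
result = dispatch(model_output)
```

Strict instruction following matters here because `json.loads` fails on anything that is not valid JSON, so a model that reliably sticks to the requested format removes a whole class of parsing errors.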

Read more on govindhtech.com

#MistralAI#ModelLarge#VertexAI#MistralLarge2#Codestral#retrievalaugmentedgeneration#RAG#VertexAIModelGarden#LargeLanguageModel#LLM#technology#technews#news#govindhtech


Text

Microsoft SQL Server 2025: A New Era Of Data Management

Microsoft SQL Server 2025: An enterprise database prepared for artificial intelligence from the ground up

The growing use of AI technology is creating new challenges for customers' data estates and applications. With privacy and security more important than ever, most enterprises expect to deploy AI workloads across a hybrid mix of cloud, edge, and dedicated infrastructure.

To address these challenges, Microsoft SQL Server 2025, now in preview, is an enterprise AI-ready database from ground to cloud that brings AI to customers' data. With the addition of new AI capabilities, this version builds on SQL Server’s thirty years of performance and security innovation. Customers can integrate their data with Microsoft Fabric to get the next generation of data analytics. The release brings Microsoft Azure innovation to customers’ databases and supports hybrid setups across cloud, on-premises datacenters, and edge.

SQL Server is now much more than just a conventional relational database. With the most recent release of SQL Server, customers can create AI applications that are tightly integrated with the SQL engine. With its built-in filtering and vector search features, SQL Server 2025 is evolving into a vector database in its own right. It performs well and is simple for T-SQL developers to use.

AI built-in

This new version has AI built in and uses familiar T-SQL syntax, making it easier to build AI applications and retrieval-augmented generation (RAG) patterns with secure, efficient, and easy-to-use vector support. With this capability you can combine vectors with your SQL data for hybrid AI vector search.

Utilize your company database to create AI apps

SQL Server 2025 brings enterprise AI to your data: it is an enterprise-ready vector database with built-in security and compliance. Its native vector store and index are powered by DiskANN, a vector search technology that uses disk storage to efficiently find similar data points in massive datasets. Efficient chunking in the database enables accurate data retrieval through semantic search. The engine also offers flexible AI model management through Representational State Transfer (REST) interfaces, so you can use AI models from ground to cloud.
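The idea of hybrid vector search, meaning an ordinary relational filter combined with a nearest-neighbour ranking on embeddings, can be shown with a small Python sketch. This is a conceptual illustration only and not SQL Server's API: the `rows`, `cosine_distance`, and `hybrid_search` names are hypothetical, the embeddings are made-up three-dimensional vectors, and the search is an exact brute-force scan, whereas DiskANN is an approximate index designed to avoid scanning every row.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity; smaller means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


# Hypothetical table: relational columns plus an embedding column.
rows = [
    {"id": 1, "category": "laptop", "embedding": [0.9, 0.1, 0.0]},
    {"id": 2, "category": "laptop", "embedding": [0.1, 0.9, 0.0]},
    {"id": 3, "category": "phone", "embedding": [0.9, 0.1, 0.0]},
]


def hybrid_search(rows, query_vec, category, top_k=1):
    """Filter on a relational attribute, then rank the survivors by vector distance."""
    candidates = [r for r in rows if r["category"] == category]
    candidates.sort(key=lambda r: cosine_distance(r["embedding"], query_vec))
    return [r["id"] for r in candidates[:top_k]]


result = hybrid_search(rows, [1.0, 0.0, 0.0], "laptop")
```

Row 3 has the same embedding as row 1 but is excluded by the category filter, which is the point of combining the two kinds of predicate in one query.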

Furthermore, extensible, low-code tools provide versatile model interfaces within the SQL engine, backed via T-SQL and external REST APIs, regardless of whether clients are working on data preprocessing, model training, or RAG patterns. By seamlessly integrating with well-known AI frameworks like LangChain, Semantic Kernel, and Entity Framework Core, these tools improve developers’ capacity to design a variety of AI applications.

Increase the productivity of developers

To increase developers’ productivity, extensibility, frameworks, and data enrichment are crucial for creating data-intensive applications, such as AI apps. Including features like support for REST APIs, GraphQL integration via Data API Builder, and Regular Expression enablement ensures that SQL will give developers the greatest possible experience. Furthermore, native JSON support makes it easier for developers to handle hierarchical data and schema that changes regularly, allowing for the development of more dynamic apps. SQL development is generally being improved to make it more user-friendly, performant, and extensible. The SQL Server engine’s security underpins all of its features, making it an AI platform that is genuinely enterprise-ready.

Top-notch performance and security

SQL Server 2025 is an industry leader in database security and performance. Support for Microsoft Entra managed identities improves credential management, reduces potential vulnerabilities, and provides compliance and auditing capabilities. SQL Server 2025 also introduces outbound authentication support for managed identities (MSI, Managed Service Identity) for SQL Server enabled by Azure Arc.

It also brings to SQL Server performance and availability improvements that have been extensively tested on Microsoft Azure SQL. Improved query optimization and query execution in the latest version can increase workload performance and reduce troubleshooting. Optional Parameter Plan Optimization (OPPO) lets SQL Server choose the best execution plan based on the runtime parameter values the customer supplies, and is intended to greatly reduce the parameter sniffing problems that can arise in workloads.

Persisted statistics on secondary replicas prevent statistics from being lost during a restart or failover, avoiding the performance degradation that could otherwise follow. For query execution, enhancements to batch mode processing and columnstore indexing further establish SQL Server as a mission-critical database for analytical workloads.

Through Transaction ID (TID) Locking and Lock After Qualification (LAQ), optimized locking minimizes blocking for concurrent transactions and lowers lock memory consumption. Customers can improve concurrency, scalability, and uptime for SQL Server applications with this functionality.

Change event streaming for SQL Server enables real-time application integration with event-driven architectures, command query responsibility segregation, and real-time intelligence. New database engine capabilities will capture and publish incremental changes to data and schema to a specified destination, such as Azure Event Hubs or Kafka, in near real time.

Connected to Microsoft Fabric and Azure Arc

In conventional data warehouse and data lake scenarios, integrating all of your data means designing, monitoring, and managing complex ETL (Extract, Transform, Load) processes to move operational data out of SQL Server. These conventional methods do not support real-time data transfer, and the resulting latency hinders real-time analytics. To meet the demands of modern analytical workloads, Microsoft Fabric provides comprehensive, integrated, and AI-enhanced data analytics services.

Mirrored SQL Server Database in Fabric is a fully managed, resilient process that makes it easy to replicate SQL Server data to Microsoft OneLake in near real time. Mirroring will let customers continuously replicate data from SQL Server databases, whether they run on Azure virtual machines or outside Azure and whether they serve online transaction processing (OLTP) or operational store workloads, directly into OneLake to enable analytics and insights on the unified Fabric data platform.

Azure is still an essential part of SQL Server. To help clients better manage, safeguard, and control their SQL estate at scale across on-premises and cloud, SQL Server 2025 will continue to offer cloud capabilities with Azure Arc. Customers can further improve their business continuity and expedite everyday activities with features like monitoring, automatic patching, automatic backups, and Best Practices Assessment. Additionally, Azure Arc makes SQL Server licensing easier by providing a pay-as-you-go option, giving its clients flexibility and license insight.

SQL Server 2025 release date

Microsoft hasn’t set a SQL Server 2025 release date. Based on current information, we can make some informed guesses:

Private preview: SQL Server 2025 is in private preview, so a small set of users can test it and provide feedback.

Public preview: Microsoft may offer a public preview in 2025 so that more people can try the new features.

General availability: SQL Server 2025’s final release date is unknown, but it is expected in 2025.


#MicrosoftSQLServer2025#DataManagement#SQLServer#retrievalaugmentedgeneration#RAG#vectorsearch#GraphQL#Azurearc#MicrosoftAzure#MicrosoftFabric#OneLake#azure#microsoft#technology#technews#news#govindhtech


Text

Agentic RAG On Dell & NVIDIA Changes AI-Driven Data Access

Agentic RAG Changes AI Data Access with Dell & NVIDIA

The key to implementing and using AI successfully in today’s corporate environment is understanding the use cases within the company and identifying the most effective, and often fastest, AI-ready strategies for producing results. There is also a great need for high-quality data and effective retrieval techniques such as retrieval-augmented generation (RAG). At SC24, new innovation in the Dell AI Factory with NVIDIA further accelerates the value of AI for businesses and prepares them for the future.

AI Applications Place New Demands

GenAI applications are growing quickly and proliferating throughout the company as businesses gain confidence in the results of applying AI to their departmental use cases. The pressure on the AI infrastructure increases as the use of larger, foundational LLMs increases and as more use cases with multi-modal outcomes are chosen.

RAG’s capacity to facilitate richer decision-making based on an organization’s own data while lowering hallucinations has also led to a notable increase in interest. RAG is particularly helpful for digital assistants and chatbots with contextual data, and it can be easily expanded throughout the company to knowledge workers. However, RAG’s potential might still be limited by inadequate data, a lack of multiple sourcing, and confusing prompts, particularly for large data-driven businesses.

It will be crucial to provide IT managers with a growth strategy, support for new workloads at scale, a consistent approach to AI infrastructure, and innovative methods for turning massive data sets into useful information.

Raising the AI Performance bar

The Dell AI Factory with NVIDIA delivers the performance AI applications need, giving customers a simplified way to deploy AI using a scalable, consistent, and outcome-focused approach. Dell is now adding new NVIDIA accelerated compute platforms to the Dell AI Factory with NVIDIA. These platforms offer acceleration across a wide range of enterprise applications, greater efficiency for inferencing, and performance for developing AI applications.

The NVIDIA HGX H200 and NVIDIA H100 NVL platforms, which are supercharging data centers, offer state-of-the-art technology with enormous processing power and enhanced energy efficiency for genAI and HPC applications. Customers who have already implemented the Dell AI Factory with NVIDIA may quickly grow their footprint with the same excellent foundations, direction, and support to expedite their AI projects with these additions for PowerEdge XE9680 and rack servers. By the end of the year, these combinations with NVIDIA HGX H200 and H100 NVL should be available.

Deliver Informed Decisions, Faster

RAG already provides enterprises with genuine intelligence and increases productivity. Expanding RAG’s reach throughout the company, however, may make deployment more difficult and affect quick response times. In order to provide a variety of outputs, or multi-modal outcomes, large, data-driven companies, such as healthcare and financial institutions, also require access to many data kinds.

Innovative approaches to managing these enormous data collections are provided by agentic RAG. Within the RAG framework, it automates analysis, processing, and reasoning through the use of AI agents. With this method, users may easily combine structured and unstructured data, providing trustworthy, contextually relevant insights in real time.

Organizations in a variety of industries can gain from a substantial advancement in AI-driven information retrieval and processing with Agentic RAG on the Dell AI Factory with NVIDIA. Using the healthcare industry as an example, the agentic RAG design demonstrates how businesses can overcome the difficulties posed by fragmented data (accessing both structured and unstructured data, including imaging files and medical notes, while adhering to HIPAA and other regulations). The complete solution, which is based on the NVIDIA and Dell AI Factory platforms, has the following features:

PowerEdge servers from Dell that use NVIDIA L40S GPUs

Storage from Dell PowerScale

Spectrum-X Ethernet networking from NVIDIA

Platform for NVIDIA AI Enterprise software

NVIDIA NeMo embedding and reranking NIM microservices, together with the NVIDIA Llama-3.1-8b-instruct LLM NIM microservice

The recently revealed NVIDIA Enterprise Reference Architecture for NVIDIA L40S GPUs serves as the foundation for the solution. It helps businesses that are building AI factories to power the next generation of generative AI solutions cut down on complexity, time, and expense.

The full integration of these components gives enterprises a thorough starting point for adapting and implementing their own agentic RAG and raising the standard of value delivery.

Readying for the Next Era of AI

As employees, developers, and companies start to use AI to generate value, new applications and uses for the technology are released on a daily basis. It can be intimidating to be ready for a large-scale adoption, but any company can change its operations with the correct strategy, partner, and vision.

The Dell AI Factory with NVIDIA offers a scalable architecture that can adapt to an organization’s changing needs, from state-of-the-art AI operations to enormous data set ingestion and high-quality results.

The first and only end-to-end enterprise AI solution in the industry, the Dell AI Factory with NVIDIA, aims to accelerate the adoption of AI by providing integrated Dell and NVIDIA capabilities to speed up your AI-powered use cases, integrate your data and workflows, and let you create your own AI journey for scalable, repeatable results.

What is Agentic RAG?

An AI framework called Agentic RAG employs intelligent agents to do tasks beyond creating and retrieving information. It is a development of the classic Retrieval-Augmented Generation (RAG) method, which blends generative and retrieval-based models.

Agentic RAG uses AI agents to:

Analyze data: Based on real-time input, agentic RAG systems can evaluate data, refine responses, and make necessary adjustments.

Make choices: Agentic RAG systems are capable of making choices on their own.

Divide tasks: Agentic RAG systems can split complicated tasks into smaller ones and assign a distinct agent to each component.

Employ external tools: To complete tasks, agentic RAG systems can make use of any tool or API.

Recall what has transpired: Because agentic RAG systems have memory, such as chat history, they are aware of past events and know what to do next.
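The capabilities listed above can be pictured with a very small sketch. Everything in it is hypothetical and only for illustration: the `ToyAgent` class, the keyword-based tool choice, and the two stand-in tools are assumptions, not part of any Dell or NVIDIA product. In a real system an LLM would plan the sub-tasks and pick the tools.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ToyAgent:
    """Hypothetical agent: routes sub-questions to tools and remembers the exchange."""
    tools: Dict[str, Callable[[str], str]]
    memory: List[str] = field(default_factory=list)

    def decompose(self, question: str) -> List[str]:
        # Task division: a naive split on " and " stands in for an LLM planner.
        parts = [part.strip(" ?") for part in question.split(" and ")]
        return [part for part in parts if part]

    def choose_tool(self, sub_question: str) -> str:
        # Decision step: keyword routing stands in for model-driven tool selection.
        for name in self.tools:
            if name in sub_question.lower():
                return name
        return "search"  # assumed default tool

    def run(self, question: str) -> List[str]:
        answers = []
        for sub in self.decompose(question):
            tool = self.choose_tool(sub)
            result = self.tools[tool](sub)
            # Memory: keep a chat-history style log of what was done.
            self.memory.append(f"{tool}: {sub} -> {result}")
            answers.append(result)
        return answers


def search_tool(query: str) -> str:
    # Stand-in for retrieval over unstructured documents.
    return f"[document snippet for '{query}']"


def records_tool(query: str) -> str:
    # Stand-in for a query against structured data.
    return f"[structured rows for '{query}']"


agent = ToyAgent(tools={"search": search_tool, "records": records_tool})
answers = agent.run("find patient records for last week and summarize imaging guidance?")
```

Here the first sub-question goes to the structured-data tool and the second falls back to document search, while `agent.memory` keeps one entry per step.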

For managing intricate questions and adjusting to changing information environments, agentic RAG is helpful. Applications for it are numerous and include:

Management of knowledge

Large businesses can benefit from agentic RAG systems’ ability to generate summaries, optimize searches, and obtain pertinent data.

Research

Researchers can generate analyses, synthesize findings, and access pertinent material with the use of agentic RAG systems.

Read more on govindhtech.com

#AgenticRAG#NVIDIAChanges#dell#AIDriven#ai#DataAccess#RAGretrievalaugmentedgeneration#DellAIFactory#NVIDIAHGXH200#PowerEdgeXE9680#NVIDIAL40SGPU#DellPowerScale#generativeAI#RetrievalAugmentedGeneration#rag#technology#technews#news#govindhtech

0 notes

Text

Google Public Sector’s AI adoption Framework For DoD

Google Public Sector’s AI Framework for Department of Defense Innovation

For the Department of Defense (DoD), generative AI offers enormous potential as well as difficulties. It has the ability to significantly improve decision-making, expedite tasks, and increase situational awareness. But the DoD’s particular needs, especially its strict security guidelines for cloud services (IL5), call for well-thought-out AI solutions that strike a balance between security and innovation.

The necessity to “strengthen the organizational environment” for AI deployment is emphasized in the DoD’s 2023 Data, Analytics, and Artificial Intelligence Adoption Strategy study. This emphasizes the significance of solutions that prioritize data security, allow for the responsible and intelligent use of AI, and integrate easily into current infrastructure.

Google Public Sector’s 4 AI pillars: A framework for DoD AI adoption

When developing solutions to empower the DoD, Google AI for Public Sector has concentrated on four areas to address the DoD’s particular challenges:

Adaptive: AI solutions need to blend in perfectly with the DoD’s current, intricate, and dynamic technological environment. In line with the DoD’s emphasis on agile innovation, Google places a high priority on flexible solutions that reduce interruption and facilitate quick adoption.

Secure: It’s critical to protect sensitive DoD data. The confidentiality and integrity of vital data are guaranteed by the strong security features built into Google’s AI products, such as Zero Trust architecture and compliance with IL5 standards.

Intelligent: Google AI tools are made to extract useful information from a wide range of datasets. Google technologies help the DoD make data-driven choices more quickly and accurately by utilizing machine learning and natural language processing.

Responsible: Google is dedicated to creating and implementing AI in an ethical and responsible way. Its research, product development, and deployment choices are guided by AI Principles, which make sure AI is applied responsibly and stays away from dangerous uses.

Breaking down data silos and delivering insights with enterprise search

Google Cloud’s enterprise search solution is a powerful tool designed to help organizations overcome the difficulties posed by data fragmentation. It serves as a central hub that easily connects to both structured and unstructured data from a variety of sources throughout the department.

Intelligent Information Retrieval: Even when working with unstructured data, such as papers, photos, and reports, enterprise search provides accurate and contextually relevant responses to searches by utilizing cutting-edge AI and natural language processing.

Smooth Integration: Without transferring data or training a unique Large Language Model (LLM), federated search in conjunction with Retrieval Augmented Generation (RAG) yields pertinent query answers.

Enhanced Transparency and Trust: In addition to AI-generated responses, the solution offers links to source papers, enabling users to confirm information and increase system trust.

Strong Security: To protect critical DoD data, enterprise search integrates industry-leading security features, such as Role-Based Access Control (RBAC) and Common Access Card (CAC) compatibility, into all services used in the solution submitted for IL5 accreditation.

Future-Proof Flexibility: The solution supports a variety of Large Language Models (LLMs), such as Google’s Gemini family of models and the Gemma family of lightweight, cutting-edge open models. Because Google offers flexibility and choice and avoids vendor lock-in, the DoD can take advantage of the most recent developments in AI without significant rework.

The DoD’s mission is directly supported by Google Cloud’s generative AI-infused solution, which streamlines data access, improves discoverability, and delivers quick, precise insights that improve decision-making and give the department a competitive edge.

With solutions that are not only strong and inventive but also safe, accountable, and flexible, Google Cloud is dedicated to helping the DoD on its AI journey. Google Cloud is contributing to the development of more nimble, knowledgeable, and productive military personnel by enabling the DoD to fully utilize its data.

Read more on govindhtech.com

#GooglePublicSector#AIadoption#FrameworkForDoD#generativeAI#ArtificialIntelligence#GoogleAI#naturallanguageprocessing#LargeLanguageModel#LLM#RetrievalAugmentedGeneration#RAG#Gemma#technology#technews#news#govindhtech

0 notes

Text

Testing AI-based chat in Digitalarkivet

A couple of years ago ChatGPT became publicly available, and what we got to try seemed almost a little… magical? Suddenly it was possible to communicate with a computer in natural language and get sensible answers, even in Norwegian! The user interface was much like the chatbots we are used to from many online services, but with AI-generated answers it almost felt like communicating with a human: you could ask follow-up questions, or ask for simpler explanations or more detail.

Perhaps this kind of AI chat could be a good way to explore and understand archival content, as an alternative to traditional search or to getting help from a case officer at Arkivverket? Many people find the archives hard to navigate, even though they hold a great deal of material that is important or interesting to large parts of the population.

But then there is the matter of sensible answers. From the very start it was obvious that ChatGPT and similar solutions could give answers that were completely wrong, with the same confidence as correct answers. We usually say that the model hallucinates when it answers incorrectly. This is a major problem with the technology (you really have to double-check every answer you get), and it also says something about how such AI models are developed.

Behind the AI chats lies a large language model (LLM). These models are built (or “trained”) by analyzing large amounts of text, in practice large parts of the internet. The models calculate (or “predict”) what the next word in the answer should be. It may spoil some of the magic, but ChatGPT and similar solutions are basically just applied statistics. And if you ask about things that are poorly represented in the training data, the statistical basis for predicting the words gets weaker and you may get made-up answers. It is also worth bearing in mind that AI models do not relate to reality directly, only to texts that describe reality. The AI model therefore does not itself know whether what it answers is right or wrong.

The training data often include a great deal of the available information, yet facts, opinions, services, and products that matter to, for example, Digitalarkivet and the archival domain will still not be part of what the model “knows”. This may be because the information is private or hidden, because it is not considered important enough to include, or because it is too new. If we used ChatGPT, for example, to find information in Digitalarkivet, it would rarely be able to give correct answers, while there is a risk that the answers it does give sound sensible.

Trust in the archives is very important. You must be able to rely on what you find being correct, and on finding what you need, and solutions that can make up information fit poorly with that. It is difficult to prevent hallucinations in an AI model, but “Retrieval Augmented Generation”, or RAG, is one way around the problem.

Put simply, RAG means that the system can base its answers on sources other than those the model was trained on, so that the knowledge gaps in the model are filled. It is roughly like giving the AI model cheat sheets. The AI model still writes the answers, but it has access to extra information on which to base them. RAG happens in two steps:

1. Retrieve information: From information in various databases, knowledge collections, ontologies, documents, and books, you build a collection of small pieces of knowledge in the form of “embeddings”. Embeddings are a format that lets a machine find similarities in meaning (semantic similarity) between, for example, different text snippets. When a question comes in from the user and we convert it into an embedding, we can run a semantic search and find the text pieces that are closest in meaning to what the user is asking about.

2. Generate an answer: The user’s question and the relevant pieces that were found are sent as a package to a generative AI model, such as GPT, Claude, Mistral, or Llama, which writes an answer based on the package it has received. In this way we can make sure the AI has been given the information it needs to give a good answer. An important factor when choosing a model is that it is good at following our instructions, because the package we send also contains a whole host of messages to the model about what it should and should not do. There is a wide range of research-based techniques for giving these messages in the best possible way.
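The two steps can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Arkivverket’s implementation: the bag-of-words `embed` function is only a stand-in for a real embedding model, the three text chunks are invented, and the instruction wording in `build_prompt` is hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    # Stand-in for a real embedding model: a bag-of-words count vector.
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors.
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, chunks: List[str], k: int = 2) -> List[Tuple[float, str]]:
    # Step 1: embed the question and rank the stored chunks by similarity.
    q = embed(question)
    scored = sorted(((cosine(q, embed(c)), c) for c in chunks), reverse=True)
    return scored[:k]


def build_prompt(question: str, hits: List[Tuple[float, str]]) -> str:
    # Step 2: package instructions, retrieved chunks, and the question for the LLM.
    sources = "\n".join(f"[{i}] {text}" for i, (_, text) in enumerate(hits, start=1))
    return (
        "Answer only from the numbered sources. "
        "If they do not contain the answer, say you cannot answer.\n"
        f"Sources:\n{sources}\n"
        f"Question: {question}"
    )


# Invented example chunks, for illustration only.
chunks = [
    "Parish registers contain records of baptisms, marriages and burials.",
    "Census records list the residents of each household.",
    "Probate records describe the estate of a deceased person.",
]
question = "Where can I find records of baptisms?"
hits = retrieve(question, chunks)
prompt = build_prompt(question, hits)
```

The resulting `prompt` string is what would be sent to the generative model. Word overlap only approximates meaning, which is exactly the gap a real embedding model is meant to close.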

Arkivverket has tested this technology in a proof of concept (PoC). Setting up a basic RAG solution is easy, but to test whether RAG can actually address our hypotheses about needs and challenges, we went further and built a more advanced, modular RAG architecture. At each step of the process we have applied various techniques and algorithms, based on research and on current developments in the RAG world, to give the user the most reliable and complete answer possible based on our data.

The PoC consists of two solutions, which together have let us test RAG on several types of content:

One solution contains material on archival knowledge, such as guides and help texts. This can be very useful for users who know little about the archives and are not quite sure how to get started finding information.

The other contains two very different types of digitized archival material: archives from the Alexander Kielland accident and diaries from the reindeer husbandry administration.

One advantage of RAG is that incorporating more information is relatively easy and cheap, because it is done by updating the search. Without RAG we would have to train new versions of the AI model itself to update it with new information, which is far more resource-intensive.

It is an important point that the solution should run on our own systems, rather than us connecting to external services. It is important that we have control over both the technology we use and the data that is fed into the system. Being able to choose a model that works well in Norwegian is important, and we should be able to swap out AI models if, for example, a new one appears that works better for our purposes. We should also be able to choose technology based on economic factors.

The PoC also includes a chat interface, which you can try for yourself by clicking the links at the bottom of the article. In the menu on the left you can adjust several aspects of how the questions are processed and what kind of answers you get, so feel free to play around!

There are two more points worth noting, both of which are important for building trust in the results:

In addition to answering questions, the solution provides a link to the original sources so that users can confirm the answer or browse further in the original source if they want to explore the content more.

If the user asks about something it has no information on, the solution explains that it cannot answer, instead of hallucinating a wrong answer.

_ _ _

The PoC has shown us that a chat solution built on RAG has many advantages:

The user is told when the system does not know the answer, rather than the solution making one up.

The user can use natural language, and typos or poor phrasing are often understood.

The system understands the meaning of what the user asks and can therefore give answers that are useful to the user even when the wording does not match. It can also give answers based on information related to the user’s question to a greater extent than, for example, a lexical search.

The user can have a dialogue with the system and, for example, ask follow-up questions or ask for clarification.

The user gets links to the original sources, so it is easy to verify the answers.

We also see some challenges with such a solution:

An advanced RAG architecture like this is complex and resource-intensive to build. There may be other solutions that deliver some of the same benefits.

The answers are based on archival material that may contain expressions and attitudes that are outdated or offensive. Such expressions and attitudes can therefore also find their way into the answers the chatbot gives. Users are probably prepared for older material to contain language we would not use today, but it can seem offensive or odd when such language is used in a newly written text. There are techniques we can use to minimize this problem, but it is unlikely to be eliminated completely.

And although RAG considerably reduces the risk of made-up answers, it is not a completely watertight method. The generative model that formulates the answer can still hallucinate content that was not in the pieces of information the answer is supposed to be based on. (https://arstechnica.com/ai/2024/06/can-a-technology-called-rag-keep-ai-models-from-making-stuff-up)

_ _ _

We have a good foundation that can point to some directions for further exploration, and we are excited to see what we can learn from those of you who try the solution. The way forward has not been decided, but even though we have built a comprehensive and thorough PoC, much work remains before there is a finished solution. The purpose of a PoC is to find out whether you are on to something, whether the concept is technically feasible. It is far from a finished product, which means that errors, small and large, may occur. Note also that the underlying data is not necessarily up to date, and that the guides being searched, for example, may contain errors.

One known problem is that even when the source reference is correct, on rare occasions the numbering may, for example, start at 2 or skip 3. The reason is that the search is more optimistic than the language model and therefore finds more possible sources for an answer than the language model actually finds answers in. As a result, the list of sources can have somewhat odd numbering.
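One possible way to close such gaps is to renumber the citations after the answer has been generated. The sketch below is only an illustration: it assumes the answer cites sources with bracketed numbers such as [2], which the article does not confirm, and `renumber_citations` is a hypothetical helper rather than anything from the PoC.

```python
import re
from typing import Dict, List, Tuple


def renumber_citations(answer: str, sources: List[str]) -> Tuple[str, List[str]]:
    """Keep only the cited sources and renumber them 1..n in order of first use."""
    mapping: Dict[int, int] = {}
    for match in re.finditer(r"\[(\d+)\]", answer):
        old = int(match.group(1))
        if 1 <= old <= len(sources) and old not in mapping:
            mapping[old] = len(mapping) + 1

    def replace(match: re.Match) -> str:
        old = int(match.group(1))
        # Leave unknown citation numbers untouched rather than guessing.
        return f"[{mapping[old]}]" if old in mapping else match.group(0)

    new_answer = re.sub(r"\[(\d+)\]", replace, answer)
    used = [sources[old - 1] for old in sorted(mapping, key=mapping.get)]
    return new_answer, used


# The search returned four candidate sources, but the model only used two of them.
answer, used = renumber_citations(
    "First claim [2]. Second claim [4].",
    ["guide A", "report B", "diary C", "report D"],
)
```

After the call, the answer cites [1] and [2], and `used` holds only the two sources that were actually referenced.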

Here are links to the two solutions, so you can test them yourself:

Guides and archival knowledge: https://rag.beta.arkivverket.no

The Alexander Kielland accident and diaries from the reindeer husbandry administration: https://rag-transcriptions.beta.arkivverket.no

Feel free to contact us at [email protected] if you have feedback or are curious about our work on AI, search, or Digitalarkivet in general.

0 notes

Text

IBM Granite 3.0 8B Instruct AI Built For High Performance

IBM Granite 3.0: open, cutting-edge business AI models

IBM Granite 3.0, the third generation of the Granite series of large language models (LLMs) and related technologies, is being released by IBM. The new IBM Granite 3.0 models maximize safety, speed, and cost-efficiency for enterprise use cases while delivering state-of-the-art performance in relation to model size, reflecting its focus on striking a balance between power and usefulness.

Granite 3.0 8B Instruct, a new, instruction-tuned, dense decoder-only LLM, is the centerpiece of the Granite 3.0 collection. Granite 3.0 8B Instruct is a developer-friendly enterprise model designed to be the main building block for complex workflows and tool-based use cases. It was trained using a novel two-phase method on over 12 trillion tokens of carefully vetted data across 12 natural languages and 116 programming languages. Granite 3.0 8B Instruct outperforms rivals on enterprise tasks and safety metrics while matching top-ranked, similarly-sized open models on academic benchmarks.

Businesses may get frontier model performance at a fraction of the expense by fine-tuning smaller, more functional models like Granite. Costs and timeframes can be cut further by customizing Granite models to your organization’s specific requirements with InstructLab, a collaborative, open-source method for enhancing model knowledge and skills with methodically created synthetic data and phased-training protocols.

In contrast to the recent trend of closed or open-weight models published under idiosyncratic proprietary licensing agreements, all Granite models are released under the permissive Apache 2.0 license, in keeping with IBM’s strong historical commitment to open source. In another departure from industry trends for open models, IBM discloses training data sets and procedures in detail in the Granite 3.0 technical paper, reinforcing its commitment to fostering transparency, safety, and confidence in AI products.

The complete IBM Granite 3.0 release includes:

General Purpose/Language: Granite 3.0 8B Instruct, Granite 3.0 2B Instruct, Granite 3.0 8B Base, Granite 3.0 2B Base

Guardrails & Safety: Granite Guardian 3.0 8B, Granite Guardian 3.0 2B

Mixture-of-Experts: Granite 3.0 3B-A800M Instruct, Granite 3.0 1B-A400M Instruct, Granite 3.0 3B-A800M Base, Granite 3.0 1B-A400M Base

Speculative decoder for faster and more effective inference: Granite-3.0-8B-Instruct-Accelerator

The enlargement of all model context windows to 128K tokens, more enhancements to multilingual support for 12 natural languages, and the addition of multimodal image-in, text-out capabilities are among the upcoming developments scheduled for the rest of 2024.

On the IBM Watsonx platform, Granite 3.0 8B Instruct and Granite 3.0 2B Instruct, along with both Guardian 3.0 safety models, are now commercially available. Additionally, Granite 3.0 models are offered by platform partners such as Hugging Face, NVIDIA (as NIM microservices), Replicate, Ollama, and Google Vertex AI (via Hugging Face’s integrations with Google Cloud’s Vertex AI Model Garden).

IBM Granite 3.0 language models are trained on Blue Vela, which is fueled entirely by renewable energy, further demonstrating IBM’s dedication to sustainability.

Strong performance, security, and safety

Prioritizing specific use cases, earlier Granite model generations performed exceptionally well in domain-specific activities across a wide range of industries, including academia, legal, finance, and code. Apart from providing even more effectiveness in those areas, IBM Granite 3.0 models perform on par with, and sometimes better than, the industry-leading open-weight LLMs in terms of overall performance across academic and business benchmarks.

On the academic benchmarks featured in Hugging Face’s OpenLLM Leaderboard v2, Granite 3.0 8B Instruct rivals comparable-sized Meta and Mistral AI models. The code for IBM’s model evaluation methodology is available on the Granite GitHub repository and in the technical paper that goes with it.

It’s also easy to see that IBM is working to improve Granite 3.0 8B Instruct for enterprise use scenarios. For example, Granite 3.0 8B Instruct led on the RAGBench evaluations, which included 100,000 retrieval augmented generation (RAG) tasks taken from user manuals and other industry corpora. Models were compared across the 11 RAGBench datasets, assessing attributes such as correctness (the degree to which the model’s output matches the factual content and semantic meaning of the ground truth for a given input) and faithfulness (the degree to which an output is supported by the retrieved documents).

Additionally, the Granite 3.0 models were trained to perform exceptionally well in important enterprise domains including cybersecurity: Granite 3.0 8B Instruct performs exceptionally well on both well-known public security benchmarks and IBM’s private cybersecurity benchmarks.

Developers can use the new Granite 3.0 8B Instruct model for programming language use cases like code generation, code explanation, and code editing, as well as for agentic use cases that call for tool calling. Classic natural language use cases include text generation, classification, summarization, entity extraction, and customer service chatbots. Granite 3.0 8B Instruct defeated top open models in its weight class when tested against six distinct tool calling benchmarks, including Berkeley’s Function Calling Leaderboard evaluation set.

Developers may quickly test the new Granite 3.0 8B Instruct model on the IBM Granite Playground, as well as browse the improved Granite recipes and how-to documentation on GitHub.

Transparency, safety, trust, and creative training methods

Responsible AI, according to IBM, is a competitive advantage, particularly in the business world. The development of the Granite series of generative AI models adheres to IBM’s values of openness and trust.

As a result, model safety is given equal weight with IBM Granite 3.0’s superior performance. Granite 3.0 8B Instruct exhibits industry-leading resilience on the AttaQ benchmark, which gauges an LLM’s susceptibility to adversarial prompts intended to induce models to produce harmful, inappropriate, or otherwise unwanted outputs.

The team used IBM’s Data Prep Kit, a framework and toolkit for creating data processing pipelines for end-to-end processing of unstructured data, to train the Granite 3.0 language models. In particular, the Data Prep Kit was utilized to scale data processing modules from a single laptop to a sizable cluster, offering checkpoint functionality for failure recovery, lineage tracking, and metadata logging.

Granite Guardian: the best safety guardrails in the business

Along with introducing a new family of LLM-based guardrail models, the third version of IBM Granite offers the broadest range of risk and harm detection features currently on the market. Any LLM, whether proprietary or open, can have its inputs and outputs monitored and managed using Granite Guardian 3.0 8B and Granite Guardian 3.0 2B.

The new Granite Guardian models are variants of their correspondingly sized base pre-trained Granite models, designed to assess and categorize model inputs and outputs into different risk and harm dimensions, such as jailbreaking, bias, violence, profanity, sexual content, and unethical behavior.

A variety of RAG-specific issues are also addressed by the Granite Guardian 3.0 models.

Efficiency and speed: mixture of experts (MoE) models and speculative decoding

Mixture of experts (MoE) models and a speculative decoder for rapid inference are two more inference-efficient offerings included in the Granite 3.0 release.

The initial MoE models from IBM Granite

Granite 3.0 3B-A800M and Granite 3.0 1B-A400M offer excellent inference efficiency with little performance compromise. The new Granite MoE models, which have been trained on more than 10 trillion tokens of data, are perfect for use in CPU servers, on-device apps, and scenarios that call for incredibly low latency.

The model names reflect both their overall parameter counts (3B and 1B, respectively) and their active parameter counts: the 3B MoE uses 800M parameters at inference, while the smaller 1B uses 400M parameters at inference. There are 40 expert networks in Granite 3.0 3B-A800M and 32 expert networks in Granite 3.0 1B-A400M. Top-8 routing is used in both models.
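Top-8 routing can be illustrated with a short sketch: for each token, a router scores all experts and only the eight best are run. The code below is a generic illustration, not IBM’s implementation. The router scores are random placeholders, and normalizing the selected scores with a softmax is an assumption about how the weights are combined.

```python
import math
import random
from typing import List, Tuple


def top_k_route(logits: List[float], k: int = 8) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    peak = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


random.seed(0)
num_experts = 40  # expert count the article gives for Granite 3.0 3B-A800M
router_logits = [random.gauss(0.0, 1.0) for _ in range(num_experts)]  # placeholder scores
routing = top_k_route(router_logits, k=8)

# Only the selected experts run for this token; the remaining 32 are skipped.
active_expert_fraction = len(routing) / num_experts
```

With 8 of 40 experts selected, a fifth of the expert networks run per token. The share of active parameters in the model names (800M of 3B) does not have to equal that fraction, since parts of a model that are not experts are typically used for every token.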

Both base pre-trained and instruction-tuned versions of the Granite 3.0 MoE models are available. You may now obtain Granite 3.0 3B-A800M Instruct from Hugging Face, Ollama, and NVIDIA. Hugging Face and Ollama provide the smaller Granite 3.0 1B-A400M. The base pretrained Granite MoE models are currently offered only on Hugging Face.

Speculative decoding for Granite 3.0 8B

An optimization method called “speculative decoding” helps LLMs produce text more quickly while consuming the same amount of computing power, enabling more users to use a model simultaneously. The recently published Granite-3.0-8B-Instruct-Accelerator model uses speculative decoding to speed up tokens per step by 220%.

In normal inferencing, LLMs process all of the tokens they have generated so far and then produce one token at a time. In speculative decoding, LLMs also assess a number of potential tokens that could follow the one they are about to generate; if these “speculated” tokens are confirmed to be correct enough, a single pass can yield two or more tokens for the computational “price” of one. The method was initially presented in two 2023 papers from Google and DeepMind, which used a small, independent “draft model” to perform the speculative job. An open source technique called Medusa, which only adds a layer to the base model, was released earlier this year by a group of university researchers.

Conditioning the speculated tokens on one another was the main advance IBM Research made to the Medusa approach. For instance, if “happy” is the first token guessed following “I am,” the model will speculatively predict what follows “happy” instead of continuing to predict what follows “I am.” The researchers also presented a two-phase training approach that uses a form of knowledge distillation to train the base model and the speculator together. This IBM innovation halved Granite Code 20B’s latency and quadrupled its throughput.
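The draft-and-verify idea can be sketched with two toy “models”. This illustrates the separate draft-model variant with greedy acceptance, not Medusa or IBM’s speculator. The fixed sentence, both toy models, and the function names are made up for the example.

```python
from typing import Callable, List

NextToken = Callable[[List[str]], str]  # maps a token prefix to the next token


def speculative_step(prefix: List[str], draft: NextToken, target: NextToken, k: int = 3) -> List[str]:
    """One greedy draft-and-verify step; returns the tokens accepted in this step."""
    # 1. The cheap draft model guesses k tokens, each conditioned on the previous guess.
    guesses: List[str] = []
    for _ in range(k):
        guesses.append(draft(prefix + guesses))

    # 2. The target model checks each guess. A real system scores all k positions
    #    in one batched forward pass; this loop only mimics the accept/reject rule.
    accepted: List[str] = []
    for guess in guesses:
        expected = target(prefix + accepted)
        if guess != expected:
            accepted.append(expected)  # keep the target's correction, then stop
            return accepted
        accepted.append(guess)

    # 3. Every guess matched, so the target's own next token is kept as a bonus.
    accepted.append(target(prefix + accepted))
    return accepted


SENTENCE = ["I", "am", "happy", "to", "help", "you", "today"]


def target_model(prefix: List[str]) -> str:
    # Toy "large" model: always continues the fixed sentence.
    return SENTENCE[len(prefix)] if len(prefix) < len(SENTENCE) else "<eos>"


def draft_model(prefix: List[str]) -> str:
    # Toy "small" model: agrees with the target except at position 4.
    return "assist" if len(prefix) == 4 else target_model(prefix)


step = speculative_step(["I", "am"], draft_model, target_model, k=3)
```

In this run the first two guesses are accepted and the third is replaced by the target model’s token, so one step yields three tokens that match what the target model would have produced on its own.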

Hugging Face offers the Granite 3.0 8B Instruct-Accelerator model, which is licensed under the Apache 2.0 framework.

Read more on govindhtech.com

#IBMGranite308B#InstructAIBuilt#HighPerformance#MistralAImodels#IBMWatsonxplatform#retrievalaugmentedgeneration#RAG#Ollama#GoogleVertexAI#Graniteseries#IBMGranite30open#languagemodels#IBMResearch#initialMoEmodels#technology#technews#news#govindhtech

0 notes

Text

SFT Supervised Fine Tuning Vs. RAG And Prompt Engineering

Supervised Fine Tuning

Supervised Fine-Tuning (SFT) enables robust models to be customized for specific tasks, domains, and even subtle stylistic differences. Questions about when to utilize SFT and how it stacks up versus alternatives like RAG, in-context learning, and prompt engineering are common among developers.

This article explores the definition of SFT, when to use it, and how it stacks up against other techniques for output optimization.

What is SFT?

Large language model (LLM) development frequently starts with pre-training. The model gains general language comprehension at this stage by reading vast volumes of unlabeled text. Pre-training’s main goal is to give the model a wide range of language understanding abilities. These pre-trained LLMs have performed remarkably well on a variety of tasks. The performance of a pre-trained model can then be improved for downstream use cases, such as summarizing financial documents, that may call for a more in-depth understanding.

Using a task-specific annotated dataset, the pre-trained model is refined to make it suitable for certain use cases. This dataset contains examples of intended outputs (like a summary) that correspond to input instances (like an earnings report). The model learns how to carry out a particular task by linking inputs with their appropriate outputs. Fine-tuning with an annotated dataset is referred to as supervised fine-tuning (SFT).

The main tool for modifying the behavior of the model is its parameters, which are the numerical values learned during training. The number of model parameters updated during fine-tuning can vary, and there are two popular supervised fine-tuning methods:

Full fine-tuning: modifies every parameter in the model. However, full fine-tuning results in greater total costs because it requires more compute resources for both tuning and serving.

Parameter-Efficient Fine-Tuning (PEFT): a class of techniques that freezes the original model and modifies only a small number of newly introduced extra parameters, which makes fine-tuning quicker and more resource-efficient. PEFT is especially helpful when dealing with huge models or limited computational resources.

Although both PEFT and full fine-tuning are supervised learning techniques, they differ in how much parameter updating they carry out, thus one might be better suited for your situation. An example of a PEFT technique is LoRA (Low-Rank Adaptation), which is used in Supervised fine-tuning for Gemini models on Vertex AI.
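The difference in how many parameters are updated can be made concrete with a small LoRA-style sketch. It is a generic illustration in plain Python, not the Vertex AI implementation. The matrix sizes, the rank, and the initial values are arbitrary assumptions chosen to keep the example readable.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    # Plain-Python matrix product, enough for a toy example.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_forward(x: Matrix, w: Matrix, a: Matrix, b: Matrix, scale: float = 1.0) -> Matrix:
    """Compute x @ W + scale * (x @ A @ B); W stays frozen, only A and B are trained."""
    base = matmul(x, w)
    delta = matmul(matmul(x, a), b)
    return [[p + scale * q for p, q in zip(r1, r2)] for r1, r2 in zip(base, delta)]


d_in, d_out, rank = 4, 4, 1
w = [[1.0 if i == j else 0.0 for j in range(d_out)] for i in range(d_in)]  # frozen weights
a = [[0.1] for _ in range(d_in)]          # d_in x rank, trainable
b = [[0.0] * d_out for _ in range(rank)]  # rank x d_out, trainable, starts at zero

x = [[1.0, 2.0, 3.0, 4.0]]
y = lora_forward(x, w, a, b)  # identical to x @ W while B is still zero

full_params = d_in * d_out           # values updated by full fine-tuning of this layer
lora_params = rank * (d_in + d_out)  # values updated by the low-rank adapter
```

At this toy size the saving is small (8 trainable values instead of 16), but it grows quickly with layer size: for a 4096 by 4096 weight matrix and rank 8, the adapter has 65,536 trainable values against 16,777,216 for the full matrix.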

When should one use supervised fine-tuning?

If you have access to a dataset of well-annotated samples and your objective is to improve the model’s performance on a particular, well-defined task, you should think about utilizing Supervised Fine Tuning. When the task is in line with the initial pre-training data, supervised fine-tuning is very useful for effectively activating and honing the pertinent knowledge that is already contained within the pre-trained model. Here are some situations in which Supervised Fine Tuning excels:

Domain expertise: Give your model specific knowledge to make it an authority on a certain topic, such as finance, law, or medicine.

Customize the format: Make your model’s output conform to particular structures or formats.

Task-specific proficiency: Fine-tune the model for certain tasks, such as writing brief summaries.

Edge cases: Boost the model’s capacity to manage particular edge cases or unusual situations.

Behavior Control: Direct the actions of the model, including when to give succinct or thorough answers.

One of SFT’s advantages is that it can produce gains even with a small quantity of high-quality training data, which often makes it a more affordable option than full fine-tuning. Moreover, fine-tuned models are typically easier to use. Supervised Fine Tuning helps the model become proficient at the task, which minimizes the need for long and intricate prompts during inference. This results in lower costs and reduced inference latency.

SFT is an excellent tool for consolidating prior knowledge, but it is not a panacea. In situations where information is dynamic or ever-changing, such as when real-time data is involved, it might not be the best option. Let’s go over some alternatives, as they may be more appropriate in some situations.

LLM Supervised Fine Tuning

Supervised Fine Tuning isn’t necessarily the only or best option for adjusting an LLM’s output, despite its strength. Effective methods for changing the behavior of the model can be found in a number of other approaches, each with advantages and disadvantages.

Prompt engineering is affordable, accessible, and simple to use for controlling outputs. However, it can be less dependable for intricate or subtle tasks, and it requires experience and trial and error.

Like prompt engineering, In-Context Learning (ICL) is simple to use and relies on examples placed within the prompt to direct the behavior of the LLM. ICL, sometimes known as few-shot prompting, is sensitive to which examples the prompt contains and the order in which they are given. It might also not generalize well.

In order to increase quality and accuracy, Retrieval Augmented Generation (RAG) gathers pertinent data from Google search and other sources and gives it to the LLM. A strong knowledge base is necessary for this, and the extra step increases complexity and latency.

Function calling is the capacity of a language model to recognize when external systems are required to respond to a user request and to produce structured function calls that communicate with these tools, extending the model’s capabilities. It can increase complexity and latency when employed.
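The last three approaches can be sketched in a few lines each. This is a toy illustration under stated assumptions: the prompt layouts, the word-overlap retriever, the `get_weather` tool, and the JSON call shape are all hypothetical, and no real model or search service is called.

```python
import json

def few_shot_prompt(examples, query):
    """In-context learning: prepend worked examples to the new input."""
    shots = "\n\n".join(f"Input: {i}\nOutput: {o}" for i, o in examples)
    return f"{shots}\n\nInput: {query}\nOutput:"

def retrieve(query, documents, k=1):
    """Toy retriever: rank documents by how many query words they share."""
    words = set(query.lower().split())
    ranked = sorted(documents,
                    key=lambda d: len(words & set(d.lower().split())),
                    reverse=True)
    return ranked[:k]

def rag_prompt(query, documents, k=1):
    """RAG: place the retrieved passages in front of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

# Hypothetical tool registry for function calling.
TOOLS = {"get_weather": lambda city: f"Sunny in {city}"}

def dispatch(call_json):
    """Function calling: run the tool named in a structured call from the model."""
    call = json.loads(call_json)
    return TOOLS[call["name"]](**call["arguments"])

docs = ["Supervised fine-tuning needs a labeled dataset",
        "RAG retrieves documents at query time"]
icl = few_shot_prompt([("2+2", "4"), ("3+5", "8")], "7+1")
rag = rag_prompt("What does RAG do at query time?", docs)
result = dispatch('{"name": "get_weather", "arguments": {"city": "Zurich"}}')
print(icl, rag, result, sep="\n---\n")
```

In each case the model itself is unchanged; only the text it receives, or what is done with the text it returns, differs. That is why these methods are cheaper to try than fine-tuning but add steps at inference time.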

Where to start?

You may be asking yourself, “What’s the right path now?” It’s critical to realize that the best course of action is determined by your particular requirements, available resources, and use case goals. These methods can be combined and are not exclusive to one another. Let’s examine a framework that can direct your choice:

Image credit: Google Cloud

If you want to be sure the model can understand the subtleties of your particular domain, you can begin by investigating prompt engineering and few-shot in-context learning. Here, Gemini’s large context window opens up a world of options. Once you have refined your prompting technique, you can experiment with Retrieval Augmented Generation (RAG) and/or Supervised Fine-Tuning (SFT) for further improvement. Many of the most recent methods are shown in this graphic, although generative AI is a rapidly evolving field.

Supervised Fine-Tuning on Vertex AI with Gemini

When you have a specific objective in mind and have labeled data to assist the model, Supervised Fine-Tuning (SFT) is the best option. Supervised Fine Tuning can be combined with other methods you may already be attempting to create more effective models, which could reduce expenses and speed up response times.

Read more on govindhtech.com

#SFTSupervisedFineTuning#PromptEngineering#rag#Geminimodels#Largelanguagemodel#LLM#SupervisedFineTuning#generativeAI#Promptengineering#RetrievalAugmentedGeneration#RAG#VertexAI#Gemini#technology#technews#news#govindhtech

IBM Watsonx Assistant For Z V2’s Document Ingestion Feature

IBM Watsonx Assistant for Z

For a more customized experience, clients can now choose to have IBM Watsonx Assistant for Z V2 ingest their enterprise documents.

A generative AI helper called IBM Watsonx Assistant for Z was introduced earlier this year at Think 2024. This AI assistant transforms how your Z users interact with and use the mainframe by combining conversational artificial intelligence (AI) and IT automation in a novel way. By allowing specialists to formalize their Z expertise, it helps businesses accelerate knowledge transfer, improve productivity, autonomy, and confidence for all Z users, and lessen the learning curve for early-tenure professionals.

Building on this momentum, IBM is announcing today the addition of new features and improvements to IBM Watsonx Assistant for Z. These include:

Integrate your own company documents to facilitate the search for solutions related to internal software and procedures.

Time to value is accelerated by prebuilt skills (automations) offered for typical IBM z/OS jobs.

Simplified architecture to reduce costs and facilitate implementation.

Ingest your own enterprise documents

Every organization has its own workflows, apps, technology, and processes that make it function differently. Over time, many of these procedures have been improved, yet certain specialists are still routinely interrupted to answer simple inquiries.

You can now easily personalize the Z RAG by ingesting your own best practices and documentation with IBM Watsonx Assistant for Z. Your Z users will have more autonomy when you personalize your Z RAG because they will be able to receive answers that are carefully chosen to fit the internal knowledge, procedures, and environment of your company.

Using a command-line interface (CLI), builders can import text, HTML, PDF, DOCX, and other proprietary and third-party documentation at scale into the retrieval augmented generation (RAG) knowledge base. Because the RAG is located on-premises and protected by a firewall, your private content stays inside your own environment.

What is Watsonx Assistant for Z’s RAG and why is it relevant?

A Z domain-specific RAG and a chat-focused granite.13b.labrador model are utilized by IBM Watsonx Assistant for Z, which may be improved using your company data. The large language model (LLM) and RAG combine to provide accurate and contextually rich answers to complex queries. This reduces the likelihood of hallucinations for your internal applications, processes, and procedures as well as for IBM Z products. The answers also include references to sources.

Built-in abilities for a quicker time to value

For common z/OS tasks, organizations can use prebuilt skills. This means that, without specialist knowledge, you can quickly integrate automations such as displaying all subsystems, determining when a program temporary fix (PTF) was installed, or confirming the version level of a product currently running on a system into an AI assistant, making them easier for your Z users to use.

Additionally, your IBM Z professionals can accelerate time to value by using prebuilt skills to construct sophisticated automations and skill flows for specific use cases more quickly.

Streamlined architecture for easier deployment and more economical use

IBM Watsonx Discovery, which was required in order to provide elastic search, is no longer mandatory for organizations. Alternatively, they can utilize the integrated OpenSearch feature, which combines semantic and keyword searches to provide access to the Z RAG. This update streamlines the deployment process and greatly reduces the cost of owning IBM Watsonx Assistant for Z in addition to improving response quality.
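One common way to combine a keyword ranking with a semantic ranking is reciprocal rank fusion (RRF). The sketch below illustrates that general technique only; it is not a description of how OpenSearch or IBM Watsonx Assistant for Z merges results, and the document ids and the constant `k=60` are assumptions.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document ids into one list using RRF scores."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            # Each list contributes 1 / (k + rank); higher ranks contribute more.
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda d: scores[d], reverse=True)

keyword_ranking = ["jcl-guide", "ptf-howto", "racf-intro"]    # hypothetical keyword hits
semantic_ranking = ["ptf-howto", "smpe-notes", "jcl-guide"]   # hypothetical semantic hits

fused = reciprocal_rank_fusion([keyword_ranking, semantic_ranking])
print(fused)  # ['ptf-howto', 'jcl-guide', 'smpe-notes', 'racf-intro']
```

Documents that appear near the top of both lists rise to the top of the fused list, which is the intuition behind pairing the two search styles.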

Use IBM Watsonx Assistant for Z to get started

By encoding information into a reliable set of automations, IBM Watsonx Assistant for Z streamlines the execution of repetitive operations and provides your Z users with accurate and current answers to their Z questions.

Read more on govindhtech.com

#IBM#WatsonxAssistant#ZV2Document#IBMZ#IngestionFeature#artificialintelligence#AI#RAG#WatsonxAssistantZ#retrievalaugmentedgeneration#IBMWatsonx#automations#technology#technews#news#govindhtech

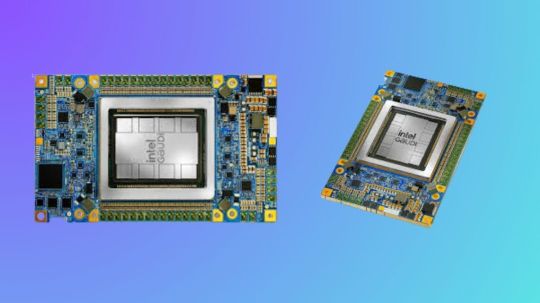

Use Intel Gaudi-3 Accelerators To Increase Your AI Skills

Boost Your Knowledge of AI with Intel Gaudi-3 Accelerators

Intel Gaudi AI accelerators are intended to improve the efficiency and performance of deep learning workloads, particularly large language models (LLMs) and generative artificial intelligence (AI). Gaudi processors provide efficient solutions for demanding AI applications, including large-scale model training and inference, and are positioned as a more affordable option than typical NVIDIA GPUs. Because Intel’s Gaudi architecture is specifically designed to accommodate the increasing computing demands of generative AI applications, businesses looking to implement scalable AI solutions will find it to be a highly competitive option. The main technological characteristics, software integration, and upcoming developments of the Gaudi AI accelerators are all covered in this webinar.

Intel Gaudi AI Accelerators Overview

Highly resource-intensive generative AI applications, such as LLM training and inference, are the focus of the Gaudi AI accelerator. While Intel Gaudi-3, which is anticipated to be released between 2024 and 2025, promises even more breakthroughs, Gaudi 2, the second-generation accelerator, enables a variety of deep learning enhancements.

Intel Gaudi 2

The main attributes of Gaudi 2 consist of:

Matrix Multiplication Engine: Hardware specifically designed to process tensors efficiently.

For AI tasks, 24 Tensor Processor Cores offer high throughput.

Larger model and batch sizes are made possible for better performance by the 96 GB of on-board HBM2e memory.

24 on-chip 100 GbE ports offer low latency and high bandwidth communication, making it possible to scale applications over many accelerators.

7nm Process Technology: For deep learning tasks, the 7nm architecture guarantees excellent performance and power efficiency.

These characteristics, particularly the combination of integrated networking and high memory bandwidth, make Gaudi 2 an excellent choice for scalable AI activities like multi-node training of big models. With its specialized on-chip networking, Gaudi’s innovative design does away with the requirement for external network controllers, greatly cutting latency in comparison to competing systems.
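A rough back-of-the-envelope calculation shows what the memory and networking figures above imply. This is a sketch under simplifying assumptions: 1 GB is taken as 10^9 bytes, only model weights are counted (activations, optimizer state, and caches are ignored), and the port figure is the raw line rate rather than achievable throughput.

```python
HBM_GB = 96        # on-board HBM2e capacity, as listed for Gaudi 2
PORTS = 24         # on-chip Ethernet ports
PORT_GBPS = 100    # line rate per port, in gigabits per second

def max_params_billion(memory_gb, bytes_per_param):
    """Upper bound on parameters (in billions) whose weights alone fit in memory."""
    # GB divided by bytes per parameter gives billions of parameters.
    return memory_gb / bytes_per_param

bf16_params = max_params_billion(HBM_GB, 2)   # 2 bytes per parameter
fp32_params = max_params_billion(HBM_GB, 4)   # 4 bytes per parameter

aggregate_gbps = PORTS * PORT_GBPS            # total line rate in Gb/s
aggregate_gbytes = aggregate_gbps / 8         # same figure in GB/s

print(bf16_params, fp32_params)               # 48.0 24.0
print(aggregate_gbps, aggregate_gbytes)       # 2400 300.0
```

Training needs considerably more memory per parameter than these weight-only bounds suggest, which is one reason the integrated networking for multi-accelerator scaling matters.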

Intel Gaudi PyTorch

Software Environment and Stack

With its extensive software package, Intel’s Gaudi platform is designed to interact easily with well-known AI frameworks like PyTorch. There are various essential components that make up this software stack:

Graph Compiler and Runtime: Compiles deep learning models into executable graphs that are tailored for the Gaudi hardware.

Kernel Libraries: Provide pre-optimized implementations of deep learning operations, reducing the need for manual optimization.

PyTorch Bridge: Lets PyTorch models run on Gaudi accelerators with minimal code modification.

Complete Docker Support: By using pre-configured Docker images, users may quickly deploy models, which simplifies the environment setup process.

With a GPU migration toolset, Intel also offers comprehensive support for models coming from other platforms, like NVIDIA GPUs. With the use of this tool, model code can be automatically adjusted to work with Gaudi hardware, enabling developers to make the switch without having to completely rebuild their current infrastructure.

Open Platforms for Enterprise AI

Use Cases of Generative AI and Open Platforms for Enterprise AI

The Open Platform for Enterprise AI (OPEA) introduction is one of the webinar’s main highlights. “Enable businesses to develop and implement GenAI solutions powered by an open ecosystem that delivers on security, safety, scalability, cost efficiency, and agility” is the stated mission of OPEA. It is completely open source with open governance, and it was introduced in May 2024 under the Linux Foundation AI and Data umbrella.

It has attracted more than 40 industry partners and has members from system integrators, hardware manufacturers, software developers, and end users on its technical steering committee. With OPEA, businesses can create and implement scalable AI solutions in a variety of fields, ranging from chatbots and question-answering systems to more intricate multimodal models. The platform makes use of Gaudi’s hardware improvements to cut costs while improving performance. Among the important use cases are:

Visual Q&A: This use case relies on the LLaVA model for vision-based reasoning to comprehend and respond to questions based on image input.

Large Language and Vision Assistant, or LLaVA, is a multimodal AI model that combines language and vision to carry out tasks including visual comprehension and reasoning. In essence, it aims to combine the advantages of vision models with LLMs to provide answers to queries pertaining to visual content, such as photographs.