#pathminder

Text

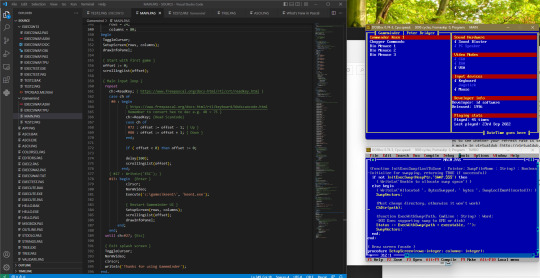

I'm proud to reveal a first peek at GameMinder.

On my retro PCs I typically arrange my games into directories for specific genres (e.g. action, shooter, strategy); however, it can still be a bit fiddly scrolling through my games using various DOS commands.

Also, when I come to launch a game, I often find that I'd not yet got it configured correctly or that there was a slight issue, for example the sound effects not working.

All of these minor irritations got me thinking about creating a DOS-based games launcher that would sidestep these issues by providing a lightweight UI to browse my games and view information about them.

After a little research I decided to use Turbo Pascal 7 to create my launcher, combining VSCode as a more comfortable editor with DOSBox for compiling and testing in an emulated DOS environment.

I can imagine that writing something like this on an actual retro PC in the Turbo Pascal IDE would be much more of a challenge! We've certainly been spoilt by modern intuitive IDEs, large screens and other refinements.

One of the most complex challenges so far was working out how to launch a game without the launcher itself taking up too much memory. Thankfully, other skilled Pascal developers have already solved this by writing the running program out to EMS or disk and leaving only a 2K stub in conventional RAM to manage swapping it out and back in around the launched executable.

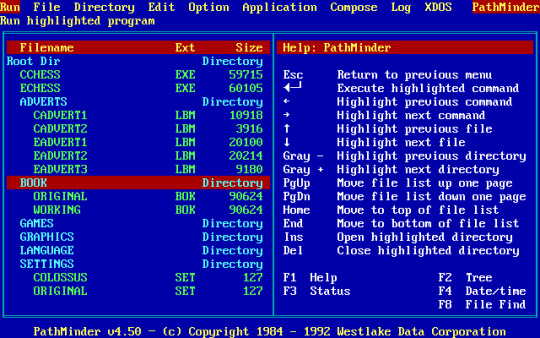

The UI is modelled on an old DOS utility used to navigate, edit and execute files called PathMinder.

I first came across this on my Dad's XT PC he used at home for work. When I got a 286 EGA PC a few years later, I carried on using and enjoying it.

I know that XTree seemed to be a more popular utility that performed a similar function; however, I always preferred PathMinder. To me it was the superior underdog. So I'm creating GameMinder as a kind of tribute.

What's left to do

I'm currently struggling with understanding how to work with arrays of records in Pascal. Unfortunately it seems to be a lot more fiddly than in the modern languages that I'm used to!

Once that's out of the way, I need to implement scanning of the file system to find the files in each game directory that will hold information about that game. These files will need to be maintained manually and will contain simple text metadata about each game.
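The scan described above could look roughly like the following Python sketch (GameMinder itself is being written in Turbo Pascal 7; Python is used here only to illustrate the idea). It assumes a two-level layout of genre directories containing game directories, and a hypothetical metadata file named `GAMEINFO.TXT` holding simple `Key: Value` lines; neither the file name nor the format comes from the post.

```python
from pathlib import Path

# Hypothetical metadata file name; the post does not say what the files are called.
META_FILENAME = "GAMEINFO.TXT"


def parse_metadata(text: str) -> dict[str, str]:
    """Parse 'Key: Value' lines, skipping blank lines, ';' comments and junk."""
    info: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(";") or ":" not in line:
            continue
        key, value = line.split(":", 1)
        info[key.strip().lower()] = value.strip()
    return info


def scan_games(root: Path) -> dict[str, dict[str, str]]:
    """Walk ROOT/<genre>/<game>/ and collect each game's parsed metadata."""
    games: dict[str, dict[str, str]] = {}
    for genre_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        for game_dir in sorted(p for p in genre_dir.iterdir() if p.is_dir()):
            meta_file = game_dir / META_FILENAME
            if not meta_file.is_file():
                continue  # game not documented yet; a launcher could flag it
            # DOS-era text files are usually in a code page such as 437, not UTF-8.
            info = parse_metadata(meta_file.read_text(encoding="cp437"))
            info.setdefault("genre", genre_dir.name)
            games[game_dir.name] = info
    return games
```

Keeping the format to plain `Key: Value` lines means the files stay easy to edit by hand, which matters if they are maintained manually.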

With that in place it'll really just be continued refinement of the UI to offer features such as:

Ordering of games (Name, release year, most popular)

Playtime statistics (How many times played each game, when last played)

Help (List keyboard commands)

Editor (Setup metadata for each game)
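A rough Python analogue of an array of records, supporting the ordering and playtime statistics listed above, might look like this. The `Game` fields, the order names and the helper functions are hypothetical choices for illustration, not GameMinder's actual design.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Game:
    """One launcher entry, playing the role of a Pascal record."""
    name: str
    year: int
    times_played: int = 0
    last_played: Optional[date] = None


def record_play(game: Game, when: date) -> None:
    """Update the playtime statistics each time a game is launched."""
    game.times_played += 1
    game.last_played = when


def sort_games(games: list[Game], order: str) -> list[Game]:
    """Return a new list ordered by 'name', 'year' or 'popular' (most played first)."""
    if order == "name":
        return sorted(games, key=lambda g: g.name.lower())
    if order == "year":
        return sorted(games, key=lambda g: (g.year, g.name.lower()))
    if order == "popular":
        return sorted(games, key=lambda g: (-g.times_played, g.name.lower()))
    raise ValueError(f"unknown order: {order!r}")
```

In Turbo Pascal the same idea would typically be an `array` of a `record` type sorted by a hand-written routine; Python's `sorted` with a key function stands in for that here.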

I'll also make the full source code available so that others can extend, improve or just learn from what I've done.

Text

Unlock the Potential of AI with Essential Tools and Symbolism Texta ai Blog Writer

Symbolic Reasoning Symbolic AI and Machine Learning Pathmind

As long as there is access to data, the connectionist approach does not require constant human coding and supervision. Symbolism and connectionism in artificial intelligence: the difference. The influence of AI at the individual and industrial levels is growing at a rapid pace. Despite the increasing ubiquity of artificial intelligence,…

Photo

From Werewolf by Night Vol. 3 #004

Art by Scot Eaton, Scott Hanna and Miroslav Mrva

Written by Taboo and B. Earl

#werewolf by night#jake gomez#hadley wagner#mother#eve makowski#molly#officer schilling#pathmind#dr. brick#red wolf#jj#lady hedge#whiptail#mr. gnoll#granny rora#cyberwolf#nails#marvel#comics#marvel comics

Note

So why do themlets exist among moonshadow elves if clothing isn't gendered? And how come the only two moon themlets we know happen to be ghosted by the community?

Themlets? What makes you think all nonbinary Moonshadows are short? *briefly considers a measuring tape* Ah, perhaps you're just tall, like me.

I've never thought too much about it, love, but I assume - and feel free to correct me if I'm wrong here - but I assume that nonbinary people exist because they wish to exist, and so that everyone may bask in their amazing presence. I'm certainly blessed by them.

*thoughtful craftsman frown, studies hammer in my hand* Swirlies don't have gender, you know. Doesn't matter how decorative I make the outside of this hammer. It's still a hammer. *Zen smile, lightly taps worktable* See? It was a hammer before I decorated it, and it'll still be a hammer if I file them all off. Ah, please don't ask me to do that, though! My poor swirlies... so pretty... *smooches hammer reassuringly* Don't worry, baby, I wasn't serious.

As for your other question, I'm sure I don't know who you mean. No one has been ghosted from the Silvergrove since Rayla. I've threatened to cry in public if anyone so much as breathes of it, and that terrifies even the hardiest of assassins. Likely because they fear the ghost of Runaan will crack open a fissure from the beyond and swoop back in to avenge me.

Just because you haven't met more nonbinary Moonshadows doesn't mean I haven't! The Silvergrove ambassador to Hollow Wood uses they/them, and so does my hairstylist, and the village eggkeeper, and the pathminder... two of Rayla's former teachers - they married a pair of sisters, it's entirely adorable... literally everyone in Basket End... Oop, I'm already out of fingers, but you get the idea, yes?

If you wish there were more enbies in the Moonshadow Forest, love, then your wish is granted, because they've been there all along.

#ask ethari#just moonshadow things#just gender things#binary is just code#and nonbinary is just cracking it#all elves are queer let them vibe#eljaal get back here you owe me a bushel of star plums#i have never forgotten and i never will i want my plums

Text

Digital twins save the New Year

Artificial Intelligence Involved in Solving the Global Logistics Crisis

News of global supply chain problems has been around for months - that Christmas gifts may not reach customers on time, dozens of container ships queuing in ports, and retailers trying to find alternative shipping methods. As Joseph Marc Blumenthal writes, one of the ways to cope with this problem may be the proliferation of so-called digital twins.

The technology itself has been in use for several years. A digital twin is a virtual model of a real object, based on artificial intelligence, that helps optimize how it works. For the digital twin to reflect reality properly, it must receive a huge amount of data from its real-world counterpart, collected using various sensors, cameras and other data sources.

With the help of such systems, it is possible to simulate situations in production (or in logistics), including possible disruptions. AI can also provide guidance on how to deal with these disruptions.

Until now, however, digital twins have usually been created for limited objects, ranging from a single device to a group of enterprises.

Now we are talking about digital twins of supply chain systems as a whole - modeling an extremely complex system with numerous suppliers, complex transport networks, etc.

According to Hans Thalbauer, managing director of Google's supply chain and logistics division, the main problem for logistics companies is the inability to predict events that may affect supply chains. “Anyone in the supply chain business will tell you that they lack the breadth of vision they need to make decisions,” says Thalbauer.

It is this breadth of vision that digital twins can provide. If such an AI-based digital twin receives enough data from its physical counterpart, it can replay potential failures over and over again and find the best option through trial and error.

The data a digital twin needs for reliable forecasting is highly varied: information about the company itself, its suppliers, their stocks and planned delivery dates, and data on consumer behavior based on financial forecasts and market research. It also needs information about the world as a whole, for example the likelihood of natural disasters and the geopolitical situation in different regions.

For example, it can test the assumption that Taiwan will experience a drought and that water shortages will lead to a reduction in semiconductor production. The digital twin will estimate the likelihood of such an event, track its impact on different parts of the supply chain, and suggest options for at least softening the blow. Even with AI, such analysis remains a very difficult task.

For example, the automaker Ford has more than 50 factories around the world, which use 35 billion parts a year to produce 6 million cars. These parts come from 1,400 suppliers at 4,400 factories, and in some cases further supply chains come into play. Stress testing such a system requires checking all of its elements and exploring different options, such as what to do in case of a failure at a particular plant or at a particular supplier.
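A stress test of this kind can be sketched as knocking out one supplier at a time and checking which products can no longer be built. This toy Python version is only an illustration: the products, parts, supplier names and the rule that every part is required are made-up assumptions, and a real digital twin would also model quantities, lead times and many tiers of suppliers.

```python
# Toy data, entirely made up: each product needs every listed part,
# and each part can be bought from any of its listed suppliers.
PARTS_FOR_PRODUCT: dict[str, list[str]] = {
    "sedan": ["chip", "seat", "tyre"],
    "truck": ["chip", "tyre"],
    "van": ["seat", "tyre"],
}
SUPPLIERS_FOR_PART: dict[str, list[str]] = {
    "chip": ["supplier_a"],
    "seat": ["supplier_b", "supplier_c"],
    "tyre": ["supplier_c"],
}


def buildable(products: dict[str, list[str]],
              suppliers_for_part: dict[str, list[str]],
              failed: set[str]) -> set[str]:
    """Products for which every part still has at least one working supplier."""
    return {
        product
        for product, parts in products.items()
        if all(any(s not in failed for s in suppliers_for_part[part]) for part in parts)
    }


def stress_test(products: dict[str, list[str]],
                suppliers_for_part: dict[str, list[str]]) -> dict[str, list[str]]:
    """Knock out each supplier in turn and list the products that are lost."""
    suppliers = sorted({s for options in suppliers_for_part.values() for s in options})
    baseline = buildable(products, suppliers_for_part, failed=set())
    return {
        supplier: sorted(baseline - buildable(products, suppliers_for_part, failed={supplier}))
        for supplier in suppliers
    }
```

On this toy data, losing `supplier_b` costs nothing because `supplier_c` also makes seats, while `supplier_c` is the only source of tyres and takes every product down with it: exactly the kind of single point of failure such a test is meant to expose.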

Amazon is already using digital twins for supply chains. Such technologies are being developed by Google, FedEx and DHL.

There are also companies specializing in digital twins for logistics systems, such as Pathmind or Deliverr.

Pathmind's digital twins work with existing supply chain management tools and data from a particular company. They use this data to model various problems in the system and options for solving problems. According to Pathmind founder Chris Nicholson, the resulting synthetic data - that is, not directly obtained, but generated by AI based on this direct data - helps to predict a variety of scenarios, such as a pandemic, and learn how to minimize their damage to supply chains.

“This is the answer to the question of why AI is smart: it just lives longer than we do in all these different worlds, many of which never existed,” he says.

As David Simchi-Levy, head of the data analysis laboratory at the Massachusetts Institute of Technology, notes, one of the important goals of logistics companies with the onset of the pandemic and the crisis was to increase the resiliency of the supply chain, that is, the ability of the system to remain stable and operable in the event of failure of some of its elements. Companies are willing to invest in this, but they want to maintain a balance by strengthening the supply chain enough without spending too much money, and from this point of view digital twins can also help.

“We are seeing more and more companies stress testing their supply chains using digital twins,” says Professor Simchi-Levy.

At the same time, experts admit that technology alone will not help here.

“Technology will not solve these problems. It won't help ships carry more containers. But digital twins will help us detect problems before they happen,” says Professor Simchi-Levy.

Some elements of supply chains also lag behind in digitizing their data. “Many of the world's ports do business on paper; you are very lucky if they use PDF or email. And these are the big operators, not the New Hampshire candle manufacturer. Without digitalization, AI will not be able to work,” says Mr Nicholson.

Text

Embodied AI, superintelligence and the master algorithm

Chris Nicholson

Contributor

Chris Nicholson is the founder and CEO of Pathmind, a company applying deep reinforcement learning to industrial operations and supply chains.

Superintelligence, roughly defined as an AI algorithm that can solve all problems better than people,…

Text

Deep Learning Market Size By Manufacturer Growth Analysis, Industry Share, Business Growth and Trends

According to the latest report by IMARC Group, titled “Deep Learning Market: Global Industry Trends, Share, Size, Growth, Opportunity and Forecast 2021-2026,” the global deep learning market exhibited robust growth during 2015-2020. Looking forward, IMARC Group expects the global deep learning market to grow at a CAGR of around 40% during 2021-2026. Deep learning is a subset of machine learning (ML) in the field of artificial intelligence (AI), which imitates the workings of the human brain for processing big data and creating patterns. Also known as a deep neural network, it plays a vital role in data science, which includes statistics and predictive modeling. As a result, deep learning is widely employed in different industries, such as media, finance, medical, aerospace, defense and advertising, across the globe. For instance, it is used in driverless cars for recognizing a stop sign or distinguishing pedestrians from lampposts. Besides this, it assists in controlling consumer devices like tablets, smartphones, televisions and hands-free speakers.
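As a rough worked example of what the forecast implies, assuming the roughly 40% CAGR compounds over five years from 2021 to 2026 (the report's actual market values are not given here), with $V$ the market size and $r$ the annual growth rate:

```latex
\frac{V_{2026}}{V_{2021}} = (1 + r)^{5} \approx (1 + 0.40)^{5} = 1.4^{5} \approx 5.38
```

In other words, if the forecast holds, the market would end the period at about 5.4 times its 2021 size.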

As the novel coronavirus (COVID-19) crisis takes over the world, we are continuously tracking the changes in the markets, as well as in consumer behavior globally, and our estimates of the latest market trends and forecasts take the impact of this pandemic into account.

Request Free Sample Report: https://www.imarcgroup.com/deep-learning-market/requestsample

Global Deep Learning Market Trends:

The market is currently experiencing growth owing to the expanding applications of deep learning solutions in cybersecurity, database management and fraud detection systems. Additionally, they are employed in the healthcare sector to process medical images for disease diagnosis and offer virtual patient assistance, which in turn is strengthening the market growth. Apart from this, the burgeoning information technology (IT) sector, in confluence with the increasing trend of digitalization, is boosting the sales of deep learning solutions across the globe. Furthermore, the integration of these solutions with big data analytics and cloud computing is bolstering the market growth. Moreover, leading vendors in the industry are focusing on increasing research and development (R&D) activities to introduce improved hardware and software processing technologies for deep learning.

Request Customization and Browse the Full Report with TOC & List of Figures: https://www.imarcgroup.com/deep-learning-market

Competitive Landscape with Key Players:

Amazon Web Services (Amazon.com Inc.)

Google LLC

International Business Machines (IBM) Corporation

Intel Corporation

Micron Technology, Inc.

Microsoft Corporation

Nvidia Corporation

Qualcomm Incorporated

Samsung Electronics Co. Ltd.

Sensory Inc.

Pathmind, Inc.

Xilinx, Inc.

Breakup by Product Type:

Software

Services

Hardware

Breakup by Application:

Image Recognition

Signal Recognition

Data Mining

Others

Breakup by End-Use Industry:

Security

Manufacturing

Retail

Automotive

Healthcare

Agriculture

Others

Breakup by Architecture:

RNN

CNN

DBN

DSN

GRU

Market Breakup by Region:

North America (United States, Canada)

Europe (Germany, France, United Kingdom, Italy, Spain, Others)

Asia Pacific (China, Japan, India, Australia, Indonesia, Korea, Others)

Latin America (Brazil, Mexico, Others)

Middle East and Africa (United Arab Emirates, Saudi Arabia, Qatar, Iraq, Others)

Key Highlights of the Report:

Market Performance (2015-2020)

Market Outlook (2021-2026)

Porter’s Five Forces Analysis

Market Drivers and Success Factors

SWOT Analysis

Value Chain

Comprehensive Mapping of the Competitive Landscape

If you need specific information that is not currently within the scope of the report, we can provide it to you as a part of the customization.

Other Reports

United States Running Gear Market: https://www.imarcgroup.com/united-states-running-gear-market

Europe Rechargeable Battery Market: https://www.imarcgroup.com/europe-rechargeable-battery-market

United States Smart Windows Market: https://www.imarcgroup.com/united-states-smart-windows-market

Mobile Application Development Platform Market: https://www.imarcgroup.com/mobile-application-development-platform-market

Styrenic Block Copolymer Market: https://www.imarcgroup.com/styrenic-block-copolymer-market

Teeth Whitening Products Market: https://www.imarcgroup.com/teeth-whitening-products-market

About Us

IMARC Group is a leading market research company that offers management strategy and market research worldwide. We partner with clients in all sectors and regions to identify their highest-value opportunities, address their most critical challenges, and transform their businesses.

IMARC’s information products include major market, scientific, economic and technological developments for business leaders in pharmaceutical, industrial, and high technology organizations. Market forecasts and industry analysis for biotechnology, advanced materials, pharmaceuticals, food and beverage, travel and tourism, nanotechnology and novel processing methods are at the top of the company’s expertise.

Contact Us

IMARC Group

30 N Gould St, Ste R

Sheridan, WY (Wyoming) 82801 USA

Email: [email protected]

Tel No:(D) +91 120 433 0800

Americas: +1 631 791 1145 | Africa and Europe: +44-702-409-7331 | Asia: +91-120-433-0800

Link

Reinforcement learning in Fortune 1000 companies. RBC Capital Markets -- new trading platform called Aiden, JP Morgan Foreign Exchange (FX) pricing and trading algorithms, Starbucks -- increase engagement on its mobile app, Cisco -- improve customer-facing services, Nike -- build personalization models, Wayfair -- their entire stack of recommenders, Fortinet -- part of their core product offerings, GE -- automated building management systems, Dell -- build storage solutions that can automatically change in response to workloads, Pathmind -- large-scale simulations and optimizations for semiconductors, Google -- chip design, Synopsys -- optimizing chip design, Cadence -- increase throughput and coverage of a formal verification system, and QuantumBlack (part of McKinsey) -- design of the boat used by the winning team of the 2021 America’s Cup.

Text

Werewolf By Night #4 Review

Written By: Taboo, B. Earl

Art By: Scot Eaton, Scott Hanna

Colors By: Miroslav Mrva

Letters By: VC’s Joe Sabino

Cover Price: $3.99

Release Date: January 28th, 2021

Werewolf By Night #4 brings the current arc to a close. Jake is trapped in a specimen tube. JJ and Red Wolf are breaking into the lab to save him with the help of Pathmind. And Dr. Makowski is racing to finish the serum that will increase…

Text

Five Important Subsets of Artificial Intelligence – Analytics Insight

In simple terms, Artificial Intelligence is the ability of a machine or a computer device to imitate human intelligence (cognitive processes), learn from experience, adapt to new data and perform human-like tasks.

Artificial Intelligence carries out tasks intelligently, delivering greater accuracy, flexibility, and productivity for the entire system. Tech leaders are looking for ways to implement artificial intelligence technologies in their organizations to overcome obstacles and add value; for example, AI is firmly established in the banking and media industries. A wide range of methods falls within the field of artificial intelligence, such as linguistics, bias, vision, robotics, planning, natural language processing, decision science, etc. Let us look at some of the major subfields of AI in depth.

Machine Learning

ML is perhaps the most relevant subset of AI for the average enterprise today. As explained in The Executive’s Guide to Real-World AI, our recent research report conducted by Harvard Business Review Analytic Services, ML is a mature technology that has been around for quite a long time.

ML is a part of AI that enables computers to learn from data on their own and apply that learning without human intervention. When a solution is hidden in a huge data set, ML is the go-to approach. “ML excels at processing that data, extracting patterns from it in a fraction of the time a human would take and delivering otherwise unreachable insight,” says Ingo Mierswa, founder and president of the data science platform RapidMiner. ML powers risk analysis, fraud detection, and portfolio management in financial services; GPS-based predictions in travel; and targeted marketing campaigns, to list a few examples.

Neural Network

Combining cognitive science and machines to perform tasks, the neural network is a part of artificial intelligence that draws on neuroscience (the branch of biology concerned with the nerves and nervous system of the human brain). A neural network imitates the human brain, which contains an enormous number of neurons, by encoding brain-like neurons into a system or machine.

Together, neural networks and machine learning tackle numerous intricate tasks with ease, and many of these tasks can be automated. NLTK is the holy-grail library for NLP; master all its modules and you’ll quickly become a professional text analyzer. Other Python libraries include pandas, NumPy, TextBlob, matplotlib, and wordcloud.

Deep Learning

An explainer article by AI software company Pathmind offers a useful analogy: think of a set of Russian dolls nested within one another. “Deep learning is a subset of machine learning, and machine learning is a subset of AI, which is an umbrella term for any computer program that does something smart.”

Deep learning uses so-called neural networks, which “learn from processing the labeled data supplied during training and use this answer key to learn what characteristics of the input are needed to construct the correct output,” according to one explanation given by DeepAI. “Once a sufficient number of examples have been processed, the neural network can begin to process new, unseen inputs and successfully return accurate results.”
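The idea of learning from labeled training data with an "answer key" can be illustrated with the smallest possible case: a single artificial neuron (a perceptron) trained on a labeled toy data set. This Python sketch is not deep learning, which stacks many layers of such units and trains them with backpropagation; the data set (logical AND), the learning rate and the epoch count are arbitrary assumptions.

```python
def step(weights: list[float], bias: float, x: tuple[int, ...]) -> int:
    """Fire (1) if the weighted sum of inputs plus bias is positive, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(samples: list[tuple[int, ...]], labels: list[int],
                     epochs: int = 20, lr: float = 1.0) -> tuple[list[float], float]:
    """Perceptron learning rule: adjust weights whenever a labeled example is misclassified."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - step(weights, bias, x)  # the label acts as the answer key
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Toy labeled data set: the logical AND of two inputs.
SAMPLES = [(0, 0), (0, 1), (1, 0), (1, 1)]
LABELS = [0, 0, 0, 1]
weights, bias = train_perceptron(SAMPLES, LABELS)
```

After training, `step(weights, bias, x)` reproduces the label for every input, including those it got wrong on the first passes. A single neuron can only learn linearly separable patterns like this one, which is one reason deep networks use many layers.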

Deep learning powers product and content recommendations for Amazon and Netflix. It works behind the scenes of Google’s voice- and image-recognition algorithms. Its ability to analyze large amounts of high-dimensional data makes deep learning especially well suited to supercharging preventive maintenance systems.

Robotics

This has emerged as a very hot field of artificial intelligence. This fascinating area of research and development focuses mainly on designing and building robots. Robotics is an interdisciplinary field of science and engineering that combines mechanical engineering, electrical engineering, computer science, and many other disciplines. It covers the design, manufacture, operation, and use of robots, and deals with computer systems for their control, intelligent behavior, and information processing.

Robots are often deployed to carry out tasks that may be difficult for people to perform consistently. Major robotics applications include assembly lines for automobile manufacturing and NASA’s use of robots to move large objects in space. AI researchers are also developing robots that use machine learning to interact at a social level.

Computer Vision

Have you ever tried learning another language by labeling the items in your home with their names in your native language and the translated words? It seems to be an effective vocabulary builder because you see the words again and again. The same is true of computers powered by computer vision. They learn by labeling or classifying the various objects they come across and interpreting their meaning, but at a much faster pace than people (like the robots in science-fiction movies).

The OpenCV library enables image processing by applying mathematical operations to images. Remember that elective subject from your engineering days called “Fuzzy Logic”? That approach is used in image processing, making it much easier for computer vision specialists to fuzzify readings that can’t be placed in a crisp Yes/No or True/False category. OpenTLD is used for video tracking, which is the process of locating a moving object (or objects) in a camera’s video stream.
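The fuzzy-logic idea of a reading belonging partly to several categories, rather than to one crisp Yes/No class, can be sketched with simple membership functions. In this Python example the category names (`dark`, `medium`, `bright`) and the 0-255 breakpoints are illustrative assumptions, not taken from OpenCV or any particular library.

```python
def triangular(x: float, left: float, peak: float, right: float) -> float:
    """Membership degree (0.0 to 1.0) of x in a triangular fuzzy set."""
    if x == peak:
        return 1.0
    if x <= left or x >= right:
        return 0.0
    if x < peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def fuzzify_brightness(value: float) -> dict[str, float]:
    """Describe a 0-255 pixel intensity by degrees of membership, not one crisp label."""
    return {
        "dark": max(0.0, min(1.0, (128 - value) / 128)),    # fully dark at 0, zero from 128
        "medium": triangular(value, 0, 128, 255),            # peaks at mid-grey
        "bright": max(0.0, min(1.0, (value - 128) / 127)),   # starts at 128, full at 255
    }
```

For instance, an intensity of 64 comes out as half `dark` and half `medium`, instead of being forced into a single class.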

The post Five Important Subsets of Artificial Intelligence – Analytics Insight appeared first on abangtech.

from abangtech https://abangtech.com/five-important-subsets-of-artificial-intelligence-analytics-insight/

Photo

Simulation and Automated Deep Learning AnyLogic AI - https://www.anylog... #ai #androiddevelopment #angular #anylogic #arashmahdavi #automateddeeplearning #automl #c #css #dataanalysis #datascience #deeplearning #development #docker #drl #eduardogonzalez #iosdevelopment #java #javascript #machinelearning #node.js #pathmind #python #react #simulation #skymind #unity #webdevelopment #webinar

Text

5 ways companies adapt to the lack of data scientists

Where are all the data scientists? Coping with the data scientist shortage is a struggle for many enterprises. Here are five ways to manage the shortage effectively.

As enterprises establish data-centric cultures for decision-making and planning, data scientists continue to grow in importance to businesses around the world. But organizations can't hire data scientists fast enough, as the field of qualified candidates remains highly constrained.

In order to cope with this data scientist shortage, enterprises are taking a variety of approaches to get as much as they can from the few data professionals they can find and retain.

Automation

A lot of the work typically done by data scientists is focused on data management and operational tasks like identifying data sources, merging data sets and validating data quality. These tasks are not the high-value work that data scientists are generally hired to do. That's changing as more automation efforts come into the enterprise.
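Merging data sets and validating data quality are exactly the kind of routine steps such automation targets. The following Python sketch is a hypothetical illustration (the record layouts and the `customer_id` key are assumptions, not any vendor's product) of joining two record sets while collecting simple quality problems such as duplicate keys, missing keys and orphaned records, rather than leaving a person to spot them by hand.

```python
from typing import Any

Record = dict[str, Any]


def merge_and_validate(customers: list[Record], orders: list[Record],
                       key: str = "customer_id") -> tuple[list[Record], list[str]]:
    """Join orders to customers on `key`, collecting data-quality problems instead of failing."""
    by_key: dict[Any, Record] = {}
    problems: list[str] = []
    for row in customers:
        k = row.get(key)
        if k is None:
            problems.append(f"customer record without {key}: {row}")
        elif k in by_key:
            problems.append(f"duplicate {key}: {k}")
        else:
            by_key[k] = row  # keep the first record seen for each key
    merged: list[Record] = []
    for order in orders:
        k = order.get(key)
        if k not in by_key:
            problems.append(f"order {order.get('order_id')} has unknown {key}: {k}")
            continue
        merged.append({**by_key[k], **order})
    return merged, problems
```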

"Model development, as well as model operationalization, can be significantly simplified by automation," said Ryohei Fujimaki, CEO and founder of DotData, an automated machine learning (ML) software company based in San Mateo, Calif. "New data science automation platforms will enable enterprises to deploy, operate and maintain data science processes in production with minimal efforts, helping companies maximize their AI and ML investments and their current data team."

According to Matthew Baird, founder and CTO of AtScale, an automated data engineering software company, some of the most promising developments in data science automation are in the area of autonomous data engineering, which automates data management and handling tasks.

"Such advances come in the form of 'just-in-time' data engineering -- automation that essentially acts like the perfect data engineering team if they had all that knowledge and complete input to data handling," Baird said, "including understanding how to best leverage underlying data structures of various databases, their unique network characteristics, data location, native security setup and policies."

Automation is the next step for advancements in business analytics.

Emphasizing self-service analytics

All this added data management and modeling automation is meant not only to get the most out of senior data scientists but also to democratize data resources for citizen data scientists. Scaling out data exploration with self-service analytics is another popular method of dealing with the data scientist shortage.

"The combination of autonomous data engineering advances and the increasing enablement of citizen analysts via self-service analytics are freeing valuable data science and data engineering resources to focus on higher-value activities such as building the next in machine learning or artificial intelligence models," Baird said.

Creating cross-functional teams

At the same time, enterprises are bumping into the limits of self-service analytics tooling and automation.

"Every tool that simplifies data science also limits the flexibility and options of the users, which means that certain complex tasks requiring customization are impossible," said Chris Nicholson, founder and CEO of Pathmind, a deep learning software company. Nicholson believes this reality has led many companies to explore new team strategies to get more out of their limited data experts.

"Many companies respond to the scarcity of data scientists by creating cross-functional data science teams that work with many business units across an organization or by hiring external consultants," Nicholson said. "Often what limits the value of data science in an organization is not the scarcity of data scientists themselves but the data that the organization gathers and how it lets people access and process that data."

Cross-functional teams can help companies get around fragmented data silos created by technical and internal political hurdles, which can be overcome when the right stakeholders work together on the same teams, Nicholson said.

This can also alleviate a common problem that looks like a data scientist shortage but is even more fundamental -- namely that too many data science projects look unmanageable because they have no clear path to business value.

"Too many projects are wild goose chases where you throw a bunch of data to the data scientists and say, 'See what you can make of this,'" said Sten Vesterli, principal consultant at More Than Code, an IT consulting firm based in Denmark. "We've seen more than 80% of all data science projects fail to move from the lab into production code, and companies need to allocate their data scientist to the most high-value business goal."

Defining data science roles better

One of the big issues impeding effective recruitment of data scientists is that enterprises are making the data science title and role far too broad, said Amy Hodler, director of graph analytics and AI programs at graph database company Neo4j.

"This makes it difficult to find the right fit for any organization and means new employees have a harder time understanding and aligning to business objectives," Hodler said.

She believes that in the coming year, many organizations will start diversifying their data science-related titles, creating subcategory job focuses and more tightly focused job requirements.

Internal training

Hodler also believes the market will start responding to the data scientist shortage this year with more internal training of existing employees who exhibit any potential or desire to pivot into data science. This is going to be a hit-or-miss tactic, as organizations will have to be strategic about the specific skills they nurture in their budding data scientists, she said.

"A long-view mindset is required to clearly evaluate and define required skill sets in a way that balances not just the tools/approaches that are hot today but also investing in core concepts that can be built upon for years ahead," Hodler said. "Pairing junior and senior data scientists will become crucial to evolving and retaining these employees for the next few years."

This article has been published from a wire agency feed without modifications to the text. Only the headline has been changed.

Text

Skymind Global Ventures launches $800M fund and London office to back AI startups

Skymind Global Ventures (SGV) appeared last year in Asia/US as a vehicle for the former founders of a YC-backed open-source AI platform to invest in companies that used the platform.

Today it announces the launch of an $800 million fund to back promising new AI companies and academic research. It is also opening a London office as an extension of its original Hong Kong base.

SGV Founder and CEO Shawn Tan said in a statement: “Having our operations in the UK capital is a strategic move for us. London has all the key factors to help us grow our business, such as access to diverse talent and investment, favorable regulation, and a strong and well-established technology hub. The city is also the AI growth capital of Europe with the added competitive advantage of boasting a global friendly time zone that overlaps with business hours in Asia, Europe and the rest of the world.”

SGV will use its London base to back research and development and generate business opportunities across Europe and Asia.

The company helps organizations launch their AI applications by providing supported access to “Eclipse Deeplearning4j”, an open-source AI tool.

The background is that Adam Gibson originally published the Deeplearning4j tool in late 2013. It later became the basis of a YC-backed startup called Skymind, which was cofounded to commercialize Deeplearning4j and later changed its name to Pathmind.

SGV is a wholly separate investment company. Adam Gibson has joined it as Vice President to run its software division, Konduit, which now commercializes the Deeplearning4j open-source tools, delivering and supporting Eclipse Deeplearning4j for clients as well as offering training development.

SGV says it plans to train up to 200 AI professionals for its operations in London and Europe.

In December last year, “Skymind AI Berhad”, the Southeast Asia arm of Skymind, and Huawei Technologies signed a Memorandum of Understanding to develop a Cloud and Artificial Intelligence Innovation Hub, starting with Malaysia and Indonesia in 2020.

Link

Article URL: https://announcement.pathmind.com/skymind-is-now-pathmind/

Comments URL: https://news.ycombinator.com/item?id=21817976

Text

5 artificial intelligence (AI) types, defined – The Enterprisers Project

Artificial intelligence (AI) is redefining the enterprise’s notions about extracting insight from data. Indeed, the vast majority of technology executives (91 percent) and 84 percent of the general public believe that AI is the “next technology revolution,” according to Edelman’s 2019 Artificial Intelligence (AI) Survey. PwC has predicted that AI could contribute $15.7 trillion to the global economy by 2030.

AI, in short, is a pretty big deal. However, it’s not a monolithic entity: There are multiple flavors of cognitive capabilities. Understanding the various types of AI, how they work, and where they might add value to the business is critical for both IT and line-of-business leaders.

Five important kinds of AI

Let’s break down five types of AI and sample uses for them:

Machine learning (ML)

ML is perhaps the most relevant subset of AI to the average enterprise today. As explained in the Executive’s guide to real-world AI, our recent research report conducted by Harvard Business Review Analytic Services, ML is a mature technology that has been around for years.

ML is a branch of AI that empowers computers to self-learn from data and apply that learning without human intervention. When facing a situation in which a solution is hidden in a large data set, machine learning is a go-to. “ML excels at processing that data, extracting patterns from it in a fraction of the time a human would take, and producing otherwise inaccessible insight,” says Ingo Mierswa, founder and president of the data science platform RapidMiner.

ML use cases

ML powers risk analysis, fraud detection, and portfolio management in financial services; GPS-based predictions in travel; and targeted marketing campaigns, to list a few examples.

ML systems can get better at completing tasks over time based on the labeled data they ingest, or they can power predictive models that improve a wide range of business-critical tasks, explains Wayne Butterfield, ISG director of cognitive automation and innovation.

Deep learning

An explainer article by AI software company Pathmind offers a useful analogy: Think of a set of Russian dolls nested within each other. “Deep learning is a subset of machine learning, and machine learning is a subset of AI, which is an umbrella term for any computer program that does something smart.”

In our plain-English primer on deep learning, we offer this basic definition: the branch of AI that tries to closely mimic the human mind. With deep learning, CompTIA explains, “computers analyze problems at multiple layers in an attempt to simulate how the human brain analyzes problems. Visual images, natural language, or other inputs can be parsed into various components in order to extract meaning and build context, improving the probability of the computer arriving at the correct conclusion.”

Deep learning uses so-called neural networks, which “learn from processing the labeled data supplied during training, and uses this answer key to learn what characteristics of the input are needed to construct the correct output,” according to one explanation provided by DeepAI. “Once a sufficient number of examples have been processed, the neural network can begin to process new, unseen inputs and successfully return accurate results.”

Deep learning use cases

Deep learning powers product and content recommendations for Amazon and Netflix. It works behind the scenes of Google’s voice- and image-recognition algorithms. Its capacity to analyze very large amounts of high-dimensional data makes deep learning ideally suited for supercharging preventive maintenance systems, as McKinsey pointed out in its Notes from the AI frontier: Applications and value of deep learning: “Layering in additional data, such as audio and image data, from other sensors – including relatively cheap ones such as microphones and cameras – neural networks can enhance and possibly replace more traditional methods. AI’s ability to predict failures and allow planned interventions can be used to reduce downtime and operating costs while improving production yield.”

Natural language processing (NLP)

When you take AI and focus it on human linguistics, you get NLP. SAS offers one of the clearest and most basic explanations of the term: “Natural language processing makes it possible for humans to talk to machines.” It’s the branch of AI that enables computers to understand, interpret, and manipulate human language.

NLP itself has a number of subsets, including natural language understanding (NLU), which refers to machine reading comprehension, and natural language generation (NLG), which can transform data into human words.

But, says ISG’s Butterfield, the premise is the same: “Understand language and sew something on the back of that understanding.”

NLP has roots in linguistics, where it emerged to enable computers to literally process natural language, explains Anil Vijayan, vice president at Everest Group. “Over the course of time, it evolved from rule-based to machine-learning infused approaches, thus overlapping with AI,” Vijayan says.

NLP might employ both machine learning and deep learning methodologies in combination with computational linguistics to effectively ingest and process unstructured speech and text datasets, says JP Baritugo, director at business transformation and outsourcing consultancy Pace Harmon.

NLP use cases

Natural language processing makes it possible for computers to extract key words and phrases, understand the intent of language, translate that to another language, or generate a response. “The enterprise literally runs through communication, either the written word or spoken conversation,” says Butterfield. “The ability to analyze this information and either find intent or insight will be absolutely critical to the enterprise of the future.”

Any area of the business where natural language is involved may be fodder for the deployment of NLP capabilities, says Vijayan. Think chatbots, social media feeds, emails, or complex documentation like contracts or claims forms.

Now let’s move on to the last two key types of AI: computer vision and explainable AI.

The post 5 artificial intelligence (AI) types, defined – The Enterprisers Project appeared first on abangtech.

from abangtech https://abangtech.com/5-artificial-intelligence-ai-types-defined-the-enterprisers-project/
