#postgres installation error

Explore tagged Tumblr posts

Text

Updating a Tiny Tiny RSS install behind a reverse proxy

Screenshot of my Tiny Tiny RSS install on May 7th 2024 after a long struggle with 502 errors. I had a hard time when trying to update my Tiny Tiny RSS instance running as Docker container behind Nginx as reverse proxy. I experienced a lot of nasty 502 errors because the container did not return proper data to Nginx. I fixed it in the following manner: First I deleted all the containers and images. I did it with docker rm -vf $(docker ps -aq) docker rmi -f $(docker images -aq) docker system prune -af Attention! This deletes all Docker images! Even those not related to Tiny Tiny RSS. No problem in my case. It only keeps the persistent volumes. If you want to keep other images you have to remove the Tiny Tiny RSS ones separately. The second issue is simple and not really one for me. The Tiny Tiny RSS docs still call Docker Compose with a hyphen: $ docker-compose version. This is not valid for up-to-date installs where the hyphen has to be omitted: $ docker compose version. The third and biggest issue is that the Git Tiny Tiny RSS repository for Docker Compose does not exist anymore. The files have to to be pulled from the master branch of the main repository https://git.tt-rss.org/fox/tt-rss.git/. The docker-compose.yml has to be changed afterwards since the one in the repository is for development purposes only. The PostgreSQL database is located in a persistent volume. It is not possible to install a newer PostgreSQL version over it. Therefore you have to edit the docker-compose.yml and change the database image image: postgres:15-alpine to image: postgres:12-alpine. And then the data in the PostgreSQL volume were owned by a user named 70. Change it to root. Now my Tiny Tiny RSS runs again as expected. Read the full article

0 notes

Text

Ichiran@home 2021: the ultimate guide

Recently I’ve been contacted by several people who wanted to use my Japanese text segmenter Ichiran in their own projects. This is not surprising since it’s vastly superior to Mecab and similar software, and is occassionally updated with new vocabulary unlike many other segmenters. Ichiran powers ichi.moe which is a very cool webapp that helped literally dozens of people learn Japanese.

A big obstacle towards the adoption of Ichiran is the fact that it’s written in Common Lisp and people who want to use it are often unfamiliar with this language. To fix this issue, I’m now providing a way to build Ichiran as a command line utility, which could then be called as a subprocess by scripts in other languages.

This is a master post how to get Ichiran installed and how to use it for people who don’t know any Common Lisp at all. I’m providing instructions for Linux (Ubuntu) and Windows, I haven’t tested whether it works on other operating systems but it probably should.

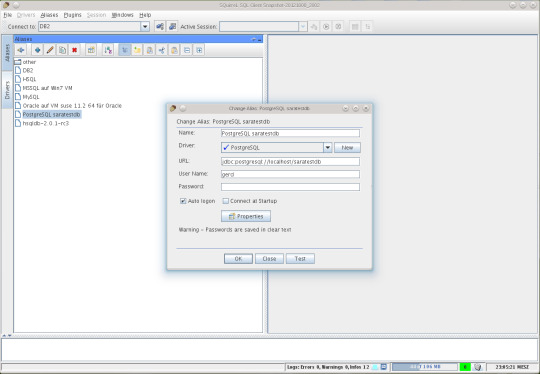

PostgreSQL

Ichiran uses a PostgreSQL database as a source for its vocabulary and other things. On Linux install postgresql using your preferred package manager. On Windows use the official installer. You should remember the password for the postgres user, or create a new user if you know how to do it.

Download the latest release of Ichiran database. On the release page there are commands needed to restore the dump. On Windows they don't really work, instead try to create database and restore the dump using pgAdmin (which is usually installed together with Postgres). Right-click on PostgreSQL/Databases/postgres and select "Query tool...". Paste the following into Query editor and hit the Execute button.

CREATE DATABASE [database_name] WITH TEMPLATE = template0 OWNER = postgres ENCODING = 'UTF8' LC_COLLATE = 'Japanese_Japan.932' LC_CTYPE = 'Japanese_Japan.932' TABLESPACE = pg_default CONNECTION LIMIT = -1;

Then refresh the Databases folder and you should see your new database. Right-click on it then select "Restore", then choose the file that you downloaded (it wants ".backup" extension by default so choose "Format: All files" if you can't find the file).

You might get a bunch of errors when restoring the dump saying that "user ichiran doesn't exist". Just ignore them.

SBCL

Ichiran uses SBCL to run its Common Lisp code. You can download Windows binaries for SBCL 2.0.0 from the official site, and on Linux you can use the package manager, or also use binaries from the official site although they might be incompatible with your operating system.

However you really want the latest version 2.1.0, especially on Windows for uh... reasons. There's a workaround for Windows 10 though, so if you don't mind turning on that option, you can stick with SBCL 2.0.0 really.

After installing some version of SBCL (SBCL requires SBCL to compile itself), download the source code of the latest version and let's get to business.

On Linux it should be easy, just run

sh make.sh --fancy sudo sh install.sh

in the source directory.

On Windows it's somewhat harder. Install MSYS2, then run "MSYS2 MinGW 64-bit".

pacman -S mingw-w64-x86_64-toolchain make # for paths in MSYS2 replace drive prefix C:/ by /c/ and so on cd [path_to_sbcl_source] export PATH="$PATH:[directory_where_sbcl.exe_is_currently]" # check that you can run sbcl from command line now # type (sb-ext:quit) to quit sbcl sh make.sh --fancy unset SBCL_HOME INSTALL_ROOT=/c/sbcl sh install.sh

Then edit Windows environment variables so that PATH contains c:\sbcl\bin and SBCL_HOME is c:\sbcl\lib\sbcl (replace c:\sbcl here and in INSTALL_ROOT with another directory if applicable). Check that you can run a normal Windows shell (cmd) and run sbcl from it.

Quicklisp

Quicklisp is a library manager for Common Lisp. You'll need it to install the dependencies of Ichiran. Download quicklisp.lisp from the official site and run the following command:

sbcl --load /path/to/quicklisp.lisp

In SBCL shell execute the following commands:

(quicklisp-quickstart:install) (ql:add-to-init-file) (sb-ext:quit)

This will ensure quicklisp is loaded every time SBCL starts.

Ichiran

Find the directory ~/quicklisp/local-projects (%USERPROFILE%\quicklisp\local-projects on Windows) and git clone Ichiran source code into it. It is possible to place it into an arbitrary directory, but that requires configuring ASDF, while ~/quicklisp/local-projects/ should work out of the box, as should ~/common-lisp/ but I'm not sure about Windows equivalent for this one.

Ichiran wouldn't load without settings.lisp file which you might notice is absent from the repository. Instead, there's a settings.lisp.template file. Copy settings.lisp.template to settings.lisp and edit the following values in settings.lisp:

*connection* this is the main database connection. It is a list of at least 4 elements: database name, database user (usually "postgres"), database password and database host ("localhost"). It can be followed by options like :port 5434 if the database is running on a non-standard port.

*connections* is an optional parameter, if you want to switch between several databases. You can probably ignore it.

*jmdict-data* this should be a path to these files from JMdict project. They contain descriptions of parts of speech etc.

ignore all the other parameters, they're only needed for creating the database from scratch

Run sbcl. You should now be able to load Ichiran with

(ql:quickload :ichiran)

On the first run, run the following command. It should also be run after downloading a new database dump and updating Ichiran code, as it fixes various issues with the original JMdict data.

(ichiran/mnt:add-errata)

Run the test suite with

(ichiran/test:run-all-tests)

If not all tests pass, you did something wrong! If none of the tests pass, check that you configured the database connection correctly. If all tests pass, you have a working installation of Ichiran. Congratulations!

Some commands that can be used in Ichiran:

(ichiran:romanize "一覧は最高だぞ" :with-info t) this is basically a text-only equivalent of ichi.moe, everyone's favorite webapp based on Ichiran.

(ichiran/dict:simple-segment "一覧は最高だぞ") returns a list of WORD-INFO objects which contain a lot of interesting data which is available through "accessor functions". For example (mapcar 'ichiran/dict:word-info-text (ichiran/dict:simple-segment "一覧は最高だぞ") will return a list of separate words in a sentence.

(ichiran/dict:dict-segment "一覧は最高だぞ" :limit 5) like simple-segment but returns top 5 segmentations.

(ichiran/dict:word-info-from-text "一覧") gets a WORD-INFO object for a specific word.

ichiran/dict:word-info-str converts a WORD-INFO object to a human-readable string.

ichiran/dict:word-info-gloss-json converts a WORD-INFO object into a "json" "object" containing dictionary information about a word, which is not really JSON but an equivalent Lisp representation of it. But, it can be converted into a real JSON string with jsown:to-json function. Putting it all together, the following code will convert the word 一覧 into a JSON string:

(jsown:to-json (ichiran/dict:word-info-json (ichiran/dict:word-info-from-text "一覧")))

Now, if you're not familiar with Common Lisp all this stuff might seem confusing. Which is where ichiran-cli comes in, a brand new Command Line Interface to Ichiran.

ichiran-cli

ichiran-cli is just a simple command-line application that can be called by scripts just like mecab and its ilk. The main difference is that it must be built by the user, who has already did the previous steps of the Ichiran installation process. It needs to access the postgres database and the connection settings from settings.lisp are currently "baked in" during the build. It also contains a cache of some database references, so modifying the database (i.e. updating to a newer database dump) without also rebuilding ichiran-cli is highly inadvisable.

The build process is very easy. Just run sbcl and execute the following commands:

(ql:quickload :ichiran/cli) (ichiran/cli:build)

sbcl should exit at this point, and you'll have a new ichiran-cli (ichiran-cli.exe on Windows) executable in ichiran source directory. If sbcl didn't exit, try deleting the old ichiran-cli and do it again, it seems that on Linux sbcl sometimes can't overwrite this file for some reason.

Use -h option to show how to use this tool. There will be more options in the future but at the time of this post, it prints out the following:

>ichiran-cli -h Command line interface for Ichiran Usage: ichiran-cli [-h|--help] [-e|--eval] [-i|--with-info] [-f|--full] [input] Available options: -h, --help print this help text -e, --eval evaluate arbitrary expression and print the result -i, --with-info print dictionary info -f, --full full split info (as JSON) By default calls ichiran:romanize, other options change this behavior

Here's the example usage of these switches

ichiran-cli "一覧は最高だぞ" just prints out the romanization

ichiran-cli -i "一覧は最高だぞ" - equivalent of ichiran:romanize :with-info t above

ichiran-cli -f "一覧は最高だぞ" - outputs the full result of segmentation as JSON. This is the one you'll probably want to use in scripts etc.

ichiran-cli -e "(+ 1 2 3)" - execute arbitrary Common Lisp code... yup that's right. Since this is a new feature, I don't know yet which commands people really want, so this option can be used to execute any command such as those listed in the previous section.

By the way, as I mentioned before, on Windows SBCL prior to 2.1.0 doesn't parse non-ascii command line arguments correctly. Which is why I had to include a section about building a newer version of SBCL. However if you use Windows 10, there's a workaround that avoids having to build SBCL 2.1.0. Open "Language Settings", find a link to "Administrative language settings", click on "Change system locale...", and turn on "Beta: Use Unicode UTF-8 for worldwide language support". Then reboot your computer. Voila, everything will work now. At least in regards to SBCL. I can't guarantee that other command line apps which use locales will work after that.

That's it for now, hope you enjoy playing around with Ichiran in this new year. よろしくおねがいします!

6 notes

·

View notes

Text

The data directory contains an old postmaster.pid file

PostgreSQL Connection Failure

I experienced this issue when my laptop crushed and after rebooting, PostgreSQL was unable to connect to the database. I attempted to start my server and this error dialog window appeared.

The Problem: Two instances of the same PostgreSQL server cannot run on the same data directory at the same time thanks to the postmaster.pid lock file. Follow the link for more information about what a postmaster.pid file is, otherwise let's move on to resolving our issues.

STEP 1:

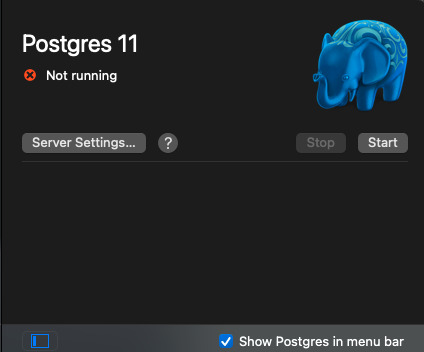

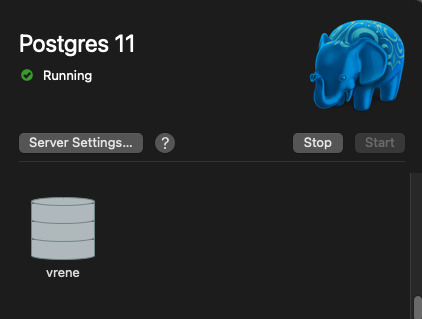

Click on the “OK” button to close the dialog window or open the Postgres.app desktop app if its not already open

STEP 2

Click on “Server Settings…” button

Click on Show button next to the Data Directory. This should open the data directory of your PostgreSQl installation.

STEP 3

Once you have located your postmaster.pid file. Delete it!

STEP 4

After deleting the file, the error message should change from "Stale postmaster.pid file" to "Not running" on the Postgres GUI app . Now just start your PostgreSQL server by clicking the "Start" button on the Postgres GUI app

Finally

0 notes

Text

Brew install postgres 12

BREW INSTALL POSTGRES 12 INSTALL

BREW INSTALL POSTGRES 12 SOFTWARE

Hopefully, you see some of your own Postgres situation in my particular path, and you can hop on somewhere and get to your own solution. If I was just getting started or I didn’t care about losing that data, I likely would have blown everything away and run rails db:prepare and been up and running with Postgres 13. I went this route as I really wanted to hold on to the data I had in my local DBs.

BREW INSTALL POSTGRES 12 INSTALL

I’m really unsure of what state the install is in, and it’s likely to trip me up in the future if I don’t handle it here while I have this context in my hed. I should remove it or otherwise plan on upgrading my app to it.

Postgres 13 is still installed on my machine.

With this, I could restart Rails and get my app up and running again. A quick start kicked the service off brew services start It’s important to note that even though I had linked brew to the old postgres version, when I was running brew commands, I still needed to reference the appropriate brew Postgres install, hence the Next Steps I began by checking which brew services were running with brew services list. Running Postgresįinally, I had to restart Postgres. Checking the version through the terminal confirmed this. After fumbling with a few commands and various Stack Overflow posts, I discovered brew link -overwrite This pointed the postgres command at the latest version. It was allowing both to be installed on my machine. Homebrew wasn’t installing the old version of Postgres over the existing 13.x. But checking the postgres version still returned the new Postgres install. brew install the old version my app was expecting onto my machine. Installing the older version of Postgres wasn’t too bad. I needed to install 12.x and then point homebrew at the install. The best way to do that would be to walk my local copy back to 12.x. I want to minimize the surface area and variables between known working versions of my software. I’m a believer in intentional upgrades and intentional changes.

Bring my install up to the latest, 13.x or.

Next, I check the version with postgres -version and I get back 13.x which is not the 12.x I expected. With a quick command in the terminal, which postgres, I see that it’s installed in /usr/local/bin which I know is where homebrew installs.

BREW INSTALL POSTGRES 12 SOFTWARE

In this case, I know that Postgres can be installed a number of different ways, and I want to verify how the software is installed. When I get stuck, I take a deep breath and gather up my assumptions. And which version of Postgres am I running?.Which install of Postgres am I running?.I then when through my typical debugging questions: I rushed through my typical fix of deleting the postmaster.pid which usually gets left behind when my machine restarts only to find that this can cause worse problems when done incorrectly. Here’s my particular breadcrumb trail.Īfter starting my local Rails server after the OSX upgrade, I was greeted with the dreaded error “connection to database failed: could not connect to server: No such file or directory”. After flailing and staring at countless Stack Overflow threads, I found my way out of the woods. This is pretty typical for me after OS updates the feedback loop between updates is too long for my memory. Traversing array in a loop with a loop variable and range is quite redundant it is only needed when the range is calculated and not the same as the array range otherwise for-in loop should be used.I recently ran into an issue with Postgres after upgrading to Big Sur. If a JSON key uses invalid JSONPath characters, then you can escape those characters using single quotes and brackets. each () is a generic iterator function for looping over object, arrays, and array-like objects. I believe jq does not provide a solution to this problem that is both elegant and intuitive. Created for … index("blue") is 0 and one wouldn't consider that a truthy value and might expect it to be excluded from the results. The return value from the comparer function basically identifies the sort order of subsequent array elements. The controls can be of several types: The. In the example above, the first expression, which is just an identifier, is applied to each element in the people array. Here's one way to create a JSON list from an array of hosts: hosts= (1. The array must be of a valid data type such as integer, character, or user-defined types. Every time the callback function is called it will return a value and store it in max. In this sense, typeof … JavaScript setAttribute () The setAttribute () method is used to set or add an attribute to a particular element and provides a value to it. price) | add' will take an array of JSON objects as input and return the sum of their "price" fields. Arrays in JSON are almost the same as arrays in JavaScript.

0 notes

Text

Chrome extension uuid generator postgres

Chrome extension uuid generator postgres generator#

d indicates the text input of the request body. Resizing a WebFOCUS Designer Esri map removes layer. An example request body is as follows: ' -H 'X-Auth-Token:MIISkAY***80T9wHQ=' -H 'Content-type: application/json' -X POST Error when migrating DB from SQL to Postgresql and running dbreplicate. rootaccounting-2 (master) sudo -u postgres psql -dbnameidempiere psql (11.8) Type 'help' for help. Where: PL/pgSQL function generateuuid() line 3 at RETURN This usually indicates that the uuid extension is missing from the database. Enter the request body in the text box below. You might need to add explicit type casts. Select raw and then JSON(application/json).

Chrome extension uuid generator postgres generator#

Use one of the UUID-OSSP generator function as th. Once UUID-OSSP is installed, declare a CREATE TABLE and UUID column. It can produce integers, longs, uuids, or strings, as shown in the following example. The algorithm used by the random() function generates pseudo-random values. setseed() sets the internal value for the generator. There is a UUID-OSSP server module to provide such functions, but it must be installed. LiveReload browser extensions are freely available for Chrome. PostgreSQL has two basic functions for generating random data: random() returns a random value with uniform distribution from the range 0.0, 1.0) (includes 0.0, but no 1.0). So you have to add a callback Bean to set your Id manually.Figure 3 Setting parameters on the Body tab page Answer: The PostgreSQL UUID type does not have a built-in sequence generator functions. Several sources ( here, here, here, here, here and here) indicate that there is no auto Id generation for R2DBC. I ditched the JPA approach - and note that we are using R2DBC, not JDBC, so the answer didn't work straight away. Currency conversion extension for Google Chrome and Edge browser that is based on the Chromium open-source project. Thanks to Sve Kamenska, with whose help I finally got it working eventually. The DDL for the table is like this: CREATE TABLE DOODAHS(id UUID not null, fieldA VARCHAR(10), fieldB VARCHAR(10)) NB the persistence is being handled by a class which looks like this: interface DoodahRepository extends CrudRepository Annotate class with Replace spring annotation with seen useful answers here, here and here but none have worked so far.use) or browsers extension such as Firefoxs HttpRequester, or Chromes Advanced. Create the UUID myself - results in Spring complaining that it can't find the row with that id. As an example, create a PyDev project called test-flask with a module.Neo4j: fixed actions menu for graph view tab. In PostgreSQL, there are a number of functions that generate UUID s: The uuid-ossp extension offers functions to generate UUID s. There are several standardized algorithms for that. Annotate the field with (in addition to existing spring Id) Cassandra: fixed generation of CQL script for only selected tables. A UUID (universally unique identifier) is a 128-bit number that is generated with an algorithm that effectively guarantees uniqueness.The class looks like this: class Doodah = strategy = false, unique = true) This extension allows to generate one or multiple Universal Unique Identifiers (UUID v4) with formatting options (with/out hyphens, with/out braces, lower/upper cased), and to copy rows of identifiers to the clipboard. This error indicates that it's expecting the ID column to be auto-populated (with some default value) when a row is inserted. This should be href="" rel="nofollow noreferrer">straightforward since hibernate and postgres have good support for UUIDs.Įach time I create a new instance and write it with save(), I get the following error: o.h.j.JdbcSQLIntegrit圜onstraintViolationException: NULL not allowed for column "ID" SQL statement: INSERT INTO DOODAHS (fieldA, fieldB) VALUES $1, $2). To protect user privacy, Google policies mandate that no data be passed to Google that Google could. The ID column of the table is a UUID, which I want to generate in code, not in the database. Avoid sending PII to Google when collecting Analytics data. How to view-source of a Chrome extension. I am trying to persist a simple class using Spring, with hibernate/JPA and a PostgreSQL database. Tags: uuid guid uuid-generator guid-generator generator time order rfc4122.

0 notes

Text

PgAdmin is an open-source and widely used frontend management tool for the PostgreSQL database system developed in Python language. It allows one to manage the PostgreSQL database from the web interface by providing all the required features. This release version PgAdmin 4 replaces the previous version PgAdmin 3 which was written in C++ language with support for PostgreSQL up to version 9.2. pgAdmin 4 comes with the following amazing features: A live SQL Query Tool with direct data editing A syntax-highlighting SQL editor Supportive error messages Helpful hints Has support for administrative queries A redesigned graphical interface Online help and information about using pgAdmin dialogs and tools. Responsive, context-sensitive behavior Auto-detection and support for objects discovered at run-time In this guide, we will systematically walk through how to install and use pgAdmin 4 on Rocky Linux 8 | AlmaLinux 8. Setup Pre-requisites Before we proceed, you will require to have PostgreSQL installed on your Rocky Linux 8 | AlmaLinux 8 with the aid of this guide Install PostgreSQL 14 on Rocky Linux 8|AlmaLinux 8|CentOS 8 You will also need a superuser account created. Login to the PostgreSQL shell. sudo -u postgres psql Now create a superuser to be used to connect and manage other users and databases. postgres-# CREATE ROLE admin WITH LOGIN SUPERUSER CREATEDB CREATEROLE PASSWORD 'Passw0rd'; postgres-# \q Install pgAdmin 4 on Rocky Linux 8 | AlmaLinux 8 Now with PostgreSQL installed, proceed and install PgAdmin 4 using the steps below. Step 1 – Add the PgAdmin4 Repository on Rocky Linux| AlmaLinux 8 We first need to add the pgAdmin YUM repository to our system to be able to install this latest pgAdmin version. Install the required package sudo dnf install yum-utils Then disable the PostgreSQL common repositories to allow us to grab the latest PgAdmin 4 packages from the PgAdmin repositories. sudo yum-config-manager --disable pgdg-common Now add the pgAdmin 4 repositories to our Rocky Linux| AlmaLinux 8 with the command: sudo rpm -i https://ftp.postgresql.org/pub/pgadmin/pgadmin4/yum/pgadmin4-redhat-repo-2-1.noarch.rpm Update your package index. sudo dnf update Step 2 – Install PgAdmin 4 on Rocky Linux |AlmaLinux 8 Now with the repositories added, installing pgAdmin 4 is as easy as robbing a child’s bank. Simply use the command below to install PgAdmin 4 on Rocky Linux |AlmaLinux 8. sudo dnf install pgadmin4 Dependency Tree: Dependencies resolved. ================================================================================ Package Arch Version Repo Size ================================================================================ Installing: pgadmin4 noarch 6.1-1.el8 pgAdmin4 6.2 k Installing dependencies: apr x86_64 1.6.3-11.el8.1 appstream 124 k apr-util x86_64 1.6.1-6.el8.1 appstream 104 k httpd x86_64 2.4.37-39.module+el8.4.0+655+f2bfd6ee.1 appstream 1.4 M httpd-filesystem noarch 2.4.37-39.module+el8.4.0+655+f2bfd6ee.1 appstream 38 k httpd-tools x86_64 2.4.37-39.module+el8.4.0+655+f2bfd6ee.1 appstream 105 k libatomic x86_64 8.4.1-1.el8 baseos 22 k mod_http2 x86_64 1.15.7-3.module+el8.4.0+553+7a69454b appstream 153 k pgadmin4-desktop x86_64 6.1-1.el8 pgAdmin4 87 M pgadmin4-server x86_64 6.1-1.el8 pgAdmin4 91 M pgadmin4-web noarch 6.1-1.el8 pgAdmin4 8.6 k python3-mod_wsgi x86_64 4.6.4-4.el8 appstream 2.5 M

rocky-logos-httpd noarch 84.5-8.el8 baseos 22 k Installing weak dependencies: apr-util-bdb x86_64 1.6.1-6.el8.1 appstream 23 k apr-util-openssl x86_64 1.6.1-6.el8.1 appstream 26 k Enabling module streams: httpd 2.4 Transaction Summary ================================================================================ Install 15 Packages Total download size: 182 M Installed size: 558 M Is this ok [y/N]: y Step 3 – Start the Apache webserver on Rocky Linux |AlmaLinux 8 To access the pgAdmin web UI, we need a web server, Apache has been installed automatically when installing pgAdmin. We, therefore, need to ensure that the Apache webserver is running on our system. sudo systemctl start httpd Enable Apache to run automatically on boot. sudo systemctl enable httpd Check the status of the service. $ sudo systemctl status httpd ● httpd.service - The Apache HTTP Server Loaded: loaded (/usr/lib/systemd/system/httpd.service; disabled; vendor preset: disabled) Active: active (running) since Thu 2021-11-04 04:01:12 EDT; 8s ago Docs: man:httpd.service(8) Main PID: 48450 (httpd) Status: "Started, listening on: port 80" Tasks: 241 (limit: 23532) Memory: 64.3M CGroup: /system.slice/httpd.service ├─48450 /usr/sbin/httpd -DFOREGROUND ├─48451 /usr/sbin/httpd -DFOREGROUND ├─48452 /usr/sbin/httpd -DFOREGROUND ├─48453 /usr/sbin/httpd -DFOREGROUND ├─48454 /usr/sbin/httpd -DFOREGROUND └─48455 /usr/sbin/httpd -DFOREGROUND Nov 04 04:01:12 localhost.localdomain systemd[1]: Starting The Apache HTTP Server... Nov 04 04:01:12 localhost.localdomain httpd[48450]: [Thu Nov 04 04:01:12.380306 2021] [so:warn] [pid 48450:tid 140690349939008] AH01574: module wsgi_> Nov 04 04:01:12 localhost.localdomain httpd[48450]: AH00558: httpd: Could not reliably determine the server's fully qualified domain name, using loca> Nov 04 04:01:12 localhost.localdomain systemd[1]: Started The Apache HTTP Server. Nov 04 04:01:12 localhost.localdomain httpd[48450]: Server configured, listening on: port 80 Step 4 – Configure the PgAdmin 4 Web Service PgAdmin has a script that creates a new user, sets up the PgAdmin web UI, and also manages the Apache webserver. The script is located at /usr/pgadmin4/bin/setup-web.sh and is executed as below: sudo /usr/pgadmin4/bin/setup-web.sh Now you will be prompted to provide details that will, later on, be used to login to the pgAdmin 4 web UI. Proceed as below. Setting up pgAdmin 4 in web mode on a Redhat based platform... Creating configuration database... NOTE: Configuring authentication for SERVER mode. Enter the email address and password to use for the initial pgAdmin user account: Email address: [email protected] Password: Enter Password here Retype password: Re-enter Password here pgAdmin 4 - Application Initialisation ====================================== Creating storage and log directories... Configuring SELinux... The Apache web server is running and must be restarted for the pgAdmin 4 installation to complete. Continue (y/n)? y Apache successfully restarted. You can now start using pgAdmin 4 in web mode at http://127.0.0.1/pgadmin4 Set SELinux in permissive mode as below. sudo setenforce permissive You also need to modify your firewall rules to allow HTTP traffic. sudo firewall-cmd --permanent --add-service=http sudo firewall-cmd --reload Use PgAdmin 4 in Rocky Linux | AlmaLinux 8 Linux Now everything is set up, we can proceed to access the pgAdmin Web UI so that we can manage our PostgreSQL instance. Access the web interface using the URL http://server-ip/pgadmin4 Login with the credentials created in step 4 above. On successful login. you will be able to see this pgAdmin dashboard. As seen, no database is

connected to pgAdmin, and therefore, we need to add our PostgreSQL server by clicking on “Add Server” as shown. Provide details for your PostgreSQL database server. Set the name for the database. Then proceed to the next ‘Connection‘ tab and enter the credentials for the PostgreSQL database as below. (I have entered credentials for the superuser account created at the beginning of this guide) If you are accessing the PostgreSQL database installed on a remote server, you will be required to Set SSH Tunnel. Provide the IP Address, username, and password of the remote server and proceed. With the details entered correctly, click save and you will see your database server added with additional details provided. Create a Database in PostgreSQL using PgAdmin 4. with the connection to your PostgreSQL server established, you can create a database as shown below. Set the database name. Proceed to the next tab and define your database. Click sav, and you will have your database created as shown. You can also make further configurations to the database. That was enough learning! We have successfully gone through how to install and use pgAdmin 4 on Rocky Linux 8 | AlmaLinux 8. There are a lot of configurations and database management tools available on pgAdmin 4. I have only demonstrated a few. I hope this guide was of value to you.

0 notes

Text

Download Soapui For Mac

Soap Ui Installer

Download Soapui For Mac

Download Soapui For Mac

Soapui Install

Soapui 5.4 Download For Mac

Before working with databases in ReadyAPI, you have to establish a database connection. For this, you use the connection drivers. You can install Postgres JDBC4 Driver or Microsoft JDBC Driver during the ReadyAPI installation.

SoapUI is a free and open source web service inspector software download filed under programming software and made available by SmartBear Software for Windows. The review for soapUI has not been completed yet, but it was tested by an editor here on a PC and a list of features has been compiled; see below. SoapUI SoapUI is a free and open source cross-platform Functional Testing solution. DOWNLOAD Pro Paint for Mac Paint for Mac Pro version is the realistic digital Mac paint program that is. Windows 7 sulietuvinimas atsisiusti. $29.99 DOWNLOAD. A powerful REST API Client with cookie management, environment variables, code generation,. Trusted Windows (PC) download soapUI Pro 5.1.2. Virus-free and 100% clean download. Get soapUI Pro alternative downloads.

Download soapUI- 5.0 for Mac from our website for free. The program is included in Developer Tools. This free program can be installed on Mac OS X 10.6 or later. The most popular versions among the application users are 5.0, 4.6 and 4.0. SoapUI- for Mac can also be called 'soapUI-beta1'. Soapui free version download. Download soapUI- 5.0 for Mac from our website for free. The program is included in Developer Tools. This free program can be installed on Mac OS X 10.6 or later. The most popular versions among the application users are 5.0, 4.6 and 4.0.

Install third-party JDBC drivers

To install a third-party JDBC driver, do the following:

Download soapUI-5.0 for Mac from our website for free. The program is included in Developer Tools. This free program can be installed on Mac OS X 10.6 or later. The most popular versions among the application users are 5.0, 4.6 and 4.0. SoapUI- for Mac can also be called 'soapUI-beta1'. The actual developer of this free Mac application is eviware. Free Trial Driver Booster 6 PRO (60% OFF when you buy) soapUI for Mac. 28,550 downloads Updated: December 5, 2017 Freeware. DOWNLOAD soapUI 5.4.0.

Download and install the needed JDBC driver package.

Put the driver files in the bin/ext directory of your ReadyAPI installation.

Restart ReadyAPI to load the driver.

In ReadyAPI, go to Preferences > JDBC Drivers and add the new connection template.

Tip:ReadyAPI has preconfigured connection string templates for a number of JDBC drivers.

Once you have prepared the driver and connection string, you will be able to add the database connection.

To learn how to use a Groovy script to register the JDBC driver, see Using JDBC Drivers From Scripts.

Install the MySQL JDBC driver

To learn how to properly install and configure the MySQL JDBC driver, see the corresponding topic.

Choose a driver for MS SQL databases

Currently, ReadyAPI supports two connection drivers for MS SQL databases:

Microsoft SQL JDBC driver – a database connection driver published and supported by Microsoft.

JTDS driver – an open source driver published under the GNU LGPL license by The JTDS Project.

We recommend using the Microsoft SQL JDBC driver: it is compatible with ReadyAPI and supports all necessary features. Use the JTDS driver if your database connection requires its specific properties.

Install the Oracle JDBC driver

To learn how to properly install and configure the Oracle JDBC driver, see Oracle JDBC Driver.

See Also

Soapui Download File

JDBC Drivers Database Manager Using JDBC Drivers From Scripts Data Sources and>

If you have an active license of ReadyAPI, you do not need to activate a new license.

VirtServer requires a separate license.

Ubuntu prerequisites

If you install ReadyAPI on the Ubuntu operating system, you should run the following command to avoid an error during the installation:

Download Soapui Windows

Installation steps

Soapui Download Free

Download the installer for your platform from the ReadyAPI Downloads Center.

If you install ReadyAPI on a Linux machine, make sure the installer has the appropriate permissions and can be executed. To do this, run the chmod +x ./ReadyAPI-x64-3.4.5.sh

You can also download the installer via ReadyAPI Updater.

Show instructionShow instruction

Select Help > Check for Updates.

Click Download in the New Version Check dialog.

Specify the download location and click Next.

Select either Yes, execute the update installer now or No, I will execute the update installer later and click Finish.

Note:If you select to execute the installer later, the steps in the Installation Wizard may differ.

Run the installer. The installer will unpack the files and prepare them for installation:

If ReadyAPI is not installed on your computer, you will see the Welcome screen. Click Next to proceed with the installation:

If ReadyAPI is already installed on your computer, you will be asked if you want to update the current installation or to install the product anew to a different directory. Select the needed option and click Next:

Tip:Point to to see where the current installation is located.

If you downloaded the installer via ReadyAPI Updater and selected to run it later, click Next in the Wizard.

If you install ReadyAPI for the first time, or have chosen to install it to a different directory, the wizard will ask you to specify the installation folder:

When the installation is complete, you can select the Run ReadyAPI check box to run ReadyAPI upon closing the wizard. You can also select the Create a desktop icon check box to create a desktop icon for ReadyAPI.

Click Finish.

Soap Ui Installer

See Also

Download Soapui For Mac

ReadyAPI Documentation System Requirements Licensing VirtServer Installation

SoapUI Tutorial

The latest version of SoapUI Pro is 5.1 on Mac Informer. It is a perfect match for IDE in the Developer Tools category. The app is developed by SmartBear Software and its user rating is 5 out of 5.

Soap Basics

For SoapUI Open Source. Firstly, Mac computer with 1GHz or higher 32-bit or 64-bit Intel or PowerPC processor; Secondly, 512MB of RAM; Subsequently, 140MB of hard disk space for installation (SoapUI and HermesJMS) In addition, Mac OS X 10.4 or later; Mac OS X Server 10.4 or later; Lastly, Java 7; For SoapUI Pro.

SoapUI Installation Guides. SoapUI is cross-platform, and can be used on either Windows, Mac or Linux/Unix, according to your needs and preferences. SoapUI is an open source testing tool which is used to test web services built on both SOA (Service Oriented Architecture) and REST protocol (REpresentational State Transfer).

Our software library provides a free download of SoapUI Pro 5.1 for Mac. The file size of the latest installation package available for download is 221.8 MB. The program belongs to Developer Tools. This Mac download was checked by our built-in antivirus and was rated as malware free. This Mac app was originally created by SmartBear Software.

SoapUI Basics

SoapUI Useful Resources

Selected Reading

SoapUI is a cross-platform tool. It supports Windows, Linux, and Mac operating systems.

Prerequisites

Processor − 1GHz or higher 32-bit or 64-bit processor.

RAM − 512MB of RAM.

Hard Disk Space − Minimum 200MB of hard disk space for installation.

Operating System Version − Windows XP or later, MAC OS 10.4 or later.

JAVA − JAVA 6 or later.

Download Process

Step 1 − Go to www.soapui.org and click Download SoapUI.

Step 2 − Click ‘Get It’ to download SoapUI Open Source. It will start downloading 112MB .exe file in the system. Wait till the download process is complete.

Installation Process

Step 1 − After downloading, run the .exe file as “Run as administrator”.

Windows will start the set up process as shown in the following screenshot.

Step 2 − Once set up, the process window displays the following screen, click Next.

Step 3 − Accept the license agreement and click Next.

Step 4 − Choose the installation directory or keep it as the default path selected by the system. Click Next.

Step 5 − Choose the components that you want to install. Click Next.

Step 6 − Accept the License Agreement for HermesJMS and click Next.

Step 7 − Select the target directory to save tutorials and click Next.

Step 8 − Choose the start menu folder location or else leave the default location as is and click 'Next'.

Step 9 − Enable the checkbox 'create a desktop icon' and click 'Next'.

Now, the installation starts. It will take a few minutes to complete.

Step 10 − After completion of installation, click Finish in the following wizard.

Upon clicking on Finish, SoapUI is launched.

Soapui 5.4 Download For Mac

Menu bar

Tool bar

Project Navigation Bar

Workspace Properties

Log Panel

Configuration Process

The first step is to create a workspace that can contain multiple projects.

Download Soapui For Mac

Step 1 − Go to File → New Workspace.

Step 2 − Add the name of workspace and click OK.

Step 3 − Now, select the path where workspace xml will be saved.

Step 4 − Select the path and click Save.

Soapui Download File

Workspace is created as shown in the following screenshot. Workspace properties is also exhibited.

Download

Download Soapui Pro For Mac

The program can not be downloaded: the download link is not available.External download links have become invalid for an unknown reason.Sorry, but we cannot ensure safeness of third party websites.

Often downloaded with

SOAP ClientSOAP Client is a free Cocoa-based developer tool that allows you access and..DOWNLOAD

Soap SAPSoap SAP - a freeware application for soap makers and lye calculator & oil and..DOWNLOAD

Soap Opera DashHelp Rosie film the best Soap Opera ever! Run the sets and make sure all the..$6.99DOWNLOAD

UI BrowserUI Browser is the ultimate assistant for Apple's AppleScript GUI Scripting and..$55DOWNLOAD

Sia-UISia-UI is the user interface for Sia, a desktop application based off the..DOWNLOAD

Download Soapui For Mac

Soapui Download For Windows 10

Soapui Install

PDF Printer Lite - Easily Print Document to PDF

Soapui 5.4 Download For Mac

Rsa securid software token 5.0 2 download for mac. Office Tools

0 notes

Text

Aug 23

Starting day at 8:42AM bc I fell asleep again at 7.

Allara chillara stretching and walking around flexing all the while tensing about the day.

What's the point of yoga if you're stressed the whole time?

Be cool

Wait, what do I do? Do they reach out to me? Should I mail? it's 9:34AM.

Shit did they forget?

They can't fire me on the first day, right?

I swear if I get fired in under a month, I'm gonna give up this career and start painting.

And I could just opensource for the love of dev.

Chill!!

OKay, I pinged Rejulettan, they’re creating accounts and stuff.

11AM First standup. Said “Hi”. ;_; WHY? All that stuff you prepped?

That’s a lot of accounts

Digesting a jargon explosion.

WTF are all these for?

Terraform? Like what they plan to do with mars.

I have access to everything.

I can burn down the whole thing if I wanted to.

UNLIMITED POWER!!! !

... R+A helping me with setup. Cool.

X is helping with the a/cs. Isn't he busy? Okay, I'll never be able to do that job. Working and handling all this people and keeping in touch with every one of em to make sure everything's okay. I mean, I can't even properly eat lunch and watch a sitcom together.

I'm writing too much. If I put all this effort into working, I would be a lot more productive.

But if I stopped logging here, I would be distracted more.

Only writing helps me discipline now. Is it tho? I haven't explored more ways yet.

Am I a machine now? I need proper algorithms and instructions to do stuff now

The daily update doc.

2:30PM mentors mailed.

3:49PM finished up with email reply. Explained stuff.

4:05PM Setting up things.

Distracted 5:18PM - It’s okay. Complete the project in your own time. No one’s judging you for failing gsoc. If they are, fuck them.

When was the last time you failed sth? Exactly.

5:30PM - yea right, you can finish setting up by 6.. Haha. idiot. Stop blasting alice glass in your ears and you can focus more.

Pottathi!!

Setup - following doc https://3.basecamp.com/3910353/buckets/5944669/documents/2878232344

sudo apt-get install postgresql postgresql-contrib

Trying to start psql cli

Psql psql: error: FATAL: role "compile" does not exist

5:46PM - distracted again.

Distracted again 5:51PM. That lasted 30mins. Looks like an improvement tho

initdb /usr/local/var/postgres initdb: command not found

sudo -u postgres; psql psql: error: FATAL: role "compile" does not exist

sudo -i -u postgres - yaay

psql :D Yep. default postgres db exists.

pg_ctl -D /usr/local/var/postgres start

pg_ctl -D /usr/local/var/postgres stop pg_ctl: command not found

Okay, skipping to creating databases. Varnodth vech kaana

create database falcon OWNER postgres

create database falcon_export_wip OWNER postgres

create database healthgraph_export OWNER postgres

create database sheldon OWNER postgres

create database falcon_export OWNER postgres

\l gives only 3 dbs now. What?

Okay, all of those need semicolons, idiot

$ sudo -u postgres createuser anaswara

$ sudo -u postgres psql

ALTER USER anaswara WITH ENCRYPTED PASSWORD '@atr7070';

Wait, should I encrypt or postgres chythoolo? YEP!! IDIOT!!

What if postgres encrypts my sha hash again? NOOOO!!!

Okay, nthelum idu

GRANT ALL PRIVILEGES ON DATABASE falcon TO anaswara; GRANT ALL PRIVILEGES ON DATABASE falcon_export_wip TO anaswara; GRANT ALL PRIVILEGES ON DATABASE healthgraph_export TO anaswara; GRANT ALL PRIVILEGES ON DATABASE sheldon TO anaswara; GRANT ALL PRIVILEGES ON DATABASE falcon_export TO anaswara;

https://zulip.compile.com/user_uploads/2/pQztq9JGf4b0nhUOXHkWaAXm/falcon.schema.sql.gz

https://zulip.compile.com/user_uploads/2/SNCT7OHeOZQd2vq1PErA8rXd/falcon.data.sql.gz Unauthorized

OKay, got the files.

zcat ~/Downloads/falcon.schema.sql-1629724096467.gz | psql -d falcon -O -X /usr/lib/postgresql/12/bin/psql: invalid option -- 'O'

Try "psql --help" for more information.

It’s already 7:02PM.

7:14PM - Distracted again!!! Okay, it’s DA from now on. Don’t think about gsoc for 2 days.

zcat ~/Desktop/falcon.schema.sql-1629724096467.gz | psql -d falcon -0 -X The flag was actually 0 not o ………..(-_-)

But psql: error: FATAL: role "compile" does not exist.

zcat ~/Desktop/falcon.schema.sql-1629724096467.gz | psql -d falcon -0 -X -U anaswara -W

psql: error: FATAL: Peer authentication failed for user "anaswara"

Okay, https://stackoverflow.com/a/17443990

Can be solved with this https://www.postgresql.org/docs/9.0/auth-methods.html#:~:text=19.3.1.-,Trust%20authentication,-When%20trust%20authentication

But let’s just create new roles with same username and password as unix user. “Compile” - “compile”

Zcat

“

ALTER TABLE

ALTER TABLE

WARNING: no privileges could be revoked for "public"

REVOKE

WARNING: no privileges could be revoked for "public"

REVOKE

WARNING: no privileges were granted for "public"

GRANT

WARNING: no privileges were granted for "public"

GRANT

REVOKE

REVOKE

GRANT

ERROR: role "redash" does not exist

ERROR: role "gandalf" does not exist

REVOKE

REVOKE

GRANT

ERROR: role "redash" does not exist

ERROR: role "gandalf" does not exist

REVOKE

REVOKE

”

GRANT ALL PRIVILEGES ON DATABASE falcon TO compile;

GRANT ALL PRIVILEGES ON DATABASE falcon_export_wip TO compile;

GRANT ALL PRIVILEGES ON DATABASE healthgraph_export TO compile;

GRANT ALL PRIVILEGES ON DATABASE sheldon TO compile;

GRANT ALL PRIVILEGES ON DATABASE falcon_export TO compile;

Ignoring all the errors

sudo apt-get install -y python-dev build-essential git python-pip virtualenvwrapper mosh libyajl2 graphviz supervisor libpq-dev postgresql-client nginx libffi-dev libyaml-dev zip unzip apgdiff autoconf automake libtool libleveldb-dev python2-dev swig libssl-dev

Sds Reading package lists... Done

Building dependency tree

Reading state information... Done

Note, selecting 'python-dev-is-python2' instead of 'python-dev'

Package python-pip is not available, but is referred to by another package.

This may mean that the package is missing, has been obsoleted, or

is only available from another source

However the following packages replace it:

python3-pip

E: Package 'python-pip' has no installation candidate

Cloning into 'healthgraph'...

The authenticity of host 'yoda.compile.com (139.59.30.154)' can't be established.

ECDSA key fingerprint is SHA256:N2I9nBx9N72puf3bUAYfBeMUr1V0ZmZVKTpx1nZzGp8.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added 'yoda.compile.com,139.59.30.154' (ECDSA) to the list of known hosts.

[email protected]'s password:

Permission denied, please try again.

Retry

Cloning into 'healthgraph'...

sign_and_send_pubkey: signing failed for ED25519 "/home/compile/.ssh/id_ed25519" from agent: agent refused operation

[email protected]'s password:

What’s goin on? I added ssh keys this morning

Adding ssh keys again

Should I generate ssh keys again? Nop

Oh ssh-add

Yaay

That’s a hugerepo. Healthgraph. HUH. DUH.

Cause that’s the only way the roses bloom… in my mind.. Aaa

git clone [email protected]:engineering/sheldon.git

Omu, they’re dorks.

It’s 9:11PM

Booo..

3 minutes to break time. I want break now. Well, you’re writing so it’s a break. Okay, put on some music.

Sheldon is a small repo. Young sheldon.

Wtf am I doing? Successfully wasted break. Nop.

10:48PM. I think I ate too much.

Okay, finish up.

0 notes

Text

Full Stack Development with Next.js and Supabase – The Complete Guide

Supabase is an open source Firebase alternative that lets you create a real-time backend in less than two minutes.

Supabase has continued to gain hype and adoption with developers in my network over the past few months. And a lot of the people I've talked to about it prefer the fact that it leverages a SQL-style database, and they like that it's open source, too.

When you create a project Supabase automatically gives you a Postgres SQL database, user authentication, and API. From there you can easily implement additional features like realtime subscriptions and file storage.

In this guide, you will learn how to build a full stack app that implements the core features that most apps require – like routing, a database, API, authentication, authorization, realtime data, and fine grained access control. We'll be using a modern stack including React, Next.js, and TailwindCSS.

I've tried to distill everything I've learned while myself getting up to speed with Supabase in as short of a guide as possible so you too can begin building full stack apps with the framework.

The app that we will be building is a multi-user blogging app that incorporates all of the types of features you see in many modern apps. This will take us beyond basic CRUD by enabling things like file storage as well as authorization and fine grained access control.

You can find the code for the app we will be building here.

By learning how to incorporate all of these features together you should be able to take what you learn here and build out your own ideas. Understanding the basic building blocks themselves allows you to then take this knowledge with you in the future to put it to use in any way you see fit.

Supabase Overview

How to Build Full Stack Apps

I'm fascinated by full stack Serverless frameworks because of the amount of power and agility they give to developers looking to build complete applications.

Supabase brings to the table the important combination of powerful back end services and easy to use client-side libraries and SDKs for an end to end solution.

This combination lets you not only build out the individual features and services necessary on the back end, but easily integrate them together on the front end by leveraging client libraries maintained by the same team.

Because Supabase is open source, you have the option to self-host or deploy your backend as a managed service. And as you can see, this will be easy for us to do on a free tier that does not require a credit card to get started with.

Why Use Supabase?

I've led the Front End Web and Mobile Developer Advocacy team at AWS, and written a book on building these types of apps. So I've had quite a bit of experience building in this space.

And I think that Supabase brings to the table some really powerful features that immediately stood out to me when I started to build with it.

Data access patterns

One of the biggest limitations of some of the tools and frameworks I've used in the past is the lack of querying capabilities. What I like a lot about Supabase is that, since it's built on top of Postgres, it enables an extremely rich set of performant querying capabilities out of the box without having to write any additional back end code.

The client-side SDKs provide easy to use filters and modifiers to enable an almost infinite combination of data access patterns.

Because the database is SQL, relational data is easy to configure and query, and the client libraries take it into account as a first class citizen.

Permissions

When you get past "hello world" many types of frameworks and services fall over very quickly. This is because most real-world use cases extend far beyond the basic CRUD functionality you often see made available by these tools.

The problem with some frameworks and managed services is that the abstractions they create are not extensible enough to enable easy to modify configurations or custom business logic. These restrictions often make it difficult to take into account the many one-off use cases that come up with building an app in the real-world.

In addition to enabling a wide array of data access patterns, Supabase makes it easy to configure authorization and fine grained access controls. This is because it is simply Postgres, enabling you implement whatever row-level security policies you would like directly from the built-in SQL editor (something we will cover here).

UI components

In addition to the client-side libraries maintained by the same team building the other Supabase tooling, they also maintain a UI component library (beta) that allows you to get up and running with various UI elements.

The most powerful is Auth which integrates with your Supabase project to quickly spin up a user authentication flow (which I'll be using in this tutorial).

Multiple authentication providers

Supabase enables all of the following types of authentication mechanisms:

Username & password

Magic email link

Google

Facebook

Apple

GitHub

Twitter

Azure

GitLab

Bitbucket

Open Source

One of the biggest things it has going for it is that it is completely open source (yes the back end too). This means that you can choose either the Serverless hosted approach or to host it yourself.

That means that if you wanted to, you could run Supabase with Docker and host your app on AWS, GCP, or Azure. This would eliminate the vendor lock-in issue you may run into with Supabase alternatives.

How to Get Started with Supabase

Project setup

To get started, let's first create the Next.js app.

npx create-next-app next-supabase

Next, change into the directory and install the dependencies we'll be needing for the app using either NPM or Yarn:

npm install @supabase/supabase-js @supabase/ui react-simplemde-editor easymde react-markdown uuid npm install tailwindcss@latest @tailwindcss/typography postcss@latest autoprefixer@latest

Next, create the necessary Tailwind configuration files:

npx tailwindcss init -p

Now update tailwind.config.js to add the Tailwind typography plugin to the array of plugins. We'll be using this plugin to style the markdown for our blog:

plugins: [ require('@tailwindcss/typography') ]

Finally, replace the styles in styles/globals.css with the following:

@tailwind base; @tailwind components; @tailwind utilities;

Supabase project initialization

Now that the project is created locally, let's create the Supabase project.

To do so, head over to Supabase.io and click on Start Your Project. Authenticate with GitHub and then create a new project under the organization that is provided to you in your account.

Give the project a Name and Password and click Create new project.

It will take approximately 2 minutes for your project to be created.

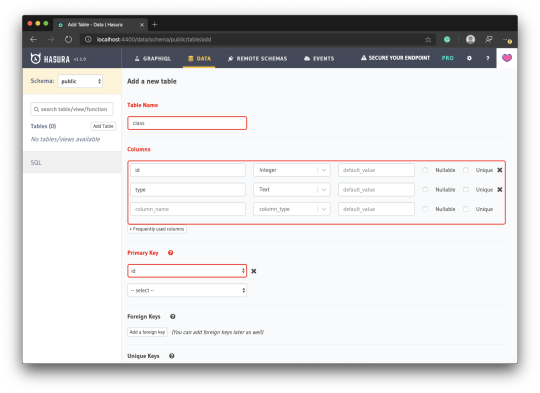

How to create a database table in Supabase

Once you've created your project, let's go ahead and create the table for our app along with all of the permissions we'll need. To do so, click on the SQL link in the left hand menu.

In this view, click on Query-1 under Open queries and paste in the following SQL query and click RUN:

CREATE TABLE posts ( id bigint generated by default as identity primary key, user_id uuid references auth.users not null, user_email text, title text, content text, inserted_at timestamp with time zone default timezone('utc'::text, now()) not null ); alter table posts enable row level security; create policy "Individuals can create posts." on posts for insert with check (auth.uid() = user_id); create policy "Individuals can update their own posts." on posts for update using (auth.uid() = user_id); create policy "Individuals can delete their own posts." on posts for delete using (auth.uid() = user_id); create policy "Posts are public." on posts for select using (true);

This will create the posts table that we'll be using for the app. It also enabled some row level permissions:

All users can query for posts

Only signed in users can create posts, and their user ID must match the user ID passed into the arguments

Only the owner of the post can update or delete it

Now, if we click on the Table editor link, we should see our new table created with the proper schema.

That's it! Our back end is ready to go now and we can start building out the UI. Username + password authentication is already enabled by default, so all we need to do now is wire everything up on the front end.

Next.js Supabase configuration

Now that the project has been created, we need a way for our Next.js app to know about the back end services we just created for it.

The best way for us to configure this is using environment variables. Next.js allows environment variables to be set by creating a file called .env.local in the root of the project and storing them there.

In order to expose a variable to the browser you have to prefix the variable with NEXT_PUBLIC_.

Create a file called .env.local at the root of the project, and add the following configuration:

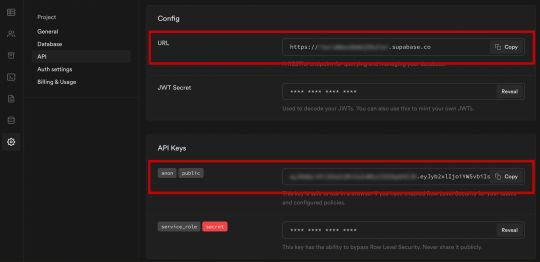

NEXT_PUBLIC_SUPABASE_URL=https://app-id.supabase.co NEXT_PUBLIC_SUPABASE_ANON_KEY=your-public-api-key

You can find the values of your API URL and API Key in the Supabase dashboard settings:

Next, create a file called api.js in the root of the project and add the following code:

// api.js import { createClient } from '@supabase/supabase-js' export const supabase = createClient( process.env.NEXT_PUBLIC_SUPABASE_URL, process.env.NEXT_PUBLIC_SUPABASE_ANON_KEY )

Now we will be able to import the supabase instance and use it anywhere in our app.

Here's an overview of what it looks like to interact with the API using the Supabase JavaScript client.

Querying for data:

import { supabase } from '../path/to/api' const { data, error } = await supabase .from('posts') .select()

Creating new items in the database:

const { data, error } = await supabase .from('posts') .insert([ { title: "Hello World", content: "My first post", user_id: "some-user-id", user_email: "[email protected]" } ])

As I mentioned earlier, the filters and modifiers make it really easy to implement various data access patterns and selection sets of your data.

Authentication – signing up:

const { user, session, error } = await supabase.auth.signUp({ email: '[email protected]', password: 'example-password', })

Authentication – signing in:

const { user, session, error } = await supabase.auth.signIn({ email: '[email protected]', password: 'example-password', })

In our case we won't be writing the main authentication logic by hand, we'll be using the Auth component from Supabase UI.

How to Build the App

Now let's start building out the UI!

To get started, let's first update the app to implement some basic navigation and layout styling.

We will also configure some logic to check if the user is signed in, and show a link for creating new posts if they are.

Finally we'll implement a listener for any auth events. And when a new auth event occurs, we'll check to make sure there is currently a signed in user in order to show or hide the Create Post link.

Open _app.js and add the following code:

// pages/_app.js import Link from 'next/link' import { useState, useEffect } from 'react' import { supabase } from '../api' import '../styles/globals.css' function MyApp({ Component, pageProps }) { const [user, setUser] = useState(null); useEffect(() => { const { data: authListener } = supabase.auth.onAuthStateChange( async () => checkUser() ) checkUser() return () => { authListener?.unsubscribe() }; }, []) async function checkUser() { const user = supabase.auth.user() setUser(user) } return ( <div> <nav className="p-6 border-b border-gray-300"> <Link href="/"> <span className="mr-6 cursor-pointer">Home</span> </Link> { user && ( <Link href="/create-post"> <span className="mr-6 cursor-pointer">Create Post</span> </Link> ) } <Link href="/profile"> <span className="mr-6 cursor-pointer">Profile</span> </Link> </nav> <div className="py-8 px-16"> <Component {...pageProps} /> </div> </div> ) } export default MyApp

How to make a user profile page

Next, let's create the profile page. In the pages directory, create a new file named profile.js and add the following code:

// pages/profile.js import { Auth, Typography, Button } from "@supabase/ui"; const { Text } = Typography import { supabase } from '../api' function Profile(props) { const { user } = Auth.useUser(); if (user) return ( <> <Text>Signed in: {user.email}</Text> <Button block onClick={() => props.supabaseClient.auth.signOut()}> Sign out </Button> </> ); return props.children } export default function AuthProfile() { return ( <Auth.UserContextProvider supabaseClient={supabase}> <Profile supabaseClient={supabase}> <Auth supabaseClient={supabase} /> </Profile> </Auth.UserContextProvider> ) }

The profile page uses the Auth component from the Supabase UI library. This component will render a "sign up" and "sign in" form for unauthenticated users, and a basic user profile with a "sign out" button for authenticated users. It will also enable a magic sign in link.

How to create new posts

Next, let's create the create-post page. In the pages directory, create a page named create-post.js with the following code:

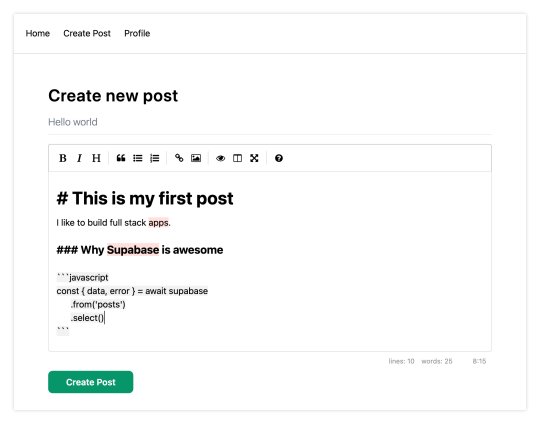

// pages/create-post.js import { useState } from 'react' import { v4 as uuid } from 'uuid' import { useRouter } from 'next/router' import dynamic from 'next/dynamic' import "easymde/dist/easymde.min.css" import { supabase } from '../api' const SimpleMDE = dynamic(() => import('react-simplemde-editor'), { ssr: false }) const initialState = { title: '', content: '' } function CreatePost() { const [post, setPost] = useState(initialState) const { title, content } = post const router = useRouter() function onChange(e) { setPost(() => ({ ...post, [e.target.name]: e.target.value })) } async function createNewPost() { if (!title || !content) return const user = supabase.auth.user() const id = uuid() post.id = id const { data } = await supabase .from('posts') .insert([ { title, content, user_id: user.id, user_email: user.email } ]) .single() router.push(`/posts/${data.id}`) } return ( <div> <h1 className="text-3xl font-semibold tracking-wide mt-6">Create new post</h1> <input onChange={onChange} name="title" placeholder="Title" value={post.title} className="border-b pb-2 text-lg my-4 focus:outline-none w-full font-light text-gray-500 placeholder-gray-500 y-2" /> <SimpleMDE value={post.content} onChange={value => setPost({ ...post, content: value })} /> <button type="button" className="mb-4 bg-green-600 text-white font-semibold px-8 py-2 rounded-lg" onClick={createNewPost} >Create Post</button> </div> ) } export default CreatePost

This component renders a Markdown editor, allowing users to create new posts.

The createNewPost function will use the supabase instance to create new posts using the local form state.

You may notice that we are not passing in any headers. This is because if a user is signed in, the Supabase client libraries automatically include the access token in the headers for a signed in user.

How to view a single post

We need to configure a page to view a single post.

This page uses getStaticPaths to dynamically create pages at build time based on the posts coming back from the API.

We also use the fallback flag to enable fallback routes for dynamic SSG page generation.

We use getStaticProps to enable the Post data to be fetched and then passed into the page as props at build time.

Create a new folder in the pages directory called posts and a file called [id].js within that folder. In pages/posts/[id].js, add the following code:

// pages/posts/[id].js import { useRouter } from 'next/router' import ReactMarkdown from 'react-markdown' import { supabase } from '../../api' export default function Post({ post }) { const router = useRouter() if (router.isFallback) { return <div>Loading...</div> } return ( <div> <h1 className="text-5xl mt-4 font-semibold tracking-wide">{post.title}</h1> <p className="text-sm font-light my-4">by {post.user_email}</p> <div className="mt-8"> <ReactMarkdown className='prose' children={post.content} /> </div> </div> ) } export async function getStaticPaths() { const { data, error } = await supabase .from('posts') .select('id') const paths = data.map(post => ({ params: { id: JSON.stringify(post.id) }})) return { paths, fallback: true } } export async function getStaticProps ({ params }) { const { id } = params const { data } = await supabase .from('posts') .select() .filter('id', 'eq', id) .single() return { props: { post: data } } }

How to query for and render the list of posts

Next, let's update index.js to fetch and render a list of posts:

// pages/index.js import { useState, useEffect } from 'react' import Link from 'next/link' import { supabase } from '../api' export default function Home() { const [posts, setPosts] = useState([]) const [loading, setLoading] = useState(true) useEffect(() => { fetchPosts() }, []) async function fetchPosts() { const { data, error } = await supabase .from('posts') .select() setPosts(data) setLoading(false) } if (loading) return <p className="text-2xl">Loading ...</p> if (!posts.length) return <p className="text-2xl">No posts.</p> return ( <div> <h1 className="text-3xl font-semibold tracking-wide mt-6 mb-2">Posts</h1> { posts.map(post => ( <Link key={post.id} href={`/posts/${post.id}`}> <div className="cursor-pointer border-b border-gray-300 mt-8 pb-4"> <h2 className="text-xl font-semibold">{post.title}</h2> <p className="text-gray-500 mt-2">Author: {post.user_email}</p> </div> </Link>) ) } </div> ) }

Let's test it out

We now have all of the pieces of our app ready to go, so let's try it out.

To run the local server, run the dev command from your terminal:

npm run dev

When the app loads, you should see the following screen:

To sign up, click on Profile and create a new account. You should receive an email link to confirm your account after signing up.

You can also create a new account by using the magic link.

Once you're signed in, you should be able to create new posts:

Navigating back to the home page, you should be able to see a list of the posts that you've created and be able to click on a link to the post to view it:

How to Edit Posts

Now that we have the app up and running, let's learn how to edit posts. To get started with this, let's create a new view that will fetch only the posts that the signed in user has created.

To do so, create a new file named my-posts.js in the root of the project with the following code:

// pages/my-posts.js import { useState, useEffect } from 'react' import Link from 'next/link' import { supabase } from '../api' export default function MyPosts() { const [posts, setPosts] = useState([]) useEffect(() => { fetchPosts() }, []) async function fetchPosts() { const user = supabase.auth.user() const { data } = await supabase .from('posts') .select('*') .filter('user_id', 'eq', user.id) setPosts(data) } async function deletePost(id) { await supabase .from('posts') .delete() .match({ id }) fetchPosts() } return ( <div> <h1 className="text-3xl font-semibold tracking-wide mt-6 mb-2">My Posts</h1> { posts.map((post, index) => ( <div key={index} className="border-b border-gray-300 mt-8 pb-4"> <h2 className="text-xl font-semibold">{post.title}</h2> <p className="text-gray-500 mt-2 mb-2">Author: {post.user_email}</p> <Link href={`/edit-post/${post.id}`}><a className="text-sm mr-4 text-blue-500">Edit Post</a></Link> <Link href={`/posts/${post.id}`}><a className="text-sm mr-4 text-blue-500">View Post</a></Link> <button className="text-sm mr-4 text-red-500" onClick={() => deletePost(post.id)} >Delete Post</button> </div> )) } </div> ) }

In the query for the posts, we use the user id to select only the posts created by the signed in user.

Next, create a new folder named edit-post in the pages directory. Then, create a file named [id].js in this folder.

In this file, we'll be accessing the id of the post from a route parameter. When the component loads, we will then use the post id from the route to fetch the post data and make it available for editing.

In this file, add the following code:

// pages/edit-post/[id].js import { useEffect, useState } from 'react' import { useRouter } from 'next/router' import dynamic from 'next/dynamic' import "easymde/dist/easymde.min.css" import { supabase } from '../../api' const SimpleMDE = dynamic(() => import('react-simplemde-editor'), { ssr: false }) function EditPost() { const [post, setPost] = useState(null) const router = useRouter() const { id } = router.query useEffect(() => { fetchPost() async function fetchPost() { if (!id) return const { data } = await supabase .from('posts') .select() .filter('id', 'eq', id) .single() setPost(data) } }, [id]) if (!post) return null function onChange(e) { setPost(() => ({ ...post, [e.target.name]: e.target.value })) } const { title, content } = post async function updateCurrentPost() { if (!title || !content) return await supabase .from('posts') .update([ { title, content } ]) router.push('/my-posts') } return ( <div> <h1 className="text-3xl font-semibold tracking-wide mt-6 mb-2">Edit post</h1> <input onChange={onChange} name="title" placeholder="Title" value={post.title} className="border-b pb-2 text-lg my-4 focus:outline-none w-full font-light text-gray-500 placeholder-gray-500 y-2" /> <SimpleMDE value={post.content} onChange={value => setPost({ ...post, content: value })} /> <button className="mb-4 bg-blue-600 text-white font-semibold px-8 py-2 rounded-lg" onClick={updateCurrentPost}>Update Post</button> </div> ) } export default EditPost

Now, add a new link to our navigation located in pages/_app.js:

// pages/_app.js { user && ( <Link href="/my-posts"> <span className="mr-6 cursor-pointer">My Posts</span> </Link> ) }

When running the app, you should be able to view your own posts, edit them, and delete them from the updated UI.

How to enable real-time updates

Now that we have the app running it's trivial to add real-time updates.

By default, Realtime is disabled on your database. Let's turn on Realtime for the posts table.

To do so, open the app dashboard and click on Databases -> Replication -> 0 Tables (under Source). Toggle on Realtime functionality for the posts table. Here is a video walkthrough of how you can do this for clarity.

Next, open src/index.js and update the useEffect hook with the following code:

useEffect(() => { fetchPosts() const mySubscription = supabase .from('posts') .on('*', () => fetchPosts()) .subscribe() return () => supabase.removeSubscription(mySubscription) }, [])

Now, we will be subscribed to realtime changes in the posts table.

The code for the app is located here.

Next Steps

By now you should have a good understanding of how to build full stack apps with Supabase and Next.js.

If you'd like to learn more about building full stack apps with Supabase, I'd check out the following resources.

If you read this far, tweet to the author to show them you care.

0 notes

Text

Options for legacy application modernization with Amazon Aurora and Amazon DynamoDB