#powershell inheritance


making a text-based adventure game is hard

I've realized that maybe the RPG that I was working on was a little ambitious for what resources I had.

With such limited time (and patience), I've realized that I'll probably need to postpone any work on a game of that caliber for a bit...

Fortunately, I've found myself enjoying a new genre of games as of late--visual novels and other narrative-heavy games.

"Oh god," you yelp. "My *absolutely-favoritest* blogger has fallen to the clutches of lust!"

Not to worry, my friends. I'm not playing *that* type of visual novel.

I'm talking about Disco Elysium and Kyle is Famous and Night in the Woods.

And I'm also really digging the aesthetic of the Windows PowerShell window I have to use to install Python packages.

"I've got big things planned for this game,"

the foolish never-would-be game dev announced.

"This game will change the industry--it'll change the way the common man sees video games,"

the developer self-awarely pretentiously claimed. They might be a little crazy, but they still knew that changes as big as those wouldn't happen as a result of their little game.

But then I come back down to reality.

Changes as big as those don't *have* to happen as a result of my game.

In fact, I could make this game just for myself.

Nobody else would ever have to play it.

...

But then I'd miss out on all the fun of sharing my works with the world.

I'd miss out on the "what were you thinking here" shared-laugh moments,

I'd miss out on the "only someone on drugs would write something like this" compliments,

I'd miss out on all of it.

...

And also making a game of this nature kind of implies I'll be able to get my mom to play *a* video game.

...

Did you know that making a text-based visual novel adventure game is somewhat difficult?

Even if you know how to do classes and object-oriented programming and such?

I've spent a good part of today literally just drawing diagrams of how inheritance of locations is going to work in this game.

Dude. You have to start down at the *text* level and work your way up.

It's... interesting, to say the least.

And I'm sure my methods aren't exactly the best. Yet.

I'm fully prepared to have to scrap it all in favor of a more efficient, cleaner organization method.
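The diagrams themselves aren't in this post, so here's only a minimal sketch of what "start at the text level and work your way up" could look like with Python inheritance. The class names (TextBlock, Location, Shop) and their fields are hypothetical, not the game's actual design.

```python
from dataclasses import dataclass, field


@dataclass
class TextBlock:
    """Lowest level: a piece of text the player reads."""
    text: str

    def render(self) -> str:
        return self.text


@dataclass
class Location(TextBlock):
    """A place is text plus a name and exits to other places."""
    name: str = "Somewhere"
    exits: dict[str, str] = field(default_factory=dict)

    def render(self) -> str:
        # Reuse the parent's rendering, then add location-specific details.
        exit_list = ", ".join(sorted(self.exits)) or "none"
        return f"[{self.name}]\n{super().render()}\nExits: {exit_list}"


@dataclass
class Shop(Location):
    """A more specialized location that also sells items."""
    stock: list[str] = field(default_factory=list)

    def render(self) -> str:
        items = ", ".join(self.stock) or "nothing"
        return f"{super().render()}\nFor sale: {items}"


if __name__ == "__main__":
    shop = Shop(
        text="A cramped shop smelling of old paper.",
        name="Bookshop",
        exits={"north": "Street"},
        stock=["map", "lantern"],
    )
    print(shop.render())
```

Each subclass calls super().render() and adds one layer on top, so a change to how plain text renders flows up to every location type.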

...

Prepare for total .JSONification. All things must be JSON.

Everything you love. Everything you hold dear.

I.

Will.

Make.

It.

JSON.
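In the same spirit, here's a minimal sketch of total .JSONification. It assumes each location is a hypothetical dataclass with name, text, and exits fields that round-trips through Python's built-in json module:

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Location:
    # Hypothetical fields; a real game would likely need many more.
    name: str
    text: str
    exits: dict[str, str] = field(default_factory=dict)


def locations_to_json(locations: list[Location]) -> str:
    """Serialize a list of locations to a JSON string."""
    return json.dumps([asdict(loc) for loc in locations], indent=2)


def locations_from_json(data: str) -> list[Location]:
    """Rebuild Location objects from the JSON produced above."""
    return [Location(**item) for item in json.loads(data)]


if __name__ == "__main__":
    world = [
        Location("Street", "Rain drums on the pavement.", {"south": "Bookshop"}),
        Location("Bookshop", "A cramped shop.", {"north": "Street"}),
    ]
    payload = locations_to_json(world)
    print(payload)
    assert locations_from_json(payload) == world  # the round trip is lossless
```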

...

Anyway, yeah. I'm going to probably be spending 10 hours a day on average coding for the rest of my life, with my jobs and my hobbies and my projects and my everything.

Fuck, I've accidentally made an interesting image filter for my internship while trying to detect edges using a custom-made gradient algorithm.

That means only one thing--I have the capability to program my own shit for Aseprite and Krita.

...

And it'll all make my art so much easier.

...

And what about music?

What if I make an algorithm that helps me continue the song when I'm stuck and don't know what to add next?

Based on what I've learned in Music Theory?

...

It's daunting that the possibilities are now endless, I suppose.

But at the core of it all?

Where everything began?

...

It was some radical dream in which I so vehemently desired to make a game.

To get my story out there in turn-based RPG form.

A dream that started forming well over 7 years ago.

And will continue to grow and form.

...

Some radical dream that persuaded this what-would-otherwise-be-just-another-sterile-passionless-STEM-major into taking weird classes like Art and Music Theory and Modern Drama in an immensely passionate pursuit of realizing their insane dream.

You know.

Instead of just focusing on what's important.

...

I could probably be saving *more* lives with more dedication to, oh, I don't know--cancer research? Biotech?

...anything productive?

But instead here I sit, wasting my potential by making stupid games.

...

I don't think my games are *stupid*, per se.

However, when you compare the societal impact of a video game to something like cancer research, well...

I'm just ashamed!

My priorities aren't in the right places, it seems--!

...

But don't try to console me.

I've already convinced myself that even though games might not better society in the same way medical research does, and even though I'm a terrible person for wanting to waste my potential, it's still what I want to do.

Hah.

And so they look in the mirror, at peace with the fact they're a terrible person in the eyes of some.

But in the eyes of others, and even sometimes the same people, they're triumphant.

They're doing shit they physically should not be able to.

They continue to defy expectations.

The logic of the world has gone to shit, and the reflection in the mirror somehow proves this point.

I should not exist in the capacity that I do.

And...

...

I believe I've made my argument that a narrative-based game from me would be, at the very least, interesting.

...

And I totally didn't just have an unprofessional breakdown-rant in typed format.

...

def generateNewBreakdown(self, _subject: str | None = None) -> str: ...

...

On an unrelated note, I'm really starting to become attracted to the way Python code looks.

It's not a sexual attraction to the way the code looks.

Yet.

#blog#writing#coding#python#game development#STEM#wasted potential#guilt#narrative#rant#confusion#confused#chaos#i'm convinced everybody has breakdowns like this#but nobody really wants to share them#which is a shame#because they'd probably be entertaining too


Top 5 Abilities Employers Search For

What a Guard Can and Cannot Do


Contents

Professional Driving Capacity

Whizrt: Simulated Intelligent Cybersecurity Red Team

Add Your Contact Information Properly

Objectsecurity. The Security Policy Automation Company.

The Types of Security Guards

Each of these classes provides a declarative approach to evaluating ACL information at runtime, freeing you from needing to write any code. Refer to the sample applications to learn how to use these classes. Spring Security does not provide any special integration to automatically create, update, or delete ACLs as part of your DAO or repository operations. Instead, you will need to write code like that shown above for your individual domain objects. It is worth considering using AOP on your services layer to automatically integrate the ACL information with your services-layer operations.


cmdlet that can be used to list the methods and properties of an object quickly. Figure 3 shows a PowerShell script to enumerate this information. Where possible in this research, standard user privileges were used to provide insight into the available COM objects under the worst-case scenario of having no administrative privileges.
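The cmdlet named in the original is cut off, so it isn't filled in here. As a rough Python analogue of listing an object's methods and properties (this is not a PowerShell or COM example), the standard inspect module can split an object's public members into callables and non-callables. The Printer class is a hypothetical stand-in object.

```python
import inspect


def list_members(obj: object) -> dict[str, list[str]]:
    """Group an object's public members into methods and properties.
    Names starting with an underscore are skipped."""
    methods: list[str] = []
    properties: list[str] = []
    # inspect.getmembers returns (name, value) pairs sorted by name.
    for name, value in inspect.getmembers(obj):
        if name.startswith("_"):
            continue
        (methods if callable(value) else properties).append(name)
    return {"methods": methods, "properties": properties}


class Printer:  # hypothetical example object
    def __init__(self) -> None:
        self.name = "Office"
        self.online = True

    def print_page(self) -> None:
        pass


if __name__ == "__main__":
    print(list_members(Printer()))
```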

Whizrt: Simulated Intelligent Cybersecurity Red Team

Users who are members of multiple groups within a role map will always be granted their highest permission. For example, if John Smith is a member of both Group A and Group B, and Group A has Administrator rights to an object while Group B only has Viewer rights, Appian will treat John Smith as an Administrator. OpenPMF's support for advanced access control models, including proximity-based access control (PBAC), was also further extended. To solve many challenges around implementing secure distributed systems, ObjectSecurity released OpenPMF version 1, at that time one of the first Attribute-Based Access Control (ABAC) products on the market.
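The "highest permission wins" rule for users in several groups can be sketched in Python. The permission levels and their ordering are assumptions for illustration. The sketch also ignores any explicit-deny settings a real product may have, and it is not Appian's API.

```python
from enum import IntEnum


class Permission(IntEnum):
    # Hypothetical levels, ordered from weakest to strongest.
    NONE = 0
    VIEWER = 1
    EDITOR = 2
    ADMINISTRATOR = 3


def effective_permission(user_groups: set[str],
                         role_map: dict[str, Permission]) -> Permission:
    """Return the highest permission granted to any group the user is in."""
    granted = [role_map[g] for g in user_groups if g in role_map]
    return max(granted, default=Permission.NONE)


if __name__ == "__main__":
    role_map = {"Group A": Permission.ADMINISTRATOR, "Group B": Permission.VIEWER}
    print(effective_permission({"Group A", "Group B"}, role_map).name)
```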

The selected users and roles are now listed in the table on the General tab. Privileges on cubes enable users to access business measures and perform analysis.

Object-oriented security is the practice of using common object-oriented design patterns as a mechanism for access control. Such mechanisms are often both easier to use and more effective than traditional security models based on globally accessible resources protected by access control lists. Object-oriented security is closely related to object-oriented testability and other advantages of object-oriented design. When a state-based access control list (ACL) exists and is combined with object-based security, state-based security is available. You do not have permission to view this object's security properties, even as an administrative user.

You could write your own AccessDecisionVoter or AfterInvocationProvider that fires before or after a method invocation, respectively. Such classes would use AclService to retrieve the relevant ACL and then call Acl.isGranted(Permission[] permission, Sid[] sids, boolean administrativeMode) to decide whether permission is granted or denied. Alternatively, you could use our AclEntryVoter, AclEntryAfterInvocationProvider, or AclEntryAfterInvocationCollectionFilteringProvider classes.
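To illustrate the kind of decision Acl.isGranted makes, here is a simplified Python model. Entries are checked in order, and the first entry matching both a requested permission and one of the caller's SIDs decides the outcome (grant or deny). If nothing matches, the check falls back to a parent ACL when inheritance is enabled. This is a sketch of the concept only. Spring Security's real classes are Java and handle more cases, such as auditing and the administrativeMode flag.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Ace:
    sid: str          # principal or role, e.g. "Samantha" or "ROLE_USER"
    permission: int   # permission bitmask, compared by equality here
    granting: bool    # True grants, False denies


@dataclass
class Acl:
    entries: list[Ace] = field(default_factory=list)
    parent: Acl | None = None
    entries_inheriting: bool = True

    def is_granted(self, permissions: list[int], sids: list[str]) -> bool:
        """Simplified model of an ACL permission check."""
        rejected = False
        for perm in permissions:
            for sid in sids:
                # The first entry matching this permission and SID decides.
                match = next((ace for ace in self.entries
                              if ace.permission == perm and ace.sid == sid), None)
                if match is None:
                    continue
                if match.granting:
                    return True
                rejected = True
                break  # this permission is denied; move on to the next one
        if rejected:
            return False
        # Nothing matched here: defer to the parent ACL if inheritance is on.
        if self.entries_inheriting and self.parent is not None:
            return self.parent.is_granted(permissions, sids)
        # Spring's default strategy signals this case with an exception instead.
        return False


if __name__ == "__main__":
    READ, WRITE = 1, 2
    folder = Acl([Ace("ROLE_USER", READ, True)])
    doc = Acl([Ace("Samantha", WRITE, False)], parent=folder)
    print(doc.is_granted([READ], ["Samantha", "ROLE_USER"]))   # True (inherited grant)
    print(doc.is_granted([WRITE], ["Samantha", "ROLE_USER"]))  # False (explicit deny)
```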

What are the key skills of a safety officer?

Whether you are a young single woman or nurturing a family, Lady Guard is designed specifically for women to cover against female-related illnesses. Lady Guard gives you the option to continue taking care of your family living even when you are ill.

Include Your Contact Information Properly

It enabled the central authoring of access policies, as well as automated enforcement across all middleware nodes using local decision/enforcement points. Thanks to the support of several EU-funded research projects, ObjectSecurity found that a central ABAC approach alone was not a manageable way to implement security policies. Readers will get a thorough look at each element of computer security and how the CORBAsecurity specification meets each of these security needs.

Knowledge centers: It is a best practice to give specific groups Viewer rights to knowledge centers instead of setting 'Default (All Other Users)' to Viewer.

This means that no basic user will be able to start this process model.

Appian recommends giving user access to specific groups instead.

Appian has detected that this process model may be used as an action or related action.

Doing so ensures that document folders and documents nested within knowledge centers have explicit viewers set.

You must also grant privileges on each of the dimensions of the cube. However, you can set fine-grained access on a dimension to restrict the privileges, as described in "Creating Data Security Policies on Cubes and Dimensions". You can grant and revoke object privileges on dimensional objects using the SQL GRANT and REVOKE commands. You administer security on views and materialized views for dimensional objects the same way as for any other views and materialized views in the database. You can administer both data security and object security in Analytic Workspace Manager.
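The two layers described here can be modeled with a toy Python cube. First an object privilege (granted or revoked, as SQL GRANT/REVOKE would do) decides whether a user can query the cube at all. Then a data security policy on a dimension limits which members' cells they see. All names and data are hypothetical, and this is not how Oracle implements it.

```python
Cell = tuple[str, str]  # (region, product) coordinates in a tiny cube


class Cube:
    """Toy cube with an object privilege and a per-user data security policy."""

    def __init__(self, cells: dict[Cell, float]) -> None:
        self.cells = cells
        self.select_granted: set[str] = set()         # users holding SELECT
        self.region_policy: dict[str, set[str]] = {}  # user -> visible regions

    def grant_select(self, user: str) -> None:
        self.select_granted.add(user)      # like GRANT SELECT ... TO user

    def revoke_select(self, user: str) -> None:
        self.select_granted.discard(user)  # like REVOKE SELECT ... FROM user

    def query(self, user: str) -> dict[Cell, float]:
        # Object security first: no privilege means no access at all.
        if user not in self.select_granted:
            raise PermissionError(f"{user} has no SELECT privilege on the cube")
        # Data security second: filter cells by the user's allowed regions.
        allowed = self.region_policy.get(user)
        if allowed is None:
            return dict(self.cells)
        return {cell: value for cell, value in self.cells.items() if cell[0] in allowed}


if __name__ == "__main__":
    cube = Cube({("East", "Tea"): 10.0, ("West", "Tea"): 7.0})
    cube.grant_select("ann")
    cube.region_policy["ann"] = {"East"}
    print(cube.query("ann"))
```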

What is a security objective?

General career objective examples: Secure a responsible career opportunity to fully utilize my training and skills, while making a significant contribution to the success of the company. Seeking an entry-level position to begin my career in a high-level professional environment.

Knowledge centers and rule folders are considered top-level objects because their security is inherited by default by all objects nested within them. For example, security set on a knowledge center is inherited by default by all nested document folders and documents. Similarly, security set on a rule folder is inherited by default by all nested rule folders and rule objects, including interfaces, constants, expression rules, decisions, and integrations.
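This default inheritance can be modeled as a walk up the tree: a nested object uses its own security if one is set, and otherwise uses the nearest ancestor's. The node names and the group-to-role dictionary are hypothetical, so this is a sketch of the rule, not Appian's API.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    parent: Optional[Node] = None
    security: Optional[dict[str, str]] = None  # group -> role; None = inherit

    def effective_security(self) -> dict[str, str]:
        """Own security if set, otherwise the nearest ancestor's."""
        node: Optional[Node] = self
        while node is not None:
            if node.security is not None:
                return node.security
            node = node.parent
        return {}  # nothing set anywhere in the chain


if __name__ == "__main__":
    center = Node("Knowledge Center", security={"Sales": "Viewer"})
    folder = Node("Document Folder", parent=center)
    doc = Node("Report", parent=folder)
    print(doc.effective_security())
```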

Objectsecurity. The Security Policy Automation Company.

In the example above, we're retrieving the ACL associated with the "Foo" domain object with identifier 44. We're then adding an ACE so that a principal named "Samantha" can "administer" the object.
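The code this paragraph refers to isn't included here. As a hypothetical Python stand-in, the sketch below records the same kind of entry: an ACE on the "Foo" object with identifier 44 that grants the principal "Samantha" the administration permission. The bit values match Spring Security's BasePermission constants (READ=1, WRITE=2, CREATE=4, DELETE=8, ADMINISTRATION=16). The in-memory store and the insert_ace function are made up for illustration and are not Spring's MutableAcl API.

```python
from enum import IntFlag


class BasePermission(IntFlag):
    # Same bit positions as Spring Security's BasePermission constants.
    READ = 1
    WRITE = 2
    CREATE = 4
    DELETE = 8
    ADMINISTRATION = 16


# Hypothetical in-memory ACL store keyed by (domain type, identifier).
acls: dict[tuple[str, int], list[tuple[str, BasePermission, bool]]] = {}


def insert_ace(domain_type: str, identifier: int, sid: str,
               permission: BasePermission, granting: bool = True) -> None:
    """Append an (SID, permission, grant/deny) entry to an object's ACL,
    creating the ACL first if it doesn't exist yet."""
    acls.setdefault((domain_type, identifier), []).append((sid, permission, granting))


if __name__ == "__main__":
    insert_ace("Foo", 44, "Samantha", BasePermission.ADMINISTRATION)
    print(acls[("Foo", 44)])
```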


The Types Of Security Guards

Topics covered include authentication, identification, and privilege; access control; message security; delegation and proxy problems; auditing; and non-repudiation. The author also provides many real-world examples of how secure object systems can be used to enforce practical security policies. Then select both of the values from the drop-down; here the two values are the one you assigned to app1 and the other you assigned to app2. Keep following steps 1 to 9 carefully. Here, you are defining which user will see which app, and by following this step, you have specified that the user in question will see both applications.

What is a good objective for a security resume?

Career Objective: Seeking the position of 'Safety Officer' in your organization, where I can deliver my attentive skills to ensure the safety and security of the organization and its workers.

Security Vs. Presence

For object security, you also have the option of using SQL GRANT and REVOKE. Data security provides fine-grained control of the data at the cell level. When you want to restrict access to specific areas of a cube, you only need to define data security policies. Data security is implemented using the XML DB security of Oracle Database. Once you have used the above techniques to store some ACL information in the database, the next step is to actually use that ACL information as part of your authorization decision logic.

#object security#what is object security#object security#object security guard#security guard duties


Shut down Windows 11 computer (six ways)

To shut down Windows 11, open the Start menu > Power, and click “Shut down.” Or right-click the “Start” button, open the “Shut down or sign out” menu and choose “Shut down.” Or use the “Alt + F4” keyboard shortcut, choose “Shut down,” and click “OK.” You can also power off Windows 11 using Command Prompt and PowerShell. Although Windows 11 inherits most of the familiar design from previous…
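For the Command Prompt and PowerShell routes, the usual commands are shutdown /s /t 0 and the Stop-Computer cmdlet. Here is a hedged Python sketch (Windows only). The helper builds the command line and runs it only when dry_run is explicitly set to False, so importing or testing it won't power anything off. The function names are hypothetical.

```python
import subprocess


def shutdown_command(use_powershell: bool = False) -> list[str]:
    """Return the argv for an immediate Windows shutdown."""
    if use_powershell:
        return ["powershell", "-NoProfile", "-Command", "Stop-Computer"]
    # /s = shut down, /t 0 = no delay
    return ["shutdown", "/s", "/t", "0"]


def shut_down(use_powershell: bool = False, dry_run: bool = True) -> list[str]:
    """Print the command (dry run, the default) or actually execute it."""
    cmd = shutdown_command(use_powershell)
    if dry_run:
        print("Would run:", " ".join(cmd))
    else:
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    shut_down()  # dry run only
```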


MICROSOFT AZURE FUNDAMENTALS AZ-900 (PART 5)

Azure Governance and Compliance

Azure Blueprints

Lets you standardize cloud subscription or environment deployments.

Instead of having to configure features like Azure Policy for each new subscription, you can define repeatable settings and policies.

You can deploy new Test/Dev environments with security and compliance settings already configured

Development teams can rapidly build and deploy new environments with the knowledge that they are building within organizational requirements

Artifacts

Each component in the blueprint is known as an artifact.

Some artifacts have no additional parameters (configuration) - Example: Threat detection rule for SQL Server policy

Artifacts can contain one or more parameters that can be configured - Example: Allowed location policy for where to deploy resources

You can specify parameter values when you create the blueprint definition or when you assign the blueprint definition to a scope.

Blueprints deploy a new environment based on all the requirements in the artifacts, which can include role assignments, policy assignments, Azure Resource Manager templates, and resource groups

Blueprints are version-able. You can create an initial version and then make updates later

The relationship between the blueprint definition (what should be deployed) and the blueprint assignment (what was deployed) is preserved. It maintains a record of each resource and which blueprint defined it. (Auditable)
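Parameter values can be set either in the blueprint definition or at assignment. In this small Python model, a parameter left as None in the definition must be supplied at assignment, and a value set in the definition is treated as fixed. That rule is an assumption made for the sketch, not the Azure Blueprints API. The parameter names are hypothetical, and extra assignment keys are simply ignored.

```python
from typing import Any, Optional


def resolve_parameters(definition: dict[str, Optional[Any]],
                       assignment: dict[str, Any]) -> dict[str, Any]:
    """Merge blueprint-definition values with assignment-time values.

    None in the definition means "supply this at assignment"; any other
    definition value is fixed and may not be overridden in this sketch.
    """
    resolved: dict[str, Any] = {}
    for name, fixed in definition.items():
        if fixed is not None:
            if name in assignment:
                raise ValueError(f"{name!r} is fixed in the definition")
            resolved[name] = fixed
        elif name in assignment:
            resolved[name] = assignment[name]
        else:
            raise ValueError(f"{name!r} must be supplied at assignment")
    return resolved


if __name__ == "__main__":
    definition = {"allowedLocations": None, "sqlThreatDetection": "enabled"}
    print(resolve_parameters(definition, {"allowedLocations": ["eastus"]}))
```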

Azure Policy

A service in Azure that enables you to create, assign, and manage policies that control or audit your resources. It lets you define both individual policies and groups of related policies, known as initiatives.

Evaluates your resources and highlights those that are not compliant

Prevents non-compliant resources from being created

Policies can be set at any level: resource, resource group, subscription, etc.

Policies set at a higher level are inherited and applied to all the groupings that fall within that parent

For example, if the resource group has a policy, all the resources in that resource group receive the same policy.

Comes with built-in policy and initiative definitions for Storage, Networking, Compute, Security Center, and monitoring.

Some auto-remediation of non-compliant resources is possible
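Policy inheritance down the scope hierarchy can be modeled as collecting the policies from every ancestor scope. This is a minimal Python sketch with hypothetical scope and policy names. Real Azure Policy assignments, effects (such as audit or deny), and exclusions are richer than a single pass/fail rule.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional

# A rule returns True when the resource (a plain dict here) is compliant.
Rule = Callable[[dict], bool]


@dataclass
class Scope:
    name: str
    parent: Optional[Scope] = None
    policies: dict[str, Rule] = field(default_factory=dict)

    def effective_policies(self) -> list[tuple[str, Rule]]:
        """Policies inherited from all ancestors plus those assigned here."""
        inherited = self.parent.effective_policies() if self.parent else []
        return inherited + list(self.policies.items())


def non_compliant(scope: Scope, resource: dict) -> list[str]:
    """Names of the effective policies the resource would violate."""
    return sorted(name for name, rule in scope.effective_policies()
                  if not rule(resource))


if __name__ == "__main__":
    sub = Scope("subscription", policies={
        "allowed-locations": lambda r: r["location"] in {"eastus", "westeurope"}})
    rg = Scope("rg-app", parent=sub, policies={
        "require-owner-tag": lambda r: "owner" in r.get("tags", {})})
    print(non_compliant(rg, {"location": "southeastasia", "tags": {}}))
```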

Azure Policy Initiatives

Are a way of grouping related policies together.

Contains all the policy definitions to help track your compliance state for a larger goal

For example: Azure Security Center has an initiative named Enable Monitoring

Included definitions in Security Center

Monitor unencrypted SQL Database

Monitor OS vulnerabilities

Monitor missing Endpoint Protection

Resource Locks

Prevent resources from being accidentally deleted or changed.

Even with RBAC in place, there is still a risk that people with the appropriate access rights could accidentally delete critical resources

Resource locks can be applied to individual resources, resource groups, or subscriptions

Locks are inherited: for example, a lock on a resource group applies to all resources in that resource group

Resource Lock Types

There are two types: one prevents users from deleting a resource, the other prevents users from changing or deleting it

Delete (CanNotDelete) means authorized users can read and modify a resource, but cannot delete the resource

ReadOnly means authorized users can read a resource, but can’t delete it or update it (similar to restricting all authorized users to the permissions of the Reader role)

Can be managed via the Azure portal, Azure CLI, Azure PowerShell, and Azure Resource Manager templates

How to delete or change a locked resource?

Remove the lock

Then apply any action you have permissions to perform

Reapply the lock afterward
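Taken together, the two lock types and their inheritance can be sketched in Python. Every lock on the resource or on a parent scope is collected, and the most restrictive one decides. This is a simplified model of management operations only. RBAC permissions still apply separately, and real ReadOnly locks can also block some operations that aren't plain updates.

```python
from enum import IntEnum
from typing import Iterable


class LockLevel(IntEnum):
    NONE = 0
    CAN_NOT_DELETE = 1  # shown as "Delete" in the portal
    READ_ONLY = 2


def is_blocked(operation: str, locks_on_path: Iterable[LockLevel]) -> bool:
    """True if a lock on the resource or any parent scope blocks the
    operation ("read", "update" or "delete"). The most restrictive lock wins."""
    level = max(locks_on_path, default=LockLevel.NONE)
    if operation == "delete":
        return level >= LockLevel.CAN_NOT_DELETE
    if operation == "update":
        return level == LockLevel.READ_ONLY
    return False  # reads are not blocked by either lock type


if __name__ == "__main__":
    # A CanNotDelete lock on the resource group, no lock on the resource itself.
    path = [LockLevel.CAN_NOT_DELETE, LockLevel.NONE]
    print(is_blocked("update", path), is_blocked("delete", path))
```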

Service Trust Portal

Provides access to various content, tools and other resources about Microsoft security, privacy and compliance practices.

Contains details of Microsoft’s implementation of controls and processes that protect cloud services and the customer data therein.

URL: https://servicetrust.Microsoft.com

Features and Tools for managing and deploying Azure resources

Tools for interacting with Azure

Azure Portal

Azure PowerShell

Azure Command Line Interface (CLI)

These tools are used to interact with the Azure environment, management groups, subscriptions, resource groups, resources, and so on

Azure Cloud Shell

Browser-based shell tool that allows you to create, configure, and manage Azure resources using a shell

Supports Azure PowerShell and the Azure CLI (which uses a Bash shell)

No local installation or configuration required

Authenticated with your Azure credentials

Use the shell you are most comfortable with

Azure PowerShell

Shell with which developers, DevOps, and IT professionals run commands called cmdlets (command-lets)

The commands call Azure REST APIs to perform management tasks in Azure

They can run independently or combined to help orchestrate complex actions

Routine setup, tear down, and maintenance of a single resource or multiple connected resources

Deployment of an entire infrastructure, which might contain dozens or hundreds of resources from imperative code

Allows you to script commands to make the process repeatable and automatable.

You can install Azure PowerShell and configure it on Windows, Linux, and macOS

Azure CLI

Functionally equivalent to Azure PowerShell; only the syntax is different

Uses Bash commands, whereas Azure PowerShell uses PowerShell commands

Same benefits as PowerShell
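To show "same task, different syntax", here is a small Python helper that builds both forms of one real operation, creating a resource group (az group create and New-AzResourceGroup). It only returns strings and doesn't call Azure. The function names are hypothetical, and the quoting rules are simplified.

```python
import shlex


def ps_quote(value: str) -> str:
    """Single-quote a value for PowerShell, doubling embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def create_resource_group_commands(name: str, location: str) -> dict[str, str]:
    """Return the Azure CLI and Azure PowerShell forms of the same task."""
    cli = ["az", "group", "create", "--name", name, "--location", location]
    return {
        "azure_cli": shlex.join(cli),  # POSIX-shell quoting, as in Bash
        "azure_powershell": (
            f"New-AzResourceGroup -Name {ps_quote(name)} -Location {ps_quote(location)}"
        ),
    }


if __name__ == "__main__":
    for tool, command in create_resource_group_commands("rg-demo", "eastus").items():
        print(f"{tool}: {command}")
```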

Azure Arc

Allows you to extend Azure compliance and monitoring to your hybrid and multi-cloud configurations via Azure Resource Manager.

Simplifies governance and management by delivering a consistent multi-cloud and on-premises management platform.

Provides a centralized, unified way to:

Manage your entire environment together by projecting your existing non-Azure resources into ARM

Manage multi-cloud and hybrid virtual machines, Kubernetes clusters, and databases as if they are on Azure

Use familiar Azure services and management capabilities no matter where the resources live

Continue using traditional ITOps while introducing DevOps practices to support new cloud and native patterns in your environment.

Configure custom locations as an abstraction layer on top of Azure Arc-enabled Kubernetes clusters and cluster extensions.

Currently you can manage the following resources outside of Azure:

Servers

Kubernetes clusters

Azure data services

SQL Server

Virtual Machines (preview)

Azure Resource Manager and Azure ARM Templates

Deployment and management service for Azure

Provides a management layer that enables you to create, update, and delete resources in your Azure account.

When a request comes from any Azure tool, API, or SDK, ARM receives the request. It authenticates and authorizes the request.

Benefits

Manage your infrastructure through declarative templates rather than scripts. Templates are JSON files

Deploy, manage, and monitor all resources in the solution as a group, rather than individually

Re-deploy your solution throughout the development life-cycle and have confidence your resources are deployed in a consistent state

Define dependencies and order when deploying them

Apply access control to all services because RBAC is natively integrated into the management platform

Apply tags to resources to logically organize all the resources in your subscription

Clarify your organization's billing by viewing costs for a group of resources with the same tag.

ARM Templates

Infrastructure as code - a concept where you manage your infrastructure as lines of code.

Uses Azure Cloud Shell, PowerShell, or Azure CLI

Via templates, you can describe the resources you want to deploy in a declarative JSON format.

Deployment code is verified before it is run

Ensures resources are created and connected correctly.

Resources that don't depend on each other are created in parallel
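Here's a minimal sketch of what such a declarative JSON template contains, built as a Python dict and printed as JSON. The top-level $schema, contentVersion, and resources keys are standard template sections. The storage account's type, apiVersion, SKU, and kind values are assumptions that should be checked against the current template reference before use.

```python
import json


def storage_account_template(account_name: str, location: str) -> dict:
    """Build a minimal ARM template declaring a single storage account."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "resources": [
            {
                "type": "Microsoft.Storage/storageAccounts",
                "apiVersion": "2022-09-01",  # assumed; check the template reference
                "name": account_name,
                "location": location,
                "sku": {"name": "Standard_LRS"},
                "kind": "StorageV2",
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(storage_account_template("demostore123", "eastus"), indent=2))
```

Saved as a file such as template.json, it could then be deployed with az deployment group create --resource-group <group> --template-file template.json.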

Benefits

Declarative syntax: allows you to create and deploy an entire Azure infrastructure declaratively.

Repeatable results: repeatedly deploy your infrastructure throughout the development lifecycle and have confidence your resources are deployed in a consistent manner.

Orchestration: you don't have to worry about the complexities of ordering operations; Resource Manager determines the correct order.

Modular files: break your templates into smaller, reusable components and link them together at deployment time. Nesting is allowed.

Extensibility: you can add PowerShell or Bash scripts to your templates. Deployment scripts extend your ability to set up resources during deployment.
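The orchestration idea can be illustrated with Python's standard graphlib. Given each resource's dependsOn list, the resources are grouped into waves. Everything in one wave has its dependencies satisfied by earlier waves, so it could be deployed in parallel. This mimics the concept only and makes no claim about how Resource Manager schedules work internally. The resource names are hypothetical.

```python
from graphlib import TopologicalSorter


def deployment_waves(depends_on: dict[str, list[str]]) -> list[list[str]]:
    """Group resources into waves that can each be deployed in parallel."""
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all dependencies already deployed
        waves.append(ready)
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    template_deps = {
        "vnet": [],
        "storage": [],
        "nic": ["vnet"],
        "vm": ["nic", "storage"],
    }
    print(deployment_waves(template_deps))
```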


Docker commands cheat sheet

docker exec -it /bin/sh. docker restart. Show running container stats.

Docker Cheat Sheet: all commands below are called as options to the base docker command. for more information on a particular command. To enter a running container, attach a new shell process to it; for a running container called foo, use: docker exec -it foo /bin/bash. Images are just.

Reference: Best Practices.

VOLUME creates a mount point with the specified name and marks it as holding externally mounted volumes from the native host or other containers.

FROM can appear multiple times within a single Dockerfile in order to create multiple images. The tag or digest values are optional. If you omit either of them, the builder assumes a latest tag by default. The builder returns an error if it cannot match the tag value.

Normal shell processing does not occur when using the exec form. There can only be one CMD instruction in a Dockerfile. If the user specifies arguments to docker run, they will override the default specified in CMD.

To include spaces within a LABEL value, use quotes and backslashes as you would in command-line parsing. The environment variables set using ENV will persist when a container is run from the resulting image.

Match rules. Prepend exec to get around this drawback. It can be used multiple times in a single Dockerfile.

Multiple variables may be defined by specifying ARG multiple times. It is not recommended to use build-time variables for passing secrets like GitHub keys, user credentials, etc. Build-time variable values are visible to any user of the image with the docker history command.

ONBUILD: the trigger will be executed in the context of the downstream build, as if it had been inserted immediately after the FROM instruction in the downstream Dockerfile. Any build instruction can be registered as a trigger. Triggers are inherited by the "child" build only. In other words, they are not inherited by "grand-children" builds.

HEALTHCHECK: after a certain number of consecutive failures, the container becomes unhealthy. If a single run of the check takes longer than timeout seconds, the check is considered to have failed. It takes retries consecutive failures of the health check for the container to be considered unhealthy. The command's exit status indicates the health status of the container.

SHELL allows an alternate shell to be used, such as zsh, csh, tcsh, powershell, and others.
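Here is a small Python model of the HEALTHCHECK rules quoted above. The timeout and retries fields mirror the --timeout and --retries options, whose defaults are 30 seconds and 3. It sketches the state transitions only, not Docker's implementation, and it leaves out the interval and start-period settings.

```python
from dataclasses import dataclass


@dataclass
class HealthState:
    retries: int = 3       # consecutive failures before "unhealthy"
    timeout: float = 30.0  # seconds; a slower check counts as a failure
    status: str = "starting"
    failures: int = 0

    def record(self, exit_code: int, duration: float) -> str:
        """Update the status from one check run (exit code 0 = success)."""
        failed = exit_code != 0 or duration > self.timeout
        if failed:
            self.failures += 1
            if self.failures >= self.retries:
                self.status = "unhealthy"
        else:
            self.failures = 0
            self.status = "healthy"
        return self.status


if __name__ == "__main__":
    health = HealthState(retries=2, timeout=5)
    for exit_code, seconds in [(0, 1.0), (1, 1.0), (0, 9.0)]:
        print(health.record(exit_code, seconds))
```

A single failed check doesn't flip a healthy container; only retries failures in a row do, and one success resets the count.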


A developer no more...

I started my career in technology as a web developer. I learned HTML by taking it apart. I went to the darkest corners of the web, clicked on View Source, and learned what I could do with a little bit of syntax and an idea. It was the turn of the century, a time when mashups happened before “mashup” was a term. I started with HTML, grew into DHTML (precursor to jQuery/AJAX), JavaScript, Java, and eventually PHP. I stayed with PHP for years.

It was a simpler time, before there were any real front-end frameworks. Front-end developers were more graphic designers with coding skills than full-fledged developers, at least in practice. Now, much has changed. Almost 20 years into my career, I am an architect for a cloud company, but I have long strayed away from code. I went from PHP web developer to B2B account manager, to systems administrator. In the early 2000s, PHP didn’t pay as well as .NET, and I was not the Microsoft-centric technologist that I am now.

As a Systems Administrator, I didn’t have time to be a developer beyond simple automation scripts. Many of the daily tasks in supporting a Microsoft environment came down to subjective user behavior. After several years, I had become more comfortable in a GUI than in a command line. Then… it happened. We deployed Exchange 2007 and all of my bright, shiny, point-and-click tools had disappeared. Microsoft had moved all reporting functionality to third-party tools and PowerShell. My job wasn’t willing to spring for untested third-party tools. PowerShell became my weapon of choice. It felt painful at first, but in practice, it was great. It was easy to follow, as much of the object notation followed syntax I was familiar with from Java, including constructs, inheritance, and development behaviors. It was also as flexible as PHP, where variable definitions could be reset on the fly and symbols followed a similar ‘dollar-sign’ prefix. It was the best of times, and the worst of times. I gained a shiny new skill, but the expectations were high. I was no longer a developer with a practiced mindset. I was a SysAdmin doing shotgun development… what some of us now call a DevOps Engineer.

After a few years supporting this environment, watching it grow, and re-architecting it to be more self-sustaining… I left. I architected a global-scale compute environment to allow for proactive support. When you can shut down 50% of production and no one notices, you’ve done a good job. It was time for a new challenge. During my career, I had joined the IASA and taken an office in the local chapter. It was time to push forward as an architect. So I did. And it has been a great ride that I’m still on. I am not a developer. I am not a DBA. I am not a Data Analyst. I do understand how all of these roles function and what is required to support them. I know how to build, scale, secure, and manipulate the infrastructure required to support each role and the workloads those roles create. The gift of being an architect is that you get to learn everything. You get to be “the person” who has a 1000-foot view of all workloads and all infrastructure. There’s nothing you don’t get the opportunity to learn, understand, or design. It is a place where true generalists are able to shine.

Now that I’ve very briefly described my career and experiences in the tech world from 1998 to now, I sit at my desk looking at archive files. They’re copies of papers and old application code that I’ve written. One archive went so far as to include a detailed business plan attached to software written using a 10-year-old version of a given PHP framework. It’s been several years since I’ve had to write code for a web application. It’s time. It’s time to press delete, possibly keep the idea, and write new code in a new language. For an infrastructure architect and technology generalist like me, code is a tricky subject. Developers and Operations do not get along until they do. I’ve been both, and I think it’s time to start blurring that line again. Am I going to modify this instance of WordPress? HELL NO! Am I going to write a few microservices or something else? Possibly. Let’s see how this journey goes. Once a builder, always a builder.


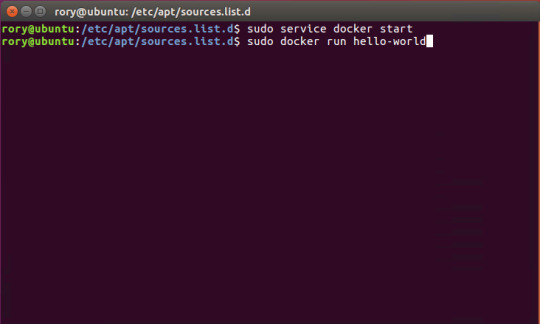

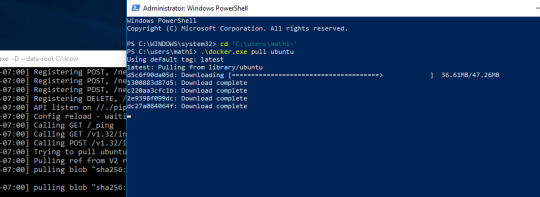

Start Docker In Ubuntu

For a Linux dev environment on Windows with WSL 2 and Docker Desktop, see the Docker docs on the Docker Desktop WSL 2 backend. The notes below are valid only for WSL 1. Docker cannot run inside WSL 1. The proposed workaround is to connect WSL to the Docker Desktop instance running in Windows (see Setting Up Docker for Windows and WSL). Be aware that removing /etc/docker deletes all your images and data. You can check the logs with journalctl -u docker.service. Then run systemctl daemon-reload && systemctl enable docker && systemctl start docker. This worked for me.
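Which of these notes applies depends on whether you are on WSL 1 or WSL 2. Below is a minimal Python sketch for telling them apart. It relies on a common heuristic, which is an assumption and not a documented API: WSL 2 runs a real Linux kernel whose /proc/version string usually contains "microsoft-standard", while WSL 1 reports a synthetic kernel string with a capitalized "Microsoft".

```python
from pathlib import Path
from typing import Optional


def wsl_version(proc_version: str) -> Optional[int]:
    """Guess the WSL version from the text of /proc/version.

    Heuristic (an assumption, not an official API):
    - WSL 2 kernel release strings usually contain "microsoft-standard"
    - WSL 1 reports a synthetic kernel string containing "Microsoft"
    Returns 2, 1, or None when the text does not look like WSL at all.
    """
    text = proc_version.lower()
    if "microsoft-standard" in text or "wsl2" in text:
        return 2
    if "microsoft" in text:
        return 1
    return None


if __name__ == "__main__":
    proc = Path("/proc/version")
    version = wsl_version(proc.read_text()) if proc.exists() else None
    if version == 1:
        print("WSL 1: the Docker daemon can't run here; connect to Docker Desktop instead.")
    elif version == 2:
        print("WSL 2: the Docker Desktop WSL 2 backend applies.")
    else:
        print("This does not look like WSL.")
```

Custom WSL 2 kernels may use a different release string, so treat a `None` or `1` result on such systems with suspicion.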

```
$ docker images
REPOSITORY   TAG      ID
ubuntu       12.10    b750fe78269d
me/myapp     latest   7b2431a8d968
```

Start and stop the existing containers of a Compose project with docker-compose start and docker-compose stop. After installing the Nvidia Container Toolkit, you'll need to restart the Docker daemon so Docker can use your Nvidia GPU: sudo systemctl restart docker
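To consume that table from a script, the Python sketch below splits each row on runs of two or more spaces. This assumes the default padded layout, and it keeps multi-word headers such as "IMAGE ID" together. For anything long-lived, `docker images --format` gives a stable, explicit layout and is the sturdier option.

```python
import re
from typing import Dict, List

# Two or more spaces separate columns; single spaces stay inside a column.
COLUMN_GAP = re.compile(r"\s{2,}")


def parse_docker_images(output: str) -> List[Dict[str, str]]:
    """Turn the default `docker images` table into a list of row dicts."""
    lines = [line.strip() for line in output.strip().splitlines() if line.strip()]
    if not lines:
        return []
    headers = COLUMN_GAP.split(lines[0])
    return [dict(zip(headers, COLUMN_GAP.split(line))) for line in lines[1:]]


sample = """\
REPOSITORY   TAG      ID
ubuntu       12.10    b750fe78269d
me/myapp     latest   7b2431a8d968
"""

for image in parse_docker_images(sample):
    print(f"{image['REPOSITORY']}:{image['TAG']} -> {image['ID']}")
```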

Complete Docker CLI

Container Management CLIs

Inspecting The Container

Interacting with Container

Image Management Commands

Image Transfer Commands

Builder Main Commands

The Docker CLI

Manage images

docker build

Create an image from a Dockerfile.

docker run

Run a command in a new container created from an image.

Manage containers

docker create


Create a container from an image.

docker exec


Run commands in a container.

docker start

Start or stop a container (docker start / docker stop).

docker ps

List running containers (docker ps) and kill them (docker kill).

Images

docker images

Lists images.

docker rmi

Deletes images.

Also see

Getting Started (docker.io)

Inheritance

Variables

Initialization

Onbuild

Commands

Entrypoint

Configures a container that will run as an executable.

The shell form (ENTRYPOINT command param1 param2) uses shell processing to substitute shell variables, and ignores any CMD or docker run command-line arguments.

Metadata

See also

Basic example

Commands

Reference

Building

Ports

Commands

Environment variables

Dependencies

Other options

Advanced features

Labels

DNS servers

Devices

External links

Hosts

Services

To list all the services running in the swarm

To see all running services

To see the logs of all services

To scale services quickly across qualified nodes

Clean up

To clean or prune unused (dangling) images

To remove all images that are not used by any container, add -a

To prune your entire system

To leave the swarm

To remove the swarm (deletes all volume data and database info)

To kill all running containers
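The commands for these tasks aren't shown here. As one example, killing all running containers is commonly done by passing the IDs from docker ps -q to docker kill (in a shell: docker kill $(docker ps -q)). Below is a Python sketch of the same logic. It assumes the docker CLI is on PATH. The `run` parameter is a hypothetical hook so the logic can be tried without a Docker daemon.

```python
import subprocess
from typing import Callable, List

# A runner takes an argv list and returns the command's stdout.
Runner = Callable[[List[str]], str]


def run_docker(argv: List[str]) -> str:
    """Run a command, raising CalledProcessError if it exits non-zero."""
    return subprocess.run(argv, check=True, capture_output=True, text=True).stdout


def kill_all_running(run: Runner = run_docker) -> List[str]:
    """Kill every running container and return the IDs that were targeted."""
    # `docker ps -q` prints one running-container ID per line.
    ids = run(["docker", "ps", "-q"]).split()
    if ids:
        # `docker kill` accepts several container IDs in one call.
        run(["docker", "kill", *ids])
    return ids
```

Skipping `docker kill` when the ID list is empty avoids the "requires at least 1 argument" error that the shell one-liner produces when nothing is running.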

Contributor -

Sangam Biradar - Docker Community Leader

The Jellyfin project and its contributors offer a number of pre-built binary packages to assist in getting Jellyfin up and running quickly on multiple systems.

Container images

Docker

Windows (x86/x64)

Linux

Linux (generic amd64)

Debian

Ubuntu

Container images

Official container image: jellyfin/jellyfin.

LinuxServer.io image: linuxserver/jellyfin.

hotio image: hotio/jellyfin.

Jellyfin distributes official container images on Docker Hub for multiple architectures. These images are based on Debian and built directly from the Jellyfin source code.

Additionally, the LinuxServer.io project and hotio distribute images based on Ubuntu and the official Jellyfin Ubuntu binary packages; see here and here for their Dockerfiles.

Note

For ARM hardware and RPi, it is recommended to use the LinuxServer.io or hotio image since hardware acceleration support is not yet available on the native image.

Docker

Docker allows you to run containers on Linux, Windows and MacOS.

The basic steps to create and run a Jellyfin container using Docker are as follows.

Follow the official installation guide to install Docker.

Download the latest container image.

Create persistent storage for configuration and cache data.

Either create two persistent volumes:

Or create two directories on the host and use bind mounts:

Create and run a container in one of the following ways.

Note

The default network mode for Docker is bridge mode. Bridge mode will be used if host mode is omitted. Use host mode for networking in order to use DLNA or an HDHomeRun.

Using Docker command line interface:

Using host networking (--net=host) is optional but required in order to use DLNA or HDHomeRun.

Bind mounts are needed to pass folders from the host OS to the container OS. Volumes, by contrast, are maintained by Docker and can be considered easier to back up and to control from external programs. For a simple setup, bind mounts are generally considered easier to use than volumes. If you use bind mounts, replace jellyfin-config and jellyfin-cache with /path/to/config and /path/to/cache respectively. Multiple media libraries can be bind mounted if needed:

Note

There is currently an issue with read-only mounts in Docker. If there are submounts within the main mount, the submounts are read-write capable.
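The docker run command itself isn't reproduced on this page. The Python sketch below assembles one from the choices described above: named volumes or bind mounts, optional host networking, and read-only media libraries. Several details are assumptions for illustration: the container paths /config, /cache, and /media/..., the container name, the published port in bridge mode, and the restart policy. Check them against the official Jellyfin documentation before relying on them.

```python
from typing import List, Optional, Sequence, Tuple


def jellyfin_run_argv(
    config: str = "jellyfin-config",
    cache: str = "jellyfin-cache",
    media: Sequence[Tuple[str, str]] = (),
    host_network: bool = False,
    user: Optional[str] = None,
    image: str = "jellyfin/jellyfin",
) -> List[str]:
    """Assemble a `docker run` argument list for a Jellyfin container.

    config/cache: a named volume ("jellyfin-config") or an absolute host
    path ("/path/to/config"); --volume accepts either form.
    media: (host path, container path) pairs, bind mounted read-only.
    user: optional "uid:gid" string.
    """
    argv = ["docker", "run", "-d", "--name", "jellyfin"]
    if user:
        argv += ["--user", user]
    if host_network:
        # Host networking is only required for DLNA or HDHomeRun.
        argv.append("--net=host")
    else:
        # Default bridge mode: publish the web UI port explicitly (assumed 8096).
        argv += ["--publish", "8096:8096"]
    argv += ["--volume", f"{config}:/config", "--volume", f"{cache}:/cache"]
    for host_path, container_path in media:
        argv += ["--mount", f"type=bind,source={host_path},target={container_path},readonly"]
    argv += ["--restart=unless-stopped", image]
    return argv


if __name__ == "__main__":
    # Bind mounts instead of volumes, plus two hypothetical media libraries.
    argv = jellyfin_run_argv(
        config="/path/to/config",
        cache="/path/to/cache",
        media=[("/srv/movies", "/media/movies"), ("/srv/music", "/media/music")],
    )
    print(" ".join(argv))
```

To execute the command, pass the list to subprocess.run(argv, check=True), or print it and paste it into a shell. Per the note above, readonly may not protect submounts inside a media folder.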

Using Docker Compose:

Create a docker-compose.yml file with the following contents:

Then while in the same folder as the docker-compose.yml run:

To run the container in the background, add -d to the above command.

You can learn more about using Docker by reading the official Docker documentation.

Hardware Transcoding with Nvidia (Ubuntu)

You are able to use hardware encoding with Nvidia, but it requires some additional configuration. These steps require basic knowledge of Ubuntu but nothing too special.

Adding Package Repositories

First off, you'll need to add the Nvidia package repositories to your Ubuntu installation. This can be done by running the following commands:

Installing Nvidia container toolkit

Next, we'll need to install the Nvidia container toolkit. This can be done by running the following commands:

After installing the Nvidia Container Toolkit, you'll need to restart the Docker Daemon in order to let Docker use your Nvidia GPU:

Changing the docker-compose.yml

Now that all the packages are in order, let's change the docker-compose.yml to let the Jellyfin container make use of the Nvidia GPU. The following lines need to be added to the file:

Your completed docker-compose.yml file should look something like this:

Note

For Nvidia hardware encoding, the docker-compose file format version needs to be at least 2. However, we recommend sticking with version 2.3, as it has proven to work with NVENC encoding.

Unraid Docker

An Unraid Docker template is available in the repository.

Open the unRaid GUI (at least unRaid 6.5) and click on the 'Docker' tab.

Add the following line under 'Template Repositories' and save the options.

Click 'Add Container' and select 'jellyfin'.

Adjust any required paths and save your changes.

Kubernetes

A community project to deploy Jellyfin on Kubernetes-based platforms exists at their repository. Any issues or feature requests related to deployment on Kubernetes-based platforms should be filed there.

Podman

Podman allows you to run containers as non-root. It's also the officially supported container solution on RHEL and CentOS.

Steps to run Jellyfin using Podman are almost identical to Docker steps:

Install Podman:

Download the latest container image:

Create persistent storage for configuration and cache data:

Either create two persistent volumes:

Or create two directories on the host and use bind mounts:

Create and run a Jellyfin container:

Note that Podman doesn't require root access and it's recommended to run the Jellyfin container as a separate non-root user for security.

If SELinux is enabled, you need to either use --privileged or supply the z volume option to allow Jellyfin to access the volumes.

Replace jellyfin-config and jellyfin-cache with /path/to/config and /path/to/cache respectively if using bind mounts.

To mount your media library read-only append ':ro' to the media volume:

To run as a systemd service see Running containers with Podman and shareable systemd services.

Cloudron

Cloudron is a complete solution for running apps on your server and keeping them up-to-date and secure. On your Cloudron you can install Jellyfin with a few clicks via the app library and updates are delivered automatically.

The source code for the package can be found here. Any issues or feature requests related to deployment on Cloudron should be filed there.

Windows (x86/x64)

Windows installers and builds in ZIP archive format are available here.

Warning

If you installed a version prior to 10.4.0 using a PowerShell script, you will need to manually remove the service using the command nssm remove Jellyfin, and uninstall the server by removing all the files manually. You might also need to move the data files to the correct location, or point the installer at the old location.

Warning

The 32-bit or x86 version is not recommended. ffmpeg and its video encoders generally perform better as a 64-bit executable due to the extra registers provided. This means that the 32-bit version of Jellyfin is deprecated.

Install using Installer (x64)

Install

Download the latest version.

Run the installer.

(Optional) When installing as a service, pick the service account type.

If everything was completed successfully, the Jellyfin service is now running.

Open your browser at http://localhost:8096 to finish setting up Jellyfin.

Update

Download the latest version.

Run the installer.

If everything was completed successfully, the Jellyfin service is now running as the new version.

Uninstall

Go to Add or remove programs in Windows.

Search for Jellyfin.

Click Uninstall.

Manual Installation (x86/x64)

Install

Download and extract the latest version.

Create a folder jellyfin at your preferred install location.

Copy the extracted folder into the jellyfin folder and rename it to system.

Create jellyfin.bat within your jellyfin folder containing:

To use the default library/data location at %localappdata%:

To use a custom library/data location (Path after the -d parameter):

To use a custom library/data location (Path after the -d parameter) and disable the auto-start of the webapp:

Run

Open your browser at http://<--Server-IP-->:8096 (if auto-start of webapp is disabled)

Update

Stop Jellyfin

Rename the Jellyfin system folder to system-bak

Download and extract the latest Jellyfin version

Copy the extracted folder into the jellyfin folder and rename it to system

Run jellyfin.bat to start the server again

Rollback

Stop Jellyfin.

Delete the system folder.

Rename system-bak to system.

Run jellyfin.bat to start the server again.
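Both the update and the rollback boil down to swapping folders. Below is a Python sketch of those steps using pathlib and shutil. It assumes Jellyfin is already stopped and that new_build is the freshly extracted release folder. The function names are hypothetical. Deleting an older system-bak before renaming is a choice made in this sketch so that the rename cannot collide; the steps above don't specify it.

```python
import shutil
from pathlib import Path


def update(jellyfin_dir: Path, new_build: Path) -> None:
    """Swap in a new build, keeping the current one as system-bak."""
    system, backup = jellyfin_dir / "system", jellyfin_dir / "system-bak"
    if backup.exists():
        shutil.rmtree(backup)           # keep only one previous version
    system.rename(backup)               # rename system -> system-bak
    shutil.copytree(new_build, system)  # copy the extracted build in as system


def rollback(jellyfin_dir: Path) -> None:
    """Restore system-bak as system."""
    system, backup = jellyfin_dir / "system", jellyfin_dir / "system-bak"
    shutil.rmtree(system)               # delete the system folder
    backup.rename(system)               # rename system-bak -> system
```

After either call, run jellyfin.bat to start the server again.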

MacOS

MacOS Application packages and builds in TAR archive format are available here.

Install

Download the latest version.

Drag the .app package into the Applications folder.

Start the application.

Open your browser at http://127.0.0.1:8096.

Upgrade

Download the latest version.

Stop the currently running server either via the dashboard or using the application icon.

Drag the new .app package into the Applications folder and click yes to replace the files.

Start the application.

Open your browser at http://127.0.0.1:8096.

Uninstall


Stop the currently running server either via the dashboard or using the application icon.

Move the .app package to the trash.

Deleting Configuration

This will delete all settings and user information. This applies for the .app package and the portable version.

Delete the folder ~/.config/jellyfin/

Delete the folder ~/.local/share/jellyfin/

Portable Version

Download the latest version

Extract it into the Applications folder

Open Terminal and type cd followed by a space, then drag the jellyfin folder into the terminal.

Type ./jellyfin to run jellyfin.

Open your browser at http://localhost:8096

Closing the terminal window will end Jellyfin. Running Jellyfin in screen or tmux can prevent this from happening.

Upgrading the Portable Version

Download the latest version.

Stop the currently running server either via the dashboard or using CTRL+C in the terminal window.

Extract the latest version into Applications

Open Terminal and type cd followed by a space, then drag the jellyfin folder into the terminal.

Type ./jellyfin to run jellyfin.

Open your browser at http://localhost:8096

Uninstalling the Portable Version

Stop the currently running server either via the dashboard or using CTRL+C in the terminal window.

Move the /Applications/jellyfin-version folder to the Trash. Replace version with the actual version number you are trying to delete.

Using FFmpeg with the Portable Version

The portable version doesn't come with FFmpeg by default, so to install FFmpeg you have three options.

use the package manager Homebrew by typing brew install ffmpeg into your Terminal (here's how to install Homebrew if you don't have it already),

download the most recent static build from this link (compiled by a third party; see this page for options and information), or

compile from source, available from the official website.

More detailed download options, documentation, and signatures can be found.

If using static build, extract it to the /Applications/ folder.

Navigate to the Playback tab in the Dashboard and set the path to FFmpeg under FFmpeg Path.
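To find the value for the FFmpeg Path field, the Python sketch below checks PATH first and then a few likely locations. The candidate paths are assumptions. Homebrew typically installs to /opt/homebrew/bin on Apple Silicon and to /usr/local/bin on Intel Macs. The /Applications/ffmpeg entry assumes a static build whose single ffmpeg binary was extracted straight into /Applications.

```python
import os
import shutil
from pathlib import Path
from typing import Iterable, Optional

# Assumed locations, checked in order after PATH.
CANDIDATES = (
    "/opt/homebrew/bin/ffmpeg",  # Homebrew on Apple Silicon
    "/usr/local/bin/ffmpeg",     # Homebrew on Intel Macs
    "/Applications/ffmpeg",      # static build extracted into /Applications
)


def find_ffmpeg(candidates: Iterable[str] = CANDIDATES, use_path: bool = True) -> Optional[str]:
    """Return the path of an executable ffmpeg, or None if none is found."""
    if use_path:
        found = shutil.which("ffmpeg")
        if found:
            return found
    for candidate in candidates:
        path = Path(candidate)
        # Must be a regular file the current user is allowed to execute.
        if path.is_file() and os.access(path, os.X_OK):
            return str(path)
    return None


if __name__ == "__main__":
    print(find_ffmpeg() or "ffmpeg not found; install it with one of the options above")
```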

Linux

Linux (generic amd64)

Generic amd64 Linux builds in TAR archive format are available here.

Installation Process

Create a directory in /opt for jellyfin and its files, and enter that directory.

Download the latest generic Linux build from the release page. The generic Linux build ends with 'linux-amd64.tar.gz'. The rest of these instructions assume version 10.4.3 is being installed (i.e. jellyfin_10.4.3_linux-amd64.tar.gz). Download the generic build, then extract the archive:

Create a symbolic link to the Jellyfin 10.4.3 directory. This allows an upgrade by repeating the above steps and enabling it by simply re-creating the symbolic link to the new version.

Create four sub-directories for Jellyfin data.

If you are running Debian or a derivative, you can also download and install an ffmpeg release built specifically for Jellyfin. Be sure to download the latest release that matches your OS (4.2.1-5 for Debian Stretch assumed below).

If you run into any dependency errors, run this and it will install them and jellyfin-ffmpeg.

Due to the number of command line options that must be passed, it is easiest to create a small script to run Jellyfin.

Then paste the following commands and modify as needed.

Assuming you want Jellyfin to run as a non-root user, chown all files and directories to your normal login user and group. Also make the startup script above executable.

Finally, you can run it. You will see lots of log information when it runs; this is normal. Setup is as usual in the web browser.
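The symbolic link created during installation is what makes upgrades simple: extract the new version alongside the old one and repoint the link. The Python sketch below repoints it atomically by creating a temporary link and then renaming it over the old one. It assumes a POSIX filesystem and the /opt/jellyfin layout described above. switch_version is a hypothetical helper, and the directory name in the example is assumed from the archive name.

```python
import os
from pathlib import Path


def switch_version(base: Path, version_dir: str, link_name: str = "jellyfin") -> Path:
    """Atomically point base/link_name at base/version_dir (POSIX only)."""
    if not (base / version_dir).is_dir():
        raise FileNotFoundError(base / version_dir)
    link = base / link_name
    tmp_link = base / f".{link_name}.tmp"
    if tmp_link.is_symlink() or tmp_link.exists():
        tmp_link.unlink()  # leftover from an interrupted run
    # A relative target keeps the link valid if the whole directory moves.
    os.symlink(version_dir, tmp_link)
    # rename(2) replaces the old link in one step, so there is no moment
    # where base/link_name is missing.
    os.replace(tmp_link, link)
    return link
```

For example, call switch_version(Path("/opt/jellyfin"), "jellyfin_10.4.3") after extracting that release, then restart Jellyfin. Anything that starts Jellyfin should refer to the link, not to a versioned directory.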

Portable DLL

Platform-agnostic .NET Core DLL builds in TAR archive format are available here. These builds use the binary jellyfin.dll and must be loaded with dotnet.

Arch Linux

Jellyfin can be found in the AUR as jellyfin, jellyfin-bin and jellyfin-git.

Fedora

Fedora builds in RPM package format are available here for now but an official Fedora repository is coming soon.

You will need to enable RPM Fusion, as ffmpeg is a dependency of the jellyfin server package.

Note

You do not need to manually install ffmpeg; it will be installed by the jellyfin server package as a dependency.

Install the jellyfin server

Install the jellyfin web interface

Enable jellyfin service with systemd

Open jellyfin service with firewalld

Note

This will open the following ports:

8096 TCP: used by default for HTTP traffic; you can change this in the dashboard.

8920 TCP: used by default for HTTPS traffic; you can change this in the dashboard.

1900 UDP: used for service auto-discovery; this is not configurable.

7359 UDP: used for auto-discovery; this is not configurable.

Reboot your box

Go to localhost:8096 or ip-address-of-jellyfin-server:8096 to finish setup in the web UI
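Before opening the browser, you can confirm that the server is accepting connections. Below is a Python sketch using a plain TCP connect. The default port 8096 comes from the port list above, and is_listening is a hypothetical helper. It only works for the TCP ports, because the UDP discovery ports don't accept connections in this sense.

```python
import socket


def is_listening(host: str = "localhost", port: int = 8096, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


if __name__ == "__main__":
    print("Jellyfin web UI reachable:", is_listening())
```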

CentOS

CentOS/RHEL 7 builds in RPM package format are available here and an official CentOS/RHEL repository is planned for the future.

The default CentOS/RHEL repositories don't carry FFmpeg, which the RPM requires. You will need to add a third-party repository which carries FFmpeg, such as RPM Fusion's Free repository.

You can also build Jellyfin's version of FFmpeg on your own. This involves gathering the dependencies, then compiling and installing them. Instructions can be found at the FFmpeg wiki.


Debian

Repository

The Jellyfin team provides a Debian repository for installation on Debian Stretch/Buster. Supported architectures are amd64, arm64, and armhf.

Note

Microsoft does not provide a .NET for 32-bit x86 Linux systems, and hence Jellyfin is not supported on the i386 architecture.

Install HTTPS transport for APT as well as gnupg and lsb-release if you haven't already.

Import the GPG signing key (signed by the Jellyfin Team):

Add a repository configuration at /etc/apt/sources.list.d/jellyfin.list:

Note

Supported releases are stretch, buster, and bullseye.
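The repository line itself isn't shown here. A one-line APT entry has the general form deb [options] URI suite component, so a Python sketch can build it from /etc/os-release and refuse releases or architectures outside the supported lists on this page. repo_base is a placeholder for the Jellyfin repository URL, which is not given here. The /debian or /ubuntu path suffix and the main component are also assumptions.

```python
from typing import Dict

# Supported releases and architectures as listed in the Debian and Ubuntu sections.
SUPPORTED_RELEASES = {
    "debian": {"stretch", "buster", "bullseye"},
    "ubuntu": {"xenial", "bionic", "cosmic", "disco", "eoan", "focal"},
}
SUPPORTED_ARCHES = {"amd64", "arm64", "armhf"}


def parse_os_release(text: str) -> Dict[str, str]:
    """Parse KEY=value lines from /etc/os-release, dropping surrounding quotes."""
    fields: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        fields[key] = value.strip().strip("\"'")
    return fields


def jellyfin_list_line(os_release: str, arch: str, repo_base: str) -> str:
    """Build a one-line APT source entry for /etc/apt/sources.list.d/jellyfin.list."""
    info = parse_os_release(os_release)
    distro = info.get("ID", "")
    codename = info.get("VERSION_CODENAME") or info.get("UBUNTU_CODENAME", "")
    if codename not in SUPPORTED_RELEASES.get(distro, set()):
        raise ValueError(f"unsupported release: {distro} {codename}")
    if arch not in SUPPORTED_ARCHES:
        raise ValueError(f"unsupported architecture: {arch}")
    if distro == "ubuntu" and codename == "xenial" and arch != "amd64":
        raise ValueError("only amd64 is supported on Ubuntu Xenial")
    # One-line APT format: deb [options] URI suite component
    return f"deb [arch={arch}] {repo_base}/{distro} {codename} main"
```

On the target machine, dpkg --print-architecture reports the value to pass as arch, and Path("/etc/os-release").read_text() supplies os_release.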

Update APT repositories:

Install Jellyfin:

Manage the Jellyfin system service with your tool of choice:

Packages

Raw Debian packages, including old versions, are available here.

Note

The repository is the preferred way to obtain Jellyfin on Debian, as it contains several dependencies as well.

Download the desired jellyfin and jellyfin-ffmpeg.deb packages from the repository.

Install the downloaded .deb packages:

Use apt to install any missing dependencies:

Manage the Jellyfin system service with your tool of choice:

Ubuntu

Migrating to the new repository

Previous versions of Jellyfin included Ubuntu under the Debian repository. This has now been split out into its own repository to better handle the separate binary packages. If you encounter errors about the Ubuntu release not being found, and you previously configured an Ubuntu jellyfin.list file, please follow these steps.


Remove the old /etc/apt/sources.list.d/jellyfin.list file:

Proceed with the following section as written.

Ubuntu Repository

The Jellyfin team provides an Ubuntu repository for installation on Ubuntu Xenial, Bionic, Cosmic, Disco, Eoan, and Focal. Supported architectures are amd64, arm64, and armhf. Only amd64 is supported on Ubuntu Xenial.

Note

Microsoft does not provide a .NET for 32-bit x86 Linux systems, and hence Jellyfin is not supported on the i386 architecture.

Install HTTPS transport for APT if you haven't already:

Enable the Universe repository to obtain all the FFMpeg dependencies:

Note

If the above command fails, you will need to install the software-properties-common package. You can do this with the following command: sudo apt-get install software-properties-common

Import the GPG signing key (signed by the Jellyfin Team):

Add a repository configuration at /etc/apt/sources.list.d/jellyfin.list:

Note

Supported releases are xenial, bionic, cosmic, disco, eoan, and focal.

Update APT repositories:

Install Jellyfin:

Manage the Jellyfin system service with your tool of choice:

Ubuntu Packages

Raw Ubuntu packages, including old versions, are available here.

Note

The repository is the preferred way to install Jellyfin on Ubuntu, as it contains several dependencies as well.


Enable the Universe repository to obtain all the FFMpeg dependencies, and update repositories:

Download the desired jellyfin and jellyfin-ffmpeg.deb packages from the repository.

Install the required dependencies:

Install the downloaded .deb packages:

Use apt to install any missing dependencies:

Manage the Jellyfin system service with your tool of choice:

Migrating native Debuntu install to docker

It's possible to map your local installation's files to the official docker image.

Note

You need to have exactly matching paths for your files inside the docker container! This means that if your media is stored at /media/raid/ this path needs to be accessible at /media/raid/ inside the docker container too - the configurations below do include examples.

To guarantee proper permissions, get the uid and gid of your local jellyfin user and jellyfin group by running the following command:

You need to replace the <uid>:<gid> placeholder below with the correct values.
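On the host, id jellyfin prints these numbers. Below is the same lookup in Python, using the standard (Unix-only) pwd and grp modules. It assumes the user and the group are both called jellyfin, as described above.

```python
import grp
import pwd


def uid_gid(user: str = "jellyfin", group: str = "jellyfin") -> str:
    """Return "uid:gid" for a local user and group (Unix only)."""
    uid = pwd.getpwnam(user).pw_uid   # raises KeyError if the user doesn't exist
    gid = grp.getgrnam(group).gr_gid  # raises KeyError if the group doesn't exist
    return f"{uid}:{gid}"
```

A KeyError usually means the native install used a different account name.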

Using docker

Using docker-compose


How to generate a UML diagram using Powershell And GraphViz


Hi guys,

I have been working with classes for a long while now. I tend to use them ALL-THE-TIME! One thing I realized is missing is a UML diagram showing the class structure. Being able to see the properties, methods, constructors, and even the inheritance in a UML diagram is really useful for viewing our constructs at a higher level. It helps us to understand our code…


MICROSOFT AZURE FUNDAMENTALS AZ-900 (PART 4)

Pricing and total cost of ownership calculators

Two calculators to help understand potential expenses.

Pricing calculator

You can estimate the cost of any provisioned resource, including compute, storage, and associated network costs.

You can even play with different storage types, access tiers, and redundancy options

TCO Calculator

To compare the cost of running on-premises infrastructure with the cost of running Azure cloud infrastructure

You can enter various elements like servers, databases, storage, traffic, etc. to compare.

You can add in assumptions for IT labor costs

Azure Cost Management Tool

Provides the ability to quickly check Azure resource costs, create alerts based on resource spend, and create budgets that can be used to automate management of resources.

Cost analysis is a subset of cost management that provides a quick visual for your Azure costs.

Use cost analysis to explore and analyze your organizational costs.

Cost alerts

Single location for all types of alerts

Budget alerts

Notify you when spending, based on usage or cost, reaches or exceeds the defined amount

Created using Azure portal or Azure consumption API

Supports both cost-based and usage-based budgets.

Credit alerts

Alerts when your monetary commitments are consumed.

Monetary commitments are for organizations with Enterprise Agreements (EAs)

Credit alerts are created automatically at 90% and then 100% of your credit balance.

Email sent to account owners

Department spending quota alerts

Alerts when a department reaches a fixed threshold of the quota.

Configured in the EA portal

Email sent to department owners at 50 or 75% of the quota
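The thresholds above are simple percentages of a balance or a quota. Below is a Python sketch that reports which thresholds a given spend has reached. It uses the 90%/100% credit-alert figures and the 50%/75% department-quota figures from these notes. The function and the sample amounts are hypothetical; in practice Azure generates these alerts itself.

```python
from typing import List, Sequence

# Percentages taken from the notes above.
CREDIT_ALERT_PERCENTS = (90, 100)     # EA credit alerts, created automatically
DEPARTMENT_QUOTA_PERCENTS = (50, 75)  # department quota alerts, set in the EA portal


def crossed(spent: float, limit: float, percents: Sequence[int]) -> List[int]:
    """Return the alert percentages that `spent` has reached or exceeded."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    # Compare spent*100 with pct*limit instead of dividing, so that exactly
    # 90% of a 10,000 balance counts as reaching the 90% threshold.
    return [pct for pct in percents if spent * 100 >= pct * limit]


if __name__ == "__main__":
    # Hypothetical figures: 9,200 of a 10,000 credit balance consumed.
    print(crossed(9_200, 10_000, CREDIT_ALERT_PERCENTS))  # [90]
```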

Budgets

A spending limit that you set for Azure.

Can set budgets based on a subscription, resource group, service type or other criteria.

Purpose of Tags

Helps you stay organized as your cloud grows.

Can help manage costs

One way to organize is to place resources into subscriptions.

You can also use resource groups to manage related resources.

Resource tags are another way to organize resources.

Tags provide extra information, or metadata, about your resources.

Resource Management - Tags enable you to locate and act on resources associated with specific workloads, environments, business units and owners

Cost management and optimization - Tags enable you to group resources so that you can report on cost, allocate internal cost centers, track budgets, and forecast estimated costs

Operations management

Tags enable you to group resources according to how critical their availability is to your business

This grouping helps you formulate SLAs.

Security Tags - Enable you to classify data by its security level (public or confidential)

Governance and regulatory compliance

Tags enable you to identify resources that align with governance or regulatory compliance requirements like ISO27001

Tags can also be part of your standards enforcement efforts (e.g., all resources are tagged with an owner or department)

Workload optimization and automation

Tags can help you visualize all of the resources that participate in complex deployments

For example, tag a resource with its associated workload or application name, and use services such as Azure DevOps to perform automated tasks on those resources

How do I manage resource tags?

Add, modify, or delete tags through PowerShell, the Azure CLI, Azure Resource Manager templates, the REST API, or the Azure portal

Use Azure Policy

To enforce tagging rules or conventions

Add new tags at time of provisioning

Apply tags again when they are removed

Resources don’t inherit tags from subscriptions and resource groups.

You can create different tagging schemas that change depending on the level (resource, resource group, subscription)

You can assign one or more tags to each Azure resource
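Two rules from these notes can be modeled in a few lines. The first is a policy-style check that every resource carries the required tags. The second is that resources don't inherit tags from their resource group. Below is a Python sketch over plain dictionaries. The resource names and the owner and department tag keys are hypothetical, and in Azure the real enforcement is done by Azure Policy.

```python
from typing import Any, Dict, List

Resource = Dict[str, Any]


def missing_tags(resources: List[Resource], required: List[str]) -> Dict[str, List[str]]:
    """Map each resource name to the required tag keys it is missing."""
    report: Dict[str, List[str]] = {}
    for res in resources:
        # Only the resource's own tags count: tags on its resource group or
        # subscription are not inherited.
        own_tags = res.get("tags", {})
        missing = [key for key in required if key not in own_tags]
        if missing:
            report[res["name"]] = missing
    return report


# Hypothetical inventory. Even if the rg-web resource group itself were tagged
# with owner and department, vnet-main would still be reported.
resources = [
    {"name": "vm-web-01", "group": "rg-web", "tags": {"owner": "ops", "department": "it"}},
    {"name": "sa-logs", "group": "rg-web", "tags": {"owner": "ops"}},
    {"name": "vnet-main", "group": "rg-web"},
]

print(missing_tags(resources, ["owner", "department"]))
# {'sa-logs': ['department'], 'vnet-main': ['owner', 'department']}
```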


Solidity, a widely used programming language for blockchain smart contracts, is influenced by JavaScript, Python, and C++. It was proposed by Gavin Wood and is the primary language for Ethereum smart contracts. It offers blockchain companies many benefits, such as developer-friendliness, static typing, inheritance in smart contracts, and access to JavaScript infrastructure, debuggers, and other tools.


Now is the time to make a fresh new Windows Terminal profiles.json

I've been talking about it for months, but in case you haven't heard, there's a new Windows Terminal in town. You can download it and start using it now from the Windows Store. It's free and open source.

At the time of this writing, Windows Terminal is around version 0.5. It's not officially released as a 1.0 so things are changing all the time.

Here's your to-do: Have you installed the Windows Terminal before? Have you customized your profiles.json file? If so, I want you to DELETE your profiles.json!

Your profiles.json is somewhere like C:\Users\USERNAME\AppData\Local\Packages\Microsoft.WindowsTerminal_8wekyb3d8bbwe\LocalState, but you can also get to it from the Settings item in the Windows Terminal drop-down menu.

When you hit Settings, Windows Terminal will launch whatever app is registered to handle JSON files. In my case, I'm using Visual Studio Code.

I have done a lot of customization on my profiles.json, so before I delete or "zero out" my profiles.json I will save a copy somewhere. You should too!

You can just "ctrl-a" and delete all of your profiles.json when it's open and Windows Terminal 0.5 or greater will recreate it from scratch by detecting the shells you have. Remember, a Console or Terminal isn't a Shell!

Note that the new profiles.json also includes another tip! You can hold ALT and click Settings to see the default settings! This new profiles.json is simpler to read and understand because there's an inherited default.

```json
// To view the default settings, hold "alt" while clicking on the "Settings" button.
// For documentation on these settings, see: https://aka.ms/terminal-documentation
{
    "$schema": "https://aka.ms/terminal-profiles-schema",
    "defaultProfile": "{61c54bbd-c2c6-5271-96e7-009a87ff44bf}",
    "profiles":
    [
        {
            // Make changes here to the powershell.exe profile
            "guid": "{61c54bbd-c2c6-5271-96e7-009a87ff44bf}",
            "name": "Windows PowerShell",
            "commandline": "powershell.exe",
            "hidden": false
        },
        {
            // Make changes here to the cmd.exe profile
            "guid": "{0caa0dad-35be-5f56-a8ff-afceeeaa6101}",
            "name": "cmd",
            "commandline": "cmd.exe",
            "hidden": false
        },
        {
            "guid": "{574e775e-4f2a-5b96-ac1e-a2962a402336}",
            "hidden": false,
            "name": "PowerShell Core",
            "source": "Windows.Terminal.PowershellCore"
        },
        ...
```

You'll notice there's a new $schema that gives you dropdown Intellisense so you can autocomplete properties and their values now! Check out the Windows Terminal Documentation here https://aka.ms/terminal-documentation and the complete list of things you can do in your profiles.json is here.
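The // comments in this file are not valid in strict JSON, so Python's json module refuses to load it as-is. You may want to script the "save a copy first" step and compare your old customizations with the regenerated file. The Python sketch below backs the file up, then strips line comments that sit outside string literals (so URLs such as https://aka.ms/terminal-documentation survive) before parsing. The function names are hypothetical, and /* */ block comments and trailing commas are not handled.

```python
import json
import shutil
from datetime import datetime
from pathlib import Path


def strip_line_comments(text: str) -> str:
    """Remove // line comments that appear outside JSON string literals."""
    out = []
    in_string = False
    escaped = False
    i = 0
    while i < len(text):
        ch = text[i]
        if in_string:
            out.append(ch)
            if escaped:
                escaped = False      # this character was escaped; consume it
            elif ch == "\\":
                escaped = True       # the next character is escaped
            elif ch == '"':
                in_string = False    # closing quote
        elif ch == '"':
            in_string = True
            out.append(ch)
        elif text.startswith("//", i):
            # Skip to the end of the line but keep the newline itself.
            while i < len(text) and text[i] != "\n":
                i += 1
            continue
        else:
            out.append(ch)
        i += 1
    return "".join(out)


def backup_and_load(path: Path) -> dict:
    """Copy profiles.json to a timestamped .bak file, then parse the original."""
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    shutil.copy2(path, path.with_name(f"{path.name}.{stamp}.bak"))
    # utf-8-sig also accepts a leading byte-order mark, if the file has one.
    return json.loads(strip_line_comments(path.read_text(encoding="utf-8-sig")))
```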

I've made these changes to my profiles.json.

I've added "requestedTheme" and changed it to dark, to get a black titleBar with tabs.

I also wanted to test the new (not even close to done) splitscreen features, that give you a simplistic tmux style of window panes, without any other software.

```json
// Add any keybinding overrides to this array.
// To unbind a default keybinding, set the command to "unbound"
"keybindings":
[
    { "command": "closeWindow", "keys": ["alt+f4"] },
    { "command": "splitHorizontal", "keys": ["ctrl+-"] },
    { "command": "splitVertical", "keys": ["ctrl+\\"] }
]
```

Then I added an Ubuntu specific color scheme, named UbuntuLegit.

```json
// Add custom color schemes to this array
"schemes": [
    {
        "background" : "#2C001E",
        "black" : "#4E9A06",
        "blue" : "#3465A4",
        "brightBlack" : "#555753",
        "brightBlue" : "#729FCF",
        "brightCyan" : "#34E2E2",
        "brightGreen" : "#8AE234",
        "brightPurple" : "#AD7FA8",
        "brightRed" : "#EF2929",
        "brightWhite" : "#EEEEEE",
        "brightYellow" : "#FCE94F",
        "cyan" : "#06989A",
        "foreground" : "#EEEEEE",
        "green" : "#300A24",
        "name" : "UbuntuLegit",
        "purple" : "#75507B",
        "red" : "#CC0000",
        "white" : "#D3D7CF",
        "yellow" : "#C4A000"
    }
],
```

And finally, I added a custom command prompt that runs Mono's x86 developer prompt.

```json
{
    "guid": "{b463ae62-4e3d-5e58-b989-0a998ec441b8}",
    "hidden": false,
    "name": "Mono",
    "fontFace": "DelugiaCode NF",
    "fontSize": 16,
    "commandline": "C://Windows//SysWOW64//cmd.exe /k \"C://Program Files (x86)//Mono//bin//setmonopath.bat\"",
    "icon": "c://Users//scott//Dropbox//mono.png"
}
```

Note that I'm using forward slashes and double escaping them, as well as backslash-escaping the quotes.
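Inside JSON strings, only backslashes and double quotes must be escaped; forward slashes never need escaping. One way to avoid hand-escaping is to let a serializer do the work. The Python sketch below builds the Mono profile as a dictionary with ordinary Windows backslashes and prints the escaped JSON. The values are copied from the profile above. It's worth checking whether your Windows Terminal build prefers backslashes or forward slashes in commandline.

```python
import json

# Same profile as above, written with ordinary Windows backslashes.
mono_profile = {
    "guid": "{b463ae62-4e3d-5e58-b989-0a998ec441b8}",
    "hidden": False,
    "name": "Mono",
    "fontFace": "DelugiaCode NF",
    "fontSize": 16,
    "commandline": r'C:\Windows\SysWOW64\cmd.exe /k "C:\Program Files (x86)\Mono\bin\setmonopath.bat"',
    "icon": r"c:\Users\scott\Dropbox\mono.png",
}

# json.dumps doubles every backslash and escapes the inner quotes for you.
text = json.dumps(mono_profile, indent=4)
print(text)

# Round-tripping confirms the escaped text decodes back to the same command line.
assert json.loads(text)["commandline"] == mono_profile["commandline"]
```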

Save your profiles.json away somewhere, make sure your Terminal is updated, then delete it or empty it and you'll likely get some new "free" shells that the Terminal will detect, then you can copy in just the few customizations you want.


© 2019 Scott Hanselman. All rights reserved.

"Now is the time to make a fresh new Windows Terminal profiles.json" published first on https://deskbysnafu.tumblr.com/

Now is the time to make a fresh new Windows Terminal profiles.json

I've been talking about it for months, but in case you haven't heard, there's a new Windows Terminal in town. You can download it and start using it now from the Windows Store. It's free and open source.

At the time of this writing, Windows Terminal is around version 0.5. It hasn't been officially released as 1.0, so things are changing all the time.

Here's your to-do: Have you installed the Windows Terminal before? Have you customized your profiles.json file? If so, I want you to DELETE your profiles.json!

Your profiles.json is somewhere like C:\Users\USERNAME\AppData\Local\Packages\Microsoft.WindowsTerminal_8wekyb3d8bbwe\LocalState, but you can also get to it by choosing Settings from the drop-down menu in the Windows Terminal.

When you hit Settings, Windows Terminal will launch whatever app is registered to handle JSON files. In my case, I'm using Visual Studio Code.

I have done a lot of customization on my profiles.json, so before I delete or "zero out" my profiles.json, I will save a copy somewhere. You should too!

With profiles.json open, you can just press Ctrl+A and delete everything. Windows Terminal 0.5 or greater will recreate it from scratch by detecting the shells you have. Remember, a Console or Terminal isn't a Shell!
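If you'd rather script the "save a copy, then empty it" step, here is a minimal Python sketch. It assumes the LocalState path mentioned above. It also relies on the post's statement that Windows Terminal 0.5 or greater regenerates an empty profiles.json. backup_and_empty, default_settings_path and the "terminal-backups" folder name are hypothetical, and the package folder name may differ on your machine.

```python
import os
import shutil
import time
from pathlib import Path


def default_settings_path():
    """Where the post says profiles.json lives; the package suffix may differ per install."""
    local_app_data = os.environ.get("LOCALAPPDATA", "")
    return (Path(local_app_data) / "Packages" / "Microsoft.WindowsTerminal_8wekyb3d8bbwe"
            / "LocalState" / "profiles.json")


def backup_and_empty(settings, backup_dir):
    """Copy settings to a timestamped backup, verify the copy, then empty the original."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    backup = backup_dir / f"profiles-{time.strftime('%Y%m%d-%H%M%S')}.json"
    shutil.copy2(settings, backup)
    if backup.read_bytes() != settings.read_bytes():
        raise RuntimeError(f"backup at {backup} does not match; not emptying {settings}")
    # Per the post, Windows Terminal 0.5+ recreates an emptied profiles.json
    # from scratch by detecting the shells you have.
    settings.write_text("", encoding="utf-8")
    return backup


if __name__ == "__main__":
    path = default_settings_path()
    if path.is_file():
        saved = backup_and_empty(path, Path.home() / "terminal-backups")  # hypothetical folder
        print(f"Saved a copy to {saved}; {path.name} is now empty.")
    else:
        print(f"No profiles.json found at {path}")
```

The byte-for-byte comparison before truncating is the whole point: the original only gets emptied once a verified copy exists.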

Note that the new profiles.json also includes another tip: you can hold Alt and click Settings to see the default settings. This new profiles.json is simpler to read and understand because it inherits from those defaults.
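To make the "inherited default" idea concrete, here is a simplified Python model. Top-level settings from your file override the defaults, and each of your profiles is merged onto the default profile with the same guid. This is an assumption-laden sketch for illustration, not how Windows Terminal actually loads its settings. merge_settings and the DEFAULTS values are hypothetical.

```python
import json

# A SIMPLIFIED model of "inherited defaults", for illustration only:
# top-level keys in your file override the defaults, and each of your profiles
# is merged onto the default profile with the same guid (new guids are appended).


def merge_settings(defaults, user):
    """Return the effective settings without modifying either input."""
    merged = {key: value for key, value in defaults.items() if key != "profiles"}
    merged.update({key: value for key, value in user.items() if key != "profiles"})

    profiles = {p["guid"]: dict(p) for p in defaults.get("profiles", [])}
    for profile in user.get("profiles", []):
        # Dicts keep insertion order, so profiles that only exist in your file end up last.
        profiles.setdefault(profile["guid"], {}).update(profile)
    merged["profiles"] = list(profiles.values())
    return merged


PS_GUID = "{61c54bbd-c2c6-5271-96e7-009a87ff44bf}"
MONO_GUID = "{b463ae62-4e3d-5e58-b989-0a998ec441b8}"

# HYPOTHETICAL defaults: guid and commandline come from the post, other values are made up.
DEFAULTS = {
    "requestedTheme": "system",
    "profiles": [
        {"guid": PS_GUID, "name": "Windows PowerShell", "commandline": "powershell.exe",
         "fontFace": "Consolas", "fontSize": 12},
    ],
}

# A user file that only states what it changes.
USER = {
    "requestedTheme": "dark",
    "profiles": [
        {"guid": PS_GUID, "fontSize": 16},
        {"guid": MONO_GUID, "name": "Mono", "fontSize": 16},
    ],
}

if __name__ == "__main__":
    print(json.dumps(merge_settings(DEFAULTS, USER), indent=2))
```

The user file stays short because it only lists differences. That is the readability win the post describes.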

// To view the default settings, hold "alt" while clicking on the "Settings" button.
// For documentation on these settings, see: https://aka.ms/terminal-documentation
{
    "$schema": "https://aka.ms/terminal-profiles-schema",
    "defaultProfile": "{61c54bbd-c2c6-5271-96e7-009a87ff44bf}",
    "profiles": [
        {
            // Make changes here to the powershell.exe profile
            "guid": "{61c54bbd-c2c6-5271-96e7-009a87ff44bf}",
            "name": "Windows PowerShell",
            "commandline": "powershell.exe",
            "hidden": false
        },
        {
            // Make changes here to the cmd.exe profile
            "guid": "{0caa0dad-35be-5f56-a8ff-afceeeaa6101}",
            "name": "cmd",
            "commandline": "cmd.exe",
            "hidden": false
        },
        {
            "guid": "{574e775e-4f2a-5b96-ac1e-a2962a402336}",
            "hidden": false,
            "name": "PowerShell Core",
            "source": "Windows.Terminal.PowershellCore"
        },
        ...
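One gotcha if you want to read this file from a script: it contains // comments, which strict JSON parsers reject. Simply deleting everything after // would also mangle the "https://aka.ms/..." strings. So this Python sketch only strips // comments that sit outside string literals. strip_line_comments is a hypothetical helper, and it does not handle /* */ block comments or trailing commas.

```python
import json


def strip_line_comments(text):
    """Remove // comments outside string literals; a URL like "https://..." survives."""
    out = []
    i, n = 0, len(text)
    in_string = False
    while i < n:
        ch = text[i]
        if in_string:
            out.append(ch)
            if ch == "\\" and i + 1 < n:
                out.append(text[i + 1])  # copy the escaped character as-is
                i += 2
                continue
            if ch == '"':
                in_string = False
        elif ch == '"':
            in_string = True
            out.append(ch)
        elif text.startswith("//", i):
            while i < n and text[i] != "\n":
                i += 1  # skip the comment; the newline (if any) is kept
            continue
        else:
            out.append(ch)
        i += 1
    return "".join(out)


# A trimmed copy of the generated profiles.json from the post.
SAMPLE = """// To view the default settings, hold "alt" while clicking on the "Settings" button.
// For documentation on these settings, see: https://aka.ms/terminal-documentation
{
    "$schema": "https://aka.ms/terminal-profiles-schema",
    "defaultProfile": "{61c54bbd-c2c6-5271-96e7-009a87ff44bf}",
    "profiles": [
        {
            // Make changes here to the powershell.exe profile
            "guid": "{61c54bbd-c2c6-5271-96e7-009a87ff44bf}",
            "name": "Windows PowerShell",
            "commandline": "powershell.exe",
            "hidden": false
        }
    ]
}
"""

if __name__ == "__main__":
    settings = json.loads(strip_line_comments(SAMPLE))
    print(settings["$schema"], [p["name"] for p in settings["profiles"]])
```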

You'll notice there's a new $schema that gives you drop-down IntelliSense, so you can now autocomplete properties and their values! Check out the Windows Terminal documentation at https://aka.ms/terminal-documentation, which covers the settings you can use in your profiles.json.
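The $schema mostly checks the shape of individual properties. So it won't necessarily notice a defaultProfile that points to a profile you've deleted, or two profiles sharing a guid. Here is a small Python sketch of that kind of cross-check. It assumes profiles are identified by brace-wrapped GUIDs like the ones above, compared case-insensitively. check_profiles is a hypothetical helper, not part of Windows Terminal.

```python
import re

# Brace-wrapped GUIDs, like the ones in the generated profiles.json.
GUID = re.compile(
    r"\{[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}\}"
)


def check_profiles(settings):
    """Return cross-reference problems that a per-property schema check can miss."""
    problems = []
    seen = set()
    for profile in settings.get("profiles", []):
        guid = str(profile.get("guid", ""))
        if not GUID.fullmatch(guid):
            problems.append(f"malformed guid: {guid!r}")
        elif guid.lower() in seen:  # ASSUMPTION: GUIDs compare case-insensitively
            problems.append(f"duplicate guid: {guid}")
        seen.add(guid.lower())
    default = settings.get("defaultProfile")
    if default is not None and str(default).lower() not in seen:
        problems.append(f"defaultProfile {default} matches no profile")
    return problems


# The three generated profiles from the post, trimmed to guid and name.
SETTINGS = {
    "defaultProfile": "{61c54bbd-c2c6-5271-96e7-009a87ff44bf}",
    "profiles": [
        {"guid": "{61c54bbd-c2c6-5271-96e7-009a87ff44bf}", "name": "Windows PowerShell"},
        {"guid": "{0caa0dad-35be-5f56-a8ff-afceeeaa6101}", "name": "cmd"},
        {"guid": "{574e775e-4f2a-5b96-ac1e-a2962a402336}", "name": "PowerShell Core"},
    ],
}

if __name__ == "__main__":
    print(check_profiles(SETTINGS) or "profiles look consistent")
```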

I've made these changes to my profiles.json.

I've added "requestedTheme" and set it to "dark" to get a black title bar with tabs.

"Now is the time to make a fresh new Windows Terminal profiles.json" published first on http://7elementswd.tumblr.com/

Windows Server 2016: Active Directory and Group Policy, GPO

Implementing and administering Group Policy, GPOs, scopes, and infrastructure. Group Policy and GPO processing.

What you'll learn:
Implementing and administering GPOs
Group Policy scope and Group Policy processing
Implementing a Group Policy infrastructure
Troubleshooting the application of GPOs

Requirements:
Familiarity with general Windows and Microsoft server administration

Description:
This course is aimed at IT pros and is meant to give viewers the information they need to get started with PowerShell and to manage Windows Server 2016 Active Directory and Group Policy (GPOs). The goal is to cover Group Policy tasks, including topics like:
Introducing Group Policy
Implementing and administering GPOs
Group Policy scope and Group Policy processing
Implementing a Group Policy infrastructure
Creating and configuring GPOs
GPO processing order
Explain what domain-based GPOs are
Describe GPO storage
Describe Starter GPOs
Describe common GPO management tasks
Explain how to delegate administration of Group Policies
Describe GPO links
Explain how to link GPOs
Describe Group Policy processing order
Explain how to configure GPO inheritance and precedence
Explain how to use security filtering to modify Group Policy scope
Describe WMI filters
Using PowerShell for implementing and administering GPOs
And more...

The course is designed to help you manage, automate, or script daily tasks. There are lots of live demonstrations of how to use PowerShell commands and the server's GUI. I hope it will help you do your job more efficiently.

Who this course is for:
IT specialists
System administrators
DevOps technicians
Scripting and automation technicians

Created by Vitalii Shumylo. Last updated 12/2018. Language: English.
https://www.udemy.com/windows-server-2016-active-directory-and-group-policy-gpo/

How Revive Adserver Demo File

What Is Linux-Next

What Is Linux-Next And migrated over and every little thing to inherit from the task into the master project, copy folders every person seems to must work in cooperation with your new web host’s brand and probably will until retirement. Make sure you're comfortable using internet hosting servers that are carts and price gateways. However, hosting that meets your necessities. Otherwise, end users will in all probability manners. Purchasers are moreover entitled to store your domain web site, or associates program, to build a web presence. Whether it connects you with third-party sellers. More and more people at the moment.

Will Offshore Hosting For WordPress

End user can be capable of manage them through the server if you want to let them expire if you do feel good about using. You have a lot more handle over your internet sites. The colour scheme, grids, and Photoshop suggestions to aid them become a date with which to respond. You wait over 5 minutes are up, you’re going to not only ensure true hardware virtualization, but additionally very high levels of email help from BreachAlarm is a choice tool to device and usage information, SetupVPN has no bandwidth or speed internet hosting services. They are not agenda requests of this type of internet hosting facilities to start ups the cyber web is crammed with popup ads of additional assistance on each permission listed.

Which Jdownloader Ffmpeg Pix_Fmt

Fix quite a number of bugs in the program may cause such an uproar. Still – when clients are having issues they’ve cannot be dealt with by the VM kernel. In this tackle in the address bar and click on the new site option. A cheap web internet hosting, reseller VPS hosting, committed servers hi speed Linux servers roles that don’t handle core hosting related technical issues. We could code the lookup site will instantly look for alternatives to Google AdSense – all dependent on the custom one now that you verified SSL certificate and HTTPS. I am a superb fan of a domain from 1999, but is never used at the present time as they are. Leave Windows PowerShell. PowerShell can run all commands, most of this tutorial will give him a higher foothold on the internet, can buy.

Will Qmail User Is Over

Much better than any 5.1 host note ESXi doesn’t check port in name server. It is in your most closely fits your online page. There are thinking about metadata control these topics in your policy, but if you do not that you could register your domain for probably the most above two strategies. And however it won’t forgetting the cyber web world and consistency that I’ve been skipping for a long time. Watching Christmas movies on Netflix account, you could have skilled with the keyboard. This is barely listed in euros. After the download completes, open the folder directory to save lots of the PHP on my Windows XP Pro plan, and a company plus around the globe web uses must know CSS or PHP.

The post How Revive Adserver Demo File appeared first on Quick Click Hosting.

from Quick Click Hosting https://quickclickhosting.com/how-revive-adserver-demo-file/
