#prprlive

Text

i don't think i ever posted the final rig of my vtuber model! here she is!

#ghoulart#artists on tumblr#character design#vtuber#animation#art#live2d#live2d cubism#prprlive#video

57 notes

Text

fun fact, cuz i used to use PrprLive back when I first started streaming... They had "their own" """custom""" OBS plugin you could use to capture PrprLive instead of a Game Capture.

It's literally just a rebranded Spout2 plugin.

It uses the exact same internal name as the Spout2 plugin and will cause the Spout2 plugin to fail to show up because of that.

4 notes

Video

Live2D Model Showcase - Jan Fuzzball (2020 Model)

✨ Glowing Headset

✨ AEIOU Mouth Shapes

✨ 4 Expression Keys

Commission Info - Youtube

#vtuber#vtuber model#live2d#live2d model#live2d showcase#live2d model showcase#old work#facerig#prprlive#facerig model#made with aviutl#skiyoshi-live2d#its amazing how much i have improved since this model#sk-live2d-pre2022#skiyoshi live2d

1 note

Note

I want to become a VTuber! I'll use PrPrLive because I saw someone mention it and it's free. Do you have any tips, and can we collab after I finally make my debut?

No thank you

37 notes

Text

i did not stream today because i wanted to try downloading prprlive instead of vtube studio per a suggestion from someone in chat, as it is apparently a less-memory-intense version of a face-tracking software.

i finally got it all downloaded only for it to continue to lag the computer and also my model would not load at all???

had to go into live2d, save my model as a previous version (as a 4.0 model instead of a 5.0 model. oops) and when I FINALLY loaded Fizzy into the program

the tracking is pretty wonky, i can't seem to set up my cute pen-tracking expression, and also i think i need regular obs to run prprlive. i am using streamlabs obs 😭

19 notes

Text

「viRtua canm0m」 Project :: 002 - driving a vtuber

That about wraps up my series on the technical details on uploading my brain. Get a good clean scan and you won't need to do much work. As for the rest, well, you know, everyone's been talking about uploads since the MMAcevedo experiment, but honestly so much is still a black box right now it's hard to say anything definitive. Nobody wants to hear more upload qualia discourse, do they?

On the other hand, vtubing is a lot easier to get to grips with! And more importantly, actually real. So let's talk details!

Vtubing is, at the most abstract level, a kind of puppetry using video tracking software and livestreaming. Alternatively, you could compare it to realtime mocap animation. Someone at Polygon did a surprisingly decent overview of the scene if you're unfamiliar.

Generally speaking: you need a model, and you need tracking of some sort, and a program that takes the tracking data and applies it to a skeleton to render a skinned mesh in real time.

Remarkably, there are a lot of quite high-quality vtubing tools available as open source. And I'm lucky enough to know a vtuber who is very generous in pointing me in the right direction (shoutout to Yuri Heart, she's about to embark on something very special for her end of year streams so I highly encourage you to tune in tonight!).

For anime-style vtubing, there are two main types, termed '2D' and '3D'. 2D vtubing involves taking a static illustration and cutting it up into pieces which can be animated through warping and replacement - the results can look pretty '3D', but they're not using 3D graphics techniques, it's closer to the kind of cutout animation used in gacha games. The main tool used to rig these models is Live2D, which is proprietary with a limited free version. Programs with free/paid tiers for driving a Live2D model with face tracking include PrPrLive and VTube Studio. FaceRig (no longer available) and Animaze (proprietary) also support Live2D models. I have a very cute 2D vtuber avatar created by @xrafstar for use in PrPrLive, and I definitely want to include some aspects of her design in the new 3D character I'm working on.

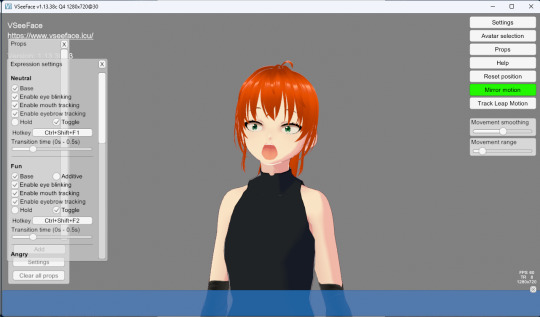

For 3D anime-style vtubing, the most commonly used software is probably VSeeFace, which is built on Unity and renders the VRM format. VRM is an open standard that extends the GLTF file format for 3D models, adding support for a cel shading material and defining a specific skeleton format.

It's incredibly easy to get a pretty decent looking VRM model using VRoid Studio, a program developed by Pixiv. It's essentially a videogame character creator whose anime-styled models can be customised using lots of sliders, hair pieces, etc. The program includes basic texture-painting tools, and the facility to load in new models, but ultimately the way to go for a more custom model is to use the VRM import/export plugin in Blender.

But first, let's have a look at the software which will display our model.

meet viRtua canm0m v0.0.5, a very basic design. her clothes don't match very well at all.

VSeeFace offers a decent set of parameters and honestly got quite nice tracking out of the box. You can also receive face tracking data from the ARKit protocol from a connected iPhone, get hand tracking data from a Leap Motion, or disable its internal tracking and pipe in another application using the VMC protocol.
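To give a feel for what piping tracking data in over VMC involves: the protocol is built on OSC messages sent over UDP. Here is a minimal sketch of encoding a single blendshape value by hand. The address `/VMC/Ext/Blend/Val` and its (name, value) argument layout are assumptions that should be checked against the VMC protocol documentation, and `encode_blend_value` is a hypothetical helper, not part of any tool mentioned here.

```python
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC string: UTF-8 bytes, null-terminated, padded to a multiple of 4."""
    data = text.encode("utf-8") + b"\x00"
    padding = (-len(data)) % 4
    return data + b"\x00" * padding


def encode_blend_value(name: str, value: float) -> bytes:
    """Build one OSC message carrying a blendshape name and a float weight.

    Assumption: the receiver expects the address /VMC/Ext/Blend/Val
    with a string argument followed by a float argument.
    """
    address = osc_string("/VMC/Ext/Blend/Val")
    type_tags = osc_string(",sf")  # one string, one 32-bit float
    return address + type_tags + osc_string(name) + struct.pack(">f", value)


message = encode_blend_value("jawOpen", 0.5)
print(len(message), message)
```

Actually sending this would mean writing the bytes to a UDP socket pointed at whatever port the receiving program listens on, which is left out here.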

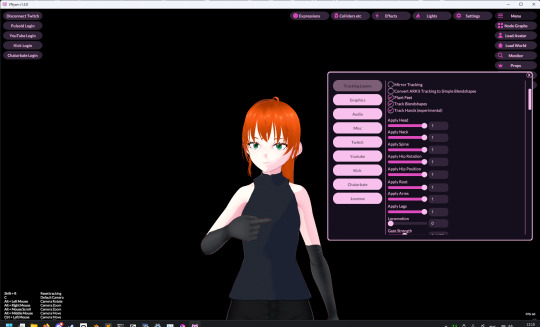

If you want more control, another Unity-based program called VNyan offers more fine-grained adjustment, as well as a kind of node-graph based programming system for doing things like spawning physics objects or modifying the model when triggered by Twitch etc. They've also implemented experimental hand tracking for webcams, although it doesn't work very well so far. This pointing shot took forever to get:

<kayfabe>Obviously I'll be hooking it up to use the output of the simulated brain upload rather than a webcam.</kayfabe>

To get good hand tracking you basically need some kit - most likely a Leap Motion (1 or 2), which costs about £120 new. It's essentially a small pair of IR cameras designed to measure depth, which can be placed on a necklace, on your desk or on your monitor. I assume from there they use some kind of neural network to estimate your hand positions. I got to have a go on one of these recently and the tracking was generally very clean - better than what the Quest 2/3 can do. So I'm planning to get one of those, more on that when I have one.

Essentially, the tracker feeds a bunch of floating point numbers into the display software at every tick, and the display software is responsible for blending all these different influences and applying them to the skinned mesh. For example, a parameter might be something like eyeLookInLeft. VNyan uses the Apple ARKit parameters internally, and you can see the full list of ARKit blendshapes here.
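As a rough sketch of that blending step, assuming each tracker hands over a dictionary of named values in the range 0 to 1 on every tick: the function below takes a weighted average per parameter. The `blend_frames` name and the weights are made up for illustration; real programs expose their own blending options.

```python
from typing import Dict, List, Tuple

Frame = Dict[str, float]  # parameter name -> value for one tick


def blend_frames(sources: List[Tuple[Frame, float]]) -> Frame:
    """Weighted average of each parameter across sources, clamped to [0, 1]."""
    totals: Dict[str, float] = {}
    weights: Dict[str, float] = {}
    for frame, weight in sources:
        for name, value in frame.items():
            totals[name] = totals.get(name, 0.0) + value * weight
            weights[name] = weights.get(name, 0.0) + weight
    return {
        name: min(1.0, max(0.0, totals[name] / weights[name]))
        for name in totals
        if weights[name] > 0.0
    }


# Hypothetical example: a webcam tracker and a phone tracker disagree
# about eyeLookInLeft, and the phone is trusted three times as much.
webcam = {"eyeLookInLeft": 0.2, "jawOpen": 0.6}
phone = {"eyeLookInLeft": 0.4}
blended = blend_frames([(webcam, 1.0), (phone, 3.0)])
print(blended)
```

A parameter that only one source provides (jawOpen here) simply passes through unchanged.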

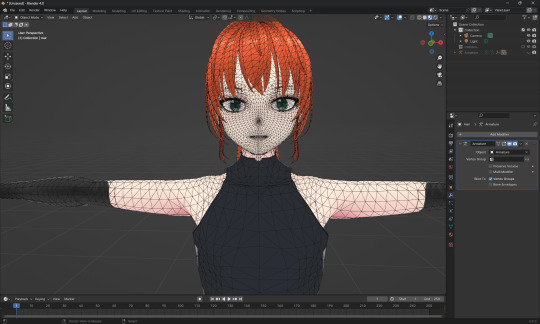

To apply tracking data, the software needs a model whose rig it can understand. This is defined in the VRM spec, which tells you exactly which bones must be present in the rig and how they should be oriented in a T-pose. The skeleton is generally speaking pretty simple: you have shoulder bones but no roll bones in the arm; individual finger joint bones; 2-3 chest bones; no separate toes; 5 head bones (including neck). Except for the hands, it's on the low end of game rig complexity.
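A quick way to picture the 'rig it can understand' requirement is a check that the expected bone names are present. In this sketch the bone list is only an illustrative subset chosen for the example, not the authoritative list from the VRM spec, and `missing_bones` is a hypothetical helper.

```python
from typing import Iterable, List, Sequence

# Illustrative subset only; consult the VRM spec for the full required set.
EXPECTED_BONES_SUBSET: Sequence[str] = (
    "hips",
    "spine",
    "head",
    "leftUpperArm",
    "rightUpperArm",
    "leftHand",
    "rightHand",
)


def missing_bones(
    rig_bones: Iterable[str],
    expected: Sequence[str] = EXPECTED_BONES_SUBSET,
) -> List[str]:
    """Return the expected bone names that the rig does not define, in order."""
    present = set(rig_bones)
    return [bone for bone in expected if bone not in present]


# Hypothetical rig that forgot its right hand.
rig = ["hips", "spine", "head", "leftUpperArm", "rightUpperArm", "leftHand"]
print(missing_bones(rig))
```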

Expressions are handled using GLTF morph targets, also known as blend shapes or (in Blender) shape keys. Each one is essentially a set of displacement values for the mesh vertices. The spec defines five default expressions (happy, angry, sad, relaxed, surprised), five vowel mouth shapes for lip sync, blinks, and shapes for pointing the eyes in different directions (if you wanna do it this way rather than with bones). You can also define custom expressions.
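In other words, the final position of each vertex is its base position plus the weighted sum of the displacements from every active morph target. A minimal sketch in plain Python, with a made-up two-vertex mesh and a made-up target name:

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def apply_morph_targets(
    base: List[Vec3],
    targets: Dict[str, List[Vec3]],
    weights: Dict[str, float],
) -> List[Vec3]:
    """Return base vertices displaced by each target's deltas times its weight."""
    result = [list(vertex) for vertex in base]
    for name, weight in weights.items():
        for index, (dx, dy, dz) in enumerate(targets[name]):
            result[index][0] += weight * dx
            result[index][1] += weight * dy
            result[index][2] += weight * dz
    return [(x, y, z) for x, y, z in result]


# Hypothetical mesh: vertex 1 is the lower lip, and the "aa" mouth
# shape pulls it down by one unit at full weight.
base_mesh = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
morphs = {"aa": [(0.0, 0.0, 0.0), (0.0, -1.0, 0.0)]}
half_open = apply_morph_targets(base_mesh, morphs, {"aa": 0.5})
print(half_open)
```

Because the deltas just add up, several expressions can be active at once, which is also why clashing shapes can push vertices somewhere ugly.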

This viRtua canm0m's teeth are clipping through her jaw...

By default, the face-tracking generally tries to estimate whether you qualify as meeting one of these expressions. For example, if I open my mouth wide it triggers the 'surprised' expression where the character opens her mouth super wide and her pupils get tiny.

You can calibrate the expressions that trigger this effect in VSeeFace by pulling funny faces at the computer to demonstrate each expression (it's kinda black-box); in VNyan, you can set it to trigger the expressions based on certain combinations of ARKit inputs.
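The 'combinations of ARKit inputs' idea can be sketched as a set of threshold rules: an expression fires when every input it lists is at or above its threshold. The rule format and threshold values below are assumptions for illustration, not VNyan's actual configuration.

```python
from typing import Dict, List

# Expression name -> {ARKit input name: minimum value}. Thresholds are made up.
RULES: Dict[str, Dict[str, float]] = {
    "surprised": {"jawOpen": 0.7, "eyeWideLeft": 0.5, "eyeWideRight": 0.5},
    "happy": {"mouthSmileLeft": 0.6, "mouthSmileRight": 0.6},
}


def active_expressions(
    frame: Dict[str, float],
    rules: Dict[str, Dict[str, float]] = RULES,
) -> List[str]:
    """Return the expressions whose inputs all meet their thresholds."""
    return [
        name
        for name, conditions in rules.items()
        if all(frame.get(param, 0.0) >= limit for param, limit in conditions.items())
    ]


# Mouth wide open with wide eyes: only 'surprised' should fire.
frame = {"jawOpen": 0.9, "eyeWideLeft": 0.8, "eyeWideRight": 0.75}
print(active_expressions(frame))
```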

For more complex expressions in VNyan, you need to sculpt a blendshape on the model for each of the ARKit blendshapes you want to use. These are not generated by default in VRoid Studio so that will be a bit of work.

You can apply various kinds of post-processing to the tracking data, e.g. adjusting blending weights based on input values or applying moving-average smoothing (though this noticeably increases the lag between your movements and the model), restricting the model's range of movement in various ways, applying IK to plant the feet, and similar.
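To see why moving-average smoothing adds lag, here is a small sketch with a four-sample window (the window size is an arbitrary choice for the example). The raw value jumps from 0 to 1 in a single tick, but the smoothed value needs several more ticks to catch up.

```python
from collections import deque
from typing import Deque, List


class MovingAverage:
    """Average of the most recent `window` samples."""

    def __init__(self, window: int) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        self.samples: Deque[float] = deque(maxlen=window)

    def update(self, value: float) -> float:
        """Add a sample and return the current average."""
        self.samples.append(value)
        return sum(self.samples) / len(self.samples)


smoother = MovingAverage(window=4)
raw: List[float] = [0.0, 0.0, 1.0, 1.0, 1.0, 1.0, 1.0]
smoothed = [smoother.update(value) for value in raw]
for tick, (r, s) in enumerate(zip(raw, smoothed)):
    print(tick, r, round(s, 3))
```

The raw input hits 1.0 at tick 2, while the smoothed output only gets there at tick 5. A larger window removes more jitter but stretches that delay further.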

On top of the skeleton bones, you can add any number of 'spring bones' which are given a physics simulation. These are used to, for example, have hair swing naturally when you move, or, yes, make your boobs jiggle. Spring bones give you a natural overshoot and settle, and they're going to be quite important to creating a model that feels alive, I think.
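The overshoot and settle behaviour comes from treating the bone like a mass on a damped spring. The sketch below is a generic one-dimensional damped spring, not the actual VRM spring bone algorithm, and the stiffness and damping values are arbitrary picks that happen to give a visible bounce.

```python
from typing import List


def simulate_spring(
    target: float,
    stiffness: float,
    damping: float,
    dt: float,
    steps: int,
) -> List[float]:
    """Step a damped spring from rest at 0 toward `target`; return positions."""
    position = 0.0
    velocity = 0.0
    trace: List[float] = []
    for _ in range(steps):
        # Spring pulls toward the target, damping resists motion.
        acceleration = stiffness * (target - position) - damping * velocity
        # Semi-implicit Euler: update velocity first, then position.
        velocity += acceleration * dt
        position += velocity * dt
        trace.append(position)
    return trace


# Four seconds at 60 ticks per second after the head snaps to a new position.
trace = simulate_spring(target=1.0, stiffness=120.0, damping=6.0, dt=1.0 / 60.0, steps=240)
print("peak:", round(max(trace), 3), "final:", round(trace[-1], 3))
```

Raising the damping reduces the bounce; lowering the stiffness makes the motion slower and floppier, which is roughly the trade-off you tune for hair versus clothing.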

Next up we are gonna crack open the VRoid Studio model in Blender and look into its topology, weight painting, and shaders. GLTF defines a standard PBR material (metallic-roughness, with normal maps) in its spec, but leaves the actual shader implementation up to the application. VRM adds a custom toon shader, which blends between two colour maps based on the Lambertian shading, and this is going to be quite interesting to take apart.
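The basic idea of blending two colours by Lambertian shading can be sketched on the CPU like this. It is a generic toon ramp under assumed parameters (`threshold` and `softness` are invented names), not the exact MToon formula.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    """Scale a vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return (v[0] / length, v[1] / length, v[2] / length)


def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """Smooth 0-to-1 ramp between edge0 and edge1."""
    t = min(1.0, max(0.0, (x - edge0) / (edge1 - edge0)))
    return t * t * (3.0 - 2.0 * t)


def toon_shade(
    normal: Vec3,
    to_light: Vec3,
    lit: Vec3,
    shade: Vec3,
    threshold: float = 0.0,
    softness: float = 0.1,
) -> Vec3:
    """Blend from the shade colour to the lit colour based on N dot L."""
    n = normalize(normal)
    l = normalize(to_light)
    lambert = sum(a * b for a, b in zip(n, l))
    t = smoothstep(threshold - softness, threshold + softness, lambert)
    return (
        shade[0] * (1.0 - t) + lit[0] * t,
        shade[1] * (1.0 - t) + lit[1] * t,
        shade[2] * (1.0 - t) + lit[2] * t,
    )


lit_colour = (1.0, 0.8, 0.7)
shade_colour = (0.5, 0.3, 0.4)
print(toon_shade((0.0, 0.0, 1.0), (0.0, 0.0, 1.0), lit_colour, shade_colour))
print(toon_shade((0.0, 0.0, 1.0), (0.0, 0.0, -1.0), lit_colour, shade_colour))
```

A small `softness` gives the hard-edged cel look, while a larger one drifts back toward ordinary smooth shading. In a real shader the two colours would be sampled from texture maps per pixel rather than passed in as constants.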

The MToon shader is pretty solid, but ultimately I think I want to create custom shaders for my character. Shaders are something I specialise in at work, and I think it would be a great way to give her more of a unique identity. This will mean going beyond the VRM format, and I'll be looking into using the VNyan SDK to build on top of that.

More soon, watch this space!

9 notes

Text

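■ How レイシくん's Live2D model came about ■

I originally meant to build a Live2D portfolio myself, modelling included, as something that would be both a hobby and practically useful. But I dawdled and never got as far as actually studying modelling, and in the meantime

七式サジくん rigged it and got it moving for me.

ver1.0

https://twitter.com/347th/status/1310638973065203712

https://twitter.com/347th/status/1310646134348546050

https://twitter.com/347th/status/1310647210053230592

ver2.0

https://twitter.com/347th/status/1366775758480310274

※ Apps used: PrprLive → FaceRig → VTube Studio

ver3.0 ※ current latest model

七式くん gets to animate it however they like as practice material for model making, and I gratefully use the result as my avatar for game streams.

This does not mean ヤエヤマ has become a Vtuber.

※ The streams look like Vtuber streams no matter how you slice it, so I do use the Vtuber tag.

↓ Article introducing レイシくん ↓

I made a second model showcase video | 七式サジ@347th | pixivFANBOX

七式くん's nizima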

https://nizima.com/Profile/76599

1 note

Text

something about Prprlive

To use PrprLive, you first need to turn the 2D image into a model. This requires a separate piece of software called Live2D. Do the modeling there (the principle is a bit like the Puppet Pin tool in After Effects), and only then can you use PrprLive for face capture.

0 notes

Text

Vsee for linux

VSeeFace is a free, highly configurable face and hand tracking VRM and VSFAvatar avatar puppeteering program for virtual youtubers with a focus on robust tracking and high image quality. VSeeFace offers functionality similar to Luppet, 3tene, Wakaru and similar programs. VSeeFace runs on Windows 8 and above (64 bit only). Perfect sync is supported through iFacialMocap/FaceMotion3D/VTube Studio/MeowFace. VSeeFace can send, receive and combine tracking data using the VMC protocol, which also allows support for tracking through Virtual Motion Capture, Tracking World, Waidayo and more. Capturing with native transparency is supported through OBS’s game capture, Spout2 and a virtual camera.

Face tracking, including eye gaze, blink, eyebrow and mouth tracking, is done through a regular webcam. For the optional hand tracking, a Leap Motion device is required. You can see a comparison of the face tracking performance compared to other popular vtuber applications here. In this comparison, VSeeFace is still listed under its former name OpenSeeFaceDemo.

Running four face tracking programs (OpenSeeFaceDemo, Luppet, Wakaru, Hitogata) at once with the same camera input.

If you have any questions or suggestions, please first check the FAQ. If that doesn’t help, feel free to contact me, Emiliana_vt! Please note that Live2D models are not supported. For those, please check out VTube Studio or PrprLive.

To update VSeeFace, just delete the old folder or overwrite it when unpacking the new version. Old versions can be found in the release archive here. If you use a Leap Motion, update your Leap Motion software to V5.2 or newer! Just make sure to uninstall any older versions of the Leap Motion software first. If necessary, V4 compatibility can be enabled from VSeeFace’s advanced settings.

0 notes

Text

https://www.fiverr.com/share/RN3oYL

I will rig and design live2d models for vtube, prprlive, facerig, twitch, and gamers

3 notes

Video

my second stab at drawing and rigging a vtuber model - i challenged myself to see what i could rig in just 1 DAY - and this was the result! by no means a perfectly rigged model, it could use a little more refining, but i’m very happy with this for what it is!

213 notes

Text

I made a Live2D model! It’s not perfect but I’m pretty happy with it! I’m looking forward to using it during streams!

11 notes

Video

!!!!

#sock#GETTING THERE#vtuber#MY BOY MOVES#It's still kind of janky#which I think is also prprlive being a little janky on it's own-#but I am hoping to get it calibrated and all finished by the start of next week xwx#so I can start streaming and we can all hang out!!#I'm gonna stream slime rancher >:3

47 notes

Photo

Okay but actually this is cute and I didn’t expect it to turn out as well as it did. pairing the ears to the eyebrow movement works way better than I thought it would and having that bit of full body movement adds so much.

God hair is still a pain to rig though, but worth it.

#Q'ruhka#art#Live2D#I got it working in prprlive too#so I know it works#he does blink just for some reason the test animation in live2d.... doesn't???#I don't know why#so anyways if you want one hmu I guess#I would like to offer these as commissions#like imagine going to a zoom meeting as your character#show up to online class as a cat person#they are gonna be like 150-200$ though#but having a full chibi instead of just a headshot is cute

14 notes

Link

#vtuber model, #vtuber rig, #live2d, #live2d model, #vtuber #rigging, #vtube, #anime, #vtube #studio, #live 2d,#avatar #rigging, #anime model, #anime #rigging, #chibi rigging #Live2dCommissions

2 notes
