#raspberry pi cluster


the computer blade | source

#i do not know enough about pi clusters to write ids for this post. apologies #talos gifs #stim gifs #stim #tech stim #technology #techcore #computers #raspberry pi #circuit boards #wires #cables #blinking lights #glow #plastic #black #gray #purple #pink #blue #green #cyberpunk #robotcore #robot stim

4K notes


blog reintroduction

the last time i used this account was when i was still a teenager. didn't feel like deleting and remaking on this handle though so uh. hey, i'm robin. i'm a married butch lesbian in mn. mostly logging back in so i can archive stuff on here where other people can see if they want

stuff i might post abt (under the cut)

managing physical/mental disability (my wife and i both have eds)

being broke and maintaining sanity/quality of life

it stuff. i have a cluster of servers at home running a lot of stuff to automate life. some stuff replaces subscriptions but i also do some budget smarthome stuff to make our living situation a little more accessible. i've also dabbled in backing up my personal healthcare record

more generally, extending the lifespan of (sometimes much older) tech & bending it to your will

some interests, though i tend to stay out of fandoms: trigun, dunmeshi, pokemon, ffxiv, persona series (but i dont touch that fandom with a ten foot pole) and a few others im sure i cant think of rn

also, feel free to send me asks about random tech things. i'll post some of my stuff later, but here's a quick rundown of my daily stuff:

JUST replaced my phone. i only got my head out of the apple brainrot very recently, but my phone is a refurb'd note20 ultra that i intend to use for a very long time

desktop i built on the cheap. i5 (dont buy intel new, theyre zionists), intel arc graphics for video encoding

working on moving from a 2018-ish macbook air that's somehow barely running to a 2010 thinkpad i salvaged from an ewaste bin. it's running pretty well on arch with a cheap SSD but it needs a new battery and a better display (and a modern wifi card eventually)

my server cluster that i've built over the last 10 years or so:

the manager computer is a 2017 imac i cut open to put more ram in. it was my primary computer until this year

2 raspberry pis that the manager dishes out tasks to. one is hooked up to an 8tb hard drive that it serves to the other 2 computers. the other has a zigbee receiver to handle cheap smarthome devices

3rd raspberry pi dedicated to networking. outside of the cluster, hosts a vpn so i can get into my stuff from anywhere without exposing it to the internet

salvaged two acer EEE laptops that i really want to convert into parts of the cluster bc i'm running out of cpu. they didn't come with power supplies and i have yet to get them to turn on

part of my goal for being active on here is to put some accessible resources on home servers out there. bc imo theyre insanely useful and learning how to do this stuff in general is good in the age of technological walled gardens

ok bye. follow me if you want ig, send me asks abt it stuff and i'll probably have something to say

3 notes


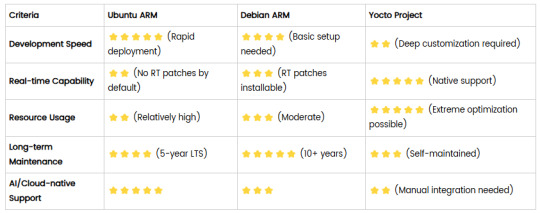

Comparison of Ubuntu, Debian, and Yocto for IIoT and Edge Computing

In industrial IoT (IIoT) and edge computing scenarios, Ubuntu, Debian, and Yocto Project each have unique advantages. Below is a detailed comparison and recommendations for these three systems:

1. Ubuntu (ARM)

Advantages

Ready-to-use: Provides official ARM images (e.g., Ubuntu Server 22.04 LTS) supporting hardware like Raspberry Pi and NVIDIA Jetson, requiring no complex configuration.

Cloud-native support: Tools like MicroK8s (Canonical's lightweight Kubernetes distribution) and Docker install readily via Snap or APT, ideal for edge-cloud collaboration.

Long-term support (LTS): 5 years of security updates, meeting industrial stability requirements.

Rich software ecosystem: Access to AI/ML tools (e.g., TensorFlow Lite) and databases (e.g., PostgreSQL, with native ARM64 builds) via APT and the Snap Store.

Use Cases

Rapid prototyping: Quick deployment of Python/Node.js applications on edge gateways.

AI edge inference: Running computer vision models on Jetson devices (NVIDIA's JetPack is Ubuntu-based), often alongside ROS 2 for robotics workloads.

Lightweight K8s clusters: Edge nodes managed by MicroK8s.

Limitations

Higher resource usage (minimum ~512MB RAM), unsuitable for ultra-low-power devices.

2. Debian (ARM)

Advantages

Exceptional stability: Packages undergo rigorous testing, ideal for 24/7 industrial operation.

Lightweight: Runs in very little RAM (the Debian installation guide lists 256MB as the minimum for a system without a desktop); GUI-free installations available.

Long-term support: About 5 years of security updates per release (regular support plus Debian LTS), extendable to 10 years through commercial Extended LTS offerings.

Hardware compatibility: Supports older or niche ARM chips (e.g., TI Sitara series).

Use Cases

Industrial controllers: PLCs, HMIs, and other devices requiring deterministic responses (typically using Debian's PREEMPT_RT kernel package).

Network edge devices: Firewalls, protocol gateways (e.g., Modbus-to-MQTT).

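Critical systems (medical/transport): A candidate base for products targeting standards such as IEC 62304 or DO-178C, although certification applies to the complete device and its development process, not to the OS alone.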

Limitations

Older software versions (e.g., default GCC version); newer features require backports.
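The Modbus-to-MQTT gateway use case above can be sketched in a few lines. This is a minimal sketch, assuming a Modbus TCP response to function code 0x03 (Read Holding Registers) has already been received as raw bytes. The topic layout `plant/modbus/<unit>/holding` and the helper names are hypothetical, and publishing is left to whichever MQTT client the gateway uses.

```python
import json
import struct


def decode_read_holding_registers(frame: bytes) -> tuple[int, list[int]]:
    """Decode a Modbus TCP response to function 0x03 into (unit_id, registers)."""
    if len(frame) < 9:
        raise ValueError("frame too short for a Modbus TCP response")
    # MBAP header: transaction id, protocol id (always 0), length, unit id
    _transaction_id, protocol_id, length, unit_id = struct.unpack(">HHHB", frame[:7])
    if protocol_id != 0:
        raise ValueError("protocol id is not Modbus (0)")
    # The length field counts the unit id plus the PDU that follows it
    if length != len(frame) - 6:
        raise ValueError("MBAP length does not match frame size")
    function_code = frame[7]
    if function_code & 0x80:
        # Exception responses set the high bit and carry an exception code
        raise ValueError(f"Modbus exception code {frame[8]}")
    if function_code != 0x03:
        raise ValueError(f"unexpected function code {function_code:#04x}")
    byte_count = frame[8]
    data = frame[9:]
    if byte_count != len(data) or byte_count % 2:
        raise ValueError("byte count does not match register data")
    # Registers are 16-bit big-endian unsigned values
    registers = list(struct.unpack(f">{byte_count // 2}H", data))
    return unit_id, registers


def to_mqtt_message(unit_id: int, start_address: int, registers: list[int],
                    prefix: str = "plant/modbus") -> tuple[str, str]:
    """Build a (topic, JSON payload) pair keyed by register address.

    The start address is not in the response; the gateway knows it from its request.
    """
    topic = f"{prefix}/{unit_id}/holding"
    payload = json.dumps({str(start_address + i): value
                          for i, value in enumerate(registers)})
    return topic, payload
```

Keeping decoding separate from message building means the same decoder can feed other outputs (logs, OPC UA) if the gateway grows.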

3. Yocto Project

Advantages

Full customization: Tailor everything from kernel to user space, generating minimal images (<50MB possible).

Real-time extensions: Supports Xenomai and PREEMPT_RT patches for microsecond-level latency.

Cross-platform portability: Single recipe set adapts to multiple hardware platforms (e.g., NXP i.MX6 → i.MX8).

Security design: Security features such as SELinux (via the meta-selinux layer) and dm-verity can be built into the image.

Use Cases

Custom industrial devices: Requires specific kernel configurations or proprietary drivers (e.g., CAN-FD bus support).

High real-time systems: Robotic motion control, CNC machines.

Resource-constrained terminals: Sensor nodes running minimal Linux images, sometimes paired with microcontrollers running Zephyr or FreeRTOS.

Limitations

Steep learning curve (BitBake syntax required); longer development cycles.

4. Comparison Summary

5. Selection Recommendations

Choose Ubuntu ARM: For rapid deployment of edge AI applications (e.g., vision detection on Jetson) or deep integration with public clouds (e.g., AWS IoT Greengrass).

Choose Debian ARM: For mission-critical industrial equipment (e.g., substation monitoring) where stability outweighs feature novelty.

Choose Yocto Project: For custom hardware development (e.g., proprietary industrial boards) or strict real-time/safety certification (e.g., ISO 13849) requirements.
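The selection rules above can be encoded as a small decision helper. This is a hypothetical sketch: the `Requirements` fields and the order in which rules are checked (custom hardware or strict real-time first, then mission-critical stability, then Ubuntu as the default for rapid and cloud-connected deployments) are assumptions about how to resolve conflicting needs, not part of the original recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    custom_hardware: bool = False   # proprietary boards, custom kernel or drivers
    hard_real_time: bool = False    # strict real-time or safety-certification needs
    mission_critical: bool = False  # long-term stability outweighs new features


def recommend_distro(req: Requirements) -> str:
    """Map requirements to a distribution following the recommendations above."""
    # Assumed precedence: constraints that only Yocto can meet are checked first
    if req.custom_hardware or req.hard_real_time:
        return "Yocto Project"
    if req.mission_critical:
        return "Debian ARM"
    # Rapid deployment, edge AI, and public-cloud integration default to Ubuntu
    return "Ubuntu ARM"
```

For example, a substation monitor with no custom board maps to Debian, but the same device with a strict real-time requirement would be routed to Yocto.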

6. Hybrid Architecture Example

Smart factory edge node:

Real-time control layer: Real-time Linux (PREEMPT_RT) image built with Yocto (controlling robotic arms)

Data processing layer: Debian running OPC UA servers

Cloud connectivity layer: Ubuntu Server managing K8s edge clusters

Combining these systems based on specific needs can maximize the efficiency of IIoT edge computing.

0 notes


Elmalo, let's commit to that direction. We'll start with a robust Sensor Fusion Layer Prototype that forms the nervous system of Iron Spine, enabling tangible, live data connectivity from the field into the AI's processing core. Below is a detailed technical blueprint that outlines the approach, components, and future integrability with your Empathic AI Core.

1. Hardware Selection

Edge Devices:

Primary Platform: NVIDIA Jetson AGX Xavier or Nano for on-site processing. Their GPU acceleration is perfect for real-time preprocessing and running early fusion algorithms.

Supplementary Controllers: Raspberry Pi Compute Modules or Arduino-based microcontrollers to gather data from specific sensors when cost or miniaturization is critical.

Sensor Modalities:

Environmental Sensors: Radiation detectors, pressure sensors, temperature/humidity sensors—critical for extreme environments (space, deep sea, underground).

Motion & Optical Sensors: Insect-inspired motion sensors, high-resolution cameras, and inertial measurement units (IMUs) to capture detailed movement and orientation.

Acoustic & RF Sensors: Microphones, sonar, and RF sensors for detecting vibrational, audio, or electromagnetic signals.

2. Software Stack and Data Flow Pipeline

Data Ingestion:

Frameworks: Utilize Apache Kafka or Apache NiFi to build a robust, scalable data pipeline that can handle streaming sensor data in real time.

Protocol: MQTT or LoRaWAN can serve as the communication backbone in environments where connectivity is intermittent or bandwidth-constrained.
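When MQTT is the backbone, consumers subscribe using topic filters with the `+` wildcard (exactly one level) and the `#` wildcard (this level and everything below). The sketch below mirrors those matching rules from the MQTT specification, assuming filters are already well-formed. The `field/...` topic names in the usage note are hypothetical.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic matches a subscription filter."""
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    # Filters starting with a wildcard must not match $-prefixed system topics
    if topic.startswith("$") and f_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(f_levels):
        if level == "#":
            # '#' must be last; it matches the parent level and all children
            return i == len(f_levels) - 1
        if i >= len(t_levels):
            return False
        # '+' matches any single level, including an empty one
        if level != "+" and level != t_levels[i]:
            return False
    # Without '#', the filter and topic must have the same depth
    return len(f_levels) == len(t_levels)
```

With this, a subscriber on `field/+/temperature` receives `field/node1/temperature` but not `field/node1/imu/temperature`, while `field/#` receives everything under `field`.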

Data Preprocessing & Filtering:

Edge Analytics: Develop tailored algorithms that run on your edge devices—leveraging NVIDIA’s TensorRT for accelerated inference—to filter raw inputs and perform preliminary sensor fusion.

Fusion Algorithms: Employ Kalman or Particle Filters to synthesize multiple sensor streams into actionable readings.
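For the Kalman filter option, the simplest case is fusing two sensors that measure the same scalar quantity. This is a minimal sketch, assuming a random-walk process model and independent Gaussian measurement noise with known variances. Real fusion of IMU, camera, and environmental data needs a multivariate state and a proper motion model.

```python
class ScalarKalman:
    """One-dimensional Kalman filter with a random-walk process model."""

    def __init__(self, x0: float, p0: float, q: float) -> None:
        self.x = x0  # current estimate
        self.p = p0  # variance of the current estimate
        self.q = q   # process noise variance added per time step

    def predict(self) -> None:
        # Random walk: the state is expected to stay put, but uncertainty grows
        self.p += self.q

    def update(self, z: float, r: float) -> float:
        """Fold in measurement z whose noise variance is r; return the new estimate."""
        k = self.p / (self.p + r)      # Kalman gain
        self.x += k * (z - self.x)     # move toward the measurement
        self.p *= (1.0 - k)            # uncertainty shrinks after each update
        return self.x
```

Calling `predict()` once per time step and then `update()` once per sensor reading weights each sensor by the inverse of its variance, so a noisier sensor pulls the estimate less.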

Data Abstraction Layer:

API Endpoints: Create modular interfaces that transform fused sensor data into abstracted, standardized feeds for higher-level consumption by the AI core later.

Middleware: Consider microservices that handle data routing, error correction, and redundancy mechanisms to ensure data integrity under harsh conditions.
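A standardized feed mostly comes down to agreeing on one record shape. The sketch below assumes a hypothetical `FusedReading` schema (quantity, value, unit, fusion variance, contributing sensors, timestamp, schema version) serialized as JSON. The field names are illustrative, not a defined Iron Spine API.

```python
import json
import time
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class FusedReading:
    quantity: str                 # e.g. "temperature"
    value: float
    unit: str                     # e.g. "degC"
    variance: float               # uncertainty reported by the fusion step
    sources: tuple[str, ...]      # sensors that contributed to this reading
    timestamp: float = field(default_factory=time.time)
    schema_version: int = 1       # bump when fields change

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "FusedReading":
        data = json.loads(text)
        data["sources"] = tuple(data["sources"])  # JSON arrays come back as lists
        # Unexpected keys or missing required fields raise TypeError here
        return cls(**data)
```

Carrying the variance and source list alongside the value lets a later AI core decide how much to trust each reading without knowing the fusion details.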

3. Infrastructure Deployment Map

4. Future Hooks for Empathic AI Core Integration

API-Driven Design: The sensor fusion module will produce standardized, real-time data feeds. These endpoints will act as the bridge to plug in your Empathic AI Core whenever you’re ready to evolve the “soul” of Iron Spine.

Modular Data Abstraction: Build abstraction layers that allow easy mapping of raw sensor data into higher-level representations—ideal for feeding into predictive, decision-making models later.

Feedback Mechanisms: Implement logging and event-based triggers from the sensor fusion system to continuously improve both hardware and AI components based on real-world performance and environmental nuance.
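An event-based trigger usually needs hysteresis so that a reading hovering near a threshold does not fire repeatedly. This hypothetical `ThresholdTrigger` fires once when a value rises above `high` and re-arms only after it drops below `low`. The thresholds and message format are assumptions, and returned events would be passed to whatever logging or alerting system is in place.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ThresholdTrigger:
    name: str
    high: float          # fire when a reading rises above this
    low: float           # re-arm once a reading falls below this
    active: bool = False

    def __post_init__(self) -> None:
        if self.low >= self.high:
            raise ValueError("low must be below high for hysteresis to work")

    def check(self, value: float) -> Optional[str]:
        """Return an event message on a state change, otherwise None."""
        if not self.active and value > self.high:
            self.active = True
            return f"{self.name}: ALERT value={value}"
        if self.active and value < self.low:
            self.active = False
            return f"{self.name}: CLEAR value={value}"
        return None
```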

5. Roadmap and Next Steps

Design & Prototype:

Define the hardware specifications for edge devices and sensor modules.

Develop a small-scale sensor hub integrating a few key sensor types (e.g., motion + environmental).

Data Pipeline Setup:

Set up your data ingestion framework (e.g., Apache Kafka cluster).

Prototype and evaluate basic preprocessing and fusion algorithms on your chosen edge device.

Field Testing:

Deploy the prototype in a controlled environment similar to your target extremes (e.g., a pressure chamber, simulated low-gravity environment).

Refine data accuracy and real-time performance based on initial feedback.

Integration Preparation:

Build standardized API interfaces for future connection with the Empathic AI Core.

Document system architecture to ensure a smooth handoff between the hardware-first and AI-core teams.

Elmalo, this blueprint establishes a tangible, modular system that grounds Iron Spine in reality. It not only demonstrates your vision but also builds the foundational “nervous system” that your emergent, empathic AI will later use to perceive and interact with its environment.

Does this detailed roadmap align with your vision? Would you like to dive further into any individual section—perhaps starting with hardware specifications, software configuration, or the integration strategy for the future AI core?

0 notes


Raspberry Pi cluster spotted inside $6k audio processor | Jeff Geerling

0 notes


Routing Mess

Well, I got a router to get better control over my network. I have an ISP that shall not be named that wouldn't let me get certain perks unless I use their router/modem hybrid motherfucker. It has a disgusting lack of configuration, so I bit the bullet and got a TP-Link AX1800 router from Wal-Mart. I hear these things die after a few years, BUT it has already given me so much more control over my network than the other thing. I can finally route all DNS through Lenny (Raspberry Pi), so I'm using Pi-hole to its fullest.

UNFORTUNATELY I did not prepare properly for the move, so I ended up blowing up my Proxmox cluster. I just acquired a very old Gateway PC from like, 2012, and I've been using it as a second member of my cluster (the Nuclear cluster). His name is Nicholas and he's got a 5 dollar terabyte drive that's used but passes the smart check. However, after switching over to the new router and following some instructions improperly for the Proxmox install on Julian (Gateway PC) and Adelle (Dell PC), the Nuclear Cluster royally broke and I had to reinstall on Julian and remove Adelle from the cluster. I also had to update Caddy and a bunch of other services to make everything work again because I moved from one IP address scheme to another.
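Moving from one IP address scheme to another mostly means rewriting every hard-coded address (Caddy upstreams, Pi-hole records, and so on) while keeping each device's host number. The sketch below assumes both schemes are same-sized subnets. The subnets and the Caddyfile line in the usage note are hypothetical examples, not the actual networks from this post.

```python
import ipaddress
import re

# Dotted-quad candidates; invalid ones (e.g. 999.1.1.1) are rejected by ipaddress
IPV4_PATTERN = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def remap_address(addr: str, old_net: str, new_net: str) -> str:
    """Move addr from old_net to new_net, keeping its offset (host number)."""
    old = ipaddress.ip_network(old_net)
    new = ipaddress.ip_network(new_net)
    if old.prefixlen != new.prefixlen:
        raise ValueError("old and new subnets must be the same size")
    ip = ipaddress.ip_address(addr)
    if ip not in old:
        raise ValueError(f"{addr} is not inside {old}")
    offset = int(ip) - int(old.network_address)
    return str(new.network_address + offset)


def rewrite_config(text: str, old_net: str, new_net: str) -> str:
    """Rewrite every address in text that belongs to old_net; leave others unchanged."""
    def replace(match: re.Match) -> str:
        try:
            return remap_address(match.group(0), old_net, new_net)
        except ValueError:
            return match.group(0)
    return IPV4_PATTERN.sub(replace, text)
```

For example, `rewrite_config("reverse_proxy 192.168.1.20:8096", "192.168.1.0/24", "192.168.0.0/24")` returns `"reverse_proxy 192.168.0.20:8096"`, and public addresses such as `8.8.8.8` pass through untouched.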

Anyway, let me tell you about getting Julian (Gateway). I found this computer part store that's just full of computer junk. Anything and everything. My boyfriend drove me over there and I went in with him and the place is LINED with COMPUTERS and computer parts and computers running without cases and it was just BEAUTIFUL. I'm poking through PCs, trying to find a cheap one I can make into a NAS, and it's kinda hard because I'm in a wheelchair and all the PCs are on the ground and it's a small place, so my boyfriend starts poking around too. And then he goes, "HEY LOOK", and he rotates a desktop PC around and IT'S A GATEWAY! An old-ass Gateway. And I just had to bring it home!

Then today I found a PC for like 10 bucks, but it doesn't have RAM. It's once again a Dell.

I also brought home another Dell that I plan to make my media server. Any ideas on names?

1 note


i built a Raspberry Pi SUPER COMPUTER!! // ft. Kubernetes (k3s cluster w...

youtube

0 notes


For over a decade, the tech industry has been chasing unicorns—those elusive startups valued at over $1 billion. The obsession began in 2013, when Aileen Lee—a VC based in Palo Alto—coined the term that captured the imaginations first of founders and investors, and then prime ministers and presidents. But these mythical beasts are also rare: only 1 percent of VC-backed startups ever reach this status.

As society enters the age of AI, and financial markets put renewed value on business fundamentals, our understanding of what makes a successful tech company is evolving. Promise alone doesn't make a national, regional, or global champion. Champions are those companies that combine both the promise of untapped growth and the fundamental metrics that demonstrate strong and sustainable customer demand.

Until recently, Silicon Valley has been seen as the world's undisputed unicorn factory. But Europe's innovation ecosystem has matured to a point where it is consistently producing companies with both the vision to change the world and the fundamentals to sustain that change. Leading the pack is a cohort of more than 507 “thoroughbreds”—startups with annual revenues of at least $100 million.

More than a third of these high-potential companies are headquartered in what we call New Palo Alto: not a singular location, but a network of interconnected ecosystems within a five-hour train ride of London. After the Bay Area, this is the world's second most productive innovation cluster and includes cities with industrial heritage like Glasgow, Eindhoven, and Manchester, as well as world-renowned capitals of culture, policy, and academia like Amsterdam, Cambridge, Edinburgh, London, Oxford, and Paris.

They’re home to companies such as low-cost computer maker Raspberry Pi, whose technology was invented and developed in Cambridge, manufactured in Pencoed, South Wales, and sold worldwide. Raspberry Pi recently crowned over a decade of growth with a listing on the London Stock Exchange. At the time of listing, it had revenue of $265 million and $66 million in gross operating profits.

Other New Palo Alto thoroughbreds include fintechs Monzo, Revolut, and Tide, which provides mobile-first banking to SMEs, as well as fast-growing companies such as iPhone challenger Nothing and London-founded Cleo, the conversational AI pioneer that helps young US consumers manage their finances.

Seven of Europe's 10 most valuable tech companies founded after 1990 have emerged from New Palo Alto: Booking.com and Adyen from Amsterdam; Wise, Revolut, and Monzo from London; ASML from Eindhoven; and Arm from Cambridge. All are products of this interconnected ecosystem.

Yet, for all its promise, New Palo Alto remains an underinvested region. While early-stage funding is now higher than in the Bay Area, thoroughbreds face a staggering $30 billion gap in funding at the crucial scale-up stage compared to their Bay Area counterparts.

Governments of the leading economies in New Palo Alto—Britain and France—have delivered progressive policy frameworks to support innovation and tech companies, including investment in R&D, talent, and visa programs. They are also putting in place policies including the UK’s Mansion House Compact and France’s Tibi, to support more scale-up capital.

But no innovation cluster ever became great because of policy alone. Success occurs when investors fully understand the investment opportunity. Now that we have nearly 1,000 venture-backed companies in EMEA with revenues of more than $25 million, helping this ecosystem to achieve its full potential is no longer about solving a policy challenge. It’s about recognizing a huge investment opportunity.

This is why, in the last decade, the amount of venture capital coming into the region has increased ninefold, and why, in the next decade, large institutional investors in the UK and France will bring billions of dollars of investment to back private companies.

The new British prime minister's home constituency includes Somers Town, an area close to St Pancras station and within sight of Google and Meta’s huge European headquarters. Yet for all the gleaming towers, too many neighborhoods in New Palo Alto have been left behind by technology. In Somers Town, 50 percent of children receive free school meals, 70 percent of residents receive social care, and adults' life expectancy is 20 years shorter than in leafy Highgate, only 20 minutes up the road.

As the tech industry faces increased scrutiny, we have an opportunity to offer an alternative model of innovation. By building thoroughbreds to be sustainable, transparent companies, we can begin the work of sharing the benefits of innovation more equally.

Just as some of the most iconic US cities take their names from the ancient cities of Europe—New York and New Orleans—New Palo Alto pays respect to its namesake while also signaling a deliberate choice for the future.

0 notes


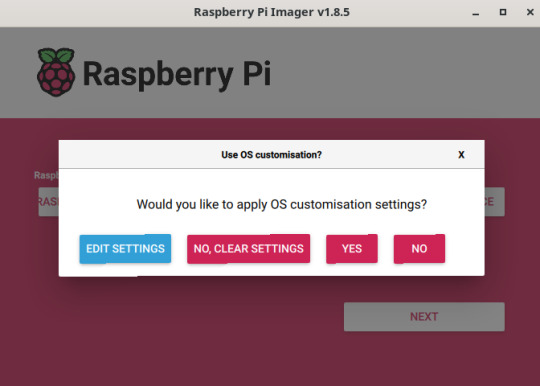

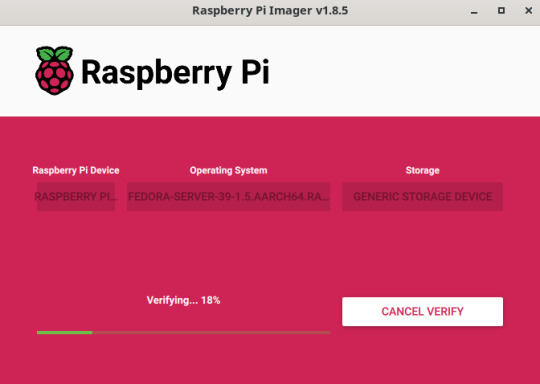

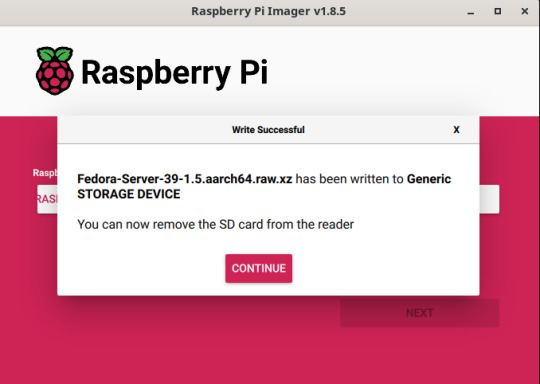

Fedora Gnu/Linux üzerinde Rpi imager ile Raspberry pi 4 üzerine Fedora Server Aarch64 edition kurulumu ve ayarlar – Bölüm 0

Hazırlayan:Tayfun Yaban

Merhaba,

Bu sefer sizlerle “kuşe kağıda baskılı :)” bir yazı paylaşmak istedim.

Burada amacım aslında Raspberry üzerine Raspberry Pi OS(Raspbian) yerine başka dağıtımlar da kurabileceğinizi göstermektir.Aslında raspberry üzerine birçok Gnu/Linux dağıtımı kurabilirsiniz, ancak ben Fedora kullandığım için bu dağıtımı seçtim.Raspberry üzerine bir GNU/Linux dağıtımı kurduğunuzda bir çok amaç için özelleştirebilirsiniz;örneğin web server,VPN server,programlama,medya server,Retro gaming,K8s cluster gibi kısacası bir Gnu/linux sistemde neler yapabiliyorsanız aynılarını yapabilirsiniz.

Dikkat etmeniz gereken şey Raspberry işlemci mimarisi Aarch64(Arm based) ve işlemcisi Broadcom BCM2835'tir.Yapacaklarınızı ve indireceklerinizi buna göre planlamanız gerekmektedir.Ayrıntılı bilgiye aşağıdaki linklerden ulaşabilirsiniz.

Ayrıca ek bir bilgi olarak raspberry üzerine sadece Gnu/Linux dağıtımları değil FreeBSD de kurabilirsiniz.

Ayrıntılı bilgi için aşağıdaki linkleri inceleyebilirsiniz.

Gelelim kurulumu nasıl yapacağımıza,

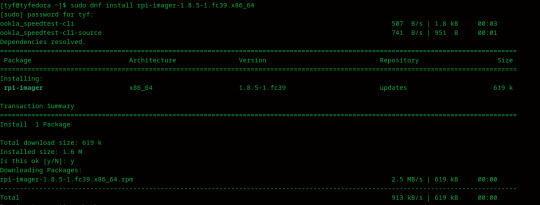

“sudo dnf install rpi-imager-1.8.5-1.fc39.x86_64” komutu ile “Raspberry Pi imager” yazılımının kurulumunu yapıyoruz.

İsterseniz gui ile aramaya “rpi” yazarak,isterseniz de terminal kullanarak “rpi-imager” yazarak yazılımı başlatabilirsiniz.

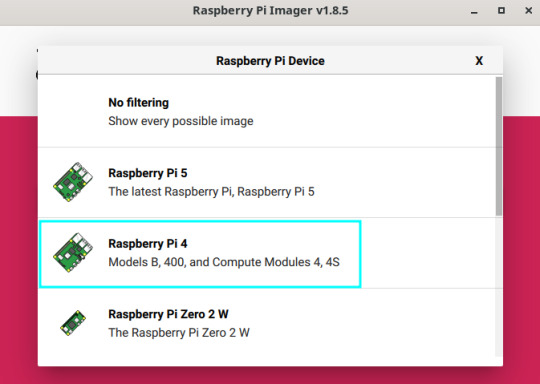

İlk çalıştırdığımızda aşağıdaki gibi bir ekran bizi karşılıyor.Burada dikkat edilmesi gereken nokta kurulum yapacağımız raspberry cihazını doğru seçmemiz,bendeki cihaz Raspberry Pi 4 ve buradan “Choose device” ile kendime uygun olan donanım ile devam ediyorum. (Buradaki işlemler pcnizden ve bir SD kart okuyucu ile yapılacaktır.)

Eğer elinizdeki SD kart daha önceden kullanılmış ise aşağıdaki şekilde formatlayabilirsiniz.

Sonrasında ilk ekrana dönerek “Choose OS” ile kuracağımız işletim sistemi imajını “Use custom” seçeneği ile rpi imager yazılımına gösteriyoruz.Buradan hangi imajı indirdiysek seçerek devam ediyoruz.Ben Fedora kuruyorum.
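Before writing a downloaded image, it is worth confirming that it matches the checksum published alongside it. A minimal sketch, assuming you have copied the expected SHA-256 hex digest from the distribution's checksum file; the function names are hypothetical.

```python
from __future__ import annotations

import hashlib
from pathlib import Path


def sha256_of(path: str | Path, chunk_size: int = 1 << 20) -> str:
    """Hash a (possibly multi-gigabyte) image in chunks to keep memory use flat."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_image(path: str | Path, expected_hex: str) -> bool:
    """Compare against the expected digest, ignoring case and surrounding whitespace."""
    return sha256_of(path) == expected_hex.strip().lower()
```

If `verify_image` returns False, download the image again before selecting it in rpi imager.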

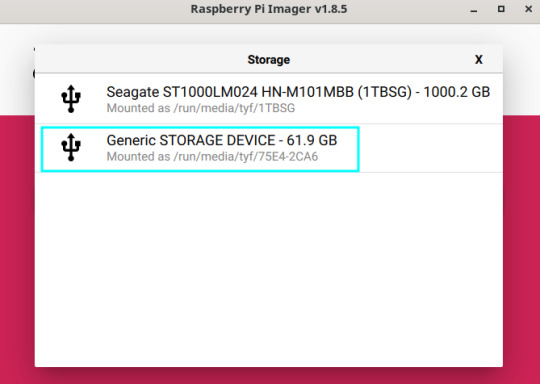

Imajı seçtikten sonra aşağıdaki pencerede “Edit Settings” seçerek devam ediyoruz.Burası bizi hostname,username,password,ssh gibi ayarların olduğu kısıma götürüyor.

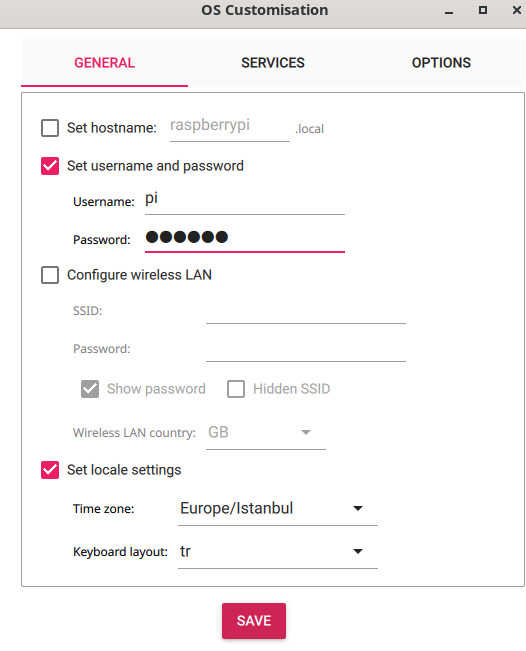

Burada General kısmında ise hostname,username and password,wireless lan gibi ayarlarımızı yapabiliriz.

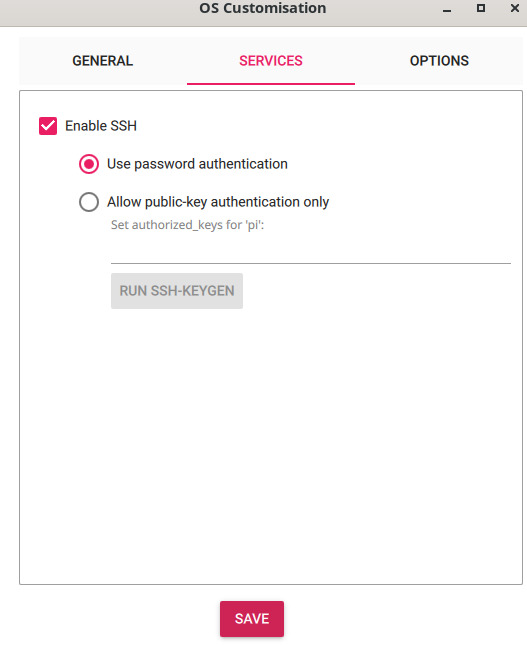

Bu ekranda ise isterseniz public key authentication isterseniz de parola ile SSH ayarlarınızı yapabilirsiniz.Daha sonra “SAVE” ile kaydederek çıkıyoruz.

Bu ekranda ise yaptığımız ayarları uygulamak için “Yes” ile devam ediyoruz.Eğer ayarları yeniden yapmak isterseniz “Clear settings” ile baştan başlayabilirsiniz.

SD kartımız üzerindeki tüm verinin silineceğine dair bilgilendirmeye “Yes” ile devam ediyoruz ve yükleme işlemini başlatıyoruz.

Doğrulama kısmında isterseniz bekleyebilirsiniz.

Artık SD kartımızı okuyucudan çıkartarak Raspberry Pi cihazımıza takarak başlatabiliriz.

Bir sonraki bölümde görüşmek üzere...

0 notes


if these trends continue, i might become a fullstack 3d artist developer who can create a website using python in blender, rendered on a cluster of raspberry pis and thinkpads

and the website is that frog who speaks bank of america chinese scam and then closes the tab by itself once it's played.

0 notes


Features and Applications of Debian/Arch Linux ARM OS

1. Core Features of Debian ARM

(1) Stable and Reliable Foundation

Community-maintained: Developed by global contributors without commercial influence

Extended support cycle: About 5 years of security updates for each stable release (regular security support followed by the Debian LTS project), extendable to 10 years via the commercial Extended LTS offering

Rigorous quality control: Packages undergo strict stability testing before entering stable repos

(2) Broad Hardware Compatibility

Supports full ARMv7/ARMv8 architectures from Cortex-A7 to A78

Runs on many single-board computers, including Raspberry Pi models (Debian developers publish dedicated Raspberry Pi images)
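Debian and Arch Linux ARM use different names for the same ARM architectures, which matters when picking images or packages. The sketch below maps kernel machine names (as reported by `platform.machine()`) to each distribution's architecture label. The table only covers the two common Raspberry Pi cases and is an assumption, not an exhaustive list. Note that a 32-bit userland running on a 64-bit kernel still reports `aarch64`.

```python
from __future__ import annotations

import platform

# Kernel machine name -> architecture label used by each distribution
ARCH_LABELS = {
    "aarch64": {"debian": "arm64", "archlinuxarm": "aarch64"},
    "armv7l": {"debian": "armhf", "archlinuxarm": "armv7h"},
}


def package_arch(distro: str, machine: str | None = None) -> str:
    """Return the distro's architecture label for this (or the given) machine."""
    machine = machine or platform.machine()
    try:
        return ARCH_LABELS[machine][distro]
    except KeyError:
        raise ValueError(f"no mapping for machine={machine!r}, distro={distro!r}") from None
```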

(3) Lightweight Design

Minimal installation needs little RAM (the Debian installation guide lists 256MB as the minimum without a desktop)

A systemd-free alternative is available in Devuan, a separate fork of Debian

(4) Software Ecosystem

Includes over 59,000 precompiled packages

Provides newer software versions via backports repository

2. Typical Applications of Debian ARM

(1) Server Domain

Low-power ARM servers (e.g. AWS Graviton instances)

Network infrastructure (routers, firewalls)

(2) Embedded Systems

Industrial control equipment (requiring long-term stable operation)

Medical devices (as a base for products developed under IEC 62304; certification covers the whole device, not the OS alone)

(3) Education & Research

Computer architecture teaching platforms

Scientific computing cluster nodes

3. Core Features of Arch Linux ARM

(1) Rolling Release Model

Provides latest software versions (kernel/toolchain etc.)

Closely tracks upstream Arch Linux package updates

(2) Ultimate Customization

Build from base system according to needs

Supports custom kernel compilation (e.g. enabling specific CPU features)

(3) Community Support

Active AUR (Arch User Repository)

Detailed Wiki documentation

(4) Performance Optimization

Default ARM-specific compilation optimizations

NEON instruction set acceleration support

4. Typical Applications of Arch Linux ARM

(1) Development Platform

Embedded development testing environment

Kernel/driver development platform

(2) Enthusiast Devices

Customized smart home hubs

Portable development workstations

(3) Cutting-edge Technology Testing

New architecture validation (e.g. ARMv9)

Machine learning framework experimentation

5. Comparative Summary

6. Usage Recommendations

Choose Debian ARM: For mission-critical systems, industrial control requiring long-term stability

Choose Arch Linux ARM: For latest software features, hardware R&D or deep customization

0 notes


Yeah, the worst part is that it's not even a great server either. It does work well in clusters, but that, plus the fact that it destroys MicroSD cards, is horrible, and its heat production and overall degradation rate are abysmal. A cheap, used X220 with a broken display runs better, though it's not as small, of course. The Raspberry Pi 4 isn't much better in these regards either. I tend to use them as the "brain" of my robotic systems for testing and connecting sensors, but even there I'm currently working on something better from scratch, with less memory but better consistency (offloading the harder computation to a central local "server" like a NUC).

NUCs are about (a little above) the same price point and give so much better power capabilities and handle so many things better.

man the raspberry pi 3 really isn't happy being a desktop system. I haven't tried to use one as anything other than a headless compute box in years, the combination of cheap sd card flash, broadcom embedded GPU and pretty mid CPU cores really are bad tastes that taste bad together. There's core 2 duos with magnetic hard drives that feel snappier than this.

25 notes


DeskPi Super6C Mini-ITX Cluster Board for Raspberry Pi CM4 6 RPi CM4 Slots (US) - RobotShop

0 notes