#redshift migration


Best Practices for a Smooth Data Warehouse Migration to Amazon Redshift

In the era of big data, many organizations find themselves outgrowing traditional on-premises data warehouses. Moving to a scalable, cloud-based platform like Amazon Redshift is an attractive option for companies looking to improve performance, cut costs, and gain flexibility in their data operations. However, data warehouse migration to AWS, particularly to Amazon Redshift, can be complex and calls for careful planning and precise execution. In this article, we’ll explore best practices for a Redshift migration, covering essential steps from planning to optimization.

1. Establish Clear Objectives for Migration

Before diving into the technical process, it’s essential to define clear objectives for your data warehouse migration to AWS. Are you primarily looking to improve performance, reduce operational costs, or increase scalability? Understanding the ‘why’ behind your migration will help guide the entire process, from the tools you select to the migration approach.

For instance, if your main goal is to reduce costs, you’ll want to explore Amazon Redshift’s on-demand (pay-as-you-go) pricing or reserved nodes for predictable workloads. On the other hand, if performance is your focus, configuring the right nodes and optimizing queries will become a priority.

2. Assess and Prepare Your Data

Data assessment is a critical step in ensuring that your Redshift data warehouse can support your needs post-migration. Start by categorizing your data to determine what should be migrated and what can be archived or discarded. AWS provides tools like the AWS Schema Conversion Tool (SCT), which helps assess and convert your existing data schema for compatibility with Amazon Redshift.

For structured data that fits into Redshift’s SQL-based architecture, SCT can automatically convert schema from various sources, including Oracle and SQL Server, into a Redshift-compatible format. However, data with more complex structures might require custom ETL (Extract, Transform, Load) processes to maintain data integrity.
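The categorization step described above can be sketched in a few lines of Python. This is only an illustration: the table names, dates, and the one-year and five-year staleness thresholds are hypothetical assumptions, not AWS guidance, and a real assessment would also weigh compliance and business rules.

```python
from datetime import date

# Hypothetical inventory: (table name, date the table was last queried).
INVENTORY = [
    ("orders", date(2024, 5, 1)),
    ("web_logs_2015", date(2022, 1, 10)),
    ("tmp_export", date(2016, 3, 2)),
]


def categorize(last_queried, today, archive_after_days=365, discard_after_days=5 * 365):
    """Return 'migrate', 'archive', or 'discard' based on how stale a table is."""
    age_days = (today - last_queried).days
    if age_days >= discard_after_days:
        return "discard"
    if age_days >= archive_after_days:
        return "archive"
    return "migrate"


def plan(inventory, today):
    """Map every table name in the inventory to its proposed category."""
    return {name: categorize(last_queried, today) for name, last_queried in inventory}


migration_plan = plan(INVENTORY, today=date(2024, 6, 1))
for table, action in migration_plan.items():
    print(f"{table}: {action}")
```

Tables marked "discard" would normally still go through a manual review before anything is deleted.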

3. Choose the Right Migration Strategy

There are several strategies for migrating to Amazon Redshift, each suited to different scenarios:

Lift and Shift: This approach involves migrating your data with minimal adjustments. It’s quick but may require optimization post-migration to achieve the best performance.

Re-architecting for Redshift: This strategy involves redesigning data models to leverage Redshift’s capabilities, such as columnar storage and distribution keys. Although more complex, it ensures optimal performance and scalability.

Hybrid Migration: In some cases, you may choose to keep certain workloads on-premises while migrating only specific data to Redshift. This strategy can help reduce risk and maintain critical workloads while testing Redshift’s performance.

Each strategy has its pros and cons, and selecting the best one depends on your unique business needs and resources. For a fast-tracked, low-cost migration, lift-and-shift works well, while those seeking high-performance gains should consider re-architecting.

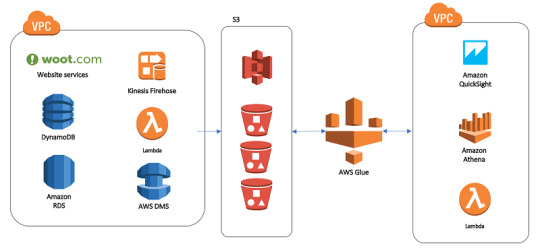

4. Leverage Amazon’s Native Tools

AWS provides a suite of tools that streamline and enhance the migration process:

AWS Database Migration Service (DMS): This service facilitates seamless data migration by enabling continuous data replication with minimal downtime. It’s particularly helpful for organizations that need to keep their data warehouse running during migration.

AWS Glue: Glue is a serverless data integration service that can help you prepare, transform, and load data into Redshift. It’s particularly valuable when dealing with unstructured or semi-structured data that needs to be transformed before migrating.

Using these tools allows for a smoother, more efficient migration while reducing the risk of data inconsistencies and downtime.

5. Optimize for Performance on Amazon Redshift

Once the migration is complete, it’s essential to take advantage of Redshift’s optimization features:

Use Sort and Distribution Keys: Redshift relies on distribution keys to define how data is stored across nodes. Selecting the right key can significantly improve query performance. Sort keys, on the other hand, help speed up query execution by reducing disk I/O.

Analyze and Tune Queries: Post-migration, analyze your queries to identify potential bottlenecks. Redshift’s query optimizer can help tune performance based on your specific workloads, reducing processing time for complex queries.

Compression and Encoding: Amazon Redshift offers automatic compression, reducing the size of your data and enhancing performance. Using columnar storage, Redshift efficiently compresses data, so be sure to implement optimal compression settings to save storage costs and boost query speed.
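To make the key and encoding choices above concrete, here is a minimal sketch that assembles a Redshift CREATE TABLE statement with a distribution key, a sort key, and per-column encodings. The `sales` table, its columns, and the helper name `build_create_table` are hypothetical; the right keys and encodings depend on your own query patterns.

```python
def build_create_table(table, columns, distkey, sortkeys):
    """Build a Redshift CREATE TABLE statement as a string.

    columns is a list of (name, sql_type, encoding) tuples.
    """
    column_names = [name for name, _sql_type, _encoding in columns]
    # Fail early if a key refers to a column that does not exist.
    for key in [distkey, *sortkeys]:
        if key not in column_names:
            raise ValueError(f"{key!r} is not a column of {table}")
    column_lines = ",\n".join(
        f"    {name} {sql_type} ENCODE {encoding}"
        for name, sql_type, encoding in columns
    )
    return (
        f"CREATE TABLE {table} (\n{column_lines}\n)\n"
        f"DISTSTYLE KEY\n"
        f"DISTKEY ({distkey})\n"
        f"SORTKEY ({', '.join(sortkeys)});"
    )


# Hypothetical fact table: rows are distributed by customer and sorted by date.
ddl = build_create_table(
    "sales",
    [
        ("sale_id", "BIGINT", "az64"),
        ("customer_id", "BIGINT", "az64"),
        ("sale_date", "DATE", "raw"),
        ("notes", "VARCHAR(256)", "zstd"),
    ],
    distkey="customer_id",
    sortkeys=["sale_date"],
)
print(ddl)
```

Distributing on a column that is frequently used in joins keeps matching rows on the same node, while sorting on a date column lets range-filtered queries skip blocks they do not need.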

6. Plan for Security and Compliance

Data security and regulatory compliance are top priorities when migrating sensitive data to the cloud. Amazon Redshift includes various security features such as:

Data Encryption: Use encryption options, including encryption at rest using AWS Key Management Service (KMS) and encryption in transit with SSL, to protect your data during migration and beyond.

Access Control: Amazon Redshift supports AWS Identity and Access Management (IAM) roles, allowing you to define user permissions precisely, ensuring that only authorized personnel can access sensitive data.

Audit Logging: Redshift’s logging features provide transparency and traceability, allowing you to monitor all actions taken on your data warehouse. This helps meet compliance requirements and secures sensitive information.
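One way to keep the controls above from slipping during a migration is a simple pre-cutover checklist. The sketch below assumes a hypothetical settings snapshot; the key names are illustrative and do not correspond to an AWS API response.

```python
# Hypothetical checklist; the key names are illustrative, not an AWS API.
REQUIRED_SETTINGS = {
    "encrypted_at_rest": True,
    "require_ssl": True,
    "audit_logging": True,
}


def find_security_gaps(settings):
    """Return the sorted names of required controls that are missing or disabled."""
    return sorted(
        name
        for name, expected in REQUIRED_SETTINGS.items()
        if settings.get(name) != expected
    )


# Example snapshot: SSL is not enforced and audit logging was never configured.
cluster_settings = {"encrypted_at_rest": True, "require_ssl": False}
gaps = find_security_gaps(cluster_settings)
print(gaps)
```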

7. Monitor and Adjust Post-Migration

Once the migration is complete, establish a monitoring routine to track the performance and health of your Redshift data warehouse. Amazon Redshift offers built-in monitoring features through Amazon CloudWatch, which can alert you to anomalies and allow for quick adjustments.

Additionally, be prepared to make adjustments as you observe user patterns and workloads. Regularly review your queries, data loads, and performance metrics, fine-tuning configurations as needed to maintain optimal performance.
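As a rough illustration of the kind of anomaly check a monitoring routine might apply, the sketch below flags metric samples that sit far above a rolling baseline. The sample values, window size, and three-sigma threshold are assumptions chosen for the example; this is not how CloudWatch computes its alarms.

```python
from statistics import mean, stdev


def find_anomalies(samples, baseline_size=5, threshold_sigmas=3.0):
    """Return indexes of samples well above the mean of the preceding window."""
    anomalies = []
    for i in range(baseline_size, len(samples)):
        window = samples[i - baseline_size:i]
        limit = mean(window) + threshold_sigmas * stdev(window)
        if samples[i] > limit:
            anomalies.append(i)
    return anomalies


# Hypothetical CPU utilization readings (percent), one per interval.
cpu_percent = [41, 43, 40, 42, 44, 43, 95, 42]
spikes = find_anomalies(cpu_percent)
print(spikes)
```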

Final Thoughts: Migrating to Amazon Redshift with Confidence

Migrating your data warehouse to Amazon Redshift can bring substantial advantages, but it requires careful planning, robust tools, and continuous optimization to unlock its full potential. By defining clear objectives, preparing your data, selecting the right migration strategy, and optimizing for performance, you can ensure a seamless transition to Redshift. Leveraging Amazon’s suite of tools and Redshift’s powerful features will empower your team to harness the full potential of a cloud-based data warehouse, boosting scalability, performance, and cost-efficiency.

Whether your goal is improved analytics or lower operating costs, following these best practices will help you make the most of your Amazon Redshift data warehouse, enabling your organization to thrive in a data-driven world.

#data warehouse migration to aws#redshift data warehouse#amazon redshift data warehouse#redshift migration#data warehouse to aws migration#data warehouse#aws migration


Maximizing Performance: Tips for a Successful MySQL to Redshift Migration Using Ask On Data

Migrating your data from MySQL to Redshift can be a significant move for businesses looking to scale their data infrastructure, optimize query performance, and take advantage of advanced analytics capabilities. However, the process requires careful planning and execution to ensure a smooth transition. A well-executed MySQL to Redshift migration can lead to several notable benefits that can enhance your business's ability to make data-driven decisions.

Key Benefits of Migrating from MySQL to Redshift

Improved Query Performance

One of the main reasons for migrating from MySQL to Redshift is the need for enhanced query performance, particularly for complex analytical workloads. MySQL, being a transactional database, can struggle with running complex queries over large datasets. In contrast, Redshift is designed specifically for online analytical processing (OLAP), making it highly efficient for querying large volumes of data. By utilizing columnar storage and massively parallel processing (MPP), Redshift can execute queries much faster, improving performance for analytics, reporting, and real-time data analysis.
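A toy example can show why columnar storage helps analytical queries. The sketch below is a simplified model and not Redshift's actual storage engine: it counts how many values must be read to sum a single column when data is laid out by row versus by column. The sample orders are hypothetical.

```python
# Hypothetical orders, stored row by row as a transactional database would.
rows = [
    {"order_id": 1, "customer": "a", "region": "EU", "amount": 30.0},
    {"order_id": 2, "customer": "b", "region": "US", "amount": 12.5},
    {"order_id": 3, "customer": "a", "region": "EU", "amount": 7.5},
]


def sum_amount_row_store(rows):
    """Sum one field, counting every value touched when whole rows are read."""
    total, values_read = 0.0, 0
    for row in rows:
        values_read += len(row)  # the entire row is fetched
        total += row["amount"]
    return total, values_read


def to_columns(rows):
    """Pivot a list of row dicts into a dict of column lists."""
    return {key: [row[key] for row in rows] for key in rows[0]}


def sum_amount_column_store(columns):
    """Sum one column, reading only that column's values."""
    amounts = columns["amount"]
    return sum(amounts), len(amounts)


row_total, row_reads = sum_amount_row_store(rows)
col_total, col_reads = sum_amount_column_store(to_columns(rows))
print(row_total, row_reads, col_total, col_reads)
```

Both layouts return the same total, but the columnar version reads a quarter as many values here, and the gap widens as tables gain columns.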

Enhanced Scalability

Redshift provides the ability to scale easily as your data volume grows. With MySQL, scaling often involves manual interventions, which can become time-consuming and resource-intensive. Redshift, however, has a distributed architecture that lets you add nodes as your data grows, helping query performance hold up as data volumes expand.

Cost-Effective Storage and Processing

Redshift is optimized for cost-effective storage and processing of large datasets. The use of columnar storage, which allows for efficient storage and retrieval of data, enables you to store vast amounts of data at a fraction of the cost compared to traditional relational databases like MySQL. Additionally, Redshift’s pay-as-you-go pricing model means that businesses can pay only for the resources they use, leading to cost savings, especially when dealing with massive datasets.

Advanced Analytics Capabilities

Redshift integrates seamlessly with a wide range of analytics tools, including machine learning frameworks. By migrating your data from MySQL to Redshift, you unlock access to these advanced analytics capabilities, enabling your business to perform sophisticated analysis and gain deeper insights into your data. Redshift's built-in integrations with AWS services like SageMaker for machine learning, QuickSight for business intelligence, and AWS Glue for data transformation provide a robust ecosystem for developing data-driven strategies.

Seamless Integration with AWS Services

Another significant advantage of migrating your data to Redshift is its seamless integration with other AWS services. Redshift sits at the heart of the AWS ecosystem, making it easier to connect with various tools like S3 for data storage, Lambda for serverless computing, and DynamoDB for NoSQL workloads. This tight integration allows for a comprehensive and unified data infrastructure that streamlines workflows and enables businesses to leverage AWS's full potential for data processing, storage, and analytics.

How Ask On Data Helps with MySQL to Redshift Migration

Migrating your data from MySQL to Redshift is a complex process that requires careful planning and execution. This is where Ask On Data, an advanced data wrangling tool, can help. Ask On Data provides a user-friendly, AI-powered platform that simplifies the process of cleaning, transforming, and migrating data from MySQL to Redshift. With its intuitive interface and natural language processing (NLP) capabilities, Ask On Data allows businesses to quickly prepare and load their data into Redshift with minimal technical expertise.

Moreover, Ask On Data offers seamless integration with Redshift, ensuring that your data migration is smooth and efficient. Whether you are looking to migrate large datasets or simply perform routine data cleaning and transformation before migration, Ask On Data’s robust features allow for optimized data workflows, making your MySQL to Redshift migration faster, easier, and more accurate.

Conclusion

Migrating your data from MySQL to Redshift can significantly improve query performance, scalability, and cost-effectiveness, while also enabling advanced analytics and seamless integration with other AWS services. By leveraging the power of Ask On Data during your migration process, you can ensure a smoother, more efficient transition with minimal risk and maximum performance. Whether you are handling massive datasets or complex analytical workloads, Redshift, paired with Ask On Data, provides a comprehensive solution to meet your business’s evolving data needs.


Unravel: Migrating and Scaling Data Pipelines with AI on Amazon EMR, Redshift & Athena

Learn more about AWS Startups at – Unravel CEO Kunal Agarwal and CTO Shivnath Babu talk about … source


Expert Cloud Migration Services and Optimization | ITTStar

Experience advanced cloud migration and optimization with ITTStar. Our experts provide data migration and smart solutions to enhance your cloud infrastructure. ITTStar Consulting helps businesses optimize cloud spend with effective cost-management strategies and tools.


Your Journey Through the AWS Universe: From Amateur to Expert

In the ever-evolving digital landscape, cloud computing has emerged as a transformative force, reshaping the way businesses and individuals harness technology. At the forefront of this revolution stands Amazon Web Services (AWS), a comprehensive cloud platform offered by Amazon. AWS is a dynamic ecosystem that provides an extensive range of services, designed to meet the diverse needs of today's fast-paced world.

This guide is your key to unlocking the boundless potential of AWS. We'll embark on a journey through the AWS universe, exploring its multifaceted applications and gaining insights into why it has become an indispensable tool for organizations worldwide. Whether you're a seasoned IT professional or a newcomer to cloud computing, this comprehensive resource will illuminate the path to mastering AWS and leveraging its capabilities for innovation and growth. Join us as we demystify AWS and discover how it is reshaping the way we work, innovate, and succeed in the digital age.

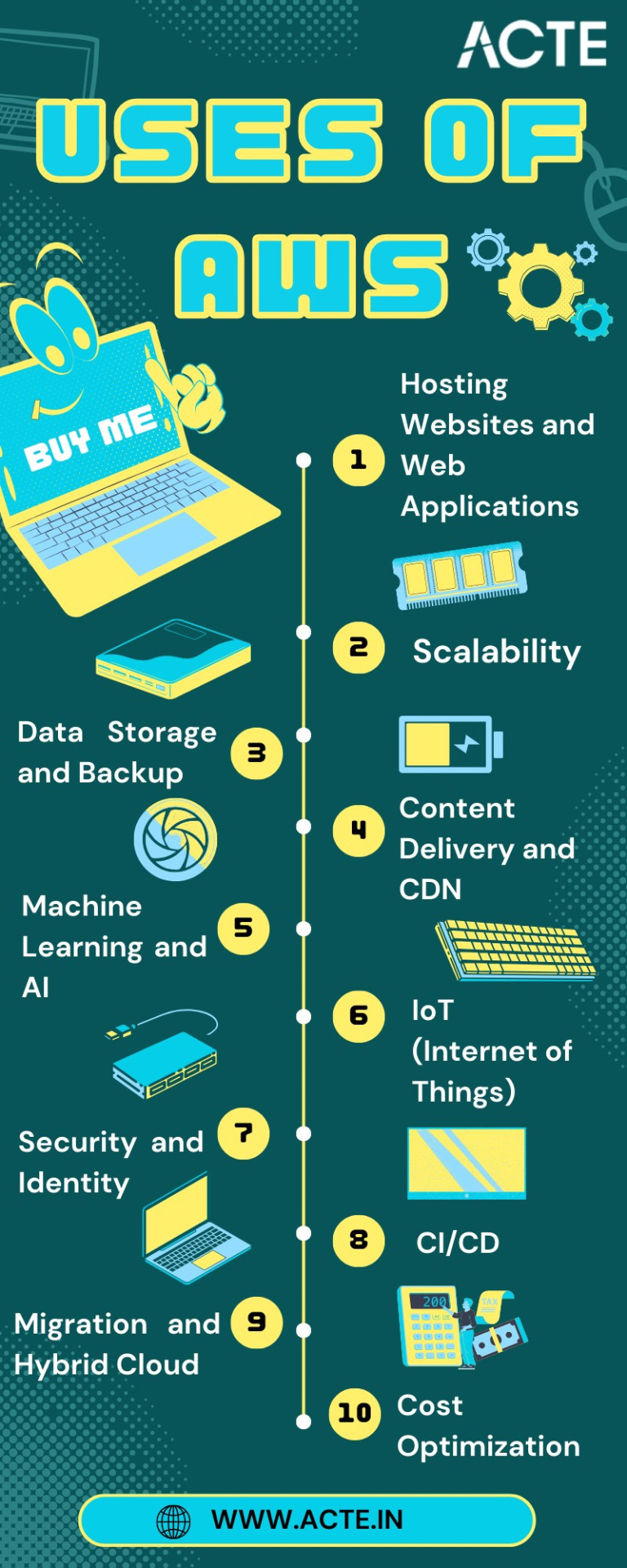

Navigating the AWS Universe:

Hosting Websites and Web Applications: AWS provides a secure and scalable place for hosting websites and web applications. Services like Amazon EC2 and Amazon S3 empower businesses to deploy and manage their online presence with unwavering reliability and high performance.

Scalability: At the core of AWS lies its remarkable scalability. Organizations can seamlessly adjust their infrastructure according to the ebb and flow of workloads, ensuring optimal resource utilization in today's ever-changing business environment.

Data Storage and Backup: AWS offers a suite of robust data storage solutions, including the highly acclaimed Amazon S3 and Amazon EBS. These services cater to the diverse spectrum of data types, guaranteeing data security and perpetual availability.

Databases: AWS presents a panoply of database services such as Amazon RDS, DynamoDB, and Redshift, each tailored to meet specific data management requirements. Whether it's a relational database, a NoSQL database, or data warehousing, AWS offers a solution.

Content Delivery and CDN: Amazon CloudFront, AWS's content delivery network (CDN) service, ushers in global content distribution with minimal latency and blazing data transfer speeds. This ensures an impeccable user experience, irrespective of geographical location.

Machine Learning and AI: AWS boasts a rich repertoire of machine learning and AI services. Amazon SageMaker simplifies the development and deployment of machine learning models, while pre-built AI services cater to natural language processing, image analysis, and more.

Analytics: In the heart of AWS's offerings lies a robust analytics and business intelligence framework. Services like Amazon EMR enable the processing of vast datasets using popular frameworks like Hadoop and Spark, paving the way for data-driven decision-making.

IoT (Internet of Things): AWS IoT services provide the infrastructure for the seamless management and data processing of IoT devices, unlocking possibilities across industries.

Security and Identity: With an unwavering commitment to data security, AWS offers robust security features and identity management through AWS Identity and Access Management (IAM). Users wield precise control over access rights, ensuring data integrity.

DevOps and CI/CD: AWS simplifies DevOps practices with services like AWS CodePipeline and AWS CodeDeploy, automating software deployment pipelines and enhancing collaboration among development and operations teams.

Content Creation and Streaming: AWS Elemental Media Services facilitate the creation, packaging, and efficient global delivery of video content, empowering content creators to reach a global audience seamlessly.

Migration and Hybrid Cloud: For organizations seeking to migrate to the cloud or establish hybrid cloud environments, AWS provides a suite of tools and services to streamline the process, ensuring a smooth transition.

Cost Optimization: AWS's commitment to cost management and optimization is evident through tools like AWS Cost Explorer and AWS Trusted Advisor, which empower users to monitor and control their cloud spending effectively.

In this comprehensive journey through the expansive landscape of Amazon Web Services (AWS), we've embarked on a quest to unlock the power and potential of cloud computing. AWS, standing as a colossus in the realm of cloud platforms, has emerged as a transformative force that transcends traditional boundaries.

As we bring this odyssey to a close, one thing is abundantly clear: AWS is not merely a collection of services and technologies; it's a catalyst for innovation, a cornerstone of scalability, and a conduit for efficiency. It has revolutionized the way businesses operate, empowering them to scale dynamically, innovate relentlessly, and navigate the complexities of the digital era.

In a world where data reigns supreme and agility is a competitive advantage, AWS has become the bedrock upon which countless industries build their success stories. Its versatility, reliability, and ever-expanding suite of services continue to shape the future of technology and business.

Yet, AWS is not a solitary journey; it's a collaborative endeavor. Institutions like ACTE Technologies play an instrumental role in helping individuals master AWS. Through comprehensive training and education, learners gain not only knowledge but also the practical skills to navigate the AWS universe with confidence.

As we contemplate the future, one thing is certain: AWS is not just a destination; it's an ongoing journey. It's a journey toward greater innovation, deeper insights, and boundless possibilities. AWS has not only transformed the way we work; it's redefining the very essence of what's possible in the digital age. So, whether you're a seasoned cloud expert or a newcomer to the cloud, remember that AWS is not just a tool; it's a gateway to a future where technology knows no bounds, and success knows no limits.


QuickSight vs Tableau: Which One Works Better for Cloud-Based Analytics?

In today’s data-driven business world, choosing the right tool for cloud-based analytics can define the efficiency and accuracy of decision-making processes. Among the top contenders in this space are Amazon QuickSight and Tableau, two leading platforms in data visualization applications. While both offer powerful tools for interpreting and presenting data, they vary significantly in features, pricing, integration capabilities, and user experience.

This article will delve deep into a comparative analysis of QuickSight vs Tableau, evaluating their capabilities in cloud environments, their support for Augmented systems, alignment with current data analysis trends, and suitability for various business needs.

Understanding Cloud-Based Analytics

Cloud-based analytics refers to using remote servers and services to analyze, process, and visualize data. It allows organizations to leverage scalability, accessibility, and reduced infrastructure costs. As businesses migrate to the cloud, choosing tools that align with these goals becomes critical.

Both QuickSight and Tableau offer cloud-based deployments, but they approach it from different perspectives—QuickSight being cloud-native and Tableau adapting cloud support over time.

Amazon QuickSight Overview

Amazon QuickSight is a fully managed data visualization application developed by Amazon Web Services (AWS). It is designed to scale automatically and is embedded with machine learning (ML) capabilities, making it suitable for interactive dashboards and report generation.

Key Features of QuickSight:

Serverless architecture with pay-per-session pricing.

Native integration with AWS services like S3, RDS, Redshift.

Built-in ML insights for anomaly detection and forecasting.

SPICE (Super-fast, Parallel, In-memory Calculation Engine) for faster data processing.

Support for Augmented systems through ML-based features.

Tableau Overview

Tableau is one of the most well-known data visualization applications, offering powerful drag-and-drop analytics and dashboard creation tools. Acquired by Salesforce, Tableau has expanded its cloud capabilities via Tableau Cloud (formerly Tableau Online).

Key Features of Tableau:

Rich and interactive visualizations.

Connects to almost any data source.

Advanced analytics capabilities with R and Python integration.

Strong user community and resources.

Adoption of Augmented systems like Tableau Pulse and Einstein AI (through Salesforce).

Comparative Analysis: QuickSight vs Tableau

1. User Interface and Usability

QuickSight is lightweight and streamlined, designed for business users who need quick insights without technical expertise. However, it may seem less flexible compared to Tableau's highly interactive and customizable dashboards.

Tableau excels in usability for data analysts and power users. Its drag-and-drop interface is intuitive, and it allows for complex manipulations and custom visual storytelling.

Winner: Tableau (for advanced users), QuickSight (for business users and simplicity)

2. Integration and Ecosystem

QuickSight integrates seamlessly with AWS services, which is a big plus for organizations already on AWS. It supports Redshift, Athena, S3, and more, making it an ideal choice for AWS-heavy infrastructures.

Tableau, on the other hand, boasts extensive connectors to a vast range of data sources, from cloud platforms like Google Cloud and Azure to on-premise databases and flat files.

Winner: Tie – depends on your existing cloud infrastructure.

3. Performance and Scalability

QuickSight's SPICE engine allows users to perform analytics at lightning speed without impacting source systems. Since it’s serverless, scalability is handled automatically by AWS.

Tableau provides robust performance but requires configuration and optimization, especially in self-hosted environments. Tableau Cloud (formerly Tableau Online) offers better scalability but may incur higher costs.

Winner: QuickSight

4. Cost Structure

QuickSight offers a pay-per-session pricing model, which can be highly economical for organizations with intermittent users. For example, you only pay when a user views a dashboard.

Tableau follows a user-based subscription pricing model, which can become expensive for large teams or casual users.

Winner: QuickSight
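The trade-off between the two pricing models comes down to simple arithmetic, sketched below. All prices and usage figures are hypothetical placeholders chosen for illustration, not actual QuickSight or Tableau rates, so check the vendors' current price lists before drawing conclusions.

```python
def per_session_cost_cents(sessions_per_user, cents_per_session):
    """Total monthly cost when every viewing session is billed."""
    return sum(sessions_per_user) * cents_per_session


def per_user_cost_cents(user_count, cents_per_user):
    """Total monthly cost when every named user pays a flat subscription."""
    return user_count * cents_per_user


# Hypothetical prices, in cents to avoid floating-point rounding.
CENTS_PER_SESSION = 30
CENTS_PER_USER = 1500

# Hypothetical usage: monthly sessions for five users, mostly occasional viewers.
monthly_sessions = [2, 0, 5, 1, 40]

session_total = per_session_cost_cents(monthly_sessions, CENTS_PER_SESSION)
subscription_total = per_user_cost_cents(len(monthly_sessions), CENTS_PER_USER)
break_even_sessions = CENTS_PER_USER // CENTS_PER_SESSION

print(session_total, subscription_total, break_even_sessions)
```

Under these assumed prices, a user would need about 50 sessions a month before a flat subscription becomes the cheaper option, which is why per-session billing favors teams with many occasional viewers.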

5. Support for Augmented Systems

QuickSight integrates ML models and offers natural language querying through Q (QuickSight Q), allowing users to ask business questions in natural language and receive answers instantly. This is a great example of how Augmented systems are becoming more mainstream.

Tableau, through its parent company Salesforce, is integrating Augmented systems like Einstein Discovery. It provides predictions and AI-powered insights directly within dashboards.

Winner: Tableau (more mature and integrated AI/ML features through Salesforce)

6. Alignment with Data Analysis Trends

Both platforms are aligned with modern data analysis trends, including real-time data streaming, AI/ML integration, and predictive analytics.

QuickSight is riding the wave of serverless architecture and real-time analytics.

Tableau is advancing toward collaborative analytics and AI-driven insights, especially after Salesforce’s acquisition.

Tableau Pulse is a recent feature that reflects current data analysis trends, helping users get real-time alerts and updates without logging into the dashboard.

Winner: Tableau (more innovations aligned with emerging data analysis trends)

7. Collaboration and Sharing

In QuickSight, collaboration is limited to dashboard sharing and email reports. While effective, it lacks some of the deeper collaboration capabilities of Tableau.

Tableau enables shared workbooks, annotations, embedded analytics, and enterprise-level collaboration across teams, especially when integrated with Salesforce.

Winner: Tableau

8. Data Security and Compliance

Both platforms offer enterprise-grade security features:

QuickSight benefits from AWS's robust security and compliance frameworks (HIPAA, GDPR, etc.).

Tableau also supports a wide range of compliance requirements, with added security controls available through Tableau Server.

Winner: Tie

9. Customization and Extensibility

Tableau offers superior extensibility with support for Python, R, JavaScript API, and more. Developers can build custom dashboards and integrations seamlessly.

QuickSight, while customizable, offers fewer extensibility options. It focuses more on ease-of-use than flexibility.

Winner: Tableau

10. Community and Support

Tableau has one of the largest user communities, with forums, certifications, user groups, and an active marketplace.

QuickSight is newer and has a smaller but growing community, primarily centered around AWS forums and documentation.

Winner: Tableau

Use Case Comparison

| Use Case | Best Tool |
| --- | --- |
| AWS-Native Workloads | QuickSight |
| Complex Dashboards & Visualizations | Tableau |
| Occasional Dashboard Viewers | QuickSight |
| Advanced Analytics and Modeling | Tableau |
| Tight Budget and Cost Control | QuickSight |
| Collaborative Enterprise Analytics | Tableau |

The Verdict: Which Works Better for Cloud-Based Analytics?

Choosing between QuickSight vs Tableau depends heavily on your specific business needs, existing cloud ecosystem, and user types.

Choose QuickSight if you’re already using AWS extensively, have a limited budget, and need fast, scalable, and easy-to-use data visualization applications.

Choose Tableau if you need rich customization, are heavily invested in Salesforce, or have data analysts and power users requiring advanced functionality and support for Augmented systems.

In terms of data analysis trends, Tableau is more in tune with cutting-edge features like collaborative analytics, embedded AI insights, and proactive alerts. However, QuickSight is rapidly closing this gap, especially with features like QuickSight Q and natural language queries.

Conclusion

Both QuickSight and Tableau are excellent platforms in their own right, each with its strengths and limitations. Organizations must consider their long-term data strategy, scalability requirements, team expertise, and cost constraints before choosing the best fit.

As data analysis trends evolve, tools will continue to adapt. Whether it’s through more intuitive data visualization applications, AI-driven Augmented systems, or better collaboration features, the future of analytics is undeniably in the cloud. By choosing the right tool today, businesses can set themselves up for more informed, agile, and strategic decision-making tomorrow.


Azure Data Warehouse Migration for AI-Based Healthcare Company

IFI Techsolutions carried out an Azure SQL Database migration for a healthcare company in the USA, enhancing its scalability, flexibility, and operational efficiency.


Want to Optimize Your Data Strategy? Discover the Power of Cloud Data Warehouse Consulting Services

What is Cloud Data Warehouse Consulting?

Cloud data warehouse consulting helps businesses design, build, and manage a modern data architecture in the cloud. At Dataplatr, we specialize in cloud data warehouse consulting, offering end-to-end solutions to ensure your data is well-organized, secure, and ready for analytics.

How Can Data Warehouse Consulting Transform Your Business?

By using data warehouse consulting, you can accelerate your data migration, optimize storage and performance, and unlock advanced analytics. Our team of experts ensures seamless integration and ongoing management, so your business can focus on growth and innovation.

Benefits of Cloud Data Warehouse Consulting Services

At Dataplatr, our cloud data warehouse consulting services focus on delivering tailored solutions that fit your unique business requirements. Here’s how we add value:

Expert Assessment & Planning: We evaluate your existing data infrastructure and help you build a roadmap for cloud migration or optimization.

Seamless Migration: Our team ensures minimal disruption while moving your data to modern cloud data warehouses like Snowflake, BigQuery, or Redshift.

Performance Optimization: We fine-tune your cloud data warehouse environment to ensure fast query performance and cost efficiency.

Security & Compliance: We implement best practices to safeguard your data and meet industry compliance standards.

Ongoing Support: Beyond deployment, we provide continuous monitoring and enhancements to keep your data warehouse running smoothly.

Why Choose Dataplatr for Cloud Data Warehouse Consulting?

Dataplatr combines deep industry knowledge with technical expertise to deliver cloud data warehouse services that empower your analytics and business intelligence initiatives. Our partnership-driven approach ensures that we align solutions with your strategic goals, maximizing the value of your data assets.

Take the First Step to a Smarter Data Strategy Today

Unlock the true power of your business data with Dataplatr’s expert cloud data warehouse consulting services. Whether you’re starting fresh or looking to optimize your existing setup, our team is here to guide you every step of the way.

Contact us now to schedule a consultation and discover customized cloud data warehouse solutions designed to drive efficiency, scalability, and growth for your organization.


Data Warehouse Migration to Microsoft Azure | IFI Techsolutions

IFI Techsolutions used PolyBase to move AWS Redshift data into Azure SQL Data Warehouse by running T-SQL commands against internal and external tables.

#DataMigration#CloudMigration#AzureSQL#AzureDataWarehouse#CloudComputing#DataAnalytics#AzureExpertMSP#AzureMigration#IFITechsolutions


How AWS Transforms Raw Data into Actionable Insights

Introduction

Businesses generate vast amounts of data daily, from customer interactions to product performance. However, without transforming this raw data into actionable insights, it’s difficult to make informed decisions. AWS Data Analytics offers a powerful suite of tools to simplify data collection, organization, and analysis. By leveraging AWS, companies can convert fragmented data into meaningful insights, driving smarter decisions and fostering business growth.

1. Data Collection and Integration

AWS makes it simple to collect data from various sources — whether from internal systems, cloud applications, or IoT devices. Services like AWS Glue and Amazon Kinesis help automate data collection, ensuring seamless integration of multiple data streams into a unified pipeline.

Data Sources

AWS can pull data from internal systems (ERP, CRM, POS), websites, apps, IoT devices, and more.

Key Services

AWS Glue: Automates data discovery, cataloging, and preparation.

Amazon Kinesis: Captures real-time data streams for immediate analysis.

AWS Database Migration Service (DMS): Facilitates seamless migration of databases to the cloud.

By automating these processes, AWS ensures businesses have a unified, consistent view of their data.

2. Data Storage at Scale

AWS offers flexible, secure storage solutions to handle both structured and unstructured data. With services like Amazon S3, Redshift, and RDS, businesses can scale storage without worrying about hardware costs.

Storage Options

Amazon S3: Ideal for storing large volumes of unstructured data.

Amazon Redshift: A data warehouse solution for quick analytics on structured data.

Amazon RDS & Aurora: Managed relational databases for handling transactional data.

AWS’s tiered storage options ensure businesses only pay for what they use, whether they need real-time analytics or long-term archiving.

3. Data Cleaning and Preparation

Raw data is often inconsistent and incomplete. AWS Data Analytics tools like AWS Glue DataBrew and AWS Lambda allow users to clean and format data without extensive coding, ensuring that your analytics processes work with high-quality data.

Data Wrangling Tools

AWS Glue DataBrew: A visual tool for easy data cleaning and transformation.

AWS Lambda: Run custom cleaning scripts in real-time.

By leveraging these tools, businesses can ensure that only accurate, trustworthy data is used for analysis.
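As a rough illustration of the kind of custom cleaning script that could run in AWS Lambda, the sketch below trims whitespace, drops records that are missing a required field, and removes duplicates. The event layout (a `records` list) and the field names `order_id` and `email` are assumptions made for this example; they are not part of any AWS contract.

```python
def clean_records(records, required=("order_id",)):
    """Normalize string fields, drop incomplete rows, and de-duplicate."""
    seen = set()
    cleaned = []
    for record in records:
        # Trim stray whitespace on every string value.
        row = {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}
        # Skip rows where a required field is missing or empty.
        if any(row.get(field) in (None, "") for field in required):
            continue
        # Treat the required fields as the identity of a row; keep the first copy.
        key = tuple(row[field] for field in required)
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(row)
    return cleaned


def lambda_handler(event, context):
    """Entry point in the standard Lambda handler shape."""
    # Hypothetical event layout: {"records": [{...}, {...}]}
    cleaned = clean_records(event.get("records", []))
    return {"count": len(cleaned), "records": cleaned}
```

Keeping the cleaning logic in a plain function, separate from the handler, means it can be tested locally without deploying anything.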

4. Data Exploration and Analysis

Before diving into advanced modeling, it’s crucial to explore and understand the data. Amazon Athena and Amazon SageMaker Data Wrangler make it easy to run SQL queries, visualize datasets, and uncover trends and patterns in data.

Exploratory Tools

Amazon Athena: Query data directly from S3 using SQL.

Amazon Redshift Spectrum: Query S3 data alongside Redshift’s warehouse.

Amazon SageMaker Data Wrangler: Explore and visualize data features before modeling.

These tools help teams identify key trends and opportunities within their data, enabling more focused and efficient analysis.
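To make the Athena idea concrete, here is a minimal sketch that assembles the arguments for Athena's StartQueryExecution call. The table `sales`, the database `analytics_demo`, and the results bucket are hypothetical names chosen for illustration, and the actual call is left as a comment because it needs boto3 and AWS credentials.

```python
def build_athena_request(sql, database, output_location):
    """Assemble keyword arguments for Athena's StartQueryExecution call."""
    if not output_location.startswith("s3://"):
        raise ValueError("Athena writes query results to an S3 location")
    return {
        "QueryString": sql,
        "QueryExecutionContext": {"Database": database},
        "ResultConfiguration": {"OutputLocation": output_location},
    }


# Hypothetical table, database, and bucket names, for illustration only.
request = build_athena_request(
    sql="SELECT region, SUM(amount) AS total FROM sales GROUP BY region",
    database="analytics_demo",
    output_location="s3://example-athena-results/queries/",
)

# With boto3 installed and credentials configured, the request could be sent as:
#   import boto3
#   boto3.client("athena").start_query_execution(**request)
```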

5. Advanced Analytics & Machine Learning

AWS Data Analytics moves beyond traditional reporting by offering powerful AI/ML capabilities through services like Amazon SageMaker and Amazon Forecast. These tools help businesses predict future outcomes, uncover anomalies, and gain actionable intelligence.

Key AI/ML Tools

Amazon SageMaker: An end-to-end platform for building and deploying machine learning models.

Amazon Forecast: Predicts business outcomes based on historical data.

Amazon Comprehend: Uses NLP to analyze and extract meaning from text data.

Amazon Lookout for Metrics: Detects anomalies in your data automatically.

These AI-driven services provide predictive and prescriptive insights, enabling proactive decision-making.

6. Visualization and Reporting

AWS’s Amazon QuickSight helps transform complex datasets into easily digestible dashboards and reports. With interactive charts and graphs, QuickSight allows businesses to visualize their data and make real-time decisions based on up-to-date information.

Powerful Visualization Tools

Amazon QuickSight: Creates customizable dashboards with interactive charts.

Integration with BI Tools: Easily integrates with third-party tools like Tableau and Power BI.

With these tools, stakeholders at all levels can easily interpret and act on data insights.

7. Data Security and Governance

AWS places a strong emphasis on data security with services like AWS Identity and Access Management (IAM) and AWS Key Management Service (KMS). These tools provide robust encryption, access controls, and compliance features to ensure sensitive data remains protected while still being accessible for analysis.

Security Features

AWS IAM: Controls access to data based on user roles.

AWS KMS: Creates and manages the encryption keys that protect data at rest; data in transit is protected separately with TLS.

Audit Tools: Services like AWS CloudTrail and AWS Config help track data usage and ensure compliance.

AWS also supports industry-specific data governance standards, making it suitable for regulated industries like finance and healthcare.

8. Real-World Example: Retail Company

Retailers are using AWS to combine data from physical stores, eCommerce platforms, and CRMs to optimize operations. By analyzing sales patterns, forecasting demand, and visualizing performance through AWS Data Analytics, they can make data-driven decisions that improve inventory management, marketing, and customer service.

For example, a retail chain might:

Use AWS Glue to integrate data from stores and eCommerce platforms.

Store data in S3 and query it using Athena.

Analyze sales data in Redshift to optimize product stocking.

Use SageMaker to forecast seasonal demand.

Visualize performance with QuickSight dashboards for daily decision-making.

This example illustrates how AWS Data Analytics turns raw data into actionable insights for improved business performance.

9. Why Choose AWS for Data Transformation?

AWS Data Analytics stands out due to its scalability, flexibility, and comprehensive service offering. Here’s what makes AWS the ideal choice:

Scalability: Grows with your business needs, from startups to large enterprises.

Cost-Efficiency: Pay only for the services you use, making it accessible for businesses of all sizes.

Automation: Reduces manual errors by automating data workflows.

Real-Time Insights: Provides near-instant data processing for quick decision-making.

Security: Offers enterprise-grade protection for sensitive data.

Global Reach: AWS’s infrastructure spans across regions, ensuring seamless access to data.

10. Getting Started with AWS Data Analytics

Partnering with a company like OneData can help streamline the process of implementing AWS-powered data analytics solutions. With their expertise, businesses can quickly set up real-time dashboards, implement machine learning models, and get full support during the data transformation journey.

Conclusion

Raw data is everywhere, but actionable insights are rare. AWS bridges that gap by providing businesses with the tools to ingest, clean, analyze, and act on data at scale.

From real-time dashboards and forecasting to machine learning and anomaly detection, AWS enables you to see the full story your data is telling. With partners like OneData, even complex data initiatives can be launched with ease.

Ready to transform your data into business intelligence? Start your journey with AWS today.

0 notes

Text

Navigating the Cloud with Confidence: Secure AWS Cloud, DevOps, AI & the Incloudo Advantage

In the age of digital transformation, cloud migration is no longer a choice but a necessity. The essential question now is how organizations can grow their cloud operations safely while making them smarter with AI.

The answer is a trio: Secure AWS Cloud, AWS Cloud DevOps, and AWS Cloud AI Services. And at the heart of this transformation? Incloudo, a premier cloud consulting organization that delivers superior AWS cloud solutions to businesses.

Secure AWS Cloud: Safety at Scale

Security is the foundation of cloud infrastructure, not just a single feature. Secure AWS Cloud provides top-tier protection for your infrastructure, applications, and data.

Secure AWS Cloud offers these main features:

Identity and Access Management (IAM) enforces access controls based on the principle of least privilege.

AES-256 encryption is available across services including S3, RDS, and Redshift.

AWS supports HIPAA, GDPR, PCI-DSS, and other security standards, but compliance is a shared responsibility between AWS and the organization using it.

AWS CloudTrail and Amazon GuardDuty work together: CloudTrail records user activity, while GuardDuty identifies threats.

For the most secure AWS Cloud deployment, consistently use multi-factor authentication (MFA) along with automated compliance checks through AWS Config.
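The least-privilege and MFA points above can be sketched as an IAM policy document. The example below builds a read-only policy for a single S3 prefix and only allows access when the caller signed in with MFA. The bucket name and prefix are hypothetical, and a real policy would need to be reviewed against your own access requirements.

```python
import json


def read_only_bucket_policy(bucket, prefix=""):
    """Build an IAM policy allowing MFA-protected, read-only access to one S3 prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Least privilege: only the single action that is needed.
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
                # Require that the session was authenticated with MFA.
                "Condition": {"Bool": {"aws:MultiFactorAuthPresent": "true"}},
            }
        ],
    }


# Hypothetical bucket and prefix, for illustration only.
policy = read_only_bucket_policy("example-reports-bucket", "finance/")
print(json.dumps(policy, indent=2))
```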

AWS Cloud DevOps: Build Faster, Deploy Even Faster

DevOps is a way of working that embraces speed, automation, and continuous improvement. AWS Cloud DevOps provides powerful tools and services for putting those practices to work in the cloud.

AWS DevOps Powers:

Benefits of AWS Cloud DevOps:

Speed & Agility: Automate deployments with zero downtime.

Scalability: Manage hundreds of instances as easily as one.

Security & Compliance: Use DevOps practices without sacrificing the Secure AWS Cloud principles.

Pro Tip: Integrate your CI/CD pipelines with AWS IAM roles for secure deployment and faster feedback cycles.

AWS Cloud AI Services: Intelligence at Your Fingertips

Why just run your applications when you can make them smart? With AWS Cloud AI Services, you can embed machine learning and artificial intelligence into your cloud-native apps — no PhD required.

Top AWS Cloud AI Services:

Amazon Rekognition: Image & video analysis

Amazon Comprehend: NLP for text analytics

Amazon Forecast: Predictive analytics for demand forecasting

Amazon SageMaker: Build, train, and deploy ML models at scale

Example: An e-commerce company used Amazon Personalize (an AWS AI service) to boost customer conversions by 20% through tailored recommendations.

How AI Enhances the Cloud:

Smarter Decisions: Real-time data-driven insights.

Automation: Reduce manual workload with AI-powered automation.

Customer Experience: Improve personalization and engagement.

Tip: Combine AWS Cloud DevOps with AI model deployment pipelines for a full machine learning lifecycle on the cloud.

Meet Incloudo: Your Trusted AWS Cloud Partner

Now, let’s talk about the team that brings it all together.

Based in India and serving clients across the globe, Incloudo is a cloud consulting firm that helps businesses succeed with:

Secure AWS Cloud solutions for industries like finance, healthcare, and education.

AWS Cloud DevOps services for startups and enterprises looking to streamline operations.

AWS Cloud AI Services that enable businesses to innovate faster with intelligence.

Why Choose Incloudo?

✔ Rapid Response: We guarantee replies within 24 hours, every time.

✔ Customized Solutions: No one-size-fits-all approach; we tailor strategies for your specific needs.

✔ Certified AWS Experts: Our team follows industry best practices with the latest tools.

✔ End-to-End Services: From cloud migration to CI/CD, monitoring, and AI deployment, we do it all.

Cloud Confidence Starts with Incloudo

The power of Secure AWS Cloud, AWS Cloud DevOps, and AWS Cloud AI Services can transform your business — but only if implemented right.

That’s where Incloudo steps in. With our passion, precision, and cloud expertise, we don’t just help you move to the cloud — we help you thrive in it.

Ready to future-proof your business?

Let Incloudo take you there. Because in the cloud, excellence is no longer optional — it’s expected.

0 notes

Text

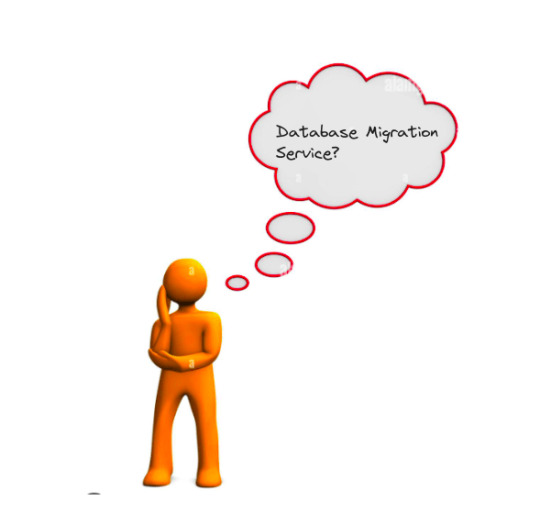

How to migrate databases using AWS DMS

Introduction

What is AWS Database Migration Service (DMS)?

AWS Database Migration Service (AWS DMS) is a cloud service that makes it possible to migrate relational databases, data warehouses, NoSQL databases, and other types of data stores. You can use AWS DMS to migrate your data into the AWS Cloud or between combinations of cloud and on-premises setups. Supported AWS databases include Amazon Redshift, DynamoDB, ElastiCache, Aurora, and Amazon RDS.

Now let’s check why DMS is important

Why do we need Database Migration Service?

Reduced Downtime: Database migration services minimize downtime during the migration process. By continuously replicating changes from the source database to the target database, they ensure that data remains synchronized, allowing for a seamless transition with minimal disruption to your applications and users.

Security: Migration services typically provide security features such as data encryption, secure network connections, and compliance with industry regulations.

Cost Effectiveness: AWS has offered free use of DMS for a limited period when the migration target is DocumentDB, Redshift, Aurora, or DynamoDB. For other targets, you pay based on the compute capacity of the replication instance and the volume of log storage.

Scalability and Performance: Migration services are designed to handle large-scale migrations efficiently. They employ techniques such as parallel data transfer, data compression, and optimization algorithms to optimize performance and minimize migration time, allowing for faster and more efficient migrations.

Schema Conversion: Together with its schema conversion tooling, such as the AWS Schema Conversion Tool (SCT), AWS DMS can automatically convert much of the source database schema to match the target database during migration. This reduces the need for manual schema conversion, saving time and effort.

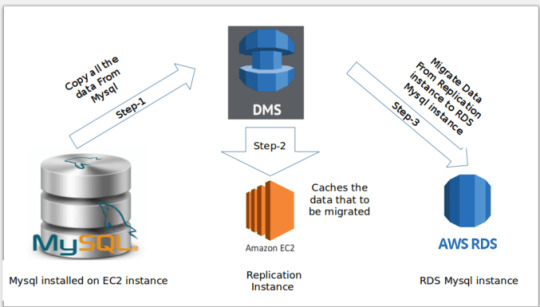

How Does AWS Database Migration Service Work?

Pre-migration steps

These steps are taken before the database is actually migrated. They include basic planning, structuring, understanding the requirements, and finalizing the move.

Migration Steps

These are the steps taken while carrying out the database migration. They should be completed with clear accountability, paying close attention to data governance roles, migration risks, and similar concerns.
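One concrete part of the migration step is telling DMS which tables to move. A replication task takes a table-mappings JSON document made of selection rules, and the sketch below builds one in Python. The schema name `sales` and the `audit_%` pattern are hypothetical examples; `%` acts as a wildcard in the object locator.

```python
import json


def selection_rule(rule_id, schema, table="%", action="include"):
    """Build one DMS selection rule; '%' is the wildcard in object locators."""
    return {
        "rule-type": "selection",
        "rule-id": str(rule_id),
        "rule-name": f"{action}-{schema}-{rule_id}",
        "object-locator": {"schema-name": schema, "table-name": table},
        "rule-action": action,
    }


def table_mappings(rules):
    """Serialize rules into the JSON string a replication task expects."""
    return json.dumps({"rules": rules}, indent=2)


# Hypothetical schema and table pattern, for illustration only.
mappings = table_mappings([
    selection_rule(1, "sales"),                                     # every table in 'sales'
    selection_rule(2, "sales", table="audit_%", action="exclude"),  # except audit tables
])
print(mappings)
```

The resulting string would be supplied as the table mappings of a replication task, alongside a migration type such as full load, ongoing change capture, or both.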

Post-migration steps

Once the database migration is complete, some issues may have gone unnoticed during the process. The post-migration steps are there to catch them and confirm that the migration finished without errors.

Now let’s move forward to use cases of DMS!

You can find more information about AWS Database Migration Service.

0 notes

Text

Seamless MySQL to Redshift Migration with Ask On Data

MySQL to Redshift migration is a critical component for businesses looking to scale their data infrastructure. As organizations grow, they often need to transition from traditional relational databases like MySQL to more powerful cloud data warehouses like Amazon Redshift to handle larger datasets, improve performance, and enable real-time analytics. The migration process can be complex, but with the right tools, it becomes much more manageable. Ask On Data is a tool designed to streamline the data wrangling and migration process, helping businesses move from MySQL to Redshift effortlessly.

Why Migrate from MySQL to Redshift?

MySQL, a widely used relational database management system (RDBMS), is excellent for managing structured data, especially for small to medium-sized applications. However, as the volume of data increases, MySQL can struggle with performance and scalability. This is where Amazon Redshift, a fully managed cloud-based data warehouse, comes into play. Redshift offers powerful query performance, massive scalability, and robust integration with other AWS services.

Redshift is built specifically for analytics, and it supports parallel processing, which enables faster query execution on large datasets. The transition from MySQL to Redshift allows businesses to run complex queries, gain insights from large volumes of data, and perform advanced analytics without compromising performance.

The Migration Process: Challenges and Solutions

Migrating from MySQL to Redshift is not a one-click operation. It requires careful planning, data transformation, and validation. Some of the primary challenges include:

Data Compatibility: MySQL and Redshift have different data models and structures. MySQL is an OLTP (Online Transaction Processing) system optimized for transactional queries, while Redshift is an OLAP (Online Analytical Processing) system optimized for read-heavy, analytical queries. The differences in how data is stored, indexed, and accessed must be addressed during migration.

Data Transformation: MySQL’s schema may need to be restructured to fit Redshift’s columnar storage format. Data types and table structures may also need adjustments, as Redshift uses specific data types optimized for analytical workloads.

Data Volume: Moving large volumes of data from MySQL to Redshift can take time and resources. A well-thought-out migration strategy is essential to minimize downtime and ensure the integrity of the data.

Testing and Validation: Post-migration, it is crucial to test and validate the data to ensure everything is accurately transferred, and the queries in Redshift return the expected results.
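To give a feel for the data transformation challenge, here is a small sketch that translates a few MySQL column types into Redshift types and emits a CREATE TABLE statement. The mapping table is illustrative and deliberately incomplete, and choices such as widening unsigned or character columns are assumptions that should be checked against your own data.

```python
import re

# Illustrative mapping only; review each column against your own data.
MYSQL_TO_REDSHIFT = {
    "tinyint": "SMALLINT",
    "smallint": "SMALLINT",
    "mediumint": "INTEGER",
    "int": "INTEGER",  # note: unsigned MySQL ints may need BIGINT instead
    "bigint": "BIGINT",
    "float": "REAL",
    "double": "DOUBLE PRECISION",
    "date": "DATE",
    "datetime": "TIMESTAMP",
    "timestamp": "TIMESTAMP",
    "text": "VARCHAR(65535)",
}


def convert_type(mysql_type):
    """Translate one MySQL column type into a Redshift type."""
    mysql_type = mysql_type.strip().lower()
    match = re.fullmatch(r"(?:var)?char\((\d+)\)", mysql_type)
    if match:
        # MySQL lengths count characters, Redshift lengths count bytes,
        # so allow up to 4 bytes per character and cap at Redshift's limit.
        return f"VARCHAR({min(int(match.group(1)) * 4, 65535)})"
    match = re.match(r"decimal\((\d+)\s*,\s*(\d+)\)", mysql_type)
    if match:
        # Redshift DECIMAL supports at most 38 digits of precision.
        precision = min(int(match.group(1)), 38)
        scale = min(int(match.group(2)), precision)
        return f"DECIMAL({precision},{scale})"
    # Drop display widths such as int(11) and modifiers such as 'unsigned'.
    parts = re.sub(r"\(.*\)", "", mysql_type).split()
    if not parts or parts[0] not in MYSQL_TO_REDSHIFT:
        raise ValueError(f"no mapping defined for MySQL type: {mysql_type!r}")
    return MYSQL_TO_REDSHIFT[parts[0]]


def create_table_ddl(table, columns):
    """Build a Redshift CREATE TABLE statement from (name, mysql_type) pairs."""
    cols = ",\n".join(f"    {name} {convert_type(t)}" for name, t in columns)
    return f"CREATE TABLE {table} (\n{cols}\n);"


print(create_table_ddl("orders", [("id", "bigint(20)"), ("note", "text")]))
```

Raising an error for unmapped types, rather than guessing, keeps surprises out of the target schema.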

How Ask On Data Eases the Migration Process

Ask On Data is a powerful tool designed to assist with data wrangling and migration tasks. The tool simplifies the complex process of transitioning from MySQL to Redshift by offering several key features:

Data Preparation and Wrangling: Before migration, data often needs cleaning and transformation. Ask On Data makes it easy to prepare your data by handling missing values, eliminating duplicates, and ensuring consistency across datasets. It also provides automated data profiling to ensure data quality before migration.

Schema Mapping and Transformation: Ask On Data supports schema mapping, helping you seamlessly convert MySQL schemas into Redshift-compatible structures. The tool automatically maps data types, handles column transformations, and generates the necessary scripts to create tables in Redshift.

Efficient Data Loading: Ask On Data simplifies the process of transferring large volumes of data from MySQL to Redshift. With support for bulk data loading and parallel processing, the tool ensures that the migration happens swiftly with minimal impact on production systems.

Error Handling and Monitoring: Migration can be prone to errors, especially when dealing with large datasets. Ask On Data offers built-in error handling and monitoring features to track the progress of the migration and troubleshoot any issues that arise.

Post-Migration Validation: Once the migration is complete, Ask On Data helps validate the data by comparing the original data in MySQL with the migrated data in Redshift. It ensures that data integrity is maintained and that all queries return accurate results.
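Post-migration validation of this kind can be approximated by comparing a row count and an order-independent checksum per table. The sketch below is a generic illustration, not a description of how Ask On Data works internally. It uses two in-memory SQLite databases as stand-ins for MySQL and Redshift so that it runs anywhere, and the `orders` table is hypothetical.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table):
    """Return (row_count, checksum) for a table, independent of row order."""
    count = 0
    checksum = 0
    # The table name is interpolated directly; only pass trusted identifiers.
    for row in conn.execute(f"SELECT * FROM {table}"):
        digest = hashlib.sha256(repr(row).encode("utf-8")).digest()
        # Summing the digests makes the result independent of row order.
        checksum = (checksum + int.from_bytes(digest, "big")) % (1 << 256)
        count += 1
    return count, checksum


def make_db(rows):
    """Create a throwaway in-memory database holding a hypothetical orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    return conn


source = make_db([(1, 9.5), (2, 20.0), (3, 7.25)])
target = make_db([(3, 7.25), (1, 9.5), (2, 20.0)])   # same rows, different order
drifted = make_db([(1, 9.5), (2, 20.0), (3, 7.20)])  # one value changed in transit

print(table_fingerprint(source, "orders") == table_fingerprint(target, "orders"))
print(table_fingerprint(source, "orders") == table_fingerprint(drifted, "orders"))
```

Against real MySQL and Redshift connections, values would first need to be normalized to a common representation (for example, decimals and timestamps), since the two drivers may return different Python types for the same data.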

Conclusion

Migrating from MySQL to Redshift can significantly improve the performance and scalability of your data infrastructure. While the migration process can be complex, tools like Ask On Data can simplify it by automating many of the steps involved. From data wrangling to schema transformation and data validation, Ask On Data provides a comprehensive solution for seamless migration. By leveraging this tool, businesses can focus on analyzing their data, rather than getting bogged down in the technicalities of migration, ensuring a smooth and efficient transition to Redshift.

0 notes

Text

Accelerating Innovation with Data Engineering on AWS and Aretove’s Expertise as a Leading Data Engineering Company

In today’s digital economy, the ability to process and act on data in real-time is a significant competitive advantage. This is where Data Engineering on AWS and the support of a dedicated Data Engineering Company like Aretove come into play. These solutions form the backbone of modern analytics architectures, powering everything from real-time dashboards to machine learning pipelines.

What is Data Engineering and Why is AWS the Platform of Choice?

Data engineering is the practice of designing and building systems for collecting, storing, and analyzing data. As businesses scale, traditional infrastructures struggle to handle the volume, velocity, and variety of data. This is where Amazon Web Services (AWS) shines.

AWS offers a robust, flexible, and scalable environment ideal for modern data workloads. Aretove leverages a variety of AWS tools—like Amazon Redshift, AWS Glue, and Amazon S3—to build data pipelines that are secure, efficient, and cost-effective.

Core Benefits of AWS for Data Engineering

Scalability: AWS services automatically scale to handle growing data needs.

Flexibility: Supports both batch and real-time data processing.

Security: Industry-leading compliance and encryption capabilities.

Integration: Seamlessly works with machine learning tools and third-party apps.

At Aretove, we customize your AWS architecture to match business goals, ensuring performance without unnecessary costs.

Aretove: A Trusted Data Engineering Company

As a premier Data Engineering Company, Aretove specializes in end-to-end solutions that unlock the full potential of your data. Whether you're migrating to the cloud, building a data lake, or setting up real-time analytics, our team of experts ensures a seamless implementation.

Our services include:

Data Pipeline Development: Build robust ETL/ELT pipelines using AWS Glue and Lambda.

Data Warehousing: Design scalable warehouses with Amazon Redshift for fast querying and analytics.

Real-time Streaming: Implement streaming data workflows with Amazon Kinesis and Apache Kafka.

Data Governance and Quality: Ensure your data is accurate, consistent, and secure.

Case Study: Real-Time Analytics for E-Commerce

An e-commerce client approached Aretove to improve its customer insights using real-time analytics. We built a cloud-native architecture on AWS using Kinesis for stream ingestion and Redshift for warehousing. This allowed the client to analyze customer behavior instantly and personalize recommendations, leading to a 30% boost in conversion rates.

Why Aretove Stands Out

What makes Aretove different is our ability to bridge business strategy with technical execution. We don’t just build pipelines—we build solutions that drive revenue, enhance user experiences, and scale with your growth.

With a client-centric approach and deep technical know-how, Aretove empowers businesses across industries to harness the power of their data.

Looking Ahead

As data continues to fuel innovation, companies that invest in modern data engineering practices will be the ones to lead. AWS provides the tools, and Aretove brings the expertise. Together, we can transform your data into a strategic asset.

Whether you’re starting your cloud journey or optimizing an existing environment, Aretove is your go-to partner for scalable, intelligent, and secure data engineering solutions.

0 notes

Text

AWS Unlocked: Skills That Open Doors

AWS Demand and Relevance in the Job Market

Amazon Web Services (AWS) continues to dominate the cloud computing space, making AWS skills highly valuable in today’s job market. As more companies migrate to the cloud for scalability, cost-efficiency, and innovation, professionals with AWS expertise are in high demand. From startups to Fortune 500 companies, organizations are seeking cloud architects, developers, and DevOps engineers proficient in AWS.

The relevance of AWS spans across industries—IT, finance, healthcare, and more—highlighting its versatility. Certifications like AWS Certified Solutions Architect or AWS Certified DevOps Engineer serve as strong indicators of proficiency and can significantly boost one’s resume.

According to job portals and market surveys, AWS-related roles often command higher salaries compared to non-cloud positions. As cloud technology continues to evolve, professionals with AWS knowledge remain crucial to digital transformation strategies, making it a smart career investment.

Basic AWS Knowledge

Amazon Web Services (AWS) is a cloud computing platform that provides a wide range of services, including computing power, storage, databases, and networking. Understanding the basics of AWS is essential for anyone entering the tech industry or looking to enhance their IT skills.

At its core, AWS offers services like EC2 (virtual servers), S3 (cloud storage), RDS (managed databases), and VPC (networking). These services help businesses host websites, run applications, manage data, and scale infrastructure without managing physical servers.

Basic AWS knowledge also includes understanding regions and availability zones, how to navigate the AWS Management Console, and using IAM (Identity and Access Management) for secure access control.

Getting started with AWS doesn’t require advanced technical skills. With free-tier access and beginner-friendly certifications like AWS Certified Cloud Practitioner, anyone can begin their cloud journey. This foundational knowledge opens doors to more specialized cloud roles in the future.

AWS Skills Open Up These Career Roles

Cloud Architect: Designs and manages an organization's cloud infrastructure using AWS services to ensure scalability, performance, and security.

Solutions Architect: Creates technical solutions based on AWS services to meet specific business needs, often in client-facing roles.

DevOps Engineer: Automates deployment processes using tools like AWS CodePipeline and CloudFormation, and integrates development with operations.

Cloud Developer: Builds cloud-native applications using AWS services such as Lambda, API Gateway, and DynamoDB.

SysOps Administrator: Handles day-to-day operations of AWS infrastructure, including monitoring, backups, and performance tuning.

Security Specialist: Focuses on cloud security, identity management, and compliance using AWS IAM, KMS, and security best practices.

Data Engineer/Analyst: Works with AWS tools like Redshift, Glue, and Athena for big data processing and analytics.

AWS Skills You Will Learn

Cloud Computing Fundamentals: Understand the basics of cloud models (IaaS, PaaS, SaaS), deployment types, and AWS's place in the market.

AWS Core Services: Get hands-on with EC2 (compute), S3 (storage), RDS (databases), and VPC (networking).

IAM & Security: Learn how to manage users, roles, and permissions with Identity and Access Management (IAM) for secure access.

Scalability & Load Balancing: Use services like Auto Scaling and Elastic Load Balancer to ensure high availability and performance.

Monitoring & Logging: Track performance and troubleshoot using tools like Amazon CloudWatch and AWS CloudTrail.

Serverless Computing: Build and deploy applications with AWS Lambda, API Gateway, and DynamoDB.

Automation & DevOps Tools: Work with AWS CodePipeline, CloudFormation, and Elastic Beanstalk to automate infrastructure and deployments.

Networking & CDN: Configure custom networks and deliver content faster using VPC, Route 53, and CloudFront.

Final Thoughts

The AWS Certified Solutions Architect – Associate certification is a powerful step toward building a successful cloud career. It validates your ability to design scalable, reliable, and secure AWS-based solutions—skills that are in high demand across industries.

Whether you're an IT professional looking to upskill or someone transitioning into cloud computing, this certification opens doors to roles like Cloud Architect, Solutions Architect, and DevOps Engineer. With real-world knowledge of AWS core services, architecture best practices, and cost-optimization strategies, you'll be equipped to contribute to cloud projects confidently.

0 notes

Text

AWS Cloud Development Partner: Enabling Innovation and Scalability

In today’s rapidly evolving digital landscape, leveraging cloud computing has become essential for businesses accelerating their digital transformation journeys. Amazon Web Services (AWS) remains the leading cloud platform, offering an extensive suite of services to facilitate cloud-native development, application modernization, and infrastructure optimization. However, navigating AWS’s expansive ecosystem requires specialized expertise, strategic planning, and advanced technical skills. This is where an AWS Cloud Development Partner plays a pivotal role, equipping businesses with the guidance and capabilities needed to fully harness the potential of AWS.

What is an AWS Cloud Development Partner?

An AWS Cloud Development Partner is a certified organization or consultant with proven expertise in designing, developing, deploying, and managing applications on AWS. Recognized by AWS for their technical proficiency, these partners assist businesses in building scalable, secure, and high-performance cloud solutions.

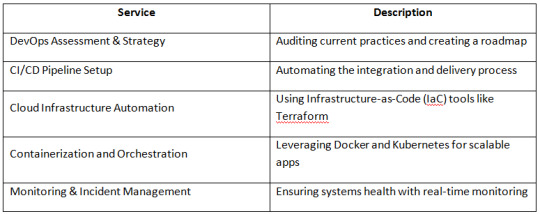

AWS partners deliver comprehensive services, encompassing cloud architecture design, application development, security implementation, DevOps, and cost optimization strategies. Whether a business aims to migrate existing workloads to AWS, develop cloud-native applications, or implement artificial intelligence and big data analytics, an AWS Cloud Development Partner ensures seamless execution and drives long-term success.

Key Services Provided by an AWS Cloud Development Partner

1. Cloud-Native Application Development

AWS Cloud Development Partners specialize in designing and building cloud-native applications utilizing AWS services such as AWS Lambda, Amazon API Gateway, and Amazon DynamoDB. These applications are crafted for scalability, cost-efficiency, and high availability.

2. Cloud Migration and Modernization

Migrating legacy applications to AWS requires meticulous planning and execution. AWS partners develop and implement seamless migration strategies, including rehosting, replatforming, and refactoring applications to enhance performance and scalability.

3. DevOps and CI/CD Implementation

Automation is a cornerstone of modern application development. AWS partners adopt DevOps best practices, enabling continuous integration and delivery (CI/CD) through AWS tools like AWS CodePipeline, AWS CodeBuild, and AWS CodeDeploy.

4. Security and Compliance

Security is a critical element of cloud adoption. AWS Cloud Development Partners assist businesses in implementing robust security frameworks, including encryption protocols, identity and access management (IAM), and adherence to industry standards such as GDPR, HIPAA, and ISO 27001.

5. Data Analytics and AI/ML Solutions

Leveraging AWS analytics and AI/ML services like Amazon SageMaker, AWS Glue, and Amazon Redshift, AWS partners empower businesses to drive data-driven decision-making and foster innovation.

6. Multi-Cloud and Hybrid Cloud Strategy

For organizations operating in multi-cloud or hybrid cloud environments, AWS partners facilitate seamless integration with other cloud providers and on-premises systems, ensuring operational flexibility and efficiency.

7. Cost Optimization and Performance Tuning

AWS partners help businesses optimize cloud expenses by rightsizing resources, utilizing reserved instances, and implementing serverless architectures, enabling cost-effective and efficient operations.

Advantages of Partnering with an AWS Cloud Development Partner

1. Expertise from Certified AWS Professionals

AWS partners provide access to certified professionals with specialized expertise in cloud development, ensuring tailored solutions that align with business objectives.

2. Accelerated Time-to-Market

By leveraging agile methodologies and automation, AWS partners expedite the development and deployment of applications, helping businesses achieve faster time-to-market.

3. Strengthened Security and Reliability

With a focus on AWS security best practices, AWS partners help businesses safeguard their applications and data, ensuring resilience against cyber threats and maintaining high availability.

4. Cost Efficiency

Through optimized cloud resource management and strategic cost-saving measures, AWS partners help businesses minimize unnecessary expenses while maximizing efficiency.

5. Continuous Innovation

With access to the latest AWS technologies, businesses can drive innovation and maintain a competitive edge in dynamic markets.

Conclusion

Partnering with an AWS Cloud Development Partner provides businesses with the expertise and tools necessary to build, optimize, and scale cloud solutions on AWS. These partners offer value through their proficiency in cloud-native development, DevOps, security, and cost optimization, empowering organizations to unlock the full potential of AWS. Whether embarking on a cloud transformation journey or refining existing cloud applications, working with an AWS expert ensures sustainable growth and success in the rapidly evolving digital landscape.

0 notes