#AWS Data Analytics

Text

How do AWS Data Analytics Services process and analyze large datasets efficiently?

AWS Data Analytics Services use a combination of tools and technologies to process large datasets efficiently:

Amazon S3 stores vast amounts of raw data securely.

AWS Glue automates data preparation and ETL (extract, transform, load) processes.

Amazon Redshift enables fast querying and data warehousing.

Amazon Kinesis handles real-time data streaming and analytics.

AWS Lambda runs data-processing code serverlessly, so you don't have to provision or manage servers.

Conclusion: By integrating these services, AWS offers a flexible and scalable platform that accelerates data analysis workflows for large and complex datasets.

Text

Struggling to get insights from your data? Traditional analytics hold you back. Explore how AWS data analytics empowers businesses to transform data into a strategic asset and make data-driven decisions.

#AWS Data Analytics Services#AWS Data & Analytics Platform#AWS Cloud#data analytics for business#Aws Data Analytics

Text

Sigma Solve helps businesses unlock the value of their data using AWS data analytics services, leveraging advanced analytics techniques, and delivering actionable insights for informed decision-making. Whether you are considering a cloud migration, need assistance with cloud strategy, or require ongoing cloud management, a dedicated cloud expert brings invaluable skills and experience to streamline your digital transformation journey.

Text

Abathur

At Abathur, we believe technology should empower, not complicate.

Our mission is to provide seamless, scalable, and secure solutions for businesses of all sizes. With a team of experts specializing in various tech domains, we ensure our clients stay ahead in an ever-evolving digital landscape.

Why Choose Us?

Expert-Led Innovation – Our team is built on experience and expertise.

Security-First Approach – Cybersecurity is embedded in all our solutions.

Scalable & Future-Proof – We design solutions that grow with you.

Client-Centric Focus – Your success is our priority.

#Software Development#Web Development#Mobile App Development#API Integration#Artificial Intelligence#Machine Learning#Predictive Analytics#AI Automation#NLP#Data Analytics#Business Intelligence#Big Data#Cybersecurity#Risk Management#Penetration Testing#Cloud Security#Network Security#Compliance#Networking#IT Support#Cloud Management#AWS#Azure#DevOps#Server Management#Digital Marketing#SEO#Social Media Marketing#Paid Ads#Content Marketing

Text

Master Playwright Test Automation with Expert-Led Online Training

#AWS Training#Azure#DevOps Training#Data Science#MSBI#Data Analytics Training#Cyber Securit#playwrite

Text

Top Certifications That Can Land You a Job in Tech

Published by Prism HRC – Leading IT Recruitment Agency in Mumbai

Breaking into the tech industry doesn’t always require a degree. With the rise of online learning and skill-based hiring, certifications have become the new ticket into some of the highest-paying and most in-demand jobs. Whether you're switching careers or upskilling to stay ahead, the right certification can boost your credibility and fast-track your job search.

Why Certifications Matter in Tech

Tech employers today are less focused on your college background and more interested in what you can actually do. Certifications show you're committed, skilled, and up to date with industry trends, a huge plus in a fast-moving field like IT.

Let’s explore the top certifications in 2025 that are actually helping people land real tech jobs.

1. Google IT Support Professional Certificate

Perfect for: Beginners starting in tech or IT support

This beginner-friendly course, offered through Coursera, teaches you everything from troubleshooting to networking. It’s backed by Google and respected across the industry.

Tip: It’s also a great way to pivot into other IT roles, including cybersecurity and network administration.

2. AWS Certified Solutions Architect – Associate

Perfect for: Cloud engineers, DevOps aspirants

With cloud computing continuing to explode in 2025, AWS skills are hotter than ever. This cert proves you know how to design secure, scalable systems on the world’s most popular cloud platform.

Real Edge: Many employers prioritize candidates with AWS experience even over degree holders.

3. Microsoft Certified: Azure Fundamentals

Perfect for: Beginners interested in Microsoft cloud tools

Azure is a close second to AWS in the cloud market. If you’re looking for a job at a company that uses Microsoft services, this foundational cert gives you a leg up.

4. CompTIA Security+

Perfect for: Cybersecurity beginners

If you're aiming for a job in cybersecurity, this is often the first certification employers look for. It covers basic network security, risk management, and compliance.

Why it matters: As cyber threats grow, demand for cybersecurity professionals is rising rapidly.

5. Google Data Analytics Professional Certificate

Perfect for: Aspiring data analysts

This course teaches data analysis, spreadsheets, SQL, Tableau, and more. It’s beginner-friendly and widely accepted by tech companies looking for entry-level analysts.

Industry Insight: Data skills are now essential across tech, not just for analysts, but for marketers, product managers, and more.

6. Certified ScrumMaster (CSM)

Perfect for: Project managers, product managers, team leads

Tech teams often use Agile frameworks like Scrum. This certification helps you break into roles where communication, leadership, and sprint planning are key.

7. Cisco Certified Network Associate (CCNA)

Perfect for: Network engineers, IT support, and infrastructure roles

If you’re into hardware, routers, switches, and network troubleshooting, this foundational cert is gold.

Why it helps: Many entry-level IT jobs prefer CCNA holders over generalists.

8. Meta (Facebook) Front-End Developer Certificate

Perfect for: Front-end developers and web designers

This cert teaches HTML, CSS, React, and design systems. It’s hands-on and offered via Coursera in partnership with Meta.

The bonus? You also get portfolio projects to show in interviews.

How to Choose the Right Certification

Match it to your career goal – Don't do a cert just because it’s popular. Focus on the role you want.

Check job listings – Look at what certifications are frequently mentioned.

Time vs Value – Some certs take weeks, others months. Make sure the ROI is worth it.

- Based in Gorai-2, Borivali West, Mumbai
- www.prismhrc.com
- Instagram: @jobssimplified
- LinkedIn: Prism HRC

#Tech Certifications#IT Jobs#Top Certifications 2025#Cloud Computing#Cybersecurity#Data Analytics#AWS Certification#Microsoft Azure#CompTIA Security+#Prism HRC#IT Recruitment#Mumbai IT#Skill-Based Hiring#Future of Tech#Mumbai IT Jobs#Google IT Support#Google Data Analytics

Text

Data labeling and annotation

Boost your AI and machine learning models with professional data labeling and annotation services. Accurate and high-quality annotations enhance model performance by providing reliable training data. Whether for image, text, or video, our data labeling ensures precise categorization and tagging, accelerating AI development. Outsource your annotation tasks to save time, reduce costs, and scale efficiently. Choose expert data labeling and annotation solutions to drive smarter automation and better decision-making. Ideal for startups, enterprises, and research institutions alike.

#artificial intelligence#ai prompts#data analytics#datascience#data annotation#ai agency#ai & machine learning#aws

Text

Ariyalam offers online training courses and certifications. It was started as part of an education-for-all movement to show that quality software training can be delivered cost-effectively to anyone who is interested. Training is provided by qualified trainers at times convenient for students, and the course syllabus is tailored to current industry needs.

Text

Explore AWS Data Analytics Services for scalable, secure, and cost-effective solutions. Leverage powerful tools like Amazon Redshift, Athena, and QuickSight to gain insights and make data-driven decisions effortlessly.

Text

AWS Data Analytics Training | AWS Data Engineering Training in Bangalore

What’s the Most Efficient Way to Ingest Real-Time Data Using AWS?

AWS provides a suite of services designed to handle high-velocity, real-time data ingestion efficiently. In this article, we explore the best approaches and services AWS offers to build a scalable, real-time data ingestion pipeline.

Understanding Real-Time Data Ingestion

Real-time data ingestion involves capturing, processing, and storing data as it is generated, with minimal latency. This is essential for applications like fraud detection, IoT monitoring, live analytics, and real-time dashboards.

Key Challenges in Real-Time Data Ingestion

Scalability – Handling large volumes of streaming data without performance degradation.

Latency – Ensuring minimal delay in data processing and ingestion.

Data Durability – Preventing data loss and ensuring reliability.

Cost Optimization – Managing costs while maintaining high throughput.

Security – Protecting data in transit and at rest.

AWS Services for Real-Time Data Ingestion

1. Amazon Kinesis

Kinesis Data Streams (KDS): A highly scalable service for ingesting real-time streaming data from various sources.

Kinesis Data Firehose (now Amazon Data Firehose): A fully managed service that delivers streaming data to destinations like S3, Redshift, or OpenSearch Service.

Kinesis Data Analytics: A service for processing and analyzing streaming data using SQL or Apache Flink. It has since been renamed Amazon Managed Service for Apache Flink.

Use Case: Ideal for processing logs, telemetry data, clickstreams, and IoT data.
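To make shard routing concrete: Kinesis Data Streams hashes each record's partition key with MD5 into a 128-bit integer and sends the record to the shard whose hash-key range contains that value. The Python sketch below assumes the shards split the hash-key space exactly evenly (real ranges can become uneven after resharding), and `hash_key_for` and `shard_index_for_key` are hypothetical helpers for illustration, not part of any AWS SDK.

```python
import hashlib

HASH_KEY_SPACE = 2 ** 128  # MD5 yields a 128-bit value


def hash_key_for(partition_key: str) -> int:
    """Map a partition key to a 128-bit integer using MD5."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest, byteorder="big")


def shard_index_for_key(partition_key: str, shard_count: int) -> int:
    """Hypothetical helper: index of the shard that owns this key.

    Assumes exactly even hash-key ranges; real shard ranges should be read
    from the stream itself (for example, via the ListShards API).
    """
    if shard_count < 1:
        raise ValueError("shard_count must be >= 1")
    return hash_key_for(partition_key) * shard_count // HASH_KEY_SPACE


if __name__ == "__main__":
    for device in ["sensor-1", "sensor-2", "sensor-3"]:
        print(device, "->", shard_index_for_key(device, 4))
```

Because routing depends only on the key, records that share a partition key keep their relative order on one shard, while a low-cardinality key (for example, a single constant) funnels all traffic into one hot shard.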

2. Amazon Managed Streaming for Apache Kafka (Amazon MSK)

Amazon MSK provides a fully managed Apache Kafka service, allowing seamless data streaming and ingestion at scale.

Use Case: Suitable for applications requiring low-latency event streaming, message brokering, and high availability.

3. AWS IoT Core

For IoT applications, AWS IoT Core enables secure and scalable real-time ingestion of data from connected devices.

Use Case: Best for real-time telemetry, device status monitoring, and sensor data streaming.
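AWS IoT Core speaks MQTT, so subscriptions and the topic filter in a rule's `FROM` clause select device messages using the standard `+` (exactly one level) and `#` (all remaining levels) wildcards. The sketch below reproduces those MQTT matching rules in plain Python for illustration only; `topic_matches` and the `sensors/...` topic names are hypothetical, and IoT Core performs this matching for you.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic matches a topic filter.

    '+' matches exactly one level; '#' (valid only as the last level)
    matches the parent level and any number of levels below it.
    """
    # Per MQTT, filters starting with a wildcard don't match '$'-prefixed topics
    if topic.startswith("$") and topic_filter[:1] in ("+", "#"):
        return False
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # '#' is only valid as the final level of a filter
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    # Without '#', the filter and topic must have the same depth
    return len(filter_levels) == len(topic_levels)
```

For example, a rule on `sensors/+/temperature` receives `sensors/dev1/temperature` but not `sensors/dev1/humidity`.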

4. Amazon S3 with Event Notifications

Amazon S3 can be used as a real-time ingestion target when paired with event notifications, triggering AWS Lambda, SNS, or SQS to process newly added data.

Use Case: Ideal for data that arrives as batch files but should be processed in near real time as each object lands.
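As a sketch of the S3-to-Lambda pattern, the handler below reads the bucket name and object key from each record in an S3 event notification. It assumes the function is subscribed to `ObjectCreated` events; the sample bucket and `raw/...` keys in the test are invented, and the actual object processing (for example, a boto3 `get_object` call) is left out so the example runs without AWS credentials.

```python
import json
from urllib.parse import unquote_plus


def lambda_handler(event, context):
    """Collect the bucket/key of every newly created object in an S3 event."""
    new_objects = []
    for record in event.get("Records", []):
        # Skip anything that is not an object-creation event (e.g. deletions)
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # S3 URL-encodes object keys in notifications (a space arrives as '+')
        key = unquote_plus(record["s3"]["object"]["key"])
        new_objects.append({"bucket": bucket, "key": key})
    # Placeholder: a real pipeline would fetch and process each object here
    print(json.dumps(new_objects))
    return {"processed": len(new_objects), "objects": new_objects}
```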

5. AWS Lambda for Event-Driven Processing

AWS Lambda can process incoming data in real time by responding to events from Kinesis, S3, DynamoDB Streams, and more.

Use Case: Best for serverless event processing without managing infrastructure.
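For the Kinesis case, Lambda delivers records in batches, with each payload base64-encoded. The sketch below assumes JSON payloads and uses a hypothetical `process` function as a stand-in for real work. It returns a `batchItemFailures` list, which Lambda only honors when `ReportBatchItemFailures` is enabled on the event source mapping.

```python
import base64
import json


def process(item: dict) -> None:
    """Hypothetical placeholder for real work (enrich, filter, write downstream)."""
    print("processing", item)


def lambda_handler(event, context):
    """Decode JSON payloads from a Kinesis batch and report per-record failures."""
    failures = []
    for record in event.get("Records", []):
        kinesis = record["kinesis"]
        try:
            # Kinesis payloads arrive base64-encoded
            payload = base64.b64decode(kinesis["data"])
            process(json.loads(payload))
        except (ValueError, KeyError):
            # Identify the failed record by sequence number so Lambda can retry from it
            failures.append({"itemIdentifier": kinesis["sequenceNumber"]})
    return {"batchItemFailures": failures}
```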

6. Amazon DynamoDB Streams

DynamoDB Streams captures real-time changes to a DynamoDB table and can integrate with AWS Lambda for further processing.

Use Case: Effective for real-time notifications, analytics, and microservices.
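DynamoDB Streams records carry item images in DynamoDB's typed JSON format (for example `{"N": "42"}`), so a consuming Lambda usually converts them first. The sketch below assumes the stream's view type includes new images (`NEW_IMAGE` or `NEW_AND_OLD_IMAGES`), handles only the common type tags (binary types are omitted), and returns a hypothetical summary of each change; boto3's `TypeDeserializer` offers a fuller version of the same conversion.

```python
from decimal import Decimal


def from_dynamodb(value: dict):
    """Convert one DynamoDB-JSON attribute value (e.g. {"N": "42"}) to Python."""
    (type_tag, raw), = value.items()  # each value has exactly one type tag
    if type_tag == "S":
        return raw
    if type_tag == "N":
        return Decimal(raw)  # numbers arrive as strings; Decimal avoids float rounding
    if type_tag == "BOOL":
        return raw
    if type_tag == "NULL":
        return None
    if type_tag == "M":
        return {k: from_dynamodb(v) for k, v in raw.items()}
    if type_tag == "L":
        return [from_dynamodb(v) for v in raw]
    if type_tag == "SS":
        return set(raw)
    if type_tag == "NS":
        return {Decimal(n) for n in raw}
    raise ValueError(f"unsupported type tag: {type_tag}")


def lambda_handler(event, context):
    """Summarize each change (INSERT, MODIFY, REMOVE) with its new item image."""
    changes = []
    for record in event.get("Records", []):
        image = record["dynamodb"].get("NewImage")  # absent for REMOVE events
        item = {k: from_dynamodb(v) for k, v in image.items()} if image else None
        changes.append({"event": record["eventName"], "item": item})
    return changes
```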

Building an Efficient AWS Real-Time Data Ingestion Pipeline

Step 1: Identify Data Sources and Requirements

Determine the data sources (IoT devices, logs, web applications, etc.).

Define latency requirements (milliseconds, seconds, or near real-time?).

Understand data volume and processing needs.
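Volume estimates translate directly into capacity when Kinesis Data Streams runs in provisioned mode, where each shard accepts up to 1 MB/s or 1,000 records/s of writes. The helper below is a rough sizing sketch under those limits; `estimate_shards` and its `headroom` factor are illustrative assumptions, it treats 1 MB as 1,024 KB, and on-demand capacity mode removes the need for this calculation.

```python
import math

# Per-shard write limits for provisioned Kinesis Data Streams
SHARD_WRITE_MB_PER_SEC = 1.0
SHARD_WRITE_RECORDS_PER_SEC = 1000


def estimate_shards(records_per_sec: float, avg_record_kb: float, headroom: float = 1.0) -> int:
    """Rough provisioned-shard estimate from peak ingest rate and record size.

    headroom > 1.0 reserves spare capacity for spikes (e.g. 1.5 = 50% extra).
    """
    mb_per_sec = records_per_sec * avg_record_kb / 1024
    by_throughput = mb_per_sec / SHARD_WRITE_MB_PER_SEC
    by_record_count = records_per_sec / SHARD_WRITE_RECORDS_PER_SEC
    # Whichever limit is hit first determines the shard count
    return max(1, math.ceil(max(by_throughput, by_record_count) * headroom))
```

For example, 5,000 records/s averaging 2 KB is about 9.8 MB/s, so throughput rather than record count drives the estimate: 10 shards, or 15 with 50% headroom.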

Step 2: Choose the Right AWS Service

For high-throughput, scalable ingestion → Amazon Kinesis or MSK.

For IoT data ingestion → AWS IoT Core.

For event-driven processing → Lambda with DynamoDB Streams or S3 Events.

Step 3: Implement Real-Time Processing and Transformation

Use Kinesis Data Analytics or AWS Lambda to filter, transform, and analyze data.

Store processed data in Amazon S3, Redshift, or OpenSearch Service for further analysis.
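When Lambda performs the transformation inside a Firehose delivery stream, it receives base64-encoded records and must return every `recordId` with a `result` of `Ok`, `Dropped`, or `ProcessingFailed`. The sketch below follows that contract; the `event_type == "heartbeat"` filter and the `ingest_source` field are hypothetical examples of filtering and enrichment.

```python
import base64
import json


def lambda_handler(event, context):
    """Filter and enrich records in a Firehose data-transformation Lambda."""
    output = []
    for record in event["records"]:
        try:
            item = json.loads(base64.b64decode(record["data"]))
        except ValueError:
            # Malformed input: hand it back unchanged and mark it as failed
            output.append({"recordId": record["recordId"], "result": "ProcessingFailed", "data": record["data"]})
            continue
        if item.get("event_type") == "heartbeat":  # hypothetical noise to drop
            output.append({"recordId": record["recordId"], "result": "Dropped", "data": record["data"]})
            continue
        item["ingest_source"] = "firehose"  # hypothetical enrichment field
        # Newline-delimit so the objects Firehose writes to S3 are JSON Lines
        data = base64.b64encode((json.dumps(item) + "\n").encode("utf-8")).decode("ascii")
        output.append({"recordId": record["recordId"], "result": "Ok", "data": data})
    return {"records": output}
```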

Step 4: Optimize for Performance and Cost

Use Kinesis on-demand capacity mode (or reshard provisioned streams) and MSK storage auto-scaling or MSK Serverless to handle traffic spikes.

Use Kinesis Firehose to buffer and batch data before storing it in S3, reducing costs.

Implement data compression and partitioning strategies in storage.
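Compression and partitioning can be illustrated together: batching records as gzip-compressed JSON Lines shrinks what you store, and Hive-style `year=/month=/day=/hour=` prefixes let query engines such as Athena skip partitions that a query filters out. The helpers below are a sketch; the `events` prefix and batch naming are hypothetical, and when Firehose writes to S3 it can apply compression and time-based prefixes itself.

```python
import gzip
import json
from datetime import datetime, timezone


def partitioned_key(prefix: str, ts: datetime, batch_id: str) -> str:
    """Build a Hive-style, hour-partitioned S3 key (expects a timezone-aware ts)."""
    ts = ts.astimezone(timezone.utc)  # partition on UTC so keys are consistent
    return (f"{prefix}/year={ts:%Y}/month={ts:%m}/day={ts:%d}/hour={ts:%H}/"
            f"{batch_id}.jsonl.gz")


def compress_batch(records: list) -> bytes:
    """Serialize records as JSON Lines and gzip the whole batch."""
    body = "".join(json.dumps(r) + "\n" for r in records)
    return gzip.compress(body.encode("utf-8"))
```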

Step 5: Secure and Monitor the Pipeline

Use AWS Identity and Access Management (IAM) for fine-grained access control.

Monitor ingestion performance with Amazon CloudWatch and AWS X-Ray.
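Fine-grained access control usually means scoping each producer to exactly the actions and resources it needs. The sketch below builds such a policy document for a single Kinesis stream; the region, account ID, and stream name are placeholders, and a real producer may need additional actions (for example, to describe the stream) depending on the client library it uses.

```python
import json


def producer_policy(region: str, account_id: str, stream_name: str) -> dict:
    """Build an IAM policy that only allows writing records to one Kinesis stream."""
    stream_arn = f"arn:aws:kinesis:{region}:{account_id}:stream/{stream_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "WriteToSingleStream",
                "Effect": "Allow",
                # Write-only: no read, delete, or stream-management actions
                "Action": ["kinesis:PutRecord", "kinesis:PutRecords"],
                "Resource": stream_arn,
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder values for illustration only
    print(json.dumps(producer_policy("us-east-1", "123456789012", "clickstream"), indent=2))
```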

Best Practices for AWS Real-Time Data Ingestion

Choose the Right Service: Select an AWS service that aligns with your data velocity and business needs.

Use Serverless Architectures: Reduce operational overhead with Lambda and managed services like Kinesis Firehose.

Enable Auto-Scaling: Use Kinesis on-demand capacity mode (or scale shard counts) and provision enough Kafka partitions to spread load.

Minimize Costs: Optimize data batching, compression, and retention policies.

Ensure Security and Compliance: Implement encryption, access controls, and AWS security best practices.
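Batching and reliability meet in the `PutRecords` API, which accepts up to 500 records per call but can reject individual records (for example, with `ProvisionedThroughputExceededException`) while accepting the rest. The sketch below resends only the rejected entries with exponential backoff; `send` is an injected, hypothetical stand-in for a call such as boto3's Kinesis `put_records`, so the retry logic can run without AWS access.

```python
import random
import time
from typing import Callable


def put_with_retries(
    send: Callable[[list], dict],
    entries: list,
    max_attempts: int = 5,
    base_delay: float = 0.1,
) -> list:
    """Send a PutRecords-style batch, resending only the entries that failed.

    `send` must return a response whose "Records" list lines up with the
    request order. Returns the entries that still failed after max_attempts
    (an empty list means every record was accepted).
    """
    pending = list(entries)
    for attempt in range(max_attempts):
        if not pending:
            return []
        response = send(pending)
        if response.get("FailedRecordCount", 0) == 0:
            return []
        # Failed results carry an ErrorCode; successful ones carry a SequenceNumber
        pending = [
            entry
            for entry, result in zip(pending, response["Records"])
            if "ErrorCode" in result
        ]
        if attempt < max_attempts - 1:
            # Exponential backoff with full jitter before retrying
            time.sleep(random.uniform(0, base_delay * 2 ** attempt))
    return pending
```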

Conclusion

AWS provides a comprehensive set of services to efficiently ingest real-time data for various use cases, from IoT applications to big data analytics. By leveraging Amazon Kinesis, AWS IoT Core, MSK, Lambda, and DynamoDB Streams, businesses can build scalable, low-latency, and cost-effective data pipelines. The key to success is choosing the right services, optimizing performance, and ensuring security to handle real-time data ingestion effectively.

Visualpath is a leading provider of AWS Data Engineering training, offering a Data Engineering course in Hyderabad with experienced, real-time trainers and real-time projects that help students gain practical and interview skills. We provide 24/7 access to recorded sessions. For more information, call +91-7032290546.

For more information About AWS Data Engineering training

Call/WhatsApp: +91-7032290546

Visit: https://www.visualpath.in/online-aws-data-engineering-course.html

#AWS Data Engineering Course#AWS Data Engineering training#AWS Data Engineer certification#Data Engineering course in Hyderabad#AWS Data Engineering online training#AWS Data Engineering Training Institute#AWS Data Engineering training in Hyderabad#AWS Data Engineer online course#AWS Data Engineering Training in Bangalore#AWS Data Engineering Online Course in Ameerpet#AWS Data Engineering Online Course in India#AWS Data Engineering Training in Chennai#AWS Data Analytics Training

Text

Unlocking Innovation: Top IT Solutions From Data Analytics To Web Development In India

India has emerged as a global hub for technological innovation, hosting a plethora of top-tier IT companies that cater to businesses worldwide. From Data Analytics Companies in India to leading-edge web and software development firms, the country’s IT landscape continues to thrive, offering transformative solutions for businesses of all sizes.

The Role Of Data Analytics In Modern Businesses

In today’s data-driven world, the ability to harness insights from vast amounts of information is a game-changer. Data Analytics Companies in India specialise in empowering organisations to make informed decisions by leveraging cutting-edge tools and techniques. These firms offer services ranging from predictive analytics to real-time data processing, enabling businesses to identify trends, optimise operations and gain a competitive edge. Indian companies are renowned for their expertise in developing cost-effective, scalable and customisable solutions tailored to specific business needs.

The Demand For Web Application Development

As the digital era progresses, businesses need robust web applications to ensure seamless customer engagement and operational efficiency. A Web Application Development Company in India focuses on crafting interactive, scalable and secure applications for diverse industries. Indian firms leverage advanced technologies like React, Angular and cloud computing to deliver high-quality web solutions that enhance user experiences and improve productivity. Their ability to blend creativity with technology ensures exceptional results.

Software Development: Building Digital Ecosystems

Indian companies are at the forefront of software innovation, providing end-to-end solutions that cater to unique business challenges. A Software Development Company in India prioritises agility, scalability and performance, crafting solutions that integrate seamlessly into existing workflows. From enterprise resource planning (ERP) systems to AI-powered platforms, Indian software developers excel in delivering tailor-made products that drive digital transformation.

Why Choose Indian IT Companies?

India’s IT companies are synonymous with quality, reliability and innovation. With access to a large talent pool, cutting-edge technology and a customer-centric approach, Indian firms have become the go-to destination for businesses worldwide. Competitive pricing and robust after-sales support further enhance their appeal.

Conclusion: BuzzyBrains – A Leader In IT Solutions

Among the myriad IT firms in India, BuzzyBrains stands out as a trusted partner in digital transformation. Established in 2016, BuzzyBrains specialises in software product development, web application solutions and advanced data analytics. Their focus on innovation, transparency and client satisfaction makes them a preferred choice for businesses seeking comprehensive IT solutions.

BuzzyBrains’ expertise spans multiple domains, delivering cutting-edge web applications and high-performing software systems. Their advanced data analytics services empower organisations to unlock actionable insights and achieve measurable results. With a commitment to excellence and a proven track record, BuzzyBrains exemplifies the qualities that position Indian IT companies as leaders on the global stage.

Whether you need bespoke software, interactive web applications, or robust data analytics solutions, BuzzyBrains offers the perfect blend of innovation and reliability, setting the standard for IT excellence in India.

#data analytics company in india#buzzybrains software#web application development company in india#software development company india#aws development india

Text

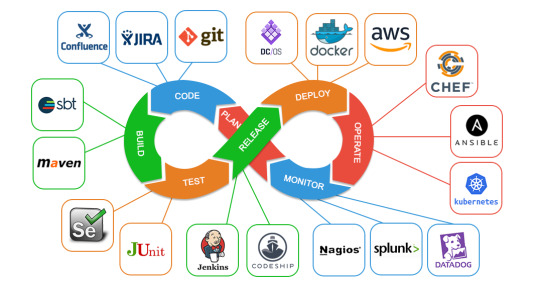

Top 5 In-Demand DevOps Roles and Their Salaries in 2025

The DevOps field continues to see massive growth in 2025 as organizations across industries prioritize automation, collaboration, and scalability. Here’s a look at the top 5 in-demand DevOps roles, their responsibilities, and salary trends.

1. DevOps Engineer

Role Overview: The cornerstone of any DevOps team, DevOps Engineers manage CI/CD pipelines, ensure system automation, and streamline collaboration between development and operations teams.

Skills Required: Proficiency in Jenkins, Git, Docker, Kubernetes, and scripting languages like Python or Bash.

Salary Trends (2025):

Freshers: ₹6–8 LPA

Experienced: ₹15–20 LPA

2. Site Reliability Engineer (SRE)

Role Overview: SREs focus on maintaining system reliability and improving application performance by blending engineering and operations expertise.

Skills Required: Knowledge of monitoring tools (Prometheus, Grafana), incident management, and system architecture.

Salary Trends (2025):

Entry-Level: ₹7–10 LPA

Experienced: ₹18–25 LPA

3. Cloud Engineer

Role Overview: Cloud Engineers design, manage, and optimize cloud infrastructure, ensuring it is secure, scalable, and cost-effective.

Skills Required: Hands-on experience with AWS, Azure, or Google Cloud, along with infrastructure as code (IaC) tools like Terraform or Ansible.

Salary Trends (2025):

Beginners: ₹8–12 LPA

Senior Professionals: ₹20–30 LPA

4. Kubernetes Specialist

Role Overview: As businesses increasingly adopt containerization, Kubernetes Specialists manage container orchestration to ensure smooth deployments and scalability.

Skills Required: Deep understanding of Kubernetes, Helm, Docker, and microservices architecture.

Salary Trends (2025):

Mid-Level: ₹8–15 LPA

Senior-Level: ₹18–25 LPA

5. DevSecOps Engineer

Role Overview: DevSecOps Engineers integrate security practices within the DevOps lifecycle, ensuring robust systems from development to deployment.

Skills Required: Expertise in security tools, vulnerability assessment, and compliance frameworks.

Salary Trends (2025):

Early Career: ₹9–14 LPA

Experienced Professionals: ₹22–30 LPA

Upskill for Your Dream Role

The demand for DevOps professionals in 2025 is higher than ever. With the right skills and certifications, you can land these lucrative roles.

At Syntax Minds, we offer comprehensive DevOps training programs to equip you with the tools and expertise needed to excel. Our job-oriented courses are available both offline and online, tailored for fresh graduates and working professionals.

Visit Us: Flat No. 202, 2nd Floor, Vanijya Complex, Beside VRK Silks, KPHB, Hyderabad - 500085

Contact: 📞 9642000668, 9642000669 📧 [email protected]

Start your journey today with Syntax Minds and take your career to new heights!

#artificial intelligence#data science#deep learning#data analytics#machine learning#DevOps#Cloud#AWS#AZURE#data scientist

Text

Anaconda Launches First Unified AI Platform for Open Source, Redefining Enterprise-Grade AI Development

New Post has been published on https://thedigitalinsider.com/anaconda-launches-first-unified-ai-platform-for-open-source-redefining-enterprise-grade-ai-development/

In a landmark announcement for the open-source AI community, Anaconda Inc., a long-time leader in Python-based data science, has launched the Anaconda AI Platform — the first unified AI development platform tailored specifically to open source. Aimed at streamlining and securing the end-to-end AI lifecycle, this platform enables enterprises to move from experimentation to production faster, safer, and more efficiently than ever before.

The launch represents not only a new product offering but a strategic pivot for the company: from being the de facto package manager for Python to now becoming the enterprise AI backbone for open-source innovation.

Bridging the Gap Between Innovation and Enterprise-Grade AI

The rapid rise of open-source tools has been a catalyst in the AI revolution. However, while frameworks like TensorFlow, PyTorch, scikit-learn, and Hugging Face Transformers have lowered the barrier to experimentation, enterprises face unique challenges in deploying these tools at scale. Issues like security vulnerabilities, dependency conflicts, compliance risks, and governance limitations often block enterprise adoption — slowing innovation just when it’s most needed.

Anaconda’s new platform is purpose-built to close this gap.

“Until now, there hasn’t been a single destination for AI development with open source, which is the backbone for inclusive and innovative AI,” said Peter Wang, Co-founder and Chief AI & Innovation Officer of Anaconda. “We’re not only offering streamlined workflows, enhanced security, and substantial time savings, but ultimately, giving enterprises the freedom to build AI their way — without compromise.”

What Makes It the First Unified AI Platform for Open Source?

The Anaconda AI Platform centralizes everything enterprises need to build and operationalize AI solutions based on open-source software. Unlike other platforms that specialize in just model hosting or experimentation, Anaconda’s platform covers the full AI lifecycle — from sourcing and securing packages to deploying production-ready models across any environment.

Key Capabilities of the Platform Include:

Trusted Open-Source Package Distribution: Includes access to over 8,000 pre-vetted, secure packages fully compatible with Anaconda Distribution. All packages are continuously tested for vulnerabilities, making it easier for enterprises to adopt open-source tools with confidence.

Secure AI & Governance: Enterprise-grade security features like Single Sign-On (SSO), role-based access control, and audit logging ensure traceability, user accountability, and compliance with regulations and standards such as GDPR, HIPAA, and SOC 2.

AI-Ready Workspaces & Environments: Pre-configured “Quick Start” environments for use cases like finance, machine learning, and Python analytics accelerate time to value and reduce the need for configuration-heavy setup.

Unified CLI with AI Assistant: A command-line interface powered by an AI assistant helps developers resolve errors automatically, minimizing context switching and debugging time.

MLOps-Ready Integration: Built-in tools for monitoring, error tracking, and package auditing streamline MLOps (Machine Learning Operations), a critical discipline that bridges data science and production engineering.

What Is MLOps and Why Does It Matter?

MLOps is to AI what DevOps is to software development: a set of practices and tools that ensure machine learning models are not only developed but also deployed, monitored, updated, and scaled responsibly. Anaconda’s AI Platform is tightly aligned with MLOps principles, allowing teams to standardize workflows, track model lineage, and optimize model performance in real time.

By centralizing governance, automation, and collaboration, the platform simplifies what is typically a fragmented and error-prone process. This unified approach is a game-changer for organizations trying to industrialize AI capabilities across teams.

Why Now? A Surge in Open-Source AI, But With Hidden Costs

Open source has become the foundation of modern AI. A recent study cited by Anaconda found that 50% of data scientists rely on open-source tools daily, and 66% of IT administrators confirm that open-source software plays a critical role in their enterprise tech stacks. However, the freedom and flexibility of open source come with trade-offs — especially around security and compliance.

Each time a team installs a package from a public repository like PyPI or GitHub, they introduce potential security risks. These vulnerabilities are difficult to track manually, especially when organizations rely on hundreds of packages, often with deep dependency trees.

With the Anaconda AI Platform, this complexity is abstracted away. Teams gain real-time visibility into package vulnerabilities, usage patterns, and compliance requirements — all while using the tools they know and love.

Enterprise Impact: Measurable ROI and Reduced Risk

To understand the business value of the platform, Anaconda commissioned a Total Economic Impact™ (TEI) study from Forrester Consulting. The findings are striking:

119% ROI over three years.

80% improvement in operational efficiency (worth $840,000).

60% reduction in risk of security breaches tied to package vulnerabilities.

80% reduction in time spent on package security management.

These results demonstrate that the Anaconda AI Platform is not just a developer tool — it’s a strategic enterprise asset that reduces overhead, enhances productivity, and accelerates time-to-value in AI development.

A Company Rooted in Open Source, Built for the AI Era

Anaconda isn’t new to the AI or data science space. The company was founded in 2012 by Peter Wang and Travis Oliphant, with the mission to bring Python — then an emerging language — into the mainstream of enterprise data analytics. Today, Python is the most widely used language in AI and machine learning, and Anaconda sits at the heart of that movement.

From a team of a few open-source contributors, the company has grown into a global operation with over 300 full-time employees and 40 million+ users around the world. It continues to help maintain and support many of the open-source tools used daily in data science, such as conda, pandas, NumPy, and more.

Anaconda is not just a company — it’s a movement. Its tools underpin key innovations at companies like Microsoft, Oracle, and IBM, and power integrations like Python in Excel and Snowflake’s Snowpark for Python.

“We are — and always will be — committed to fostering open-source innovation,” says Wang. “Our job is to make open source enterprise-ready so that innovation isn’t slowed down by complexity, risk, or compliance barriers.”

A Future-Proof Platform for AI at Scale

The Anaconda AI Platform is available now and can be deployed across public cloud, private cloud, sovereign cloud, and on-premises environments. It’s also listed on AWS Marketplace for seamless procurement and enterprise integration.

In a world where speed, trust, and scale are paramount, Anaconda has redefined what’s possible for open-source AI — not just for individual developers, but for the enterprises that depend on them.

#000#access control#adoption#ai#ai assistant#AI development#ai platform#amp#anaconda#Analytics#approach#Artificial Intelligence#audit#automation#AWS#barrier#Business#catalyst#Cloud#Collaboration#command#command-line interface#Community#Companies#complexity#compliance#compromise#consulting#data#data analytics

Text

Discover how AWS enables businesses to achieve greater flexibility, scalability, and cost efficiency through hybrid data management. Learn key strategies like dynamic resource allocation, robust security measures, and seamless integration with existing systems. Uncover best practices to optimize workloads, enhance data analytics, and maintain business continuity with AWS's comprehensive tools. This guide is essential for organizations looking to harness cloud and on-premises environments effectively.

#AWS hybrid data management#cloud scalability#hybrid cloud benefits#AWS best practices#hybrid IT solutions#data management strategies#AWS integration#cloud security benefits#AWS cost efficiency#data analytics tools

Text

Why AWS is Becoming Essential for Modern IT Professionals

In today's fast-paced tech landscape, the integration of development and operations has become crucial for delivering high-quality software efficiently. AWS DevOps is at the forefront of this transformation, enabling organizations to streamline their processes, enhance collaboration, and achieve faster deployment cycles. For IT professionals looking to stay relevant in this evolving environment, pursuing AWS DevOps training in Hyderabad is a strategic choice. Let’s explore why AWS DevOps is essential and how training can set you up for success.

The Rise of AWS DevOps

1. Enhanced Collaboration

AWS DevOps emphasizes the collaboration between development and operations teams, breaking down silos that often hinder productivity. By fostering communication and cooperation, organizations can respond more quickly to changes and requirements. This shift is vital for businesses aiming to stay competitive in today’s market.

2. Increased Efficiency

With AWS DevOps practices, automation plays a key role. Tasks that were once manual and time-consuming, such as testing and deployment, can now be automated using AWS tools. This not only speeds up the development process but also reduces the likelihood of human error. By mastering these automation techniques through AWS DevOps training in Hyderabad, professionals can contribute significantly to their teams' efficiency.

Benefits of AWS DevOps Training

1. Comprehensive Skill Development

An AWS DevOps training program in Hyderabad covers a wide range of essential topics, including:

AWS services such as EC2, S3, and Lambda

Continuous Integration and Continuous Deployment (CI/CD) pipelines

Infrastructure as Code (IaC) with tools like AWS CloudFormation

Monitoring and logging with Amazon CloudWatch

This comprehensive curriculum equips you with the skills needed to thrive in modern IT environments.

2. Hands-On Experience

Most training programs emphasize practical, hands-on experience. You'll work on real-world projects that allow you to apply the concepts you've learned. This experience is invaluable for building confidence and competence in AWS DevOps practices.

3. Industry-Recognized Certifications

Earning AWS certifications, such as the AWS Certified DevOps Engineer – Professional, can significantly strengthen your resume. AWS DevOps training in Hyderabad can prepare you for these exams, and the certification itself demonstrates your commitment to professional development and your expertise in the field.

4. Networking Opportunities

Joining an AWS DevOps training program in Hyderabad also lets you connect with industry professionals and peers. The network you build during training can lead to job opportunities, mentorship, and collaborative projects that advance your career.

Career Opportunities in AWS DevOps

1. Diverse Roles

With expertise in AWS DevOps, you can pursue various roles, including:

DevOps Engineer

Site Reliability Engineer (SRE)

Cloud Architect

Automation Engineer

Each role offers unique challenges and opportunities for growth, making AWS DevOps skills highly valuable.

2. High Demand and Salary Potential

Demand for DevOps professionals, particularly those skilled in AWS, is high and growing. Organizations are actively seeking AWS-certified candidates who can implement effective DevOps practices, and industry reports indicate that these professionals often command competitive salaries. That makes AWS DevOps training in Hyderabad a wise investment.

3. Job Security

As more companies adopt cloud solutions and DevOps practices, the need for skilled professionals will continue to grow. This trend indicates that expertise in AWS DevOps can provide long-term job security and career advancement opportunities.

Staying Relevant in a Rapidly Changing Industry

1. Continuous Learning

The tech industry is continually evolving, and AWS regularly introduces new tools and features. Staying updated with these advancements is crucial for maintaining your relevance in the field. Consider pursuing additional certifications or training courses to deepen your expertise.

2. Community Engagement

Engaging with AWS and DevOps communities can provide insights into industry trends and best practices. These networks often share valuable resources, training materials, and opportunities for collaboration.

Conclusion

As the demand for efficient software delivery continues to rise, AWS DevOps expertise has become essential for modern IT professionals. Investing in AWS DevOps training in Hyderabad will equip you with the skills and knowledge needed to excel in this dynamic field.

By strengthening your skills in collaboration, automation, and continuous delivery, you can position yourself for a successful career in AWS DevOps. Don’t miss the opportunity to take the next step in your career: consider enrolling in an AWS DevOps training program in Hyderabad today and unlock your potential in the world of cloud computing!

#technology#aws devops training in hyderabad#aws course in hyderabad#aws training in hyderabad#aws coaching centers in hyderabad#aws devops course in hyderabad#Cloud Computing#DevOps#AWS#AZURE#CloudComputing#Cloud Computing & DevOps#Cloud Computing Course#DeVOps course#AWS COURSE#AZURE COURSE#Cloud Computing CAREER#Cloud Computing jobs#Data Storage#Cloud Technology#Cloud Services#Data Analytics#Cloud Computing Certification#Cloud Computing Course in Hyderabad#Cloud Architecture#amazon web services

Aretove Technologies specializes in data science consulting and predictive analytics, particularly in healthcare. We harness advanced data analytics to optimize patient care, operational efficiency, and strategic decision-making. Our tailored solutions empower healthcare providers to leverage data for improved outcomes and cost-effectiveness. Trust Aretove Technologies for cutting-edge predictive analytics and data-driven insights that transform healthcare delivery.

#Data Science Consulting#Predictive Analytics in Healthcare#Sap Predictive Analytics#Ai Predictive Analytics#Data Engineering Consulting Firms#Power Bi Predictive Analytics#Data Engineering Consulting#Data Engineering Aws#Data Engineering Company#Predictive and Prescriptive Analytics#Data Science and Analytics Consulting
